Enhanced Drone Detection Model for Edge Devices Using Knowledge Distillation and Bayesian Optimization †

Abstract

1. Introduction

2. Literature Review

2.1. Related Works

2.2. Research Gap

3. Materials and Methods

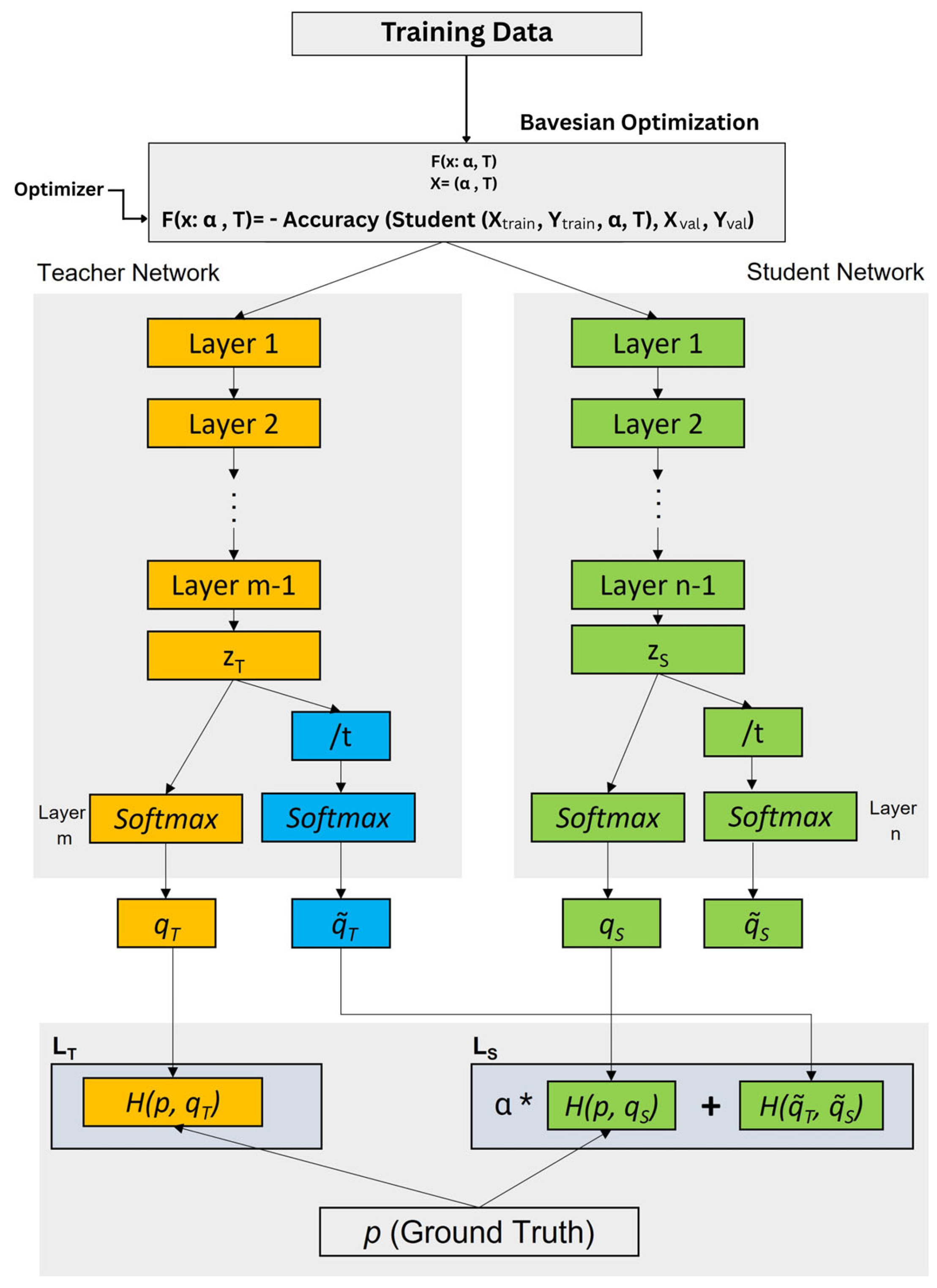

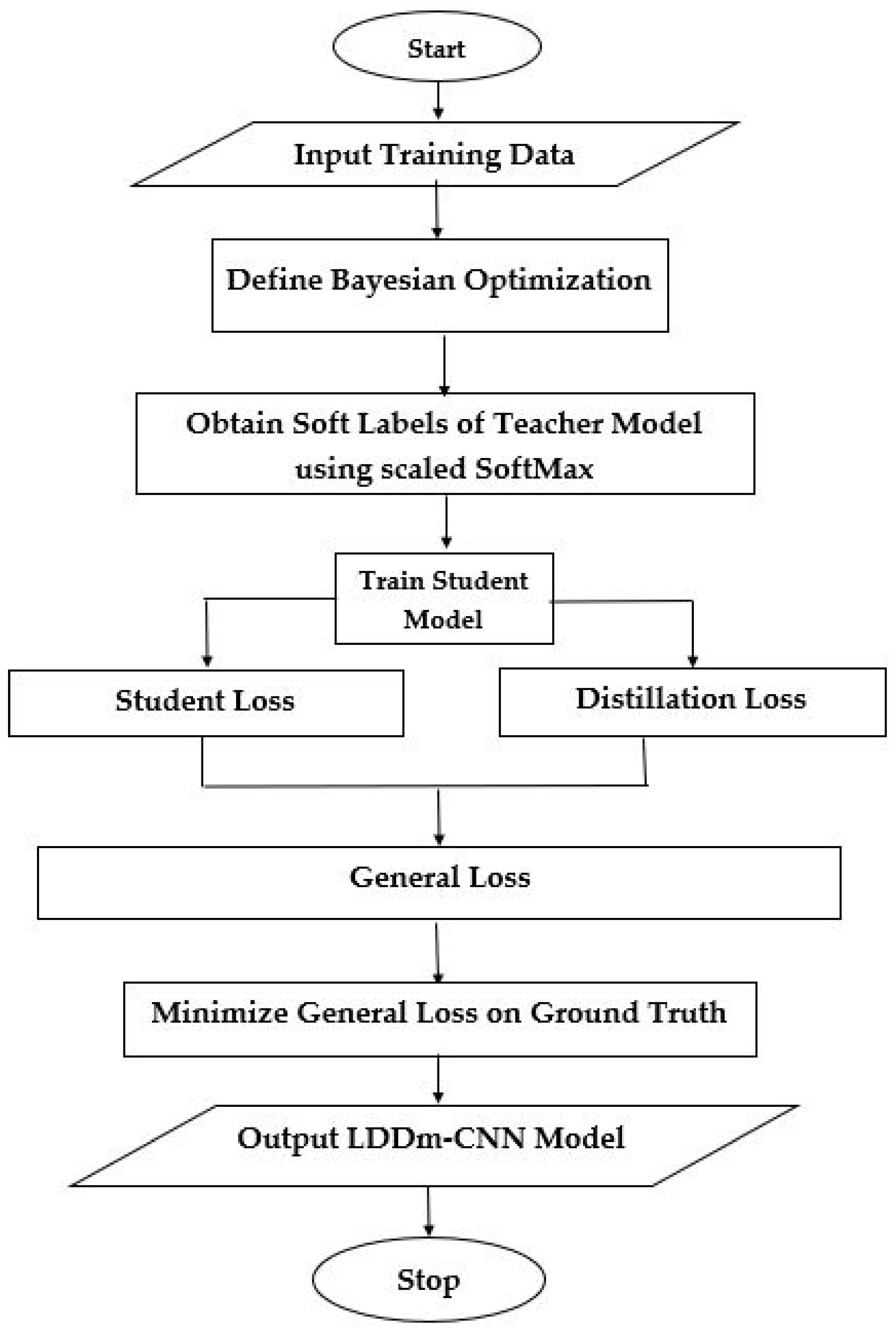

3.1. Proposed LDDm-CNN Model

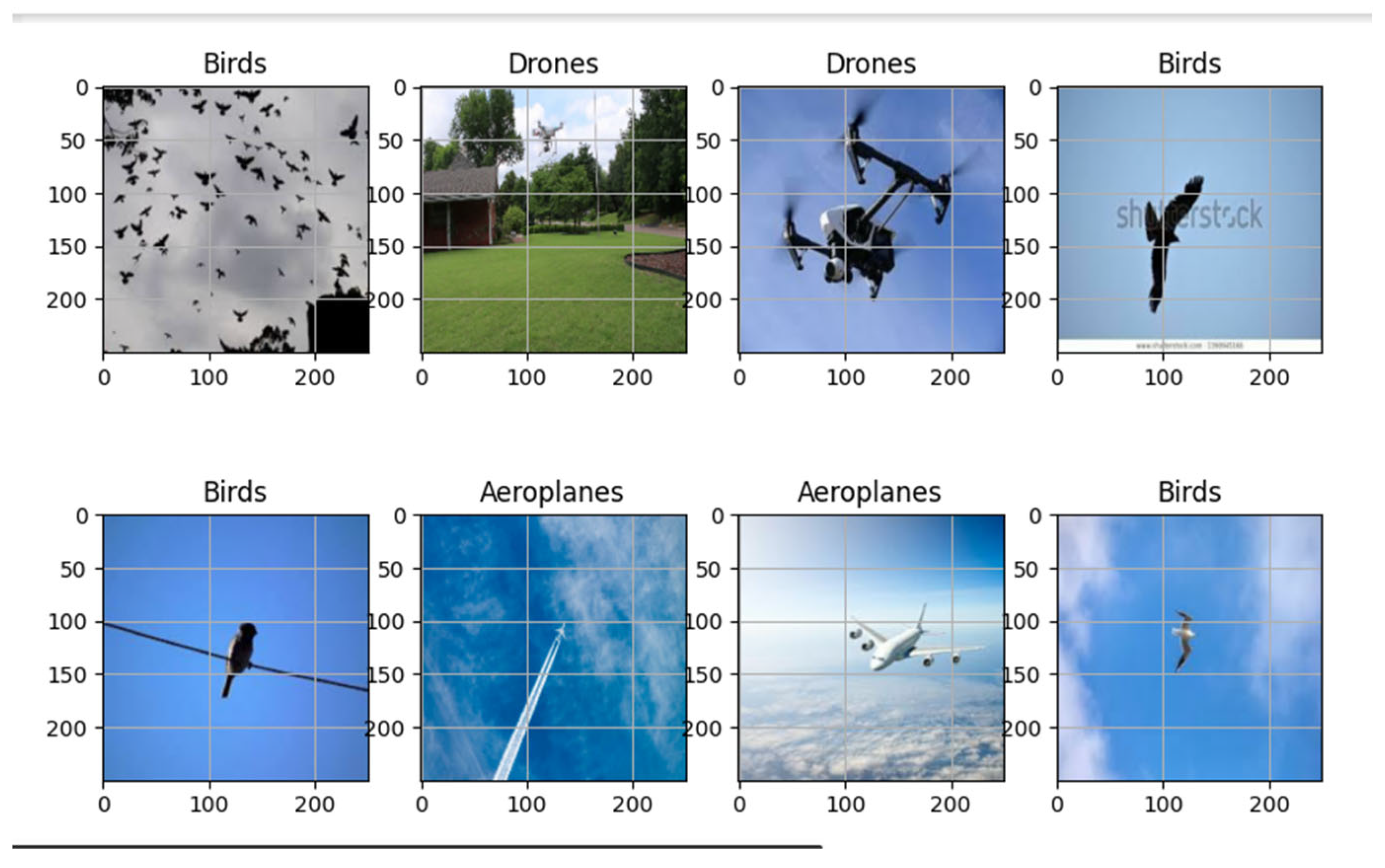

3.2. Dataset Collection

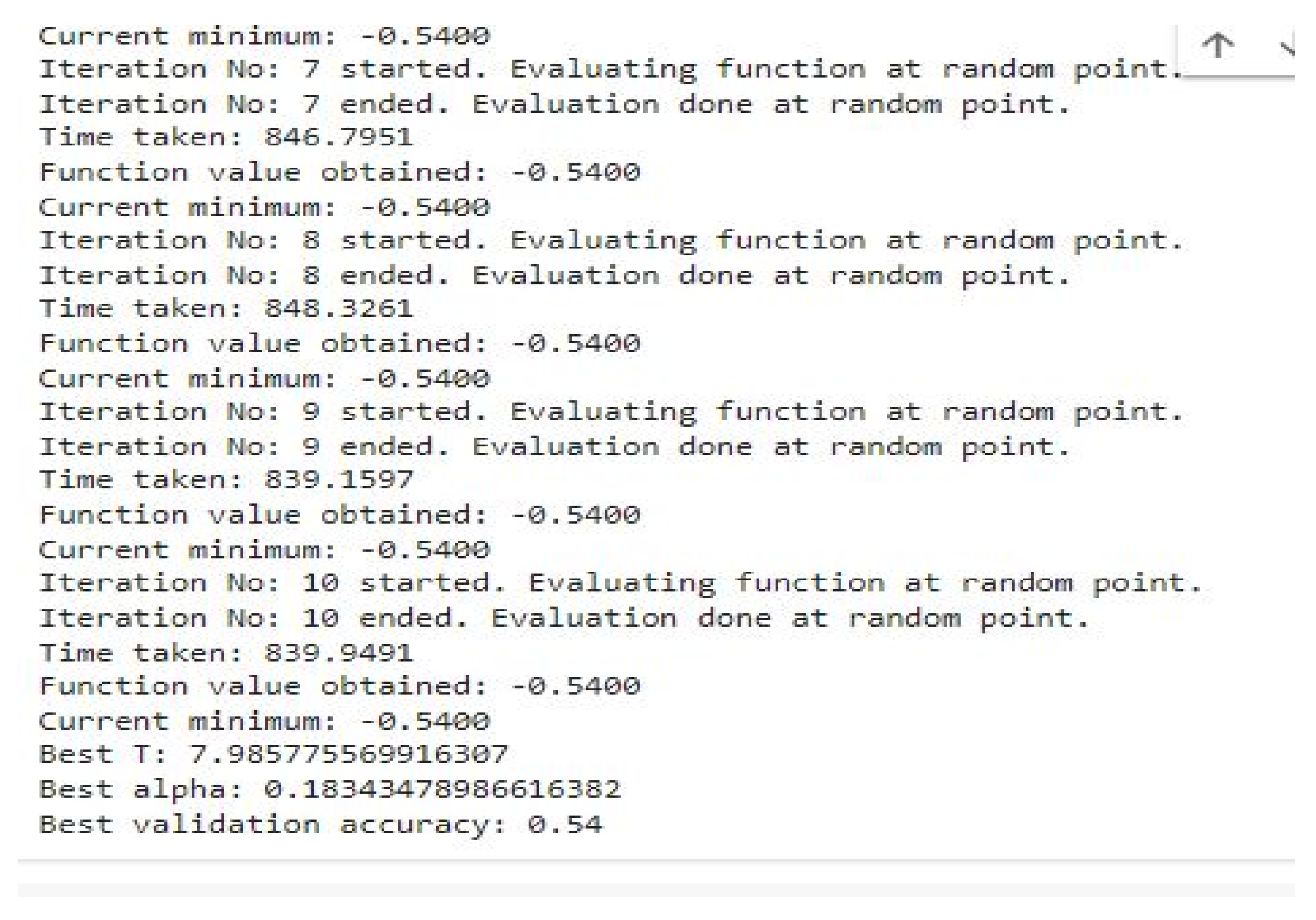

3.3. Model Formulation

3.4. Experimental Setup

3.5. Evaluation Metrics

3.5.1. Accuracy

3.5.2. Mean Average Precision (mAP)

3.5.3. F1-Score

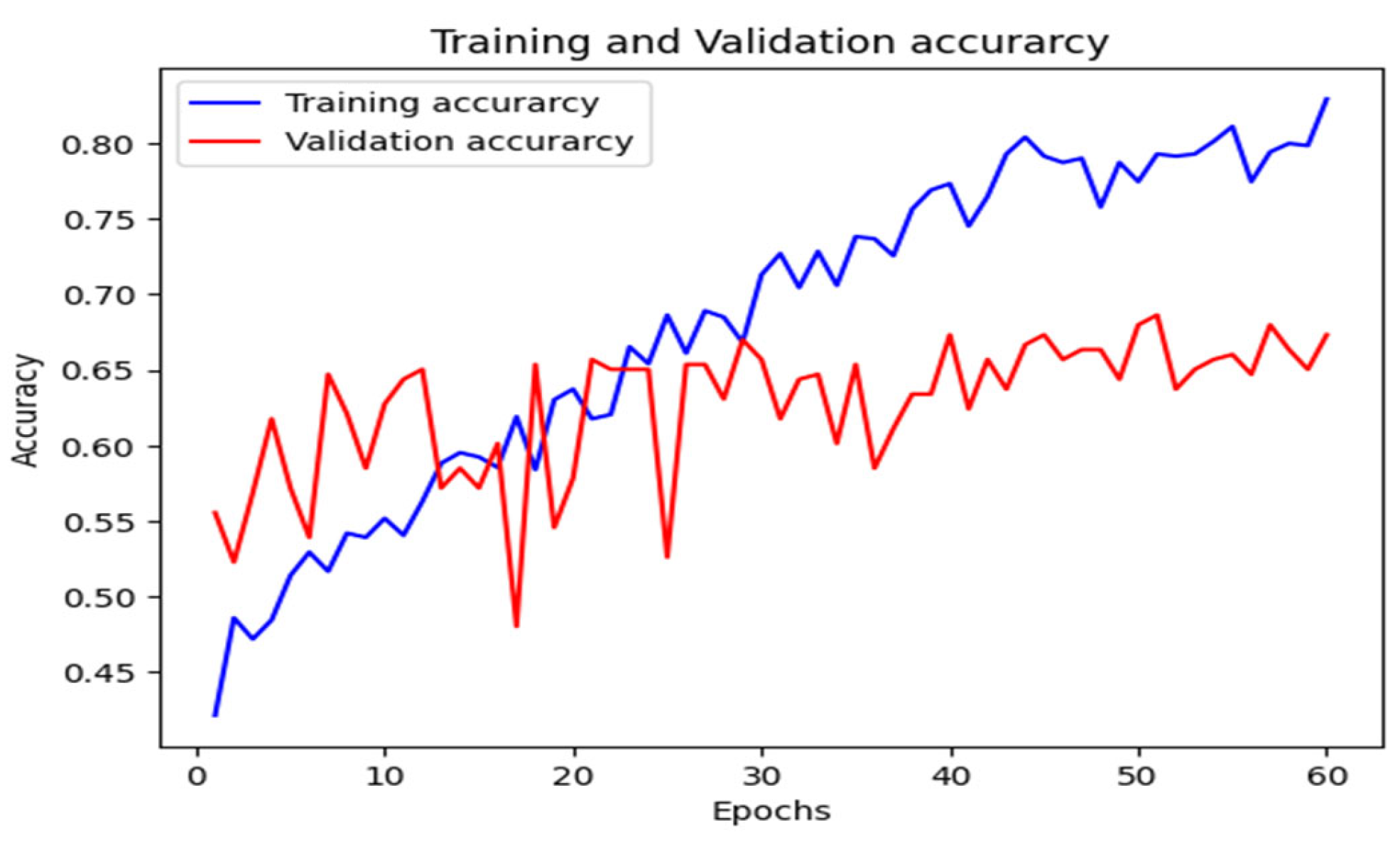

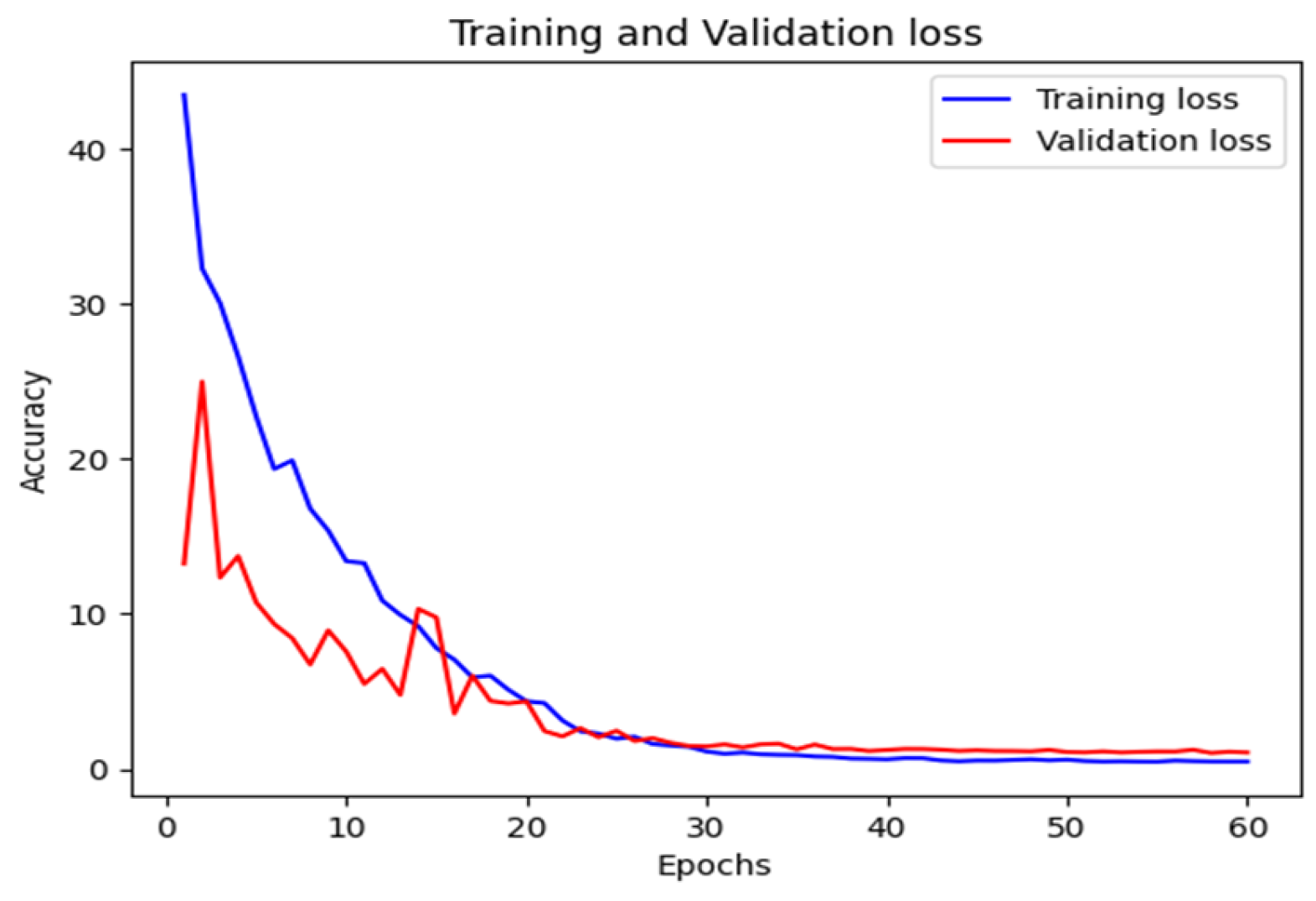

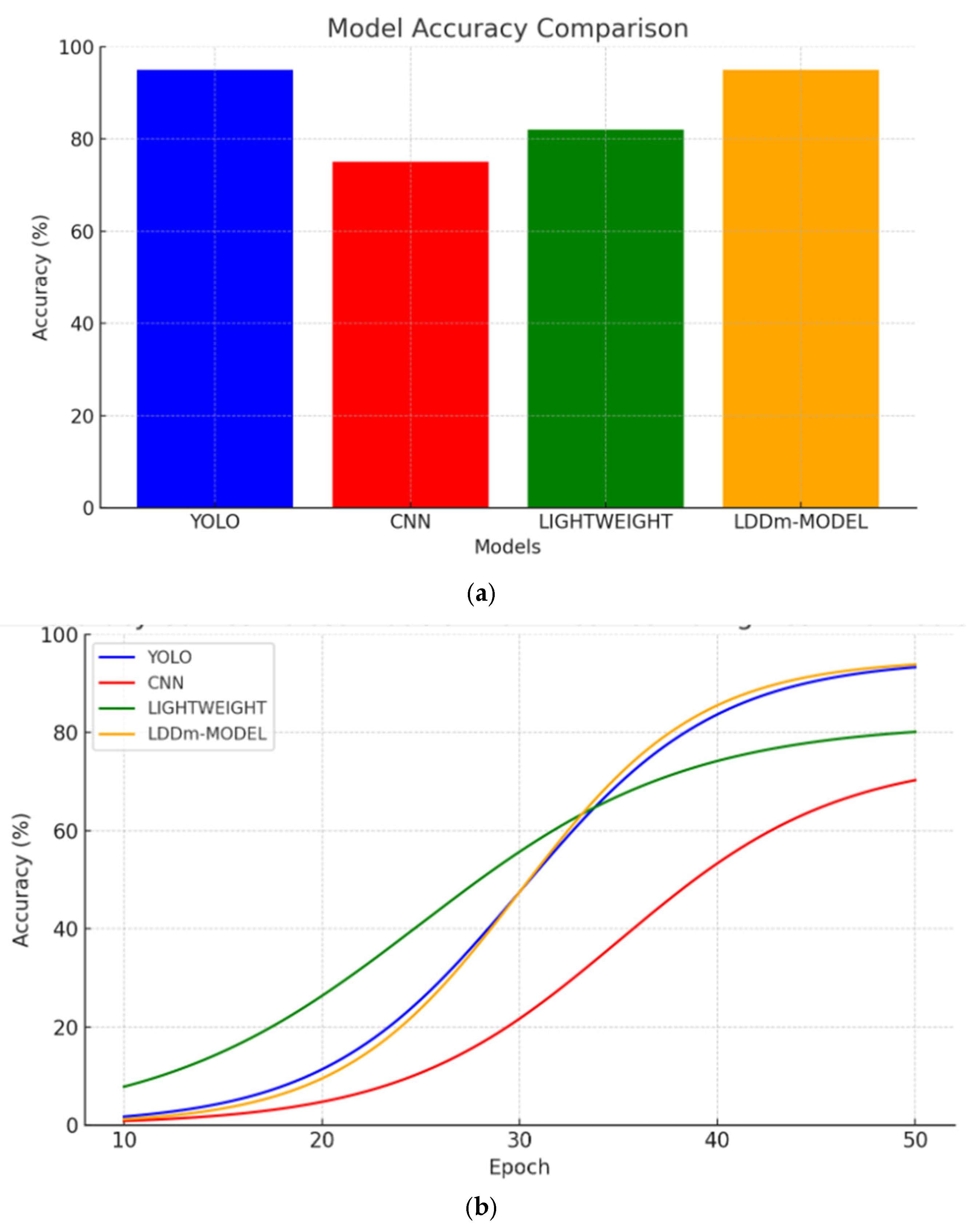

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kardasz, P.; Doskocz, J. Drones and Possibilities of Their Using. J. Civ. Environ. Eng. 2016, 6, 233.

- Hossain, R. A Short Review of the Drone Technology. Int. J. Mechatron. Manuf. Technol. 2022, 7, 53–67.

- Al-Sa’d, M.F.; Al-Ali, A.; Mohamed, A.; Khattab, T.; Erbad, A. RF-based drone detection and identification using deep learning approaches: An initiative towards a large open source drone database. Future Gener. Comput. Syst. 2019, 100, 86–97.

- Bera, B.; Das, A.K.; Sutrala, A.K. Private blockchain-based access control mechanism for unauthorized UAV detection and mitigation in Internet of Drones environment. Comput. Commun. 2021, 166, 91–109.

- Musa, A.; Hamada, M.; Hassan, M. A Theoretical Framework Towards Building a Lightweight Model for Pothole Detection using Knowledge Distillation Approach. SHS Web Conf. 2022, 139, 03002.

- Taha, B.; Shoufan, A. Machine Learning-Based Drone Detection and Classification: State-of-the-Art in Research. IEEE Access 2019, 7, 138669–138682.

- Musa, A.; Hassan, M.; Hamada, M.; Aliyu, F. Low-Power Deep Learning Model for Plant Disease Detection for Smart-Hydroponics Using Knowledge Distillation Techniques. J. Low Power Electron. Appl. 2022, 12, 24.

- Loka, N.R.B.S.; Couckuyt, I.; Garbuglia, F.; Spina, D.; Van Nieuwenhuyse, I.; Dhaene, T. Bi-objective Bayesian optimization of engineering problems with cheap and expensive cost functions. Eng. Comput. 2022, 39, 1923–1933.

- Dong, Y.; Ma, Y.; Li, Y.; Li, Z. High-precision real-time UAV target recognition based on improved YOLOv4. Comput. Commun. 2023, 206, 124–132.

- Medaiyese, O.O.; Ezuma, M.; Lauf, A.P.; Guvenc, I. Wavelet transform analytics for RF-based UAV detection and identification system using machine learning. Pervasive Mob. Comput. 2022, 82, 101569.

- Balachandran, V.; Sarath, S. A Novel Approach to Detect Unmanned Aerial Vehicle using Pix2Pix Generative Adversarial Network. In Proceedings of the 2022 Second International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 23–25 February 2022.

- Unlu, E.; Zenou, E.; Riviere, N.; Dupouy, P.-E. Deep learning-based strategies for the detection and tracking of drones using several cameras. IPSJ Trans. Comput. Vis. Appl. 2019, 11, 7.

- Schumann, A.; Sommer, L.; Klatte, J.; Schuchert, T.; Beyerer, J. Deep cross-domain flying object classification for robust UAV detection. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; Available online: https://ieeexplore.ieee.org/abstract/document/8078558 (accessed on 12 December 2024).

- Mahdavi, F.; Rajabi, R. Drone Detection Using Convolutional Neural Networks. In Proceedings of the 6th Iranian Conference on Signal Processing and Intelligent Systems (ICSPIS), Mashhad, Iran, 23–24 December 2020.

- Shi, Z.; Chang, X.; Yang, C.; Wu, Z.; Wu, J. An Acoustic-Based Surveillance System for Amateur Drones Detection and Localization. IEEE Trans. Veh. Technol. 2020, 69, 2731–2739.

- Tong, J.; Xie, W.; Hu, Y.-H.; Bao, M.; Li, X.; He, W. Estimation of low-altitude moving target trajectory using single acoustic array. J. Acoust. Soc. Am. 2016, 139, 1848–1858.

- Busset, J.; Perrodin, F.; Wellig, P.; Ott, B.; Heutschi, K.; Rühl, T.; Nussbaumer, T. Detection and tracking of drones using advanced acoustic cameras. In Proceedings of the Unmanned/Unattended Sensors and Sensor Networks XI; and Advanced Free-Space Optical Communication Techniques and Applications, Toulouse, France, 13 October 2015; Volume 9647.

- Zulkifli, S.; Balleri, A. Design and Development of K-Band FMCW Radar for Nano-Drone Detection. In Proceedings of the 2020 IEEE Radar Conference (RadarConf20), Florence, Italy, 21–25 September 2020; Available online: https://ieeexplore.ieee.org/abstract/document/9266538 (accessed on 14 November 2022).

- Sun, H.; Oh, B.-S.; Guo, X.; Lin, Z. Improving the Doppler Resolution of Ground-Based Surveillance Radar for Drone Detection. IEEE Trans. Aerosp. Electron. Syst. 2019, 55, 3667–3673.

- Akbal, E.; Akbal, A.; Dogan, S.; Tuncer, T. An automated accurate sound-based amateur drone detection method based on skinny pattern. Digit. Signal Process. 2023, 136, 104012.

- Akter, R.; Doan, V.-S.; Lee, J.-M.; Kim, D.-S. CNN-SSDI: Convolution neural network inspired surveillance system for UAVs detection and identification. Comput. Netw. 2021, 201, 108519.

- Nalamati, M.; Kapoor, A.; Saqib, M.; Sharma, N.; Blumenstein, M. Drone Detection in Long-Range Surveillance Videos. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taipei, Taiwan, 18–21 September 2019; pp. 1–6.

- Wu, M.; Xie, W.; Shi, X.; Shao, P.; Shi, Z. Real-Time Drone Detection Using Deep Learning Approach. In Machine Learning and Intelligent Communications; Springer: Cham, Switzerland, 2018; pp. 22–32.

- Singha, S.; Aydin, B. Automated Drone Detection Using YOLOv4. Drones 2021, 5, 95.

- Cheng, Q.; Wang, H.; Zhu, B.; Shi, Y.; Xie, B. A Real-Time UAV Target Detection Algorithm Based on Edge Computing. Drones 2023, 7, 95.

- Kishore, J.; Mukherjee, S. Autotuning Student Models via Bayesian Optimization with Knowledge Distilled from Self-Supervised Teacher Models. January 2023. Available online: https://ssrn.com/abstract=4579155 (accessed on 12 December 2024).

- Agnihotri, A.; Batra, N. Exploring Bayesian Optimization. Distill 2020, 5, e26.

- Luo, H.; Chen, T.; Li, X.; Li, S.; Zhang, C.; Zhao, G.; Liu, X. KeepEdge: A Knowledge Distillation Empowered Edge Intelligence Framework for Visual Assisted Positioning in UAV Delivery. IEEE Trans. Mob. Comput. 2023, 22, 4729–4741.

- Zhao, Y.; Ju, Z.; Sun, T.; Dong, F.; Li, J.; Yang, R.; Fu, Q.; Lian, C.; Shan, P. TGC-YOLOv5: An Enhanced YOLOv5 Drone Detection Model Based on Transformer, GAM & CA Attention Mechanism. Drones 2023, 7, 446.

- Musa, A.; Adam, F.M.; Ibrahim, U.; Zandam, A.Y. Learning from small datasets: An efficient deep learning model for COVID-19 detection from chest X-Ray using dataset distillation technique. In Proceedings of the 2022 IEEE Nigeria 4th International Conference on Disruptive Technologies for Sustainable Development (NIGERCON), Lagos, Nigeria, 5–7 April 2022; pp. 1–6.

- Zhao, Q.; Liu, B.; Lyu, S.; Wang, C.; Zhang, H. TPH-YOLOv5++: Boosting Object Detection on Drone-Captured Scenarios with Cross-Layer Asymmetric Transformer. Remote Sens. 2023, 15, 1687.

- Liu, Y.; Zhao, Y.; Zhang, X.; Wang, X.; Lian, C.; Li, J.; Shan, P.; Fu, C.; Lyu, X.; Li, L.; et al. MobileSAM-Track: Lightweight One-Shot Tracking and Segmentation of Small Objects on Edge Devices. Remote Sens. 2023, 15, 5665.

- Li, G.; Liu, E.; Wang, Y.; Yang, Y.; Liu, H. UAV-YOLOv5: A Lightweight Object Detection Algorithm on Drone-Captured Scenarios. Sci. J. Intell. Syst. Res. 2024, 6, 24–33.

- Available online: https://www.kaggle.com/datasets/harshwalia/birds-vs-drone-dataset (accessed on 12 December 2024).

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond Accuracy, F-Score and ROC: A Family of Discriminant Measures for Performance Evaluation. In AI 2006: Advances in Artificial Intelligence; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4304, pp. 1015–1021.

- Ajakwe, S.; Arkter, R.; Kim, D.; Kim, D.; Lee, J.M. Lightweight CNN model for detection of unauthorized UAV in military reconnaissance operations. Korea Inst. Commun. Sci. 2021, 11, 113–115.

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| conv2d (Conv2D) | (None, 248, 248, 32) | 896 |
| activation_12 (Activation) | (None, 248, 248, 32) | 0 |
| max_pooling2d_14 (MaxPooling2D) | (None, 124, 124, 32) | 0 |
| dropout_23 (Dropout) | (None, 124, 124, 32) | 0 |
| batch_normalization_10 (BatchNormalization) | (None, 124, 124, 32) | 128 |
| dropout_24 (Dropout) | (None, 124, 124, 32) | 0 |
| flatten_10 (Flatten) | (None, 492032) | 0 |
| dense_24 (Dense) | (None, 3) | 1,476,099 |
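The parameter counts in the layer table can be reproduced with the standard per-layer formulas. The sketch below is a sanity check only, not the authors' code. The 3 × 3 kernel and the 3-channel input are assumptions inferred from the 896 convolution parameters, and all helper names are hypothetical.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights plus one bias per filter for a 2D convolution."""
    return (kernel_h * kernel_w * in_channels + 1) * filters


def batchnorm_params(channels):
    """Gamma, beta, moving mean and moving variance per channel."""
    return 4 * channels


def dense_params(in_features, units):
    """Weights plus one bias per output unit for a fully connected layer."""
    return (in_features + 1) * units


# Assumption: 3x3 kernel over an RGB (3-channel) input, 32 filters.
conv = conv2d_params(3, 3, 3, 32)

# Batch normalization over the 32 feature maps.
bn = batchnorm_params(32)

# Flattened size of the (124, 124, 32) feature map from the table.
flat_features = 124 * 124 * 32

# Final dense layer with 3 output units, as in the table.
dense = dense_params(flat_features, 3)

total = conv + bn + dense

print("conv2d:", conv)
print("batch_normalization:", bn)
print("flatten features:", flat_features)
print("dense:", dense)
print("total:", total)
```

Under these assumptions the per-layer values match the table, and their sum equals the 1,477,123 parameters reported for the proposed model. The dense layer accounts for almost all of them.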

| Model | Precision | Recall | F1 Score | Accuracy | Model Size | Training Time | No. of Params |
|---|---|---|---|---|---|---|---|
| Proposed model | 0.89 | 0.90 | 0.89 | 0.95 | 5.63 MB | 10 min | 1,477,123 |
| Teacher model | 0.70 | 0.74 | 0.73 | 0.74 | 281.35 MB | 14 min | 73,755,403 |
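As a rough consistency check on the size figures above, the sketch below estimates the weight storage of each model from its parameter count. It assumes that every parameter is stored as a 32-bit float and that "MB" means 2^20 bytes. Both are assumptions, since the source does not state the storage format, and the helper name is hypothetical.

```python
BYTES_PER_FLOAT32 = 4   # assumption: weights stored as 32-bit floats
MIB = 1024 ** 2         # assumption: "MB" in the table means 2**20 bytes


def weights_size_mib(num_params):
    """Approximate size of the raw weights in MiB."""
    return num_params * BYTES_PER_FLOAT32 / MIB


# Parameter counts taken from the table above.
student_params = 1_477_123
teacher_params = 73_755_403

student_mib = weights_size_mib(student_params)
teacher_mib = weights_size_mib(teacher_params)

# How many times fewer parameters the student has than the teacher.
compression = teacher_params / student_params

print(f"student: {student_mib:.2f} MiB")
print(f"teacher: {teacher_mib:.2f} MiB")
print(f"parameter reduction: {compression:.1f}x")
```

Under these assumptions the estimates agree with the 5.63 MB and 281.35 MB listed in the table, and the proposed model has roughly 50 times fewer parameters than the teacher.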

| Model | Accuracy | Recall | Precision | Model Size | Training Time | No. of Params |
|---|---|---|---|---|---|---|
| [24] | - | 0.68 | 0.95 | 244 MB | 6 h | 64 million |
| [35] | 0.996 | 0.998 | 0.997 | 1.5 MB | 68 min | 300,000 |
| [22] | 0.975 | 0.980 | 0.980 | 12 MB | 8.9 h | 2,444,928 |
| Proposed model | 0.95 | 0.90 | 0.89 | 5.63 MB | 10 min | 1,477,123 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Salisu, M.L.; Gambo, F.L.; Musa, A.; Abdullahi, A.A. Enhanced Drone Detection Model for Edge Devices Using Knowledge Distillation and Bayesian Optimization. Eng. Proc. 2025, 87, 71. https://doi.org/10.3390/engproc2025087071
