Fruit and Vegetable Recognition Using MobileNetV2: An Image Classification Approach

Abstract

1. Introduction

1.1. Gap Analysis

1.2. Problem Statement

- How can we develop an efficient and accurate food recognition model using MobileNetV2?

- How does our model perform on a diverse dataset of fruits and vegetables, as measured by standard classification metrics?

- How can the recognized food items be used to recommend relevant recipes?
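The second question concerns how model performance is measured. The section headings do not state which metrics the paper reports, so the following is only a minimal sketch, assuming the commonly used overall accuracy plus macro-averaged precision, recall, and F1 computed from true and predicted class labels. The function name `macro_metrics` and the example labels are hypothetical and are not taken from the paper.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Return (accuracy, (macro_precision, macro_recall, macro_f1), per_class).

    Hypothetical helper: y_true and y_pred are equal-length sequences of
    class labels such as "apple" or "carrot".
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    labels = sorted(set(y_true) | set(y_pred))
    # True positives per class: prediction matches the ground-truth label.
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    pred_count = Counter(y_pred)  # how often each label was predicted
    true_count = Counter(y_true)  # how often each label actually occurs

    per_class = {}
    for label in labels:
        precision = tp[label] / pred_count[label] if pred_count[label] else 0.0
        recall = tp[label] / true_count[label] if true_count[label] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[label] = (precision, recall, f1)

    accuracy = sum(tp.values()) / len(y_true)
    # Macro averaging: unweighted mean over classes.
    macro = tuple(sum(scores[i] for scores in per_class.values()) / len(labels) for i in range(3))
    return accuracy, macro, per_class


if __name__ == "__main__":
    # Hypothetical example labels, not results from the paper.
    truth = ["apple", "apple", "banana", "carrot"]
    preds = ["apple", "banana", "banana", "carrot"]
    acc, (p, r, f1), _ = macro_metrics(truth, preds)
    print(f"accuracy={acc:.3f} macro_precision={p:.3f} macro_recall={r:.3f} macro_f1={f1:.3f}")
```

Macro averaging gives every class the same weight, so a class with few test images influences the score as much as a well-represented one; a frequency-weighted average would instead favour the larger classes.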

1.3. Novelty of Our Work

1.4. Our Solutions

2. Materials and Methods

2.1. Dataset

2.2. Experimental Settings

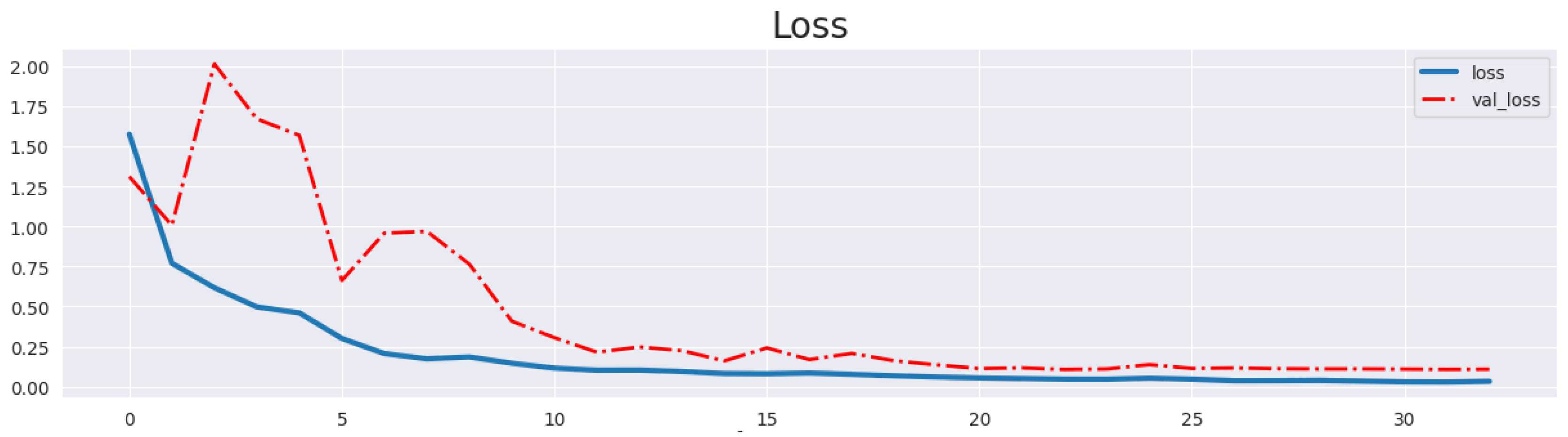

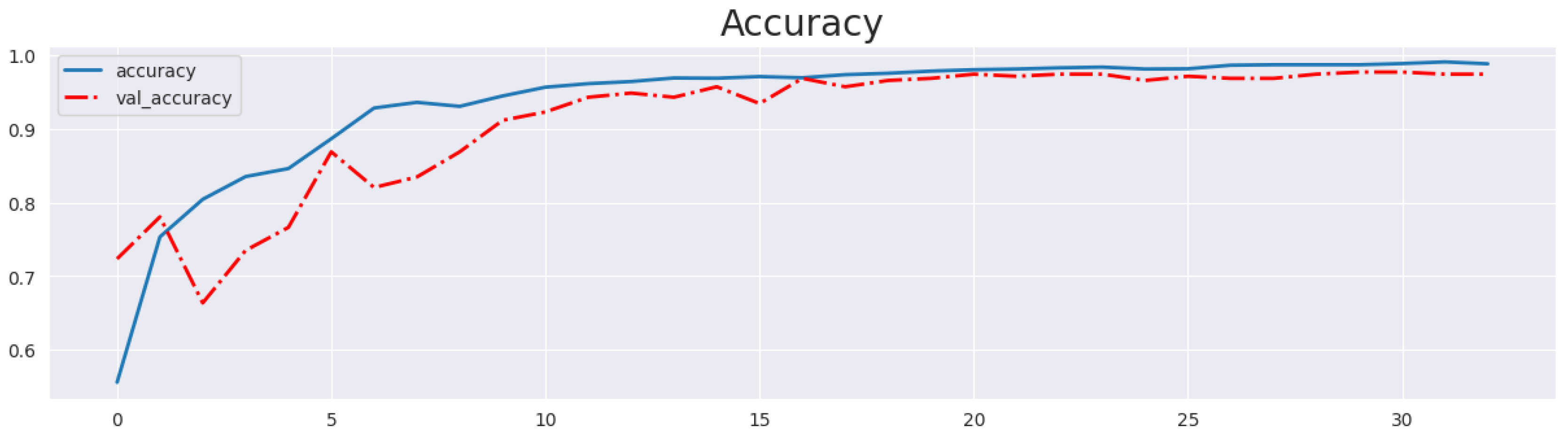

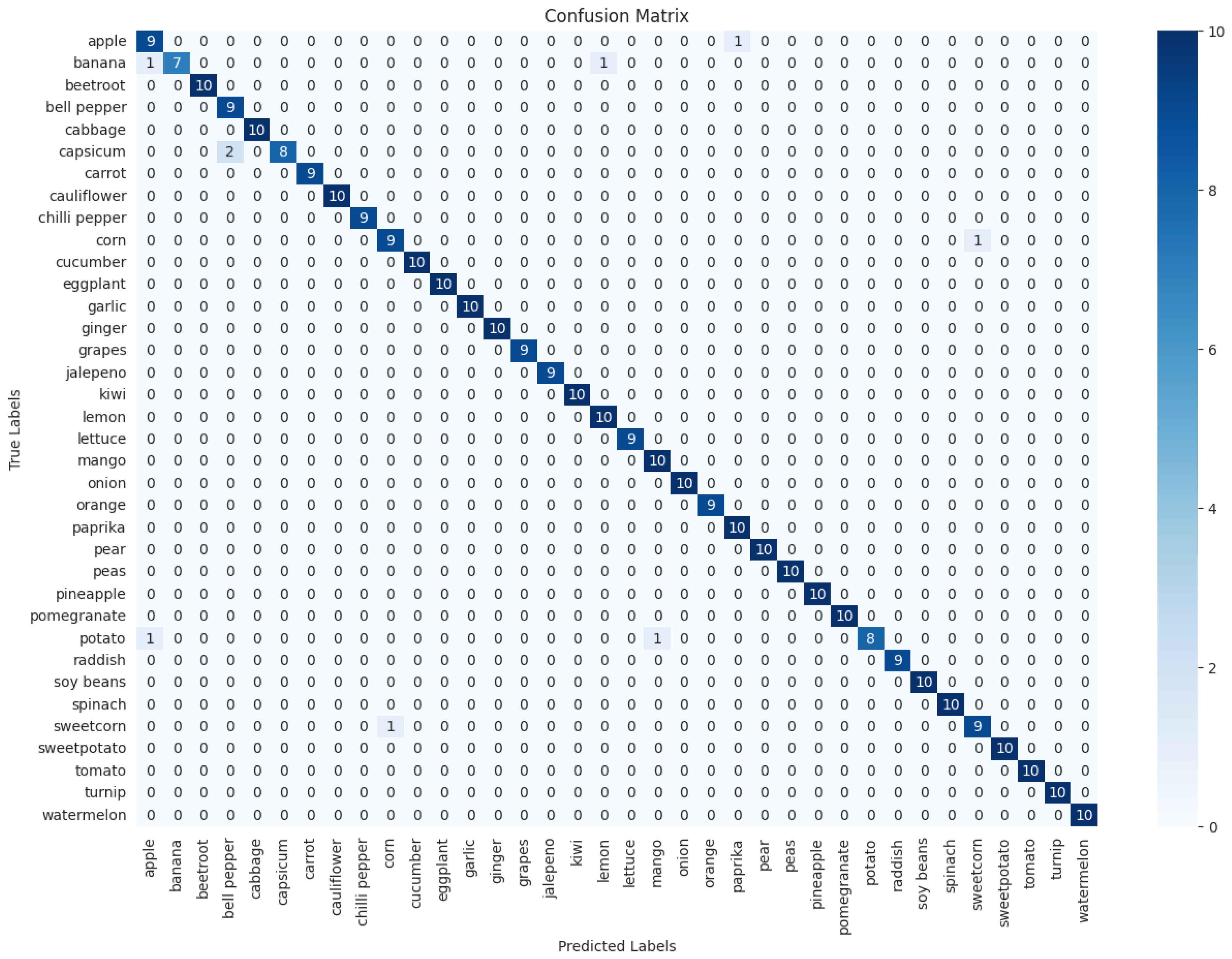

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Min, W.; Wang, Z.; Liu, Y.; Luo, M.; Kang, L.; Wei, X.; Wei, X.; Jiang, S. Large scale visual food recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9932–9949.

2. Mohanty, S.P.; Singhal, G.; Scuccimarra, E.A.; Kebaili, D.; Héritier, H.; Boulanger, V.; Salathé, M. The food recognition benchmark: Using deep learning to recognize food in images. Front. Nutr. 2022, 9, 875143.

3. Khan, T.A.; Ling, S.H. A novel hybrid gravitational search particle swarm optimization algorithm. Eng. Appl. Artif. Intell. 2021, 102, 104263.

4. Rizvi, A.A.; Yang, D.; Khan, T.A. Optimization of biomimetic heliostat field using heuristic optimization algorithms. Knowl.-Based Syst. 2022, 258, 110048.

5. Khan, T.A.; Ling, S.H.; Rizvi, A.A. Optimisation of electrical Impedance tomography image reconstruction error using heuristic algorithms. Artif. Intell. Rev. 2023, 56, 15079–15099.

6. Siddique, A.B.; Bakar, M.A.; Ali, R.H.; Arshad, U.; Ali, N.; Abideen, Z.U.; Khan, T.A.; Ijaz, A.Z.; Imad, M. Studying the Effects of Feature Selection Approaches on Machine Learning Techniques for Mushroom Classification Problem. In Proceedings of the 2023 International Conference on IT and Industrial Technologies (ICIT), Chiniot, Pakistan, 9–10 October 2023; pp. 1–6.

7. Ahmad, I.; Alqarni, M.A.; Almazroi, A.A.; Tariq, A. Experimental evaluation of clickbait detection using machine learning models. Intell. Autom. Soft Comput. 2020, 26, 1335–1344.

8. Bhardwaj, S.; Salim, S.M.; Khan, T.A.; JavadiMasoudian, S. Automated music generation using deep learning. In Proceedings of the 2022 International Conference Automatics and Informatics (ICAI), Varna, Bulgaria, 6–8 October 2022; pp. 193–198.

9. Khan, R.; Kumar, S.; Dhingra, N.; Bhati, N. The use of different image recognition techniques in food safety: A study. J. Food Qual. 2021, 2021, 7223164.

10. Carrillo, H.; Clément, M.; Bugeau, A.; Simo-Serra, E. Diffusart: Enhancing Line Art Colorization with Conditional Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3485–3489.

11. Muhammad, D.; Ahmed, I.; Naveed, K.; Bendechache, M. An explainable deep learning approach for stock market trend prediction. Heliyon 2024, 10, e40095.

12. Khan, T.A.; Ling, S.H.; Mohan, A.S. Advanced gravitational search algorithm with modified exploitation strategy. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; pp. 1056–1061.

13. Huang, Z.; Xie, H.; Fukusato, T.; Miyata, K. AniFaceDrawing: Anime Portrait Exploration during Your Sketching. arXiv 2023, arXiv:2306.07476.

14. Karunanithi, M.; Chatasawapreeda, P.; Khan, T.A. A predictive analytics approach for forecasting bike rental demand. Decis. Anal. J. 2024, 11, 100482.

15. Karunanithi, M.; Braitea, A.A.S.; Rizvi, A.A.; Khan, T.A. Forecasting solar irradiance using machine learning methods. In Proceedings of the 2023 IEEE 64th International Scientific Conference on Information Technology and Management Science of Riga Technical University (ITMS), Riga, Latvia, 5–6 October 2023; pp. 1–4.

16. Ishaq, M.H.; Mustafa, R.; Arshad, U.; ul Abideen, Z.; Ali, R.H.; Habib, A. Deciphering Faces: Enhancing Emotion Detection with Machine Learning Techniques. In Proceedings of the 2023 18th International Conference on Emerging Technologies (ICET), Peshawar, Pakistan, 6–7 November 2023; pp. 310–314.

17. Ahmad, I.; Yousaf, M.; Yousaf, S.; Ahmad, M.O. Fake news detection using machine learning ensemble methods. Complexity 2020, 2020, 8885861.

18. Ahmad, I.; Akhtar, M.U.; Noor, S.; Shahnaz, A. Missing link prediction using common neighbor and centrality based parameterized algorithm. Sci. Rep. 2020, 10, 364.

19. Ahmad, I.; Ahmad, M.O.; Alqarni, M.A.; Almazroi, A.A.; Khalil, M.I.K. Using algorithmic trading to analyze short term profitability of Bitcoin. PeerJ Comput. Sci. 2021, 7, e337.

20. Haider, A.; Siddique, A.B.; Ali, R.H.; Imad, M.; Ijaz, A.Z.; Arshad, U.; Ali, N.; Saleem, M.; Shahzadi, N. Detecting Cyberbullying Using Machine Learning Approaches. In Proceedings of the 2023 International Conference on IT and Industrial Technologies (ICIT), Chiniot, Pakistan, 9–10 October 2023; pp. 1–6.

21. Mashhood, A.; ul Abideen, Z.; Arshad, U.; Ali, R.H.; Khan, A.A.; Khan, B. Innovative Poverty Estimation through Machine Learning Approaches. In Proceedings of the 2023 18th International Conference on Emerging Technologies (ICET), Peshawar, Pakistan, 6–7 November 2023; pp. 154–158.

22. Khan, W.A.; Khan, T.A.; Ali, M.A.; Abbas, S. High level modeling of an ultra wide-band baseband transmitter in MATLAB. In Proceedings of the 2009 International Conference on Emerging Technologies (ICET09), Islamabad, Pakistan, 19–20 October 2009; pp. 194–199.

23. Zhang, S.; Zhang, X.; Wan, S.; Ren, W.; Zhao, L.; Shen, L. Generative adversarial and self-supervised dehazing network. IEEE Trans. Ind. Inform. 2023, 20, 4187–4197.

24. Liu, Y.; Yan, Z.; Ye, T.; Wu, A.; Li, Y. Single nighttime image dehazing based on unified variational decomposition model and multi-scale contrast enhancement. Eng. Appl. Artif. Intell. 2022, 116, 105373.

25. Seth, K. Fruits and Vegetables Image Recognition Dataset. 2022. Available online: https://www.kaggle.com/datasets/kritikseth/fruit-and-vegetable-image-recognition (accessed on 10 February 2025).

| Author(s) | Methodology | Applied on Conv. Layer | Applied on Skip Layer | Applied on Trans. Layer | Applied on Fully Conn. Layer | Dataset | Accuracy | No. of Bits |
|---|---|---|---|---|---|---|---|---|
| Min et al. (2023) [1] | MobileNetV2 | ✓ | ✓ | | | | | |
| Mohanty et al. (2022) [2] | Custom CNN | ✓ | ✓ | | | MNIST, SVHN, CIFAR-10 | | 10 |
| Bhardwaj et al. (2022) [8] | EfficientNet | ✓ | ✓ | | | MNIST, CIFAR-10 | | 12 |
| Khan et al. (2021) [9] | EfficientNet | ✓ | ✓ | ✓ | | Pascal VOC 2012 | | 2, 3, 4, 5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khalid, S.; Ali, R.H.; Khalid, H.B. Fruit and Vegetable Recognition Using MobileNetV2: An Image Classification Approach. Eng. Proc. 2025, 87, 108. https://doi.org/10.3390/engproc2025087108

Khalid S, Ali RH, Khalid HB. Fruit and Vegetable Recognition Using MobileNetV2: An Image Classification Approach. Engineering Proceedings. 2025; 87(1):108. https://doi.org/10.3390/engproc2025087108

Chicago/Turabian Style: Khalid, Sidra, Raja Hashim Ali, and Hassan Bin Khalid. 2025. "Fruit and Vegetable Recognition Using MobileNetV2: An Image Classification Approach" Engineering Proceedings 87, no. 1: 108. https://doi.org/10.3390/engproc2025087108

APA Style: Khalid, S., Ali, R. H., & Khalid, H. B. (2025). Fruit and Vegetable Recognition Using MobileNetV2: An Image Classification Approach. Engineering Proceedings, 87(1), 108. https://doi.org/10.3390/engproc2025087108