1. Introduction

Three-dimensional (3D) printing is the process of creating objects by depositing layers of material on top of each other. The technology has been around for about four decades, having been invented in the early 1980s. Although it began as a slow and costly technique, technological advances have made current machines faster and more affordable than ever. Its most significant advantage is the ability to produce highly complex designs that would otherwise be impossible to manufacture [1].

Besides professional use for prototyping and low-volume manufacturing, 3D printers are becoming widespread among end users, starting with the so-called Maker Movement. The most common type of consumer-grade 3D printer uses fused deposition modeling (FDM), also known as fused filament fabrication (FFF). This work focuses on FDM machines because of their widespread use and their numerous open issues, such as precision and print failure. These printers fail at a rate that depends on the printer’s manufacturer and model. Failures may occur due to misalignment of the print bed or print head, motor slippage, material deformation, lack of adhesion, or other reasons [2].

The 3D printing process must be monitored throughout to prevent the loss of printing material and operator time and to ensure product quality. While some 3D printers come with basic built-in monitors, there are currently no advanced remote control and real-time monitoring systems for 3D printers. Failures and job completion must therefore be monitored on-site, either by operators or by video cameras. Remote video surveillance is one way to oversee the entire printing process in real time. It works well for small-scale production but becomes a challenge for larger and more sensitive production: the video data generated by more than six 3D printers can be overwhelming to transmit and analyze, and sending all of these data for external cloud processing would require significant bandwidth and low-latency communications [3].

2. Materials, Theory, and Methods

2.1. Materials

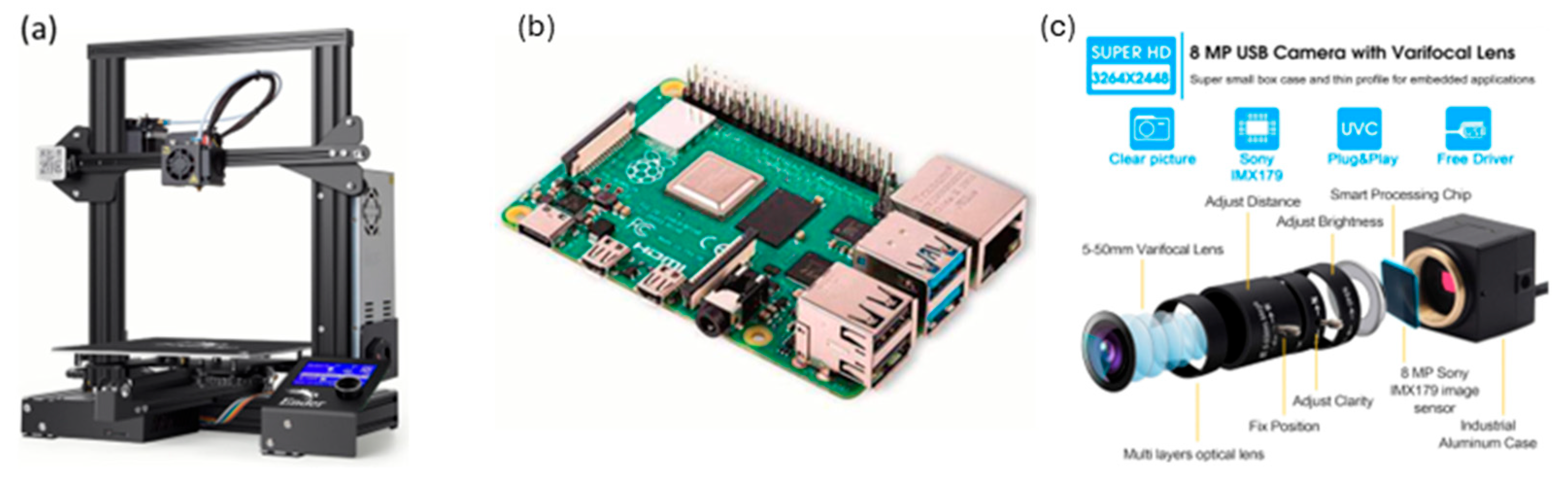

For this project, the team used an “Ender 3” 3D printer (Figure 1a), well-known for its efficiency. The system was integrated with a Raspberry Pi 4 Model B with 4 GB of RAM serving as a remote control hub (Figure 1b), and a manually focused camera (Figure 1c) was used to capture detailed images during the printing process. The parts for the dataset were printed in PLA filament, an environmentally friendly and easy-to-handle material. This material choice supported the acquisition of high-quality data for training the neural network.

2.2. Theory

2.2.1. 3D Printing Errors

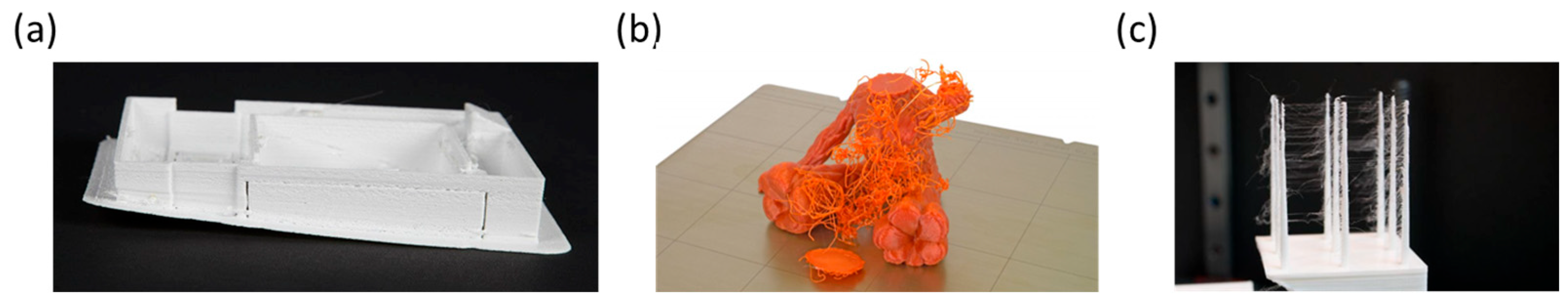

Warping: this is one of the most common problems encountered in 3D printing. It causes the corners of a print to curl up, degrading its appearance, and can even cause the print to pop off the heated bed and fail completely [4] (Figure 2a).

Spaghetti: this print issue looks exactly like it sounds, a big mess of “spaghetti” on and around the print. It is filament that the print head (extruder) deposited in the wrong place because, at some point during the print, the object below moved or collapsed [5] (Figure 2b).

Stringing: strings are fine threads of melted filament that settle in places other than where they are supposed to be on the 3D-printed object. This usually happens because of incorrect settings, so that filament continues to ooze out of the nozzle while the extruder travels to another location to continue printing [6] (Figure 2c).

2.2.2. 3D Printer Communication

Serial communication is a method of sending data one bit at a time in the form of binary pulses: a zero is represented by 0 volts (logic LOW) and a one by 5 volts (logic HIGH). Full-duplex mode is a mode in which both ends can send and receive data simultaneously (Figure 3). Put simply, it is a simultaneous two-way communication method. A telephone call is a familiar example [7].
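As a rough illustration of sending data one bit at a time, the sketch below builds the bit sequence for a single byte. The 8N1 framing (one start bit, eight data bits sent least significant bit first, one stop bit, no parity) is an assumption, since the framing of the printer link is not stated here, and `uart_frame_8n1` is a hypothetical helper name.

```python
def uart_frame_8n1(byte: int) -> list:
    """Return the bits of one 8N1 serial frame as a list of 0s and 1s.

    Assumed framing: start bit (0), 8 data bits LSB first, stop bit (1).
    """
    if not 0 <= byte <= 255:
        raise ValueError("byte must be in the range 0..255")
    # Extract the data bits starting from the least significant bit.
    data_bits = [(byte >> i) & 1 for i in range(8)]
    return [0] + data_bits + [1]


if __name__ == "__main__":
    # The ASCII letter "G" (0x47), the first character of many G-code commands.
    print(uart_frame_8n1(ord("G")))
```

Each 1 in the list corresponds to a logic HIGH interval on the line and each 0 to a logic LOW interval.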

G-code is a programming language for computer numerically controlled (CNC) machines such as 3D printers and CNC mills. It contains a set of commands that the firmware uses to control the printer’s operation and the printhead’s motion (Table 1). G-code for 3D printers is created using a special application called a slicer. This program takes a 3D model and slices it into thin 2D layers. It then specifies the coordinates or path for the printhead to follow to build up these layers. It also controls specific printer functions, such as turning on the heater, fans, and cameras [8].
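To make the idea of G-code commands concrete, the following sketch builds a few command strings in Python. It assumes Marlin-style firmware, where `G28` homes the axes, `G1` performs a linear move, and `M104`/`M140` set the hotend and bed target temperatures; the helper names are hypothetical and are not taken from the project's code or from Table 1.

```python
def _fmt(value: float) -> str:
    """Format a number without trailing zeros (10.5 -> '10.5', 1500 -> '1500')."""
    return f"{value:.3f}".rstrip("0").rstrip(".")


def home_all_axes() -> str:
    """G28: move all axes to their home position."""
    return "G28"


def set_hotend_temp(celsius: int) -> str:
    """M104: set the hotend target temperature without waiting."""
    return f"M104 S{int(celsius)}"


def set_bed_temp(celsius: int) -> str:
    """M140: set the bed target temperature without waiting."""
    return f"M140 S{int(celsius)}"


def linear_move(x=None, y=None, z=None, feedrate=None) -> str:
    """G1: linear move; only the axes that are given are included."""
    parts = ["G1"]
    for letter, value in (("X", x), ("Y", y), ("Z", z), ("F", feedrate)):
        if value is not None:
            parts.append(f"{letter}{_fmt(value)}")
    return " ".join(parts)


if __name__ == "__main__":
    for line in (home_all_axes(), set_hotend_temp(200),
                 linear_move(x=10.5, y=20, feedrate=1500)):
        print(line)
```

A slicer emits long sequences of such lines, one per movement or printer function.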

2.2.3. IoT Technologies—MQTT

MQTT is an OASIS standard messaging protocol for the Internet of Things (IoT). It is designed as an extremely lightweight publish/subscribe messaging transport that is ideal for connecting remote devices with a small code footprint and minimal network bandwidth (Figure 4). Today, MQTT is used in a wide variety of industries, such as automotive, manufacturing, telecommunications, and oil and gas [9].
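The publish/subscribe pattern can be sketched with a toy in-memory broker. This is not an MQTT client and does not speak the MQTT wire protocol; it only mimics how subscribers register topic filters and receive messages published to matching topics, including the `+` (single-level) and `#` (multi-level) wildcards. `ToyBroker`, `topic_matches`, and the topic names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Check a topic against a filter using MQTT-style wildcards."""
    f_parts = topic_filter.split("/")
    t_parts = topic.split("/")
    for i, f in enumerate(f_parts):
        if f == "#":
            return True  # matches this level and everything below it
        if i >= len(t_parts):
            return False  # the filter is longer than the topic
        if f != "+" and f != t_parts[i]:
            return False  # a literal level that does not match
    return len(f_parts) == len(t_parts)


class ToyBroker:
    """Minimal in-memory stand-in for a broker (illustration only)."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Callable[[str, str], None]]] = defaultdict(list)

    def subscribe(self, topic_filter: str, callback: Callable[[str, str], None]) -> None:
        self._subs[topic_filter].append(callback)

    def publish(self, topic: str, payload: str) -> None:
        # Deliver the message to every subscriber whose filter matches.
        for topic_filter, callbacks in self._subs.items():
            if topic_matches(topic_filter, topic):
                for callback in callbacks:
                    callback(topic, payload)


if __name__ == "__main__":
    broker = ToyBroker()
    broker.subscribe("printers/+/commands", lambda t, p: print(t, "->", p))
    broker.publish("printers/ender3/commands", "home")
```

The publisher never addresses a device directly; it only names a topic, which is what keeps the clients decoupled.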

2.2.4. YOLOv5 Pretrained Model

Identifying objects in an image is a routine task for the human brain, but it is far from trivial for a machine. The identification and localization of objects in images is a computer vision task called “object detection”, and several algorithms have emerged in the past few years to tackle the problem. One of the most popular algorithms to date for real-time object detection is YOLO (You Only Look Once) [10].

YOLOv5 is an open-source object detection model developed by Ultralytics, which describes it as “the world’s most loved vision AI”. It represents Ultralytics’ open-source research into future vision AI methods, incorporating the lessons learned and best practices evolved over thousands of hours of research and development [11] (Figure 5).

2.3. Methods

2.3.1. Data Collection

For model training, images of commonly used calibration pieces for 3D printers were collected. These pieces contain the features needed to validate that a printer does not produce specific failures; in the present project, they were instead used to generate the failures to be detected. Taking advantage of the wide range of errors that poor parameterization can produce, the model was also trained on additional classes: layer shifting, detachment, and human interaction on the print bed (Figure 6).

Once a dataset of approximately 2500 images had been obtained, it was labeled using LabelImg v1.8.6, a tool for annotating the coordinates of the object to be detected within each image (Figure 7).
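The coordinate annotation step can be illustrated with a small conversion. YOLOv5 label files store one line per object as `class x_center y_center width height`, with all four values normalized to the image size. The sketch below assumes the boxes are available as pixel corner coordinates (as in Pascal VOC style annotations); whether the authors exported that format or YOLO text files directly is not stated, and `voc_to_yolo` is a hypothetical helper.

```python
def voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert pixel corner coordinates to normalized YOLO box values."""
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def yolo_label_line(class_id, box, img_w, img_h):
    """Format one line of a YOLO label file for a single object."""
    values = voc_to_yolo(*box, img_w, img_h)
    return f"{class_id} " + " ".join(f"{v:.6f}" for v in values)


if __name__ == "__main__":
    # Hypothetical example: class 0, a 200 x 200 px box in a 640 x 480 image.
    print(yolo_label_line(0, (100, 50, 300, 250), 640, 480))
```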

2.3.2. Control System

For the control system, the Python library PySerial was used; given the port and baud rate, it establishes the connection with the printer. The printer control logic was then developed on top of this connection, enabling the management of temperatures, movement, and monitoring. A user interface designed with VueJS sends actions via MQTT from any computer to the device (Figure 8).
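A minimal sketch of this control path, under stated assumptions, is shown below: a JSON action (as it might arrive in an MQTT message) is translated into a G-code line and written to the serial link, and replies are read until the firmware acknowledges with `ok`. The port name, the 115200 baud rate, the JSON message shape, the action names, and the Marlin-style `ok` acknowledgement are all assumptions for illustration, not the authors' implementation.

```python
import json


def open_printer(port="/dev/ttyUSB0", baudrate=115200):
    """Open the serial link with PySerial (port and baud rate are assumed)."""
    import serial  # provided by the pyserial package
    return serial.Serial(port, baudrate, timeout=2)


def send_gcode(link, command, max_lines=20):
    """Write one G-code line and collect replies until 'ok' or a timeout."""
    link.write((command.strip() + "\n").encode("ascii"))
    replies = []
    for _ in range(max_lines):
        line = link.readline().decode("ascii", errors="replace").strip()
        if not line:
            break  # read timed out with no data
        replies.append(line)
        if line.startswith("ok"):
            break  # command acknowledged
    return replies


# Hypothetical mapping from user-interface actions to G-code lines.
ACTIONS = {
    "home": lambda msg: "G28",
    "set_hotend": lambda msg: f"M104 S{int(msg['celsius'])}",
    "set_bed": lambda msg: f"M140 S{int(msg['celsius'])}",
}


def handle_message(link, payload):
    """Decode a JSON action and forward it to the printer."""
    msg = json.loads(payload)
    command = ACTIONS[msg["action"]](msg)
    return send_gcode(link, command)
```

`send_gcode` only needs an object with `write` and `readline`, so the logic can be exercised with a stand-in object before connecting real hardware.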

The trained error detection model was deployed in this same control system. Using the OpenCV and PyTorch libraries, detections were performed in real time during printing. Because video transmission of the printing process requires high bandwidth and low latency, live video was replaced by the computer vision system, and the capability to capture a photo of the print was added instead. Additionally, an option to send the model’s detection images through the Telegram API was included. All notifications can also be viewed in the user interface as console messages.
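One way such a detection step could look is sketched below, assuming custom YOLOv5 weights loaded through `torch.hub` and a camera readable by OpenCV. The weights path, camera index, class label strings, and the 0.5 confidence threshold are hypothetical; the authors' actual pipeline may differ.

```python
def load_model(weights_path):
    """Load custom YOLOv5 weights through torch.hub (needs network on first use)."""
    import torch
    return torch.hub.load("ultralytics/yolov5", "custom", path=weights_path)


def grab_frame(camera_index=0):
    """Capture a single frame and convert it from BGR to RGB."""
    import cv2
    cap = cv2.VideoCapture(camera_index)
    try:
        ok, frame_bgr = cap.read()
    finally:
        cap.release()
    if not ok:
        raise RuntimeError("could not read a frame from the camera")
    return cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)


def detect(model, frame_rgb):
    """Run the model and return a list of (class name, confidence) pairs."""
    results = model(frame_rgb)
    table = results.pandas().xyxy[0]  # one row per detected box
    return [(row["name"], float(row["confidence"])) for _, row in table.iterrows()]


# Assumed label strings; the real names depend on how the dataset was labeled.
ALERT_CLASSES = {"warping", "spaghetti", "stringing"}


def alerts(detections, min_confidence=0.5):
    """Keep only detections that should trigger a notification."""
    return [(name, conf) for name, conf in detections
            if name in ALERT_CLASSES and conf >= min_confidence]


if __name__ == "__main__":
    model = load_model("best.pt")  # hypothetical weights file
    print(alerts(detect(model, grab_frame())))
```

The heavy imports sit inside the functions so that the filtering logic in `alerts` can be checked on a machine without PyTorch or a camera.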

3. Results

The detection tests provided an understanding of the model’s behavior and of the environmental conditions needed for its best performance. The confusion matrix in Figure 9 shows that the model performs well in terms of error detection accuracy, particularly for “spaghetti”, which it detected with 89% accuracy and confused with another error type, detachment, in only 1% of cases. This was the only instance in which the model showed slight confusion with another error type. It can be explained by the fact that whenever detachment occurs, spaghetti is also present, because the piece is then printed in mid-air. Since the spaghetti error was labeled under circumstances where detachment was always present, this confusion does not represent a misclassification with respect to the warnings that such detections may generate in the future.

For this analysis, various errors were intentionally generated with the device in operation to compare the detected images with the actual errors. Although the model can detect several error types, the analysis focused solely on those within the project’s scope, for which more training images were available.

To evaluate the neural network’s detections, several metrics were used. In addition to the confusion matrix from the previous analysis, intersection over union (IoU) was computed (Figure 10). Since the trained model uses bounding boxes to display detections, these boxes can be compared with the ground-truth boxes in the actual image to obtain a percentage of accuracy (Figure 11).

IoU is the ratio of the area shared by the predicted and ground-truth bounding boxes to the total area covered by both boxes (their union). A detection with an IoU below 0.5 is considered a false positive.
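The IoU computation and the 0.5 threshold can be sketched as follows. Boxes are assumed to be axis-aligned and given as `(x1, y1, x2, y2)` corner coordinates; the function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(predicted, ground_truth, threshold=0.5):
    """Treat a detection as correct when its IoU reaches the threshold."""
    return iou(predicted, ground_truth) >= threshold


if __name__ == "__main__":
    # Two 2 x 2 boxes overlapping in a 1 x 1 square: IoU = 1 / 7.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

In this example the overlap is 1 and the union is 4 + 4 - 1 = 7, so the detection would fall below the 0.5 threshold.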

4. Discussion

The device has demonstrated its capability to fulfill all previously established functions. The control strategy enables the real-time pausing and halting of the printer, as well as axis control, monitoring, and temperature adjustment. Integrating the control strategy with the computer vision system facilitated error correction, preventing material and time losses. Validation tests showed that the device is highly proficient at issuing alerts, enabling users to reliably halt failed prints. This is particularly valuable in production and manufacturing environments, where efficiency and quality are paramount.

Future research should address detachment and layer-shifting errors more specifically, as they currently pose challenges for real-time correction. Investigating additional strategies, such as extruder and fan speed control, could enhance the device’s versatility and correction capabilities.

5. Conclusions

This project has culminated in a highly effective remote-control device for the real-time detection and correction of errors in FDM 3D printers. Using a Raspberry Pi computer and a neural network trained from the pretrained YOLOv5 model on a dataset of self-authored images, the device successfully detected errors such as warping, spaghetti, and stringing, as well as additional ones such as detachment and layer shifting. Furthermore, the detection of human interaction through hand detection adds an extra level of supervision and control during the printing process.

In summary, this project has achieved a significant milestone in improving the FDM 3D printing process while opening the door to future research and enhancements promising greater efficiency and precision in additive manufacturing. The ongoing expansion and refinement of the remote-control device have the potential to have a significant impact on the 3D printing industry.

Author Contributions

Conceptualization, H.R. and K.P.; methodology, H.R. and C.D.; software, H.R. and K.P.; validation, C.D., H.R. and J.C.; formal analysis, C.D., H.R. and J.C.; investigation, H.R. and K.P.; resources, H.R. and K.P.; data curation, H.R.; writing—original draft preparation, H.R. and C.D.; writing—review and editing, H.R.; visualization, H.R.; supervision, C.D. and J.C.; project administration, H.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this article are not readily available because they are part of a minimum viable product currently under development. Requests to access the datasets should be directed to henry.requena@uac.edu.co.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).