1. Introduction

Localization is a critical challenge in autonomous vehicle navigation [1]. In this paper, we propose a high-definition (HD) map-based approach to enhancing localization accuracy. The HD map is a directed graph of GPS coordinates linked to lidar scans and can be expanded with data from other sensors, such as cameras and radar, for greater detail [2,3]. The core issue addressed is whether a lidar-generated point cloud, combined with similarity metrics, can accurately determine the vehicle’s location. We assert that this is feasible using computationally efficient algorithms. While human drivers often rely on visual matching to locate themselves, computer-based visual matching offers unique advantages and challenges. Our focus here is on lidar point cloud matching [4]. Lidar output consists of three-dimensional point sets, which are generally considered more computationally tractable than color images. This research builds on our previous work [5,6,7], funded by the National Science Foundation (NSF) and Florida Polytechnic University. Using our autonomous vehicle platform, we collected over 30 min of synchronized lidar scans and GPS coordinates during campus-wide driving. This dataset is employed to validate the proposed point cloud matching algorithms, similarity metrics, and localization methods.

2. Data Collection

A point cloud is defined as a finite set of points in $\mathbb{R}^3$; namely, any set of the following form is a point cloud:

$$P = \{x_1, x_2, \ldots, x_n\}, \quad x_i \in \mathbb{R}^3.$$

In an AV application, the lidar sensor output will be a point cloud. Even though the points $x_i$ can be indexed by an integer, any permutation will result in the same point cloud object. Furthermore, even if these points are stored in an array-like structure, with each point having an index (e.g., a list or matrix), we still consider the point cloud to be a set.

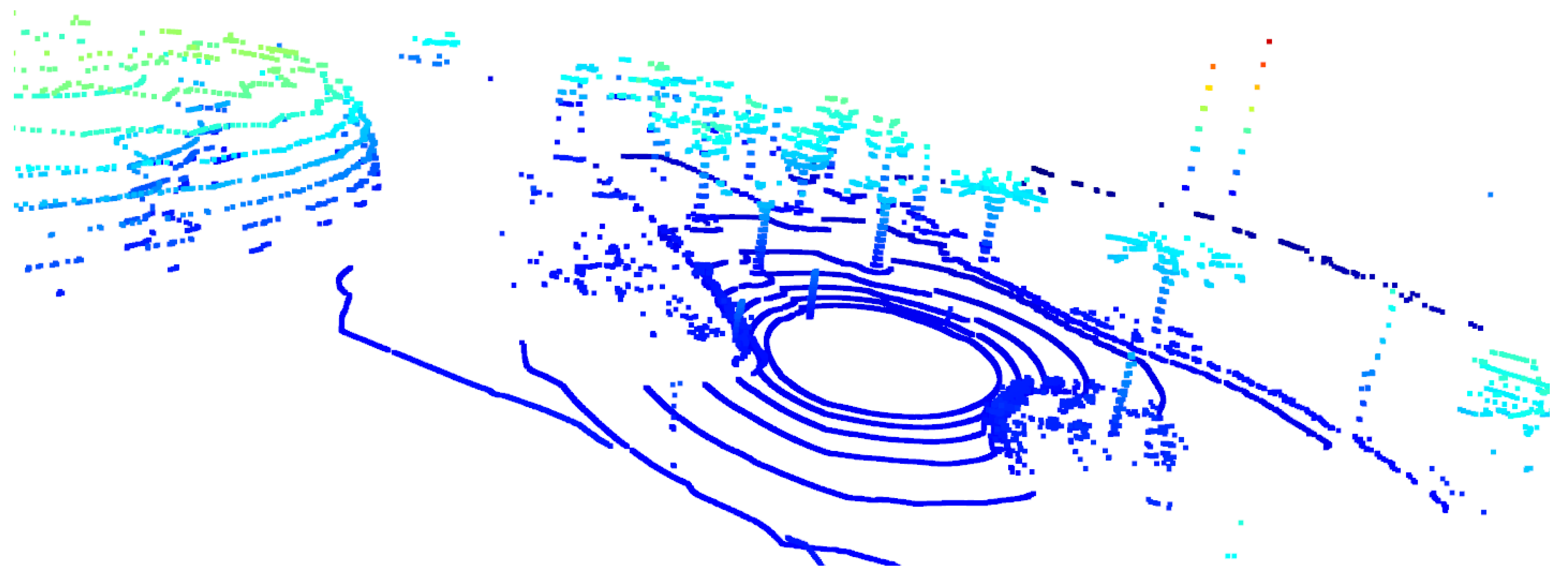

An example point cloud is shown in Figure 1, and the Google Street View image of a nearby location is shown in Figure 2. This is the entrance of the Innovation Science and Technology (IST) building at Florida Polytechnic University.

The point cloud shown in Figure 1 is one of our 19,500 point clouds collected while we drove on the Florida Poly campus using our AV research vehicle. This particular point cloud consists of 18,165 three-dimensional vectors; in other words, it can be stored as an $18{,}165 \times 3$ real-valued matrix. The three-dimensional (3D) visualization shown in Figure 1 was generated using the Python Open3D library.

Almost all of the 19,500 point clouds have a different number of points. Figure 3 shows a histogram of the number of points per point cloud. The mean is 21,533 points and the standard deviation is 1685 points. The Velodyne VLP-16 lidar sensor used in this application has 16 lasers, each scanning a different polar angle. In this study, a 360-degree lidar scan was completed in 0.1 s; in other words, the lidar frame rate was 10 fps.

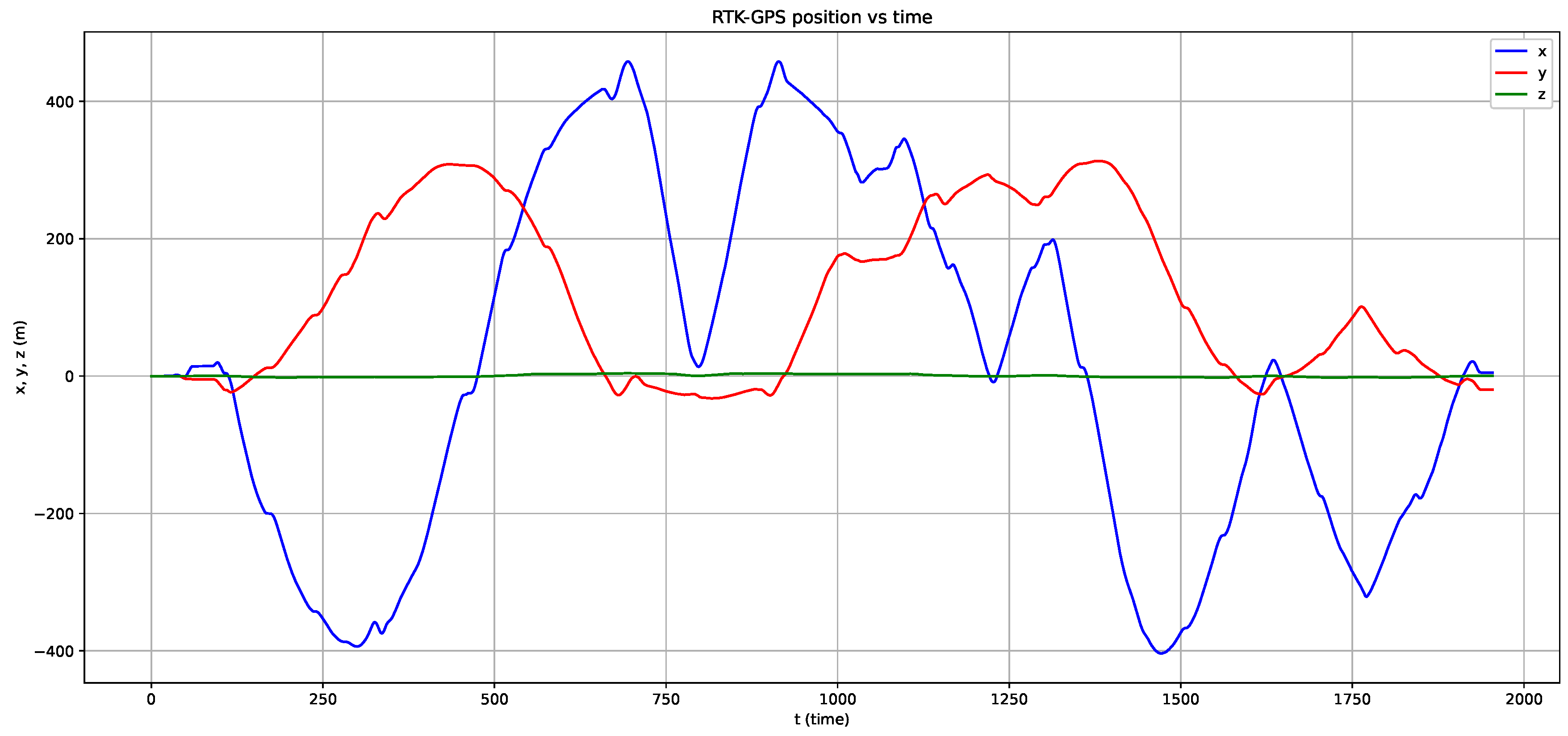

While driving on the Florida Poly campus, we also recorded our AV research vehicle’s GPS location using an RTK-GPS. The particular RTK-GPS system used in this study comprised the u-blox ZED-F9P sensor and the C099-F9P application board. The RTK-GPS output rate was set to 2 Hz, and the Florida Department of Transportation’s (FDOT) Florida Permanent Reference Network was used as base stations. In Figure 4, the path followed by our AV research vehicle is shown in Cartesian coordinates, with the origin at a point close to the newly constructed Barnett Applied Research Center (BARC). The “map” view is shown in Figure 5. The RTK-GPS output is in plain-text format, as described in the National Marine Electronics Association (NMEA) 0183 standard. Once this output is converted to KMZ format, it can be viewed in Google Earth, as shown in Figure 5.

The data collection process took approximately 1950 s, a little over half an hour. In the end, we had a sequence of lidar point clouds paired with RTK-GPS location measurements, as follows:

$$(P_1, g_1),\ (P_2, g_2),\ \ldots,\ (P_N, g_N),$$

where $N = 19{,}500$, the $P_i$’s are point clouds, and the $g_i$’s are the location tags as three-dimensional vectors. Since the RTK-GPS output rate is 2 Hz and the lidar frame rate is 10 fps, we first synchronized the clocks of these two sensors and then interpolated the RTK-GPS data by a factor of 5 to bring it to the 10 fps lidar rate.
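The upsampling of the 2 Hz location data to the 10 fps lidar rate can be sketched as follows. This is a minimal illustration, assuming linear interpolation on a shared clock; the text does not state which interpolation scheme was used, and the function name `upsample_gps` and the synthetic track are hypothetical.

```python
import numpy as np

def upsample_gps(t_gps, xyz_gps, t_lidar):
    """Interpolate GPS positions of shape (M, 3) onto the lidar timestamps.

    Assumption: linear interpolation, applied independently per coordinate.
    """
    xyz_gps = np.asarray(xyz_gps, dtype=float)
    columns = [np.interp(t_lidar, t_gps, xyz_gps[:, d]) for d in range(3)]
    return np.column_stack(columns)

# Synthetic straight-line track sampled at 2 Hz over 2 s (hypothetical data).
t_gps = np.linspace(0.0, 2.0, 5)        # 0.0, 0.5, ..., 2.0 s
xyz_gps = np.column_stack([2.0 * t_gps, -1.0 * t_gps, np.zeros_like(t_gps)])

# Lidar timestamps at 10 fps over the same interval.
t_lidar = np.linspace(0.0, 2.0, 21)     # 0.0, 0.1, ..., 2.0 s

location_tags = upsample_gps(t_gps, xyz_gps, t_lidar)
```

Each row of `location_tags` then plays the role of the location tag for the lidar scan with the same timestamp.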

3. Point Cloud Similarity Metrics

In this section, we will consider the problem of measuring the similarity between two point clouds. If $P_1$ and $P_2$ are two point clouds, and if it is possible to find a translation vector $t \in \mathbb{R}^3$ and a rotation matrix $R \in SO(3)$, such that the following two sets significantly overlap:

$$P_1 \quad \text{and} \quad R P_2 + t = \{R x + t : x \in P_2\},$$

then it is reasonable to call $P_1$ and $P_2$ similar. In other words, one can say that $P_1$ and $P_2$ match. The main idea behind this lidar-based localization is point cloud similarity or matching. Defining an unambiguous and computationally simple similarity measure is therefore of extreme importance.

Since a rotation about the center of mass of a point cloud leaves that center fixed, we may assume, without loss of generality, that the two point clouds have already been translated so that their centers of mass coincide. To measure the degree of overlap, we propose the use of a distance metric defined for point clouds. Therefore, if $d(\cdot, \cdot)$ is a measure of distance between two point clouds, our proposed similarity metric will be as follows:

$$S_{\mathrm{ideal}}(P_1, P_2) = \min_{R \in SO(3)} d(P_1, R P_2),$$

where a smaller value indicates a closer match. Because of the already assumed center of mass alignment between $P_1$ and $P_2$, we drop the translation term $t$. In this equation, $SO(3)$ represents the set of rotation matrices.

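In the literature, there are various point cloud distance metrics used, such as Chamfer distance, Hausdorff distance, one-sided Hausdorff distance, and Sinkhorn distance. In this study, we adopt the Chamfer distance, which is defined as follows:

$$d(P_1, P_2) = \frac{1}{n_1} \sum_{i=1}^{n_1} \| x_i - \mathrm{NN}(x_i, P_2) \| + \frac{1}{n_2} \sum_{j=1}^{n_2} \| y_j - \mathrm{NN}(y_j, P_1) \|,$$

where $\{x_1, \ldots, x_{n_1}\}$ is the point cloud $P_1$, consisting of $n_1$ three-dimensional points, and $\{y_1, \ldots, y_{n_2}\}$ is the point cloud $P_2$, consisting of $n_2$ three-dimensional points. The function $\mathrm{NN}$ is called the nearest neighbor function and is defined as follows:

$$\mathrm{NN}(x, P) = \arg\min_{p \in P} \| x - p \|,$$

where $\| x - p \|$ is the Euclidean distance between $x$ and $p$. In other words, $\mathrm{NN}(x, P)$ is the point in the point cloud $P$ that is closest to $x$ in Euclidean distance.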
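A brute-force Chamfer distance can be sketched in a few lines of NumPy. The normalization used here (the mean of unsquared nearest-neighbor distances, summed over both directions) is one common variant and is an assumption, as are the function names. The all-pairs distance matrix is only practical for small clouds; for scans with around 20,000 points, a spatial index such as a k-d tree would normally replace it.

```python
import numpy as np

def nearest_neighbor_distances(A, B):
    """For each row of A (n, 3), the Euclidean distance to its nearest row of B (m, 3)."""
    diff = A[:, None, :] - B[None, :, :]        # pairwise differences, shape (n, m, 3)
    dist = np.sqrt((diff ** 2).sum(axis=2))     # pairwise distances, shape (n, m)
    return dist.min(axis=1)

def chamfer_distance(P1, P2):
    """Symmetric Chamfer distance (assumed variant: mean of unsquared distances)."""
    P1 = np.asarray(P1, dtype=float)
    P2 = np.asarray(P2, dtype=float)
    return (nearest_neighbor_distances(P1, P2).mean()
            + nearest_neighbor_distances(P2, P1).mean())

# Two tiny clouds: P2 is P1 lifted by one unit along z.
P1 = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
P2 = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 1.0]])

d12 = chamfer_distance(P1, P2)
d_perm = chamfer_distance(P1, P1[::-1])   # same set, rows in a different order
```

Because the distance depends only on nearest neighbors, reordering the rows of a cloud does not change the result, which is consistent with treating a point cloud as a set.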

4. A Simplified Similarity Metric

One problem with the “ideal” similarity definition given in the previous section is the complexity of the search over $SO(3)$ and the computational cost of the Chamfer distance. Since our point clouds have around 20,000 points, a direct computation of the nearest neighbor function requires a for loop over all $n \approx 20{,}000$ points of the other cloud. The Chamfer distance formula itself sums over all points as well, i.e., another for loop of size $n$. On top of all of this, we have a search over $SO(3)$, i.e., a further search over the rotation angles.

If the AV is traveling at higher speeds, we have a very short time interval in which to finish all localization calculations. Therefore, we would like to propose less “ideal” but computationally simpler similarity metrics that are likely to result in similar matching scores.

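First, consider each point cloud in spherical coordinates, as follows:

$$P = \{(r_i, \theta_i, \phi_i) : i = 1, \ldots, n\}.$$

Then, drop the radius information and generate the following set of two-dimensional points:

$$Q = \{(\theta_i, \phi_i) : i = 1, \ldots, n\}.$$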

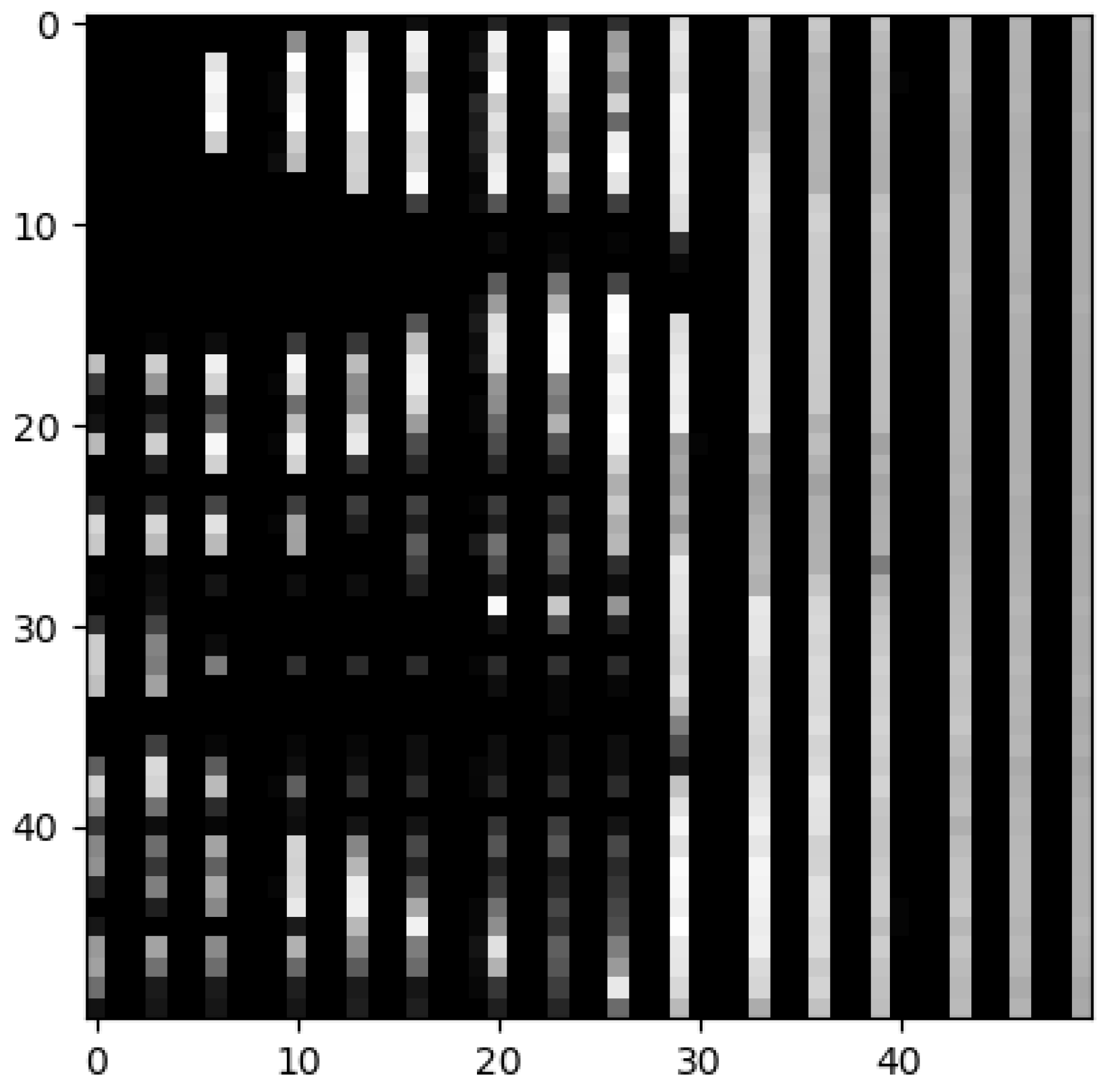

Dropping the radius information will result in some loss of information, but greatly simplifies the whole process. Then, consider the two-dimensional (2D) histogram of this set $Q$, which is effectively a matrix, $H$, of size $b \times b$, where $b$ is the number of bins per axis used in 2D histogram generation. For a typical $b$ of 50–100, the resulting $b \times b$ matrix can be viewed as an image. For example, for the lidar scan shown in Figure 1, histogram analysis will result in the image shown in Figure 6.
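The conversion from a point cloud to its histogram image can be sketched as below. The angle convention (azimuth from `arctan2`, polar angle from `arccos`), the histogram ranges, and the function name `angle_histogram` are assumptions made for illustration; the random cloud merely stands in for a lidar scan.

```python
import numpy as np

def angle_histogram(points, b=64):
    """Build a b-by-b histogram of (azimuth, polar angle) for an (n, 3) point cloud.

    Assumed convention: azimuth in [-pi, pi], polar angle in [0, pi].
    The radius is discarded, as described in the text.
    """
    points = np.asarray(points, dtype=float)
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.sqrt(x ** 2 + y ** 2 + z ** 2)
    keep = r > 0                                   # skip points at the origin
    theta = np.arctan2(y[keep], x[keep])           # azimuth
    phi = np.arccos(np.clip(z[keep] / r[keep], -1.0, 1.0))  # polar angle
    H, _, _ = np.histogram2d(
        theta, phi, bins=b, range=[[-np.pi, np.pi], [0.0, np.pi]]
    )
    return H

# Synthetic stand-in for a lidar scan (hypothetical data).
rng = np.random.default_rng(0)
cloud = rng.normal(size=(1000, 3))
H = angle_histogram(cloud, b=50)
```

The matrix `H` can then be displayed as a grayscale image, in the same spirit as the histogram image described above.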

Rotations of a point cloud $P$ correspond to translations in the $Q$ representation. Since we are using the spherical coordinate system, all translations are modulo $2\pi$ in the first and the second coordinates. If we have two point clouds, $P_1$ and $P_2$, with histogram matrices $H_1$ and $H_2$, instead of using the similarity metric defined in the previous section, we use the following:

$$S(P_1, P_2) = \max_{u, v} \sum_{i=0}^{b-1} \sum_{j=0}^{b-1} H_1(i, j)\, H_2(i + u, j + v).$$

This can be used as a computationally simpler but not necessarily equivalent alternative. Note that all $H$ matrix indices are considered modulo $b$. The double summation used in the above equation is the well-known correlation formula commonly used in image processing. To capture all possible rotations, we allow row and column circular shifts and choose the maximum possible value as the similarity score. The double summation, as a function of $(u, v)$, can be computed using Fast Fourier Transform (FFT) techniques.
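The FFT-based evaluation of the circular correlation can be sketched as follows. The function names are hypothetical, and no normalization of the histograms is applied here, which is an assumption; the text does not say whether the scores were normalized.

```python
import numpy as np

def circular_correlation(H1, H2):
    """C[u, v] = sum_{i,j} H1[i, j] * H2[(i + u) % b, (j + v) % b], via the FFT."""
    F1 = np.fft.fft2(H1)
    F2 = np.fft.fft2(H2)
    return np.real(np.fft.ifft2(np.conj(F1) * F2))

def histogram_similarity(H1, H2):
    """Maximum of the circular correlation over all row and column shifts."""
    return circular_correlation(H1, H2).max()

# Toy check: H2 is H1 circularly shifted by 3 rows and 5 columns.
rng = np.random.default_rng(1)
H1 = rng.integers(0, 5, size=(8, 8)).astype(float)
H2 = np.roll(H1, shift=(3, 5), axis=(0, 1))

C = circular_correlation(H1, H2)
best_shift = np.unravel_index(np.argmax(C), C.shape)
score = histogram_similarity(H1, H2)
```

For this toy pair, the correlation surface `C` peaks at the shift that realigns the two matrices, and the peak value equals the sum of squares of `H1`. Evaluating all $b^2$ shifts this way costs on the order of $b^2 \log b$ operations instead of $b^4$ for the direct double sum.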

In Figure 7, the image representations of two point clouds are shown. The one on the left is for the 4000th point cloud, and the one on the right is for the

point cloud.

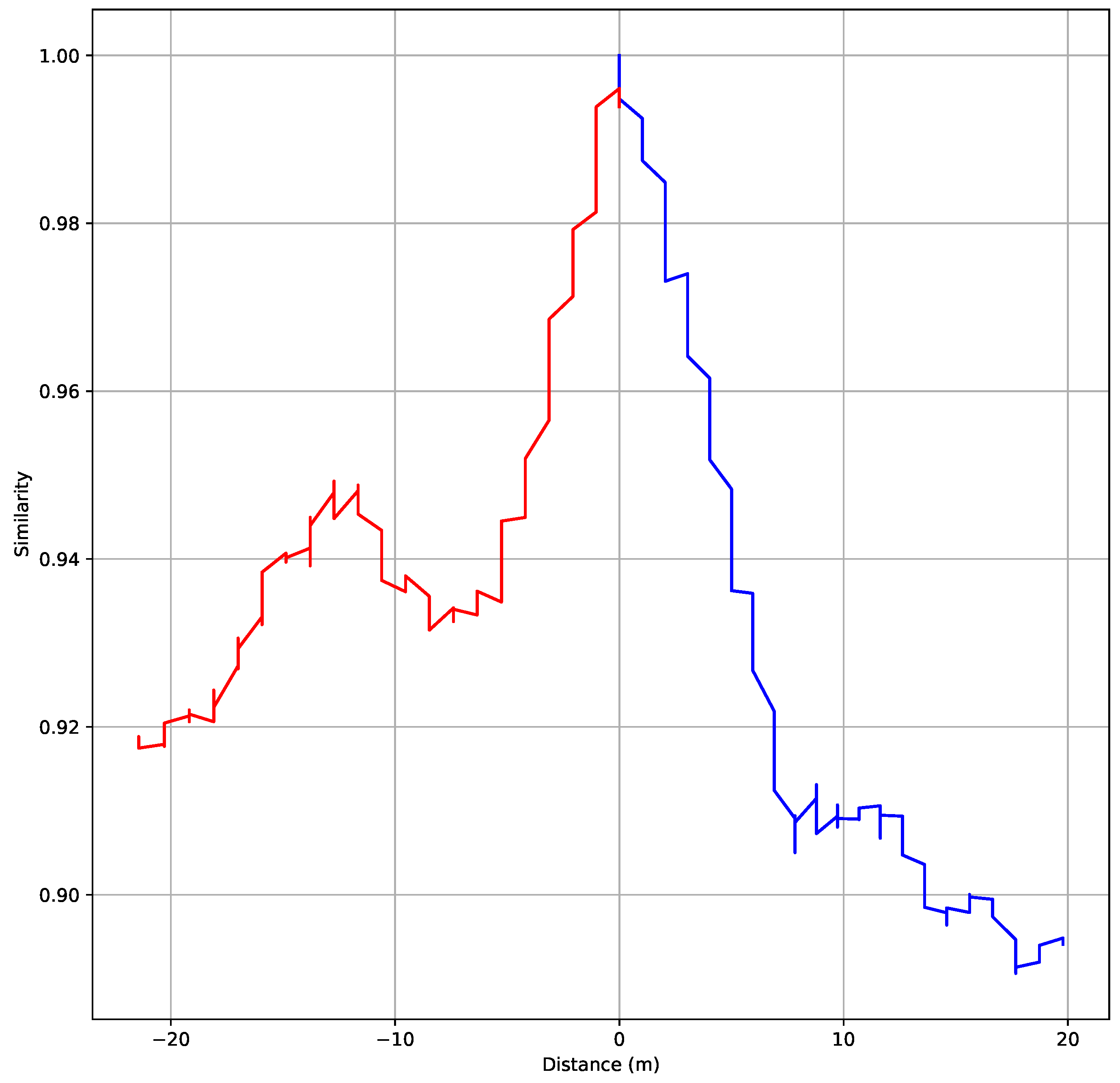

Figure 8 shows how the newly defined similarity changes with the distance. It is clear that, as we move away from the point corresponding to the

lidar scan, or the location at time

, the similarity between the

lidar scan and the lidar scan at the new location drops sharply.

Let us perform the same analysis at time

. In

Figure 9, the image representations of two point clouds are shown. The one on the left is for the

point cloud, and the one on the right is for the

point cloud.

Figure 10 shows how the newly defined similarity changes with the distance. It is clear that, as we move away from the point corresponding to the

lidar scan, or the location at time

, the similarity between the

lidar scan and the lidar scan at the new location drops sharply.

5. Proposed Localization Approach

The analysis presented above shows the feasibility of a two-dimensional, binary-search-type approach for localization. We assume that our current location is known and that, after 1 s, we obtain a new lidar scan. Using this new lidar scan and the proposed similarity scoring methodology, we can update our location: among all the possible locations that can be reached within 1 s, we choose the location that results in the maximum similarity. More formally, we can write the following:

- S1: Start with $k = 0$, and a known current location, $\hat{g}_0$.

- S2: After 1 s, obtain a new lidar scan, $\tilde{P}_{k+1}$.

- S3: Let $\mathcal{G}_k$ be the set of all possible GPS locations in the high-definition map that can be reached from $\hat{g}_k$ within 1 s.

- S4: Let $\mathcal{P}_k$ be the set of lidar point clouds for the GPS locations in $\mathcal{G}_k$. Recall that the database that maps a GPS location to a point cloud is called the high-definition map.

- S5: Find the point cloud in $\mathcal{P}_k$ that is the most similar to $\tilde{P}_{k+1}$.

- S6: Update $\hat{g}_{k+1}$ as the GPS location corresponding to the point cloud found in Step 5.

- S7: Increase $k$ by 1, and go to Step 2.
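The steps above can be sketched as a short loop. This is an illustration under stated assumptions, not the authors' implementation: the HD map is modeled as a plain list of (location, scan) pairs, reachability within 1 s is approximated by a hypothetical `max_travel` radius, and the similarity function is passed in by the caller. The toy "scans" are single numbers so that the example stays self-contained.

```python
import math

def localize_step(current_location, new_scan, hd_map, similarity, max_travel):
    """One pass of steps S3 to S6: return the updated location estimate.

    hd_map: list of (location, scan) pairs (assumed representation).
    max_travel: hypothetical radius of locations reachable within 1 s.
    """
    # S3 and S4: map entries whose location is reachable from the current one.
    candidates = [
        (loc, scan) for loc, scan in hd_map
        if math.dist(loc, current_location) <= max_travel
    ]
    if not candidates:
        return current_location          # nothing reachable: keep the old estimate
    # S5: the stored scan most similar to the new scan.
    best_location, _ = max(candidates, key=lambda c: similarity(new_scan, c[1]))
    # S6: its location tag becomes the new estimate.
    return best_location

# Toy HD map: scalar "scans" tagged with 3D locations (hypothetical data).
hd_map = [
    ((0.0, 0.0, 0.0), 10.0),
    ((5.0, 0.0, 0.0), 20.0),
    ((10.0, 0.0, 0.0), 30.0),
    ((50.0, 0.0, 0.0), 19.0),   # best match for the first scan, but unreachable
]

def toy_similarity(a, b):
    """Higher is more similar (stand-in for the histogram correlation score)."""
    return -abs(a - b)

location = (0.0, 0.0, 0.0)               # S1: known starting location
trajectory = [location]
for new_scan in [19.0, 31.0]:            # S2 and S7: one new scan per iteration
    location = localize_step(location, new_scan, hd_map, toy_similarity, max_travel=12.0)
    trajectory.append(location)
```

Restricting the search to reachable locations keeps each update cheap and prevents a jump to a distant place that happens to look similar, as the fourth map entry in the toy example illustrates.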