Abstract

Civil aviation safety is crucial to the airline transportation industry, and the effective prevention and analysis of accidents are essential. This paper delves into the mining of unstructured textual information within accident reports, tracing the evolution from manual rules to machine learning and then to advanced deep learning techniques. We particularly highlight the advantages of text extraction methods that leverage large language models. We propose an innovative approach that integrates TF-IDF keyword extraction with large language model prompted filtering to scrutinize the causes of accidents involving civil transport aircraft. By analyzing the keywords before and after filtering, this method significantly enhances the efficiency of information extraction, minimizes the need for manual annotation, and thus improves the overall effectiveness of accident prevention and analysis. This research is not only pivotal in preventing similar incidents in the future but also introduces new perspectives for conducting aviation accident investigations and promotes the sustainable development of the civil aviation industry.

1. Introduction

Civil aviation safety is regarded as the lifeline of the airline industry, with the effective prevention of aviation accidents consistently being a key focus of civil aviation operations. The analysis of accident causation plays a pivotal role in this regard as it thoroughly examines the various factors involved in accidents, thereby revealing potential systemic flaws and operational errors. The effective analysis of accidents not only prevents the recurrence of similar incidents but also strengthens public confidence in air travel safety, thus fostering the sustained and healthy development of the entire civil aviation industry.

A critical aspect of accident analysis is the in-depth exploration of the unstructured textual information contained in accident reports. These reports consist of detailed descriptions and hold a wealth of key information and event-related factors that are invaluable for statistical and causal analyses. Because such text is rich in content and dense in information, managing and analyzing it has become a significant challenge in the study of accident causation.

This paper introduces a method for analyzing the causes of civil transport aircraft accidents. First, keywords are extracted from the original accident text using term frequency–inverse document frequency (TF-IDF) [1]. A large language model, guided by prompts, then filters the accident text to retain the relevant information. The filtered text undergoes a second round of TF-IDF keyword extraction, and the two sets of results are compared. Prompts tailored to each type of accident direct the model to keep the filtered text focused on the key factors behind the accidents.

2. Development of Research Questions

Research into the extraction of unstructured text information dates back to 1991, when Lisa F. Rau and colleagues used manually crafted rules to identify and extract company names from English texts. Initially, such studies relied primarily on manually created rules, dictionaries, orthographic features, and ontologies. For example, Hu Yan [2] and others developed a framework based on the HTML structure for web information extraction, creating a system that acquired specialized web-based knowledge by using regular expressions to match web pages with thematic content. S. Mukherjea [3] introduced a biological annotation system, BioAnnotator, which used domain-specific dictionary lookups to identify known terms and rule engines to discover new terms. Such rule-based methods require little training data, can identify entities accurately, are relatively easy to implement, and are highly interpretable. However, their reliance on the accuracy and completeness of the rules limits their ability to generalize and to handle complex linguistic phenomena.

As machine learning technology [4] has advanced, methods based on machine learning have become mainstream. These methods employ extensive annotated data to train models, using supervised learning to automatically extract features from text. Machine learning models such as support vector machines (SVMs), maximum entropy models (MEMs), hidden Markov models (HMMs), and conditional random fields (CRFs) have found widespread use in text information extraction tasks. M Choi [5] proposed an extraction system based on an SVM that identified and categorized social relationships within sentences. G Somprasertsri [6] extracted features from customer review corpora, then trained a maximum entropy model with these annotated corpora to extract product features from customer comments. X Yu [7] used hidden Markov models to analyze statements related to causative events, effects, and cues in medical literature, substantially reducing the workload of manually extracting risk information and enhancing efficiency. K Liu [8] addressed the issue of unutilized detailed data on bridge conditions and maintenance measures in bridge inspection reports by proposing a semi-supervised CRF method based on ontologies to extract entities describing bridge defects and maintenance actions. Nevertheless, traditional machine learning methods for information extraction still face challenges such as the complexity of feature engineering and a limited ability to handle complex linguistic structures and semantic expressions. To overcome these issues, deep-learning-based methods have recently gained significant attention.

Deep-learning-based methods for information extraction primarily involve building deep neural network models. These models often use convolutional neural networks (CNNs) [9], recurrent neural networks (RNNs) [10], and long short-term memory networks (LSTMs) [11] to extract features from text sequences, and employ models such as conditional random fields to model and decode label sequences. P Li [12] introduced a knowledge-oriented CNN method that integrated human prior-knowledge cues with data-derived features to extract causal relationships from texts. S Gupta [13] developed a semi-supervised RNN model to extract information about adverse drug reactions. W Wang [14] introduced a dependency-based deep neural network model that incorporated dependency techniques into a bidirectional LSTM, achieving outstanding results in biomedical text relation-identification tasks. These methods not only avoided the complex feature engineering typical of traditional approaches but also better captured the semantic and contextual information within text sequences, addressing the challenges involved in extracting information from unstructured text.

However, deep neural networks, due to their vast number of free parameters, heavily rely on extensive annotated corpora for training, which poses a significant challenge in practical applications due to the high costs of manual annotation. Despite improvements in deep-learning-based information extraction methods thanks to the introduction of pre-trained language models like BERT [15], the necessity for manual annotation remains unavoidable. The emergence of large language models such as ChatGPT has provided a solution to this issue.

Large models, utilizing deep learning technology, are advanced tools designed to understand and generate human language. Typically based on the transformer architecture and trained with massive datasets, these models are adept at capturing the complex structures and semantics of language. Scholars across various fields have begun employing these large models to perform information extraction from unstructured texts. J Dagdelen [16] proposed a method for joint entity and relation extraction, using GPT-3 and Llama-2 to extract details about the composition, phase state, form, and applications of materials from texts in materials chemistry. Y Tang [17] applied large models combined with prompt-based methods to extract critical medical information from medical literature. By using prompts and other similar methods to filter textual information, these models leveraged their extensive semantic understanding capabilities, potentially reducing the burden of manual annotation.

3. Methodology

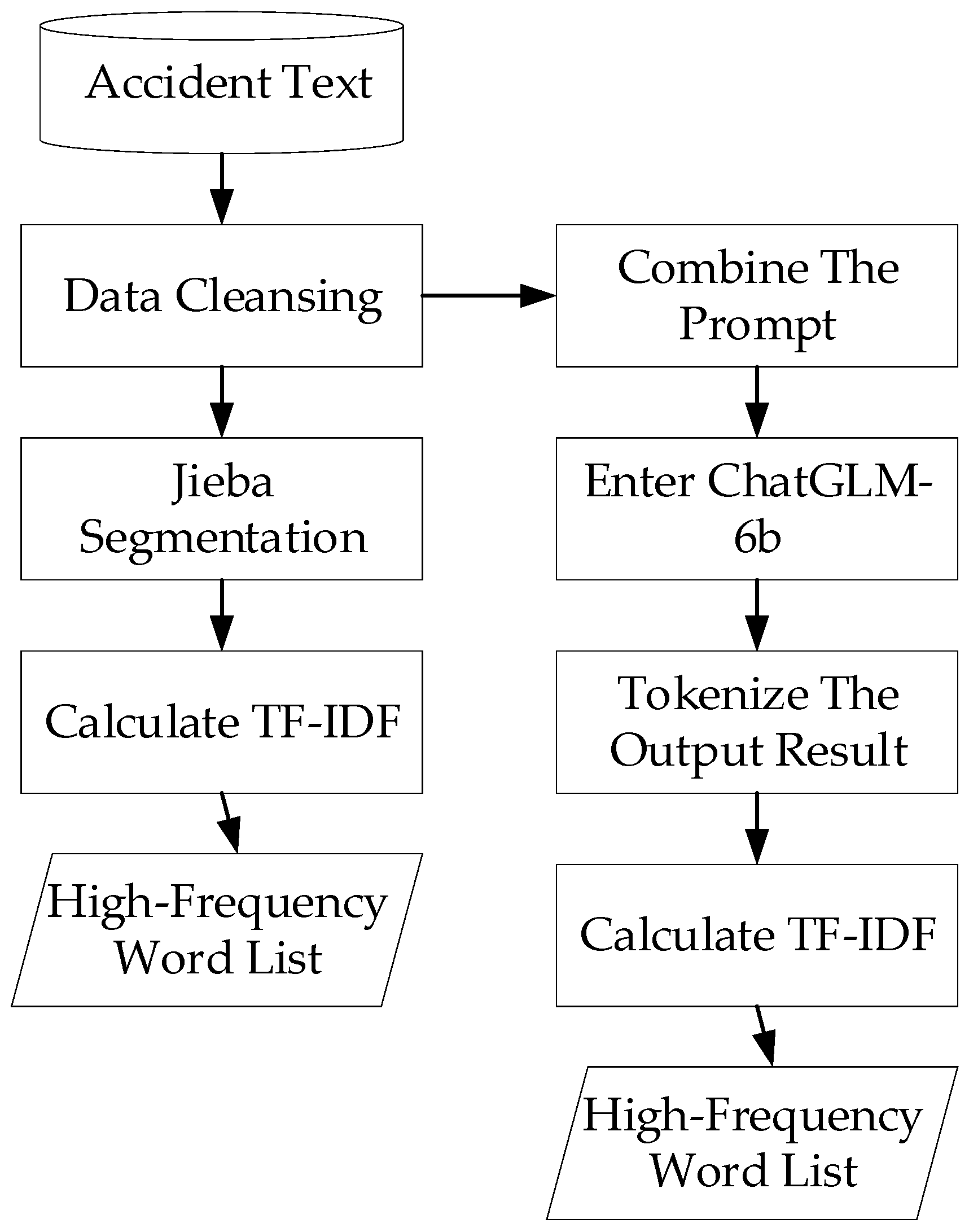

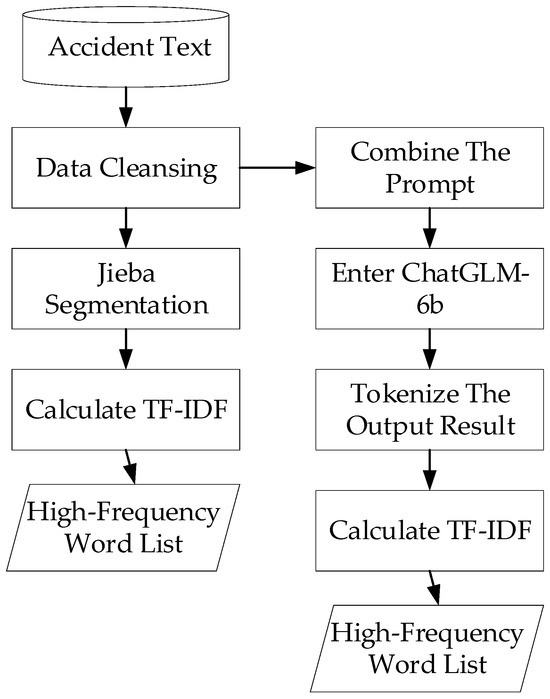

A data-cleaning process was applied to over 11,000 accident reports of civil transport aircraft in China. Initially, traditional methods were used to segment the original accident text using Jieba word segmentation, incorporating a stop-word list to eliminate meaningless words. The segmented words were then evaluated using the TF-IDF method to establish a list of keywords. Subsequently, a large model combined with prompt engineering [18] was employed to filter the textual corpus containing mechanical and human-factor accident reports. The filtered text was processed using the same method to generate a corresponding list of keywords. The results were compared, and the keywords were analyzed to decipher the underlying information they contained. The technical roadmap for this process is depicted in Figure 1.
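The workflow described above can be sketched as a small pipeline. This is only an illustrative skeleton under stated assumptions: `segment`, `rank_keywords`, and `llm_filter` are hypothetical callables standing in for Jieba segmentation, TF-IDF ranking, and the prompted large model, and `frequency_rank` is a placeholder ranker that is not TF-IDF.

```python
from collections import Counter
from typing import Callable, List, Sequence, Set, Tuple


def clean_tokens(tokens: Sequence[str], stop_words: Set[str]) -> List[str]:
    """Drop empty tokens and tokens that appear in the stop-word list."""
    return [t for t in tokens if t.strip() and t not in stop_words]


def frequency_rank(docs: Sequence[Sequence[str]]) -> List[str]:
    """Placeholder ranker: orders words by raw corpus frequency (NOT TF-IDF)."""
    counts = Counter(tok for doc in docs for tok in doc)
    return [word for word, _ in counts.most_common()]


def run_pipeline(
    reports: Sequence[str],
    segment: Callable[[str], Sequence[str]],        # hypothetical: e.g. a Jieba wrapper
    rank_keywords: Callable[[Sequence[Sequence[str]]], List[str]],
    llm_filter: Callable[[str], str],               # hypothetical: prompted large model
    stop_words: Set[str],
    top_k: int = 10,
) -> Tuple[List[str], List[str]]:
    """Return (keywords from original text, keywords from filtered text)."""

    def keywords(texts: Sequence[str]) -> List[str]:
        docs = [clean_tokens(segment(t), stop_words) for t in texts]
        return rank_keywords(docs)[:top_k]

    before = keywords(reports)                      # direct extraction
    after = keywords([llm_filter(t) for t in reports])  # extraction after filtering
    return before, after
```

Passing the stages in as functions keeps the comparison fair: both keyword lists go through exactly the same segmentation, stop-word removal, and ranking, so any difference comes from the filtering step alone.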

Figure 1. Methods for keyword extraction using large model information extraction.

3.1. Jieba Chinese Word Segmentation Technology

Jieba [19] is an open-source Python library for segmenting Chinese text. Because Chinese text, unlike English, has no spaces between words, word segmentation is the first and most fundamental step in Chinese natural language processing. Jieba supports three main segmentation modes: precise mode, full mode, and search engine mode. Because this paper required accurate segmentation of sentences rather than exhaustive enumeration of candidate words or search-oriented output, the precise mode was used. The basic principles of Jieba word segmentation are as follows:

- Jieba builds on a trie (prefix tree) of dictionary words, which allows efficient word-graph scanning. The scan identifies every possible word that can be formed from the characters in a sentence and records these candidates in a directed acyclic graph (DAG), which lays the foundation for the next step.

- Dynamic programming is then used to find the maximum-probability path through the DAG, that is, the combination of words with the highest overall probability based on word frequency, which gives the segmentation.

- For words not found in Jieba’s built-in dictionary, a hidden Markov model (HMM) of how Chinese characters form words is applied, decoded with the Viterbi algorithm.
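The first two principles can be illustrated with a simplified sketch. It is not Jieba's own code: the dictionary and its frequencies are invented for illustration, candidate words are found by a plain substring lookup rather than a trie, and the HMM step for out-of-dictionary words is omitted (unknown characters simply become single-character tokens).

```python
import math

# Toy dictionary: word -> frequency (hypothetical counts, for illustration only).
# 起落架 = landing gear, 故障 = fault.
FREQ = {"起落架": 50, "起落": 5, "起": 10, "落": 10, "架": 10,
        "故障": 40, "故": 5, "障": 5}
TOTAL = sum(FREQ.values())


def build_dag(sentence, freq):
    """Map each start index to the end indices of all dictionary words starting there."""
    dag = {}
    n = len(sentence)
    for i in range(n):
        ends = [j for j in range(i, n) if sentence[i:j + 1] in freq]
        dag[i] = ends or [i]  # unknown character: fall back to a one-character word
    return dag


def best_route(sentence, dag, freq, total):
    """Dynamic programming from right to left over log-probabilities."""
    n = len(sentence)
    log_total = math.log(total)
    route = {n: (0.0, 0)}  # route[i] = (best log-prob of sentence[i:], end index of first word)
    for i in range(n - 1, -1, -1):
        route[i] = max(
            (math.log(freq.get(sentence[i:j + 1], 1)) - log_total + route[j + 1][0], j)
            for j in dag[i]
        )
    return route


def segment(sentence, freq=FREQ, total=TOTAL):
    """Follow the maximum-probability path to produce the word list."""
    dag = build_dag(sentence, freq)
    route = best_route(sentence, dag, freq, total)
    words, i = [], 0
    while i < len(sentence):
        j = route[i][1]
        words.append(sentence[i:j + 1])
        i = j + 1
    return words
```

With these toy frequencies, the path through the single word 起落架 has a much higher probability than the path through 起落 followed by 架, so the longer dictionary word is chosen.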

3.2. Large Models and Prompt Engineering

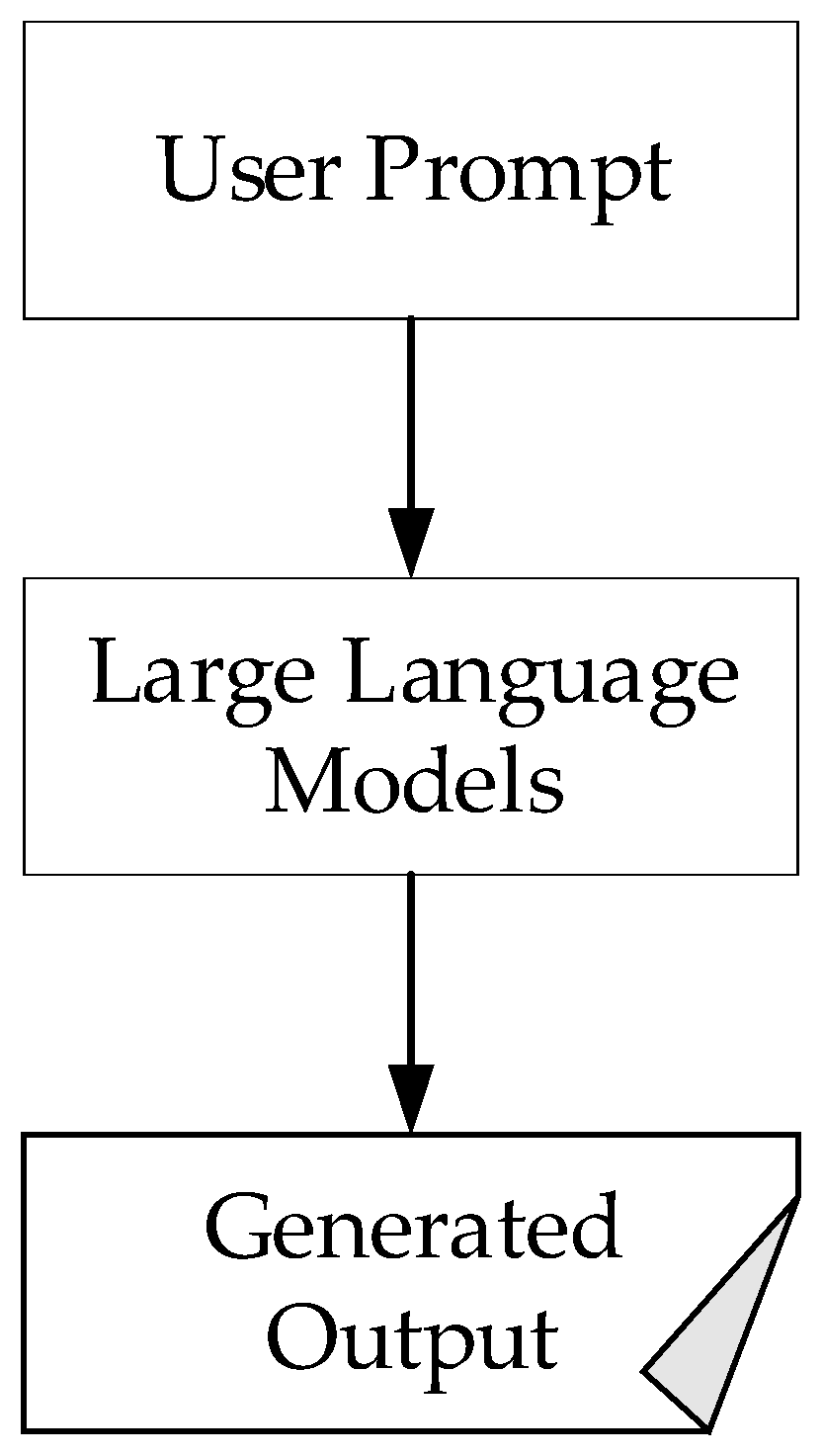

Large models, also referred to as large language models, are characterized by their substantial architectural capacity, numerous parameters, and the extensive datasets used for pre-training. Examples of such models include GPT (generative pre-trained transformer) [20] and BERT (bidirectional encoder representations from transformers). These models are distinguished by their ability to learn general linguistic patterns and structures through advanced pre-training on extensive textual data. A “prompt” is a piece of text input into the language model designed to steer the model’s response in a specific direction. The general workflow is depicted in Figure 2.

Figure 2. Large language model prompt response process.

The base large language model used in this paper was ChatGLM-6b [21], jointly developed by Tsinghua University and Zhipu AI. It is an open-source dialogue language model that supports bilingual question-answering in both Chinese and English and has been specifically optimized for the Chinese language. This model is based on the general language model (GLM) architecture and possesses 6.2 billion parameters. Prompts were designed for the different types of incidents, as illustrated in Table 1.

Table 1. Prompts used for different types of accidents.
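One way to organize type-specific prompts is a small template lookup, sketched below. The question wording is paraphrased from the queries described in Section 4 and is not the content of Table 1; the category keys and the `ask_model` callable are hypothetical, and no particular model API is assumed.

```python
# Hypothetical prompt templates, paraphrased from the queries described in Section 4.
PROMPT_TEMPLATES = {
    "mechanical": (
        "Which aircraft systems malfunctioned or which mechanical "
        "components were damaged in the following report?"
    ),
    "flight_crew": (
        "What specific human errors or operational mistakes occurred "
        "in the following report?"
    ),
    "maintenance": (
        "Which aircraft components failed, or were repaired or replaced, "
        "in the following report?"
    ),
}


def build_prompt(accident_type, report_text):
    """Combine the question for this accident type with the report text."""
    try:
        question = PROMPT_TEMPLATES[accident_type]
    except KeyError:
        raise ValueError(f"unknown accident type: {accident_type!r}") from None
    return f"{question}\n\nReport:\n{report_text.strip()}"


def filter_report(accident_type, report_text, ask_model):
    """Send the prompt to a model; `ask_model` is any callable str -> str."""
    return ask_model(build_prompt(accident_type, report_text))
```

Keeping the model behind a plain callable means the same templates can be exercised with a stub during testing and with a real model in production.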

3.3. Term Frequency–Inverse Document Frequency (TF-IDF)

Term frequency–inverse document frequency (TF-IDF) is a common weighting technique used in information retrieval and text mining. TF-IDF measures the importance of a word to a document in a collection or corpus. The importance of a word proportionally increases with the number of times it appears in the document but is offset by the frequency of the word in the corpus. The TF-IDF algorithm consists of two components, the term frequency (TF) and inverse document frequency (IDF).

Term frequency (TF) measures how often a word appears in a text. The more often a term appears, the more important it is considered to be to that text (assuming no other adjustments). The basic formula for TF is as follows:

$$\mathrm{TF}_{i,j} = \frac{n_{i,j}}{\sum_{k} n_{k,j}}$$

In this formula, $n_{i,j}$ represents the number of occurrences of the term $t_i$ in document $d_j$, while the denominator is the sum of the occurrences of all terms in document $d_j$.

Inverse document frequency (IDF) is a measure of the general importance of a term. The IDF for a specific term is obtained by taking the logarithm of the quotient of the total number of documents divided by the number of documents containing the term, as follows:

$$\mathrm{IDF}_{i} = \log \frac{|D|}{|\{j : t_i \in d_j\}|}$$

In the formula, $|D|$ represents the total number of documents in the corpus, while $|\{j : t_i \in d_j\}|$ denotes the number of documents that contain the term $t_i$ (i.e., the documents in which its occurrence count is nonzero, $n_{i,j} \neq 0$). To avoid a zero denominator when a term is not present in the corpus, a common practice is to add 1 to the denominator, giving $1 + |\{j : t_i \in d_j\}|$.
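As a small worked illustration (the numbers are hypothetical, and a base-10 logarithm is assumed because the text does not fix the base), consider a corpus of 1000 reports in which a term appears in 10 of them:

```latex
\begin{align*}
\mathrm{IDF} &= \log_{10}\frac{1000}{10} = 2, \\
\mathrm{IDF}_{\text{smoothed}} &= \log_{10}\frac{1000}{1 + 10} \approx 1.96 .
\end{align*}
```

A term that appears in 500 of the 1000 reports would instead score $\log_{10} 2 \approx 0.30$ without smoothing, which shows how words that occur in many documents are down-weighted.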

Combining TF and IDF, we can derive the following formula to calculate TF-IDF:

$$\mathrm{TF\text{-}IDF}_{i,j} = \mathrm{TF}_{i,j} \times \mathrm{IDF}_{i}$$

This formula combines two statistics to evaluate the importance of a word within a document. The TF-IDF value of a term in a document is directly proportional to both its term frequency and its inverse document frequency. Thus, TF-IDF tends to filter out common words while retaining significant ones.
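The three definitions can be put together in a few lines of Python. This is a minimal sketch that follows the formulas as described in this section, with the natural logarithm assumed; it is not the implementation used in the study, and the function names are illustrative.

```python
import math
from collections import Counter


def term_frequency(doc):
    """TF: occurrences of each term divided by the total number of terms in the document."""
    counts = Counter(doc)
    total = len(doc)
    return {term: count / total for term, count in counts.items()}


def inverse_document_frequency(docs, smooth=True):
    """IDF: log(total documents / documents containing the term).

    With smooth=True, 1 is added to the denominator as described in the text.
    Note that a term present in every document then gets a slightly negative IDF.
    """
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    extra = 1 if smooth else 0
    return {term: math.log(n_docs / (df + extra)) for term, df in doc_freq.items()}


def tf_idf(docs, smooth=True):
    """Return one {term: TF-IDF score} dictionary per document."""
    idf = inverse_document_frequency(docs, smooth)
    return [
        {term: tf * idf[term] for term, tf in term_frequency(doc).items()}
        for doc in docs
    ]
```

For example, with the toy corpus `[["flap", "warning", "flap"], ["warning", "gear"], ["warning", "engine"]]` and `smooth=False`, "warning" appears in every document and scores 0, while "flap" scores (2/3) × ln 3 in the first document.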

4. Results and Analysis

4.1. Mechanical Accidents

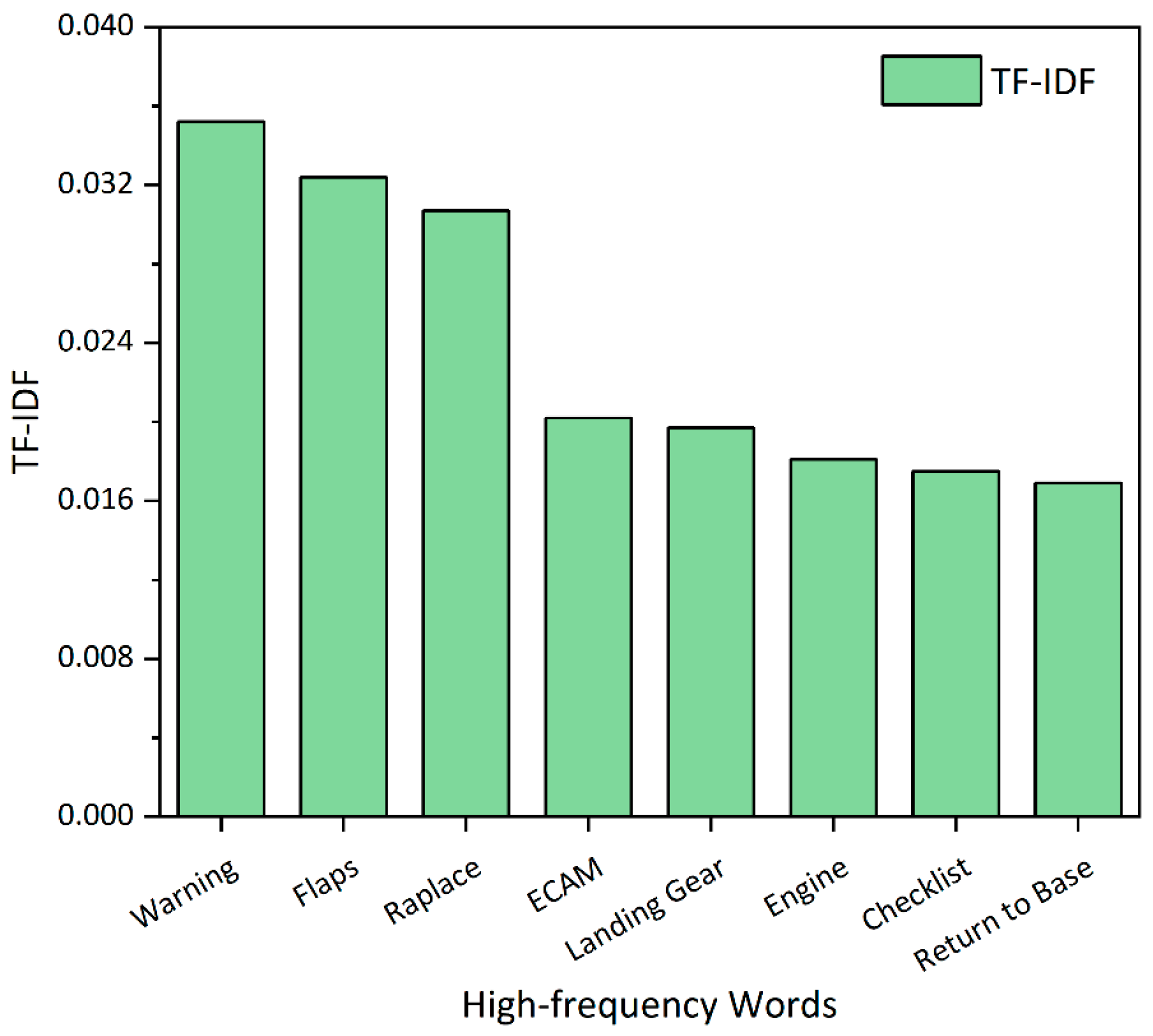

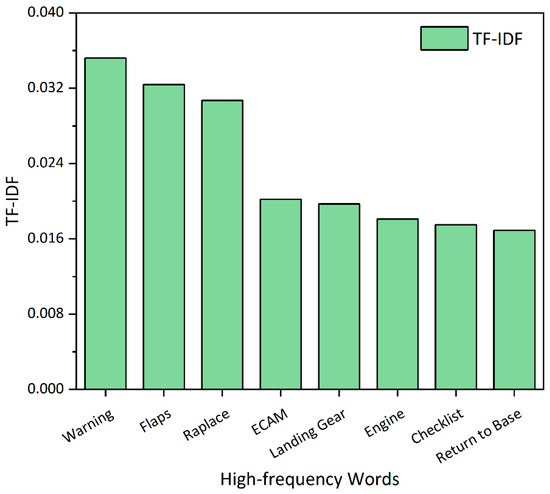

In civil transport aviation, mechanical accidents are those related to malfunctions and failures in the aircraft’s mechanical systems. They include engine failures, flight control system malfunctions, structural failures, and failures of other critical mechanical components. The texts of mechanical accident reports were segmented into words and, after the removal of stop words, the TF-IDF values were calculated and sorted. The results are presented in Figure 3.

Figure 3. High-frequency words in mechanical accident texts.

The TF-IDF values revealed several high-frequency words that reflect the most common issues and fault points in these accidents. The frequent appearance of the word “warning” indicates that pilots often face warning signals from aircraft systems. These warnings can involve critical systems such as the landing gear and engine, highlighting the importance of sensitivity to anomalies during flight operations. The frequent occurrence of “flaps” and “landing gear” points to common failure points in the aircraft’s mechanical systems. Flaps are high-lift devices used mainly during takeoff and landing, so a flap malfunction directly affects the safety of those phases. Landing gear problems are a common and serious risk; if they are not promptly detected and repaired, they can lead to safety incidents. The high frequency of the words “replace” and “checklist” points to routine practices and priorities in daily aircraft maintenance: ongoing inspection and replacement of parts are crucial to maintaining functionality and ensuring flight safety. The frequent references to the ECAM (electronic centralized aircraft monitor) system indicate modern aircraft’s heavy reliance on electronic monitoring systems to diagnose and give advance warning of potential mechanical failures. Lastly, the occurrence of “return to base” reflects the risk-avoidance measures taken by crews when they encounter technical issues that cannot be resolved immediately. This action protects passengers and crew and also demonstrates the pilots’ emergency-handling abilities when faced with mechanical failures.

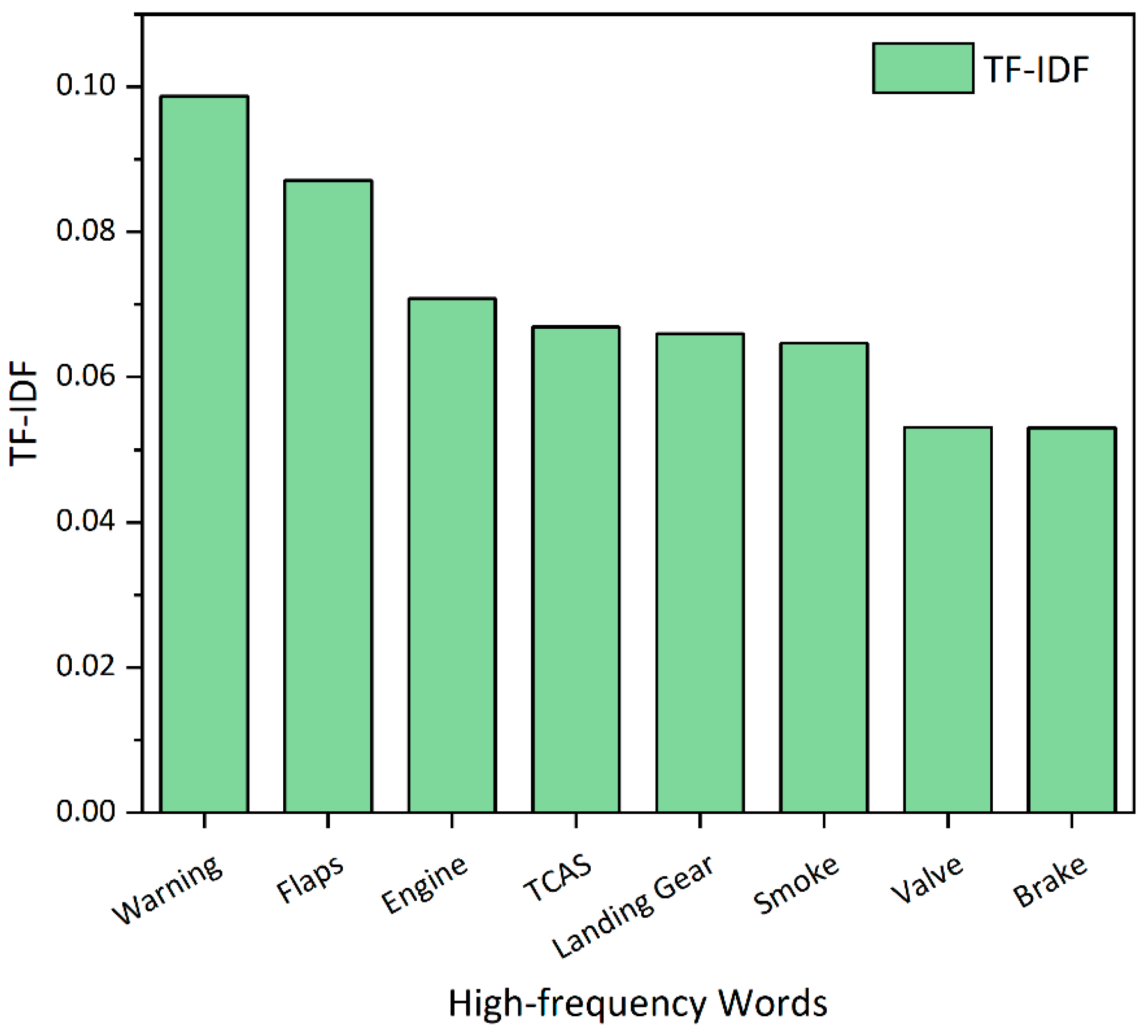

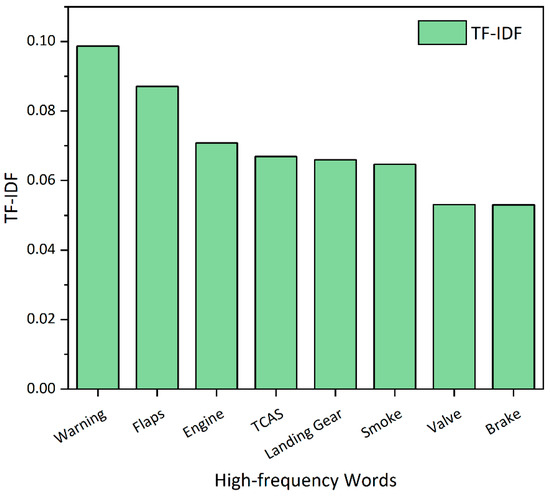

We then queried the large language model about the mechanical accident reports and calculated TF-IDF values on its responses. For such incidents, our primary concern was which aircraft systems malfunctioned or which mechanical components were damaged. By focusing the queries on these aspects, we obtained the results shown in Figure 4.

Figure 4. High-frequency words derived from mechanical accident texts after filtering with ChatGLM3-6b.

Compared with previous results, we observed some overlaps and newly emerging key components and systems. Overlapping terms included “warning”, “flaps”, “engine”, and “landing gear”, which underlined their importance once again. The traffic collision avoidance system (TCAS) emerged as a new keyword related to the aircraft’s anti-collision system. A malfunction in TCAS could impair a pilot’s ability to perceive other aircraft in the surrounding airspace, thereby increasing the risk of in-flight conflicts with other aircraft. The term “smoke” indicated potential fire alarms or electrical failures; the presence of smoke inside an aircraft represents a serious safety threat, necessitating the immediate identification and resolution of its source. Actuators play a crucial role in regulating the pressure balance inside and outside the cabin, while brakes are essential for safely decelerating the aircraft after landing. Failures in these components directly impact the normal operation of the aircraft and passenger safety.
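The before-and-after comparison amounts to simple set operations on the two keyword lists. The sketch below assumes each list is already ranked; the function name is illustrative, and the example lists in the usage note contain only the terms named in the prose, not the full contents of Figures 3 and 4.

```python
def compare_keywords(before, after):
    """Split the post-filtering keywords into overlapping and newly emerging terms.

    The order of `after` is preserved so that the ranking is not lost.
    """
    before_set = set(before)
    return {
        "overlap": [kw for kw in after if kw in before_set],
        "new": [kw for kw in after if kw not in before_set],
    }


# Illustrative input: only the terms mentioned in the text, in arbitrary order.
before = ["warning", "flaps", "landing gear", "engine",
          "replace", "checklist", "ECAM", "return to base"]
after = ["warning", "flaps", "engine", "landing gear",
         "TCAS", "smoke", "actuator", "brake"]
result = compare_keywords(before, after)
```

On these inputs, `result["overlap"]` holds “warning”, “flaps”, “engine”, and “landing gear”, while `result["new"]` holds “TCAS”, “smoke”, “actuator”, and “brake”.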

4.2. Human-Caused Accidents

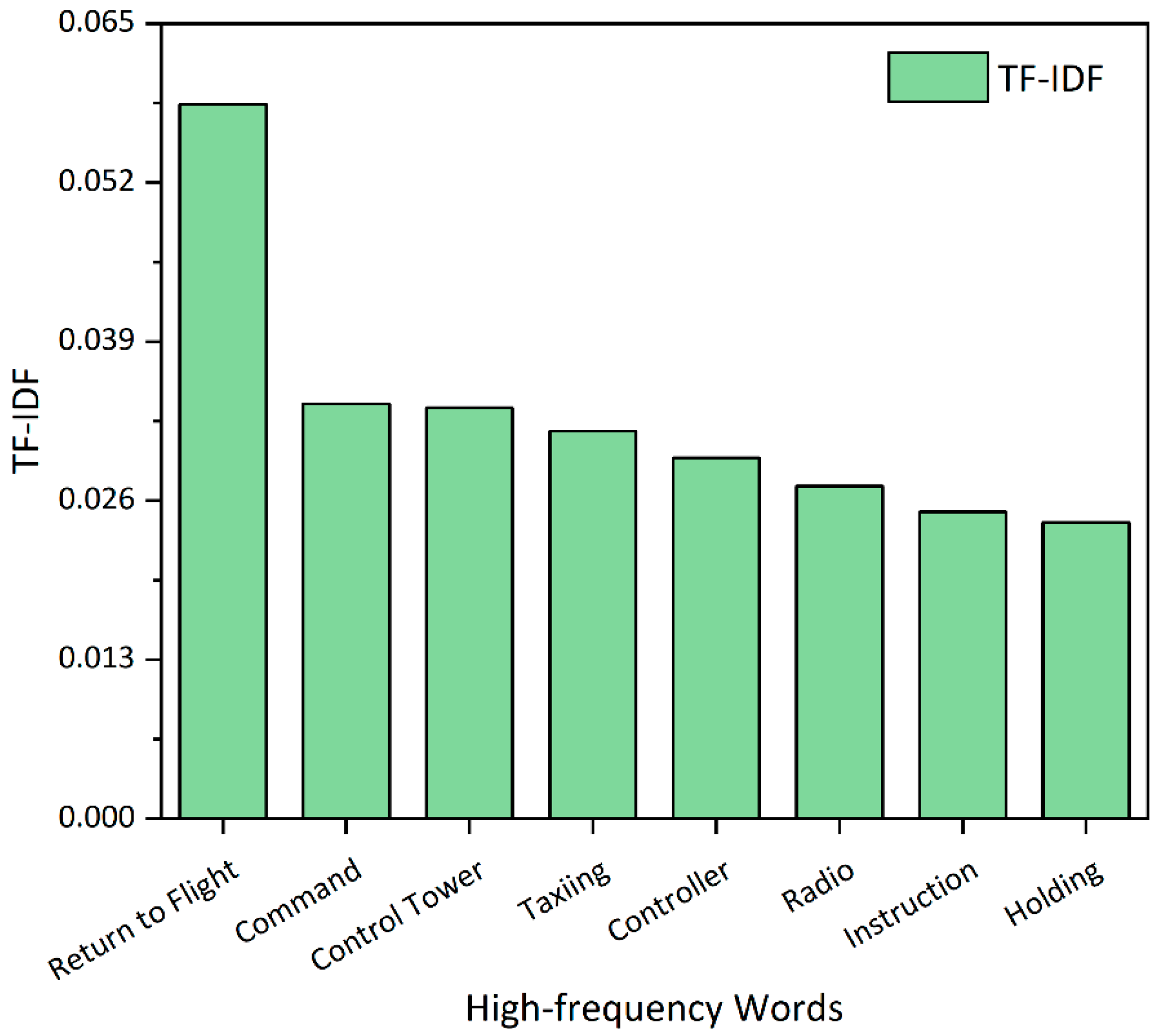

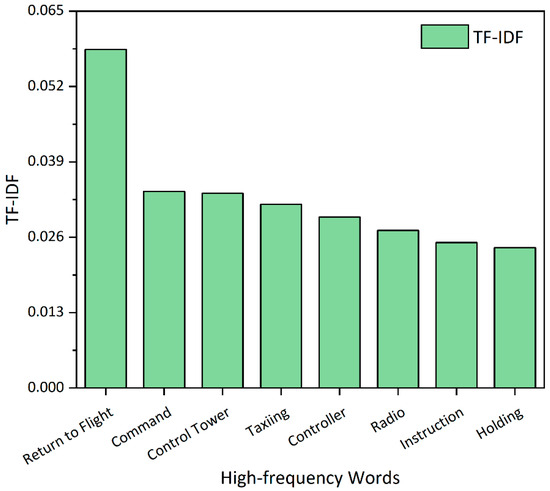

In civil aviation accidents, human factors remain one of the common causes of incidents. These accidents often involve multiple roles, including aircraft operators (such as pilots), ground support staff, and air traffic controllers. This paper conducted keyword extraction research on accidents classified into two categories, flight crew and maintenance crew. Initially, we extracted keywords from the original accident reports related to the flight crew, and the results are presented in Figure 5.

Figure 5. High-frequency words in aircraft-crew-related accidents.

These keywords revealed several critical aspects that potentially affect flight safety, primarily involving communication, coordination, and response speed. The frequent appearance of the term “return to flight” reflects the challenges the crew faces when executing emergency or non-routine operations, which often require quick decisions in difficult situations during takeoff or landing in order to safely resume flight or return. In such instances, the crew’s response speed, judgment, and the quality of communication with the control tower are crucial. Meanwhile, “command” and “control tower”, as two core operational elements, underscore the importance of communication between air traffic controllers and aircraft crews. Effective communication ensures that aircraft follow the correct flight paths and helps to avoid potential aerial conflicts. “Radio”, as the medium of this communication, also has a significant impact on safety: signal quality and clear mutual understanding are vital. Under high-pressure conditions, any miscommunication or error in information processing could lead to adverse consequences. The terms “taxiing” and “wait” are usually associated with ground traffic management, which likewise requires precise control and command to prevent ground collisions. At busy airports, balancing efficiency and safety is particularly critical, and this is where clear communication and commands play a key role. Incidents often occur because of unclear waiting instructions or execution errors, which is why the word “instruction” appeared frequently.

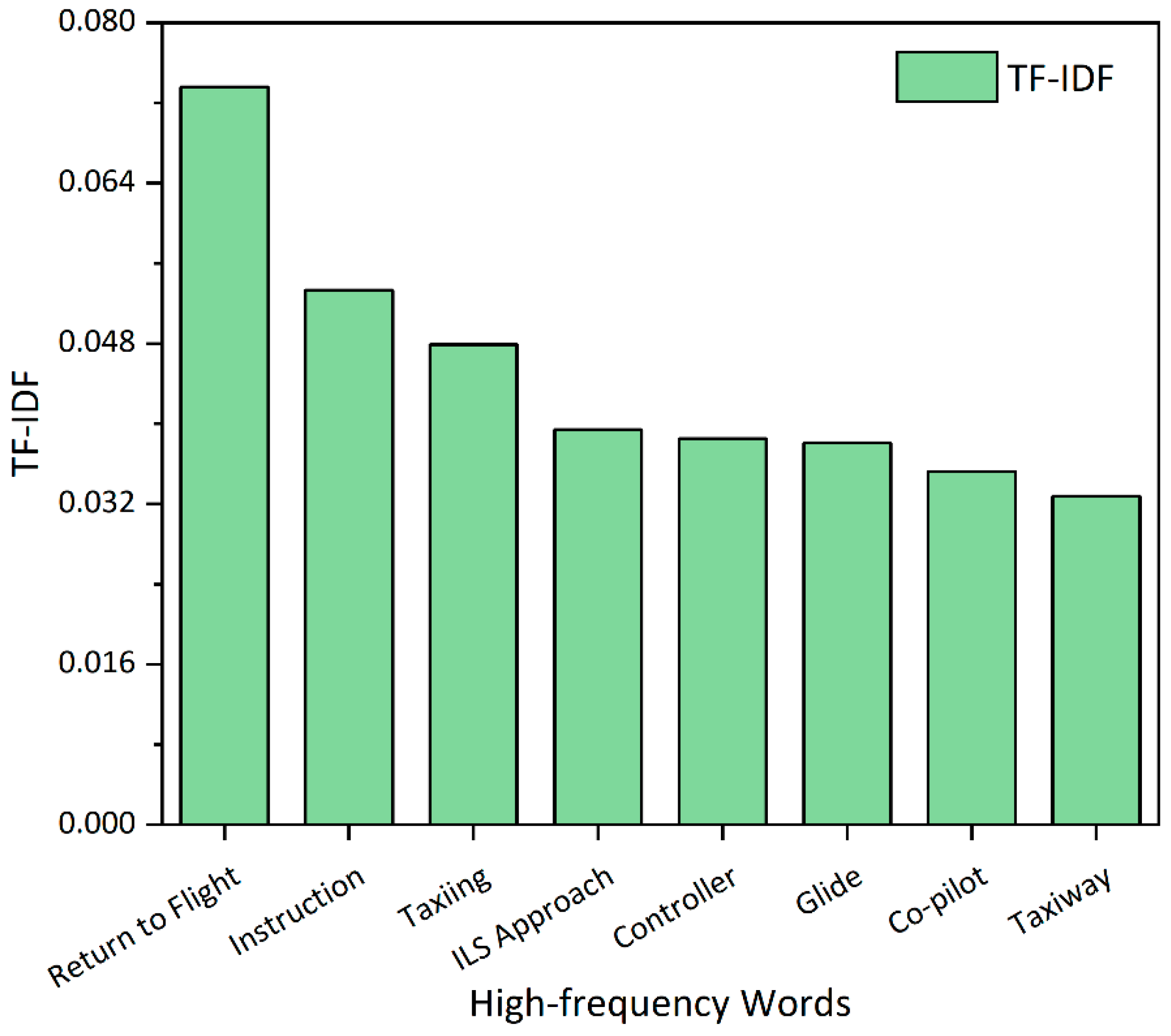

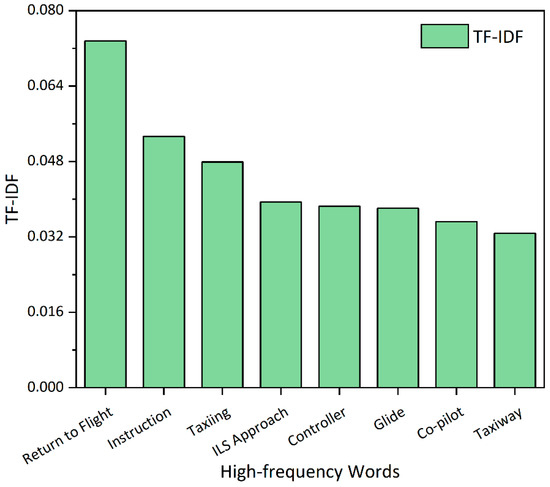

To further analyze aircraft-crew-related accidents, queries using a large language model with specific prompts were made, asking about specific human errors or operational mistakes in the incidents. The responses were then tokenized and analyzed using TF-IDF scores to identify key terms, and the results are displayed in Figure 6.

Figure 6. High-frequency words derived from aircraft-crew-related accident texts after filtering with ChatGLM3-6b.

The keywords concentrated on several principal areas of aviation operations and communication. The terms “return to flight” and “glide” suggest that the accidents may have been related to the landing phase, where problems with the glide path could indicate inappropriate angles or speeds as the aircraft approached the runway. This may have been due to flight crew errors in handling the aircraft or inappropriate responses to control instructions under complex or emergency circumstances. The frequent occurrence of the words “instruction” and “controller” draws attention to the interactions between aircraft crews and air or ground control, where errors in or misinterpretations of controller instructions could lead to incorrect operations such as inappropriate flight paths, altitude adjustments, or other related actions. It also points to controllers giving unclear or untimely instructions. The terms “taxiing” and “taxiway” indicate that the accident may have involved improper operation of the aircraft during ground movement at the airport. Potential errors include, but are not limited to, crew misjudgments in taxiway selection, conflicts with other aircraft or ground vehicles, and unauthorized runway incursions. Precise control over the path and speed during the taxi phase is crucial. An “ILS (instrument landing system) approach” is a precision approach flown with reference to instrument guidance rather than visual judgment, often in low visibility. Accidents in this context may involve the improper use of the autopilot system or misinterpretations of instrument readings by the crew under extreme weather conditions. The term “co-pilot” appeared most frequently among the crew results, highlighting the co-pilot’s role and actions as significant points of focus, possibly because of operational errors by the co-pilot or improper collaboration with the captain.

Through the analysis of the keywords related to aircraft crew accidents, it was evident that incidents involved complex flight operations and human errors in high-pressure environments, including landing, taxiing, and responses to control instructions. Improvement measures should include enhancing crew members’ responsiveness to emergency and complex situations, strengthening training on instrument use, and improving the quality of communication and response mechanisms between ground and air crew to ensure the clear transmission and correct execution of instructions.

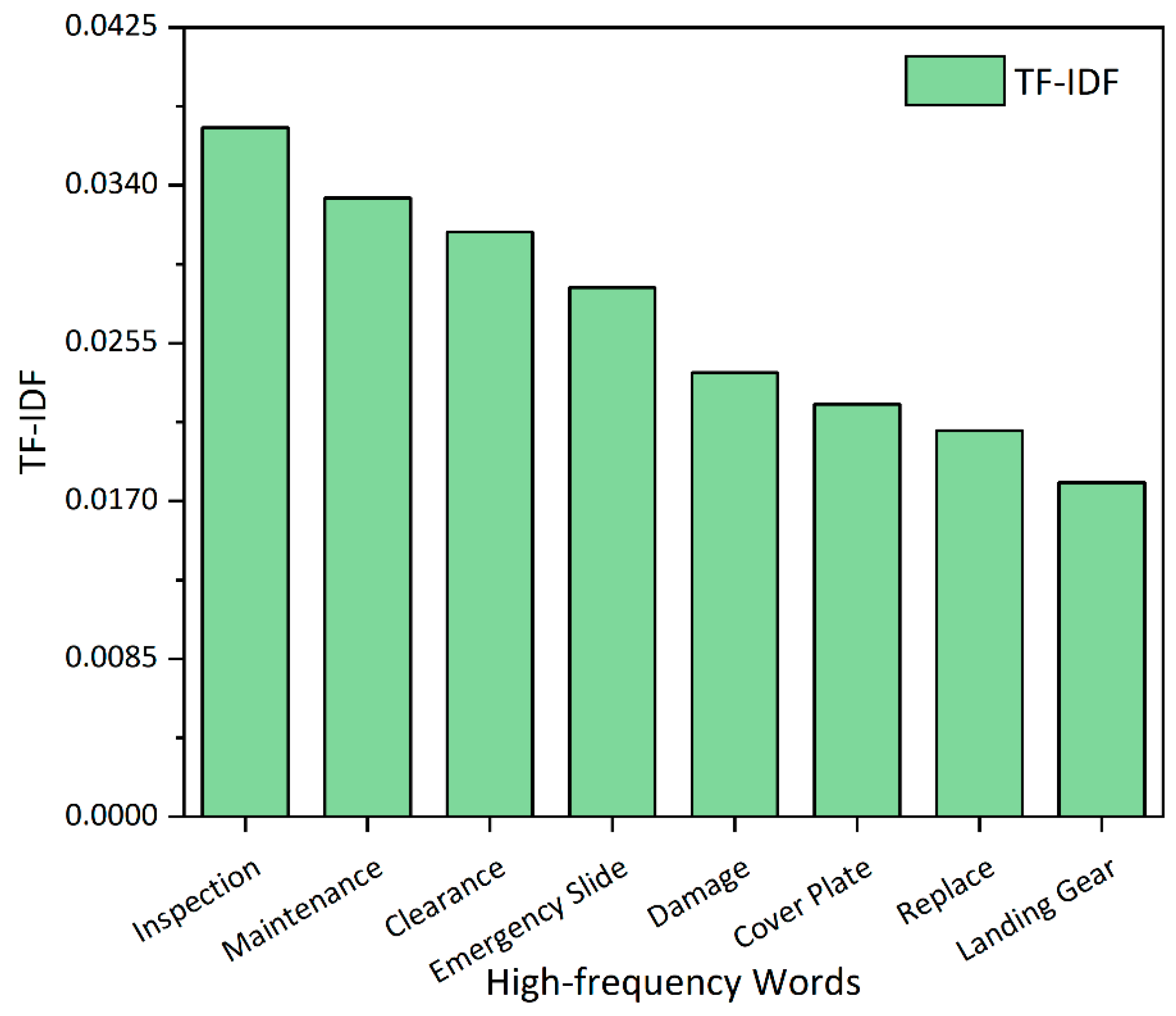

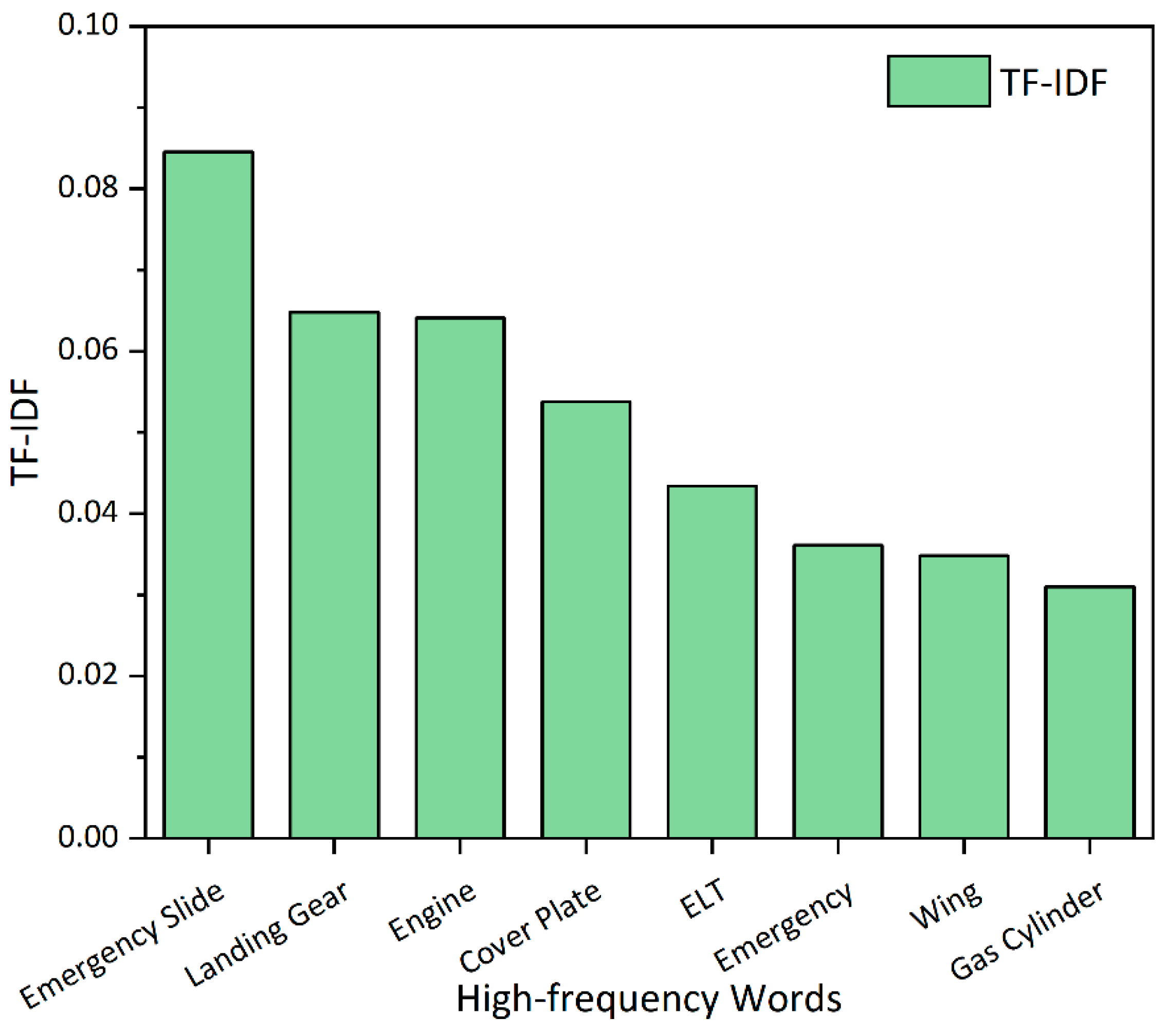

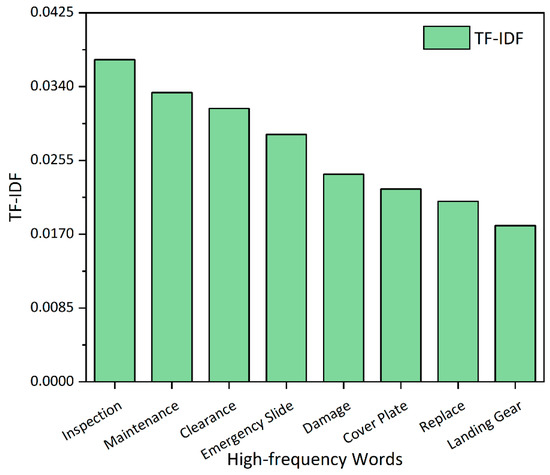

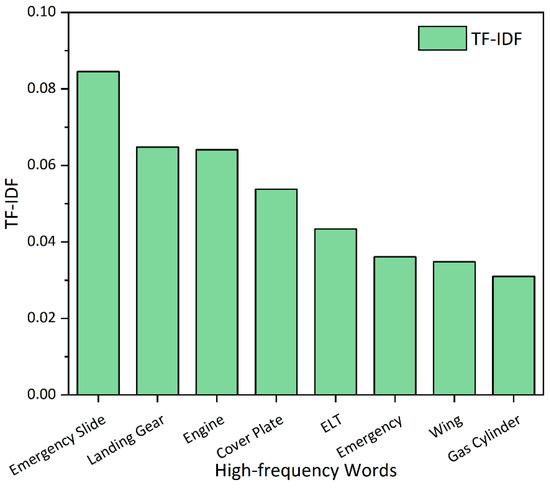

Similarly, a keyword extraction was directly performed on the original texts of accidents attributed to maintenance reasons (Figure 7). A large model was then used to filter the original text information, querying which components of the aircraft experienced failure or were repaired and replaced during the accidents. Keywords were then extracted from the results (Figure 8).

Figure 7. High-frequency words in maintenance-related accidents.

Figure 8. High-frequency words derived from maintenance-related accident texts after filtering with ChatGLM3-6b.

After synthesizing the results from the two TF-IDF analyses, it was evident that key maintenance issues involved in accidents included failures of crucial components such as the landing gear, engines, and slides. Additionally, covers, ELTs (emergency locator transmitters), emergency equipment, wings, and gas cylinders were also identified as critical objects for inspection and maintenance. The condition of these components is directly related to the aircraft’s flight safety and emergency-response capabilities. Accident analyses should focus on the maintenance records of these components, their operational conditions prior to failure, and the compliance of maintenance processes. Moreover, improvements may be needed to enhance the quality monitoring of maintenance, ensuring the timeliness and quality of part replacements, as well as the ongoing education and training of maintenance personnel.

5. Discussion and Conclusions

This paper used TF-IDF values for keyword extraction, applied both directly to the segmented original text and to text filtered by a large language model, and obtained favorable outcomes for both mechanical and human-factor accidents. Keyword extraction on the original text alone gave broad coverage but lacked focus, whereas the keywords obtained after large-model filtering were more specific, although the model’s understanding of the text also had limitations.

For mechanical accidents, tokenization and TF-IDF analyses revealed several important high-frequency keywords, including “warning”, “flaps”, “engine”, and “landing gear”. These indicated the prevalence and severity of failures in these systems and components in aviation accidents. Additionally, the appearance of keywords such as “TCAS”, “smoke”, “actuator”, and “brake” further highlighted other potential risk areas such as failures in the aircraft’s collision avoidance system, internal fires or electrical issues, and failures of critical flight operation components, all of which could seriously compromise flight safety.

For human-factor accidents, crew-related incidents frequently involved complex flight operations and human errors under high-pressure conditions, especially during landing, taxiing, and the execution of control instructions. Furthermore, an analysis of maintenance crew accidents revealed critical issues in maintenance and failure management, particularly those directly related to failures of the landing gear, engines, and other key safety equipment.

Author Contributions

Conceptualization, T.S. and X.C.; methodology, T.S.; software, T.S.; validation, T.S., X.C. and J.Y.; formal analysis, T.S.; investigation, T.S.; resources, T.S.; data curation, T.S.; writing—original draft preparation, T.S.; writing—review and editing, X.C.; visualization, T.S.; supervision, J.Y.; project administration, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Qaiser, S.; Ali, R. Text mining: Use of TF-IDF to examine the relevance of words to documents. Int. J. Comput. Appl. 2018, 181, 25–29.

2. Hu, Y. Research on the Methods of Professional Knowledge Acquisition Based on Web Information Extraction. Ph.D. Thesis, Wuhan University of Technology, Wuhan, China, 2007.

3. Mukherjea, S.; Subramaniam, L.V.; Chanda, G.; Sankararaman, S.; Kothari, R.; Batra, V.; Bhardwaj, D.; Srivastava, B. Enhancing a biomedical information extraction system with dictionary mining and context disambiguation. IBM J. Res. Dev. 2004, 48, 693–701.

4. Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

5. Choi, M.; Kim, H. Social relation extraction from texts using a support-vector-machine-based dependency trigram kernel. Inf. Process. Manag. 2013, 49, 303–311.

6. Somprasertsri, G.; Lalitrojwong, P. A maximum entropy model for product feature extraction in online customer reviews. In Proceedings of the 2008 IEEE Conference on Cybernetics and Intelligent Systems, Chengdu, China, 21–24 September 2008; IEEE: New York, NY, USA, 2008; pp. 575–580.

7. Yu, X.; Zhang, J. Medical risk information extraction based on Hidden Markov Model. In Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 14–17 October 2016; IEEE: New York, NY, USA, 2016; pp. 778–782.

8. Liu, K.; El-Gohary, N. Ontology-based semi-supervised conditional random fields for automated information extraction from bridge inspection reports. Autom. Constr. 2017, 81, 313–327.

9. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74.

10. Ahmed, S.F.; Bin Alam, S.; Hassan, M.; Rozbu, M.R.; Ishtiak, T.; Rafa, N.; Mofijur, M.; Ali, A.B.M.S.; Gandomi, A.H. Deep learning modelling techniques: Current progress, applications, advantages, and challenges. Artif. Intell. Rev. 2023, 56, 13521–13617.

11. Chen, C.; Zhu, Q.Q.; Yan, R.; Liu, J. A survey on deep learning-based open-domain dialogue systems. Chin. J. Comput. 2019, 42, 1439–1466.

12. Li, P.; Mao, K. Knowledge-oriented convolutional neural network for causal relation extraction from natural language texts. Expert Syst. Appl. 2019, 115, 512–523.

13. Gupta, S.; Pawar, S.; Ramrakhiyani, N.; Palshikar, G.K.; Varma, V. Semi-supervised recurrent neural network for adverse drug reaction mention extraction. BMC Bioinform. 2018, 19, 1–7.

14. Wang, W.; Yang, X.; Yang, C.; Guo, X.; Zhang, X.; Wu, C. Dependency-based long short term memory network for drug-drug interaction extraction. BMC Bioinform. 2017, 18, 99–109.

15. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint 2018, arXiv:1810.04805.

16. Dagdelen, J.; Dunn, A.; Lee, S.; Walker, N.; Rosen, A.S.; Ceder, G.; Persson, K.A.; Jain, A. Structured information extraction from scientific text with large language models. Nat. Commun. 2024, 15, 1418.

17. Tang, Y.; Xiao, Z.; Li, X.; Zhang, Q.; Chan, E.W.; Wong, I.C. Research Data Collaboration Task Force. Large Language Model in Medical Information Extraction from Titles and Abstracts with Prompt Engineering Strategies: A Comparative Study of GPT-3.5 and GPT-4. medRxiv 2024.

18. Giray, L. Prompt engineering with ChatGPT: A guide for academic writers. Ann. Biomed. Eng. 2023, 51, 2629–2633.

19. Xing, B.; Gen, R.; Ji, D. E-commerce shopping system based on Jieba segmentation search and SSM framework. Inf. Comput. (Theor. Ed.) 2018, 7, 104–105, 108.

20. Floridi, L.; Chiriatti, M. GPT-3: Its nature, scope, limits, and consequences. Minds Mach. 2020, 30, 681–694.

21. Zhang, X.; Zhang, X.; Yu, Y. ChatGLM-6B Fine-Tuning for Cultural and Creative Products Advertising Words. In Proceedings of the 2023 International Conference on Culture-Oriented Science and Technology (CoST), Xi’an, China, 11–14 October 2023; IEEE: New York, NY, USA, 2023; pp. 291–295.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).