A CNN–BiLSTM Architecture for Macroeconomic Time Series Forecasting

Abstract

1. Introduction

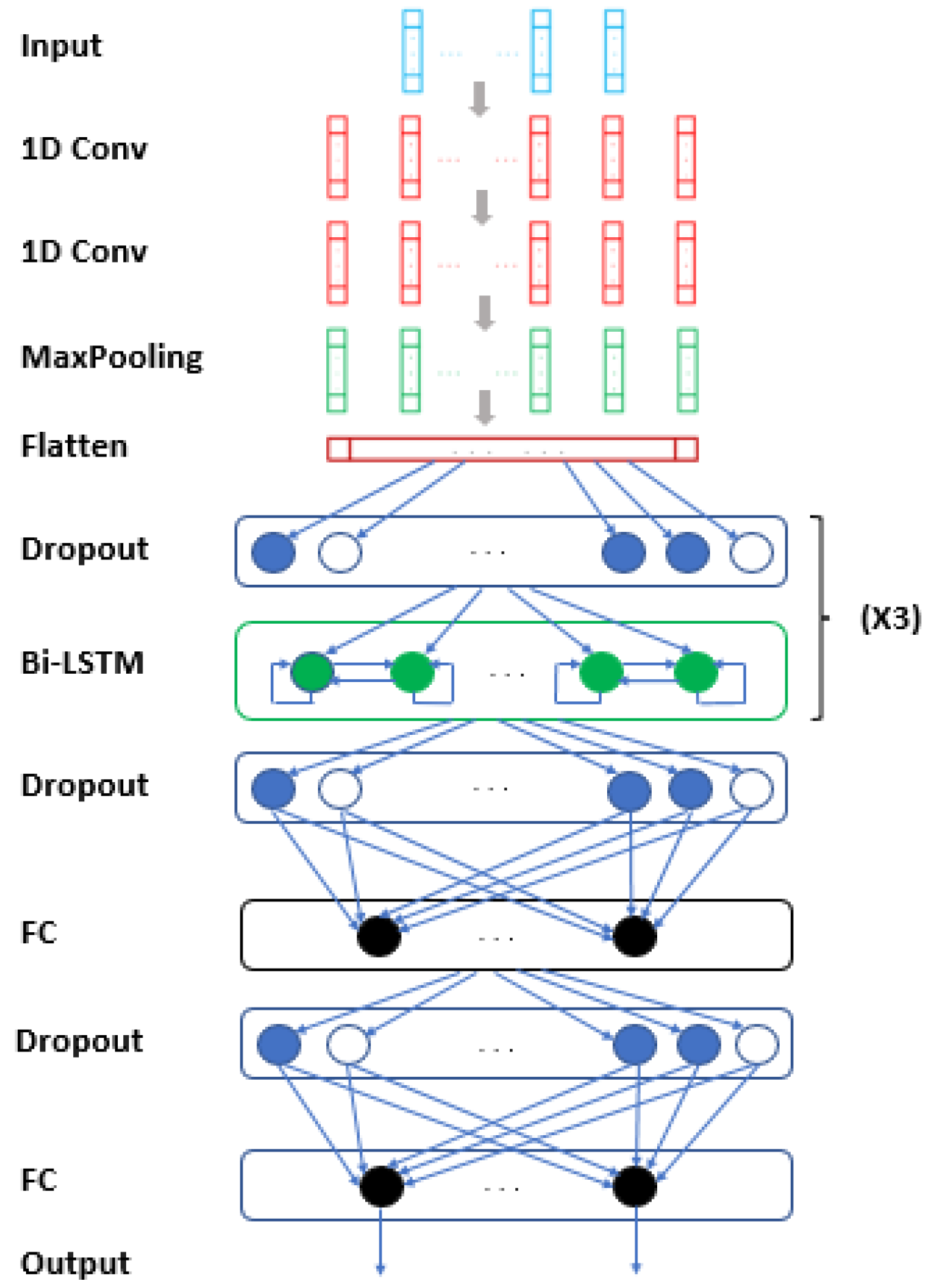

2. The Model

3. Empirical Analysis

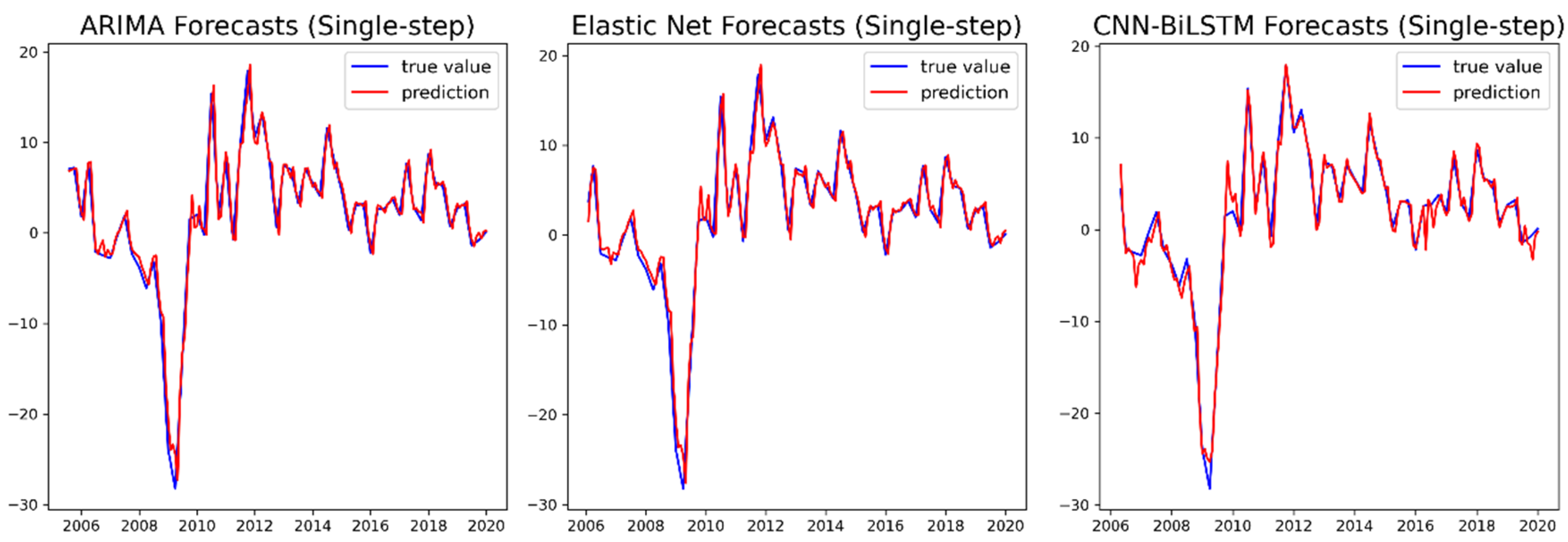

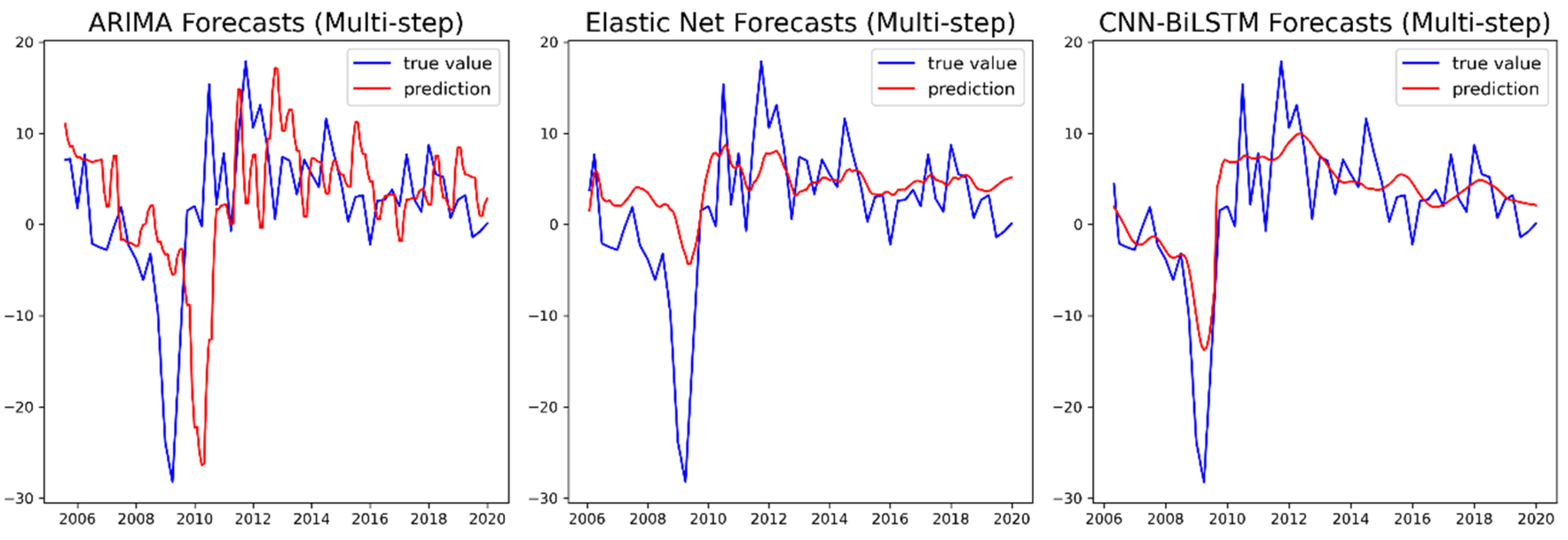

4. Results

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix

Appendix A.1

| Variable | Selected variables |
|---|---|
| consumption | inflation, per capita GDP, corporate taxation, private savings, expen. durable goods, household income, unemployment rate, unempl. over 27 weeks |
| investments | consumption, imports, exports, per capita GDP, firms’ profits, expen. durable goods, GDP, house prices, employment rate, unemployment rate, unempl. over 27 weeks |
| imports | consumption, exports, inflation, GDP, expen. durable goods, household income, government expenditure, house prices |
| exports | consumption, investments, inflation, GDP, expen. durable goods, government expenditure, unemployment rate |
| inflation | corporate taxation, private savings, GDP, government expenditure, unempl. over 27 weeks |
| wages | per capita GDP, firms’ profits, corporate taxation, household income |
| per capita GDP | investments, wages, firms’ profits, corporate taxation, private savings, GDP, household income, employment rate |
| firms’ profits | wages, per capita GDP, private savings, GDP |
| corporate taxation | wages, firms’ profits, private savings, unemployment rate |
| private savings | wages, per capita GDP, firms’ profits, household income |
| expen. durable goods | inflation, wages, firms’ profits, private savings, employment rate, unemployment rate, unempl. over 27 weeks |
| GDP | consumption, investments, per capita GDP, firms’ profits, expen. durable goods, household income, government expenditure, house prices, unemployment rate |
| household income | consumption, imports, inflation, wages, firms’ profits, private savings, expen. durable goods, house prices, employment rate, unemployment rate, unempl. over 27 weeks |
| government expenditure | investments, exports, inflation, expen. durable goods, household income, unemployment rate |
| house prices | imports, exports, inflation, corporate taxation, expen. durable goods, GDP, government expenditure, employment rate, unemployment rate |
| employment rate | consumption, inflation, per capita GDP, GDP, household income, government expenditure |
| unemployment rate | consumption, inflation, expen. durable goods, GDP, employment rate |
| unempl. over 27 weeks | private savings, imports, exports, GDP, house prices, unemployment rate |
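The table above pairs each series with a subset of the other series. One way such a mapping could feed a sequence model is to stack the past values of the target and of its listed variables into fixed-length input windows, with the next value of the target as the label. The sketch below assumes exactly that; the lookback length, the dictionary `SELECTED`, and the helper `make_windows` are hypothetical illustrations, not the paper's preprocessing.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical excerpt of the Appendix A.1 mapping (target -> selected variables);
# assumes these series are used as extra input channels for the target.
SELECTED: Dict[str, List[str]] = {
    "wages": ["per capita GDP", "firms’ profits", "corporate taxation", "household income"],
}


def make_windows(
    data: Dict[str, Sequence[float]],
    target: str,
    covariates: List[str],
    lookback: int,
) -> Tuple[List[List[List[float]]], List[float]]:
    """Slide a window of `lookback` steps over the series.

    X[i] has shape lookback x (1 + len(covariates)), with the target in column 0;
    y[i] is the target value at the step immediately after that window.
    """
    columns = [target] + covariates
    n = len(data[target])
    if any(len(data[c]) != n for c in columns):
        raise ValueError("all series must have the same length")
    if not 0 < lookback < n:
        raise ValueError("lookback must be between 1 and len(series) - 1")
    X: List[List[List[float]]] = []
    y: List[float] = []
    for start in range(n - lookback):
        window = [[float(data[c][t]) for c in columns] for t in range(start, start + lookback)]
        X.append(window)
        y.append(float(data[target][start + lookback]))
    return X, y


# Usage on toy data (all series have length 5, so a lookback of 3 gives 2 samples).
cols = ["wages"] + SELECTED["wages"]
toy = {c: [float(i + 10 * k) for i in range(5)] for k, c in enumerate(cols)}
X, y = make_windows(toy, "wages", SELECTED["wages"], lookback=3)
print(len(X), len(X[0]), len(X[0][0]), y)  # 2 3 5 [3.0, 4.0]
```

Because the windows are built in time order, any train/test split applied to them should also be chronological, so that no test window leaks information into training.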

Appendix A.2. Specifications of the ARIMA, ARIMAX, and VAR Models

References

- Fan, J.; Ma, C.; Zhong, Y. A selective overview of deep learning. Stat. Sci. 2021, 36, 264–290.

- Mathew, A.; Amudha, P.; Sivakumari, S. Deep Learning Techniques: An Overview. In Advanced Machine Learning Technologies and Applications, Proceedings of the International Conference on Advances in Intelligent Systems and Computing (AMLTA 2020), Jaipur, India, 13–15 February 2020; Hassanien, A., Bhatnagar, R., Darwish, A., Eds.; Springer: Singapore, 2020; Volume 1141, p. 1141.

- Chakraborty, C.; Joseph, A. Machine Learning at Central Banks; Working Paper 674; Bank of England: London, UK, 2017.

- Espeholt, L.; Agrawal, S.; Sonderby, C.; Kumar, M.; Heek, J.; Bromberg, C.; Gazen, C.; Carver, R.; Andrychowicz, M.; Hickey, J.; et al. Skillful twelve hour precipitation forecasts using large context neural networks. arXiv 2021, arXiv:2111.07470.

- Chen, G.; Liu, S.; Jiang, F. Daily Weather Forecasting Based on Deep Learning Model: A Case Study of Shenzhen City, China. Atmosphere 2022, 13, 1208.

- Corsaro, S.; De Simone, V.; Marino, Z.; Scognamiglio, S. l1-Regularization in portfolio selection with machine learning. Mathematics 2022, 10, 540.

- Asawa, Y.S. Modern Machine Learning Solutions for Portfolio Selection. IEEE Eng. Manag. Rev. 2022, 50, 94–112.

- Kumbure, M.M.; Lohrmann, C.; Luukka, P.; Porras, J. Machine learning techniques and data for stock market forecasting: A literature review. Expert Syst. Appl. 2022, 197, 116659.

- Khalid, A.; Huthaifa, K.; Hamzah, A.A.; Anas, R.A.; Laith, A. A New Stock Price Forecasting Method Using Active Deep Learning Approach. J. Open Innov. Technol. Mark. Complex. 2022, 8, 96, ISSN 2199-8531.

- Staffini, A. Stock Price Forecasting by a Deep Convolutional Generative Adversarial Network. Front. Artif. Intell. 2022, 5, 837596.

- Namini, S.S.; Tavakoli, N.; Namin, A.S. A Comparison of ARIMA and LSTM in Forecasting Time Series. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1394–1401.

- Zulfany, E.R.; Reina, S.; Andy, E. A Comparison: Prediction of Death and Infected COVID-19 Cases in Indonesia Using Time Series Smoothing and LSTM Neural Network. Procedia Comput. Sci. 2021, 179, 982–988, ISSN 1877-0509.

- Jin, X.; Yu, X.; Wang, X.; Bai, Y.; Su, T.; Kong, J. Prediction for Time Series with CNN and LSTM. In Proceedings of the 11th International Conference on Modelling, Identification and Control (ICMIC2019); Lecture Notes in Electrical Engineering; Wang, R., Chen, Z., Zhang, W., Zhu, Q., Eds.; Springer: Singapore, 2020; Volume 582.

- Jama, M. Time Series Modeling and Forecasting of Somaliland Consumer Price Index: A Comparison of ARIMA and Regression with ARIMA Errors. Am. J. Theor. Appl. Stat. 2020, 9, 143–153.

- Hall, A.S. Machine Learning Approaches to Macroeconomic Forecasting. Econ. Rev. Fed. Reserve Bank Kans. City 2018, 103, 63–81.

- Khan, M.A.; Abbas, K.; Su’ud, M.M.; Salameh, A.A.; Alam, M.M.; Aman, N.; Mehreen, M.; Jan, A.; Hashim, N.A.A.B.N.; Aziz, R.C. Application of Machine Learning Algorithms for Sustainable Business Management Based on Macro-Economic Data: Supervised Learning Techniques Approach. Sustainability 2022, 14, 9964.

- Coulombe, P.G.; Leroux, M.; Stevanovic, D.; Surprenant, S. How is machine learning useful for macroeconomic forecasting? J. Appl. Econom. 2022, 37, 920–964.

- Nosratabadi, S.; Mosavi, A.; Duan, P.; Ghamisi, P.; Filip, F.; Band, S.S.; Reuter, U.; Gama, J.; Gandomi, A.H. Data Science in Economics: Comprehensive Review of Advanced Machine Learning and Deep Learning Methods. Mathematics 2020, 8, 1799.

- Yoon, J. Forecasting of Real GDP Growth Using Machine Learning Models: Gradient Boosting and Random Forest Approach. Comput. Econ. 2021, 57, 247–265.

- Vafin, A. Forecasting macroeconomic indicators for seven major economies using the ARIMA model. Sage Sci. Econ. Rev. 2020, 3, 1–16.

- Shijun, C.; Xiaoli, H.; Yunbin, S.; Chong, Y. Application of Improved LSTM Algorithm in Macroeconomic Forecasting. Comput. Intell. Neurosci. 2021, 2021, 4471044.

- Makridakis, S.; Spiliotis, E.; Assimakopoulos, V. Statistical and Machine Learning forecasting methods: Concerns and ways forward. PLoS ONE 2018, 13, e0194889.

- Staffini, A.; Svensson, T.; Chung, U.-I.; Svensson, A.K. Heart Rate Modeling and Prediction Using Autoregressive Models and Deep Learning. Sensors 2021, 22, 34.

- Springenberg, J.; Dosovitskiy, A.; Brox, T.; Riedmiller, M. Striving for Simplicity: The All Convolutional Net. arXiv 2015, arXiv:1412.6806.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM networks. In Proceedings of the IEEE International Joint Conference on Neural Networks 2005, Montreal, QC, Canada, 31 July–4 August 2005; Volume 4, pp. 2047–2052.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Yuanyuan, C.; Zhang, Y. Adaptive sparse dropout: Learning the certainty and uncertainty in deep neural networks. Neurocomputing 2021, 450, 354–361, ISSN 0925-2312.

- Bikash, S.; Angshuman, P.; Dipti, P.M. Deterministic dropout for deep neural networks using composite random forest. Pattern Recognit. Lett. 2020, 131, 205–212, ISSN 0167-8655.

- Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. J. Mach. Learn. Res. 2010, 9, 249–256.

- Le, Q.; Jaitly, N.; Hinton, G. A Simple Way to Initialize Recurrent Networks of Rectified Linear Units. arXiv 2015, arXiv:1504.00941.

- Calin, O. Deep Learning Architectures: A Mathematical Approach; Springer Series in the Data Sciences; Springer International Publishing: New York, NY, USA, 2020; ISBN 978-3-030-36721-3.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980v9.

- Ruder, S. An Overview of Multi-Task Learning in Deep Neural Networks. arXiv 2017, arXiv:1706.05098.

- Ge, R.; Huang, F.; Jin, C.; Yuan, Y. Escaping from Saddle Points – Online Stochastic Gradient for Tensor Decomposition. arXiv 2015, arXiv:1503.02101.

- Bengio, Y. Practical recommendations for gradient-based training of deep architectures. In Tricks of the Trade, 2nd ed.; Volume 7700 of Theoretical Computer Science and General Issues; Springer: Berlin/Heidelberg, Germany, 2012; pp. 437–478.

- Masters, D.; Luschi, C. Revisiting Small Batch Training for Deep Neural Networks. arXiv 2018, arXiv:1804.07612.

- Gholamy, A.; Kreinovich, V.; Kosheleva, O. Why 70/30 or 80/20 Relation Between Training and Testing Sets: A Pedagogical Explanation; University of Texas at El Paso Departmental Technical Reports (CS): El Paso, TX, USA, 2018.

- Willmott, C.J.; Matsuura, K. Advantages of the Mean Absolute Error (MAE) over the Root Mean Square Error (RMSE) in Assessing Average Model Performance. Clim. Res. 2005, 30, 79.

| Variable | Metric | ARIMA | Elastic Net | XGBoost | BiLSTM (std. dev.) | Our CNN-BiLSTM (std. dev.) |
|---|---|---|---|---|---|---|
| consumption | RMSE | 4.0965 | 2.9343 | 3.0921 | 2.9725 (0.0686) | 1.9736 (0.0463) |
| | MAE | 2.8597 | 1.9814 | 2.0763 | 2.0737 (0.0217) | 1.5941 (0.0459) |
| investments | RMSE | 8.8201 | 6.0735 | 6.1441 | 6.2068 (0.7248) | 3.7446 (0.1710) |
| | MAE | 6.0830 | 4.0524 | 3.9671 | 4.1441 (0.4170) | 2.9190 (0.1372) |
| imports | RMSE | 11.088 | 8.3575 | 7.7900 | 7.8201 (0.4894) | 4.3390 (0.1758) |
| | MAE | 7.1216 | 6.1214 | 5.2379 | 5.1850 (0.4264) | 3.3621 (0.1416) |
| exports | RMSE | 10.599 | 7.1607 | 7.2648 | 7.1996 (0.2561) | 5.1139 (0.1191) |
| | MAE | 6.6301 | 5.0656 | 4.9356 | 4.6305 (0.4166) | 3.3972 (0.0941) |
| inflation | RMSE | 2.2526 | 1.5051 | 1.5849 | 1.5760 (0.0418) | 0.8927 (0.0305) |
| | MAE | 1.4850 | 0.9833 | 0.9830 | 1.0704 (0.0357) | 0.6801 (0.0404) |
| wages | RMSE | 206.60 | 148.31 | 166.21 | 164.75 (8.7017) | 141.20 (2.4414) |
| | MAE | 143.02 | 101.07 | 127.92 | 130.71 (13.638) | 95.986 (3.4415) |
| per capita GDP | RMSE | 145.61 | 102.61 | 114.19 | 148.54 (9.9937) | 86.209 (2.9799) |
| | MAE | 107.06 | 76.707 | 87.994 | 125.79 (11.249) | 68.618 (3.3470) |
| firms’ profits | RMSE | 39.849 | 28.078 | 29.166 | 29.548 (1.0818) | 23.911 (0.9131) |
| | MAE | 28.648 | 19.137 | 19.690 | 19.660 (0.7318) | 18.481 (0.5890) |
| corporate taxation | RMSE | 4.0462 | 3.2166 | 3.7096 | 4.5836 (0.6401) | 3.0049 (0.0761) |
| | MAE | 3.1330 | 2.4978 | 2.9433 | 3.6909 (0.2091) | 2.3460 (0.0533) |
| private savings | RMSE | 47.670 | 37.938 | 38.543 | 39.569 (1.5244) | 36.840 (0.3155) |
| | MAE | 35.448 | 27.982 | 28.764 | 29.538 (1.2444) | 27.392 (0.3602) |
| expen. durable goods | RMSE | 8.5890 | 6.0217 | 6.3203 | 6.5361 (0.1523) | 4.2486 (0.0985) |
| | MAE | 5.9085 | 3.8684 | 4.2891 | 4.4667 (0.1151) | 3.2753 (0.0737) |
| GDP | RMSE | 2.9405 | 2.2276 | 2.2654 | 2.1998 (0.1925) | 1.3007 (0.0669) |
| | MAE | 2.0696 | 1.6256 | 1.5530 | 1.4258 (0.0305) | 0.9965 (0.0445) |
| household income | RMSE | 4.4913 | 3.0415 | 3.3690 | 3.0825 (0.0092) | 2.9496 (0.0172) |
| | MAE | 2.9752 | 2.0485 | 2.1789 | 1.9547 (0.0143) | 1.8623 (0.0161) |
| government expenditure | RMSE | 2.4130 | 2.4078 | 2.6883 | 2.4379 (0.0322) | 1.9379 (0.0894) |
| | MAE | 1.9668 | 1.8971 | 2.0271 | 2.0667 (0.0413) | 1.5853 (0.0892) |
| house prices | RMSE | 5.5399 | 2.8767 | 3.4029 | 3.6669 (0.1034) | 2.3246 (0.0597) |
| | MAE | 4.2488 | 1.9062 | 2.1275 | 2.5121 (0.1170) | 1.8021 (0.0506) |
| employment rate | RMSE | 0.9396 | 0.4462 | 0.6097 | 1.0915 (0.1840) | 0.8562 (0.0315) |
| | MAE | 0.5943 | 0.2688 | 0.3803 | 0.6800 (0.2310) | 0.7342 (0.0032) |
| unemployment rate | RMSE | 1.2203 | 0.5638 | 0.7087 | 0.6782 (0.0911) | 0.4307 (0.0561) |
| | MAE | 0.8644 | 0.4000 | 0.4847 | 0.4163 (0.0714) | 0.3565 (0.0417) |
| unemp. over 27 weeks | RMSE | 5.8469 | 3.9508 | 11.856 | 5.4433 (0.8422) | 1.9146 (0.1641) |
| | MAE | 3.8736 | 2.9983 | 8.7233 | 4.0504 (0.6085) | 1.4947 (0.1287) |

| Variable | Metric | ARIMA | Elastic Net | XGBoost | BiLSTM (std. dev.) | Our CNN-BiLSTM (std. dev.) |
|---|---|---|---|---|---|---|
| consumption | RMSE | 0.9643 | 0.9553 | 1.3197 | 0.9081 (0.0280) | 0.8653 (0.0238) |
| | MAE | 0.7120 | 0.6583 | 0.9289 | 0.6078 (0.0211) | 0.5385 (0.0168) |
| investments | RMSE | 1.4326 | 1.4369 | 2.3368 | 1.4349 (0.0392) | 1.3400 (0.0365) |
| | MAE | 0.9711 | 0.9419 | 1.4167 | 0.9849 (0.0383) | 0.9246 (0.0147) |
| imports | RMSE | 1.7607 | 2.3095 | 2.5519 | 1.7439 (0.1267) | 1.5588 (0.0478) |
| | MAE | 1.1129 | 1.6583 | 1.7599 | 1.1268 (0.0544) | 1.0276 (0.0273) |
| exports | RMSE | 2.283 | 2.2169 | 2.7194 | 2.3930 (0.1076) | 2.0841 (0.0452) |
| | MAE | 1.6165 | 1.5940 | 2.0555 | 1.5815 (0.0847) | 1.3116 (0.0749) |
| inflation | RMSE | 0.4846 | 0.9553 | 0.6456 | 0.6115 (0.0376) | 0.5554 (0.0149) |
| | MAE | 0.2919 | 0.6593 | 0.4136 | 0.3695 (0.0223) | 0.3225 (0.0204) |
| wages | RMSE | 117.50 | 98.920 | 127.54 | 126.99 (4.5553) | 121.86 (1.1490) |
| | MAE | 50.580 | 44.557 | 78.866 | 81.072 (9.1984) | 71.469 (2.7451) |
| per capita GDP | RMSE | 65.879 | 65.311 | 78.466 | 80.204 (5.5873) | 64.167 (0.4711) |
| | MAE | 37.380 | 32.470 | 46.829 | 56.759 (6.7406) | 40.493 (1.7013) |
| firms’ profits | RMSE | 20.872 | 19.399 | 23.328 | 20.771 (1.5599) | 17.165 (0.0945) |
| | MAE | 10.754 | 9.1343 | 13.805 | 12.611 (0.8903) | 9.4561 (0.1147) |
| corporate taxation | RMSE | 2.2362 | 2.2831 | 2.3848 | 2.5278 (0.1010) | 2.0766 (0.0557) |
| | MAE | 1.3484 | 1.2905 | 1.4029 | 1.7611 (0.1016) | 1.1868 (0.0732) |
| private savings | RMSE | 26.963 | 28.001 | 34.706 | 31.628 (2.3708) | 25.765 (0.3857) |
| | MAE | 18.998 | 20.012 | 23.230 | 21.659 (1.8836) | 15.805 (0.8280) |
| expen. durable goods | RMSE | 2.1026 | 2.0493 | 3.2429 | 1.9780 (0.1184) | 1.7694 (0.0524) |
| | MAE | 1.3557 | 1.4233 | 2.1295 | 1.4542 (0.0796) | 1.2527 (0.0757) |
| GDP | RMSE | 0.6006 | 0.6097 | 0.7673 | 0.5439 (0.0094) | 0.5086 (0.0291) |
| | MAE | 0.4002 | 0.3924 | 0.5539 | 0.3475 (0.0222) | 0.3165 (0.0240) |
| household income | RMSE | 0.9904 | 1.1643 | 1.7724 | 1.1304 (0.0906) | 0.8914 (0.0281) |
| | MAE | 0.5434 | 0.6927 | 0.9226 | 0.6783 (0.0442) | 0.6085 (0.0277) |
| government expenditure | RMSE | 0.5634 | 0.6093 | 0.7855 | 0.5975 (0.0394) | 0.5191 (0.0098) |
| | MAE | 0.4001 | 0.4405 | 0.6336 | 0.4525 (0.0304) | 0.3923 (0.0062) |
| house prices | RMSE | 0.7943 | 0.7671 | 1.2116 | 0.9762 (0.0797) | 0.8293 (0.0291) |
| | MAE | 0.5159 | 0.4363 | 0.7466 | 0.6241 (0.0592) | 0.5085 (0.0313) |
| employment rate | RMSE | 0.1316 | 0.1373 | 0.2263 | 0.3539 (0.0297) | 0.3716 (0.0058) |
| | MAE | 0.1029 | 0.1059 | 0.1703 | 0.2360 (0.0404) | 0.2422 (0.0141) |
| unemployment rate | RMSE | 0.1547 | 0.1592 | 0.2037 | 0.1552 (0.0022) | 0.1569 (0.0007) |
| | MAE | 0.1238 | 0.1279 | 0.1526 | 0.1230 (0.0027) | 0.1247 (0.0011) |
| unemp. over 27 weeks | RMSE | 1.2845 | 1.2896 | 9.7659 | 1.3294 (0.0988) | 1.5099 (0.0656) |
| | MAE | 0.9715 | 0.9756 | 6.6189 | 1.0333 (0.0757) | 1.1661 (0.0587) |

| Variable | Metric | ARIMAX | VAR | Elastic Net | XGBoost | BiLSTM (std. dev.) | Our CNN-BiLSTM (std. dev.) |
|---|---|---|---|---|---|---|---|
| consumption | RMSE | 4.0460 | 4.1935 | 3.6708 | 3.4773 | 3.4177 (0.1048) | 3.2332 (0.0232) |
| | MAE | 2.7761 | 3.0393 | 2.6287 | 2.3817 | 2.5099 (0.0734) | 2.2925 (0.0376) |
| investments | RMSE | 8.3121 | 11.113 | 6.9493 | 6.4072 | 6.2005 (0.3294) | 5.6495 (0.0631) |
| | MAE | 5.4483 | 8.8338 | 4.5236 | 4.2031 | 4.2836 (0.2893) | 3.8215 (0.1032) |
| imports | RMSE | 9.5076 | 8.7729 | 7.6435 | 7.8722 | 6.9976 (0.1286) | 6.5982 (0.0757) |
| | MAE | 7.1720 | 6.8316 | 5.4115 | 5.5894 | 4.3225 (0.1295) | 4.0813 (0.0615) |
| exports | RMSE | 8.6620 | 8.7729 | 7.6435 | 7.3792 | 7.3524 (0.1209) | 6.9591 (0.1499) |
| | MAE | 6.4796 | 6.8316 | 5.4115 | 5.3136 | 5.2083 (0.2321) | 4.7479 (0.1894) |
| inflation | RMSE | 1.9595 | 1.7622 | 1.6580 | 1.5018 | 1.5093 (0.0164) | 1.5165 (0.0353) |
| | MAE | 1.5155 | 1.1454 | 1.2344 | 0.9855 | 1.0497 (0.0339) | 1.0930 (0.0473) |
| wages | RMSE | 173.51 | 164.74 | 142.60 | 147.79 | 154.29 (7.3877) | 141.65 (0.4800) |
| | MAE | 135.74 | 123.20 | 94.343 | 99.890 | 108.36 (11.398) | 91.314 (1.1180) |
| per capita GDP | RMSE | 131.39 | 127.81 | 108.66 | 109.46 | 104.87 (2.6097) | 97.836 (0.5310) |
| | MAE | 103.27 | 99.720 | 80.566 | 84.470 | 79.508 (2.7268) | 72.670 (0.4794) |
| firms’ profits | RMSE | 29.515 | 29.606 | 29.169 | 28.808 | 28.881 (0.1190) | 28.029 (0.0803) |
| | MAE | 20.162 | 20.127 | 20.061 | 19.758 | 19.517 (0.1231) | 18.927 (0.1183) |
| corporate taxation | RMSE | 4.1696 | 3.9330 | 3.0939 | 3.5095 | 3.2635 (0.0528) | 2.9983 (0.0300) |
| | MAE | 3.0078 | 2.8608 | 2.3600 | 2.7044 | 2.5187 (0.0696) | 2.2922 (0.0125) |
| private savings | RMSE | 39.156 | 40.942 | 37.644 | 37.657 | 36.509 (0.3576) | 35.862 (0.2169) |
| | MAE | 29.315 | 30.340 | 27.215 | 27.197 | 26.957 (0.1213) | 26.416 (0.0249) |
| expen. durable goods | RMSE | 7.5644 | 6.9866 | 6.7296 | 6.6235 | 6.7366 (0.1282) | 6.3273 (0.1463) |
| | MAE | 5.0644 | 5.3048 | 4.3630 | 4.4179 | 4.7585 (0.2162) | 4.2023 (0.0660) |
| GDP | RMSE | 3.2227 | 3.3951 | 2.5851 | 2.3209 | 2.2353 (0.1251) | 2.0479 (0.0587) |
| | MAE | 2.4332 | 2.3968 | 1.8965 | 1.6542 | 1.6550 (0.2241) | 1.3924 (0.0620) |
| household income | RMSE | 3.5447 | 3.0540 | 3.1000 | 3.4116 | 3.0019 (0.0269) | 2.8639 (0.1174) |
| | MAE | 2.5716 | 2.0809 | 2.1340 | 2.1781 | 2.0218 (0.0438) | 1.9530 (0.0519) |
| government expenditure | RMSE | 3.5540 | 4.8023 | 2.0151 | 2.3825 | 2.3299 (0.0241) | 1.5517 (0.0455) |
| | MAE | 2.7296 | 3.4904 | 1.5048 | 1.8464 | 1.8869 (0.0480) | 1.2469 (0.0295) |
| house prices | RMSE | 4.1156 | 5.2339 | 3.4730 | 3.4337 | 3.6274 (0.0694) | 3.2234 (0.0437) |
| | MAE | 2.7895 | 4.0954 | 2.5971 | 2.4875 | 2.7029 (0.3359) | 2.3406 (0.0359) |
| employment rate | RMSE | 2.0070 | 4.2618 | 0.4970 | 0.5008 | 0.5733 (0.1733) | 0.3754 (0.0168) |
| | MAE | 1.5946 | 3.9164 | 0.3281 | 0.3830 | 0.4789 (0.1207) | 0.2988 (0.0182) |
| unemployment rate | RMSE | 2.0894 | 2.3794 | 0.5858 | 0.5554 | 0.6595 (0.0508) | 0.4636 (0.0306) |
| | MAE | 1.6533 | 1.7583 | 0.4443 | 0.4153 | 0.4667 (0.0342) | 0.3147 (0.0314) |
| unemp. over 27 weeks | RMSE | 17.772 | 17.696 | 2.9366 | 9.5743 | 3.7248 (0.6547) | 2.8321 (0.0330) |
| | MAE | 15.120 | 17.218 | 2.2811 | 6.9341 | 3.1515 (0.5486) | 2.2403 (0.0075) |

| Variable | Metric | ARIMAX | VAR | Elastic Net | XGBoost | BiLSTM (std. dev.) | Our CNN-BiLSTM (std. dev.) |
|---|---|---|---|---|---|---|---|
| consumption | RMSE | 1.7566 | 2.9969 | 1.1707 | 1.6084 | 1.9307 (0.1019) | 2.1592 (0.1323) |
| | MAE | 1.2117 | 2.4505 | 0.8992 | 1.2032 | 1.4358 (0.1144) | 1.6629 (0.1393) |
| investments | RMSE | 5.3641 | 10.213 | 2.7433 | 2.8168 | 2.5233 (0.1986) | 2.2397 (0.2277) |
| | MAE | 3.6766 | 9.2144 | 2.2516 | 1.8298 | 1.8002 (0.1657) | 1.6682 (0.1700) |
| imports | RMSE | 5.3600 | 6.0002 | 2.8470 | 3.4139 | 3.2036 (0.2811) | 2.3958 (0.0599) |
| | MAE | 4.3036 | 4.7285 | 2.1585 | 2.0686 | 2.4632 (0.1612) | 1.7573 (0.0653) |
| exports | RMSE | 7.5044 | 7.2302 | 3.9126 | 3.9077 | 4.4484 (0.3598) | 3.6799 (0.1089) |
| | MAE | 5.1107 | 5.4215 | 2.8886 | 2.7276 | 3.0564 (0.1857) | 2.7379 (0.1062) |
| inflation | RMSE | 2.4004 | 5.8533 | 0.6923 | 0.7326 | 1.3577 (0.1675) | 1.1152 (0.2807) |
| | MAE | 1.9318 | 4.6454 | 0.4677 | 0.4734 | 1.0098 (0.1148) | 0.8316 (0.2151) |
| wages | RMSE | 144.23 | 138.73 | 117.72 | 124.43 | 118.26 (2.1950) | 114.22 (2.0562) |
| | MAE | 107.37 | 92.062 | 68.958 | 75.590 | 68.691 (2.4276) | 63.500 (1.9796) |
| per capita GDP | RMSE | 87.899 | 78.678 | 64.985 | 83.200 | 71.752 (2.4801) | 61.601 (1.3644) |
| | MAE | 62.521 | 59.400 | 45.917 | 54.800 | 49.911 (0.9571) | 42.292 (2.1096) |
| firms’ profits | RMSE | 23.038 | 24.186 | 22.729 | 28.622 | 21.102 (0.6201) | 18.358 (0.3645) |
| | MAE | 16.984 | 17.132 | 12.229 | 18.731 | 13.122 (0.3800) | 11.501 (0.6107) |
| corporate taxation | RMSE | 3.5300 | 3.2948 | 2.3399 | 2.5744 | 2.4300 (0.1239) | 2.2880 (0.0093) |
| | MAE | 2.6559 | 2.6556 | 1.5964 | 1.5975 | 1.6751 (0.0506) | 1.5749 (0.0094) |
| private savings | RMSE | 29.915 | 32.496 | 28.248 | 37.090 | 33.859 (1.1122) | 25.749 (0.4926) |
| | MAE | 21.136 | 22.814 | 16.479 | 23.940 | 22.989 (1.0929) | 15.895 (0.2147) |
| expen. durable goods | RMSE | 7.6315 | 15.597 | 5.2789 | 5.0983 | 7.0292 (0.3995) | 4.2094 (0.1529) |
| | MAE | 4.9930 | 12.497 | 3.4234 | 3.2737 | 5.0255 (0.7138) | 3.1987 (0.1189) |
| GDP | RMSE | 1.3641 | 2.2758 | 0.9309 | 0.9349 | 1.1034 (0.0647) | 0.8727 (0.0372) |
| | MAE | 0.9897 | 1.9223 | 0.7082 | 0.7232 | 0.7880 (0.0315) | 0.6515 (0.0343) |
| household income | RMSE | 3.1828 | 7.3236 | 2.1474 | 1.9454 | 2.2263 (0.1348) | 2.2520 (0.1713) |
| | MAE | 2.2418 | 5.7770 | 1.4936 | 1.0294 | 1.5581 (0.1717) | 1.6404 (0.1257) |
| government expenditure | RMSE | 3.8283 | 2.8563 | 1.2604 | 1.0056 | 1.1806 (0.1617) | 1.0664 (0.0420) |
| | MAE | 3.1686 | 2.2076 | 1.0073 | 0.8085 | 0.8747 (0.1080) | 0.8037 (0.0320) |
| house prices | RMSE | 4.0064 | 3.2791 | 0.7930 | 1.3561 | 1.3317 (0.1338) | 1.2537 (0.0430) |
| | MAE | 2.7954 | 2.7633 | 0.6006 | 0.9131 | 1.0029 (0.1015) | 0.9268 (0.0668) |
| employment rate | RMSE | 2.0802 | 0.6177 | 0.1319 | 0.2055 | 0.2540 (0.0274) | 0.2182 (0.0099) |
| | MAE | 1.6528 | 0.4981 | 0.1027 | 0.1618 | 0.1981 (0.0160) | 0.1700 (0.0067) |
| unemployment rate | RMSE | 1.8229 | 2.2742 | 0.1414 | 0.3147 | 0.2357 (0.0259) | 0.2013 (0.0022) |
| | MAE | 1.3537 | 1.8059 | 0.1287 | 0.2085 | 0.1915 (0.0240) | 0.1566 (0.0021) |
| unemp. over 27 weeks | RMSE | 19.020 | 12.429 | 1.1769 | 10.796 | 1.9132 (0.2058) | 1.7467 (0.0925) |
| | MAE | 16.049 | 11.726 | 0.9036 | 7.5981 | 1.4810 (0.2187) | 1.3394 (0.0701) |
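The tables report RMSE and MAE for each model and, for the two neural networks, a standard deviation in parentheses. A minimal sketch of these metrics follows, assuming the parenthesized values summarize the spread of each metric over repeated training runs (for example, runs with different random seeds). Whether the population or the sample standard deviation was used is not stated here, so the choice of `statistics.pstdev` and the helper names `rmse`, `mae`, and `summarize_runs` are assumptions for illustration.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error: sqrt(mean((y - y_hat)^2))."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: mean(|y - y_hat|)."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def summarize_runs(
    y_true: Sequence[float], runs: List[Sequence[float]]
) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    """Return ((mean RMSE, std RMSE), (mean MAE, std MAE)) over repeated runs.

    Uses the population standard deviation (an assumption, not the paper's stated choice).
    """
    rmses = [rmse(y_true, pred) for pred in runs]
    maes = [mae(y_true, pred) for pred in runs]
    return (mean(rmses), pstdev(rmses)), (mean(maes), pstdev(maes))


# Usage: two hypothetical runs whose absolute errors are 1 and 3 at every point.
y_true = [0.0, 0.0, 0.0]
runs = [[1.0, -1.0, 1.0], [3.0, 3.0, -3.0]]
print(summarize_runs(y_true, runs))  # ((2.0, 1.0), (2.0, 1.0))
```

Since RMSE is the quadratic mean of the absolute errors and MAE is their arithmetic mean, RMSE is never smaller than MAE, with equality only when all absolute errors are equal (as in the toy runs above). This gives a quick sanity check on each RMSE/MAE pair in the tables.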

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Staffini, A. A CNN–BiLSTM Architecture for Macroeconomic Time Series Forecasting. Eng. Proc. 2023, 39, 33. https://doi.org/10.3390/engproc2023039033
