Abstract

The forecasting of a signal that locally satisfies linear recurrence relations (LRRs) with slowly changing coefficients is considered. We propose a method that estimates the local LRRs using a subspace-based method, predicts their coefficients, and constructs a forecast using the LRR with the predicted coefficients. The method is implemented for time series in the form of a noisy sum of sine waves with modulated frequencies; linear and sinusoidal frequency modulations are considered. The application of the algorithm is demonstrated with numerical examples.

1. Introduction

Let us consider the problem of forecasting time series using singular spectrum analysis (SSA) [1,2,3,4,5,6]. The theory of SSA is well developed, and there are many papers applying SSA to real-life time series (see, for example, [6] and [7] (Section 1.7) for short reviews). SSA does not require a time-series model to construct the decomposition into interpretable components such as the trend, periodicities, and noise. However, for prediction in SSA, it is assumed that the signal (the deterministic component of the time series) satisfies some model, in particular, a linear recurrence relation (LRR) with constant coefficients, possibly approximately:

$$x_n = \sum_{i=1}^{d} a_i x_{n-i}, \quad n = d+1, \ldots, N. \qquad (1)$$

This assumption is valid for signals in the form of a sum of products of polynomial, exponential, and sinusoidal time series, in particular, a sum of exponentially modulated periodic components. SSA also works for trend extraction and, more generally, for amplitude-modulated harmonics, where the model is satisfied approximately. However, SSA forecasting is not applicable if the signal only locally satisfies a changing LRR. An example of such a signal is a sinusoid with sinusoidally modulated frequency. This paper aims to construct a method for the prediction of time series locally governed by changing LRRs, staying within the framework of SSA.

Let us consider the model of time series in the form of a noisy signal, where the signal is locally governed by LRRs with slowly time-varying coefficients. A local version of SSA was considered earlier for signal estimation [8]. However, it yields separate approximations of different segments of the time series, so the prediction can be based on the last segment only. In this paper, we propose a local modification of recurrent SSA prediction, based on forecasting the coefficients of the local LRRs. This modification is applied to time series in which the signal is a sum of sinusoids with slowly varying instantaneous frequencies whose frequency ranges do not intersect.

2. Basic Notions

2.1. Linear Recurrence Relations

Let us introduce several definitions. By a time series of length $N$ we mean a sequence of real numbers $\mathsf{X} = (x_1, \ldots, x_N)$. Consider a time series in the form of the sum of a signal and noise, $x_n = s_n + \varepsilon_n$, and state the problem of forecasting the signal.

A time series $\mathsf{X}$ satisfies a linear recurrence relation (LRR) of order $d$ if there exists a sequence $a_1, \ldots, a_d$ such that $a_d \neq 0$ and (1) holds.

A time series governed by an LRR satisfies a set of LRRs, one of which has minimal order; we call it the minimal LRR. Among the governing LRRs of a given order, there is the so-called min-norm LRR, which has the minimum norm of coefficients; it suppresses noise in the best way [5] and is used for recurrent SSA forecasting.

The characteristic polynomial of LRR (1) is defined as

$$P_d(\mu) = \mu^d - \sum_{i=1}^{d} a_i \mu^{d-i}.$$

The roots of the characteristic polynomial of the minimal LRR are called signal roots. The characteristic polynomial of any LRR governing the signal has the signal roots among its roots; its other roots are called extraneous [10].

Remark 1.

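Since $P_d(\mu) = \prod_{k=1}^{d} (\mu - \mu_k)$, where $\mu_1, \ldots, \mu_d$ are the roots counted with multiplicities, the roots of the characteristic polynomial determine the LRR coefficients, and vice versa.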
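To illustrate Remark 1, the following minimal Python/NumPy sketch converts between a set of roots and the coefficients $a_1, \ldots, a_d$ of the LRR in the sign convention of (1). The function names are hypothetical helpers, not part of any SSA package; the conversion relies on `numpy.poly` and `numpy.roots`.

```python
import numpy as np

def lrr_coeffs_from_roots(roots):
    """Return (a_1, ..., a_d) of the LRR x_n = sum_i a_i x_{n-i} whose
    characteristic polynomial mu^d - sum_i a_i mu^(d-i) has the given roots."""
    # np.poly gives the monic coefficients [1, c_1, ..., c_d] of prod_k (mu - mu_k),
    # highest power first; matching terms with P_d gives a_i = -c_i.
    c = np.poly(roots)
    # A root set closed under conjugation yields real coefficients (up to rounding).
    return np.real_if_close(-c[1:])

def roots_from_lrr_coeffs(a):
    """Return the roots of mu^d - a_1 mu^(d-1) - ... - a_d."""
    a = np.asarray(a, dtype=float)
    return np.roots(np.concatenate(([1.0], -a)))

# Illustrative conjugate pair on the unit circle.
omega = 0.1
mu = np.exp(2j * np.pi * omega)
a = lrr_coeffs_from_roots([mu, np.conj(mu)])   # approximately [2 cos(0.2 pi), -1]
roots = roots_from_lrr_coeffs(a)
```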

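The following result [9], together with Remark 1, shows how to find the roots of the governing minimal LRR from the general term of the time series $\mathsf{X}$. Let $\mu_1, \ldots, \mu_p$ be the distinct roots of the LRR characteristic polynomial with multiplicities $k_1, \ldots, k_p$. The time series satisfies the LRR if and only if $x_n = \sum_{m=1}^{p} P_m(n)\, \mu_m^n$, where $P_m(n)$ is a polynomial in $n$ of degree at most $k_m - 1$.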

Example 1.

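Consider the time series with $x_n = A\cos(2\pi\omega n + \phi)$, $0 < \omega < 0.5$. Since $\cos(2\pi\omega n + \phi) = \big(e^{i(2\pi\omega n + \phi)} + e^{-i(2\pi\omega n + \phi)}\big)/2$, we have $\mu_1 = e^{i2\pi\omega}$, $\mu_2 = e^{-i2\pi\omega}$. Therefore, the characteristic polynomial is $(\mu - \mu_1)(\mu - \mu_2) = \mu^2 - 2\cos(2\pi\omega)\mu + 1$. Thus, $d = 2$, $a_1 = 2\cos(2\pi\omega)$, and $a_2 = -1$.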
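Example 1 can be checked numerically. The sketch below, with arbitrarily chosen illustrative values of $A$, $\omega$ and $\phi$, evaluates the residual of the order-2 LRR $x_n = 2\cos(2\pi\omega)\,x_{n-1} - x_{n-2}$ on a sampled cosine; the residual should be at the level of rounding error.

```python
import numpy as np

# Illustrative parameters (not taken from the paper).
A, omega, phi = 1.5, 0.07, 0.3
n = np.arange(1, 101)
x = A * np.cos(2 * np.pi * omega * n + phi)

# Coefficients of the order-2 LRR from Example 1.
a1, a2 = 2 * np.cos(2 * np.pi * omega), -1.0

# Residuals x_n - a1 * x_{n-1} - a2 * x_{n-2} for n = 3, ..., 100.
residual = x[2:] - a1 * x[1:-1] - a2 * x[:-2]
max_residual = np.max(np.abs(residual))
```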

Remark 2.

The method for constructing the min-norm LRR of a given order with the given signal roots is described in [10]. This method will be used in the algorithm proposed in Section 3.2.

2.2. Harmonic Signal with Time-Varying Frequency: Instantaneous Frequency

In this paper, the basic form of signals will be the discrete-time version of

where , , are slowly changing functions. Note that if are linear functions, the signal satisfies an LRR; see [4] (Section 2.2) and Remark 1.

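Let $s(t) = A(t)\cos(2\pi\varphi(t))$. The instantaneous frequency of the signal is defined as $\omega(t) = \varphi'(t)$. The instantaneous period is the function $T(t) = 1/\omega(t)$; if $\omega(a) = 0$ for some $a$, we put $T(a) = \infty$. The frequency range of the signal is the interval $[\min_t \omega(t), \max_t \omega(t)]$, that is, the image of $\omega(t)$.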
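As an illustration of these definitions, the sketch below assumes a hypothetical linear chirp $s(t) = \cos(2\pi\varphi(t))$ with $\varphi(t) = \omega_0 t + a t^2/2$, whose instantaneous frequency is $\varphi'(t) = \omega_0 + a t$; the values of $\omega_0$ and $a$ are illustrative. It computes the instantaneous frequency by numerical differentiation of the phase, the instantaneous period with the convention $T = \infty$ where the frequency vanishes, and the frequency range over $t = 1, \ldots, 1000$.

```python
import numpy as np

def chirp_phase(t, omega0=0.05, a=1e-4):
    """Hypothetical linear-chirp phase phi(t) = omega0*t + a*t^2/2."""
    return omega0 * t + a * t**2 / 2

t = np.arange(1, 1001, dtype=float)
# Numerical derivative phi'(t); second-order edge formulas are exact for a quadratic.
inst_freq = np.gradient(chirp_phase(t), t, edge_order=2)
# Instantaneous period T(t) = 1/omega(t), set to inf where omega(t) = 0.
inst_period = np.divide(1.0, inst_freq, out=np.full_like(inst_freq, np.inf),
                        where=inst_freq != 0)
# Frequency range: the image of omega(t) on the sampled interval.
freq_range = (inst_freq.min(), inst_freq.max())
```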

Example 2.

For the signal , the instantaneous frequency is a linear function; the frequency range equals . For the signal , the instantaneous frequency equals and is a periodic function with period equal to ; the frequency range is

We assume that, on short time segments, the signals considered in Example 2 are sufficiently well approximated by a sinusoid whose frequency equals the instantaneous frequency at the middle of the segment. This assumption is used for the construction of the signal-forecasting algorithm.

2.3. SSA, Signal Subspace and Recurrent SSA Forecasting

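In the version for signal extraction, SSA has two parameters: the window length $L$, $1 < L < N$, where $N$ is the time-series length, and the number of elementary components $r$. At the first step of SSA, the trajectory matrix $\mathbf{X} = \mathcal{T}_L(\mathsf{X})$ of size $L \times K$, where $K = N - L + 1$, is constructed; its columns are the lagged vectors $(x_j, \ldots, x_{j+L-1})^\mathrm{T}$, $j = 1, \ldots, K$. This matrix is then decomposed into elementary components using the SVD. The leading $r$ SVD components are used for the estimation of the signal and of the signal subspace basis, which is used for constructing the forecasting LRR. Here is the scheme of the method given in [6]:

$$\mathsf{X} \xrightarrow{\ \text{embedding}\ } \mathbf{X} = \mathcal{T}_L(\mathsf{X}) \xrightarrow{\ \text{SVD}\ } \mathbf{X} = \sum_{i} \sqrt{\lambda_i}\, U_i V_i^\mathrm{T} \xrightarrow{\ \text{grouping}\ } \widehat{\mathbf{S}} = \sum_{i=1}^{r} \sqrt{\lambda_i}\, U_i V_i^\mathrm{T} \xrightarrow{\ \text{diagonal averaging}\ } \widetilde{\mathsf{S}} = \mathcal{T}_L^{-1} \Pi_{\mathcal{H}} \widehat{\mathbf{S}}.$$

Thus, a concise form of the SSA algorithm for signal extraction is

$$\widetilde{\mathsf{S}} = \mathcal{T}_L^{-1} \Pi_{\mathcal{H}} \Pi_r \mathcal{T}_L(\mathsf{X}),$$

where $\Pi_r$ is the projector to the set of matrices of rank not larger than $r$ (computed via the SVD) and $\Pi_{\mathcal{H}}$ is the projector to the set of Hankel matrices, that is, the set of trajectory matrices.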
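A minimal NumPy sketch of the basic SSA scheme (embedding, SVD, rank-$r$ truncation, and diagonal averaging as the projection onto Hankel matrices followed by the inverse embedding) is given below. The function names and the test signal are illustrative assumptions; for real applications, an established implementation such as the R package Rssa described in [7] is preferable.

```python
import numpy as np

def embed(x, L):
    """Trajectory (Hankel) matrix T_L(x) of size L x K, K = N - L + 1."""
    K = len(x) - L + 1
    return np.column_stack([x[j:j + L] for j in range(K)])

def diagonal_averaging(Y):
    """Pi_H followed by T_L^{-1}: average Y over its antidiagonals i + j = const."""
    L, K = Y.shape
    total = np.zeros(L + K - 1)
    count = np.zeros(L + K - 1)
    for i in range(L):
        total[i:i + K] += Y[i]
        count[i:i + K] += 1
    return total / count

def ssa_reconstruct(x, L, r):
    """Basic SSA: rank-r signal estimate and the leading r left singular vectors."""
    X = embed(np.asarray(x, dtype=float), L)
    U, sv, Vt = np.linalg.svd(X, full_matrices=False)
    X_r = (U[:, :r] * sv[:r]) @ Vt[:r]      # Pi_r: truncated SVD
    return diagonal_averaging(X_r), U[:, :r]

# Illustrative noisy sinusoid (arbitrary parameters).
rng = np.random.default_rng(0)
n = np.arange(1, 201)
s = np.cos(2 * np.pi * 0.08 * n)
x = s + 0.5 * rng.standard_normal(n.size)
s_hat, U_r = ssa_reconstruct(x, L=100, r=2)
```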

The $L$-rank of a time series is the rank of its trajectory matrix $\mathbf{X}$, or, equivalently, the dimension of the column space of $\mathbf{X}$. For an infinite time series and sufficiently large $L$, the $L$-rank is equal to the order of the minimal LRR governing the time series. For example, the rank of the signal with the general term $x_n = A\cos(2\pi\omega n + \phi)$, $A \neq 0$, and $0 < \omega < 0.5$ equals two.
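The $L$-rank can be inspected numerically. The sketch below computes the numerical rank of the trajectory matrix (via `numpy.linalg.matrix_rank` with its default tolerance) for a few illustrative series: a sinusoid and an exponentially damped sinusoid have rank 2, while a constant plus a sinusoid has rank 3. The helper name and the parameter values are assumptions made for illustration.

```python
import numpy as np

def l_rank(x, L):
    """Numerical rank of the L x K trajectory matrix of x."""
    x = np.asarray(x, dtype=float)
    K = len(x) - L + 1
    X = np.column_stack([x[j:j + L] for j in range(K)])
    return np.linalg.matrix_rank(X)

n = np.arange(1, 101)
rank_cos = l_rank(np.cos(2 * np.pi * 0.1 * n + 0.4), L=50)          # sinusoid
rank_damped = l_rank(0.98**n * np.cos(2 * np.pi * 0.1 * n), L=50)   # damped sinusoid
rank_const_plus_cos = l_rank(0.2 + 0.01 * np.cos(2 * np.pi * 0.02 * n), L=50)
```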

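The construction of the forecasting min-norm LRR of order $L-1$ based on the SSA decomposition follows the formula for the LRR coefficients [4] (Equation (2.1)):

$$\mathcal{R} = (a_{L-1}, \ldots, a_1)^\mathrm{T} = \frac{1}{1 - \nu^2} \sum_{i=1}^{r} \pi_i U_i^{\nabla}, \qquad \nu^2 = \sum_{i=1}^{r} \pi_i^2 < 1,$$

where $U_i^{\nabla}$ is the vector $U_i$ without the last coordinate, which is denoted by $\pi_i$. The forecast is constructed as $\tilde{x}_n = \sum_{i=1}^{L-1} a_i \tilde{x}_{n-i}$, $n = N+1, N+2, \ldots$, where $\tilde{x}_n$, $n \le N$, are the values of the reconstructed signal $\widetilde{\mathsf{S}}$.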
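The following sketch implements the min-norm LRR formula and the recurrent continuation, assuming that `U` holds the leading $r$ left singular vectors as orthonormal columns. It is checked on a noise-free sinusoid, for which the SSA basis spans the signal subspace exactly, so the forecast should reproduce the true continuation up to rounding error. Function names and parameter values are illustrative.

```python
import numpy as np

def min_norm_lrr(U):
    """R = (a_{L-1}, ..., a_1) from an L x r matrix U with orthonormal columns."""
    pi = U[-1, :]                         # last coordinates pi_i of U_i
    nu2 = float(pi @ pi)                  # nu^2 = sum_i pi_i^2
    if nu2 >= 1.0:
        raise ValueError("nu^2 >= 1: the min-norm LRR does not exist")
    return U[:-1, :] @ pi / (1.0 - nu2)   # sum_i pi_i U_i^nabla / (1 - nu^2)

def recurrent_forecast(s_rec, R, steps):
    """Continue the reconstructed series with x_n = sum_i a_i x_{n-i}."""
    y = list(np.asarray(s_rec, dtype=float))
    d = len(R)
    for _ in range(steps):
        # R[0] = a_{L-1} multiplies the oldest of the last L-1 values.
        y.append(float(R @ np.array(y[-d:])))
    return np.array(y[len(s_rec):])

# Noise-free sinusoid: fit on the first N points, forecast h points ahead.
N, L, r, h = 100, 40, 2, 20
n = np.arange(1, N + h + 1)
s = np.cos(2 * np.pi * 0.06 * n + 0.5)
K = N - L + 1
X = np.column_stack([s[j:j + L] for j in range(K)])
U = np.linalg.svd(X, full_matrices=False)[0][:, :r]
R = min_norm_lrr(U)
forecast = recurrent_forecast(s[:N], R, h)
```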

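In addition to forecasting, the SSA decomposition allows one to estimate the signal roots. Let us describe the ESPRIT method (see [7] (Algorithm 3.3) and [11]) for signal-root estimation. We define $\mathbf{U}_r = [U_1 : \ldots : U_r]$, the matrix $\overline{\mathbf{U}}_r$, which is $\mathbf{U}_r$ without the first row, and the matrix $\underline{\mathbf{U}}_r$, which is $\mathbf{U}_r$ without the last row. The ESPRIT estimates of the signal roots are the eigenvalues of a matrix $\mathbf{D}$ that is an approximate solution of the equation $\underline{\mathbf{U}}_r \mathbf{D} \approx \overline{\mathbf{U}}_r$; e.g., $\mathbf{D} = \underline{\mathbf{U}}_r^{\dagger} \overline{\mathbf{U}}_r$ for the LS-ESPRIT version, where $\dagger$ denotes the Moore–Penrose pseudoinverse.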
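A minimal LS-ESPRIT sketch in NumPy follows; the least-squares solution is obtained with `numpy.linalg.lstsq`, and the moduli and frequencies are read off the estimated roots as $|\mu|$ and $|\arg\mu|/(2\pi)$. The damped-sinusoid test signal, its parameters, and the function name are illustrative assumptions.

```python
import numpy as np

def ls_esprit(U):
    """Eigenvalues of D solving U_no_last @ D ~ U_no_first in the least-squares sense."""
    U_no_last = U[:-1, :]    # U_r without the last row
    U_no_first = U[1:, :]    # U_r without the first row
    D = np.linalg.lstsq(U_no_last, U_no_first, rcond=None)[0]
    return np.linalg.eigvals(D)

# Illustrative exponentially damped sinusoid with roots rho * exp(+-i 2 pi omega).
rho, omega = 0.99, 0.1
n = np.arange(1, 101)
x = rho**n * np.cos(2 * np.pi * omega * n)
L = 50
X = np.column_stack([x[j:j + L] for j in range(len(x) - L + 1)])
U = np.linalg.svd(X, full_matrices=False)[0][:, :2]
roots = ls_esprit(U)
moduli = np.abs(roots)
freqs = np.abs(np.angle(roots)) / (2 * np.pi)
```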

3. Signal Forecasting by Forecasting of Local LRRs

3.1. General Model of Signals

Let us describe a general model of time series, for which the developed approach to forecasting will be applied. Consider the signal and take some natural Z, . For a time series , we denote the series .

The signal model is

where we assume that

1. For the time series , on its sequential segments of length Z,, every summand in (4) is well-approximated by a series in the form , where and is the middle point of the segment.

2. The series and behave regularly in n, and there exist methods that can forecast such kinds of series.

To construct a forecast one needs to find the instantaneous frequencies at the point .

3.2. Algorithm LocLRR SSA Forecast

Hereinafter, we consider the model $x_n = s_n + \varepsilon_n$, where $s_n$ satisfies the conditions described above and $\varepsilon_n$ is white Gaussian noise with zero mean and standard deviation $\sigma$. Let , , where . The estimates of instantaneous roots, frequencies, moduli and LRR coefficients are indexed by the middle point of the local segment. In particular, denote the coefficients of the minimal LRR that approximates the local segment of the series centered at .

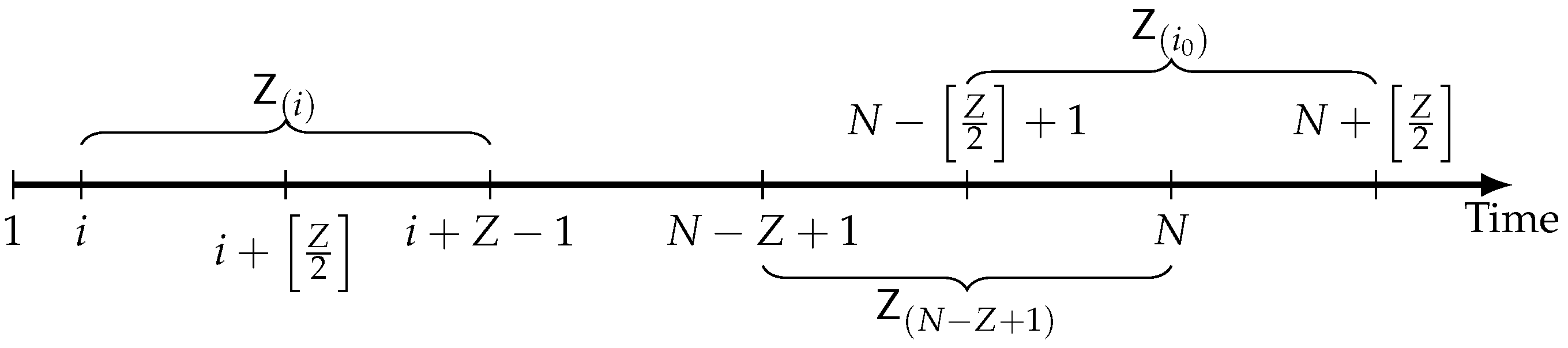

The local segment of length Z has the structure depicted in Figure 1, where the middle of is .

Figure 1.

Scheme of moving segments.

3.2.1. Scheme

In the scheme below, we consider , where is the number of signal roots with nonnegative imaginary parts (note that signal roots with negative imaginary parts are conjugate to signal roots with positive imaginary parts):

Here , where is the sequence of coefficients of the forecasting minimal LRRs. If the required length m of the forecasting LRRs is larger than r, then we lengthen each , , to of the min-norm LRR of order m; see Remark 2.

The result is a sequence of coefficients of the forecasting min-norm LRRs of order m. To obtain it, the series of moduli and frequencies of the signal roots should be forecast, for each j, using some algorithms $\mathrm{FOR}_{\mathrm{MOD}}$ and $\mathrm{FOR}_{\mathrm{ARG}}$.
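For the lengthening step (see Remark 2), the sketch below does not reproduce the method of [10]. Instead, it uses the following fact: an LRR of order $m$ with coefficients $a_1, \ldots, a_m$ has $\mu$ as a root of its characteristic polynomial if and only if $w^\mathrm{T} v_\mu = 0$, where $w = (a_m, \ldots, a_1, -1)^\mathrm{T}$ and $v_\mu = (1, \mu, \ldots, \mu^m)^\mathrm{T}$; for real $w$ and complex $\mu$, this means that $w$ is orthogonal to both $\operatorname{Re} v_\mu$ and $\operatorname{Im} v_\mu$. Hence the min-norm formula for the LRR coefficients, applied to an orthonormal basis of the span of these vectors, gives the coefficient vector of minimum norm among the order-$m$ LRRs with the given roots. The sketch assumes distinct roots; the function name is hypothetical.

```python
import numpy as np

def min_norm_lrr_from_roots(upper_roots, m):
    """Coefficients (a_1, ..., a_m) of the min-norm LRR of order m whose characteristic
    polynomial vanishes at the given distinct roots and at their conjugates.

    upper_roots: signal roots with nonnegative imaginary part."""
    k = np.arange(m + 1)
    cols = []
    for mu in np.asarray(upper_roots, dtype=complex):
        v = mu ** k                         # (1, mu, ..., mu^m)
        if abs(mu.imag) < 1e-12:            # real root: one real basis vector
            cols.append(v.real)
        else:                               # complex pair: real and imaginary parts
            cols.extend([v.real, v.imag])
    Q = np.linalg.qr(np.column_stack(cols))[0]   # orthonormal basis of the root subspace
    pi = Q[-1, :]
    nu2 = float(pi @ pi)
    if nu2 >= 1.0:
        raise ValueError("the order m is too small for the given roots")
    R = Q[:-1, :] @ pi / (1.0 - nu2)        # (a_m, ..., a_1)
    return R[::-1]                          # (a_1, ..., a_m)

# Lengthen an order-4 root set (two illustrative conjugate pairs) to an order-10 LRR.
mu1 = np.exp(2j * np.pi * 0.1)
mu2 = 0.95 * np.exp(2j * np.pi * 0.3)
a10 = min_norm_lrr_from_roots([mu1, mu2], 10)
char_poly = np.concatenate(([1.0], -a10))   # mu^10 - a_1 mu^9 - ... - a_10
```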

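After the sequence of coefficients is constructed, the forecast values are obtained by continuing the time series recurrently:

$$\tilde{s}_n = \sum_{i=1}^{m} a_i^{(n)}\, \tilde{s}_{n-i}, \quad n = N+1, N+2, \ldots,$$

where $\tilde{s}_n$, $n = 1, \ldots, N$, is the signal estimate obtained using SSA and $(a_1^{(n)}, \ldots, a_m^{(n)})$ are the predicted LRR coefficients used at time $n$.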
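The recurrent step with time-varying coefficients can be sketched as follows: each forecast value uses its own coefficient vector $(a_1, \ldots, a_m)$, for example one built from a predicted root. The function name is hypothetical. The constant-coefficient case serves as a check, since it must reproduce ordinary LRR continuation; the time-varying call at the end uses a hypothetical predicted frequency and order-2 LRRs of the form of Example 1, without lengthening to order $m$.

```python
import numpy as np

def forecast_with_varying_lrr(s_est, coeff_seq):
    """Continue s_est recurrently: s_n = sum_i a_i^(n) s_{n-i}.

    coeff_seq[h] = (a_1, ..., a_m) is used to compute the (h+1)-th forecast value."""
    y = list(np.asarray(s_est, dtype=float))
    for a in coeff_seq:
        a = np.asarray(a, dtype=float)
        past = np.array(y[-len(a):][::-1])   # s_{n-1}, s_{n-2}, ..., s_{n-m}
        y.append(float(a @ past))
    return np.array(y[len(s_est):])

# Check: constant coefficients continue a sinusoid exactly.
n = np.arange(1, 121)
s = np.cos(2 * np.pi * 0.05 * n)
coeffs = [[2 * np.cos(2 * np.pi * 0.05), -1.0]] * 20
pred = forecast_with_varying_lrr(s[:100], coeffs)

# Time-varying usage with a hypothetical predicted frequency omega_hat(n).
omega_hat = 0.05 + 1e-4 * np.arange(101, 121)
varying = [[2 * np.cos(2 * np.pi * w), -1.0] for w in omega_hat]
pred_varying = forecast_with_varying_lrr(s[:100], varying)
```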

3.2.2. Algorithm in Detail

Let us formally describe the algorithm for forecasting the minimal LRRs (Algorithm 1).

Algorithm 1: LocLRR SSA Forecast

Input:

Steps:

Output: The sequence of coefficients of minimal LRRs of order r approximately governing the future signal segments .

Remark 3.

If real-valued roots are obtained on some segments of , , we replace the values of the roots with missing values. That is, for and such that we put . Possible gaps in the series of frequency estimates can be filled in; e.g., one can fill them with the iterative gap-filling method [12]; see also the description of the igapfill algorithm in [7] (Algorithm 3.7).
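A sketch of the handling of real-valued roots described in Remark 3: segments whose root is real are marked as missing (`NaN`), and the gaps are then filled. As an assumption, the gap filling here is plain linear interpolation with `numpy.interp`, a simple stand-in rather than the iterative gap-filling method of [12]; edge gaps take the nearest known value. Function names are hypothetical.

```python
import numpy as np

def frequency_series_with_gaps(roots, tol=1e-8):
    """Map one root per segment to its frequency; real roots become NaN."""
    roots = np.asarray(roots, dtype=complex)
    freq = np.abs(np.angle(roots)) / (2 * np.pi)
    freq[np.abs(roots.imag) < tol] = np.nan
    return freq

def fill_gaps_linear(series):
    """Stand-in gap filling: linear interpolation over the NaN entries."""
    y = np.asarray(series, dtype=float).copy()
    idx = np.arange(y.size)
    missing = np.isnan(y)
    y[missing] = np.interp(idx[missing], idx[~missing], y[~missing])
    return y

# The second segment produced a real root and is treated as missing.
seg_roots = [np.exp(2j * np.pi * 0.10), 0.9, np.exp(2j * np.pi * 0.12)]
freq = frequency_series_with_gaps(seg_roots)
filled = fill_gaps_linear(freq)
```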

An appropriate choice of the algorithms $\mathrm{FOR}_{\mathrm{MOD}}$ and $\mathrm{FOR}_{\mathrm{ARG}}$ depends on the form of the frequency modulation.

Finally, each LRR of is enlarged to a min-norm LRR of length m with coefficients (see Remark 2) and this enlarged LRR is used for the prediction of .

4. Examples

4.1. Description

In this section, we demonstrate the forecasting using the LocLRR SSA Forecast algorithm. The following types of time series were considered.

4.1.1. Sinusoid with Linearly Modulated Frequency

The signal has the form

The instantaneous frequency is ; the frequency range is . We will consider such values of the parameter a and series length N at which the frequency range . Since the instantaneous frequency is a linear function, we take the linear-regression prediction algorithm as $\mathrm{FOR}_{\mathrm{ARG}}$.
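For linear frequency modulation, the linear-regression prediction of the frequency series can be sketched as an ordinary least-squares line through the local frequency estimates, extrapolated to future segment centers. The function name, the indexing of segments by their centers, and the noise-free test values are illustrative assumptions.

```python
import numpy as np

def forecast_linear(centers, values, future_centers):
    """Least-squares line through local frequency estimates, evaluated at future centers."""
    slope, intercept = np.polyfit(centers, values, deg=1)
    return slope * np.asarray(future_centers, dtype=float) + intercept

# Hypothetical local estimates of a linearly increasing instantaneous frequency.
centers = np.arange(50, 451)
values = 0.05 + 2e-4 * centers
future = np.arange(451, 481)
freq_pred = forecast_linear(centers, values, future)
```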

4.1.2. Sinusoid with Sinusoidal Frequency

The signal is

, where is much smaller than . The instantaneous frequency equals ; the frequency range is . We will consider values of signal parameters and series lengths such that . The series of instantaneous frequencies with terms , , is a constant plus a sinusoid and therefore has rank 3. Hence, we take the recurrent SSA forecasting algorithm with $r = 3$ as $\mathrm{FOR}_{\mathrm{ARG}}$; following the general recommendations, the window length L is chosen to be half of the length of the frequency-estimation series.
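The recurrent SSA forecast of the frequency series with $r = 3$ and the window length equal to half of the series length can be sketched as below: reconstruction by truncated SVD and diagonal averaging, then continuation by the min-norm LRR. The test series, a constant plus a sinusoid, is a hypothetical stand-in for local frequency estimates; being noise-free and of rank 3, it should be forecast essentially exactly. The function name is an assumption.

```python
import numpy as np

def ssa_recurrent_forecast(y, r, steps, L=None):
    """Recurrent SSA forecast: rank-r reconstruction + min-norm LRR continuation."""
    y = np.asarray(y, dtype=float)
    N = y.size
    L = N // 2 if L is None else L            # window length: half the series length
    K = N - L + 1
    X = np.column_stack([y[j:j + L] for j in range(K)])
    U, sv, Vt = np.linalg.svd(X, full_matrices=False)
    U, sv, Vt = U[:, :r], sv[:r], Vt[:r]
    X_r = (U * sv) @ Vt
    # Diagonal averaging of the rank-r matrix gives the reconstructed series.
    rec = np.zeros(N)
    cnt = np.zeros(N)
    for i in range(L):
        rec[i:i + K] += X_r[i]
        cnt[i:i + K] += 1
    rec /= cnt
    # Min-norm LRR R = (a_{L-1}, ..., a_1) from the signal-subspace basis.
    pi = U[-1]
    R = U[:-1] @ pi / (1.0 - pi @ pi)
    out = list(rec)
    for _ in range(steps):
        out.append(float(R @ np.array(out[-(L - 1):])))
    return np.array(out[N:])

# Hypothetical local-frequency series: constant plus sinusoid (rank 3).
t = np.arange(1, 231)
freq = 0.15 + 0.02 * np.cos(2 * np.pi * t / 100)
pred = ssa_recurrent_forecast(freq[:200], r=3, steps=30)
```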

Since we do not consider time-varying amplitude modulation in the examples, we take as $\mathrm{FOR}_{\mathrm{MOD}}$ the forecast of the moduli series by the average of the estimates over the local segments.

4.1.3. Sum of Sinusoids

The signal is

, , , , . We will consider the signal parameters and the time-series length N such that the frequency ranges of the summands do not intersect and the frequency range of each summand belongs to the interval .

The frequencies corresponding to the signal summands will be forecast either with linear regression or with SSA, depending on the form of the obtained estimates of the instantaneous frequencies on the local segments. The moduli will be predicted by the average of the estimates over the local segments.

In the numerical examples, we consider signal parameters such that the instantaneous frequencies are slowly varying functions. Since the examples under consideration satisfy the general time-series model (Section 3.1), the LocLRR SSA Forecast algorithm can be applied.

In real-life problems, the optimal LRR order m can be chosen using training data. In the model examples, the optimal m is chosen by trying all values of m from r to Z and comparing the mean squared errors (MSE) of the predictions.
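The selection of $m$ by exhaustive search can be sketched generically. Here `forecast_fn(m)` is a hypothetical user-supplied function returning a forecast made with LRR order $m$; the toy function in the example only exercises the search, and the range $r = 2$ to $Z = 20$ is illustrative.

```python
import numpy as np

def choose_order(forecast_fn, target, m_values):
    """Return the order m with the smallest MSE, together with the MSE for each m."""
    target = np.asarray(target, dtype=float)
    mse = {m: float(np.mean((np.asarray(forecast_fn(m)) - target) ** 2))
           for m in m_values}
    best = min(mse, key=mse.get)
    return best, mse

# Toy forecaster whose error is smallest at m = 7 (purely illustrative).
target = np.sin(np.arange(10))

def toy_forecast(m):
    return target + 0.01 * (m - 7)

best_m, mse_by_m = choose_order(toy_forecast, target, range(2, 21))
```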

The following approach was used for choosing the length Z of local segments. We take a value of Z such that most of the local segments , , contain at least 2–3 instantaneous periods of each summand of the signal satisfying model (5). For small Z, the estimates of the instantaneous frequency have large variability, whereas for large Z, they have considerable bias. A necessary condition for an appropriate value of Z is that no (or only a few) segments yield real-valued roots.

4.2. Numerical Experiments

Consider the following numerical examples:

- Sinusoid with linearly modulated frequency,(denoted by );

- Sinusoid with sinusoidal frequency modulation,(denoted by );

- Sum of sinusoids with linear and sinusoidal frequency modulations,(denoted by );

- Sum of two sinusoids with sinusoidal frequency modulation,(denoted by ).

We compare the proposed LocLRR SSA-forecast algorithm (denoted by “alg”) with two simple methods:

- Forecasting by a constant: since we consider time series with zero mean, the forecast is identically zero (denoted by ‘by 0’).

- Forecasting using the last local segment, which is performed with the min-norm LRR computed using the roots of the last local segment (denoted by ‘last’).

Let us consider accuracies of step ahead forecasts for time series of length . For each example, the prediction is performed for the pure signal and the noisy signal, where the noise is white Gaussian with standard deviation . In the noisy case, a sample of size is used for the estimation of accuracy.

Let be the time-series sample. The prediction error is estimated as .
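A sketch of the accuracy measure, assuming that the RMSE is taken over all realizations in the sample and all forecast steps and is measured against the noise-free signal values; the function name is hypothetical.

```python
import numpy as np

def forecast_rmse(forecasts, signal_future):
    """RMSE over a sample of forecasts.

    forecasts: shape (n_samples, h); signal_future: shape (h,), true s_{N+1}, ..., s_{N+h}."""
    f = np.atleast_2d(np.asarray(forecasts, dtype=float))
    return float(np.sqrt(np.mean((f - np.asarray(signal_future, dtype=float)) ** 2)))

# Two realizations, two-step horizon (illustrative numbers).
rmse = forecast_rmse([[1.0, 2.0], [3.0, 4.0]], [1.0, 2.0])
```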

The results are shown in Table 1, where the best results are highlighted in bold. They show the advantage of the proposed method over the two simple methods under consideration.

Table 1.

RMSE of forecasts; ‘alg’ is the proposed algorithm; m is the optimal length of the forecasting LRRs.

4.3. Detailed Example

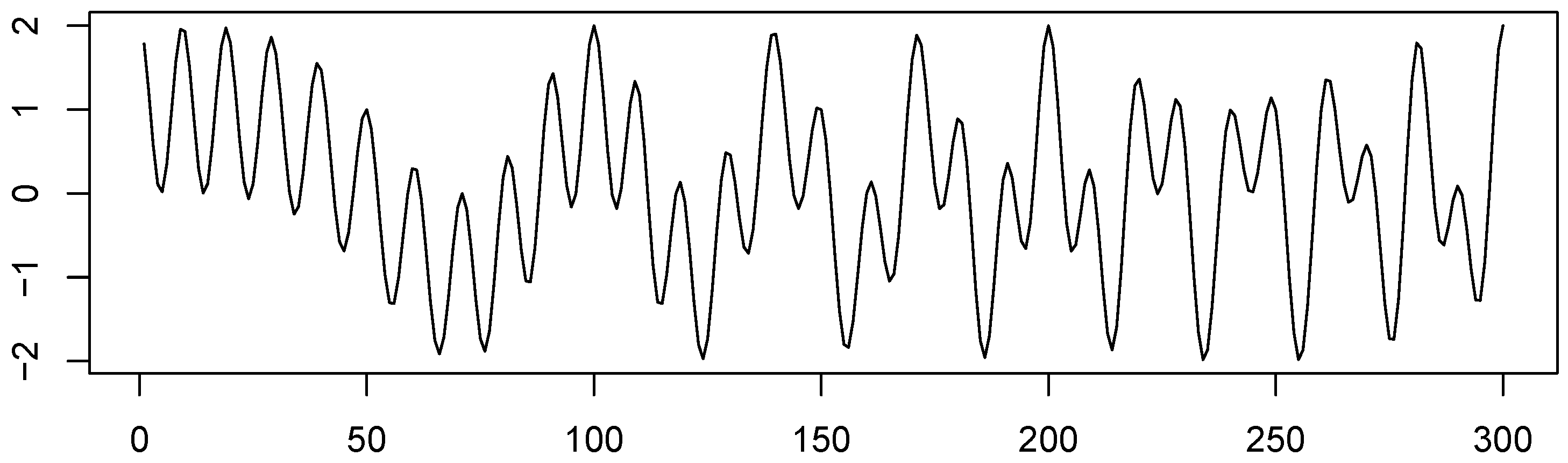

To demonstrate the approach more clearly, let us consider the example (6) without noise; see Figure 2. Take , , .

Figure 2.

Initial signal .

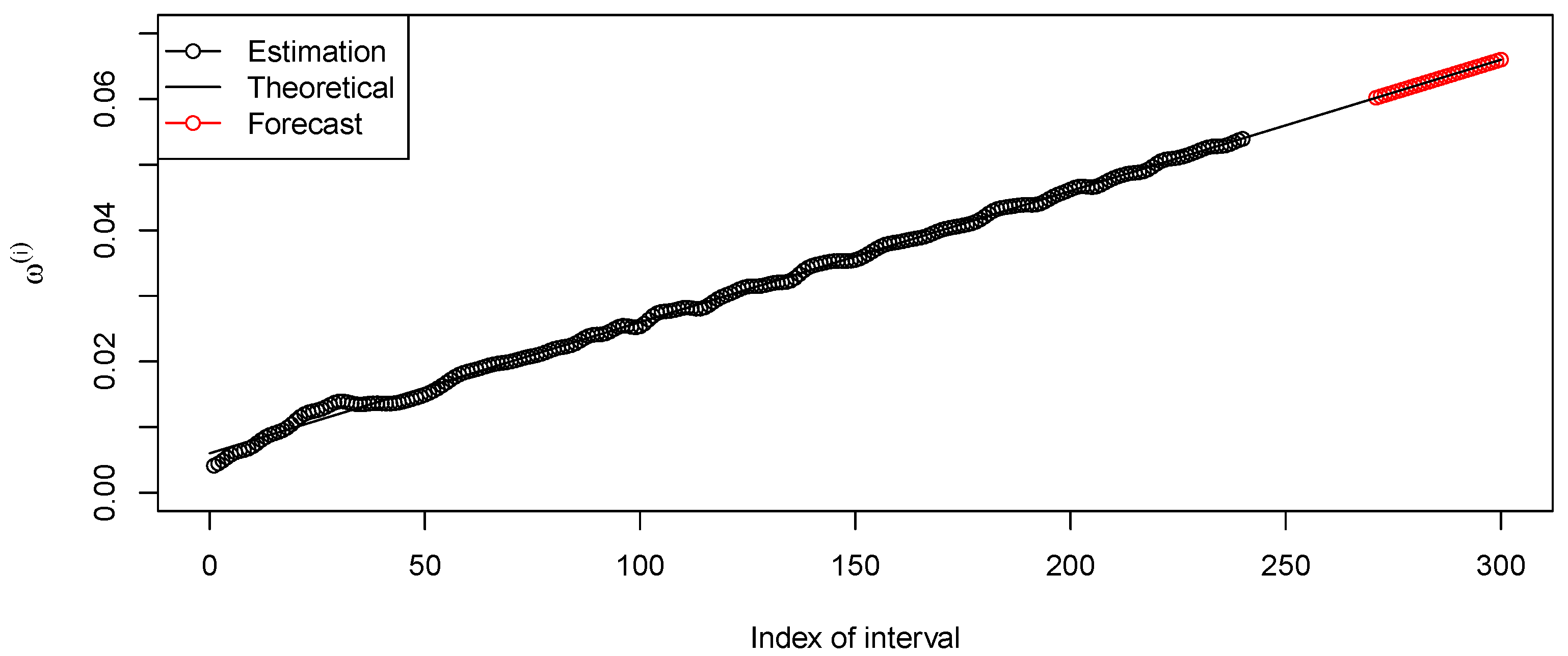

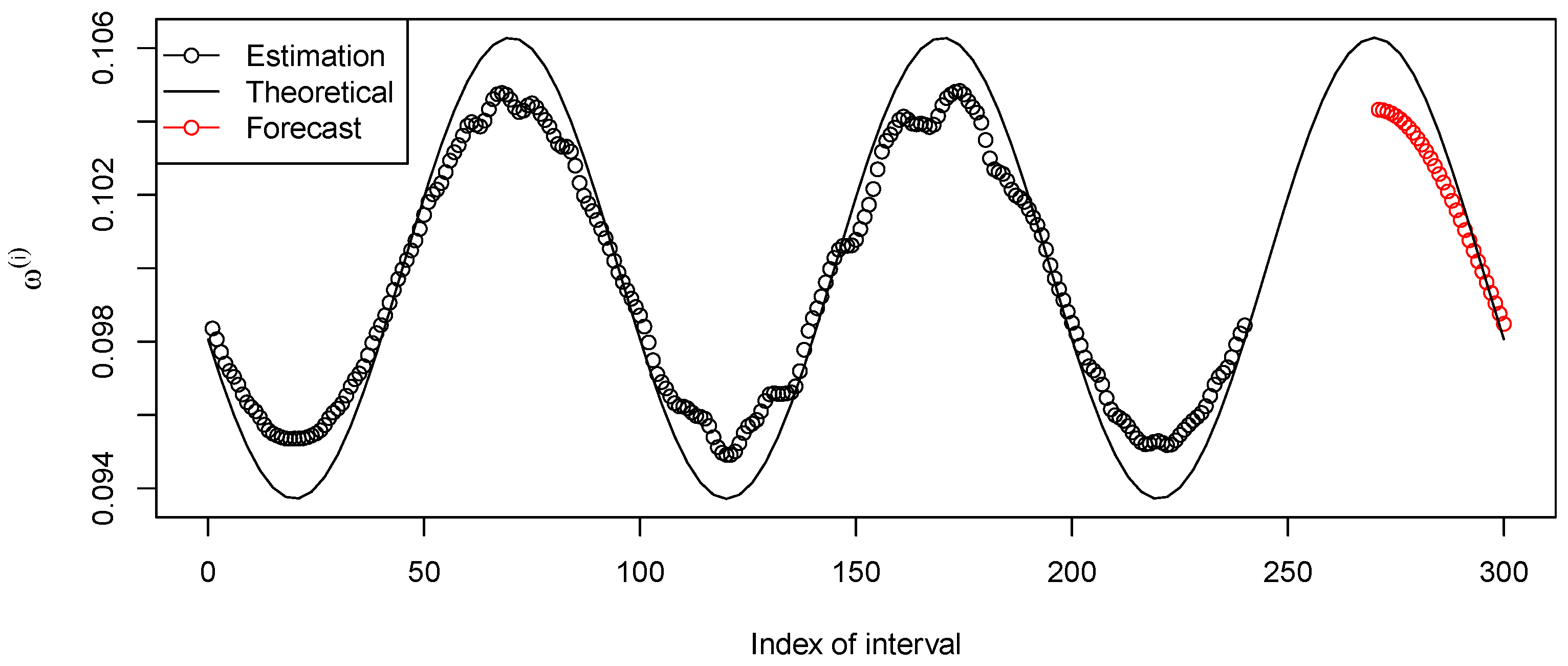

The results of forecasting the signal root corresponding to the first summand are shown in Figure 3, and the results of forecasting the signal root corresponding to the second summand can be seen in Figure 4. The frequency ranges of modulations in the summands are approximately and , respectively. The forecasts are depicted in Figure 5 (forecasting with the last segment) and Figure 6 (forecasting with the proposed algorithm). Since there is no noise, the optimal LRR length m is small; here, .

Figure 3.

Forecasting the series of linear instantaneous frequencies for the summand .

Figure 4.

Forecasting the series of sinusoidal instantaneous frequencies for the summand .

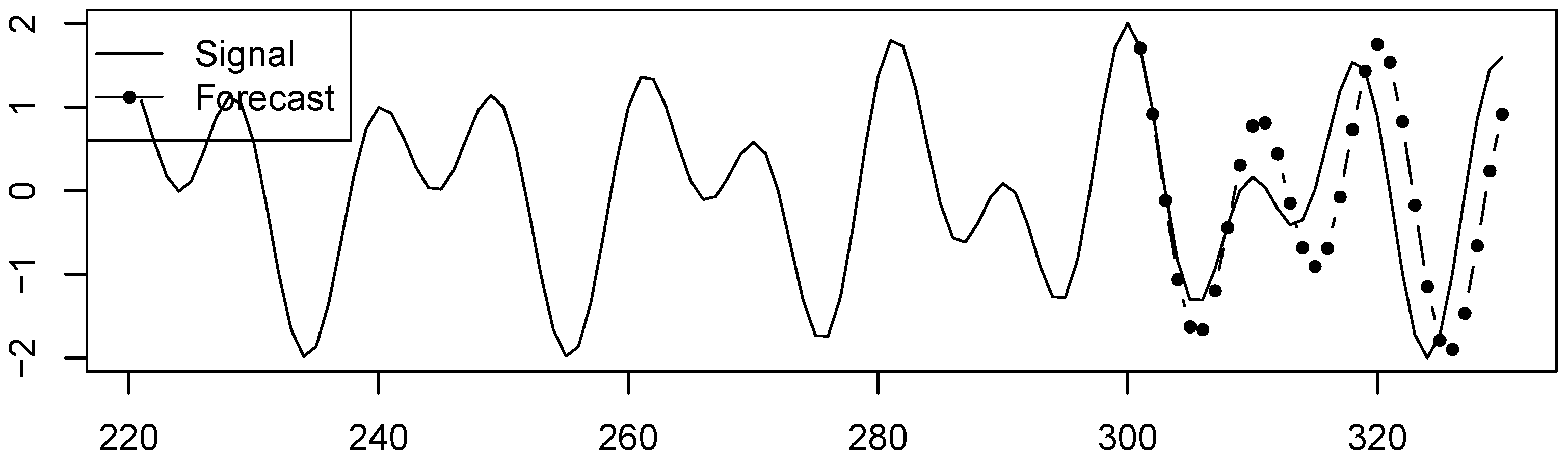

Figure 5.

Forecasting using the last local segment, . A shift is clearly seen.

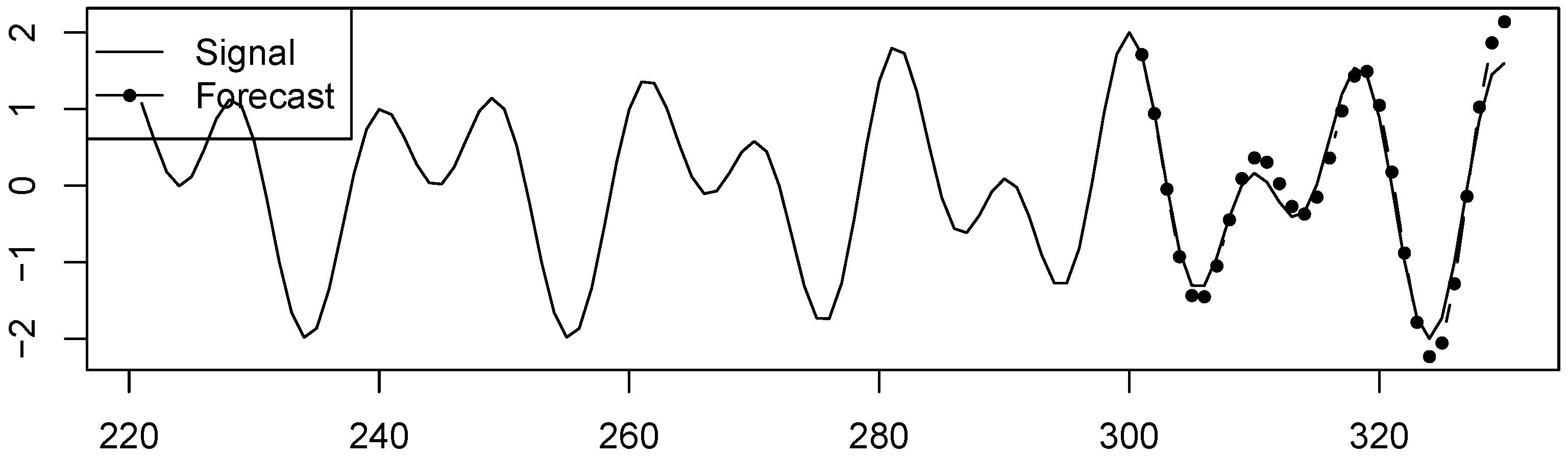

Figure 6.

Forecasting using LocLRR SSA forecast, . There is no shift.

5. Conclusions

In this paper, we proposed a method for forecasting time series that extends the capabilities of SSA and allows one to predict time series in which the signal only locally satisfies LRRs. A regular behaviour of the coefficients of the governing LRRs was assumed. In [6] (page 9), it was stated for this type of time series that “[t]he problem is how to forecast the extracted signal, since its local estimates may have different structures on different time intervals. Indeed, by using local versions of SSA, we do not obtain a common nonlinear model but instead we have a set of local linear models”. In this paper, we proposed an answer to the problem of predicting the local structures of time series for a class of signals.

We constructed an algorithm for predicting local structures of time series that are the sum of frequency-modulated sinusoids and showed that the proposed forecasting method gives reasonable results for the cases of linear and sinusoidal frequency modulations.

Of course, the comparison with two simple methods is not sufficient; a more extensive comparison should be performed in the future. However, the results of this work show that the proposed approach based on the prediction of the coefficients of LRRs is promising.

Author Contributions

Conceptualization, N.G.; methodology, N.G. and E.S.; software, E.S.; validation, N.G. and E.S.; formal analysis, N.G. and E.S.; investigation, N.G. and E.S.; resources, N.G.; data curation, N.G. and E.S.; writing—original draft preparation, N.G. and E.S.; writing—review and editing, N.G. and E.S.; visualization, N.G. and E.S.; supervision, N.G.; project administration, N.G.; funding acquisition, N.G. All authors have read and agreed to the published version of the manuscript.

Funding

The work is supported by the Russian Science Foundation (project No. 23-21-00222).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available. The R-code for data generation and result replication can be found at https://zenodo.org/record/8087608, doi 10.5281/zenodo.8087608.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Broomhead, D.; King, G. Extracting qualitative dynamics from experimental data. Physica D 1986, 20, 217–236.

- Vautard, R.; Ghil, M. Singular spectrum analysis in nonlinear dynamics, with applications to paleoclimatic time series. Physica D 1989, 35, 395–424.

- Elsner, J.B.; Tsonis, A.A. Singular Spectrum Analysis: A New Tool in Time Series Analysis; Plenum Press: New York, NY, USA, 1996.

- Golyandina, N.; Nekrutkin, V.; Zhigljavsky, A. Analysis of Time Series Structure: SSA and Related Techniques; Chapman & Hall/CRC: Boca Raton, FL, USA, 2001.

- Golyandina, N.; Zhigljavsky, A. Singular Spectrum Analysis for Time Series, 2nd ed.; Springer Briefs in Statistics; Springer: Berlin/Heidelberg, Germany, 2020.

- Golyandina, N. Particularities and commonalities of singular spectrum analysis as a method of time series analysis and signal processing. Wiley Interdiscip. Rev. Comput. Stat. 2020, 12, e1487.

- Golyandina, N.; Korobeynikov, A.; Zhigljavsky, A. Singular Spectrum Analysis with R; Springer: Berlin/Heidelberg, Germany, 2018.

- Leles, M.; Sansão, J.; Mozelli, L.; Guimarães, H. Improving reconstruction of time-series based in Singular Spectrum Analysis: A segmentation approach. Digit. Signal Process. 2018, 77, 63–76.

- Hall, M.J. Combinatorial Theory; Wiley: New York, NY, USA, 1998.

- Usevich, K. On signal and extraneous roots in Singular Spectrum Analysis. Stat. Interface 2010, 3, 281–295.

- Roy, R.; Kailath, T. ESPRIT-estimation of signal parameters via rotational invariance techniques. IEEE Trans. Acoust. Speech Signal Process. 1989, 37, 984–995.

- Kondrashov, D.; Ghil, M. Spatio-temporal filling of missing points in geophysical data sets. Nonlinear Process. Geophys. 2006, 13, 151–159.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).