StyleVision: AI-Integrated Stylist System with Intelligent Wardrobe Management and Outfit Visualization

Abstract

1. Introduction

2. Proposed System

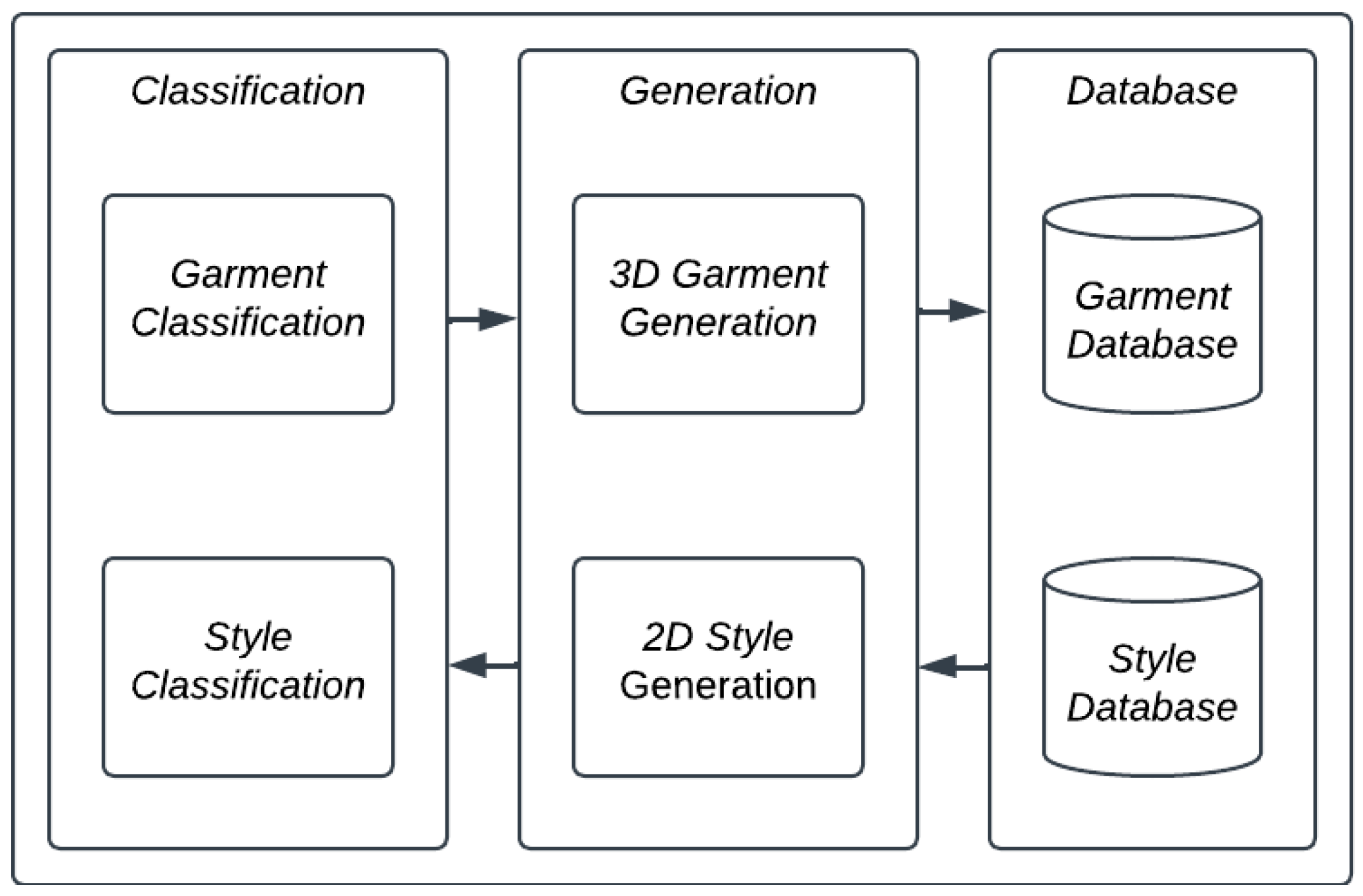

2.1. System Architecture and Workflow

- Adding a garment: This is the foundational process for populating the user’s digital wardrobe and pre-computing potential outfits.

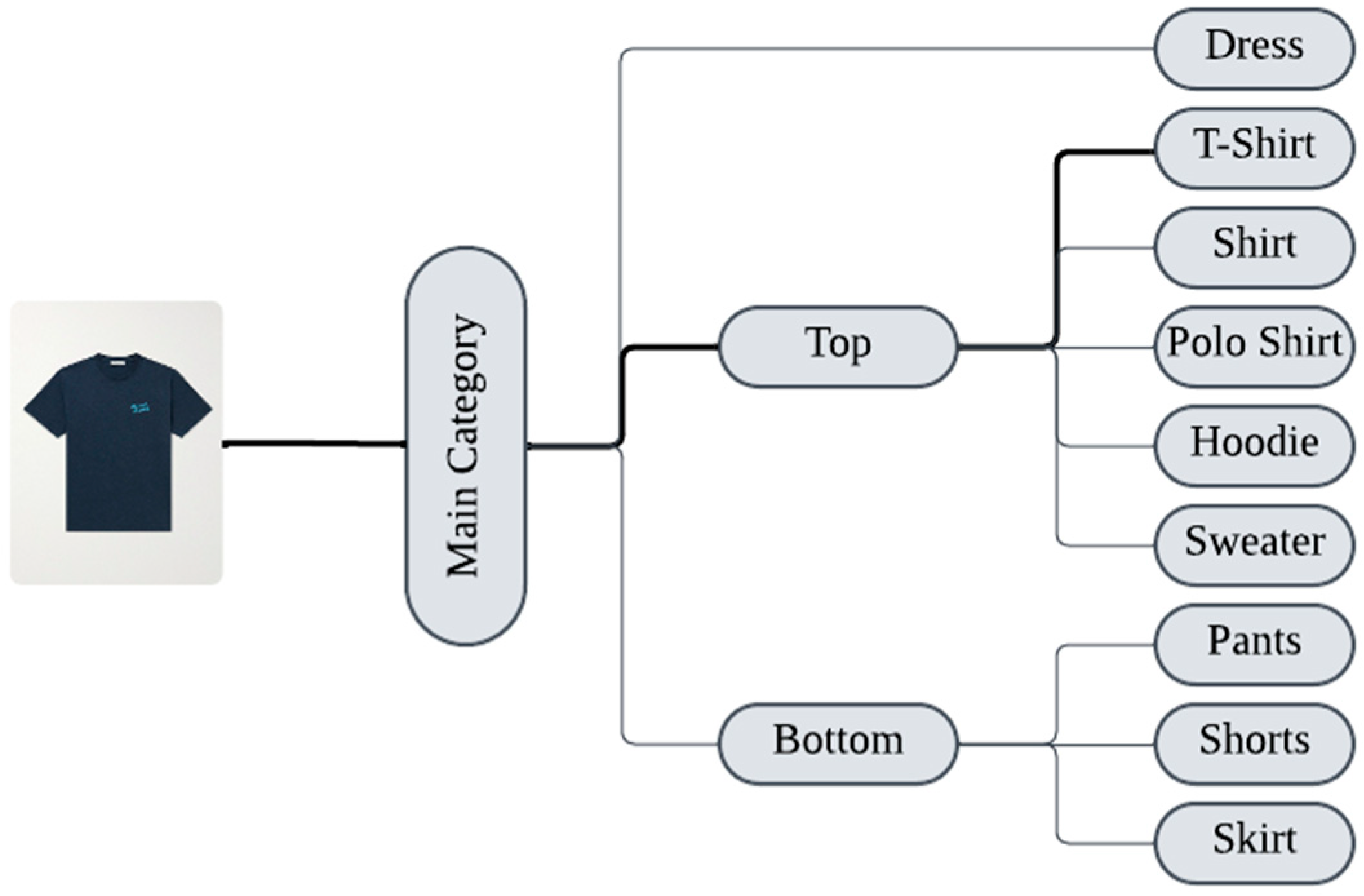

- Input and classification: The user uploads front and rear-view images of a clothing item (upload garment images). The images are preprocessed, and the frontal view is passed to the Garment Classification Model to determine its hierarchical category (classify into categories).

- Concurrent generation: Following classification, the system initiates two concurrent data-generation pathways:

  - Three-dimensional garment modeling: The 3D Visualization Model processes both the front and rear images to generate an interactive 3D model of the garment with static geometry (generate 3D visualization of the garment). This 3D model, which the user can view interactively, is stored along with its classification labels in the categorized garments database.

  - Two-dimensional outfit synthesis and styling: The system uses the newly added item to synthesize potential 2D outfit combinations with other compatible garments from the user’s wardrobe (generate 2D outfit). Each resulting 2D composite is immediately evaluated by the style classification model (classified into styles), and the successfully styled outfits are stored in the styled outfits database.

- Browsing and visualizing an outfit: This workflow allows the user to explore the pre-computed outfit combinations from their wardrobe.

- Style-based retrieval: The user selects a desired style category, such as casual (select an outfit style). The system queries the styled outfits database and retrieves all combinations matching that style (retrieve matching outfit).

- Selection and 3D assembly: The matching outfits are displayed to the user (display matching outfits). After the user chooses a specific outfit (choose an outfit), the system retrieves the individual 3D models for each garment in that outfit from the categorized garments database (retrieve 3D object). These models are then assembled and rendered to present a complete 3D outfit visualization to the user (display 3D outfit visualization).
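The two workflows above can be sketched as a small in-memory data model. This is an illustrative sketch only, not the authors' implementation: the `Garment` and `Wardrobe` classes, the top/bottom pairing rule, the `.glb` file names, and the `toy_style` classifier are all hypothetical stand-ins for the categorized garments database, the styled outfits database, and the style classification model.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class Garment:
    name: str
    category: str   # assumed main category: "top" or "bottom"
    model_3d: str   # placeholder for the stored 3D asset


@dataclass
class Wardrobe:
    garments: dict = field(default_factory=dict)        # categorized garments database
    styled_outfits: list = field(default_factory=list)  # styled outfits database

    def add_garment(self, garment, classify_style):
        """Store the garment, then pre-compute and style the outfits that use it."""
        self.garments[garment.name] = garment
        tops = [g for g in self.garments.values() if g.category == "top"]
        bottoms = [g for g in self.garments.values() if g.category == "bottom"]
        for top, bottom in product(tops, bottoms):
            if garment.name not in (top.name, bottom.name):
                continue  # only combinations involving the new item are new
            style = classify_style(top, bottom)
            if style is not None:  # assumption: unstyled outfits are not stored
                self.styled_outfits.append((style, top.name, bottom.name))

    def browse(self, style):
        """Retrieve every pre-computed outfit that matches the selected style."""
        return [(t, b) for s, t, b in self.styled_outfits if s == style]

    def assemble_3d(self, outfit):
        """Look up the stored 3D model of each garment in the chosen outfit."""
        return [self.garments[name].model_3d for name in outfit]


def toy_style(top, bottom):
    # Hypothetical rule standing in for the style classification model.
    return "casual" if "jeans" in bottom.name else "formal"


wardrobe = Wardrobe()
wardrobe.add_garment(Garment("white_tee", "top", "white_tee.glb"), toy_style)
wardrobe.add_garment(Garment("blue_jeans", "bottom", "blue_jeans.glb"), toy_style)
wardrobe.add_garment(Garment("grey_slacks", "bottom", "grey_slacks.glb"), toy_style)

casual = wardrobe.browse("casual")
models = wardrobe.assemble_3d(casual[0])
```

Because outfits are generated and styled when a garment is added, browsing reduces to a lookup by style followed by a lookup of the per-garment 3D models.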

2.2. Garment Classification Model

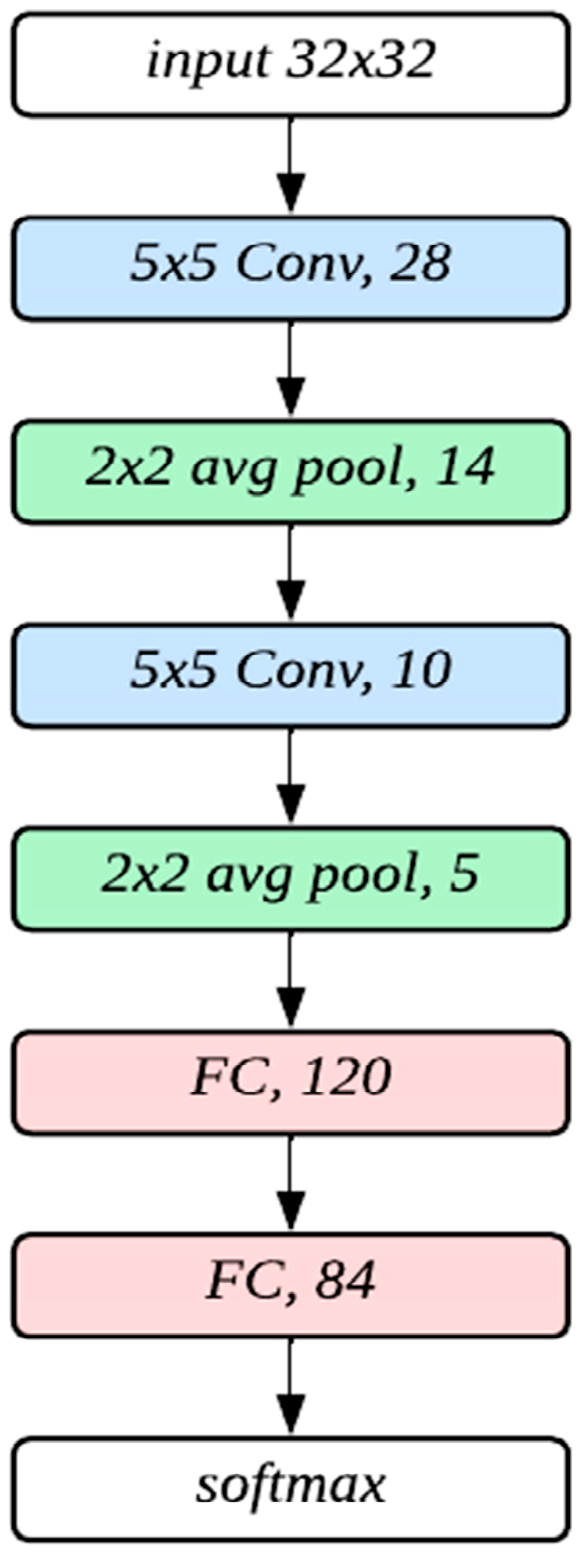

2.2.1. Architecture and Training

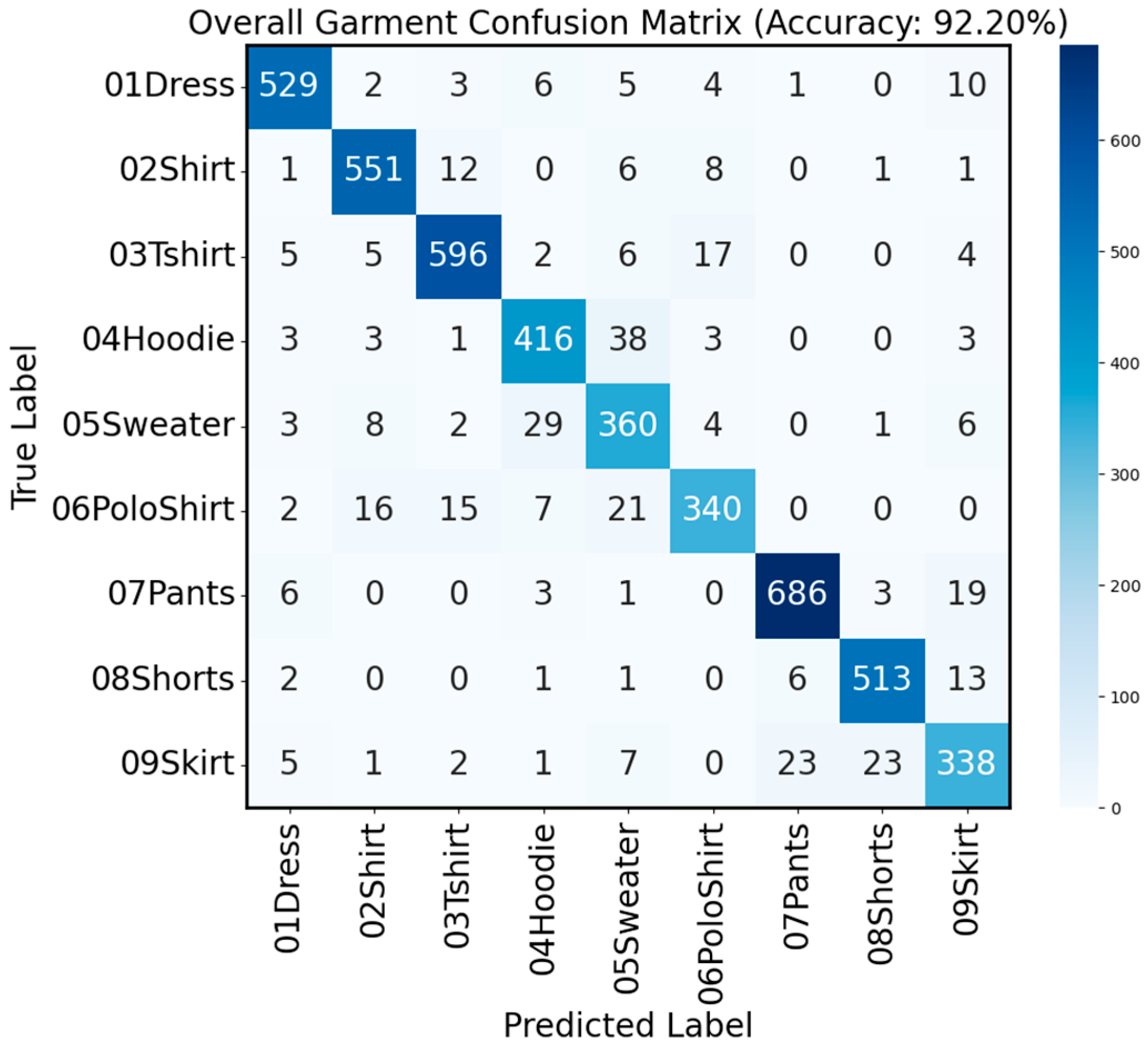

2.2.2. Output Processing and Confidence Thresholding

2.3. Two-Dimensional Visualization Model

- Stage 1: composite generation for style analysis

- To create a consistent input for the downstream style classifier, the model generates 2D composites of every valid outfit combination. It does so by programmatically warping and placing the constituent garment images onto a set of five distinct, static person templates sourced from the Dress Code dataset [10], a process conceptually inspired by virtual try-on models such as that of Choi et al. [12]. Rendering each outfit on multiple templates gives a fuller visual representation of the outfit and supports a more robust style evaluation.

- Stage 2: user-centric visualization

- Following style classification, the model leverages the same garment warping and placement techniques to render the outfit onto a user’s uploaded full-body photograph. This provides a personalized “virtual try-on” feature, allowing users to see how a generated outfit might look on their own figure.
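The placement step shared by both stages can be illustrated with simple alpha blending. This is a minimal sketch under stated assumptions: the garment is an RGBA cut-out that fits entirely inside the template, the warping step is omitted, and `place_garment` and its offsets are hypothetical names, not part of the described system.

```python
import numpy as np


def place_garment(template, garment_rgba, top, left):
    """Alpha-blend an RGBA garment cut-out onto an RGB template at (top, left).

    Assumes the garment lies fully inside the template bounds.
    """
    out = template.astype(np.float32).copy()
    h, w = garment_rgba.shape[:2]
    region = out[top:top + h, left:left + w]  # view into `out`
    alpha = garment_rgba[..., 3:4].astype(np.float32) / 255.0
    region[:] = alpha * garment_rgba[..., :3] + (1.0 - alpha) * region
    return out.round().astype(np.uint8)


# Toy data: a grey 4x4 "template" and a 2x2 garment with one opaque red pixel.
template = np.full((4, 4, 3), 100, dtype=np.uint8)
garment = np.zeros((2, 2, 4), dtype=np.uint8)
garment[0, 0] = (255, 0, 0, 255)

# Stage 1 would repeat this for each of the five static templates;
# Stage 2 would use the user's full-body photograph as the template.
composites = [place_garment(t, garment, 1, 1) for t in [template] * 5]
```

Transparent garment pixels leave the template untouched, so the same routine serves both a static template and a user photograph.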

2.4. Style Classification Model

2.4.1. Architecture and Preprocessing

2.4.2. Custom Dataset and Training

2.5. Three-Dimensional Outfit Visualization Model

- Shape matching: This stage deforms a template mesh to match the input garment’s geometry. Landmark detection is performed using an HRNet-based architecture [17] (pre-trained on DeepFashion2 [18]), which was adapted to a curated subset of keypoints (6–11 per view) relevant to StyleVision’s garment types. Precise garment silhouettes are extracted using a model adapted from “clothes-virtual-try-on” [19]. The shape fitting is then performed via deformation graph optimization, which aligns the template’s 3D keypoints with the detected 2D landmarks and matches the rendered silhouette to the extracted mask. To ensure plausible geometry and prevent unrealistic shapes, As-Rigid-As-Possible (ARAP) regularization [20] is incorporated into the optimization process.

- Coarse texture generation: Following shape matching, an image-based optimization generates the garment’s texture. This process utilizes a differentiable renderer (e.g., SoftRas [21]) to iteratively update the deformed mesh’s UV texture map. The optimization minimizes the photometric difference between the rendered model and the original input images. To encourage plausible color filling in occluded regions and reduce visual artifacts, a total variation loss [22] is also included to penalize high-frequency noise in the final texture.
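The texture objective described above combines a photometric term with a total variation penalty. The NumPy sketch below shows one possible form of it. The squared-error photometric term, the anisotropic (L1) variant of total variation, and the weight `tv_weight` are assumptions chosen for illustration; the source does not specify them, and the differentiable rendering step itself is not shown.

```python
import numpy as np


def photometric_loss(rendered, target):
    """Mean squared difference between the rendered view and the input image."""
    return float(np.mean((rendered - target) ** 2))


def total_variation(texture):
    """Anisotropic total variation: mean absolute difference between neighbours."""
    dh = np.abs(texture[1:, :, :] - texture[:-1, :, :])  # vertical neighbours
    dw = np.abs(texture[:, 1:, :] - texture[:, :-1, :])  # horizontal neighbours
    return float(dh.mean() + dw.mean())


def texture_objective(rendered, target, texture, tv_weight=0.1):
    """Photometric term plus a weighted smoothness penalty on the UV texture."""
    return photometric_loss(rendered, target) + tv_weight * total_variation(texture)


# A flat texture has zero total variation; a checkerboard is maximally noisy.
flat = np.full((4, 4, 1), 0.5)
checker = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)[..., None]
```

In an actual optimization these terms would be evaluated in an autodiff framework so that gradients flow back through the renderer into the texture map.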

2.6. Computational Environment

3. Results

3.1. Garment Classification

3.2. Two-Dimensional Outfit Visualization Model Performance

- Composite generation for analysis: The model was confirmed to correctly generate the standardized 2D composite images for every valid outfit combination. These images, depicting outfits on multiple static templates, served as the necessary visual input for the subsequent style analysis pipeline.

- User-centric visualization: The model’s capability to render outfits onto a user’s uploaded full-body photograph was also functionally validated. This confirmed the successful implementation of the personalized virtual try-on feature.

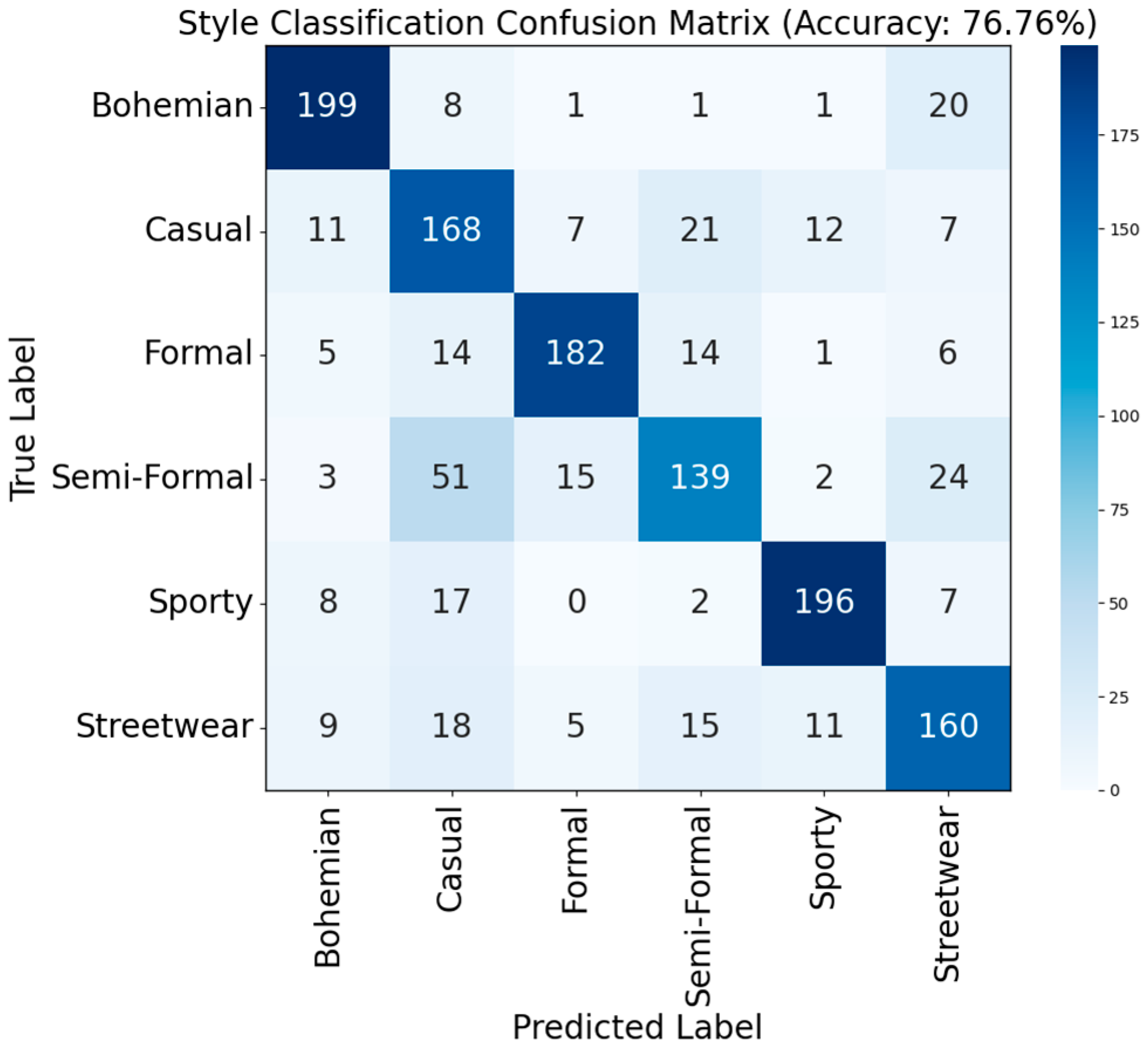

3.3. Style Classification Performance

3.4. Three-Dimensional Visualization Model Performance

- Functional validation: Operational testing confirmed the model successfully processed front and rear images to generate interactive, rotatable 3D meshes for all supported garment types. Qualitatively, the generated models accurately captured the overall shape, color, and prominent patterns from the 2D input images, producing recognizable representations suitable for outfit exploration.

- Generation time: On the specified hardware environment, the average time required to generate a single textured 3D garment model was approximately 4 min. This latency is acceptable for the system’s intended workflow, where 3D model generation is a one-time, offline process performed when a user first adds an item to their wardrobe.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kwon, T.A.; Choo, H.J.; Kim, Y. Why Do We Feel Bored with Our Clothing and Where Does It End Up? Int. J. Consum. Stud. 2020, 44, 1–13.

2. Shaw, D.; Duffy, K. Save Your Wardrobe: Digitalising Sustainable Clothing Consumption; University of Glasgow: Glasgow, UK, 2019.

3. Lookastic: Your Personal AI Stylist. Available online: https://lookastic.com/ (accessed on 15 May 2025).

4. Stylebook Closet App: A Closet and Wardrobe Fashion App for the iPhone and iPad. Available online: https://www.stylebookapp.com/ (accessed on 15 May 2025).

5. Whering | The Social Wardrobe & Styling App. Available online: https://whering.co.uk/ (accessed on 15 May 2025).

6. Kayed, M.; Anter, A.; Mohamed, H. Classification of Garments from Fashion MNIST Dataset Using CNN LeNet-5 Architecture. In Proceedings of the 2020 International Conference on Innovative Trends in Communication and Computer Engineering (ITCE), Aswan, Egypt, 12–14 February 2020; pp. 238–243.

7. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv 2019.

8. Kaggle: Your Machine Learning and Data Science Community. Available online: https://www.kaggle.com/ (accessed on 22 February 2025).

9. Roboflow Universe: Computer Vision Datasets. Available online: https://universe.roboflow.com/ (accessed on 5 March 2025).

10. Morelli, D.; Fincato, M.; Cornia, M.; Landi, F.; Cesari, F.; Cucchiara, R. Dress Code: High-Resolution Multi-category Virtual Try-On. arXiv 2022.

11. Pinterest. Available online: https://www.pinterest.com/ (accessed on 3 April 2025).

12. Choi, Y.; Kwak, S.; Lee, K.; Choi, H.; Shin, J. Improving Diffusion Models for Authentic Virtual Try-on in the Wild. arXiv 2024.

13. Chen, X.; Deng, Y.; Di, C.; Li, H.; Tang, G.; Cai, H. High-Accuracy Clothing and Style Classification via Multi-Feature Fusion. Appl. Sci. 2022, 12, 10062.

14. The PyTorch Maintainers and Contributors. TorchVision: PyTorch’s Computer Vision Library. Available online: https://github.com/pytorch/vision (accessed on 13 March 2025).

15. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; pp. 740–755.

16. Gao, D.; Zhu, Y.; Zhou, Y.; Wu, J.; Li, Y.; Liu, S. Cloth2Tex: A Customized Cloth Texture Generation Pipeline for 3D Virtual Try-On. arXiv 2023.

17. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5686–5696.

18. Ge, Y.; Zhang, R.; Wang, X.; Tang, X.; Luo, P. DeepFashion2: A Versatile Benchmark for Detection, Pose Estimation, Segmentation and Re-Identification of Clothing Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5332–5340.

19. Swayam. SwayamInSync/Clothes-Virtual-Try-On. Available online: https://github.com/SwayamInSync/clothes-virtual-try-on (accessed on 20 April 2025).

20. Sorkine, O.; Alexa, M. As-Rigid-As-Possible Surface Modeling. In Proceedings of the Fifth Eurographics Symposium on Geometry Processing, Barcelona, Spain, 4–6 July 2007.

21. Liu, S.; Chen, W.; Li, T.; Li, H. Soft Rasterizer: A Differentiable Renderer for Image-Based 3D Reasoning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7707–7716.

22. Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear Total Variation Based Noise Removal Algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

| Class | Accuracy before confidence thresholding | Confidence threshold | Accuracy after confidence thresholding |
|---|---|---|---|
| Main category | 97.97% | 0.9 | 99.34% |
| Top subcategory | 88.99% | 0.53 | 91.54% |
| Bottom subcategory | 92.34% | 0.59 | 94.28% |
| Overall system classification | 89.81% | N/A | 92.20% |
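The before/after columns of the table can be read through a common form of confidence thresholding, sketched below. The exact rule used by the system is not stated in the text available here, so this is an assumption: a prediction is kept only if its top softmax probability reaches the threshold, and accuracy is then recomputed over the kept predictions. The function name and the toy probabilities are hypothetical.

```python
import numpy as np


def accuracy_with_threshold(probs, labels, threshold):
    """Return (accuracy before, accuracy after, coverage) for a given threshold."""
    preds = probs.argmax(axis=1)
    confidence = probs.max(axis=1)
    correct = preds == labels
    kept = confidence >= threshold  # low-confidence predictions are rejected
    before = correct.mean()
    after = correct[kept].mean() if kept.any() else float("nan")
    return float(before), float(after), float(kept.mean())


# Toy example with four samples and two classes (not data from the paper).
probs = np.array([[0.95, 0.05],
                  [0.60, 0.40],
                  [0.20, 0.80],
                  [0.55, 0.45]])
labels = np.array([0, 1, 1, 0])

before, after, coverage = accuracy_with_threshold(probs, labels, threshold=0.7)
```

Under this reading, a higher accuracy after thresholding comes at the cost of coverage, since rejected items would need to be handled some other way, for example by asking the user to confirm the category.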

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Chang, I.-C.; Jonathan, E.; Johan, M.; Lee, S.Q.; Pilota, P. StyleVision: AI-Integrated Stylist System with Intelligent Wardrobe Management and Outfit Visualization. Eng. Proc. 2025, 120, 15. https://doi.org/10.3390/engproc2025120015
