Abstract

As cities strive to become more sustainable and livable in the age of smart urban development, urban landscaping concepts that combine ecological benefits with esthetic appeal are gaining ground. Within this context, artistic landscaping, the deliberate spatial arrangement of plant species to create visual compositions, has emerged as a valuable aspect of modern urban green infrastructure. While Unmanned Ground Vehicle (UGV) development has primarily focused on large-scale precision agriculture, its potential for artistic and small-scale urban landscaping remains largely unexplored. Furthermore, integrating Internet of Things (IoT) technology into UGVs for autonomous seeding is an open research question. To address these challenges, this paper introduces a compact IoT-enabled UGV designed specifically for artistic landscaping applications. The system includes a complete seeding mechanism with dedicated modules for soil digging, sowing, water spraying, and backfilling. These modules are coordinated by a microcontroller-based control system to ensure reliability and repeatability. In addition, a web-based interface was developed to support both autonomous and manual operation modes, allowing users to customize path planning for geometric seeding patterns and to monitor operations in real time. A fully functional prototype was built and tested under controlled conditions to confirm the effectiveness of the core modules. This development offers a practical step toward the realization of smart and sustainable cities.

1. Introduction

While climate change and biodiversity loss remain central to global environmental discourse, urban expansion and land-use conversion are increasingly recognized as major contributors to ecosystem degradation. As cities continue to grow, with over 68% of the global population projected to live in urban areas by 2050, the challenge lies in developing urban systems that harmonize built infrastructure with ecological sustainability [1]. Urban sprawl often fragments or completely replaces natural landscapes, reducing ecological integrity and functionality [2,3]. The consequences of these transformations include rising urban heat, disrupted hydrological cycles, and reduced biodiversity, conditions that compromise both environmental health and human well-being [4,5]. These converging pressures underline the urgent need for integrative urban planning solutions that reconcile infrastructure development with ecological preservation [3].

Urban green infrastructure has emerged as a critical strategy for addressing these multifaceted challenges while delivering measurable ecosystem services to urban communities [6]. Furthermore, green infrastructure serves as a nature-based solution for climate adaptation, enhancing urban resilience to extreme weather events while supporting biodiversity conservation in increasingly fragmented urban landscapes [7].

Plant landscaping offers a promising strategy for mitigating the ecological impacts of urbanization while enhancing urban livability. Vegetation in cities performs multiple roles: it reduces surface temperatures, improves microclimates, filters air pollutants, and provides psychological and recreational benefits [8]. These benefits position urban planting systems as more than esthetic elements; they function as critical green infrastructure with measurable contributions to environmental quality and public health. Therefore, effective integration of planting systems into urban design is not merely decorative but essential for building resilient, human-centered cities.

Despite their ecological and cultural potential, artistic planting designs remain underutilized in urban environments due to implementation constraints. Artistic landscaping—characterized by deliberate spatial arrangements of diverse plant species—requires time-intensive planning, skilled labor, and context-sensitive execution. These constraints limit its scalability in fast-growing urban areas where demand often exceeds human resource availability [8]. Without automation, such intricate planting designs are difficult to execute consistently or cost-effectively at scale. This implementation bottleneck restricts the practical adoption of ecologically expressive landscaping. Thus, there is a critical need for technological innovations that can translate artistic design principles into scalable, deployable solutions for modern cities.

Robotic systems have been developed for agricultural automation over the past decade, but most are ill-suited for the nuanced demands of artistic landscape planting. Several studies have introduced UGVs capable of multi-task operations such as planting, weeding, and monitoring [9,10]. Advanced agricultural robotics have demonstrated significant capabilities in precision agriculture, with comprehensive reviews highlighting their applications in harvesting, seeding, weeding, and crop monitoring [11,12]. For instance, ref. [13] developed a modular robot for seeding and mapping, while a weeding robot was introduced in [14], and ref. [15] presented a semi-autonomous system for seeding and harvesting. However, these platforms often lack holistic automation frameworks, rely heavily on human control, and are optimized for uniform monocultures rather than complex seeding patterns. These limitations reflect a gap between current agricultural robotic capabilities and the flexibility required for artistic landscaping, which demands high spatial precision, contextual adaptability, and creative interpretation of seeding plans. Consequently, while agricultural robotics offers foundational tools, a new class of robots is needed to bridge the divide between technical functionality and artistic ecological design.

This study introduces the conceptual and technical foundation for an intelligent robotic system tailored specifically for artistic landscape seeding in urban contexts. The proposed system is designed to autonomously interpret and execute seeding plans as spatially expressive compositions. It integrates IoT-enabled environmental sensing, modular hardware architecture, and a web-based user interface to enhance automation and adaptability. By fusing robotic engineering with landscape architectural principles, the system addresses the dual challenge of ecological fidelity and labor scalability. Unlike existing agricultural machines, it prioritizes design complexity, automation, task and site variability, and esthetic intent. This research contributes a novel framework for transforming artistic seeding from a manual, resource-intensive process into an intelligent, scalable component of urban green infrastructure.

To facilitate understanding of the proposed system, the remainder of this paper is organized as follows. Section 2 presents the overall system architecture, including hardware components and firmware integration. Section 3 describes the design of the web-based user interface used to control seeding schemes. Section 4 offers conclusions and discusses future research directions.

2. ARTgriculture System Design

The proposed system, named ARTgriculture, combines “art” and “agriculture” to reflect its dual focus on esthetic seeding design and autonomous execution. It was developed to overcome the labor and scalability barriers of artistic landscaping in urban environments by automating key seeding tasks.

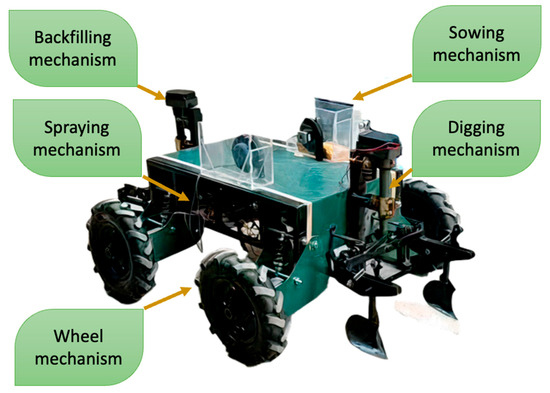

As shown in Figure 1, ARTgriculture is a modular UGV equipped with mechanisms for digging, sowing, backfilling, and spraying. Following the design specification in Table 1, the system integrates multiple subsystems managed by the STM32F401 microcontroller, which serves as the central controller. The digging and backfilling systems use linear motors for automated height adjustment. The sowing system incorporates a stepper motor to precisely control seed dispensing, allowing the user to specify the desired quantity. The spraying system employs a water pump to irrigate the soil after backfilling. Finally, the navigation system features a four-wheel-drive mechanism powered by four DC motors. The following subsections describe each subsystem in detail, including its hardware configuration and control algorithm.

Figure 1.

Real-life prototype of the ARTgriculture.

Table 1.

ARTgriculture Specification.

2.1. Digging and Backfilling System

Digging and backfilling are essential tasks in ARTgriculture's seeding workflow. Digging is the first step of the full seeding sequence, preparing holes at the specified locations for sowing. After sowing, backfilling covers the seeds with soil, completing the seeding process. ARTgriculture also allows users to perform these tasks independently as required. For instance, digging alone may be needed for transplanting saplings, and backfilling alone may be used to complete unfinished tasks.

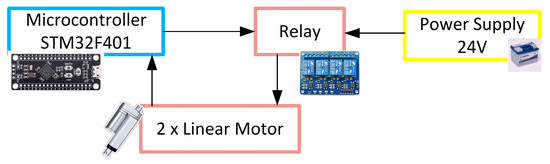

The digging system is mounted at the front of the UGV, while the backfilling system is positioned at the rear. Although both systems share similar core components, as illustrated in Figure 2, each is equipped with distinct tool heads designed for their specific tasks. Each system is powered by a dedicated linear motor, a DC 24 V unit with a 10 W power rating, a 1 A current draw, and a 30 cm stroke length. These motors are equipped with Hall effect sensors, providing precise position feedback for accurate height adjustments. Each motor operates independently through its own relay, controlled by the STM32F401 microcontroller. This configuration ensures precise and automated height control for the digging and backfilling rods, enhancing operational efficiency and enabling adaptability to diverse field conditions.

Figure 2.

Digging and backfilling hardware diagram.

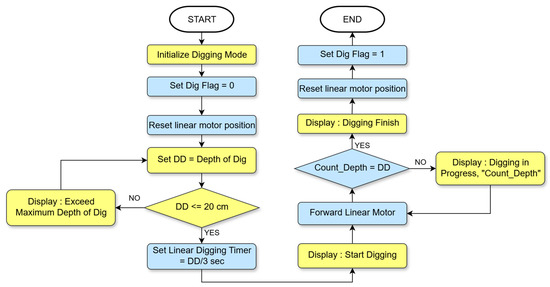

ARTgriculture's digging process, shown in Figure 3, uses color coding to indicate where each action takes place: yellow represents operations performed on the web page, while blue corresponds to tasks handled by the STM32 microcontroller. The process begins when the system initiates Digging Mode. Initially, the Dig Flag is set to 0, and the linear motor responsible for digging is reset to its starting position. The user inputs the desired digging depth (DD), which is validated against a maximum allowable depth of 20 cm. If the specified depth exceeds this limit, the system halts the operation and displays the warning message “Exceed Maximum Depth of Dig.” If the depth is within the allowable range, the system calculates the required digging time (Linear Digging Timer) as DD/3 s, with DD in centimeters; this is the time needed for the rod to reach the specified depth.

Figure 3.

Flow chart of the digging process (the backfilling process follows the same flow).
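The depth check and timer rule described above can be sketched as follows. This is a minimal Python model, not the STM32 firmware: the 20 cm limit, the warning text, and the DD/3 s rule come from the text, while the function, exception, and constant names are hypothetical. Reading DD in centimeters, the DD/3 s rule implies a rod feed rate of about 3 cm/s, which is an inference rather than a stated specification.

```python
MAX_DIG_DEPTH_CM = 20.0  # maximum allowable digging depth given in the text
# Inferred, not stated: the DD/3 s rule implies roughly 3 cm/s of rod travel.
ASSUMED_FEED_CM_PER_S = 3.0


class DepthLimitError(ValueError):
    """Hypothetical error raised when the requested depth exceeds the maximum."""


def linear_digging_timer(dd_cm: float) -> float:
    """Return the digging time in seconds for a requested depth DD (cm)."""
    if dd_cm > MAX_DIG_DEPTH_CM:
        # Mirrors the warning shown on the web page before the operation halts.
        raise DepthLimitError("Exceed Maximum Depth of Dig")
    if dd_cm <= 0:
        # Extra guard added in this sketch; the flow chart does not mention it.
        raise ValueError("digging depth must be positive")
    return dd_cm / ASSUMED_FEED_CM_PER_S
```

For example, a 15 cm hole gives a timer of 5 s, and the 20 cm maximum gives about 6.7 s.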

Once the parameters are set, the system starts the digging process and displays the message “Start Digging.” ARTgriculture's linear motor advances incrementally while the current depth (Count_Depth) is monitored in real time, and progress updates are displayed with the message “Digging in Progress, Count_Depth.” The operation continues until the current depth equals the desired depth (Count_Depth = DD). The system then resets the linear motor to its initial position, sets the Dig Flag to 1, and displays the message “Digging Finish,” leaving ARTgriculture ready for the next task. The same procedure applies to backfilling, in which ARTgriculture fills the holes created during digging after the seeds have been sown, leaving a uniform surface for subsequent operations.
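The progress loop described above can be modeled as a small simulation. This Python sketch is not the authors' STM32 code: the fixed one-centimeter increment per control tick, the `DigRun` class, and the `rod_position_cm` field are hypothetical, whereas the messages, Count_Depth, and the Dig Flag follow the text. A real implementation would read the Hall-effect feedback rather than assume a fixed increment.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DigRun:
    """Hypothetical state for one digging cycle (flag and counter names follow the text)."""
    dig_flag: int = 0
    count_depth: int = 0
    rod_position_cm: int = 0
    messages: List[str] = field(default_factory=list)


def run_dig_cycle(dd_cm: int, step_cm: int = 1) -> DigRun:
    """Advance until Count_Depth == DD, then retract the rod and set the Dig Flag."""
    run = DigRun()
    run.messages.append("Start Digging")
    while run.count_depth < dd_cm:
        # Assumed fixed increment per control tick; never overshoot DD.
        run.count_depth = min(run.count_depth + step_cm, dd_cm)
        run.rod_position_cm = run.count_depth
        run.messages.append(f"Digging in Progress, {run.count_depth}")
    run.rod_position_cm = 0  # reset the linear motor to its initial position
    run.dig_flag = 1
    run.messages.append("Digging Finish")
    return run
```

Backfilling could reuse the same loop with its own messages and flag, matching the statement that the same procedure applies to both.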

2.2. Sowing System

Sowing is one of the four main tasks in ARTgriculture's seeding process, performed after digging and before backfilling. This step places the seeds in the prepared holes. ARTgriculture's sowing system allows users to specify the seed size (small, medium, or large), and the system automatically determines the corresponding number of seeds to place in each hole, ensuring consistent seeding.

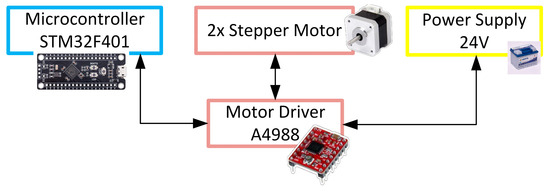

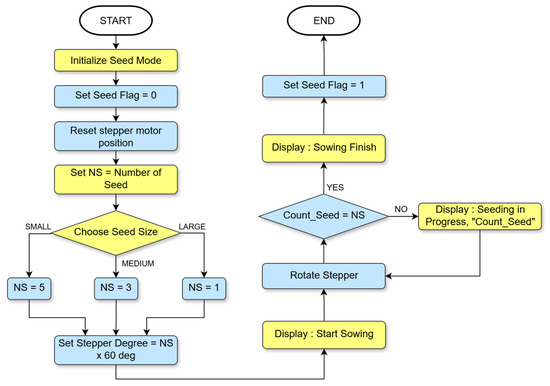

The sowing system, shown in Figure 4, uses a NEMA 17 stepper motor with a 1.8° step angle and a holding torque of 45 N·cm, driven by an A4988 driver. As shown in Figure 5, ARTgriculture's sowing process begins by initializing Seed Mode. During this step, the Seed Flag is set to 0, and the stepper motor controlling the sowing mechanism is reset to its initial position. The system then determines the number of seeds (NS) to be dispensed from the seed size selected by the user: NS is 5 for small seeds, 3 for medium seeds, and 1 for large seeds. Based on NS, the system calculates the rotation angle of the stepper motor as NS × 60°.

Figure 4.

Sowing hardware core components.

Figure 5.

Flow chart of sowing process.
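The mapping from seed size to motor rotation can be checked numerically. At 1.8° per full step (200 steps per revolution), 60° corresponds to about 33.3 steps, so the firmware has to round somewhere. The Python sketch below assumes full-step mode unless a microstepping factor is passed (the A4988 supports full, half, quarter, eighth, and sixteenth steps; the text does not say which is used) and rounds the cumulative target rather than each seed's increment, so that after every seed the total rotation stays within half a step of k × 60°. Function names are hypothetical.

```python
from typing import List

SEEDS_PER_HOLE = {"small": 5, "medium": 3, "large": 1}  # NS values from the text
DEG_PER_SEED = 60                                     # rotation per seed from the text
FULL_STEPS_PER_REV = 200                              # 1.8 degrees per full step


def sowing_rotation_deg(seed_size: str) -> int:
    """Total rotation NS x 60 degrees for the selected seed size."""
    return SEEDS_PER_HOLE[seed_size] * DEG_PER_SEED


def per_seed_steps(seed_size: str, microsteps: int = 1) -> List[int]:
    """Step counts for each seed, rounding the cumulative target half-up.

    Assumption: microsteps=1 means full-step mode. Rounding the running
    target (instead of each ~33.3-step increment) keeps error from accumulating.
    """
    steps_per_rev = FULL_STEPS_PER_REV * microsteps
    increments: List[int] = []
    previous = 0
    for k in range(1, SEEDS_PER_HOLE[seed_size] + 1):
        # floor(k * 60 * steps_per_rev / 360 + 1/2) in exact integer arithmetic
        target = (2 * k * DEG_PER_SEED * steps_per_rev + 360) // 720
        increments.append(target - previous)
        previous = target
    return increments
```

Under the full-step assumption, small seeds (300°) give increments of 33, 34, 33, 33, and 34 steps, totaling 167 steps instead of the 166.7 an exact 300° would require.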

Once these parameters are configured, the system starts the sowing process and displays “Start Sowing” on the web page. The stepper motor rotates incrementally to dispense the seeds while the system monitors the number of dispensed seeds (Count_Seed) in real time. Progress updates are shown with the message “Sowing in Progress, Count_Seed.” ARTgriculture continues the operation until Count_Seed equals NS. Once sowing is complete, the Seed Flag is set to 1, and the web page displays “Sowing Finish.”
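The dispensing loop can be sketched as a small class that rotates once per seed and updates Count_Seed and the Seed Flag. This is an illustrative Python model, not the authors' firmware: the `SowingRun` class and the `move_steps` callback (standing in for STEP pulses sent to the A4988) are hypothetical, and the per-seed step counts are taken as input, for example 33, 34, and 33 for three medium seeds if full-step mode is assumed.

```python
from typing import Callable, List


class SowingRun:
    """Hypothetical model tracking Count_Seed and the Seed Flag for one hole."""

    def __init__(self, per_seed_steps: List[int]) -> None:
        self.per_seed_steps = per_seed_steps  # NS entries, one per seed
        self.count_seed = 0
        self.seed_flag = 0
        self.messages: List[str] = []

    def run(self, move_steps: Callable[[int], None]) -> None:
        """Rotate once per seed until Count_Seed equals NS."""
        self.messages.append("Start Sowing")
        for steps in self.per_seed_steps:
            move_steps(steps)  # hypothetical driver hook for the stepper
            self.count_seed += 1
            self.messages.append(f"Sowing in Progress, {self.count_seed}")
        self.seed_flag = 1
        self.messages.append("Sowing Finish")
```

Injecting `move_steps` keeps the counting logic testable without hardware.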

2.3. Spraying System

In the autonomous seeding sequence, spraying is carried out after backfilling to irrigate the planted seeds. ARTgriculture also allows users to perform spraying as an independent operation, so the field can be watered as needed without executing other tasks.

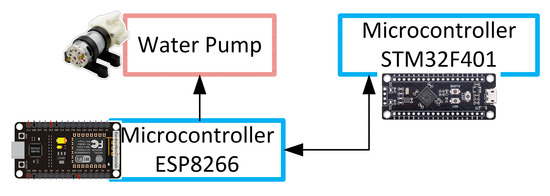

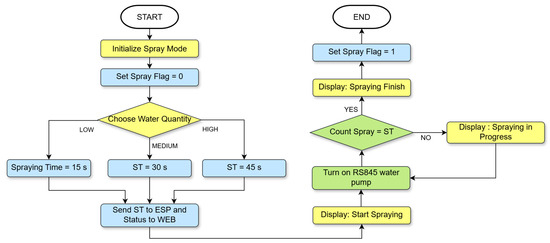

The water spraying system, shown in Figure 6, uses an R385 water pump, which operates at 6–12 V with a flow rate of up to 1.5 L per minute and is managed by the ESP8266 microcontroller. In the spraying flow chart in Figure 7, yellow represents actions on the web page, blue corresponds to processes executed by the main STM32 microcontroller, and green highlights tasks managed by the ESP8266 module.

Figure 6.

Spraying hardware core components.

Figure 7.

Flow chart of spraying process.

During the initialization step, the Spray Flag is set to 0, preparing the system for operation. The user selects the desired water quantity, which determines the spraying time (ST). The system offers three levels of water quantity: LOW (15 s), MEDIUM (30 s), and HIGH (45 s). Once the water quantity is selected, ST is set accordingly. This value is then sent to the ESP8266 module for processing, and the status is updated on the web interface to give the user real-time feedback.
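Combining the three spraying times with the pump's rated maximum flow of 1.5 L/min gives an upper bound on the water delivered per spraying cycle. The Python sketch below is illustrative; the function names are hypothetical, and the bound assumes the pump actually reaches its rated maximum, which depends on supply voltage and nozzle.

```python
SPRAY_TIME_S = {"LOW": 15, "MEDIUM": 30, "HIGH": 45}  # levels from the text
PUMP_MAX_FLOW_L_PER_MIN = 1.5  # R385 rated maximum flow from the text


def spraying_time(level: str) -> int:
    """Spraying time ST in seconds for the selected water quantity."""
    return SPRAY_TIME_S[level.upper()]


def max_water_volume_l(level: str) -> float:
    """Upper bound on delivered water, assuming the pump runs at its rated maximum."""
    return spraying_time(level) * PUMP_MAX_FLOW_L_PER_MIN / 60
```

This gives at most 0.375 L for LOW, 0.75 L for MEDIUM, and 1.125 L for HIGH; the actual volume will be lower if the pump runs below its rated flow.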

With the spraying time established, the system activates the spraying mechanism: the R385 water pump, powered by a 12 V supply, is switched on, and the system displays the message “Start Spraying.” During spraying, the ESP8266 tracks the elapsed time (Count_Spray) and updates the interface with progress messages such as “Spraying in Progress.” The process continues until Count_Spray equals ST. Once spraying is complete, the Spray Flag is set to 1, and the system displays the message “Spraying Finish.”
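Because the ESP8266 also handles Wi-Fi traffic, one common way to implement such a timer is non-blocking: compare elapsed time on each pass of the main loop instead of sleeping for ST seconds. The Python model below sketches that pattern with an injected clock value. The `SprayTimer` class, the `pump` callback, and the once-per-second progress reporting are assumptions, not details from the text.

```python
from typing import Callable, List, Optional


class SprayTimer:
    """Hypothetical non-blocking model of the spraying timer (Count_Spray vs. ST)."""

    def __init__(self, st_s: int, pump: Callable[[bool], None]) -> None:
        self.st_s = st_s          # spraying time ST in seconds
        self.pump = pump          # hypothetical hook that switches the 12 V pump
        self.spray_flag = 0
        self.count_spray = 0
        self.start_s: Optional[float] = None
        self.messages: List[str] = []

    def start(self, now_s: float) -> None:
        self.start_s = now_s
        self.pump(True)
        self.messages.append("Start Spraying")

    def update(self, now_s: float) -> None:
        """Call on every loop pass; returns immediately instead of blocking."""
        if self.start_s is None or self.spray_flag == 1:
            return
        elapsed = int(now_s - self.start_s)  # whole seconds since start
        if elapsed == self.count_spray:
            return  # nothing new to report within the same second
        self.count_spray = elapsed
        if self.count_spray >= self.st_s:
            self.pump(False)
            self.spray_flag = 1
            self.messages.append("Spraying Finish")
        else:
            self.messages.append("Spraying in Progress")
```

On the device, `now_s` would come from a millisecond counter; keeping the check non-blocking leaves the loop free to serve web requests.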

2.4. Navigation System

The navigation system is a critical component of ARTgriculture, enabling precise and efficient movement across agricultural fields to perform its seeding tasks. This system ensures that tasks such as digging, sowing, backfilling, and spraying are performed according to users’ requirements while minimizing errors and maximizing productivity. Effective navigation is essential for achieving the precision and consistency required in modern farming, making it a cornerstone of ARTgriculture’s functionality.

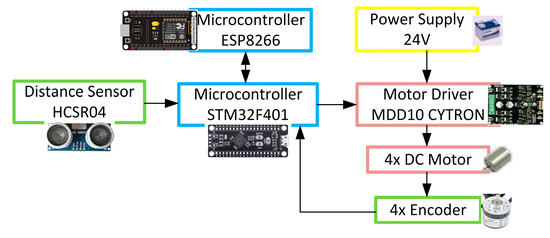

For navigation and mobility, ARTgriculture features a four-wheel-drive mechanism powered by four DC motors, each rated at 24 V and 1.6 A and capable of delivering a torque of 9 N·m, with a 65.5:1 gear ratio and a speed of 40 rpm. These motors are controlled by a Cytron MDD10 motor driver, which supports dual-channel motor control with a continuous current capacity of 10 A per channel. Movement is monitored by four incremental rotary encoders (5–24 V DC supply, two-phase A/B output, 6 mm shaft) with a resolution of 360 pulses per revolution for precise tracking and adjustment. Additionally, ARTgriculture is equipped with an obstacle avoidance system based on an HC-SR04 ultrasonic distance sensor. Wireless communication is handled by the ESP8266 microcontroller, allowing remote control and monitoring through a web-based interface. Figure 8 illustrates the complete navigation hardware.

Figure 8.

Navigation hardware core components.
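Two conversions underlie this hardware: ultrasonic echo time to distance, and encoder counts to distance traveled. The Python sketch below uses the standard HC-SR04 relation (distance equals echo time multiplied by the speed of sound, halved for the round trip, with roughly 343 m/s in air at 20 °C) and the 360 P/R encoder resolution from the text. The wheel diameter, the assumption that each encoder sits on the wheel shaft after the gearbox, the ×4 quadrature decoding, and the 30 cm obstacle threshold are all hypothetical, since the text does not state them.

```python
import math

SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s in air at 20 degrees C
ENCODER_PPR = 360                  # pulses per revolution, from the text
QUADRATURE_FACTOR = 4              # assumed: count every A/B edge
WHEEL_DIAMETER_M = 0.20            # hypothetical value for illustration
MOTOR_RPM = 40                     # rated output speed from the text
OBSTACLE_THRESHOLD_CM = 30.0       # hypothetical stop distance


def echo_to_distance_cm(echo_us: float) -> float:
    """HC-SR04: the echo pulse spans the round trip, so halve it."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


def obstacle_ahead(echo_us: float) -> bool:
    """True when the measured distance is below the (hypothetical) threshold."""
    return echo_to_distance_cm(echo_us) < OBSTACLE_THRESHOLD_CM


def counts_to_distance_m(counts: int) -> float:
    """Distance traveled for a number of decoded counts (encoder on wheel shaft assumed)."""
    counts_per_rev = ENCODER_PPR * QUADRATURE_FACTOR
    return counts / counts_per_rev * math.pi * WHEEL_DIAMETER_M


def max_ground_speed_m_per_s() -> float:
    """Ground speed at the rated 40 rpm, ignoring wheel slip."""
    return MOTOR_RPM / 60 * math.pi * WHEEL_DIAMETER_M
```

With the hypothetical 0.20 m wheel, one decoded count corresponds to about 0.44 mm of travel and the top speed is about 0.42 m/s.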

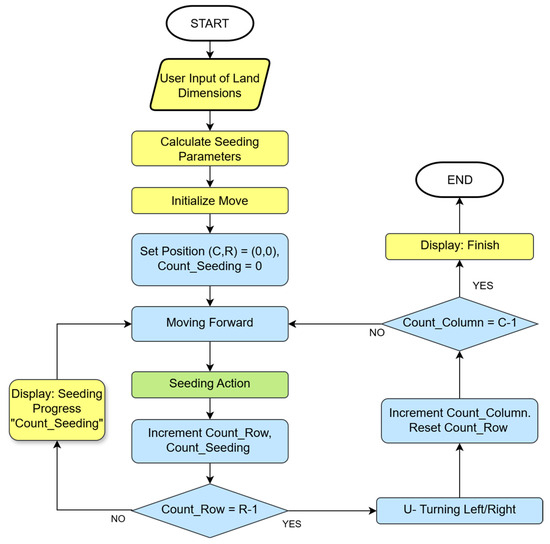

The flowchart in Figure 9 uses color coding to represent where each action occurs: yellow indicates processes on the web page, blue represents calculations and commands executed by the main microcontroller, and green highlights tasks performed directly by the UGV. The navigation process of ARTgriculture starts with specifying parameters such as the land dimensions (length L and width W), the spacing between seeding points, the digging depth, and the water volume for spraying. The seeding parameters, such as the total number of rows (R) and columns (C), the number and type of required turns, and the total number of seeding points, are then calculated.

Figure 9.

Flow chart of navigation process.

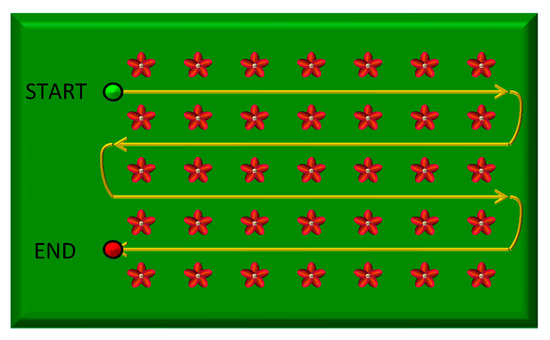

The grid size, that is, the number of rows R and columns C, is determined from the land length L, the land width W, and the spacing between seeding points. Once the parameters are set, ARTgriculture initializes its position at (0, 0), with all counters set to zero. The robot moves forward along the grid, performing seeding actions at each node while incrementing both the seeding counter (Count_Seeding) and the row counter (Count_Row). When ARTgriculture reaches the last row of the current column (i.e., Count_Row = R − 1), it executes a U-turn to align itself with the next column. If additional columns remain (i.e., Count_Column < C − 1), the column counter is incremented and the row counter is reset to zero, allowing the robot to begin seeding along the next column. This process continues until all rows and columns are traversed, as shown in Figure 10. Upon completion, a “Finish” message is displayed.

Figure 10.

Illustration of ARTgriculture path planning.
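The traversal described above is a serpentine (boustrophedon) sweep. As an illustration only, the Python sketch below assumes seeding points on both field edges, so that R = ⌊L/S⌋ + 1 and C = ⌊W/S⌋ + 1 for spacing S, with rows counted along the length and all dimensions in whole centimeters; these formulas, the function names, and the (column, row) waypoint format are assumptions. The visiting order follows the counter logic in the text: advance through rows 0 to R − 1, make a U-turn, and run the next column in the opposite direction.

```python
from typing import List, Tuple


def grid_size(length_cm: int, width_cm: int, spacing_cm: int) -> Tuple[int, int]:
    """Assumed grid rule: points on both edges, rows along the length."""
    rows = length_cm // spacing_cm + 1
    cols = width_cm // spacing_cm + 1
    return rows, cols


def serpentine_path(rows: int, cols: int) -> List[Tuple[int, int]]:
    """Visit every (column, row) node, reversing direction after each U-turn."""
    path: List[Tuple[int, int]] = []
    for column in range(cols):
        # Even columns run forward; odd columns run back after the U-turn.
        row_order = range(rows) if column % 2 == 0 else range(rows - 1, -1, -1)
        for row in row_order:
            path.append((column, row))
    return path
```

Under these assumptions, a 2 m × 1 m plot at 50 cm spacing yields a 5 × 3 grid, 15 seeding points, and C − 1 = 2 U-turns.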

3. ARTgriculture Web-Based User Interface

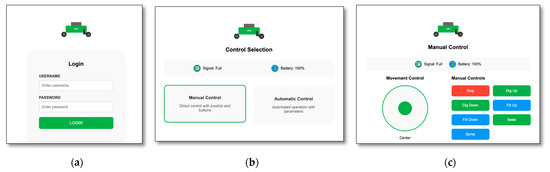

The web application illustrated in Figure 11 provides a user-friendly interface for managing and controlling all processes of the seeding task. Its front-end is developed with the Flutter 3.10 framework, while back-end operations are handled by Laravel 10, an open-source PHP framework, running on PHP 8.1.

Figure 11.

Web-based User Interface of (a) login screen, (b) control selection, and (c) manual control interface.

Upon successful login, the dashboard enables the user to choose between manual and autonomous modes. In manual mode, the web application functions as a digital joystick, enabling the user to remotely navigate the UGV. For autonomous mode, users can configure commands and define parameters on the web platform specific to the selected operation. These parameters include the shape and dimensions of the land, the spacing between seeding points, the intensity of spraying, and the size of the seed to be planted. Commands are sent to the ESP8266 module on ARTgriculture, which relays confirmation to the cloud server. If ARTgriculture encounters an obstacle, the user is notified via an alert on the dashboard.

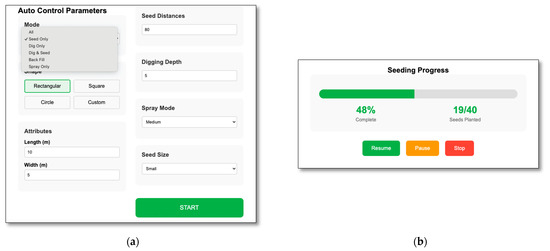

The autonomous control system of ARTgriculture supports six operational modes, as shown in Figure 12a. The “All” mode performs a full sequence of tasks, including digging, sowing, backfilling, and spraying. Users can customize movement patterns to suit specific field layouts, such as squares, rectangles, or other shapes, by specifying the length and width of the land. The depth of digging can also be specified, with a maximum allowable depth of 20 cm. For spraying, users can choose from three quantity options, and for sowing, they can select from three predefined seed sizes.

Figure 12.

Autonomous seeding control user interface (a) parameter setting page, (b) progress tracker page.
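The parameter limits above lend themselves to validation before a command leaves the web page. The Python sketch below checks the "All" mode inputs against the constraints stated in the text (a 20 cm maximum depth, three spray levels, three seed sizes) and serializes them as JSON. The JSON transport, field names, and function name are hypothetical; the text does not describe the actual message format between the server and the ESP8266.

```python
import json

SPRAY_LEVELS = {"LOW", "MEDIUM", "HIGH"}      # options from the text
SEED_SIZES = {"small", "medium", "large"}     # options from the text
MAX_DIG_DEPTH_CM = 20                         # limit from the text


def build_all_mode_command(length_cm: int, width_cm: int, spacing_cm: int,
                           depth_cm: int, spray_level: str, seed_size: str) -> str:
    """Validate 'All' mode parameters and serialize them (hypothetical schema)."""
    if min(length_cm, width_cm, spacing_cm) <= 0:
        raise ValueError("land dimensions and spacing must be positive")
    if depth_cm <= 0:
        raise ValueError("digging depth must be positive")
    if depth_cm > MAX_DIG_DEPTH_CM:
        raise ValueError("Exceed Maximum Depth of Dig")
    if spray_level not in SPRAY_LEVELS:
        raise ValueError(f"unknown spray level: {spray_level}")
    if seed_size not in SEED_SIZES:
        raise ValueError(f"unknown seed size: {seed_size}")
    return json.dumps({
        "mode": "all",
        "land": {"length_cm": length_cm, "width_cm": width_cm},
        "spacing_cm": spacing_cm,
        "depth_cm": depth_cm,
        "spray": spray_level,
        "seed": seed_size,
    }, sort_keys=True)
```

Rejecting invalid input on the web side gives the user immediate feedback, though the firmware would still need its own depth check, as the digging flow chart already performs.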

The “Dig Only” mode allows users to utilize ARTgriculture for applications beyond seed planting. For instance, users may need to dig holes for planting small plants, shrubs, or saplings that require manual placement. This mode adapts ARTgriculture to diverse planting needs beyond traditional seeding.

Similarly, the “Spray Only” mode is particularly useful after the full seeding process is completed. Users may need to water the planted seeds daily or apply fertilizers or pesticides without triggering other tasks such as digging or sowing. This flexibility ensures that ARTgriculture can support ongoing maintenance and care for crops beyond the initial seeding stage.

These specialized modes make ARTgriculture a highly adaptable tool, capable of catering to a wide range of farming requirements and offering flexibility to suit specific operational needs.

A progress tracker, shown in Figure 12b, keeps the user informed of task completion (e.g., “19 out of 40 seeds planted”). The interface also includes control buttons that allow the user to pause ongoing operations, resume paused tasks, or stop them entirely, ensuring user control throughout the process.

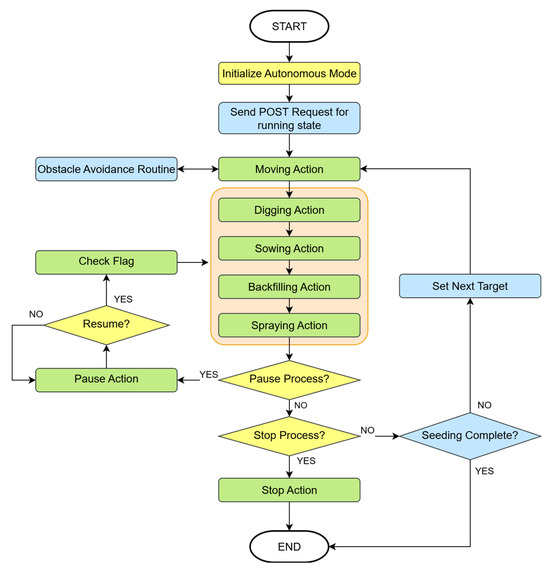

As shown in Figure 13, the process begins with the user activating Autonomous Mode. The chart employs color coding to delineate the locations of each step: yellow signifies processes occurring on the web page, blue corresponds to actions performed by the primary microcontroller, and green indicates tasks directly executed by the UGV. ARTgriculture then performs its primary tasks, including navigating to the target location, digging, planting seeds, leveling soil, and spraying water. In the event of an obstacle detection, the UGV executes an obstacle avoidance protocol before resuming its trajectory. Throughout this process, ARTgriculture monitors user inputs to initiate pauses or halt the operation. If a pause is requested, the UGV ceases its operations and awaits a resume command. Conversely, upon receiving a stop command, all actions are terminated promptly. This iterative process continues at each target location until all tasks have been successfully executed.

Figure 13.

Flow chart of autonomous seeding mechanism.
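The pause, resume, and stop behavior in this flow chart can be sketched as a small state machine that advances one target location at a time and produces the progress text shown on the tracker page. This Python model is illustrative: the class, the `seed_point` callback (standing in for navigating, digging, sowing, backfilling, and spraying at one node), and the command strings are hypothetical. For simplicity, commands take effect between locations, and obstacle avoidance is omitted.

```python
from enum import Enum
from typing import Callable


class RunState(Enum):
    RUNNING = "running"
    PAUSED = "paused"
    STOPPED = "stopped"


class AutonomousSeeding:
    """Hypothetical per-location task loop with pause, resume, and stop."""

    def __init__(self, total_points: int) -> None:
        self.total_points = total_points
        self.completed = 0
        self.state = RunState.RUNNING

    def command(self, cmd: str) -> None:
        """Apply a dashboard command; a stopped run cannot be resumed."""
        if self.state is RunState.STOPPED:
            return
        if cmd == "pause":
            self.state = RunState.PAUSED
        elif cmd == "resume":
            self.state = RunState.RUNNING
        elif cmd == "stop":
            self.state = RunState.STOPPED

    def step(self, seed_point: Callable[[int], None]) -> bool:
        """Seed the next location if running; return True if work was done."""
        if self.state is not RunState.RUNNING or self.completed >= self.total_points:
            return False
        seed_point(self.completed)  # navigate, dig, sow, backfill, spray
        self.completed += 1
        return True

    def progress(self) -> str:
        return f"{self.completed} out of {self.total_points} seeds planted"
```

Treating stop as terminal matches the description that a stop command ends all actions, whereas a pause only waits for a resume.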

4. Conclusions

This study achieved its primary objective of developing the ARTgriculture system and testing it module by module. The core modules were constructed and validated under controlled conditions, demonstrating their ability to perform essential agricultural operations such as digging, sowing, backfilling, and spraying. These results show that the fundamental research objectives have been met at the module level.

However, it is important to acknowledge the current limitations of this work. While individual modules have shown promising performance, the comprehensive integration and testing of the entire ARTgriculture system have not yet been completed. Additionally, the current path planning algorithm is suitable for the initial development phase but requires further advancement to handle more complex agricultural scenarios.

Looking ahead, future work will focus on expanding the system’s operational capacity and integrating advanced functionalities. For instance, adopting machine vision technology will enable autonomous weed and pest detection and removal, further strengthening the system’s potential to revolutionize modern farming practices.

Author Contributions

Conceptualization, R.M., A.S.S., and S.A.E.; methodology, R.M., N.E.E., and A.S.S.; software, A.R.A.; validation, R.M., N.E.E., A.S.S., and S.A.E.; formal analysis, R.M., and S.A.E.; investigation, R.M., N.E.E., and S.A.E.; resources, N.E.E., A.S.S. and S.A.E.; data curation, R.M.; writing—original draft preparation, R.M. and A.R.A.; writing—review and editing, N.E.E., A.S.S., and S.A.E.; visualization, A.R.A.; supervision, R.M., A.S.S., and N.E.E.; project administration, S.A.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors thank the students who made this work possible.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- United Nations Department of Economic and Social Affairs. World Urbanization Prospects: The 2018 Revision; UN: New York, NY, USA, 2019.

- Liu, R.; Dong, X.; Wang, X.C.; Zhang, P.; Liu, M.; Zhang, Y. Study on the Relationship among the Urbanization Process, Ecosystem Services and Human Well-Being in an Arid Region in the Context of Carbon Flow: Taking the Manas River Basin as an Example. Ecol. Indic. 2021, 132, 108248.

- Niinemets, Ü.; Peñuelas, J. Gardening and Urban Landscaping: Significant Players in Global Change. Trends Plant Sci. 2008, 13, 60–65.

- Venter, Z.S.; Barton, D.N. Linking Green Infrastructure to Urban Heat and Human Health Risk Mitigation in Oslo, Norway. Sci. Total Environ. 2020, 709, 136193.

- Pandey, B.; Ghosh, A. Urban Ecosystem Services and Climate Change: A Dynamic Interplay. Front. Sustain. Cities 2023, 5, 1281430.

- Cameron, R.W.F.; Blanuša, T. Green Infrastructure and Ecosystem Services–Is the Devil in the Detail? Ann. Bot. 2016, 118, 377–391.

- Leal Filho, W.; Wolf, F.; Castro-Díaz, R.; Li, C.; Ojeh, V.N.; Gutiérrez, N.; Nagy, G.J.; Savić, S.; Natenzon, C.E.; Quasem Al-Amin, A.; et al. Addressing the Urban Heat Islands Effect: A Cross-Country Assessment of the Role of Green Infrastructure. Sustainability 2021, 13, 753.

- Tian, L. Analysis of the Artistic Effect of Garden Plant Landscaping in Urban Greening. Comput. Intell. Neurosci. 2022, 2022, 2430067.

- Vasconez, J.P.; Kantor, G.A.; Auat Cheein, F.A. Human–Robot Interaction in Agriculture: A Survey and Current Challenges. Biosyst. Eng. 2019, 179, 35–48.

- Cheng, C.; Fu, J.; Su, H.; Ren, L. Recent Advancements in Agriculture Robots: Benefits and Challenges. Machines 2023, 11, 48.

- Oliveira, L.F.P.; Moreira, A.P.; Silva, M.F. Advances in Agriculture Robotics: A State-of-the-Art Review and Challenges Ahead. Robotics 2021, 10, 52.

- Fountas, S.; Mylonas, N.; Malounas, I.; Rodias, E.; Hellmann Santos, C.; Pekkeriet, E. Agricultural Robotics for Field Operations. Sensors 2020, 20, 2672.

- Grimstad, L.; Pham, C.D.; Phan, H.T.; From, P.J. On the Design of a Low-Cost, Light-Weight, and Highly Versatile Agricultural Robot. In Proceedings of the 2015 IEEE International Workshop on Advanced Robotics and Its Social Impacts (ARSO), Lyon, France, 30 June–2 July 2015; IEEE: New York, NY, USA, 2015; pp. 1–6.

- Thomas, M.J.; Goud, R.S.B.K.; Jayaprakash, N.; Mohan, S. Conceptual Design and Structural Analysis of a Multipurpose Agricultural Robot. In Intelligent Manufacturing and Energy Sustainability. ICIMES 2023; Springer: Singapore, 2024; pp. 127–137.

- Otani, T.; Itoh, A.; Mizukami, H.; Murakami, M.; Yoshida, S.; Terae, K.; Tanaka, T.; Masaya, K.; Aotake, S.; Funabashi, M.; et al. Agricultural Robot under Solar Panels for Sowing, Pruning, and Harvesting in a Synecoculture Environment. Agriculture 2022, 13, 18.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).