1. Introduction

Web applications are one of the primary targets of cyberattacks due to their high exposure in connected environments and the constant exchange of sensitive information such as personal data, access credentials, and financial transactions. This has made web application security a critical concern for both developers and organizations. These threats have not only increased in volume but also evolved in sophistication, employing advanced techniques that exploit vulnerabilities across the design, development, and deployment stages of systems [1]. Initiatives such as the Open Web Application Security Project (OWASP) have extensively documented these risks, and its Top-Ten list serves as a global reference for identifying and mitigating the most critical threats [2]. However, despite current standards and best practices, many applications continue to be deployed with flaws that allow attacks such as code injection, identity spoofing, privilege escalation, sensitive data exposure, and denial of service [3].

Furthermore, the accelerated pace of web application development, driven by agile methodologies, an increasing reliance on third-party libraries and cloud services, and growing pressure to reduce delivery times, has contributed to security being treated as a secondary priority [4]. In this setting, traditional protection mechanisms such as network firewalls or antivirus solutions are insufficient against attacks that specifically target the logical and functional layers of web applications.

To mitigate these risks, specialized solutions such as Web Application Firewalls (WAFs) have emerged, designed to inspect HTTP traffic and filter potentially malicious requests [5]. WAFs based on a positive security logic identify malicious traffic by detecting behavior that deviates from previously learned patterns; in this context, various studies propose machine learning techniques to enhance their adaptability [6,7,8]. In contrast, WAFs based on a negative security logic detect known attacks through predefined signatures or rules that match specific threat patterns, such as the OWASP Core Rule Set (CRS) [9].

Recent research efforts have started to investigate emerging technologies such as Coraza WAF. This technology is described as an open-source WAF with a modern architecture written in Go and native integration with the OWASP CRS, positioning it as a lightweight alternative to legacy solutions such as ModSecurity [10]. Although it offers preview support for multiple reverse proxies, its integration is considered stable only for the Caddy reverse proxy and for Proxy-WASM extensions (e.g., Envoy), whereas other connectors are still experimental.

Studies related to Coraza WAF have served two purposes. First, Coraza has been compared with other negative security logic WAFs, namely ModSecurity and NAXSI, by analyzing real-time performance and processing latency data [11]. Second, it has been included in a series of educational laboratories that integrate Coraza WAF with Caddy to simulate risks defined by the OWASP Top Ten, promoting a practical approach in controlled environments [12]. The former does not analyze each WAF in terms of detection rules, configurable sensitivity levels, or false positive rates, which are critical for understanding real-world effectiveness. In the latter, the use of Coraza is limited to a functional demonstration scenario and does not include performance benchmarking, error rate evaluation (e.g., false positives), or sensitivity configuration.

The impact of both false positives and false negatives on network intrusion detection systems has been widely recognized, as they can lead to operational inefficiencies or undetected threats [13,14,15]. This issue has been addressed from various perspectives; for example, [16] analyzes, from a mathematical perspective, how detection errors affect the robustness of different types of complex networks. Although that study does not focus on WAFs, it provides clear evidence that false positives and false negatives must be carefully handled to avoid compromising the assessment of network robustness under attack conditions. While that approach is framed within abstract complex networks, it is conceptually applicable to real-world defense systems. Concerning WAFs specifically, it is critical to minimize false positives while maintaining robust detection [17], particularly as rule sensitivity increases, for example through the OWASP CRS paranoia levels.

Despite these developments, to the best of the authors’ knowledge, there is still a lack of academic research that analyzes Coraza WAF’s detection capabilities under variable sensitivity settings such as the OWASP CRS paranoia levels, especially in realistic environments involving reverse proxies.

This study addresses this gap by evaluating the behavior of Coraza WAF integrated with two different reverse proxies, Caddy and Envoy, in controlled environments that include vulnerable backend servers corresponding to each category of the OWASP Top-Ten framework, as well as an HTTPS backend server. The aims are to assess attack-blocking performance and the generation of false positives under the four paranoia levels defined in the OWASP CRS.

This article is organized as follows. Section 2 describes the methodology employed in this study, including the configuration of the scenarios, the experimental design, and the applied statistical techniques. Section 3 presents the obtained results and their analysis, and Section 4 outlines the conclusions drawn from this study.

2. Methodology

In this section, the methodological design applied to evaluate the behavior of Coraza WAF integrated with the reverse proxies Caddy and Envoy is described. The testing laboratory setup, experimental procedure, and analysis criteria applied to measure both the attack blocking capacity and the generation of false positives under different sensitivity levels of the OWASP CRS are presented.

2.1. Testing Laboratory Setup

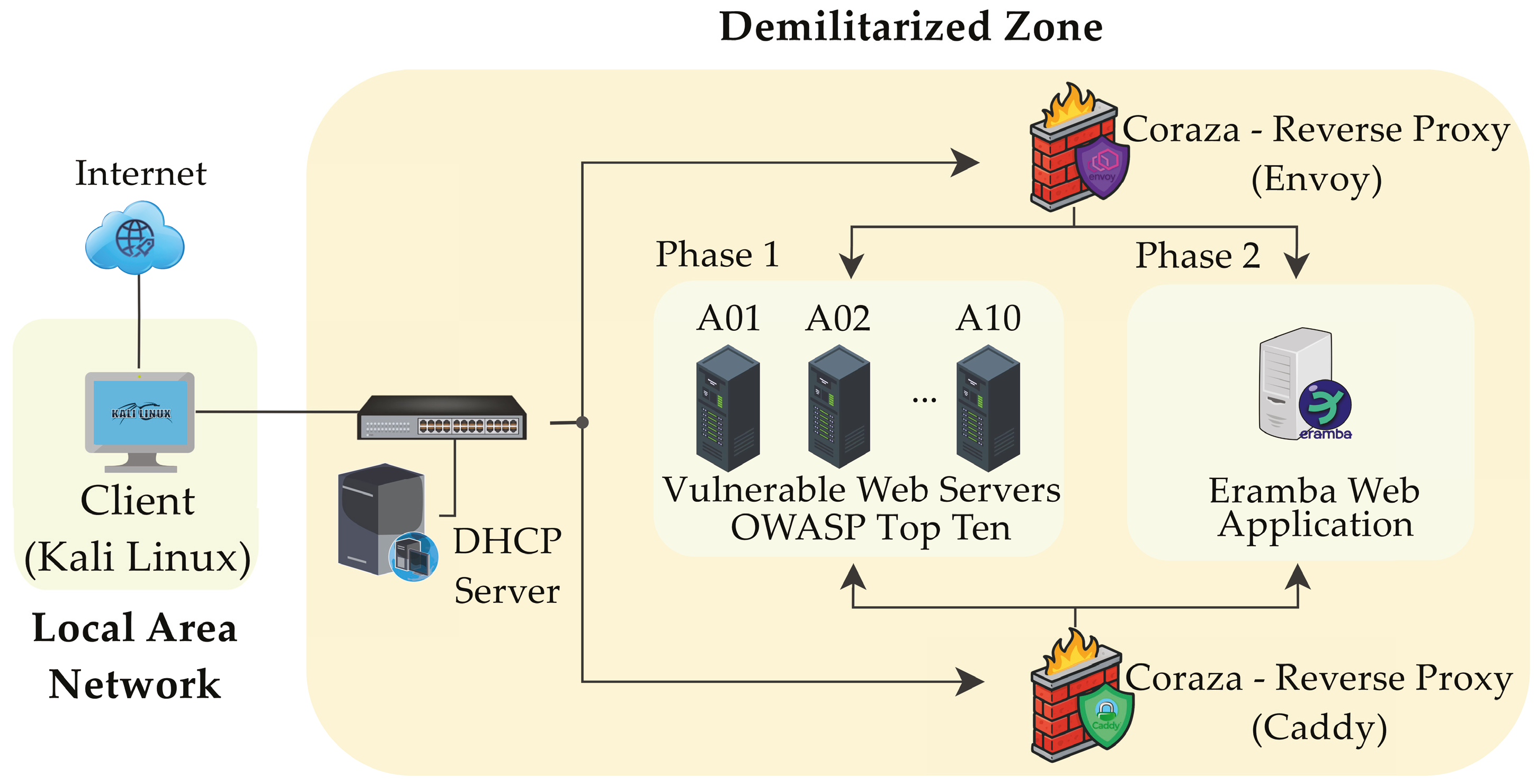

The testing laboratory implemented for this study is shown in Figure 1. This virtualized setup consists of a client (Kali Linux); ten training web servers, A01, A02, …, A10, downloaded from the VulnHub platform, one for each category of the OWASP Top-Ten 2021 framework; an HTTPS Eramba v3.26.3 server; and two reverse proxies (Envoy and Caddy) responsible for filtering and forwarding traffic through the Coraza WAF v3.3.3 solution.

The implemented reverse proxies are Caddy version 2.10.0 and Envoy version 1.34.1. These are selected because they are the only officially supported and stable environments according to Coraza’s current official documentation [10]. The two reverse proxies are evaluated separately in different testing scenarios, each acting as the inspection point for incoming traffic towards the backend servers. In Envoy, Coraza is integrated through its WebAssembly (WASM) module, while in Caddy it is incorporated natively. Installation and integration for both proxies follow the official Coraza WAF documentation: for Caddy, the Coraza plugin is compiled following the coraza-caddy repository guidelines [18], enabling native integration; for Envoy, the WebAssembly module is configured according to the coraza-proxy-wasm repository instructions [19].

For both proxy implementations, OWASP CRS version 4.15.0 is used for threat detection. In all scenarios, the client is a Kali Linux instance that generates HTTP/HTTPS requests directed to the backend servers, depending on the test phase.

2.2. Experimental Design

The experimental design is developed in two phases. In the first phase, Coraza WAF’s threat detection capacity is analyzed against structured attacks based on the OWASP Top-Ten categories [20]. In the second phase, the analysis is reoriented towards the evaluation of false positives generated by legitimate traffic under controlled conditions. In both phases, WAF behavior is evaluated under each of the four sensitivity levels (paranoia levels) defined by the OWASP CRS (PL1, PL2, PL3, and PL4).
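The paranoia level is a CRS setting rather than a proxy setting. As a minimal sketch, assuming the CRS v4 setup template (crs-setup.conf.example), where, as we understand it, rule 900000 sets `tx.blocking_paranoia_level`, the hypothetical helper below renders that directive for a given level together with optional configure-time exclusions of the kind produced by tuning. The helper name, the exclusion mapping, and the example target `ARGS:comment` are illustrative assumptions, not the configuration used in this study.

```python
from __future__ import annotations


def crs_paranoia_directives(level: int,
                            exclusions: dict[int, list[str]] | None = None) -> str:
    """Hypothetical helper: render CRS v4 directives for one paranoia level.

    exclusions maps a CRS rule id to request targets (e.g. "ARGS:comment")
    that the rule should stop inspecting after tuning.
    """
    if level not in (1, 2, 3, 4):
        raise ValueError("OWASP CRS defines paranoia levels 1 to 4")
    lines = [
        # Assumed to mirror rule 900000 in crs-setup.conf.example (CRS v4).
        'SecAction "id:900000,phase:1,pass,t:none,nolog,'
        f'setvar:tx.blocking_paranoia_level={level}"'
    ]
    for rule_id, targets in sorted((exclusions or {}).items()):
        for target in targets:
            # Configure-time exclusion: the rule keeps inspecting other inputs.
            lines.append(f'SecRuleUpdateTargetById {rule_id} "!{target}"')
    return "\n".join(lines)


print(crs_paranoia_directives(2, {942100: ["ARGS:comment"]}))
```

Because `SecRuleUpdateTargetById` modifies a rule that must already exist, such exclusions would be placed after the CRS rule files are included; narrowing a rule to ignore one input keeps its protection for every other input, unlike removing the rule entirely.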

During the first phase, controlled interactions are carried out from the Kali Linux instance towards the vulnerable servers listed in Table 1 of [21], each corresponding to an OWASP Top-Ten category. The tests are executed separately for each reverse proxy, first with Caddy and then with Envoy. In each environment, blocking events, triggered rules, and the HTTP status codes generated by the WAF are recorded. In the second phase, the vulnerable servers are replaced by the Eramba web application, accessible via the secure port 443. From Kali Linux, HTTPS requests simulating legitimate user interactions are sent to the backend; these requests are intercepted and used as a basis to construct a set of POST requests with controlled modifications to the input fields. Variants that insert special characters (such as * and =, among others) are generated without altering the format or meaning of the requests, ensuring that only valid traffic is used.
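The construction of the second-phase request variants can be sketched as follows. This is a minimal illustration, assuming form-encoded POST bodies; the field names in `BASELINE` are hypothetical (the Eramba forms used in the study are not listed), and only the two special characters named in the text are included.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlencode

# Hypothetical legitimate form captured from a user interaction.
BASELINE = {"title": "Quarterly review", "description": "Access policy update"}
# Special characters named in the text; the study also used others.
SPECIAL_CHARS = ["*", "="]


def make_variants(form: dict[str, str], chars: list[str]) -> list[str]:
    """Build URL-encoded POST bodies that each append one special character
    to one field, keeping the same fields and the same encoding."""
    bodies = []
    for field in form:
        for ch in chars:
            variant = dict(form)
            variant[field] = form[field] + ch
            bodies.append(urlencode(variant))  # '*' -> %2A, '=' -> %3D
    return bodies


variants = make_variants(BASELINE, SPECIAL_CHARS)
# Each body still decodes to the same set of fields as the baseline form.
assert all(set(parse_qs(b)) == set(BASELINE) for b in variants)
```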

Before starting the tests, a tuning process is defined in the proxies for each paranoia level from PL2 to PL4, following the OWASP CRS recommendations, to avoid blocking valid incoming requests [22]. Subsequently, an experimental random block repetition strategy is designed to ensure the statistical validity of the results. The test set is segmented into 30 sequential blocks, each containing an increasing number of requests, from 10 to 300 in increments of 10.
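The block structure can be made concrete with a short sketch. It assumes the 30 blocks of one repetition run in order and that the full sequence is repeated 20 times for both proxies at every paranoia level; the text does not detail how the random component of the strategy is applied, so none is modeled here. The name `schedule` and the tuple layout are illustrative.

```python
# Block sizes 10, 20, ..., 300 requests (30 blocks), as described in the text.
BLOCK_SIZES = list(range(10, 301, 10))
REPETITIONS = 20
PROXIES = ("caddy", "envoy")
PARANOIA_LEVELS = (1, 2, 3, 4)


def schedule():
    """Yield (proxy, paranoia_level, repetition, block_index, block_size)."""
    for proxy in PROXIES:
        for pl in PARANOIA_LEVELS:
            for rep in range(REPETITIONS):
                for idx, size in enumerate(BLOCK_SIZES):
                    yield proxy, pl, rep, idx, size


requests_per_repetition = sum(BLOCK_SIZES)  # 10 + 20 + ... + 300
total_requests = sum(step[-1] for step in schedule())
print(requests_per_repetition, total_requests)  # 4650 744000
```

Under these assumptions, one repetition sends 4,650 requests, so each combination of proxy and paranoia level involves 93,000 requests across the 20 repetitions.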

The 30 blocks are executed 20 times, separately for Caddy and Envoy, with Coraza inspection active under each paranoia level (PL1 to PL4) of the CRS. The analysis uses a 95% confidence level, and the adequacy of the number of repetitions is supported by a power analysis. Levene’s test is applied to the resulting sets of means to assess the homogeneity of variances between the two proxies for each data set. Depending on its outcome, Student’s t-test (equal variances) or Welch’s t-test (unequal variances) is used to compare the false positive rates and determine whether the observed differences are statistically significant.
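The decision rule above (Levene’s test first, then Student’s or Welch’s t-test) can be sketched with the Python standard library alone. This is an illustrative implementation, not the authors’ analysis code: p-values come from the regularized incomplete beta function evaluated by a continued fraction, and Levene’s test is centered on the median (the Brown-Forsythe variant, also SciPy’s default) unless `center=mean` is passed, because the text does not state which centering was used. In practice, `scipy.stats.levene` and `scipy.stats.ttest_ind(..., equal_var=False)` provide the same tests.

```python
import math
from statistics import mean, median, variance


def _beta_cf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for this m.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """P(|T| >= |t|) for Student's t with df degrees of freedom."""
    return reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))


def f_upper_p(f, d1, d2):
    """P(F >= f) for the F distribution with (d1, d2) degrees of freedom."""
    return reg_inc_beta(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def levene(x, y, center=median):
    """Two-group Levene test; center=mean gives the original statistic."""
    z = [[abs(v - c) for v in g] for g, c in ((x, center(x)), (y, center(y)))]
    n = [len(g) for g in z]
    big_n, k = sum(n), 2
    z_bar = [mean(g) for g in z]
    z_all = mean(z[0] + z[1])
    between = sum(ni * (zi - z_all) ** 2 for ni, zi in zip(n, z_bar))
    within = sum((v - zi) ** 2 for g, zi in zip(z, z_bar) for v in g)
    if within == 0:
        raise ValueError("no within-group spread: Levene's test is undefined")
    w = (big_n - k) / (k - 1) * between / within
    return w, f_upper_p(w, k - 1, big_n - k)


def compare_means(x, y, alpha=0.05):
    """Pick Student's or Welch's t-test from Levene's result, as in the text."""
    _, p_levene = levene(x, y)
    nx, ny = len(x), len(y)
    vx, vy = variance(x), variance(y)
    diff = mean(x) - mean(y)
    if p_levene >= alpha:
        # Equal variances not rejected: pooled-variance Student's t-test.
        pooled = ((nx - 1) * vx + (ny - 1) * vy) / (nx + ny - 2)
        t = diff / math.sqrt(pooled * (1 / nx + 1 / ny))
        df, test = nx + ny - 2, "student"
    else:
        # Unequal variances: Welch's t with Welch-Satterthwaite df.
        sx, sy = vx / nx, vy / ny
        t = diff / math.sqrt(sx + sy)
        df = (sx + sy) ** 2 / (sx ** 2 / (nx - 1) + sy ** 2 / (ny - 1))
        test = "welch"
    return test, t, df, t_two_sided_p(t, df)
```

Called on the two vectors of block means for one paranoia level (hypothetically `compare_means(caddy_means, envoy_means)`), the function returns the selected test, the t statistic, the degrees of freedom, and the two-sided p-value.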

3. Results and Discussion

The results obtained in the first phase are summarized in Table 1. At PL1, Coraza successfully blocks attacks in 90% of the cases (i.e., 10% are false negatives) while still allowing interfaces to load fully (GET requests) and the system to operate normally when submitting information (POST requests). At PL2 and PL3, however, the increased inspection sensitivity becomes evident: POST requests are blocked, partially affecting server operability and introducing false positives. Finally, at PL4, all traffic is blocked, preventing even basic access to web interfaces.
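The PL1 percentages follow from simple ratios over the recorded outcomes. The sketch below is illustrative only: it assumes a blocked request is identified by an HTTP 403 status (a common default for CRS denials, but an assumption here) and counts every blocked legitimate request as a false positive; the function name and inputs are hypothetical.

```python
BLOCK_STATUS = 403  # assumption: denied requests are answered with HTTP 403


def outcome_rates(attack_statuses, legit_statuses, block_status=BLOCK_STATUS):
    """Return (detection rate, false-negative rate, false-positive rate)
    from the HTTP status codes observed for attack and legitimate requests."""
    blocked_attacks = sum(s == block_status for s in attack_statuses)
    blocked_legit = sum(s == block_status for s in legit_statuses)
    detection = blocked_attacks / len(attack_statuses)
    false_pos = blocked_legit / len(legit_statuses) if legit_statuses else 0.0
    return detection, 1.0 - detection, false_pos


# Hypothetical PL1-like run: 9 of 10 attacks blocked, legitimate GET/POST pass.
print(outcome_rates([403] * 9 + [200], [200, 200, 200]))
```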

Although these results demonstrate Coraza’s capacity to respond to attack vectors, they do not allow for a clear distinction between the evaluated proxies, as both exhibit practically identical detection and response behavior. Furthermore, the logs generated by Coraza do not contain sufficient internal metrics (such as processing times), which prevents a more detailed technical comparison between the environments.

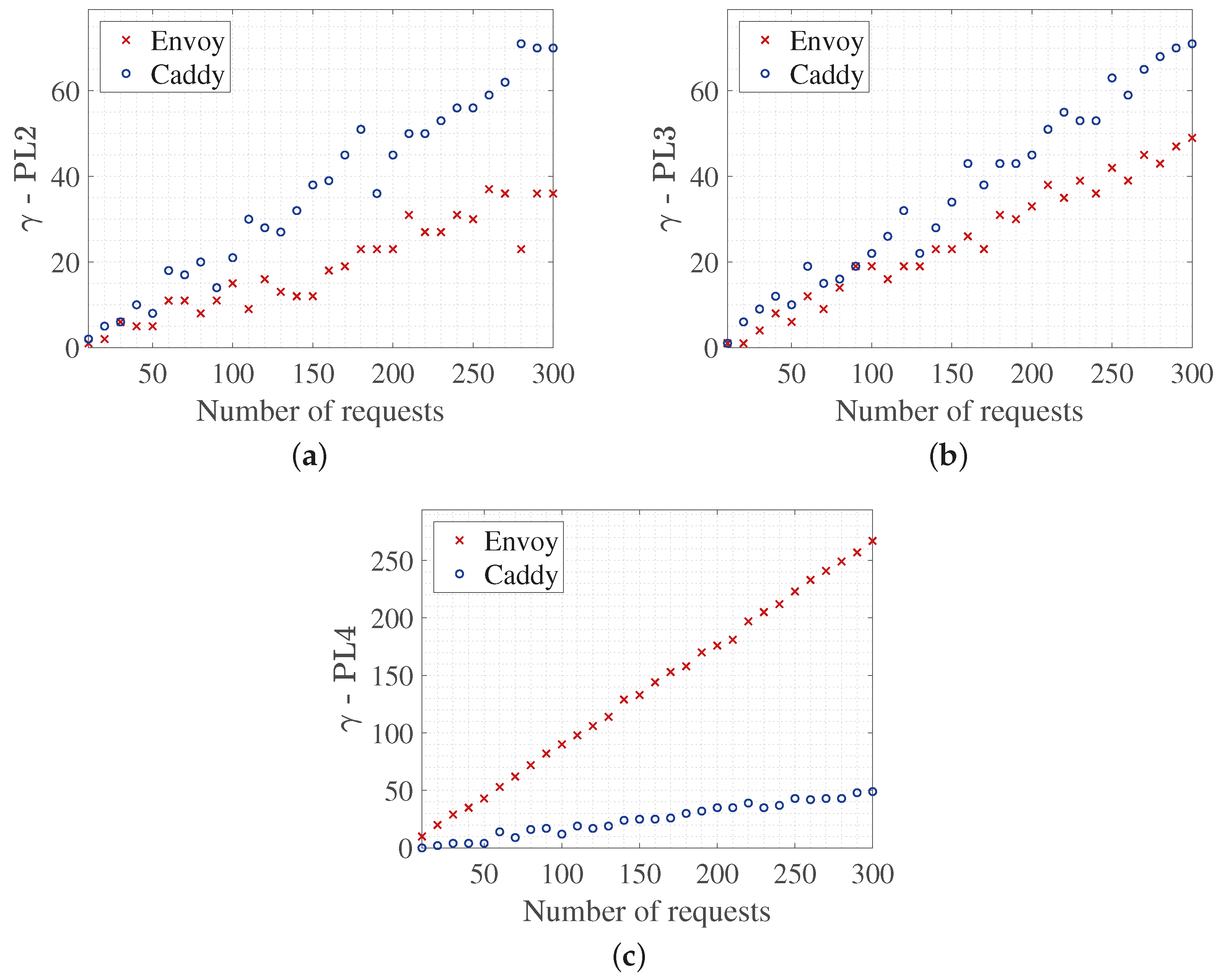

Figure 2 shows the trend of the mean number of false positives, calculated from the 20 observations of each request block.
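The per-block averaging can be sketched as follows, assuming the false positives are stored as one list per repetition with one count per block; `fp_counts` and `block_means` are hypothetical names. Taking the mean and sample standard deviation of the resulting vector is one plausible reading of the summary statistics reported in Table 2.

```python
from statistics import mean, stdev


def block_means(fp_counts):
    """fp_counts[r][j] is the number of false positives in block j of
    repetition r; return the mean over repetitions for every block."""
    n_blocks = len(fp_counts[0])
    if any(len(run) != n_blocks for run in fp_counts):
        raise ValueError("every repetition must cover the same blocks")
    return [mean(run[j] for run in fp_counts) for j in range(n_blocks)]


# Hypothetical data: 2 repetitions x 3 blocks (the study uses 20 x 30).
means = block_means([[0, 2, 4], [2, 4, 6]])
print(means, mean(means), stdev(means))
```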

Table 2 summarizes the statistical analysis applied to the mean vectors obtained from the 20 observations of each request set, including the standard deviation, Levene’s test for equality of variances, the results of both Student’s t-test and Welch’s t-test, and the power analysis. At PL1, both Caddy and Envoy correctly process all legitimate requests without generating false positives, which reflects a stable and conservative configuration of the CRS rule set. Since both proxies behave identically, with no variation across repetitions, this result is not plotted, as it offers no relevant comparative information.

The statistical power exceeds 0.66 in all conditions and reaches 1.00 for PL4, indicating that the sample size is sufficient to detect moderate to large effects at a 0.05 significance level. This supports the adequacy of the experimental replication strategy.
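The text does not state which power procedure was used. As a hedged illustration only, the sketch below uses the standard normal approximation to the power of a two-sided, two-sample t-test with equal group sizes, with Cohen’s d computed from a pooled standard deviation; exact power would use the noncentral t distribution (as in G*Power or statsmodels’ `TTestIndPower`). The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def cohens_d(m1, s1, m2, s2):
    """Standardized mean difference with a pooled SD (equal group sizes)."""
    return (m1 - m2) / sqrt((s1 ** 2 + s2 ** 2) / 2)


def approx_power(d, n_per_group, alpha=0.05):
    """Normal approximation to the power of a two-sided two-sample t-test."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = abs(d) * sqrt(n_per_group / 2)
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


for n in (10, 20, 30):
    print(n, round(approx_power(0.8, n), 3))
```

As expected, the approximate power equals the significance level when d = 0 and grows with the effect size and the number of observations per group.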

Figure 2a illustrates the differences between the proxies in terms of false positives at PL2. Caddy shows a marked increase in false positives as the number of requests grows, reaching a mean of 36.3 blocked requests with a standard deviation of 21.127. In contrast, Envoy exhibits more controlled behavior, with a mean of 18.567 and a standard deviation of 11.038. Levene’s test indicates that the variances are unequal (p-value = 0.00019), prompting the use of Welch’s t-test, which confirms a statistically significant difference (p-value = 0.00019) in favor of Envoy. At PL3 (Figure 2b), the trend persists: Caddy registers a higher mean number of false positives (36.367) than Envoy (24.967). Again, Levene’s test rejects the equality of variances (p-value = 0.0096), and Welch’s test indicates a statistically significant difference between the means (p-value = 0.0182), favoring Envoy.

However, at PL4 (Figure 2c), the behavior is inverted. Envoy becomes excessively restrictive, with a mean of 138 false positives and a very high standard deviation (77.977), while Caddy maintains a low and stable level (mean of 24.933, standard deviation of 14.839). In Figure 2c, Envoy’s curve increases almost linearly, whereas Caddy’s remains nearly constant. The statistical analysis corroborates this observation: Levene’s test yields a very low p-value, indicating unequal variances, and Welch’s t-test confirms a significant difference in favor of Caddy.

4. Conclusions

This study evaluated the behavior of Coraza WAF integrated with the reverse proxies Caddy and Envoy under different sensitivity levels of the OWASP CRS v4.15.0 rule set. The experimental scenarios were carried out in two phases: the first aimed at validating the blocking capacity against attack vectors defined by the OWASP Top-Ten, and the second focused on the detection of false positives arising from legitimate traffic. The results of the first phase demonstrated that both proxies allow functional integration with Coraza, which blocked 90% of the attacks categorized in the OWASP Top-Ten at PL1. However, from intermediate paranoia levels onward, legitimate requests were increasingly blocked, and under the strictest configuration (PL4) the web interfaces became completely inaccessible. The analysis of false positives revealed significant differences between the evaluated proxies: Envoy performed better at PL2 and PL3, producing fewer false positives than Caddy, whereas at PL4 Caddy maintained a more stable response while Envoy became far more restrictive, adversely affecting legitimate traffic.

Although the OWASP CRS version remained constant across all scenarios, the results indicate that the number of false positives depends not only on Coraza WAF but also on the operational characteristics of each reverse proxy. Factors such as header management, request handling, and filter execution order directly influence request interpretation. Therefore, selecting the most suitable proxy for integration with Coraza WAF should consider not only technical compatibility but also the configured sensitivity level and operational context, aiming to balance security and functionality according to the specific requirements of the protected environment.

The statistical tests across paranoia levels revealed a progressive change in proxy behavior. Envoy showed a clear advantage at PL2, with high statistical power, while Caddy performed better under the strictest configuration, PL4. PL3 emerged as a transitional level between these extremes: Envoy’s advantage, although still statistically significant, was smaller than at PL2, so neither proxy holds a decisive advantage. This intermediate behavior suggests that PL3 represents a critical threshold where the balance between security sensitivity and operational tolerance begins to shift, offering useful guidance for calibrating WAF deployments in production environments.

This analysis provides a basis for evaluating future deployments of Coraza WAF with proxies whose integration is currently in a development or experimental phase. However, these findings are derived from controlled laboratory scenarios, so their direct applicability to production environments may be limited by variables such as user concurrency, heterogeneous traffic patterns, and infrastructure-specific configurations. Future work should extend this evaluation to real-world deployments to validate and refine the observed trends.