A Real-Time Snore Detector Using Neural Networks and Selected Sound Features

Abstract

1. Introduction

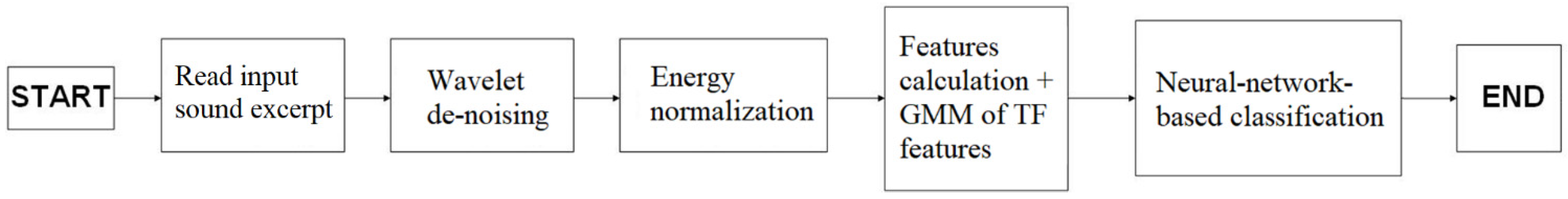

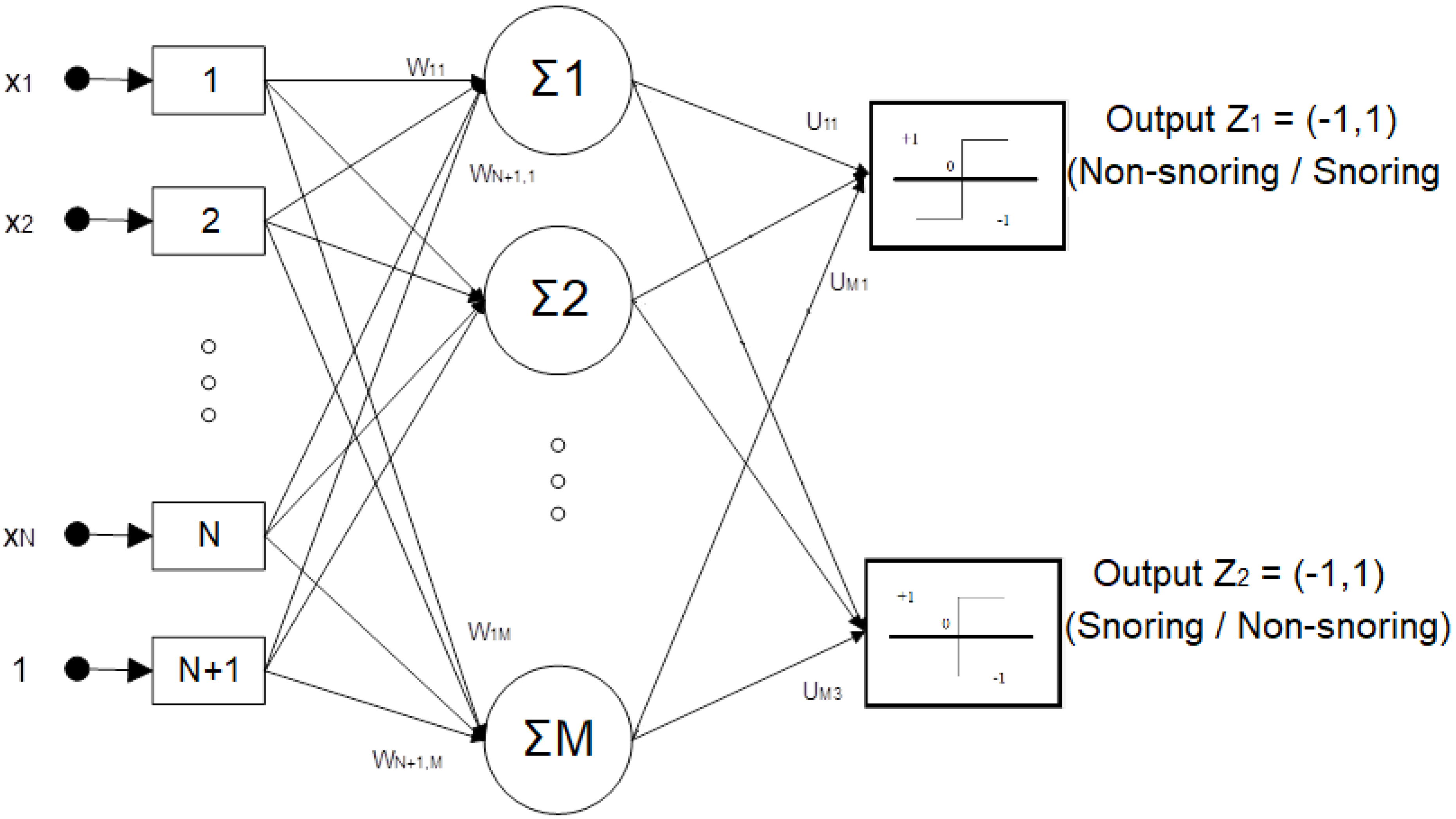

2. Architecture of the Proposed Classification Tool and Real-Time Snore Detector

3. Numerical Results

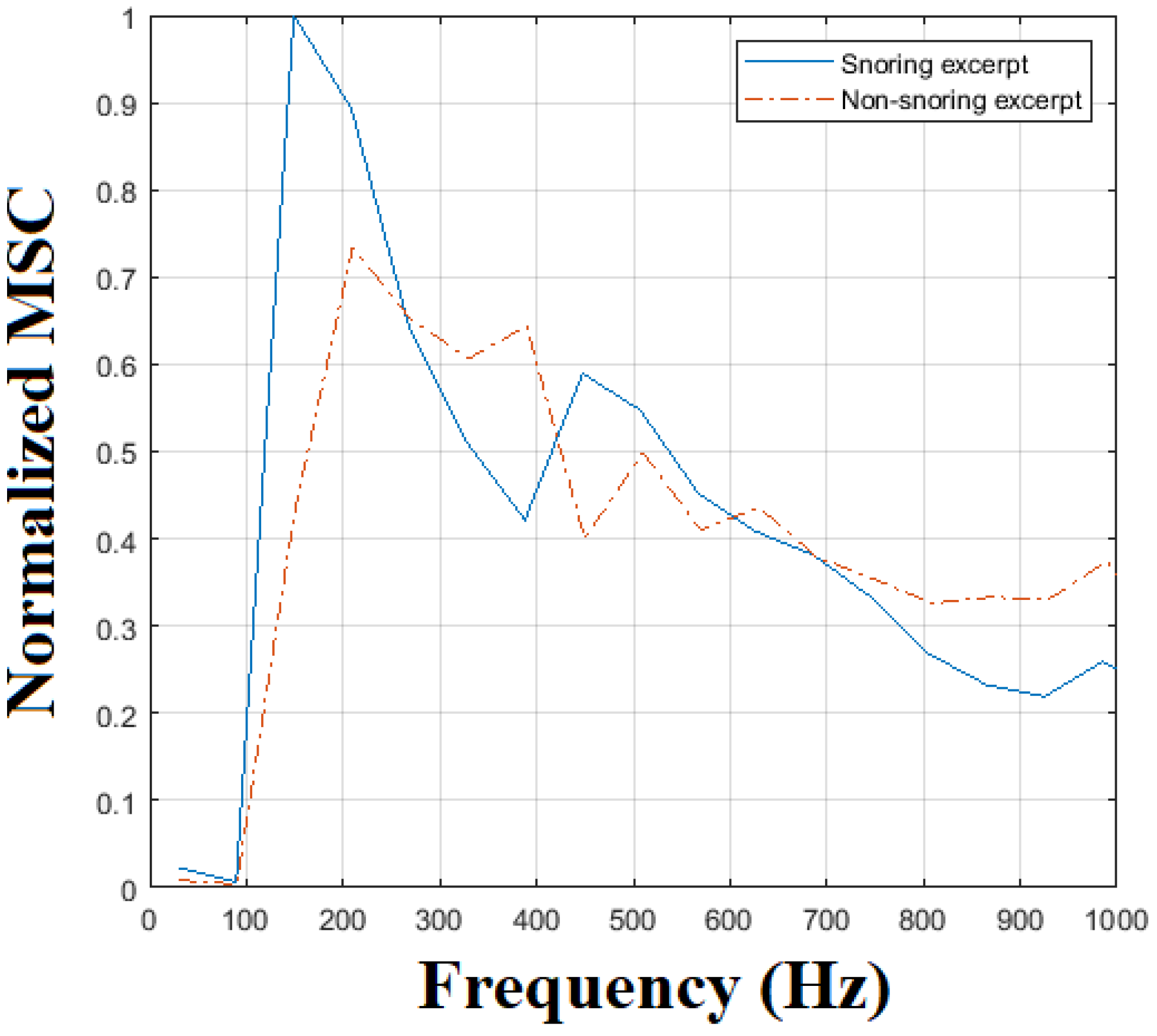

3.1. Feature Selection and Performance of the Proposed Neural Network Snore Detection Tool

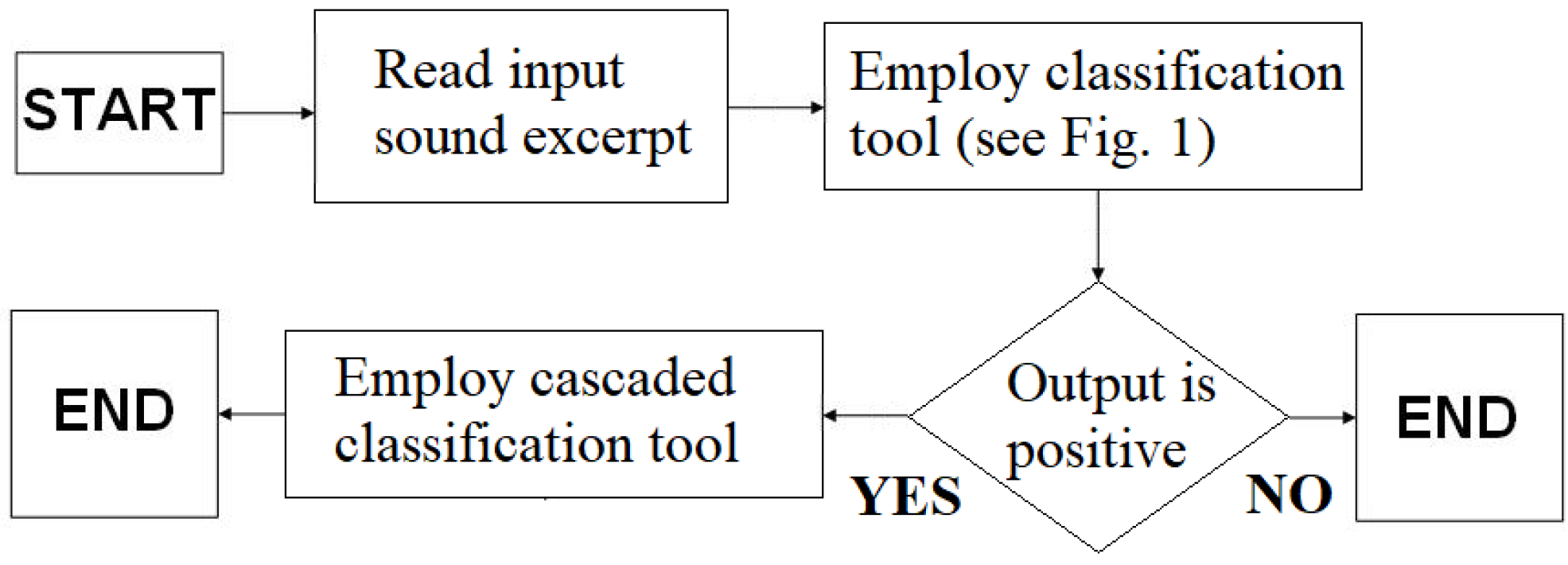

3.2. Application of the Real-Time Snore Detector

4. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Scalar Features | MFCC | Normalized MSC |
|---|---|---|
| 93.4% | 95.7% | 97.7% |

| | Scalar Features | MFCC | Normalized MSC |
|---|---|---|---|
| Scalar Features | - | 96.0% | 98.6% |
| MFCC | - | - | 98.7% |
| Normalized MSC | - | - | - |
| All feature classes | 97.3% | | |
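The two tables above can be read together: the first lists each feature class on its own, the second lists pairwise combinations (upper triangle) and all three classes combined. Treating the percentages as classification accuracy, which is an assumption because the table captions did not survive extraction, the short script below ranks every configuration. It makes one observation explicit: the best pair (MFCC with normalized MSC, 98.7%) scores higher than using all feature classes together (97.3%). The names `RESULTS` and `ranked` are illustrative only.

```python
# Percentages transcribed from the two feature-class tables above.
# Interpreting them as classification accuracy is an assumption
# (the original table captions were lost in extraction).
RESULTS = {
    ("Scalar features",): 93.4,
    ("MFCC",): 95.7,
    ("Normalized MSC",): 97.7,
    ("Scalar features", "MFCC"): 96.0,
    ("Scalar features", "Normalized MSC"): 98.6,
    ("MFCC", "Normalized MSC"): 98.7,
    ("Scalar features", "MFCC", "Normalized MSC"): 97.3,
}


def ranked(results):
    """Return (feature classes, accuracy %, error %) tuples, best first."""
    rows = [(combo, acc, round(100.0 - acc, 1)) for combo, acc in results.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for combo, acc, err in ranked(RESULTS):
        print(f"{' + '.join(combo):<45} {acc:5.1f}%  (error {err:.1f}%)")
```

Under this reading, moving from the best single class (normalized MSC, 2.3% error) to the best pair (1.3% error) removes roughly 43% of the remaining errors, while adding the scalar features on top of that pair does not help.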

| Number of Sound Excerpts Classified as Snoring | Subset of Sound Excerpts That Were Manually Scored by Experts | True Positive Ratio within Subset of Sound Excerpts (Number of True Positive Excerpts) |
|---|---|---|
| 12,090 (total duration equal to 20 h, 9 min) | 2500 (100 excerpts from each whole-night sound recording) | ~80% (2032 excerpts out of 2500) |
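The figures in the real-time detection table can be cross-checked with simple arithmetic. The sketch below derives quantities the table implies but does not state: the mean duration of an excerpt classified as snoring, the number of whole-night recordings implied by 100 scored excerpts each, and the exact true-positive ratio (81.3%, which the table rounds to ~80%). Because every excerpt in the scored subset had already been classified as snoring, that ratio corresponds to the detector's precision on the subset. A mean of exactly 6 s per excerpt is consistent with fixed-length excerpts, but the table does not confirm this; the variable names are hypothetical.

```python
# Values transcribed from the real-time detection table above.
EXCERPTS_CLASSIFIED_AS_SNORING = 12_090
TOTAL_DURATION_S = 20 * 3600 + 9 * 60   # 20 h 9 min expressed in seconds
SCORED_SUBSET = 2_500                   # excerpts manually scored by experts
SCORED_PER_RECORDING = 100              # scored excerpts per whole-night recording
TRUE_POSITIVES = 2_032

# Mean duration of one excerpt classified as snoring (seconds).
mean_excerpt_s = TOTAL_DURATION_S / EXCERPTS_CLASSIFIED_AS_SNORING
# Number of whole-night recordings implied by 100 scored excerpts each.
recordings = SCORED_SUBSET // SCORED_PER_RECORDING
# Exact true-positive ratio (precision) within the scored subset.
tp_ratio = TRUE_POSITIVES / SCORED_SUBSET
# Every scored excerpt was classified as snoring, so the rest are false positives.
false_positives = SCORED_SUBSET - TRUE_POSITIVES

if __name__ == "__main__":
    print(f"mean excerpt duration: {mean_excerpt_s:.1f} s")
    print(f"recordings: {recordings}")
    print(f"true-positive ratio: {tp_ratio:.2%}")
    print(f"false positives in subset: {false_positives}")
```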


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mitilineos, S.A.; Tatlas, N.-A.; Korompili, G.; Kokkalas, L.; Potirakis, S.M. A Real-Time Snore Detector Using Neural Networks and Selected Sound Features. Eng. Proc. 2021, 11, 8. https://doi.org/10.3390/ASEC2021-11176
