Smart Cloud Architectures: The Combination of Machine Learning and Cloud Computing †

Abstract

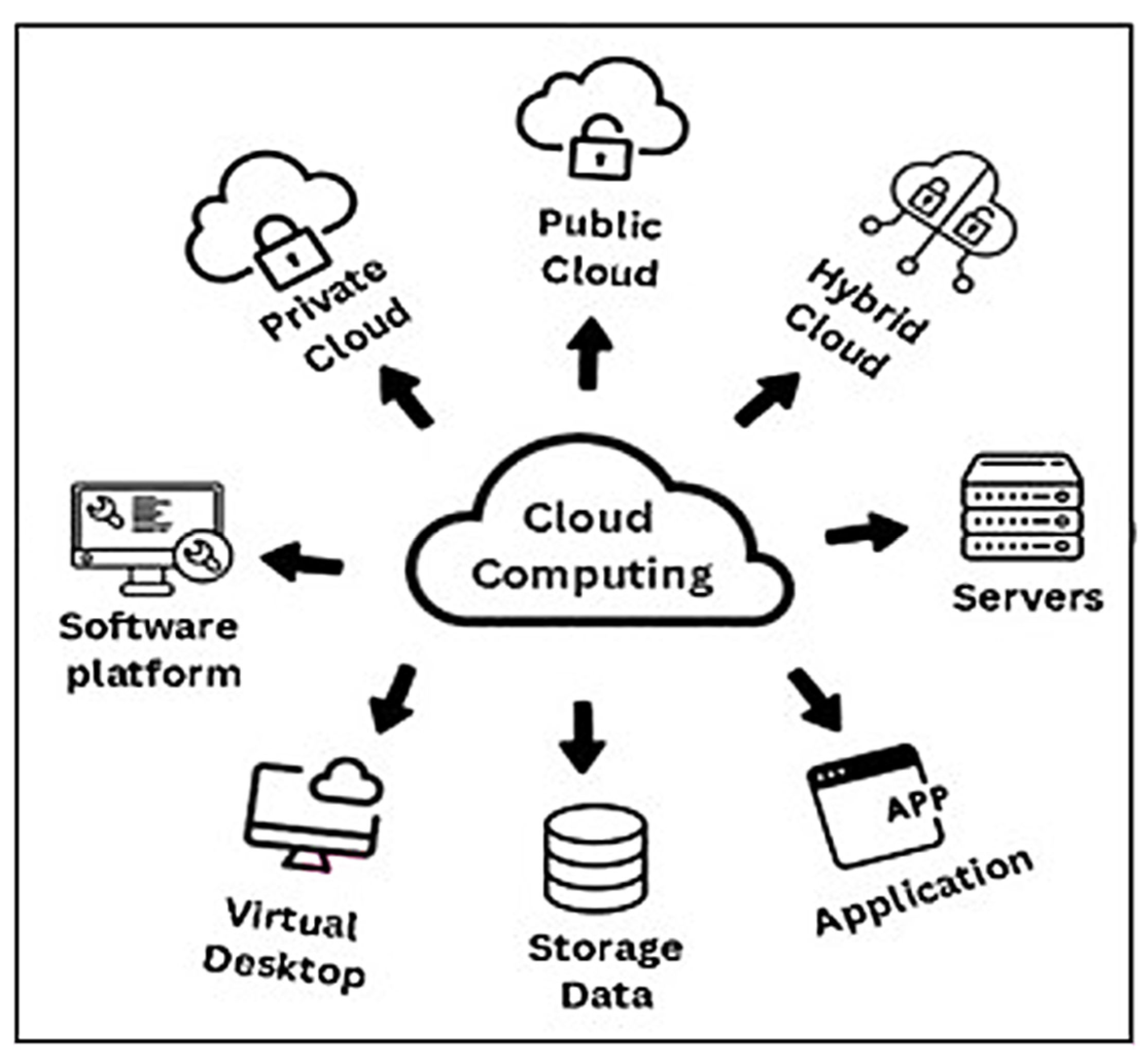

1. Introduction

2. Literature Review

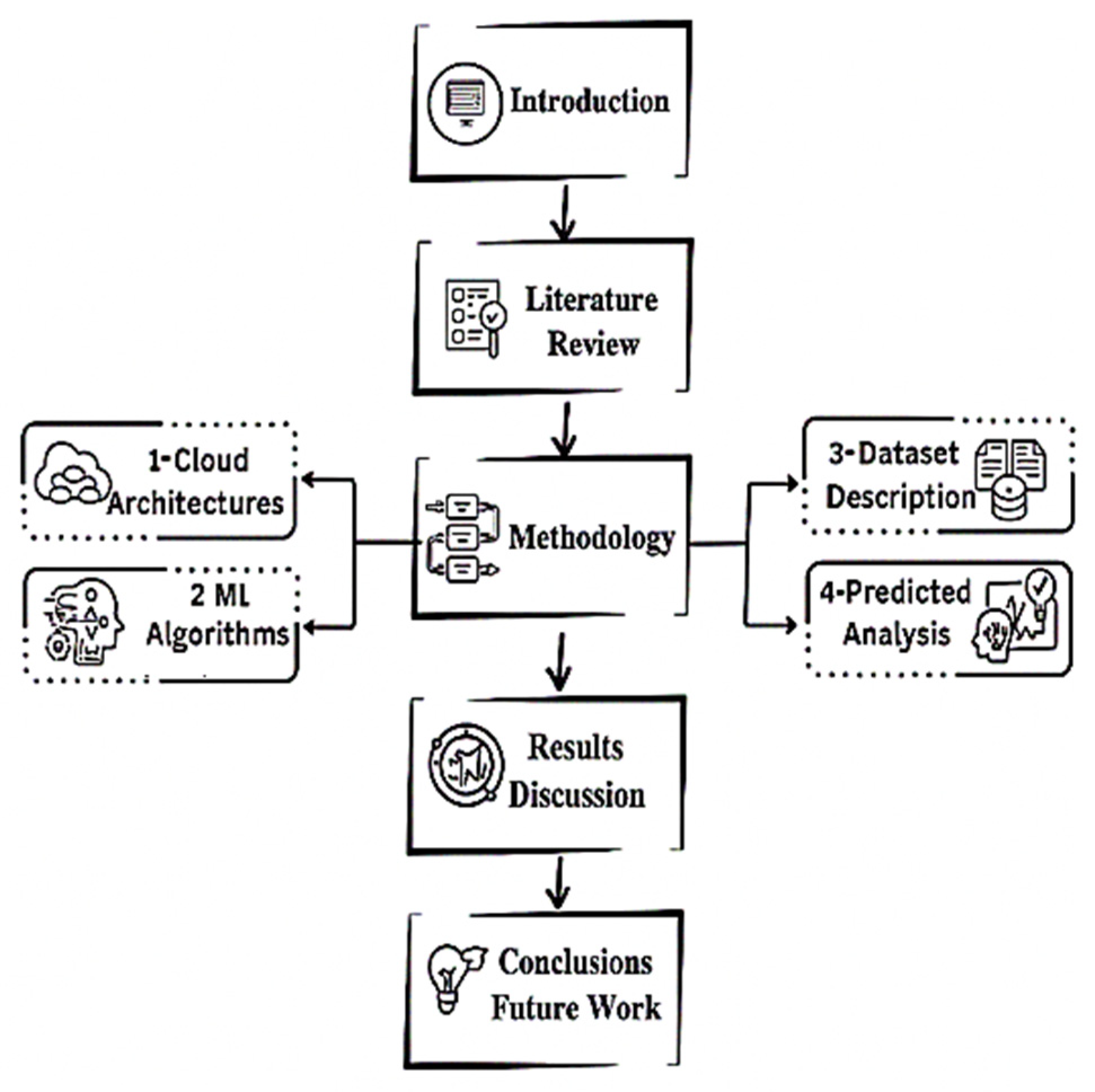

3. Methodology

3.1. ML Enhanced Cloud Architectures

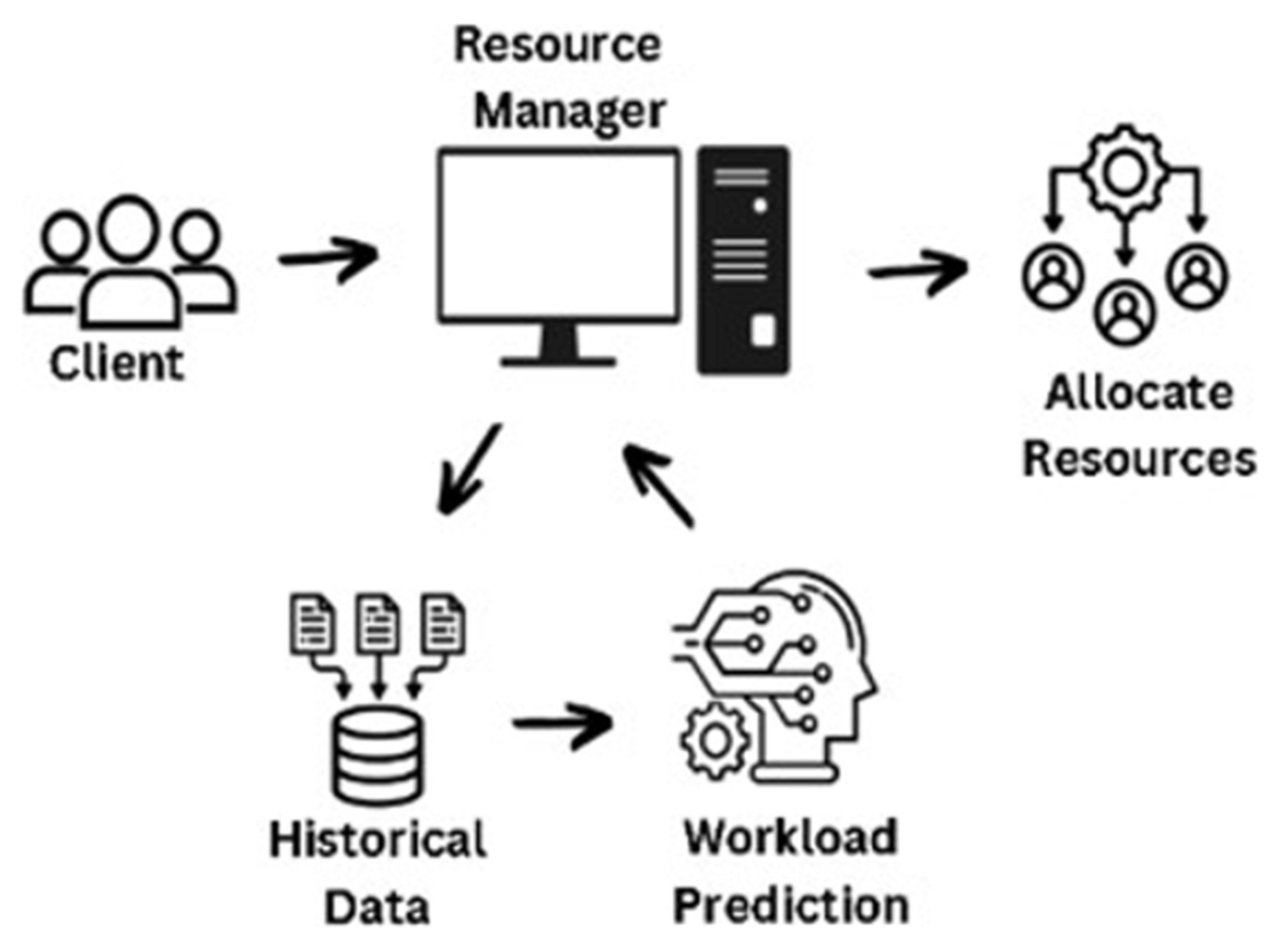

3.1.1. Workload Architecture

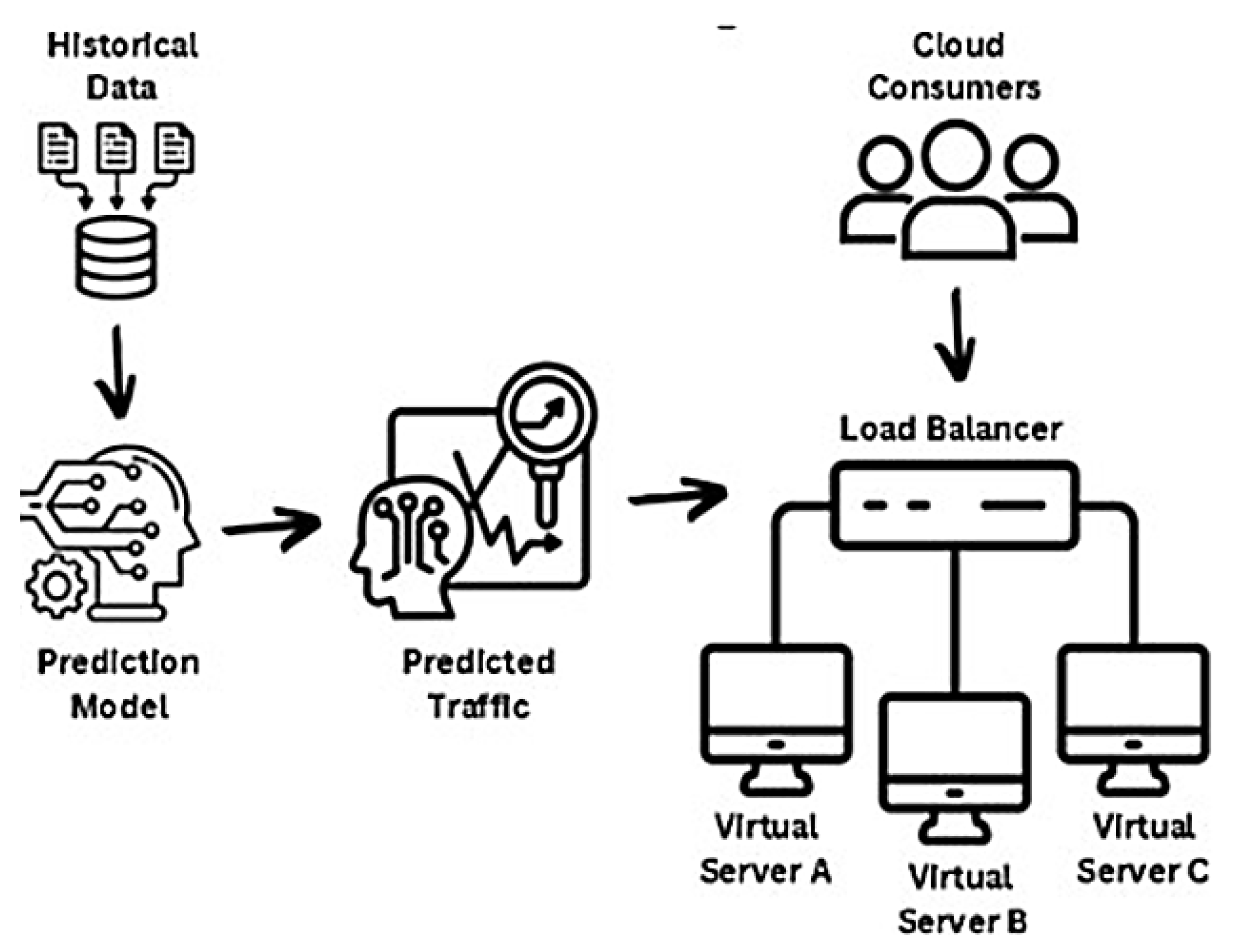

3.1.2. Service Load Balancing Architecture

3.2. Machine Learning Classifiers

3.2.1. Linear Regression

3.2.2. Gradient Boosted Tree

3.2.3. K-Nearest Neighbor

3.2.4. Decision Tree

3.2.5. Generalized Linear Model

3.2.6. Support Vector Machine

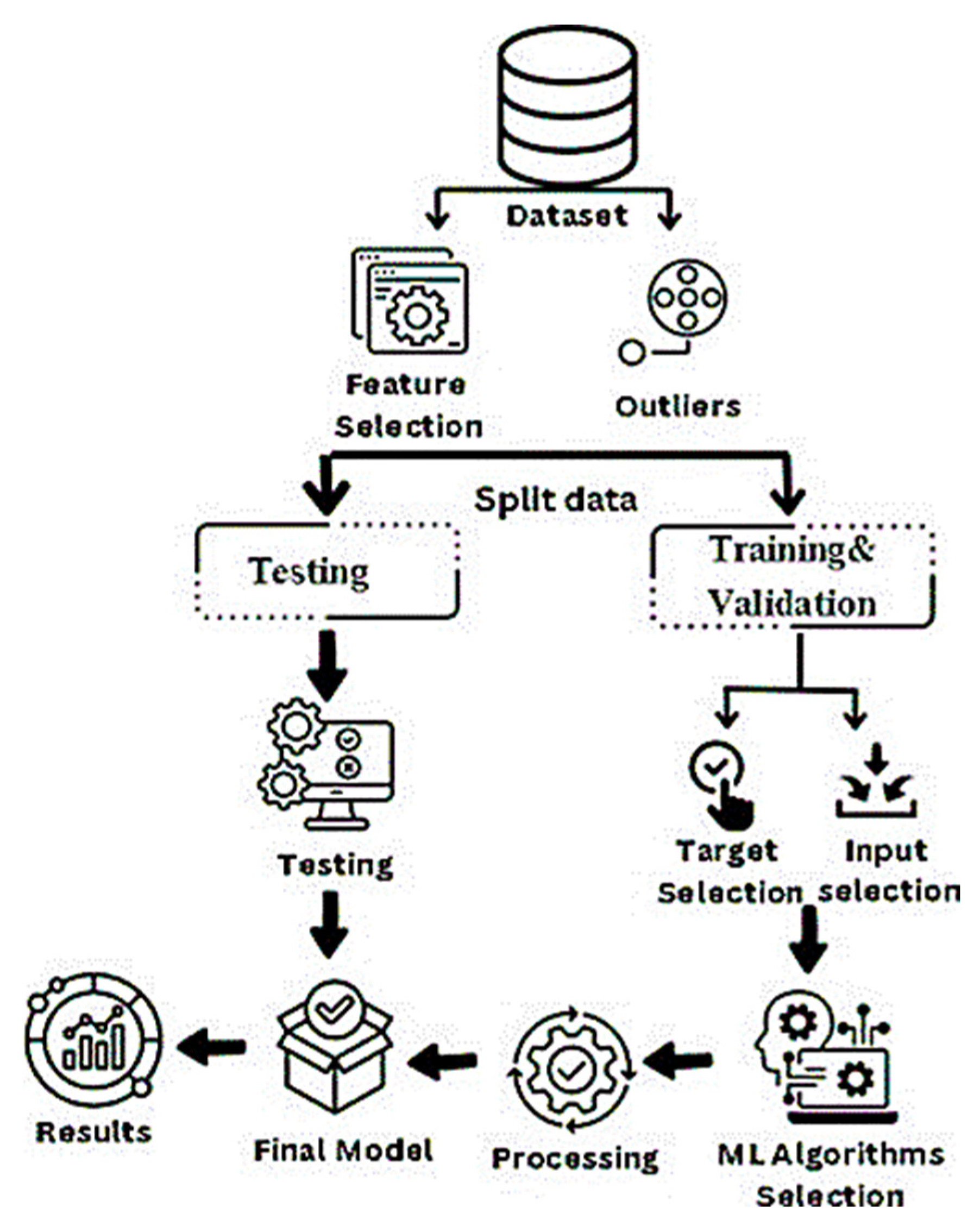

3.3. Machine Learning Framework

3.3.1. Feature Selection

3.3.2. Outlier

3.3.3. Split Data

3.4. Tool

3.5. Dataset Description

3.6. Predicted Analysis

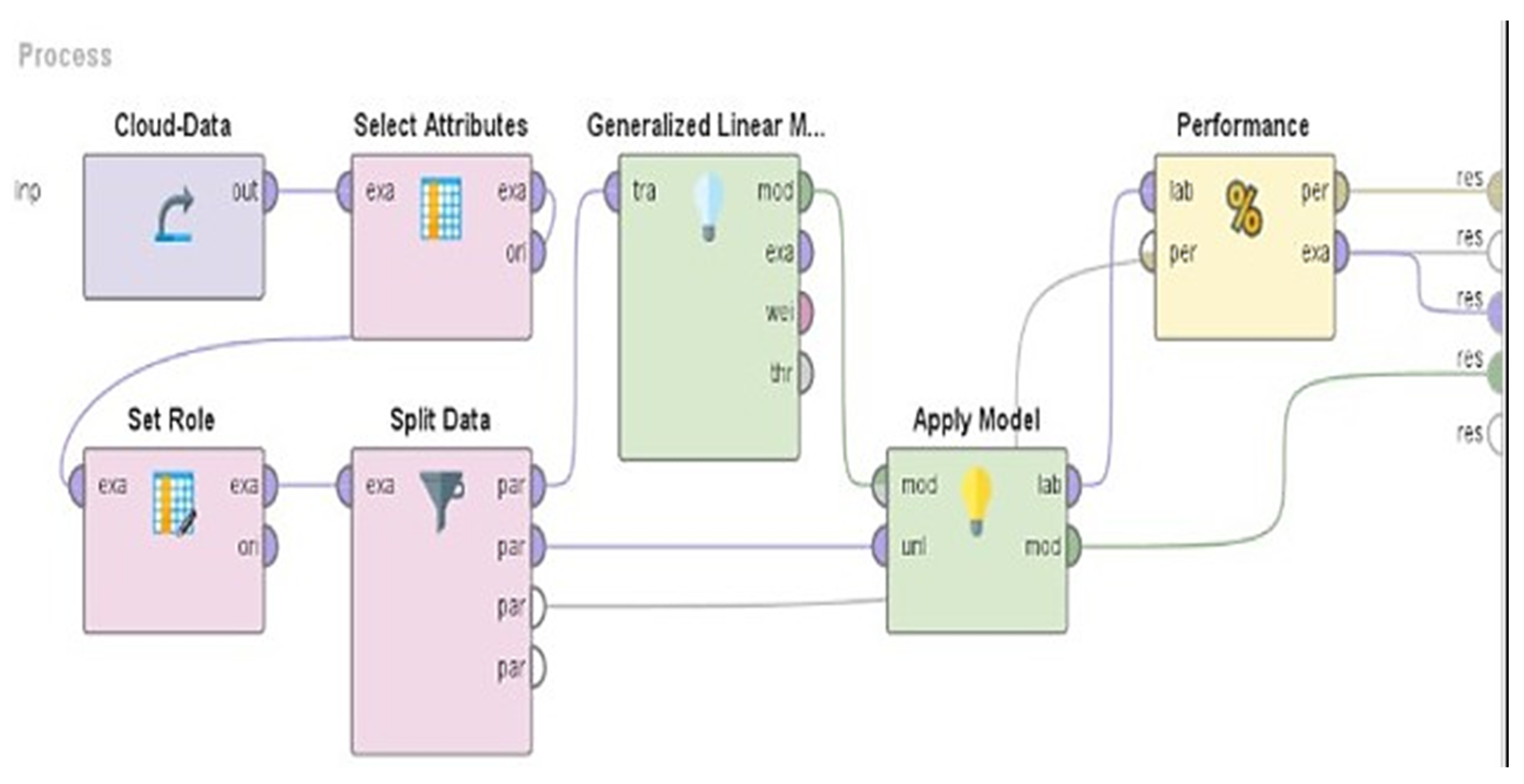

3.7. Predictive Modeling Process

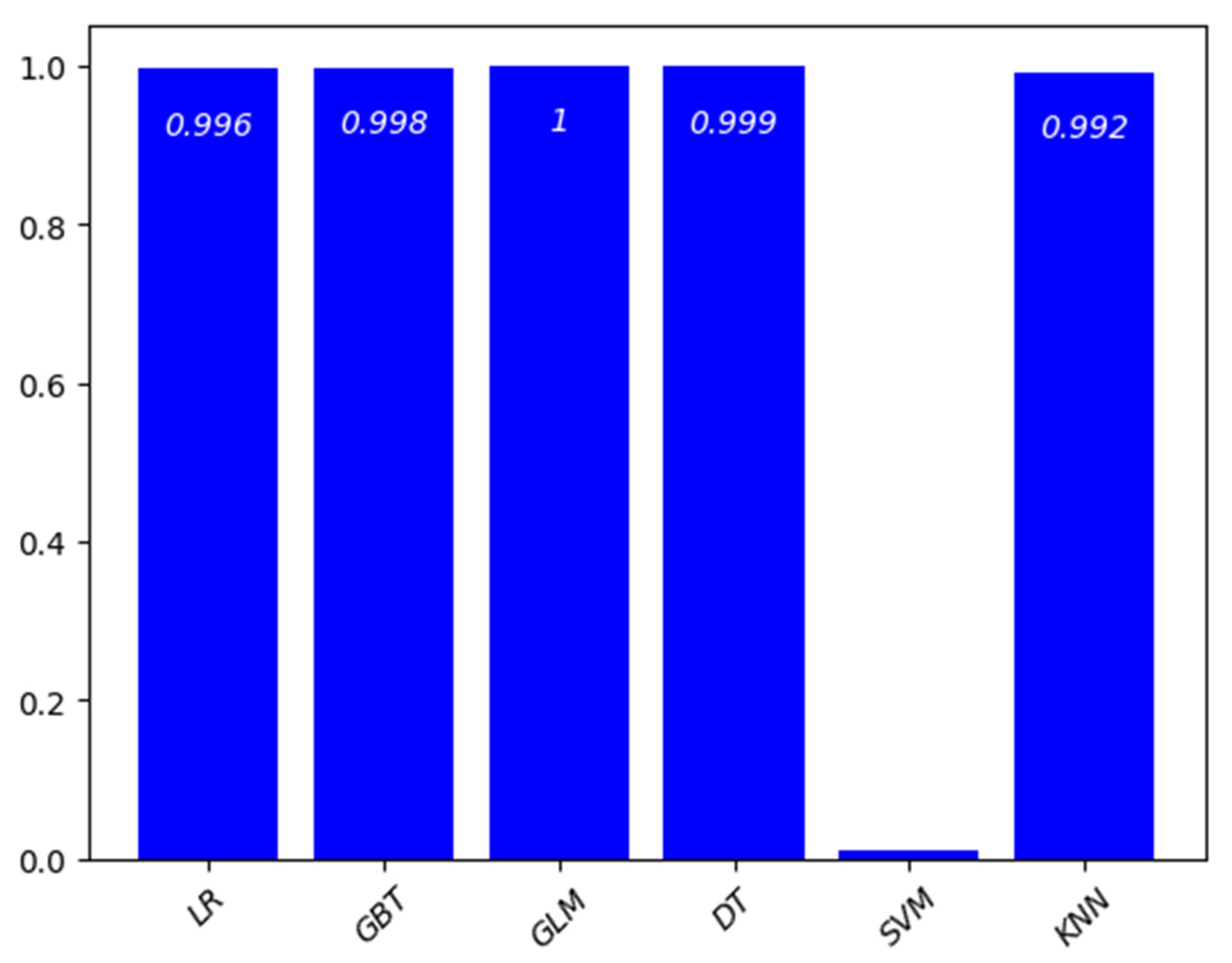

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hamzaoui, I.; Duthil, B.; Courboulay, V.; Medromi, H. A Survey on the Current Challenges of Energy-Efficient Cloud Resources Management. SN Comput. Sci. 2020, 1, 2.

- Zhang, R. The Impacts of Cloud Computing Architecture on Cloud Service Performance. J. Comput. Inf. Syst. 2020, 60, 166–174.

- Hussain, F.; Hassan, S.A.; Hussain, R.; Hossain, E. Machine Learning for Resource Management in Cellular and IoT Networks: Potentials, Current Solutions, and Open Challenges. IEEE Commun. Surv. Tutor. 2020, 22, 1251–1275.

- Yadav, M.P.; Rohit; Yadav, D.K. Resource Provisioning Through Machine Learning in Cloud Services. Arab. J. Sci. Eng. 2022, 47, 1483–1505.

- Kumar, P.; Kumar, R. Issues and Challenges of Load Balancing Techniques in Cloud Computing: A Survey. ACM Comput. Surv. 2019, 51, 6.

- Dritsas, E.; Trigka, M. A Survey on the Applications of Cloud Computing in the Industrial Internet of Things. Big Data Cogn. Comput. 2025, 9, 44.

- Goodarzy, S.; Nazari, M.; Han, R.; Keller, E.; Rozner, E. Resource Management in Cloud Computing Using Machine Learning: A Survey. In Proceedings of the 19th IEEE International Conference on Machine Learning and Applications (ICMLA 2020), Miami, FL, USA, 14–17 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 811–816.

- Jeyaraman, J.; Bayani, S.V.; Malaiyappan, J.N.A. Optimizing Resource Allocation in Cloud Computing Using Machine Learning. Eur. J. Technol. 2024, 8, 12–22.

- Wang, Y.; Bao, Q.; Wang, J.; Su, G.; Xu, X. Cloud Computing for Large-Scale Resource Computation and Storage in Machine Learning. J. Theory Pract. Eng. Sci. 2024, 4, 163–171.

- Wang, Y.; Zhu, M.; Yuan, J.; Wang, G.; Zhou, H. The Intelligent Prediction and Assessment of Financial Information Risk in the Cloud Computing Model. arXiv 2024, arXiv:2404.09322.

- Shafiq, D.A.; Jhanjhi, N.Z.; Abdullah, A. Load Balancing Techniques in Cloud Computing Environment: A Review. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 3910–3933.

- Patel, J.; Jindal, V.; Yen, I.L.; Bastani, F.; Xu, J.; Garraghan, P. Workload Estimation for Improving Resource Management Decisions in the Cloud. In Proceedings of the 12th IEEE International Symposium on Autonomous Decentralized Systems (ISADS 2015), Taichung, Taiwan, 25–27 March 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 25–32.

- Moreno-Vozmediano, R.; Montero, R.S.; Huedo, E.; Llorente, I.M. Efficient Resource Provisioning for Elastic Cloud Services Based on Machine Learning Techniques. J. Cloud Comput. 2019, 8, 1.

- Gill, S.S.; Garraghan, P.; Stankovski, V.; Casale, G.; Thulasiram, R.K.; Ghosh, S.K.; Ramamohanarao, K.; Buyya, R. Holistic Resource Management for Sustainable and Reliable Cloud Computing: An Innovative Solution to Global Challenge. J. Syst. Softw. 2019, 157, 110395.

- Bi, J.; Li, S.; Yuan, H.; Zhou, M.C. Integrated Deep Learning Method for Workload and Resource Prediction in Cloud Systems. Neurocomputing 2021, 424, 35–48.

- Mijuskovic, A.; Chiumento, A.; Bemthuis, R.; Aldea, A.; Havinga, P. Resource Management Techniques for Cloud/Fog and Edge Computing: An Evaluation Framework and Classification. Sensors 2021, 21, 1832.

- García, Á.L.; De Lucas, J.M.; Antonacci, M.; Zu Castell, W.; David, M.; Hardt, M.; Iglesias, L.L.; Moltó, G.; Plociennik, M.; Tran, V.; et al. A Cloud-Based Framework for Machine Learning Workloads and Applications. IEEE Access 2020, 8, 18681–18692.

- Ikram, H.; Fiza, I.; Ashraf, H.; Ray, S.K.; Ashfaq, F. Efficient Cluster-Based Routing Protocol. In Proceedings of the 3rd International Conference on Mathematical Modeling and Computational Science: ICMMC, Chandigarh, India, 11–12 August 2023.

- Althati, C. Machine Learning Solutions for Data Migration to Cloud: Addressing Complexity, Security, and Performance. Available online: https://sydneyacademics.com/ (accessed on 11 November 2024).

- Prasad, V.K.; Bhavsar, M.D. Monitoring and Prediction of SLA for IoT Based Cloud. Scalable Comput. 2020, 21, 349–357.

- Tatineni, S.; Chakilam, N.V. Integrating Artificial Intelligence with DevOps for Intelligent Infrastructure Management: Optimizing Resource Allocation and Performance in Cloud-Native Applications. J. Bioinform. Artif. Intell. 2024, 4, 109–142.

- Botvich, A. Machine Learning for Resource Provisioning in Cloud Environments. In Proceedings of the 2020 IEEE International Conference on Cloud Engineering (ICEE), Sydney, Australia, 10 January 2020.

- Moghaddam, S.K.; Buyya, R.; Ramamohanarao, K. Performance-Aware Management of Cloud Resources: A Taxonomy and Future Directions. ACM Comput. Surv. 2019, 52, 4.

- Hong, C.H.; Varghese, B. Resource Management in Fog/Edge Computing: A Survey on Architectures, Infrastructure, and Algorithms. ACM Comput. Surv. 2019, 52, 5.

- Lim, M.; Abdullah, A.; Jhanjhi, N.; Khurram Khan, M.; Supramaniam, M. Link Prediction in Time-Evolving Criminal Network with Deep Reinforcement Learning Technique. IEEE Access 2019, 7, 184797–184807.

- Diwaker, C.; Tomar, P.; Solanki, A.; Nayyar, A.; Jhanjhi, N.; Abdullah, A.; Supramaniam, M. A New Model for Predicting Component-Based Software Reliability Using Soft Computing. IEEE Access 2019, 7, 147191–147203.

- Airehrour, D.; Gutierrez, J.; Kumar Ray, S. GradeTrust: A Secure Trust Based Routing Protocol for MANETs. In Proceedings of the 2015 25th International Telecommunication Networks and Applications Conference (ITNAC), Sydney, Australia, 18–20 November 2015; pp. 65–70.

| Feature | Description |
|---|---|
| Initial resource pool | The set of computing resources available for cloud services before workload execution begins. |
| Workload complexity | How demanding or complicated the task being processed is. |
| Initial utilization | The portion of the available resource pool used for workload execution before optimization. |
| Initial cost per unit | The cost of the resources before execution. |
| Initial performance score | The performance score of the workload before optimization. |
| Optimized resource allocation | Dynamic reallocation of resources based on the actual demand of the task. |
| Optimized utilization | The percentage of resources used after the optimization process. |
| Optimized cost per unit | The cost of the resources used after optimization. |

| Algorithm | R² | RMSE | MAE |
|---|---|---|---|
| LR | 0.996 | 0.488 | 0.295 |
| GBT | 0.998 | 0.523 | 0.299 |
| GLM | 1.000 | 0.179 | 0.086 |
| DT | 0.999 | 0.132 | 0.080 |
| SVM | 0.000 | 0.542 | 0.252 |
| KNN | 0.992 | 0.5671 | 0.495 |
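The three columns in the table above are standard regression error measures. The following is a minimal sketch of how they are commonly computed, assuming that R² denotes the coefficient of determination (some tools report the squared correlation instead, which can give different values). The function name `regression_metrics` and the sample values are hypothetical and are not taken from the paper's dataset or tooling.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return (r2, rmse, mae) for two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]

    # Mean absolute error: average magnitude of the residuals.
    mae = sum(abs(r) for r in residuals) / n

    # Root mean squared error: square root of the average squared residual.
    ss_res = sum(r * r for r in residuals)
    rmse = math.sqrt(ss_res / n)

    # Coefficient of determination: 1 - SS_res / SS_tot.
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    # R^2 is undefined when the targets are constant; report NaN in that case.
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return r2, rmse, mae


# Hypothetical values for illustration only.
actual = [10.0, 12.0, 14.0, 16.0]
predicted = [11.0, 12.0, 13.0, 16.0]
r2, rmse, mae = regression_metrics(actual, predicted)
print(f"R2={r2:.3f} RMSE={rmse:.3f} MAE={mae:.3f}")
```

Higher R² and lower RMSE and MAE indicate a closer fit. RMSE penalizes large individual errors more heavily than MAE, so the two can rank models differently.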

| Author | Year | Technique | Classifier | Accuracy |
|---|---|---|---|---|
| IKHLASSE [18] | 2020 | CGPANN | Neural Network | 97.81% |
| FATIMA [19] | 2019 | NOMA | SVM | 98% |
| THANG [20] | 2019 | QoS | Auto-scaling | 91% |
| GAITH [21] | 2020 | RNN-LSTM | DL | 96% |
| JIECHAO [22] | 2020 | T-D | Clustering-based | 95% |
| SUKHPAL [23] | 2019 | HRM | CO | 95% |
| UMER [24] | 2020 | DDoS | SVM | 99.7% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Asghar, A.; Rehman, A.U.; Ayaz, R.; Suryana, A. Smart Cloud Architectures: The Combination of Machine Learning and Cloud Computing. Eng. Proc. 2025, 107, 74. https://doi.org/10.3390/engproc2025107074
