Combating the Problems of the Necessity of Continuous Learning in Medical Datasets of Type 2 Diabetes

Abstract

1. Introduction

2. Materials and Methods

2.1. Models

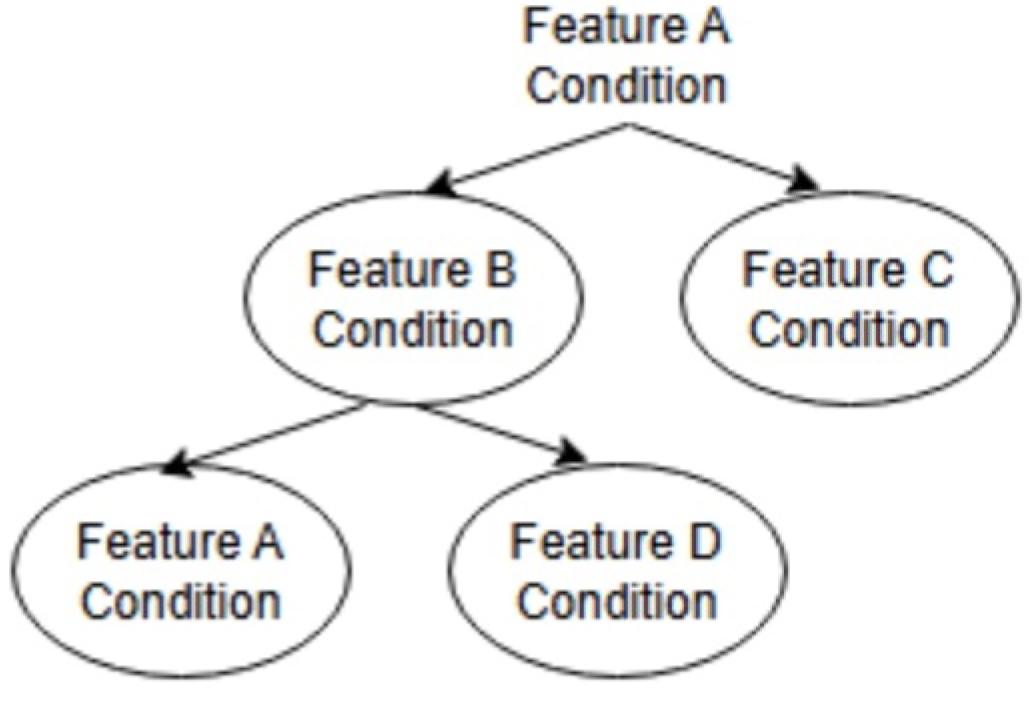

2.1.1. Multi-Classification

2.1.2. Binary Classification

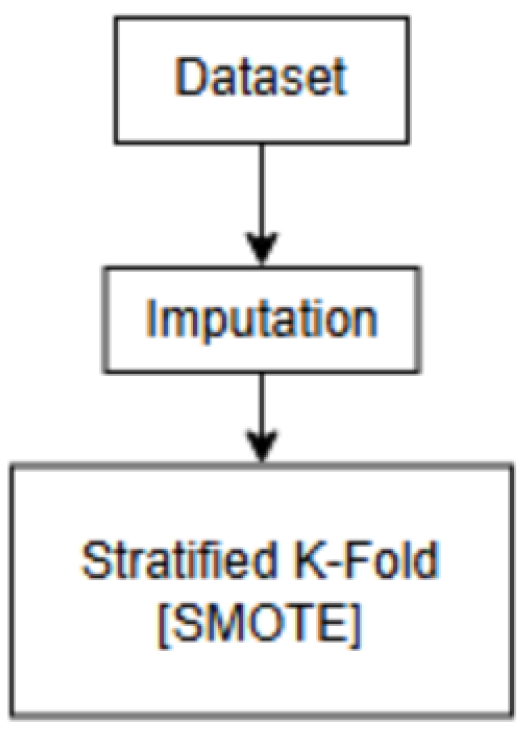

2.2. Experiment

Experimental Data

3. Results

3.1. Multi-Classification

3.2. Binary Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Accuracy |
|---|---|
| XGBoost | 25.4% |
| Support Vector Machine | 14.8% |
| Multi-layer Perceptron | 18.5% |
| Random Forest | 23.3% |
| Logistic Regression | 16.1% |
| KNN | 14.7% |

| VS | SU | DPP | INS | GI | GR | TZD | SGLT | GLP |
|---|---|---|---|---|---|---|---|---|
| BG | 63% | 61% | 65% | 64% | 68% | 78% | 96% | 97% |
| SU | | 64% | 63% | 64% | 67% | 80% | 96% | 97% |
| DPP | | | 63% | 60% | 68% | 77% | 95% | 97% |
| INS | | | | 59% | 63% | 72% | 94% | 96% |
| GI | | | | | 59% | 64% | 92% | 94% |
| GR | | | | | | 62% | 91% | 94% |
| TZD | | | | | | | 84% | 89% |
| SGLT | | | | | | | | 72% |
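The pairwise table reports one accuracy per pair of drug classes. This suggests a one-vs-one setup in which each binary classifier only sees patients from the two classes being compared. The sketch below illustrates that reading under loudly stated assumptions. The helper names `nearest_centroid_accuracy` and `pairwise_accuracy` are hypothetical, as are the single numeric feature, the 70/30 split, and the synthetic data. None of them are the models, features, or patient data used in the study.

```python
import random
from itertools import combinations

# Drug-class abbreviations, in the order used by the table header.
CLASSES = ["BG", "SU", "DPP", "INS", "GI", "GR", "TZD", "SGLT", "GLP"]


def nearest_centroid_accuracy(train, test):
    """Hypothetical placeholder binary classifier, not the paper's model.

    Each sample is (feature, label) with one numeric feature. Predict the
    label whose training-set mean is closest; return accuracy on `test`.
    """
    means = {}
    for label in {y for _, y in train}:
        xs = [x for x, y in train if y == label]
        means[label] = sum(xs) / len(xs)
    correct = sum(
        1 for x, y in test if min(means, key=lambda c: abs(x - means[c])) == y
    )
    return correct / len(test)


def pairwise_accuracy(data, classes, test_fraction=0.3, seed=0):
    """Evaluate one binary classifier per unordered pair of classes.

    Only samples labelled with the two classes of a pair are used, so each
    cell answers "how well can class a be told apart from class b?".
    Keys are (a, b) with a listed before b in `classes` (upper triangle).
    """
    rng = random.Random(seed)
    results = {}
    for a, b in combinations(classes, 2):
        subset = [(x, y) for x, y in data if y in (a, b)]
        rng.shuffle(subset)
        cut = int(len(subset) * (1 - test_fraction))
        results[(a, b)] = nearest_centroid_accuracy(subset[:cut], subset[cut:])
    return results


# Hypothetical synthetic data: class i has one feature drawn around mean i.
data_rng = random.Random(42)
data = [(data_rng.gauss(i, 1.0), c) for i, c in enumerate(CLASSES) for _ in range(100)]
acc = pairwise_accuracy(data, CLASSES)

# Print as an upper-triangular matrix: each row class vs. the later classes.
for i, row in enumerate(CLASSES[:-1]):
    cells = [f"{acc[(row, col)]:.0%}" for col in CLASSES[i + 1:]]
    print(row.ljust(5), " ".join(cells))
```

Nine classes give 9 × 8 / 2 = 36 pairwise classifiers, one per filled cell. Only two parts are meant to carry over: iterating with `itertools.combinations` and restricting each cell to two classes. In practice, the placeholder classifier and split would be replaced by a real model and evaluation scheme.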

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Price, J.; Yamazaki, T.; Fujihara, K.; Sone, H. Combating the Problems of the Necessity of Continuous Learning in Medical Datasets of Type 2 Diabetes. Eng. Proc. 2025, 107, 69. https://doi.org/10.3390/engproc2025107069

Price J, Yamazaki T, Fujihara K, Sone H. Combating the Problems of the Necessity of Continuous Learning in Medical Datasets of Type 2 Diabetes. Engineering Proceedings. 2025; 107(1):69. https://doi.org/10.3390/engproc2025107069

Chicago/Turabian Style: Price, Jenny, Tatsuya Yamazaki, Kazuya Fujihara, and Hirohito Sone. 2025. "Combating the Problems of the Necessity of Continuous Learning in Medical Datasets of Type 2 Diabetes" Engineering Proceedings 107, no. 1: 69. https://doi.org/10.3390/engproc2025107069

APA Style: Price, J., Yamazaki, T., Fujihara, K., & Sone, H. (2025). Combating the Problems of the Necessity of Continuous Learning in Medical Datasets of Type 2 Diabetes. Engineering Proceedings, 107(1), 69. https://doi.org/10.3390/engproc2025107069