1. Introduction

Because of its detrimental effects on many facets of society, the promotion of online gambling on digital platforms, particularly YouTube, has become a growing concern. Technological developments have made online gambling more accessible, which has increased user engagement and raised the risk of addiction and financial losses [1,2]. Research shows that internet gambling sites significantly affect mental health, especially among adolescents [1]. Even though internet gambling is illegal in Indonesia, a number of obstacles must still be overcome before anti-gambling laws can be effectively enforced [2]. Furthermore, the legal system is still developing ways to handle crimes involving internet gambling and their effects on society [3]. One study found that 54.2% of users' educational needs about gambling risks originate from media content addressing this topic, demonstrating the critical role the media plays in educating the public about the risks associated with online gambling [4].

The numerous detrimental effects of online gambling include addiction, strained family relationships, reduced productivity at work, and an increased risk of criminal activity [5,6]. The main reasons people gamble online are the desire for quick financial gains, convenient access, and a lack of awareness of the risks involved. Furthermore, according to [6,7], internet gambling is classified as a type of cybercrime that violates both legal and religious norms. Public awareness campaigns, law enforcement capacity building, and legal education are among the measures taken to regulate this phenomenon [5,6,8]. Solving this issue effectively requires cooperation between communities, corporations, and the government [6].

One of the biggest obstacles to identifying online gambling promotions is that promoters use colloquialisms, slang, and cryptic wording to evade automatic detection. Recent research shows that the TF–IDF algorithm and Random Forest work well together to detect gambling-related content on Twitter [9]. However, text-based detection algorithms still struggle with the non-standard language, acronyms, and symbols used in online gambling advertisements [10]. Another study, which examined the structure of SMS-based online gambling ads, found that promoters frequently use capital letters, quotation marks, and abbreviations to get past automatic filtering systems [11]. Furthermore, a comparison of gambling promotion laws in other countries shows that, even with Indonesia's stringent laws against online gambling, many barriers to their implementation remain [2].
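For readers less familiar with the TF–IDF weighting mentioned above, the following minimal pure-Python sketch shows how TF–IDF vectors can be computed for short comments. It is illustrative only: the toy comments are hypothetical, the smoothed IDF formula is one common variant (an assumption, not necessarily the one used in [9]), and the Random Forest stage is omitted; in practice a library such as scikit-learn would supply both components.

```python
import math
from collections import Counter


def tfidf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Return one {term: weight} dict per tokenized document.

    Term frequency is the raw count divided by document length; the inverse
    document frequency uses the smoothed form idf(t) = ln((1 + N) / (1 + df(t))) + 1,
    so a term that appears in every document still keeps a non-zero weight.
    """
    n_docs = len(docs)
    # Document frequency: number of documents containing each term at least once.
    df = Counter(term for doc in docs for term in set(doc))
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1 for t, d in df.items()}

    vectors = []
    for doc in docs:
        counts = Counter(doc)
        length = len(doc) or 1  # avoid division by zero on empty comments
        vectors.append({t: (c / length) * idf[t] for t, c in counts.items()})
    return vectors


# Hypothetical toy comments, already lowercased and split on whitespace.
comments = [
    "main di situs ini pasti menang".split(),
    "lagunya enak banget".split(),
    "daftar sekarang pasti menang besar".split(),
]
for vec in tfidf(comments):
    top = max(vec, key=vec.get)
    print(top, round(vec[top], 3))
```

Terms shared by many comments (here "pasti" and "menang") receive lower weights than terms that are distinctive to one comment, which is what lets a downstream classifier focus on discriminative vocabulary.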

Natural language processing (NLP)-based artificial intelligence models such as IndoBERT offer a promising way to overcome these obstacles. The Indonesian-language model IndoBERT has shown exceptional performance in a number of NLP tasks [12]. Compared with other BERT-based models such as IndoRoBERTa and mBERT, it has demonstrated superior accuracy in text categorization, sentiment analysis, and question answering [13]. IndoBERT has also proven effective in processing domain-specific language, although there is still room for improvement through more sophisticated information retrieval approaches [14]. Linguistic diversity, with more than 700 regional languages in use, is one of the main NLP challenges in Indonesia and makes the development of NLP models more difficult [15].

Recent research shows that BERT-based models have been used effectively in Indonesia for a variety of text classification tasks. IndoBERT achieved high accuracy in sentiment analysis on the topic of electric vehicles and in spam message detection (98%) [16,17]. IndoBERT outperformed other models, with an F1-score of 0.8897, in the analysis of harmful comments on Indonesian social media [18]. Meanwhile, IndoBERTweet obtained an F1-score of 0.89, a precision of 0.85, and a recall of 0.94 in the multi-label classification of online toxic content [19]. These results suggest that BERT-based models such as IndoBERT have significant potential for identifying online gambling promotions and improving digital security in Indonesia.

The purpose of this study is to assess IndoBERT's effectiveness in identifying online gambling promotions in YouTube comment sections. Because YouTube is increasingly used as a covert channel for gambling marketing, an AI-based method is needed to identify the language patterns of online gambling advertisements. This study examines the usefulness of IndoBERT for this detection task, with particular attention to evaluation metrics, including accuracy, precision, and recall, and to the difficulty of spotting the hidden language used by online gambling marketers.

2. Literature Review

Several studies have investigated the use of transformer models and deep learning to identify harmful content in various settings. In spam review detection, transformer-based models have outperformed conventional deep learning techniques, with RoBERTa achieving the highest accuracy of 90.8% [20]. Transformer models have also outperformed machine learning and deep learning techniques in cyberbullying detection; on Bangla and Chittagonian texts, XLM-RoBERTa achieved an accuracy of 84.1% [21]. Another study that compared transformer, deep learning, and machine learning models for cyberbullying detection on YouTube data found that transformer models had the highest accuracy, at 98.49% [22]. Transformer models have also been used to identify stock market changes based on online news, where IndoBERT-large and multilingual BERT achieved accuracies of 88.60% and 95.90%, respectively [23]. These studies show that transformer models perform well in identifying distinct types of content, including harmful content, across a variety of languages and domains.

Recent developments in natural language processing (NLP) have shown considerable promise in addressing problems such as fraud prevention and online toxicity detection. Ref. [24] showed that a fine-tuned IndoBERTweet model classified harmful Indonesian content effectively, with the aim of improving internet safety. Ref. [25] investigated NLP techniques for analyzing fraudulent activities and presented FraudNLP, a publicly available dataset for online fraud detection. However, Ref. [26] pointed out how vulnerable NLP systems are to adversarial attacks, emphasizing the need for more robust models in practical applications. The use of NLP in model-to-model transformation was investigated by [27], who compared several tools for tasks including phrase extraction and abbreviation identification. These studies demonstrate how NLP can address difficult linguistic problems, but they also point to the need for more robust and flexible models that can be applied across domains.

Recent NLP models have also shown encouraging results on a range of Indonesian text classification problems. IndoBERTweet surpassed Indonesian RoBERTa in identifying online toxicity, with higher precision, recall, and F1-scores [24]. On the IndoNLU dataset (Ahmadian et al., 2024), a hybrid model combining IndoBERT with BiLSTM, BiGRU, and attention mechanisms obtained 78% accuracy in emotion classification and 93% accuracy in sentiment analysis [28]. DeceptiveBERT, a transfer learning model based on pre-trained BERT, identified deceptive reviews with accuracies ranging from 75% to 84.79% across several datasets [29]. In domain-specific tasks, IndoGovBERT, a BERT-based model trained on Indonesian government documents, outperformed general language models and non-transformer approaches in processing texts related to the Sustainable Development Goals (SDGs) [30]. These studies demonstrate how well transformer-based models perform on text classification problems, including Indonesian ones.

In summary, BERT-based models have been applied to a range of NLP tasks in both English and Indonesian: IndoBERTweet outperformed Indonesian RoBERTa in identifying and classifying online toxicity in Indonesian [24]; a hybrid model combining IndoBERT with BiLSTM, BiGRU, and attention mechanisms, evaluated on the IndoNLU dataset [31], reached 78% accuracy in emotion classification and 93% accuracy in sentiment analysis [28]; and DeceptiveBERT, a transformer-based model for identifying deceptive English-language reviews on social media, achieved accuracies of 75–84.79% across several datasets [29]. These works show that BERT-based models can address diverse linguistic problems, such as sentiment analysis, toxicity detection, and the recognition of deceptive text. However, no study has explicitly examined the application of IndoBERT to identifying online gambling promotions on social media, and this study aims to fill that research gap.

3. Research Method

3.1. Research Framework

The research framework describes the steps needed to use IndoBERT to detect online gambling advertising in YouTube comments. The procedure starts with collecting comments from publicly accessible videos using the YouTube Data API v3; the comments are then saved in CSV format. In the preprocessing step, the text is cleaned by removing special characters, converting all letters to lowercase, tokenizing with the IndoBERT tokenizer, and removing unnecessary common terms. The comments are then manually labeled as either non-spam or spam (gambling promotion) as an initial labeling phase.

The model is trained on 80% of the dataset and tested on the remaining 20%, with hyperparameter tuning applied to the learning rate, batch size, and number of epochs. IndoBERT was selected for the model selection and training stage because of its strong ability to understand Indonesian. After training, the model's performance is assessed using accuracy, precision, recall, and F1-score; the evaluation results show that IndoBERT achieves high accuracy in detecting spam comments related to gambling promotions.

An error analysis is then carried out to determine the difficulties the model faces in identifying covert or implicit gambling promotions. Several enhancement techniques are suggested, such as enlarging the dataset to cover a wider variety of linguistic variants, using rule-based filtering to handle specific linguistic patterns, and regularly updating the model to keep up with changing linguistic trends. Finally, the model has been released on Hugging Face, making it available to researchers and developers, and is used in an automated moderation system that filters spam out of YouTube comments.
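As one way to picture the rule-based filtering suggested above, the sketch below combines a model score with a few regular-expression rules in a moderation decision. Everything here is a hypothetical illustration, not the rules or system used in this study: the patterns, the 0.5 threshold, and the choice to flag a comment when either signal fires are assumptions, and `model_spam_probability` stands in for the spam probability produced by the fine-tuned IndoBERT model.

```python
import re

# Hypothetical patterns for illustration only.
RULES = [
    re.compile(r"\b[a-z]+\d{2,}\b"),             # brand-like token: letters followed by 2+ digits
    re.compile(r"\b(daftar|deposit|maxwin)\b"),  # hypothetical gambling-related keywords
]


def rule_flags(comment: str) -> bool:
    """Return True if any hypothetical rule matches the lowercased comment."""
    text = comment.lower()
    return any(rule.search(text) for rule in RULES)


def moderate(comment: str, model_spam_probability: float, threshold: float = 0.5) -> str:
    """Flag a comment as spam if the model score or any rule says so."""
    if model_spam_probability >= threshold or rule_flags(comment):
        return "spam"
    return "non-spam"


print(moderate("Main di AERO88 bikin auto happy!", model_spam_probability=0.3))  # → spam
print(moderate("Lagunya bagus sekali", model_spam_probability=0.1))  # → non-spam
```

Combining the two signals with a logical OR raises recall on patterns the model misses, at the cost of extra false positives whenever a rule is too broad, so rules of this kind would need to be reviewed against labeled data before deployment.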

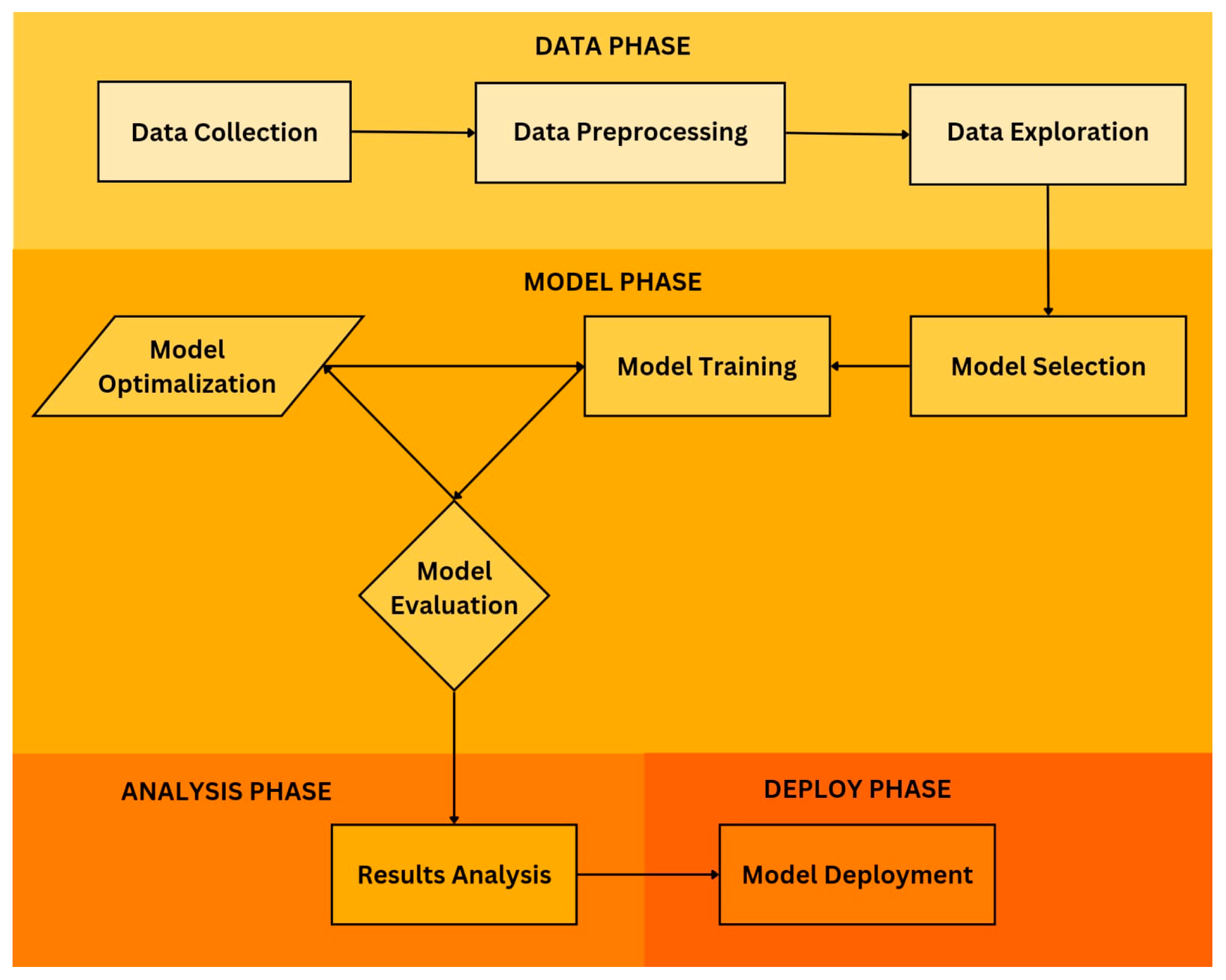

The overall process of this study is illustrated in Figure 1, which presents the research framework. The colors in the figure distinguish the four phases: the data phase, model phase, analysis phase, and deployment phase. The framework outlines the sequential stages, from data collection through the YouTube Data API to preprocessing, model training, evaluation, and deployment.

3.2. Data Collection from YouTube Comments

The data for this study were acquired using the YouTube Data API v3. The method automatically extracts comments from publicly accessible YouTube videos. The first step is to check whether comments are available on each target video: the total number of comments is retrieved from the video statistics in the video metadata, and data collection continues only if the video has comments.

To create a dataset that is representative yet manageable, comments are then retrieved up to a specified maximum retrieval limit. Each successfully retrieved comment includes the video ID, username (if available), comment text, publication time, and number of likes received. The collected data are then saved in CSV format, which simplifies data management and further analysis.
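In the YouTube Data API v3, a video's comment count is reported in `statistics.commentCount` of a `videos.list` response, and top-level comments are paged through `commentThreads.list` using `pageToken` and `nextPageToken` (at most 100 results per page); each comment snippet carries fields such as `textDisplay`, `authorDisplayName`, `publishedAt`, and `likeCount`. The sketch below shows only the control flow described above, under stated assumptions: `fetch_comment_count` and `fetch_page` are hypothetical stand-ins for those HTTP calls (passed in as parameters so the logic can run offline), the 500-comment cap is an arbitrary example, and the CSV column names are illustrative.

```python
import csv
from typing import Callable, Optional

FIELDS = ["video_id", "username", "comment", "published_at", "like_count"]


def collect_comments(
    video_id: str,
    fetch_comment_count: Callable[[str], int],
    fetch_page: Callable[[str, Optional[str]], tuple[list[dict], Optional[str]]],
    max_comments: int = 500,
) -> list[dict]:
    """Collect up to `max_comments` comments for one video.

    `fetch_page(video_id, page_token)` is a hypothetical wrapper around
    commentThreads.list that returns (rows, next_page_token).
    """
    # Step 1: skip videos whose statistics report no comments.
    if fetch_comment_count(video_id) == 0:
        return []

    rows: list[dict] = []
    page_token: Optional[str] = None
    # Step 2: follow page tokens until the API runs out of pages or the cap is hit.
    while len(rows) < max_comments:
        page, page_token = fetch_page(video_id, page_token)
        rows.extend(page)
        if page_token is None:
            break
    return rows[:max_comments]


def save_csv(rows: list[dict], path: str) -> None:
    """Write the collected comments to CSV with a fixed column order."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)
```

Injecting the fetch functions keeps the pagination and limit logic testable without network access or an API key; a real implementation would wrap authenticated API requests in those two callables.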

Prior research has shown that collecting YouTube comments is an essential first step in identifying and categorizing spam. Ref. [32] used a comment-collection technique to detect spam comments on popular music videos, which allowed several machine learning algorithms to be applied in subsequent analysis. Furthermore, a new dataset for identifying abusive language in YouTube comments was created by [33], emphasizing the importance of thorough data collection that incorporates conversational context to increase classification accuracy. In addition, the review in [34] stressed that improving spam detection on social media platforms requires an efficient data collection strategy.

After data collection, the comments are manually categorized into two groups: online gambling spam comments and non-spam comments. This procedure involves reviewing each comment in detail and looking for recurring features of comments that promote online gambling, such as mentions of gambling websites, referral codes, or gambling-related terms. To ensure a high degree of accuracy, labeling decisions are based on predetermined criteria. This manual approach was adopted because spam comments frequently vary their linguistic patterns, which are difficult to identify with automated approaches alone before a model has been adequately trained. Manually labeled data are more accurate and serve as a starting point for developing a more reliable classification model.

The use of YouTube's public data complies with relevant rules and ethical standards. No sensitive personal information is collected; only publicly accessible information is gathered. To protect user privacy and rights, data collection and labeling are carried out exclusively for research and analysis purposes.

Using this method, the study can access and process YouTube comment data effectively, producing a high-quality, structured dataset. In addition to reflecting the general characteristics of the comments, the dataset includes precise labels that can support future research on identifying and categorizing online gambling spam comments.

3.3. Data Preprocessing

Data preprocessing was performed to prepare the collected comments for training a transformer-based machine learning model. The first step is text cleaning, which standardizes the format of all comments.

Text cleaning begins by converting all characters to lowercase, which eliminates unnecessary distinctions between uppercase and lowercase letters. Symbols and numerals are then removed so that only letters and spaces remain, and extra spaces are removed to keep the text tidy. This stage is essential for reducing noise in the data, which helps the model focus on meaningful patterns. According to [35], text cleaning is a crucial step in detecting spam in social media comments, and preprocessing methods such as text normalization and tokenization are important for improving the efficacy of machine learning models.
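The three cleaning steps described above (lowercasing, keeping only letters and spaces, and collapsing extra spaces) can be sketched with two regular expressions. This is an illustrative sketch rather than the study's exact code: keeping only ASCII `a`–`z` and replacing removed characters with a space (instead of deleting them outright) are assumptions.

```python
import re


def clean_text(text: str) -> str:
    """Lowercase, keep only ASCII letters and whitespace, collapse spaces."""
    text = text.lower()
    # Replace symbols, digits, and non-ASCII characters with a space so that
    # words separated only by punctuation are not glued together.
    text = re.sub(r"[^a-z\s]", " ", text)
    # Collapse runs of whitespace and trim the ends.
    return re.sub(r"\s+", " ", text).strip()


print(clean_text("Main di AERO88 bikin   auto happy!!!"))  # → main di aero bikin auto happy
```

Note that a rule this strict also removes the digits and look-alike characters that gambling promoters rely on (for example, "AERO88" becomes "aero"), so the choice of which characters to keep directly affects which cues remain visible to the model.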

After text cleaning, incomplete data, particularly comments with empty values in the comment or label fields, are removed. This ensures that the dataset is complete and free of entries that could interfere with the model's learning. The comment labels are then converted into numerical values that the model can interpret, one for each category (online gambling spam and non-spam).
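A minimal sketch of this step is shown below, assuming each comment is a dictionary with `comment` and `label` keys and that the label strings are "non-spam" and "spam"; these key names, label names, and the 0/1 mapping are hypothetical. With pandas, the same step is commonly written with `DataFrame.dropna(subset=[...])` followed by `Series.map(...)`.

```python
from typing import Optional

LABEL2ID = {"non-spam": 0, "spam": 1}  # hypothetical label names and ids


def drop_incomplete_and_encode(rows: list[dict]) -> list[dict]:
    """Drop rows with an empty comment or label, then map labels to integers."""
    cleaned = []
    for row in rows:
        comment: Optional[str] = row.get("comment")
        label: Optional[str] = row.get("label")
        # Treat None and whitespace-only strings as missing values.
        if not comment or not comment.strip() or not label:
            continue
        # Unknown label strings raise KeyError so labeling mistakes surface early.
        cleaned.append({"comment": comment, "label": LABEL2ID[label]})
    return cleaned
```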

The cleaned text must then be converted into a format the model can process. Tokenization divides the text into smaller units, or tokens, and maps them to numerical IDs that the model can understand. In this study, tokenization is performed with the IndoBERT tokenizer, which matches the vocabulary of the transformer model. The procedure also applies truncation to shorten texts that exceed a predetermined maximum length and padding to ensure that all inputs have the same length. Earlier studies have shown that transformer models are effective for spam detection; for example, Ref. [36] showed that transformer-based models can achieve excellent performance in SMS spam identification.
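Hugging Face tokenizers handle truncation and padding through arguments such as `truncation=True`, `padding="max_length"`, and `max_length`. To make the mechanics visible without downloading a tokenizer, the sketch below applies the same idea to already-tokenized IDs. The special-token IDs and the maximum length are hypothetical; a real IndoBERT vocabulary uses its own IDs.

```python
# Hypothetical special-token ids; a real IndoBERT vocabulary uses its own ids.
PAD_ID, CLS_ID, SEP_ID = 0, 1, 2


def encode_fixed_length(token_ids: list[int], max_length: int) -> dict[str, list[int]]:
    """Add [CLS]/[SEP], truncate to max_length, pad, and build an attention mask."""
    if max_length < 2:
        raise ValueError("max_length must leave room for [CLS] and [SEP]")
    # Reserve two positions for the special tokens, then truncate the content.
    body = token_ids[: max_length - 2]
    ids = [CLS_ID] + body + [SEP_ID]
    # 1 marks real tokens the model should attend to; 0 marks padding.
    mask = [1] * len(ids)
    n_pad = max_length - len(ids)
    return {
        "input_ids": ids + [PAD_ID] * n_pad,
        "attention_mask": mask + [0] * n_pad,
    }
```

The attention mask is what allows the padded positions to be ignored by self-attention, so padding changes the tensor shape without changing what the model reads.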

Once preprocessing is finished, the cleaned and tokenized dataset is divided into a training set (80%) and a test set (20%). This split gives the model an adequate number of samples for learning while holding out a portion of the data for an objective assessment of model performance.

Following the preprocessing phase, the unstructured comment data have been cleaned up and prepared for use in both model training and inference. Through appropriate preprocessing and the use of transformer-based models like IndoBERT, this work seeks to improve the efficacy of social media spam comment identification.

3.4. Data Exploration

3.4.1. Dataset Overview

The dataset used in this study consists of 2,315 YouTube comments collected via the YouTube Data API. Each data entry contains the video ID, user ID, comment content, timestamp, like count, and label, as summarized in Table 1. The label indicates whether the comment is classified as online gambling promotion (spam) or not (non-spam).

3.4.2. Data Cleaning

Before training the IndoBERT model, data cleaning was performed to ensure data quality.

Handling missing values: The dataset contains 13 missing comments, which were removed to maintain data integrity.

Text preprocessing: Special characters, symbols, and unnecessary spaces were removed to normalize the text for further processing.

Tokenization: The IndoBERT tokenizer was applied to segment text into smaller units, ensuring that the model accurately captures linguistic structures.

3.4.3. Label Distribution

The dataset consists of 1170 spam comments promoting online gambling and 1145 non-spam comments, as illustrated in Figure 2. This nearly balanced class distribution reduces the risk of the model being biased toward either category, making the dataset suitable for training a robust classifier.

3.4.4. Characteristics of Gambling Spam Comments

Upon further analysis, gambling spam comments often exhibit the following specific characteristics:

The frequent use of encoded text or modified spellings (e.g., “AEЯO88” instead of “AERO88”) to bypass automatic detection;

Promotional phrases that encourage users to engage in gambling, such as “Main di AEЯO88 bikin auto happy!” (Play on AERO88, guaranteed happiness!);

Mentions of gambling platforms, referral links, or misleading claims about potential winnings.
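One way to counter look-alike substitutions such as "AEЯO88" is to map confusable characters back to their Latin counterparts before cleaning and tokenization. The sketch below is a hypothetical illustration; the study does not state that it performs this step. It first applies Unicode NFKC normalization, which folds compatibility forms such as fullwidth letters into plain ones, and then applies a small, non-exhaustive table of Cyrillic look-alikes (Unicode Technical Standard #39 publishes a much fuller confusables list).

```python
import unicodedata

# Small, hypothetical subset of look-alike characters mapped to Latin letters.
# Escapes are used so the Cyrillic code points are unambiguous.
CONFUSABLES = str.maketrans({
    "\u042f": "R", "\u044f": "r",  # Cyrillic Ya, visually a mirrored R
    "\u0410": "A", "\u0430": "a",  # Cyrillic A
    "\u0415": "E", "\u0435": "e",  # Cyrillic Ie
    "\u041e": "O", "\u043e": "o",  # Cyrillic O
    "\u0420": "P", "\u0440": "p",  # Cyrillic Er
    "\u0421": "C", "\u0441": "c",  # Cyrillic Es
})


def normalize_lookalikes(text: str) -> str:
    """Fold compatibility forms with NFKC, then map known confusables to Latin."""
    return unicodedata.normalize("NFKC", text).translate(CONFUSABLES)


print(normalize_lookalikes("Main di AE\u042fO88"))  # → Main di AERO88
```

Running this before the lowercase-and-strip cleaning step keeps an obfuscated brand name intact as Latin text instead of having its non-Latin characters discarded.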

3.4.5. Implication for Model Training

Given the structure and characteristics of the dataset, IndoBERT was chosen due to its superior ability to process Indonesian text. The preprocessing steps, including tokenization and label balancing, further optimize the model’s learning capabilities. This exploration provides a solid foundation for accurate spam classification in YouTube comments.

3.5. Model Selection

3.5.1. IndoBERT Model

IndoBERT was selected as the main model for this investigation because it understands Indonesian better than other models. IndoBERT, a BERT variant tailored for Indonesian, has demonstrated exceptional performance in a range of natural language processing (NLP) applications, such as emotion classification and sentiment analysis. According to recent research, IndoBERT outperforms other NLP models on these tasks when combined with other neural network architectures such as BiLSTM and BiGRU [28].

One of the primary reasons for selecting IndoBERT is its advantage over Recurrent Neural Network (RNN)-based models, such as Long Short-Term Memory (LSTM) and Bidirectional LSTM (BiLSTM), in capturing the linguistic context and structure of Indonesian. Because RNN-based models process information sequentially, they struggle to capture long-range relationships between words. Although BiLSTM can capture context in both directions and is frequently used in NLP tasks, prior research shows that it is less effective at handling complex texts with substantial linguistic variation and still has difficulty with long-term dependencies, limitations that transformer-based models such as IndoBERT can overcome [28]. Furthermore, research indicates that LSTM, although designed to address the vanishing gradient problem, still has difficulty capturing word associations in complex regression and NLP tasks [37].

Additionally, studies by [38] show that monolingual models such as IndoBERT are more sensitive than multilingual models such as XLM-RoBERTa in Named Entity Recognition (NER) tasks for Indonesian. This supports the claim that models trained specifically for Indonesian outperform multilingual transformers and RNN-based methods. Considering both its advantages in capturing the syntactic and semantic structure of Indonesian and the empirical evidence of the shortcomings of BiLSTM and LSTM, IndoBERT is the best option for identifying online gambling promotion in Indonesian YouTube comments.

Multilingual transformer models such as Multilingual BERT (mBERT) and XLM-RoBERTa can also handle many languages, including Indonesian. However, recent research shows that multilingual models like XLM-RoBERTa are less sensitive than monolingual models like IndoBERT in Indonesian NLP tasks [38]. To improve accuracy and relevance in capturing Indonesian syntax and semantics, IndoBERT is trained on a large Indonesian-language corpus drawn from a variety of sources, such as news, social media, and scholarly publications.

Furthermore, studies on the development of Indonesian language models indicate that transformer-based models tailored to specific tasks perform better than multilingual or general-purpose language models. For instance, IndoGovBERT, which was developed specifically for Indonesian government documents, has been shown to outperform multilingual models such as mBERT in text categorization and document similarity evaluation [30]. These results underline the importance of using models customized to specific requirements, such as identifying online gambling promotion comments that use frequently altered terminology to evade automated detection.

Taking these characteristics into account, IndoBERT is the best option for identifying online gambling advertising in Indonesian YouTube comments. In Indonesian text classification tasks, it not only performs better than RNN-based and multilingual transformer models but also offers advantages in contextual understanding and processing efficiency, and prior research has demonstrated its superiority on NLP tasks specific to Indonesian.

3.5.2. IndoBERT’s Architecture

IndoBERT is a model based on Bidirectional Encoder Representations from Transformers (BERT) that is specifically designed to handle Indonesian text. Its stack of multi-head self-attention encoder layers enables a bidirectional, contextual understanding of words within a sentence. This is achieved through the Masked Language Model (MLM) pre-training objective, in which the model learns word meanings by predicting masked tokens from the context on both sides simultaneously [39].
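To make the MLM objective concrete, the sketch below follows the masking recipe of the original BERT paper: about 15% of tokens are selected for prediction, and of those, 80% are replaced with [MASK], 10% with a random token, and 10% are left unchanged. IndoBERT's own pre-training settings are not described in this paper, so this is an illustration of the general technique only; the token IDs and vocabulary size are hypothetical. The label value -100 matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so unselected positions contribute no loss.

```python
import random

MASK_ID = 4              # hypothetical [MASK] id
SPECIAL_IDS = {0, 1, 2}  # hypothetical [PAD], [CLS], [SEP] ids
IGNORE = -100            # label meaning "no loss at this position"


def mask_tokens(ids: list[int], vocab_size: int, rng: random.Random, p: float = 0.15):
    """Return (masked_ids, labels) following BERT's 80/10/10 masking recipe."""
    masked, labels = list(ids), [IGNORE] * len(ids)
    for i, tok in enumerate(ids):
        # Special tokens are never selected for prediction.
        if tok in SPECIAL_IDS or rng.random() >= p:
            continue
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            masked[i] = MASK_ID                    # 80%: replace with [MASK]
        elif r < 0.9:
            masked[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token unchanged
    return masked, labels
```

Because the loss is computed only at selected positions and the model cannot tell which unchanged tokens were selected, it must use context from both directions to predict every token, which is what gives BERT-style encoders their bidirectional representations.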

The general pre-training and fine-tuning process of BERT is depicted in Figure 3. Although the figure shows BERT, the same process applies to IndoBERT. The model is initialized with pre-trained parameters for different downstream tasks, such as identifying YouTube comments that contain online gambling advertising. During fine-tuning, all model parameters are updated to address the specific task of determining whether a comment contains gambling advertising. The [CLS] token is a special symbol added at the start of each input, and its final hidden state serves as the aggregate representation for classification; the [SEP] token acts as a separator between distinct input segments (such as a comment and additional metadata).
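BERT-style models format a single input as `[CLS] A [SEP]` and a pair of inputs as `[CLS] A [SEP] B [SEP]`, using segment (token type) IDs of 0 for the first segment and 1 for the second. The sketch below builds that layout from string tokens. Pairing a comment with metadata follows the example in the text, but which metadata (if any) the study actually paired with comments is not stated, so the second segment here is purely illustrative.

```python
from typing import Optional


def build_bert_input(comment_tokens: list[str], extra_tokens: Optional[list[str]] = None):
    """Return (tokens, segment_ids) in BERT's [CLS] A [SEP] (B [SEP]) format."""
    tokens = ["[CLS]"] + comment_tokens + ["[SEP]"]
    segment_ids = [0] * len(tokens)  # segment A: [CLS], the comment, first [SEP]
    if extra_tokens:
        tokens += extra_tokens + ["[SEP]"]
        segment_ids += [1] * (len(extra_tokens) + 1)  # segment B, including final [SEP]
    return tokens, segment_ids


tokens, segments = build_bert_input(["main", "di", "aero"], ["video", "musik"])
print(list(zip(tokens, segments)))
```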

Once the model has been initialized with pre-trained parameters, IndoBERT processes text input that has been converted into tokens using WordPiece tokenization. The input representation in BERT, depicted in Figure 4, is made up of three embedding types: token, segment, and positional embeddings. Token embeddings represent the words or subwords produced by tokenization, segment embeddings indicate which part of the input a token belongs to (for example, the comment or the metadata), and positional embeddings encode the position of each token in the sequence.

Figure 4 is essential because it shows how the input representation is built in BERT and IndoBERT: the token, segment, and positional embeddings are summed element-wise to form a vector representation of each input token, and these representations are then processed by the encoder layers.
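The summation can be sketched with three small lookup tables. The values below are random stand-ins for learned weights, and the hidden size, vocabulary size, and maximum length are toy assumptions (BERT-base uses a hidden size of 768). BERT-style models also apply layer normalization and dropout to this sum; the sketch omits them.

```python
import random

HIDDEN = 4  # toy hidden size; BERT-base uses 768


def make_table(rows: int, rng: random.Random) -> list[list[float]]:
    """Random stand-in for a learned embedding matrix of shape (rows, HIDDEN)."""
    return [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(rows)]


rng = random.Random(42)
token_table = make_table(50, rng)     # 50 hypothetical vocabulary entries
segment_table = make_table(2, rng)    # segment A / segment B
position_table = make_table(16, rng)  # up to 16 positions


def embed(input_ids: list[int], segment_ids: list[int]) -> list[list[float]]:
    """Sum token, segment, and position embeddings at each position."""
    out = []
    for pos, (tok, seg) in enumerate(zip(input_ids, segment_ids)):
        out.append([
            t + s + p
            for t, s, p in zip(token_table[tok], segment_table[seg], position_table[pos])
        ])
    return out
```

Because the position embedding is added at every step, the same token appearing at two different positions receives two different input vectors, which is how an otherwise order-agnostic attention mechanism becomes aware of word order.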

IndoBERT uses multi-head self-attention in each encoder layer to comprehend the relationships between words in a sentence after the input has been transformed into vector representations. This enables the model to recognize whether a comment contains online gambling promotions by capturing textual context and trends. IndoBERT is more effective in capturing word associations because it analyzes complete sentences in parallel, as opposed to sequentially processing text like Recurrent Neural Network (RNN)-based techniques such as Long Short-Term Memory (LSTM) and Bidirectional LSTM (BiLSTM).
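Each attention head computes softmax(QKᵀ/√d_k)V, where the queries Q, keys K, and values V are linear projections of the input vectors. The single-head, pure-Python sketch below illustrates how every token attends to every other token in one step rather than sequentially, and how an attention mask removes padding. The projection matrices are random stand-ins for learned weights; multi-head attention runs several such heads with separate projections and concatenates their outputs.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix, mask=None):
    """Single-head scaled dot-product self-attention over a sequence x (n x d)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    outputs, weights = [], []
    for qi in q:
        # Each query scores every key at once; there is no left-to-right recurrence.
        scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]
        if mask is not None:
            # Padding positions (mask 0) get -inf so they receive zero weight.
            scores = [s if keep else float("-inf") for s, keep in zip(scores, mask)]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return outputs, weights


rng = random.Random(0)


def rand_matrix(rows: int, cols: int) -> Matrix:
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


x = rand_matrix(3, 4)  # three tokens with toy hidden size 4; the last is padding
out, attn = self_attention(x, rand_matrix(4, 4), rand_matrix(4, 4), rand_matrix(4, 4), mask=[1, 1, 0])
print([round(w, 3) for w in attn[0]])
```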

When classifying YouTube comments that may contain advertisements for online gambling, IndoBERT works as follows. First, comments extracted from YouTube videos are split into subword tokens using WordPiece tokenization. Each token is then converted into a vector representation by summing its token, segment, and positional embeddings. These vectors are processed through the encoder layers to produce richer contextual representations, from which the model determines whether the comment contains gambling promotion content.
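WordPiece, as used in BERT, splits each word greedily into the longest prefix found in the vocabulary and marks word-internal continuation pieces with a "##" prefix; a word that cannot be split with the vocabulary becomes [UNK]. The sketch below implements that greedy longest-match-first procedure for a single word. The toy vocabulary is hypothetical; a real IndoBERT vocabulary contains tens of thousands of entries.

```python
def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first WordPiece split of a single word."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Shrink the candidate from the right until it is in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a ## prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no valid split: the whole word becomes [UNK]
        pieces.append(piece)
        start = end
    return pieces


VOCAB = {"main", "aero", "##88", "##8", "judi", "##nya", "ber", "##main"}
print([wordpiece(w, VOCAB) for w in "main aero88 bermain".split()])
# → [['main'], ['aero', '##88'], ['ber', '##main']]
```

Subword splitting is what lets the model represent unseen or altered spellings (such as brand names with appended digits) from known pieces instead of discarding them as unknown words.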

A fully connected layer with a softmax or sigmoid activation function then receives these enriched representations (typically the final hidden state of the [CLS] token) and decides whether the comment qualifies as an online gambling promotion. This allows IndoBERT to distinguish between comments that promote gambling and those that do not, even when the promotional language is subtle or concealed.
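For a binary task, the classification head can be pictured as a linear layer applied to the [CLS] vector, followed by a softmax over two logits. The sketch below uses hypothetical weights and assumes the class order 0 = non-spam, 1 = spam. It also includes a sigmoid helper to show why the text can speak of "softmax or sigmoid": with two classes, the softmax probability of the spam class equals the sigmoid of the difference between the two logits.

```python
import math


def classify(cls_vector: list[float], weights: list[list[float]], bias: list[float]):
    """Linear layer on the [CLS] vector, then softmax over two logits."""
    # One weight row per class: index 0 = non-spam, 1 = spam (assumed order).
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b for row, b in zip(weights, bias)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return ("spam" if probs[1] > probs[0] else "non-spam"), probs


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical 2-dimensional [CLS] vector and head weights.
label, probs = classify([1.0, -0.5], [[0.2, 0.1], [1.5, -0.3]], [0.0, 0.1])
print(label, [round(p, 3) for p in probs])
```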

3.6. Model Training

This study uses IndoBERT, a transformer-based model pre-trained on an Indonesian language corpus, and optimizes its performance in classifying comments through a systematic training and fine-tuning process. A classification layer is added on top of the model, with the number of output neurons matching the number of classes in the dataset. Before training, the dataset is divided into two main subsets: 80% for training and 20% for testing. Stratified sampling is used so that both subsets preserve the class distribution between comments containing gambling promotions and other comments.
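A stratified 80/20 split shuffles and splits each class separately, so both subsets keep the original class proportions. The pure-Python sketch below illustrates the idea; the fixed seed of 42 is an arbitrary assumption. In practice, scikit-learn's `train_test_split(..., test_size=0.2, stratify=labels)` provides the same behavior.

```python
import random
from collections import defaultdict


def stratified_split(labels: list[int], test_size: float = 0.2, seed: int = 42):
    """Return (train_idx, test_idx) with each class split in the same proportion."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train, test = [], []
    for idx in by_class.values():
        rng.shuffle(idx)  # shuffle within each class before cutting
        n_test = round(len(idx) * test_size)
        test += idx[:n_test]
        train += idx[n_test:]
    return sorted(train), sorted(test)
```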

The choice of training parameters, particularly the learning rate, is based on preliminary research, the literature on transformer models, and several recent studies that highlight the critical role of learning rate configuration in various deep learning applications. For example, in neuromorphic computing, learning rate modulation in a TiOx memristor array during in situ training accelerates convergence and reduces energy consumption [40], while [41] found that convolutional neural networks (CNNs) performed best in typhoon rainfall forecasting, with learning rates of 0.1, 0.01, and 0.001 significantly improving long-term prediction capabilities. In fractal image coding, Ref. [42] showed that the learning rate is a critical factor in artificial neural networks' recognition of recursive functions that produce fractal forms. In LoRaWAN–IoT localization, Ref. [43] used an adaptive learning rate mechanism and achieved up to 98.98% accuracy by employing piecewise, exponential decay, and cosine annealing decay schedules.

Based on these results, and taking into account the characteristics of transformer-based models and the text classification task, this study sets the learning rate for IndoBERT to 2 × 10⁻⁵. The model is trained for up to six epochs with a batch size of 16 samples per device, balancing training speed and memory usage. Regularization is applied through a weight decay of 0.01 and an early stopping strategy that halts training if performance does not improve for two consecutive epochs. The model is evaluated at each epoch on validation data, measuring accuracy, precision, recall, and F1-score.
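Early stopping with a patience of two epochs can be sketched as a loop that tracks the best validation loss and stops after two consecutive epochs without improvement, keeping the best epoch as the final checkpoint. The loss values in the example are hypothetical, chosen so that the outcome matches the behavior described in Section 4.1 (lowest validation loss at the third epoch, stop at the fifth). In Hugging Face Transformers, the equivalent behavior is typically configured with `EarlyStoppingCallback(early_stopping_patience=2)` together with `load_best_model_at_end=True`.

```python
def early_stopping(val_losses: list[float], patience: int = 2, min_delta: float = 0.0):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once `patience` consecutive epochs fail to improve the best
    validation loss by more than `min_delta`.
    """
    best_loss, best_epoch, bad_epochs = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, bad_epochs = loss, epoch, 0  # new best checkpoint
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return best_epoch, epoch  # stop early; restore the best checkpoint
    return best_epoch, len(val_losses)


# Hypothetical validation losses for six planned epochs.
print(early_stopping([0.12, 0.09, 0.07, 0.08, 0.075, 0.06]))  # → (3, 5)
```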

Recent research has emphasized the importance of these methods for improving the performance of deep learning models and avoiding overfitting. A recently proposed method that uses training history to detect overfitting was found to be more effective than traditional approaches [44], while [45] highlighted the usefulness of weight decay regularization and hyperparameter tuning, such as batch size and number of epochs, for balancing training speed and memory usage. Evaluation with metrics such as accuracy, precision, recall, and F1-score is also widespread. For example, Ref. [46] used TF–IDF and SVM for sentiment analysis and obtained 85% accuracy, 100% precision, 70% recall, and an F1-score of 82%. Research by [47] reported similarly strong results, achieving 95% accuracy in a multi-label classification task using a CNN for fruit flavor categorization.

According to these studies, regularization strategies such as weight decay and early stopping, together with hyperparameter settings such as batch size and epoch count, are crucial components of the training process. These measures help maximize model performance and help the final model generalize well to new data.

During training, a log entry is recorded every 100 steps to track the model's progress. Training uses 16-bit numerical precision, which speeds up computation without appreciably sacrificing accuracy. The model that performs best on the validation data is saved for final testing on previously unseen test data. The goal of this final assessment is to confirm that the model can recognize YouTube comments that promote online gambling.

4. Result and Discussion

4.1. Model Performance During Training

Figure 5 shows the loss curves of the IndoBERT model on the training and validation data across epochs. Although training was initially planned for six epochs, early stopping halted it at the fifth epoch. Early stopping was used to prevent overfitting: it halts training once the validation loss stops improving or starts to increase.

Training loss decreases during the first epoch, rises slightly at the third epoch, and then decreases markedly until the fifth epoch. Validation loss, by contrast, remains relatively stable and reaches its lowest value at the third epoch. Therefore, although early stopping ended training at the fifth epoch, the model from the third epoch was selected as the final model for further evaluation.
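The interaction between early stopping and best-model selection can be sketched as follows. The section does not state the patience value; a patience of two epochs is assumed here because it is the value consistent with a best epoch of 3 and a stop at epoch 5. The validation losses are hypothetical, not the values plotted in Figure 5, and `early_stopping` is an illustrative helper name.

```python
from typing import Sequence, Tuple


def early_stopping(
    val_losses: Sequence[float], patience: int, min_delta: float = 0.0
) -> Tuple[int, int]:
    """Replay early stopping over per-epoch validation losses.

    Returns (stop_epoch, best_epoch), both 1-indexed. Training stops once the
    validation loss has failed to improve by more than `min_delta` for
    `patience` consecutive epochs; best_epoch is the last epoch that improved it.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch  # stop early; restore the checkpoint from best_epoch
    return len(val_losses), best_epoch  # all planned epochs were run


if __name__ == "__main__":
    # Hypothetical validation losses for six planned epochs.
    losses = [0.20, 0.12, 0.08, 0.09, 0.10, 0.11]
    print(early_stopping(losses, patience=2))  # (5, 3): stop after epoch 5, keep epoch 3
```

If the Hugging Face `Trainer` was used (the section does not say), the comparable built-ins are `EarlyStoppingCallback` with its `early_stopping_patience` argument, combined with `load_best_model_at_end=True` and `metric_for_best_model="eval_loss"` in `TrainingArguments`.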

4.2. Evaluation Metrics

In addition to the loss curves, the IndoBERT model's performance was assessed with a confusion matrix and several standard evaluation metrics (accuracy, precision, recall, and F1-score). The results show that the model performed very well in identifying online gambling promotions in YouTube comments. A summary of the evaluation results during training is provided in Table 2.

The final evaluation results of the model (from the third epoch) are shown in Table 3.

To better understand the model's misclassification tendencies, the confusion matrix shown in Figure 6 was also examined. It shows that the model correctly identified 232 spam comments (true positives) and 221 non-spam comments (true negatives), with only a small number of misclassifications.

The confusion matrix results can be summarized as follows:

The model correctly classified 232 spam comments (true positives) and 221 non-spam comments (true negatives).

There were six false positive cases, in which non-spam comments were misclassified as spam.

There were two false negative cases, in which spam comments went undetected.

These findings show that the IndoBERT model performs very well, with a low error rate: only 8 of the 461 comments in the confusion matrix (about 1.7%) were misclassified. The third-epoch model was selected because it achieved the lowest validation loss, which favors good generalization to unseen data.
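Assuming that the precision, recall, and F1-score reported in the Conclusions are support-weighted averages over the spam and non-spam classes (the convention of the "weighted avg" row in scikit-learn's `classification_report`; this section does not state which averaging was used), the confusion-matrix counts above reproduce those figures to two decimal places. The sketch below computes accuracy and the weighted metrics from the four counts; `weighted_metrics` is a hypothetical helper name.

```python
from typing import Dict


def weighted_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall, and F1 for a binary confusion matrix.

    The positive class is spam (gambling promotion); the negative class is non-spam.
    """
    total = tp + fp + fn + tn
    per_class = [
        # (correct predictions, predicted count, actual support) for each class
        (tp, tp + fp, tp + fn),  # spam
        (tn, tn + fn, tn + fp),  # non-spam
    ]
    precision = recall = f1 = 0.0
    for correct, predicted, support in per_class:
        p = correct / predicted
        r = correct / support
        f = 2 * p * r / (p + r)
        weight = support / total  # each class weighted by its number of true instances
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return {"accuracy": (tp + tn) / total, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Counts from the confusion matrix in Figure 6.
    for name, value in weighted_metrics(tp=232, fp=6, fn=2, tn=221).items():
        print(f"{name}: {value:.2%}")
```

Running it prints accuracy 98.26%, precision 98.28%, recall 98.26%, and F1 98.26%. The per-class view is slightly less symmetric: for the spam class alone, precision is 232/238 ≈ 97.48% and recall is 232/234 ≈ 99.15%, reflecting six false positives against two false negatives.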

4.3. Error Analysis

An error analysis was carried out to identify the kinds of misclassifications the IndoBERT model made when classifying YouTube comments related to online gambling promotion. These errors fall into two main categories: false positives, in which the model labels non-promotional comments as gambling promotions, and false negatives, in which it misses comments that actually promote online gambling.

Several of the misclassified cases show that the IndoBERT model still struggles with some of the subtleties of user comments. For instance, short and ambiguous comments such as "p" or "hadir teruss" (roughly "always here") were incorrectly classified as gambling promotions despite having no clear promotional intent. The model also had difficulty distinguishing comments that contained phrases frequently used in gambling contexts but were not actually promotional. For example, "hoki di tahun" (roughly "luck in the year") is a generic phrase that was mistakenly labeled as spam.

False negatives, in turn, occurred when gambling promotions were communicated implicitly, without explicit gambling-related terms. For example, comments such as "pecah terus" (literally "keeps breaking"), a phrase common in gambling promotions, were sometimes not recognized as promotional. The model also struggled with rare linguistic patterns that were poorly represented in the training dataset, such as informal usage or spelling variants absent from the original training data.

Examples of model prediction errors are presented in Table 4, illustrating cases of false positives and false negatives encountered by the IndoBERT model.

These errors suggest several ways to improve the IndoBERT model: adding more diverse data to the training set so that the model captures a wider range of linguistic patterns; fine-tuning the model with a more flexible treatment of informal language to reduce misclassifications; and combining IndoBERT with rule-based filtering to handle specific cases that are hard to detect with machine learning alone.
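The rule-based integration suggested above could take many forms. A minimal sketch, assuming a model that returns the probability that a comment is promotional: a small list of hand-written patterns overrides the model to catch known implicit phrases (targeting false negatives such as "pecah terus"), and a length check stops the model alone from flagging very short comments (targeting false positives such as "p"). The pattern list, `MIN_CHARS_FOR_MODEL`, and the `model_score` callable are hypothetical; the model is represented by any function so the logic can be tested without IndoBERT.

```python
import re
from typing import Callable

# Hypothetical hand-curated patterns; "pecah terus" comes from the error analysis above.
PROMO_PATTERNS = [re.compile(r"\bpecah\s+terus\b", re.IGNORECASE)]

# Hypothetical cutoff: shorter comments (e.g. "p") are never flagged on the model score alone.
MIN_CHARS_FOR_MODEL = 4


def hybrid_is_promotion(
    comment: str,
    model_score: Callable[[str], float],
    threshold: float = 0.5,
) -> bool:
    """Combine rule-based filtering with a classifier score for one comment."""
    text = comment.strip()
    # 1. A rule hit overrides the model (targets implicit promotions the model misses).
    if any(pattern.search(text) for pattern in PROMO_PATTERNS):
        return True
    # 2. Very short comments carry too little context to trust a positive prediction.
    if len(text) < MIN_CHARS_FOR_MODEL:
        return False
    # 3. Otherwise defer to the model's probability.
    return model_score(text) >= threshold
```

The length rule trades some recall for precision: a genuinely promotional comment shorter than the cutoff would be missed unless a pattern catches it, so both the cutoff and the pattern list would need tuning on labeled comments.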

With these enhancements, the model is expected to detect online gambling promotion comments more accurately while making fewer classification errors.

4.4. Discussion on Practical Implications

The results of this study demonstrate that the IndoBERT model has significant potential in detecting online gambling promotion comments on YouTube. Implementing this model in real-world scenarios can assist social media platforms, content creators, and regulators in identifying and reducing the spread of illegal or unwanted content.

One of the primary practical implications of this research is its ability to enhance automation in moderating comments on YouTube. With a model capable of accurately detecting online gambling promotion comments, the system can automatically flag or hide spam-like comments before they are seen by other users. This not only improves user experience but also helps maintain a healthier and safer digital ecosystem.

Platforms may also address a wider range of policy violations by incorporating the IndoBERT model into an automated detection system. For example, the model could be adapted to detect other kinds of harmful content, such as online fraud, hate speech, or misinformation. BERT-based models have shown encouraging performance in a number of text classification tasks, including misinformation identification and hate speech detection [48,49]. Content moderators could therefore use such a model to filter comments that may violate community guidelines.

To increase accessibility, the trained model has been published in a public repository on Hugging Face, where it can be used through an API without retraining. It is available at https://huggingface.co/jelialmutaali/online-gambling-spam-detector (accessed on 24 July 2025), and the repository page provides detailed usage instructions. Making the model publicly accessible allows other researchers to replicate and validate it and encourages its use in a variety of automated detection systems.
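A minimal sketch of how the published model might be called from Python, assuming the `transformers` library is installed and that the model loads with the standard `pipeline("text-classification", ...)` interface; the usage instructions on the repository page take precedence. The name of the spam label is not given in this section, so `spam_label` is left as a parameter, and `load_detector` and `flag_comments` are hypothetical helper names. The classifier is passed in as an argument so the filtering logic can be exercised without downloading the model.

```python
from typing import Callable, Dict, List, Sequence, Tuple

MODEL_ID = "jelialmutaali/online-gambling-spam-detector"


def load_detector(model_id: str = MODEL_ID):
    """Load the published model via the transformers text-classification pipeline.

    Needs `pip install transformers torch` and access to the Hugging Face Hub.
    """
    from transformers import pipeline  # imported lazily so flag_comments works without transformers

    return pipeline("text-classification", model=model_id)


def flag_comments(
    comments: Sequence[str],
    classifier: Callable[[List[str]], List[Dict[str, object]]],
    spam_label: str,
    threshold: float = 0.5,
) -> List[Tuple[str, float]]:
    """Return (comment, score) pairs predicted as `spam_label` with score >= threshold."""
    # A text-classification pipeline called on a list returns one {"label", "score"} dict per input.
    predictions = classifier(list(comments))
    return [
        (comment, float(pred["score"]))
        for comment, pred in zip(comments, predictions)
        if pred["label"] == spam_label and float(pred["score"]) >= threshold
    ]
```

In use, `flag_comments(comments, load_detector(), spam_label=...)` would be called with `spam_label` set to whichever label the model card documents for promotional comments.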

Regulators can also use this approach to identify and monitor emerging trends in online gambling marketing across digital platforms. By collecting data from a variety of sources, they can better understand how online gambling is marketed and design more effective measures against illicit activity. Previous studies have shown that governments and regulators increasingly use natural language processing (NLP) tools to monitor social media for unlawful activity, such as hate speech and disinformation [50].

Although the model performs well at comment classification, several issues must be resolved before it is widely deployed. A major challenge is ensuring that the model can cope with the highly dynamic language of user comments, particularly when creative spellings or disguised words are used to evade automatic detection. NLP models are also vulnerable to adversarial attacks, in which text inputs are deliberately altered to evade detection systems [51,52]. To mitigate this, the model should be updated regularly with recent data so that it keeps pace with new patterns in online gambling promotions and becomes more robust to adversarial attacks.
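One common way to make a classifier less sensitive to creative spellings is to normalize comments before they reach the model, applying the same normalization to the training data. The sketch below is an illustrative preprocessing step, not part of the method described in this study: it lowercases text, maps a hypothetical set of digit-for-letter substitutions back to letters, and collapses runs of three or more repeated characters. The table `LEET_MAP`, the three-character threshold, and the function name are assumptions.

```python
import re

# Hypothetical digit-for-letter substitutions often seen in evasive spellings.
LEET_MAP = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t"})

# Three or more consecutive copies of the same character.
REPEATS = re.compile(r"(.)\1{2,}")


def normalize_comment(text: str) -> str:
    """Lowercase, undo digit-for-letter swaps, collapse long character runs, squeeze spaces."""
    text = text.lower().translate(LEET_MAP)
    text = REPEATS.sub(r"\1", text)  # "hokiiii" -> "hoki"
    return " ".join(text.split())


if __name__ == "__main__":
    for raw in ["H0K1III  bos", "pecaaah teruuus", "saat ini"]:
        print(repr(raw), "->", repr(normalize_comment(raw)))
```

A threshold of three keeps ordinary double letters (as in "saat") intact but leaves "teruss" unchanged, and the digit map also rewrites genuine numbers, so in practice these rules would need tuning on real comment data.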

Ethical and privacy considerations must also be taken into account when deploying this model in the real world. Overly aggressive automated detection risks excessive censorship or misclassification, which could restrict users' freedom of expression. A more balanced approach therefore includes a human-in-the-loop mechanism, in which human moderators review the model's classifications before final decisions are made. Self-diagnosis and self-debiasing approaches in NLP may also help reduce bias and improve the transparency of automated detection systems [53].
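A minimal sketch of the human-in-the-loop routing described above, assuming the model outputs the probability that a comment is a gambling promotion. The two thresholds and the route names are hypothetical and would need to be tuned on validation data: high-confidence predictions are hidden but still queued for a moderator, mid-confidence predictions stay visible until a moderator decides, and the rest are published.

```python
def triage(score: float, hide_at: float = 0.95, review_at: float = 0.5) -> str:
    """Route one comment by its predicted probability of being a gambling promotion."""
    if not 0.0 <= review_at <= hide_at <= 1.0:
        raise ValueError("expected 0 <= review_at <= hide_at <= 1")
    if score >= hide_at:
        return "hide_pending_review"  # hidden immediately, but a moderator makes the final call
    if score >= review_at:
        return "human_review"  # stays visible until a moderator decides
    return "publish"


if __name__ == "__main__":
    for s in (0.99, 0.7, 0.1):
        print(s, triage(s))
```

Lowering `review_at` sends more comments to moderators (fewer missed promotions, more workload), while raising `hide_at` reduces how often legitimate comments are hidden automatically.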

Taking these advantages and challenges into account, using IndoBERT to identify online gambling promotion comments may prove to be a useful way to moderate digital content. To improve transparency and trust in practical applications, future work could incorporate rule-based filtering and improve model interpretability.

5. Conclusions

This study analyzed the effectiveness of IndoBERT in detecting online gambling promotion in YouTube comments. The results demonstrate that IndoBERT achieves high accuracy (98.26%), precision (98.28%), recall (98.26%), and F1-score (98.26%), making it a reliable model for identifying gambling-related spam. However, the model still encounters challenges in detecting implicit promotional language and ambiguous expressions.

To enhance performance, future improvements could involve expanding training datasets to include more diverse language variations, integrating rule-based filtering for specific word patterns, and implementing continuous model updates to adapt to evolving spam strategies. Additionally, combining IndoBERT with human moderation could mitigate misclassification risks, ensuring a balanced and effective content moderation system.

The findings of this study have significant implications for automated content moderation on digital platforms, aiding regulators and social media platforms in combating the spread of online gambling promotions. By making the trained model publicly accessible, this research contributes to the broader field of NLP-based spam detection and provides a valuable tool for enhancing digital security in Indonesia.