Heart Failure Prediction Through a Comparative Study of Machine Learning and Deep Learning Models †

Abstract

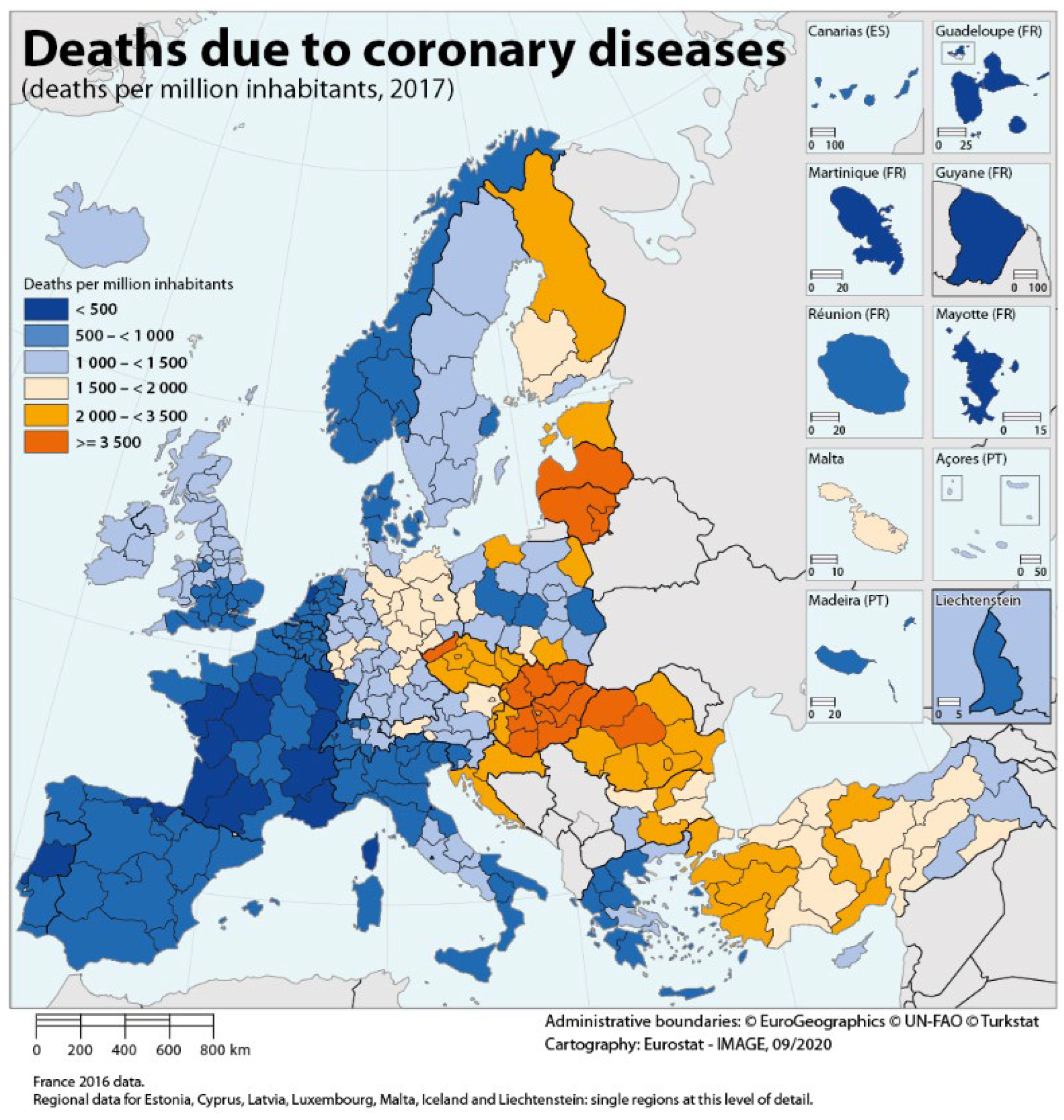

1. Introduction

2. Literature Review

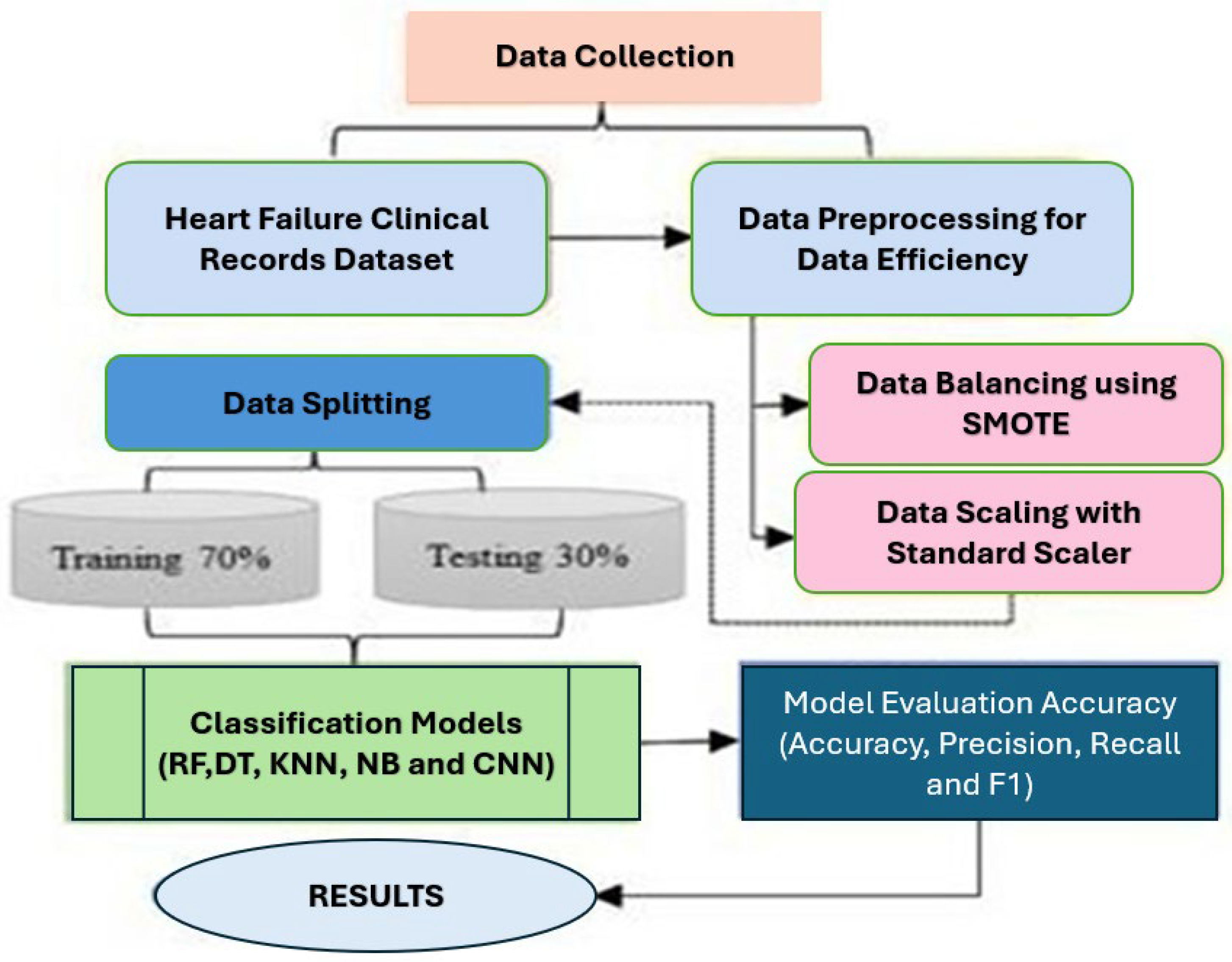

3. Methodology

3.1. Machine Learning Techniques

3.2. Supervised Learning

3.3. Unsupervised Learning

3.4. Reinforcement Learning

3.5. Dataset Description

3.6. Dataset for Heart Failure Clinical Records

3.7. Operator Description

3.7.1. Optimized Selection

3.7.2. Apply Model

3.7.3. Split Data

3.7.4. Performance Evaluation

- True positive (TP): a positive case that the model correctly predicts as positive.

- True negative (TN): a negative case that the model correctly predicts as negative.

- False positive (FP): a negative case that the model incorrectly predicts as positive.

- False negative (FN): a positive case that the model incorrectly predicts as negative.
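The four counts above are the inputs to the evaluation measures reported in this study (accuracy, precision, sensitivity, and F-measure). The following is a minimal sketch of the standard definitions; the function name `classification_metrics` and the example counts are illustrative assumptions, not values from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute standard metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    # Accuracy: share of all predictions that are correct.
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that are truly positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Sensitivity (recall): share of actual positives that are detected.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    # F-measure: harmonic mean of precision and sensitivity.
    denom = precision + sensitivity
    f_measure = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "f_measure": f_measure,
    }


# Hypothetical counts for illustration only (not results from this study).
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because the F-measure is a harmonic mean, it always lies between precision and sensitivity, which gives a quick sanity check on reported results.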

3.7.5. Replace Missing Values

3.7.6. Filter Example

3.7.7. Convolutional Neural Network

- The input layer takes raw image data, often in the form of pixel values. Each image is represented as a multidimensional array where every channel (for instance, red, green, and blue in a color image) is stored separately.

- The convolutional layer is the basic building block of a CNN. It employs several learnable filters (or kernels). Each filter is learned to recognize specific patterns such as edges, textures, or shapes. The filters are not predefined, but learned automatically during training.

- Following convolution, a non-linear activation function (most often ReLU) is used to introduce non-linearity in the model so that it can learn more complicated patterns.

- The pooling layer downsamples the feature maps. It reduces the spatial dimensions (width and height) of the data, which lowers the computational cost, helps control overfitting, and makes the network more robust to small variations in the input. Max pooling, which keeps the maximum value in each sub-region, is the most commonly used scheme.

- The output layer is usually a fully connected (dense) layer with softmax activation for classification problems. This layer combines all the learned features to produce the final predictions.
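The layer sequence described above can be traced on a tiny example. The sketch below is a plain-Python forward pass (convolution, ReLU, max pooling, dense layer, softmax) on a 5×5 single-channel input. The kernel and dense weights are hand-picked assumptions for illustration; in a trained CNN they would be learned, and this is not the architecture used in this study.

```python
import math


def conv2d(image, kernel):
    """Stride-1 convolution without padding (cross-correlation form)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    """Element-wise ReLU: negative activations become zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling over size x size windows."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy input: a vertical edge between columns 1 and 2.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-picked vertical-edge filter (assumption; normally learned).
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

pooled = max_pool(relu(conv2d(image, kernel)))
flat = [v for row in pooled for v in row]
# Hypothetical dense weights for a two-class output.
weights = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
probs = softmax(dense(flat, weights, [0.0, 0.0]))
print(pooled, probs)
```

The 5×5 input shrinks to a 4×4 feature map after the 2×2 convolution and to 2×2 after pooling, which illustrates how pooling reduces the spatial dimensions before the dense layer.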

3.8. KNN Classifier

3.9. Random Forest

3.10. Naïve Bayes

- Gaussian Naïve Bayes, used when features are normally distributed;

- Multinomial Naïve Bayes, commonly used in text classification where word counts are important;

- Bernoulli Naïve Bayes, suitable for binary/Boolean features (e.g., word presence or absence).
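For continuous clinical measurements, the Gaussian variant is the natural fit among the three. The following is a minimal sketch, assuming each feature is normally distributed within a class; the function names and the toy data (loosely styled after ejection fraction and serum creatinine) are hypothetical and not taken from the study's dataset.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate class priors and per-feature mean/variance for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # Small constant avoids division by zero for constant features.
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(columns, means)]
        model[label] = (n / len(X), means, variances)
    return model


def predict_gaussian_nb(model, row):
    """Return the class with the highest log-posterior for one sample."""
    best_label, best_score = None, -math.inf
    for label, (prior, means, variances) in model.items():
        score = math.log(prior)
        for v, m, var in zip(row, means, variances):
            # Log of the Gaussian density, summed under the
            # conditional-independence ("naive") assumption.
            score += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy data: [ejection fraction (%), serum creatinine (mg/dL)].
X = [[60, 0.9], [55, 1.0], [50, 1.1], [25, 2.5], [30, 2.0], [20, 3.0]]
y = [0, 0, 0, 1, 1, 1]

model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [58, 1.0]))
print(predict_gaussian_nb(model, [22, 2.8]))
```

Working in log space avoids numerical underflow when many per-feature likelihoods are multiplied together.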

3.11. Deep Learning

- CNN for image processing;

- RNN for sequence modeling;

- Transformers for natural language processing and other sequence tasks;

- Autoencoders and Generative Adversarial Networks (GANs) for generative modeling.

3.12. Decision Tree

- Are simple to interpret and visualize;

- Require little data preprocessing (e.g., no feature scaling);

- Capable of modeling non-linear relationships in data;

- Handle both classification and regression tasks effectively.
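The point about feature scaling can be checked directly. The sketch below finds the best single-feature split by Gini impurity (the criterion used by CART-style trees, assumed here for illustration) and shows that rescaling the feature changes the threshold but not the resulting partition. The data and function names are hypothetical.

```python
def gini(labels):
    """Gini impurity for binary labels encoded as 0/1."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n  # fraction of positive labels
    return 2 * p * (1 - p)


def best_split(values, labels):
    """Return (threshold, weighted_gini) for the best split on one feature."""
    pairs = sorted(zip(values, labels))
    best = (None, float("inf"))
    for k in range(1, len(pairs)):
        if pairs[k - 1][0] == pairs[k][0]:
            continue  # no threshold fits between equal values
        threshold = (pairs[k - 1][0] + pairs[k][0]) / 2
        left = [lab for v, lab in pairs if v <= threshold]
        right = [lab for v, lab in pairs if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical feature values and outcomes, for illustration only.
values = [0.9, 1.0, 1.1, 2.0, 2.5, 3.0]
labels = [0, 0, 0, 1, 1, 1]

threshold, score = best_split(values, labels)
# Rescale the same feature by 1000 (e.g., a change of units).
scaled = [v * 1000 for v in values]
scaled_threshold, scaled_score = best_split(scaled, labels)

left_side = [v <= threshold for v in values]
scaled_left_side = [v <= scaled_threshold for v in scaled]
print(threshold, scaled_threshold, left_side == scaled_left_side)
```

Only the ordering of the values matters for the split, which is why trees, unlike distance-based methods such as KNN, do not depend on feature scaling.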

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement


| Features | Descriptions |
|---|---|
| Age | Patient’s age (years) |
| Anemia | Decrease in red blood cells or hemoglobin |
| Creatine Phosphokinase (CPK) | Blood level of the CPK enzyme (mcg/L) |
| Diabetes | Whether the patient has diabetes |
| Ejection Fraction | Percentage of blood leaving the heart at each contraction |
| High Blood Pressure | Whether the patient has hypertension |
| Platelets | Platelet count in the blood (kiloplatelets/mL) |
| Serum Creatinine | Level of creatinine in the blood serum (mg/dL) |
| Serum Sodium | Level of sodium in the blood serum (mEq/L) |
| Sex | Patient’s sex (male or female) |
| Smoking | Whether the patient smokes |
| Time | Follow-up period (days) |
| Death Event | Whether the patient died during the follow-up period |

| Algorithms | Accuracy | Precision | F-Measure | Sensitivity |
|---|---|---|---|---|
| KNN | 95.30% | 92.70% | 95.36% | 92.28% |
| Naïve Bayes | 78.60% | 74.75% | 78.66% | 78.89% |
| CNN | 99.75% | 99.72% | 99.78% | 99.76% |
| Random Forest | 96.06% | 94.29% | 94.56% | 93.26% |
| Deep Learning | 92.22% | 92.36% | 92.44% | 92.39% |
| SVM | 94.27% | 94.15% | 94.36% | 94.44% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qadeer, M.; Ayaz, R.; Thohir, M.I. Heart Failure Prediction Through a Comparative Study of Machine Learning and Deep Learning Models. Eng. Proc. 2025, 107, 61. https://doi.org/10.3390/engproc2025107061
