1. Introduction

Errors are part of software engineering: even if software is rigorously developed and tested, errors will never be completely eliminated [1]. Detecting and preventing defects early in the software development lifecycle (SDLC) is important to avoid adverse effects on the project schedule, costs, and end-user experience. The ability to anticipate software flaws before they emerge in production has attracted significant interest in both academia and industry [2]. Predictive models for software defect identification aim to pinpoint potentially problematic components or modules using a variety of software metrics, code features, and historical defect information [3,4]. By anticipating problems in high-risk areas, developers can direct testing, debugging, and quality assurance resources to where they are most needed.

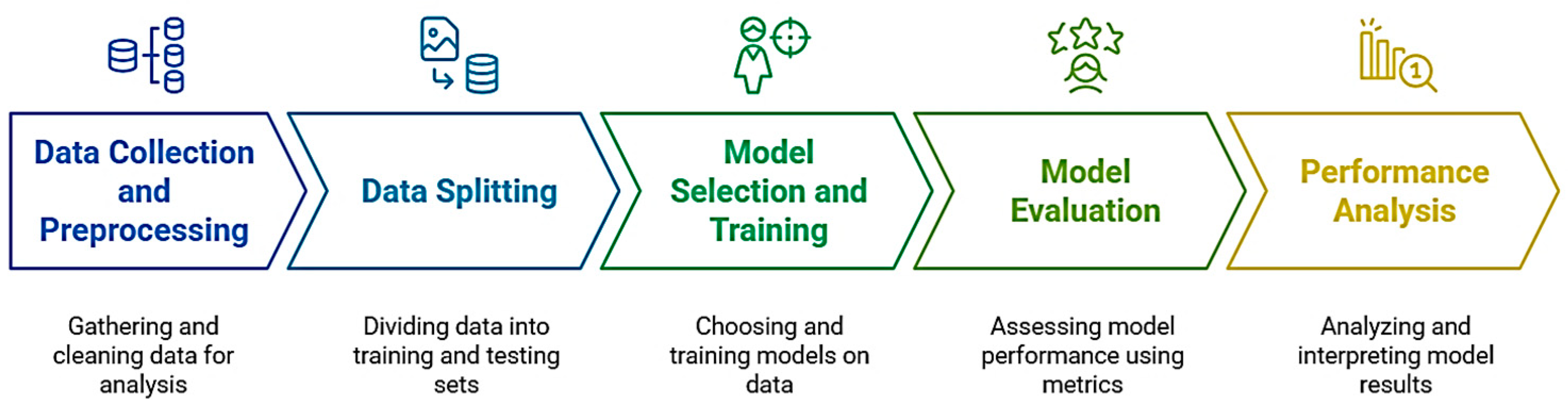

The process of software fault prediction (SFP) can be broken down into several steps. The first step is to extract basic abstract information about the software, such as its code and development process, and to use relevant metrics to define the metric features of each software element.

However, the dataset acquired at this step may suffer from several problems, including class imbalance, outliers, and missing values. The historical data therefore need to be pre-processed, for example by removing missing values, detecting outliers, and normalizing the data [5]. Once properly cleaned and pre-processed, the dataset can be used in prediction studies to anticipate defects early in the SDLC, enabling the software team to achieve better outcomes and higher software quality [3,4].
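The three pre-processing steps named above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's pipeline: each module is a dict of numeric metrics, a missing value is `None`, outliers are detected and dropped with the common 1.5 × IQR rule, and normalization is min-max scaling to [0, 1]. The metric names `loc` and `cc` and the sample values are hypothetical.

```python
import statistics


def preprocess(rows, features):
    """Remove incomplete rows, drop IQR outliers, then min-max normalize.

    rows: list of dicts mapping a metric name to a number, or None if missing.
    """
    # 1. Remove rows with a missing value in any selected feature.
    complete = [r for r in rows if all(r.get(f) is not None for f in features)]

    # 2. Detect outliers per feature with the 1.5 * IQR rule and drop them.
    bounds = {}
    for f in features:
        q1, _, q3 = statistics.quantiles([r[f] for r in complete], n=4,
                                         method="inclusive")
        iqr = q3 - q1
        bounds[f] = (q1 - 1.5 * iqr, q3 + 1.5 * iqr)
    kept = [r for r in complete
            if all(bounds[f][0] <= r[f] <= bounds[f][1] for f in features)]

    # 3. Min-max normalize each feature to the range [0, 1].
    out = [dict(r) for r in kept]
    for f in features:
        lo = min(r[f] for r in kept)
        hi = max(r[f] for r in kept)
        span = (hi - lo) or 1.0  # constant feature: avoid division by zero
        for r in out:
            r[f] = (r[f] - lo) / span
    return out


# Hypothetical module metrics: "loc" (lines of code) and "cc" (complexity).
modules = [{"loc": loc, "cc": cc} for loc, cc in
           [(10, 1), (20, 2), (30, 3), (40, 4), (50, 5), (60, 6), (70, 7),
            (1000, 8), (35, None)]]
clean = preprocess(modules, ["loc", "cc"])
```

Here the module with a missing `cc` value is removed, the 1000-line module is flagged as an outlier, and the remaining seven modules are rescaled. Class imbalance, also mentioned above, would need a separate step (for example resampling) that this sketch does not cover.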

Figure 1 illustrates the software fault prediction process.

In recent years, machine learning algorithms have emerged as a promising tool for software fault detection. By exploiting patterns and relationships in software data, these techniques build prediction models that can identify faulty software components [6].

While individual machine learning algorithms have provided useful insights into defect prediction, ensemble learning approaches have been shown to improve both prediction accuracy and robustness. Ensemble methods typically combine the predictions of multiple base models into a final prediction that is more reliable and precise than that of any single model [7,8]. They tend to outperform individual models when the base models are diverse, so that the weaknesses of one model are offset by the strengths of the others.

This work aims to strengthen software quality assurance processes while advancing the state of the art in software fault prediction. By analyzing and comparing a number of prediction methods, it seeks to identify the most effective approaches to forecasting software defects [9].

It also aims to contribute these insights to the software engineering and quality assurance communities. The broader goal is to advance software engineering practice by providing developers with effective tools and techniques for the early detection and mitigation of problems [10,11]. Automating defect prediction strengthens software quality assurance, so customers receive more reliable, secure, and efficient software products, which in turn encourages wider adoption of the company's products in their respective fields.

This study proposes a new feature selection method designed specifically for software defect prediction, with the potential to improve the relevance and performance of the associated predictive models. Using multiple datasets and rigorous cross-validation, the study takes a comprehensive approach to establishing the robustness and generalizability of the results. The empirical findings show better prediction performance than existing methods, expose previously unnoticed trends in software defect data, and indicate that the approach scales across a variety of project types. These results can inform software development practice, enabling practitioners to eliminate defects proactively.

The remainder of the paper is organized as follows. Section 1 has introduced software defect prediction (SDP) and the popular machine learning algorithms used for it. Section 2 summarizes previous studies on SDP. Section 3 describes the research method employed in this work. Section 4 presents the empirical results of the experiments. Section 5 concludes with a summary of the findings and implications of the research.

2. Literature Review

To predict software issues, ref. [12] presented a Harmony Search-based Cost-Sensitive Decision Tree. The approach improved the reported evaluation metrics and outperformed previous approaches, making effective use of software project parameters by accurately predicting errors and allocating quality assurance resources accordingly.

Ref. [13] introduced a model that parses program source code, the Multi-Kernel Transfer Convolutional Neural Network (MKT-CNN). Using abstract syntax trees (ASTs) and a CNN, the model mines transferable semantic features, which are combined with hand-crafted features for cross-project defect (CPD) prediction.

Ref. [14] built a software defect prediction system using graph convolutional neural networks (GCNNs) in an end-to-end (E2E) framework. The framework classifies modules as faulty or normal based on abstract syntax trees extracted from the source code, and the reported results showed that it outperformed previous approaches.

Ref. [15] created a hybrid machine learning system for software defect prediction that combines Genetic Algorithms and Decision Trees. In the reported comparative evaluation, this method achieved higher accuracy than standard methods.

Ref. [16] evaluated ensemble machine learning algorithms for malware detection on the Windows platform, motivated by the shortcomings of traditional signature-based and heuristic detection. According to [16], hybrid models composed of Logistic Regression (LR), Decision Tree (DT), and Support Vector Machine (SVM) classifiers proved effective at improving accuracy.

Ref. [17] developed a framework that improves the efficiency of software process improvement (SPI) by integrating DT methods into software development. Ref. [18] developed a requirement mining framework that traces components in globally distributed development environments using aspect mining techniques.

According to [19], a CNN-LSTM network optimized with Gray Wolf Optimization (GWO) produced favorable results for predicting smart home energy usage, addressing drawbacks of traditional energy management systems.

Ref. [20] developed an enhanced heterogeneous ensemble model for breast cancer prediction that combines different ML techniques to achieve better diagnostic accuracy. The findings show how ensemble learning can improve breast cancer diagnosis and strengthen clinical decision support systems.

Ref. [21] introduced a software defect prediction solution that combines three-stage data pre-processing with correlation analysis and machine learning techniques. Its second strategy reached 98.7% accuracy while reducing maintenance costs and programming complexity and improving software quality by eliminating faults.

3. Research Methodology

The presented methodology builds on four algorithms: Random Forest (RF), Multilayer Perceptron (MLP), Bayes Net, and the C4.5 Decision Tree. This work proposes an ensemble algorithm for software defect prediction that integrates these four models. Each algorithm is discussed in the following subsections.

3.1. Bayes Net

The probabilistic graphical model known as a Bayes Net, or Bayesian Network, represents a set of random variables and their conditional dependencies with a directed acyclic graph (DAG). It models the joint probability distribution of these variables and, using Bayes' theorem, infers probabilistic relationships among them. Mathematically, a Bayes Net consists of a set of nodes representing random variables, (1), and a set of directed edges encoding the probabilistic dependencies between them, (2).

$$V = \{X_1, X_2, \ldots, X_n\} \tag{1}$$

$$E \subseteq \{(X_i, X_j) : X_i, X_j \in V,\ i \neq j\} \tag{2}$$

Each node $X_i$ is associated with a conditional probability distribution $P(X_i \mid \mathrm{Pa}(X_i))$, where $\mathrm{Pa}(X_i)$ is the set of parent nodes of $X_i$ in the graph. The joint probability distribution of all variables in the network is the product of these conditional probabilities, as shown in (3).

$$P(X_1, X_2, \ldots, X_n) = \prod_{i=1}^{n} P(X_i \mid \mathrm{Pa}(X_i)) \tag{3}$$

By employing techniques such as variable elimination and belief propagation, a Bayes Net supports efficient inference of the posterior distribution of variables given observed data.
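The factorization in (3) and posterior inference can be illustrated on a tiny network. The sketch below assumes a hypothetical three-node DAG, C (high complexity) → D (defective) ← T (low test coverage), with made-up probability tables. It uses exact inference by enumeration, the simplest exact method; variable elimination and belief propagation compute the same posterior more efficiently on larger networks.

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative numbers only).
P_C = {1: 0.3, 0: 0.7}  # P(C): module has high complexity
P_T = {1: 0.4, 0: 0.6}  # P(T): module has low test coverage
P_D1 = {(1, 1): 0.8, (1, 0): 0.5, (0, 1): 0.3, (0, 0): 0.05}  # P(D=1 | C, T)


def joint(c, t, d):
    """Factorized joint P(C, T, D) = P(C) * P(T) * P(D | C, T), as in (3)."""
    p_d1 = P_D1[(c, t)]
    return P_C[c] * P_T[t] * (p_d1 if d == 1 else 1.0 - p_d1)


def posterior_c_given_d(d):
    """P(C=1 | D=d): sum the joint over the hidden variable T, then normalize."""
    numerator = sum(joint(1, t, d) for t in (0, 1))
    evidence = sum(joint(c, t, d) for c, t in product((0, 1), repeat=2))
    return numerator / evidence


print(posterior_c_given_d(1))
```

With these numbers, observing a defect raises the probability of high complexity from the prior 0.3 to about 0.64.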

3.2. C4.5 Decision Tree

Ross Quinlan's C4.5 Decision Tree algorithm is a popular method for building classification models whose decision rules are organized in a tree. The feature space is recursively partitioned according to input feature values: each internal node tests a feature, and each leaf node holds a class label. At each node, C4.5 selects the splitting attribute heuristically using the gain ratio, a normalized form of information gain, which measures the reduction in entropy (impurity) produced by a split. Mathematically, the information gain $IG(T, A)$ of an attribute $A$ over the set of training instances $T$ at a node is calculated as shown in (4).

$$IG(T, A) = H(T) - \sum_{v \in \mathrm{Values}(A)} \frac{|T_v|}{|T|} H(T_v), \qquad H(T) = -\sum_{i=1}^{c} p_i \log_2 p_i \tag{4}$$

where $T_v$ is the subset of $T$ for which $A$ takes the value $v$, $c$ is the number of classes, and $p_i$ is the proportion of instances of class $i$ in $T$.
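Equation (4) and the gain ratio that C4.5 uses can be computed directly for a categorical attribute. This is a minimal sketch; the full algorithm adds details not shown here, such as threshold search for continuous attributes and pruning. The attribute and label values in the example are hypothetical.

```python
import math
from collections import Counter


def entropy(items):
    """H = -sum p_i log2 p_i over the distinct values in `items`."""
    n = len(items)
    return -sum((k / n) * math.log2(k / n) for k in Counter(items).values())


def information_gain(attr_values, labels):
    """IG(T, A) = H(T) - sum_v |T_v|/|T| * H(T_v), as in (4)."""
    n = len(labels)
    partitions = {}
    for v, y in zip(attr_values, labels):
        partitions.setdefault(v, []).append(y)
    remainder = sum(len(p) / n * entropy(p) for p in partitions.values())
    return entropy(labels) - remainder


def gain_ratio(attr_values, labels):
    """C4.5 criterion: IG divided by the split information of attribute A."""
    split_info = entropy(attr_values)  # entropy of the partition proportions
    return information_gain(attr_values, labels) / split_info if split_info else 0.0


# Hypothetical example: a binary "complexity" attribute against defect labels.
complexity = ["high", "high", "high", "low", "low", "low"]
defective = [1, 1, 0, 0, 0, 0]
print(information_gain(complexity, defective), gain_ratio(complexity, defective))
```

Dividing by the split information penalizes attributes with many distinct values, which plain information gain tends to favor.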

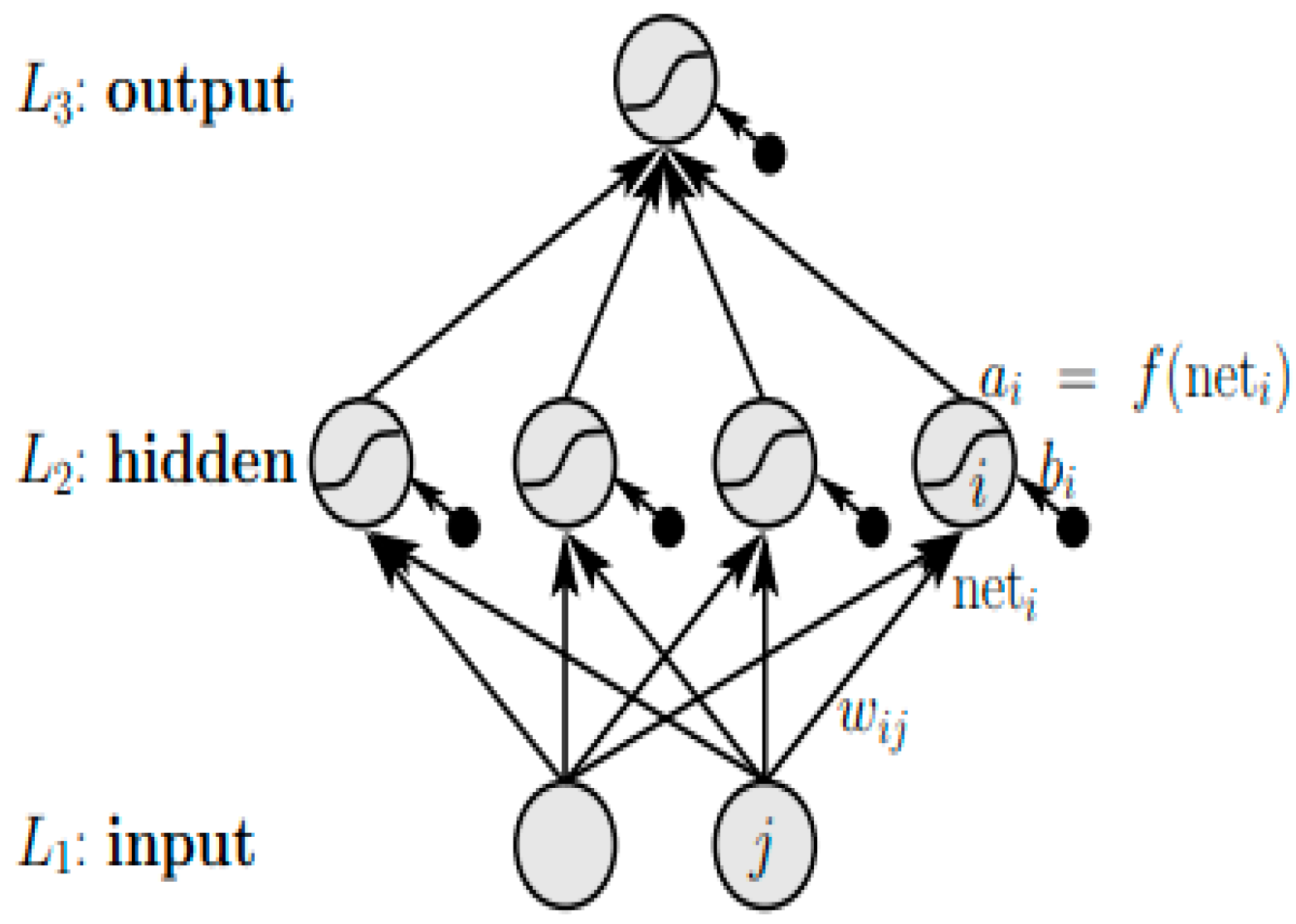

3.3. Multilayer Perceptron

The Multilayer Perceptron (MLP) is a basic type of feed-forward neural network (FFN) composed of several layers of perceptrons, in which the outputs of one layer serve as the inputs to the next. A non-linear activation function determines each neuron's output. The general framework of the Multilayer Perceptron is shown in Figure 2.

Each neuron in the hidden layer of an MLP combines the inputs from the preceding layer, weights them, adds a bias, and applies an activation function $\sigma$ to produce its output. For hidden neuron $j$ with inputs $x_1, \ldots, x_n$, this is expressed as (5) and (6).

$$z_j = \sum_{i=1}^{n} w_{ij} x_i + b_j \tag{5}$$

$$h_j = \sigma(z_j) \tag{6}$$

Similarly, for each output neuron $k$, which receives the hidden activations $h_1, \ldots, h_m$, this is expressed as (7) and (8).

$$z_k = \sum_{j=1}^{m} w_{jk} h_j + b_k \tag{7}$$

$$y_k = \sigma(z_k) \tag{8}$$

Here, the weights express the relative importance of each input, the biases give the model flexibility, and the activation function introduces non-linearity. The MLP learns tasks such as classification or prediction by adjusting these weights and biases through backpropagation, so that its outputs better match the target outputs.
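The forward pass of equations (5) to (8) can be sketched for a network with one hidden layer. This sketch assumes the logistic sigmoid as the activation function and uses hypothetical weights; it stores each neuron's incoming weights as a row (`weights[j][i]` plays the role of $w_{ij}$). Training by backpropagation is not shown.

```python
import math


def sigmoid(z):
    """Logistic activation, used here as sigma in (6) and (8)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Compute sigma(sum_i w_ij * x_i + b_j) for every neuron j in the layer.

    weights[j] lists the incoming weights of neuron j (one per input).
    """
    return [sigmoid(sum(w * x for w, x in zip(w_j, inputs)) + b_j)
            for w_j, b_j in zip(weights, biases)]


def mlp_forward(x, hidden_w, hidden_b, out_w, out_b):
    """Forward pass of a one-hidden-layer MLP."""
    h = dense_layer(x, hidden_w, hidden_b)  # equations (5) and (6)
    return dense_layer(h, out_w, out_b)     # equations (7) and (8)


# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 1 output neuron.
y = mlp_forward([0.5, -1.0],
                hidden_w=[[0.4, -0.2], [0.3, 0.8]], hidden_b=[0.0, 0.1],
                out_w=[[1.5, -0.7]], out_b=[-0.2])
```

With a sigmoid output, `y[0]` lies in (0, 1) and can be read as a defect score for a binary classifier.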

3.4. Random Forest

Random Forest is a powerful ensemble learning method that produces more accurate classification or regression predictions by combining the outputs of multiple Decision Trees [22]. To increase diversity and reduce the correlation between trees, each tree in the forest is trained on a bootstrap sample of the training data and considers only a random subset of features at each node. At each node, the algorithm first draws a random subset of features and then chooses the best split among them, typically by minimizing Gini impurity or maximizing information gain. Mathematically, the Gini impurity $I_G(T)$ of a node $T$ is calculated as shown in (9).

$$I_G(T) = 1 - \sum_{i=1}^{c} p_i^2 \tag{9}$$

where $c$ is the number of classes and $p_i$ is the proportion of instances of class $i$ in the node. A Random Forest produces its final prediction by aggregating the individual trees' predictions: majority voting for classification and averaging for regression. Compared with a single Decision Tree, this ensemble generalizes better and is less prone to overfitting. Random Forest is widely used across machine learning applications because it is easy to use, scales well, and performs strongly.
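Three Random Forest ingredients described above, the Gini impurity (9), bootstrap sampling, and majority voting, are sketched below. This is an illustration of those pieces only, not a full forest: tree induction and per-node feature subsampling are omitted, and the seed and sample data are arbitrary.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a node, as in (9): I_G(T) = 1 - sum_i p_i^2."""
    n = len(labels)
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())


def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement, one training set per tree."""
    return rng.choices(rows, k=len(rows))


def forest_vote(tree_predictions):
    """Majority vote over per-tree class predictions (ties: first seen wins)."""
    return Counter(tree_predictions).most_common(1)[0][0]


rng = random.Random(42)  # fixed seed so the sample is reproducible
sample = bootstrap_sample(list(range(10)), rng)
print(gini([0, 0, 1, 1]), forest_vote([1, 0, 1, 1, 0]))
```

A pure node has impurity 0, and a node split evenly between two classes has impurity 0.5, the maximum for binary labels.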

3.5. Ensemble Model

Ensemble models improve predictive performance by combining the forecasts of several base models; the underlying idea is that multiple models working together generalize better than any one of them alone. Bagging trains each base model on a different bootstrap sample of the training data and averages (or votes over) their predictions. Boosting, in contrast, trains weak learners sequentially, with each successive model focusing on correcting the errors made by its predecessors [23,24]. The ensemble prediction $\hat{f}(x)$ can be computed as the weighted sum of the base models' predictions $f_1(x), f_2(x), \ldots, f_n(x)$, as shown in (10).

$$\hat{f}(x) = \sum_{i=1}^{n} W_i f_i(x) \tag{10}$$

where the weights $W_i$ determine the contribution of each base model. Ensemble techniques such as Random Forest, AdaBoost, Gradient Boosting, and stacking are now widely used in machine learning because they frequently deliver strong predictive performance.
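Equation (10) amounts to a weighted sum of base-model outputs. The sketch below assumes each base model outputs a defect probability, that the weights sum to 1 so the result stays a probability, and that 0.5 is the decision threshold; the probabilities and equal weights are hypothetical, not values from the study.

```python
def ensemble_predict(base_predictions, weights):
    """Equation (10): f_hat(x) = sum_i W_i * f_i(x)."""
    if len(base_predictions) != len(weights):
        raise ValueError("need exactly one weight per base model")
    return sum(w * f for w, f in zip(weights, base_predictions))


# Hypothetical defect probabilities from the four base models for one module
# (Bayes Net, C4.5, MLP, RF) and equal, hypothetical weights summing to 1.
probs = [0.9, 0.6, 0.7, 0.4]
weights = [0.25, 0.25, 0.25, 0.25]
score = ensemble_predict(probs, weights)
label = int(score >= 0.5)  # assumed decision threshold of 0.5
```

Here the combined score is 0.65, so the module is labeled defective even though one base model (0.4) disagreed.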

The proposed methodology follows the pseudo-code presented in Algorithm 1 and combines four individual models, as shown in Figure 3.

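Algorithm 1. Pseudo-code (weighted majority)

Input: a stream of pairs (x, y); parameter β ∈ (0, 1)
Output: a stream of predictions ŷ, one for each x
1. Initialize experts E_1, …, E_k with weight w_i = 1/k each
2. for each x in stream
   do collect predictions p_1, …, p_k
      p ← Σ_i w_i · p_i
      ŷ ← round(p)
      for i ∈ {1, …, k}
         do if p_i ≠ y then w_i ← β · w_i
      s ← Σ_i w_i
      for i ∈ {1, …, k}
         do w_i ← w_i / s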
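A weighted majority scheme of the kind Algorithm 1 describes can be sketched in Python for binary (0/1) predictions. It assumes the standard formulation: experts start with weight 1/k, the ensemble predicts by rounding the weighted vote, each expert that errs has its weight multiplied by β ∈ (0, 1), and weights are renormalized after every example. The experts here are hypothetical callables; in the study they would be the trained Bayes Net, C4.5, MLP, and RF models. The default β = 0.5 and the choice to round a vote of exactly 0.5 up to 1 are assumptions.

```python
from typing import Callable, Iterable, Iterator, Sequence, Tuple

Expert = Callable[[Sequence[float]], int]  # hypothetical: features -> 0 or 1


def weighted_majority(experts: Sequence[Expert],
                      stream: Iterable[Tuple[Sequence[float], int]],
                      beta: float = 0.5) -> Iterator[int]:
    """Yield a 0/1 prediction for each (x, y) pair, then update the weights."""
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie in (0, 1)")
    k = len(experts)
    w = [1.0 / k] * k                        # uniform initial weights
    for x, y in stream:
        preds = [e(x) for e in experts]      # collect expert predictions
        p = sum(wi * pi for wi, pi in zip(w, preds))  # weighted vote in [0, 1]
        yield 1 if p >= 0.5 else 0           # round the vote to a class label
        # Penalize every expert that was wrong on this example.
        w = [wi * beta if pi != y else wi for wi, pi in zip(w, preds)]
        s = sum(w)                           # renormalize to sum to 1
        w = [wi / s for wi in w]


# Hypothetical experts: always 1, always 0, and one that tracks x[0].
experts = [lambda x: 1, lambda x: 0, lambda x: int(x[0] > 0)]
stream = [([1.0], 1), ([0.0], 0), ([1.0], 1), ([0.0], 0)]
predictions = list(weighted_majority(experts, stream, beta=0.5))
```

Because the third expert is never wrong on this stream, its weight grows relative to the others and the ensemble follows it, producing `[1, 0, 1, 0]`.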

4. Results and Discussion

This study evaluated several models for predicting software defects; as shown in Table 1, Model 5 performed best. It achieved the highest accuracy, correctly classifying 90.00% of instances. Models 4 and 5 also showed high precision, at 84.20% and 88.00%, respectively.

Model 5 also had the best recall, 90.00%, demonstrating its effectiveness at detecting defects, and the highest F1 score, 89.00%, making it the strongest of the evaluated models at forecasting software defects [25]. These results suggest that integrating different models through ensemble techniques substantially improves prediction accuracy and overall performance [25,26,27]. The weighted majority algorithm, shown in Figure 3, is used to combine the predictions of the multiple experts.

Figure 4 presents the comparative performance of the five models across accuracy, precision, recall, and F1-score metrics.
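As a quick consistency check on the reported figures, the F1 score is the harmonic mean of precision and recall. The sketch below applies that definition to Model 5's reported precision (88.00%) and recall (90.00%). If the reported F1 is instead a class-weighted average, as some toolkits report, the identity holds only approximately.

```python
def f1_score(precision, recall):
    """F1 = 2PR / (P + R), the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for Model 5 in this section.
f1 = f1_score(0.88, 0.90)
print(f"{f1:.4f}")
```

The result is about 0.89, consistent with the reported F1 score of 89.00%.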

5. Conclusions

Software defects persist even after numerous attempts to eradicate them completely, and maintaining and improving software quality remains difficult even with sufficient resources, time, and prevention efforts. This research examined Random Forest, MLP, the C4.5 Decision Tree, and Bayes Net as techniques for predicting software defects. Building on extensive prior studies, it developed an ensemble model that combines them to improve the accuracy of defect prediction.

The empirical results show that the ensemble model outperforms the individual models in accuracy, precision, recall, and F1 score, indicating that combining several predictive modeling techniques improves fault detection. The ensemble method is therefore useful in software quality assurance practice, since it supports earlier detection and resolution of software defects. Given its defect detection performance, the methodology is a promising foundation for software improvement efforts in industry. Future work will extend this study to new domains and integrate multiple data sources, helping to evaluate the long-term impact of defect detection strategies on software quality assurance.