Improved YOLOv5 Lane Line Real Time Segmentation System Integrating Seg Head Network

Abstract

1. Introduction

2. Literature Review

2.1. Lane Line Detection Using Traditional Computer Vision Techniques

- Limitations of Traditional Methods: Classical techniques such as Canny edge detection and the Sobel filter work well when lighting is good and lane markings are clear, but they are highly sensitive to noise and illumination changes, which reduces their effectiveness in complex environments. The Hough transform is effective for straight lanes but struggles with curved and other non-linear lane markings. As a result, these methods are less reliable for modern autonomous driving applications, where conditions are more variable.

- Limitations in Complex Environments: Traditional methods tend to fail in complex road conditions such as sharp turns, shadows, and low light. Their reliance on fixed algorithms limits adaptability to rapid environmental changes, making them poorly suited to autonomous driving in dynamic conditions.

- Traditional vs. Deep Learning Methods: Traditional methods are efficient in simple environments with low computational demands but lack flexibility in dynamic conditions. Deep learning methods, which can automatically adapt to various environments, offer better performance in complex scenarios but require significant computational resources.
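The point about the Hough transform can be illustrated with a minimal NumPy sketch. It is not the pipeline used in this paper: the function names, the 64 × 64 synthetic images, and the one-degree angular resolution are all assumptions chosen for illustration. Each edge pixel votes for every line (rho, theta) passing through it, so a straight marking concentrates its votes in one accumulator cell while a curved marking spreads them out.

```python
import numpy as np

def hough_accumulator(edges, n_theta=180):
    """Accumulate votes in (rho, theta) space for a binary edge image."""
    h, w = edges.shape
    diag = int(np.ceil(np.hypot(h, w)))            # largest possible |rho|
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)
    acc = np.zeros((2 * diag + 1, n_theta), dtype=np.int64)
    ys, xs = np.nonzero(edges)
    for t_idx, theta in enumerate(thetas):
        # rho = x cos(theta) + y sin(theta), shifted so indices are non-negative
        rho = np.round(xs * np.cos(theta) + ys * np.sin(theta)).astype(np.int64) + diag
        acc[:, t_idx] = np.bincount(rho, minlength=2 * diag + 1)
    return acc, thetas, diag

def strongest_line(edges):
    """Return (votes, rho, theta in degrees) of the best-supported line."""
    acc, thetas, diag = hough_accumulator(edges)
    r_idx, t_idx = np.unravel_index(np.argmax(acc), acc.shape)
    return int(acc[r_idx, t_idx]), int(r_idx - diag), float(np.rad2deg(thetas[t_idx]))

# Synthetic "straight lane": the diagonal y = x (hypothetical test image).
idx = np.arange(64)
straight = np.zeros((64, 64), dtype=bool)
straight[idx, idx] = True

# Synthetic "curved lane": a parabola with the same number of edge pixels.
curved = np.zeros((64, 64), dtype=bool)
curved[idx, np.round((idx - 32) ** 2 / 17).astype(int)] = True

votes_straight, rho_straight, theta_straight = strongest_line(straight)
votes_curved, rho_curved, theta_curved = strongest_line(curved)
print("straight:", votes_straight, rho_straight, theta_straight)
print("curved:  ", votes_curved, rho_curved, theta_curved)
```

In this toy setting all 64 pixels of the straight marking fall into a single cell, whereas no single line collects all pixels of the parabola, which is one way to see why a line-based detector loses support on curved lanes.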

2.2. Advantages of Deep Learning in Lane Detection

- Main Deep Learning Models: YOLO models, fully convolutional networks (FCNs), U-Net, and ResUNet are widely used in lane detection, providing real-time detection and precise segmentation. YOLO is suited to fast detection, FCNs excel at semantic segmentation, and U-Net and ResUNet are effective in complex road scenarios.

- Summary of Deep Learning Advancements: Deep learning models, particularly YOLO, FCNs, and U-Net, perform strongly in lane detection under complex conditions. Despite their high computational demands, their accuracy and robustness make them well suited to autonomous driving applications.

- Traditional vs. Deep Learning Methods: Traditional methods excel in simple environments with limited computational resources, while deep learning methods provide better adaptability and accuracy in complex, dynamic conditions but require substantial computational power. Optimizing deep learning models for real-time performance and efficiency is a key focus for advancing autonomous driving technology.

3. Methodology

3.1. Image Preprocessing

3.1.1. Histogram Equalization

3.1.2. Median Filtering

3.1.3. White and Yellow Lane Splits

3.1.4. Lane Line Clustering

3.2. Inverse Perspective Transformation

3.2.1. Meaning of the Inverse Perspective Transformation

3.2.2. Methods of Inverse Perspective Transformation

3.3. Improvements to the YOLOv5 Network

3.3.1. Diverse Branch Block (DBB)

- Detailed Design and Optimization of the Diverse Branch Block: The DBB module’s design focuses on both the diversity of the multi-branch structure and its feature extraction capability. Each convolutional branch uses a different kernel size to capture features at a different scale: the 1 × 1 branch for channel compression, the 3 × 3 branch for extracting detailed spatial features, the 5 × 5 branch for capturing broader contextual information, and the average pooling branch for integrating global information. Residual connections ensure that features from all branches are effectively integrated, forming a richer and more comprehensive representation. Additionally, Batch Normalization (BN) and ReLU activation are used to enhance the feature representation capability, providing the necessary non-linear mappings during training.

- Implementation and Optimization of Structural Re-parameterization: The DBB module uses structural re-parameterization to convert the multi-branch structure used during training into a single convolutional structure for inference. As a result, the network’s efficiency and performance during inference remain consistent with the original YOLOv5 network, while training still benefits from the multi-branch structure.

- Parameter Configuration and Training Optimization Strategies: Several parameter and training strategies are applied to the DBB module. The kernel sizes and channel counts of each branch are adjusted to the scale and complexity of the lane line features. A combined loss function of cross-entropy loss and Dice loss improves the model’s ability to recognize lane line edges and fine details. Data augmentation techniques, such as random cropping and rotation, increase the diversity of the training data and simulate real-world driving conditions. The Adam optimizer with cosine annealing learning rate scheduling provides fast convergence and stable optimization, further improving the model’s final detection performance. Together, these choices support the DBB module’s use in real-time lane line segmentation in terms of feature extraction, robustness, and computational efficiency.

- Optimization of Training Strategies: The optimization of training strategies includes the use of advanced data augmentation techniques, as well as regularization methods like Dropout and weight decay to prevent overfitting. The optimized learning rate scheduling and use of the Adam optimizer contribute to faster convergence and better model performance.
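The structural re-parameterization step described above can be sketched in plain NumPy. This is a simplified illustration, not the authors’ implementation: it assumes three parallel convolution + BN branches (1 × 1, 3 × 3, 5 × 5) with inference-mode BN statistics, omits the average pooling branch for brevity, and uses hypothetical helper names (`conv2d`, `fuse_bn`, `pad_kernel`, `reparameterize`). The merge relies on each branch being linear, so that BN can be folded into the convolution and the zero-padded kernels can simply be summed.

```python
import numpy as np

def conv2d(x, w, b):
    """'Same' cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,)."""
    c_out, c_in, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    h, wd = x.shape[1:]
    y = np.zeros((c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Contribution of kernel tap (i, j) to every output pixel.
            y += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return y + b[:, None, None]

def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BN with fixed running statistics."""
    scale = gamma / np.sqrt(var + eps)
    return (y - mean[:, None, None]) * scale[:, None, None] + beta[:, None, None]

def fuse_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-mode BN into the preceding conv's weight and bias."""
    scale = gamma / np.sqrt(var + eps)
    return w * scale[:, None, None, None], (b - mean) * scale + beta

def pad_kernel(w, k_target):
    """Zero-pad a k x k kernel to k_target x k_target, keeping it centred."""
    p = (k_target - w.shape[-1]) // 2
    return np.pad(w, ((0, 0), (0, 0), (p, p), (p, p)))

def reparameterize(branches, k_target=5):
    """Merge parallel conv+BN branches into one k_target x k_target conv."""
    w_sum, b_sum = 0.0, 0.0
    for br in branches:
        w, b = fuse_bn(br["w"], br["b"], br["gamma"], br["beta"], br["mean"], br["var"])
        w_sum = w_sum + pad_kernel(w, k_target)
        b_sum = b_sum + b
    return w_sum, b_sum

# Hypothetical sizes and random parameters, for illustration only.
rng = np.random.default_rng(0)
c_in, c_out = 4, 6
x = rng.normal(size=(c_in, 16, 16))
branches = [{
    "w": rng.normal(size=(c_out, c_in, k, k)),
    "b": np.zeros(c_out),
    "gamma": rng.uniform(0.5, 1.5, c_out),
    "beta": rng.normal(size=c_out),
    "mean": rng.normal(size=c_out),
    "var": rng.uniform(0.5, 1.5, c_out),
} for k in (1, 3, 5)]

# Training-time structure: sum of the three conv+BN branches.
y_multi = sum(batchnorm(conv2d(x, br["w"], br["b"]),
                        br["gamma"], br["beta"], br["mean"], br["var"])
              for br in branches)

# Inference-time structure: a single merged 5x5 convolution.
w_merged, b_merged = reparameterize(branches)
y_single = conv2d(x, w_merged, b_merged)
print("max abs difference:", np.abs(y_multi - y_single).max())
```

The two outputs agree up to floating-point error, which is why the merged block can replace the multi-branch block at inference time without adding cost beyond a single convolution.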

3.3.2. Improved Feature Fusion Network Architecture Design

- Integration of the Gather-and-Distribute Mechanism: The Gather-and-Distribute (GD) mechanism is introduced to optimize the fusion of multi-scale features. The Low-GD branch handles small- to medium-sized lane line features, while the High-GD branch processes larger lane line features. The GD mechanism enhances the transfer of information across scales, ensuring the effective fusion of both fine and high-level features.

- Application of Masked Image Modeling Pre-training: To further improve the model’s performance, Masked Image Modeling (MIM) pre-training is employed. This unsupervised pre-training, based on the Masked Autoencoder (MAE) technique, enables the model to learn both global and local image features. MIM pre-training improves the model’s robustness and generalization ability, enhancing its performance in real-time lane line segmentation tasks.
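The masking step at the heart of MAE-style pre-training can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the patch size of 16, the mask ratio of 0.75, and the helper names `patchify`, `random_masking`, and `masked_mse` are illustrative choices, not values or functions reported in this paper. The encoder would see only the visible patches, and the reconstruction loss is computed on the masked patches only.

```python
import numpy as np

def patchify(img, patch):
    """Split an (H, W, C) image into (N, patch*patch*C) flattened patches."""
    h, w, c = img.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    gh, gw = h // patch, w // patch
    x = img.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(gh * gw, patch * patch * c)

def random_masking(patches, mask_ratio, rng):
    """Keep a random subset of patches; mask is True where a patch is hidden."""
    n = patches.shape[0]
    n_keep = int(n * (1 - mask_ratio))
    keep_idx = np.sort(rng.permutation(n)[:n_keep])
    mask = np.ones(n, dtype=bool)
    mask[keep_idx] = False
    return patches[keep_idx], mask, keep_idx

def masked_mse(pred, target, mask):
    """Mean squared reconstruction error over masked patches only."""
    per_patch = ((pred - target) ** 2).mean(axis=1)
    return float(per_patch[mask].mean())

rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))          # stand-in for a road image
patches = patchify(image, patch=16)      # 16 patches of 16*16*3 values
visible, mask, keep_idx = random_masking(patches, mask_ratio=0.75, rng=rng)

# A real decoder would predict the hidden patches; zeros are a placeholder here.
prediction = np.zeros_like(patches)
print(visible.shape, int(mask.sum()), masked_mse(prediction, patches, mask))
```

With these assumed settings the encoder input shrinks to a quarter of the patches, which is what makes this kind of pre-training comparatively cheap.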

4. Results and Analysis

4.1. Training Environment and Evaluation Indicators

4.2. Analysis of Experimental Results

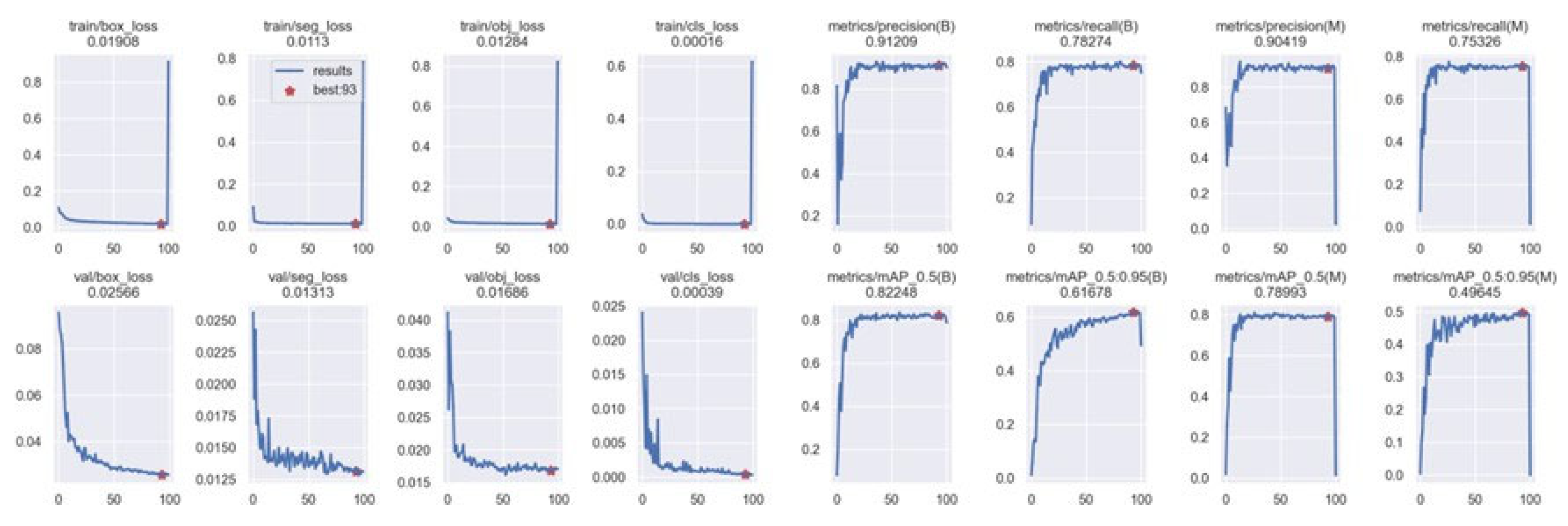

- Analysis of Ablation Experiment Results: The ablation experiment evaluates the contribution of each module to overall model performance. Table 3 reports the metrics for four models: the original YOLOv5, YOLOv5-DBB, YOLOv5-GOLD, and YOLOv5-mix. The baseline YOLOv5 achieves an F1 score of 0.821, precision of 0.904, recall of 0.753, and mAP@0.5 of 0.789, which serves as the reference for the other variants. YOLOv5-DBB, which introduces the Diverse Branch Block (DBB), slightly improves precision (0.906) and mAP@0.5 (0.795) while recall stays at 0.753, indicating that DBB enhances multi-scale feature extraction with minimal impact on recall. YOLOv5-GOLD, which incorporates an improved segmentation head, raises recall to 0.765 and mAP@0.5 to 0.803, although precision decreases slightly to 0.897. YOLOv5-mix, which combines DBB with the improved segmentation head, reaches the highest precision (0.907) and mAP@0.5 (0.804); its recall of 0.758 is above the baseline but below that of YOLOv5-GOLD. These results indicate that the DBB module mainly improves precision and mAP, while the segmentation head mainly improves recall and segmentation accuracy.

- Analysis of Results from Side-by-Side Comparison Experiments: In side-by-side comparison experiments, the improved YOLOv5 model was compared with other mainstream models, including LSKNet and VanillaNet. Table 4 shows that YOLOv5 outperforms both models across all metrics. LSKNet shows significantly poorer performance with an F1 score of 0.497, precision of 0.756, and recall and mAP@0.5 of 0.371 and 0.300, respectively. VanillaNet performs better than LSKNet but still lags behind YOLOv5, with an F1 score of 0.595 and mAP@0.5 of 0.390. In contrast, the improved YOLOv5-GOLD and YOLOv5-mix models significantly outperform LSKNet and VanillaNet, excelling in precision, recall, and mAP@0.5. These results validate the effectiveness of the DBB and improved segmentation head in enhancing both the precision and recall of YOLOv5, making it highly competitive in real-time lane line segmentation tasks.

- Specific Analysis and Discussion: The introduction of the DBB module enhances the model’s ability to extract multi-scale features, improving both precision and mAP. This allows YOLOv5 to handle lane lines of varying sizes and complexities more effectively, contributing to its robustness. The Seg head segmentation network, based on the YOLACT architecture, improves recall and segmentation quality by efficiently generating accurate lane line masks. The multi-scale feature fusion mechanism, which uses self-attention, further enhances the model’s ability to capture fine-grained features and structural information. These improvements, together with the results of the comparison experiments, support the improved performance of the enhanced YOLOv5 model in real-time lane line segmentation.
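As a quick consistency check on the reported metrics, the F1 score can be recomputed from precision and recall as F1 = 2PR / (P + R). The short sketch below does this for the four ablation models, using the precision, recall, and F1 values quoted from Table 3; the function and variable names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported F1) as quoted from Table 3.
table3 = {
    "YOLOv5":      (0.904, 0.753, 0.821),
    "YOLOv5-DBB":  (0.906, 0.753, 0.822),
    "YOLOv5-GOLD": (0.897, 0.765, 0.825),
    "YOLOv5-mix":  (0.907, 0.758, 0.825),
}

recomputed = {name: f1_score(p, r) for name, (p, r, _) in table3.items()}
for name, (p, r, reported) in table3.items():
    print(f"{name:12s} F1 from P/R = {recomputed[name]:.4f}  reported = {reported:.3f}")
```

The recomputed values agree with the reported F1 scores to within about 0.001, a gap that is plausibly explained by rounding of the published precision and recall.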

5. Conclusions and Future Work

- Optimization of DBB Module: Further refine the Diverse Branch Block (DBB) to enhance feature representation and overall model accuracy while maintaining efficiency.

- Multi-modal Data Fusion: Incorporating sensor fusion can improve segmentation performance across varied conditions.

- Robustness in Extreme Conditions: Further work is needed to optimize the model’s ability to handle severe weather and lighting conditions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Over 200,000 People Die in Traffic Accidents in China Every Year. Tencent News. 7 May 2015. Available online: http://news.qq.com/a/20150507/012185.html (accessed on 1 January 2025).

- Eng, X.; Luo, G. Intelligent traffic information systems based on feature of traffic flow. In Proceedings of the 14th World Congress on Intelligent Transport Systems, Beijing, China, 9 October 2007; p. 312.

- Liu, Y. Research on Key Algorithms for Real-Time Lane Line Segmentation System Based on Machine Vision. Master’s Thesis, Hunan University, Changsha, China, 2013.

- Ashfaq, F.; Ghoniem, R.M.; Jhanjhi, N.Z.; Khan, N.A.; Algarni, A.D. Using dual attention BiLSTM to predict vehicle lane changing maneuvers on highway dataset. Systems 2023, 11, 196.

- Huang, G.; Xu, X. Research on intelligent driving systems for automobiles based on machine vision. Microcomput. Inf. 2004, 6, 4–6.

- Assidiq, A.A.M.; Olifa, O.O.; Islam, R. Real-time lane detection for autonomous vehicles. In Proceedings of the International Conference on Computer and Communication Engineering, Kuala Lumpur, Malaysia, 13–15 May 2008; pp. 82–88.

- Sun, Y.; Li, J.; Xu, X.; Shi, Y. Adaptive multi-lane detection based on robust instance segmentation for intelligent vehicles. IEEE Trans. Intell. Veh. 2022, 8, 888–899.

- Zhou, S.; Jiang, Y.; Xie, J. A novel lane detection based on geometrical model and Gabor filter. In Proceedings of the IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 59–64.

- Gupta, R.A. Concurrent visual multiple lane detection for autonomous vehicles. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 2416–2422.

- Luo, G.; Qi, J. Lane detection for autonomous vehicles based on computer vision. J. Beijing Polytech. Coll. 2020, 19, 34–37.

- Xuan, H.; Liu, H.; Yuan, J.; Qing, L.; Niu, X. A robust multi-lane line detection algorithm. Comput. Sci. 2017, 44, 305–313.

- Deng, Y.; Pu, H.; Hua, X.; Sun, B. Research on lane line detection based on RC-DBSCAN. J. Hunan Univ. (Nat. Sci.) 2021, 48, 85–92.

- Gao, X.; Zhang, X.; Lu, Y.; Huang, Y.; Yang, L.; Xiong, Y.; Liu, P. A survey of collaborative perception in intelligent vehicles at intersections. IEEE Trans. Intell. Veh. 2024, 1–20.

- Zhu, Y. Research on Lane Marking Detection Method Based on RHT. Master’s Thesis, Dalian University of Technology, Dalian, China, 2018.

- Chai, H. Low contrast worn lane marking extraction method based on phase feature enhancement. Shanxi Transp. Technol. 2023, 5, 111–114+119.

- Wu, Y.; Liu, L. Research progress on vision-based lane line detection methods. Chin. J. Sci. Instrum. 2019, 40, 92–109.

- Ye, Y. Intelligent detection concept of damaged road markings based on machine vision. Highways Automot. Transp. 2016, 3, 55–57.

- Guan, X.; Jia, X.; Gao, Z. Selection of region of interest and adaptive threshold segmentation in lane detection. Highway Traffic Technol. 2009, 26, 104–108.

- Xu, Y.; Li, J. Autonomous navigation method for lane detection based on sequential images. Meas. Control Technol. 2023, 42, 18–23.

- Bai, B.; Han, J.; Pan, S.; Lin, C.; Yuan, H. Lane detection method based on dual-threshold segmentation. Inf. Technol. 2013, 37, 43–45.

- Wang, X.; Huang, H. Research on a lightweight lane detection algorithm in complex lane-changing scenarios. Transm. Technol. 2023, 37, 3–15.

- Du, L.; Lyu, Y.; Wu, D.; Luo, Y. Fast lane detection method in complex scenarios. Comput. Eng. Appl. 2023, 59, 178–185.

- Hong, S.; Zhang, D. A review of lane detection technology based on semantic information processing. Comput. Eng. Appl. 2024, 1–20. Available online: http://kns.cnki.net/kcms/detail/11.2127.tp.20241015.1739.010.html (accessed on 1 September 2025).

- Liu, Y.; Yang, H.; Wang, Q. Lane detection method for complex lighting environments. Laser J. 2024, 45, 94–99.

- Xu, X.; Zhao, H.; Fan, B.; Duan, M.; Li, G. Lane detection method for complex environments based on dual-branch segmentation network. Mod. Electron. Tech. 2024, 47, 87–94.

- Wang, J.; Qiu, H.; Yang, B.; Zhang, W.; Zhong, J. Deployment of lane line detection, object detection, and drivable area segmentation algorithm based on YOLOP. Sci. Technol. Innov. Prod. 2024, 45, 92–97.

- Wu, L.; Zhang, Z.; Ge, C.; Yu, J. Lane detection algorithm based on improved SCNN network. Comput. Moderniz. 2024, 7, 87–92.

- Jiang, Y.; Zhang, Y.; Dong, H.; Zhang, X.; Wang, M. Lane detection under low-light conditions based on instance association. J. Syst. Simul. 2024, in press.

- Yao, H.; Zhang, H.; Guo, Z. Lane detection algorithm under complex road conditions. Comput. Appl. 2020, 40, 166–172.

| Method/Algorithm | Findings/Methods | Limitations/Gaps | Source |
|---|---|---|---|
| Edge Detection and Hough Transform | The system reliably detects multiple lanes in real-time by identifying lane positions and required steering angles through a predictive control framework. | The algorithm faces challenges in complex urban environments with irregular or poorly marked lanes. | (Assidiq, Olifa, & Islam, 2008 [6]) |
| Real-time lane segmentation methods | Discusses methods using both traditional and machine learning approaches for lane segmentation. | Difficulty in maintaining high detection accuracy in environments with heavy traffic or poor road markings. | (Quanguang & Hu, 2009 [7]) |
| Geometrical model and Gabor filter-based lane detection | This method improves robustness in lane detection, especially on highways. | Struggles in highly complex road settings with irregular markings or obstructions. | (Zhou, Jiang, & Xie, 2010 [8]) |
| Monocular Vision System | The system is robust against shadows and variations in road textures, and it detects both continuous and dashed lines. | Performance is reduced in extreme weather or irregular lane markings. | (Gupta, 2010 [9]) |
| Edge Detection based on Lane Width + Parabolic Fitting + RANSAC (Random Sample Consensus) | Successfully tracks lanes using grayscale processing and RANSAC-based parabolic fitting. | High computational cost; sensitive to lighting changes and noise; relies on hardware for real-time performance. | (Luo & Qi, 2020 [10]) |
| Multi-condition Lane Feature Filtering + Kalman Filter + Adaptive ROI (Region of Interest) | Accurate and robust multi-lane detection in complex environments; real-time tracking and prediction using Kalman filter. | Sensitive to extreme weather conditions and lighting variations; high computational demands for real-time performance. | (Xuan, Liu, Yuan, Li, & Niu, 2017 [11]) |
| RC-DBSCAN Clustering + Kalman Filter + Feature Fusion | Improved lane detection in complex conditions with better robustness and real-time performance compared to traditional clustering methods. | High computational cost for image processing; sensitivity to lighting and complex road environments. | (Deng, Pu, & Hua, 2021 [12]) |
| Clustering-based Adaptive Inverse Perspective Transformation + Kalman Filter | Improved lane detection accuracy on sloped roads; enhanced robustness with Kalman filter for frame stability. | Computational cost; sensitive to lighting and complex road environments. | (He, Chang, Jiang, & Zhang, 2020 [13]) |
| Randomized Hough Transform (RHT) + Filtering | Improved lane line detection accuracy and reduced false detections using angle-based filtering; enhanced real-time performance through optimized point selection. | Computational cost for complex scenarios; sensitivity to noise and lighting variations. | (Zhu, 2018 [14]) |
| Phase Feature Enhancement + Hough Transform | Effective detection of worn lane markings under low contrast and uneven lighting; improved edge detection accuracy with phase consistency. | Sensitive to noise; requires preprocessing for denoising; high computational demand for phase analysis. | (Chai, 2023 [15]) |
| Feature-based, Model-based, and Learning-based Methods | Summarized the strengths and weaknesses of lane detection techniques, highlighting their progress over 20 years; included comparisons of datasets and performance indicators. | Challenges include variability in road conditions, lighting changes, shadows, and robustness across different scenarios. | (Wu & Liu, 2019 [16]) |
| Machine Vision for Road Marking Damage Detection | Proposed a machine vision method to detect damaged road markings, achieving effective results in simulation tests. | Challenges include handling varying environmental conditions and ensuring real-time detection in diverse road scenarios. | (Ye, 2016 [17]) |
| ROI Selection + Adaptive OTSU Threshold Segmentation | Effective for detecting discontinuous and worn lane markings, enhancing accuracy and robustness in complex environments. | Limited by environmental changes and computational demands for processing adaptive thresholding. | (Guan, Jia, & Gao, 2009 [18]) |
| HSV Image Transformation + Vertical Oblique OTSU | Enhanced real-time lane detection with robust lane identification under varying conditions; improved | Computational complexity due to multiple transformations; sensitive to environmental changes like light- | (Xu & Li, 2023 [19]) |

| Method/Algorithm | Findings/Methods | Limitations/Gaps | Source |
|---|---|---|---|
| RepVGG-A0 + Cross-layer Feature Fusion | Improved lane detection accuracy in complex lane-changing scenarios; achieved high real-time detection speed with 132 FPS (frames per second), up to 220 FPS with TensorRT. | Sensitive to shadows and varying lighting conditions; requires efficient hardware for real-time processing. | (Wang & Huang, 2023 [21]) |
| Fractal Residual Structure + Runge–Kutta Method + Row Anchor Detection | Improved lane detection accuracy and speed in complex traffic scenarios; achieved real-time performance with high accuracy using fractal modules. | Sensitive to complex road conditions and requires preprocessing for optimal performance; computationally demanding. | (Du, Lyu, Wu, & Luo, 2023 [22]) |
| Semantic Information Processing (Segmentation, Fusion, Enhancement, Modeling) | Reviewed 84 advanced algorithms categorized by semantic processing type; provided insights into the strengths and weaknesses of each approach. | Difficulty handling complex environmental challenges and high computational demands for real-time performance. | (Hong & Zhang, 2024 [23]) |
| Dynamic Region of Interest + Ant Colony Algorithm | Enhanced lane detection accuracy under complex lighting by dynamically adjusting ROI and using an ant colony algorithm for edge detection. | Computational cost due to complex transformations; sensitive to extreme lighting variations and noise. | (Liu, Yang, & Wang, 2024 [24]) |
| Two-branch Segmentation Network (LaneNet + H-Net) + Adaptive DBSCAN | Achieved high detection accuracy in complex conditions by using a dual-branch network for semantic and pixel embedding; optimized with adaptive DBSCAN and inverse perspective transformation. | Sensitive to high curvature lanes and computationally demanding for real-time performance. | (Xu, Zhao, Fan, Duan, & Li, 2024 [25]) |
| YOLOP (Panoptic Driving Perception) + Embedded Platform Deployment | Achieved efficient multi-task processing for lane detection, obstacle detection, and drivable area segmentation in real-time; optimized for embedded platforms. | High computational demand; requires significant data and training for accurate performance in diverse conditions. | (Wang, Qiu, Yang, Zhang, & Zhong, 2024 [26]) |
| Improved SCNN + PSA Attention Module + VGG-K Network (Visual Geometry Group) | Achieved enhanced lane detection accuracy in complex conditions using improved SCNN with VGG-K and PSA modules; strong performance on the CULane dataset. | Sensitive to extreme lighting and highly occluded lanes; requires further optimization for real-time deployment. | (Wu, Zhang, Ge, & Yu, 2024 [27]) |
| Instance Association Net (IANet) with Mask-based Feature Separation + Position Encoding | Enhanced low-light lane detection using instance association and position encoding; outperformed existing methods with a 71.9% F1 score in night scenes on CULane dataset. | High computational cost; sensitive to extreme lighting variations and occlusions in complex environments. | (Jiang, Zhang, Dong, Zhang, & Wang, 2024 [28]) |
| Multi-scale Feature Fusion + ROI Optimization + Adaptive Thresholding | Improved lane detection accuracy on complex road conditions using feature fusion and adaptive thresholds; demonstrated high robustness in varied weather. | Computationally intensive; affected by extreme lighting and requires high-quality input images for optimal results. | (Yao, 2023 [29]) |

| Comparisons | Traditional Computer Vision Methods | Deep Learning Methods |
|---|---|---|
| Applicable Environment | Simple, stable environments. | Complex, dynamic environments. |
| Adaptability and Extension | Limited flexibility, difficult to adapt to diverse settings. | Highly flexible and can be adapted to a range of settings. |
| Computational Resource Demand | Low resource demand, suitable for limited hardware. | High resource demand, usually requires GPU support. |
| Performance | Stable in simple scenarios, reduced accuracy in complex conditions. | High accuracy and robustness in complex, dynamic conditions. |

| Network Models | F1 | Precision | Recall | mAP@0.5 |
|---|---|---|---|---|
| YOLOv5 | 0.821 | 0.904 | 0.753 | 0.789 |
| YOLOv5-DBB | 0.822 | 0.906 | 0.753 | 0.795 |
| YOLOv5-GOLD | 0.825 | 0.897 | 0.765 | 0.803 |
| YOLOv5-mix | 0.825 | 0.907 | 0.758 | 0.804 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Feilong, Q.; Khan, N.A.; Jhanjhi, N.Z.; Ashfaq, F.; Hendrawati, T.D. Improved YOLOv5 Lane Line Real Time Segmentation System Integrating Seg Head Network. Eng. Proc. 2025, 107, 49. https://doi.org/10.3390/engproc2025107049