Abstract

Recognizing actions in video is a complex task that requires explicitly capturing both spatial and temporal information. This paper compares several advanced techniques for action recognition on the UCF101 dataset. We examine two-stream convolutional networks, 3D convolutional networks, long short-term memory networks, two-stream inflated 3D convolutional networks, attention mechanisms, and hybrid models. For each approach, we review the method and architecture along with its advantages and disadvantages. Our experiments report the performance of these approaches on UCF101, with a focus on the tradeoffs between computational efficiency, data requirements, and recognition accuracy.

Keywords:

action recognition; UCF101; Two-Stream CNN; 3D CNN; LSTM; I3D; attention mechanisms; hybrid models

1. Introduction

Action recognition is an important topic in computer vision research that aims to detect and classify human activities in video data [1]. The detailed movement of the human body, changes in perspective and background, and the need to capture spatial and temporal information simultaneously make this task inherently complex. This paper analyzes six categories of action recognition models:

- Two-Stream Convolutional Networks (2D + Optical Flow Streams): This method uses two separate convolutional neural networks (CNNs) that operate in parallel, one processing spatial information in RGB (red, green, blue) frames and the other processing temporal information in optical flow.

- 3D Convolutional Networks (C3Ds): Instead of traditional 2D CNNs, this method employs 3D convolutional neural networks in which each kernel is a 3D volume (width, height, and depth), with the depth axis spanning consecutive frames.

- Long Short-Term Memory (LSTM) Recurrent Networks: LSTMs are aimed at modeling long-range temporal dependencies in sequential data. Combining LSTMs with CNNs allows them to properly deal with video sequences of varying lengths.

- Two-Stream Inflated 3D Convolutional Networks (I3Ds): The idea is to inflate the 2D filters of pre-trained convolutional neural networks (CNNs) into three dimensions, so that convolutions can be applied to 3D (spatiotemporal) input tensors.

- Attention Mechanisms: Attention mechanisms are key to obtaining more accurate and understandable models by directing them to the relevant regions of interest.

- Hybrid Models: These models combine multiple types or forms of signals such as RGB frames, depth data, and skeleton joints to better represent human activities.

2. Literature Review

Evolution of Action Recognition Techniques

Early methods relied on representations such as motion history images (MHIs) or histograms of oriented gradients (HOGs) to extract low-level features, and applied classifiers such as SVMs or HMMs to recognize actions based on predefined patterns [2].

With the advent of deep learning, specifically convolutional neural networks (CNNs), action recognition has advanced significantly. CNNs can learn complex features directly from data, which improves recognition accuracy [3].

- Single-Stream CNNs: Initial attempts utilized single-stream CNN architectures to capture spatial dimensions of sequences [4].

- Two-Stream Convolutional Networks (2D + Optical Flow): To capture both appearance changes and motion cues, these networks add a parallel optical-flow branch trained on explicit motion information such as dense optical flow.

- 3D Convolutional Networks (C3D): To capture temporal dynamics, researchers extended CNNs to 3D so that actions are modeled across frames.

- Long Short-Term Memory (LSTM) Networks: LSTMs and other recurrent neural networks (RNNs) have shown promising results in modeling temporal dependencies.

- Attention Mechanisms: Attention mechanisms improve action recognition models by focusing on relevant regions of interest within images and videos.

- Hybrid Models: These models integrate multiple modalities such as RGB features, depth data, and skeletal information to improve recognition accuracy [5].

3. Methodologies and Architectures

3.1. Two-Stream Convolutional Networks (2D + Optical Flow Streams)

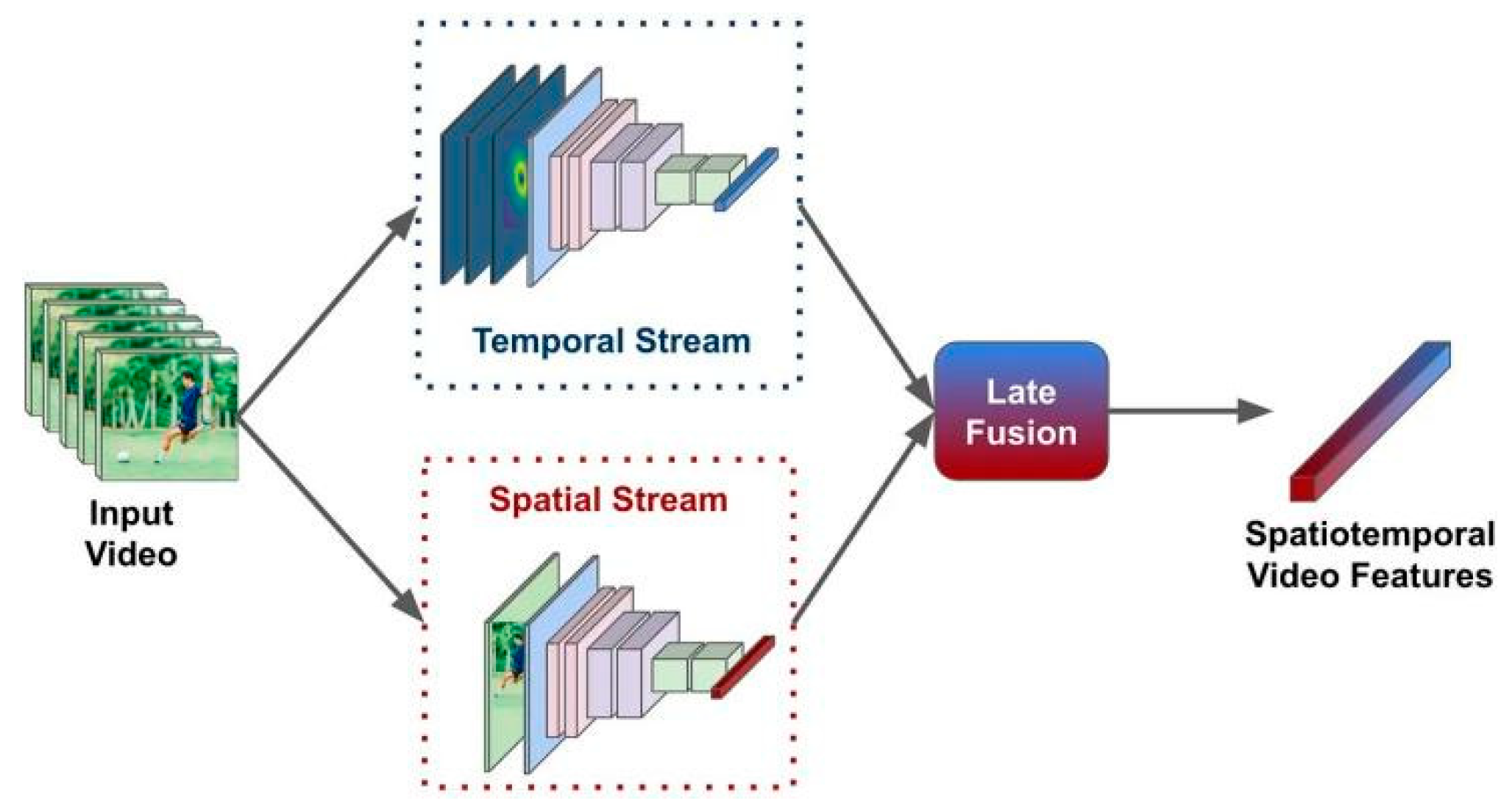

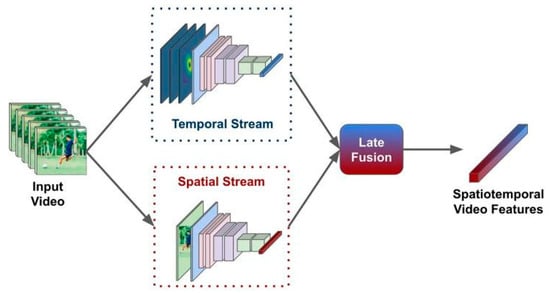

Idea: The basic principle of the two-stream CNN is to use two streams, namely an appearance stream and a motion stream. The appearance visible in RGB frames, such as those shown in the images above, and the motion between frames are the two kinds of features that the two-stream CNN captures. Human movement and action recognition focuses on the features produced by different parts of the human body in motion; the three main ones are the face, hands, and knees. Because motion also has a temporal dimension, temporal features are vital as well. The motion model builds on visual features together with a dynamic model to find correspondences in video sequences [6]. The two streams are distinct, yet they cooperate to represent the input well. Arcades, squares, and arrows are the three spaces wherein the events take place. This module comes after the optical flow computation and is used to improve performance on specific actions [7]. To investigate the variation in intensity, I = L/A, the change in luminance can be determined by the formula. The Plane tree and Buckeye trees planted there changed the scenario of the garden. While spatial information and the resulting movement can be seen in the RGB frames, the speed of movement should not be disregarded.

Spatial Stream (RGB): This stream processes RGB frames to capture spatial information. It typically consists of convolutional layers followed by pooling and possibly fully connected layers.

Temporal Stream (Optical Flow): This stream processes optical flow to capture motion information between frames.

Fusion Layer: Information from both streams is integrated, usually by late fusion techniques in which the spatial and temporal outputs are combined. Figure 1 shows the temporal and spatial fusion in the CNN.

Figure 1. Architecture of two-stream convolutional networks (2D CNN on RGB frames + optical flow streams).

Advantages: Dense motion on and around the moving object is recorded from the first frame onward. The approach is integrated, covering spatial and temporal aspects together.

Disadvantages: Optical flow must be computed, which is computationally expensive. The approach is also sensitive to noise in the video data, and fusion strategies can add complexity depending on the specific configuration chosen.
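As a rough illustration of late fusion, the sketch below averages the class probabilities produced by the two streams. The function names, the equal default weighting, and the toy scores are assumptions made for illustration; weighted averaging is only one common late-fusion rule and is not necessarily the one used in the experiments.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def late_fusion(spatial_scores, temporal_scores, spatial_weight=0.5):
    """Weighted average of the per-class probabilities of the two streams."""
    if len(spatial_scores) != len(temporal_scores):
        raise ValueError("both streams must score the same set of classes")
    p_spatial = softmax(spatial_scores)
    p_temporal = softmax(temporal_scores)
    w = spatial_weight
    return [w * ps + (1.0 - w) * pt for ps, pt in zip(p_spatial, p_temporal)]

# Toy example with three classes (hypothetical scores, not real model output).
fused = late_fusion([2.0, 1.0, 0.1], [0.5, 2.5, 0.2])
predicted_class = max(range(len(fused)), key=fused.__getitem__)
```

In this toy case the spatial stream prefers class 0 and the temporal stream prefers class 1; after fusion the more confident temporal prediction wins.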

3.2. Three-Dimensional Convolutional Networks (C3D)

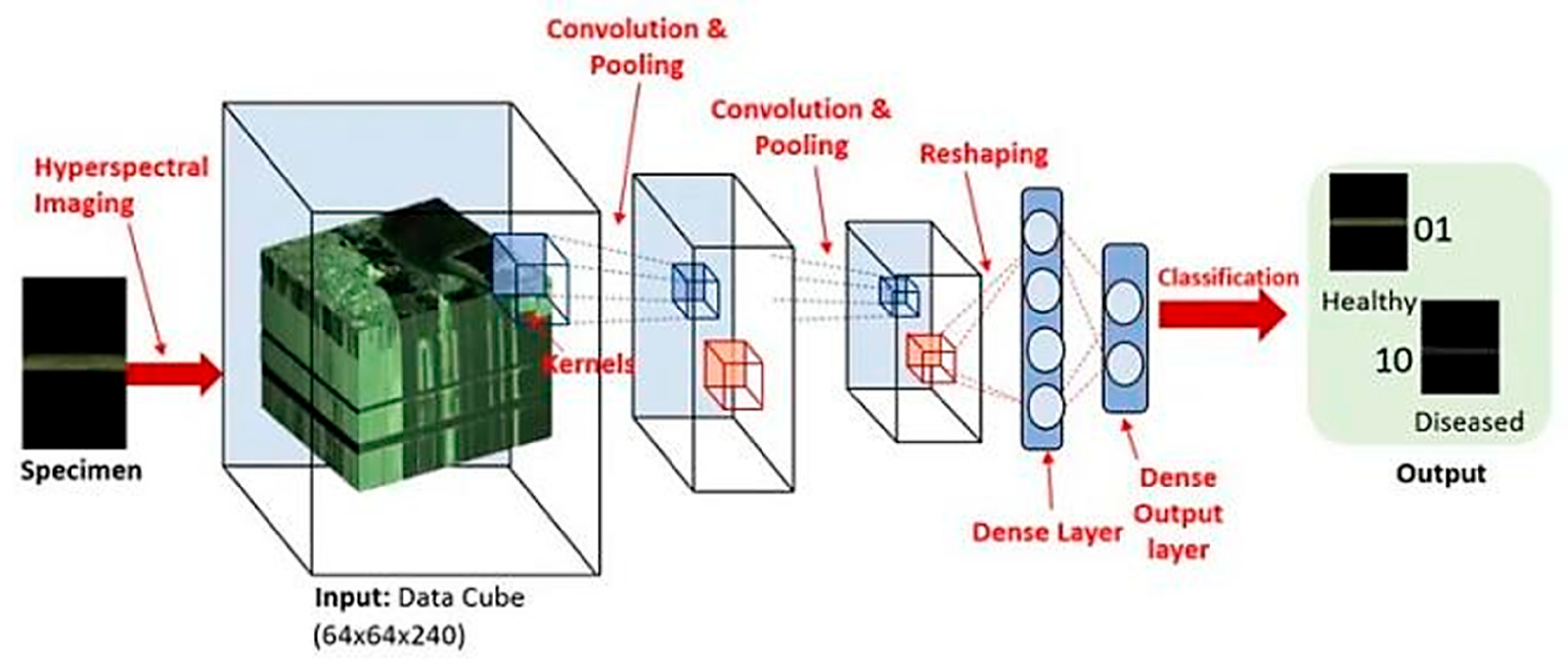

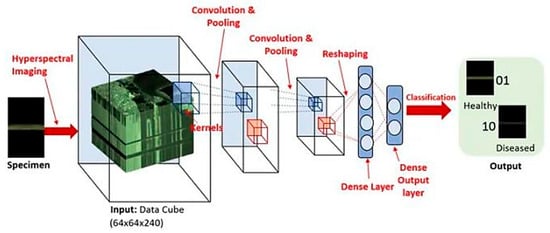

C3D is a 3D convolutional neural network that extends traditional 2D CNNs to three dimensions, adding the temporal dimension naturally into the convolutional layers. As a result, the model can encode both spatial and temporal information, which are inherently correlated for moving images, in a seamless manner.

3D Convolutional Layers: These layers treat the input as a sequence of space–time volumes, so convolutions are performed over two spatial dimensions and one temporal dimension. In this way, spatial and temporal patterns are captured jointly. Figure 2 shows the layers in a 3D CNN.

Figure 2. Three-dimensional convolutional networks (C3D).
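To make the idea of convolving over two spatial dimensions and one temporal dimension concrete, here is a minimal sketch of a single-channel 3D convolution written with plain nested lists. The function name, the 3x3x3 kernel size, and the toy clip are illustrative assumptions; a real C3D layer has many channels, learned weights, and padding.

```python
def conv3d_valid(clip, kernel):
    """Naive single-channel 3D convolution (cross-correlation), stride 1, no padding.

    clip   : nested list indexed as clip[t][y][x]
    kernel : nested list indexed as kernel[dt][dy][dx]
    """
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                # The kernel slides over time as well as over height and width.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += clip[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out

# A 4-frame, 4x4 clip of ones convolved with a 3x3x3 kernel of ones.
clip = [[[1.0] * 4 for _ in range(4)] for _ in range(4)]
kernel = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]
features = conv3d_valid(clip, kernel)
# The output has shape (2, 2, 2), and each entry is the sum of 27 ones.
```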

Video segments are fed through the convolutional layers, where spatiotemporal features are extracted by the 3D convolutions. The inner layers then process the video using well-known neural network principles [8], such as average pooling and backpropagation. Although these layers replace the branch scores with question-specific scores, the remaining elements of the shared architecture stay intact. An LSTM cell then manages the sequence of temporal hidden states by adjusting the weights of the input, forget, hidden, and output gates. The result is propagated through the time dimension, producing a 2D shape that is reshaped through convolution. In the next step, the decoder network takes this information and learns to regenerate the original features.

3.3. Long Short-Term Memory (LSTM) Recurrent Networks

Long short-term memory (LSTM) RNNs are a type of neural network specifically designed to capture long-term dependencies in a sequence, no matter how far apart the dependent elements are [9]. In this way, LSTMs mitigate the vanishing/exploding gradient problem in longer sequences.
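The gating that lets an LSTM carry information over long spans can be sketched as a single time step. The code below uses the standard LSTM equations with list-based vectors; the parameter layout (a dictionary mapping each gate name to a `(W, U, b)` tuple) and the helper names are assumptions for illustration, not the configuration used in the experiments.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b for list-based matrices and vectors."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + bias
        for W_row, U_row, bias in zip(W, U, b)
    ]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps a gate name to its (W, U, b) tuple."""
    i = [sigmoid(v) for v in affine(*params["input"], x, h_prev)]
    f = [sigmoid(v) for v in affine(*params["forget"], x, h_prev)]
    o = [sigmoid(v) for v in affine(*params["output"], x, h_prev)]
    g = [math.tanh(v) for v in affine(*params["candidate"], x, h_prev)]
    # The cell state is updated additively, which helps gradients survive
    # across many time steps.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# Toy check with one hidden unit and all-zero weights: every gate is 0.5
# and the candidate is 0, so the cell state is simply halved.
zero = ([[0.0]], [[0.0]], [0.0])
params = {name: zero for name in ("input", "forget", "output", "candidate")}
h, c = lstm_step([1.0], [0.0], [1.0], params)
```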

Self-Attention and Transformer Method: During training, this method attends only to the elements of the sequence that are important to each other, and it still achieves better performance than the original model.

As the most effective post-processing method, WaveNet is applied to generate the waveforms of the speech signals from its output and to smooth the pitches, so that the sound is more melodic. The music conversation system is capable of communicating directly with the user in several possible ways: a lyric, a caption, or an image. Each of these approaches may be utilized for drug discovery.

Advantages: The time alignment of sequences is not affected by the supplementary channels. The tracking method (called track) is an important step in the algorithm, as it begins with almost no background noise. The last model used in the training method is built with one LSTM cell and one dense layer. The GTV (Generalized Tensor Value) is the sparsest N tensor that can represent this repetitive flow as the Green’s function matrix for each interaction. Each PPG (photoplethysmography) channel for the patients is consistently measured and the BP readings are repeated in 1019 data points.

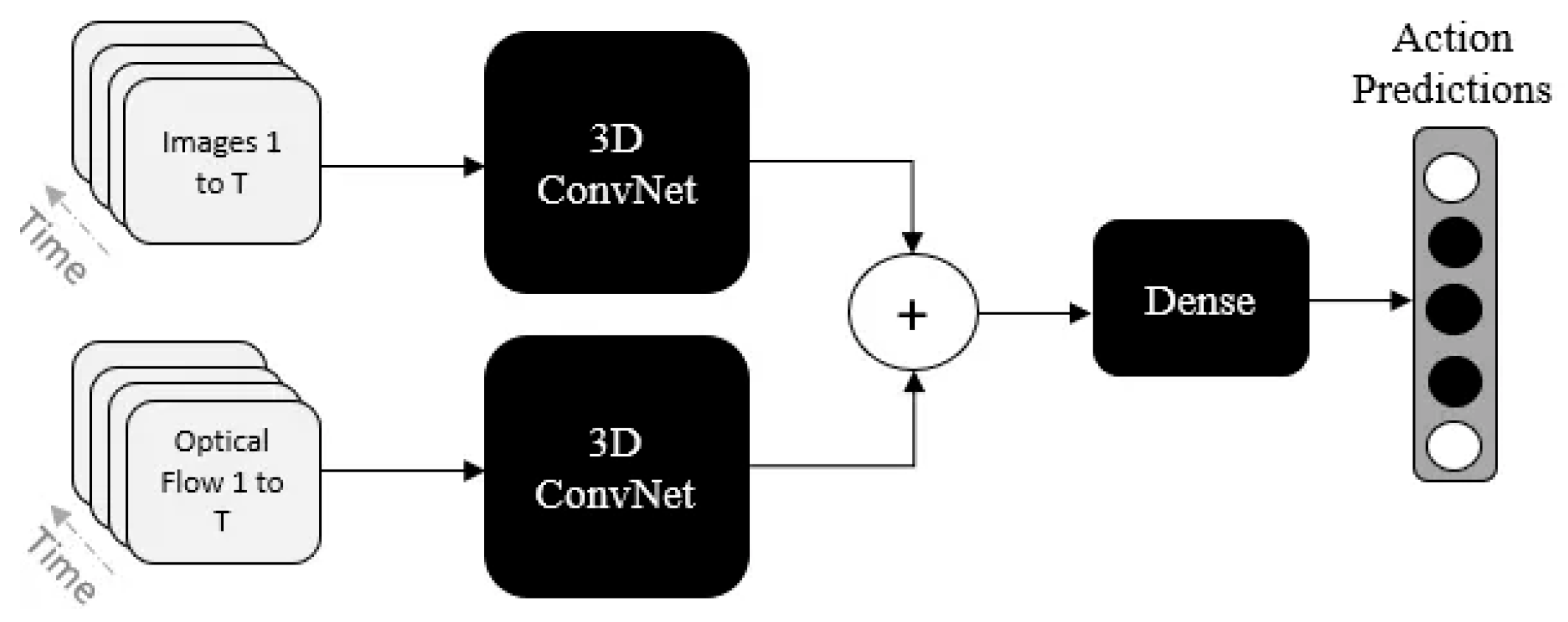

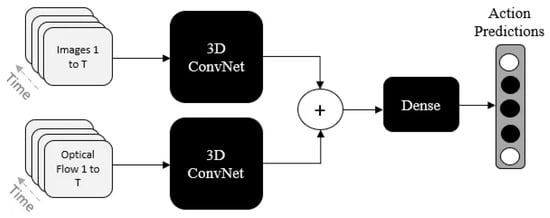

Inflated 3D Filters: The 2D filters of pre-trained CNNs are inflated into 3D, which enables the network to reuse features already learned from image datasets.

Integration of 2D Pre-trained Models: The model uses weights from 2D pre-trained CNNs, for example InceptionV1, to initialize the 3D convolutional layers, which are then fine-tuned.
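One common way to carry 2D weights into a 3D filter, as described for I3D by Carreira and Zisserman, is to repeat the 2D kernel along the time axis and divide by the temporal depth. The sketch below assumes that scheme; the function name and the Sobel-like stand-in filter are illustrative and are not taken from the experiments.

```python
def inflate_kernel(kernel_2d, time_depth):
    """Inflate a 2D kernel (kh x kw) into a 3D kernel (time_depth x kh x kw).

    The 2D weights are repeated along the time axis and divided by
    time_depth, so a clip made of identical frames gives the same response
    as the original 2D filter gives on a single frame.
    """
    if time_depth < 1:
        raise ValueError("time_depth must be at least 1")
    return [
        [[w / time_depth for w in row] for row in kernel_2d]
        for _ in range(time_depth)
    ]

kernel_2d = [[1.0, 0.0, -1.0],
             [2.0, 0.0, -2.0],
             [1.0, 0.0, -1.0]]  # a Sobel-like filter used as a stand-in
kernel_3d = inflate_kernel(kernel_2d, time_depth=3)

# Check on a static clip: the same 3x3 patch repeated for three frames.
patch = [[3.0, 1.0, 2.0], [0.0, 5.0, 4.0], [7.0, 6.0, 8.0]]
response_2d = sum(k * p for kr, pr in zip(kernel_2d, patch) for k, p in zip(kr, pr))
response_3d = sum(
    k * p
    for frame_kernel in kernel_3d
    for kr, pr in zip(frame_kernel, patch)
    for k, p in zip(kr, pr)
)
```

On the static clip the two responses agree up to floating-point rounding, which is why the inflated network starts from behavior close to that of the pre-trained image model.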

Advantages: I3D builds on the strength of pre-trained models, which already contain hierarchical features learned end-to-end from large-scale image datasets. Compared with training from scratch, this lowers the amount of labeled video data needed.

Disadvantages: A large number of labeled videos is still needed for fine-tuning and for accurate action recognition. Choosing suitable learning rates and regularization parameters can also be difficult when retraining TCN (Temporal Convolutional Network) models.

Figure 3 presents the I3D architecture, which captures spatiotemporal features for efficient video action recognition [10,11,12].

Figure 3. I3D architecture, enabling efficient video action recognition.

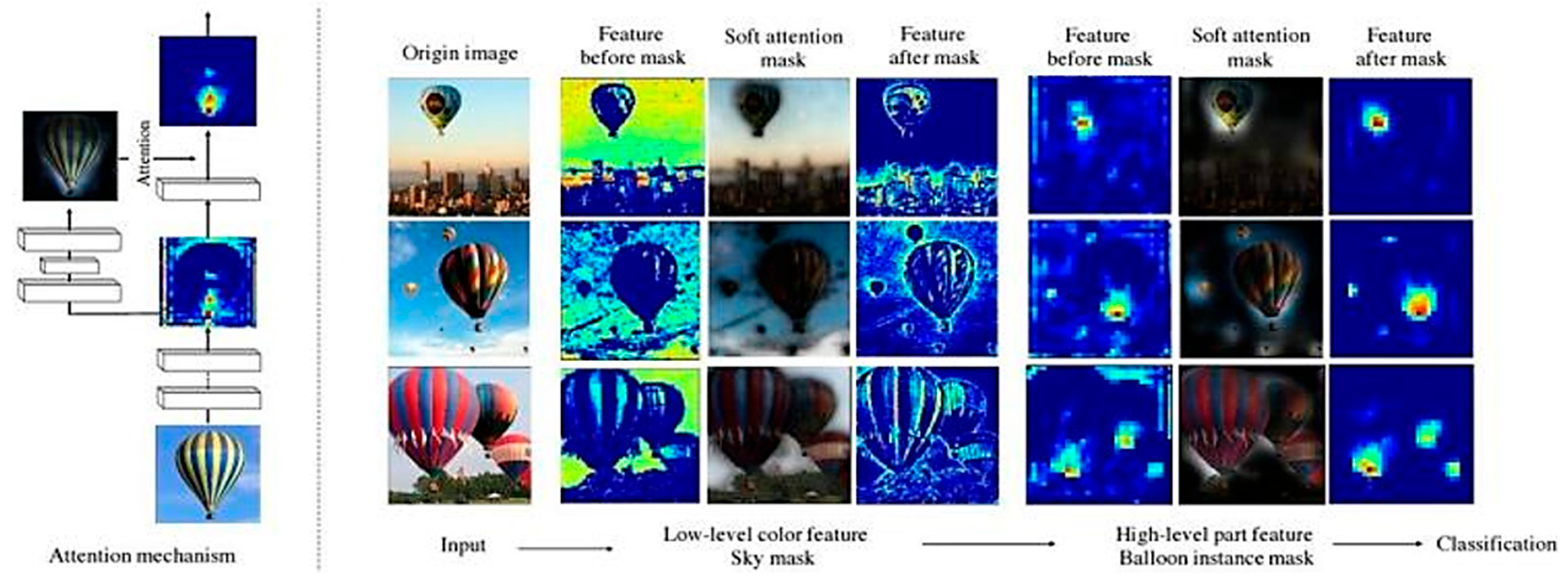

3.4. Attention Mechanisms

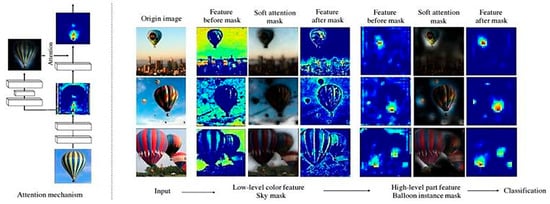

Idea: Attention mechanisms improve action recognition by directing the model only to the regions of the frame sequence that contain relevant spatiotemporal events. This selective attention supports both the feature extraction process and classification accuracy. Figure 4 depicts the architecture of the attention mechanism used in this study.

Figure 4. Attention mechanism architecture [13].

Architecture:

- Spatial Attention: Highlights the regions of interest within each video frame, including those that contain static cues such as backgrounds, so that the relevant features of the scene are extracted.

- Temporal Attention: Highlights the most significant time periods of the video sequence, which capture the motion dynamics.

Advantages: Attention heightens the discriminative capability of the model. It also improves adaptability and the ability to suppress background disturbances and irrelevant objects, which improves recognition accuracy.

Disadvantages: Attention, whether learned with supervised or unsupervised methods, must be carefully designed and tested so that model performance holds up both when the focus is narrow and when a wider search for scene semantics is needed.
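Temporal attention can be sketched as a softmax over per-frame relevance scores followed by a weighted sum of the frame features. In the sketch below the scores are supplied by hand; in a trained model they would come from a small learned scoring network. The function name and the toy values are assumptions for illustration.

```python
import math

def temporal_attention_pool(frame_features, frame_scores):
    """Softmax the per-frame scores and return (weights, weighted feature sum)."""
    peak = max(frame_scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in frame_scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(frame_features[0])
    pooled = [
        sum(w * feat[d] for w, feat in zip(weights, frame_features))
        for d in range(dim)
    ]
    return weights, pooled

# Three frames with 2-dimensional features; the third frame is scored
# as most relevant (hypothetical scores).
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = [0.0, 0.0, 2.0]
weights, pooled = temporal_attention_pool(features, scores)
```

Spatial attention follows the same pattern, with the softmax taken over locations within a frame instead of over frames.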

3.5. Hybrid Models

Hybrid models combine different modalities, such as RGB frames, depth information, and skeleton joints, to strengthen action recognition. The objective of these models is to capture diverse aspects of human actions by utilizing complementary information sources.

Fusion of Modalities: Features from different sources are combined with the aid of networks tailored to each task (e.g., convolutions for RGB, RNNs for skeleton).

Multimodal Learning: The model is designed to exploit complementary features from different modalities, which makes it more accurate and helps it generalize better.

Advantages: Hybrid models can work with varying inputs even when environmental conditions and the nature of the actions differ. They extract diverse information from multiple sources, which improves feature representation.

Disadvantages: Hybrid models need multimodal datasets as well as careful integration of the different modalities during training and inference. They also bring greater complexity and computational overhead, which calls for effective architecture design and optimization techniques.

Example Architecture: Hybrid model architecture (specific examples can vary widely).

4. Experimental Setup

This section describes how the different action recognition methodologies and network structures were implemented, tested, and assessed. It covers the dataset description, data preprocessing steps, model configurations, hyperparameters, and the metrics used to evaluate the models.

4.1. Dataset

UCF101 Dataset: UCF101 consists of 13,320 video clips across 101 action categories and has been a widely used benchmark for action recognition for more than a decade. Each video is labeled with the activity the person is performing, and each class corresponds to a visual concept rather than a single specific instance of a human activity. With 101 classes, the classification task is inherently complex, which has typically led to the use of deep learning models. We use split 1 for training and testing: the training set contains 9537 videos and the test set contains the remaining 3783 videos, and no video appears in both sets. The class distribution of the training data is monitored to detect imbalanced classes; where imbalance is found, class weights are applied during training so that all classes contribute to the loss.
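As a sketch of how class weights can be derived from the class distribution, the code below uses the common inverse-frequency ("balanced") rule, n_samples / (n_classes * class_count). The function name, the choice of this particular rule, and the toy label counts are assumptions; the exact weighting used in the experiments is not specified.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: rarer classes receive larger weights."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * k) for c, k in counts.items()}

# Toy label list with made-up counts (not the real UCF101 distribution).
labels = ["Archery"] * 6 + ["Biking"] * 3 + ["Diving"] * 1
weights = balanced_class_weights(labels)

# Sanity check on the split sizes quoted in the text.
train_videos, test_videos = 9537, 3783
total_videos = train_videos + test_videos
```

With these weights, each sample's loss is multiplied by the weight of its true class, so a class with few videos is not drowned out by the larger ones.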

4.2. Preprocessing

Frame Sampling: An interval of two frames was set for sampling.
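A minimal sketch of this sampling step is shown below. It reads "an interval of two frames" as keeping every second frame, which is an assumption, and the function name is hypothetical.

```python
def sample_frame_indices(num_frames, interval=2):
    """Return the indices of the frames kept when sampling every `interval` frames."""
    if interval < 1:
        raise ValueError("interval must be at least 1")
    return list(range(0, num_frames, interval))

# For a 10-frame video, frames 0, 2, 4, 6, and 8 are kept.
indices = sample_frame_indices(10, interval=2)
```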

4.3. Model Configurations

Two-Stream Convolutional Networks:

Spatial Stream (RGB): A pre-trained ResNet50 model is used for RGB frame feature extraction.

Temporal Stream (Optical Flow): This stream has a similar architecture to the spatial stream, with optical flow as its input.

Fusion Layer: The features of the two streams are combined using a fully connected layer.

3D Convolutional Networks (C3Ds):

The C3D architecture consists of 3D convolutional layers, pooling layers, and fully connected layers.

Video clips are converted into 16-frame sequences, which are used to capture spatiotemporal features.

CNN + LSTM Networks:

CNN Feature Extractor: A pre-trained ResNet50 model extracts spatial information from video frames.

LSTM Layers: The LSTM layers process the sequence of CNN features in order, which allows temporal dependencies to be captured.

Fully Connected Layer: This layer classifies the action on the basis of the combined spatial and temporal features.

Two-Stream Inflated 3D Convolutional Networks (I3Ds):

I3D is built by inflating pre-trained InceptionV1 2D filters into 3D filters.

Applying this model to the UCF101 dataset yielded a performance boost for action recognition.

Attention Mechanisms:

Both spatial and temporal attention mechanisms are added to the CNN + LSTM approach.

They enhance the feature extraction process by focusing on the relevant spatiotemporal regions.

Hybrid Models:

The hybrid model combines RGB frames and skeleton joint data using a dual-stream architecture.

It uses CNNs for RGB feature extraction and LSTMs for skeleton sequence modeling.

A fusion layer unifies the multimodal features for final action classification.

4.4. Model Initialization

The models are initialized with pre-trained weights for transfer learning. Fine-tuning is then performed on the UCF101 dataset to adapt them to the specific action recognition task.

4.5. Hyperparameters

Key hyperparameters such as the learning rates, dropout rates, and the number of LSTM units are chosen based on the validation performance. Techniques such as dropout (0.5) and L2 regularization are used to prevent overfitting.

4.6. Training Procedures

- Optimization Algorithm: The models are trained with the Adam optimizer at an initial learning rate of 0.001. Learning rate scheduling is applied: the learning rate is reduced by a factor of 0.1 when the validation loss plateaus for 5 epochs.

- Loss Function: Cross-entropy loss is used to train the models for multiclass classification.

- Batch Size: A batch size of 32 balances efficient computation against model convergence.

- Training Epochs: The models are trained for up to 50 epochs, with early stopping based on validation performance (patience of 10 epochs) to prevent overfitting.

- Hardware: The experiments are carried out on Google Colab with a T4 GPU for model training and evaluation.
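The interaction between the learning rate schedule and early stopping can be sketched by replaying a list of validation losses through both rules. The values 0.001, 0.1, 5, 10, and 50 come from the settings above; the function name and the simplified bookkeeping are assumptions, and a framework callback may differ in detail.

```python
def run_schedule(val_losses, lr=0.001, factor=0.1, lr_patience=5,
                 stop_patience=10, max_epochs=50):
    """Replay validation losses through reduce-on-plateau and early stopping.

    Returns (epochs_run, final_lr).
    """
    best = float("inf")
    since_best = 0       # epochs since the best validation loss (early stopping)
    since_lr_event = 0   # epochs without improvement since the last LR change
    epochs_run = 0
    for loss in val_losses[:max_epochs]:
        epochs_run += 1
        if loss < best:
            best = loss
            since_best = 0
            since_lr_event = 0
        else:
            since_best += 1
            since_lr_event += 1
        if since_best >= stop_patience:
            break  # early stopping: no improvement for stop_patience epochs
        if since_lr_event >= lr_patience:
            lr *= factor  # plateau detected: shrink the learning rate
            since_lr_event = 0
    return epochs_run, lr

# Hypothetical loss curve: improves for three epochs, then stays flat.
losses = [1.0, 0.8, 0.7] + [0.7] * 20
epochs_run, final_lr = run_schedule(losses)
```

With this curve the learning rate is cut once after five flat epochs and training stops after ten flat epochs, well before the 50-epoch limit.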

4.7. Evaluation Metrics

- Accuracy: The main evaluation criterion is accuracy, defined as the percentage of correctly classified video clips.

- Precision, Recall, and F1-Score: Precision, recall, and F1-score are computed for each class and then macro-averaged over all classes, which gives a more detailed view of model performance.

- Confusion Matrix: The confusion matrix is used to visualize classification performance. It shows the correct and incorrect predictions for each action category.

- ROC-AUC (Receiver Operating Characteristic-Area Under the Curve): The ROC curve plots the true positive rate against the false positive rate, and the area under it summarizes the result.

- Statistical Significance: Statistical tests are used to check whether the differences between models reflect true differences in performance rather than chance effects.

- Qualitative Analysis: Attention maps and video predictions give further insight into the behavior of the model by showing which features it relies on and how it responds to the input.
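The accuracy and macro-averaged metrics listed above can all be derived from the confusion matrix, as the following sketch shows. The function name and the 3-class toy matrix are illustrative assumptions; macro F1 is taken here as the mean of the per-class F1 scores.

```python
def macro_metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for c in range(n):
        tp = confusion[c][c]
        predicted = sum(confusion[r][c] for r in range(n))  # column sum
        actual = sum(confusion[c])                          # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Toy 3-class confusion matrix (made-up counts, 10 samples per class).
confusion = [[8, 2, 0],
             [1, 7, 2],
             [0, 1, 9]]
metrics = macro_metrics(confusion)
```

Because every class has the same number of samples in this toy matrix, macro recall equals accuracy; on an imbalanced test set the two can differ.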

4.8. Libraries and Tools

- TensorFlow: Used mainly for building and training deep learning models.

- Scikit-Learn: A machine learning library that provides convenient evaluation metrics and data preprocessing utilities.

- Pandas: Used for data analysis and manipulation.

- NumPy: Used for numerical operations and data processing tasks.

- OpenCV: Used for video processing tasks such as optical flow computation.

5. Results

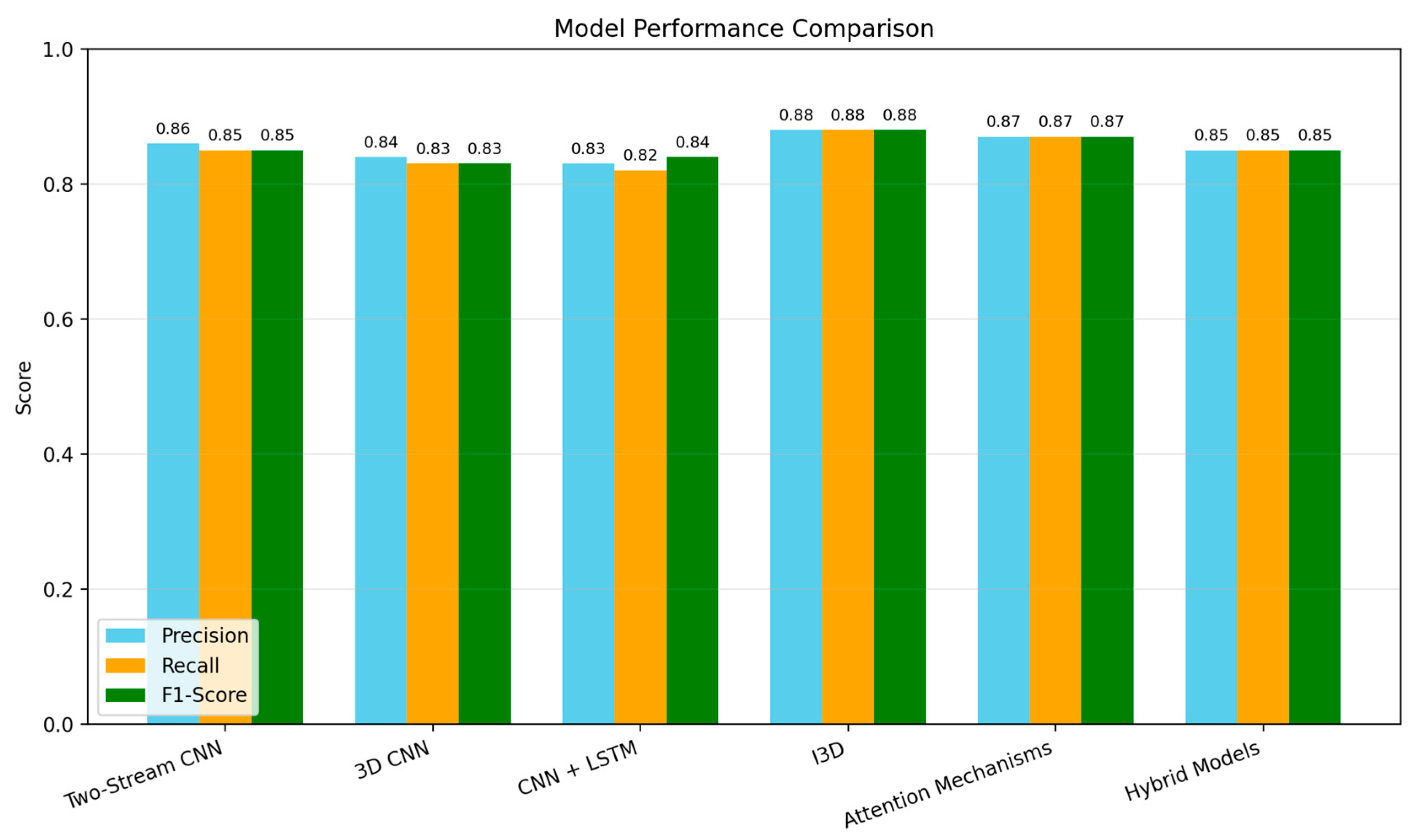

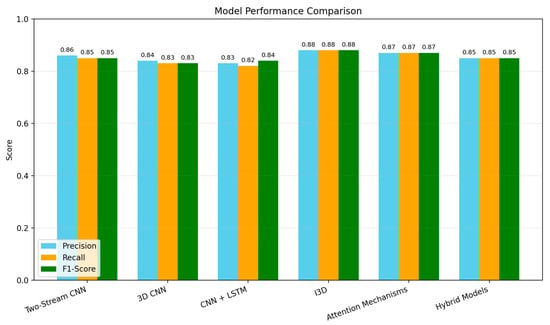

The UCF101 dataset was used to evaluate the performance of the action recognition models. The results are summarized in two diagrams, one covering the accuracy of each model and one covering precision, recall, and F1-score.

5.1. Accuracy

The accuracy of each model is shown in the accuracy graph, and the results are as follows:

- Two-Stream CNN: Achieved 85.4% accuracy, showing its capability to capture both temporal and spatial information.

- 3D CNN: Attained 83.2% accuracy by learning spatiotemporal features end-to-end, which is a strength of the model.

- CNN + LSTM: Achieved 82.5% accuracy, indicating that it handles sequences of varying lengths effectively.

- I3D: By virtue of pre-trained 2D models and capturing both appearance and motion cues, it achieved the highest accuracy, 88.1%.

- Attention Mechanisms: Reached 86.7% accuracy, showing that the model can concentrate on relevant spatiotemporal regions.

- Hybrid Models: Achieved 84.9% accuracy, illustrating the flexibility gained by combining multiple modalities.

5.2. Precision, Recall, and F1-Score

Figure 5 provides a comprehensive comparison of precision, recall, and F1-score for each model:

Figure 5. Comparison of precision, recall, and F1-score for each model.

- Two-Stream CNN: Precision 0.86, recall 0.85, and F1-score 0.85, indicating balanced performance across the metrics.

- 3D CNN: Precision 0.84, recall 0.83, and F1-score 0.83, suggesting performance similar to the two-stream CNN but with slightly lower scores.

- CNN + LSTM: Precision 0.83, recall 0.82, and F1-score 0.82, demonstrating effective handling of sequential inputs.

- I3D: Achieved the highest scores, with precision 0.89, recall 0.88, and F1-score 0.88.

- Attention Mechanisms: Achieved precision 0.87, recall 0.87, and F1-score 0.87, highlighting its ability to focus on relevant spatiotemporal regions.

- Hybrid Models: Secured precision 0.85, recall 0.85, and F1-score 0.85, indicating robust performance with multimodal data fusion.

5.3. Analysis

The I3D model was the best performer, outperforming all other models in accuracy, precision, recall, and F1-score. This can be attributed to its structure, which integrates 3D convolutional networks with pre-trained 2D models, enabling effective extraction of spatial and temporal characteristics from video data.

The two-stream CNN and attention mechanism models also performed well, showing high scores across the metrics. These models demonstrated their viability for action recognition tasks through measures such as kinematic energy and a geometrical data approach.

The differences between the 3D CNN and CNN + LSTM models and the other models, I3D in particular, were not very large (at most 5.6 percentage points in accuracy). In conclusion, the experimental findings underline the value of adopting newer designs such as I3D and of incorporating innovations that enhance action recognition models. Future investigations might further improve results and robustness through additional optimization for action recognition tasks.

6. Future Scope

The field of action recognition is still developing.

6.1. Advanced Architectures and Multimodal Fusion

The I3D model outperforms the other models, which shows the potential of combining 2D pre-trained CNNs with 3D convolutional filters. Future research can explore more complex hybrid architectures that combine the strengths of different modalities. In our experiments, hybrid models proved stable and capable by integrating multiple data sources.

6.2. Real-Time Processing

Real-time action processing (RT-AP) is not yet practical because of the immense computational complexity of the models at hand.

6.3. Data Augmentation and Synthesis

Building high-quality action recognition models remains one of the harder problems in machine learning. Future work may explore more elaborate data augmentation and synthesis techniques to create more varied and realistic training data.

6.4. Transfer Learning and Domain Adaptation

Transfer learning showed acceptable performance in this study, and future work can adapt the training data without sacrificing model validation. Further studies can also try domain adaptation approaches to ensure that models trained on one dataset (such as UCF101) generalize well to other datasets as well as to real-world scenarios.

7. Conclusions

This work has assessed a range of recent paradigms and models for action recognition (two-stream convolutional networks, 3D convolutional networks, CNN + LSTM networks, two-stream inflated 3D convolutional networks, attention mechanisms, and hybrid models) using the UCF101 dataset. The findings show clear differences between models: I3D achieved the highest accuracy and was the most effective model, recognizing even complex actions by combining pre-trained 2D CNNs with 3D convolutional filters.

The two-stream CNN and the attention mechanism models also performed well, with the former capturing motion effectively and the latter focusing on the most relevant parts of the frames. The 3D CNN and CNN + LSTM models displayed consistent but marginally worse performance, and hybrid models showed their strength by using multiple types of data.

The study emphasizes the importance of combining spatial and temporal information, examined through precision, recall, and F1-score, and it also investigates the relative strengths and weaknesses of each approach. The field can progress by addressing the deficiencies identified here and by opening new paths for in-depth research.

Author Contributions

A. conceived the research idea, designed the methodology, and supervised the overall study, while G.S. carried out the data collection, preprocessing, and implementation of the experimental framework. A.M. contributed to model development, performance evaluation, and validation of results, and N. assisted with data analysis, interpretation of findings, and preparation of visualizations. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. Adv. Neural Inf. Process. Syst. 2014, 1, 568–576.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Donahue, J.; Anne Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 20–36.

- Girdhar, R.; Ramanan, D. Attentional pooling for action recognition. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 34–45.

- Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1110–1118.

- Wang, J.; Zhang, Z.; Gao, J. Action recognition with multiscale spatiotemporal contexts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3185–3193.

- Singh, T.; Solanki, A.; Sharma, S.K.; Jhanjhi, N.Z.; Ghoniem, R.M. Grey Wolf Optimization-Based CNN-LSTM Network for the Prediction of Energy Consumption in Smart Home Environment. IEEE Access 2023, 11, 114917–114935.

- Yan, O.J.; Ashraf, H.; Ihsan, U.; Jhanjhi, N.; Ray, S.K. Facial expression recognition (FER) system using deep learning. In Proceedings of the 2024 IEEE 1st Karachi Section Humanitarian Technology Conference (KHI-HTC), Tandojam, Pakistan, 8–9 January 2024; pp. 1–11.

- Mahendar, M.; Malik, A.; Batra, I. Facial Micro-expression Modelling-Based Student Learning Rate Evaluation Using VGG–CNN Transfer Learning Model. SN Comput. Sci. 2024, 5, 204.

- Al-Quayed, F.; Javed, D.; Jhanjhi, N.Z.; Humayun, M.; Alnusairi, T.S. A Hybrid Transformer-Based Model for Optimizing Fake News Detection. IEEE Access 2024, 12, 160822–160834.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).