1. Introduction

Since obesity contributes substantially to the global burden of chronic illnesses like metabolic syndrome, type 2 diabetes, and cardiovascular conditions, it has emerged as a significant public health concern. Although BMI (Body Mass Index) and other traditional techniques of assessing obesity are widely used, they often fail to consider body composition and other important criteria, which leads to misclassification [

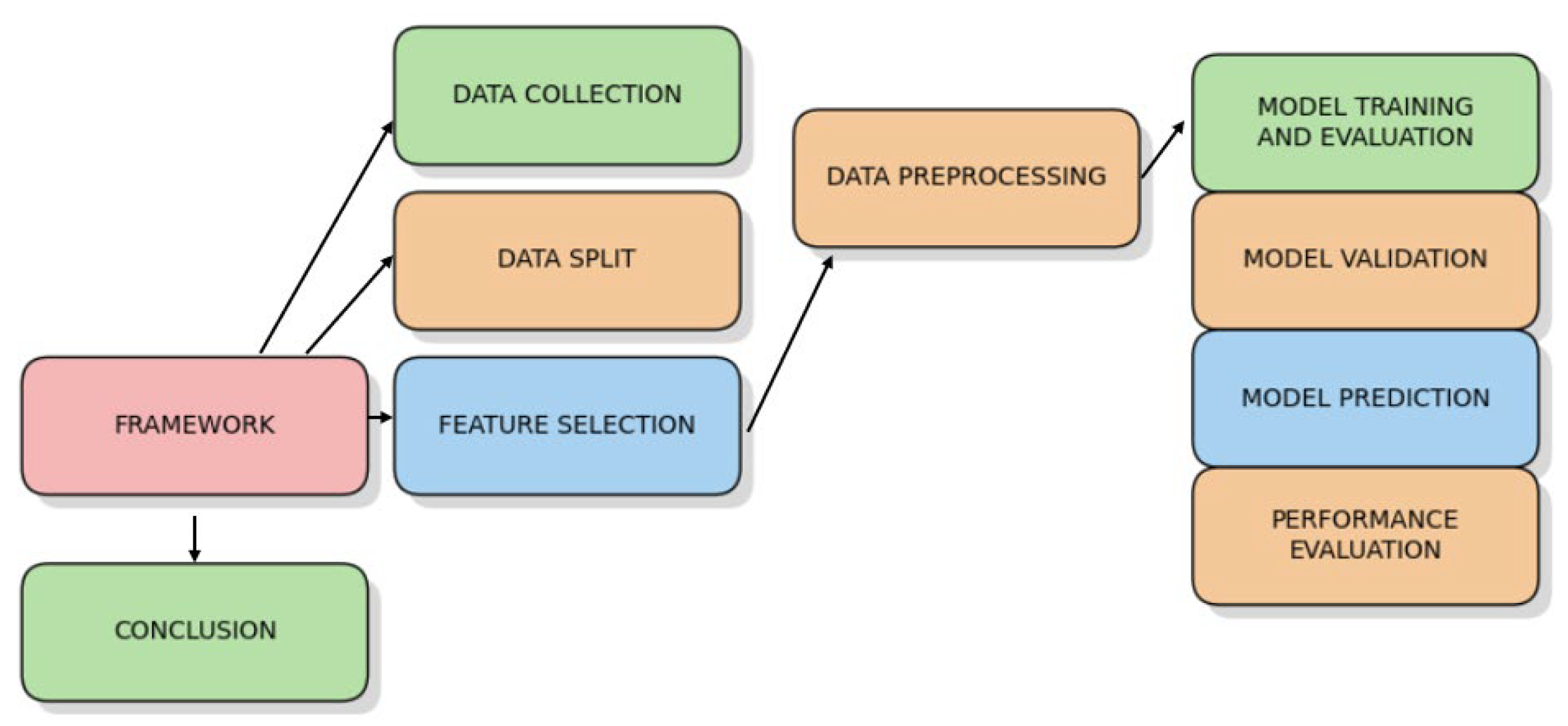

1]. One promising tool is ML (machine learning), which makes use of different types of datasets in the pursuit of subtle patterns and the interconnectedness of causal factors related to obesity. Recent studies have shown how well machine learning algorithms predict obesity by considering variables including metabolic markers, physical activity, and eating patterns. However, most of the current methods either use a small number of datasets or concentrate on a small number of algorithms, which leaves gaps in reaching the best possible predicted accuracy. By evaluating the performance of four machine learning algorithms, decision tree, naïve Bayes, random forest, and K-NN (K Nearest Neighbor), on a carefully chosen dataset that contains several traits associated with obesity, this work seeks to close these gaps. Finding the optimal algorithm to predict obesity is the aim of this study, with an emphasis on appropriate feature selection and data processing. The findings add to the expanding corpus of research on machine learning applications in obesity management and lay the groundwork for creating practical, evidenced-based strategies to address the obesity pandemic. The methodology of the dataset, results, and potential avenues for future research are covered in depth in the sections that follow. The workflow of our research paper is illustrated in

Figure 1.

2. Literature Review

Jeon et al. addressed a shortcoming of BMI, which relies on height and weight while ignoring body composition and thereby hampers accurate obesity management. They identified a gap in the use of comprehensive machine learning models and combined 3D body scan, dual-energy X-ray absorptiometry (DXA), and bioelectrical impedance analysis (BIA) data in a single framework enhanced by genetic-algorithm feature selection. The authors’ model outperformed BMI- and BIA-based techniques by a significant margin, reaching an accuracy of 80%. With its robust machine learning methodology, this study achieved better obesity classification than earlier research with narrower scopes or fewer model comparisons [2].

Jeon et al. addressed the limitations of models that include only lifestyle variables when predicting obesity by emphasizing metabolic parameters specific to age and gender. They identified a research gap in the use of metabolic data for individualized obesity categorization and employed six machine learning models to predict obesity risk. With an accuracy of more than 70% for younger groups and less for older ones, their results demonstrate the significance of triglycerides, glycated hemoglobin, alanine aminotransferase (ALT, also known as SGPT), and uric acid as predictors. Unlike earlier studies that concentrated on a smaller number of models or on lifestyle factors, this metabolic approach enables more thorough predictions over a wide range of demographics [3].

Du et al. designed a machine learning-based obesity risk prediction system in response to the need for accurate and reasonably priced treatments for obesity and the chronic conditions associated with it. Their strategy compares 10 models on extensive data and selects XGBoost for its better performance relative to conventional approaches, which have limited predictive ability and frequently treat obesity as a binary outcome. Using SHAP (SHapley Additive exPlanations) analysis to identify important variables such as hip circumference and triglycerides, the system demonstrates high prediction accuracy. In contrast to earlier research, it provides a more comprehensive, multi-stage classification of obesity and improves practical applications for medical practitioners [4].

The study in Ref. [5] tackles the problem of differentiating between obese and overweight people, a crucial gap in the obesity literature, since most studies focus on comparison with normal-weight individuals. The authors used a machine learning technique that integrates metabolic and neuroimaging analysis to identify unique brain connectivity patterns and gut-derived chemicals as important distinguishing features. Their models show accuracy above 90%, suggesting that obesity may involve different brain-gut processes than those seen in overweight people. In contrast to earlier research that suggested obesity was just an extension of the overweight phenotype, this points to the possibility of targeted intervention techniques based on unique neuro-metabolic markers [6].

The need to predict obesity-related conditions such as hypertension, dyslipidemia, and type 2 diabetes from eating habits has increased with the growth in cardiometabolic illnesses. This issue is tackled in the research reported in Ref. [7]. Where previous research often relied on traditional statistical approaches, this study addresses a research gap by using a deep neural network (DNN) to uncover complex relationships in dietary data collected from the Korea National Health and Nutrition Examination Survey. For conditions such as hypertension and type 2 diabetes, the DNN model demonstrated superior prediction accuracy compared with logistic regression and decision tree models. By applying deep learning for more accurate classification and forecasting across various health conditions, this research expands upon previous studies conducted by Panaretos and Choe [8].

The difficulty of predicting obesity and providing personalized meal suggestions to prevent obesity-related conditions is covered in the study in Ref. [4]. Whereas earlier studies used standard machine learning techniques only to forecast obesity, this research fills the gap by integrating predictions with meal planning. The authors used two machine learning approaches: gradient boosting (GB) and XGBoost. GB achieved a notable accuracy of 98.11% with a 90:10 train/test split. Furthermore, they recommended meal options based on caloric needs estimated with the Harris–Benedict equation. This method is presented as an improvement on previous methods, including those of Pang et al. and Ferdowsy et al., because it offers a comprehensive tool for obesity management that combines forecasting with practical dietary recommendations [9].

Another study covers the worldwide issue of overweight and obesity and the lifestyle-related diseases that result from them, such as cardiovascular disease and type 2 diabetes. It focuses on a research gap related to the inadequate use of data-driven machine learning methods for analyzing obesity risk factors in public health data. The authors examine datasets from Kaggle and UCI (University of California, Irvine) with various machine learning models to identify risk factors, and they underscore the application of regression and visualization methods to enhance strategies for obesity prevention. By evaluating the expected effectiveness of different machine learning techniques, this research provides a valuable perspective on data-driven health interventions [7].

Singh and Tawfik examine the main issues related to childhood obesity, which raises the likelihood of developing long-term health problems such as diabetes and cardiovascular diseases. They point to issues such as uneven BMI standards and data imbalance as obstacles to the use of sophisticated machine learning algorithms for identifying obesity in children. Their method forecasts obesity at age 14 from past BMI records, using data from the UK’s Millennium Cohort Study, and improves prediction accuracy with techniques such as SMOTE (Synthetic Minority Over-sampling Technique). The results demonstrate that, for “at-risk” children, their approach achieves higher prediction accuracy than more basic statistical models. This research differs from other studies in its use of sophisticated machine learning techniques to enhance obesity risk prediction [10].

Colmenarejo’s research focuses on the rising problem of obesity among teenagers, which carries significant health risks such as metabolic disorders and cardiovascular diseases. The study points to the limitations of traditional statistical models, which struggle to capture the complex and nonlinear relationships present in obesity data. By exploring various models, including those that use deep learning methods and extensive datasets, the research shows the better predictive capabilities of machine learning for identifying obesity risk. When dealing with high-dimensional, multivariate data, machine learning outperforms simpler statistical methods by offering enhanced insights into risk factors and delivering more accurate predictions [11,12,13].

The growing issue of obesity was tackled in Ref. [8], which highlighted the importance of identifying which lifestyle factors account for the majority of weight gain across different populations [14]. The authors address a gap in existing research by applying interpretable machine learning techniques to data from the U.S. National Health and Nutrition Survey (CHNS), keeping in mind the limitations of previous studies that were based only on a single country or on conventional statistical methods [15]. Using a gradient boosting decision tree, their work indicates that the main factors contributing to obesity risk are protein intake, alcohol consumption, and lack of physical activity. This research aligns with earlier studies recognizing alcohol and inactivity as obesity risk factors but surpasses them in cross-national scope and predictive performance.

3. Research Methodology

In this work, machine learning classifiers are applied to a carefully chosen dataset to predict obesity. The goal of this project is to develop an efficient and interpretable machine learning-based system that predicts obesity using RapidMiner. The methodology comprises data processing, feature selection, model training, and evaluation. The following algorithms were used in our experiment:

A decision tree classifier splits the dataset on feature values, creating a tree structure that categorizes obesity levels. The tree recursively divides the dataset into subgroups according to the features that most reduce impurity or maximize information gain. Information gain is defined in Equation (1):

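$$
IG(D, A) = H(D) - \sum_{v \in \mathrm{Values}(A)} \frac{|D_v|}{|D|} \, H(D_v) \tag{1}
$$

where $H(D)$ is the entropy of the dataset, $\frac{|D_v|}{|D|}$ is the proportion of samples where feature $A$ takes value $v$, and $H(D_v)$ is the entropy of the subset $D_v$.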
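As an illustration only (the study itself used RapidMiner, not Python), the information gain of Equation (1) can be sketched as the entropy of the whole dataset minus the size-weighted entropy of the subsets produced by a split. The function names, the `family_history` feature, and the four-row toy dataset below are hypothetical and are not taken from the study's data.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Entropy of the dataset minus the weighted entropy of the subsets
    obtained by grouping rows on the value of one categorical feature."""
    subsets = {}
    for row, label in zip(rows, labels):
        subsets.setdefault(row[feature], []).append(label)
    weighted = sum(len(s) / len(labels) * entropy(s) for s in subsets.values())
    return entropy(labels) - weighted


# Hypothetical toy data: the feature separates the two classes perfectly.
rows = [
    {"family_history": "yes"},
    {"family_history": "yes"},
    {"family_history": "no"},
    {"family_history": "no"},
]
labels = ["obese", "obese", "normal", "normal"]
gain = information_gain(rows, labels, "family_history")
print(f"information gain: {gain:.3f}")
```

A feature whose values leave the class mix unchanged in every subset would yield a gain of zero, which is why a tree prefers splits with higher gain.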

Random forest is an ensemble learning method that builds several decision trees during training and obtains the final result by choosing the most common class for classification tasks or averaging the predictions for regression tasks. The random forest prediction is shown in Equation (2):

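$$
\hat{y} = \operatorname{mode}\{ h_1(x), h_2(x), \ldots, h_T(x) \} \tag{2}
$$

where $\hat{y}$ is the final predicted output, $h_t(x)$ is the prediction of the $t$-th decision tree for input $x$, and $T$ is the number of decision trees. Equation (2) is written for the classification case, in which the final output is the most common class among the trees.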
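The aggregation step for classification can be sketched as a simple majority vote over the individual tree predictions. This is a minimal illustration, not the RapidMiner implementation used in the study; the three "trees" below are hypothetical hand-written rules standing in for trained decision trees, and the thresholds are made up for the example.

```python
from collections import Counter


def forest_predict(trees, x):
    """Return the most common class among the predictions of all trees."""
    votes = [tree(x) for tree in trees]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical stand-ins for trained trees: each maps a sample to a class.
def tree_weight(x):
    return "obese" if x["weight_kg"] > 90 else "normal"


def tree_bmi(x):
    bmi = x["weight_kg"] / x["height_m"] ** 2
    return "obese" if bmi >= 30 else "normal"


def tree_activity(x):
    return "obese" if x["active_days"] < 1 else "normal"


sample = {"weight_kg": 95, "height_m": 1.70, "active_days": 1}
prediction = forest_predict([tree_weight, tree_bmi, tree_activity], sample)
print(prediction)  # two of the three trees vote "obese"
```

Using an odd number of trees for a two-class problem avoids tied votes; with ties, the result of this sketch depends on vote order.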

The K-NN algorithm classifies a data point according to the majority class of its k nearest neighbors. Distance metric: the degree of resemblance between data points is determined using the Euclidean distance, given in Equation (3):

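$$
d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2} \tag{3}
$$

where $d$ is the distance measure, $d(p, q)$ is the distance (a non-negative real number) between data points $p$ and $q$, $p_i$ and $q_i$ are the $i$-th coordinates (feature values) of data points $p$ and $q$, respectively, and $n$ is the number of features.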
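A minimal sketch of K-NN classification with the Euclidean distance follows. It is an illustration under stated assumptions rather than the study's RapidMiner configuration: the value k = 3, the two features (height in metres, weight in kilograms), and the five training points are all hypothetical.

```python
from collections import Counter
from math import sqrt


def euclidean(p, q):
    """Euclidean distance between two equal-length feature vectors."""
    return sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


def knn_predict(train, query, k=3):
    """Classify `query` by the majority class of its k nearest training points.

    `train` is a list of (feature_vector, label) pairs.
    """
    neighbors = sorted(train, key=lambda item: euclidean(item[0], query))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


# Hypothetical toy data: (height_m, weight_kg) -> class.
train = [
    ((1.60, 50.0), "normal"),
    ((1.65, 55.0), "normal"),
    ((1.70, 95.0), "obese"),
    ((1.75, 100.0), "obese"),
    ((1.80, 105.0), "obese"),
]
prediction = knn_predict(train, (1.72, 98.0), k=3)
print(prediction)
```

Because the distance sums raw coordinate differences, features on larger scales (here, weight) dominate; in practice features are usually normalized before K-NN is applied.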

The naïve Bayes classification technique is based on Bayes’ theorem and assumes that features are independent. It works well with large datasets and uses feature likelihoods to estimate the probability that a data point belongs to a class, as given in Equation (4):

$$
P(A \mid K) = \frac{P(K \mid A)\, P(A)}{P(K)} \tag{4}
$$

where

P(A|K) = posterior probability of class A given feature vector K;

P(K|A) = likelihood of K given class A;

P(A) = prior probability of class A;

P(K) = marginal probability of K.
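To make the roles of the prior, likelihood, and marginal concrete, the following sketch computes class posteriors under the independence assumption: the likelihood P(K|A) is taken as the product of per-feature likelihoods, and P(K) is obtained by summing the joint terms over all classes. All probabilities below are hypothetical numbers chosen for the example, not estimates from the study's dataset.

```python
def naive_bayes_posteriors(priors, likelihoods, observed):
    """Return P(class | observed features) for every class.

    priors:      {class: P(A)}
    likelihoods: {class: {feature_value: P(value | class)}}
    observed:    list of observed feature values
    """
    joint = {}
    for cls, prior in priors.items():
        p = prior
        for value in observed:
            p *= likelihoods[cls][value]  # independence: multiply per-feature terms
        joint[cls] = p  # P(K | A) * P(A)
    evidence = sum(joint.values())  # P(K), the marginal probability
    return {cls: p / evidence for cls, p in joint.items()}


# Hypothetical probabilities for two classes and two observed feature values.
priors = {"obese": 0.3, "normal": 0.7}
likelihoods = {
    "obese": {"family_history=yes": 0.8, "high_calorie=yes": 0.9},
    "normal": {"family_history=yes": 0.4, "high_calorie=yes": 0.5},
}
posteriors = naive_bayes_posteriors(
    priors, likelihoods, ["family_history=yes", "high_calorie=yes"]
)
predicted = max(posteriors, key=posteriors.get)
print(predicted, posteriors)
```

In this toy case the evidence outweighs the lower prior, so the predicted class is the one with the smaller prior probability.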

3.1. Framework

The proposed framework, which integrates several machine learning classifiers for predicting obesity, proceeds as follows: gathering and preprocessing obesity-related data; feature engineering, i.e., handling all missing values and selecting relevant predictors; training and validating models with machine learning algorithms; and generating predictions using the model with the best performance.

Figure 2 shows the framework of the proposed methodology, illustrating the sequence of processes from data collection and preprocessing through feature selection, data splitting, model training, validation, prediction, performance evaluation, and final conclusion.

3.2. Attribute with Description

The dataset used for this investigation contains twenty-one attributes, including physical stature, family history of obesity, and lifestyle choices. The target variable categorizes individuals into classes such as normal, overweight, and obese.

Table 1 shows the features used in this research along with feature type and description.

3.3. Replace Missing Values

We used different techniques to handle the missing values in the dataset and clean it in order to achieve better performance and accuracy. For numeric features, we replaced missing values with the mean of the respective feature; for categorical features, we replaced them with the most frequent category. This ensures that no data point is lost and that the dataset maintains its integrity.
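The imputation rule described above can be sketched in a few lines. This is an illustration only (the study performed this step in RapidMiner); the column names `weight` and `transport`, the use of `None` to mark a missing value, and the toy rows are assumptions made for the example.

```python
from collections import Counter
from statistics import mean


def impute(rows, numeric, categorical):
    """Return a copy of `rows` with missing values (None) filled in:
    the column mean for numeric columns, the most frequent value for
    categorical columns. No rows are dropped."""
    filled = [dict(row) for row in rows]
    for col in numeric:
        fill = mean(r[col] for r in rows if r[col] is not None)
        for r in filled:
            if r[col] is None:
                r[col] = fill
    for col in categorical:
        present = [r[col] for r in rows if r[col] is not None]
        fill = Counter(present).most_common(1)[0][0]
        for r in filled:
            if r[col] is None:
                r[col] = fill
    return filled


# Hypothetical toy rows with one missing numeric and one missing categorical value.
rows = [
    {"weight": 60.0, "transport": "car"},
    {"weight": None, "transport": "walk"},
    {"weight": 80.0, "transport": "car"},
    {"weight": 70.0, "transport": None},
]
clean = impute(rows, numeric=["weight"], categorical=["transport"])
print(clean)
```

The fill values are computed from the observed entries only, and the input rows are left unmodified so the raw data remain available for comparison.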

3.4. Split Data

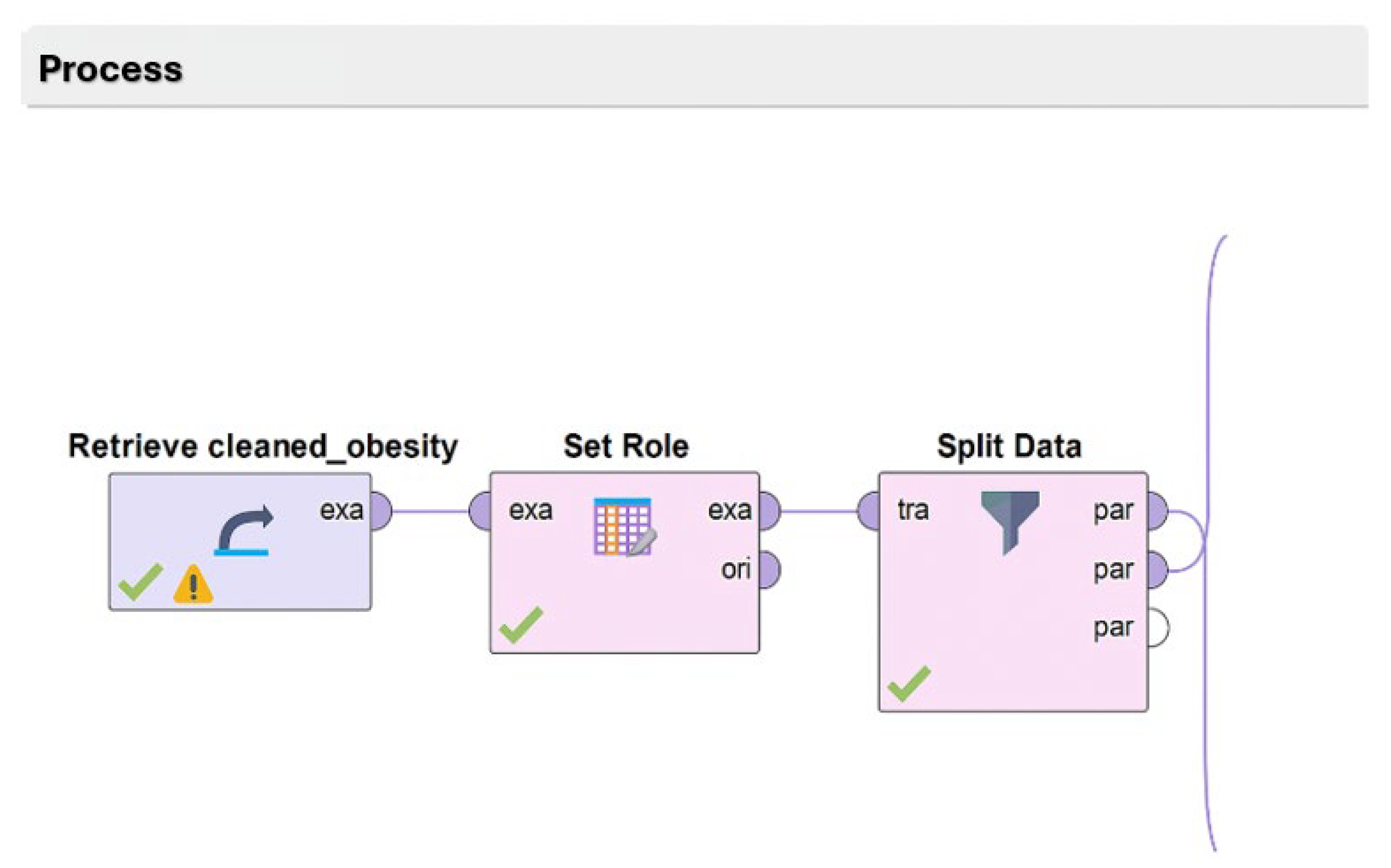

To guarantee proper model training and evaluation, the dataset was divided into two sections: training and testing. The workflow is shown in Figure 3. Twenty percent of the data were set aside for performance evaluation, and the remaining eighty percent were used for training.
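An 80/20 split of this kind can be sketched as follows. The text does not state whether the split was shuffled or stratified, so the random shuffle and the fixed seed below are assumptions made for reproducibility of the example; `split_train_test` is a hypothetical helper, not a RapidMiner operator.

```python
import random


def split_train_test(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of `rows` and return (train, test) lists,
    with `test_fraction` of the rows held out for evaluation."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical dataset of 100 record identifiers.
records = list(range(100))
train, test = split_train_test(records)
print(len(train), len(test))
```

Keeping the held-out rows completely separate from training is what makes the reported accuracy an estimate of performance on unseen data.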

3.5. Machine Learning

Four distinct machine learning algorithms (decision tree, random forest, K-NN, and naïve Bayes) are used in the proposed methodology to predict obesity from the carefully selected dataset. Each of these algorithms was implemented in RapidMiner, and their performance was evaluated based on accuracy, as shown in Table 2. The best accuracy, 98.33%, was achieved by the decision tree algorithm, which uses a tree-based structure to classify the data. Random forest, an ensemble technique that aggregates many decision trees to improve robustness, reached 98.27% accuracy. The K-NN algorithm, which classifies a point by the majority class of its neighbors, achieved a high accuracy of 98.03%. Finally, naïve Bayes, a probabilistic classifier based on Bayes’ theorem, obtained only 90.08% accuracy; this lower score is attributed to the independence assumption among the features. The results show that tree-based models perform well in predicting obesity; therefore, for this dataset, decision tree is the appropriate algorithm.

4. Results

This study examined four machine learning algorithms: random forest, K-NN, naïve Bayes, and decision tree. Decision tree had the highest accuracy at 98.33%, followed by random forest at 98.27% and K-NN at 98.03%. Naïve Bayes, which assumes feature independence, performed the worst, with an accuracy of 90.08%. Overall, tree-based models performed well, and decision tree was the best option for this task.