Insights into the Emotion Classification of Artificial Intelligence: Evolution, Application, and Obstacles of Emotion Classification

Abstract

1. Introduction

2. Methodology

2.1. Search and Selection Criteria

2.2. Screening and Eligibility

2.3. Data Extraction

2.4. Quality Assessment

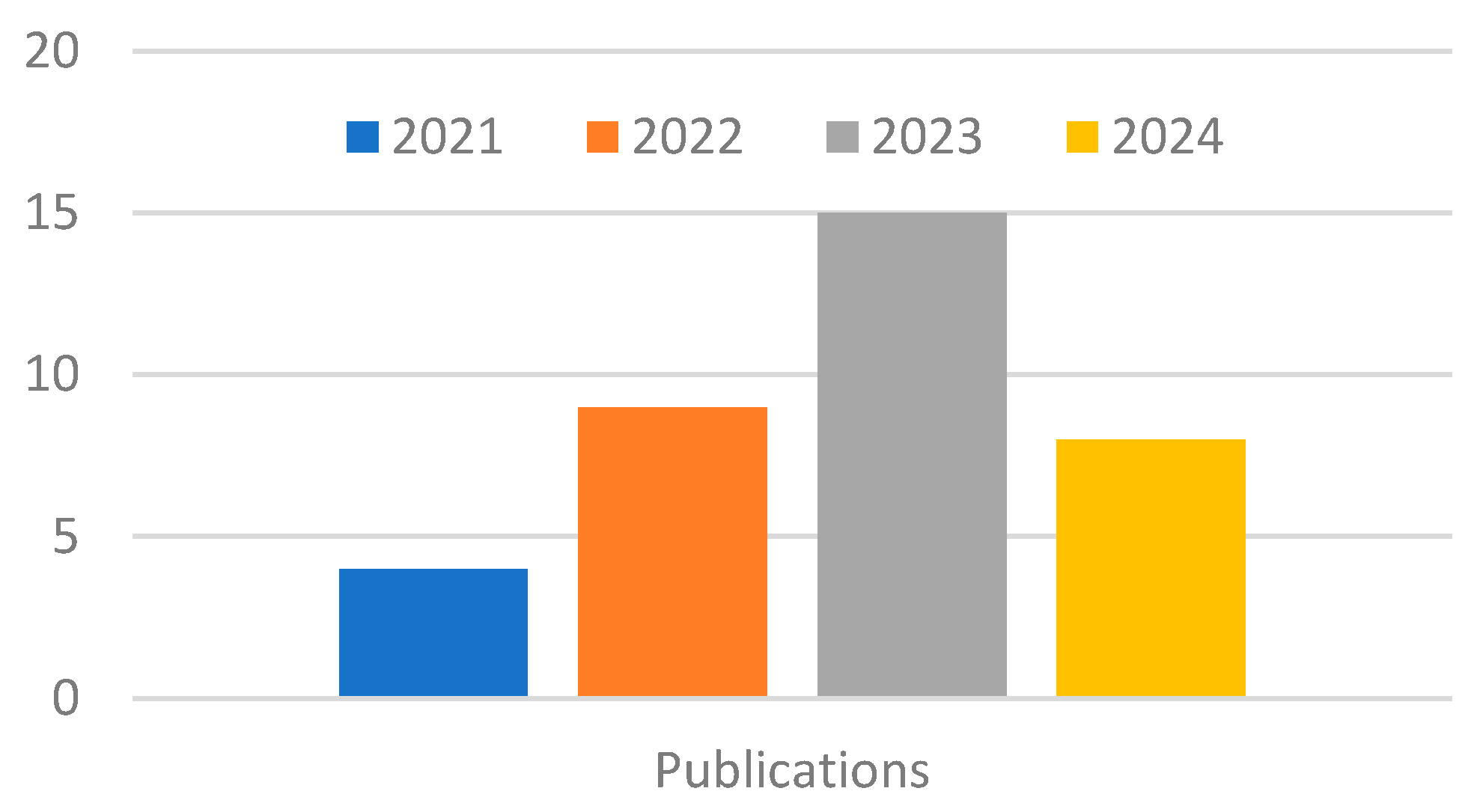

2.5. Results

3. Results and Discussion

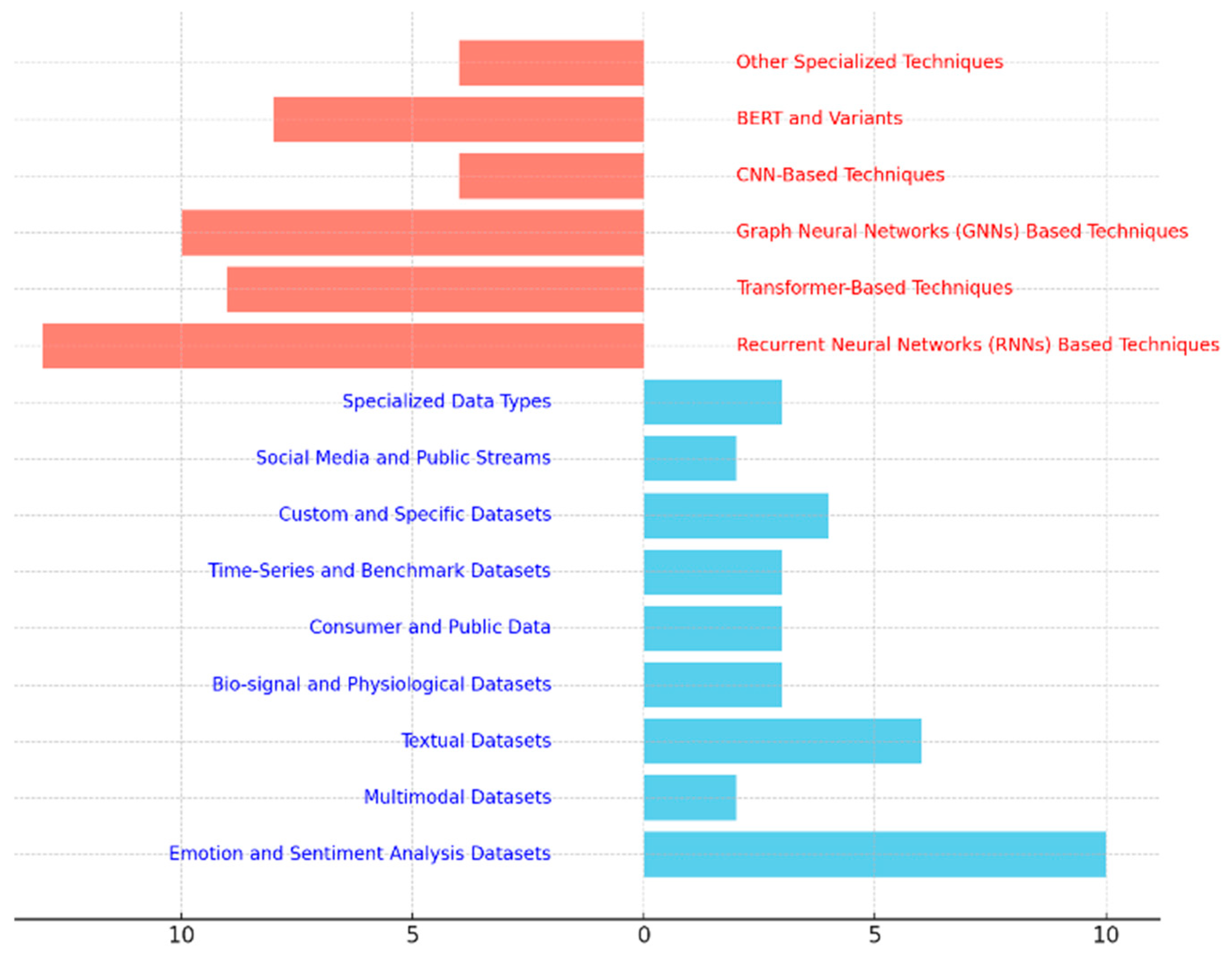

3.1. Datasets

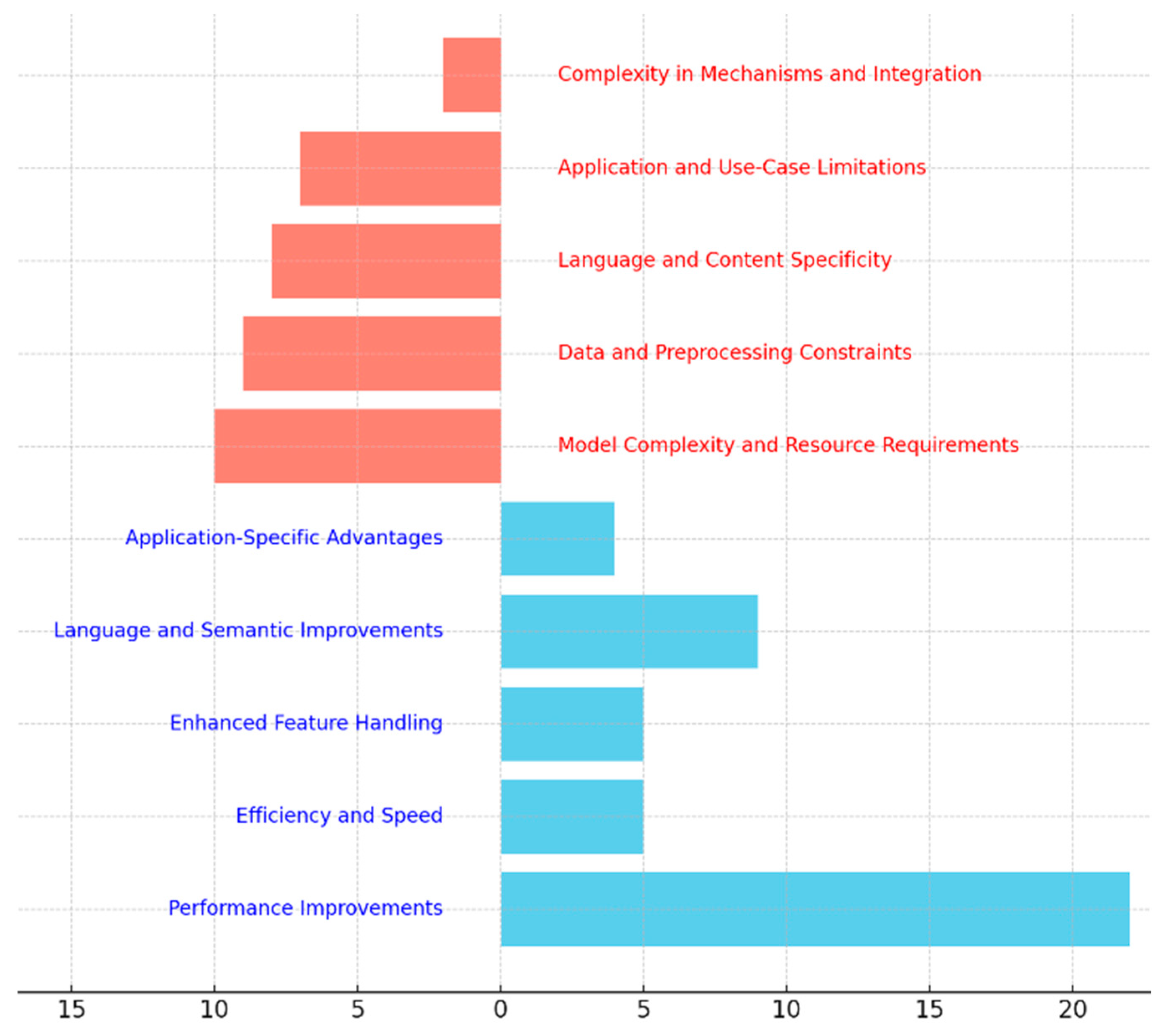

3.2. Advantages and Disadvantages

3.3. Opportunities and Challenges

3.4. Research Gaps

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Endah Hiswati, M.; Utami, E.; Kusrini, K.; Setyanto, A. Insights into the Emotion Classification of Artificial Intelligence: Evolution, Application, and Obstacles of Emotion Classification. Eng. Proc. 2025, 103, 24. https://doi.org/10.3390/engproc2025103024

Endah Hiswati M, Utami E, Kusrini K, Setyanto A. Insights into the Emotion Classification of Artificial Intelligence: Evolution, Application, and Obstacles of Emotion Classification. Engineering Proceedings. 2025; 103(1):24. https://doi.org/10.3390/engproc2025103024

Chicago/Turabian Style: Endah Hiswati, Marselina, Ema Utami, Kusrini Kusrini, and Arief Setyanto. 2025. "Insights into the Emotion Classification of Artificial Intelligence: Evolution, Application, and Obstacles of Emotion Classification" Engineering Proceedings 103, no. 1: 24. https://doi.org/10.3390/engproc2025103024

APA Style: Endah Hiswati, M., Utami, E., Kusrini, K., & Setyanto, A. (2025). Insights into the Emotion Classification of Artificial Intelligence: Evolution, Application, and Obstacles of Emotion Classification. Engineering Proceedings, 103(1), 24. https://doi.org/10.3390/engproc2025103024