Enhancing Anatomy Teaching and Learning Through 3D and Mixed-Reality Tools in Medical Education

Abstract

1. Introduction

2. Background and Related Works

2.1. MR in Medical Education

2.2. Volumetric Video and 3D Capturing

3. System Design and Implementation

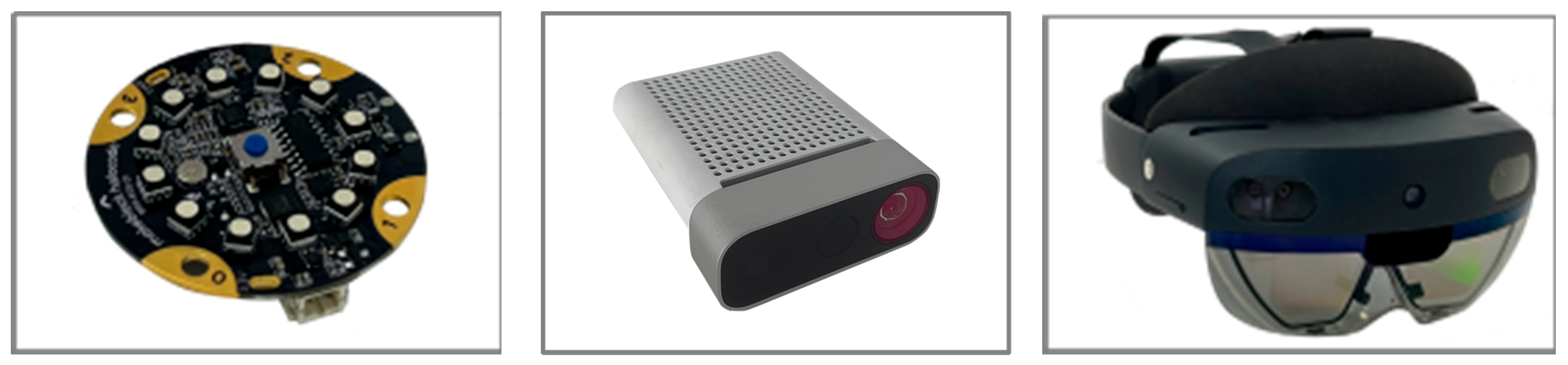

3.1. Hardware and Software

3.2. System Architecture

3.3. Interactive Design

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, J.; Gang, W.; Ye, Y.; Zhu, Y.; You, S.; Huang, Z.; Lv, T.; Gang, R. Enhancing Anatomy Teaching and Learning Through 3D and Mixed-Reality Tools in Medical Education. Eng. Proc. 2025, 103, 22. https://doi.org/10.3390/engproc2025103022