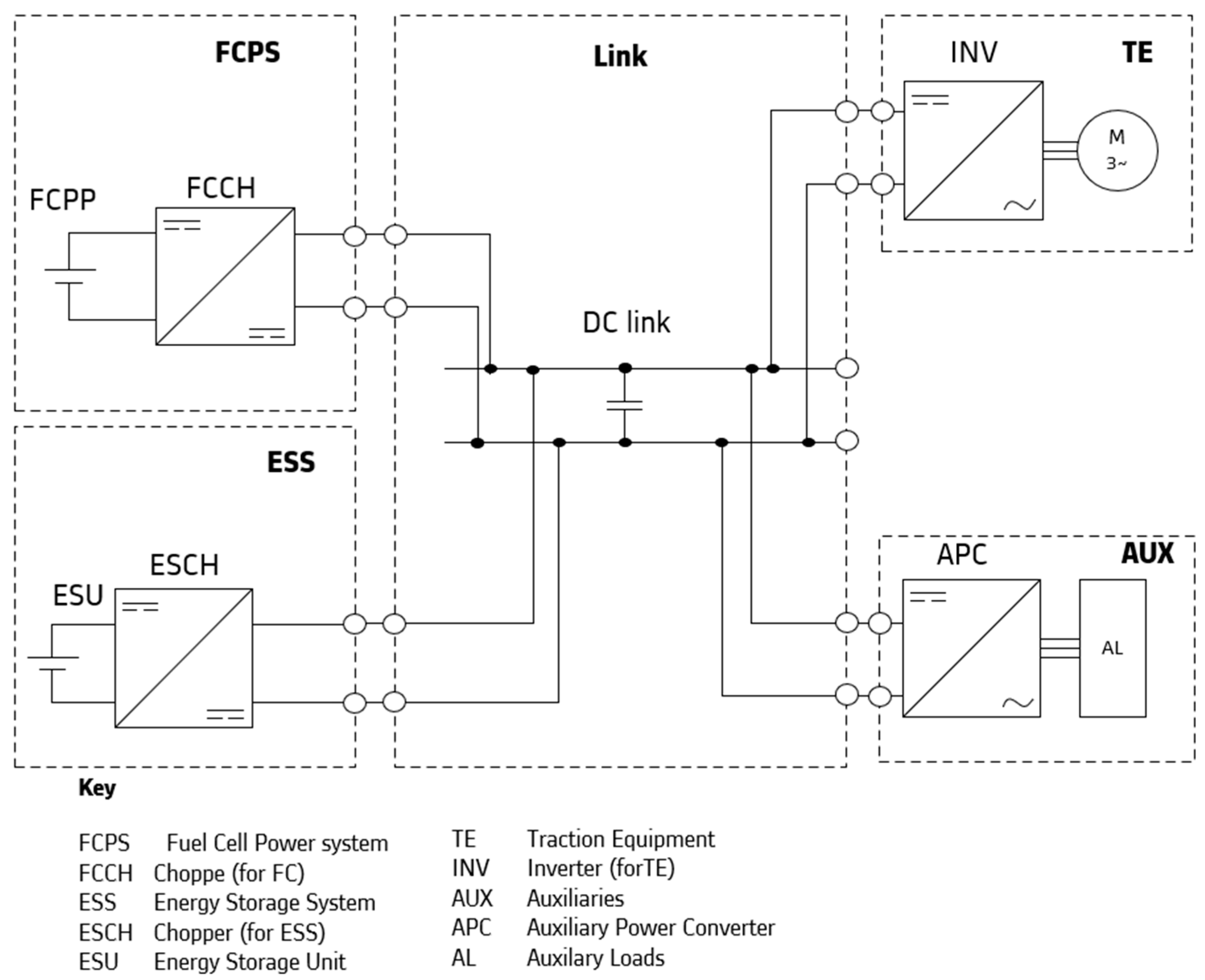

The online energy management strategy presented previously includes several tunable parameters; the following section defines them and specifies their application domain.

As already mentioned, the shunting locomotive may be assigned a wide variety of missions. For instance, the weight of the wagons it hauls can range from a few hundred tons up to 2000 tons, as is the case for the locomotive under study. Moreover, the load hauled is often unpredictable. The diversity and randomness of these missions necessitate a robust energy management system capable of handling the full range of operational scenarios to ensure optimal locomotive performance.

3.2.1. EPMS Parameters

The range extender (RE) mode’s objective is to maintain the battery energy level near the nominal threshold, but without abrupt changes. To achieve this, the system considers the recent power demand, as follows:

- A long-term regulation term, whose objective is to recharge the battery to the nominal energy level. The remaining terms are demand-oriented.
- A medium-term average of the power demand (over a window shorter than the time required to reach the nominal energy threshold), used to assess the general demand trend.
- A shorter-term average of the power demand, which allows a more dynamic response to sudden changes in demand.

This dual-timescale averaging of the demand helps the system balance energy stability and responsiveness. Equation (7) summarizes the logic of the range extender mode.

In Equation (7), the fuel cell power setpoint is determined from the total power demand, which includes both auxiliary consumption and traction/braking consumption, and from the battery energy levels at the nominal threshold and at the current state of charge. Three time windows are used to average the power demand, and two weighting coefficients are applied. These five parameters are subject to tuning as part of the energy management strategy.
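Equation (7) itself is not reproduced in this text, so the following is only a minimal Python sketch of how a multi-timescale setpoint of this kind could be computed. It assumes a hypothetical linear form: a long-term term that closes the gap between the nominal and current battery energy over a window `t_long`, plus two weighted moving averages of the demand over `t_mid` and `t_short`. The class `RangeExtenderSketch`, its parameter names and all numerical values are illustrative assumptions, not the authors' law.

```python
from collections import deque


class RangeExtenderSketch:
    """Hypothetical multi-timescale fuel cell setpoint (NOT Equation (7)).

    Assumed form, energies in J, times in s, powers in W:
        P_fc = (E_nom - E_bat) / t_long
               + k_mid * mean over t_mid of P_dem
               + k_short * mean over t_short of P_dem
    """

    def __init__(self, t_long, t_mid, t_short, k_mid, k_short, dt=1.0):
        self.t_long = t_long
        self.k_mid = k_mid
        self.k_short = k_short
        # Fixed-length sliding windows of past demand samples (sample period dt).
        self.mid = deque(maxlen=max(1, round(t_mid / dt)))
        self.short = deque(maxlen=max(1, round(t_short / dt)))

    def step(self, p_demand, e_nominal, e_battery):
        """Record one demand sample and return the fuel cell power setpoint."""
        self.mid.append(p_demand)
        self.short.append(p_demand)
        p_mid = sum(self.mid) / len(self.mid)
        p_short = sum(self.short) / len(self.short)
        # Long-term term: power that would close the energy gap within t_long.
        p_recharge = (e_nominal - e_battery) / self.t_long
        return p_recharge + self.k_mid * p_mid + self.k_short * p_short


# Usage with hypothetical values: a demand step from 100 kW to 300 kW.
ems = RangeExtenderSketch(t_long=600, t_mid=120, t_short=10, k_mid=0.5, k_short=0.25)
for p in [100e3] * 20 + [300e3] * 5:
    p_fc = ems.step(p, e_nominal=1.0e9, e_battery=0.99e9)
```

The bounded deques act as sliding windows, so the short average reacts within a few samples while the medium average changes slowly, which mirrors the stability/responsiveness trade-off described above.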

The energy thresholds were defined during the system sizing phase to match the mission profiles established for the project. The five parameters mentioned above remain to be optimized. They are subject to constraints, which are summarized in the following Equations (8)–(11):

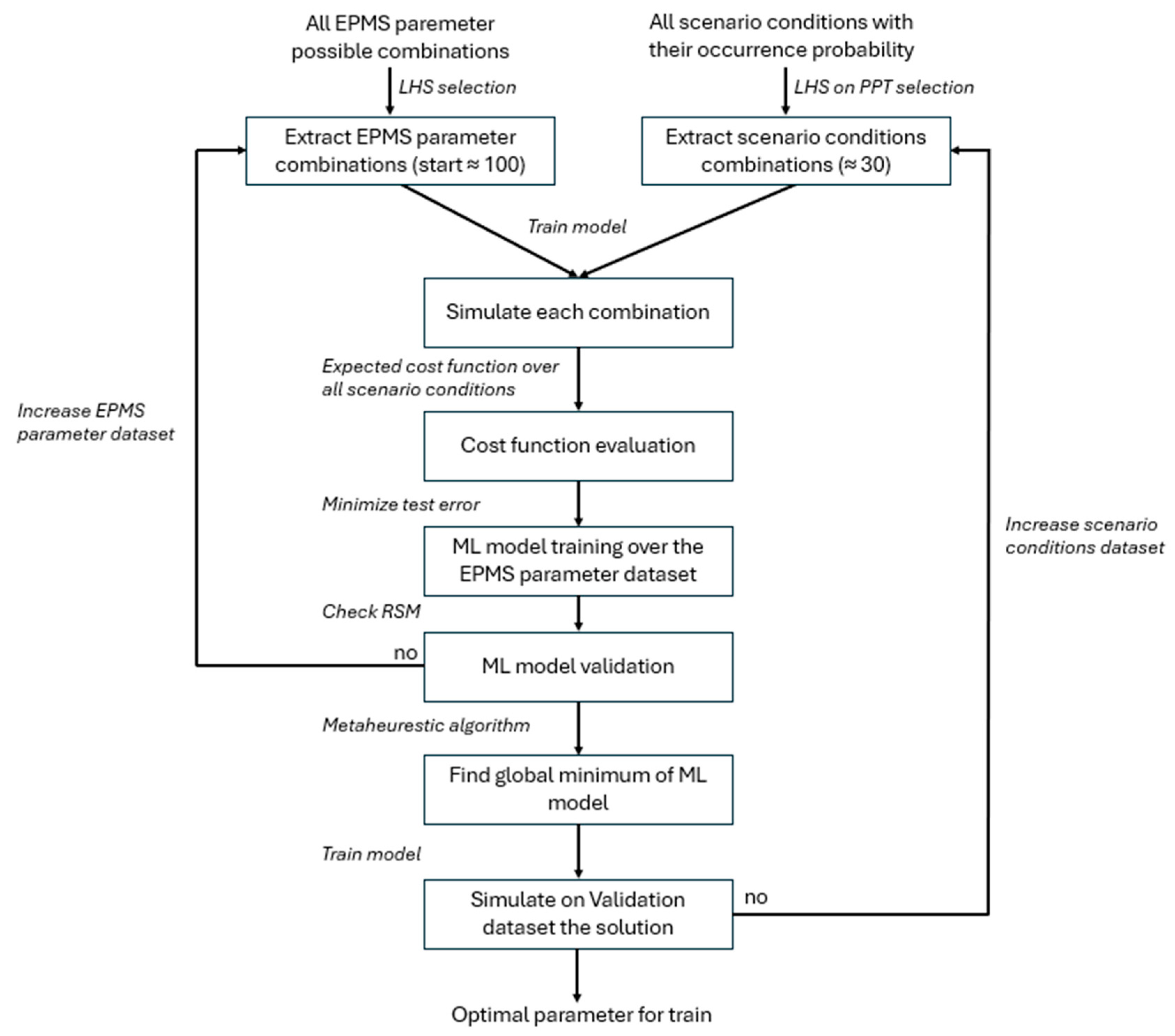

The number of possible combinations is too high (>10

10 with duration step of 30 s and ratio step of 1%). Simulating every combination is therefore computationally infeasible. We need to significantly reduce the number of combinations. While the Taguchi method could be considered, our goal is not to analyze parameter sensitivity but rather to train a machine learning (ML) model. To ensure the generalizability of the model, it is essential to sample the parameter space uniformly and comprehensively. Latin Hypercube Sampling (LHS) will be used. This is a statistical method designed to ensure the even coverage of the parameter space [

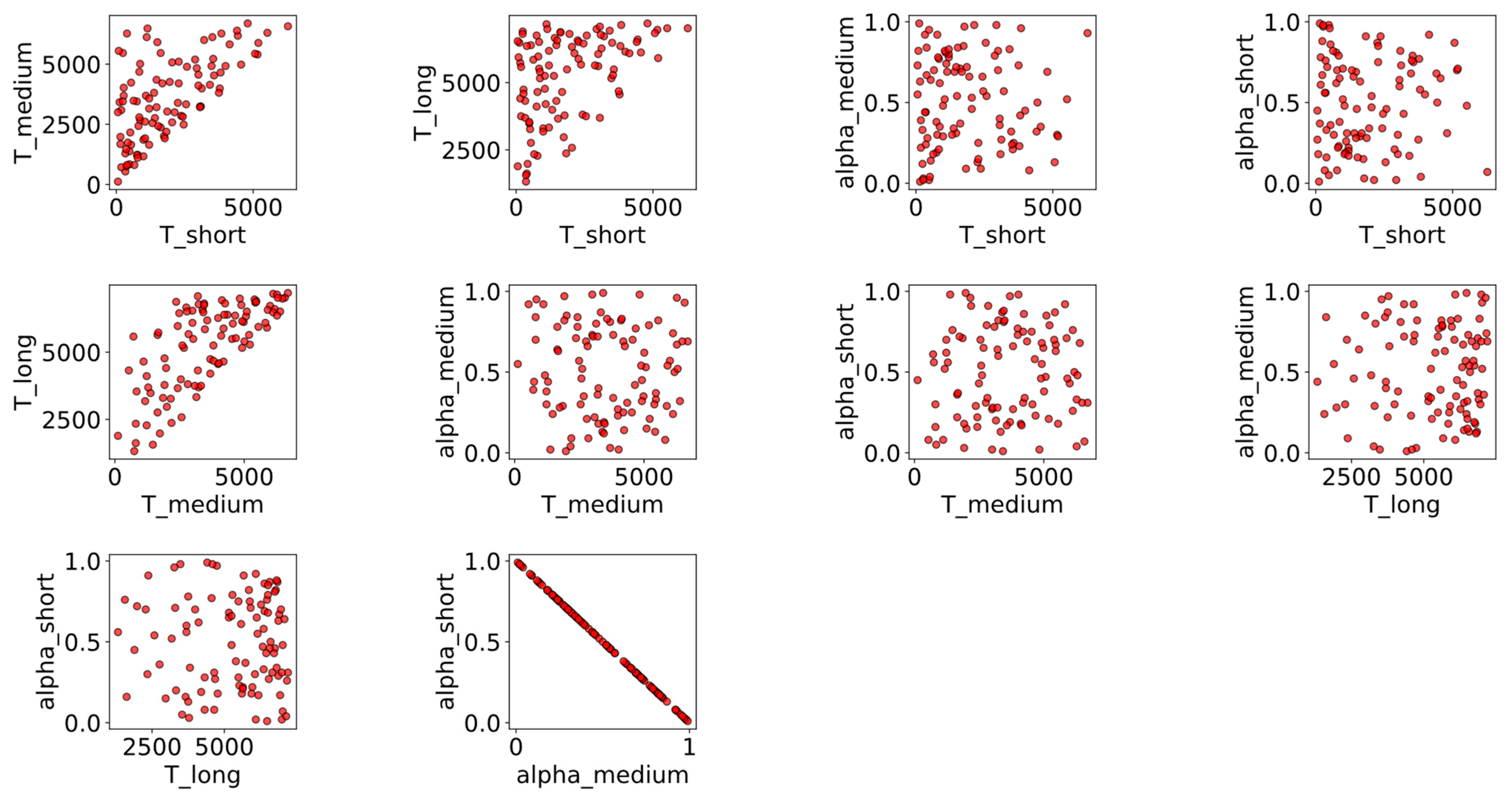

17]. Thanks to the LHS, the number of parameter combinations can be reduced to 100 for the initial phase. This number is sufficient to begin training the ML model. The representation of the selected combinations is given on

Figure 5.
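As an illustration of the sampling step, the sketch below implements basic Latin Hypercube Sampling in pure Python: each dimension is split into `n` equal strata, one value is drawn inside each stratum, and the strata are shuffled independently per dimension. The bounds `LOWER`/`UPPER` are hypothetical placeholders, and the constraints of Equations (8)–(11) are not applied; the authors' actual sampler and bounds may differ.

```python
import random


def latin_hypercube(n, d, seed=None):
    """Return n points in [0, 1)^d with exactly one point per stratum in every dimension."""
    rng = random.Random(seed)
    columns = []
    for _ in range(d):
        # Split [0, 1) into n equal strata and draw one value uniformly inside each.
        col = [(i + rng.random()) / n for i in range(n)]
        # Shuffle independently per dimension so strata are paired at random.
        rng.shuffle(col)
        columns.append(col)
    # Transpose the d columns into n points of dimension d.
    return [tuple(col[j] for col in columns) for j in range(n)]


def scale(point, lower, upper):
    """Map a unit-cube point affinely onto (hypothetical) parameter bounds."""
    return tuple(lo + x * (hi - lo) for x, lo, hi in zip(point, lower, upper))


# Hypothetical bounds: three time windows (s) followed by two ratios (-).
LOWER = (60.0, 30.0, 5.0, 0.0, 0.0)
UPPER = (3600.0, 600.0, 60.0, 1.0, 1.0)

design = [scale(p, LOWER, UPPER) for p in latin_hypercube(100, 5, seed=1)]
```

The affine scaling preserves the one-point-per-stratum property; any coupled constraints between parameters would require an additional mapping or filtering step that is not shown here.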

We observe that with only 100 points distributed across five constrained dimensions, the sampling appears visually to cover the entire constrained space relatively evenly.

To estimate whether this dataset sufficiently covers the 5D constrained space, we compute the maximum distance from any point in the space to the nearest of our 100 sampled points. In a normalized 5D space, the theoretical maximum distance is the diagonal of the unit hypercube, $\sqrt{5} \approx 2.24$. In our case, the observed maximum distance is 0.4, which represents approximately 18% of the theoretical maximum. This suggests a good level of coverage.
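This coverage metric can be estimated numerically. The hedged sketch below approximates the largest distance from any point of the unit hypercube to its nearest sample by Monte Carlo probing. It works on the unconstrained unit cube (an assumption, since the constrained region of Equations (8)–(11) is not modeled), and because only a finite number of probe points is evaluated, it returns a lower bound on the true maximum distance. The normalizing constant is the diagonal of the unit 5-cube, √5.

```python
import math
import random


def covering_radius(samples, n_probe=20000, seed=None):
    """Monte Carlo estimate of the largest distance from the unit cube to the nearest sample.

    Only n_probe random points are checked, so the result is a lower bound.
    """
    rng = random.Random(seed)
    d = len(samples[0])
    worst = 0.0
    for _ in range(n_probe):
        probe = [rng.random() for _ in range(d)]
        nearest = min(math.dist(probe, s) for s in samples)
        worst = max(worst, nearest)
    return worst


# Illustration on a purely random 100-point design in 5D (hypothetical data).
rng = random.Random(0)
samples = [tuple(rng.random() for _ in range(5)) for _ in range(100)]
estimate = covering_radius(samples, n_probe=2000, seed=1)

# Ratio reported in the text: observed maximum distance over the 5D diagonal.
ratio = 0.4 / math.sqrt(5)  # about 0.18
```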

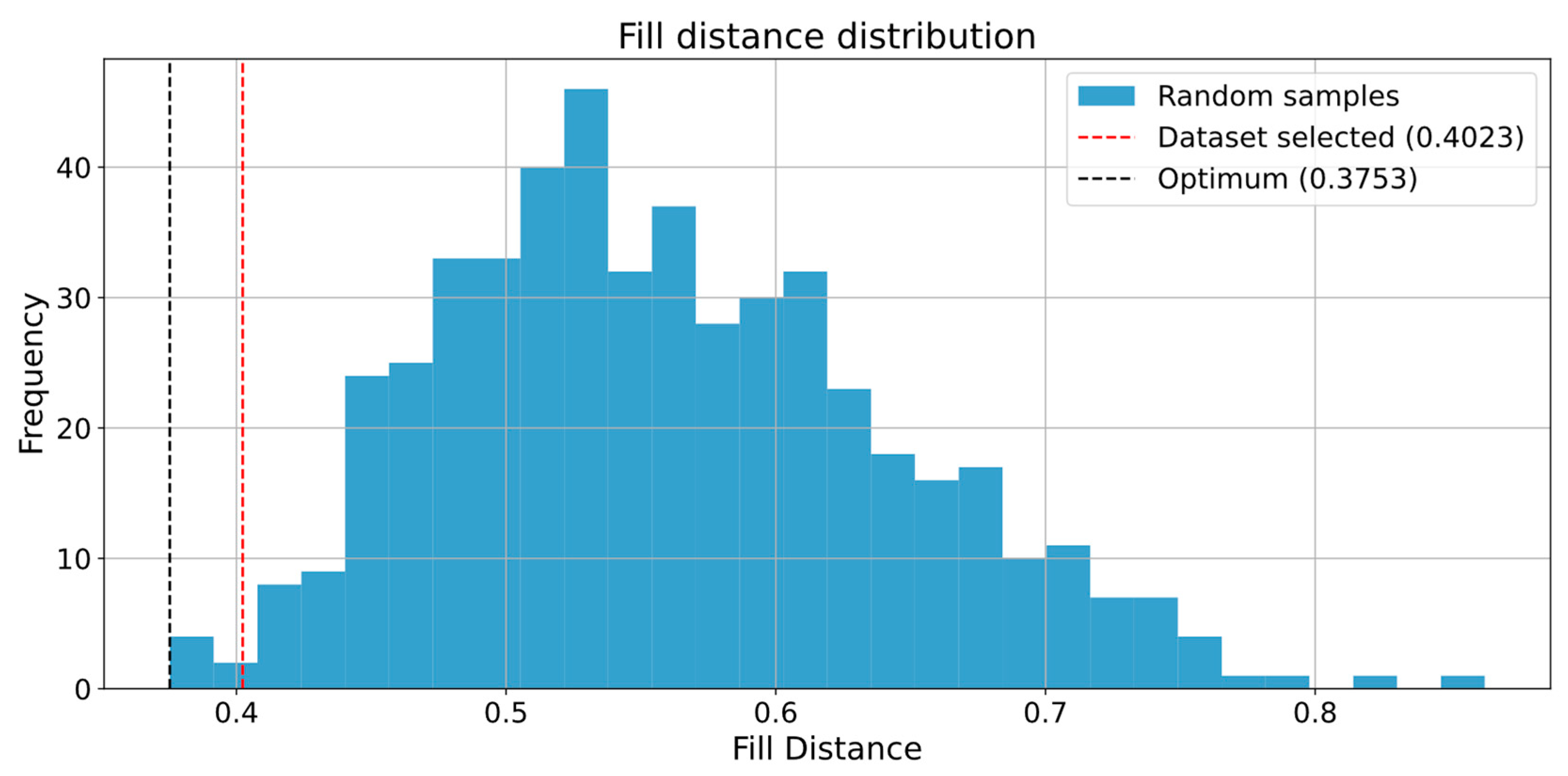

To further assess this, we compare our result with 500 random draws of 100 points within the same 5D constrained space. The results are shown in Figure 6. The selected solution is very close to the optimum (0.4 vs. 0.38), which indicates that the coverage achieved with the 100 points sampled from our 5D constrained space is nearly optimal.

3.2.2. Scenario Conditions

To ensure the robustness of the optimization process, it is not sufficient to optimize all parameters based on a single scenario. The optimization must account for the full range of possible scenario conditions. These conditions include:

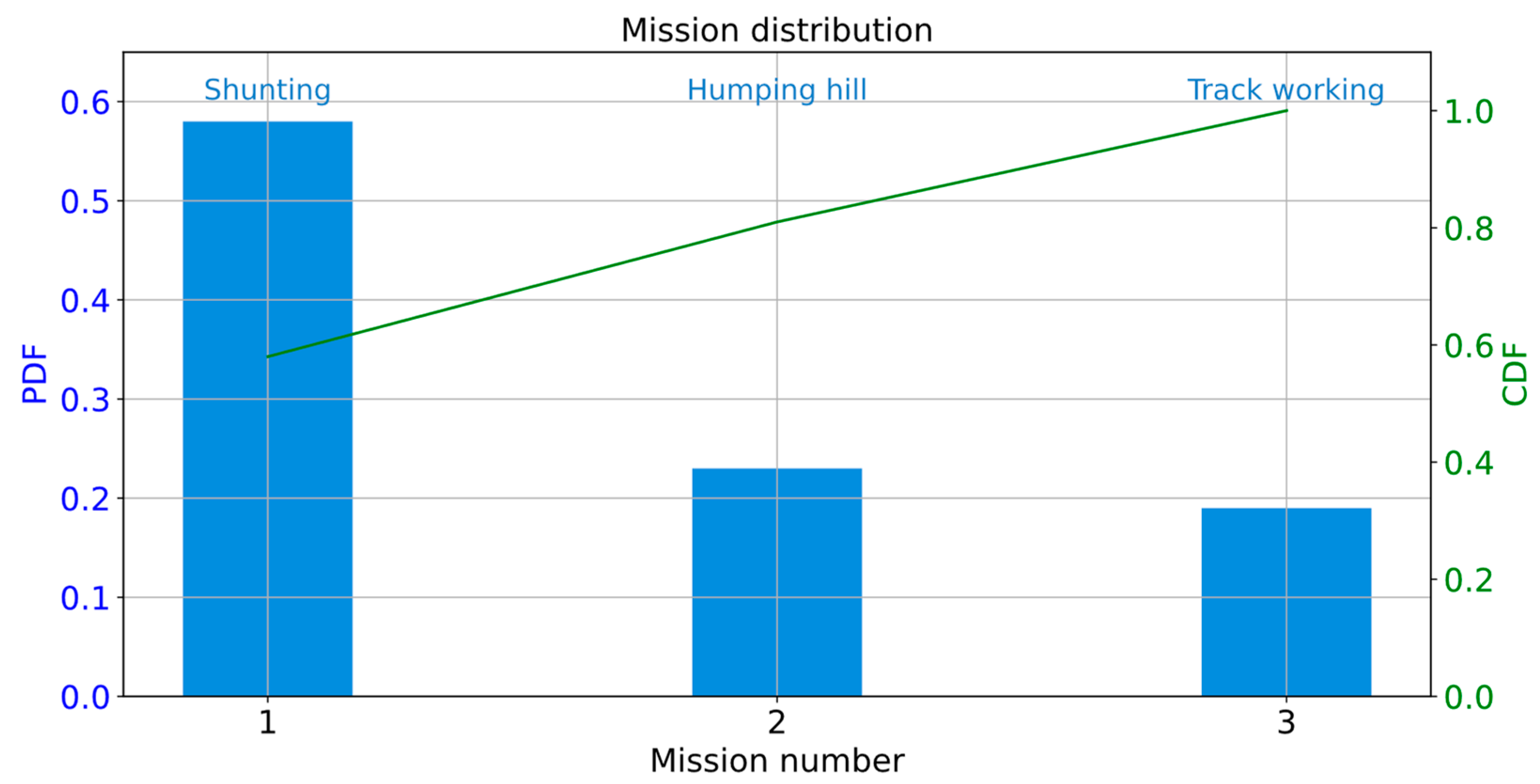

For the locomotive study, three representative missions have been selected:

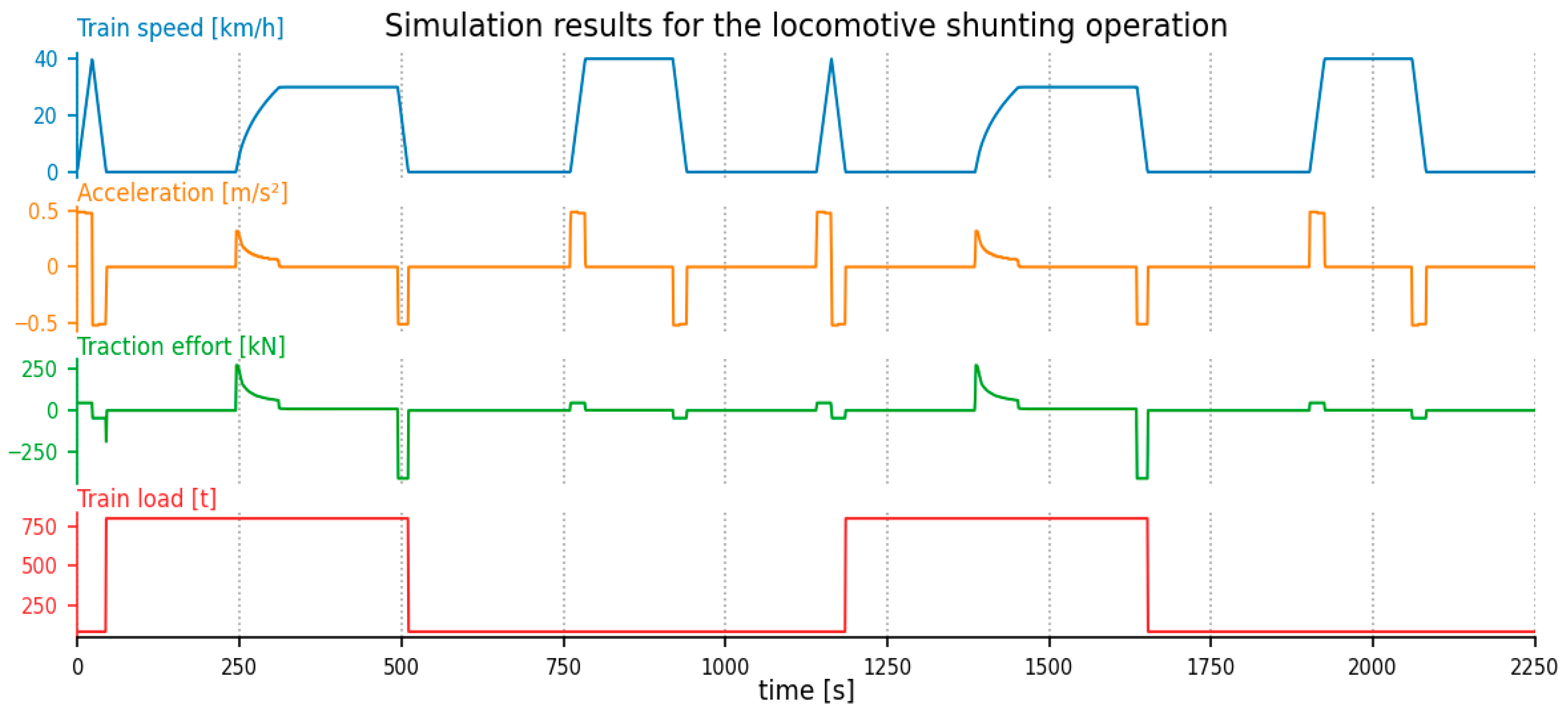

In this mission, the locomotive retrieves wagons of varying loads. The train runs empty in one direction and fully loaded in the other, with the load ranging from 500 to 2000 tons. This round trip is repeated 10 times consecutively;

- 2.

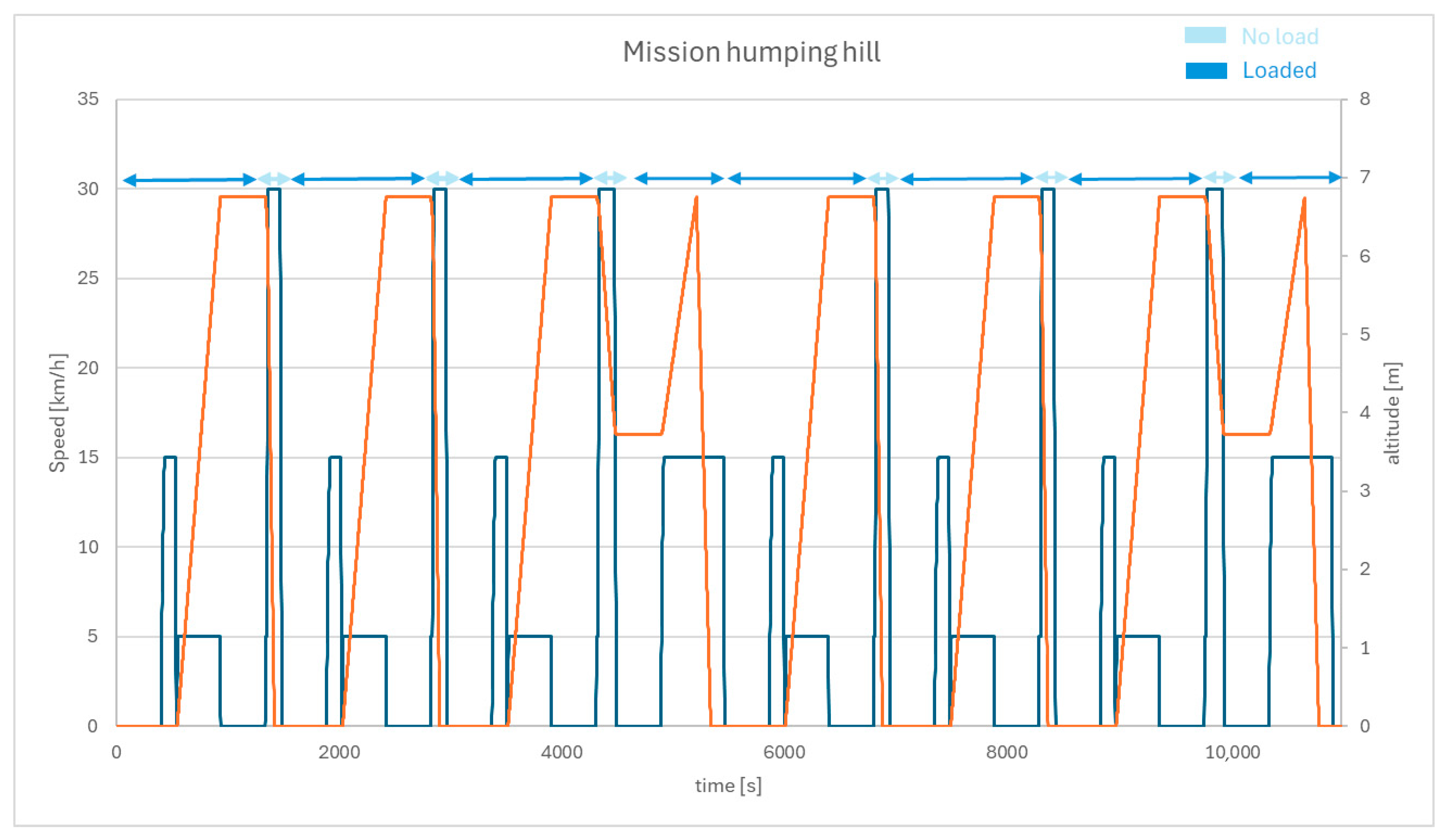

Humping Hill Mission

The locomotive pushes wagons to the top of a humping hill and then releases them. As with the previous mission, the load can vary between 500 and 2000 tons. The potential energy gained at the summit is used to sort the wagons;

- 3.

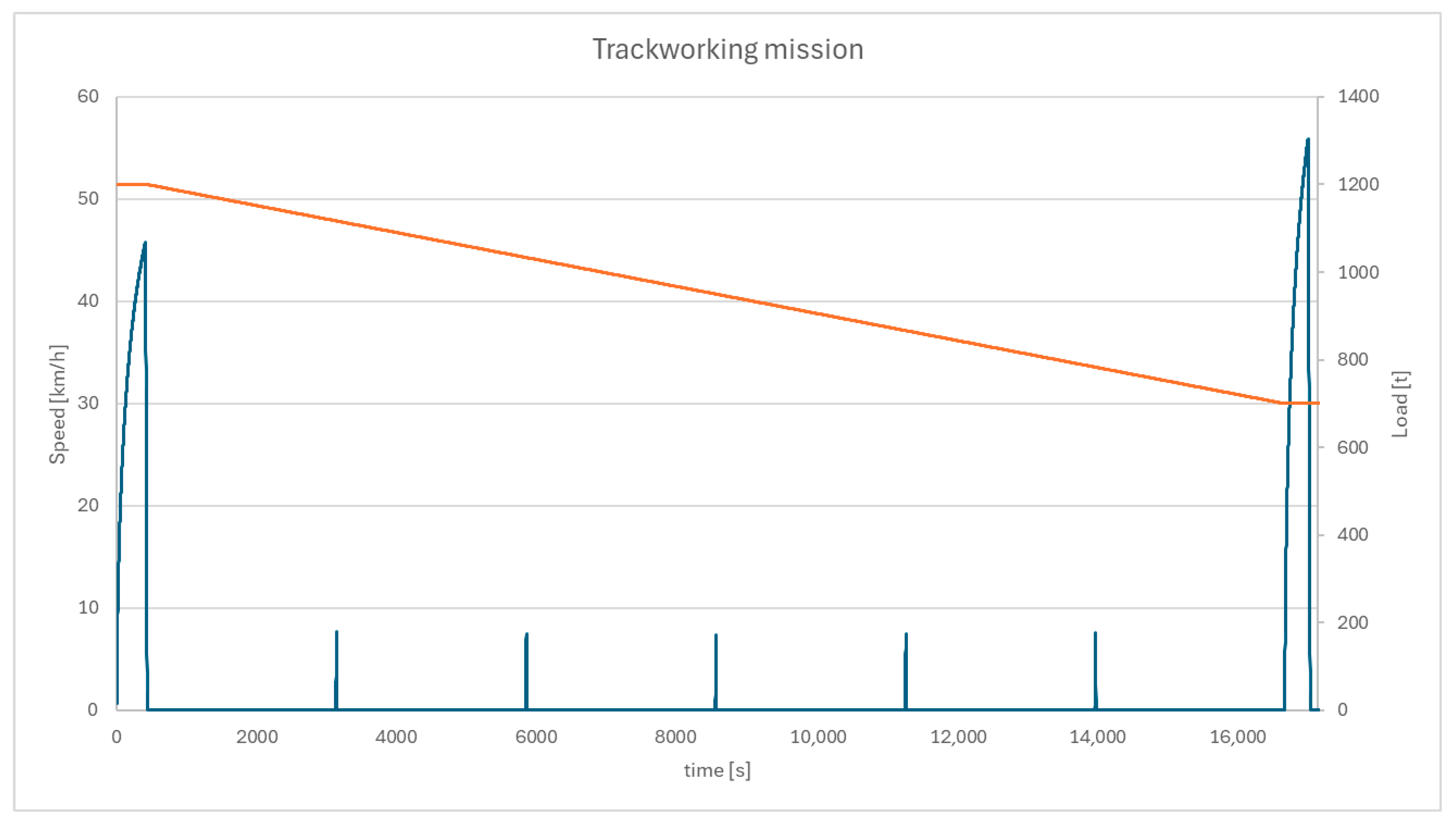

Track Work Mission

This mission simulates the locomotive towing a maintenance machine for track operations and involves short back-and-forth movements. The convoy weighs approximately 1200 tons at the start; after materials have been used for track repairs, its weight on the return trip is reduced to around 800 tons.

Figure 7 illustrates the Probability Density Function (PDF) and the Cumulative Distribution Function (CDF) of mission occurrence.

For the wagon mass load, the locomotive is specified to haul up to 2000 tons. For this study, we use the wagon definition given in Table 1. When the loaded mass changes, other characteristics of the train also change: resistance to motion, train length and mechanical braking effort. Each wagon adds its own resistance to motion and its own length to the convoy. In addition, each wagon has brake disks that contribute to mechanical braking.

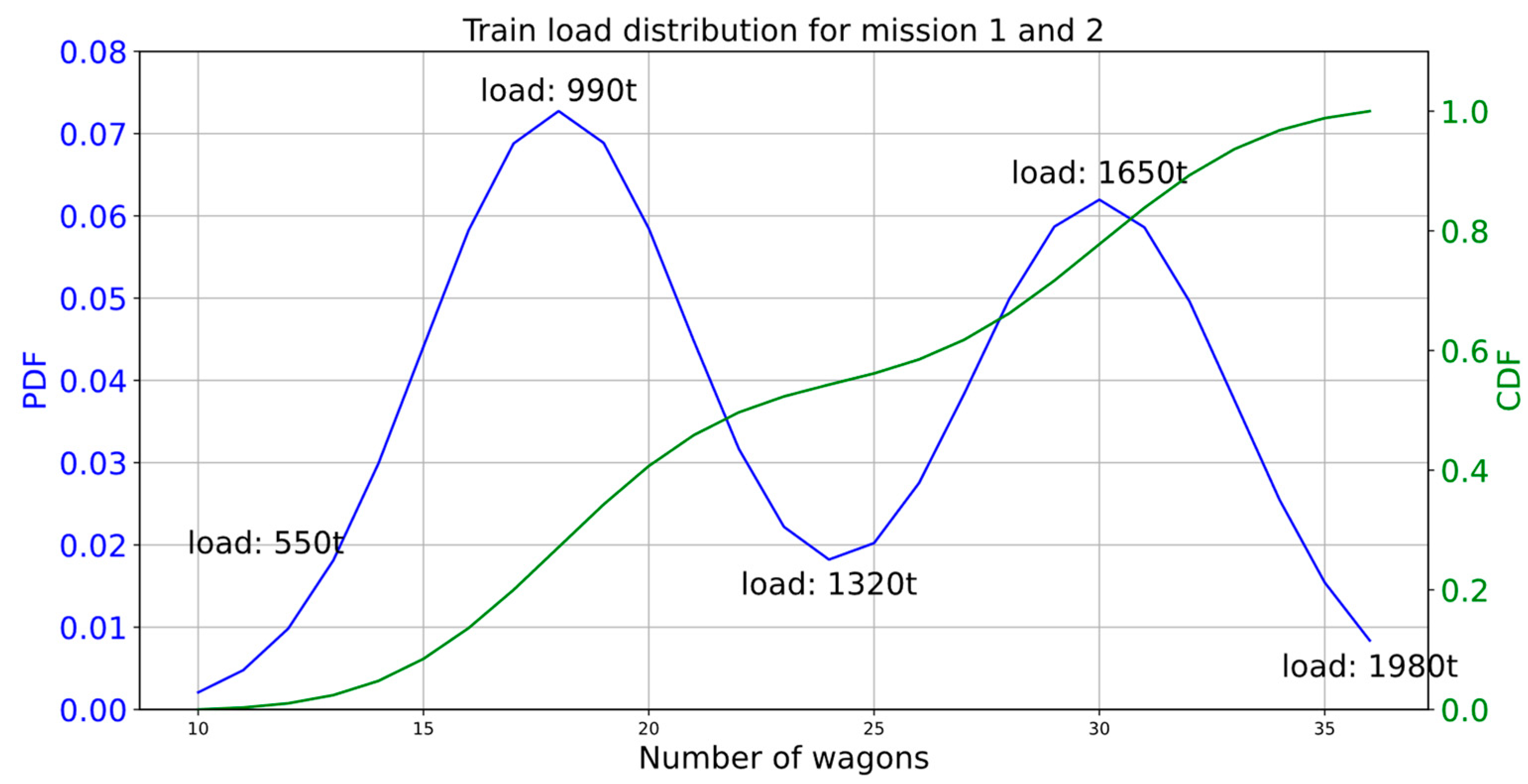

The shunting missions involve between 10 and 36 wagons. Customer data indicate that the most likely situations are around 1000 tons (≈18 wagons) and between 1800 and 2000 tons (≈33 wagons). To represent this bimodal law, we summed two weighted normal distributions centered on 18 wagons and 30 wagons. The second peak was deliberately shifted to 30 wagons and given a wider spread, so that it still yields a high density at 33 wagons while covering a broader range of loads. The bimodal law is finally truncated between 10 and 36 wagons. The bimodal probability density law is given by Equations (12)–(16) below.

Consider the probability that a random variable X takes the value x. We want X to follow a normal distribution with a given mean and standard deviation; its probability density function is then defined by Equation (13), and its cumulative distribution function by Equations (14) and (15). The probability of having x wagons is obtained by combining two normal distributions, each with its own mean and standard deviation. The two components are not equally weighted, and their relative weights are set by two weighting parameters. The parameter values are given in Table 2. The resulting probability law for the mass load is shown in Figure 8.
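The following Python sketch shows one way to build such a truncated bimodal law over integer wagon counts. It assumes a weighted sum of two normal densities evaluated at each wagon count from 10 to 36 and renormalized so the probabilities sum to one, which is a discrete approximation of the truncation. The means of 18 and 30 wagons come from the text; the standard deviations and weights are hypothetical placeholders standing in for the values of Table 2.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def bimodal_wagon_pmf(mu1, sigma1, mu2, sigma2, w1, w2, n_min=10, n_max=36):
    """Weighted two-normal mixture at integer wagon counts, truncated and renormalized."""
    raw = {
        n: w1 * normal_pdf(n, mu1, sigma1) + w2 * normal_pdf(n, mu2, sigma2)
        for n in range(n_min, n_max + 1)
    }
    total = sum(raw.values())
    return {n: p / total for n, p in raw.items()}


def to_cdf(pmf):
    """Cumulative distribution over the sorted wagon counts."""
    acc, out = 0.0, {}
    for n in sorted(pmf):
        acc += pmf[n]
        out[n] = acc
    return out


# Means from the text; sigma and weight values are hypothetical (see Table 2).
pmf = bimodal_wagon_pmf(mu1=18, sigma1=3.0, mu2=30, sigma2=4.0, w1=0.5, w2=0.5)
cdf = to_cdf(pmf)
```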

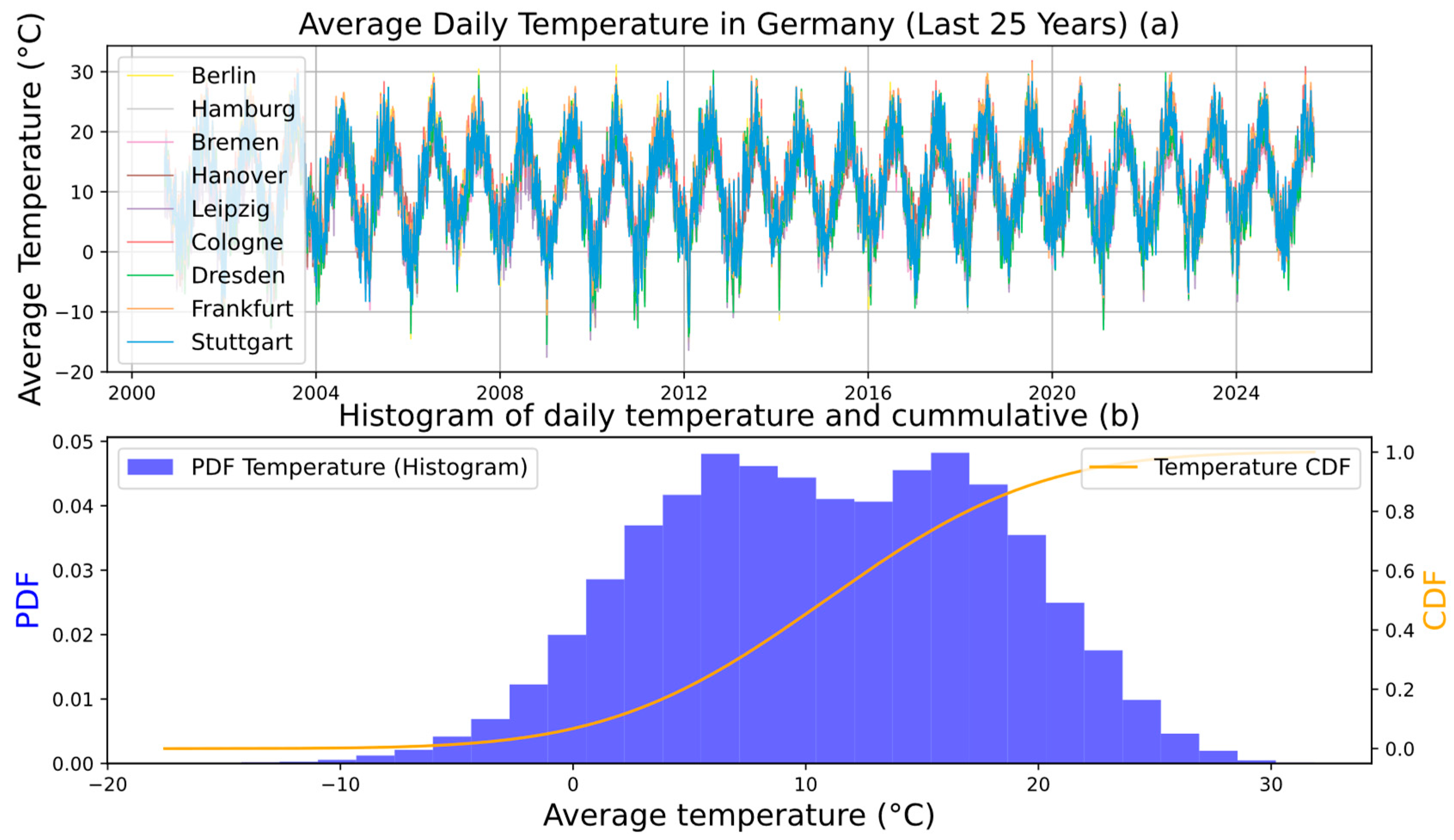

The locomotive is designed to operate throughout Germany. To account for German climatic conditions, we extracted meteorological data covering the last 25 years from Meteostats [18] for nine major German cities (Bremen, Berlin, Cologne, Dresden, Frankfurt, Hamburg, Hanover, Leipzig and Stuttgart). The resulting PDF and CDF are shown in Figure 9. As the distribution does not appear to follow any standard probability law, we chose not to fit a classical distribution and instead use the computed histogram directly.
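Using the histogram directly amounts to sampling from an empirical distribution. A minimal sketch, assuming the histogram is stored as bin edges and counts: a bin is picked with probability proportional to its count, and a value is then drawn uniformly inside that bin. The bin edges and counts below are invented placeholders (for example, an ambient temperature histogram), not the Meteostats data.

```python
import random


def histogram_sampler(bin_edges, counts, seed=None):
    """Return a function that draws values from an empirical histogram.

    A bin is chosen with probability proportional to its count, then a value
    is drawn uniformly within that bin.
    """
    if len(bin_edges) != len(counts) + 1:
        raise ValueError("need exactly one more edge than counts")
    rng = random.Random(seed)
    bins = range(len(counts))

    def sample():
        i = rng.choices(bins, weights=counts)[0]
        return rng.uniform(bin_edges[i], bin_edges[i + 1])

    return sample


# Hypothetical temperature histogram in degC; NOT the Meteostats data.
edges = [-15, -5, 5, 15, 25, 35]
counts = [40, 250, 380, 260, 70]
draw_temperature = histogram_sampler(edges, counts, seed=7)
temps = [draw_temperature() for _ in range(1000)]
```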

Concerning the SOH of the components, the ESU and the FC are aged together. Since aging is assumed to be linear over time, we consider two states: BoL (Beginning of Life) and EoL (End of Life). For the battery, aging is simple: the internal resistance doubles between BoL and EoL, and the capacity is reduced by 20% to reach 80% of the BoL capacity at EoL. For the FC, aging is more complex: the polarization curve and the efficiency are updated, but the auxiliary consumption cannot be shared for confidentiality reasons. As the components age linearly, BoL and EoL are assigned the same occurrence probability of 50% each.
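The battery aging rules stated above translate directly into a small helper. In the sketch below, the end-of-life factors (resistance doubled, capacity at 80%) and the 50/50 BoL/EoL draw come from the text, whereas the linear interpolation for intermediate aging fractions, the function names and the numerical values are assumptions; the FC update is omitted because its details are not given.

```python
import random


def battery_aged(r_bol, c_bol, s):
    """Return (internal resistance, capacity) at aging fraction s.

    s = 0 is BoL and s = 1 is EoL; values in between are a linear
    interpolation (assumption).
    """
    if not 0.0 <= s <= 1.0:
        raise ValueError("aging fraction s must lie in [0, 1]")
    resistance = r_bol * (1.0 + s)      # 1x at BoL, 2x at EoL
    capacity = c_bol * (1.0 - 0.2 * s)  # 100 % at BoL, 80 % at EoL
    return resistance, capacity


def sample_aging_state(rng):
    """Pick BoL or EoL with equal probability (50 % each)."""
    return rng.choice(("BoL", "EoL"))


# Hypothetical BoL values: 0.05 ohm internal resistance, 400 kWh capacity.
rng = random.Random(0)
state = sample_aging_state(rng)
r, c = battery_aged(r_bol=0.05, c_bol=400.0, s=1.0 if state == "EoL" else 0.0)
```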

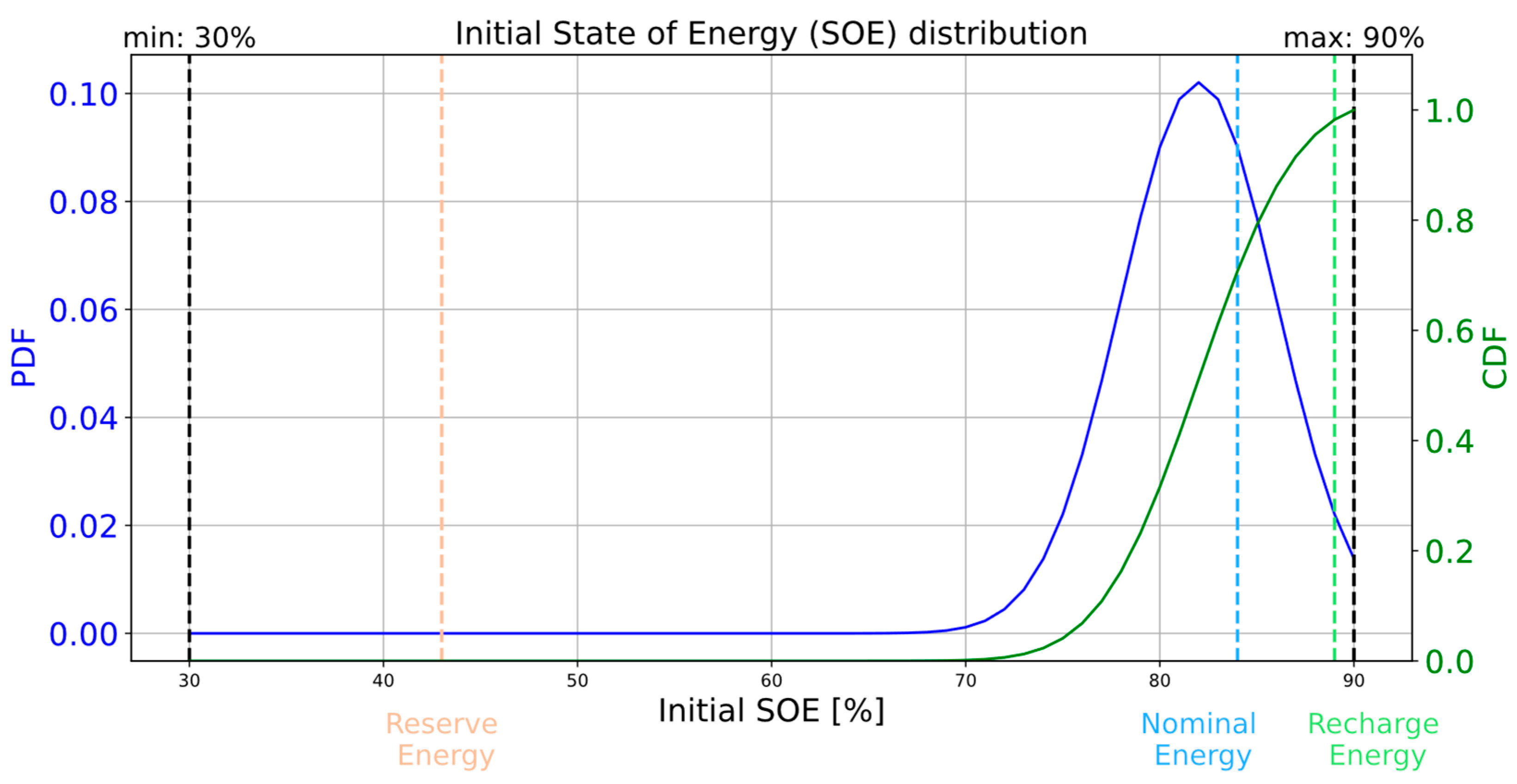

The last two factors in the scenario relate to the initialization of the simulation. They account for the fact that any number of situations could have occurred prior to the current mission. The first factor is the initial state of energy at the beginning of the mission. Although the EPMS is designed to regulate the state of energy close to the nominal value (84%), a normal distribution centered at 84% would give too high a probability of values near 90%, the maximum allowed energy level. Moreover, a mission is more likely to end with an undercharged battery than with an overcharged one. The mean value was therefore set at 82%. A standard deviation of 5% was chosen to reflect the variability in the final charge level after each mission. Finally, the normal distribution is truncated to the operational range of the battery, between 30% and 90%. The corresponding probability density function is shown in Figure 10.
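A truncated normal law of this kind can be sampled by simple rejection. The sketch below uses the mean (82%), standard deviation (5%) and bounds (30% to 90%) stated above; the rejection approach and the function name are illustrative choices, not necessarily the authors' implementation. Because the upper bound lies only 1.6 standard deviations above the mean, about 94.5% of draws are accepted, and the truncation pulls the effective mean slightly below 82%, to roughly 81.4%.

```python
import random


def sample_initial_soe(rng, mean=82.0, sd=5.0, lo=30.0, hi=90.0):
    """Draw an initial state of energy (%) from a normal law truncated to [lo, hi].

    Rejection sampling: redraw until the value lies in the operating range.
    """
    while True:
        x = rng.gauss(mean, sd)
        if lo <= x <= hi:
            return x


rng = random.Random(42)
draws = [sample_initial_soe(rng) for _ in range(10000)]
mean_draw = sum(draws) / len(draws)
```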

The last parameter is the initialization of the energy management law. Because the law averages the past power demand, the history preceding the mission may affect the behavior of the EPMS, mainly during the first minutes. As missions are unpredictable, the initial value follows a uniform distribution between the minimum and maximum possible power.

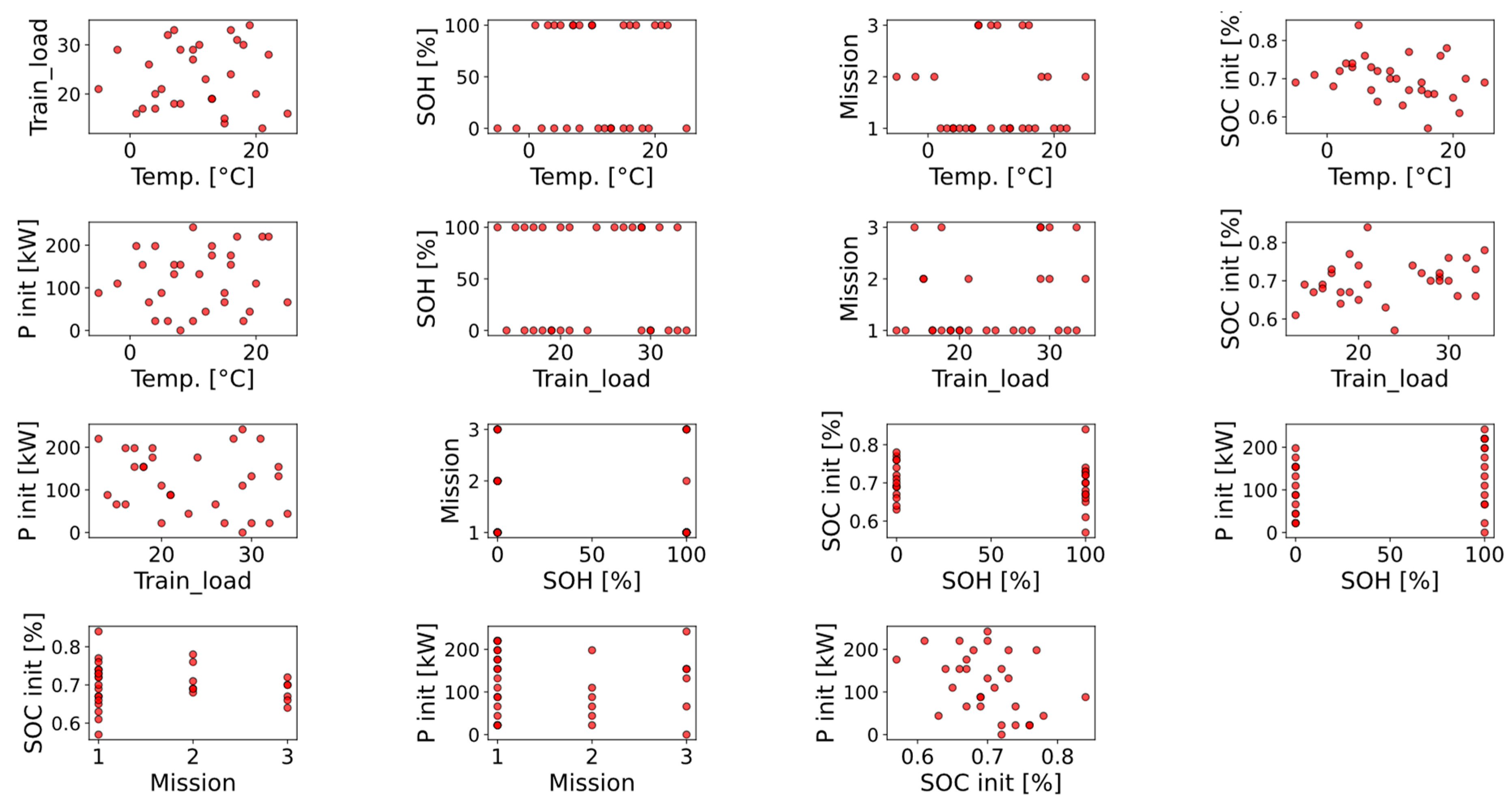

Finally, the 30 defined scenarios are shown in Figure 11. The points appear to be spread over each dimension, but the small number of points leaves large empty regions.

In Figure 11, the first subgraph (row 1, column 1) shows the train load and temperature for the 30 scenarios. Despite the limited number of points, both scenario conditions are well distributed. The 13th subgraph (row 4, column 1) shows the three missions with their corresponding initial state of charge (SOC). Since the first mission is more likely to occur, we ensured broader coverage of initial SOC values for this mission; missions 2 and 3, in contrast, focus on the most probable initial SOC values.