Automated Taxonomy Construction Using Large Language Models: A Comparative Study of Fine-Tuning and Prompt Engineering

Abstract

1. Introduction

1.1. Motivation

1.2. Problem Statement

1.3. Research Questions

- How effectively can LLMs identify and extract the vocabularies present in a domain-specific corpus?

- To what degree can the two competing LLM methodologies, fine-tuning and prompt engineering, implicitly determine the correct hierarchical knowledge-representation structure among these vocabularies?

- Which approach yields a more accurate, stable, and logically sound multi-level taxonomy, and what are the inherent trade-offs between them?

1.4. Approaches

2. Related Work

3. Data Acquisition and Preprocessing

3.1. Data Source and Cleaning

- Data Acquisition: Product information was systematically acquired using eBay’s Marketplace RESTful API [53]. Endpoints for retrieving item summaries across various categories were queried to gather an initial set of 5000 product listings, ensuring sufficient size and diversity for analysis and model training.

- Feature Selection: From the raw JSON data retrieved via the API, only the product title field was retained for its descriptive value. This field was designated as the ‘Product Description’. All other metadata (item IDs, pricing, seller details, condition, etc.) were discarded to focus the analysis on the textual content used for classification.

- Deduplication and Null Handling: To ensure data quality and uniqueness, entries with identical ‘Product Description’ texts were removed. Additionally, records with missing or null descriptions were filtered out. This refinement process reduced the dataset to approximately 4000 unique product descriptions, forming the core corpus for the study.

- Text Normalization: Standard text normalization procedures were applied to the ‘Product Description’ field. This included converting all text to lowercase, using regular expressions to remove special characters, punctuation, and extraneous symbols, and normalizing whitespace by collapsing multiple spaces into single spaces. This standardization minimizes vocabulary variations unrelated to semantic meaning.
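The cleaning steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the `title` key, the function names, and the exact regular expression are assumptions, and the pattern shown keeps only ASCII letters, digits, and whitespace.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, replace special characters with spaces, collapse whitespace."""
    text = text.lower()
    # Assumed pattern: anything that is not a-z, 0-9, or whitespace becomes a space.
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def build_corpus(listings: list[dict]) -> list[str]:
    """Keep only the title, drop null/empty and duplicate titles, then normalize."""
    seen: set[str] = set()
    corpus: list[str] = []
    for item in listings:
        title = item.get("title")  # hypothetical field name for the product title
        if not title or not title.strip():
            continue  # null handling
        if title in seen:
            continue  # deduplication on identical description text
        seen.add(title)
        corpus.append(normalize(title))
    return corpus


if __name__ == "__main__":
    sample = [
        {"title": "AREO Yoga Pant!!", "price": 10},
        {"title": "AREO Yoga Pant!!"},
        {"title": None},
    ]
    print(build_corpus(sample))
```

Deduplication here runs on the raw title, following the order of the steps as listed; deduplicating after normalization would additionally merge titles that differ only in case or punctuation.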

3.2. Keyword Extraction and Refinement

- Keyword Extraction: The KeyBERT library [54] was employed to extract the most representative keywords. This technique uses the ‘all-MiniLM-L6-v2’ Sentence-BERT model [51] to generate contextual embeddings for each description. Based on these embeddings, KeyBERT identifies and extracts the short keywords or keyphrases that best capture the semantic essence of the product. For this study, the model was configured to extract the single most relevant 1–2 word phrase from each description. Because candidates are ranked by embedding similarity to the description rather than by raw term counts, this representation is intended to be more semantically grounded than simple frequency-based techniques.

- Keyword Cleaning: The keywords extracted by KeyBERT underwent a final semi-automatic cleaning step. This involved a review of the extracted terms to filter out overly generic words (e.g., “item”, “new”, and “sale”) or any remaining artifacts from the extraction process that lack discriminative power for categorization. This step, while involving some manual oversight, was crucial to ensure that the keywords forming the basis for subsequent clustering were both semantically meaningful and relevant for distinguishing between different product types. Figure 4 illustrates examples of original product descriptions and the corresponding cleaned keywords extracted by this process.
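A minimal sketch of this extraction and cleaning step is given below, assuming the `keybert` package is installed. The helper names and the `GENERIC_TERMS` set are illustrative assumptions (the set contains only the three examples named above), and the filter covers only the automatic part of the cleaning; the manual review described in the text is not modelled.

```python
from typing import Optional

# Example generic terms taken from the text; a real list would be longer (assumption).
GENERIC_TERMS = {"item", "new", "sale"}


def load_model():
    """Create a KeyBERT model backed by all-MiniLM-L6-v2 (imported lazily)."""
    from keybert import KeyBERT

    return KeyBERT(model="all-MiniLM-L6-v2")


def extract_keyword(description: str, kw_model) -> Optional[str]:
    """Return the single top-ranked 1-2 word keyphrase, or None if none is found."""
    results = kw_model.extract_keywords(
        description,
        keyphrase_ngram_range=(1, 2),  # 1-2 word phrases
        top_n=1,                       # single most relevant phrase
    )
    # KeyBERT returns (phrase, score) pairs for a single document.
    return results[0][0] if results else None


def clean_keyword(keyword: Optional[str]) -> Optional[str]:
    """Remove generic tokens; return None if nothing discriminative remains."""
    if not keyword:
        return None
    kept = [tok for tok in keyword.split() if tok not in GENERIC_TERMS]
    return " ".join(kept) or None
```

In use, `clean_keyword(extract_keyword(text, load_model()))` would be applied to each normalized description, and keywords that come back as `None` would be set aside for manual review.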

4. Methodology

4.1. Approach 1: Chain-of-Layer Clustering and LLM Fine-Tuning

4.2. Approach 2: Prompt Engineering for Context-Aware Taxonomy

- Level 1 (Category I) Generation: For each initial keyword cluster identified in the previous step, a specific prompt was constructed. This prompt provided the LLM with a set of representative keywords (e.g., the top 15 most frequent keywords) from that cluster as the primary context. The instruction within the prompt explicitly asked the LLM to generate a concise (ideally 2-word), professional-sounding category name suitable for the eBay e-commerce context. Emphasis was placed on ensuring that the name was relevant to product classification and unambiguous. A system message reinforcing the LLM’s role as a “product categorization expert” could optionally be included to frame the task. To promote consistency and reduce randomness in the naming, a low generation temperature (e.g., 0.3) was used.

- Level 2 (Category II) Generation: Once Category I names were generated for all initial clusters, the products were regrouped based on these assigned Category I labels. For each resulting group (containing multiple similar Category I names), a new prompt was formulated. This prompt presented the list of constituent Category I names as context and instructed the LLM to abstract a broader common theme, generating a suitable 2–3 word Category II name encompassing the characteristics of the input Category I names. This abstraction process is illustrated conceptually in Figure 7.

- Level 3 (Category III) Generation: The iterative process was repeated one final time. Products were grouped according to their assigned Category II names. For each of these broader groups, a prompt containing the relevant Category II names was sent to the LLM, requesting the generation of the most general top-level Category III name appropriate for that collection of categories.
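The three-level prompting loop can be illustrated with a small prompt builder. The sketch below is an assumption-laden example: the prompt wording is hypothetical and does not reproduce the prompts in Appendix A, the chat-message format is one common convention rather than a confirmed detail of the study, and the grouping of child categories is assumed to be supplied by the caller.

```python
from collections import Counter

# Hypothetical wording; only the "product categorization expert" role comes from the text.
SYSTEM_MESSAGE = "You are a product categorization expert."
GENERATION_KWARGS = {"temperature": 0.3}  # low temperature, as suggested in the text


def level1_messages(cluster_keywords: list[str], top_k: int = 15) -> list[dict]:
    """Build a Category I naming prompt from the most frequent keywords of one cluster."""
    top = [kw for kw, _ in Counter(cluster_keywords).most_common(top_k)]
    user = (
        "The following keywords describe products in one eBay cluster: "
        + ", ".join(top)
        + ". Suggest one concise, professional category name (ideally 2 words)."
    )
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": user},
    ]


def abstraction_messages(child_names: list[str], level: str, words: str) -> list[dict]:
    """Build a Category II or III prompt that abstracts over lower-level names."""
    user = (
        "These category names belong together: "
        + "; ".join(child_names)
        + f". Suggest one broader {level} name ({words} words) that covers all of them."
    )
    return [
        {"role": "system", "content": SYSTEM_MESSAGE},
        {"role": "user", "content": user},
    ]
```

The same `abstraction_messages` helper serves both upper levels: it would be called once per group of Category I names to obtain Category II, and once per group of Category II names to obtain Category III.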

4.3. Evaluation Metrics

5. Results and Discussion

5.1. Fine-Tuning Approach

5.2. Prompt-Engineering Approach

5.3. Task-Based Evaluation

5.3.1. Qualitative Analysis

5.3.2. Quantitative Alignment

5.4. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| BERT | Bidirectional Encoder Representations from Transformers |
| CoL | Chain-of-Layer |
| GPU | Graphics Processing Unit |
| GPT | Generative Pre-Trained Transformer |
| HAC | Hierarchical Agglomerative Clustering |
| IDF | Inverse Document Frequency |
| JSON | JavaScript Object Notation |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| PEFT | Parameter-Efficient Fine-Tuning |
| QLoRA | Quantized Low-Rank Adaptation |
| TF–IDF | Term Frequency–Inverse Document Frequency |

Appendix A. Prompts

Appendix A.1. Prompt for LLM-Based Category Naming

Appendix A.2. Prompt for Level 1 (Category I) Generation

Appendix A.3. Prompt for Level 2 (Category II) Generation

Appendix A.4. Prompt for Level 3 (Category III) Generation

References

- Tahseen, Q. Taxonomy-The Crucial yet Misunderstood and Disregarded Tool for Studying Biodiversity. J. Biodivers. Bioprospecting Dev. 2014, 1, 3. [Google Scholar] [CrossRef]

- Ross, N.J. “What’s That Called?” Folk Taxonomy and Connecting Students to the Human-Nature Interface. In Innovative Strategies for Teaching in the Plant Sciences; Quave, C.L., Ed.; Springer: New York, NY, USA, 2014; pp. 121–134. [Google Scholar] [CrossRef]

- Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Barnes, N.; Mian, A.S. A Comprehensive Overview of Large Language Models. arXiv 2023, arXiv:2307.06435. [Google Scholar] [CrossRef]

- Mars, M. From Word Embeddings to Pre-Trained Language Models: A State-of-the-Art Walkthrough. Appl. Sci. 2022, 12, 8805. [Google Scholar] [CrossRef]

- Vu, B.; deVelasco, M.; Mc Kevitt, P.; Bond, R.; Turkington, R.; Booth, F.; Mulvenna, M.; Fuchs, M.; Hemmje, M. A Content and Knowledge Management System Supporting Emotion Detection from Speech. In Conversational Dialogue Systems for the Next Decade; Springer: Singapore, 2021; pp. 369–378. [Google Scholar]

- Wang, X. The application of NLP in information retrieval. Appl. Comput. Eng. 2024, 42, 290–297. [Google Scholar] [CrossRef]

- Vu, B.; Mertens, J.; Gaisbachgrabner, K.; Fuchs, M.; Hemmje, M. Supporting taxonomy management and evolution in a web-based knowledge management system. In Proceedings of the 32nd International BCS Human Computer Interaction Conference, Belfast, UK, 4–6 July 2018. BCS Learning & Development. [Google Scholar]

- Sujatha, R.; Rao, B.R.K. Taxonomy construction techniques-issues and challenges. Int. J. Comput. Sci. Inf. Technol. 2016, 7, 706–711. [Google Scholar]

- Le, T.T.; Cao, T.; Xuan, X.; Pham, T.D.; Luu, T. An Automatic Method for Building a Taxonomy of Areas of Expertise. In Proceedings of the 15th International Conference on Agents and Artificial Intelligence, ICAART 2023, Lisbon, Portugal, 22–24 February 2023; Rocha, A.P., Steels, L., van den Herik, H.J., Eds.; SCITEPRESS: Setúbal, Portugal, 2023; Volume 3, pp. 169–176. [Google Scholar] [CrossRef]

- Punera, K.; Rajan, S.; Ghosh, J. Automatic Construction of N-ary Tree Based Taxonomies. In Proceedings of the Sixth IEEE International Conference on Data Mining-Workshops (ICDMW’06), Hong Kong, China, 18–22 December 2006; pp. 75–79. [Google Scholar]

- Chen, B.; Yi, F.; Varró, D. Prompting or Fine-Tuning? A Comparative Study of Large Language Models for Taxonomy Construction. In Proceedings of the 2023 ACM/IEEE International Conference on Model Driven Engineering Languages and Systems Companion (MODELS-C), Västerås, Sweden, 1–6 October 2023; pp. 588–596. [Google Scholar]

- Fayyad, U.M.; Piatetsky-Shapiro, G.; Smyth, P. From data mining to knowledge discovery in databases. AI Mag. 1996, 17, 37–54. [Google Scholar]

- Meta. Meta Llama 3 8B Instruct. 2024. Available online: https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct (accessed on 5 January 2025).

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783. [Google Scholar] [CrossRef]

- Microsoft. Phi-3.5 Mini Instruct. 2024. Available online: https://huggingface.co/microsoft/Phi-3.5-mini-instruct (accessed on 5 January 2025).

- Abdin, M.; Aneja, J.; Awadalla, H.; Awadallah, A.H.; Awan, A.A.; Bach, N.; Bahree, A.; Bakhtiari, A.; Bao, J.; Behl, H.; et al. Phi-3 technical report: A highly capable language model locally on your phone. arXiv 2024, arXiv:2404.14219. [Google Scholar] [CrossRef]

- Hearst, M.A. Automatic acquisition of hyponyms from large text corpora. In Proceedings of the 14th Conference on Computational Linguistics, Nantes, France, 23–28 August 1992; Volume 2, pp. 539–545. [Google Scholar]

- Murthy, K.; Faruquie, T.A.; Subramaniam, L.V.; Prasad, K.H.; Mohania, M. Automatically generating term-frequency-induced taxonomies. In Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics, Uppsala, Sweden, 11–16 July 2010; ACL 2010 Conference Short Papers. pp. 126–131. [Google Scholar]

- Qaiser, S.; Ali, R. Text Mining: Use of TF-IDF to Examine the Relevance of Words to Documents. Int. J. Comput. Appl. 2018, 181, 25–29. [Google Scholar] [CrossRef]

- Rose, S.; Engel, D.; Cramer, N.; Cowley, W. Automatic Keyword Extraction from Individual Documents. In Text Mining: Applications and Theory; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2010; pp. 1–20. [Google Scholar]

- Song, Y.; Liu, S.; Liu, X.; Wang, H. Automatic Taxonomy Construction from Keywords via Scalable Bayesian Rose Trees. IEEE Trans. Knowl. Data Eng. 2015, 27, 1861–1874. [Google Scholar] [CrossRef]

- Yin, H.; Aryani, A.; Petrie, S.; Nambissan, A.; Astudillo, A.; Cao, S. A Rapid Review of Clustering Algorithms. arXiv 2024, arXiv:2401.07389. [Google Scholar] [CrossRef]

- Velardi, P.; Faralli, S.; Navigli, R. OntoLearn Reloaded: A graph-based algorithm for taxonomy induction. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Sofia, Bulgaria, 4–9 August 2013; pp. 623–633. [Google Scholar]

- Devdai1y. A Rapid Review of Clustering Algorithms. 2025. Available online: https://velog.io/@devdai1y/A-Rapid-Review-of-Clustering-Algorithms (accessed on 24 September 2025).

- Zahera, H.M.; Sherif, M.A. ProBERT: Product Data Classification with Fine-Tuning BERT Model. In Proceedings of the Mining the Web of HTML-Embedded Product Data Workshop, Athens, Greece, 2–6 November 2020. [Google Scholar]

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Huggingface’s transformers: State-of-the-art natural language processing. arXiv 2019, arXiv:1910.03771. [Google Scholar]

- Brinkmann, A.; Bizer, C. Improving Hierarchical Product Classification Using Domain-Specific Language Modelling. IEEE Data Eng. Bull. 2021, 44, 14–25. [Google Scholar]

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. In Proceedings of the Advances in Neural Information Processing Systems 35 (NeurIPS 2022), New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates, Inc.: New York, NY, USA, 2022; pp. 24824–24837. [Google Scholar]

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. A Survey on Prompt Engineering for Large Language Models: Progress, Methods, and Challenges. arXiv 2023, arXiv:2302.11382. [Google Scholar]

- Srivastava, A.; Rastogi, A.; Rao, A.; Shoeb, A.A.M.; Abid, A.; Fisch, A.; Brown, A.R.; Santoro, A.; Gupta, A.; Garriga-Alonso, A.; et al. Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models. arXiv 2022, arXiv:2206.04615. [Google Scholar] [CrossRef]

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems 33 (NeurIPS 2020), Virtual, 6–12 December 2020; Curran Associates, Inc.: New York, NY, USA, 2020; pp. 1877–1901. [Google Scholar]

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-Train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, 195. [Google Scholar] [CrossRef]

- Zeng, Q.; Bai, Y.; Tan, Z.; Feng, S.; Liang, Z.; Zhang, Z.; Jiang, M. Chain-of-Layer: Iteratively Prompting Large Language Models for Taxonomy Induction from Limited Examples. arXiv 2024, arXiv:2402.07386. [Google Scholar]

- Zhao, T.Z.; Wallace, E.; Feng, S.; Klein, D.; Singh, S. Calibrate Before Use: Improving Few-Shot Performance of Language Models. In Proceedings of the 38th International Conference on Machine Learning (ICML 2021), Virtual, 18–24 July 2021; Proceedings of Machine Learning Research; Meila, M., Zhang, T., Eds.; PMLR: New York, NY, USA, 2021; Volume 139, pp. 12697–12706. [Google Scholar]

- Wiegreffe, S.; Hessel, J.; Swayamdipta, S.; Riedl, M.; Choi, Y. Reframing Human-AI Collaboration for Generating Free-Text Explanations. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA + Online, 10–15 July 2022. [Google Scholar]

- Touretzky, D.S. Word Embedding Demo: Tutorial. 2022. Available online: https://www.cs.cmu.edu/~dst/WordEmbeddingDemo/tutorial.html (accessed on 24 September 2025).

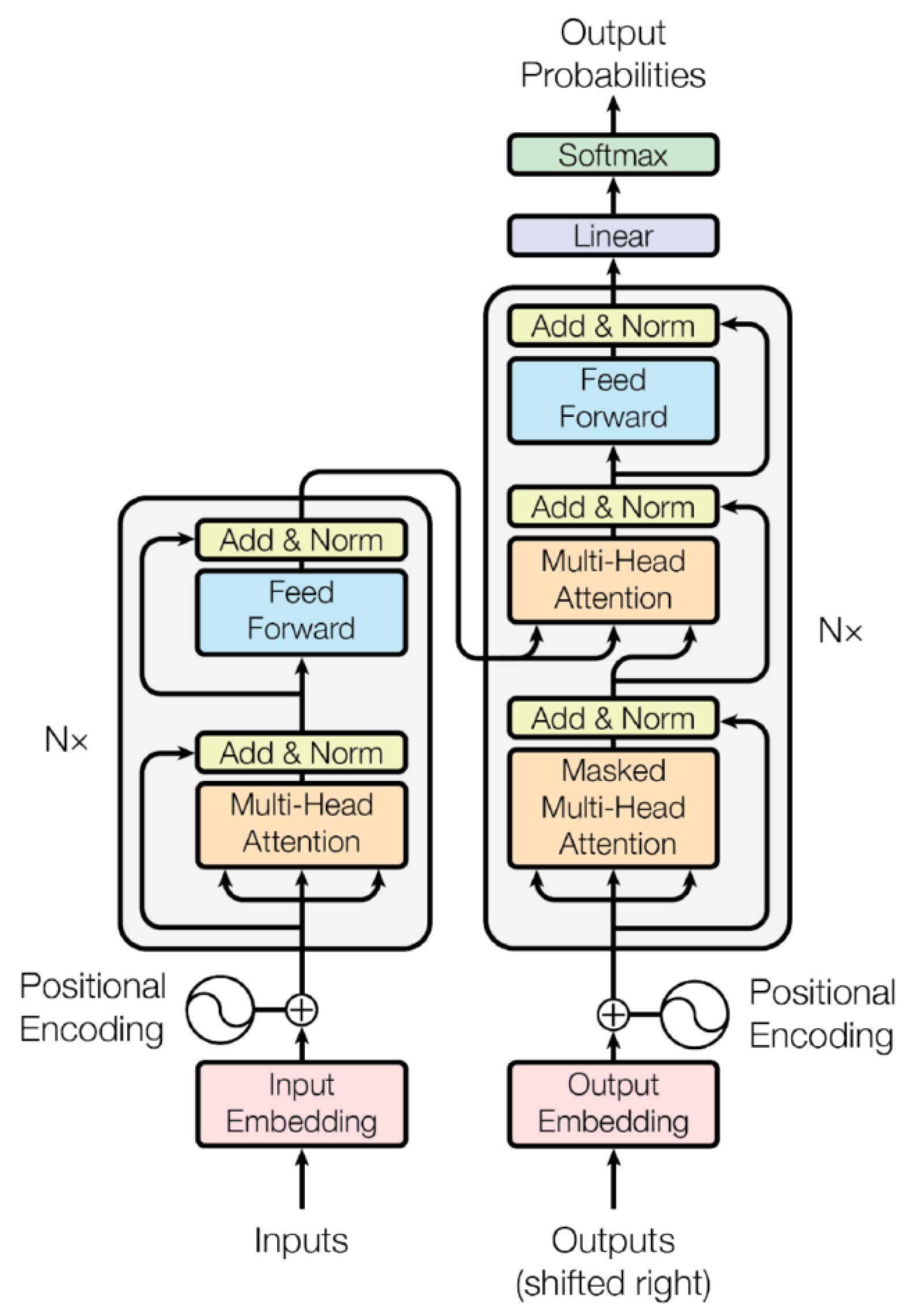

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; pp. 5998–6008. [Google Scholar]

- Howard, J.; Ruder, S. Universal Language Model Fine-tuning for Text Classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 328–339. [Google Scholar]

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. BioBERT: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240. [Google Scholar] [CrossRef]

- Gururangan, S.; Marasović, A.; Swayamdipta, S.; Lo, K.; Beltagy, I.; Downey, D.; Smith, N.A. Don’t Stop Pretraining: Adapt Language Models to Domains and Tasks. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Virtual, 5–10 July 2020; pp. 8342–8360. [Google Scholar]

- Le Scao, T.; Rush, A.M. How Many Data Points is a Prompt Worth? In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Virtual, 6–11 June 2021; pp. 2607–2614. [Google Scholar]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223. [Google Scholar]

- McCloskey, M.; Cohen, N.J. Catastrophic Interference in Connectionist Networks: The Sequential Learning Problem. In The Psychology of Learning and Motivation; Psychology of Learning and Motivation; Bower, G.H., Ed.; Academic Press: Cambridge, MA, USA, 1989; Volume 24, pp. 109–165. [Google Scholar]

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685. [Google Scholar]

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient Finetuning of Quantized LLMs. In Proceedings of the Advances in Neural Information Processing Systems 36 (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; Beygelzimer, A., Hsu, D., Locatello, F., Schölkopf, B., Eds.; Curran Associates, Inc.: New York, NY, USA, 2023; pp. 36175–36204. [Google Scholar]

- Xu, L.; Xie, H.; Qin, S.Z.; Tao, X.; Wang, F.L. Parameter-efficient fine-tuning methods for pretrained language models: A critical review and assessment. arXiv 2023, arXiv:2312.12148. [Google Scholar]

- Lialin, V.; Deshpande, V.; Rumshisky, A. Scaling Down to Scale Up: A Guide to Parameter-Efficient Fine-Tuning. J. Mach. Learn. Res. 2023, 24, 1–51. [Google Scholar]

- Zhang, C.; Tao, F.; Chen, X.; Shen, J.; Jiang, M.; Sadler, B.; Vanni, M.; Han, J. Taxogen: Unsupervised topic taxonomy construction by adaptive term embedding and clustering. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 2701–2709. [Google Scholar]

- Bordea, G.; Lefever, E.; Buitelaar, P. SemEval-2016 task 13: Taxonomy extraction evaluation (texeval-2). In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), San Diego, CA, USA, 16–17 June 2016; pp. 1081–1091. [Google Scholar]

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating text generation with BERT. arXiv 2019, arXiv:1904.09675. [Google Scholar]

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019, arXiv:1908.10084. [Google Scholar] [CrossRef]

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 39. [Google Scholar] [CrossRef]

- eBay Inc. eBay RESTful APIs. 2024. Available online: https://developer.ebay.com/api-docs/static/ebay-rest-landing.html (accessed on 20 December 2024).

- Grootendorst, M. KeyBERT: Minimal Keyword Extraction with BERT. 2020. Available online: https://doi.org/10.5281/zenodo.4461265 (accessed on 10 May 2025).

- Hugging Face. Bitsandbytes Documentation. 2024. Available online: https://huggingface.co/docs/bitsandbytes/ (accessed on 25 February 2025).

- Hu, S.; Shen, L.; Zhang, Y.; Chen, Y.; Tao, D. On Transforming Reinforcement Learning with Transformers: The Development Trajectory. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 8580–8599. [Google Scholar] [CrossRef] [PubMed]

- Jeong, C. Domain-specialized LLM: Financial fine-tuning and utilization method using Mistral 7B. J. Intell. Inf. Syst. 2024, 30, 93–120. [Google Scholar] [CrossRef]

- Shahane, S. E-Commerce Text Classification. 2022. Available online: https://www.kaggle.com/datasets/saurabhshahane/ecommerce-text-classification (accessed on 5 June 2025).

| Parameter Category | Parameter | Value |
|---|---|---|
| Base Model | LLM | Meta-Llama-3 8B Instruct |
| | Technique | QLoRA (PEFT) |
| | Quantization | 4-bit (NF4 via bitsandbytes) |
| | LoRA Rank (r) | 32 |
| Fine-Tuning | LoRA Alpha | 16 |
| | LoRA Dropout | 0.05 |
| | LoRA Target Modules | q_proj, k_proj, v_proj, o_proj, gate_proj, up_proj, down_proj |
| | Library | TRL (SFTTrainer) |
| | Optimizer | Paged AdamW 8-bit |
| | Learning Rate | 1 × 10⁻⁴ |
| Training | Epochs | 2 |
| | Max Sequence Length | 512 tokens |
| | Batch Size (Train/Eval) | 2/4 |
| | Gradient Accumulation Steps | 4 |
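As a rough illustration of how the hyperparameters in this table could be expressed in code, the sketch below collects them in a dictionary and maps the quantization and LoRA settings onto `BitsAndBytesConfig` (from `transformers`) and `LoraConfig` (from `peft`). It is not the authors' training script: the dictionary keys and helper names are hypothetical, `task_type="CAUSAL_LM"` and the single-device default are assumptions, and the optimizer and trainer wiring are omitted.

```python
# Values copied from the hyperparameter table; key names are illustrative.
HYPERPARAMS = {
    "base_model": "meta-llama/Meta-Llama-3-8B-Instruct",
    "lora_r": 32,
    "lora_alpha": 16,
    "lora_dropout": 0.05,
    "target_modules": [
        "q_proj", "k_proj", "v_proj", "o_proj",
        "gate_proj", "up_proj", "down_proj",
    ],
    "learning_rate": 1e-4,
    "epochs": 2,
    "max_seq_length": 512,
    "train_batch_size": 2,
    "eval_batch_size": 4,
    "grad_accum_steps": 4,
}


def effective_batch_size(hp: dict, num_devices: int = 1) -> int:
    """Sequences contributing to each optimizer step (single device assumed)."""
    return hp["train_batch_size"] * hp["grad_accum_steps"] * num_devices


def lora_scaling(hp: dict) -> float:
    """LoRA scales the low-rank update by alpha / r."""
    return hp["lora_alpha"] / hp["lora_r"]


def build_configs(hp: dict):
    """Create the 4-bit NF4 quantization config and the LoRA adapter config."""
    from peft import LoraConfig
    from transformers import BitsAndBytesConfig

    quant_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_quant_type="nf4")
    lora_config = LoraConfig(
        r=hp["lora_r"],
        lora_alpha=hp["lora_alpha"],
        lora_dropout=hp["lora_dropout"],
        target_modules=hp["target_modules"],
        task_type="CAUSAL_LM",  # assumption: causal language modelling objective
    )
    return quant_config, lora_config
```

With a per-device train batch of 2 and 4 accumulation steps, each optimizer step sees 8 sequences on one device, and the LoRA update is scaled by 16/32 = 0.5.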

| Category | Metric | Fine-Tuning | Prompt Engineering |
|---|---|---|---|
| Accuracy | Overall BERTScore F1 (%) | 70.91 | 61.66 |
| | Overall Cosine Sim. (%) | 66.40 | 60.34 |
| Resource Usage | Peak System RAM | 9.2 GB | 5.7 GB |
| | Peak GPU RAM | 13.5 GB | 7.7 GB |
| Time and Speed | Model Training Time | 31 min 17 s | Not Applicable |
| | Avg. Inference Speed (Token/s) | 47.37 | 43.48 |

| Metric | Category I | Category II | Category III | Overall Average |
|---|---|---|---|---|
| BERTScore Precision (%) | 66.25 | 71.72 | 76.10 | 71.36 |
| BERTScore Recall (%) | 70.27 | 67.68 | 74.29 | 70.74 |
| BERTScore F1 (%) | 68.14 | 69.60 | 75.00 | 70.91 |
| Cosine Sim. (%) | 65.93 | 65.37 | 67.90 | 66.40 |

| Result Type and Level | Category Comparison | Analysis |
|---|---|---|
| Successes: Strong Semantic and Lexical Alignment | | |
| Success (Level III) | Gen: Clothing, Accessories, and Fashion; Truth: Clothing, Shoes, and Accessories | Excellent match. The model correctly identified the top-level concept. |
| Success (Level II) | Gen: Sports and Outdoors; Truth: Sporting Goods | Strong semantic and lexical alignment for this mid-level category. |
| Success (Level I) | Gen: Antique Maps; Truth: Antique Maps | Perfect lexical match, indicating precise learning from specific data. |
| Partial Successes: Semantically Correct, Lexically Different | | |
| Partial (Level III) | Gen: Health, Wellness, and Personal Care; Truth: Health and Beauty | Semantically correct. Generated a valid, arguably more modern, term. |
| Partial (Level II) | Gen: Material Equipment and Building Supplies; Truth: Business and Industrial | Semantically related, but scope is different. The model created a more specific sub-category within the broader “Business and Industrial” domain. |
| Partial (Level I) | Gen: Health Monitoring and Tracking Devices; Truth: Wearable Health Devices | Correct concept but focuses on function (“tracking”) versus form (“wearable”). |
| Mismatches and Failures | | |
| Mismatch (Level III) | Gen: Energy Solutions and Eco-Friendly Products; Truth: Eco-Home | Generated category is too broad and conflates two distinct concepts. The ground truth is more focused. |
| Mismatch (Level II) | Gen: Decorative Hardware; Truth: Automotive | A clear mismatch. The model likely miscategorized a sub-group of products, leading to an incorrect mid-level category. |
| Mismatch (Level I) | Gen: AI-Powered Code Assistants; Truth: Developer Tools | Too specific. The model focused on a niche product type instead of the general category. |

| Category III | Category II | Category I |
|---|---|---|
| Health, Wellness, and Personal Care | Health and Personal Care | Airbrushing and Compressors |
| | | Pain Relief Devices |
| | | First Aid and Training Tools |
| | | Skin Care Products |
| | | Personal Care |
| | | First Aid and Safety Equipment |
| | | Hair and Grooming |
| | | Health and Wellness |
| | | Airbrush Tanning and Accessories |
| | Fitness and Wellness | Fitness Trackers |
| | | Weight Management and Nutrition |
| | Safety Equipment | Emergency Safety Kits |
| | | Firefighting and Ignition Equipment |
| | | Health Monitoring and Diagnostics |
| | Medical Equipment | Detectors and Sensors |
| | | Health Monitoring and Tracking Devices |
| | | Therapeutic Devices |
| | | Pain Relief and Therapy Devices |
| | | Health Monitoring Sensors |
| | | Diagnostic Medical Kits |
| Technology Gadgets and Consumer Electronics | Consumer Electronics | Cameras and Accessories |
| | | Computers and Accessories |
| | | Mobile Phones |
| | | Safety Tech Accessories |
| | | Entertainment Electronics |
| | | Tech Gadgets and Accessories |
| | | Latest Tech Gadgets |
| | Entertainment | Virtual Reality Accessories |
| | Technology and Software | Software Development and Tech Tools |
| | | Information Technology and Software |
| | | Media Editing and Production |
| | Luxury Items | High-End Smartwatches |

| Metric | Category I | Category II | Category III | Overall Average |
|---|---|---|---|---|
| BERTScore Precision (%) | 72.35 | 57.97 | 55.20 | 61.84 |
| BERTScore Recall (%) | 72.23 | 57.25 | 56.25 | 61.91 |
| BERTScore F1 (%) | 72.05 | 57.32 | 55.61 | 61.66 |
| Cosine Sim. (%) | 79.79 | 52.20 | 49.02 | 60.34 |

| Category III | Category II | Category I |
|---|---|---|
| Clothing, Accessories, and Fashion | Apparel | Yoga and Fitness Wear |
| | Clothing and Accessories | Rain and Waterproof Gear; Base Layers and Thermals; Women’s Clothing |
| | Beauty and Makeup | Makeup Products |
| | Luxury and Designer Items | Designer Sunglasses |
| Cultural, Educational, and Artistic Collections | Books and Literature | Literary Works; Inspirational Leaders; Antique Books |
| | Fine Art | Art Prints and Frames |
| | Instruments | Drums and Percussion |
| Health, Wellness, and Personal Care | Health and Personal Care | Hair and Grooming |
| | Safety Equipment | Emergency Safety Tools; Cleaning Supplies |
| Home Essentials, Furniture, and Decor | Home Improvement | Measurement Tools; Fans and Ventilation |
| | Kitchenware | Kitchen Tools and Gadgets; Tea Kettles and Makers |
| Industrial Equipment and Building Supplies | Construction Supplies | Roofing and Waterproofing Supplies |
| | Industrial Supplies | Process Control Equipment |
| Miscellaneous | Food and Beverages | Packaged Food and Beverages; Ethnic and Regional Foods |
| | Seasonal Accessories | Holiday Decorations |
| | Home Essentials | Home and Kitchen |
| | Miscellaneous | Miscellaneous Labels and Labeling |
| | Equestrian Apparel and Accessories | Saddlery |
| | Furniture and Decor | Stools |
| Sports, Outdoor Activities, and Leisure | Sports and Outdoors | Outdoor Adventure Packs; Running and Athletic Gear |
| Technology Gadgets and Consumer Electronics | Consumer Electronics | Latest Electronics Gadgets; Gaming Accessories; Mobile Phones |
| Transportation, Travel Gear, and Accessories | HVAC and Refrigeration Equipment | Air and Refrigeration Systems and Accessories |

| Product Description (Summary) | Ground Truth | Generated Category III | Analysis |
|---|---|---|---|
| AREO Yoga Pant | Clothing and Accessories | Clothing, Accessories, and Fashion | Direct Match: The model correctly identifies the product’s primary domain. |
| Born to Run: A Hidden Tribe, Superathletes… | Books | Sports, Outdoor Activities, and Leisure | Semantic Match: Categorizes by the book’s content (running), not its media type (book), showing deep contextual understanding. |
| Durastrip SBS Bitumen Self Adhesive Bitumen Flashing Tape… | Household | Industrial Equipment and Building Supplies | Granular and Accurate: Provides a much more specific and useful category than the broad “Household” label. |
| TopMate C5 12-15.6 inch Gaming Laptop Cooler… | Electronics | Technology Gadgets and Consumer Electronics | Direct Match: Correctly identifies the electronics domain with a more descriptive label. |

| Ground-Truth Category (Kaggle) | Primary Generated Category III (Our Model) |
|---|---|
| Clothing and Accessories | Clothing, Accessories, and Fashion |
| Electronics | Technology Gadgets and Consumer Electronics |
| Household | Home Essentials, Furniture, and Decor |
| Books | Cultural, Educational, and Artistic Collections * |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vu, B.; Naik, R.G.; Nguyen, B.K.; Mehraeen, S.; Hemmje, M. Automated Taxonomy Construction Using Large Language Models: A Comparative Study of Fine-Tuning and Prompt Engineering. Eng 2025, 6, 283. https://doi.org/10.3390/eng6110283