Abstract

This article employs pupillometry as a non-invasive technique to analyze the pupillary light reflex (PLR) elicited by LED flash stimuli. In the experiments, only the red LED, with a wavelength of 600 nm, served as the light stimulation source. To stabilize the initial pupil size, a pre-stimulus (PRE) period of 3 s was implemented, followed by a 1 s stimulation period (ON) and a 4 s post-stimulus period (POST). An experimental, low-cost pupillometer prototype was designed to capture pupillary images of 13 participants. The prototype consists of a 2-megapixel webcam and a lighting system comprising infrared LEDs, for image capture in low-light conditions, and RGB LEDs, for stimulus induction. The study identifies several characteristic features for classifying the phenomenon, notably the Hjorth mobility parameter, achieving classification rates ranging from 97% to 99% and offering novel insights into pattern recognition in pupillary activity. Moreover, the proposed device successfully captured the PLR of all participants, with no reported incidents or adverse health effects.

1. Introduction

The pupil, a small black aperture at the center of the iris, regulates the amount of incoming light. Together with the cornea and the crystalline lens, it contributes to image clarity on the retina. The autonomic nervous system governs involuntary actions, including pupil size, shape, and responsiveness to light. Two muscles modulate pupil size, the constrictor and the dilator, which are innervated by the parasympathetic and sympathetic systems, respectively. Changes in lighting conditions typically drive variations in pupil diameter. As the light level increases, the pupil constricts, which limits retinal illuminance and reduces light scatter and optical aberrations, thereby improving visual acuity. Conversely, in low-light conditions such as darkness, the pupil dilates to let more light enter, optimizing visual perception [1].

The pupillary light reflex (PLR) test examines the rapid pupillary constriction that follows a light stimulus. It is a quick and convenient method for detecting and evaluating autonomic nervous system abnormalities associated with conditions such as Alzheimer’s disease, Parkinson’s disease, and autism spectrum disorder [2,3,4]. A typical PLR recording comprises three temporal components: the pre-stimulus period, in which no external illumination is applied, to establish the initial pupil size; the stimulus period, in which a colored light stimulus is applied for a short time; and the post-stimulus period, in which the post-illumination pupil response (PIPR) is captured [5,6].

However, the high cost of digital pupillometers, such as the NPi-300 Pupillometer [7] and the OptoChek Plus automatic keratometer [8], which allow physicians to measure the pupil and obtain insightful data from its image, prevents these devices from reaching all medical centers. Additionally, in emerging countries, the limited number of specialized clinicians hinders the early and accurate diagnosis of different conditions, because pupil response is still assessed manually with subjective methods using a penlight or flashlight. These manual pupillary assessment techniques are prone to substantial inaccuracies and inconsistencies.

For this reason, different low-cost pupillometers have been developed. For instance, Moon et al. [9] proposed a system that uses the flash LED of a smartphone to deliver light stimuli to the patient’s pupil; the reactions are recorded as images and videos and then analyzed with a smartphone application to measure the PLR. The systems developed by McAnany et al. [10] and Neice et al. [11] also use the built-in smartphone camera, with the camera light at its lowest setting providing illumination to capture the pupil images. Similarly, Barker and Levkowitz [12] used the HP Reverb G2 Omnicept Edition VR headset to record pupil responses; its eye-tracking sensor facilitates image capture by keeping the eye within a region of interest. Despite their high portability, the cost of these devices remains considerable.

On the other hand, Bernabei et al. [13] proposed a less portable but cheaper hardware design comprising three primary functional blocks: the optical head (OH), the control electronics (CE), and the computer interface (CI). This device illuminates the eye with a diffused RGB stimulus with preset chromatic characteristics over a wide range of intensities and flicker frequencies while simultaneously capturing near-infrared (NIR) images of the pupil. Similarly, Kotani et al. [14] developed an automatic mobile pupillometer to obtain the pupil diameter and the PLR of healthy volunteers and of patients with intracranial lesions in an intensive care unit. This device emits visible light onto one side of the pupil and uses infrared light to measure the pupil diameter.

Herein, a low-cost and portable pupillometer prototype was designed to capture pupillary images, and the resulting pupil-size signals were analyzed with different machine learning techniques. The article is organized as follows: Section 2 describes the digital pupillometer design and the feature extraction methods for the PLR; Section 3 presents the numerical and visual results of the proposed approach; Section 4 briefly discusses the proposed methodology; and Section 5 draws conclusions.

2. Materials and Methods

2.1. Digital Pupillometer

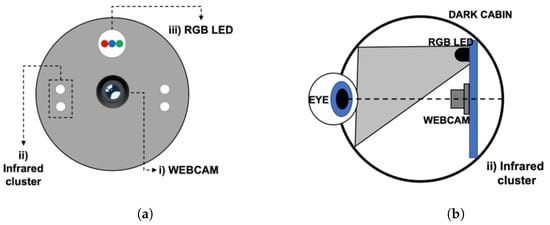

An optoelectronic device capable of pupil stimulation was designed and built; the light stimuli are emitted by three light-emitting diodes (LEDs): red (R), green (G), and blue (B). The device measures pupil diameter in a controlled lighting environment and records its variations during stimulation, enabling observation of the pupillary light reflex. Figure 1a shows the constructed pupillometer, and Figure 1b presents a lateral view; the key components are the following:

Figure 1.

Pupillometer diagram, highlighting the main components. (a) Front view and (b) lateral view.

- A 2 MP web camera positioned 5 cm from the eye to record the photomotor reflex.

- Two clusters of infrared LEDs for pupil illumination within a dark enclosure.

- LEDs serving as stimulators at different wavelengths (Red = 600 nm, Green = 550 nm, Blue = 400 nm).

These components are placed inside a dark booth to stabilize the pupil at an initial diameter before light stimulation. The 5 cm distance minimizes the effects of accommodation that could cause pupillary constriction due to the proximity of the device. Additionally, the dark enclosure ensures that any variation in pupil diameter can be attributed to the light stimuli and not to changes in focal length [15].

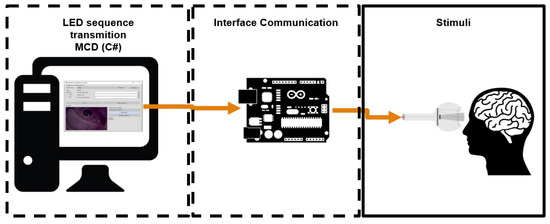

These components are synchronized through a graphical interface that displays the webcam feed to ensure proper eye posture and communicates through a COM (serial) port with the microcontroller (Arduino UNO), which controls the connected infrared and stimulation LEDs to regulate the baseline and stimulation times, as illustrated in Figure 2. Because the pupil must be kept in total darkness to remain stable, the camera sensor would otherwise be unable to register pupil changes; therefore, a pair of infrared light-emitting diodes (IR LEDs) is integrated into the device to illuminate the eye.

Figure 2.

Graphical interface and communication protocol of the digital pupillometer.

2.2. Stimulation Protocol

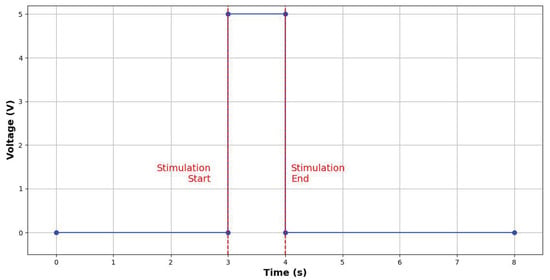

The electronics depicted in Figure 1 are placed within a dark cabin to stabilize the pupil at an initial diameter. For this experiment, a red LED with a wavelength of 600 nm served as the light stimulation source. To stabilize the initial pupil size, a 3 s pre-stimulus (PRE) period was implemented, followed by a 1 s stimulation period (ON) and a 4 s post-stimulus period (POST). Figure 3 illustrates the time segments and the voltage applied to the LED throughout the experiment. The protocol is similar to that of Adhikari et al. [16], who also applied a 1 s red stimulus.
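To make the timing concrete, the protocol can be expressed as a per-frame schedule. The sketch below is not the authors’ Arduino firmware; it simply assumes that the LED state is sampled once per video frame at the 15 fps frame rate reported for the recordings, and the names `led_schedule` and `FPS` are illustrative.

```python
# Minimal sketch of the PRE/ON/POST illumination schedule.
# Assumption: the LED state is sampled once per video frame at 15 fps.
FPS = 15                        # webcam frame rate reported for the recordings
PRE_S, ON_S, POST_S = 3, 1, 4   # phase durations in seconds


def led_schedule(fps=FPS, pre_s=PRE_S, on_s=ON_S, post_s=POST_S):
    """Return one (phase, led_on) tuple per frame of a single recording."""
    schedule = []
    for phase, seconds, led_on in (("PRE", pre_s, False),
                                   ("ON", on_s, True),
                                   ("POST", post_s, False)):
        schedule.extend([(phase, led_on)] * (seconds * fps))
    return schedule


frames = led_schedule()
print(len(frames))                          # 120 frames per recording
print(sum(1 for _, on in frames if on))     # 15 frames with the red LED on
print(frames.index(("ON", True)) / FPS)     # stimulus onset at 3.0 s
```

The frame counts follow directly from the durations: 45 PRE frames, 15 ON frames, and 60 POST frames, which add up to the 120 images per 8 s recording.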

Figure 3.

Illumination protocol including the PRE, ON, and POST periods.

2.3. Data Acquisition

The sample under study consisted of 13 volunteers (6 women and 7 men), healthy young adults between 20 and 32 years old with no neurological history and no chronic degenerative diseases. They were students and academics from the Julio Garavito Colombian School of Engineering in Bogotá, Colombia. This study was carried out according to the Declaration of Helsinki. The participants were informed of the experimental protocol before giving their informed consent. The study was approved by the ethics committee under protocol 004-2018, within the project “Characterization of brain activity under light stimulation”; the committee reviewed the experimental protocol, the informed consent form, and the researchers’ curricula. Ten participants had no vision-related issues. However, one participant had myopia, which causes difficulty in seeing distant objects clearly and may affect the light response because of the need for corrective lenses [17]. Additionally, two participants had astigmatism, a condition characterized by an irregular curvature of the cornea or lens that leads to blurred or distorted vision and influences the perception of light [18].

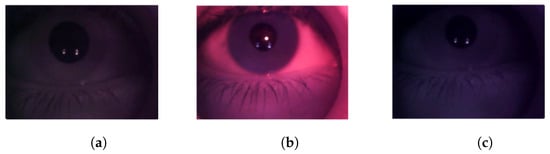

Throughout the stimulation protocol, videos of each participant were recorded in .avi format at a resolution of 2 megapixels (MP) and a frame rate of 15 frames per second (fps). Thus, over the 8 s duration, 120 images were captured per recording. Figure 4 shows three frames depicting the time segments of the events: (a) PRE, (b) ON, and (c) POST. Note that no white light was used in the study. The stimulation sequence was as follows: 3 s of baseline without light to measure the initial diameter (PRE), 1 s of red light (ON), and 4 s of recovery without light stimulation (POST). After acquisition, each video was processed frame by frame to estimate the pupil diameter.

Figure 4.

Image acquisition during the (a) PRE, (b) ON, and (c) POST period.

Before the PLR test, the distance hole-in-the-card test [19] was performed to identify the dominant eye of each participant. The subject received a black card with a 3 cm diameter circular hole in its center and was instructed to hold it straight ahead at arm’s length while viewing a single 20/50 letter at 10 feet with both eyes. The examiner covered the left eye and asked whether the subject could still see the letter, and then repeated the process for the right eye. The eye that could see the letter was recorded as the dominant eye (right or left). If the subject could see the letter with either eye covered, the dominant eye was recorded as “neither”, and the right eye was used for the PLR test.
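The decision rule of the hole-in-the-card test can be written down as a small function. This is a hypothetical encoding for illustration (the function names and the “undetermined” outcome are assumptions, not part of the described protocol); it only restates the rule that the eye still seeing the letter when the other eye is covered is recorded as dominant, with the right eye used when the result is “neither”.

```python
def dominant_eye(sees_with_left_covered: bool, sees_with_right_covered: bool) -> str:
    """Hypothetical encoding of the hole-in-the-card outcome.

    With the left eye covered, the letter stays visible through the hole only
    if the right eye is aligned with it, so the right eye is recorded as dominant
    (and vice versa).
    """
    if sees_with_left_covered and sees_with_right_covered:
        return "neither"
    if sees_with_left_covered:
        return "right"
    if sees_with_right_covered:
        return "left"
    return "undetermined"  # assumption: not covered by the protocol, so repeat the test


def eye_for_plr(result: str) -> str:
    """Eye used for the PLR recording; 'neither' defaults to the right eye."""
    if result == "undetermined":
        raise ValueError("repeat the hole-in-the-card test")
    return "left" if result == "left" else "right"


print(eye_for_plr(dominant_eye(False, True)))   # left
print(eye_for_plr(dominant_eye(True, True)))    # right (default for 'neither')
```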

Two independent experiments were carried out for each participant. In each experiment, the following procedure was used to acquire the data:

- Each participant arrived individually at the laboratory and signed an informed consent document explaining the activities to be carried out during the test, and then received instructions on the body posture required for a satisfactory test.

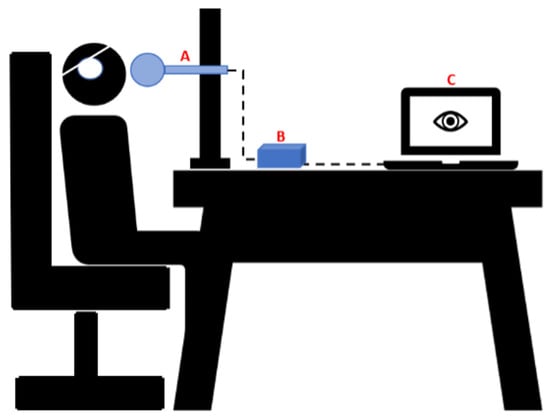

- With the participant in an appropriate posture, the pupillometer was placed over the dominant eye [20], while an eye patch was placed over the other eye so as not to alter the consensual pupillary reflex [13], as shown in Figure 5. Before the experiment, participants received the following instructions:
  - (a) Keep both eyes open for as long as possible before, during, and after the flash stimulus.
  - (b) Stare at the center of the device.
  - (c) Do not speak or make body movements during video acquisition, to avoid other stimuli that could affect the pupil diameter.

Figure 5. Experimental posture setup. The A symbol represents the pupillometer position, the B symbol the Interface Communication, and the C symbol the Graphical User Interface.

- Once the pupillometer was in place, each participant was notified just before the test started, and the 1 s red light stimulation was delivered according to the stimulation protocol (PRE, ON, and POST periods).

- The pupillometer and the eye patch were removed, and all participants were asked the following questions:
  - (a) Whether they could see normally.
  - (b) Whether any flashing visual elements remained visible after stimulation.
  - (c) After the participants walked around the room for a couple of minutes, questions (a) and (b) were asked again.

No incidents were reported for any participant.

The laboratory environment was kept under the same conditions for all sessions: the ambient lamps were switched off and the room was kept in complete silence, since pupillometry studies, including those on multiple sclerosis and amblyopic eyes, advise controlling these variables [21,22,23].

2.4. Image Pre-Processing

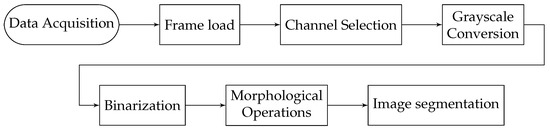

The images were acquired under uniform lighting conditions with the same device and stimulation method; the participants were of similar age and nationality and followed identical instructions according to the experimental protocol. The proposed method involves the following steps, as depicted in Figure 6:

Figure 6.

General pipeline for the image pre-processing procedure.

- Frame loading: Each video underwent frame-by-frame analysis.

- Channel selection: The green and blue channels were retained because the red channel saturated in the frames corresponding to the stimulation period, owing to the red LED stimulus.

- Grayscale conversion: The images were converted to grayscale, and histogram equalization was applied.

- Binarization: Adaptive thresholding [24] was applied to binarize the enhanced grayscale image.

- Morphological operations: Dilation and erosion were applied to eliminate small objects and fill small isolated gaps inside the segmented regions.

- Image segmentation: The final segmented image was obtained.
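The steps above can be sketched in pure Python as follows. This is a hypothetical illustration rather than the authors’ implementation: averaging the green and blue channels into one grayscale value, the Bradley and Roth parameters (a window of one eighth of the image width and a 15% threshold), and the 3 × 3 structuring element are all assumptions, and a practical implementation would more likely rely on an image-processing library.

```python
# Hypothetical pure-Python sketch of the pre-processing pipeline.
# Images are lists of rows; colour pixels are (R, G, B) tuples with 8-bit values.

def to_gray_gb(rgb):
    """Drop the saturated red channel and average green and blue (assumed rule)."""
    return [[(g + b) // 2 for (_r, g, b) in row] for row in rgb]


def equalize(gray):
    """Classic histogram equalization of an 8-bit grayscale image."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:                      # uniform image: nothing to stretch
        return [row[:] for row in gray]
    lut = [max(0, round((c - cdf_min) * 255 / (total - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in gray]


def bradley_threshold(gray, window_frac=8, t=0.15):
    """Adaptive threshold with an integral image (Bradley and Roth style).

    Returns 1 where a pixel is at least t darker than its local mean,
    i.e. candidate (dark) pupil pixels.
    """
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]    # integral image, zero-padded
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    half = max(1, w // window_frac // 2)
    mask = [[0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - half), min(h - 1, y + half)
        for x in range(w):
            x0, x1 = max(0, x - half), min(w - 1, x + half)
            count = (y1 - y0 + 1) * (x1 - x0 + 1)
            s = ii[y1 + 1][x1 + 1] - ii[y0][x1 + 1] - ii[y1 + 1][x0] + ii[y0][x0]
            if gray[y][x] * count <= s * (1 - t):
                mask[y][x] = 1
    return mask


def _morph(mask, keep):
    """3x3 erosion (keep=all) or dilation (keep=any); out-of-image neighbours are ignored."""
    h, w = len(mask), len(mask[0])
    return [[1 if keep(mask[j][i]
                       for j in range(max(0, y - 1), min(h, y + 2))
                       for i in range(max(0, x - 1), min(w, x + 2))) else 0
             for x in range(w)]
            for y in range(h)]


def clean(mask):
    """Opening removes small specks; closing then fills small gaps."""
    opened = _morph(_morph(mask, all), any)
    return _morph(_morph(opened, any), all)


def preprocess(rgb):
    """Channel selection -> equalization -> binarization -> morphology."""
    return clean(bradley_threshold(equalize(to_gray_gb(rgb))))
```

On a synthetic frame with a dark square on a bright background plus one dark noise pixel, `preprocess` keeps the square and discards the isolated pixel, which is the behaviour the opening step is meant to provide.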

2.5. Feature Extraction

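After pre-processing, the properties of the connected pixel regions in the segmented image were analyzed to identify circular-like elements and extract the pupil diameter [25]. Once these circular objects are detected and segmented, the pupil diameter, expressed in pixels, is determined. This measure is computed for every frame of the experiment to obtain the temporal signal

$$\mathbf{d} = [d_1, d_2, \ldots, d_T],$$

where $T = 120$ frames for an 8 s recording at 15 fps. Then, a moving-window technique was employed to exploit temporal information of the pupil size during the ON event, with a window size of 1 s and 50% overlap. Four statistical features are extracted from each window: the mean

$$\mu = \frac{1}{N}\sum_{i=1}^{N} d_i,$$

the variance

$$\sigma^2 = \frac{1}{N}\sum_{i=1}^{N} (d_i - \mu)^2,$$

the asymmetry (skewness)

$$\gamma = \frac{1}{N\sigma^3}\sum_{i=1}^{N} (d_i - \mu)^3,$$

and the kurtosis

$$\kappa = \frac{1}{N\sigma^4}\sum_{i=1}^{N} (d_i - \mu)^4,$$

with $N = 15$ (the number of frames per second, i.e., per window) and $d_i$ the diameter measured in the $i$-th frame. Furthermore, three Hjorth parameters are computed: the activity

$$\mathrm{Activity} = \mathrm{var}(d) = \sigma^2,$$

the mobility

$$\mathrm{Mobility} = \sqrt{\frac{\mathrm{var}(d')}{\mathrm{var}(d)}},$$

and the complexity

$$\mathrm{Complexity} = \frac{\mathrm{Mobility}(d')}{\mathrm{Mobility}(d)},$$

where $d'$ denotes the first difference of the diameter signal within the window. In this way, 105 features per sample are obtained. The feature vectors of the two experiments of each individual are then concatenated, resulting in a final feature vector $\mathbf{x} \in \mathbb{R}^{210}$.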
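The windowed features can be sketched as follows. This is an illustrative implementation, not the authors’ code, and it rests on two labelled assumptions: the 1 s windows (15 frames) with 50% overlap are taken to span the whole 120-frame recording, which is one arrangement consistent with the stated count of 105 features (15 windows × 7 features), and constant windows are assigned 0.0 for the ratio-based features to avoid division by zero. The helper names are hypothetical.

```python
import math


def _var(x):
    """Population variance, matching the 1/N normalization in the equations."""
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / len(x)


def _diff(x):
    """First difference, used as the discrete derivative d'."""
    return [b - a for a, b in zip(x, x[1:])]


def window_features(d):
    """Seven features of one window: mean, variance, skewness, kurtosis,
    and the Hjorth activity, mobility, and complexity."""
    n = len(d)
    mu = sum(d) / n
    var = _var(d)
    sd = math.sqrt(var)
    # Assumption: constant windows get 0.0 instead of dividing by zero.
    skew = sum((v - mu) ** 3 for v in d) / (n * sd ** 3) if sd > 0 else 0.0
    kurt = sum((v - mu) ** 4 for v in d) / (n * sd ** 4) if sd > 0 else 0.0
    d1 = _diff(d)
    var_d1, var_d2 = _var(d1), _var(_diff(d1))
    activity = var                                  # Hjorth activity equals the variance
    mobility = math.sqrt(var_d1 / var) if var > 0 else 0.0
    mobility_d1 = math.sqrt(var_d2 / var_d1) if var_d1 > 0 else 0.0
    complexity = mobility_d1 / mobility if mobility > 0 else 0.0
    return [mu, var, skew, kurt, activity, mobility, complexity]


def window_starts(n_frames, win, overlap=0.5):
    """Start indices of `win`-frame windows; the hop may be fractional (7.5 frames here)."""
    hop = win * (1 - overlap)
    starts, k = [], 0
    while int(k * hop) + win <= n_frames:
        starts.append(int(k * hop))
        k += 1
    return starts


def feature_vector(d, fps=15):
    """Concatenate the seven window features over all 1 s windows with 50% overlap."""
    feats = []
    for s in window_starts(len(d), fps):
        feats.extend(window_features(d[s:s + fps]))
    return feats
```

For a 120-frame recording this yields 15 windows and 105 features; concatenating two recordings, e.g. `feature_vector(exp1) + feature_vector(exp2)`, gives the 210-element vector per participant.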

2.6. Machine Learning Techniques

In this work, four different classification algorithms were selected: Binary Tree, Naive Bayes, Fisher’s Linear Discriminant (FLD), and Support Vector Machine (SVM).

Binary trees are a specific type of decision tree, a non-parametric supervised learning method in which each internal node applies a Boolean (binary) test to the features. The main goal of this method is to build a model that learns simple if-else decision rules. Instead of performing binary comparisons, the SVM can implicitly map the features into a higher-dimensional space through a kernel function and computes the hyperplane that separates the training instances of the classes with maximum margin. The FLD, in turn, approaches the problem by assuming that the class-conditional probability density functions $p(\mathbf{x} \mid y = 0)$ and $p(\mathbf{x} \mid y = 1)$ are normally distributed with a shared covariance; under this assumption, it finds the projection that maximizes the ratio between the between-class and within-class variations. On the other hand, the Naive Bayes method is based on Bayes’ theorem with the assumption

$$p(\mathbf{x} \mid y) = \prod_{j=1}^{M} p(x_j \mid y),$$

that is, the $M$ features $x_j$ are conditionally independent given the class $y$; the training set is used to model the distribution of each $p(x_j \mid y)$.
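The naive factorization can be illustrated with a Gaussian Naive Bayes classifier. The article does not state which per-feature distribution was used, so the Gaussian choice, the small variance floor `eps`, and the function names below are assumptions; the sketch only shows how the product over features becomes a sum of per-feature log-likelihoods.

```python
import math
from collections import defaultdict


def fit_gnb(X, y, eps=1e-9):
    """Estimate a class prior and per-feature Gaussian (mean, variance) for each class."""
    by_class = defaultdict(list)
    for xi, yi in zip(X, y):
        by_class[yi].append(xi)
    model = {}
    for c, rows in by_class.items():
        cols = list(zip(*rows))                       # one tuple per feature
        means = [sum(col) / len(col) for col in cols]
        variances = [sum((v - m) ** 2 for v in col) / len(col) + eps
                     for col, m in zip(cols, means)]
        model[c] = (len(rows) / len(X), means, variances)
    return model


def predict_gnb(model, x):
    """Pick the class maximizing log p(y) + sum_j log p(x_j | y)."""
    def log_posterior(c):
        prior, means, variances = model[c]
        return math.log(prior) + sum(
            -0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
            for xj, m, v in zip(x, means, variances))
    return max(model, key=log_posterior)


X = [[1.0, 2.0], [1.2, 1.8], [0.9, 2.1], [5.0, 6.0], [5.2, 5.9], [4.8, 6.1]]
y = [0, 0, 0, 1, 1, 1]
model = fit_gnb(X, y)
print(predict_gnb(model, [1.1, 2.0]))   # 0
print(predict_gnb(model, [5.1, 6.0]))   # 1
```

Working in log space avoids numerical underflow when many per-feature probabilities, such as the 210 features per participant, are multiplied together.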

Additionally, 5-fold cross-validation was implemented (20% test, 80% training in each fold). For each participant, the presence of any visual condition was recorded; this variable served as the class label, allowing classification between visually healthy participants and those with visual impairments.
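A 5-fold split can be sketched as follows: each sample is used for testing exactly once, and each fold holds out about 20% of the data. The shuffling seed and the round-robin assignment of shuffled indices to folds are assumptions; the article does not describe how the folds were formed.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists; every sample is tested exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]          # round-robin fold assignment
    for i in range(k):
        test = sorted(folds[i])
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, test


for train, test in k_fold_indices(20, k=5, seed=1):
    print(len(train), len(test))                   # 16 4 on each of the five lines
```

With imbalanced labels, such as 10 visually healthy participants and 3 with visual conditions, a stratified variant that preserves class proportions in each fold may be preferable.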

3. Results

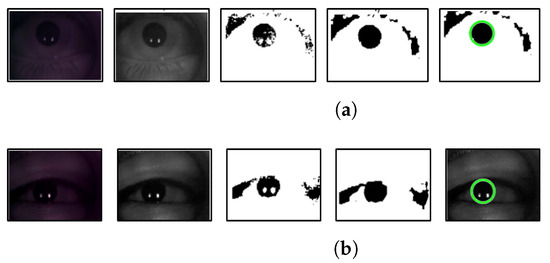

As mentioned before, the red channel of the images was discarded because of the color saturation produced by the stimulus. The images were then pre-processed to obtain a segmented image from which the pupil is detected and its size computed. Figure 7 depicts an example of this procedure for a participant with a clear pupil and for one with a partially occluded pupil; in both cases, the proposed methodology detects the whole pupil.

Figure 7.

Image pre-processing, pupil detection, and diameter computation. (a) Participant with clear pupil, (b) participant with partially occluded pupil. The green circles represent the final detected diameter of the pupil.

Note that Figure 7 shows a single frame from the stimulation process.
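The detection step can be illustrated by labelling the connected regions of a binary mask and keeping the most circle-like one. The sketch below is hypothetical: the 4-connectivity, the `min_area` cut-off, and the circularity score (aspect ratio combined with how closely the region fills its bounding box, about π/4 for a disc) are assumptions rather than the region properties used by the authors. The diameter is reported as the equivalent-circle diameter $2\sqrt{A/\pi}$ of the region area $A$ in pixels.

```python
import math
from collections import deque


def components(mask):
    """4-connected foreground regions of a binary mask, as lists of (y, x)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                seen[y][x] = True
                queue, region = deque([(y, x)]), []
                while queue:                          # breadth-first flood fill
                    cy, cx = queue.popleft()
                    region.append((cy, cx))
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions


def pupil_diameter(mask, min_area=20):
    """Equivalent diameter (pixels) of the most circle-like region, or None."""
    best_score, best_area = -1.0, None
    for region in components(mask):
        area = len(region)
        if area < min_area:
            continue                                  # ignore remaining specks
        ys = [p[0] for p in region]
        xs = [p[1] for p in region]
        bh, bw = max(ys) - min(ys) + 1, max(xs) - min(xs) + 1
        aspect = min(bh, bw) / max(bh, bw)            # 1.0 for a round blob
        fill = area / (bh * bw)                       # about pi/4 for a disc
        score = aspect * (1.0 - abs(fill - math.pi / 4))
        if score > best_score:
            best_score, best_area = score, area
    if best_area is None:
        return None
    return 2.0 * math.sqrt(best_area / math.pi)
```

On a mask containing a disc of radius 10 pixels, an elongated artefact, and a small speck, the function selects the disc and returns a diameter of about 20 pixels.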

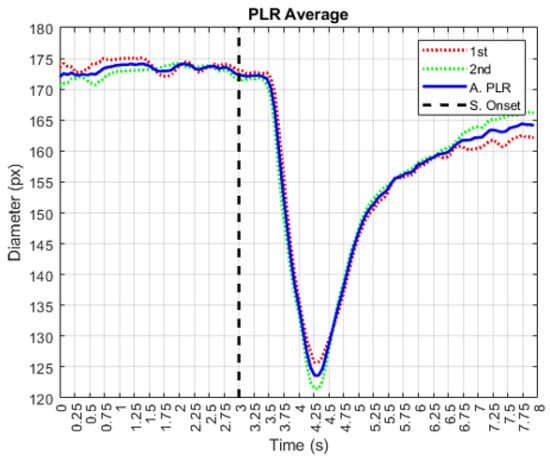

The average pupil diameter (in pixels) showed consistent behavior across the two independent experiments, as shown in Figure 8. The colored lines represent the average behavior of the sample in each repetition of the experiment: the red line indicates the first stimulation repetition, the green line the second repetition, and the blue line the average of the first and second repetitions; the vertical red line marks the start of the stimulus.

Figure 8.

Average pupillary light response. Responses are averaged across all participants for each trial: the red line is the first-trial response, the green line is the second-trial response, and the blue line is the average of the two.

No abrupt change is observed immediately after stimulus onset, because the pupil has a response latency of 200 to 300 ms. Since the stimulus lasts only 1 s, the abrupt decrease in pupil diameter is observed, on average, at about 3.3 s, shortly after the red light is switched on at 3 s. Furthermore, a recovery of pupil diameter is evident after approximately 4.25 s (4250 ms). These results indicate that LED stimulation effectively elicits measurable and relevant pupillary responses.
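The onset of constriction can be read from the diameter signal with a simple threshold rule. The sketch below is hypothetical and not the authors’ analysis: it assumes the PRE mean as baseline and an arbitrary 5% drop criterion. At 15 fps each frame spans about 66.7 ms, so a 200 to 300 ms latency corresponds to only 3 to 4.5 frames, which bounds how precisely latency can be resolved with this camera.

```python
def constriction_onset(d, fps=15, onset_s=3.0, drop=0.05):
    """Time (s) of the first frame after stimulus onset whose diameter falls more
    than `drop` (a fraction, assumed 5%) below the PRE baseline mean, or None."""
    onset = int(round(onset_s * fps))       # frame index of stimulus onset (45)
    baseline = sum(d[:onset]) / onset       # mean diameter over the PRE period
    for i in range(onset, len(d)):
        if d[i] < (1 - drop) * baseline:
            return i / fps
    return None


# Synthetic example: constant baseline, constriction starting at frame 49.
diameters = [170.0] * 49 + [150.0] * 71
print(constriction_onset(diameters))        # about 3.27 s, i.e. 4 frames after onset
```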

Likewise, Table 1 presents the results of the PLR experiments, reporting the average pupil size and its standard deviation (SD) at three endpoints: Baseline, Stimuli, and Recovery. The mean pupil size decreases from the Baseline to the Stimuli phase for all measured parameters; for example, the initial diameter decreases from 173.3 to 154.9 pixels, indicating a significant reduction in pupil size in response to the stimulus. Additionally, p-values below 0.05 indicate that the differences between the Baseline, Stimuli, and Recovery phases are statistically significant.
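As a worked example, the relative constriction implied by the two mean diameters quoted above can be computed directly; because both values are in pixels from the same setup, the units cancel and the ratio does not depend on the camera’s pixel scale:

$$\frac{173.3 - 154.9}{173.3} = \frac{18.4}{173.3} \approx 0.106,$$

that is, a reduction of roughly 10.6% relative to the baseline diameter.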

Table 1.

Average pupil size and standard deviation (SD).

Table 2 shows the percentages of correctly classified visually healthy and unhealthy participants. The SVM and Naive Bayes achieved the highest classification rates, with accuracies of 99.0% and 99.3%, respectively. The proposed framework, which extracts temporal features of the pupil diameter across the three phases of the experiment, achieved high classification rates with all four classification models.

Table 2.

Classification results.

4. Discussion

In low-income countries, specialists still perform manual clinical examinations with a penlight, which can be affected by factors such as ambient light, the observer’s expertise, and the penlight bulb (light amplitude).

For this reason, low-cost pupillometers could be valuable in clinical settings, not only for measuring the PLR but also for supporting the diagnosis of neurological conditions such as Alzheimer’s and Parkinson’s disease [26]. Pupil dynamics may also reveal information about saccade preparation [27] and about emotional reactivity in social contexts, which is relevant to autism [28].

This research developed a new low-cost pupillometer to measure the PLR and to classify visually healthy and unhealthy participants. As mentioned above, further investigation of the relationship between pupil reflexes and other related conditions could help develop multimodal classifiers.

Nevertheless, this study has some limitations that should be addressed in future research, including the need for a larger dataset to train a more generalizable machine learning model and for pupil recordings from people with different cognitive conditions, so that these conditions can be classified automatically.

5. Conclusions

In this work, the dilation and constriction of the pupil were investigated with a purpose-built low-cost pupillometer; based on these measurements, we proposed a set of features that allow physiological conditions related to visual health to be classified with machine learning techniques. The presented results are preliminary evidence supporting this alternative method of pupillary stimulation. In the short term, we propose combining the device with electroencephalography (EEG) tools to characterize the responses it induces and evokes during neurological examinations based on light patterns, in line with Fernández-Torre et al. [29], who identified nonconvulsive status epilepticus by EEG while pupillometry captured prolonged oscillations of the pupil diameter. Likewise, different stimulation protocols will be tested to improve the detection performance and generalization of the model, and the device will be made more portable, without the need for external devices, offering diagnostic techniques with greater portability, lower equipment maintenance costs, and real-time processing. Moreover, additional measurements, such as constriction speed, constriction time, and maximum constriction speed, will be evaluated to provide further clinical insights.

Author Contributions

Conceptualization, D.A.G.-H. and M.S.G.-D.; methodology, M.S.G.-D. and F.J.C.-R.; software M.S.G.-D.; validation, F.J.C.-R. and E.O.-M.; formal analysis, F.J.C.-R. and E.O.-M.; investigation, M.S.G.-D. and E.O.-M.; resources, D.A.G.-H. and M.S.G.-D.; data curation, E.O.-M.; writing—original draft preparation, M.S.G.-D. and F.J.C.-R.; writing—review and editing, E.O.-M.; visualization, M.S.G.-D. and E.O.-M.; supervision, F.J.C.-R. and E.O.-M.; project administration, F.J.C.-R. and E.O.-M.; funding acquisition, E.O.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Escuela Colombiana Julio Garavito (004-2018, 08/22/2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CI | Computer Interface |
| CE | Control Electronics |
| FLD | Fisher’s Linear Discriminant |
| MP | Mega Pixels |
| NIR | Near-InfraRed |
| OH | Optical Head |
| PIPR | Post-Illumination Pupil Response |
| PLR | Pupillary Light Reflex |
| SVM | Support Vector Machine |

References

1. Maqsood, F. Effects of varying light conditions and refractive error on pupil size. Cogent Med. 2017, 4, 1338824.

2. McDougal, D.; Gamlin, P. Pupillary Control Pathways. In The Senses: A Comprehensive Reference; Masland, R.H., Albright, T.D., Dallos, P., Oertel, D., Firestein, S., Beauchamp, G.K., Catherine Bushnell, M., Basbaum, A.I., et al., Eds.; Academic Press: New York, NY, USA, 2008; pp. 521–536.

3. Damodaran, T.V. Peripheral nervous system toxicity biomarkers. In Biomarkers in Toxicology; Elsevier: Amsterdam, The Netherlands, 2014; pp. 169–198.

4. Lynch, G. Using Pupillometry to Assess the Atypical Pupillary Light Reflex and LC-NE System in ASD. Behav. Sci. 2018, 8, 108.

5. Kankipati, L.; Girkin, C.A.; Gamlin, P.D. Post-illumination Pupil Response in Subjects without Ocular Disease. Investig. Ophthalmol. Vis. Sci. 2010, 51, 2764–2769.

6. Mathôt, S. Pupillometry: Psychology, physiology, and function. J. Cogn. 2018, 1, 16.

7. Neuroptics. NPI-300 Pupillometer. Available online: https://neuroptics.com/npi-300-pupillometer/ (accessed on 1 April 2024).

8. OptoChek Plus. OptoChek Plus. Available online: https://www.reichert.com/es-ar/products/optochek-plus (accessed on 1 April 2024).

9. Moon, S.Y.; Lee, J.P.; Bae, J.H.; Lee, T.S. Measurement of Pupillary Light Reflex Features through RGB-HSV Color Mapping. Biomed. Eng. Lett. 2015, 5, 29–32.

10. McAnany, J.J.; Smith, B.M.; Garland, A.; Kagen, S.L. iPhone-based Pupillometry: A Novel Approach for Assessing the Pupillary Light Reflex. Optom. Vis. Sci. 2018, 95, 953–958.

11. Neice, A.E.; Fowler, C.; Jaffe, R.A.; Brock-Utne, J.G. Feasibility study of a smartphone pupillometer and evaluation of its accuracy. J. Clin. Monit. Comput. 2021, 35, 1269–1277.

12. Barker, D.; Levkowitz, H. Thelxinoe: Recognizing Human Emotions Using Pupillometry and Machine Learning. Mach. Learn. Appl. Int. J. 2024, 10, 1–14.

13. Bernabei, M.; Rovati, L.; Peretto, L.; Tinarelli, R. Measurement of the pupil responses induced by RGB flickering stimuli. In Proceedings of the 2015 IEEE International Instrumentation and Measurement Technology Conference (I2MTC) Proceedings, Pisa, Italy, 11–14 May 2015; pp. 1634–1639.

14. Kotani, J.; Nakao, H.; Yamada, I.; Miyawaki, A.; Mambo, N.; Ono, Y. A Novel Method for Measuring the Pupil Diameter and Pupillary Light Reflex of Healthy Volunteers and Patients with Intracranial Lesions Using a Newly Developed Pupilometer. Front. Med. 2021, 8, 598791.

15. Hernández, D.A.G.; Ruiz, J.A.A.; Michel, J.R.P. Digital Measurement of the Human Pupil’s Dynamics under Light Stimulation for Medical Applications and Research. Am. J. Biomed. Eng. 2013, 3, 143–147.

16. Adhikari, P.; Pearson, C.A.; Anderson, A.M.; Zele, A.J.; Feigl, B. Effect of age and refractive error on the melanopsin mediated post-illumination pupil response (PIPR). Sci. Rep. 2015, 5, 17610.

17. Zhang, P.; Zhu, H. Light Signaling and Myopia Development: A Review. Ophthalmol. Ther. 2022, 11, 939–957.

18. Harris, W. Astigmatism. Ophthalmic Physiol. Opt. 2000, 20, 11–30.

19. Rice, M.L.; Leske, D.A.; Smestad, C.E.; Holmes, J.M. Results of ocular dominance testing depend on assessment method. J. Am. Assoc. Pediatr. Ophthalmol. Strabismus 2008, 12, 365–369.

20. Oh, A.J.; Amore, G.; Sultan, W.; Asanad, S.; Park, J.C.; Romagnoli, M.; La Morgia, C.; Karanjia, R.; Harrington, M.G.; Sadun, A.A. Pupillometry evaluation of melanopsin retinal ganglion cell function and sleep-wake activity in pre-symptomatic Alzheimer’s disease. PLoS ONE 2019, 14, e0226197.

21. Bitirgen, G.; Daraghma, M.; Özkağnıcı, A. Evaluation of pupillary light reflex in amblyopic eyes using dynamic pupillometry. Turk. J. Ophthalmol. 2019, 49, 310.

22. Bitirgen, G.; Akpinar, Z.; Turk, H.B.; Malik, R.A. Abnormal dynamic pupillometry relates to neurologic disability and retinal axonal loss in patients with multiple sclerosis. Transl. Vis. Sci. Technol. 2021, 10, 30.

23. Van der Stoep, N.; Van der Smagt, M.; Notaro, C.; Spock, Z.; Naber, M. The additive nature of the human multisensory evoked pupil response. Sci. Rep. 2021, 11, 707.

24. Bradley, D.; Roth, G. Adaptive thresholding using the integral image. J. Graph. Tools 2007, 12, 13–21.

25. Duda, R.O.; Hart, P.E. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 1972, 15, 11–15.

26. Hutton, S.B. Cognitive control of saccadic eye movements. Brain Cogn. 2008, 68, 327–340.

27. Dalmaso, M.; Castelli, L.; Galfano, G. Microsaccadic rate and pupil size dynamics in pro-/anti-saccade preparation: The impact of intermixed vs. blocked trial administration. Psychol. Res. 2020, 84, 1320–1332.

28. Nuske, H.J.; Vivanti, G.; Hudry, K.; Dissanayake, C. Pupillometry reveals reduced unconscious emotional reactivity in autism. Biol. Psychol. 2014, 101, 24–35.

29. Fernández-Torre, J.L.; Paramio-Paz, A.; Lorda-de Los Ríos, I.; Martín-García, M.; Hernández-Hernández, M.A. Pupillary hippus as clinical manifestation of refractory autonomic nonconvulsive status epilepticus: Pathophysiological implications. Seizure 2018, 63, 102–104.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).