Artificial Intelligence and Public Sector Auditing: Challenges and Opportunities for Supreme Audit Institutions

Abstract

1. Introduction

2. Artificial Intelligence in Public Administration

2.1. Concept and Types of Artificial Intelligence

- Reactive Machines: those AI systems that work on the data available and respond to external stimuli in real time, without the ability to store data or learn from past experiences. Deep Blue (the chess-playing supercomputer that beat the chess grandmaster Kasparov in the 1990s) and Netflix’s recommendation engine are two examples of this type of AI.

- Limited Memory: those AI systems that can store and use past experiences to make predictions or decisions, but whose memory capacity is limited. Examples of this type include ChatGPT 4, virtual assistants and chatbots such as Siri or Alexa, and self-driving cars.

- Theory of Mind: those AI systems that could understand and respond to human psychological and emotional aspects, potentially leading to more natural and intuitive interactions.

- Self-Awareness: those AI systems that could think and act. They would possess self-consciousness and understanding and would be capable of thinking about their own existence and beliefs.

2.2. The Penetration of Artificial Intelligence in the Public Sector: Opportunities and Risks

- The improvement of public administrations’ decision-making, especially in the formulation, implementation and evaluation of public policies: AI systems can capture the interests and concerns of citizens, identify trends, anticipate situations that deserve the attention of public entities and forecast possible results or impacts, increasing the chances of successful interventions [20];

- The improvement of the design and delivery of more inclusive services to citizens and businesses: The collection and processing of digital data facilitates the improvement of public services, enables interactive engagement with the public and offers guidance or transmits vital information, assisting citizen participation in public sector activities. In the case of infrastructures, the preventive maintenance, fault correction or scheduling of their use according to demand is possible through AI applications, contributing to a more efficient resource utilisation [21];

- The improvement of the management and internal efficiency of public institutions: AI systems can facilitate the fulfilment of objectives and responsibilities, freeing officials from routine tasks to engage in activities of greater value and complexity. They can also support the allocation and management of financial resources, helping to identify and prevent fraud, diversions or inefficiencies in the allocation and use of public money, among other problems [22]. In this sense, Soylu et al. [23] present the government of Slovenia as a case study, where the availability of open public procurement data has made it possible to detect anomalies, with spikes observed during periods of crisis, elections or the recent global COVID-19 pandemic;

- The reinforcement of democracy and the fight against corruption: AI tools make it possible, through the examination of information and data, to prevent misinformation and cyber-attacks and to achieve greater transparency and competition, for example, in public procurement processes. Thus, Alhazbi [24] studied the phenomenon of trolling in social networks due to its significant impact on the reputation of public institutions and the increase in polarisation. Henrique et al. [25] proposed using control tools based on machine learning and on statistics to detect non-compliance with public contracts which may seriously affect institutions;

- The enhancement of public safety and security: AI is already being used in crime prevention and the criminal justice system, enabling faster information processing and a more accurate analysis of criminal actions that would reduce judicial procedures, including preventing or predicting terrorist attacks or other criminal actions. AI is also being integrated into the military or national security operations in numerous countries.
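The kind of spike detection in procurement data mentioned above can be illustrated with a minimal sketch. It is not the method used in [23]; it assumes a simple robust statistic (the modified z-score, based on the median and the median absolute deviation), a commonly used cut-off of 3.5, and hypothetical monthly totals invented purely for illustration.

```python
from statistics import median

def modified_z_scores(values):
    """Return the modified z-score (median/MAD based) for each value."""
    values = list(values)
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half of the values equal the median: no spread to measure.
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [0.6745 * (v - med) / mad for v in values]

def flag_anomalies(records, threshold=3.5):
    """Return the (period, amount) records that deviate strongly from the median."""
    scores = modified_z_scores(amount for _, amount in records)
    return [rec for rec, z in zip(records, scores) if abs(z) > threshold]

# Hypothetical monthly procurement totals (illustrative figures only).
monthly_totals = [
    ("2019-10", 102.0), ("2019-11", 98.0), ("2019-12", 105.0),
    ("2020-01", 99.0), ("2020-02", 101.0), ("2020-03", 100.0),
    ("2020-04", 240.0), ("2020-05", 97.0), ("2020-06", 103.0),
]
flagged = flag_anomalies(monthly_totals)
print(flagged)
```

A flagged period is only a prompt for further audit work, since a spike may have a legitimate explanation such as emergency purchasing.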

- Privacy, confidentiality and security: The collection and processing of large amounts of data in the public sector can pose significant challenges in terms of privacy and security. AI systems may exhibit flaws and vulnerabilities that must be foreseen to prevent unauthorised access, such as attacks that manipulate their ability to learn or act on what they have learnt. It is essential to ensure that citizens’ data are protected and not misused. It is also essential that citizens are informed of their rights, the applicable regulations and how they can make any complaints if they consider it necessary [26,27];

- Transparency: AI systems, such as deep neural networks, are often difficult to interpret. Algorithmic transparency is the element by which citizens can know how autonomous decision systems make decisions that impact their lives [28]. Much of the processing, storage and use of information is performed by algorithms and in a non-transparent way, within a “black box” of virtually inscrutable processing, whose content is unknown even to its programmers [29]. This raises concerns about the lack of transparency in government decisions, which often have implications in the lives of individuals or groups, making it difficult to account for and understand how certain decisions are made. As noted by Berryhill et al. [11], when algorithms are too complex, the possibility of explaining them can be reinforced by traceability and audit mechanisms, alongside the disclosure of their scope;

- Algorithmic discrimination, access and equity: AI algorithms can perpetuate biases and discrimination if they are trained on erroneous or biased historical data that reflect the bias or prejudice of the people who collected them. This may affect groups of citizens by gender, race, age or other factors in their access to resources or services, the level of surveillance to which they are exposed and even their ability to be taken into account in an environment that emphasises technologies [30]. Thus, algorithms can reinforce social biases, generating injustices [31], distributing resources unevenly [32] and reinforcing the technocratic nature of public administration [33].
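One simple way to check whether an automated decision system treats groups of citizens unevenly is to compare the share of favourable decisions each group receives. The sketch below is only an illustration under stated assumptions: the group labels and decisions are hypothetical, and the 0.8 cut-off is a rule of thumb borrowed from employment-selection practice, not a threshold proposed in this article.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Map each group to the share of its cases with a favourable decision."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            favourable[group] += 1
    return {g: favourable[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received any favourable decision
    return min(rates.values()) / highest

# Hypothetical decisions: (group label, favourable outcome?)
decisions = (
    [("A", True)] * 40 + [("A", False)] * 10
    + [("B", True)] * 24 + [("B", False)] * 26
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed rule-of-thumb cut-off; an audit team would set its own criterion.
needs_review = ratio < 0.8
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination by itself, but it tells the auditor where the training data and decision rules deserve closer examination.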

3. The External Control of Public Sector Performance in the Face of the Use of Artificial Intelligence

3.1. Key Challenges in AI Adoption

- Which AI systems could be used as tools to perform the work in the institution in a more effective and efficient way?

- How should the institution get ready to address the audit of AI systems employed in the management of public services?

3.2. Institutional Strategies

- Institutional arrangements, such as regulations or decisions in public administration, are necessary to promote data sharing, ensure data openness and improve the value derived from data utilisation;

- The construction of an audit platform in the SAI that incorporates the entire process of data collection, preparation, storage, analysis and presentation enables the integrated management of auditors, audit procedures and technical means in a single system.

3.3. Illustrative Uses of AI by SAIs

- The need for a good understanding of the high-level principles of ML models.

- The need to understand the most common coding languages and model implementations and be able to use the right software tools.

- The need for a computer infrastructure to support machine learning with a high computing power, which often involves cloud-based solutions.

- The need to have a basic understanding of cloud services to properly perform audit work.

- Little previous knowledge: the application of AI in public management is relatively recent and not yet widespread, so both management and auditing experience is scarce.

- Limited auditor skills: as this is a new and complex field of knowledge, it will be difficult to find staff with sufficient training to deal with this type of audit, so training should be one of the priorities of the control institution. This type of audit will, in any case, be closely linked to the audit of information systems, so the presence of specialist teams in this area will facilitate the transition.

- Few guides and manuals: the very novelty of auditing AI models makes it difficult to find guides or manuals that address this type of audit.

- Have a clear strategy and leadership to improve the use of data;

- Have a coherent infrastructure for data management (emphasis on aspects such as data quality or the interoperability of tools);

- Have broader conditions (e.g., legal, training and security) to safeguard and support the better use of data.

- Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning Technologies for Medical Diagnostics analyses the current ML medical diagnostic technologies—those that are being used for five selected diseases, emerging ones, challenges affecting their development and adoption and policy options to help address these challenges;

- Department of Defence Needs Department-Wide Guidance to Inform Acquisitions examines the key factors that 13 selected private companies report taking into account when acquiring AI capabilities, the extent to which the Department of Defence has guidelines for the acquisition of AI across the department and how these guidelines reflect, if at all, the key factors identified by private sector companies;

- Artificial Intelligence: Agencies Have Begun Implementation but Need to Complete Key Requirements. This report analyses the application of AI in major federal agencies, focusing on current and anticipated uses of AI reported by federal agencies, the degree of completeness and accuracy of AI reports and the degree of compliance with certain federal AI policies and guidelines.

- Insufficient technical skills;

- Compliance with legal, ethical, data protection and contractual obligations;

- The multidisciplinary complexity of the subject;

- A lack of business analysis skills in the institution;

- Budgetary constraints.

- The awareness and commitment of the governing bodies to implement the necessary initiatives and measures, providing the necessary funding and staff devoted to the implementation process and development;

- The design of a data policy or strategy within the institution should ensure the availability of consistent and reliable information to be able to perform the analysis and the protection of the data, which minimises potential vulnerabilities, granting the necessary relevance to the cybersecurity measures adopted;

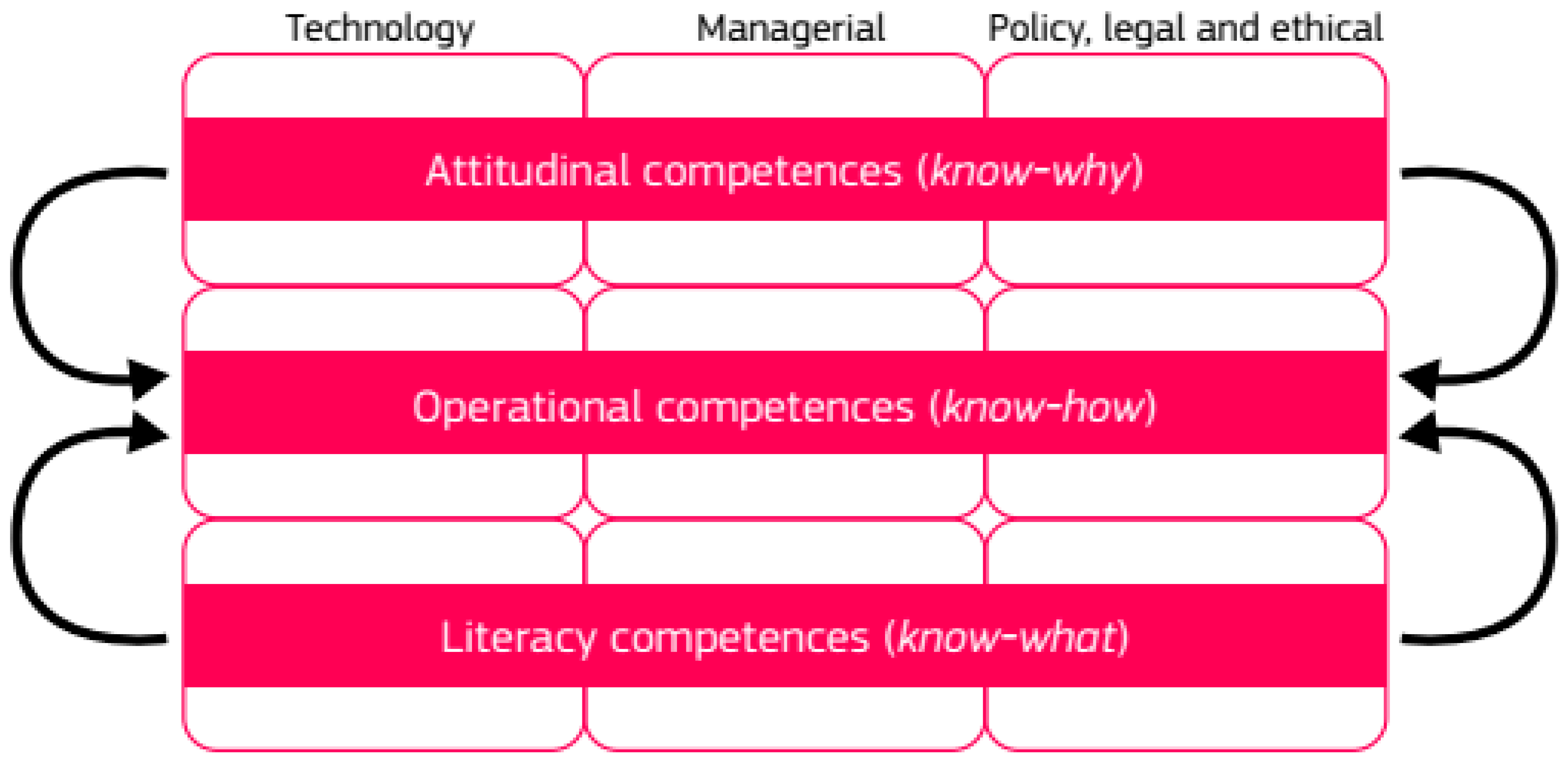

- Fostering the training of staff to achieve the adaptation of the auditor to the technological environment and promoting the creation of multidisciplinary teams. The training and capacity-building programmes developed should consider the use of already defined competence models, such as the one mentioned in Figure 1, that, according to the European Commission, respond to the competences needed or used by individuals engaging with AI in the public sector. They should include technical aspects of AI, especially the knowledge about the handling and processing of data that should be part of the qualification of the auditor and ICT staff of any SAI since the examination of the data used by the public administration and its quality is becoming an essential part of audit work. The deeper knowledge of ethical considerations, potential biases and relevant legislation must also be considered relevant;

- The recruitment of experts in data analytics and AI models, as well as staff whose legal and technical training qualifies them to audit the algorithms applied in public administration AI systems and to develop internal protocols to identify and mitigate biases in an SAI’s own AI systems. The latter will involve regular testing before and after deploying AI tools to ensure the fairness and transparency of the SAI’s oversight operations;

- Inter-institutional cooperation and collaboration with other control bodies, promoting the secure interconnection of their internal networks to share information that can help every control institution in the tasks of the internal and external control of public funds and operations.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mo Ahn, J. Artificial Intelligence in Public Administration: New Opportunities and Threats. Korean J. Public Adm. 2021, 30, 1–33.

- Asociación Española de Contabilidad y Administración de Empresas (AECA). La transformación digital del sector público en la era del gobierno. In Documento de las Comisiones Nuevas Tecnologías y Contabilidad (nº 18) y Contabilidad y Administración del Sector Público (nº 16). 2022. Available online: https://aeca.es/publicaciones2/documentos/nuevas-tecnologias-y-contabilidad-documentos-aeca/nt18_ps16/ (accessed on 7 February 2025).

- Wang, P. On Defining Artificial Intelligence. J. Artif. Gen. Intell. 2019, 10, 1–37.

- McCarthy, J. What Is Artificial Intelligence? 2004. Available online: https://borghese.di.unimi.it/Teaching/AdvancedIntelligentSystems/Old/IntelligentSystems_2008_2009/Old/IntelligentSystems_2005_2006/Documents/Symbolic/04_McCarthy_whatisai.pdf (accessed on 2 February 2025).

- Tzafestas, S.G. Roboethics. A Navigating Overview; Springer: Cham, Switzerland, 2016.

- Rich, E. Artificial Intelligence; McGraw-Hill: New York, NY, USA, 1983; 411p.

- Patterson, D.W. Introduction to Artificial Intelligence and Expert Systems; Prentice Hall of India: Hoboken, NJ, USA, 1990.

- Organisation for Economic Cooperation and Development (OECD). Artificial Intelligence in Society. 2019. Available online: www.oecd.org/going-digital/artificialintelligencein-society-eedfee77-en.htm (accessed on 7 February 2025).

- Organisation for Economic Cooperation and Development (OECD). Recommendation of the Council on Artificial Intelligence. 2019. Available online: https://legalinstruments.oecd.org/en/instruments/OECD-LEGAL-0449 (accessed on 7 February 2025).

- Fjelland, R. Why general artificial intelligence will not be realized. Humanit. Soc. Sci. Commun. 2020, 7, 10.

- Berryhill, J.; Heang, K.K.; Clogher, R.; McBride, K. Hello, World: Artificial Intelligence and Its Use in the Public Sector. OECD Working Papers on Public Governance No. 36. 2019. Available online: https://www.ospi.es/export/sites/ospi/documents/documentos/Tecnologias-habilitantes/IA-Public-Sector.pdf (accessed on 7 February 2025).

- Frank, M.R.; Wang, D.; Cebrian, M.; Rahwan, I. The evolution of citation graphs in artificial intelligence research. Nat. Mach. Intell. 2019, 1, 79–85.

- Reyes Alva, W.A.; Recuenco Cabrera, A.D. Artificial intelligence: Road to a new schematic of the world. Sciéndo 2020, 23, 299–308.

- Bostrom, N. Superintelligence; Oxford University Press: Oxford, UK, 2014.

- Ghosh, M.; Thirugnanam, A. Introduction to Artificial Intelligence. In Artificial Intelligence for Information Management: A Healthcare Perspective. Studies in Big Data; Srinivasa, K.G., Siddesh, G.M., Sekhar, S.R.M., Eds.; Springer: Singapore, 2021; Volume 88, pp. 23–44.

- Xu, C.E.; Xu, L.S.; Lu, Y.Y.; Xu, H.; Zhu, Z.L. E-government recommendation algorithm based on probabilistic semantic cluster analysis in combination of improved collaborative filtering in big-data environment of government affairs. Pers. Ubiquitous Comput. 2019, 23, 475–485.

- Guillem, F. Funciones y Características de la Inteligencia Artificial. Seguritecnia: Revista decana independiente de seguridad. Seguritecnia 2022, 493, 174–181.

- Ubaldi, B.; Le Febre, E.M.; Petrucci, E.; Marchionni, P.; Biancalana, C.; Hiltunen, N.; Intravaia, D.M.; Yang, C. State of the Art in the Use of Emerging Technologies in the Public Sector. OECD Working Papers on Public Governance No. 31. 2019. Available online: https://www.sipotra.it/wp-content/uploads/2019/09/State-of-the-art-in-the-use-of-emerging-technologies-in-the-public-sector.pdf (accessed on 7 February 2025).

- Samoili, S.; Lopez, C.M.; Gomez Gutierrez, E.; De Prato, G.; Martinez-Plumed, F.; Delipetrev, B. Defining Artificial Intelligence. Towards An Operational Definition and Taxonomy of Artificial Intelligence (JRC118163) [EUR-Scientific and Technical Research Reports]. Publications Office of the European Union. 2020. Available online: https://publications.jrc.ec.europa.eu/repository/handle/111111111/59452 (accessed on 7 February 2025).

- Valle-Cruz, D.; Criado, I.; Sandoval-Almazán, R.; Ruvalcaba-Gómez, E.A. Assessing the public policy cycle framework in the age of artificial intelligence. From agenda-setting to policy evaluation. Gov. Inf. Q. 2020, 37, 101509.

- Van Ooijen, C.; Ubaldi, B.; Welby, B. A Data-Driven Public Sector: Enabling the Strategic Use of Data for Productive, Inclusive and Trusted Governance. OECD Working Papers on Public Governance No. 33. 2019. Available online: https://www.oecd.org/en/publications/a-data-driven-public-sector_09ab162c-en.html (accessed on 7 February 2025).

- CAF-Banco de Desarrollo de América Latina. Conceptos Fundamentales y Uso Responsable de la Inteligencia Artificial en el Sector Público. Informe 2. Corporación Andina de Fomento. 2022. Available online: https://scioteca.caf.com/handle/123456789/1921 (accessed on 7 February 2025).

- Soylu, A.; Corcho, O.; Elvesaeter, B.; Badenes-Olmedo, C.; Yedro-Martinez, F.; Kovacic, M.; Roman, D. Data Quality Barriers for Transparency in Public Procurement. Information 2022, 13, 99.

- Alhazbi, S. Behavior-Based Machine Learning Approaches to Identify State-Sponsored Trolls on Twitter. IEEE Access 2020, 8, 195132–195141.

- Henrique, B.M.; Sobreiro, V.A.; Kimura, H. Contracting in Brazilian public administration: A machine learning approach. Expert Syst. 2020, 37, e12550.

- Newman, J.; Mintrom, M.; O’Neill, D. Digital technologies, artificial intelligence, and bureaucratic transformation. Futures 2022, 136, 102886.

- Leocádio, D.; Malheiro, L.; Reis, J. Exploration of Audit Technologies in Public Security Agencies: Empirical Research from Portugal. J. Risk Financ. Manag. 2025, 18, 51.

- Cui, I.; Ho, D.E.; Martin, O.; O’Connell, A.J. Governing by Assignment. SSRN Electron. J. 2024, 173, 157.

- Criado, J.I. Inteligencia Artificial (y Administración Pública). Econ. Rev. Cult. Leg. 2021, 20, 348–372.

- Diakopoulos, N. Accountability in Algorithmic Decision Making. Commun. ACM 2016, 59, 56–62.

- Stone, P.; Brooks, R.; Brynjolfsson, E. 2016 Report, One-Hundred-Year Study on Artificial Intelligence. AI100. 2016. Available online: https://ai100.stanford.edu/2016-report (accessed on 7 February 2025).

- DeSouza, K. Delivering Artificial Intelligence in Government: Challenges and Opportunities. IBM Center for Business of Government. 2018. Available online: http://www.businessofgovernment.org/sites/default/files/Delivering%20Artificial%20Intelligence%20in%20Government.pdf (accessed on 7 February 2025).

- Brookfield Institute. Introduction to AI for Policymakers: Understanding the Shift. 2018. Available online: https://brookfieldinstitute.ca/intro-to-ai-for-policymakers (accessed on 7 February 2025).

- Kilian, R.; Jäck, L.; Ebel, D. European AI Standards-Technical Standardization and Implementation Challenges Under the EU AI Act (February 26, 2025). Available online: https://ssrn.com/abstract=5155591 (accessed on 7 March 2025).

- Noble, S.U. Algorithms of Oppression: How Search Engines Reinforce Racism; New York University Press: New York, NY, USA, 2018.

- European Commission; Joint Research Centre; Medaglia, R.; Mikalef, P.; Tangi, L. Competences and Governance Practices for Artificial Intelligence in the Public Sector; JRC138702; Publications Office of the European Union: Luxembourg, 2024; Available online: https://op.europa.eu/en/publication-detail/-/publication/949913fa-aae4-11ef-acb1-01aa75ed71a1/language-en (accessed on 7 February 2025).

- Filgueiras, F. Inteligencia artificial en la administración pública: Ambigüedad y elección de sistemas de IA y desafíos de gobernanza digital. Rev. CLAD Reforma Democr. 2021, 79, 5–38.

- Morgan, M.S. Exemplification and the use-values of cases and case studies. Stud. Hist. Philos. Sci. Part A 2019, 78, 5–13.

- INTOSAI Development Initiative. Research Paper on Innovative Audit Technology; INTOSAI Working Group on Big Data: Beijing, China, 2022.

- Amsler, L.B.; Martinez, J.K.; Smith, S.E. Dispute System Design: Preventing, Managing, and Resolving Conflict; Stanford University Press: Stanford, CA, USA, 2020.

- Rivera, T. Application of machine learning in SAIs. Int. J. Gov. Audit. 2023, 50, 13–17. Available online: https://intosaijournal.org/es/journal-entry/machine-learning-application-for-sais/ (accessed on 8 February 2025).

- Prasad Dotel, R. Artificial Intelligence: Preparing for the Future of Audit. Int. J. Gov. Audit. 2020, 47, 32–35. Available online: https://intosaijournal.org/journal-entry/artificial-intelligence-preparing-for-the-future-of-audit/ (accessed on 8 February 2025).

- Garde Roca, J.A. ¿Pueden los algoritmos ser evaluados con rigor? Encuentros Multidiscip. 2023, 25, 25. Available online: http://www.encuentros-multidisciplinares.org/revista-73/juan-antonio-garde.pdf (accessed on 8 February 2025).

- Koshiyama, A.; Kazim, E.; Treleaven, P.; Rai, P.; Szpruch, L.; Pavey, G.; Ahamat, G.; Leutner, F.; Goebel, R.; Knight, A.; et al. Towards algorithm auditing: Managing legal, ethical and technological risks of AI, ML and associated algorithms. R. Soc. Open Sci. J. 2024, 11. Available online: https://royalsocietypublishing.org/doi/10.1098/rsos.230859 (accessed on 8 February 2025).

- European Court of Auditors. Artificial Intelligence Initial Strategy and Deployment Roadmap 2024–2025; European Court of Auditors: Luxembourg, 2024.

- National Audit Office. Challenges in Using Data Across Government. UK National Audit Office. 2019. Available online: https://www.nao.org.uk/insights/challenges-in-using-data-across-government/ (accessed on 8 February 2025).

- National Audit Office. Use of Artificial Intelligence in Government. UK National Audit Office. 2024. Available online: https://www.nao.org.uk/wp-content/uploads/2024/03/use-of-artificial-intelligence-in-government-summary.pdf (accessed on 8 February 2025).

- European Court of Auditors. EU Artificial Intelligence Ambition: Stronger Governance and Increased, More Focused Investment Essential going Forward, Special Report 8/2024. 2024. Available online: https://www.eca.europa.eu/en/publications/SR-2024-08 (accessed on 8 February 2025).

| Tool Type | Potential Implementation |
|---|---|
| Clustering algorithms (grouping similar data points) | In external control, these could be useful, for example, for grouping expenditures by ministerial department or for identifying groups of similar spending programmes or projects, thus facilitating their comparison and evaluation. |
| Anomaly detection algorithms (deviations from the standard) | Applied to control, these make it possible to detect budgetary irregularities or prioritise audits according to the areas in which the results deviate from the foreseeable patterns. |
| Artificial neural networks | These algorithms can perform various tasks, including image and voice recognition and natural language processing (NLP), so in the field of external control they can be applied to process and analyse large amounts of unstructured information, such as texts and images, with the aim of extracting audit trails or relevant conclusions. For example, prediction models can be created from the information available from previous audits to detect unauthorised aid payments, unusual expenses or overpricing. |
| Decision trees | These classify data points based on a set of previously defined decision rules, so in external control they may be useful to classify transactions as fraudulent or not, to classify suppliers as high or low risk or to predict the likelihood of fraud in a given area. |
| K-nearest neighbours | These are some of the simplest algorithms and the easiest to implement. They classify a point according to the points closest to it (its neighbours), with k being the number of neighbours that are checked. They are used in image and video recognition applications, stock exchange analyses, pattern recognition, intruder detection and the construction of more complex algorithms. |
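As a rough illustration of the last row of the table, the following sketch classifies a supplier as high or low risk by majority vote among its k nearest labelled neighbours. The two features, the labelled suppliers and the function name `knn_classify` are hypothetical and chosen only for illustration; a real audit application would need validated features and far more data.

```python
from collections import Counter
from math import dist

def knn_classify(train, point, k=3):
    """Label `point` by majority vote among its k nearest training examples."""
    # Sort labelled examples by Euclidean distance to the point and keep k.
    neighbours = sorted(train, key=lambda item: dist(item[0], point))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical suppliers described by two assumed features:
# (share of contracts awarded without competition, average price overrun).
train = [
    ((0.05, 0.02), "low"), ((0.10, 0.01), "low"), ((0.08, 0.04), "low"),
    ((0.70, 0.30), "high"), ((0.65, 0.25), "high"), ((0.80, 0.35), "high"),
]

label_a = knn_classify(train, (0.12, 0.03))
label_b = knn_classify(train, (0.60, 0.28))
print(label_a, label_b)
```

With two classes, an odd k avoids tied votes. Because the result depends on distances, features measured on very different scales would normally be standardised first.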

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Genaro-Moya, D.; López-Hernández, A.M.; Godz, M. Artificial Intelligence and Public Sector Auditing: Challenges and Opportunities for Supreme Audit Institutions. World 2025, 6, 78. https://doi.org/10.3390/world6020078
