4.1. Error Detection Criteria

In this section, we extend the error detection criteria of two-workstation lines (TEDC) to multi-workstation cases (MEDC).

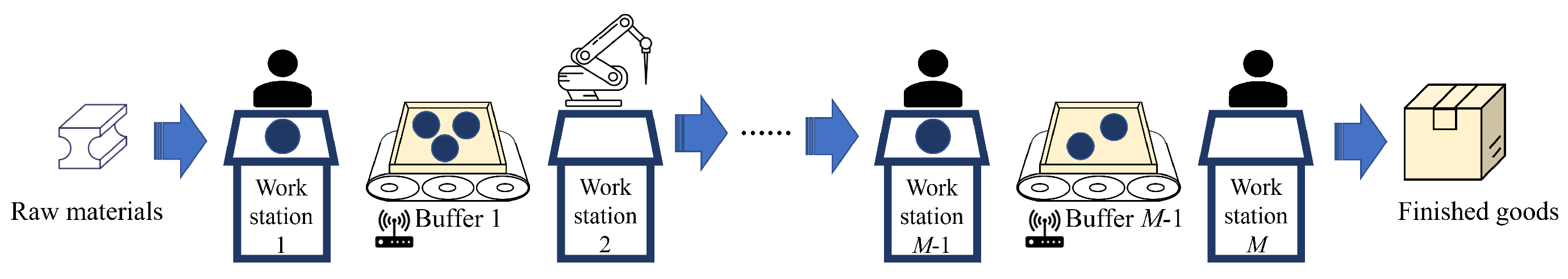

For multi-workstation lines, the production status of each workstation in each time slot can be estimated from the parts flow data, as in the two-workstation case. Specifically, for Workstation 1 and Workstation M, the expressions in Equations (1) and (3) take the measurement-based forms shown in Equations (16) and (17).

For the internal workstations, Workstations 2 through M − 1, each is connected with two buffers, so its production status can be estimated from either its upstream buffer or its downstream buffer. These two estimates are given in Equations (18) and (19).

To detect potential errors in the data, we first confirm whether the deduced values of , , comply with the system constraints. If they do not, error detection criteria are designed.

If , then error is potentially present in or or both.

If or or , then error is potentially present in , , , and or a subset of them.

If , then error is potentially present in or or both.

Similar to TEDC in the two-workstation case, MEDC can be used to identify suspicious parts flow data entries that may potentially contain errors.

4.2. Error Correction Process

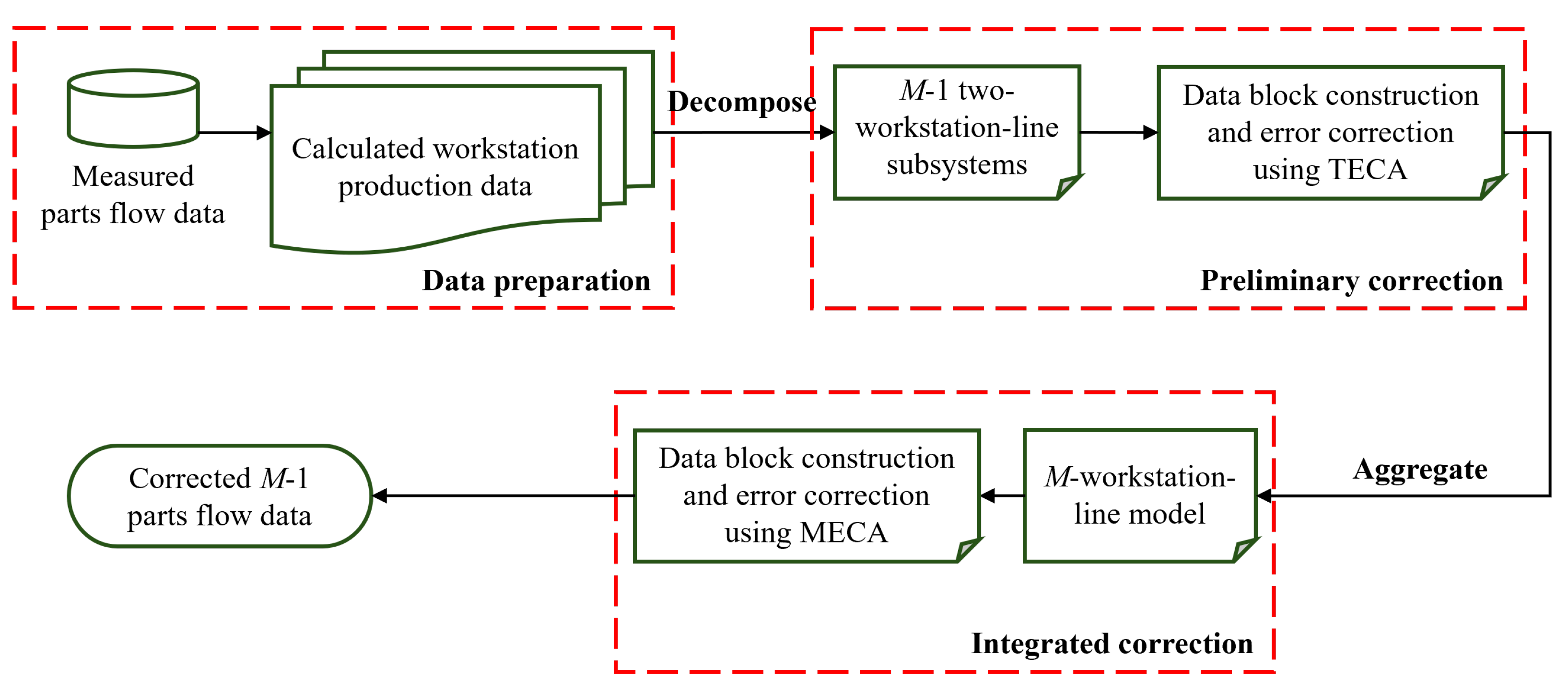

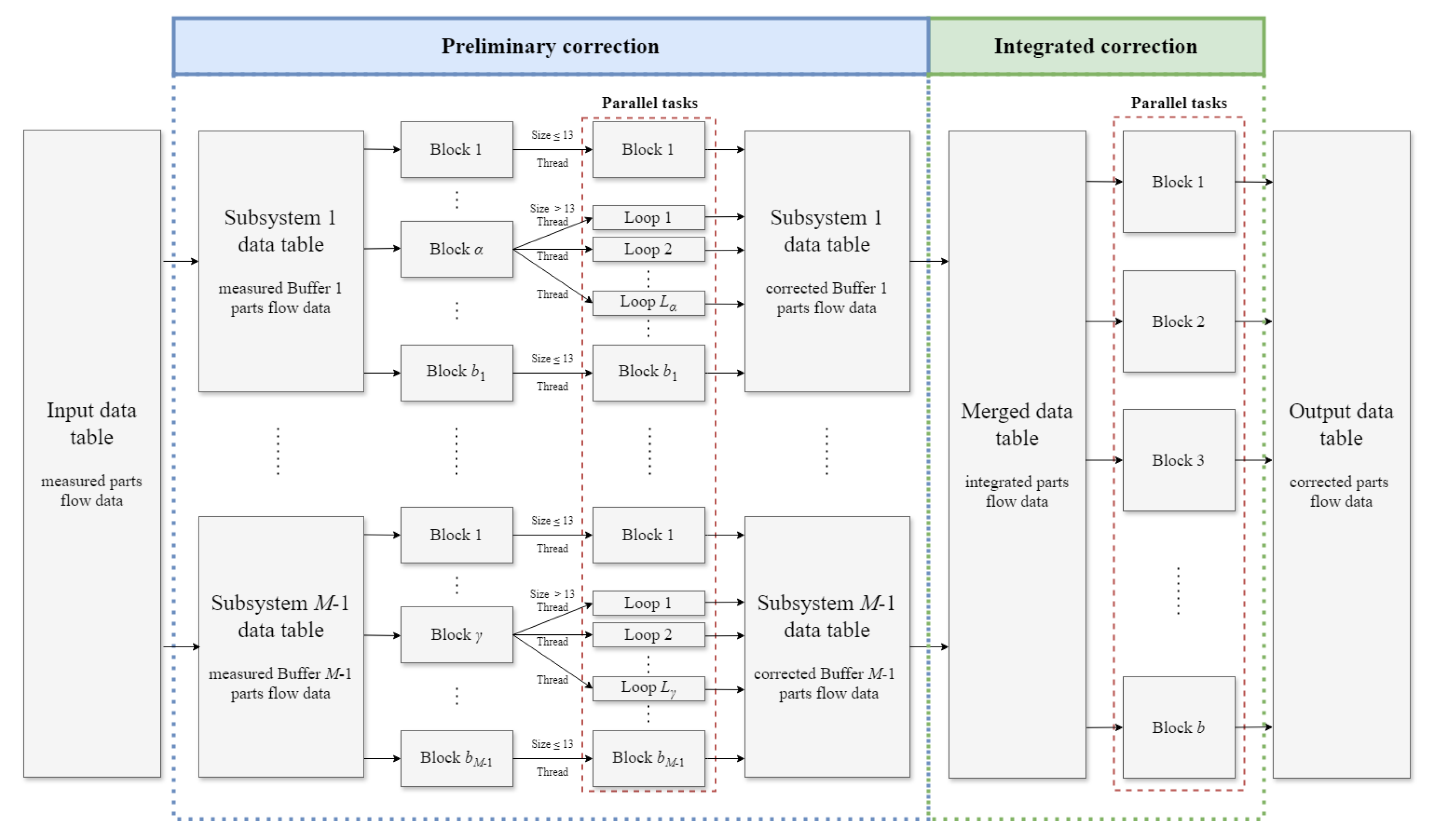

MEDC flags suspicious entries, but it cannot by itself determine which data entries actually contain errors. In addition, an exhaustive search over all potential error correction options throughout the entire dataset would be excessively complex and time-consuming. Therefore, for systems with multiple workstations and buffers, we propose a decomposition/aggregation-based approach to overcome these challenges. The steps of this method are illustrated in the flow chart of Figure 4, and each is outlined below.

Data preparation

In the first step, the parts flow data are collected, and the workstation production data are calculated from them based on Equations (16)–(19).

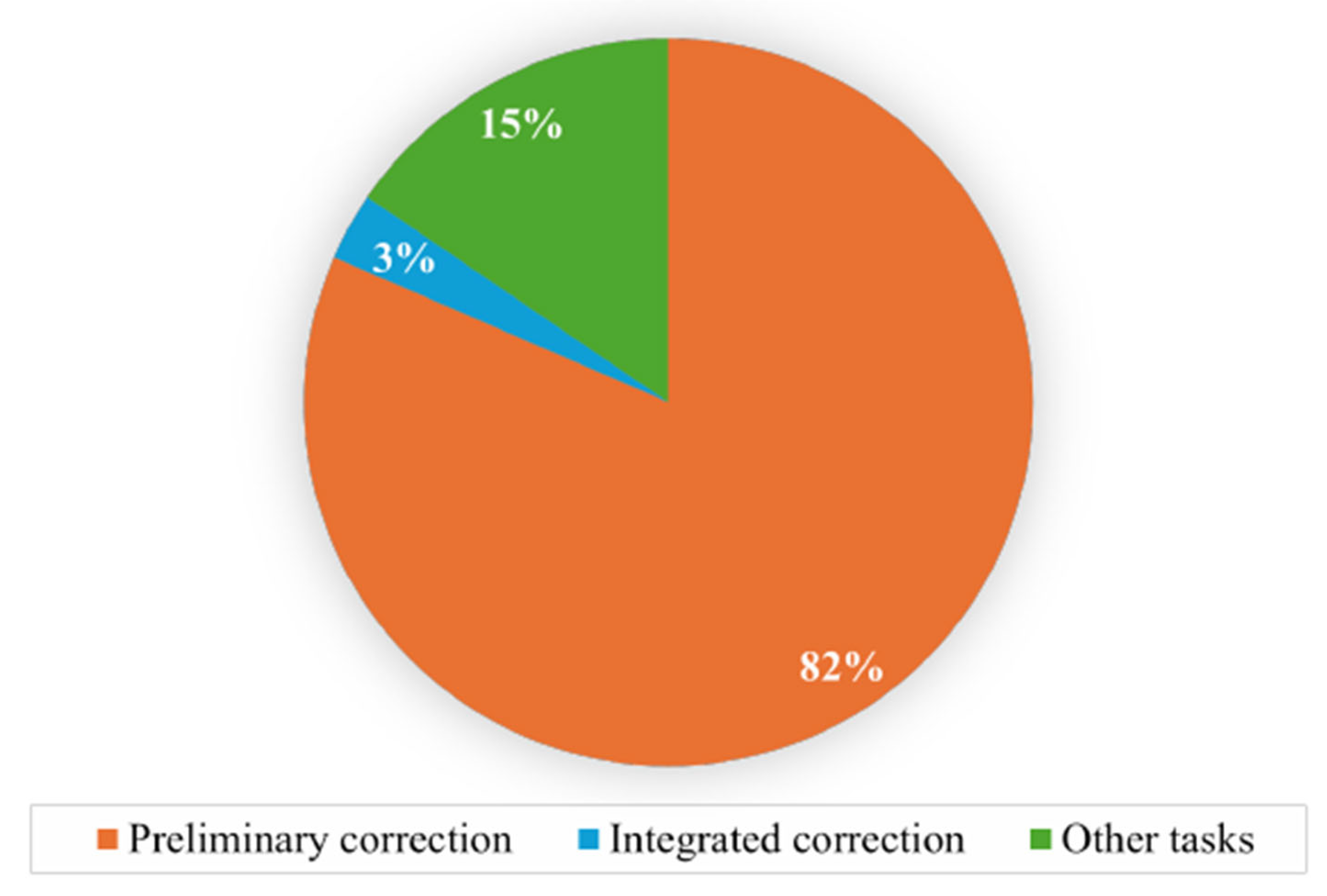

Preliminary correction

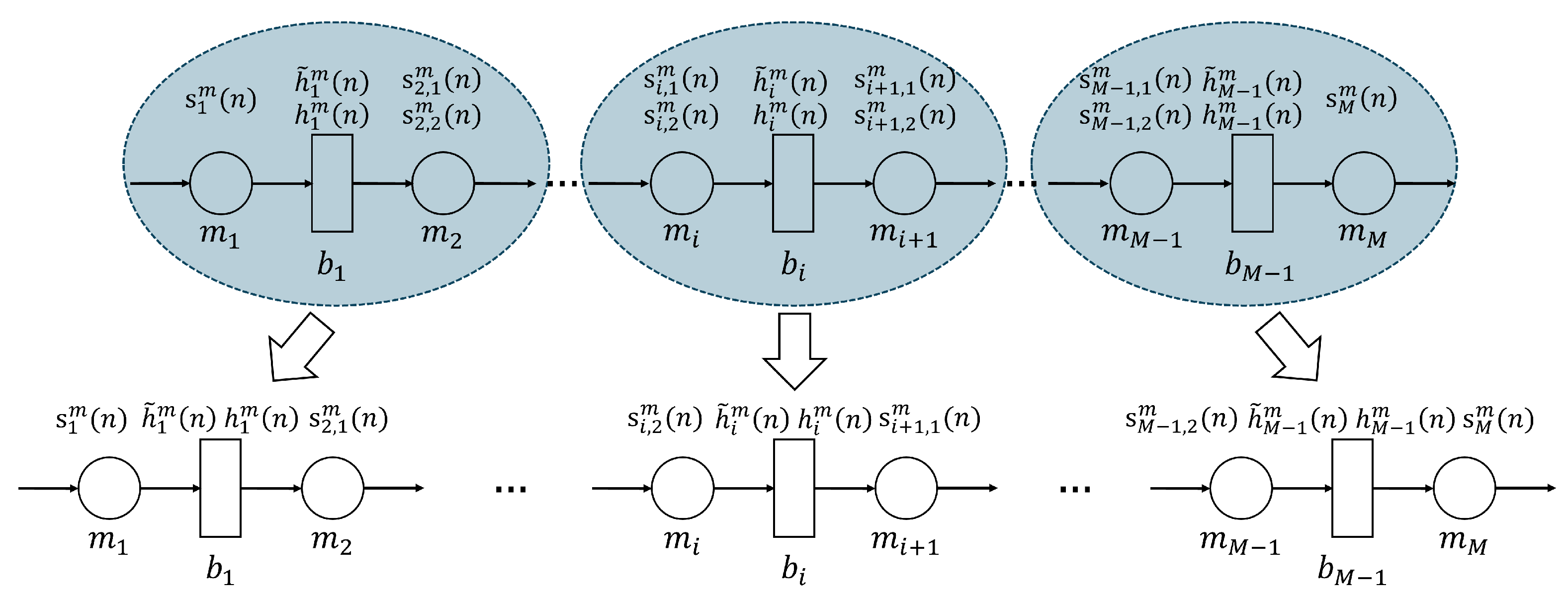

In this step of the error correction process, we decompose the M-workstation line into M − 1 two-workstation-line subsystems, one per buffer. This is illustrated in Figure 5. For each resulting two-workstation line, a sub-dataset is constructed that contains the parts flow data from the buffer belonging to this subsystem, together with the workstation production data calculated from this buffer’s parts flow data. Specifically, the first subsystem consists of Workstation 1, Workstation 2 and Buffer 1; each intermediate subsystem consists of Workstation i, Workstation i + 1 and Buffer i, i = 2, …, M − 2; and the last subsystem consists of Workstation M − 1, Workstation M and Buffer M − 1. In each case, the sub-dataset consists of the corresponding parts flow and workstation production data entries.

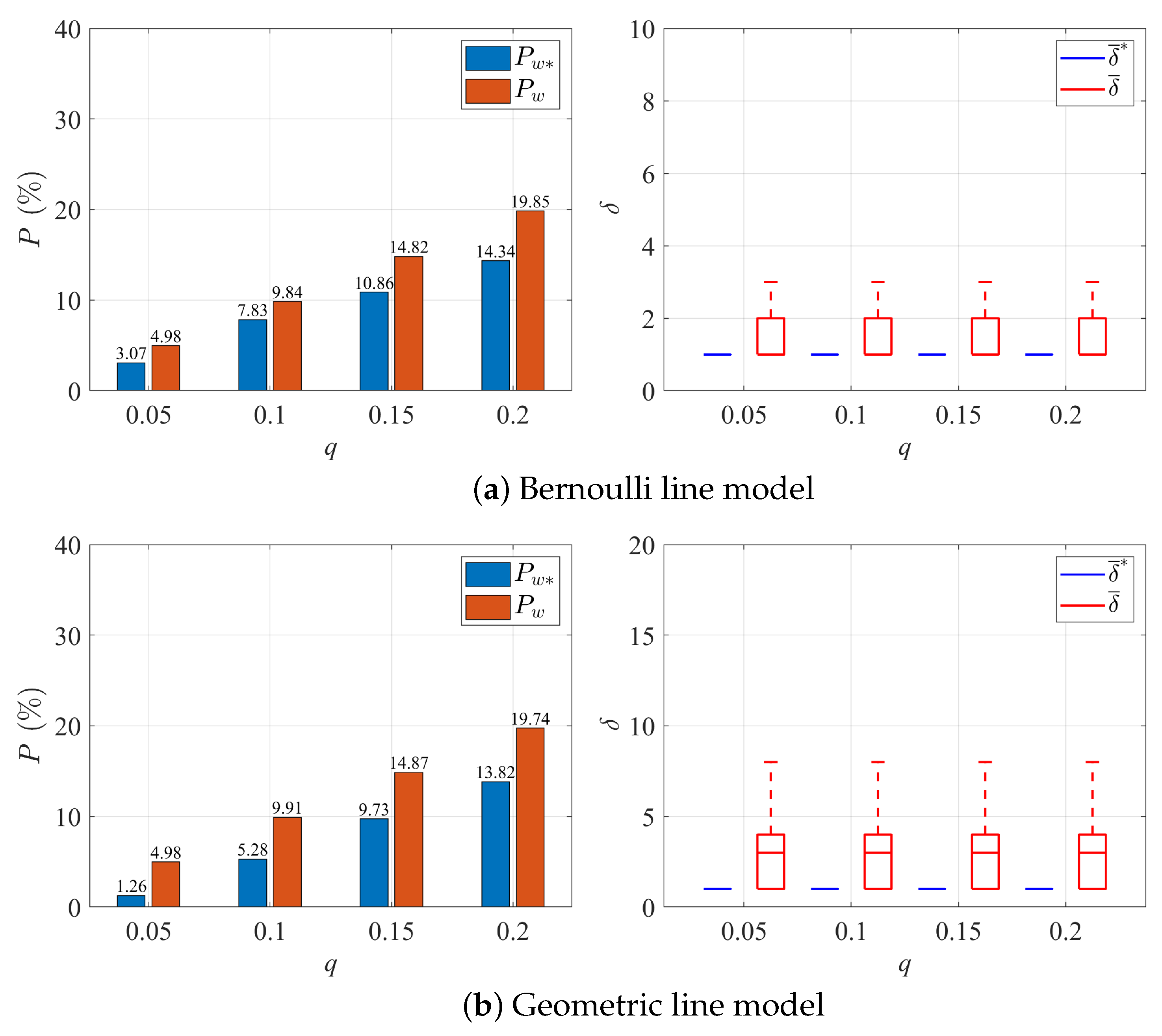

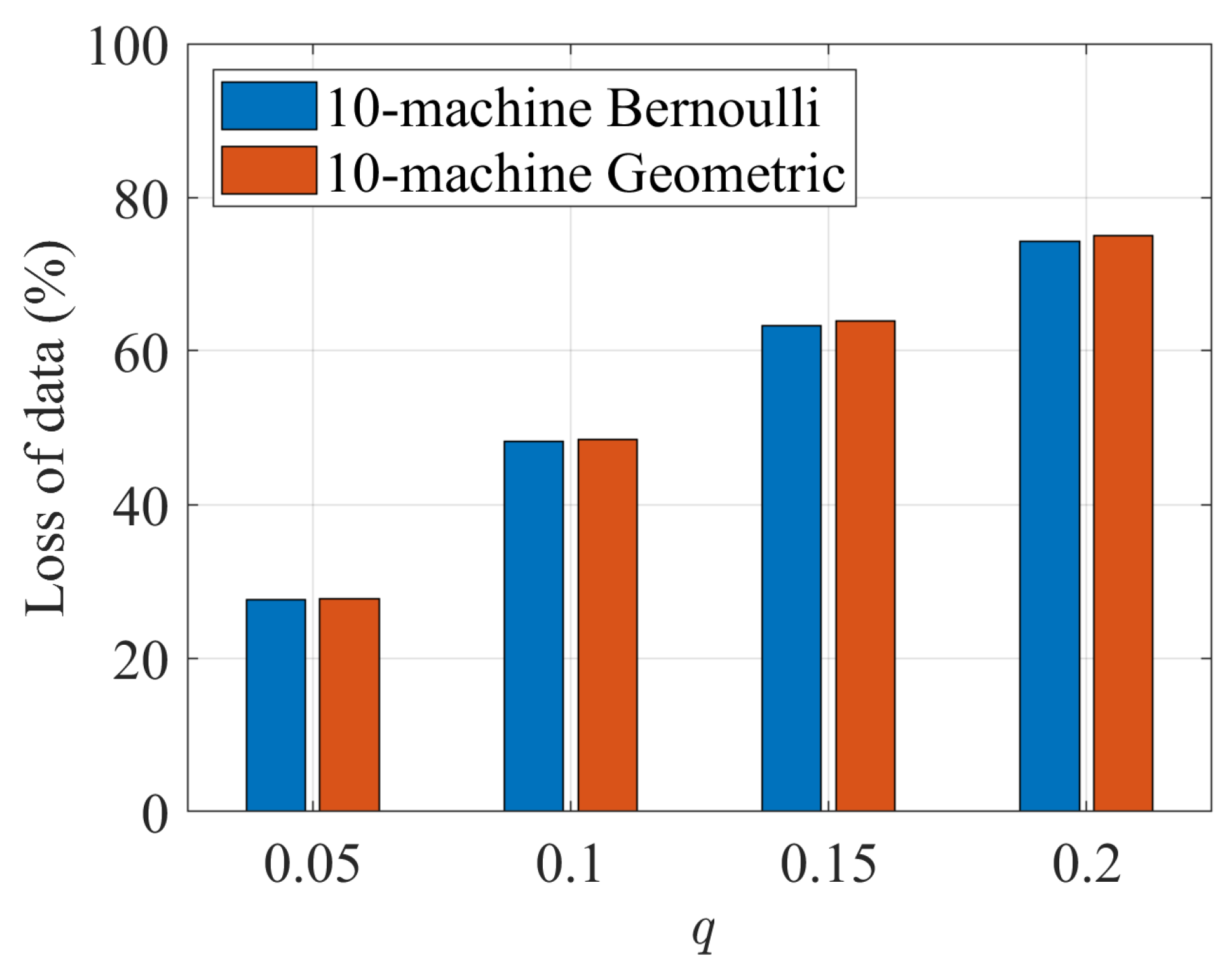

With the above decomposition, we treat each two-workstation-line subsystem independently in this step and apply TECA to each sub-dataset constructed above. Note that preliminary correction is intended to identify and correct the errors in the parts flow data entries locally, i.e., within each two-workstation-line subsystem.
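As a rough illustration of the decomposition, the sketch below enumerates the two-workstation-line subsystems of an M-workstation serial line. The function name `decompose_line` and the tuple layout are assumptions made for this example and are not notation from the paper. The sketch only relies on Buffer i sitting between Workstation i and Workstation i + 1.

```python
def decompose_line(num_workstations):
    """Return one (upstream workstation, downstream workstation, buffer) triple per buffer.

    Hypothetical helper for illustration; all indices are 1-based.
    """
    if num_workstations < 2:
        raise ValueError("a serial line needs at least two workstations")
    # Buffer i connects Workstation i to Workstation i + 1.
    return [(i, i + 1, i) for i in range(1, num_workstations)]


# Example: a five-workstation line splits into four subsystems.
subsystems = decompose_line(5)
for upstream, downstream, buffer in subsystems:
    print(f"Workstation {upstream} - Buffer {buffer} - Workstation {downstream}")
```

Each triple identifies one sub-dataset that would be corrected independently in the preliminary correction step.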

Upon completion of the preliminary correction, the workstation production data are guaranteed to be within their feasible range. However, since the corrections are performed locally, and the upstream-based and downstream-based production data of a given internal Workstation i belong to the datasets of different subsystems, inconsistency between them may still exist after preliminary correction. This potential problem is addressed in the next step, integrated correction.

Integrated correction

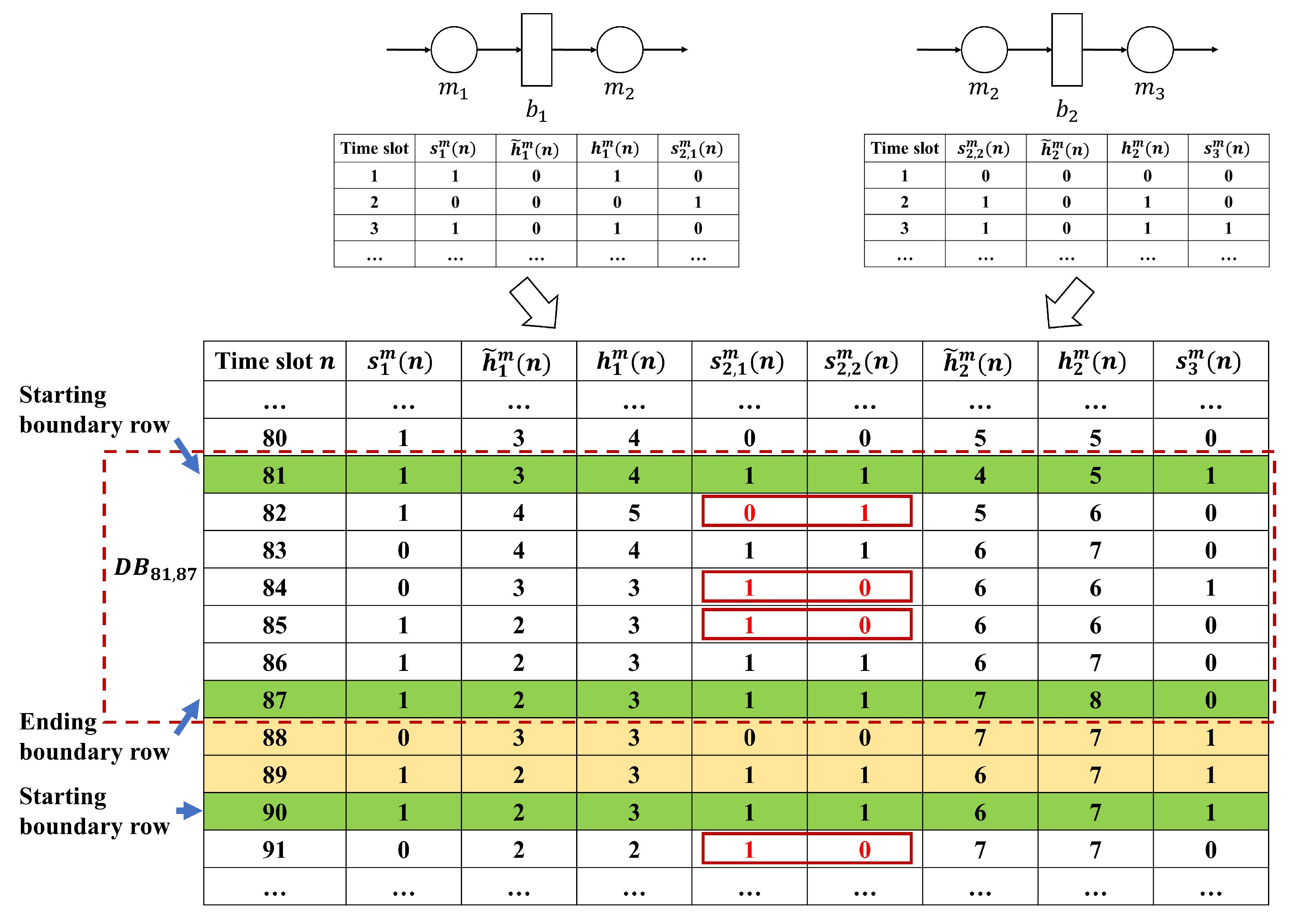

In this step, we aggregate the two-workstation-line subsystems from the preliminary correction back into the original M-workstation serial line structure. An integrated dataset for the whole M-workstation line is constructed by merging the TECA-corrected sub-datasets from preliminary correction. An illustration is given in Figure 6, in which each data series is obtained from the subsystem in which it was corrected in the previous step.

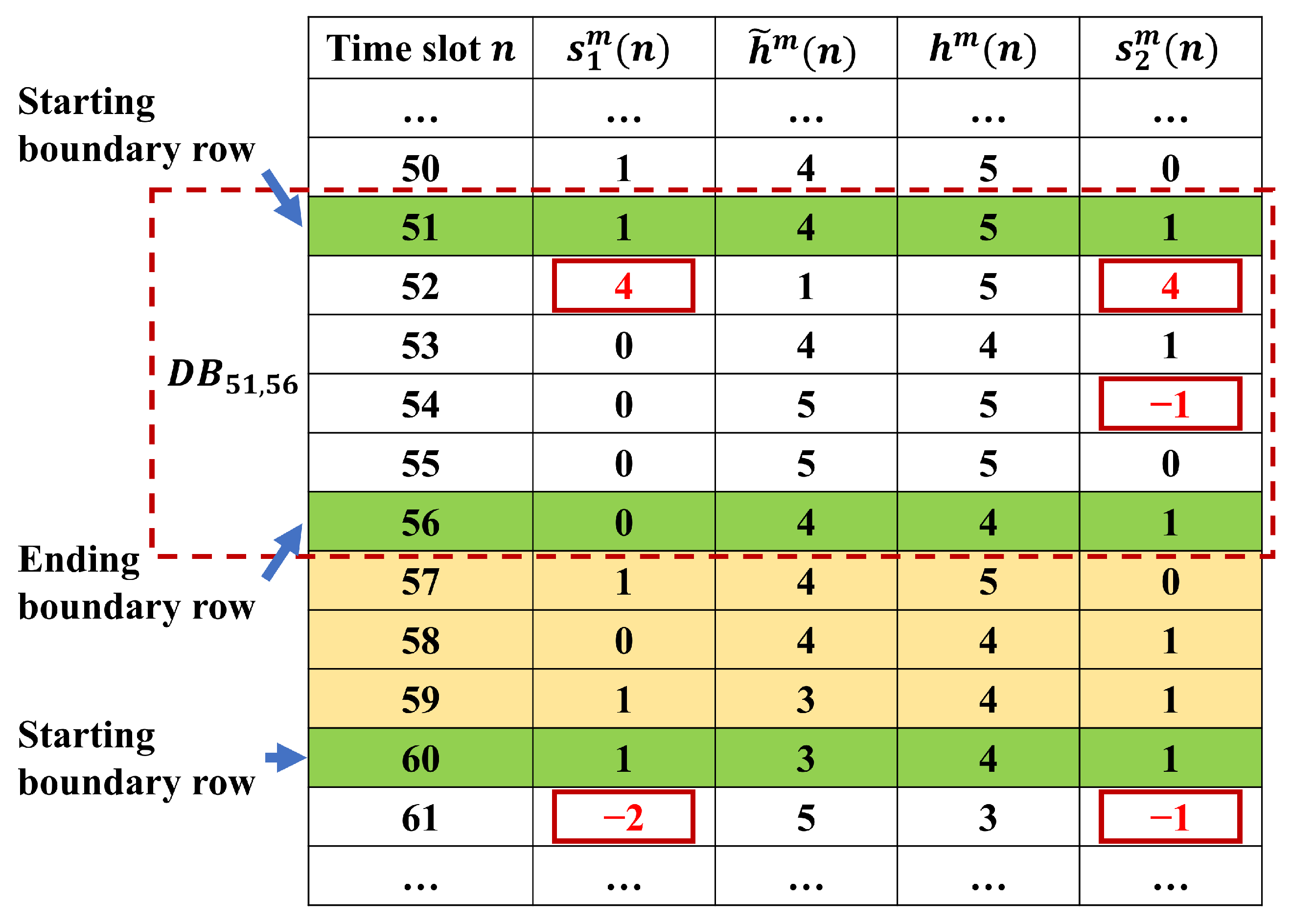

Now, to identify and correct the errors in the merged dataset, which comprises multiple workstations and multiple buffers, the data-block-based method described in Section 3 is extended to the Multi-workstation Error Correction Algorithm (MECA). In this case, the data table is partitioned into blocks using starting and ending boundary rows specified based on MEDC. Specifically, if the data entries in two consecutive rows satisfy the consistency constraint (20) for all workstations, and the entries in the subsequent row fail to meet this constraint for at least one workstation, then the second of the two consistent rows is designated as the starting boundary row of a data block. Then, scanning the rows that follow the starting boundary row, if the data entries in a row do not satisfy constraint (20) for at least one workstation, but the entries in the two consecutive rows after it do for all workstations, then the second of these two rows is identified as the ending boundary row of this data block.

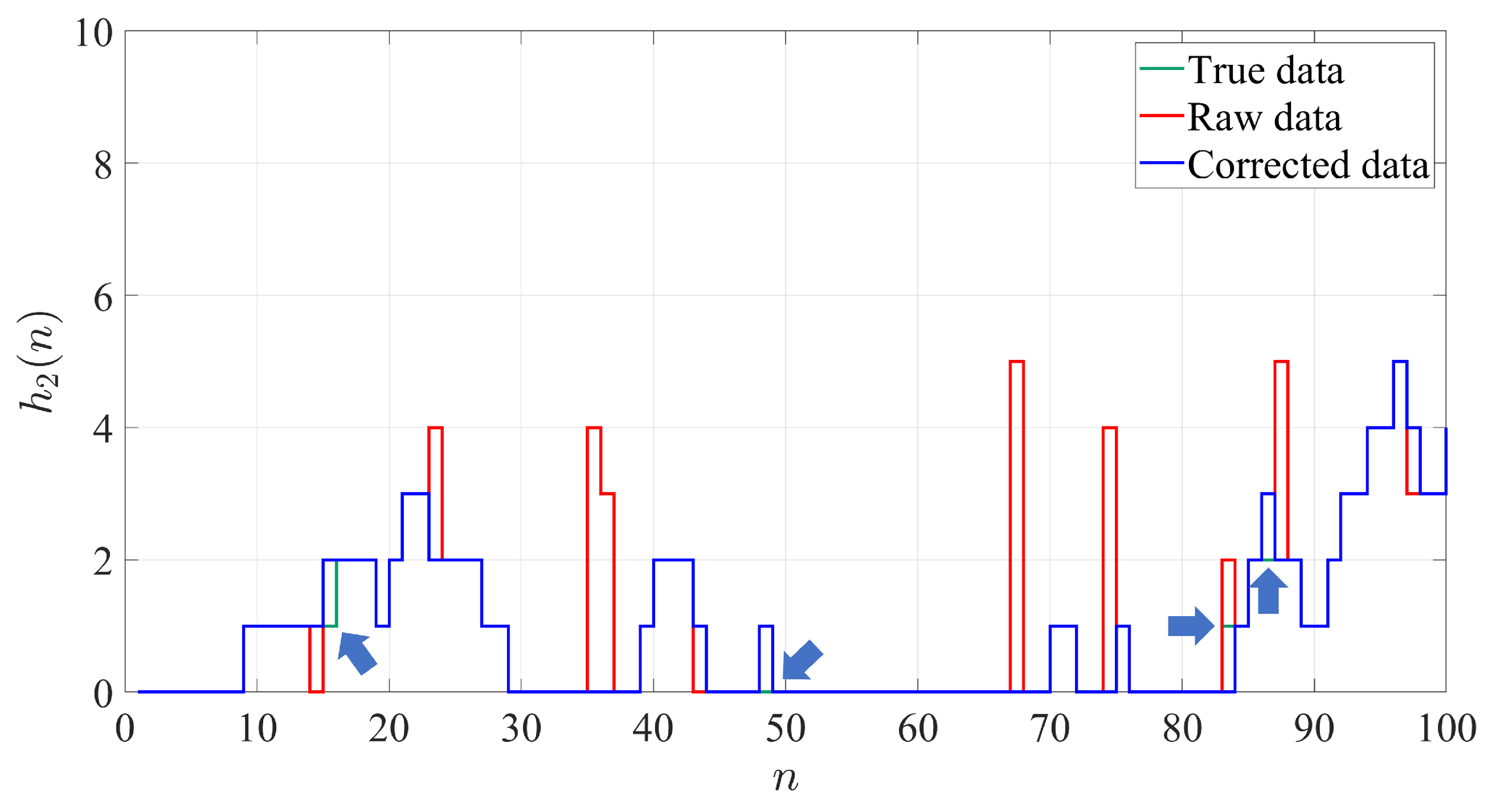

To illustrate the construction of a data block, consider again the parts flow and workstation production data after the preliminary correction, as depicted in Figure 6. In this example, row 81 is first identified as the starting boundary row of a data block, since the data entries in rows 80 and 81 all pass constraint (20) but row 82 has entries violating it. The next several rows either have entries violating constraint (20) (rows 82, 84, 85) or are immediately followed by a row with data violating it (row 83 passes MEDC but row 84 fails). This continues until rows 86 and 87, where (20) is met for two consecutive rows, which makes row 87 the ending boundary row of this data block. The data blocks thus obtained should encompass a large portion of the dataset but do not necessarily cover it entirely. In the example shown in Figure 6, rows 88 and 89 do not belong to any data block.
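The boundary rule can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `find_data_blocks` is hypothetical, and the input is assumed to be a list of booleans in which each element records whether a row satisfies constraint (20) for all workstations. How to treat a block that never reaches an ending boundary before the data run out is not specified in the text, so the sketch simply stops there.

```python
def find_data_blocks(row_ok):
    """Return (start, end) index pairs of data blocks.

    row_ok[k] is True when row k satisfies constraint (20) everywhere.
    Hypothetical helper; indices are positions in row_ok.
    """
    blocks = []
    n = len(row_ok)
    k = 1
    while k + 1 < n:
        # Start boundary: rows k-1 and k pass, row k+1 fails.
        if row_ok[k - 1] and row_ok[k] and not row_ok[k + 1]:
            end = None
            j = k + 1
            while j + 2 < n:
                # End boundary: row j fails, rows j+1 and j+2 both pass.
                if not row_ok[j] and row_ok[j + 1] and row_ok[j + 2]:
                    end = j + 2
                    break
                j += 1
            if end is None:
                break  # assumption: an unterminated block is left open
            blocks.append((k, end))
            k = end  # the end row may also start the next block
        else:
            k += 1
    return blocks


# Rows 80..89 of the example: rows 82, 84 and 85 violate constraint (20).
first_row = 80
flags = [True, True, False, True, False, False, True, True, True, True]
blocks = [(s + first_row, e + first_row) for s, e in find_data_blocks(flags)]
print(blocks)
```

With the flags taken from the example, the single block found runs from row 81 to row 87, and rows 88 and 89 fall outside any block.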

With the data blocks constructed, the identification and correction of erroneous data entries are performed within each individual data block. Note that it follows from Equations (1)–(3) that the parts flow and workstation production data variables of an M-workstation serial line satisfy Equations (21) and (22).

The measured data should follow the same relationships. Thus, for a given data block with its starting and ending boundary rows, Equations (21) and (22) can be rewritten as Equations (23) and (24).

Using Equations (23) and (24), one can try different combinations of workstation production data, calculate the corresponding parts flow data, and determine the combinations of workstation production data that are most likely to represent the true data. However, because the multi-workstation case involves a larger number of data entries, it is computationally infeasible to replicate the TECA approach and enumerate all valid combinations of workstation production data. Therefore, a procedure is developed that inspects only a select set of the most suspicious combinations of workstation production status, which keeps the computing burden manageable. Specifically, for a data block with given starting and ending boundary rows, a set of workstation production data within the data block is selected to be trialed in Equations (23) and (24). The selection proceeds as follows.

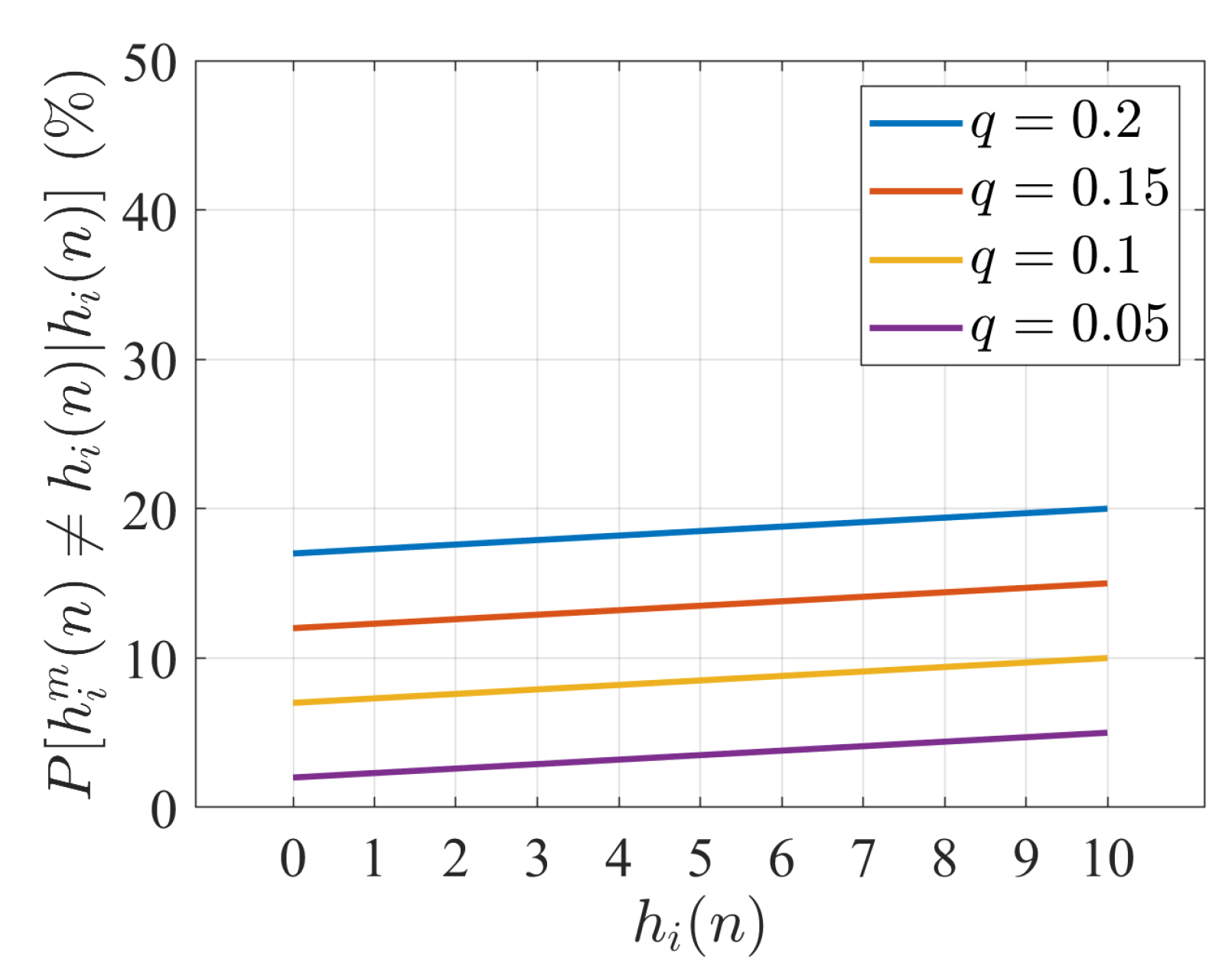

For Workstation i, , is selected into if

- –

, or

- –

but ;

For Workstation 1, is selected into if is selected into ;

For Workstation M, is selected into if is selected into .

Following this procedure, the data entries not selected for trial are assumed to be error-free and remain unchanged during the data correction process. As a result, the number of combinations of workstation production status to be tested is 2 raised to the number of selected entries (each data entry taking a value of either 0 or 1), as opposed to 2 raised to the total number of workstation production data entries in the block if all of them were enumerated. For each combination examined, Equations (23) and (24) are used to calculate the corresponding parts flow data. If the resulting parts flow data are within their feasible ranges, the algorithm proceeds to count the number of data entries modified compared with the original measured parts flow data. The combination with the minimal number of modified entries is output as the final corrected dataset.
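The search over the selected entries can be sketched as follows. This is only an illustrative outline: `search_block`, `flow_from_status`, and `is_feasible` are hypothetical names, and the two callables stand in for Equations (23) and (24) and for the feasibility check, neither of which is reproduced here. Tie-breaking between combinations with the same number of modifications is not specified in the text; the sketch keeps the first one found.

```python
from itertools import product


def search_block(status, selected, measured_flow, flow_from_status, is_feasible):
    """Try every 0/1 assignment of the selected entries and keep the best one.

    status: dict mapping an entry key to its current 0/1 production status.
    selected: keys of the entries to be trialed; all others stay unchanged.
    flow_from_status: placeholder for Equations (23) and (24).
    is_feasible: placeholder for the feasible-range check on parts flow data.
    Returns (modified_count, trial_status, flow) or None if nothing is feasible.
    """
    best = None
    for values in product((0, 1), repeat=len(selected)):
        trial = dict(status)
        trial.update(zip(selected, values))
        flow = flow_from_status(trial)
        if not is_feasible(flow):
            continue
        # Count parts flow entries that differ from the measured data.
        modified = sum(a != b for a, b in zip(flow, measured_flow))
        if best is None or modified < best[0]:
            best = (modified, trial, flow)
    return best


# Toy stand-ins (NOT the paper's equations): one flow value equal to a + b.
toy_flow = lambda s: [s["a"] + s["b"]]
toy_feasible = lambda flow: all(0 <= x <= 1 for x in flow)
result = search_block({"a": 1, "b": 1}, ["a", "b"], [1], toy_flow, toy_feasible)
print(result)
```

Because only the selected entries are enumerated, the loop runs over 2 to the power of `len(selected)` combinations rather than over every entry in the block.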

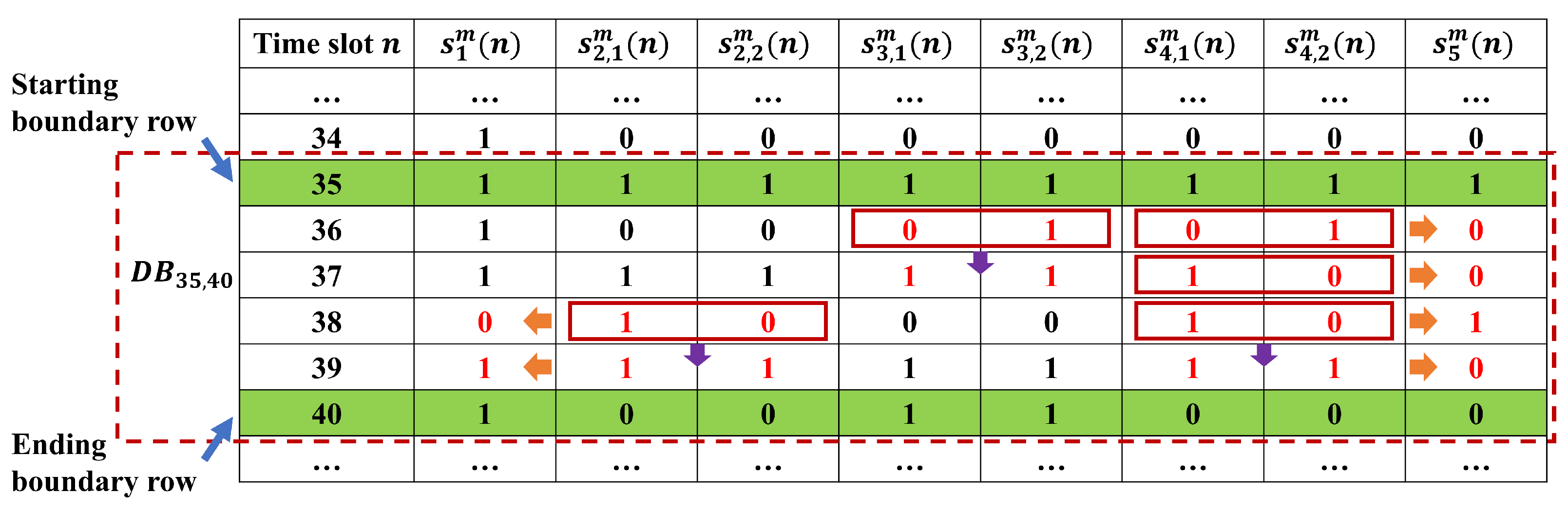

Figure 7 shows an example of the above data entry selection procedure for a five-workstation line. In this example, for the data block bounded by row 35 and row 40, inconsistency is observed between five pairs of data entries, which are indicated by the red boxes in the figure. These inconsistencies cause five workstation production data entries to be selected for calculation in Equations (23) and (24). Then, three further entries are selected because three related entries were selected due to the observed inconsistency (indicated by purple arrows). Finally, six further entries are selected because six related entries have all been selected (indicated by orange arrows). The resulting set consists of 14 workstation production data entries out of the 20 total in the data block, reducing the computational burden for this data block to only 1.56% of that of full enumeration. The entire MECA process for integrated correction is given as pseudo-code in Algorithm 1.
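The 1.56% figure can be checked with a short calculation, given that each entry is binary. The helper name `enumeration_fraction` is made up for this example.

```python
def enumeration_fraction(num_selected, num_total):
    """Fraction of the full 0/1 enumeration that is actually tested."""
    return 2 ** num_selected / 2 ** num_total


# 14 selected entries out of 20 in the data block of the example.
fraction = enumeration_fraction(14, 20)
print(f"{fraction:.4%}")
```

Since 2^14 / 2^20 = 2^-6 = 1/64, the fraction is about 1.56%, in agreement with the value quoted for this data block.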

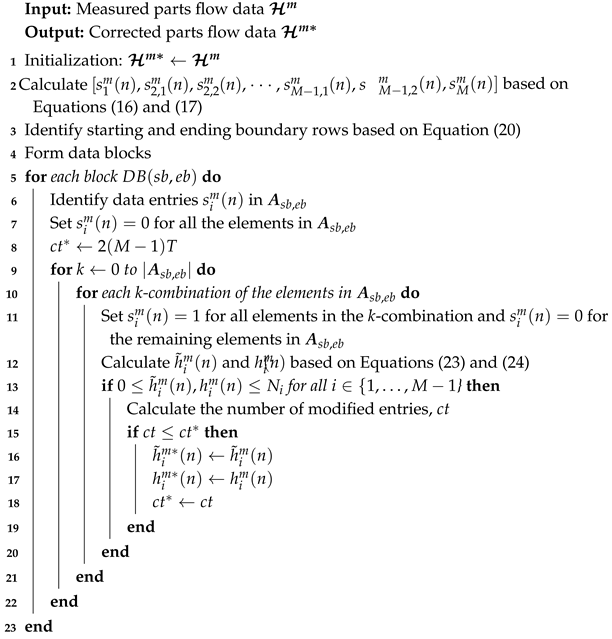

Algorithm 1: Multi-workstation Error Correction Algorithm (MECA)

[Pseudo-code of Algorithm 1 appears as an image in the original article.]