1. Introduction

In orthopedic surgical procedures, such as internal fixation of fractures, precision bone drilling is a fundamental and critical step. Prior to the placement of fixation hardware, such as screws, plates, and intramedullary nails, it is essential to drill accurately positioned and dimensioned holes into the bone. The biomechanical stability of the fixation and the long-term healing outcome are highly dependent on the drilling accuracy, thermal damage control, and minimization of mechanical trauma to the surrounding bone tissue [1]. In conventional surgical drilling, complications such as thermal necrosis, microcracks, or misaligned trajectories may arise due to uncontrolled force application, excessive temperature rise (above the 47 °C threshold), or poor ergonomic control by the surgeon [2]. Robot-assisted bone drilling is therefore a promising way to achieve repeatable, accurate, and minimally invasive procedures.

Precision bone drilling is a fundamental and critical procedure in orthopedic surgery, particularly for the fixation of fractures using implants such as screws, plates, or intramedullary rods. The process demands not only geometric accuracy in the drilled hole but also the preservation of bone integrity and biological viability [3]. Improper drilling can lead to delayed healing due to complications such as microcracks [4], misalignment [5], and thermal necrosis [6] of bone tissue.

A key challenge in bone drilling is the generation of excessive heat due to friction between the drill bit and bone. Early studies [7] have pointed out how poor drill condition increases bone cell damage. It is well established that temperatures exceeding 47 °C can lead to thermal necrosis, permanently damaging bone structure and affecting postoperative recovery [8]. The application of excessive axial force or torque may cause mechanical failure, such as microfractures, while insufficient force can lead to incomplete drilling or tool slippage [9].

Another critical factor influencing surgical success is tool wear, which accumulates progressively during drilling. A worn drill bit results in increased cutting forces, higher temperatures, poor hole quality, and longer drilling times [10]. Predicting and compensating for tool wear is therefore essential for ensuring consistent performance and minimizing intraoperative risks. Staroveski et al. [11] showed that the wear of cortical bone drills could be effectively classified using neural networks trained on features of force, torque, and acoustic emission, demonstrating the feasibility of AI-assisted wear detection during surgery.

Additionally, vibrations generated during drilling, especially in dense cortical bone, can degrade the precision of drilling and cause discomfort or structural damage [12]. These vibrations, along with force, torque, and thermal feedback, provide vital information for real-time monitoring of the drilling state. Although the present work focuses on surgical bone drilling, and surgery in general is a life-critical activity, the methodologies for characterization and tool wear prediction may be inspired by broader drilling research in manufacturing, which involves materials that are much stronger, such as metals and alloys, including those used in surgical implants [13], and much less homogeneous, such as composites [14].

Liu et al. [15] recently designed a deep learning-based tool wear monitoring system that uses vibration and cutting force signals to predict drill wear progression in bone drilling with high accuracy, highlighting the potential of integrating AI for risk reduction.

The convergence of robotics and sensor technology provides a platform for developing high-precision, minimally invasive surgical systems capable of overcoming the limitations of traditional manual drilling. Such systems offer repeatability, consistency, and data-driven decision support, marking a significant advancement in orthopedic surgical practice [16].

In the context of orthopedic bone drilling, tool wear is an increasingly recognized factor influencing both surgical accuracy and patient safety. Repeated usage of surgical drill bits, especially in high-speed or dense bone drilling scenarios, leads to progressive loss of sharpness, chipping, or flank wear, which can significantly degrade cutting efficiency [17]. As the tool wears, the required cutting force and torque increase, thermal energy rises, and hole quality deteriorates, contributing to longer operation times and increased risk of thermal necrosis [18]. Contamination may be a serious issue in orthopedic implant surgeries if traditional coolants are used for machining in the custom-fitting of implants. Gómez-Escudero et al. [13] have presented a novel solution to this problem by using cryogenic carbon dioxide as the coolant. In orthopedic surgeries, sterilized water is used intermittently as a coolant. Although our end-effector has provision for dispensing coolant water, no coolant was used in the drilling experiments because the focus is exclusively on tool condition monitoring.

Multiple studies in the field of manufacturing and biomedical engineering have addressed the importance of real-time tool wear monitoring and prediction. Techniques such as acoustic emission analysis, vibration signal monitoring, and cutting force modeling have been applied to industrial machining processes. These methods have shown potential for translation into surgical applications where predictive models can alert the system or surgeon about imminent tool failure or reduced performance. Peña et al. [19] have successfully implemented a novel method of preventing burr formation on aluminium alloy components during drilling using real-time monitoring of spindle torque and signal processing. Islam et al. [20] combined conventional analysis techniques with two supervised ML algorithms to predict bone drilling temperatures. Kung et al. [21] proposed a neural network model for immediate thermal visualization as a surgical assistive device for a human surgeon.

Recent research has explored the use of machine learning (ML) algorithms to correlate sensor-derived features (force, torque, vibration, and temperature) with the actual wear condition of drill bits [22,23]. These predictive frameworks, when combined with robotic systems, have been shown to reduce intraoperative risks, improve hole quality, and optimize tool replacement cycles, especially in constrained surgical environments where visual inspection is not feasible. Agarwal et al. [24] demonstrated that machine learning can effectively predict temperature elevation in conventional and rotary ultrasonic bone drilling. Extensive reviews of the literature on tool wear monitoring using AI [25] and big data [26] indicate increasing research interest in, and the possible effectiveness of, these modern techniques for tool condition monitoring. Because so many ML models are available, identifying the most suitable one is itself a challenge, and ensemble models that combine the results from multiple models are often employed. Bustillo et al. [27] employed one such technique for the process optimization of friction drilling. Alajmi and Almeshal [28], using copper and cast-iron datasets, observed that the extreme gradient boosting algorithm with hyperparameter optimization predicts tool wear better than support vector machines and multilayer perceptrons, which are otherwise more popular. Our work analyzes the performance of 47 different models, including a number of ensemble models, and shortlists the best few for real-time tool condition monitoring in bone drilling.

Despite these advances, the literature focused specifically on orthopedic surgical tool wear monitoring using real-time data acquisition for robotic surgery with sensor-integrated drilling tools is limited. This gap has motivated us to develop an end-effector capable of intelligently monitoring tool condition during bone drilling and automatically controlling the drilling process to maintain consistent performance and prevent complications.

This paper is organised as follows: Section 2 describes the experimental setup of the end-effector for robotic drilling for surgical applications. Section 3 discusses the signal processing on the multisensor data and the feature extraction from the sensory data for the purpose of machine learning. Sections 4, 5, 6, 7 and 8 discuss the model training and hyperparameter tuning framework. Results are discussed in Section 9. Conclusions, along with the scope and limitations of the current study, are summarized in Section 10.

2. Experimental Setup

We have developed a sensor-integrated smart end-effector, specifically designed for precision bone drilling in robotic-assisted orthopedic surgery by a collaborative robot arm. The end-effector is engineered to meet the following objectives:

Drill precisely aligned holes in cortical and cancellous bone structures, with minimal human intervention.

Monitor drilling conditions in real-time, including force, torque, temperature, and vibration.

Ensure thermal safety and mechanical precision, especially during high-speed operations or drilling in dense bone regions.

The experimental drilling setup consists of the collaborative robotic arm (Model UR5e from Universal Robots Inc., Odense, Denmark [29]) integrated with the end-effector developed by the authors for precision bone drilling. The experimental setup of the UR5e robot with the end-effector for robotic drilling is shown schematically in Figure 1, with wrist force sensors located on the robot arm providing force–torque data. The end-effector (shown in enlarged view) has provisions for optional external sensors (for vision and obstacle avoidance). Vibration data are acquired from the sensors located on the bone to be drilled.

The objective of the experiment is to perform robotic drilling on the bone sample. The data are collected when drilling multiple holes with a depth of 3 mm while monitoring real-time force data and ensuring precise motor control.

2.1. Drilling Controller and Sensor Data Acquisition

The drilling controller is interfaced with the UR5e Robot Controller and acts as an interface for programmed actuation of the drilling tool. The configuration of the drilling controller is shown in Figure 2. The controller and sensors are integrated with the UR5e robot controller using the protocol prescribed by the UR5e robot manufacturer. This facilitates automatic control of the drilling processes and real-time data acquisition.

The drill bit is rotated by the DC motor, which may be controlled automatically from the robot controller. The motor driver that delivers the required power input to the motor supports a broad input voltage range of 5 V to 35 V. The motor driver is interfaced with the UR5e robot controller through a microcontroller board. The DC-DC converter ensures a safe level of the input voltage to the motor driver and the microcontroller board. A custom-made joystick interface is also provided for manual positioning of the tool by jogging. The Left/Right input to the joystick moves the end-effector along the X-axis, while the Up/Down input results in vertical motion of the end-effector along the Z-axis. This helps in manually positioning or retracting the drilling tool, if required.

The force data during the entire drilling cycle were captured using the UR5e’s built-in six-component wrist force sensor. The data acquisition was performed using the RTDE (Real-Time Data Exchange) interface [30]. The axial drilling force ($F_z$), the lateral forces ($F_x$ and $F_y$), and the torque values ($T_x$, $T_y$, $T_z$) are acquired. The RTDE interface is configured for data acquisition at a sampling frequency of 125 Hz, enabling real-time monitoring and analysis of the drilling behavior and the forces involved in the tool–bone interaction, and the evaluation of tool wear indicators such as increasing force or torque during repeated cycles.

The force–torque data from the robot arm, drilling parameters from the drilling controller, and the temperature and vibration data from the bone being drilled are collectively acquired by the master controller. The master controller is a laptop running RTDE. Temperature and vibration sensors are located on the bone being drilled, and the data from these sensors are acquired directly by the laptop through a microcontroller interface.

2.2. Robot Motion and Drilling Sequence

Using the combination of the joystick and programmed monitoring by the infrared depth sensor, the robot is moved to a predefined starting position so that the distance between the tip of the tool and the bone surface is exactly 2 mm. This “ready” position allows safe tool engagement before spindle activation.

Once the UR5e robot positions the drill tip 2 mm away from the bone surface, the drill motor is activated. After contact with the bone, the robot advances the drill 3 mm along the tool axis, resulting in an actual drilled hole depth of 3 mm, as shown in Figure 3. A hole depth of 3 mm was selected to simulate realistic cortical bone drilling in surgical applications.

Upon reaching the target depth, the robot retracts to the starting point (2 mm above the bone target) and the motor is turned OFF to complete the drilling cycle.

Titanium nitride-coated HSS drill bits from the Bosch Titanium drill bit set were used for the experiments. Ten holes each were drilled using drill bits of diameters 2 mm and 3 mm, with chisel-edge drill geometry, a point angle of 135°, and a helix angle of 30°. The cutting parameters were spindle speeds of 1000 rpm and 1500 rpm and feed rates of 30 mm/min and 50 mm/min. No coolant was used. The objective of the study was the real-time condition monitoring of a given tool under allowable drilling conditions. The dataset used for the machine learning methodology consisted solely of the sensory data resulting from the above experiments.
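As a rough worked example of the data volume implied by these parameters, the sketch below estimates the cutting time and the number of samples per hole at the stated 125 Hz acquisition rate. It assumes a constant feed over the 3 mm depth and ignores the 2 mm approach and the retraction; the function name and this simplification are illustrative assumptions, not part of the experimental software.

```python
SAMPLING_HZ = 125      # acquisition rate stated in the text
HOLE_DEPTH_MM = 3.0    # programmed hole depth stated in the text


def samples_per_hole(feed_mm_per_min, depth_mm=HOLE_DEPTH_MM, rate_hz=SAMPLING_HZ):
    """Return (cutting time in seconds, number of samples) for one hole.

    Assumes a constant feed rate while cutting (illustrative simplification).
    """
    feed_mm_per_s = feed_mm_per_min / 60.0
    cutting_time_s = depth_mm / feed_mm_per_s
    return cutting_time_s, round(cutting_time_s * rate_hz)


for feed in (30, 50):
    time_s, n_samples = samples_per_hole(feed)
    print(f"feed {feed} mm/min: {time_s:.1f} s of cutting, about {n_samples} samples")
```

Under these assumptions a hole yields roughly 750 raw samples per channel at 30 mm/min and roughly 450 at 50 mm/min, before any aggregation.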

3. Machine Learning Methodology

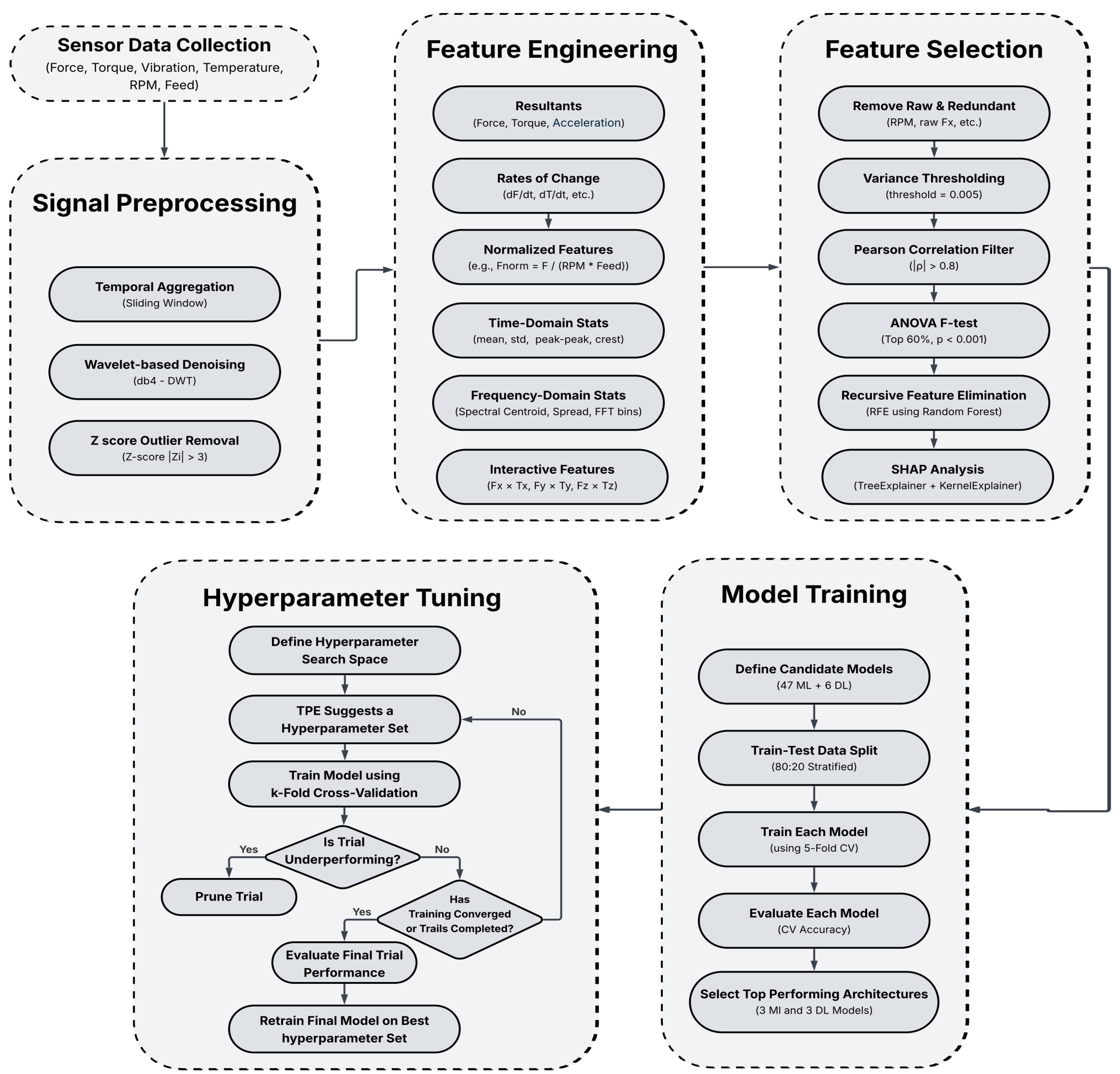

As the data from the sensors were collected at a sampling frequency of 125 Hz (every 8 ms), a large amount of raw data is created. Tool condition monitoring (TCM) relies on analyzing sensor signals such as force, vibration, torque, and thermal data to detect early signs of tool wear. Raw sensor data often contain high-frequency noise, making it difficult to establish clear links between the signals and the wear state of the tool. We therefore adopt a sequence of processes involving signal pre-processing, feature extraction, and feature selection, as shown in Figure 4, which is useful in training the ML model to classify tool wear.

In Signal Preprocessing, raw sensory data from different sensors are temporally aggregated over a period of time or a number of samples. Noise caused by external factors is removed from the sensor data using denoising techniques. The resulting data pass through outlier removal to make the sensor data more stable and reliable [31]. The Feature Engineering process derives additional features such as the resultant of the force vector, the rate of change of force, basic statistics, and time-domain and frequency-domain values of raw sensor data [32].
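To make the engineered features concrete, the following minimal sketch computes the resultant force, its rate of change, and a few basic statistics from three force channels. The function names, the backward-difference scheme, and the particular statistics are assumptions chosen for illustration; only the 8 ms sampling interval comes from the text.

```python
import math

DT_S = 1.0 / 125.0  # sampling interval (8 ms) implied by the 125 Hz rate


def resultant_force(fx, fy, fz):
    """Magnitude of the force vector at each time step."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(fx, fy, fz)]


def rate_of_change(values, dt=DT_S):
    """Backward finite difference; the first sample has no predecessor, so it gets 0.0."""
    if not values:
        return []
    rates = [0.0]
    for prev, cur in zip(values, values[1:]):
        rates.append((cur - prev) / dt)
    return rates


def basic_stats(values):
    """Mean, population standard deviation, extrema, and RMS of a window."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    rms = math.sqrt(sum(v * v for v in values) / n)
    return {"mean": mean, "std": math.sqrt(variance),
            "min": min(values), "max": max(values), "rms": rms}


# Two made-up samples, for illustration only.
force_mag = resultant_force([0.0, 3.0], [0.0, 4.0], [0.0, 12.0])
force_rate = rate_of_change(force_mag)
```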

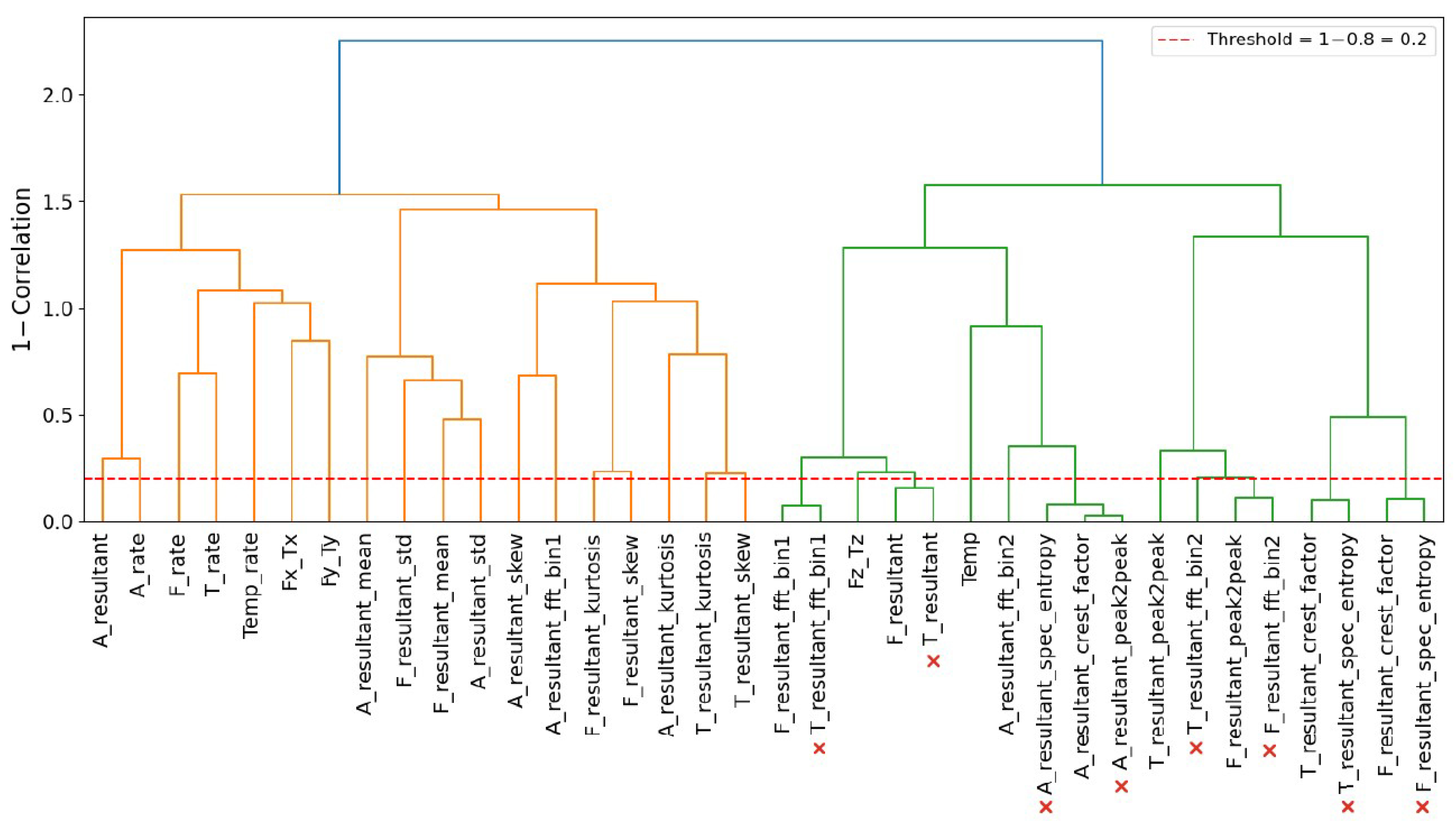

Finally, Feature Selection is employed to filter out the unnecessary features [33].

To select the best model for TCM, we consider supervised learning algorithms based on both classical ML models and deep learning (DL) architectures. Three top-performing models with diverse architectures are selected from each of these two categories [23]. This approach ensures that the models show different improvements from optimization techniques like Bayesian and Hyperband optimization, implemented using Optuna [34], rather than having a single architecture outperform the others [35].

This multi-step approach with signal preprocessing, feature engineering, feature selection, and model selection and optimization ensures good model performance and reliable tool wear classification by cleaning the data, selecting important features, training models with diverse architecture, and optimizing them with techniques like Bayesian and hyperband optimization.

4. Data Preprocessing

Data preprocessing is a necessary step before models can be trained on the data collected from sensors, especially in TCM. Due to noise and other external factors in the signals collected during the experiment, it is challenging to establish a clear relationship between the raw signals and the tool wear state. Data preprocessing generally includes cleaning and transforming the raw sensor data to reduce noise and removing outliers to improve the performance of the trained model [31], as well as making sure the trained model does not overfit.

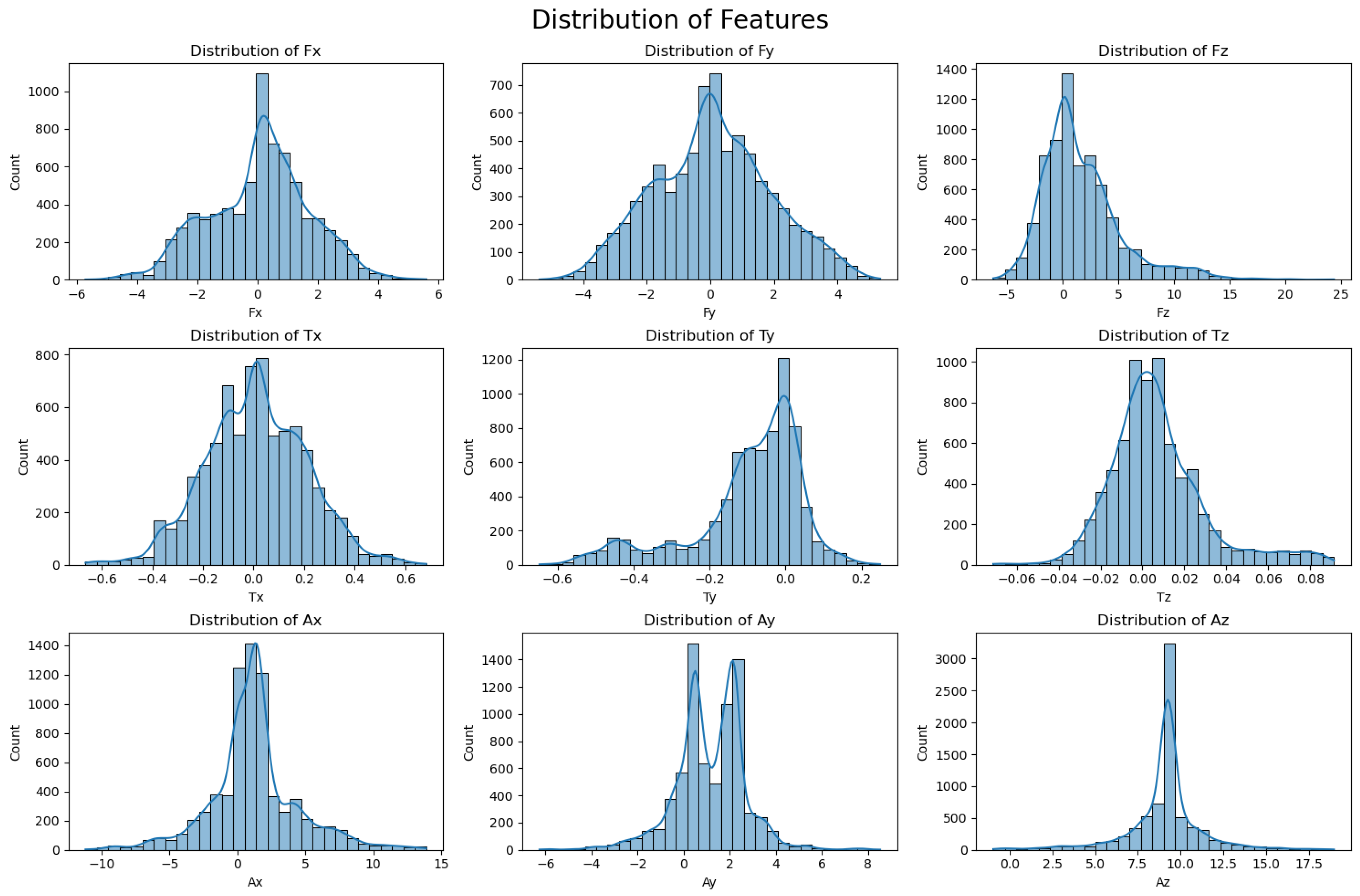

Before data preprocessing, we analyzed the distribution of raw features. Figure 5 shows the typical distribution of force ($F_x$, $F_y$, $F_z$), torque ($T_x$, $T_y$, $T_z$), and acceleration ($a_x$, $a_y$, $a_z$) features. In order to facilitate subsequent analysis such as outlier detection, the dimensionless Z-scores of the raw features, rather than their actual values, are used for the plots in Figure 5. The Z-score of a raw data value $x$ with mean $\mu$ and standard deviation $\sigma$ is defined as $z = (x - \mu)/\sigma$.

$F_x$ and $F_y$ are symmetric and centered near zero, indicating balanced lateral forces, while $F_z$ is right-skewed with high outliers due to vertical tool contact. Torque features show irregular patterns; one torque component is clearly multimodal, pointing to variable cutting dynamics. Two of the acceleration components display bimodal behavior, and the third peaks around 9.81 m/s² due to gravity, with added vibration. The non-Gaussian, skewed, and multimodal nature of these distributions highlights the need for robust preprocessing and careful feature selection.

4.1. Temporal Aggregation

In this study, the raw sensory data is subjected to temporal aggregation, which is a common signal smoothing and dimensionality reduction technique used in time-series signal processing. Temporal aggregation reduces high-frequency noise but retains hidden patterns or trends, preventing overfitting of trained models due to high noise and correlated consecutive samples. Temporal aggregation was applied on a sliding window of 5 consecutive data samples for the data from all sensors.
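A minimal sketch of such a sliding-window aggregation is given below. The text specifies only the window length of 5 samples; the use of the mean as the aggregate and the `step` parameter (1 for overlapping windows, 5 for non-overlapping blocks) are assumptions made for illustration.

```python
def temporal_aggregate(samples, window=5, step=1):
    """Average each run of `window` consecutive samples.

    The mean and the configurable step are illustrative assumptions.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    aggregated = []
    for start in range(0, len(samples) - window + 1, step):
        chunk = samples[start:start + window]
        aggregated.append(sum(chunk) / window)
    return aggregated


# Overlapping windows keep nearly the original length ...
smoothed = temporal_aggregate([1, 2, 3, 4, 5, 6])
# ... while non-overlapping blocks reduce the number of rows five-fold.
downsampled = temporal_aggregate(list(range(1, 11)), window=5, step=5)
```

With non-overlapping blocks, consecutive output rows no longer share raw samples, which is one way to reduce the correlation between neighbouring rows mentioned above.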

4.2. Denoising

Next, we applied denoising techniques to the temporally aggregated dataset. We used wavelet-based denoising with the Daubechies-4 (db4) wavelet, chosen for its excellent time–frequency localization for nonstationary mechanical signals; it was applied to all force, torque, three-axis acceleration, and temperature sensor data. The denoising process follows the discrete wavelet transform (DWT) scheme in which the input signal is decomposed, thresholded, and reconstructed: soft thresholding is applied to the detail coefficients at each level, with the noise variance estimated using the mean absolute deviation.

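The DWT coefficients are obtained by projecting the signal $x[n]$ onto scaled and shifted wavelet bases $\psi_{j,k}$:

$$d_{j,k} = \sum_{n} x[n]\,\psi_{j,k}[n], \qquad \psi_{j,k}[n] = 2^{-j/2}\,\psi\left(2^{-j}n - k\right)$$

To remove noise, soft thresholding is applied to the detail coefficients $d_{j,k}$:

$$\hat{d}_{j,k} = \operatorname{sign}(d_{j,k})\,\max\left(|d_{j,k}| - \lambda_j,\ 0\right)$$

where $\lambda_j$ is a scale-adaptive threshold chosen via universal thresholding:

$$\lambda_j = \sigma_j \sqrt{2 \ln N}$$

where $N$ is the signal length and the variance $\sigma_j^2$ estimates noise using the mean absolute deviation.

The denoised signal is reconstructed via the inverse DWT:

$$\hat{x}[n] = \sum_{k} a_{J,k}\,\phi_{J,k}[n] + \sum_{j=1}^{J}\sum_{k} \hat{d}_{j,k}\,\psi_{j,k}[n]$$

where $a_{J,k}$ are the approximation coefficients at the coarsest level $J$ and $\phi_{J,k}$ are the corresponding scaling functions.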
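The thresholding step described in this section can be sketched in a few lines of plain Python. The sketch covers only the noise estimate, the universal threshold, and soft thresholding of one level of detail coefficients; the db4 decomposition and reconstruction are not shown and would typically be delegated to a wavelet library such as PyWavelets. The rescaling of the mean absolute deviation by sqrt(pi/2), which makes it an estimate of the standard deviation for zero-mean Gaussian noise, is an assumption, as are all function names.

```python
import math


def estimate_sigma(detail_coeffs):
    """Noise level from the mean absolute deviation of detail coefficients.

    For Gaussian noise the mean absolute deviation equals sigma * sqrt(2/pi),
    so it is rescaled by sqrt(pi/2). This scaling is an assumption.
    """
    n = len(detail_coeffs)
    if n == 0:
        raise ValueError("need at least one coefficient")
    mean = sum(detail_coeffs) / n
    mean_abs_dev = sum(abs(d - mean) for d in detail_coeffs) / n
    return mean_abs_dev * math.sqrt(math.pi / 2.0)


def universal_threshold(sigma, signal_length):
    """Universal threshold: sigma * sqrt(2 ln N)."""
    return sigma * math.sqrt(2.0 * math.log(signal_length))


def soft_threshold(coeffs, lam):
    """Shrink each coefficient toward zero by lam; small ones become zero."""
    return [math.copysign(max(abs(c) - lam, 0.0), c) for c in coeffs]


# Made-up detail coefficients for one decomposition level.
details = [3.0, -0.5, -2.0]
shrunk = soft_threshold(details, 1.0)
```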

4.3. Outlier Removal

Finally, we removed the outliers from the datasets using Z-score outlier removal, in which points whose absolute Z-score exceeds the cutoff shown in Figure 5 are removed to avoid a skewed distribution and misrepresentative features that are due to events like tool crashes or sudden material changes [33]. The removal of outliers prevents classifier models from being biased toward extreme but misrepresentative patterns from sensor failure or machine glitches.
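A minimal sketch of Z-score outlier removal on one feature column follows. The cutoff of 3.0 is a commonly used default and is an assumption here, as are the use of the population standard deviation and the row layout (a list of dictionaries).

```python
import statistics


def zscores(values):
    """Z-score of each value relative to the column mean and population std."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)
    return [(v - mu) / sigma for v in values]


def remove_outliers(rows, column, threshold=3.0):
    """Keep rows whose |z| for `column` does not exceed `threshold`.

    threshold=3.0 is an assumed default, not a value taken from the study.
    """
    z = zscores([row[column] for row in rows])
    return [row for row, zi in zip(rows, z) if abs(zi) <= threshold]


# Twenty ordinary readings plus one spike (made-up values).
rows = [{"fz": 1.0} for _ in range(20)] + [{"fz": 100.0}]
cleaned = remove_outliers(rows, "fz")
```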

7. Model Training Framework

A robust and reproducible model training framework is important to ensure a fair and accurate comparison of algorithm performance. We therefore designed our dual-pipeline architecture to evaluate a diverse range of supervised learning algorithms, including both classical ML models and DL architectures [23].

The drilling experiment was conducted on an actual bone sample used in medical education. The amount of drilling data that may be acquired is limited as the workpiece in this case is a rare commodity. Unlike in industrial applications where the drilling process may last from a few minutes to even an hour, the bone drilling lasts for a few seconds. The size of the data that may be collected and the assessment of tool wear after every drill cycle pose challenges.

As the sensory data are collected every 8 ms (125 Hz) from various sensors and 20 holes were drilled, a good amount of sensory data is available. However, these data are unlabeled, as tool-wear labeling of the data for every time step (8 ms) is a difficult task. Tool force is known to be an important contributor to tool wear. The perception of unusually large drilling force provides a cue on the tool wear in manual surgeries, too [15]. In view of this, it was decided to label the data based on the magnitude of the force vector sensed by the wrist force sensor. The maximum and minimum values of the force magnitude were identified, and the range was divided into three categories based on the force magnitude: 0 (slight), 1 (moderate), and 2 (severe). This provides us with a reasonable dataset to be used with ML algorithms requiring a labeled dataset.
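The labeling rule can be illustrated with the short sketch below, which splits the observed force-magnitude range into three bins. The text does not state where the category boundaries lie, so the equal-width bins used here are an assumption, as is the function name.

```python
def label_by_force(magnitudes, n_classes=3):
    """Assign 0 (slight), 1 (moderate), or 2 (severe) by force magnitude.

    Equal-width bins between the minimum and maximum are an assumption.
    """
    lo, hi = min(magnitudes), max(magnitudes)
    if hi == lo:
        return [0] * len(magnitudes)
    width = (hi - lo) / n_classes
    labels = []
    for m in magnitudes:
        index = int((m - lo) / width)
        labels.append(min(index, n_classes - 1))  # the maximum falls in the top bin
    return labels


# Made-up force magnitudes in newtons.
labels = label_by_force([0.0, 1.0, 2.0, 3.0, 4.5, 6.0])
```

Because the bins depend on the global minimum and maximum, a single extreme reading shifts every boundary, which is one reason outlier removal precedes this step in the pipeline.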

The dataset was randomized and an 80:20 train–test split that preserves the class distribution of the three tool wear categories was adopted. Multiple models (47 ML models and 6 DL models) were studied to select 3 models from each category, as described below.
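A class-preserving 80:20 split can be sketched without external libraries as follows; scikit-learn's `train_test_split` with its `stratify` argument offers equivalent behaviour. The seed value and function name are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_indices, test_indices) preserving class proportions."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed (assumed) for reproducibility
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])

    rng.shuffle(train)
    rng.shuffle(test)
    return train, test


# Made-up, imbalanced label vector for illustration.
labels = [0] * 50 + [1] * 30 + [2] * 20
train_idx, test_idx = stratified_split(labels)
```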

7.1. Machine Learning Models

The following two-stage validation strategy was adopted for evaluating the various supervised ML classification models:

Each model is automatically wrapped in meta-classifiers such as One-vs-Rest or One-vs-One if native multiclass support is absent. Ensemble models including Voting Classifier and Stacking Classifier are built using diverse base learners to test the benefits of algorithmic heterogeneity [57]. Models with convergence issues (e.g., NuSVC) are supplied with adjusted parameters or dropped if unstable.

After training the 47 ML models and ranking their accuracy, the results obtained for the 15 most accurate models are shown in Figure 9a, from which, with the criteria of diverse architecture and good accuracy, the following 3 models are chosen for further optimization:

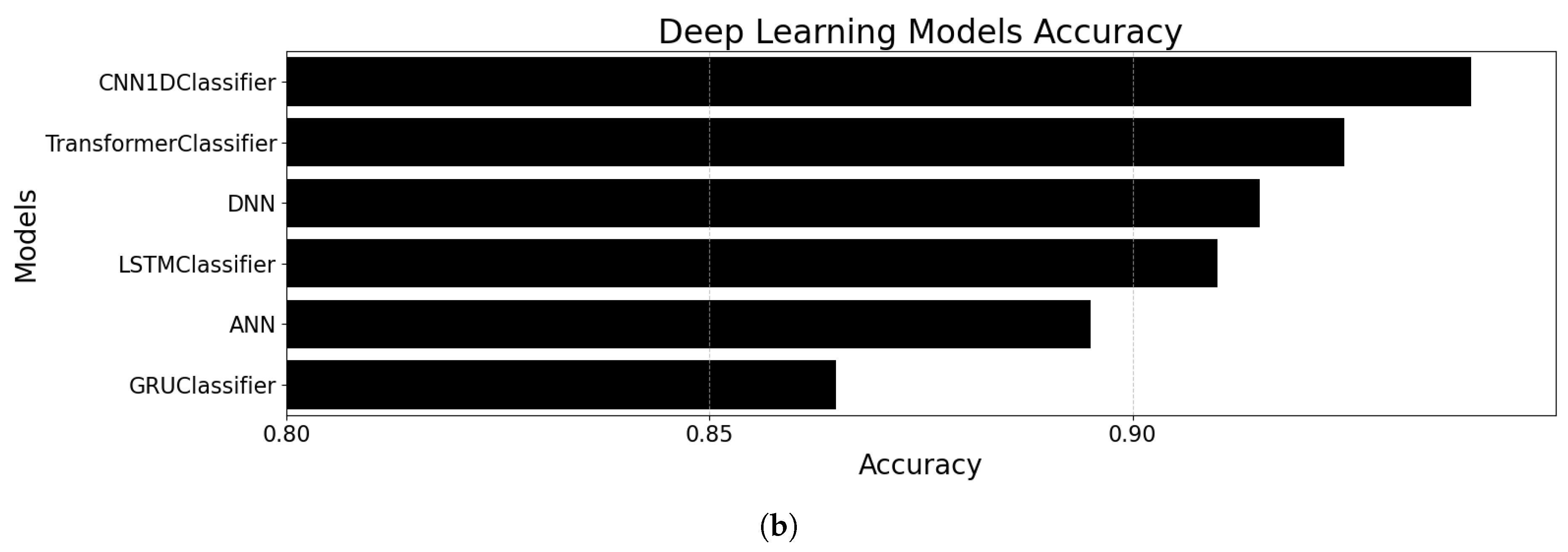

7.2. Deep Learning Models

Six custom-designed DL architectures were implemented using the PyTorch framework (Version 2.6.0). The models were trained with learning rates from

to

and batch sizes of 32 to 128 over a maximum of 100 epochs, employing early stopping to prevent overfitting [

58].
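The early-stopping logic can be sketched independently of any DL framework. The patience of 3 epochs and the validation losses below are made up for illustration; only the cap of 100 epochs comes from the text.

```python
class EarlyStopping:
    """Stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


MAX_EPOCHS = 100  # upper bound stated in the text
val_losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.63, 0.64]  # hypothetical values

stopper = EarlyStopping(patience=3)  # patience is an assumed setting
stopped_at = None
for epoch, loss in zip(range(MAX_EPOCHS), val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
```

In a real training loop the model weights from `best_epoch` would normally be restored after stopping.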

After training and ranking based on the criteria of diverse architecture and high accuracy, as shown in Figure 9b, the following three DL models were chosen:

CNN 1D Classifier

Transformer Classifier

DNN Classifier

7.3. Hyperparameters During the Training

Hyperparameters are variables that are set before training a DL model and remain constant during the training process; they determine the change from the current iteration to the next iteration. They are not learned from the data, but they significantly impact the model’s performance.

The key hyperparameters used were the learning rate of

, the batch size of 16, and the gradient clipping of 1.0. Regularization techniques such as dropout (0.3–0.5), L2 weight decay, and batch normalization were used for better generalization [

59], with ReLU activations for hidden layers and SoftMax for output.
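Two of the ingredients named above, gradient clipping at 1.0 and a SoftMax output, are easy to state in plain Python. The text does not say whether clipping is by norm or by value; the sketch assumes clipping by global L2 norm, which is what PyTorch's `torch.nn.utils.clip_grad_norm_` does. Function names are illustrative.

```python
import math


def clip_gradients(grads, max_norm=1.0):
    """Rescale the gradient vector so its L2 norm does not exceed max_norm.

    Norm-based clipping is an assumption; value-based clipping is also common.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)
    scale = max_norm / total_norm
    return [g * scale for g in grads]


def softmax(logits):
    """Numerically stable SoftMax over one vector of class scores."""
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


clipped = clip_gradients([3.0, 4.0])        # norm 5.0 is scaled down to 1.0
class_probs = softmax([2.0, 1.0, 0.0])      # scores for the three wear classes
```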

The architecture and the optimizer used for different DL models are listed in Table 6.

The choice of hyperparameters is decided through the hyperparameter tuning process discussed in Section 8. The hyperparameters for all three DL models were obtained using a search space tailored to each hyperparameter and architecture [60]. The results are shown in Figure 10.

9. Results and Discussion

The main scope and contribution of this paper are the following:

Identification of the most relevant features from the multi-sensor data;

Identification of the most suitable ML and DL models for analysing the data;

Training the models using the most optimal hyperparameters;

The assessment of the prediction accuracy of the models.

The results for the first two items were presented in Section 6, Section 7 and Section 8. The results of the latter two items are discussed here, and the relevance of our work is compared with earlier attempts reported in the literature.

The hyperparameters for each model were optimized individually over 25 trials per model, and the hyperparameters to be optimized were selected individually depending on their relevance to the model architecture and their effect on model performance [63]. The hyperparameter search space was carefully defined using domain knowledge and the observed results to include critical parameters such as the tree depth, the number of estimators, the learning rates, the regularization strength, and the splitting criteria. After applying this hybrid approach, we identified the best performing hyperparameters of each classifier obtained from the Optuna study [34].

Among the classical ML models, the Extra Trees Classifier resulted in the best prediction accuracy of 96.33% for TCM on the test set, while CNN 1D Classifier was the best among the DL models, with a prediction accuracy of 95.67%. The search space and the optimal value of the hyperparameters obtained from hyperparameter tuning for both these models are given in Table 7, and the classification reports for these two models are given in Table 8. The CNN model’s optimization process involved tuning both architectural and training-related parameters. The hyperparameter search space was carefully bounded to enhance convergence and reduce overfitting.

The confusion matrices for the Extra Trees Classifier and the CNN 1D Classifier obtained after training with the tuned hyperparameters are shown in Figure 11, where the distribution of correctly and incorrectly classified instances across all classes of TCM may be noted.
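For readers who wish to relate a confusion matrix to the per-class figures in a classification report, the sketch below derives precision, recall, F1, and overall accuracy from a three-class matrix. The counts in `example_cm` are made up purely for illustration and are not the results of this study; the function name is likewise hypothetical.

```python
def classification_metrics(cm):
    """Per-class precision/recall/F1 and overall accuracy.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    report = {}
    for k in range(n):
        true_pos = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum (support)
        precision = true_pos / predicted_k if predicted_k else 0.0
        recall = true_pos / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[k] = {"precision": precision, "recall": recall,
                     "f1": f1, "support": actual_k}
    accuracy = sum(cm[k][k] for k in range(n)) / total if total else 0.0
    return report, accuracy


# Hypothetical counts for classes 0 (slight), 1 (moderate), 2 (severe).
example_cm = [
    [95, 5, 0],
    [4, 92, 4],
    [0, 3, 97],
]
report, accuracy = classification_metrics(example_cm)
```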

The results of this study show that combining a proper data preprocessing pipeline with well-selected features and comparing different model types can help detect tool wear during robotic bone drilling quite accurately. It may be further inferred that both traditional ML and DL models can perform well if used with the right data and approach.

Previous work using 1D CNNs also had good success in predicting tool wear from vibration and force signals. For example, models that combine CNN with temporal or frequency-domain processing have been shown to work well in noisy environments such as drilling [14,15,64]. But in most of those studies, the focus was mainly on raw signal inputs or just one signal type. Our approach, on the other hand, mixes different kinds of features, such as interaction-based and normalized values, making the model generalize better across samples.

Classical ML models are also useful for faster decision-making and identification of the most relevant features. Past research works have used such models for the prediction of bone drilling temperature or wear stages from vibration signals [20,65], but without full feature engineering and selection processes like ours. By adding a multi-stage methodology (ANOVA, RFE, SHAP), we are able to improve performance and reduce noise in predictions.

Overall, while earlier research focused on single models or features, our work stands out by combining engineered signals, strong model selection, and a clear validation process. This not only improves accuracy but also makes the system more reliable for real-time use in surgical settings.

10. Conclusions

In this study, we proposed a complete and real-time framework for Tool Condition Monitoring (TCM) in robotic bone drilling applications. Unlike traditional methods which mostly rely on limited models or single feature types, our approach combines signal preprocessing, domain-based feature engineering, multi-stage feature selection, and comparative model training across different supervised learning algorithms including both classical machine learning and deep learning models.

Using a UR5e robotic arm setup with a custom drilling end-effector, we collected multi-sensor data and performed preprocessing steps such as temporal aggregation, wavelet denoising, and outlier removal to make the raw signals more stable and meaningful. Feature engineering was done with a focus on resultant values, time-domain statistics, frequency-domain characteristics, and interaction-based signals which reflect the physical behaviour of the drilling process. Then, feature selection methods including ANOVA F-test, RFE, and SHAP were applied to pick the most important and relevant features which improved both model performance and interpretability.

After training and tuning a wide variety of models, Extra Trees Classifier showed the best performance among classical models, with a test accuracy of 96.33%, and CNN 1D Classifier was the best deep learning model, with a test accuracy of 95.33%. These results indicate that a proper pipeline which uses data cleaning, smart feature extraction, and architecture-level diversity can lead to high accuracy and reliable predictions.

This framework can be very useful in real surgical setups where bone drilling is done in orthopaedic surgery or neurosurgery. Although periodic drill bit replacement and regular machine maintenance are already part of standard protocol, unexpected problems can still occur, such as drill cracking, sudden breakage, or excessive heating due to local bone density differences or long operation times. Our model helps to detect these unexpected tool failures by analysing sensor signals in real time, which can reduce the risk of tool breakage during operation and help avoid surgical errors.

This system can act like a second layer of safety by giving alerts when the tool condition starts to degrade, even if it is still under standard maintenance limits. This not only improves surgical safety but also helps in reducing unnecessary downtime and manual inspections.

Future Scope

This work is part of ongoing research on the development of end-effectors for robotic drilling. We are currently implementing real-time temperature sensing on the bone by locating sensors close to the drilling location. Currently, the IMU sensors that measure the vibration are located on the bone, which may not be possible in the case of a real patient. However, data may be collected by locating vibration sensors on the body of the patient or on a cadaver for further validation. TCM could be used in other surgical settings such as dental drilling, neurosurgery, and veterinary surgeries. Future work will consider contamination aspects and potential bone damage mechanisms, which are beyond the current focus on tool condition monitoring.