1. Introduction

Nowadays, robotic support is an integral part of industrial production processes [1]. Especially in the area of mass production, robots have become standard and are increasingly spreading to medium-sized companies. According to [2], it is very likely that robots of various kinds will soon be used beyond their traditional industrial settings and, to an increasing extent, in private households and other work environments. This is primarily due to the rapid advancement of technology and the new possibilities it offers.

As production environments become increasingly flexible, mobile, and versatile, assistant and transport robots are becoming essential [3]. In such applications, robots are no longer completely separated from humans but work together with them, at least to some extent. A number of cooperative or collaborative robots have been available on the market for a while and can be used in a wide variety of areas [4]. A common motivation for introducing cooperation between humans and robots is to reduce monotony and to relieve humans of physically demanding tasks that impair ergonomics.

A basic distinction can be made between different forms of human–robot interaction (HRI) [5,6]. The literature classifies these forms in a hierarchical structure, which varies in detail depending on the author. Onnasch et al. divide interaction with robots into three classes: coexistence, cooperation, and collaboration [5]. Coexistence does not involve a common work goal between humans and robots, whereas cooperation does: although there is no direct dependency between the tasks of humans and robots, they work on a common, overarching task. Collaboration differs from cooperation in that humans and robots jointly work on a subtask. In this case, particular attention must be paid to the coordination of both interaction partners. Bender et al. include not only the joint task in the classification but also the respective workspaces of humans and robots [6].

Robots that can work collaboratively are called cobots [7]. Cobots are, above all, characterized by the fact that they achieve a significantly higher level of safety despite direct interaction with humans. This is enabled, for example, by using multiple compact robots instead of one large one. In addition, special sensor technology allows for more sensitive perception of the environment and, as a result, better collision detection. The standard [8] specifies safety aspects that are particularly important for collaborative robots. Weber et al. point out that employee qualifications are essential to ensure a sufficient level of safety [9].

In addition to safety, ergonomics also plays a central role in human–robot interaction [10]. The correct use of robot technologies can, under certain circumstances, not only improve cognitive ergonomics but also reduce the physical strain on humans. Strengthening trust in the robot and reducing negative emotions are also important for successful interaction [11,12].

When working directly with robots in a human–robot team, trust in the robot is crucial [13]. Especially in high-risk scenarios, trust directly affects how well people accept working with robots and how they act in different situations. For example, people who do not trust the robot are more likely to intervene in the robot’s automated process. In contrast, too much trust can also have a negative effect on cooperation and situation awareness because people may ignore the robot for a longer period of time. On the one hand, robot performance, size, type, and behavior appear to have a major influence on trust. On the other hand, environmental factors such as task- and team-related aspects also have an impact. Lewis et al. divide the relevant factors into system characteristics (reliability, errors, predictability, transparency, and degree of automation) and environmental characteristics (risk due to task or context) and additionally introduce the personal characteristics of the user(s) as a factor group [14]. The latter primarily includes a person’s general tendency to trust and the self-confidence of individuals.

According to [15], the acceptance of robots differs mainly in terms of the task to be performed and also in terms of the domain in which the application takes place. For example, [16] showed that for the healthcare sector, robots that only assist with physical tasks such as transportation are relatively widely accepted. In contrast, robots that work closely with patients, for instance, assisting them with eating, are significantly less accepted. Another factor influencing the acceptance of robots is personal experience [17]. If no personal experience has been gained with the specific robot to be worked with, negative attitudes toward it are more common [18].

In addition to acceptance and trust, people’s emotional reactions can also influence HRI. For instance, a study by [19] provides initial evidence regarding the influence of the robot’s movement speed on the emotional response of the user. Various distances between humans and robots were also investigated. The relevance of these factors is confirmed by the recommendations in the publication by [20], which suggests both a specific maximum speed of the robot and a minimum distance from it.

In summary, to make the steadily growing range of human–robot interactions pleasant, effective, and productive for humans, and to maximize the quality of the interaction, ways must be developed to reduce fears and increase acceptance and trust. Regarding the reduction of fear in human–robot interaction, various research works show the effectiveness of interventions and training [21,22]. Additionally, as the study by [23] shows, targeted training can also influence people’s trust in a system. By familiarizing participants with a collision warning system in a car, trust in the system was strengthened in the long term. In order to make training as effective and efficient as possible, technologies that were not used in classic training courses are becoming increasingly popular. One of these technologies is virtual reality (VR) [24]. The use of VR in training offers a number of advantages. First, participants are placed directly in the context of the situation to be trained. This enables them to participate far more actively in the scenario than in other forms of training. It also encourages experimenting with steps that would not have been tried in reality due to a perceived greater risk. The targeted use of gamification can also increase motivation. Another important factor is the possibility of collaboration over long distances, which enables shared experiences in groups. The ability to adapt virtual scenarios to individual users, for example, through different training speeds or learning methods, is another advantage. Various forms of training have already been implemented with the help of virtual realities. Examples include training and professional development for various career groups, including doctors [25]. VR training has also been used explicitly to reduce anxiety, as a study by [26] shows. Additionally, training using VR is already available in robotics. However, it is mainly used as a training platform to teach users how to operate the robot correctly [27].

As far as the authors know, no successful training programs exist that reduce anxiety and other negative emotions while increasing acceptance and trust in human–robot interaction. Furthermore, there seems to be a research gap regarding whether VR is suitable for this kind of training. In order to assess the usefulness of VR training, it should first be determined whether virtual interactions with robots have a similar effect on human reactions as real interactions do. In addition to subjective measures, objective measurement methods should ideally also be used to obtain a holistic picture of the processes taking place within the human body. Examples are cardiovascular and electrodermal activity, which can be measured non-invasively and mostly cannot be influenced intentionally [28]. In the past, measurements of cardiovascular and electrodermal activity were found to be suitable for assessing the relevance of various factors for human–robot interaction [19,20] as well as for a VR teaching tool [29].

The preceding paragraphs have demonstrated the need for training to reduce anxiety and other negative emotions and to increase acceptance of human–robot interaction. The aim of this study is to examine whether emotional reactions, acceptance, and trust in human–robot interaction differ between virtual scenarios and real interactions. This is intended to investigate the suitability of training in virtual realities for this specific use case. Of particular interest is whether, and to what extent, emotional and mental activation changes during a virtual interaction with a robot. This leads to the following two research questions:

Research question 1. Does human–robot interaction in a virtual environment lead to human reactions different from those observed in real-life interactions?

Research question 2. Are interactions with a robot in a virtual reality that is as realistic as possible subjectively perceived as different from interactions with a real robot?

Learning effects observed during repeated human–robot interactions are also of interest for assessing the suitability of VR training. Special attention should be paid to the differences from possible learning effects in real interaction. When virtual scenarios show fewer objective human reactions or a more positive subjective evaluation with an increasing number of repetitions, this may indicate that training in virtual reality is a good option. That leads to research question three:

Research question 3. Is there a difference in the learning effect of human–robot interaction between a virtual environment and a real interaction after multiple repetitions?

When designing VR training, one of the first questions that arises is to what extent the level of detail in the virtual representation of a scene plays a role in the implementation. To answer this question, the fourth research question was formulated.

Research question 4. Does the level of detail of the digital twin (e.g., modalities used) have an influence on objective human reactions and the subjective evaluation?

5. Conclusions

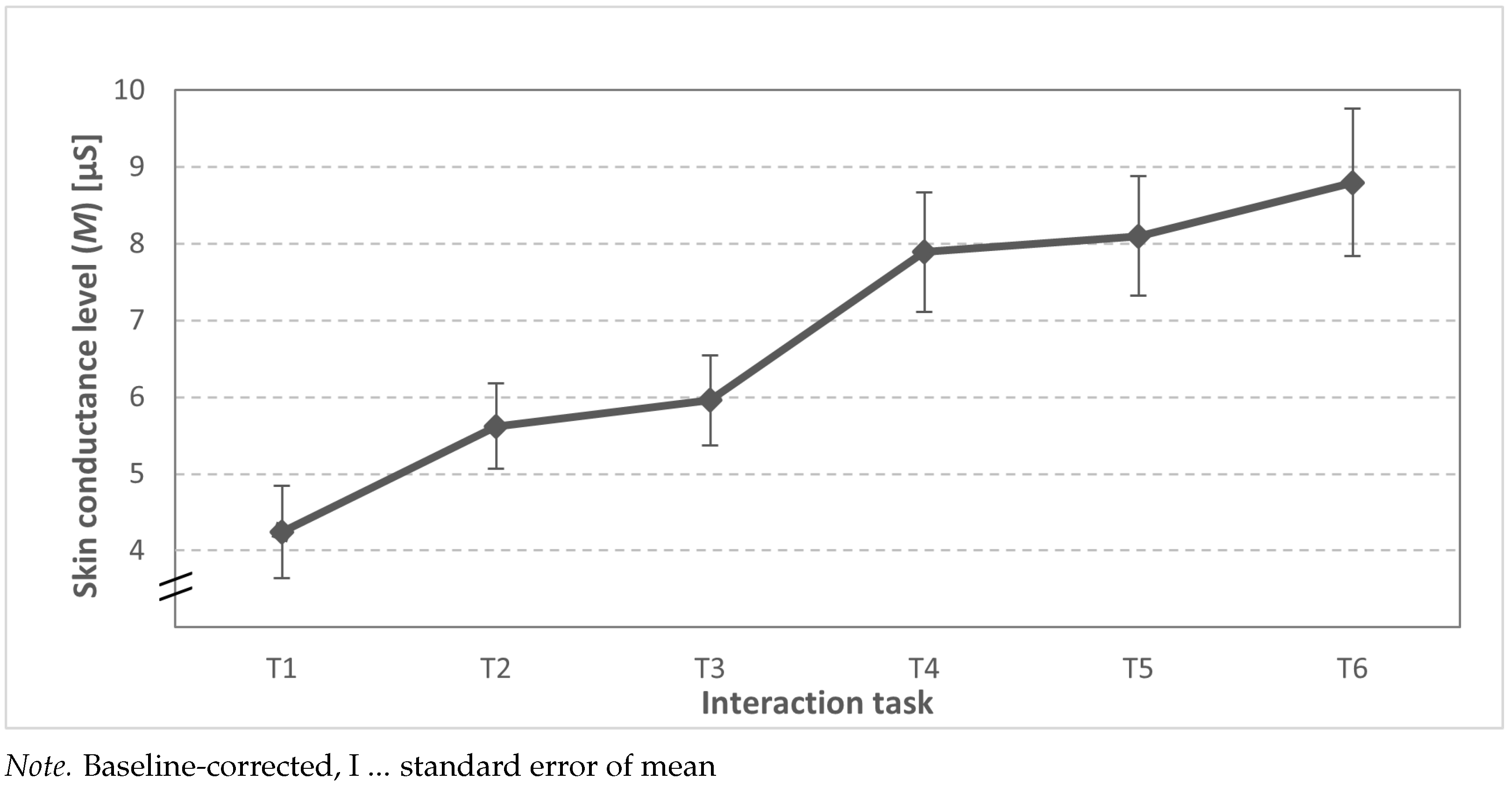

The presented study examined the suitability of virtual realities for implementing a training program to reduce and prevent fears and other negative emotions in human–robot interaction. Such VR training is intended to increase acceptance and trust. Four research questions were therefore formulated. The first finding relates to the similarities observed between human reactions when interacting with a robot in reality and in a virtual scenario. This was made possible by recording psychophysiological measures. This initial finding already suggests that the stress factors associated with human–robot interaction can be transferred to a virtual scenario. The results of a similar evaluation of subjective measures largely confirm this. However, some differences in acceptance were found, which may be due to the novelty of virtual interaction.

In addition, the question arose as to whether a learning effect could already be observed in this very rudimentary type of interaction. The results of this study did not show such a learning effect. Although isolated effects related to the iterations of the task did occur, these differences were, in most cases, inverse to the expected direction. Only the scale for the emotional state showed a more positive value in later interactions than in earlier ones; this was the only indication of a learning effect. Choosing a different interaction task with a higher training factor might reveal other effects, so future studies should investigate this aspect. However, the findings on learning effects have no bearing on the suitability of virtual realities, as the task was not designed to determine such an effect.

Furthermore, the question arose as to how varying levels of detail in the virtual environment influence participants’ reactions and subjective evaluations. No direct effects on psychophysiological measures could be found. Except for perceived dominance, the subjective measures also did not show any significant differences. However, the interview results show markedly different feedback from the participants. The results indicate that differences arising from methodological approaches, particularly regarding the modalities employed, may have obscured detectable variations, underscoring the need for further research in this area.