Artificial Intelligence Approaches for UAV Deconfliction: A Comparative Review and Framework Proposal †

Abstract

1. Introduction

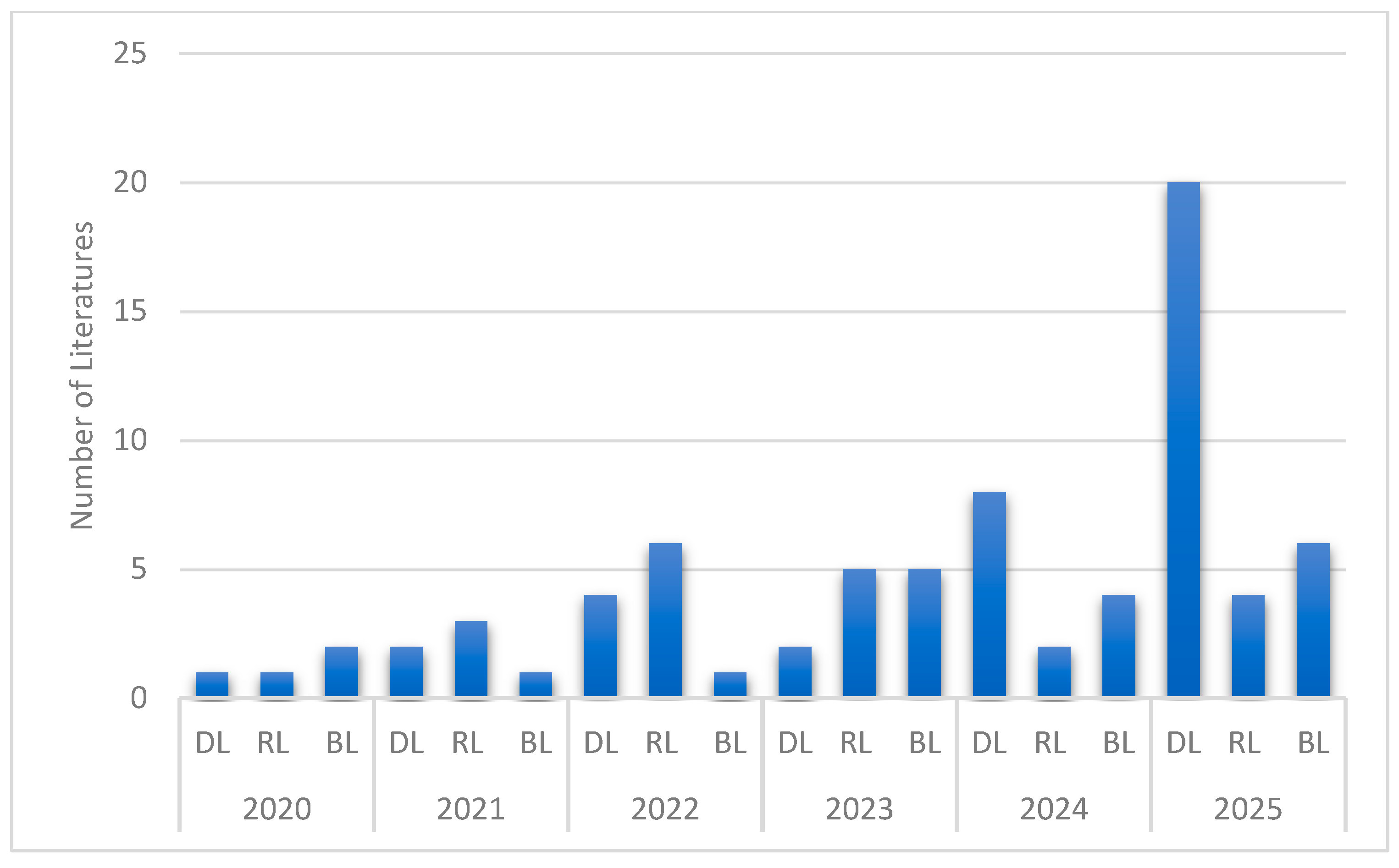

2. AI Algorithms for Drone Deconfliction

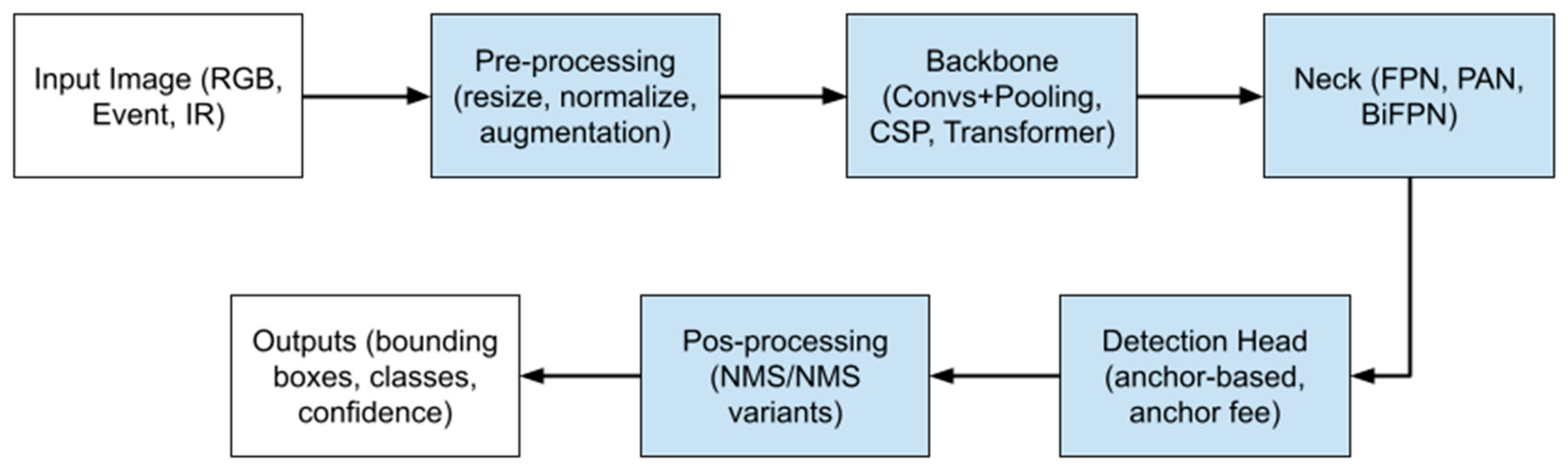

2.1. Deep Learning

2.1.1. Convolutional Neural Networks (CNNs) for Vision-Based Deconfliction

2.1.2. Recurrent Neural Networks (RNNs) and LSTMs for Trajectory Prediction

2.1.3. Transformer Neural Networks for Complex Interaction Modeling

2.1.4. Graph Neural Networks (GNNs) for Cooperative Conflict Resolution

2.1.5. The Emerging Role of Large Language Models (LLMs) in UAV Operations

2.2. Reinforcement Learning

2.2.1. Traditional Reinforcement Learning (RL) for Single-Agent Deconfliction

2.2.2. Multiagent Reinforcement Learning (MARL) for Cooperative Deconfliction

2.2.3. Deep Reinforcement Learning (DRL) for Advanced Deconfliction

2.3. Bio-Inspired Learning

2.3.1. Swarm Intelligence Algorithms for Collision Avoidance and Coordination

2.3.2. Evolutionary Algorithms for Adaptive Deconfliction

2.3.3. Neural-Inspired Algorithms: Spiking Neural Networks (SNNs)

3. Discussion

3.1. Key Findings

3.1.1. Deep Learning

3.1.2. Reinforcement Learning

3.1.3. Bio-Inspired Learning

3.2. Trade-Offs and Considerations

3.3. AI4HyDrop Approach to AI-Based Drone Deconfliction

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Comparative Tables for Deep Learning

| Ref. No. | Dataset | Performance Metrics | Application Constraints |
|---|---|---|---|
| [14] | Realistic vision data obtained with a self-developed simulator; Test flights using two unmanned aerial vehicles. | Effectiveness in real-time detection and avoidance; Ability to isolate moving aerial objects and classify them; Detection of all incoming obstacles along their trajectories. | SWaP-constrained mini-UAVs; MATLAB Deep Learning Toolbox; ResNet-50; 150 layers; Detection system cannot determine intruder range. |
| [15] | Datasets used in the field of Visual SLAM and object detection. | Dynamic point removal, data association, point cloud segmentation, robustness, and accuracy. | General robotics and autonomous driving applications, with a focus on dynamic environments. |
| [16] | ColANet dataset (for transfer learning customization); DJI Tello drone camera (for real-time deployment). | Accuracy (validation accuracy, training accuracy), model size, inference time, and power consumption. | Drones with limited computing resources; Real-time deployment on DJI Tello drone; Evaluation on an AMD EPYC processor with 1.7 TB RAM and a Tesla A100 GPU. |
| [17] | ARD100 dataset (newly proposed; 100 videos, 202,467 frames, smallest average object size); NPS-Drones dataset; Drone-vs-Bird dataset (unseen during training). | Average Precision (AP). | Detection of tiny objects; Complex urban backgrounds; Abrupt camera movement; Low-light conditions; Focus on tiny drones; Potential for deployment on mobile platforms. |
| [18] | UAV-based imagery for wildlife. | Multi-objective loss functions (Wise IoU (WIoU) and Normalized Wasserstein Distance (NWD)); Parameter count reduction. | Small object detection in UAV imagery; Real-time processing and low latency are critical for UAV applications. |
| [19] | VisDrone2019 dataset; DOTA v1.0 dataset. | mAP@0.5; Parameter count. | UAV remote sensing images; Challenges with small object size, multi-scale variations, and background interference. |
| [20] | SIMD dataset; NWPU VHR-10 dataset. | Precision, accuracy. | Remote sensing applications. |
| [21] | Real-time UAV image processing pipeline data. | Inference speed (FPS), power consumption, GPU memory consumption, mean average precision (mAP@50), and end-to-end processing times. | Constrained computational edge devices (Jetson Orin NX, Raspberry Pi 5); Real-time UAV image processing; Drone applications. |
| [22] | Various datasets used across the YOLO series. | Speed, accuracy, and computational efficiency. | General object detection applications; Focus on speed, accuracy, and computational efficiency. |
| [23] | Simulated forest environments. | Not explicitly named. | Real-time UAV obstacle detection; Simulated forest environments. |
| [24] | UAVDT dataset; VisDrone dataset. | Detection mAP@0.5 scores; Classification accuracies. | Nighttime operations; Challenges with poor illumination, noise, occlusions, and dynamic lighting artifacts. |
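Several detection works in the table above report mAP@0.5 ([19,24]) or mAP@50 ([21]). Under that metric, a predicted box matches a ground-truth box only when their Intersection-over-Union (IoU) is at least 0.5. The following is a minimal sketch of that IoU test. It assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` and a matching class label. A full mAP computation also ranks detections by confidence and integrates the precision-recall curve, which this sketch omits.

```python
from typing import Tuple

# Axis-aligned box as (x_min, y_min, x_max, y_max), e.g. in pixels (assumption)
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    # Overlap rectangle; its width or height is clipped to 0 if the boxes are disjoint
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """At mAP@0.5, a detection counts as correct only if IoU >= 0.5 (class match assumed)."""
    return iou(pred, truth) >= threshold


if __name__ == "__main__":
    gt = (10.0, 10.0, 50.0, 50.0)
    shifted = (30.0, 10.0, 70.0, 50.0)  # same size, shifted by half its width
    print(iou(gt, shifted), is_true_positive(shifted, gt))
```

A box shifted by half its width overlaps the ground truth by only one third (IoU = 800 / 2400). Under the 0.5 threshold it would therefore count as a false positive.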

| Ref. No. | Dataset | Performance Metrics | Application Constraints |
|---|---|---|---|
| [25] | Historical flight data (simulated drone failure at Hannover airport, Germany). | Not explicitly quantified | U-Space environment; BlueSky ATM simulator used for simulation; Focus on system failure scenarios (hardware failure, broken communication) |
| [26] | Not explicitly named | Not explicitly quantified | Not explicitly explained |
| [27] | Preprocessed Automatic Dependent Surveillance-Broadcast (ADS-B) dataset. | Prediction performance (especially for long-term trajectory); Conflict detection results (detect conflicts within seconds). | Internet of Aerial Vehicles; Air-Ground Integrated Vehicle Networks (AGIVN); Addresses limitations of traditional surveillance technology for intensive Air Traffic Management (ATM). |
| [28] | Not explicitly named | Not explicitly quantified | Not explicitly explained |
| [29] | ADS-B information. | Not explicitly quantified | Relies on ADS-B information. |
| [30] | Real experimental drone flight data. | Comparison of prediction performances between Long Short-Term Memory (LSTM) and Nonlinear Autoregressive with Exogenous Inputs (NARX) models. | Enhancing UAV control capabilities; circumventing the complexity of traditional flight control systems; minimizing risk during actual flights (which implies a simulation environment for testing). |
| [31] | Synthetic/Real-world 3D UAV trajectory data (UZH-FPV, Mid-Air). | Mean Squared Error (MSE) (ranging from 2 × 10⁻⁸ to 2 × 10⁻⁷). | Real-time 3D UAV trajectory prediction. |
| [32] | Hourly notional amounts traded on various cryptocurrency assets from Binance (1 January 2020–31 December 2022). | Root Mean Square Error (RMSE) (loss function); Average R-squared (R²) (primary metric). TKAN achieved R² at least 25% higher than GRU for longer time steps, and demonstrated better model stability. | Numerical prediction problem; Compared against GRU and LSTM; Focus on layer performance rather than full architecture; Preprocessing involves two-stage scaling (moving median, MinMaxScaling). |
| [33] | UAV-collected urban traffic data (TUMDOT-MUC dataset) | R² score, RMSE, MSE (R²: 0.98; RMSE: 39.85; MSE: 1588.5; Outperforms LSTM, GRU, and other ML/DL models in its context). | Urban environments; Multiagent trajectory prediction; Optimized with Particle Swarm Optimization (PSO) for hyperparameter tuning. |
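The trajectory-prediction works above ([31,32,33]) report MSE, RMSE, and R². A short sketch of the standard definitions follows. RMSE is the square root of MSE, which explains why the values reported for [33] agree: √1588.5 ≈ 39.856, which matches the reported RMSE of 39.85 to within rounding. These functions are generic. They are not the evaluation code of the cited works, and the inputs are assumed to be flat sequences of scalar targets (for example, one coordinate of a trajectory).

```python
import math
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Squared Error: average of squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root Mean Square Error: sqrt(MSE), expressed in the units of the target."""
    return math.sqrt(mse(y_true, y_pred))


def r2(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    truth = [1.0, 2.0, 3.0, 4.0]
    pred = [1.0, 2.0, 3.0, 5.0]
    print(mse(truth, pred), rmse(truth, pred), r2(truth, pred))
```

Because R² normalizes the squared error by the variance of the targets, it can be compared across datasets with different scales. MSE and RMSE cannot, which is one reason [32] treats R² as its primary metric.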

| Ref. No. | Dataset | Performance Metrics | Application Constraints |
|---|---|---|---|
| [34] | INTERACTION, highD, and CitySim datasets. | Prediction performance, computational costs, and inference latency. Outperforms other state-of-the-art methods; Achieved better prediction performance with lower inference latency. | Autonomous driving applications; Need for interpretability and explainability; Real-time decision-making for intelligent planning systems. |
| [35] | Authentic logistics datasets. | Path length, time efficiency, energy consumption. Achieves 15% reduction in travel distance, 20% boost in time efficiency, and 10% decrease in energy consumption. | Logistics robots; Real-time responsiveness; Interpretability; May incur higher computational costs for large-scale environments. |
| [36] | Simulated swarm-vs-swarm engagements; Enriched dataset with variations in defender numbers, motions, and measurement noise levels. | Accuracy, noise robustness, and scalability to swarm size. Predicts swarm behaviors with 97% accuracy (20 time steps); Graceful degradation to 80% accuracy (50% noise); Scalable to 10–100 agents. | Military contexts; Real-time decision-making support; Short observation windows (20 time steps). |
| [37] | DisasterEye (custom dataset: 2751 images across eight categories, sourced from UAVs and on-site individuals); DFAN; AIDER. | Accuracy, inference latency, memory usage, and performance speed. Achieved high accuracy with lowered inference latency and memory use; 3–5× faster performance than original models with almost similar accuracy. | Resource-constrained UAV platforms (e.g., Jetson Nano); Onboard processing; Real-time performance; Addresses privacy, connectivity, and latency issues in disaster-prone areas. |
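The transformer-based models in the table above ([34,35,37]) build on scaled dot-product attention [11], softmax(QKᵀ/√d_k)V. In an interaction-modeling setting, each row of Q can be read as one agent's query and each row of K and V as the encoded state of another agent. Each agent's output is then a similarity-weighted mix of the other agents' states. The sketch below shows a single unmasked attention head in pure Python for illustration. It is not the architecture of any cited work, and the matrices are assumed to be lists of equal-length rows.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax (subtracts the row maximum first)."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output row = attention-weighted sum of the value rows
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


if __name__ == "__main__":
    # Two identical keys receive equal weight, so the output is the mean of V
    print(attention([[1.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.0, 2.0], [4.0, 6.0]]))
```

The √d_k scaling keeps the dot products from growing with the feature dimension. Without it, the softmax would saturate toward a single neighbor.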

| Ref. No. | Dataset | Performance Metrics | Application Constraints |
|---|---|---|---|
| [38] | Not explicitly named | Convergence, reduction in Losses of Separation (LOSS), consistent avoidance of Near Mid-Air Collisions (NMACs). | Multiagent reinforcement learning (MARL) problem; Cooperative agents; Compound conflicts. |
| [39] | Not explicitly named | Not explicitly quantified | General Graph Convolutional Reinforcement Learning (GCRL) framework. |
| [40] | Not explicitly named | Not explicitly quantified | Dense UAV networks; Conflict resolution. |
| [41] | Not explicitly named | Not explicitly quantified | Multiagent cooperation. |
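Reference [38] models air traffic as a graph in which UAVs are nodes and a conflict creates an edge. The GCRL approach it builds on [39] uses graph convolutions [12]. The sketch below illustrates both ideas. It first builds the conflict graph under an assumption: a pair is declared in conflict when the current distance is below a hypothetical separation minimum `sep_min`. Real conflict detection would also project trajectories forward in time. It then applies one Kipf and Welling propagation step, ReLU(D^-1/2 (A + I) D^-1/2 H W), to per-UAV feature vectors. This is a generic illustration, not the DGN model of [38,39].

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Matrix = List[List[float]]


def conflict_graph(positions: Sequence[Vec3], sep_min: float) -> List[List[int]]:
    """Adjacency matrix with an edge (i, j) if UAVs i and j are closer than sep_min."""
    n = len(positions)
    A = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(positions[i], positions[j]) < sep_min:
                A[i][j] = A[j][i] = 1
    return A


def gcn_layer(A: List[List[int]], H: Matrix, W: Matrix) -> Matrix:
    """One GCN propagation step: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(A)
    # Add self-loops so each UAV keeps its own features
    A_hat = [[A[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_hat]
    # Symmetric degree normalization of the self-looped adjacency
    norm = [[A_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    # Aggregate features over conflicting neighbors, then apply the linear map W
    agg = [[sum(norm[i][k] * H[k][f] for k in range(n)) for f in range(len(H[0]))]
           for i in range(n)]
    out = [[sum(agg[i][f] * W[f][o] for f in range(len(W))) for o in range(len(W[0]))]
           for i in range(n)]
    return [[max(0.0, x) for x in row] for row in out]  # ReLU


if __name__ == "__main__":
    pos = [(0.0, 0.0, 0.0), (30.0, 0.0, 0.0), (200.0, 0.0, 0.0)]
    A = conflict_graph(pos, sep_min=50.0)  # UAVs 0 and 1 conflict; UAV 2 is isolated
    print(A, gcn_layer(A, [[1.0], [3.0], [5.0]], [[1.0]]))
```

Only conflicting UAVs exchange information in this layer. An isolated UAV's output depends solely on its own features, which is what lets graph-based policies scale to varying numbers of agents.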

| Ref. No. | Dataset | Performance Metrics | Application Constraints |
|---|---|---|---|
| [42] | Not explicitly named | Not explicitly quantified | Focus on suitability for UAV integration; Identifying novel opportunities for LLM embedding within UAV frameworks. |
| [43] | Data from UAV-assisted sensor networks. | Minimizing the average Age of Information (AoI) across ground sensors and optimizing data collection schedules and velocities. | LLM-enabled In-Context Learning (ICL) for onboard flight resource allocation; UAV-assisted sensor networks. |
| [44] | Case study on data collection scheduling. | Significant reduction in packet loss compared to conventional approaches; Mitigation of potential jailbreaking vulnerabilities. | Deployment of LLMs at the network edge to reduce latency and preserve data privacy; Suitable for real-time, mission-critical public safety UAVs. |
| [45] | 150,000 telemetry log entries. | Decision Accuracy; Cosine Similarity (retrieved context vs. input data); BLEU Score (linguistic similarity of generated commands to expert commands); Response Time (Decision Latency). | LLaMA3.2 1B Instruct (lightweight model); Quantized (INT8 precision) for edge deployment; Cloud processing hub for centralized processing and scalability; Resource-constrained edge hardware. |
| [46] | Evaluated across scenarios involving code generation and mission planning. | Qwen 2.5 excels in multi-step reasoning; Gemma 2 balances accuracy and latency; LLaMA 3.2 offers faster responses with lower logical coherence. | Integrates open-source LLMs (Qwen 2.5, Gemma 2, LLaMA 3.2) with UAV autopilot systems (ArduPilot stack); Supports simulation and real-world deployment; Operates offline via Ollama runtime. |
| [47] | Simulated environment for testing swarm behavior. | Multi-critic consensus mechanism to evaluate trajectory quality; Hierarchical prompt structuring for improved task execution. | Multimodal LLMs (interprets text and audio inputs); Generates 3D waypoints for UAV movements. |
| [48] | Real-world SAR scenarios; Tested on two different scenarios. | Time to complete missions. Faster on average by 33.75% compared with an off-the-shelf autopilot and 54.6% compared with a human pilot. | Multimodal system (LLM: ChatGPT-4o, VLM: quantized Molmo-7B-D BnB 4-bit model); Onboard computer (e.g., OrangePi) for deployment. |
| [49] | Real-time sensing and environmental feedback data. | Enhanced performance and scalability of UAV systems. | MLLMs (LLMs, VFMs, VLMs); Communication systems (WiFi, cellular, ground radio links, satellite communication); UAV platforms (multirotor, fixed-wing, rotary-wing, hybrid, flapping-wing). |
| [50] | Not explicitly named | Not explicitly quantified | State-of-the-art LLM technology, multimodal data resources for UAVs, and key tasks/application scenarios where UAVs and LLMs converge. |

Appendix B. Comparative Tables for Reinforcement Learning

| Ref. No. | Agent/Actions | Environment/States | Application Constraints |
|---|---|---|---|
| [55] | Agent guarantees minimum separation distance; exhibits centralized learning and distributed policy. Actions: (1) RL controlling heading and speed variation; (2) RL managing heading, speed, and altitude variation. | High traffic densities. Rewards are based on global information (cumulative losses of all aircraft). | Distributed conflict resolution. |
| [56] | The agent is the drone itself. Utilizes a novel Estimated Time of Arrival (ETA)-based temporal reward system. Baseline algorithm includes greedy search, delayed learning, and multi-step learning. | Restructured state space using the risk sectors concept; Tactical conflict resolution for air logistics transportation. | Air logistics transportation. |
| [57] | The agent's primary objective is to avoid obstacles within a defined buffer zone. | Airspace reservation with a risk-based operational safety bound. Considers UAS performance, weather conditions, and uncertainties in UAS operations (including positioning errors). A new reward function is devised for reinforcement learning. | UAS conflict resolution. |
| [58] | Agent configures parameters for computing conflict resolution (CR) maneuvers using a geometric CR method. RL selected for its capacity to comprehend and execute complete action sequences. | Conflict resolution (CR) scenarios can lead to secondary conflicts. Reward: Adept at detecting emergent secondary conflicts. | Hybrid method combining geometric CR techniques with RL. |
| [59] | Agents learn to self-prioritize for conflict resolution. | Air Traffic Control (ATC) with limited instructions. | Air Traffic Control. |
| [60] | Distributed Reinforcement Learning. Agents are UAVs in a swarm. | UAV swarm control. | Flexible and efficient UAV swarm control. |
| [61] | Deep Reinforcement Learning (DRL) agent for obstacle avoidance. Actions in a continuous action space. | UAS obstacle avoidance. | Continuous action space. |
| [62] | Reinforcement learning with counterfactual credit assignment for multi-UAV collision avoidance. | Multi-UAV collision avoidance scenarios. | Multi-UAV systems. |
| [63] | DRL model with continuous state and action spaces. Dynamically chooses a resolution strategy for pairs of UAVs. The second layer is a collaborative UAV collision avoidance model integrating a three-dimensional conflict detection and resolution pool. | Urban environments. Multiple UAVs. Two-layer resolution framework involving speed adjustments and rerouting strategies. | Urban environments; Multiple UAVs. |
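Reference [56] lists multi-step learning among the components of its baseline algorithm. In multi-step (n-step) Q-learning, the one-step target r + γ·max Q(s′, a) is replaced by the discounted sum of the next n observed rewards. A bootstrapped value estimate of the state reached after n steps is then added to that sum. A minimal sketch of this target is shown below. The caller is assumed to supply the n rewards and the bootstrap value, for example max over a of Q(s_{t+n}, a) from a target network. This illustrates the general technique, not the implementation used in [56].

```python
from typing import Sequence


def n_step_target(rewards: Sequence[float], bootstrap_value: float,
                  gamma: float, done: bool = False) -> float:
    """n-step TD target: sum_k gamma^k * r_{t+k} + gamma^n * V(s_{t+n}).

    rewards: the n rewards r_t, ..., r_{t+n-1} observed after taking a_t
             (truncated at the terminal step if the episode ended early).
    bootstrap_value: value estimate of s_{t+n}, e.g. max_a Q(s_{t+n}, a).
    done: True if the episode terminated within these steps (no bootstrap).
    """
    g = 0.0
    for k, r in enumerate(rewards):
        g += (gamma ** k) * r
    if not done:
        g += (gamma ** len(rewards)) * bootstrap_value
    return g


if __name__ == "__main__":
    # Three rewards of 1 with gamma = 0.5, then bootstrap from a value of 8
    print(n_step_target([1.0, 1.0, 1.0], bootstrap_value=8.0, gamma=0.5))
```

Larger n propagates delayed rewards faster, for example a penalty for a loss of separation several steps after a maneuver. The trade-off is higher variance in the target.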

| Ref. No. | Agent/Actions | Environment/States | Application Constraints |
|---|---|---|---|
| [38] | Cooperative agents (UAVs) using a Graph Convolutional Reinforcement Learning (DGN) model. Actions: jointly generate resolution maneuvers. | Multi-UAV conflicts are conceptualized as compound ecosystems; Air traffic is modeled as a graph (UAVs as nodes, conflict creates an edge); Scenarios: 3-agent and 4-agent. | Multiagent reinforcement learning (MARL) problem; Cooperative agents; Compound conflicts. |
| [64] | Multiagent approach overseeing individual aircraft behavior. Actions: learning-based tactical deconfliction. | Urban Air Mobility (UAM) environment; Strategic conflict management with demand capacity balancing (DCB). | Integrated conflict management for UAM. |
| [65] | Agents are flights with conflict status. Actions: ground delay, speed adjustment, flight cancelation (selected autonomously by recurrent actor-critic networks). | Dynamic Urban Air Mobility (UAM) environments, considering uncertainties. Strategic conflict management. | Urban Air Mobility operations; Addresses challenges related to DCB, separation conflicts, and block unavailability caused by wind turbulence. |
| [66] | UAVs in a large-scale swarm. Actions: path planning based on mean-field reinforcement learning. | Large-scale UAV swarm. | Large-scale UAV swarm. |
| [67] | Multiagent reinforcement learning. Agents are UAVs in a mission-oriented drone network. Actions: collaborative execution, energy-aware decisions. | Mission-oriented drone networks. | Energy-aware collaborative execution. |
| [68] | Multi-UAVs. Actions: cooperative search for moving targets. | 3D scenarios with moving targets. | Multi-UAV systems; 3D scenarios. |
| [69] | Agents are UAVs in a swarm. Actions: confrontation/combat maneuvers. | UAV swarm confrontation. | UAV swarm confrontation. |
| [70] | Multi-UAVs using a leader-follower strategy within a hierarchical reinforcement learning framework. Actions: combat maneuvers. | Multi-UAV combat scenarios. | Multi-UAV combat. |
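Mean-field reinforcement learning, which [66] uses for large-scale swarms, keeps each agent's input size fixed as the swarm grows. It does this by summarizing the influence of an agent's neighbors as the average of their actions, ā. Each agent then learns Q(s, a, ā) instead of a Q-function over the full joint action. The sketch below computes ā for discrete actions as the mean of one-hot encodings and assembles a hypothetical network input. The function names, the input layout, and the zero-vector convention for agents with no neighbors are assumptions for illustration only.

```python
from typing import List, Sequence


def mean_action(neighbour_actions: Sequence[int], n_actions: int) -> List[float]:
    """Mean-field action: average of the neighbors' one-hot encoded discrete actions."""
    if not neighbour_actions:
        return [0.0] * n_actions  # hypothetical convention for an isolated agent
    counts = [0] * n_actions
    for a in neighbour_actions:
        counts[a] += 1
    return [c / len(neighbour_actions) for c in counts]


def q_input(state: Sequence[float], own_action: int,
            neighbour_actions: Sequence[int], n_actions: int) -> List[float]:
    """Hypothetical input layout for Q(s, a, a_bar): state + one-hot(a) + a_bar."""
    one_hot = [1.0 if a == own_action else 0.0 for a in range(n_actions)]
    return list(state) + one_hot + mean_action(neighbour_actions, n_actions)


if __name__ == "__main__":
    # Three neighbors chose actions 0, 2, 2 out of {0, 1, 2}
    print(mean_action([0, 2, 2], n_actions=3))
    print(q_input([0.1, 0.2], own_action=1, neighbour_actions=[0, 2, 2], n_actions=3))
```

The input length depends only on the state size and the number of actions, not on how many neighbors an agent has. This is what makes the approach tractable for swarms of varying size.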

| Ref. No. | Agent/Actions | Environment/States | Application Constraints |
|---|---|---|---|
| [71] | DRL-based algorithms. Actions: prevent collisions while optimizing energy consumption; operate without pre-existing knowledge. | UAV environment; Challenging setting with numerous UAVs moving randomly in a confined area without correlation. | Deployment onboard UAV or at Multi-Access Edge Computing (MEC); Diverse environments. |
| [72] | Host drone using Double Deep Q Network (DDQN) framework. Actions: generate conflict-free maneuvers at each time step. | Urban airspace for UAV operations; Tactical conflict resolution framed as a sequential decision-making problem. | Urban airspace; Unmanned Aerial Vehicles (UAVs). |
| [73] | Multi-UAV formation control. Actions: maintain formation; avoid static and dynamic obstacles. | Multi-UAV formation control scenarios with static and dynamic obstacles. | Multi-UAV systems. |
| [74] | Swarm UAVs. Actions: intuitive and immersive control of swarm formation of UAVs; multi-UAV collision avoidance. | Swarm formation of UAVs; Augmented Reality (AR) human-drone interaction. | DroneARchery system; Haptic interface (LinkGlide) for tactile sensation. |
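The host drone in [72] is trained with a Double Deep Q Network (DDQN). The change Double DQN makes to the standard DQN target is small. The online network *selects* the best next action, while the target network *evaluates* it, which reduces the overestimation bias of taking a max over noisy Q-estimates. The sketch below computes that target for one transition. It assumes the caller has already obtained both networks' Q-values for the next state as plain lists. Network definitions, the replay buffer, and the maneuver action set of [72] are out of scope.

```python
from typing import Sequence


def double_dqn_target(reward: float, gamma: float, done: bool,
                      q_online_next: Sequence[float],
                      q_target_next: Sequence[float]) -> float:
    """Double DQN target: r + gamma * Q_target(s', argmax_a Q_online(s', a))."""
    if done:
        return reward  # terminal transition: no bootstrapping
    # Action selection uses the online network ...
    best_a = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ... while evaluation of that action uses the target network
    return reward + gamma * q_target_next[best_a]


if __name__ == "__main__":
    # Online net prefers action 1; the target net values that action at 2.0
    print(double_dqn_target(1.0, 0.9, False, [1.0, 5.0, 2.0], [10.0, 2.0, 3.0]))
```

In this example, a vanilla DQN target would use max(q_target_next) = 10.0 and produce 10.0. The Double DQN target is 1.0 + 0.9 × 2.0 = 2.8.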

Appendix C. Comparative Tables for Bio-Inspired Learning

| Ref. No. | Algorithm | Performance Metrics | Application Constraints |
|---|---|---|---|
| [78] | Drone swarm strategy for detection and tracking. | Not specified | Complex environments; Occluded targets. |
| [79] | Particle Swarm Optimization (PSO), Artificial Potential Field (APF). Extensive simulation experiments. | Energy saving, average tracking error, and task time. | 3D space; UAV swarms; LiDAR for obstacle detection; Sharing environmental data within the swarm. |
| [80] | Inspired by bird flocking and fish schooling. Simulations dataset. | Total collisions with obstacles (NoCs), duration time (DT), path length, and energy efficiency. | IoD swarms; Limited detection range; Multiple static and dynamic obstacles; 3D dynamic environment; Battery constraints. |
| [81] | Inspired by the foraging behavior of ant colonies. Three-dimensional models of real structures dataset. | Path length. | Multiple UAVs; Cooperative inspection tasks. |
| [82] | Inspired by the flashing behavior of fireflies. | Shortest collision-free path length, ability to converge, ability to discover optimum solutions. | Mobile robot path planning; 2D space (static obstacles). |
| [83] | Inspired by the social hierarchy and cooperative hunting behavior of grey wolves. | Optimization performance on benchmark functions; Path length (m). | UAV path planning; Obstacle-laden environments. |
| [84] | Inspired by the foraging behavior of honeybees. Simulation datasets. | Effectiveness for epistasis detection (for SFMOABC); Convergence capabilities; Susceptibility to local optima. | Multi-drone path planning; Addresses drawbacks of traditional ant colony algorithms. |
| [85] | Multi-swarm discrete quantum-inspired particle swarm optimization with adaptive simulated annealing. | Localization accuracy, computational efficiency, and adaptability to varying UAV/target scales. | Complex 3D environments; Multi-target localization; Resource-constrained collaborative localization tasks. |
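Particle Swarm Optimization appears in [79] (combined with an artificial potential field), in [33] (for hyperparameter tuning), and in a quantum-inspired multi-swarm variant in [85]. The sketch below is the canonical global-best PSO, not any of those variants. Each particle's velocity combines inertia, a pull toward its own best position, and a pull toward the swarm's best position. The parameters w ≈ 0.7298 and c1 = c2 ≈ 1.49618 are widely used constriction-derived defaults. The cost function, for example path length plus a collision penalty in a deconfliction setting, is assumed to be supplied by the caller.

```python
import random
from typing import Callable, List, Tuple


def pso(f: Callable[[List[float]], float], dim: int, bounds: Tuple[float, float],
        n_particles: int = 20, iters: int = 200, w: float = 0.7298,
        c1: float = 1.49618, c2: float = 1.49618,
        seed: int = 0) -> Tuple[List[float], float]:
    """Global-best PSO minimizing f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                # each particle's best position so far
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # swarm-wide best
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive pull (own best) + social pull (swarm best)
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))  # clamp to bounds
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)  # toy cost with minimum 0 at the origin
    best, best_val = pso(sphere, dim=2, bounds=(-10.0, 10.0))
    print(best, best_val)
```

The swarm only ever evaluates the cost function, so the same loop works for non-differentiable deconfliction costs. Those costs typically include hard collision penalties.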

| Ref. No. | Algorithm | Performance Metrics | Application Constraints |
|---|---|---|---|
| [86] | GA with seeded initial population using ACO path, Voronoi vertices, clustering/collision-center seeding. | ≥70% fewer objective evaluations with best seeding; faster convergence vs. plain GA. | 3-D terrain; target coverage (visit checkpoints); terrain collision avoidance. |
| [87] | Co-evolutionary multi-UAV CPP (one subpopulation per UAV); penalty for constraints; two information-sharing strategies. | Compared vs. two evolutionary baselines on cost (length + threat), constraint satisfaction, and efficiency; effective in complex rendezvous scenarios. | Known environment with threat map; multi-UAV non-collision and time coordination enforced. |
| [88] | α-RACER: learn near-potential offline; maximize it online for an approximate Nash equilibrium; nonlinear bicycle + Pacejka; MPC-style policy with overtake/block. | Wins most races vs. baselines; near-potential gap ≤ 10% (median ~2%); Nash regret ≤ 3%; compute comparable to tuned IBR, aimed at real-time. | Simulated track; three cars; full-state; proximity slow-down for collision handling; bounded throttle/steering; discrete time-step. |
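Reference [86] speeds up a genetic algorithm by seeding its initial population with solutions from heuristics such as ACO paths and Voronoi vertices, rather than starting purely at random. The sketch below illustrates only that general idea. It uses a real-valued encoding, a caller-supplied cost, and a caller-supplied list `seeds` that stands in for the heuristics of [86]. The random fill-up range, tournament size, uniform crossover, Gaussian mutation σ, and elitism count are all hypothetical choices. Because the two best individuals always survive unchanged, the returned solution is never worse than the best seed.

```python
import random
from typing import Callable, List, Sequence


def seeded_ga(cost: Callable[[List[float]], float], seeds: Sequence[List[float]],
              dim: int, pop_size: int = 30, generations: int = 100,
              sigma: float = 0.5, seed: int = 0) -> List[float]:
    """Minimize cost with a real-coded GA whose initial population is seeded."""
    rng = random.Random(seed)
    # Seeded individuals first (e.g. from a heuristic planner), then random fill-up
    pop = [list(s) for s in seeds][:pop_size]
    while len(pop) < pop_size:
        pop.append([rng.uniform(-10.0, 10.0) for _ in range(dim)])  # hypothetical range
    for _ in range(generations):
        pop.sort(key=cost)
        elite = pop[:2]  # elitism: the two best individuals survive unchanged
        children = []
        while len(children) < pop_size - len(elite):
            # Tournament selection (size 3) for each parent
            p1 = min(rng.sample(pop, 3), key=cost)
            p2 = min(rng.sample(pop, 3), key=cost)
            # Uniform crossover, then Gaussian mutation of every gene
            child = [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]
            children.append([x + rng.gauss(0.0, sigma) for x in child])
        pop = elite + children
    return min(pop, key=cost)


if __name__ == "__main__":
    cost = lambda x: sum((v - 3.0) ** 2 for v in x)  # toy cost, optimum at (3, 3)
    print(seeded_ga(cost, seeds=[[3.1, 2.9]], dim=2))
```

The quality of the seeds mostly affects how many cost evaluations are needed to reach a good solution. That matches the kind of gain [86] reports (fewer objective evaluations, faster convergence).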

| Ref. No. | Algorithm | Performance Metrics | Application Constraints |
|---|---|---|---|
| [89] | Reward-modulated SNN for swarm collision avoidance | Collision rate, success rate, inter-drone distance | Decentralized, onboard SNN hardware, local sensing only |
| [90] | Event-based vision + neuromorphic planning on Bebop2 | Latency, navigation accuracy, FPS, success rate | Indoor, Bebop2 hardware limits |
| [91] | Neuromorphic digital twin for multi-UAV indoor control | Tracking error, coordination success, and latency | Indoor GPS-denied, communication stability |
| [92] | Neuromorphic SNN attitude estimator and controller | Estimation error, stability, and power use | Small UAVs, neuromorphic IMU needed |
| [93] | SNN for obstacle avoidance with GA optimization | Success rate, energy, latency | SpiNNaker hardware, sensing simulation |
| [94] | SNN + DRL (actor-critic) | Rewards, sample efficiency, and task completion | Robotics simulation, high training costs |
| [95] | LGMD binocular model | Accuracy, FPR/FNR, reaction time | Stereo cameras, vision-based |
| [96] | BrainCog large-scale SNN cognitive engine | Accuracy, adaptability, energy efficiency | General platform, scaling cost |
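The spiking approaches in the table above ([89,90,91,92,93,94,95,96]) process information as discrete spikes rather than continuous activations. A widely used building block for such networks is the leaky integrate-and-fire (LIF) neuron. It integrates input current into a leaking membrane potential and emits a spike, then resets, whenever that potential crosses a threshold. The sketch below simulates a single LIF neuron with forward-Euler steps. It assumes a dimensionless potential whose resting value equals the reset value and uses hypothetical parameters (τ = 20 steps, threshold 1.0). It does not reproduce the reward-modulated, neuromorphic-hardware, or LGMD models of the cited works.

```python
from typing import List, Sequence


def lif_spikes(current: Sequence[float], tau: float = 20.0, v_th: float = 1.0,
               v_reset: float = 0.0, dt: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    Dynamics: tau * dv/dt = -(v - v_reset) + I(t). When v reaches v_th a spike
    is emitted and v is reset to v_reset. Returns a 0/1 spike train per step.
    """
    v = v_reset
    spikes = []
    for i_t in current:
        v += dt / tau * (-(v - v_reset) + i_t)  # leak toward rest, integrate input
        if v >= v_th:
            spikes.append(1)
            v = v_reset  # fire and reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    strong = lif_spikes([2.0] * 100)  # steady-state potential 2.0 > threshold: fires
    weak = lif_spikes([0.5] * 100)    # steady-state potential 0.5 < threshold: silent
    print(sum(strong), sum(weak), strong.index(1))
```

With constant input I, the potential approaches I exponentially. The neuron therefore fires regularly only when I exceeds the threshold, and the firing rate rises with input strength. This rate coding is how SNN controllers turn sensor intensity into a spike-based signal.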

References

- Pavithra, S.; Kachroo, D.; Kadam, V.; Padala, H.; Purbey, R. Drone-Based Weed and Disease Detection in Agricultural Fields to Maximize Crop Health Using a Yolov8 Approach. In Proceedings of the 2023 IEEE 7th Conference on Information and Communication Technology, CICT 2023, Jabalpur, India, 15–17 December 2023; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Bernardo, R.M.; da Silva, L.C.B.; Rosa, P.F.F. UAV Embedded Real-Time Object Detection by a DCNN Model Trained on Synthetic Dataset. In Proceedings of the 2023 International Conference on Unmanned Aircraft Systems, ICUAS 2023, Warsaw, Poland, 6–9 June 2023; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2023; pp. 580–585. [Google Scholar] [CrossRef]

- Chour, K.; Pradeep, P.; Munishkin, A.A.; Kalyanam, K.M. Aerial Vehicle Routing and Scheduling for UAS Traffic Management: A Hybrid Monte Carlo Tree Search Approach. In Proceedings of the AIAA/IEEE Digital Avionics Systems Conference-Proceedings, Barcelona, Spain, 1–5 October 2023; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Bilgin, Z.; Bronz, M.; Yavrucuk, I. Automatic in Flight Conflict Resolution for Urban Air Mobility using Fluid Flow Vector Field based Guidance Algorithm. In Proceedings of the AIAA/IEEE Digital Avionics Systems Conference-Proceedings, Barcelona, Spain, 1–5 October 2023; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Pourjabar, M.; Rusci, M.; Bompani, L.; Lamberti, L.; Niculescu, V.; Palossi, D.; Benini, L. Multi-sensory Anti-collision Design for Autonomous Nano-swarm Exploration. In Proceedings of the ICECS 2023-2023 30th IEEE International Conference on Electronics, Circuits and Systems: Technosapiens for Saving Humanity, Istanbul, Turkiye, 4–7 December 2023; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- SESAR 3 JU, “AI4HyDrop”. Available online: https://ai4hydrop.eu/ (accessed on 11 April 2024).

- Pati, D.; Lorusso, L.N. How to Write a Systematic Review of the Literature. Health Environ. Res. Des. J. 2018, 11, 15–30. [Google Scholar] [CrossRef]

- Lin, C.; Han, G.; Wu, Q.; Wang, B.; Zhuang, J.; Li, W.; Hao, Z.; Fan, Z. Improving Generalization in Collision Avoidance for Multiple Unmanned Aerial Vehicles via Causal Representation Learning. Sensors 2025, 25, 3303. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Koul, P. A review of machine learning applications in aviation engineering. Adv. Mech. Mater. Eng. 2025, 42, 16–40. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2016, arXiv:1609.02907. [Google Scholar]

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Amodei, D. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Opromolla, R.; Fasano, G. Visual-based obstacle detection and tracking, and conflict detection for small UAS sense and avoid. Aerosp. Sci. Technol. 2021, 119, 107167. [Google Scholar] [CrossRef]

- Peng, J.; Chen, D.; Yang, Q.; Yang, C.; Xu, Y.; Qin, Y. Visual SLAM Based on Object Detection Network: A Review. Comput. Mater. Contin. 2023, 77, 3209–3236. [Google Scholar] [CrossRef]

- Zufar, R.N.; Banjerdpongchai, D. Selection of Lightweight CNN Models with Limited Computing Resources for Drone Collision Prediction. ECTI Trans. Electr. Eng. Electron. Commun. 2024, 22. [Google Scholar] [CrossRef]

- Guo, H.; Lin, X.; Zhao, S. YOLOMG: Vision-based Drone-to-Drone Detection with Appearance and Pixel-Level Motion Fusion. arXiv 2025, arXiv:2503.07115. [Google Scholar]

- Naidu, A.P.; Gosalia, H.; Gakhar, I.; Rathore, S.S.; Didwania, K.; Verma, U. DEAL-YOLO: Drone-based Efficient Animal Localization using YOLO. arXiv 2025, arXiv:2503.04698. [Google Scholar]

- Wu, Y.; Mu, X.; Shi, H.; Hou, M. An object detection model AAPW-YOLO for UAV remote sensing images based on adaptive convolution and reconstructed feature fusion. Sci. Rep. 2025, 15, 1–20. [Google Scholar] [CrossRef]

- Wu, T.; Dong, Y. YOLO-SE: Improved YOLOv8 for Remote Sensing Object Detection and Recognition. Appl. Sci. 2023, 13, 12977. [Google Scholar] [CrossRef]

- Rey, L.; Bernardos, A.M.; Dobrzycki, A.D.; Carramiñana, D.; Bergesio, L.; Besada, J.A.; Casar, J.R. A Performance Analysis of You Only Look Once Models for Deployment on Constrained Computational Edge Devices in Drone Applications. Electronics 2025, 14, 638. [Google Scholar] [CrossRef]

- Sapkota, R.; Flores-Calero, M.; Qureshi, R.; Badgujar, C.; Nepal, U.; Poulose, A.; Zeno, P.; Vaddevolu, U.B.P.; Khan, S.; Shoman, M.; et al. YOLO advances to its genesis: A decadal and comprehensive review of the You Only Look Once (YOLO) series. Artif. Intell. Rev. 2024, 58, 1–83. [Google Scholar] [CrossRef]

- Partheepan, S.; Sanati, F.; Hassan, J. Evaluating YOLO Variants with Transfer Learning for Real-Time UAV Obstacle Detection in Simulated Forest Environments. IEEE Access 2025, 13, 99266–99290. [Google Scholar] [CrossRef]

- Alazeb, A.; Hanzla, M.; Al Mudawi, N.; Alshehri, M.; Alhasson, H.F.; AlHammadi, D.A.; Jalal, A. Nighttime Intelligent UAV-Based Vehicle Detection and Classification Using YOLOv10 and Swin Transformer. Comput. Mater. Contin. 2025, 84, 4677–4697. [Google Scholar] [CrossRef]

- Komatsu, R.; Bechina, A.A.A.; Güldal, S.; Şaşmaz, M. Machine Learning Attempt to Conflict Detection for UAV with System Failure in U-Space: Recurrent Neural Network, RNNn. In Proceedings of the 2022 International Conference on Unmanned Aircraft Systems, ICUAS 2022, Dubrovnik, Croatia, 21–24 June 2022; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2022; pp. 78–85. [Google Scholar] [CrossRef]

- Nguyen, X.-P.P.; Ruseno, N.; Chagas, F.S.; Bechina, A.A.A. A Survey of AI-based Models for UAVs' Intelligent Control for Deconfliction. In Proceedings of the 10th 2024 International Conference on Control, Decision and Information Technologies, CoDIT, Valletta, Malta, 1–4 July 2024; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2024; pp. 2705–2710. [Google Scholar] [CrossRef]

- Cheng, C.; Guo, L.; Wu, T.; Sun, J.; Gui, G.; Adebisi, B.; Gacanin, H.; Sari, H. Machine-Learning-Aided Trajectory Prediction and Conflict Detection for Internet of Aerial Vehicles. IEEE Internet Things J. 2022, 9, 5882–5894. [Google Scholar] [CrossRef]

- Olive, X.; Sun, J.; Murça, M.C.R.; Krauth, T. A Framework to Evaluate Aircraft Trajectory Generation Methods. In Proceedings of the Fourteenth USA/Europe Air Traffic Management Research and Development Seminar (ATM2021), Virtual, 20–23 September 2021. [Google Scholar]

- Zhang, Y.; Jia, Z.; Dong, C.; Liu, Y.; Zhang, L.; Wu, Q. Recurrent LSTM-based UAV Trajectory Prediction with ADS-B Information. In Proceedings of the GLOBECOM 2022-2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022. [Google Scholar]

- Dong, S.; Das, S.; Townley, S. Drone motion prediction from flight data: A nonlinear time series approach. Syst. Sci. Control Eng. 2024, 12, 2409098.

- Nacar, O.; Abdelkader, M.; Ghouti, L.; Gabr, K.; Al-Batati, A.; Koubaa, A. VECTOR: Velocity-Enhanced GRU Neural Network for Real-Time 3D UAV Trajectory Prediction. Drones 2025, 9, 8.

- Genet, R.; Inzirillo, H. TKAN: Temporal Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2405.07344.

- Mohebbi, M.; Kafash, E.; Döller, M. Multiagent Trajectory Prediction for Urban Environments with UAV Data Using Enhanced Temporal Kolmogorov-Arnold Networks with Particle Swarm Optimization. In Proceedings of the International Conference on Agents and Artificial Intelligence, Porto, Portugal, 23–25 February 2025; Science and Technology Publications, Lda: Setúbal, Portugal, 2025; pp. 586–597.

- Huang, S.; Ye, L.; Chen, M.; Luo, W.; Wang, D.; Xu, C.; Liang, D. Interpretable Interaction Modeling for Trajectory Prediction via Agent Selection and Physical Coefficient. arXiv 2024, arXiv:2405.13152.

- Luo, H.; Wei, J.; Zhao, S.; Liang, A.; Xu, Z.; Jiang, R. Intelligent logistics management robot path planning algorithm integrating transformer and GCN network. arXiv 2025, arXiv:2501.02749.

- Peltier, D.W.; Kaminer, I.; Clark, A.H.; Orescanin, M. Swarm Characteristics Classification Using Neural Networks. IEEE Trans. Aerosp. Electron. Syst. 2024, 61, 389–400.

- Jankovic, B.; Jangirova, S.; Ullah, W.; Khan, L.U.; Guizani, M. UAV-Assisted Real-Time Disaster Detection Using Optimized Transformer Model. arXiv 2025, arXiv:2501.12087.

- Isufaj, R.; Omeri, M.; Piera, M.A. Multi-UAV Conflict Resolution with Graph Convolutional Reinforcement Learning. Appl. Sci. 2022, 12, 610.

- Jiang, J.; Dun, C.; Huang, T.; Lu, Z. Graph Convolutional Reinforcement Learning. arXiv 2018, arXiv:1810.09202.

- Li, Y.; Li, J.; Wang, J.; Zhang, X.; Ding, H.; Du, W. Multi-Scale Graph Enhanced Reinforcement Learning for Conflict Resolution in Dense UAV Networks. IEEE Internet Things J. 2025, 1.

- Elrod, M.; Mehrabi, N.; Amin, R.; Kaur, M.; Cheng, L.; Martin, J.; Razi, A. Graph Based Deep Reinforcement Learning Aided by Transformers for Multiagent Cooperation. arXiv 2025, arXiv:2504.08195.

- Javaid, S.; Fahim, H.; He, B.; Saeed, N. Large Language Models for UAVs: Current State and Pathways to the Future. IEEE Open J. Veh. Technol. 2024, 5, 1166–1192.

- Emami, Y.; Zhou, H.; Nabavirazani, S.; Almeida, L. LLM-Enabled In-Context Learning for Data Collection Scheduling in UAV-assisted Sensor Networks. arXiv 2025, arXiv:2504.14556.

- Emami, Y.; Zhou, H.; Gaitan, M.G.; Li, K.; Almeida, L.; Han, Z. From Prompts to Protection: Large Language Model-Enabled In-Context Learning for Smart Public Safety UAV. arXiv 2025, arXiv:2506.02649.

- Sezgin, A. Scenario-Driven Evaluation of Autonomous Agents: Integrating Large Language Model for UAV Mission Reliability. Drones 2025, 9, 213.

- Nunes, D.; Amorim, R.; Ribeiro, P.; Coelho, A.; Campos, R. A Framework Leveraging Large Language Models for Autonomous UAV Control in Flying Networks. arXiv 2025, arXiv:2506.04404.

- Aikins, G.; Dao, M.P.; Moukpe, K.J.; Eskridge, T.C.; Nguyen, K.-D. LEVIOSA: Natural Language-Based Uncrewed Aerial Vehicle Trajectory Generation. Electronics 2024, 13, 4508.

- Yaqoot, Y.; Mustafa, M.A.; Sautenkov, O.; Lykov, A.; Serpiva, V.; Tsetserukou, D. UAV-VLRR: Vision-Language Informed NMPC for Rapid Response in UAV Search and Rescue. arXiv 2025, arXiv:2503.02465.

- Ping, Y.; Liang, T.; Ding, H.; Lei, G.; Wu, J.; Zou, X.; Zhang, T. Multimodal Large Language Models-Enabled UAV Swarm: Towards Efficient and Intelligent Autonomous Aerial Systems. arXiv 2025, arXiv:2506.12710.

- Tian, Y.; Lin, F.; Li, Y.; Zhang, T.; Zhang, Q.; Fu, X.; Huang, J.; Dai, X.; Wang, Y.; Tian, C.; et al. UAVs Meet LLMs: Overviews and Perspectives Toward Agentic Low-Altitude Mobility. Inf. Fusion 2025, 122, 103158.

- Shakya, A.K.; Pillai, G.; Chakrabarty, S. Reinforcement learning algorithms: A brief survey. Expert Syst. Appl. 2023, 231, 120495.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Amodu, O.A.; Althumali, H.; Hanapi, Z.M.; Jarray, C.; Mahmood, R.A.R.; Adam, M.S.; Bukar, U.A.; Abdullah, N.F.; Luong, N.C. A Comprehensive Survey of Deep Reinforcement Learning in UAV-Assisted IoT Data Collection. Veh. Commun. 2025, 55, 100949.

- Abdalla, A.S.; Marojevic, V. Machine Learning-Assisted UAV Operations with UTM: Requirements, Challenges, and Solutions. arXiv 2020.

- Ribeiro, M.; Ellerbroek, J.; Hoekstra, J. Distributed Conflict Resolution at High Traffic Densities with Reinforcement Learning. Aerospace 2022, 9, 472.

- Li, C.; Gu, W.; Zheng, Y.; Huang, L.; Zhang, X. An ETA-Based Tactical Conflict Resolution Method for Air Logistics Transportation. Drones 2023, 7, 334.

- Hu, J.; Liu, Y.; Tyagi, A.; Wieland, F.; Toussaint, S.; Luxhoj, J.T.; Maroney, D.; Lacher, A.; Erzberger, H.; Goebel, K.; et al. UAS conflict resolution integrating a risk-based operational safety bound as airspace reservation with reinforcement learning. In Proceedings of the AIAA Scitech 2020 Forum, Orlando, FL, USA, 6–10 January 2020; American Institute of Aeronautics and Astronautics Inc., AIAA: Reston, VA, USA, 2020; pp. 1–10.

- Ribeiro, M.; Ellerbroek, J.; Hoekstra, J. Improving Algorithm Conflict Resolution Manoeuvres with Reinforcement Learning. Aerospace 2022, 9, 847.

- Nilsson, J.; Unger, J.; Eilertsen, G. Self-Prioritizing Multiagent Reinforcement Learning for Conflict Resolution in Air Traffic Control with Limited Instructions. Aerospace 2025, 12, 88.

- Venturini, F.; Mason, F.; Pase, F.; Chiariotti, F.; Testolin, A.; Zanella, A.; Zorzi, M. Distributed Reinforcement Learning for Flexible and Efficient UAV Swarm Control. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 955–969.

- Hu, J.; Yang, X.; Wang, W.; Wei, P.; Ying, L.; Liu, Y. Obstacle Avoidance for UAS in Continuous Action Space Using Deep Reinforcement Learning. IEEE Access 2022, 10, 90623–90634.

- Huang, S.; Zhang, H.; Huang, Z. Multi-UAV Collision Avoidance using Multiagent Reinforcement Learning with Counterfactual Credit Assignment. arXiv 2022.

- Zhang, J.; Zhang, H.; Zhou, J.; Hua, M.; Zhong, G.; Liu, H. Adaptive Collision Avoidance for Multiple UAVs in Urban Environments. Drones 2023, 7, 491.

- Chen, S.; Evans, A.D.; Brittain, M.; Wei, P. Integrated Conflict Management for UAM with Strategic Demand Capacity Balancing and Learning-based Tactical Deconfliction. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10049–10061.

- Huang, C.; Petrunin, I.; Tsourdos, A. Strategic Conflict Management using Recurrent Multiagent Reinforcement Learning for Urban Air Mobility Operations Considering Uncertainties. J. Intell. Robot. Syst. Theory Appl. 2023, 107, 21.

- Zhang, Y.; Ding, M.; Yuan, Y.; Zhang, J.; Yang, Q.; Shi, G.; Jiang, J. Large-scale UAV swarm path planning based on mean-field reinforcement learning. Chin. J. Aeronaut. 2025, 38, 103484.

- Li, Y.; Li, C.; Chen, J.; Roinou, C. Energy-Aware Multiagent Reinforcement Learning for Collaborative Execution in Mission-Oriented Drone Networks. In Proceedings of the 2022 International Conference on Computer Communications and Networks (ICCCN), Honolulu, HI, USA, 25–28 July 2022.

- Liu, Y.; Li, X.; Wang, J.; Wei, F.; Yang, J. Reinforcement-Learning-Based Multi-UAV Cooperative Search for Moving Targets in 3D Scenarios. Drones 2024, 8, 378.

- Wang, B.; Li, S.; Gao, X.; Xie, T. UAV Swarm Confrontation Using Hierarchical Multiagent Reinforcement Learning. Int. J. Aerosp. Eng. 2021, 2021, 1–12.

- Pang, J.; He, J.; Mohamed, N.M.A.A.; Lin, C.; Zhang, Z.; Hao, X. A Hierarchical Reinforcement Learning Framework for Multi-UAV Combat Using Leader-Follower Strategy. Knowledge-Based Syst. 2025, 316, 113387.

- Ouahouah, S.; Bagaa, M.; Prados-Garzon, J.; Taleb, T. Deep-Reinforcement-Learning-Based Collision Avoidance in UAV Environment. IEEE Internet Things J. 2022, 9, 4015–4030.

- Zhang, M.; Yan, C.; Dai, W.; Xiang, X.; Low, K.H. Tactical conflict resolution in urban airspace for unmanned aerial vehicles operations using attention-based deep reinforcement learning. Green Energy Intell. Transp. 2023, 2, 100107.

- Xie, Y.; Yu, C.; Zang, H.; Gao, F.; Tang, W.; Huang, J.; Wang, Y. Multi-UAV Formation Control with Static and Dynamic Obstacle Avoidance via Reinforcement Learning. arXiv 2024.

- Dorzhieva, E.; Baza, A.; Gupta, A.; Fedoseev, A.; Cabrera, M.A.; Karmanova, E.; Tsetserukou, D. DroneARchery: Human-Drone Interaction through Augmented Reality with Haptic Feedback and Multi-UAV Collision Avoidance Driven by Deep Reinforcement Learning. In Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, 17–21 October 2022.

- Aljalaud, F.; Kurdi, H.; Youcef-Toumi, K. Bio-Inspired Multi-UAV Path Planning Heuristics: A Review. Mathematics 2023, 11, 2356.

- Poudel, S.; Arafat, M.Y.; Moh, S. Bio-Inspired Optimization-Based Path Planning Algorithms in Unmanned Aerial Vehicles: A Survey. Sensors 2023, 23, 3051.

- Ali, Z.A.; Zhangang, H.; Zhengru, D. Path planning of multiple UAVs using MMACO and DE algorithm in dynamic environment. Meas. Control 2020, 56, 459–469.

- Nathan, R.J.A.A.; Kurmi, I.; Bimber, O. Drone swarm strategy for the detection and tracking of occluded targets in complex environments. Commun. Eng. 2023, 2, 12.

- Huang, S.; Zhang, H.; Huang, Z. E2CoPre: Energy Efficient and Cooperative Collision Avoidance for UAV Swarms with Trajectory Prediction. IEEE Trans. Intell. Transp. Syst. 2024, 25, 6951–6963.

- Ahmed, G.; Sheltami, T.; Mahmoud, A.; Yasar, A. IoD swarms collision avoidance via improved particle swarm optimization. Transp. Res. Part A Policy Pract. 2020, 142, 260–278.

- Bui, D.N.; Duong, T.N.; Phung, M.D. Ant Colony Optimization for Cooperative Inspection Path Planning Using Multiple Unmanned Aerial Vehicles. In Proceedings of the 2024 IEEE/SICE International Symposium on System Integration (SII), Ha Long, Vietnam, 8–11 January 2024.

- Ab Wahab, M.N.; Nazir, A.; Khalil, A.; Bhatt, B.; Noor, M.H.M.; Akbar, M.F.; Mohamed, A.S.A. Optimised path planning using Enhanced Firefly Algorithm for a mobile robot. PLoS ONE 2024, 19, e0308264.

- Teng, Z.; Dong, Q.; Zhang, Z.; Huang, S.; Zhang, W.; Wang, J.; Chen, X. An Improved Grey Wolf Optimizer Inspired by Advanced Cooperative Predation for UAV Shortest Path Planning. arXiv 2025.

- Wang, Z.-C.; Xu, T.-L.; Liu, F.; Wei, Y.-P. Artificial bee colony based optimization algorithm and its application on multi-drone path planning. AIP Adv. 2025, 15, 055306.

- Gong, W.; Lou, S.; Deng, L.; Yi, P.; Hong, Y. Efficient Multi-Target Localization Using Dynamic UAV Clusters. Sensors 2025, 25, 2857.

- Pehlivanoglu, Y.V.; Pehlivanoglu, P. An enhanced genetic algorithm for path planning of autonomous UAV in target coverage problems. Appl. Soft Comput. 2021, 112, 107796.

- Wu, Y.; Nie, M.; Ma, X.; Guo, Y.; Liu, X. Co-Evolutionary Algorithm-Based Multi-Unmanned Aerial Vehicle Cooperative Path Planning. Drones 2023, 7, 606.

- Kalaria, D.; Maheshwari, C.; Sastry, S. α-RACER: Real-Time Algorithm for Game-Theoretic Motion Planning and Control in Autonomous Racing using Near-Potential Function. arXiv 2024.

- Zhao, F.; Zeng, Y.; Han, B.; Fang, H.; Zhao, Z. Nature-inspired self-organizing collision avoidance for drone swarm based on reward-modulated spiking neural network. Patterns 2022, 3, 100611.

- Joshi, A.; Sanyal, S.; Roy, K. Real-Time Neuromorphic Navigation: Integrating Event-Based Vision and Physics-Driven Planning on a Parrot Bebop2 Quadrotor. arXiv 2024.

- Ahmadvand, R.; Sharif, S.S.; Banad, Y.M. Neuromorphic Digital-Twin-based Controller for Indoor Multi-UAV Systems Deployment. IEEE J. Indoor Seamless Position Navig. 2025, 3, 165–174.

- Stroobants, S.; De Wagter, C.; de Croon, G.C.H.E. Neuromorphic Attitude Estimation and Control. IEEE Robot. Autom. Lett. 2025, 10, 4858–4865.

- Salt, L.; Howard, D.; Indiveri, G.; Sandamirskaya, Y. Parameter Optimization and Learning in a Spiking Neural Network for UAV Obstacle Avoidance Targeting Neuromorphic Processors. IEEE Trans. Neural Networks Learn. Syst. 2019, 31, 3305–3318.

- Zanatta, L.; Barchi, F.; Manoni, S.; Tolu, S.; Bartolini, A.; Acquaviva, A. Exploring spiking neural networks for deep reinforcement learning in robotic tasks. Sci. Rep. 2024, 14, 1–15.

- Zheng, Y.; Wang, Y.; Wu, G.; Li, H.; Peng, J. Enhancing LGMD-based model for collision prediction via binocular structure. Front. Neurosci. 2023, 17, 1247227.

- Zeng, Y.; Zhao, D.; Zhao, F.; Shen, G.; Dong, Y.; Lu, E.; Zhang, Q.; Sun, Y.; Liang, Q.; Zhao, Y.; et al. BrainCog: A spiking neural network based, brain-inspired cognitive intelligence engine for brain-inspired AI and brain simulation. Patterns 2023, 4, 100789.

- EU 2021/664; A Regulatory Framework for the U-Space. Official Journal of the European Union: Luxembourg, 2021.

- ISO 21384-3; Unmanned Aircraft Systems Part 3: Operational Procedures. ISO: Geneva, Switzerland, 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chagas, F.S.; Ruseno, N.; Bechina, A.A.A. Artificial Intelligence Approaches for UAV Deconfliction: A Comparative Review and Framework Proposal. Automation 2025, 6, 54. https://doi.org/10.3390/automation6040054

Chagas FS, Ruseno N, Bechina AAA. Artificial Intelligence Approaches for UAV Deconfliction: A Comparative Review and Framework Proposal. Automation. 2025; 6(4):54. https://doi.org/10.3390/automation6040054

Chicago/Turabian Style: Chagas, Fabio Suim, Neno Ruseno, and Aurilla Aurelie Arntzen Bechina. 2025. "Artificial Intelligence Approaches for UAV Deconfliction: A Comparative Review and Framework Proposal" Automation 6, no. 4: 54. https://doi.org/10.3390/automation6040054

APA Style: Chagas, F. S., Ruseno, N., & Bechina, A. A. A. (2025). Artificial Intelligence Approaches for UAV Deconfliction: A Comparative Review and Framework Proposal. Automation, 6(4), 54. https://doi.org/10.3390/automation6040054