Automatic Irrigation System Based on Computer Vision and an Artificial Intelligence Technique Using Raspberry Pi

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

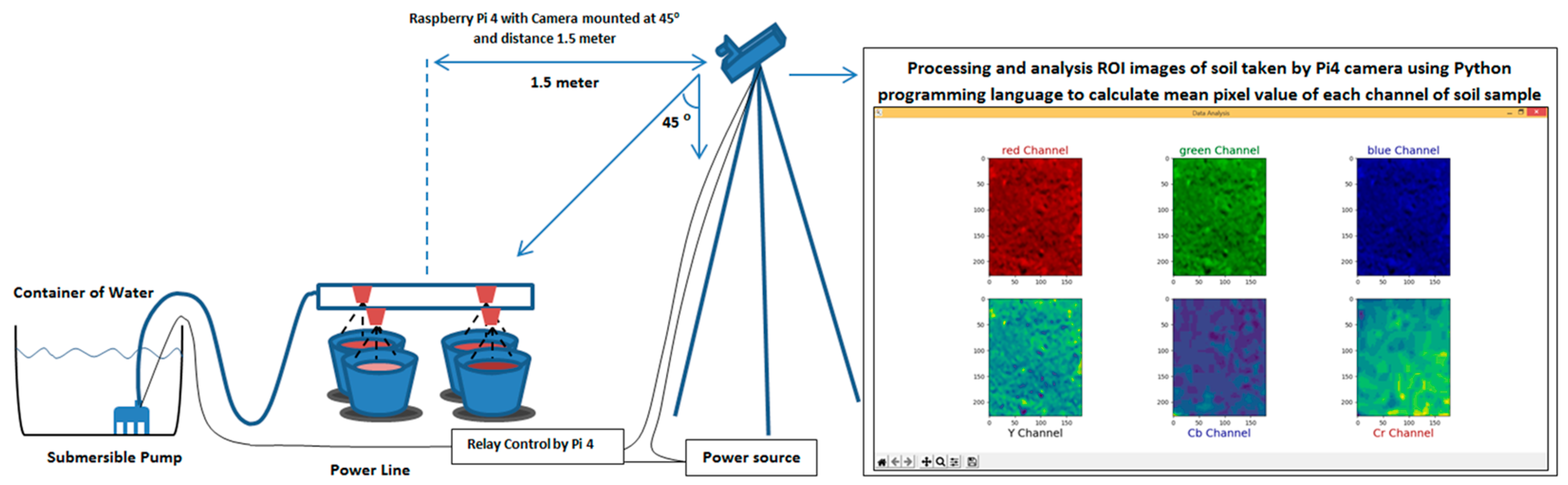

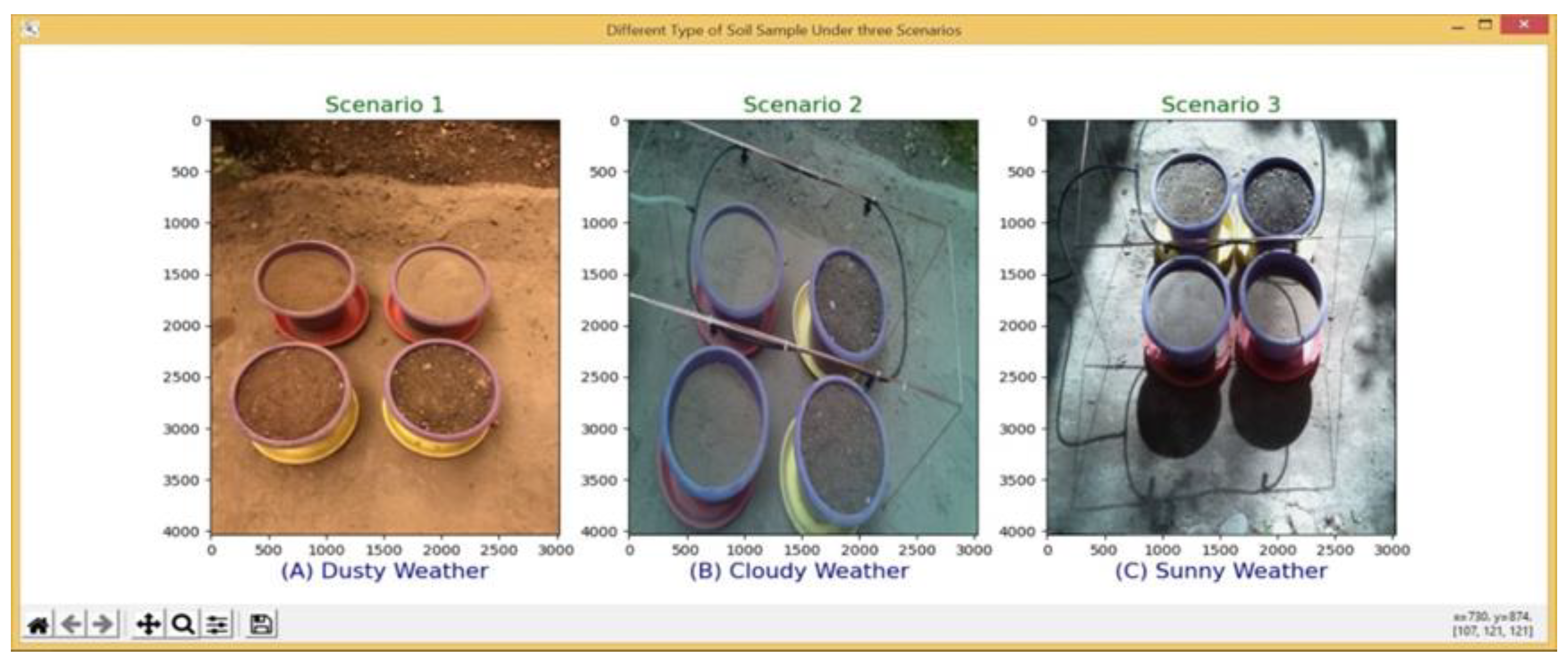

3.1. Data Collection and Experimental Setup

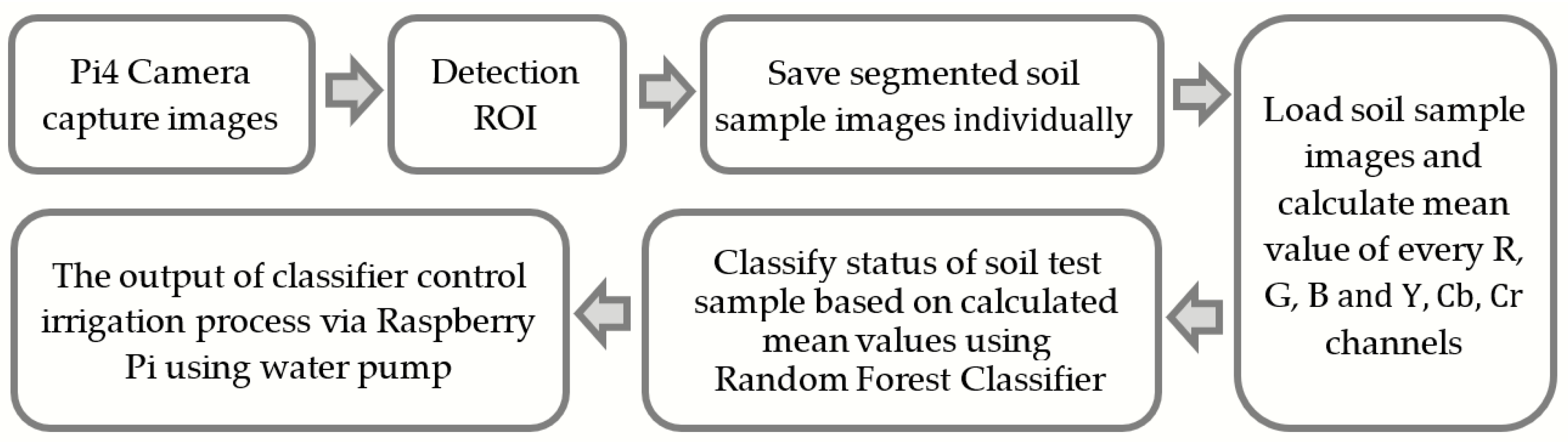

3.2. System Framework and Hardware Design

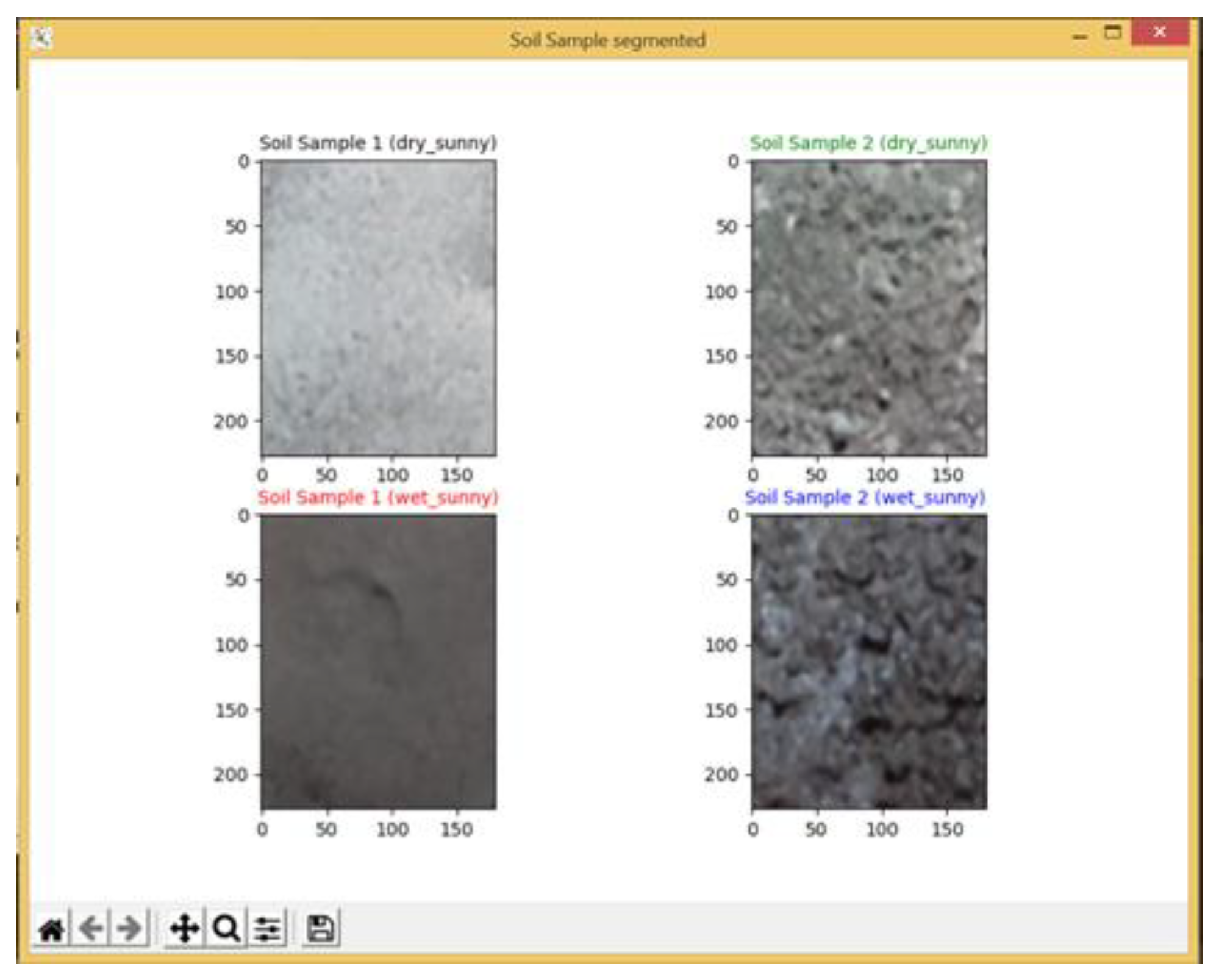

3.2.1. Soil Image Dataset

3.2.2. Soil Image Analysis

3.2.3. Random Forest Classifier Model

- Drawing M_tree bootstrap samples (random samples drawn with replacement) from the training data.

- For each bootstrap sample, growing an un-pruned classification tree.

- At each internal node, randomly selecting a subset of the N predictors and determining the best split using only those predictors.

- Saving the tree as-is, alongside the trees built so far (no cost-complexity pruning is performed).

- Predicting new data by aggregating the predictions of the M_tree trees (by majority vote for classification).
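The five steps above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation. It assumes numeric features, threshold splits scored with the Gini impurity (as in CART), a per-node subset of `m_try` randomly chosen predictors (`m_try` is a hypothetical name for the subset size), and majority voting across the trees. All function names are hypothetical. In practice, a library implementation such as scikit-learn's `RandomForestClassifier` would normally be used; its `n_estimators` and `max_features` parameters play the roles of the number of trees and `m_try`.

```python
import random
from collections import Counter
from typing import List, Optional, Sequence

Row = Sequence[float]


class Node:
    """A tree node: a leaf if `feature` is None, otherwise an internal split."""

    def __init__(self, label: Optional[int] = None, feature: Optional[int] = None,
                 threshold: float = 0.0, left: Optional["Node"] = None,
                 right: Optional["Node"] = None) -> None:
        self.label, self.feature, self.threshold = label, feature, threshold
        self.left, self.right = left, right


def gini(labels: List[int]) -> float:
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels: List[int]) -> int:
    """Most frequent label (used for leaves and for the forest vote)."""
    return Counter(labels).most_common(1)[0][0]


def grow_tree(X: List[Row], y: List[int], m_try: int, rng: random.Random) -> Node:
    """Grow an un-pruned tree; each node considers only m_try random predictors."""
    if len(set(y)) == 1:  # pure node: stop here, and never prune afterwards
        return Node(label=y[0])
    candidates = rng.sample(range(len(X[0])), m_try)  # random subset of the N predictors
    best = None  # (weighted impurity, feature, threshold, left indices, right indices)
    for f in candidates:
        for t in sorted({row[f] for row in X}):
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini([y[i] for i in left])
                     + len(right) * gini([y[i] for i in right])) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    if best is None:  # no usable split among the sampled predictors
        return Node(label=majority(y))
    _, f, t, left, right = best
    return Node(feature=f, threshold=t,
                left=grow_tree([X[i] for i in left], [y[i] for i in left], m_try, rng),
                right=grow_tree([X[i] for i in right], [y[i] for i in right], m_try, rng))


def predict_tree(node: Node, row: Row) -> int:
    """Follow the splits from the root down to a leaf."""
    while node.feature is not None:
        node = node.left if row[node.feature] <= node.threshold else node.right
    return node.label


def fit_forest(X: List[Row], y: List[int], m_tree: int, m_try: int,
               seed: int = 0) -> List[Node]:
    """Train m_tree trees, each on its own bootstrap sample (drawn with replacement)."""
    if not 1 <= m_try <= len(X[0]):
        raise ValueError("m_try must be between 1 and the number of predictors")
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(m_tree):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(grow_tree([X[i] for i in idx], [y[i] for i in idx], m_try, rng))
    return forest


def predict_forest(forest: List[Node], row: Row) -> int:
    """Aggregate the individual tree predictions by majority vote."""
    return majority([predict_tree(tree, row) for tree in forest])


if __name__ == "__main__":
    # Made-up two-feature toy data with two hypothetical classes, 0 and 1.
    X = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]]
    y = [0, 0, 0, 1, 1, 1]
    forest = fit_forest(X, y, m_tree=25, m_try=1)
    print(predict_forest(forest, [0.0, 0.0]), predict_forest(forest, [1.1, 1.1]))
```

Each tree grows until its leaves are pure and is kept without a pruning pass, mirroring the "un-pruned" and "saving the tree as-is" steps. The diversity between trees comes from two sources: the bootstrap sample and the random predictor subset drawn at each node.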

3.3. Evaluation Metrics

4. Experimental Results

4.1. Hardware

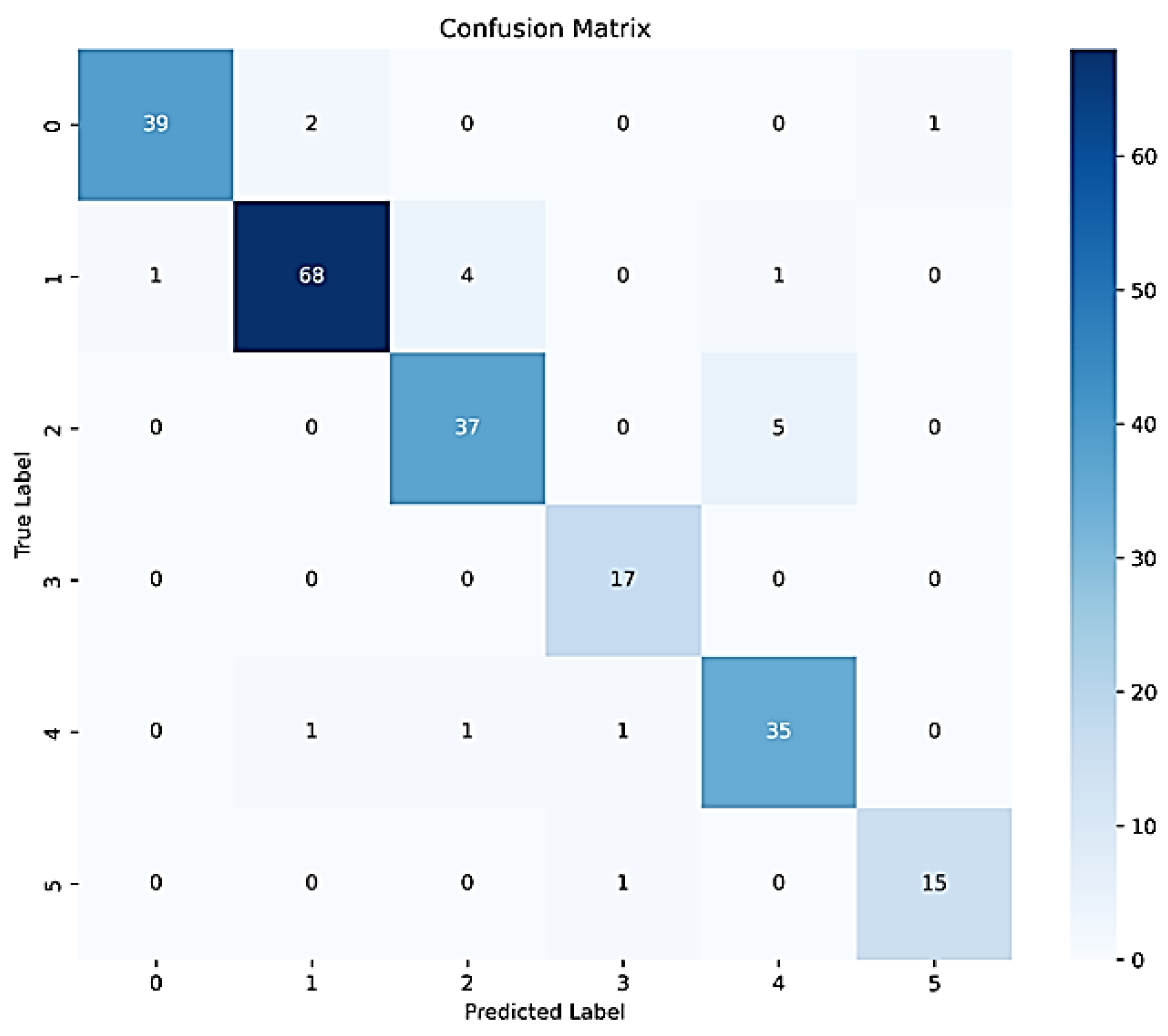

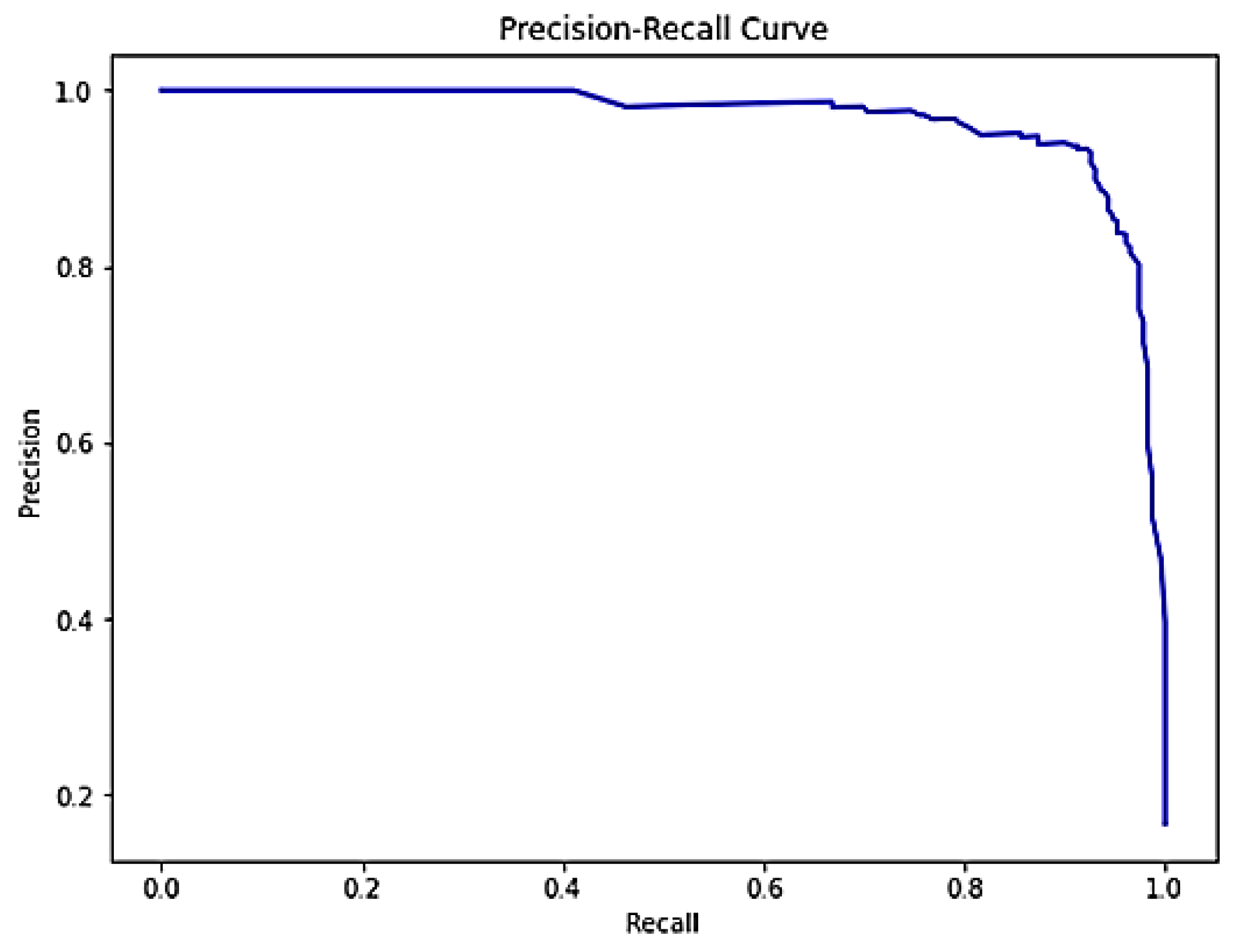

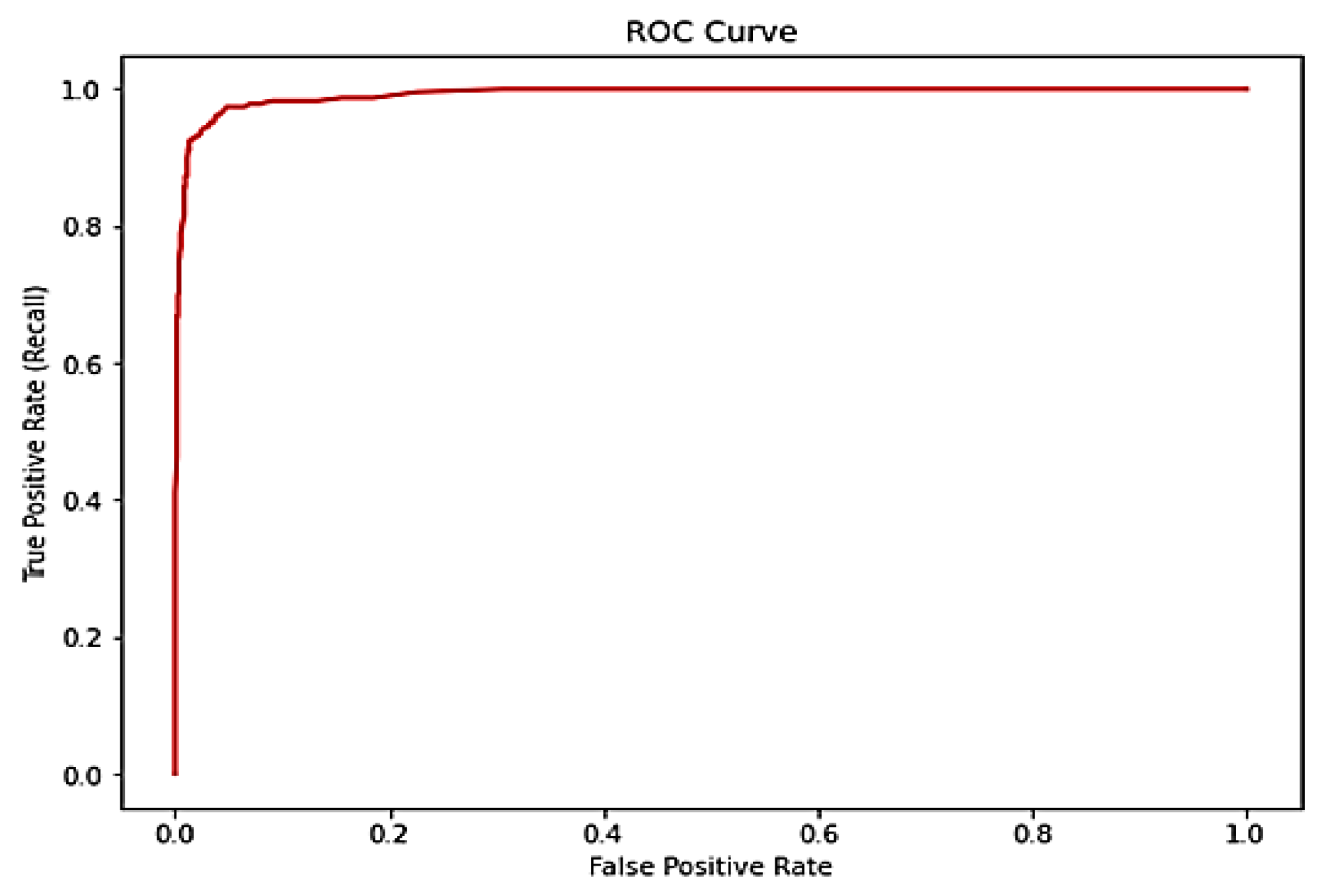

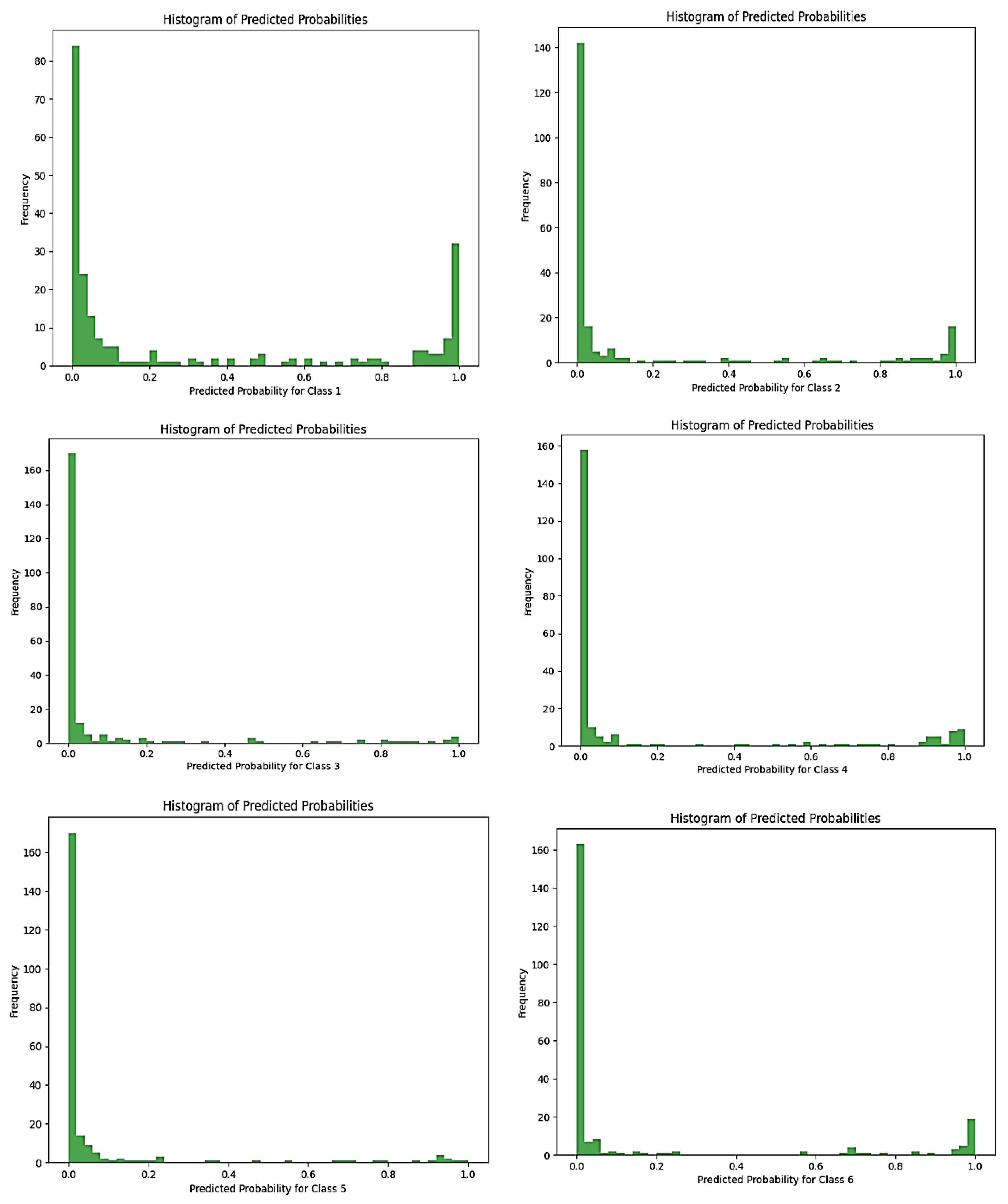

4.2. Evaluation of the RF Classifier Model

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| No. of Soil Types per Scenario | Exp. Scenario | Conditions |
|---|---|---|
| 1, 2 | Dusty | Dry |
| 1, 2 | Dusty | Wet |
| 1, 2 | Cloudy | Dry |
| 1, 2 | Cloudy | Wet |
| 1, 2 | Sunny | Dry |
| 1, 2 | Sunny | Wet |

| Soil Type | Exp. Scenario | Condition | Threshold Values for RGB and YCbCr Channels (R, G, B, Y, Cb, Cr) |
|---|---|---|---|
| Peat moss soil | Dusty | Dry | (146, 90, 57, 87, 106, 161) |
| Sandy soil | Dusty | Dry | (213, 140, 92, 134, 98, 172) |
| Peat moss soil | Dusty | Wet | (77, 9, 2, 15, 118, 163) |
| Sandy soil | Dusty | Wet | (45, 39, 6, 30, 110, 136) |
| Peat moss soil | Cloudy | Dry | (99.58, 96.39, 95.27, 96.43, 127.20, 129.70) |
| Sandy soil | Cloudy | Dry | (133.89, 144.22, 146.62, 143.74, 129.98, 122.50) |
| Peat moss soil | Cloudy | Wet | (51.98, 56.20, 55.99, 55.68, 128.28, 126.09) |
| Sandy soil | Cloudy | Wet | (84.41, 90.64, 85.24, 88.43, 125.78, 125.86) |
| Peat moss soil | Sunny | Dry | (126.38, 127.12, 125.08, 126.3, 126.96, 127.99) |
| Sandy soil | Sunny | Dry | (96.46, 99.26, 102.29, 99.88, 129.82, 126.18) |
| Peat moss soil | Sunny | Wet | (84.70, 83.86, 87.57, 85.06, 129.84, 127.79) |
| Sandy soil | Sunny | Wet | (90.74, 87.47, 86.31, 87.45, 126.94, 129.67) |
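Each threshold entry in the table above lists six channel values in the order R, G, B, Y, Cb, Cr. The exact RGB-to-YCbCr conversion used to obtain them is not stated in this section. A common choice is the full-range ITU-R BT.601 transform used by JPEG/JFIF (Y = 0.299R + 0.587G + 0.114B, Cb = 128 - 0.168736R - 0.331264G + 0.5B, Cr = 128 + 0.5R - 0.418688G - 0.081312B). The sketch below assumes that transform. It also assumes, hypothetically, that a six-value vector in the table's order is formed by averaging each channel over all pixels of a soil image. It is an illustration only and is not expected to reproduce the tabulated numbers exactly.

```python
from typing import Iterable, List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), each in 0..255


def _clip(v: float) -> float:
    """Clamp a channel value to the 8-bit range [0, 255]."""
    return max(0.0, min(255.0, v))


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Full-range ITU-R BT.601 (JPEG/JFIF) RGB -> YCbCr conversion (assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return _clip(y), _clip(cb), _clip(cr)


def channel_means(pixels: Iterable[Pixel]) -> List[float]:
    """Hypothetical feature vector: mean R, G, B, Y, Cb, Cr over all pixels."""
    sums = [0.0] * 6
    count = 0
    for r, g, b in pixels:
        y, cb, cr = rgb_to_ycbcr(r, g, b)
        for i, v in enumerate((r, g, b, y, cb, cr)):
            sums[i] += v
        count += 1
    if count == 0:
        raise ValueError("image contains no pixels")
    return [s / count for s in sums]


if __name__ == "__main__":
    # A tiny synthetic "image" of two brownish pixels (made-up values).
    image = [(100, 96, 95), (99, 97, 96)]
    print([round(v, 2) for v in channel_means(image)])
```

If OpenCV is used on the Raspberry Pi instead, note that `cv2.cvtColor` with `cv2.COLOR_RGB2YCrCb` (or `COLOR_BGR2YCrCb` for frames read in OpenCV's default BGR order) returns the channels in Y, Cr, Cb order, so they must be reordered to match the table.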

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Oudah, M.; Al-Naji, A.; AL-Janabi, T.Y.; Namaa, D.S.; Chahl, J. Automatic Irrigation System Based on Computer Vision and an Artificial Intelligence Technique Using Raspberry Pi. Automation 2024, 5, 90-105. https://doi.org/10.3390/automation5020007

Oudah M, Al-Naji A, AL-Janabi TY, Namaa DS, Chahl J. Automatic Irrigation System Based on Computer Vision and an Artificial Intelligence Technique Using Raspberry Pi. Automation. 2024; 5(2):90-105. https://doi.org/10.3390/automation5020007

Chicago/Turabian Style: Oudah, Munir, Ali Al-Naji, Thooalnoon Y. AL-Janabi, Dhuha S. Namaa, and Javaan Chahl. 2024. "Automatic Irrigation System Based on Computer Vision and an Artificial Intelligence Technique Using Raspberry Pi" Automation 5, no. 2: 90-105. https://doi.org/10.3390/automation5020007

APA Style: Oudah, M., Al-Naji, A., AL-Janabi, T. Y., Namaa, D. S., & Chahl, J. (2024). Automatic Irrigation System Based on Computer Vision and an Artificial Intelligence Technique Using Raspberry Pi. Automation, 5(2), 90-105. https://doi.org/10.3390/automation5020007