Detection of Hand Poses with a Single-Channel Optical Fiber Force Myography Sensor: A Proof-of-Concept Study

Abstract

1. Introduction

Force Myography

2. Materials and Methods

2.1. Hardware Design

2.2. Force Transducer

2.3. Experimental Procedure

2.3.1. Evaluated Postures

2.3.2. Measurement Protocol

2.4. Classification of Hand Postures

2.4.1. Signal Processing

2.4.2. Classification System

3. Results

3.1. Analysis of FMG Signals

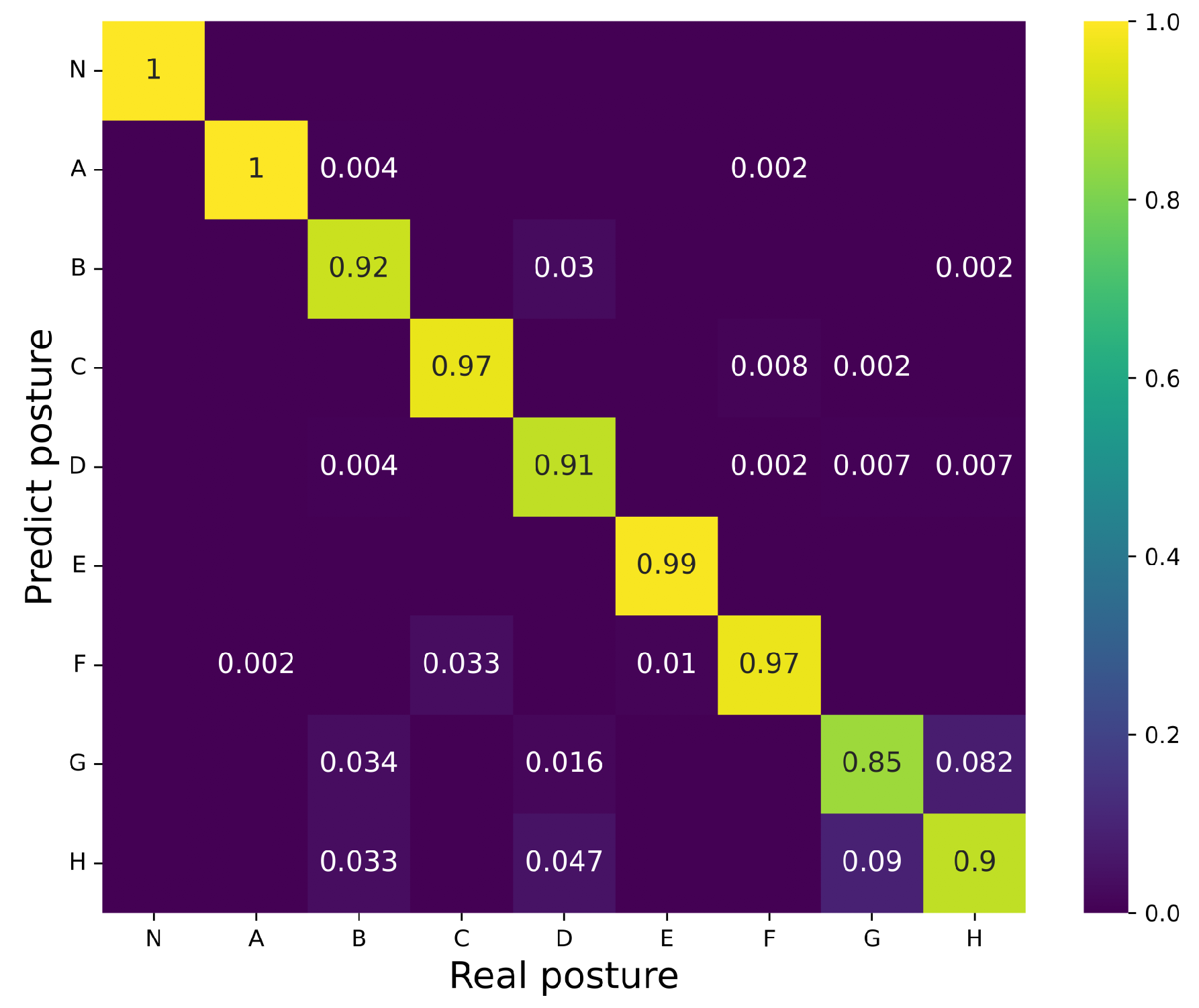

3.2. Identification of Hand Poses

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mastinu, E.; Engels, L.F.; Clemente, F.; Dione, M.; Sassu, P.; Aszmann, O.; Brånemark, R.; Håkansson, B.; Controzzi, M.; Wessberg, J.; et al. Neural feedback strategies to improve grasping coordination in neuromusculoskeletal prostheses. Sci. Rep. 2020, 10, 11793.

- Li, Q.; Gu, G.; Wang, L.; Song, R.; Qi, L. Using EMG signals to assess proximity of instruments to nerve roots during robot-assisted spinal surgery. Int. J. Med. Robot. 2022, 18, e2408.

- Lee, S.H.; Park, G.; Cho, D.Y.; Kim, H.Y.; Lee, J.-Y.; Kim, S.; Park, S.-B.; Shin, J.-H. Comparisons between end-effector and exoskeleton rehabilitation robots regarding upper extremity function among chronic stroke patients with moderate-to-severe upper limb impairment. Sci. Rep. 2020, 10, 1806.

- Kim, M.; Jeon, C.; Kim, J. A study on immersion and presence of a portable hand haptic system for immersive virtual reality. Sensors 2017, 17, 1141.

- Connolly, J.; Condell, J.; Curran, K.; Gardiner, P. Improving data glove accuracy and usability using a neural network when measuring finger joint range of motion. Sensors 2022, 22, 2228.

- Li, R.; Liu, Z.; Tan, J. A survey on 3D hand pose estimation: Cameras, methods, and datasets. Pattern Recogn. 2019, 93, 251–272.

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001.

- Cho, E.; Chen, R.; Merhi, L.-K.; Xiao, Z.; Pousett, B.; Menon, C. Force myography to control robotic upper extremity prostheses: A feasibility study. Front. Bioeng. Biotechnol. 2016, 4, 18.

- Radmand, A.; Scheme, E.; Englehart, K. High-density force myography: A possible alternative for upper-limb prosthetic control. J. Rehabil. Res. Dev. 2016, 53, 443–456.

- Qi, J.; Jiang, G.; Li, G.; Sun, Y.; Tao, B. Surface EMG hand gesture recognition system based on PCA and GRNN. Neural Comput. Appl. 2020, 32, 6343–6351.

- Xiao, Z.G.; Menon, C. A review of force myography research and development. Sensors 2019, 19, 4557.

- Asfour, M.; Menon, C.; Jiang, X. A machine learning processing pipeline for reliable hand gesture classification of FMG signals with stochastic variance. Sensors 2021, 21, 1504.

- Nowak, M.; Eiband, T.; Ramírez, E.R.; Castellini, C. Action interference in simultaneous and proportional myocontrol: Comparing force- and electromyography. J. Neural Eng. 2020, 17, 026011.

- Jiang, S.; Gao, Q.; Liu, H.; Shull, P.B. A novel, co-located EMG-FMG-sensing wearable armband for hand gesture recognition. Sens. Actuat. A-Phys. 2020, 301, 111738.

- Schofield, J.S.; Evans, K.R.; Hebert, J.S.; Marasco, P.D.; Carey, J.P. The effect of biomechanical variables on force sensitive resistor error: Implications for calibration and improved accuracy. J. Biomech. 2016, 49, 786–792.

- Roriz, P.; Carvalho, L.; Frazão, O.; Santos, J.L.; Simões, J.A. From conventional sensors to fibre optic sensors for strain and force measurements in biomechanics applications: A review. J. Biomech. 2014, 47, 1251–1261.

- Wu, Y.T.; Gomes, M.K.; Silva, W.H.; Lazari, P.M.; Fujiwara, E. Integrated Optical Fiber Force Myography Sensor as Pervasive Predictor of Hand Postures. Biomed. Eng. Comput. Biol. 2020, 11, 1179597220912825.

- Ribas Neto, A.; Fajardo, J.; Silva, W.H.A.; Gomes, M.K.; Castro, M.C.F.; Fujiwara, E.; Rohmer, E. Design of a tendon-actuated robotic glove integrated with optical fiber force myography sensor. Automation 2021, 2, 187–201.

- Hellara, H.; Djemal, A.; Barioul, R.; Ramalingame, R.; Atitallah, B.B.; Fricke, E.; Kanoun, O. Classification of dynamic hand gestures using multi sensors combinations. In Proceedings of the IEEE 9th International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA), Chemnitz, Germany, 15–17 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–5.

- Li, X.; Zheng, Y.; Liu, Y.; Tian, L.; Fang, P.; Cao, J.; Li, G. A novel motion recognition method based on force myography of dynamic muscle contractions. Front. Neurosci. 2021, 15, 783539.

- Fujiwara, E.; Gomes, M.K.; Wu, Y.T.; Suzuki, C.K. Identification of dynamic hand gestures with force myography. In Proceedings of the 32nd International Symposium on Micro-NanoMechatronics and Human Science, Nagoya, Japan, 5–8 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5.

- Zajac, F.E. Muscle and tendon: Properties, models, scaling, and applications in biomechanics and motor control. Crit. Rev. Biomed. Eng. 1989, 17, 359–411.

- Connan, M.; Ruiz Ramírez, E.; Vodermayer, B.; Castellini, C. Assessment of a wearable force- and electromyography device and comparison of the related signals for myocontrol. Front. Neurorobot. 2016, 10, 17.

- Dalley, S.A.; Varol, H.A.; Goldfarb, M. A method for the control of multigrasp myoelectric prosthetic hands. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 58–67.

- Fajardo, J.; Ferman, V.; Cardona, D.; Maldonado, G.; Lemus, A.; Rohmer, E. Galileo Hand: An anthropomorphic and affordable upper-limb prosthesis. IEEE Access 2020, 8, 81365–81377.

- Wang, W.; Yiu, H.H.P.; Li, W.J.; Roy, V.A.L. The principle and architecture of optical stress sensors and the progress on the development of microbend optical sensors. Adv. Opt. Mater. 2021, 9, 2001693.

- Fujiwara, E.; Suzuki, C.K. Optical fiber force myography sensor for identification of hand postures. J. Sens. 2018, 2018, 8940373.

- Pizzolato, S.; Tagliapietra, L.; Cognolato, M.; Reggiani, M.; Müller, H.; Atzori, M. Comparison of six electromyography acquisition setups on hand movement classification tasks. PLoS ONE 2017, 12, e0186132.

- Chollet, F. Keras. Available online: https://github.com/fchollet/keras (accessed on 16 September 2022).

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recogn. 2018, 77, 354–377.

- Dubey, S.R.; Singh, S.K.; Chaudhuri, B.B. Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing 2022, 503, 92–108.

- Fawcett, T. An introduction to ROC analysis. Pattern Recogn. Lett. 2006, 27, 861–874.

- Rodriguez, J.D.; Perez, A.; Lozano, J.A. Sensitivity analysis of k-fold cross validation in prediction error estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 569–575.

- Li, N.; Yang, D.; Jiang, L.; Liu, H.; Cai, H. Combined use of FSR sensor array and SVM classifier for finger motion recognition based on pressure distribution map. J. Bionic Eng. 2012, 9, 39–47.

- Jiang, X.; Merhi, L.-K.; Xiao, Z.G.; Menon, C. Exploration of force myography and surface electromyography in hand gesture classification. Med. Eng. Phys. 2017, 41, 63–73.

- Fujiwara, E.; Rodrigues, M.S.; Gomes, M.K.; Wu, Y.T.; Suzuki, C.K. Identification of hand gestures using the inertial measurement unit of a smartphone: A proof-of-concept study. IEEE Sens. J. 2021, 21, 13916–13923.

- Jiang, X.; Merhi, L.-K.; Menon, C. Force Exertion Affects Grasp Classification Using Force Myography. IEEE Trans. Hum. Mach. Syst. 2018, 48, 219–226.

- Lei, G.; Zhang, S.; Fang, Y.; Wang, Y.; Zhang, X. Investigation on the Sampling Frequency and Channel Number for Force Myography Based Hand Gesture Recognition. Sensors 2021, 21, 3872.

- Ahmadizadeh, C.; Merhi, L.K.; Pousett, B.; Sangha, S.; Menon, C. Toward Intuitive Prosthetic Control: Solving Common Issues Using Force Myography, Surface Electromyography, and Pattern Recognition in a Pilot Case Study. IEEE Robot. Autom. Mag. 2017, 24, 102–111.

| Ref | No. of transducers (type) | Classifier | Accuracy (no. of gestures) |
|---|---|---|---|
| [19] | 15 (strain/pressure sensors, 9-axis IMU, electrodes) | Random forest | 99.5% (10) |
| [20] | 8 (piezoelectric sensors) | k-nearest neighbor | 95.5% (6) |
| [36] | 1 (6-axis IMU) | Correlator with competitive layer | 96.6% (4) |
| This work | 1 (optical fiber) | CNN | 94.0% (8) |
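The rows of the table were evaluated on different numbers of gestures (4 to 10), so each raw accuracy sits above a different chance level. The sketch below is not part of the study; it rescales each reported accuracy with a kappa-style chance correction, assuming balanced classes so that the chance level for N gestures is 1/N. The function name `chance_corrected` and the `ROWS` list are illustrative, and the input values are copied from the table.

```python
"""Chance-corrected accuracy for the rows of the comparison table.

Assumption (not from the study): classes are balanced, so the chance
level for N gestures is 1/N. The kappa-style score rescales accuracy so
that 0 means chance level and 1 means perfect classification.
"""

# (reference, reported accuracy, number of gestures), copied from the table
ROWS = [
    ("[19]", 0.995, 10),
    ("[20]", 0.955, 6),
    ("[36]", 0.966, 4),
    ("This work", 0.940, 8),
]


def chance_corrected(accuracy: float, n_classes: int) -> float:
    """Return (accuracy - 1/N) / (1 - 1/N) for N balanced classes."""
    if n_classes < 2:
        raise ValueError("need at least two classes")
    chance = 1.0 / n_classes
    return (accuracy - chance) / (1.0 - chance)


if __name__ == "__main__":
    for ref, acc, n in ROWS:
        print(f"{ref:>10}: accuracy {acc:.1%}, chance {1 / n:.1%}, "
              f"chance-corrected {chance_corrected(acc, n):.3f}")
```

The correction only adjusts for the number of gestures; it does not account for other differences between the cited studies, such as the number of subjects, the acquisition protocol, or the validation scheme.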

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gomes, M.K.; da Silva, W.H.A.; Ribas Neto, A.; Fajardo, J.; Rohmer, E.; Fujiwara, E. Detection of Hand Poses with a Single-Channel Optical Fiber Force Myography Sensor: A Proof-of-Concept Study. Automation 2022, 3, 622-632. https://doi.org/10.3390/automation3040031
