Cloud-Edge Collaborative Inference-Based Smart Detection Method for Small Objects

Abstract

1. Introduction

- (1)

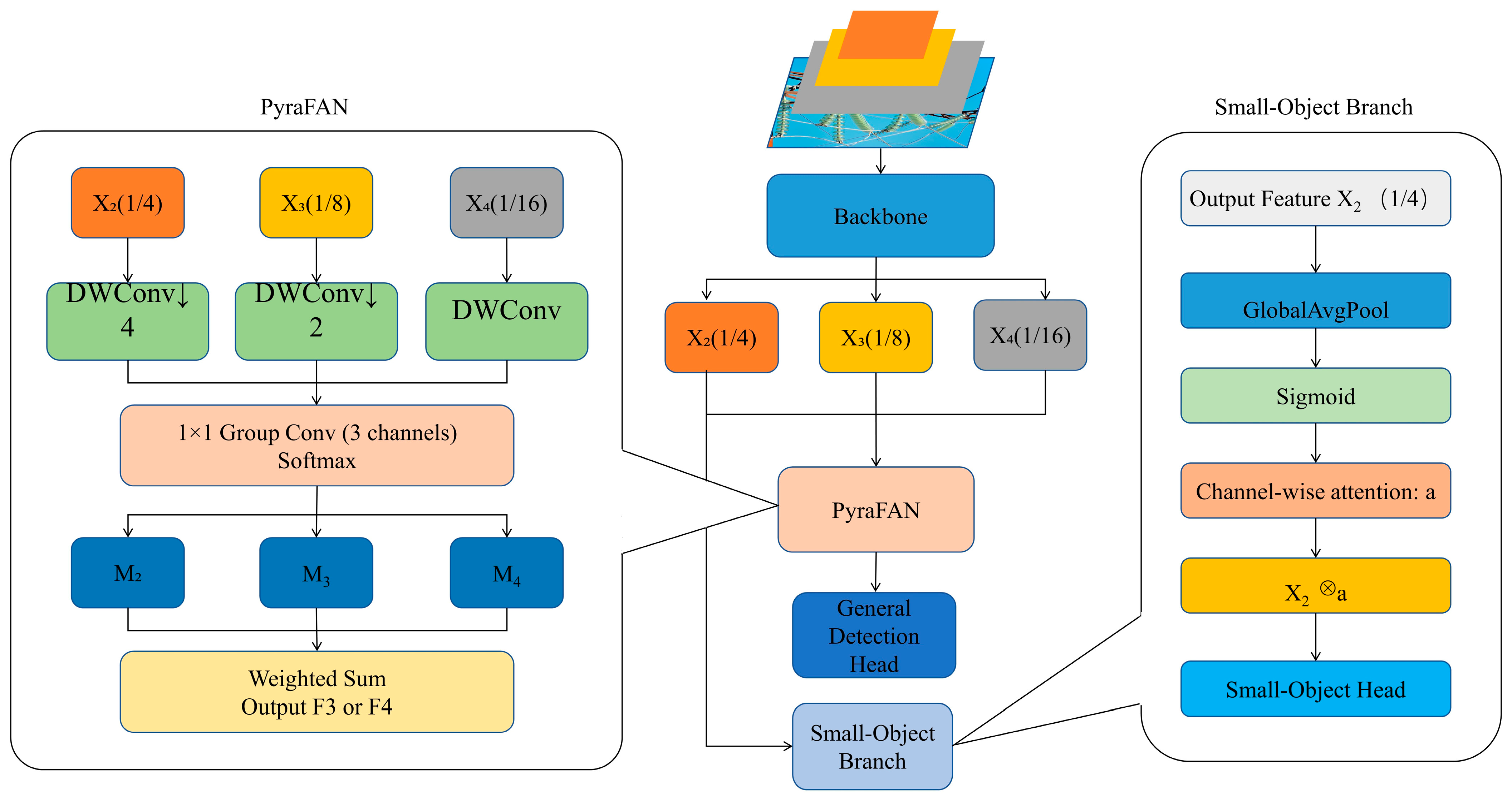

- Proposed PyraFAN Module: We introduce PyraFAN, a novel feature fusion module that integrates multi-level feature pyramids with attention mechanisms. This lightweight module is specifically designed to address the challenges of small object detection by enhancing feature representation and improving detection accuracy.

- (2)

- Cloud-Edge Collaborative Framework: We propose an innovative cloud-edge collaborative inference framework that optimizes the balance between computational efficiency and detection accuracy. This framework leverages the strengths of both cloud and edge computing to improve the robustness and real-time performance of small object detection systems.

- (3)

- Graph-Guided Distillation Method: We develop a graph-guided knowledge distillation method for training edge models. This method enhances the detection capabilities of edge devices by effectively transferring knowledge from cloud models while considering spatial, task, and model similarities among edge nodes.

2. Related Work

3. Design of Pyramid-Fusion Attention Network for Small Object Detection (PyraFAN)

4. Cloud-Edge Collaborative Inference Based Smart Detection Method

4.1. Design of the Proposed Method

4.2. The Details of the Cloud-Edge Collaborative Inference Method

- 1. Dynamic coordination and task diversion for small objects: Instead of a static task division, this architecture establishes a dynamic coordination mechanism. End devices (e.g., AR glasses and cameras) serve as sensing terminals and focus on collecting raw video streams. Edge nodes handle real-time response: they perform temporal correlation analysis on consecutive frames and dynamically extract key-frames with the inter-frame difference method, filtering out redundant information. When the inter-frame difference exceeds a preset threshold, usually set to 1.5 or 2, the frame is marked as a key-frame. Detection results produced on the edge are treated as ambiguous samples when they satisfy any of the following criteria:

- (a) Low-confidence samples: The confidence score that the edge model assigns to a detection is below a preset confidence threshold (e.g., 0.5). The threshold can be adjusted according to the signal-to-noise ratio of the actual scenario.

- (b) Physically small samples: The pixel area of the detected object's bounding box is smaller than a preset size threshold. Such objects have weak features and, because of their small physical size, are difficult for the edge model to classify.

- (c) Unstable detection samples: Using temporal analysis, if an object at roughly the same spatial position is intermittently detected and missed across N consecutive frames (N is a preset integer, e.g., N = 5), it is also regarded as an ambiguous sample. Such unstable results require deeper consistency verification by the cloud.

- 2. Cloud-based in-depth analysis and closed-loop knowledge gain for small objects: With its greater computing power, the cloud performs a refined secondary analysis of the received data. The cloud model re-inspects the data with high precision:

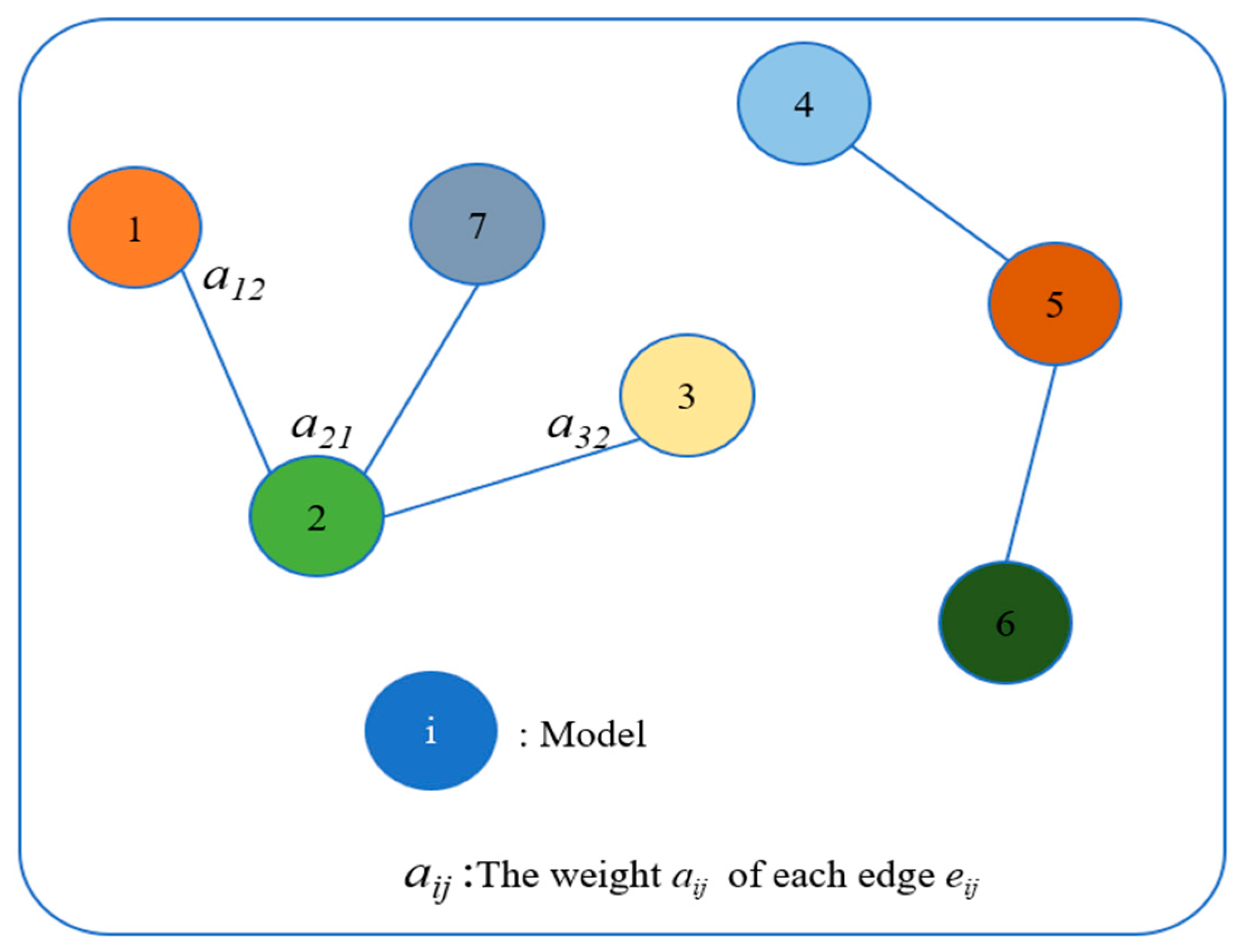
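The edge-side filtering in item 1 can be illustrated with a short Python sketch. It is not the authors' implementation and rests on several assumptions: the inter-frame difference is taken as the mean absolute pixel difference between consecutive grayscale frames, the size threshold for criterion (b) is a hypothetical 32 × 32 pixels (the COCO cutoff for small objects), and the per-object hit/miss history for criterion (c) is assumed to come from some upstream association step. The names `frame_difference`, `is_key_frame`, `is_ambiguous`, and `Detection` are illustrative. Only the thresholds 1.5, 0.5, and N = 5 are taken from the text.

```python
from dataclasses import dataclass

import numpy as np

# Values quoted in the text.
KEY_FRAME_THRESHOLD = 1.5   # threshold on the inter-frame difference
CONF_THRESHOLD = 0.5        # confidence threshold for criterion (a)
WINDOW_N = 5                # N consecutive frames for criterion (c)
# Hypothetical size threshold for criterion (b): COCO's "small" area cutoff.
AREA_THRESHOLD = 32 * 32


def frame_difference(prev: np.ndarray, curr: np.ndarray) -> float:
    """Assumed metric: mean absolute difference of two grayscale frames."""
    diff = curr.astype(np.float32) - prev.astype(np.float32)
    return float(np.mean(np.abs(diff)))


def is_key_frame(prev: np.ndarray, curr: np.ndarray,
                 threshold: float = KEY_FRAME_THRESHOLD) -> bool:
    """Mark the current frame as a key-frame when the difference exceeds the threshold."""
    return frame_difference(prev, curr) > threshold


@dataclass
class Detection:
    confidence: float
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    history: list[bool]  # hypothetical hit/miss flags at this location, oldest first


def is_ambiguous(det: Detection) -> bool:
    """Return True if the detection meets any of criteria (a)-(c) and should go to the cloud."""
    x1, y1, x2, y2 = det.box
    low_confidence = det.confidence < CONF_THRESHOLD            # criterion (a)
    small = (x2 - x1) * (y2 - y1) < AREA_THRESHOLD              # criterion (b)
    recent = det.history[-WINDOW_N:]
    # Criterion (c): within a full window of N frames, both hits and misses occur.
    unstable = len(recent) == WINDOW_N and any(recent) and not all(recent)
    return low_confidence or small or unstable


if __name__ == "__main__":
    prev = np.zeros((4, 4), dtype=np.uint8)
    curr = np.full((4, 4), 10, dtype=np.uint8)
    print(is_key_frame(prev, curr))  # True: mean difference 10.0 > 1.5
    print(is_ambiguous(Detection(0.9, (0, 0, 20, 20), [True] * 5)))  # True: area 400 < 1024
```

Keeping the three criteria as independent booleans combined with `or` mirrors the text, where meeting any single criterion is enough to classify a sample as ambiguous.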

4.3. Graph-Guided Distillation Among Edge Models

- (1) Spatial proximity: measured from the 2-D geolocations of the two edge nodes.

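- (2) Task relevance: the number of task categories shared by two models divided by the size of the larger of their two category sets, i.e., $R_{ij} = |C_i \cap C_j| / \max(|C_i|, |C_j|)$, where $C_i$ and $C_j$ denote the task-category sets of models $i$ and $j$.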

- (3) Model-feature similarity (AP-based performance vector):

- (a) Periodic Global Recalculation: System administrators can set a fixed recalculation period (e.g., weekly or monthly) according to operation and maintenance needs. At the end of each period, the system automatically evaluates all edge models on the unified benchmark dataset and re-runs the complete clustering process on the latest performance characterization vectors. This mode lets the entire edge network periodically adjust its collaborative relationships to slow changes in the environment or device state.

- (b) Event-driven Local Dynamic Adjustment: When the system detects a significant change in the performance of a single edge node's model, a local adjustment is triggered. Two situations require adjustment:
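Two of the node-similarity measures listed above can be sketched in Python. The task-relevance function follows the definition in item (2) directly: shared categories divided by the size of the larger category set. The AP-based similarity is an assumption: the text does not state how two performance vectors are compared, so cosine similarity over per-category AP values on the shared benchmark is used here purely for illustration. The function names `task_relevance` and `ap_similarity` are hypothetical.

```python
import math


def task_relevance(categories_i: set[str], categories_j: set[str]) -> float:
    """Shared task categories divided by the size of the larger category set (item (2))."""
    larger = max(len(categories_i), len(categories_j))
    if larger == 0:
        return 0.0  # neither model has any task category
    return len(categories_i & categories_j) / larger


def ap_similarity(ap_i: list[float], ap_j: list[float]) -> float:
    """Assumed metric: cosine similarity of per-category AP vectors on the same benchmark."""
    if len(ap_i) != len(ap_j):
        raise ValueError("AP vectors must cover the same benchmark categories")
    dot = sum(a * b for a, b in zip(ap_i, ap_j))
    norm = math.sqrt(sum(a * a for a in ap_i)) * math.sqrt(sum(b * b for b in ap_j))
    return dot / norm if norm > 0 else 0.0


if __name__ == "__main__":
    print(task_relevance({"car", "pedestrian", "bicycle"}, {"car", "pedestrian"}))
    print(ap_similarity([0.42, 0.31, 0.18], [0.40, 0.35, 0.10]))
```

For example, two nodes whose models detect {car, pedestrian, bicycle} and {car, pedestrian} share two categories out of a maximum of three, giving a task relevance of 2/3. Dividing by the larger set (rather than the union) keeps the measure at 1 only when both models cover exactly the same categories.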

5. Results and Discussion

5.1. Experimental Design

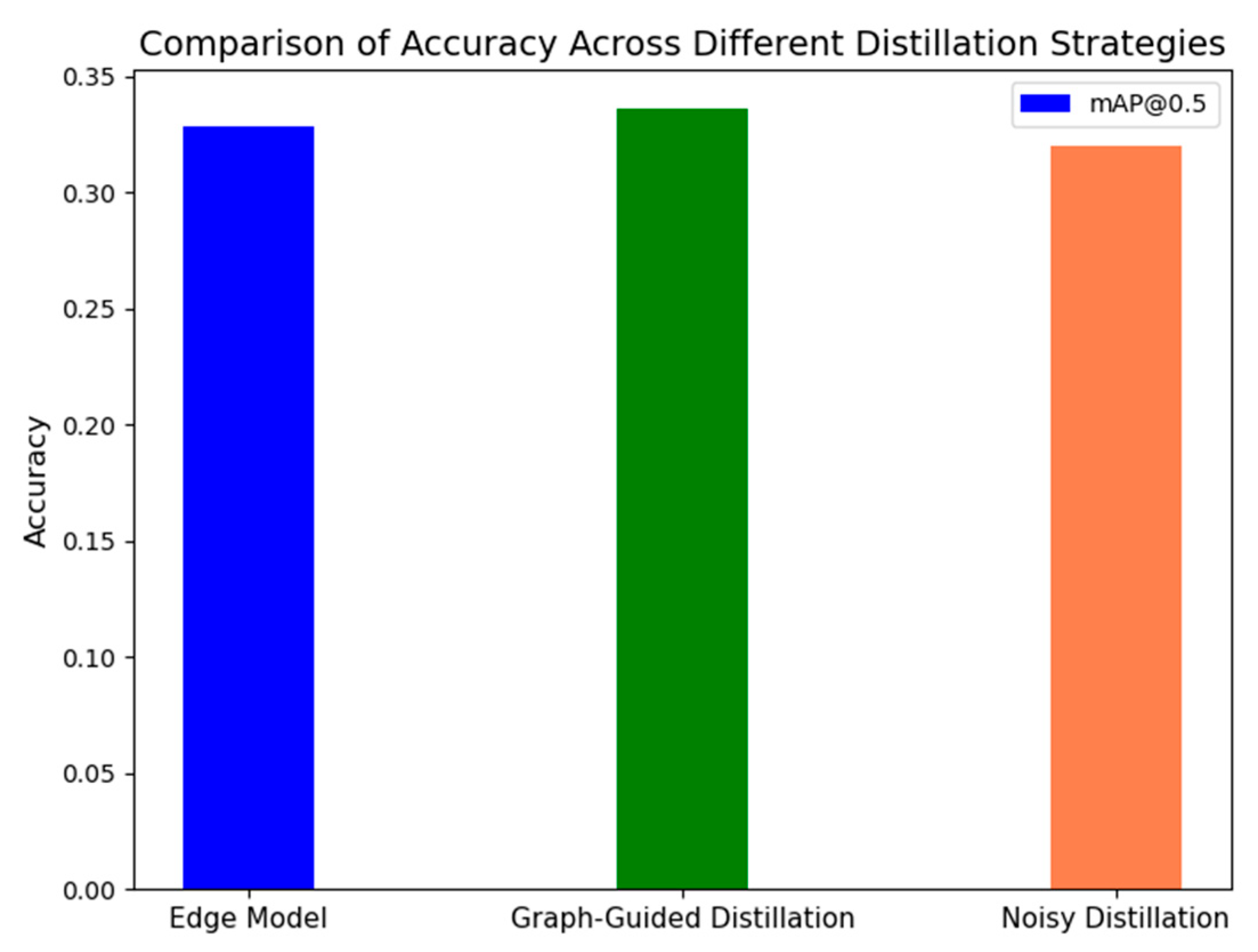

5.2. Detection Performance

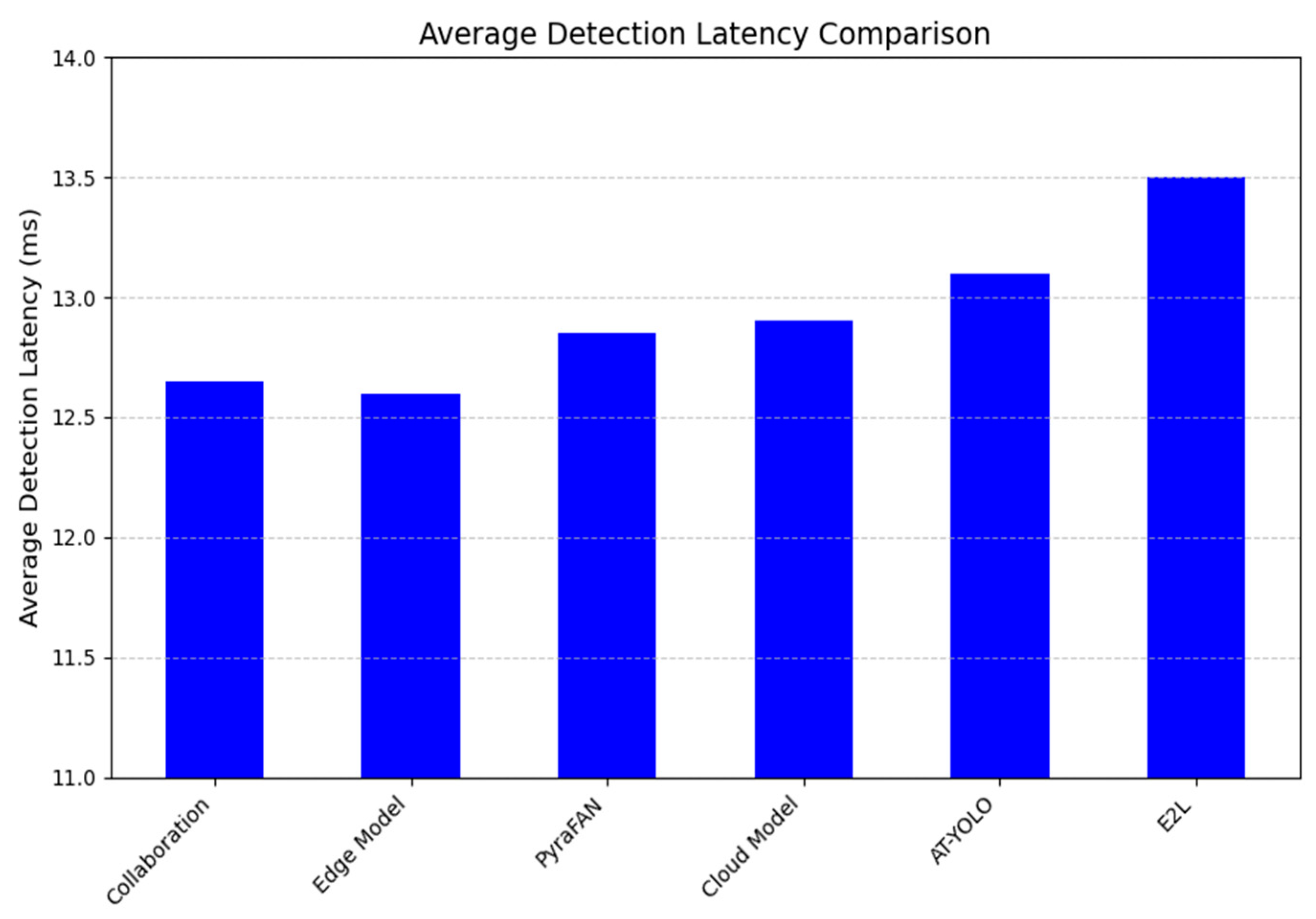

5.3. Response Time

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Zhang, Y.; Xiang, T.; Hospedales, T.M.; Lu, H. Deep Mutual Learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4320–4328.

- Yang, Z.; Li, Z.; Shao, M.; Shi, D.; Yuan, Z.; Yuan, C. Masked Generative Distillation. arXiv 2022, arXiv:2205.01529.

- Ren, J.; Zhang, M.; Yu, C.; Liu, Z. Balanced MSE for Imbalanced Visual Regression. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7916–7925.

- Ji, S.; Zhang, Z.; Ying, S.; Wang, L.; Zhao, X.; Gao, Y. Kullback–Leibler Divergence Metric Learning. IEEE Trans. Cybern. 2022, 52, 2047–2058.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3645–3649.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Bakke Vennerød, C.; Kjærran, A.; Stray Bugge, E. Long Short-term Memory RNN. arXiv 2021, arXiv:2105.06756.

- Ding, C.; Zhou, A.; Liu, Y.; Chang, R.N.; Hsu, C.-H.; Wang, S. A Cloud-Edge Collaboration Framework for Cognitive Service. IEEE Trans. Cloud Comput. 2022, 10, 1489–1499.

- Yang, L.; Han, Y.; Chen, X.; Song, S.; Dai, J.; Huang, G. Resolution Adaptive Networks for Efficient Inference. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2366–2375.

- Lan, G.; Liu, Z.; Zhang, Y.; Scargill, T.; Stojkovic, J.; Joe-Wong, C.; Gorlatova, M. Collabar: Edge-assisted collaborative image recognition for mobile augmented reality. In Proceedings of the 2020 19th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Sydney, NSW, Australia, 21–24 April 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 301–312.

- Deng, D. DBSCAN Clustering Algorithm Based on Density. In Proceedings of the 2020 7th International Forum on Electrical Engineering and Automation (IFEEA), Hefei, China, 25–27 September 2020; pp. 949–953.

- Wang, Y.; Yang, C.; Lan, S.; Zhu, L.; Zhang, Y. End-Edge-Cloud Collaborative Computing for Deep Learning: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2024, 26, 2647–2683.

- Tao, X.; Duan, Y.; Qin, Z.; Huang, D.; Wang, L. Cloud-Edge-End Intelligent Coordination and Computing. In Wireless Multimedia Computational Communications; Wireless Networks; Springer: Cham, Switzerland, 2024.

- Li, L.; Zhu, L.; Li, W. Cloud–Edge–End Collaborative Federated Learning: Enhancing Model Accuracy and Privacy in Non-IID Environments. Sensors 2024, 24, 8028.

- Zhou, X.; Xu, X.; Liang, W.; Zeng, Z.; Yan, Z. Deep-learning-enhanced multitarget detection for end–edge–cloud surveillance in smart IoT. IEEE Internet Things J. 2021, 8, 12588–12596.

- Zhang, R.; Jiang, H.; Wang, W.; Liu, J. Optimization Methods, Challenges, and Opportunities for Edge Inference: A Comprehensive Survey. Electronics 2025, 14, 1345.

- Yang, L.; Shen, X.; Zhong, C.; Liao, Y. On-demand inference acceleration for directed acyclic graph neural networks over edge-cloud collaboration. J. Parallel Distrib. Comput. 2023, 171, 79–87.

- Teerapittayanon, S.; McDanel, B.; Kung, H.T. Distributed Deep Neural Networks Over the Cloud, the Edge and End Devices. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 328–339.

- Yuan, Y.; Gao, S.; Zhang, Z.; Wang, W.; Xu, Z.; Liu, Z. Edge-Cloud Collaborative UAV Object Detection: Edge-Embedded Lightweight Algorithm Design and Task Offloading Using Fuzzy Neural Network. IEEE Trans. Cloud Comput. 2024, 12, 306–318.

- Ding, C.; Ding, F.; Gorbachev, S.; Yue, D.; Zhang, D. A learnable end-edge-cloud cooperative network for driving emotion sensing. Comput. Electr. Eng. 2022, 103, 108378.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Liu, Y.; Yu, Z.; Zong, D.; Zhu, L. Attention to Task-Aligned Object Detection for End–Edge–Cloud Video Surveillance. IEEE Internet Things J. 2024, 11, 13781–13792.

- Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The Vision Meets Drone Object Detection in Image Challenge Results. In Proceedings of the ICCV Visdrone Workshop, Seoul, Republic of Korea, 27–28 October 2019.

- Zhu, P.; Du, D.; Wen, L.; Bian, X.; Ling, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-VID2019: The Vision Meets Drone Object Detection in Video Challenge Results. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 227–235.

| Model | VisDrone-DET (mAP@0.5) | VisDrone-VID (mAP@0.5) |
|---|---|---|
| Cloud Model | 0.401 | 0.302 |
| Edge Model | 0.328 | 0.246 |
| Edge Model with PyraFAN | 0.368 | 0.262 |
| Cloud-to-Edge Distillation | 0.336 | 0.249 |
| Edge-to-Edge Distillation | 0.336 | 0.248 |
| Cloud-to-Edge Distillation + Edge-to-Edge Distillation | 0.337 | 0.247 |
| Cloud-Edge Collaboration | 0.429 | 0.302 |
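The gains in the table above can be made explicit with a small calculation. The snippet below uses only the values copied from the table and computes the absolute and relative mAP@0.5 improvement of the cloud-edge collaboration over the edge model and over the cloud model on each dataset. The `gain` helper is illustrative.

```python
# mAP@0.5 values copied from the table above: (VisDrone-DET, VisDrone-VID).
EDGE = (0.328, 0.246)
CLOUD = (0.401, 0.302)
COLLABORATION = (0.429, 0.302)
DATASETS = ("VisDrone-DET", "VisDrone-VID")


def gain(new: float, base: float) -> tuple[float, float]:
    """Return (absolute gain, relative gain in percent) of `new` over `base`."""
    return new - base, 100.0 * (new - base) / base


for baseline_name, baseline in (("edge model", EDGE), ("cloud model", CLOUD)):
    for idx, dataset in enumerate(DATASETS):
        abs_gain, rel_gain = gain(COLLABORATION[idx], baseline[idx])
        print(f"{dataset} vs {baseline_name}: {abs_gain:+.3f} mAP ({rel_gain:+.1f}%)")
```

On VisDrone-DET this gives +0.101 mAP (about 30.8%) over the edge model and +0.028 (about 7.0%) over the cloud model; on VisDrone-VID the collaboration exceeds the edge model by 0.056 (about 22.8%) and matches the cloud model at 0.302.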

| Method | mAP@0.5 | GFLOPs | FPS |
|---|---|---|---|
| E2L [15] | 0.375 | 28.8 | 110 |
| YOLOv10s (pre-trained) | 0.403 | 21.6 | 133 |
| YOLOv11n | 0.298 | 7.7 | 145 |
| AT-YOLO [18] | 0.299 | 4.41 | 127 |
| Collaboration with PyraFAN (ours) | 0.429 | 15.7 | 135 |
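The accuracy/cost trade-off in the comparison table can be quantified the same way. The snippet below only re-computes differences from the tabulated values: the mAP@0.5 difference, the relative change in GFLOPs, and the FPS difference of the collaborative method against each baseline. The `compare` helper is illustrative.

```python
# (mAP@0.5, GFLOPs, FPS) copied from the comparison table above.
RESULTS = {
    "E2L [15]": (0.375, 28.8, 110),
    "YOLOv10s (pre-trained)": (0.403, 21.6, 133),
    "YOLOv11n": (0.298, 7.7, 145),
    "AT-YOLO [18]": (0.299, 4.41, 127),
    "Collaboration with PyraFAN (ours)": (0.429, 15.7, 135),
}
OURS = "Collaboration with PyraFAN (ours)"


def compare(ours: tuple[float, float, int],
            other: tuple[float, float, int]) -> tuple[float, float, int]:
    """Return (mAP difference, relative GFLOPs change in percent, FPS difference)."""
    d_map = ours[0] - other[0]
    d_gflops_pct = 100.0 * (ours[1] - other[1]) / other[1]
    d_fps = ours[2] - other[2]
    return d_map, d_gflops_pct, d_fps


for name, row in RESULTS.items():
    if name == OURS:
        continue  # skip comparing the method with itself
    d_map, d_gflops_pct, d_fps = compare(RESULTS[OURS], row)
    print(f"vs {name}: {d_map:+.3f} mAP, {d_gflops_pct:+.1f}% GFLOPs, {d_fps:+d} FPS")
```

Against YOLOv10s, for example, this gives +0.026 mAP with about 27.3% fewer GFLOPs and 2 more FPS; against the lighter YOLOv11n and AT-YOLO, the higher mAP comes with a higher GFLOPs count.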

| Model | Confidence | Recall | FNR |
|---|---|---|---|
| Cloud Model | 0.952 | 0.60 | 0.40 |
| Edge Model | 0.972 | 0.54 | 0.46 |
| Collaborative Edge Model | 0.960 | 0.54 | 0.46 |
| Edge Model with PyraFAN | 0.967 | 0.55 | 0.45 |
| Cloud-Edge Collaboration | 0.952 | 0.63 | 0.37 |

| Model | Confidence | Recall | FNR |
|---|---|---|---|
| Cloud Model | 0.980 | 0.57 | 0.43 |
| Edge Model | 0.999 | 0.55 | 0.45 |
| Collaborative Edge Model | 0.974 | 0.51 | 0.49 |
| Edge Model with PyraFAN | 0.982 | 0.56 | 0.44 |
| Cloud-Edge Collaboration | 0.98 | 0.57 | 0.43 |
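In the two Confidence/Recall/FNR tables above, FNR is the false-negative rate, FN / (TP + FN), which by definition equals 1 − Recall because Recall = TP / (TP + FN). The short check below, using only the values copied from those tables, confirms that every row is consistent with this identity. The `fnr_from_recall` helper is illustrative.

```python
# (Recall, FNR) pairs copied from the two tables above, in row order.
ROWS = {
    "first table": [(0.60, 0.40), (0.54, 0.46), (0.54, 0.46), (0.55, 0.45), (0.63, 0.37)],
    "second table": [(0.57, 0.43), (0.55, 0.45), (0.51, 0.49), (0.56, 0.44), (0.57, 0.43)],
}


def fnr_from_recall(recall: float) -> float:
    """FNR = FN / (TP + FN) = 1 - TP / (TP + FN) = 1 - recall."""
    return 1.0 - recall


for table, pairs in ROWS.items():
    # A small tolerance absorbs floating-point rounding in 1.0 - recall.
    consistent = all(abs(fnr_from_recall(r) - f) < 1e-9 for r, f in pairs)
    print(f"{table}: FNR == 1 - Recall for every row: {consistent}")
```

Because the two columns are complementary, a recall gain (e.g., from 0.54 to 0.63 in the first table) translates one-to-one into the same drop in FNR.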

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ye, C.; Li, S.; Wang, J.; Li, H.; Li, X.; Shao, S. Cloud-Edge Collaborative Inference-Based Smart Detection Method for Small Objects. Modelling 2025, 6, 112. https://doi.org/10.3390/modelling6040112


Chicago/Turabian Style: Ye, Cong, Shengkun Li, Jianlei Wang, Hongru Li, Xiao Li, and Sujie Shao. 2025. "Cloud-Edge Collaborative Inference-Based Smart Detection Method for Small Objects" Modelling 6, no. 4: 112. https://doi.org/10.3390/modelling6040112
