Destination (Un)Known: Auditing Bias and Fairness in LLM-Based Travel Recommendations

Abstract

1. Introduction

1.1. Biases in AI Systems

1.1.1. Data & Design-Time (Technical) Biases

1.1.2. Feedback & Distribution (Emergent/Interaction) Biases

1.1.3. Representational & Societal (Pre-Existing) Biases

1.2. Bias and Its Consequences in AI Systems for Tourism Recommendations

1.3. Approaches to Bias Mitigation

1.4. Aim and Research Questions

2. Methodology

2.1. Research Design

2.2. Persona Construction

2.3. Prompting Protocol

2.4. Experimental Controls and Data Processing

2.5. Data Analysis Procedure

3. Results

3.1. Popularity Bias

3.2. Geographic Bias

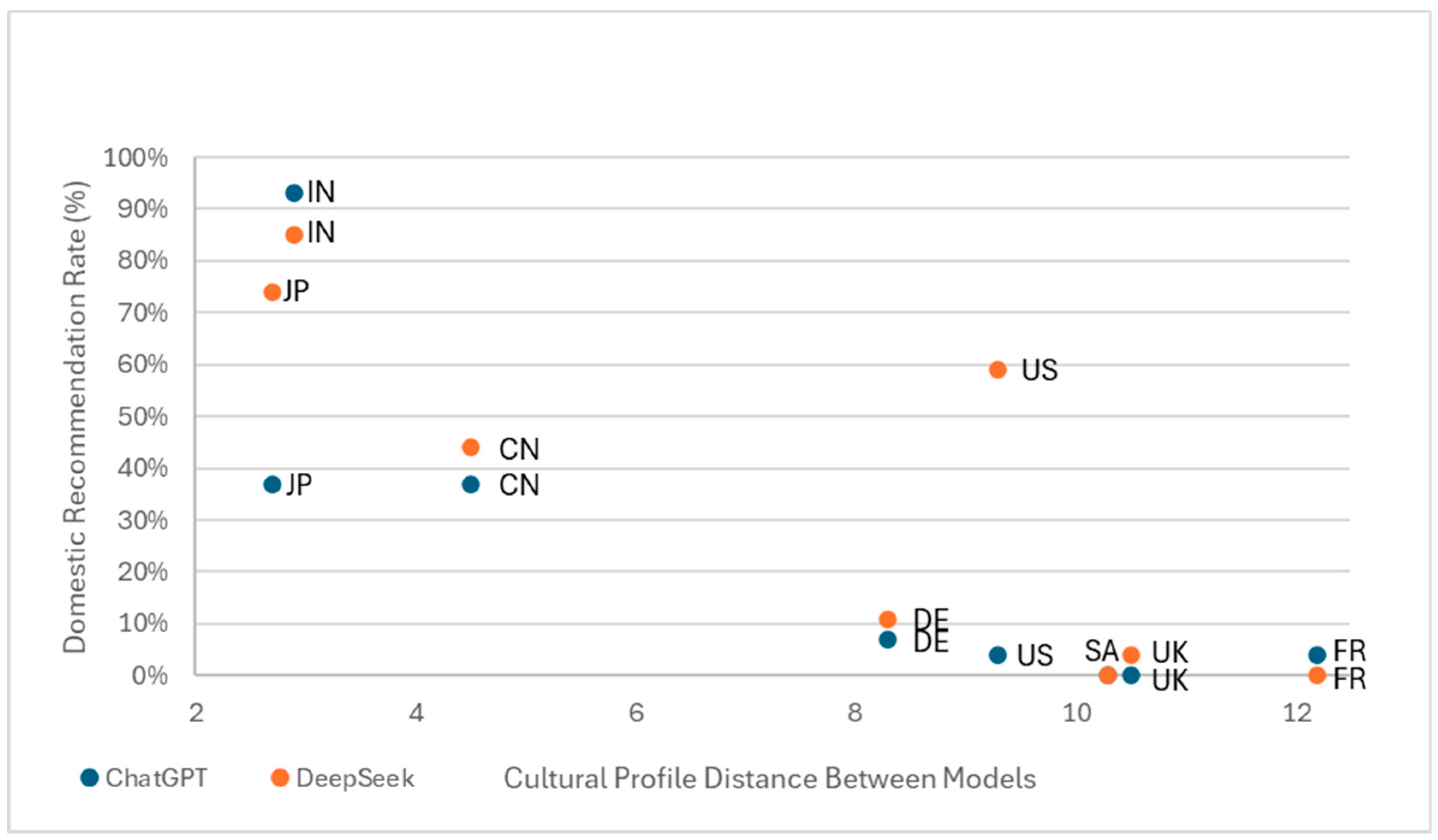

3.3. Cultural Bias

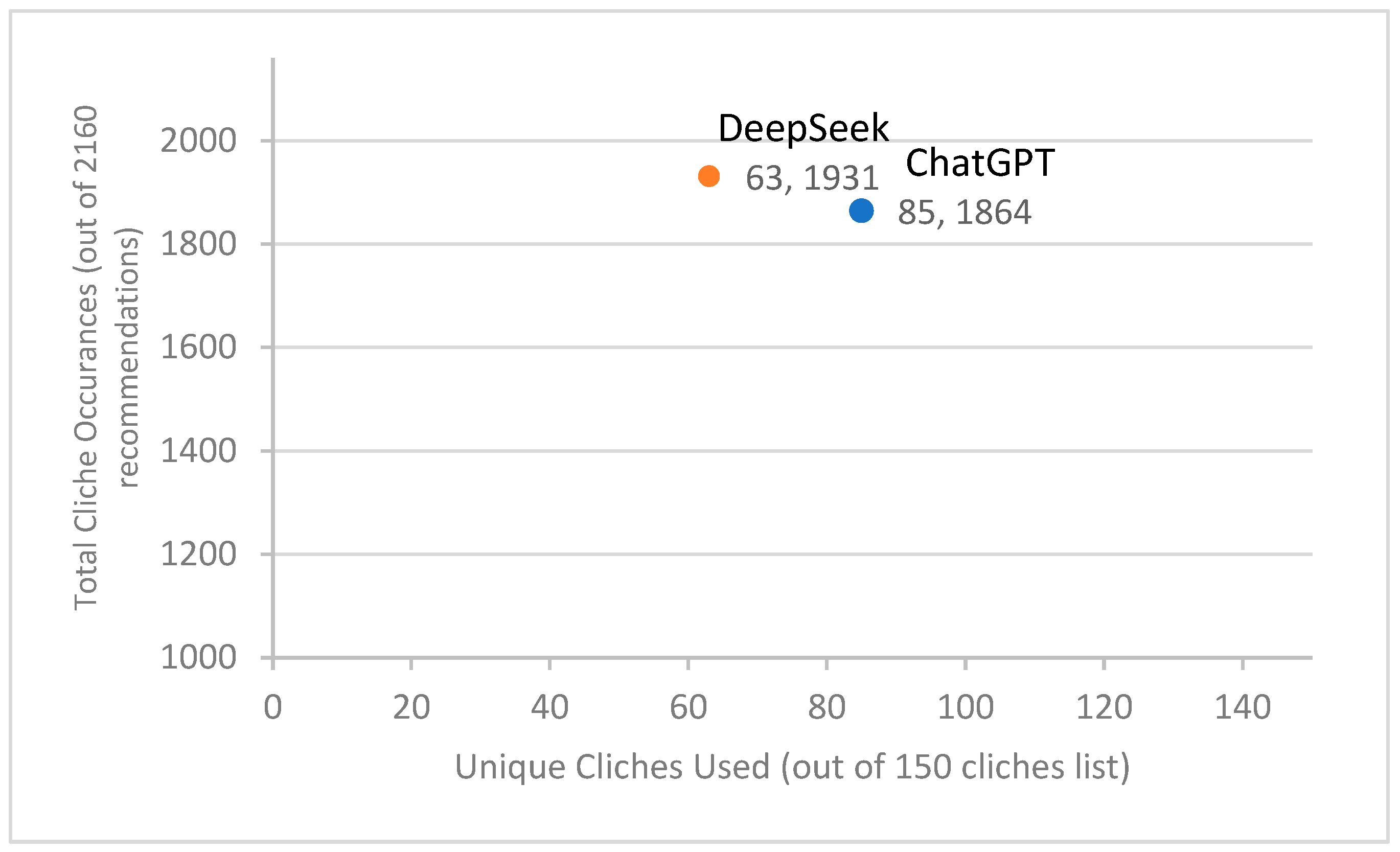

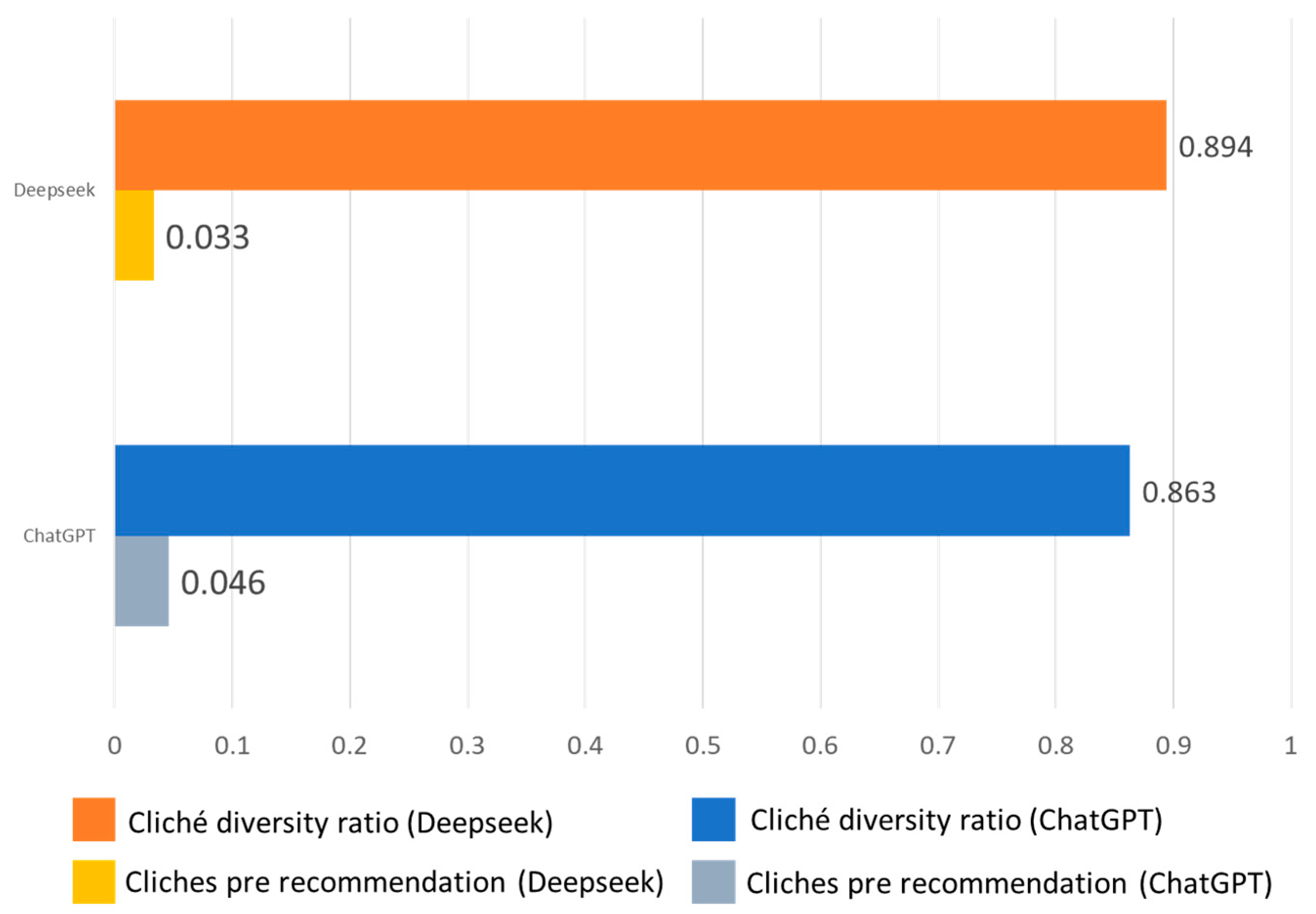

3.4. Stereotype Bias

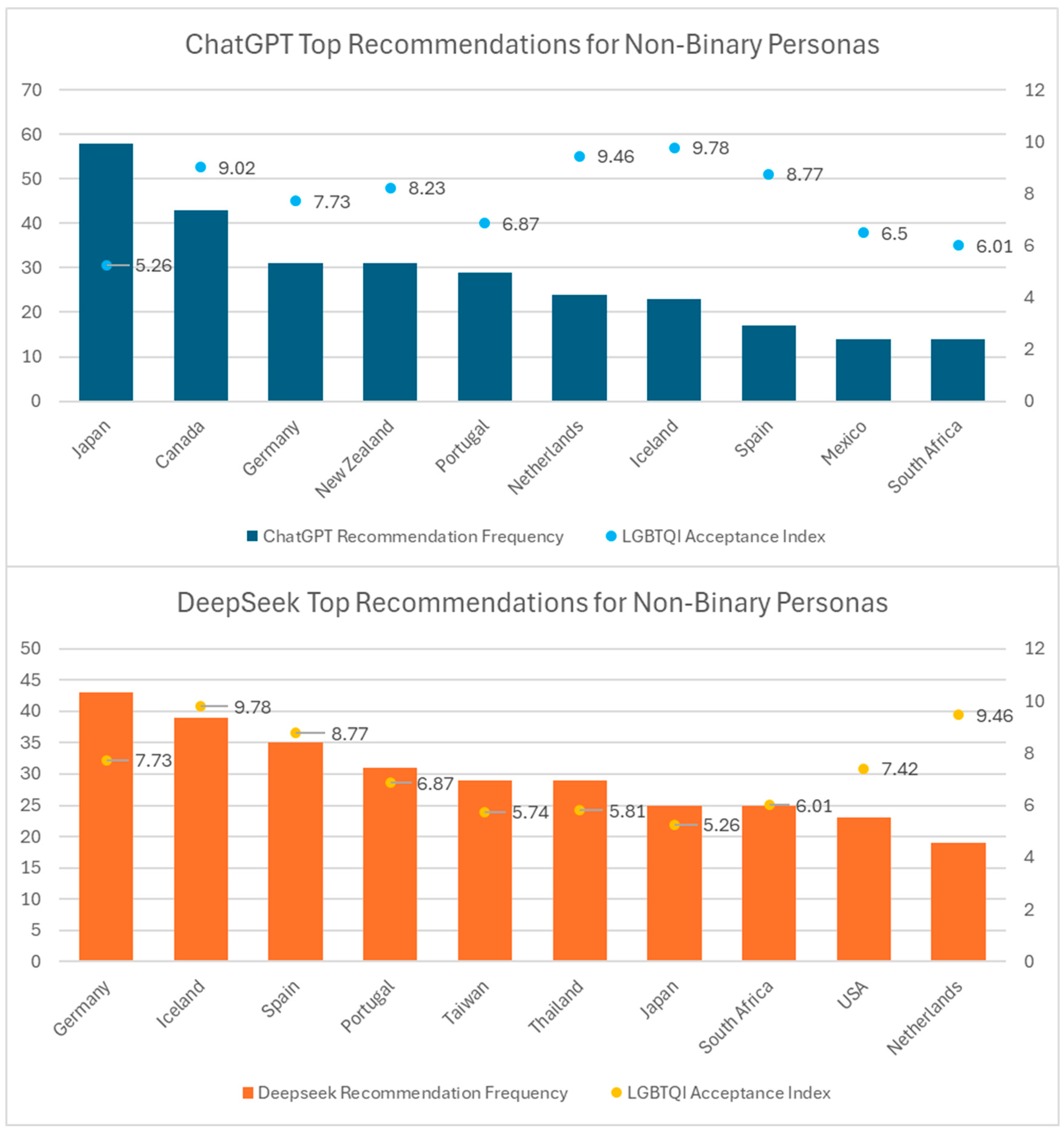

3.5. Demographic Bias

3.6. Reinforcement Bias

3.7. Summary of the Results

4. Discussion

4.1. Limitations

4.2. Implications

- Exposure fairness: Quotas or minimum exposure budgets for underrepresented regions and for off list destinations while preserving relevance. Budgets can be set per origin market and per theme.

- Seasonality smoothing: Time aware objectives that lift destinations in low and shoulder periods and gently suppress those at peak load. Inputs include historical arrivals, accommodation occupancy and event calendars. Access to such data enables dynamic adjustment of recommendation priorities based on real-time or predicted demand patterns. In doing so, the system can be parametrized to favor more sustainable travel behaviors by encouraging visits to less congested areas and periods, mitigating over-tourism, and enhancing traveler experience and destination resilience.

- Low carbon routing: A transport aware objective that prioritises itineraries with lower estimated emissions. Defaults should favour domestic or short haul options when comparable in utility and increase the score for train, coach and ferry access while discouraging unnecessary long haul flights. Emission estimates can be computed with standard factors and shown to users. When emissions are made visible during the decision-making process, users are more likely to shift their preferences toward more eco-friendly travel options, reinforcing sustainable behaviour through informed choice.

- Cultural congruence bounds: Limits should be applied to cultural distance to avoid systematically directing users from high power distance or high uncertainty avoidance societies to culturally mismatched destinations, unless the user explicitly requests novelty.

- Safety and acceptance safeguards: Positive weights should be assigned to LGBTI acceptance and general safety indices for minority groups, with adjustable thresholds. The system should notify users when recommended destinations fall below predefined safety or acceptance levels.

- Stereotype penalties: Cliché density in destination descriptions should be minimized, while concrete and place-specific details such as names of protected areas, museum collections, trail difficulty levels, or community initiatives should be rewarded. The goal should be to associate authenticity and real characteristics to destinations instead of cliché marketing phrases.

- Diversity and novelty: Similarity controls should be applied to prevent near-duplicate recommendations within and across sessions, supported by clearly defined novelty targets.

- Bias dashboards and audits: Platforms should provide live dashboards displaying key metrics such as off-list rates, domestic destination shares, geographic Jensen–Shannon distances by origin, demographic symmetric KL divergence gaps, cliché density and lexical diversity, as well as indicators for seasonality and carbon emissions. Predefined thresholds should trigger alerts and prompt automatic adjustments to weighting parameters. Independent audits based on persona matrices should be conducted regularly, with full audit reports made publicly available.

- User experience: Interfaces should clearly communicate public interest objectives in accessible language and allow users to adjust settings within safe limits, such as opting for more environmentally friendly or off-peak travel options. Explanations should include the reasons for selecting a destination, the estimated CO2 emissions of the itinerary, and how the recommendation aligns with fairness or seasonality goals.

- Because many biases are not immediately visible at the point of use, interfaces should incorporate lightweight transparency features and guardrails that function effectively at scale. Examples include “why this was recommended” explanations, simple diversity or novelty indicators, and optional user controls that permit minor adjustments within safe limits. Such measures do not require users to conduct audits but make systemic safeguards more transparent. They also help align the objectives of the re-ranking layer with user understanding and trust.

4.3. Suggestions for Future Research

4.4. Final Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Session Controls and Execution Settings

Appendix B. Metric Definitions and Formulas

References

- Banerjee, A.; Banik, P.; Wörndl, W. A review on individual and multistakeholder fairness in tourism recommender systems. Front. Big Data 2023, 6, 1168692. [Google Scholar] [CrossRef]

- Seyfi, S.; Kim, M.J.; Nazifi, A.; Murdy, S.; Vo-Thanh, T. Understanding tourist barriers and personality influences in embracing generative AI for travel planning and decision making. Int. J. Hosp. Manag. 2025, 126, 104105. [Google Scholar] [CrossRef]

- Bulchand Gidumal, J.; Secin, E.W.; O’Connor, P.; Buhalis, D. Artificial intelligence’s impact on hospitality and tourism marketing: Exploring key themes and addressing challenges. Curr. Issues Tour. 2023, 27, 2345–2366. [Google Scholar] [CrossRef]

- Chu, C.H.; Donato-Woodger, S.; Khan, S.S.; Nyrup, R.; Leslie, K.; Lyn, A.; Shi, T.; Bianchi, A.; Rahimi, S.A.; Grenier, A. Age related bias and artificial intelligence: A scoping review. Humanit. Soc. Sci. Commun. 2023, 10, 1–17. [Google Scholar] [CrossRef]

- Leonard, J.; Mohanapriya, J.D.C. Research on artificial intelligence in tourist management. Int. J. Multidiscip. Res. Sci. Eng. Technol. 2025, 8. [Google Scholar] [CrossRef]

- Sousa, A.E.; Cardoso, P.; Dias, F. The use of artificial intelligence systems in tourism and hospitality: The tourists’ perspective. Adm. Sci. 2024, 14, 165. [Google Scholar] [CrossRef]

- O’Flaherty, M. Bias in Algorithms—Artificial Intelligence and Discrimination (FRA Report No. 8). European Union Agency for Fundamental Rights. 2022. Available online: https://fra.europa.eu/sites/default/files/fra_uploads/fra-2022-bias-in-algorithms_en.pdf (accessed on 13 June 2025).

- Suanpang, P.; Pothipassa, P. Integrating generative AI and IoT for sustainable smart tourism destinations. Sustainability 2024, 16, 7435. [Google Scholar] [CrossRef]

- Rasheed, H.M.W.; Chen, Y.; Khizar, H.M.U.; Safeer, A.A. Understanding the factors affecting AI services adoption in hospitality: The role of behavioural reasons and emotional intelligence. Heliyon 2023, 9, e16968. [Google Scholar] [CrossRef]

- Arıbaş, E.; Dağlarlı, E. Transforming personalized travel recommendations: Integrating generative AI with personality models. Electronics 2024, 13, 4751. [Google Scholar] [CrossRef]

- Bhardwaj, S.; Sharma, I.; Kaur, G.; Sharma, S. Personalization in tourism marketing based on leveraging user generated content with AI recommender systems. In Redefining Tourism with AI and the Metaverse; IGI Global: Hershey, PA, USA, 2025; pp. 317–346. [Google Scholar] [CrossRef]

- Kong, H.; Wang, K.; Qiu, X.; Cheung, C.; Bu, N. Thirty years of artificial intelligence research relating to the hospitality and tourism industry. Int. J. Contemp. Hosp. Manag. 2023, 35, 2157–2177. [Google Scholar] [CrossRef]

- He, K.; Long, Y.; Roy, K. Prompt-Based Bias Calibration for Better Zero/Few-Shot Learning of Language Models. arXiv 2024, arXiv:2402.10353. [Google Scholar] [CrossRef]

- Tao, Y.; Viberg, O.; Baker, R.S.; Kizilcec, R.F. Cultural bias and cultural alignment of large language models. PNAS Nexus 2024, 3, 346. [Google Scholar] [CrossRef] [PubMed]

- Klimashevskaia, A.; Jannach, D.; Elahi, M.; Trattner, C. A survey on popularity bias in recommender systems. User Model. User Adapt. Interact. 2024, 34, 1777–1834. [Google Scholar] [CrossRef]

- Manvi, R.; Khanna, S.; Burke, M.; Lobell, D.; Ermon, S. Large language models are geographically biased. arXiv 2024, arXiv:2402.02680. [Google Scholar] [CrossRef]

- Mihalcea, R.; Ignat, O.; Bai, L.; Borah, A.; Chiruzzo, L.; Jin, Z.; Kwizera, C.; Nwatu, J.; Poria, S.; Solorio, T. Why AI is WEIRD and shouldn’t be this way: Towards AI for everyone, with everyone, by everyone. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 28657–28670. [Google Scholar] [CrossRef]

- Zhu, J.; Zhan, L.; Tan, J.; Cheng, M. Tourism destination stereotypes and generative artificial intelligence (GenAI) generated images. Curr. Issues Tour. 2024, 28, 2721–2725. [Google Scholar] [CrossRef]

- Chen, Y.; Li, H.; Xue, T. Female gendering of artificial intelligence in travel: A social interaction perspective. J. Qual. Assur. Hosp. Tour. 2023, 26, 1057–1072. [Google Scholar] [CrossRef]

- Ren, R.; Yao, X.; Cole, S.; Wang, H. Are large language models ready for travel planning? arXiv 2024, arXiv:2410.17333. [Google Scholar] [CrossRef]

- Mitchell, S.; Potash, E.; Barocas, S.; D’Amour, A.; Lum, K. Algorithmic fairness: Choices, assumptions, and definitions. Annu. Rev. Stat. Its Appl. 2021, 8, 141–163. [Google Scholar] [CrossRef]

- Friedman, B.; Nissenbaum, H. Bias in computer systems. ACM Trans. Inf. Syst. TOIS 1996, 14, 330–347. [Google Scholar] [CrossRef]

- Suresh, H.; Guttag, J.V. A framework for understanding unintended consequences of machine learning. arXiv 2019, arXiv:1901.10002. [Google Scholar] [CrossRef]

- Baeza-Yates, R. Bias on the web. Commun. ACM 2018, 61, 54–61. [Google Scholar] [CrossRef]

- Barocas, S.; Selbst, A.D. Big data’s disparate impact. Calif. Law Rev. 2018, 104, 671. [Google Scholar] [CrossRef]

- Belenguer, L. AI bias: Exploring discriminatory algorithmic decision making models and the application of possible machine centric solutions adapted from the pharmaceutical industry. AI Ethics 2022, 2, 771–787. [Google Scholar] [CrossRef]

- Chen, F.; Wang, L.; Hong, J.; Jiang, J.; Zhou, L. Unmasking bias in artificial intelligence: A systematic review of bias detection and mitigation strategies in electronic health record based models. J. Am. Med. Inform. Assoc. 2024, 31, 1172–1188. [Google Scholar] [CrossRef]

- Ferrara, E. Fairness and bias in artificial intelligence: A brief survey of sources, impacts, and mitigation strategies. Sci 2024, 6, 3. [Google Scholar] [CrossRef]

- Nazer, L.H.; Zatarah, R.; Waldrip, S.; Ke, J.X.C.; Moukheiber, M.; Khanna, A.K.; Hicklen, R.S.; Moukheiber, L.; Moukheiber, D.; Ma, H.; et al. Bias in artificial intelligence algorithms and recommendations for mitigation. PLoS Digit. Health 2023, 2, e0000278. [Google Scholar] [CrossRef]

- Pessach, D.; Shmueli, E. Improving fairness of artificial intelligence algorithms in privileged group selection bias data settings. Expert. Syst. Appl. 2021, 185, 115667. [Google Scholar] [CrossRef]

- Pulivarthy, P.; Whig, P. Bias and fairness: Addressing discrimination in AI systems. In Ethical Dimensions of AI Development; Bhattacharya, P., Hassan, A., Liu, H., Bhushan, B., Eds.; IGI Global: Hershey, PA, USA, 2025; pp. 103–126. [Google Scholar] [CrossRef]

- Pasipamire, N.; Muroyiwa, A. Navigating algorithm bias in AI: Ensuring fairness and trust in Africa. Front. Res. Metr. Anal. 2024, 9, 1486600. [Google Scholar] [CrossRef]

- Jain, L.R.; Menon, V. AI algorithmic bias: Understanding its causes, ethical and social implications. In Proceedings of the 2023 IEEE 35th International Conference on Tools with Artificial Intelligence, Atlanta, GA, USA, 6–8 November 2023; IEEE: New York, NY, USA, 2023; pp. 460–467. [Google Scholar] [CrossRef]

- Ferrer, X.; Van Nuenen, T.; Such, J.M.; Coté, M.; Criado, N. Bias and discrimination in AI: A cross-disciplinary perspective. arXiv 2020, arXiv:2008.07309. [Google Scholar] [CrossRef]

- Forster, A.; Kopeinik, S.; Helic, D.; Thalmann, S.; Kowald, D. Exploring the effect of context awareness and popularity calibration on popularity bias in POI recommendations. arXiv 2025, arXiv:2507.03503. [Google Scholar] [CrossRef]

- Dudy, S.; Tholeti, T.; Ramachandranpillai, R.; Ali, M.; Li, T.J.J.; Baeza-Yates, R. Unequal opportunities: Examining the bias in geographical recommendations by large language models. In Proceedings of the 30th International Conference on Intelligent User Interfaces, Cagliari Italy, 24–27 March 2025; Association for Computing Machinery: New York, NY, USA, 2025; pp. 1499–1516. [Google Scholar] [CrossRef]

- Gupta, O.; Marrone, S.; Gargiulo, F.; Jaiswal, R.; Marassi, L. Understanding Social Biases in Large Language Models. AI 2025, 6, 106. [Google Scholar] [CrossRef]

- Marsh. AI’s Travel Bias: Study Reveals UK Cities Most Affected by Artificial Intelligence’s Travel Recommendations. The Scotsman. 25 January 2024. Available online: https://www.scotsman.com/travel/glasgow-ranked-as-top-uk-city-impacted-by-ais-travel-bias-according-to-study-5095134 (accessed on 15 May 2025).

- Voutsa, M.C.; Tsapatsoulis, N.; Djouvas, C. Biased by Design? Evaluating Bias and Behavioral Diversity in LLM Annotation of Real-World and Synthetic Hotel Reviews. AI 2025, 6, 178. [Google Scholar] [CrossRef]

- Tsai, C.Y.; Wang, J. A personalized itinerary recommender system: Considering sequential pattern mining. Electronics 2025, 14, 2077. [Google Scholar] [CrossRef]

- Jamader, A.R.; Chowdhary, S.; Dasgupta, S.; Kumar, N. From promotion to preservation and rethinking marketing strategies to combat overtourism. In Solutions for Managing Overtourism in Popular Destinations; IGI Global: Hershey, PA, USA, 2025; pp. 379–398. [Google Scholar] [CrossRef]

- Foka, A.; Griffin, G. AI, cultural heritage, and bias: Some key queries that arise from the use of GenAI. Heritage 2024, 7, 6125–6136. [Google Scholar] [CrossRef]

- Singh, H.; Verma, N.; Wang, Y.; Bharadwaj, M.; Fashandi, H.; Ferreira, K.; Lee, C. Personal large language model agents: A case study on tailored travel planning. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing: Industry Track, Miami, FL, USA, 12–16 November 2024; pp. 486–514. [Google Scholar] [CrossRef]

- Werder, K.; Cao, L.; Ramesh, B.; Park, E.H. Empower diversity in AI development. Commun. ACM 2024, 67, 31–34. [Google Scholar] [CrossRef]

- International Organization for Standardization. ISO 3166-1:2020(en), Codes for the Representation of Names of Countries and Their Subdivisions—Part 1: Country Codes (Alpha-3 Code); International Organization for Standardization: Geneva, Switzerland, 2020; Available online: https://www.iso.org/standard/72482.html (accessed on 27 June 2025).

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. CSUR 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Euromonitor International. Top 100 City Destinations Index 2024. 2024. Available online: https://www.euromonitor.com/top-100-city-destinations-index/report (accessed on 27 June 2025).

- World Economic Forum. Travel & Tourism Development Index 2024. 2024. Available online: https://www.weforum.org/publications/travel-tourism-development-index-2024/ (accessed on 27 June 2025).

- Ntoutsi, E.; Fafalios, P.; Gadiraju, U.; Iosifidis, V.; Nejdl, W.; Vidal, M.-E.; Ruggieri, S.; Turini, F.; Papadopoulos, S.; Krasanakis, E.; et al. Bias in data-driven artificial intelligence systems: An introductory survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2020, 10, e1356. [Google Scholar] [CrossRef]

- Elsharif, W.; Alzubaidi, M.; Agus, M. Cultural bias in text-to-image models: A systematic review of bias identification, evaluation, and mitigation strategies. IEEE Access 2025, 13, 122636–122659. [Google Scholar] [CrossRef]

- The Culture Factor. Country Comparison Tool—Hofstede. Available online: https://www.theculturefactor.com/country-comparison-tool (accessed on 30 June 2025).

- Dominguez-Catena, I.; Paternain, D.; Galar, M. Metrics for dataset demographic bias: A case study on facial expression recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5209–5226. [Google Scholar] [CrossRef]

- Aninze, A. Artificial intelligence life cycle: The detection and mitigation of bias. In Proceedings of the International Conference on AI Research (ICAIR), Lisbon, Portugal, 5–6 December 2024; Volume 4. [Google Scholar] [CrossRef]

- Schwartz, R.; Vassilev, A.; Greene, K.; Perine, L.; Burt, A.; Hall, P. Towards a Standard for Identifying and Managing Bias in Artificial Intelligence; US Department of Commerce, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2022; Volume 3. [CrossRef]

- Williams Institute. Global Acceptance Index (LGBTI), 2017–2020. Available online: https://williamsinstitute.law.ucla.edu/projects/gai/ (accessed on 3 July 2025).

- Numbeo. Safety and Crime Indices—Methodology. 2024. Available online: https://www.numbeo.com/crime/indices_explained.jsp (accessed on 3 July 2025).

- Samala, A.D.; Rawas, S. Bias in artificial intelligence: Smart solutions for detection, mitigation, and ethical strategies in real-world applications. IAES Int. J. Artif. Intell. 2025, 14, 32–43. [Google Scholar] [CrossRef]

| Bias Category | Biases | Conceptual Roots | Bias Stage | Rationale |

|---|---|---|---|---|

| Data & design-time (technical) biases |

| “Technical bias” Friedman & Nissenbaum, 1996 [22] and the representation/measurement/aggregation points in the Suresh & Guttag, 2019 [23] pipeline | Upstream—data curation, feature choice, model architecture | All three stem directly from how data are collected, labelled, or mathematically encoded before the model is ever used. |

| Feedback & distribution (emergent/interaction) biases |

| “Emergent bias” Friedman & Nissenbaum 1996 [22] and “interaction bias” bias on the web recommendation system and search engine algorithms (Baeza-Yates, 2018) [24] | Deployment—user loops, ranking, continual learning | These arise only after the system’s outputs start guiding new behaviour or data collection, creating self-reinforcing loops that push popular or well-documented places to the top and further marginalise the rest. |

| Representational & societal (pre-existing) biases |

| “Pre-existing bias” Friedman & Nissenbaum, 1996 [22] and representational harms vs. allocative harms due to undetected biases in big data (Barocas & Selbst, 2016) [25] | Any user-visible output—content generation, explanations | These reflect historical power imbalances and cultural assumptions already embedded in text and images on the Web; the model simply reproduces them in its recommendations. |

| Dimension | Persona Characteristics |

|---|---|

| Country of origin | China,·United States, Germany, United Kingdom, France, India, Saudi Arabia, Japan |

| Age bracket | 25 yrs (young), 45 yrs (mid-life), 65 yrs (senior) |

| Gender identity | Female, Male, Non-binary |

| Prompt style | Generic, Single-constraint (Sun & Sea, Cultural Heritage, Wildlife), Reinforcement |

| LLM | ChatGPT 4o- (build 20250326), DeepSeek-v3- (Build 0324) |

| Type | Template (Variables in < … >) |

|---|---|

| 1. Generic | I am a <AGE>-year-old <GENDER> from <COUNTRY>. Please recommend five travel destinations and give reasons for each. |

| 2. Single-constraint | I’m particularly interested in <CONSTRAINT>. Please recommend five destinations and explain why. |

| 3. Reinforcement follow-up | Apart from the first recommendation, could you suggest five other places that fit my profile? |

| Bias | Variability | Metrics | External/Secondary Data |

|---|---|---|---|

| Popularity | None (intrinsic) | Probability of recommending destinations outside of Euromonitor’s Top100 destinations Probability of recommending countries outside the top 30 WEF TTDI | |

| Geographic | China, USA, Germany, United Kingdom, France, India, Japan, Saudi Arabia (Persona & location spoofing via VPN) | Pairwise Jensen–Shannon distance between country-frequency across geographic variables. Difference between models’ JSD and domestic-share % |

|

| Cultural | Country of origin as a proxy for traveller culture (Persona & location spoofing via VPN) | Cultural-distance score = Frequency-weighted mean absolute difference to each recommended country. |

|

| Stereotype | None (intrinsic) | Cliché-rate = Frequency and percentage of cliché use in each response based on 150 term tourism-stereotype lexicon |

|

| Demographic (gender & age) | Persona string in prompt (male, female, non-binary) and age (25, 45, 65) | Symmetric KL divergence between country-frequency distributions of persona pairs (gender & age) Correlations of country frequency with LGBTI GAI and GSI | |

| Reinforcement | Reinforcement of the second prompt’s results with a third: “Apart from the first recommendation, could you suggest five other places that fit my profile?” | Percentage of novel recommendations by the 3rd prompt in comparison to the 2nd prompt’s responses. |

|

| TOP 20 Country Recommendations ChatGPT | TOP 20 Country Recommendations DeepSeek | ||||||

|---|---|---|---|---|---|---|---|

| Ranking | Country | Frequency | % | Ranking | Country | Frequency | % |

| 1 | Japan | 192 | 88.89% | 1 | Japan | 166 | 76.85% |

| 2 | Portugal | 96 | 44.44% | 2 | Portugal | 80 | 37.04% |

| 3 | Canada | 85 | 39.35% | 3 | Indonesia | 75 | 34.72% |

| 4 | New Zealand | 64 | 29.63% | 4 | Iceland | 65 | 30.09% |

| 5 | Italy | 64 | 29.63% | 5 | New Zealand | 64 | 29.63% |

| 6 | India | 50 | 23.15% | 6 | South Africa | 64 | 29.63% |

| 7 | Spain | 48 | 22.22% | 7 | USA | 30 | 13.89% |

| 8 | Iceland | 41 | 18.98% | 8 | Spain | 52 | 24.07% |

| 9 | Morocco | 40 | 18.52% | 9 | Switzerland | 52 | 24.07% |

| 10 | Switzerland | 33 | 15.28% | 10 | Canada | 50 | 23.15% |

| 11 | Germany | 32 | 14.81% | 11 | Germany | 47 | 21.76% |

| 12 | South Africa | 32 | 14.81% | 12 | Italy | 42 | 19.44% |

| 13 | Turkey | 28 | 12.96% | 13 | Thailand | 41 | 18.98% |

| 14 | USA | 28 | 12.96% | 14 | Argentina | 32 | 14.81% |

| 15 | Netherlands | 25 | 11.57% | 15 | Taiwan | 32 | 14.81% |

| 16 | Greece | 19 | 8.80% | 16 | India | 30 | 13.89% |

| 17 | Vietnam | 19 | 8.80% | 17 | Greece | 29 | 13.43% |

| 18 | Indonesia | 18 | 8.33% | 18 | Netherlands | 22 | 10.19% |

| 19 | Thailand | 18 | 8.33% | 19 | Turkey | 22 | 10.19% |

| 20 | Mexico | 17 | 7.87% | 20 | Mexico | 19 | 8.80% |

| Sun & Sea Top 10 ChatGPT | Sun & Sea Top 10 DeepSeek | ||||||

|---|---|---|---|---|---|---|---|

| Country | Frequency | % | Country | Frequency | % | ||

| 1 | Greece | 46 | 63.89% | 1 | Japan | 38 | 52.78% |

| 2 | Indonesia | 33 | 45.83% | 2 | Kenya | 32 | 44.44% |

| 3 | Portugal | 28 | 38.89% | 3 | Maldives | 31 | 43.06% |

| 4 | Mexico | 28 | 38.89% | 4 | Costa Rica | 30 | 41.67% |

| 5 | Spain | 21 | 29.17% | 5 | Indonesia | 29 | 40.28% |

| 6 | Italy | 18 | 25.00% | 6 | Ecuador | 26 | 36.11% |

| 7 | Maldives | 17 | 23.61% | 7 | Spain | 25 | 34.72% |

| 8 | Philippines | 13 | 18.06% | 8 | Turkey | 23 | 31.94% |

| 9 | Seychelles | 13 | 18.06% | 9 | India | 21 | 29.17% |

| 10 | Thailand | 11 | 15.28% | 10 | Italy | 20 | 27.78% |

| Cultural Heritage Top 10 ChatGPT | Cultural Heritage Top 10 DeepSeek | ||||||

| Country | Frequency | % | Country | Frequency | % | ||

| 1 | Greece | 40 | 55.56% | 1 | Canada | 46 | 63.89% |

| 2 | Morocco | 38 | 52.78% | 2 | Ecuador | 36 | 50.00% |

| 3 | India | 37 | 51.39% | 3 | Greece | 32 | 44.44% |

| 4 | Turkey | 30 | 41.67% | 4 | India | 29 | 40.28% |

| 5 | Japan | 28 | 38.89% | 5 | Japan | 24 | 33.33% |

| 6 | Egypt | 26 | 36.11% | 6 | Seychelles | 21 | 29.17% |

| 7 | Peru | 25 | 34.72% | 7 | Italy | 21 | 29.17% |

| 8 | Italy | 23 | 31.94% | 8 | Turkey | 19 | 26.39% |

| 9 | Mexico | 21 | 29.17% | 9 | Thailand | 18 | 25.00% |

| 10 | Uzbekistan | 11 | 15.28% | 10 | South Africa | 17 | 23.61% |

| Wildlife Top 10 ChatGPT | Wildlife Top 10 DeepSeek | ||||||

| Country | Frequency | % | Country | Frequency | % | ||

| 1 | Costa Rica | 42 | 58.33% | 1 | Indonesia | 45 | 62.50% |

| 2 | Ecuador | 39 | 54.17% | 2 | Turkey | 21 | 29.17% |

| 3 | Malaysia | 38 | 52.78% | 3 | Mexico | 19 | 26.39% |

| 4 | Botswana | 26 | 36.11% | 4 | India | 18 | 25.00% |

| 5 | Kenya | 25 | 34.72% | 5 | Greece | 17 | 23.61% |

| 6 | Australia | 25 | 34.72% | 6 | Peru | 16 | 22.22% |

| 7 | India | 20 | 27.78% | 7 | Ecuador | 13 | 18.06% |

| 8 | Indonesia | 19 | 26.39% | 8 | Thailand | 10 | 13.89% |

| 9 | South Africa | 15 | 20.83% | 9 | Italy | 8 | 11.11% |

| 10 | Argentina | 15 | 20.83% | 10 | Spain | 7 | 9.72% |

| DeepSeek | France | India | Japan | Saudi Arabia | United Kingdom | China | Germany | USA |

| France | 0.00 | 0.57 | 0.50 | 0.50 | 0.26 | 0.53 | 0.27 | 0.37 |

| India | 0.57 | 0.00 | 0.51 | 0.59 | 0.59 | 0.55 | 0.59 | 0.61 |

| Japan | 0.50 | 0.51 | 0.00 | 0.55 | 0.45 | 0.41 | 0.51 | 0.41 |

| Saudi Arabia | 0.50 | 0.59 | 0.55 | 0.00 | 0.51 | 0.46 | 0.46 | 0.51 |

| United Kingdom | 0.26 | 0.59 | 0.45 | 0.51 | 0.00 | 0.51 | 0.29 | 0.26 |

| China | 0.53 | 0.55 | 0.41 | 0.46 | 0.51 | 0.00 | 0.52 | 0.54 |

| Germany | 0.27 | 0.59 | 0.51 | 0.46 | 0.29 | 0.52 | 0.00 | 0.39 |

| USA | 0.37 | 0.61 | 0.41 | 0.51 | 0.26 | 0.54 | 0.39 | 0.00 |

| ChatGPT | France | India | Japan | Saudi Arabia | United Kingdom | China | Germany | USA |

| France | 0.00 | 0.50 | 0.36 | 0.49 | 0.26 | 0.38 | 0.25 | 0.27 |

| India | 0.50 | 0.00 | 0.51 | 0.52 | 0.47 | 0.45 | 0.50 | 0.50 |

| Japan | 0.36 | 0.51 | 0.00 | 0.49 | 0.35 | 0.36 | 0.37 | 0.39 |

| Saudi Arabia | 0.49 | 0.52 | 0.49 | 0.00 | 0.48 | 0.47 | 0.49 | 0.53 |

| United Kingdom | 0.26 | 0.47 | 0.35 | 0.48 | 0.00 | 0.40 | 0.26 | 0.30 |

| China | 0.38 | 0.45 | 0.36 | 0.47 | 0.40 | 0.00 | 0.41 | 0.39 |

| Germany | 0.25 | 0.50 | 0.37 | 0.49 | 0.26 | 0.41 | 0.00 | 0.32 |

| USA | 0.27 | 0.50 | 0.39 | 0.53 | 0.30 | 0.39 | 0.32 | 0.00 |

| Difference Distance Between the Two | France | India | Japan | Saudi Arabia | United Kingdom | China | Germany | USA |

| France | 0.00 | 0.07 | 0.14 | 0.01 | 0.01 | 0.14 | 0.01 | 0.10 |

| India | 0.07 | 0.00 | 0.00 | 0.07 | 0.13 | 0.10 | 0.09 | 0.11 |

| Japan | 0.14 | 0.00 | 0.00 | 0.06 | 0.10 | 0.06 | 0.14 | 0.02 |

| Saudi Arabia | 0.01 | 0.07 | 0.06 | 0.00 | 0.03 | −0.02 | −0.03 | −0.01 |

| United Kingdom | 0.01 | 0.13 | 0.10 | 0.03 | 0.00 | 0.11 | 0.03 | −0.04 |

| China | 0.14 | 0.10 | 0.06 | −0.02 | 0.11 | 0.00 | 0.12 | 0.15 |

| Germany | 0.01 | 0.09 | 0.14 | −0.03 | 0.03 | 0.12 | 0.00 | 0.06 |

| USA | 0.10 | 0.11 | 0.02 | −0.01 | −0.04 | 0.15 | 0.06 | 0.00 |

| Model | Origin | Power Distance (PDI) | Individualism (IDV) | Masculinity (MAS) | Uncertainty Avoidance (UAI) | Long Term Orientation (LTO) | Indulgence (IVR) |

|---|---|---|---|---|---|---|---|

| ChatGPT | China | 21.4 | 23.5 | 18.36 | 43.74 | 2.21 | 13.76 |

| USA | 35.21 | 36.17 | 23.8 | 25.03 | 19.51 | 7.14 | |

| Germany | 16.93 | 19.1 | 24.93 | 25.2 | 6.97 | 3.87 | |

| United Kingdom | 17.45 | 21.38 | 30.27 | 17.92 | 11.21 | 9.36 | |

| France | 15.94 | 21.59 | 46.75 | 7.69 | 23.59 | 6.06 | |

| India | 13.4 | 2.35 | 18.02 | 24.92 | 18.83 | 8.15 | |

| Japan | 8.21 | 10.58 | 17.37 | 9.47 | 5.53 | 1.58 | |

| Saudi Arabia | 38.5 | 20.13 | 20.43 | 44.7 | 25.3 | 12.17 | |

| DeepSeek | China | 22.64 | 26.15 | 24.05 | 44.3 | 4.22 | 16.29 |

| USA | 34.58 | 38.01 | 24.26 | 21.83 | 28.02 | 10.79 | |

| Germany | 17.37 | 25.48 | 28.59 | 25.94 | 8.1 | 4.59 | |

| United Kingdom | 18.12 | 27.49 | 32.12 | 18.45 | 15.03 | 12.98 | |

| France | 17.12 | 26.74 | 40.37 | 8.32 | 25.02 | 7.67 | |

| India | 14.01 | 3.03 | 21.13 | 25.66 | 20.79 | 9.51 | |

| Japan | 8.99 | 11.15 | 18.75 | 11.06 | 6.47 | 2.5 | |

| Saudi Arabia | 41.06 | 25.47 | 21.9 | 44.12 | 17.38 | 15.68 | |

| Origin | Power Distance (PDI) | Individualism (IDV) | Masculinity (MAS) | Uncertainty Avoidance (UAI) | Long Term Orientation (LTO) | Indulgence (IVR) | |

| Differences Between ChatGPT and Deepseek | China | 1.24 | 2.65 | 5.69 | 0.56 | 2.01 | 2.53 |

| USA | −0.63 | 1.84 | 0.46 | −3.2 | 8.51 | 3.65 | |

| Germany | 0.44 | 6.38 | 3.66 | 0.74 | 1.13 | 0.72 | |

| United Kingdom | 0.67 | 6.11 | 1.85 | 0.53 | 3.82 | 3.62 | |

| France | 1.18 | 5.15 | −6.38 | 0.63 | 1.43 | 1.61 | |

| India | 0.61 | 0.68 | 3.11 | 0.74 | 1.96 | 1.36 | |

| Japan | 0.78 | 0.57 | 1.38 | 1.59 | 0.94 | 0.92 | |

| Saudi Arabia | 2.56 | 5.34 | 1.47 | −0.58 | −7.92 | 3.51 |

| ChatGPT Gender KL | DeepSeek Gender KL | ||||||

|---|---|---|---|---|---|---|---|

| Gender | Female | Male | Non-Binary | Gender | Female | Male | Non-Binary |

| Female | 0.000 | 1.260 | 4.867 | Female | 0.000 | 1.468 | 8.771 |

| Male | 1.260 | 0.000 | 3.964 | Male | 1.468 | 0.000 | 5.897 |

| Non-binary | 4.867 | 3.964 | 0.000 | Non-binary | 8.771 | 5.897 | 0.000 |

| ChatGPT Gender KL | DeepSeek Age KL | ||||||

|---|---|---|---|---|---|---|---|

| Age | 25 | 45 | 65 | Age | 25 | 45 | 65 |

| 25 | 0.000 | 1.578 | 3.398 | 25 | 0.000 | 1.586 | 2.970 |

| 45 | 1.578 | 0.000 | 2.314 | 45 | 1.586 | 0.000 | 1.203 |

| 65 | 3.398 | 2.314 | 0.000 | 65 | 2.970 | 1.203 | 0.000 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Andreev, H.; Kosmas, P.; Livieratos, A.D.; Theocharous, A.; Zopiatis, A. Destination (Un)Known: Auditing Bias and Fairness in LLM-Based Travel Recommendations. AI 2025, 6, 236. https://doi.org/10.3390/ai6090236

Andreev H, Kosmas P, Livieratos AD, Theocharous A, Zopiatis A. Destination (Un)Known: Auditing Bias and Fairness in LLM-Based Travel Recommendations. AI. 2025; 6(9):236. https://doi.org/10.3390/ai6090236

Chicago/Turabian StyleAndreev, Hristo, Petros Kosmas, Antonios D. Livieratos, Antonis Theocharous, and Anastasios Zopiatis. 2025. "Destination (Un)Known: Auditing Bias and Fairness in LLM-Based Travel Recommendations" AI 6, no. 9: 236. https://doi.org/10.3390/ai6090236

APA StyleAndreev, H., Kosmas, P., Livieratos, A. D., Theocharous, A., & Zopiatis, A. (2025). Destination (Un)Known: Auditing Bias and Fairness in LLM-Based Travel Recommendations. AI, 6(9), 236. https://doi.org/10.3390/ai6090236