1. Introduction

The success of a web page depends on more than just content and its functionality. The layout of the web page is often overlooked, but it can dictate how users consume information and how they interact with the page. The layout of a web page is the structure and arrangement of its visual elements. It is the way content, such as text, images, buttons, and navigation menus, is organized and positioned on a page. Despite its importance, analyzing web page layout has traditionally depended on browser rendering or browser-based automation tools. Analyzing web page layouts purely from code (HTML and CSS) without using a browser has received much less attention. This leaves a significant gap in existing research. Existing techniques that depend on browser rendering can be sensitive to differences in browser states. Factors like different window sizes, user settings, or even ad blockers can alter the visual output of the same web page, potentially leading to inconsistent or unreliable analysis. By contrast, our approach, which analyzes web page layouts directly from the source code, is less susceptible to these browser-related issues. By bypassing the rendering step, our method aims to provide more consistent results regardless of the device or user environment. We hope this work offers a new, more consistent perspective on web UI analysis.

A well-designed layout is like an invisible hand that guides users and provides a pleasant user experience. Usability should be one of the first objectives kept in mind when building a web page. This becomes even more critical in our multi-device world, where the same content must seamlessly adapt to screens of all shapes and sizes.

A good user interface layout creates a visual hierarchy that guides users through the most important content. It also helps maintain consistency, making the application feel like a unified and intuitive whole. Even white space is important; it improves readability and helps avoid a cluttered look. Small errors in layout can negatively impact the user experience and make a web application difficult to use. This is why understanding and analyzing the impact of web layout is so crucial for creating effective web pages/applications.

The information architecture (IA) of a web application is also crucial to the user experience. IA is a way of organizing content to make a webpage easy to use. While a layout ensures elements are correctly positioned, IA focuses on the efficiency and clarity of the user interface (UI) so users can navigate it easily. According to Rosenfeld et al., “Good information architecture design is informed by all three areas [users, content, context], and all three are moving targets” [1].

Poor IA can lead to user frustration, no matter how visually appealing the layout (or the content) is. Even a correct layout can fail if it lacks a clear and consistent information structure.

In this paper, we focus primarily on the spatial and visual aspects of layout. However, we recognize that these aspects are interconnected with the higher-level information architecture, which influences how users find and understand the structured content within the interface.

Different approaches to implementing a web layout offer distinct advantages and disadvantages, especially given the diverse set of devices people use. The main approaches are as follows:

Fixed Layout: This approach uses constant width (and height) values for elements, regardless of screen dimensions. It is straightforward to design and predictable in behavior, but it is not ideal for users accessing the site on mobile devices, as content may appear cut off or require excessive zooming. This approach is no longer used today; it is included here to show a historical point in the evolution of layout methods.

Fluid Layout: Instead of fixed values, this method utilizes relative units like percentages. This allows content to expand and contract based on screen size. Although it offers flexibility, if not implemented correctly, it can often lead to layout errors and become difficult to maintain.

Adaptive Layout: This type uses predefined width breakpoints, applying different layouts for each. This allows the layout to be adaptable to specific predetermined screen sizes. The drawback is that it does not behave fluidly between these breakpoints, meaning transitions can appear abrupt.

Responsive Layout: This modern approach combines fluid grids, flexible images, and media queries (special rules that apply styles based on the browser window or screen type). This allows layouts to dynamically adjust to any screen width, offering a seamless experience across all devices. Ethan Marcotte provides an excellent overview of the core technologies needed for responsive design in his work [2].

Beyond traditional strategies, modern web design uses new techniques to meet user expectations and work on different devices. Single-page applications (SPAs) and long-page designs improve interaction by preventing page reloads and allowing continuous scrolling. In the Mozilla documentation, SPA is defined as follows: “SPA is a web app implementation that loads only a single web document, and then updates the body content of that single document via JavaScript APIs … ” [3]. To address performance issues on smaller devices, Accelerated Mobile Pages (AMP) and other mobile solutions were created. These focus on speed and delivering lightweight content. In their official documentation, the AMP Project team writes, “AMP is a web component framework to easily create user-first experiences for the web.” [4].

These approaches, along with frameworks like Bootstrap or Material Design, show that layout is part of a larger design ecosystem shaped by Web 2.0 and Web 3.0 developments. The existence of so many different ways to create layouts highlights the complexity of modeling a web application’s layout from its source code alone. Although our work focuses on the spatial structure of layouts from source code, understanding these broader design techniques helps provide context for the many solutions developers use to implement web layouts.

Displaying a user interface (and verifying it is correctly displayed) is often not a straightforward task. Unlike a lot of other types of graphical user interfaces, the layout of elements in web-based applications needs to be robust, ensuring usability across a variety of conditions. Many factors influence the appearance of a web application’s user interface. Despite existing standards for interpreting HyperText Markup Language (HTML), Cascading Style Sheets (CSS), and JavaScript code, each web browser has its own interpretation. This sometimes leads to the same web page appearing differently in various browsers. This problem is now almost solved, but developers should still check if the web page has the same appearance on different browsers. Different browsers still have some differences in displaying some controls, like a date picker and a scroll bar, and in the case of some new controls (or in the case of new or updated standards), discrepancies may occur until they settle. Also, users can access web applications on a wide range of devices, each with a different hardware configuration. This includes screen size and resolution, display type, and the kind of input devices available. The operating system on which a user accesses a web application is not uniform for all users and can affect how the user interface is displayed and functions. Even changing the language can impact the correct display of a web application. Some text labels might be longer or shorter than the labels used during the application’s development, potentially disrupting the layout. The content within web applications can change dynamically. Any alteration in content might lead to an incorrect display of the user interface’s element layout. We referenced these claims in the related work section.

Understanding these factors is crucial for developers and designers aiming to create adaptable and user-friendly web experiences, and for testers verifying that a web page behaves as expected. Having a method that can translate the structure, layout, and position of webpage elements into a format a neural network can understand would open up new ways to integrate machine learning into this complex ecosystem. Although some existing tools and approaches use machine learning, to our knowledge, none can infer a webpage’s layout data solely from its source code (CSS and HTML).

There are many approaches for analyzing a web page’s visual appearance. These analyses are conducted in various fields, and as we will see in the next section, most of them rely on using web browser data. In this paper, we propose a solution for the part of the analysis that requires information on the spatial relationships of elements on a web page. This solution is based solely on the static source code and does not need information from a web browser or an automation solution that uses a web browser directly or indirectly, like Selenium. There are a few important benefits this approach offers.

Our method offers significant potential advantages, particularly regarding performance, determinism, and security. Methods relying on web browsers must load the entire web page, which consumes considerable memory and time, as they need to load all multimedia and scripts in addition to HTML and CSS. We could not directly compare time and memory usage because the source code of the dataset’s methods is unavailable; nevertheless, since our approach skips loading multimedia and executing scripts, we expect it to be more efficient. Furthermore, methods that depend on web browsers are susceptible to issues such as advertisements, browser version, and the current state of the browser at the time of analysis. In contrast, methods that rely solely on source code avoid these problems, as they will always produce the same output for the same input. Finally, in terms of security, methods that depend on web browsers are exposed to potential security vulnerabilities if they analyze malicious websites, as harmful scripts can be executed along with the page. The method presented in this paper is not subject to such attacks because it does not execute scripts from the web page, nor are web pages parsed within a web browser. Despite progress in web page analysis tools, most current methods depend on web browsers or browser automation. As we have discussed, these methods have drawbacks related to performance, predictability, and security.

However, very little research has explored whether source code alone (HTML and CSS) can accurately model the spatial relationships between web page elements. This is the gap our study aims to fill.

The purpose of this paper is to determine if neural networks can learn spatial information about web page layouts from just their static source code. During training, labels are calculated using DOM information. However, for inference, only the static source code is used as input, without any additional data. To guide this, we focus on three research questions:

Can web page layouts be accurately represented using only HTML and CSS, without a browser?

Can neural networks learn the spatial relationships between elements from this representation?

How well can these learned representations support applications like evaluating web page similarity?

The main hypothesis of this paper is that web pages can be modeled using neural networks that learn the spatial information of elements solely from their source code (HTML and CSS), and that this information can then be used in applications such as web page similarity evaluation.

This paper is organized into an Introduction, Related Work, Methods, Results, and Discussion. The Methods section introduces all components of our approach and their connectivity, along with a detailed explanation of our methodology. The Results section presents the training results for all key components and the evaluation outcomes of the model in the application of web page similarity evaluation. The Discussion section offers final remarks regarding the results and concludes the paper, addressing study limitations and outlining future work.

2. Related Work

Analysis of web-based user interfaces typically involves three main approaches: web browser data analysis (often via the Document Object Model, DOM), screenshot analysis, and manual human analysis. These methods can be employed individually or in combination. A comprehensive overview of these methods and their research applications is provided by I. Prazina et al. [5].

Web phishing detection is one area where web page visual analysis is crucial. In this context, it is important to recognize malicious sites that mimic legitimate ones by adopting their visual appearance. J. Mao et al. [6] address this using CSS selectors and attributes for DOM-based classification. Similarly, other phishing detection methods that utilize visual similarity and are also based on DOM data are described by Zhang et al. [7] and Rosiello et al. [8].

Another significant research area is software testing, where the early detection of visual appearance errors on web pages is paramount to mitigating potential financial and other damages. While numerous studies address UI testing based on control flow, a subset specifically focuses on UI layout testing. The work by T. A. Walsh [9] serves as a key inspiration for our method; it analyzes web page layouts by employing layout graphs, in which elements are depicted as nodes and their layout relations as edges. This aligns substantially with our method’s emphasis on modeling web page layouts, hierarchical context, and spatial relationships. Furthermore, the analyses by Ryou et al. [10] and Althomali et al. [11] also utilize layout graphs to detect UI errors.

Classic usability research offers principles that guide effective interface design. Nielsen’s ten usability heuristics [12], in particular, are still very important for evaluating graphical user interfaces. They focus on things like visibility of the system status, consistency, error prevention, and aesthetic design. These heuristics have been commonly used to assess usability in both desktop and web applications, and they continue to influence how we design modern web and mobile experiences. While Nielsen’s heuristics and similar UX frameworks focus on qualitatively evaluating usability, our work tackles a different but related challenge: the automatic, quantitative analysis of web page layouts directly from their static source code.

Layout errors can break several of Nielsen’s principles. For instance, a layout that gets cluttered goes against aesthetic and minimalist design, and inconsistent spacing or alignment in a layout disrupts consistency. By modeling the spatial relationships between web elements, our approach provides technical tools that could help support some of these evaluations.

Roy Choudhary et al. [13] and Mahajan et al. [14] address the problem of ensuring consistent web page layouts across different web browser environments. More recently, Watanabe et al. [15] proposed a method that combines screenshots and DOM information to find similar layout errors. The results were compared with popular tools used in cross-browser testing. We raise this very problem in our introduction, noting how a web page’s appearance can change depending on the browser used. Although modern browsers have largely solved this, some inconsistencies can still appear in certain browsers. Another set of problems involves layout errors caused by internationalization. As mentioned in the introduction, these errors can occur when a web page’s language is changed. For instance, the width of certain labels might change because the translated text is either longer or shorter than the original. Alameer et al. [16,17] discuss this issue and offer solutions. Layout problems can also happen due to dynamic content changes. In their paper [18], S. Kolla et al. note, “Dynamic content represents one of the most persistent challenges in snapshot testing, creating frequent false positives that undermine confidence in test results”. This highlights how problematic dynamic content can be for developers. Our method could help with some of the problems mentioned above. Predicting spatial relationships directly from the source code might offer insight into why these layout problems occur. It could even work as a lightweight pre-rendering analysis tool, helping detect potential layout issues before they appear in different browsers.

However, a commonality among these approaches is their reliance on DOM data obtained either directly from a web browser or through an automation framework such as Selenium.

Another related problem in web page testing is the consistency of element locators. Elements in different versions of a web page can have different IDs, classes, and attributes, which can cause automated tests to fail. Ricardo Coppola et al. addressed this problem in their paper [19], offering a comparison of approaches to solve it. This work on resilient element location has some similarity with our approach because it also analyzes the underlying structure of a web page. However, their goal is different: they aim to identify key components for a more robust testing process.

The analysis of layouts is an important part of web page similarity assessment or modeling human perception of web pages. In their paper, Bozkir et al. [20] directly address the layout-based computation of web page similarity, which constitutes a core component of this paper’s model evaluation. Their work also offers a valuable dataset of websites. Furthermore, M. Bakaev et al. [21] present another method that assesses similarity using Artificial Neural Networks (ANN). An earlier contribution by R. Song [22] focuses on recognizing important blocks within a layout. Their research aims to identify and understand the significant components within a web page for applications such as information retrieval or summarization through the analysis of both structural arrangement and textual content. Web page segmentation is a relevant technique that partitions a page into semantically coherent blocks. Huynh et al., in their paper [23], aim to extract the main content and disregard irrelevant regions based on structural information from the DOM tree. While their goal is different, this method is similar to ours because it also leverages the underlying structure of a web page rather than relying on rendered images for analysis.

Although recent studies have shown that source code can be used for layout tasks, their methods often rely on complex or computationally intensive frameworks. For example, Cheng et al. [24] use neural networks to assess the quality of the web page from the DOM tree, which means that they are restricted to using the DOM during both training and inference. Another paper that extracts information from HTML is [25] by Kawamura et al., which focuses only on HTML tables and does not use CSS for its analysis. Other papers, like [26] by Seol et al. and [27] by Tang et al., focus on generating new layouts rather than analyzing existing ones, a problem that is outside the scope of our method. In contrast, our approach is designed to be lightweight, predictable, and secure by working only with static HTML and CSS, which offers potential advantages in both performance and consistency.

In the literature review, we identified various fields that address the challenges of web page layout analysis. Some techniques analyze screen images, others analyze the tree structure of the DOM, and some combine both types of information (for more details, see [5]). However, most methods are based on a web browser or an environment that uses one in some way. Those methods that do not depend on a web browser are typically focused on very specific applications.

This dependence on browsers often necessitates frequent updates to these methods and their tools due to browser changes. In addition, it makes the analysis results susceptible to various factors. These include the web browser’s state at the time of analysis, the web page’s current state (such as its data, script states, and advertisements), and network conditions.

We see that many fields benefit from the spatial information of web page elements. An approach that could provide this information without directly using a web browser would solve the aforementioned problems and open opportunities for further research and cooperation among different fields.

3. Methods

In this section, we will describe our approach and all its parts. Unlike other approaches, the method presented here utilizes web browser outputs as reference values only during the training phase; after training, only the source code is needed. This paves the way for addressing numerous issues such as performance, security, and analysis consistency, which will be discussed further. We will illustrate how this approach solves these problems through one of its model applications.

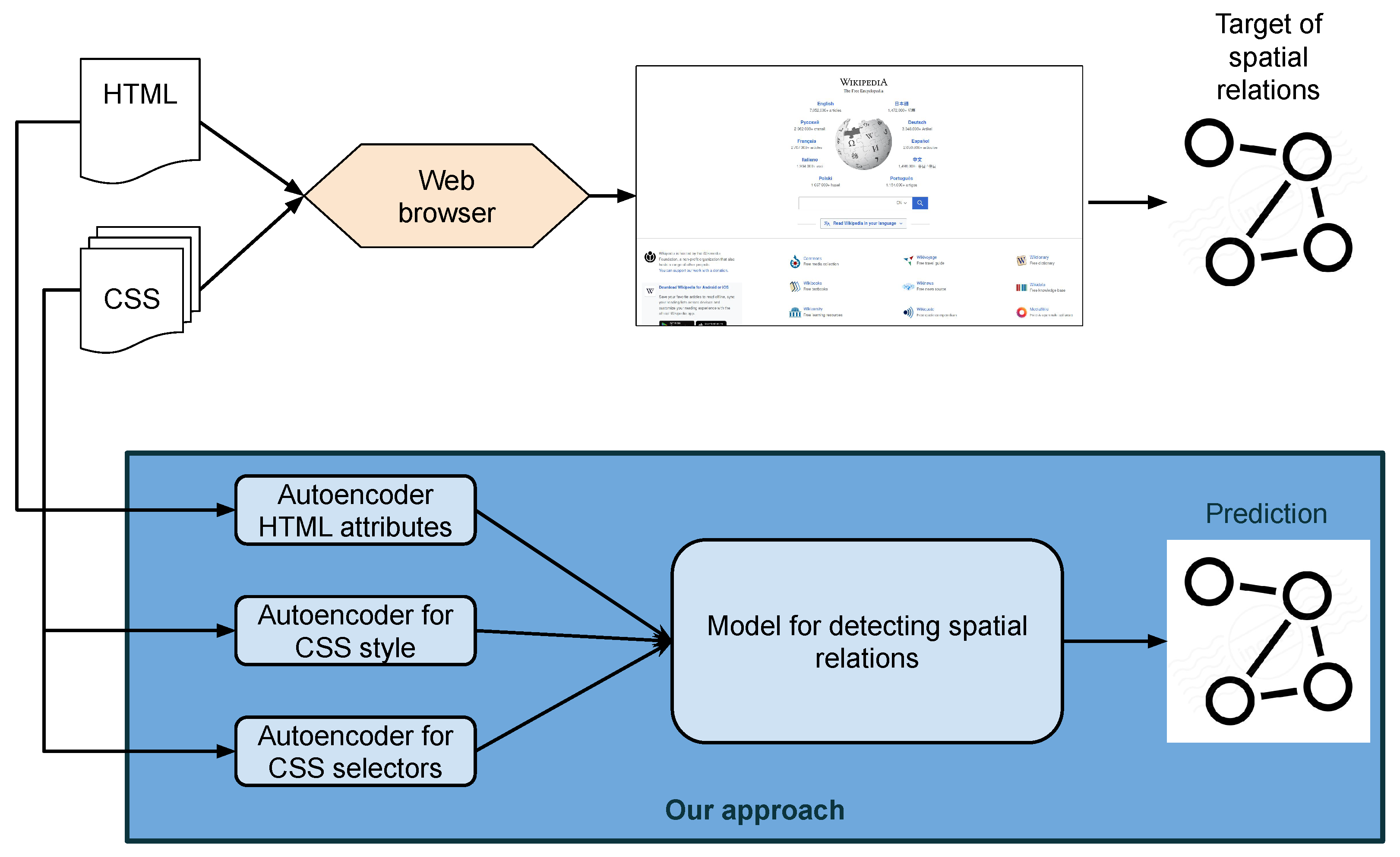

The approach is based on encoding parts of HTML and CSS using autoencoders made and trained for this paper. This step translates string inputs into corresponding vectors suitable for further processing within machine learning models based on neural networks. The second part of the approach combines different segments of the source code for each web page element to create a relevant vector per element, which can then be used to identify spatial relationships. The scheme of the approach can be seen in Figure 1.

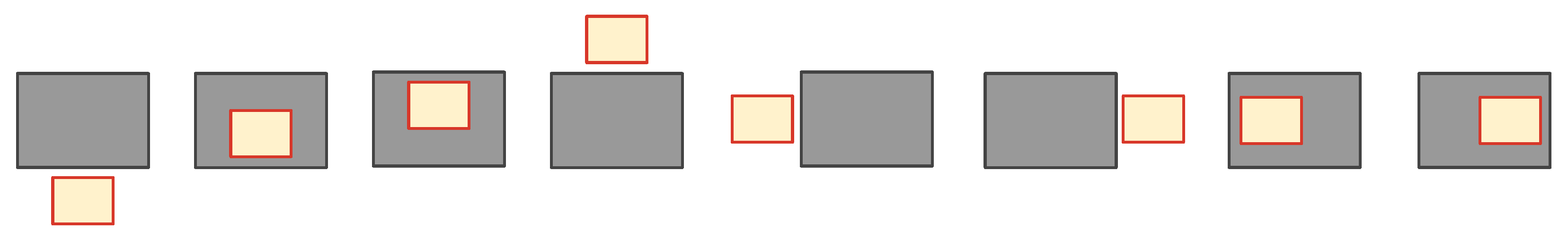

Our approach is designed to analyze HTML and its associated CSS files. It does not consider the state of the web browser, nor does it account for scripts or the changes they induce on a page. Given any pair of elements, our method can identify their spatial relationships, recognizing the 8 distinct spatial relations (or their absence) (Figure 2).

While currently not implemented, the approach can be easily extended to recognize other spatial relations, such as element overlap, the overlap of two or more edges, or whether one element contains another. The output of our approach is an array of 8 values, with each value representing the probability that two elements share a given spatial relationship. Currently, the approach cannot work with dynamically added or modified content. However, if a script were available to save the HTML and CSS state at the moment of change, our method could then analyze such pages.

3.1. Autoencoders

To convert web page elements into vectors, we need to encode several key components: the style of HTML elements, the style selectors applied to those elements, and the attributes of the HTML elements themselves. These parts of the user interface specification include not only predefined keywords but also a large number of arbitrarily chosen words and values, such as class names, IDs, textual and numerical content, and web links.

To adapt this varied content for the final model, which will determine the spatial relationships of user interface elements based on the provided source code, it is essential to encode it into a fixed-length vector. For this task, we employed an autoencoder built upon a Long Short-Term Memory (LSTM) neural network. LSTM was chosen over a Transformer architecture due to its simplicity, performance, and suitability for the specific problem we are solving. While Transformer networks are often more appropriate for encoding natural language, their complexity is excessive for the type of content encoding required here.

Before training the model, we preprocessed the data. Web pages often contain a large number of classes and IDs, whose values can be either words from natural language or generated words with no specific meaning. Such values make it difficult for the autoencoder model to learn the structure of the elements it needs to encode and unnecessarily increase the complexity of the training dataset. The actual meaning of a class or ID name within a web page is not essential for determining its style and appearance. The only crucial aspect is that an ID name is unique within the web page and that a class name does not collide with other class names. Bearing this in mind, we can replace all classes and IDs within a web page with unique placeholder values, one pattern for classes and one for IDs, each containing a CODE part, where CODE is a generated sequence of random values unique to a given class or ID.
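As an illustration of this renaming step, the following Python sketch replaces class and ID names consistently in an HTML string and in its CSS selectors. It is a minimal sketch under stated assumptions, not the preprocessing code used in this paper: the function name `anonymize_names`, the placeholder prefixes `c` and `i`, and the regex-based matching are all hypothetical choices made for the example, and a real pipeline would use proper HTML and CSS parsers.

```python
import re
import secrets

# Match class="..." and id="..." attributes, but not e.g. data-id="...".
CLASS_ATTR = re.compile(r'(?<![\w-])class="([^"]*)"')
ID_ATTR = re.compile(r'(?<![\w-])id="([^"]*)"')
# Match ".name" and "#name" tokens in CSS (regex approximation of selectors).
CSS_NAME = re.compile(r"([.#])(-?[_a-zA-Z][\w-]*)")


def anonymize_names(html, css, make_code=lambda: secrets.token_hex(4)):
    """Replace class and ID names with generated placeholders.

    The "c"/"i" prefixes are hypothetical; the paper's exact pattern is
    not reproduced here.
    """
    class_map, id_map = {}, {}

    def code_for(name, mapping, prefix):
        # Reuse the same placeholder every time the same name appears.
        if name not in mapping:
            mapping[name] = prefix + make_code()
        return mapping[name]

    def replace_classes(match):
        names = match.group(1).split()
        return 'class="' + " ".join(code_for(n, class_map, "c") for n in names) + '"'

    def replace_id(match):
        return 'id="' + code_for(match.group(1).strip(), id_map, "i") + '"'

    html = CLASS_ATTR.sub(replace_classes, html)
    html = ID_ATTR.sub(replace_id, html)

    def rewrite_selector(match):
        sigil, name = match.group(1), match.group(2)
        mapping = class_map if sigil == "." else id_map
        # Unknown tokens (for example the color #fff) are left unchanged.
        return sigil + mapping.get(name, name)

    css = CSS_NAME.sub(rewrite_selector, css)
    return html, css, class_map, id_map
```

Because the CSS is rewritten in a single pass, a freshly generated placeholder can never be mistaken for an original name and renamed a second time. One known limitation of the regex shortcut is that a class whose name equals a file extension inside a `url(...)` value would also be rewritten.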

Beyond replacing classes and IDs, URLs within attributes and style values significantly impact the length of strings that need encoding. These values are considerably longer than attributes directly contributing to the style and final layout of user interface elements, and they can hinder the autoencoder’s ability to encode useful information. For this reason, we decided to remove all links from attributes and styles before encoding. We achieved this by utilizing the PostCSS (“PostCSS is a tool for transforming styles with JS plugins” [28]) and PurgeCSS (“PurgeCSS is a tool to remove unused CSS from your project” [29]) libraries.
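The effect of link removal can be illustrated with a short Python sketch. This is a regex-based stand-in written only for illustration; it is not the PostCSS/PurgeCSS pipeline described above, and the function name `strip_links` and the list of link-carrying attributes are assumptions.

```python
import re

# url(...) values in CSS, with optional single or double quotes.
CSS_URL = re.compile(r"""url\(\s*(['"]?).*?\1\s*\)""", re.IGNORECASE)
# Attributes assumed to carry links (hypothetical, non-exhaustive list).
LINK_ATTR = re.compile(
    r"""\s+(?:href|src|srcset|action)\s*=\s*(?:"[^"]*"|'[^']*'|[^\s>]+)""",
    re.IGNORECASE,
)


def strip_links(html, css):
    """Drop link attributes from HTML and empty out url(...) values in CSS."""
    html_without_links = LINK_ATTR.sub("", html)
    # Keep the declaration itself so the property name stays visible to the encoder.
    css_without_links = CSS_URL.sub("url()", css)
    return html_without_links, css_without_links
```

Emptying `url(...)` instead of deleting the whole declaration is a design choice of this sketch: it shortens the string while keeping the property name, such as `background`, available as a signal.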

Autoencoder Model and Structure

Our autoencoder was implemented in the PyTorch 2.7.0+cu118 environment, utilizing the following layers:

Embedding Layer: This PyTorch module represents discrete token values as continuous values, making them suitable for an LSTM network. Mapping from the token space to a continuous value space is essential because LSTMs are not designed to directly process words or text. In PyTorch, the embedding layer is realized as a special lookup table where each input token index returns a tensor of a predefined dimension (known as the embedding dimension). During training, the returned tensor for a given index is adjusted to represent increasingly meaningful values.

LSTM Encoder: This LSTM module is responsible for encoding the sequence of tokens, which have already been mapped to continuous values by the Embedding layer.

Linear Bottleneck: A linear layer designed to reduce the dimensionality of the tensor. The output of this layer serves as the encoded value of the elements for other models.

Linear ‘Debottleneck’: A linear layer that increases the dimensionality of the tensor, effectively reversing the bottleneck’s operation.

LSTM Decoder: An LSTM model used to decode the output of the ‘debottleneck’ layer, with the aim of reconstructing the original input.

Linear Output: A final linear layer that maps the output of the LSTM decoder back into the space of the token vocabulary.

We built the autoencoder as a PyTorch module, incorporating the previously mentioned layers, each implemented using its corresponding PyTorch module. This model expects a BPE (Byte Pair Encoding) encoded string sequence as input, representing the element to be encoded.

The model’s output has the shape $(B, L, V)$. Here, $B$ refers to the batch size used during training, $L$ is the length of the BPE sequence after padding (all strings have a uniform length after encoding), and $V$ represents the size of the BPE token vocabulary. This output signifies the probability that each token from the given vocabulary appears at every position within the sequence. A visual representation of this autoencoder’s scheme can be found in Figure 3.
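The layer sequence described above can be sketched as a small PyTorch module. This is a minimal sketch under stated assumptions rather than the trained model from this paper: the class name `LSTMAutoencoder`, all layer sizes, the use of the final hidden state as the sequence summary, and the choice to feed the decoder by repeating the expanded vector at every position are illustrative assumptions.

```python
import torch
from torch import nn


class LSTMAutoencoder(nn.Module):
    """Embedding -> LSTM encoder -> bottleneck -> debottleneck -> LSTM decoder -> output."""

    def __init__(self, vocab_size, embedding_dim=64, hidden_dim=128,
                 bottleneck_dim=32, pad_id=0):
        super().__init__()
        # All sizes here are hypothetical defaults chosen for the example.
        self.embedding = nn.Embedding(vocab_size, embedding_dim, padding_idx=pad_id)
        self.encoder = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)
        self.bottleneck = nn.Linear(hidden_dim, bottleneck_dim)
        self.debottleneck = nn.Linear(bottleneck_dim, hidden_dim)
        self.decoder = nn.LSTM(hidden_dim, hidden_dim, batch_first=True)
        self.output = nn.Linear(hidden_dim, vocab_size)

    def encode(self, token_ids):
        """Return the fixed-length code of shape (batch, bottleneck_dim)."""
        embedded = self.embedding(token_ids)        # (batch, seq_len, embedding_dim)
        _, (last_hidden, _) = self.encoder(embedded)
        return self.bottleneck(last_hidden[-1])     # final hidden state summarizes the sequence

    def forward(self, token_ids):
        """Return logits of shape (batch, seq_len, vocab_size)."""
        seq_len = token_ids.size(1)
        expanded = self.debottleneck(self.encode(token_ids))    # (batch, hidden_dim)
        # Assumption: the decoder sees the same expanded code at each position.
        repeated = expanded.unsqueeze(1).repeat(1, seq_len, 1)  # (batch, seq_len, hidden_dim)
        decoded, _ = self.decoder(repeated)
        return self.output(decoded)
```

The sketch returns unnormalized logits; applying a softmax over the last dimension yields the per-position token probabilities described above, and a cross-entropy loss against the input tokens would be a natural reconstruction objective.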

Autoencoders, by their nature, aim to learn a compressed version of their input during the training process. This type of learning is known as representation learning, in which the model extracts the fundamental and representative information from the given input by compressing the data. The input to our system is source code (CSS for style and HTML for structure). Although this code is structured, its complexity can vary significantly across different elements. Additionally, some parts of HTML and CSS do not directly influence the user interface element layout we are modeling. These two aforementioned facts are precisely why this type of data compression and unification is necessary. This means that every element is represented by a tensor of consistent length and structure, encapsulating the most significant information from the given code.

3.2. Finding Target Spatial Relations and Layout Graph

In the literature review, it can be observed that some of the methods analyzing web-based user interfaces represent element layouts as a graph. In these graphs, nodes represent individual elements, and edges depict the type of spatial relationship between two elements. This method of representing elements simplifies the comparison of similar user interfaces and provides a way for the identification of differences during regression testing.

The layout graph also provides a useful representation for responsive pages. Here, information can be added within the edges to indicate at which resolutions a particular relationship holds true. This approach allows for effective modeling of changes due to responsiveness.
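A layout graph with resolution-annotated edges can be sketched in a few lines of Python. The class names, the relation labels, and the use of viewport widths as the resolution key are hypothetical choices for this illustration and are not taken from the cited works.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Edge:
    source: str    # key of the first element
    target: str    # key of the second element
    relation: str  # spatial relation label (hypothetical names)


@dataclass
class LayoutGraph:
    nodes: set = field(default_factory=set)
    # Maps each edge to the set of viewport widths at which it holds.
    edges: dict = field(default_factory=dict)

    def add_relation(self, source, target, relation, viewport_width):
        self.nodes.update((source, target))
        edge = Edge(source, target, relation)
        self.edges.setdefault(edge, set()).add(viewport_width)

    def relations_at(self, viewport_width):
        """Edges that hold at the given viewport width."""
        return {edge for edge, widths in self.edges.items() if viewport_width in widths}


def changed_relations(old, new, viewport_width):
    """Edges present in exactly one of the two graphs at this width."""
    return old.relations_at(viewport_width) ^ new.relations_at(viewport_width)
```

Comparing two versions of a page with `changed_relations` gives the kind of difference report that regression testing relies on: an empty set means the spatial relations at that width are unchanged.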

The layout graph was an inspiration for our method. Our approach obtains the edges from the representational vectors of the nodes. To produce target values for the edges, we need the real state of each element as displayed in a web page. This information is obtained from the Selenium WebDriver DOM (Document Object Model) data structure; each element’s bounding rectangle is used. To efficiently identify relationships between all elements, we need a structure that allows for effective searching based on element proximity. When considering the proximity between two elements in terms of spatial relations between their edges, there are four horizontal pairs and four vertical pairs of edges (see Figure 2). Given that this search involves multiple dimensions when looking for an element’s neighbors, a K-D Tree emerges as a suitable structure. The search procedure is explained in Algorithm 1.

The K-D tree construction process happens recursively. First, the array of elements is sorted along the current dimension. Then, the median element is found, and a new tree node is created based on this median element. After the node is created, the process repeats for the current node’s left and right children. The left child will receive half of the array with smaller values along the current dimension, and the right child will receive the other half. This process continues for new levels of the tree until, at the step where the array needs to be split, it contains no more than one element. If the array contains exactly one element, a node is simply created for that element, and it is placed in the appropriate position in the tree as a leaf.
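The recursive construction described above can be sketched in Python as follows. The node layout, the function name `build_kd_tree`, and the choice of a four-dimensional point (the left, top, right, and bottom coordinates of a bounding rectangle) are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KDNode:
    key: str
    point: tuple
    left: Optional["KDNode"] = None
    right: Optional["KDNode"] = None


def build_kd_tree(items, depth=0, k=4):
    """Build a K-D tree from (key, point) pairs, where each point has k coordinates."""
    if not items:
        return None
    axis = depth % k  # dimension used for splitting at this level
    ordered = sorted(items, key=lambda item: item[1][axis])
    median = len(ordered) // 2
    key, point = ordered[median]
    return KDNode(
        key=key,
        point=point,
        # Smaller values along the current dimension go to the left child.
        left=build_kd_tree(ordered[:median], depth + 1, k),
        # The remaining values go to the right child.
        right=build_kd_tree(ordered[median + 1:], depth + 1, k),
    )
```

Sorting at every level keeps the sketch short at the cost of O(n log² n) construction time; a single-element list simply becomes a leaf with no children, matching the termination condition described above.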

A K-D Tree is a binary tree in which each node represents a k-dimensional point. This tree organizes elements by alternately dividing the k-dimensional space by levels, with each level bisecting a specific dimension of that space. For our search, we use a pair of values, $(a, b)$, which indicates the direction along which we want to align edges, where $a$ and $b$ can be top, bottom, left, or right. To derive the spatial relations for each element, we initiate a nearest neighbor search for the 8 possible pairs of $(a, b)$, which yields up to 8 potential spatial relations. In some instances, an element may not have neighbors for every $(a, b)$ pair. For example, an element positioned along the left edge of the screen might not have another element to its left. In such cases, that particular spatial relationship will not be detected.

Algorithm 1. Finding near elements using K-D Tree

Require: Tree is constructed

    function findNear(…)
        …
        function nearSearch(currentRoot, depth)  ▹ Function is recursively called to search through the tree
            if … then
                return
            end if
            distanceTC ← sideDistance(dir, targetNode.rect, currentRoot.rect)  ▹ Function sideDistance calculates the Euclidean distance between the centers of edges
            if isRightSide(targetNode, currentRoot, dir) AND currentRoot.key ≠ targetNode.key then  ▹ Function isRightSide checks whether the edges are in the right order; for example, if the left-right direction is used, the x position of the first edge (distance from the left side of the screen) should be less than the x position of the second edge
                if closest ≠ null AND distanceTC < sideDistance(dir, targetNode, closest) then
                    …
                else if closest ≠ null AND distanceTC = sideDistance(dir, targetNode, closest) then
                    closest.node.push(currentRoot)
                else
                    …
                end if
            end if
            …
            …
            if … then  ▹ If we are at the depth of the tree that corresponds to the search dimension (position of the edge we are interested in), then we can prune the search
                if isRightSide(targetNode.rect, currentRoot.childL.rect, dir) then
                    nearSearch(…)
                else if isRightSide(targetNode.rect, currentRoot.childR.rect, dir) then
                    nearSearch(…)
                end if
            else  ▹ Pruning cannot be done because we are at a depth that does not correspond to the search dimension; both sides of the tree need to be searched
                if … then
                    nearSearch(…)
                end if
                if … then
                    nearSearch(…)
                end if
            end if
            return
        end function
        nearSearch(this.root, 0)
        return …
    end function
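As a small illustration of the distance used in Algorithm 1, the sketch below computes the Euclidean distance between the centers of two edges. It assumes rectangles are given as `(x, y, width, height)` with the origin at the top-left corner, and that the first side of the direction pair belongs to the target element and the second to the candidate element. The helper names `edge_center` and `side_distance` are hypothetical.

```python
import math


def edge_center(rect, side):
    """Return the center point of one edge of a rectangle (x, y, width, height)."""
    x, y, width, height = rect
    if side == "left":
        return (x, y + height / 2)
    if side == "right":
        return (x + width, y + height / 2)
    if side == "top":
        return (x + width / 2, y)
    if side == "bottom":
        return (x + width / 2, y + height)
    raise ValueError(f"unknown side: {side}")


def side_distance(direction, target_rect, other_rect):
    """Euclidean distance between the centers of the two edges named by direction."""
    target_side, other_side = direction  # assumption: (side of target, side of candidate)
    return math.dist(edge_center(target_rect, target_side),
                     edge_center(other_rect, other_side))


a = (0, 0, 100, 50)
b = (120, 0, 80, 50)
gap = side_distance(("right", "left"), a, b)
```

Here the right edge of `a` and the left edge of `b` are vertically aligned, so the distance is simply the horizontal gap between them.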

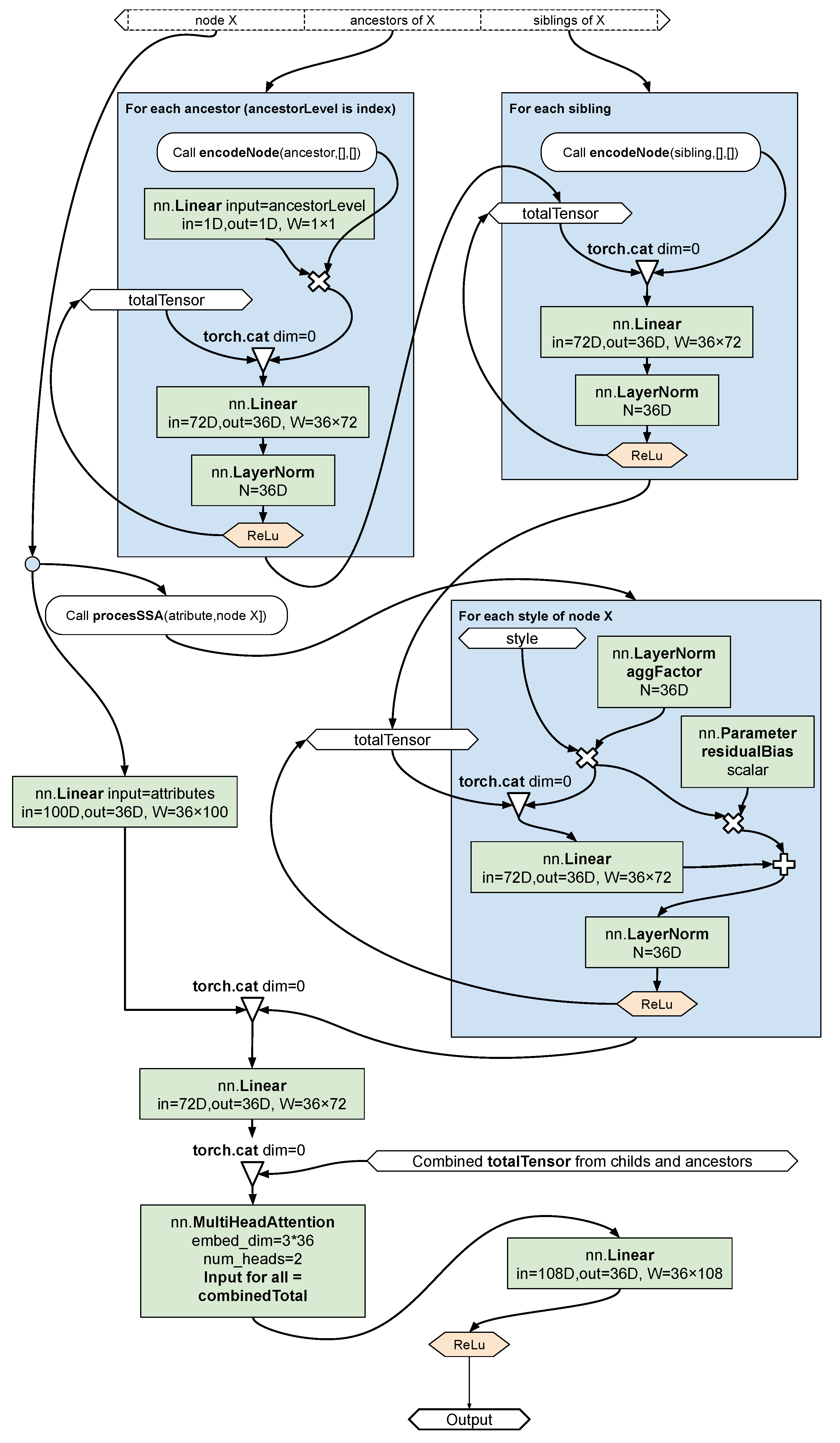

3.3. Representing Element Relationships Through Deep Learning

We created the model of the relationship graph as a neural network incorporating linear and convolutional layers. This network aggregates data from multiple nodes within the graph to produce a vector containing the necessary information for determining spatial relations with other nodes. In this model, for each node, we utilize data from its ancestor nodes and from nodes whose direct parent is the same as the current node’s parent (sibling nodes). The influence of an individual parent’s value and that of a direct neighbor is a value that will be learned during training. In the context of this section, a direct neighbor refers to a node that shares the same direct parent as another node. A direct parent is a parent that directly contains the given node, meaning there’s a direct connection without any intermediate nodes between the parent and the given node.

Each node within our model holds the following information:

Encoded Styles: This is represented as an array of tensors, capturing the style information of the HTML elements.

Encoded Selectors: An array of tensors of the same length as the encoded styles array, where each element at a given position in this array corresponds directly to the style element at the same position in the style array. This links styles to their specific selectors for that element.

Encoded Element Attributes: A single tensor containing the encoded attributes of the element.

Ancestor Information: A list of node names that represent all of the element’s ancestors in the hierarchy.

Siblings Information: A list of node names that represent all of the element’s siblings.

List of target relations for the pair of elements (only for training): An array of 8 values, where each value is a binary value (true/false) as an answer to the question: “Does the pair of elements share some spatial relationship?”.

We used a combination of Node.js (v23) and Python (v3.13) scripts to obtain the previously described data. The Node.js scripts, built with Selenium WebDriver, parse the web page, gather information from the generated DOM, and create an offline version of the page. This offline version then serves as input for the model training process. The Python scripts leverage the pre-trained autoencoder models discussed earlier. Information extracted from the DOM object is used to construct the K-D tree, from which we derive spatial relationship data. From the offline version of the page, we identify elements for which to create corresponding pairs sharing spatial relationships, along with structural relationships like parent and direct neighbor, and attribute data for each element. Style and selector data are obtained from offline versions of files linked via <link> tags that contain styles, as well as from <style> tags within the HTML file itself.

The described scripts create a dataset of web pages. The modular design of these web page preprocessing scripts makes this dataset highly versatile. Since every intermediate step and script result is saved, the dataset contains a wealth of useful information. It is also easily expandable, as the scripts can simply be rerun to add new pages. The dataset has the following limitation:

All CSS content from <link> tags or <style> tags is consolidated into a single allCSS.json file. This means the style data for elements is combined and merged from all CSS source files.

The dataset itself comprises 100 web pages selected from the Mozilla Top 500 list. A web page was selected only if it has a complete layout (that is, it is not just a simple text page) and is not merely a login screen (some pages from the Mozilla Top 500 list require authentication). The structure of this dataset is detailed in Table 1.

Aggregating Node Information

The model’s input consists of a pair of nodes. Their encoded data is loaded from the encoded_elements.json file. Additionally, information about supplementary nodes (parents and neighbors of both original nodes) that require processing, along with a list of relationships between the two chosen nodes, is loaded from completePairs.json. The model’s output is an array. When the sigmoid function is applied to this array, it produces probabilities (values between 0 and 1) for each of the 8 spatial relationships. This represents a form of multilabel classification, where each pair can have one or more labels of spatial relationships.
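The multilabel output described above can be sketched as follows. This is a minimal illustration, assuming the raw model output is a list of 8 real-valued scores and that a relationship is reported when its probability reaches a threshold of 0.5; the threshold and the function names are assumptions made for the example.

```python
import math


def sigmoid(x):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_relations(scores, threshold=0.5):
    """Turn raw scores into per-relationship probabilities and boolean labels."""
    probabilities = [sigmoid(s) for s in scores]
    labels = [p >= threshold for p in probabilities]  # each label is decided independently
    return probabilities, labels


scores = [2.0, -1.5, 0.0, 3.2, -4.0, -0.3, 0.7, -2.2]
probabilities, labels = predict_relations(scores)
```

Because each of the 8 outputs passes through its own sigmoid, a pair of elements can receive several labels at once, or none.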

The core part of the model is the encodeNode method, which takes all the prepared data for a node and returns an aggregated tensor. This tensor should capture as much relevant information as possible to determine spatial relationships with other nodes.

When the forward method receives two nodes for which it needs to determine relationships, it first calls encodeNode for the first node and then for the second. The resulting tensors (let us call them encoded_A and encoded_B) are then concatenated with the ordinal numbers of both nodes. In this context, the ordinal number of a node, on_X, refers to the count of elements in the same parent that precede a given node in the HTML document. This information is crucial and cannot be inferred from other data within the encoded tensors. The ordinal number of an element significantly impacts spatial relationships because three elements might have identical styles and attributes, yet their ordinal positions determine whether or not they share a particular spatial relationship. After creating this combined tensor (on_A, encoded_A, on_B, encoded_B), we apply a linear layer within the forward method. This layer outputs a tensor containing the final probabilities for each of the spatial relationships.
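To make the notion of an ordinal number concrete, the following sketch counts, for every element, how many elements in the same parent precede it. It is only an illustration: it assumes a well-formed snippet that the standard XML parser can read (real HTML would need an HTML parser), and `ordinal_numbers` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET


def ordinal_numbers(root):
    """Map each element to the number of element siblings that precede it."""
    ordinals = {}
    for parent in root.iter():
        for index, child in enumerate(parent):
            ordinals[child] = index
    return ordinals


markup = "<ul><li id='a'>A</li><li id='b'>B</li><li id='c'>C</li></ul>"
root = ET.fromstring(markup)
by_id = {el.get("id"): n for el, n in ordinal_numbers(root).items()}
```

The three list items have identical tags and no distinguishing styles, yet their ordinal numbers (0, 1, and 2) differ, which is exactly the information the combined tensor needs.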

Figure 4 illustrates the schema for the encodeNode method. This method takes three main parameters. The first parameter represents the node to be encoded, including its tensor of encoded attributes and arrays of tensors for both encoded styles and selectors. The second parameter is a list of all the parent nodes, each parent containing the same data structure as the first parameter. Finally, the third parameter is a list of all neighbor nodes, formatted identically to the second parameter.

The next part of the encodeNode method is responsible for encoding styles. Considering that an element can have multiple styles and corresponding selectors, we need to process each one. All style values are combined with the element’s attributes to understand the meaningful influence of the style relative to those attributes (e.g., some selectors have higher priority). This combination happens in the processSSA method, as illustrated in Figure 5.

The core of the processSSA method acts as an attention layer. Depending on the attributes and selectors, it assigns a specific weight to each style. After applying this weight to a style, it is added to a list of styles. Within the encodeNode method, this list of styles is then processed by combining all styles with the totalTensor vector, which was previously aggregated from neighbor and ancestor nodes. During this combination, a residual bias parameter is used, allowing the model to learn how much each new style influences the overall totalTensor.

Once all styles are processed and a single, combined totalTensor is obtained, the final step is executed. A MultiHeadAttention layer is applied, giving all relevant tensors (ancestor tensors and neighbor tensors) the opportunity to contribute to the final result. This final result is then passed through a linear layer and, ultimately, a ReLU activation layer to produce the most representative tensor possible.

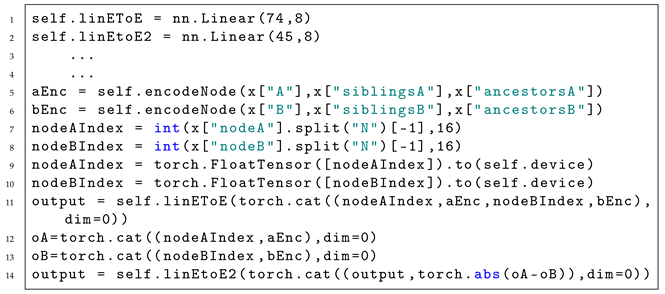

In Listing 1, the final steps of the model are presented. To estimate the spatial relationships between a pair of web page elements, we utilize their representative tensors (36-dimensional FloatTensor obtained from the encodeNode method, lines 1 and 2 in the listing). The process of determining the probabilities for each of the 8 spatial relationships begins by concatenating the tensors of the two elements with their respective ordinal numbers within their parents (lines 7 and 8 in the listing). This results in a 74-dimensional tensor (36D for the first element, 1D for its ordinal number, 36D for the second element, and 1D for its ordinal number).

A linear layer with an $8 \times 74$ weight matrix (output dimension 8, input dimension 74) is applied (line 11 of the listing) to this combined tensor. The second layer in this part of the network is another linear layer. The input to this layer is the concatenated output of the previous layer and a tensor representing the difference between the two element tensors (lines 12–14 of the listing). This design allows the model to learn as many dependencies as possible between the paired elements when forming the final result.

| Listing 1. Final steps of the model for detecting spatial relationships between elements ’a’ and ’b’. |

![Ai 06 00228 i001 Ai 06 00228 i001]() |

3.4. Applying the Model: Web Page Similarity

In the spatial relationship recognition model, we can obtain a representative vector for each node of the web page we are modeling. This vector implicitly contains information about the node’s spatial relationships with other elements. This information can be leveraged for many applications where spatial data about an element itself is needed, or where aggregated information is used to compare parts of a web page, or an entire web page, with others.

In this section of the paper, we will provide an example application that simply utilizes spatial relationship information to calculate the similarity of one web page to others.

In [20], the authors provide a dataset of web pages along with the results of their experiment, where users were asked to rate the similarity of given pairs of web pages based on their own perception. This dataset is well-documented and valuable because, in addition to user interface screenshots, it includes the source code of the pages themselves. This last feature makes it particularly useful for applying the models described earlier in our work. Furthermore, this dataset will serve as an excellent form of validation, as none of its pages were used in the training process of the models presented in this paper.

Before the user evaluation, the authors in [20] categorized the pages into four groups, a fact they did not disclose to the participants. A total of 312 participants took part in the evaluation. Following the evaluation, the authors compared the results and found that users grouped the pages by similarity with a high degree of concordance with the pre-established groups. Therefore, we can utilize these same groups, just as the authors did, when training and validating our similarity model. The dataset from the aforementioned work comprises 40 pages. Unfortunately, two of these pages were not properly archived, making them unusable. Despite this, the remaining 38 pages can be utilized without issue.

The approach developed in this paper exclusively uses the raw HTML and CSS code of a web page. It generates representative vectors for each element without needing a web browser. This is a significant distinction from the original work from which our dataset was sourced. Unlike that work, which had access to DOM states and user interface images, our method relies solely on the source code. This fact makes our approach browser-agnostic and simplifies its integration into any environment, even one without a web browser. Furthermore, it opens up more opportunities for optimization. Processing a web page no longer requires loading it into a web browser, which typically consumes substantial RAM for parsing and rendering and significant processing time for parsing and executing some of the scripts that ultimately will not affect the web page’s visual appearance. Moreover, by not directly depending on a specific browser version, our approach reduces its reliance on web browsers, enabling future possibilities to treat browser type and resolution as just another parameter within our model.

Figure 6 illustrates the scheme of our approach, which leverages the models previously described. The autoencoder models (for style, selectors, and attributes) are used to extract the necessary properties per element for the model that generates a representative vector of spatial relationships. Unlike other approaches mentioned in Section 2, our method relies exclusively on source code, completely eliminating the need for a web browser’s capabilities.

Once we have a representative vector containing implicit spatial information, we need to adapt it by extracting useful data for similarity comparisons. When comparing two web pages, we will compare their aggregated vectors. For our distance metric, we are using Euclidean distance because, in addition to the angle between two vectors, their magnitude is also important (pages with more elements will have larger values in certain vector dimensions).
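The reason for preferring Euclidean distance over a purely angular measure can be seen in a short example. The vectors below are made up for illustration; they point in the same direction but differ in magnitude, as the aggregated vectors of a small and a large page might.

```python
import math


def euclidean_distance(u, v):
    """Straight-line distance between two vectors; sensitive to magnitude."""
    return math.dist(u, v)


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; ignores magnitude."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


small_page = [1.0, 2.0, 0.5]
large_page = [4.0, 8.0, 2.0]  # same direction, four times the magnitude

cosine = cosine_similarity(small_page, large_page)
distance = euclidean_distance(small_page, large_page)
```

Cosine similarity treats the two vectors as identical, whereas the Euclidean distance between them is clearly nonzero, so the difference in the number of elements is preserved.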

To obtain the necessary vector, we created a simple neural network. The network needs to be straightforward due to the limited number of available web pages; more complex networks would easily face overfitting issues. The role of this network is to map the vector and adapt it for comparison. The network has two layers with a ReLU activation function between them to learn nonlinear relationships. The hidden layer of the network has a dimension of 64, while the output vector has a dimension of 16. The input to the network is an aggregated vector of dimension 36 (Figure 7). Finally, similarity between pages is calculated using the Euclidean distance between their vectors (the vectors obtained after adaptation through the simple neural network).

3.5. Training

3.5.1. Autoencoders

After preprocessing, we have three distinct datasets: a set of attributes (expanded with HTML element names), a set of selectors applied to the chosen HTML elements, and a set of styles. BPE (Byte Pair Encoding) was applied separately to each of these three sets.

The BPE algorithm is a compression technique where an arbitrarily large vocabulary is represented by a smaller set of subwords. A subword, in this context, is a sequence of characters that frequently appears across all words in the original vocabulary. This new set of subwords is constrained by the size of the resulting vocabulary, which we set as a parameter for the BPE algorithm. The role of BPE encoding is to identify the most frequently repeated sequences within strings and, based on them, create a dictionary of tokens used in these datasets. Applying BPE encoding to a string yields a sequence of tokens suitable for further processing, an approach commonly used in NLP (Natural Language Processing) problems. The resulting token sequence also represents a compressed version of the original string. We used the Hugging Face Tokenizer Python library to create the BPE token dictionaries. These dictionaries were created separately for each of the three datasets due to their differing structures and content, ensuring the best possible representation of the data for tokenization.
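The core idea of BPE, repeatedly merging the most frequent adjacent pair of symbols, can be sketched in a few lines. This is a simplified illustration written from scratch, not the Hugging Face implementation we used; the function names and the toy vocabulary of CSS property names are assumptions made for the example.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of pair in symbols with the merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(words, num_merges):
    """Learn an ordered list of merges from a list of words."""
    vocab = Counter(tuple(word) for word in words)  # start from single characters
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for left, right in zip(symbols, symbols[1:]):
                pairs[(left, right)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def encode(word, merges):
    """Tokenize a word by applying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


words = ["margin-left", "margin-right", "margin-top", "padding-left", "padding-top"]
merges = learn_bpe(words, num_merges=6)
tokens = encode("margin-left", merges)
```

The number of merges plays the role of the vocabulary-size parameter: more merges yield longer subwords and shorter token sequences.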

Our models were trained on data sourced from real-world websites. These pages were selected from Mozilla’s Top 500 most-visited sites. While the majority of these sites are from English-speaking regions, this selection does not impact the model’s generality. This is because every class and ID name is replaced with a corresponding code, and the remaining input to the autoencoders primarily consists of CSS and HTML syntax keywords. From the chosen set of pages, we extracted styles for each element, encompassing two values: the CSS selector and CSS properties, as well as attributes for each element. Three distinct datasets were formed from this collected data and used for training the autoencoders. The models were trained for 10 epochs. For validation, we set aside 10 pages not used in training; these were used to monitor the training progress and ensure overfitting did not occur.

The loss function employed during training was Cross-Entropy, implemented within the torch.nn package. This function takes the autoencoder’s output as parameters, to which the SoftMax method is applied. This constrains the values to a 0–1 range, representing the probability of a specific token from the vocabulary appearing.
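For a single token position, the computation described above reduces to a softmax followed by the negative log-probability of the correct token. The sketch below shows this in plain Python under that simplification; it is not the torch.nn implementation, and the function names are chosen for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target_index):
    """Negative log-probability assigned to the correct token."""
    return -math.log(softmax(scores)[target_index])


uniform_loss = cross_entropy([0.0, 0.0, 0.0, 0.0], target_index=2)
confident_loss = cross_entropy([0.0, 0.0, 5.0, 0.0], target_index=2)
```

With four equally likely tokens the loss equals ln 4, and it shrinks as the score of the correct token grows.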

3.5.2. Spatial Relationship Model

We used 100 real-world websites to train our model. The pages were selected from the Mozilla Top 500 list. The inclusion criteria were that a web page has a nontrivial layout and is not hidden behind an authentication form. For each selected page, we generated dataset files using the scripts described earlier in Section 3.3. During the training process, we experimented with various optimizers, with the AdamW optimizer proving to be the most effective so far. AdamW operates on the principles of the Adam optimizer, utilizing an adaptive learning rate, and adds weight decay that is applied directly to the weights, decoupled from the gradient-based update (unlike classic L2 regularization, where the penalty is added to the loss). This characteristic of the optimizer stabilizes training and helps prevent overfitting. With weight decay, the model is penalized if certain weights become too large. This encourages the model to remain simpler and rely less on obvious features, thereby increasing its generalization capability. A model with better generalization performs more effectively on unseen inputs.

After selecting an optimizer, a significant challenge during the training of our relationship graph model was class imbalance (as detailed in Table 2). Some spatial relationship classes appeared with much higher frequency than others, while some were quite rare.

To address this class imbalance, we opted to downsample the most frequent classes in the dataset. This meant not all pairs with common spatial relationships were included. Our method involved keeping a counter for frequent pairs: if the counter was below a value of 2 and a pair contained a common spatial relationship, we did not add that pair to the set. However, once the counter had reached 2, the pair was added even though it included a common spatial relationship. Applying this rule resulted in a more uniform class distribution within the dataset (see Table 3).

Besides choosing a different set of web pages or modifying the existing one, another solution is to select a loss function that mitigates the impact of imbalance. Imbalance still persists for label configurations, which represent the types of relationships shared by a pair of elements. Many pairs exhibit the same configurations. We addressed this problem by introducing a configuration-specific weight. If a particular configuration is frequent, its weight is smaller, meaning it has less influence on training. Without this adjustment, the model would primarily learn frequent configurations, effectively ignoring those that appear less often.
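One possible way to derive such configuration-specific weights is inverse-frequency weighting, sketched below. The exact weighting formula is an assumption made for illustration (total count divided by the number of distinct configurations times the configuration count), and `configuration_weights` is a hypothetical name.

```python
from collections import Counter


def configuration_weights(label_rows):
    """Assign each label configuration a weight inversely proportional to its frequency."""
    counts = Counter(tuple(row) for row in label_rows)
    total = len(label_rows)
    # assumption: balanced inverse-frequency weighting
    return {config: total / (len(counts) * count) for config, count in counts.items()}


frequent = (1, 0, 0, 0, 0, 0, 0, 0)
moderate = (1, 0, 1, 0, 0, 0, 0, 0)
rare = (0, 0, 0, 1, 0, 0, 1, 0)
label_rows = [frequent] * 6 + [moderate] * 3 + [rare]

weights = configuration_weights(label_rows)
```

The rarest configuration receives the largest weight, so a single rare pair influences training as much as several frequent ones.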

For our loss function (Formula (1)), we selected a combination of Dice loss, binary focal loss, and L1 loss for the sum of labels. Dice loss was specifically designed to address the problem of imbalanced datasets. During training, we observed that while Dice loss alone (compared to cross-entropy loss) achieved better recall and precision for underrepresented classes, it led to slightly worse recall and precision for well-represented classes. Combining these loss functions yields better results for both minority and majority classes.

The role of the L1 loss function is to minimize the difference between the number of predicted labels and the actual number of labels. This reduces the chance of the model predicting more or fewer spatial relationships than are actually present. The weights for each component of the loss function were determined through trial training with various weight parameters, resulting in the most stable training performance for these specific values.

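The loss function formula is

$$\mathcal{L}(y, \hat{y}) = w_{c(y)} \left( \alpha \, \mathrm{BFL}(y, \hat{y}) + \beta \, \mathrm{Dice}(y, \hat{y}) + \gamma \, \mathrm{L1}(y, \hat{y}) \right) \qquad (1)$$

where:

$w_{c(y)}$ is the weight of the current label configuration based on the target labels $y$;

$y$ is the target, and $\hat{y}$ is the model prediction;

$\mathrm{BFL}$ is the binary focal loss function;

$\mathrm{Dice}$ is the Dice loss function;

$\mathrm{L1}$ is the L1 loss function;

$\alpha$, $\beta$, and $\gamma$ are the component weights determined through trial training.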
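The three components can be sketched in plain Python as follows. This is an illustrative sketch rather than our training code: the focusing parameter `gamma`, the smoothing constants, the unit component weights, and the choice to multiply the whole sum by the configuration weight are all assumptions made for the example.

```python
import math


def dice_loss(probs, targets, eps=1e-6):
    """1 minus the Dice coefficient between predicted probabilities and binary targets."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    return 1.0 - (2.0 * intersection + eps) / (sum(probs) + sum(targets) + eps)


def binary_focal_loss(probs, targets, gamma=2.0, eps=1e-7):
    """Cross-entropy that down-weights labels the model already predicts well."""
    total = 0.0
    for p, t in zip(probs, targets):
        p_correct = p if t == 1 else 1.0 - p
        p_correct = min(max(p_correct, eps), 1.0)  # avoid log(0)
        total += -((1.0 - p_correct) ** gamma) * math.log(p_correct)
    return total / len(probs)


def label_count_l1(probs, targets):
    """Absolute difference between the predicted and the actual number of labels."""
    return abs(sum(probs) - sum(targets))


def combined_loss(probs, targets, config_weight=1.0,
                  w_focal=1.0, w_dice=1.0, w_l1=1.0):
    """Weighted sum of the three components (all weights here are placeholders)."""
    return config_weight * (w_focal * binary_focal_loss(probs, targets)
                            + w_dice * dice_loss(probs, targets)
                            + w_l1 * label_count_l1(probs, targets))


targets = [1, 0, 1, 0, 0, 0, 0, 1]
good = [0.9, 0.1, 0.8, 0.2, 0.1, 0.1, 0.2, 0.7]
bad = [1.0 - p for p in good]
```

A prediction that matches the targets exactly yields a loss of zero, and the loss grows as the probabilities move away from the targets.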

Since our dataset is not structured for direct batch processing (due to varying numbers of parent and child nodes, which would necessitate extensive padding for uniform batch sizes), we employed a batch simulation approach, also known as delayed optimization. The loss value is aggregated over a specific number of samples or pairs (in our case, 100). The optimization step then occurs after every 100th pair. This method prevented large jumps in the training gradient and kept the training from oscillating between local minima, leading to a more stable learning process.
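The delayed optimization scheme (often called gradient accumulation) can be illustrated on a toy problem. The sketch below fits a single weight with plain gradient descent and performs an update only after every 100th sample; the toy model, the learning rate, and the averaging of the accumulated gradient are assumptions made for the example, not details of our training setup.

```python
def train_with_accumulation(samples, accumulate_every=100, lr=0.1, epochs=20):
    """Fit y = w * x, applying one update per accumulate_every samples."""
    w = 0.0
    grad_sum, pending = 0.0, 0
    for _ in range(epochs):
        for x, y in samples:
            error = w * x - y
            grad_sum += 2.0 * error * x  # gradient of the squared error w.r.t. w
            pending += 1
            if pending == accumulate_every:
                w -= lr * grad_sum / pending  # delayed optimization step
                grad_sum, pending = 0.0, 0
    if pending:  # flush any leftover samples
        w -= lr * grad_sum / pending
    return w


samples = [(i / 100, 3.0 * i / 100) for i in range(1, 201)]
learned_w = train_with_accumulation(samples)
```

Each sample is processed individually, so variable-sized inputs need no padding, yet the parameter changes only as often as it would with a batch size of 100.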

3.5.3. Simple Neural Network for Vector Adaptation in Similarity Detection

Training for this simple network was based on the triplet principle. For this process, the dataset was divided into 30 pages for training and 8 pages for validation. From the set of 30 training pages, 5000 unique triplets were generated. Each triplet consists of two elements that should belong to the same group and one element that should not. The group labels used were those provided in the paper where the dataset was originally introduced. During training, our objective was to increase the distance between elements from different groups while decreasing the distance between elements within the same group.

The triplet loss function is defined for a triplet $(a, p, n)$, where $a$ is the anchor (the element based on which we calculate the loss value), $p$ is the positive element (an element from the same group as the anchor), and $n$ is the negative element (an element from a different group than the anchor).

The formula for the loss function used in training this network is

$$L(a, p, n) = \max\left(d(a, p) - d(a, n) + m,\ 0\right)$$

where:

$d$: the Euclidean distance.

$a$: the anchor element.

$p$: the positive element.

$n$: the negative element.

$m$: the margin.
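A triplet loss of this kind can be sketched as follows, assuming the standard margin-based form, in which the loss is zero once the negative element is farther from the anchor than the positive element by at least the margin. The margin value of 1.0 is an assumption made for the example.

```python
import math


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard margin-based triplet loss with Euclidean distance."""
    d_positive = math.dist(anchor, positive)
    d_negative = math.dist(anchor, negative)
    return max(d_positive - d_negative + margin, 0.0)


anchor = (0.0, 0.0)
same_group = (0.0, 1.0)
other_group = (3.0, 4.0)

satisfied = triplet_loss(anchor, same_group, other_group)
violated = triplet_loss(anchor, other_group, same_group)
```

The first triplet is already well separated and contributes no loss, while the second, with the roles swapped, is penalized.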

4. Results

The Results section is structured in two parts. The first part covers the training results for each phase of our approach. The second part presents the model metrics for the web page similarity application, as well as the precision and recall metrics for spatial relationship detection.

4.1. Training Results

4.1.1. Autoencoders

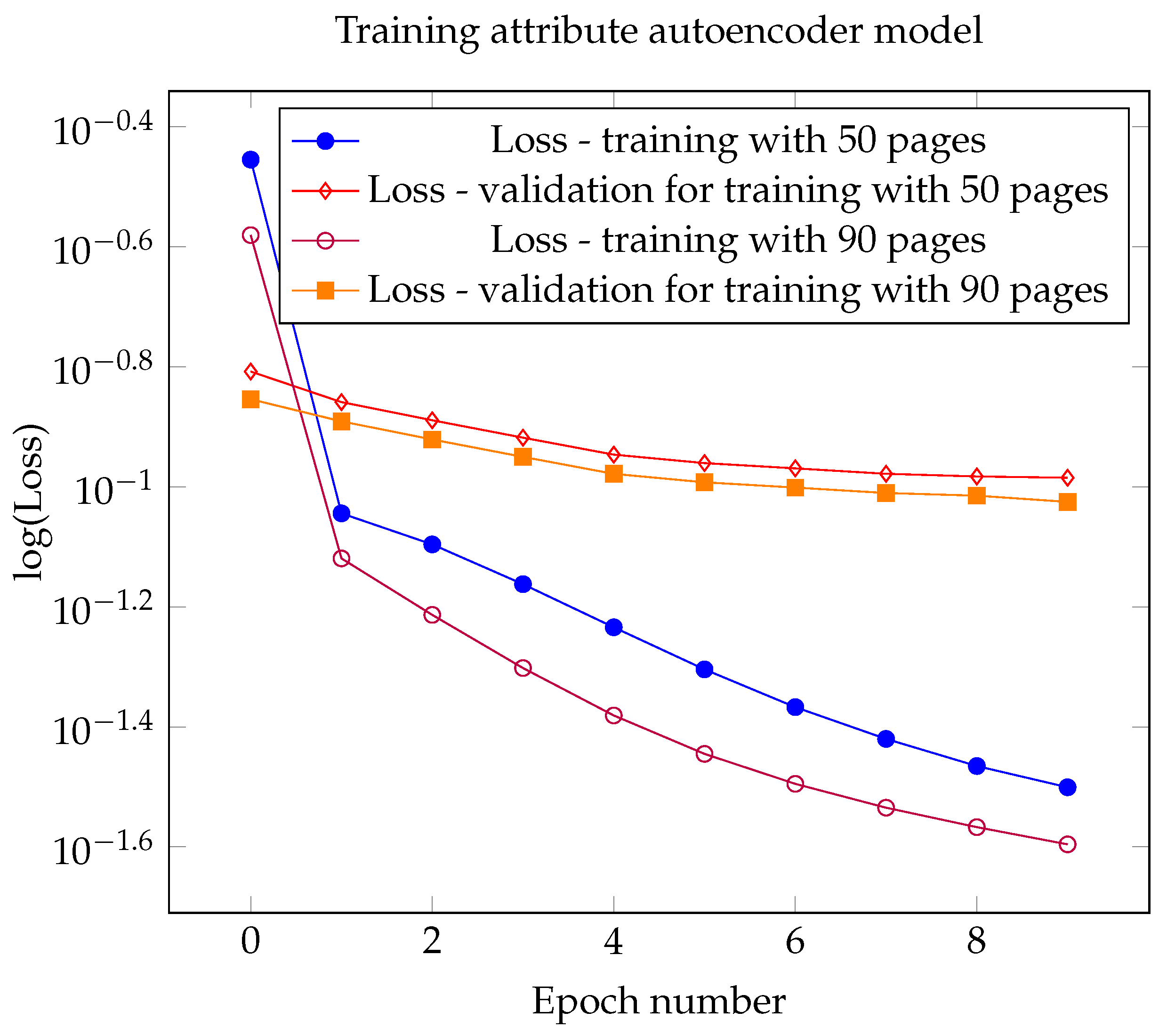

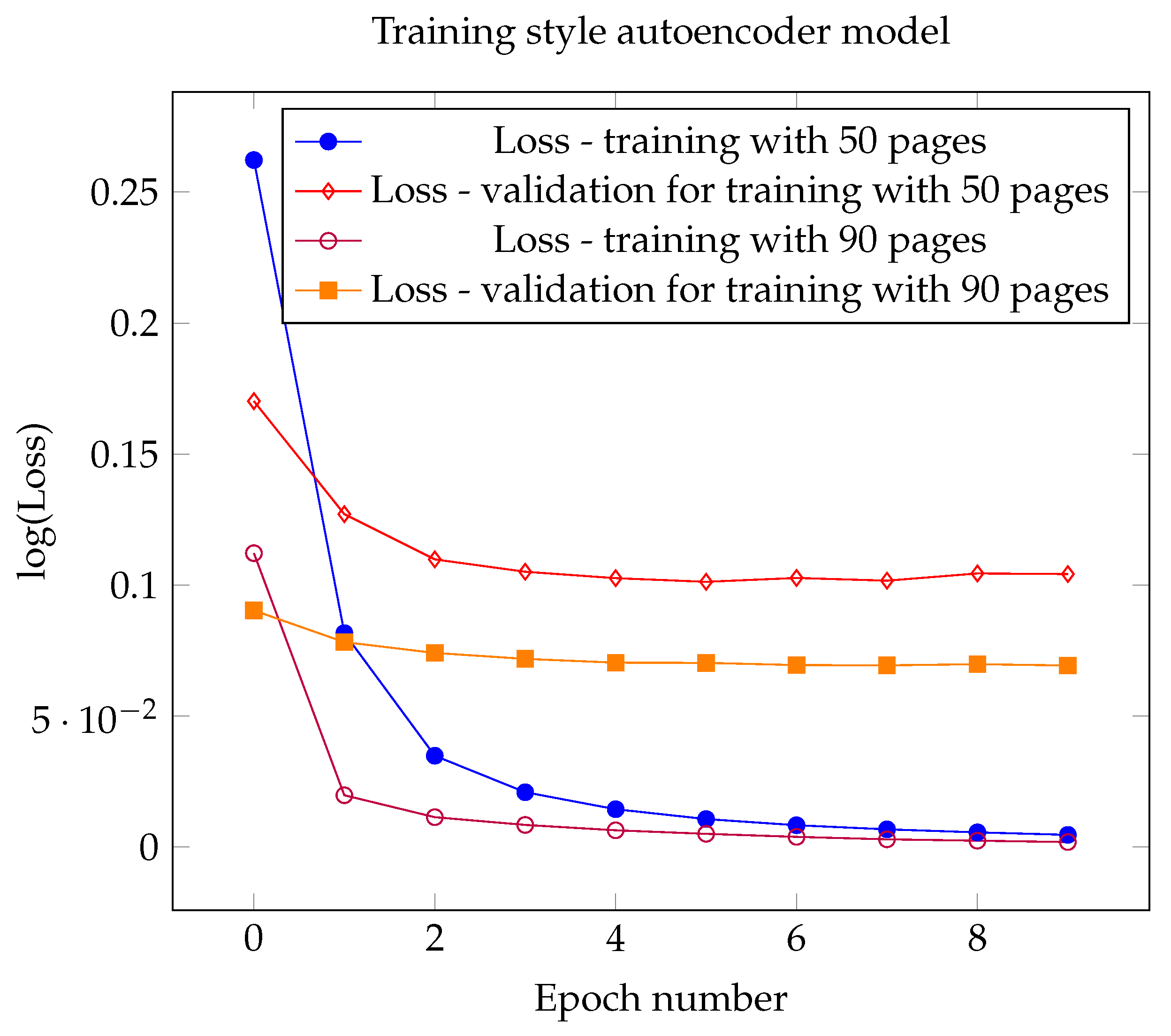

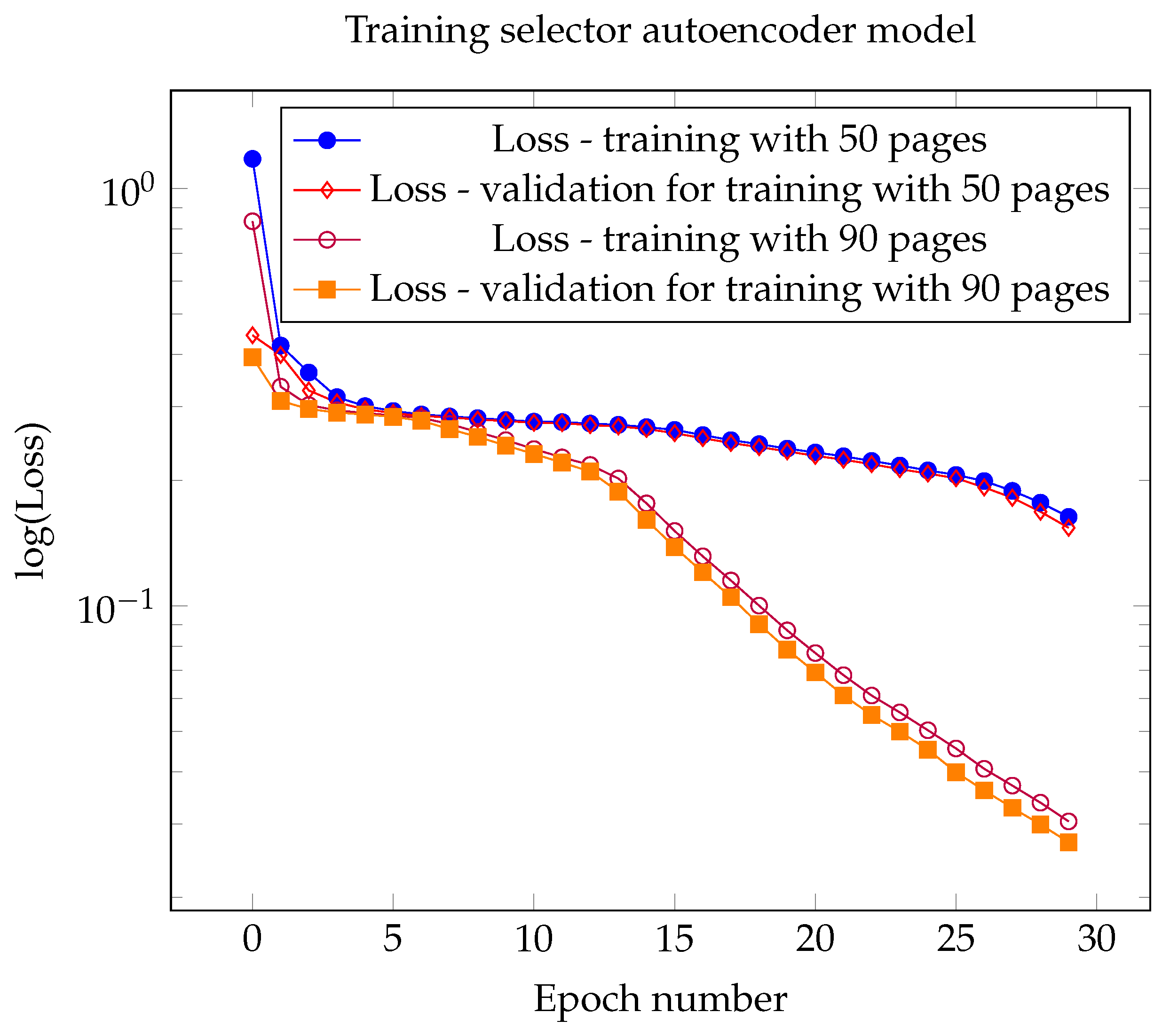

Figure 8, Figure 9 and Figure 10 display graphs illustrating the change in the loss function over epochs. Based on our chosen validation set, we ensured that the models do not overfit the data; in all training runs, the validation error never increased.

The inputs for the selector autoencoder are the simplest, so there is not a significant difference between the validation and training errors. In contrast, for the style and attribute models, the difference in errors (validation and training) was more noticeable. The graphs also show training scenarios with both 50 and 90 pages, along with their respective validations using a 10-page validation set. It is evident that training with more pages yielded better results.

When the training set was increased from 50 to 90 web pages, the following improvements were observed at the end of 10 epochs of autoencoder training:

Attributes: ∼20% better loss value in training and ∼10% better in validation.

Selectors: ∼80% better loss value in both training and validation.

Styles: ∼59% better loss value in training and ∼33% better in validation.

From the above list of improvements when increasing the number of pages, we can conclude that increasing the dataset size enhances autoencoder performance. The selector autoencoder benefits the most from a larger dataset, while the attribute autoencoder benefits the least. This can be explained by the fact that selectors have more inherent structure and rules in their formation, whereas attributes can have a wide variety of values and show significant differences across web pages.

The graphs clearly show that the loss function value consistently decreases across all epochs. The use of the AdamW optimizer also reduced the chance of overfitting. This algorithm improves upon the Adam optimizer (which is based on adaptive moment estimation) by introducing a weight decay factor. With each weight update step, this factor reduces the weights by a certain amount.

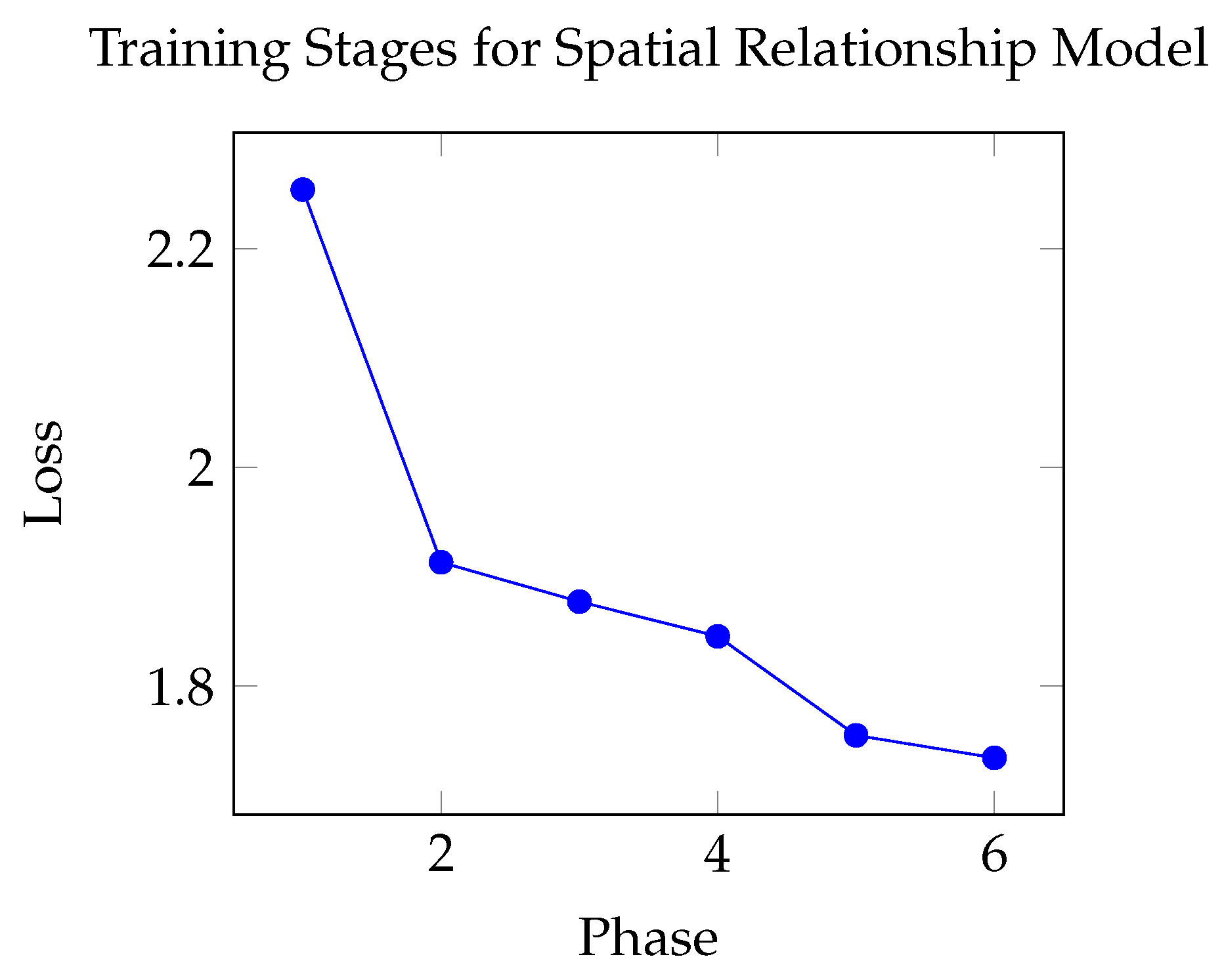

4.1.2. Main Model

Model training was conducted in phases because pairs from different web pages can have vastly different properties (attribute, style, and selector vectors). This meant training progressed very slowly when pairs from highly dissimilar pages appeared in quick succession. We solved this by implementing phased training. The training was conducted in several steps, validating it with new pages. We selected three pages that use different layout approaches: CSS flex, CSS grid, and float with relative units. This selection ensured that the training did not favor any particular CSS approach for creating web page layouts. The training results by phase are shown in Figure 11.

The phases were structured so that we would take a portion of the dataset and train on it (e.g., the first 40 pages). In the next phase, training would continue with 30 new pages plus 10 pages from the previous phase. This approach prevented the model from overfitting while also giving it space to learn specific characteristics that are valid across diverse pages.

The model was also fine-tuned after initial training. For this step, we used geometric conditions that should hold true among the recognized labels. For example, if the model identifies a “left-right” relationship between elements A and B (meaning the left edge of element A is next to the right edge of element B), then when the input is reversed (element B then element A), the model should recognize a “right-left” relationship. This is the property of symmetry. We calculated the symmetry error using Mean Squared Error (MSE) between symmetric relationships when the pair is presented as (A, B) versus (B, A).

Additionally, if elements A and B have a “left-right” relationship, they cannot simultaneously have a “right-left” relationship. This is the property of exclusivity.
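The two geometric conditions can be expressed as simple penalty terms, as in the sketch below. Only the four relationship names used as examples in this section are included; the mirror mapping, the product-based exclusivity penalty, and the function names are assumptions made for illustration and may differ from the terms used in our fine-tuning.

```python
# Assumed mapping from each relationship to its mirrored counterpart
MIRROR = {
    "left-right": "right-left",
    "right-left": "left-right",
    "top-bottom": "bottom-top",
    "bottom-top": "top-bottom",
}
EXCLUSIVE_PAIRS = [("left-right", "right-left"), ("top-bottom", "bottom-top")]


def symmetry_error(probs_ab, probs_ba):
    """MSE between each relationship for (A, B) and its mirror for (B, A)."""
    squared = [(probs_ab[rel] - probs_ba[mirror]) ** 2 for rel, mirror in MIRROR.items()]
    return sum(squared) / len(squared)


def exclusivity_penalty(probs):
    """Penalize assigning high probability to both members of an exclusive pair."""
    return sum(probs[a] * probs[b] for a, b in EXCLUSIVE_PAIRS) / len(EXCLUSIVE_PAIRS)


probs_ab = {"left-right": 0.9, "right-left": 0.1, "top-bottom": 0.2, "bottom-top": 0.0}
probs_ba = {"left-right": 0.1, "right-left": 0.9, "top-bottom": 0.0, "bottom-top": 0.2}

sym = symmetry_error(probs_ab, probs_ba)
excl = exclusivity_penalty(probs_ab)
```

In this example the predictions for (A, B) and (B, A) mirror each other exactly, so the symmetry error is zero, and the exclusivity penalty is small because no exclusive pair is predicted with high probability on both sides.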

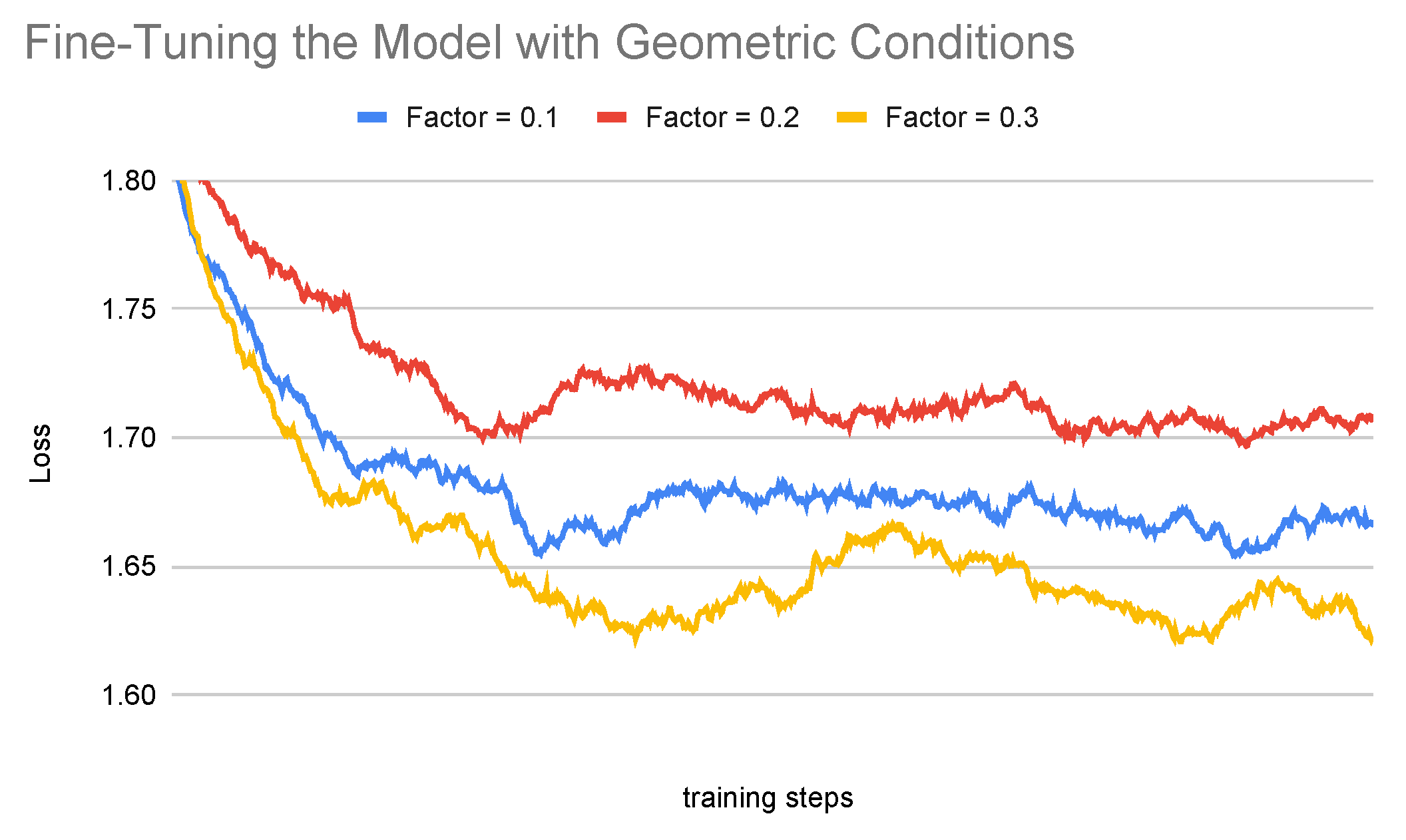

The results of the fine-tuning process are visible in Figure 12. We experimented with different influence factors for these geometric conditions. A factor of 0.3 proved to be the best, as the model achieved the lowest overall loss with this value.

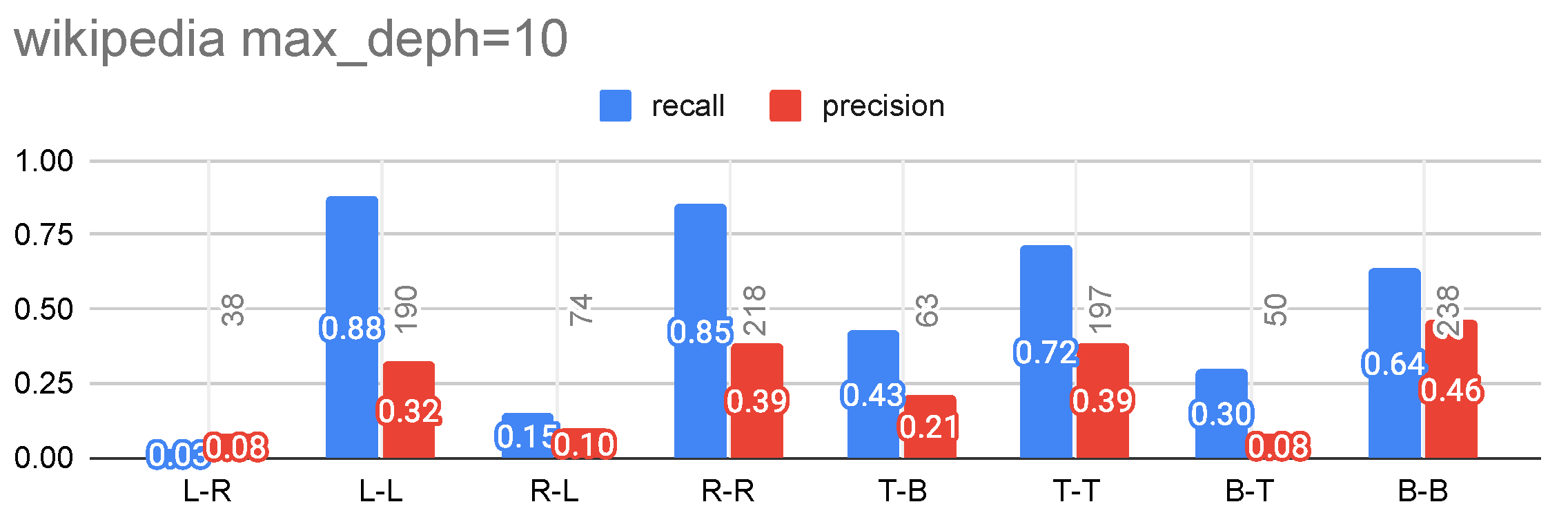

Below, we present the precision and recall metrics for the three selected validation pages.

Wikipedia (Figure 13), whose layout is primarily built using relative units and floated elements. Figure 14 and Figure 15 show the recall and precision values for all spatial relationships for elements in the Wikipedia web page.

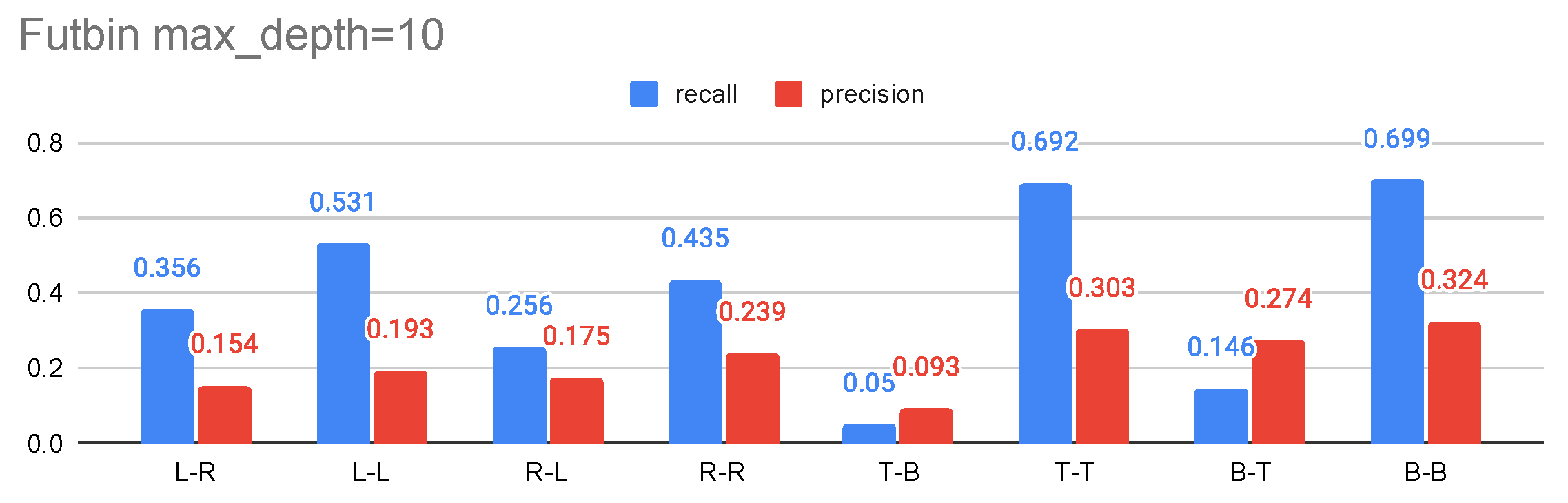

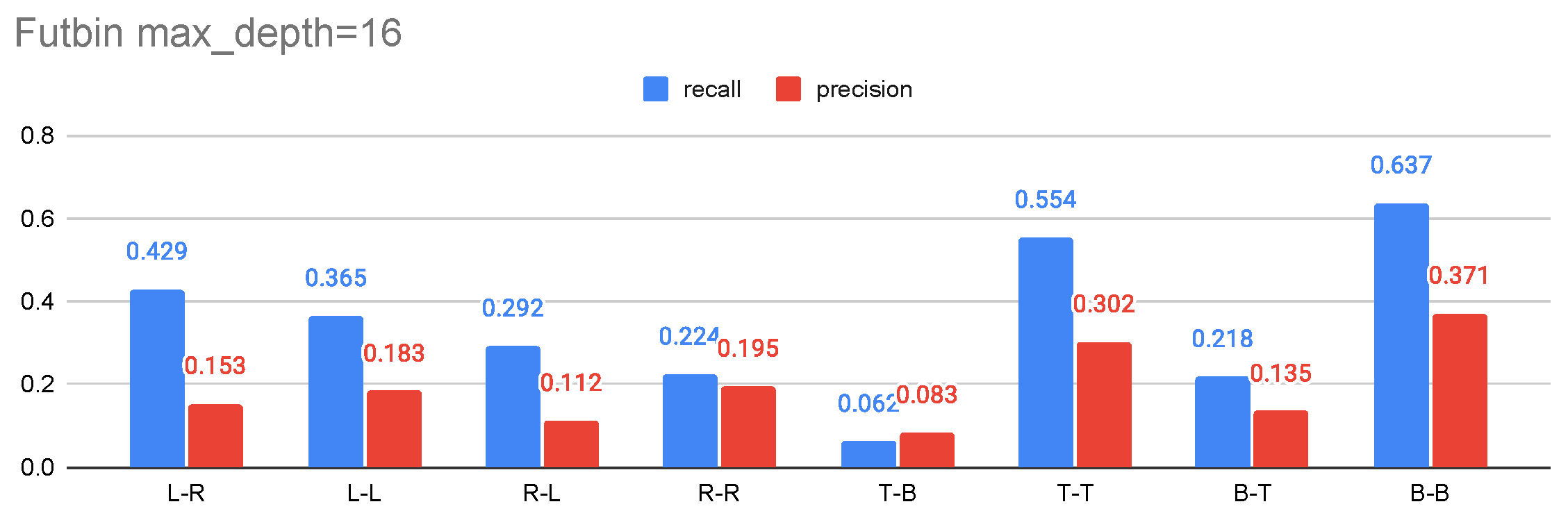

Futbin (Figure 16), whose layout is primarily built using the CSS flex property. Figure 17 and Figure 18 display the recall and precision values for all spatial relationships in the Futbin web page. For elements up to depth 10, the average recall and precision values are better than when considering elements up to depth 16. This is likely because deeper levels contain a higher number of smaller elements, making their spatial relationships more challenging to determine.

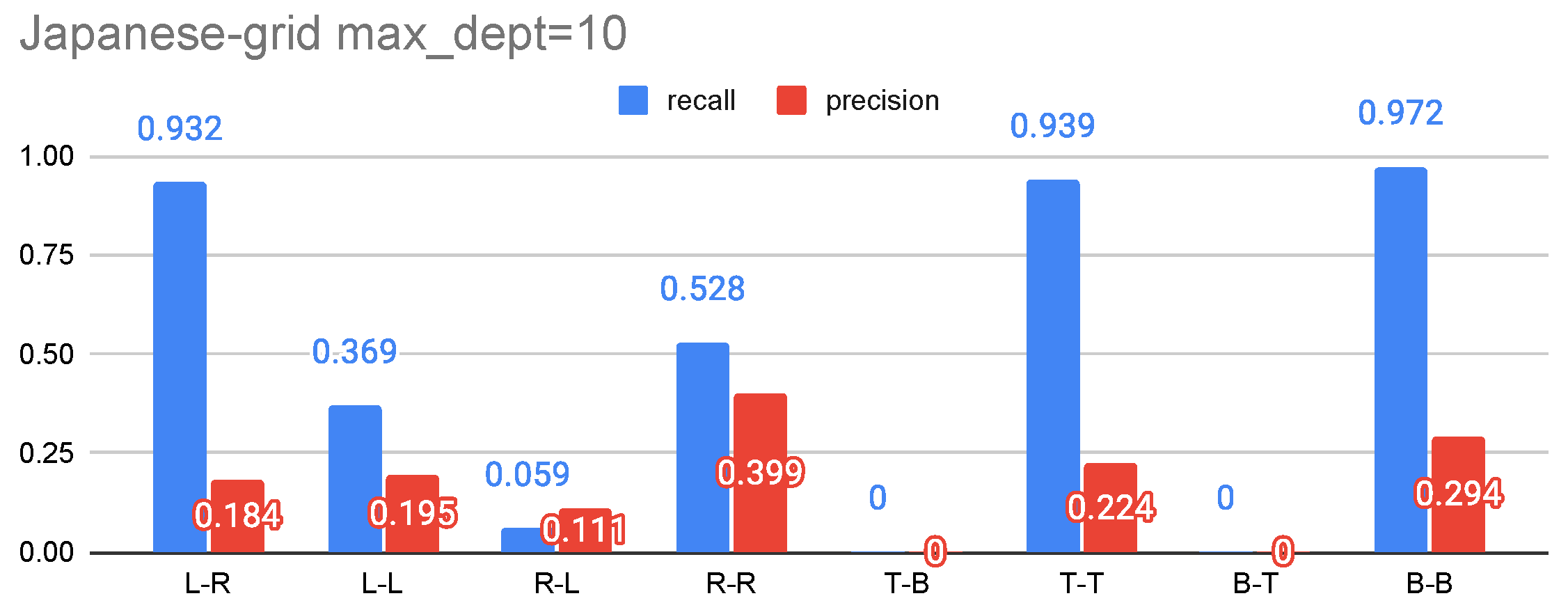

Japanese-grid (Figure 19), whose layout is primarily built using CSS grid properties. Figure 20 and Figure 21 show the recall and precision values for all spatial relationships. On this particular web page, the approach does not recognize “bottom-top” and “top-bottom” relationships, but it achieves similar results for other relationships compared to the previous two pages.

These three examples, using web pages not included in the training set, demonstrate that the model can effectively recognize certain relationships between elements. Furthermore, the page’s implementation approach (e.g., CSS Grid, Flexbox) has no significant impact on the spatial relationship recognition results.

Another form of validation for this model can be found in Section 4.2. There, the model is validated in a real-world application on a much larger set of new pages.

4.1.3. Why Not BERT for Autoencoders?

In this section, we will explain why we developed our own autoencoders instead of leveraging existing approaches like the BERT model. BERT, developed by Google, is a prominent model for NLP. It excels at analyzing words within the context of their surrounding text, building on the transformer architecture, which is based on the multi-head attention mechanism (for more on BERT, see [30]). One key application of BERT is encoding words into representative vectors, a role our autoencoders also fulfill.

To evaluate whether BERT would be a better fit for our application compared to our custom autoencoders, we trained our approach using BERT as well. After one epoch of training on 40 pages, the results show that when our custom autoencoders are used to encode element properties, the model achieves a loss function value that is, on average, ∼8% better than when using BERT.

Furthermore, utilizing our custom autoencoders results in a simpler final model with a smaller memory footprint for weights (353.6 KB with our autoencoders versus 661.3 KB with BERT). The dataset size is also significantly smaller with our autoencoders (3.2 GB with our autoencoders versus 15 GB with BERT). For this analysis, we used ’bert-base-uncased’, which is one of the more compact BERT versions. For BERT’s encoding tensor, we took the output from its last layer (last_hidden_state[:, 0, :]). These results do not claim that the autoencoders in this paper are universally superior to BERT, but rather that their application in this specific context is more appropriate and efficient than using BERT.

4.2. Applying the Approach for Web Page Similarity Assessment

We used the previously described dataset from Bozkir et al. [20] to validate our approach. Since this dataset was developed independently of our methods, it provided a robust way to validate our models. Our hypothesis going into the experiment was that our approach could recognize the similarity of web pages with comparable success to the work from which the dataset was taken, but without using any results or intermediate results from a web browser (i.e., without using the DOM or screenshots of web pages).

Our experiment had two limitations. First, a portion of the pages in the dataset were inadequately archived. This limitation was easily overcome, as the number of poorly archived pages was small (2 out of 40). The second limitation was that the original approach, from the work where the dataset was introduced, used comparisons based on screenshots of only a portion of the web page (specifically, 1024 px of page height). This means the clustering in the original study was limited to only a part of the web page. Consequently, two web pages that are partially similar might not be similar in their entirety, and vice versa, web pages that are entirely similar might not appear so based on the sampled portions. This limitation was not as straightforward to overcome. However, by training an auxiliary neural network, we can implicitly assign greater importance to vectors or parts of vectors that originate from elements displayed within the 1024 px height.

The expected outcome of the experiment was that our approach would be able to identify the four distinct groups of similarity of the web pages and that there would be a similarity match with the method proposed in Bozkir et al. [20]. We also anticipated that the approach described in this paper would produce meaningful distances between pages that align with the groups from the aforementioned work.

The metric used in the original dataset paper is ANR (Average Normalized Rank). This metric, ranging from 0 to 1 (where 0 is the best value), evaluates how well a system can return expected results for a given input. It reflects the average relative position of the correct results among the retrieved results (from the system). ANR is frequently used in information retrieval tasks to assess whether a search consistently ranks elements from the same group close to each other.

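The formula for ANR is

$$\mathrm{ANR} = \frac{1}{N \cdot N_R}\left(\sum_{i=1}^{N_R} R_i - \frac{N_R (N_R + 1)}{2}\right)$$

where:

$N$ is the total number of elements;

$N_R$ is the number of relevant elements;

$R_i$ is the real rank of the $i$-th relevant element.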
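As an illustration, the metric can be sketched in a few lines of Python. This is a minimal sketch, not the evaluation code used in this paper: it assumes the commonly used definition of ANR (the sum of the 1-based ranks of the relevant elements, minus the smallest possible sum, normalized by the total number of elements times the number of relevant elements). The function name `average_normalized_rank` and the sample ranks are hypothetical.

```python
from typing import Sequence


def average_normalized_rank(ranks: Sequence[int], total: int) -> float:
    """ANR for a single query (hypothetical helper, for illustration only).

    ranks: 1-based positions of the relevant elements in the ranked result list.
    total: N, the total number of elements in the collection.
    """
    n_rel = len(ranks)
    if n_rel == 0 or total <= 0:
        raise ValueError("need at least one relevant element and a non-empty collection")
    # Subtract the best achievable rank sum (1 + 2 + ... + n_rel),
    # so a perfect ranking scores exactly 0.
    excess = sum(ranks) - n_rel * (n_rel + 1) / 2
    return excess / (total * n_rel)


# Relevant elements ranked first: best case.
best = average_normalized_rank([1, 2, 3], total=10)    # -> 0.0
# Relevant elements ranked last: worst case for this N and N_R.
worst = average_normalized_rank([8, 9, 10], total=10)  # -> 0.7
```

A perfect ranking yields 0, and the value grows as relevant elements drift toward the end of the result list, which matches the "0 is best" reading of the metric.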

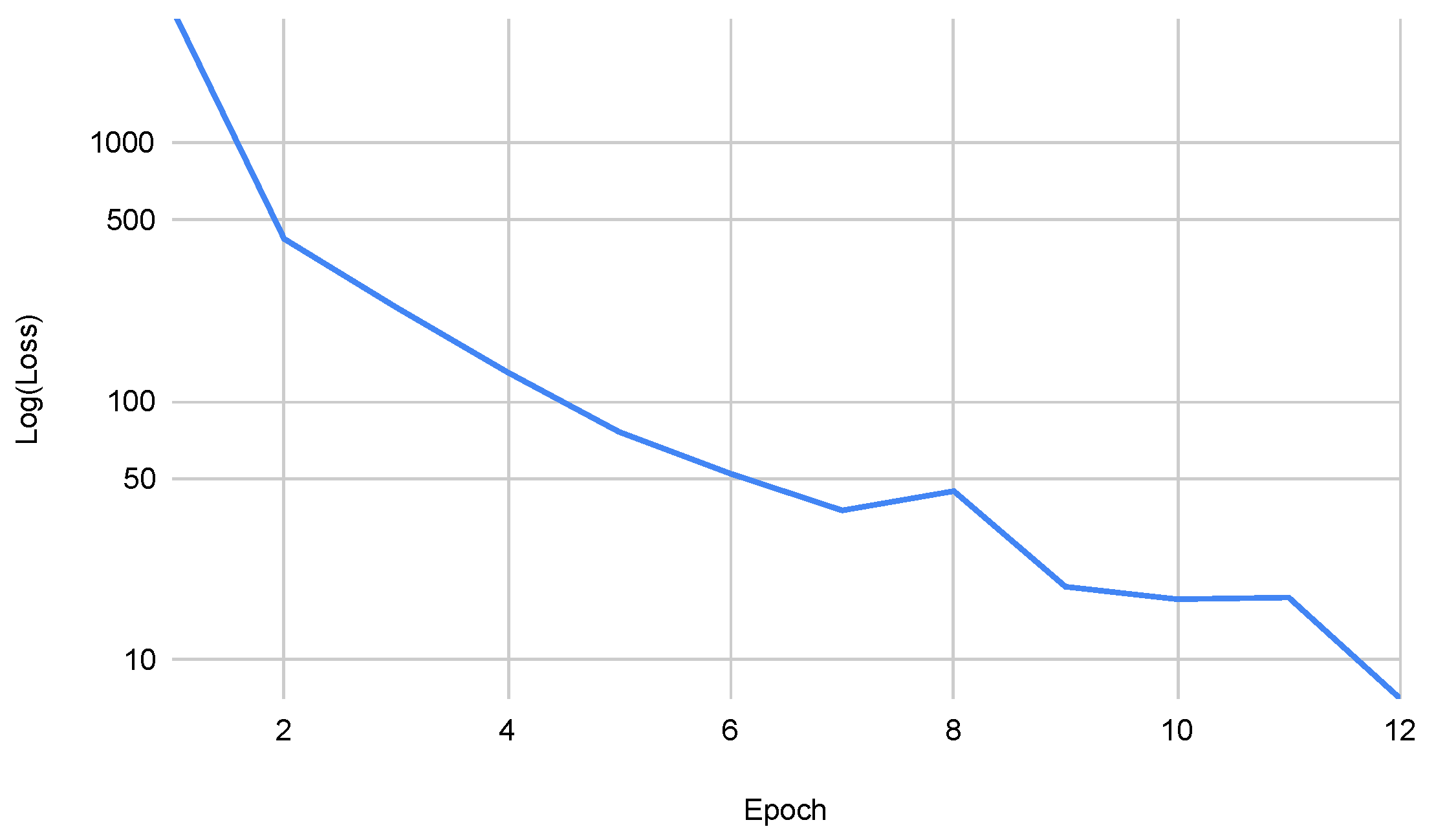

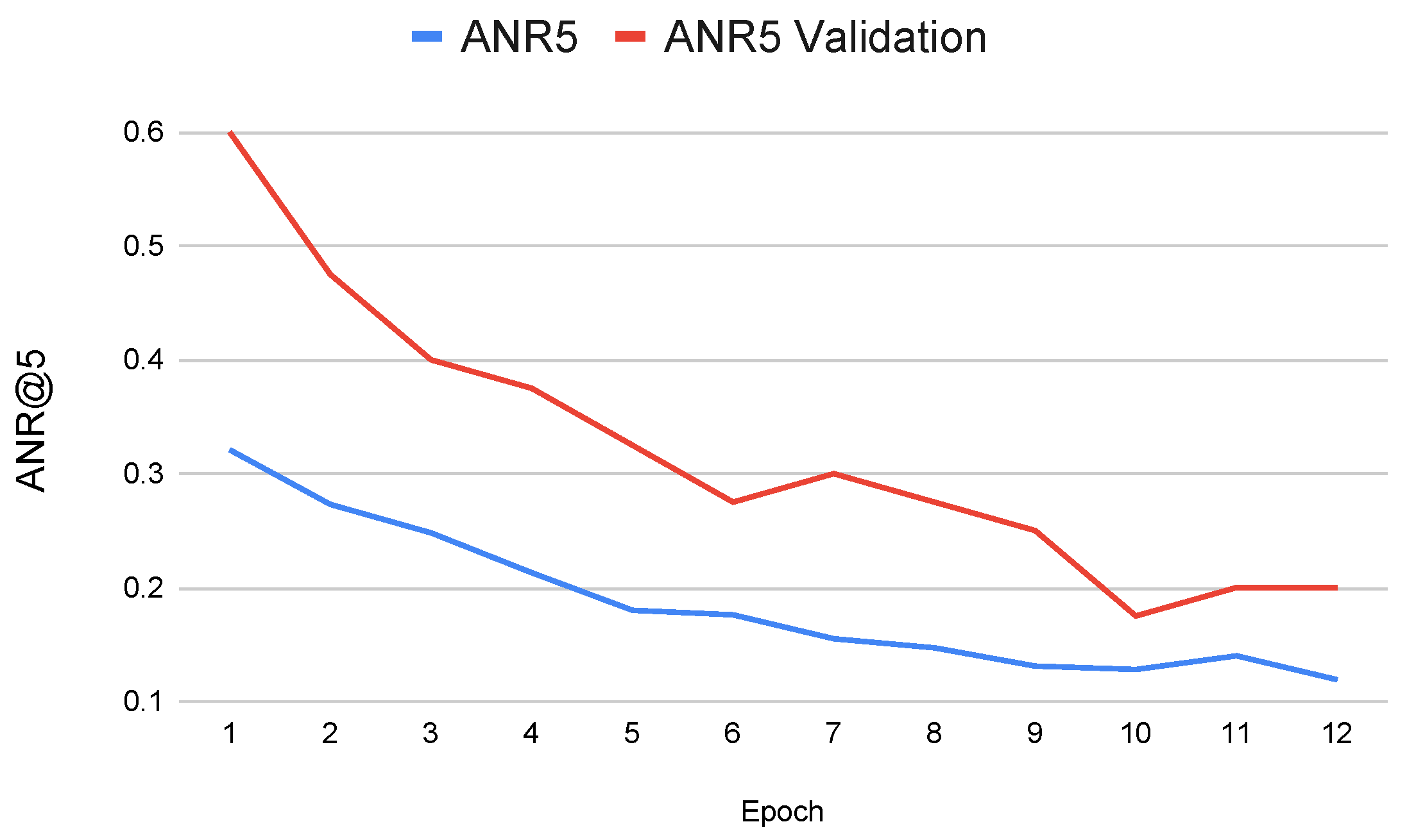

The utility model (the simple neural network for preparing vectors for comparison) was trained for 12 epochs. The training results are shown in Figure 22 and Figure 23. These graphs indicate that training over epochs reduces the model's error, and validation with the ANR top 5 metric confirms that the model achieves improved performance.

After training, we measured the model's performance. On the training set, it achieved an ANR top 5 score of 0.119, while on the validation set its ANR score was 0.2.

To put this result in context, we compared our validation score (0.2) with that of the best-performing model from Bozkir et al., which achieved an ANR of 0.1233. Since lower ANR values are better, our model's score is somewhat worse. However, it has a notable advantage: it uses much less information. The other model relied on portions of screenshots and other web page data, while ours needed only the web page's source code. Using cropped screenshots can be problematic because it may make web pages that are actually similar appear different (and vice versa). Our method avoids this by focusing on the underlying code, which gives a more consistent basis for determining similarity.

The use of cropped screenshots by Bozkir et al. could have also impacted their ANR score. Because their source code is not publicly available, we could not compare ANR results using full web page screenshots to see how that might have affected their findings.

It is worth noting that the original paper also calculates the ANR top 10 metric, which we could not compute due to our reduced set of pages (some were discarded due to incomplete archiving). Another limitation of our results is that the original paper's top 5 comparison is based on user evaluation results, whereas we rely on predefined groups, since complete user evaluation data is not available in our dataset.

The evaluation metrics discussed above show that our approach yields similar results, even without access to all the information available to the method from the previously cited work (screenshots and DOM values). This supports our earlier hypothesis.

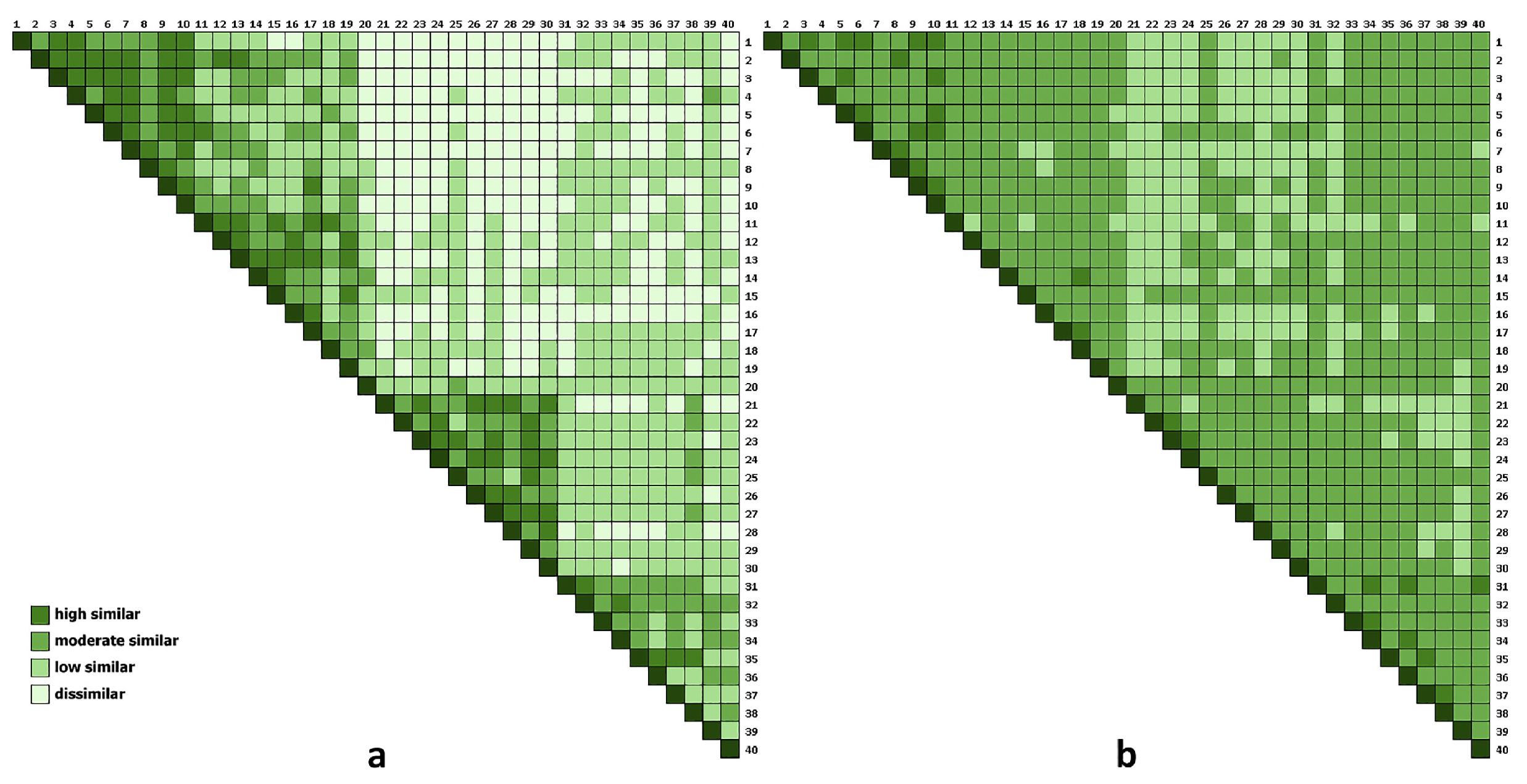

Even without these metrics, we could visually assess the capability of our approach by directly comparing the similarity matrices it generates against those from other methods.

Figure 24 displays the similarity matrix of our approach. If we compare it to the matrix from the user evaluation in the paper that introduced the dataset (Figure 25a), we can see that, visually (in addition to the metrics already discussed), our approach produced results very similar to human perception.

In this case, one can also observe that web page groups 1 and 2 (the first half, up to page number 20) have a less clear boundary between them, while groups 3 and 4 (the latter half) are well-demarcated. This aligns with the user evaluation results in the work of Bozkir et al. [20].

If we compare the results of our method with those from the earlier work (Figure 24 and Figure 25b), we can see that our approach visually corresponds better to human perception and more effectively distinguishes different groups. On the similarity matrices, the intersections of different groups show more white values, indicating greater distances.
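The visual impression of group separation can also be expressed numerically. The following is a minimal sketch, assuming a symmetric pairwise distance matrix and one predefined group label per page; the function name `group_separation` and the toy values are hypothetical and are not taken from our experiments.

```python
from itertools import combinations
from statistics import mean
from typing import Sequence, Tuple


def group_separation(
    dist: Sequence[Sequence[float]], groups: Sequence[int]
) -> Tuple[float, float]:
    """Return (mean within-group distance, mean between-group distance)."""
    within, between = [], []
    # Visit each unordered pair of pages once (upper triangle of the matrix).
    for i, j in combinations(range(len(groups)), 2):
        bucket = within if groups[i] == groups[j] else between
        bucket.append(dist[i][j])
    return mean(within), mean(between)


# Toy example: four pages in two groups (illustrative values only).
toy_dist = [
    [0.0, 0.1, 0.8, 0.9],
    [0.1, 0.0, 0.7, 0.8],
    [0.8, 0.7, 0.0, 0.2],
    [0.9, 0.8, 0.2, 0.0],
]
toy_groups = [0, 0, 1, 1]
within_mean, between_mean = group_separation(toy_dist, toy_groups)
```

A between-group mean that is clearly larger than the within-group mean corresponds to the lighter off-diagonal blocks seen in a similarity matrix.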

5. Discussion

In this paper, we presented a generalized approach that can be applied without directly using a web browser. To build our model, we focused on modeling individual elements and their relationships as displayed on a web page. This process involved creating three autoencoder models for element attributes, selectors, and styles, along with one model for learning relationships based on these encodings.

The autoencoder models showed strong performance during training, effectively mapping data from the code domain (CSS code, CSS selectors, or HTML attributes) into a continuous value domain. Such values are well-suited for various neural network-based applications.

After developing models for encoding elements, we created a method to extract crucial information for learning directly from a web page's document. Only during training did we identify relevant elements and their relationships using a K-D Tree and the DOM. The DOM provided the necessary relationship labels for the model to learn. With this, we had all the information required for training. During inference, by contrast, the model takes only HTML and CSS as input to recognize spatial relationships.

The models showed good results in recognizing spatial relationships between elements, with recall ranging from 0.35 to 0.979 and precision from 0.1 to 0.46. The low precision was a trade-off for achieving higher recall in this multi-label classification problem. Furthermore, precision varies significantly across different spatial relationships. Some relationships are inherently more complex and difficult to assess accurately. For instance, relationships between elements involving complex CSS layouts or elements with smaller dimensions are challenging to classify with high precision. The lower precision scores (e.g., 0.10) are often associated with these more ambiguous cases. The fact that the model still identifies most of the relationships (high recall) is what we consider a successful outcome for our application. While these results could have been improved with more training and a larger dataset, even with these values, the model has been successfully applied to the problem of web page similarity assessment.
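To make the precision and recall trade-off concrete, the sketch below computes both metrics per label for a multi-label classifier. It is a simplified illustration rather than our evaluation code: it assumes that each relationship label receives an independent score and that a single decision threshold controls the trade-off, which is an assumption made for this example. The function name and the toy data are hypothetical.

```python
from typing import List, Sequence, Tuple


def per_label_precision_recall(
    y_true: Sequence[Sequence[int]],
    y_score: Sequence[Sequence[float]],
    threshold: float,
) -> List[Tuple[float, float]]:
    """Return one (precision, recall) pair per label."""
    results = []
    for k in range(len(y_true[0])):
        tp = fp = fn = 0
        for truth, scores in zip(y_true, y_score):
            predicted = scores[k] >= threshold
            actual = bool(truth[k])
            if predicted and actual:
                tp += 1
            elif predicted:
                fp += 1
            elif actual:
                fn += 1
        # Report 0.0 when a metric is undefined (no predictions or no positives).
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        results.append((precision, recall))
    return results


# Toy data: four element pairs, two relationship labels (illustrative only).
toy_true = [[1, 0], [0, 0], [1, 1], [0, 0]]
toy_score = [[0.9, 0.2], [0.6, 0.4], [0.4, 0.7], [0.3, 0.1]]
strict = per_label_precision_recall(toy_true, toy_score, threshold=0.5)
lenient = per_label_precision_recall(toy_true, toy_score, threshold=0.3)
```

In this toy data, lowering the threshold from 0.5 to 0.3 raises the recall of the first label while the precision of the second label drops, which is the kind of trade-off described above.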

Considering the results obtained in this paper, we can conclude that our approach is effective for assessing web page similarity. Unlike other methods discussed in the literature review and those known to the authors, ours is unique in that it does not require any data from a web browser (neither screenshots nor the DOM tree of a parsed web page nor a combination of these). Even without this information, our method achieves strong results with a validation ANR of 0.2. This is comparable to the approach presented in the work of Bozkir et al. [20], which uses all that information and achieved an ANR of 0.1233 for its best configuration. Based on a visual inspection of the similarity matrix, we found that the patterns of web page groupings our method created were very similar to those obtained from the original user evaluation data. In other words, our model classified web pages as “similar” in a way that closely matched human judgment.

These results show that we have a method that can successfully assess how visually similar web pages are without actually rendering them in a web browser. This confirms our hypothesis and also offers several advantages over other methods:

Performance: Methods relying on a web browser must load the entire web page, consuming significant memory and time (beyond HTML and CSS, all multimedia and scripts need to be loaded). We could not compare performance (time and memory) directly, as we do not have the source code for the method from the available dataset.

Determinism: Browser-dependent methods are sensitive to ads, browser versions, and the browser’s current state at the time of analysis. Methods that rely solely on source code do not have this problem; they will always produce the same output for the same input.

Security: If browser-reliant methods analyze malicious web pages, they risk potential security vulnerabilities because malicious scripts can execute alongside the page content. The method presented in this paper is immune to such attacks because it does not execute scripts from the web page, nor are web pages parsed within a browser.

Beyond these practical benefits, our approach shows a strong alignment with human perception, opening up possibilities for automating web page analysis. This approach automates parts of the analysis that would typically require human effort. In fact, human evaluation of visual web page similarity based solely on available source code is often impossible for more complex web pages with many elements and numerous CSS rules applying to the same elements.

Challenges, Limitations, and Future Work

Modeling web pages and their relationships is a challenging process. The challenges are numerous because the methods of creating web pages change drastically and frequently, leading to diverse web pages that achieve similar element layouts in different ways.

Some of these approaches require little data analysis (e.g., elements with fixed dimensions and positions), while others demand not only an analysis of the elements being compared but also an examination of their parents (and ancestors) and their siblings. Throughout this process, web pages often contain many elements that are effectively outliers (elements added as advertisements or as by-products of specific libraries, frameworks, or plugins). This further complicates the creation of a generalizable model.

These challenges show why creating adaptable methods for modeling web layouts is difficult and why many current methods depend on browser-based rendering. Our approach was designed with these challenges in mind. By focusing on the structural and spatial relationships identified directly from the source code, it can learn strong patterns that capture the layout's core elements. This suggests that, even though the overall task is difficult, our method offers a practical and effective solution to the problem, which we evaluated through its application in assessing web page similarity.

Our current model was trained on 100 web pages, which takes a lot of time (one day for three training cycles). Training with more web pages would help the model better handle the many different ways web pages are designed. This would likely lead to more accurate results in recognizing spatial relationships.

While the current accuracy is good enough for the application shown in this paper, other uses might need better precision. For those cases, the model could be retrained with more data using the same method described here.

Beyond the challenges and limitations already mentioned, it would be beneficial to explore other applications in the future. The work we have done has opened up possibilities for applying these models in various areas and to problems identified during the analysis of the field’s current state. Although this goes beyond the scope of this particular paper, it would be valuable to investigate how the models and approaches developed could be utilized in diverse domains and their specific challenges.