GLNet-YOLO: Multimodal Feature Fusion for Pedestrian Detection

Abstract

1. Introduction

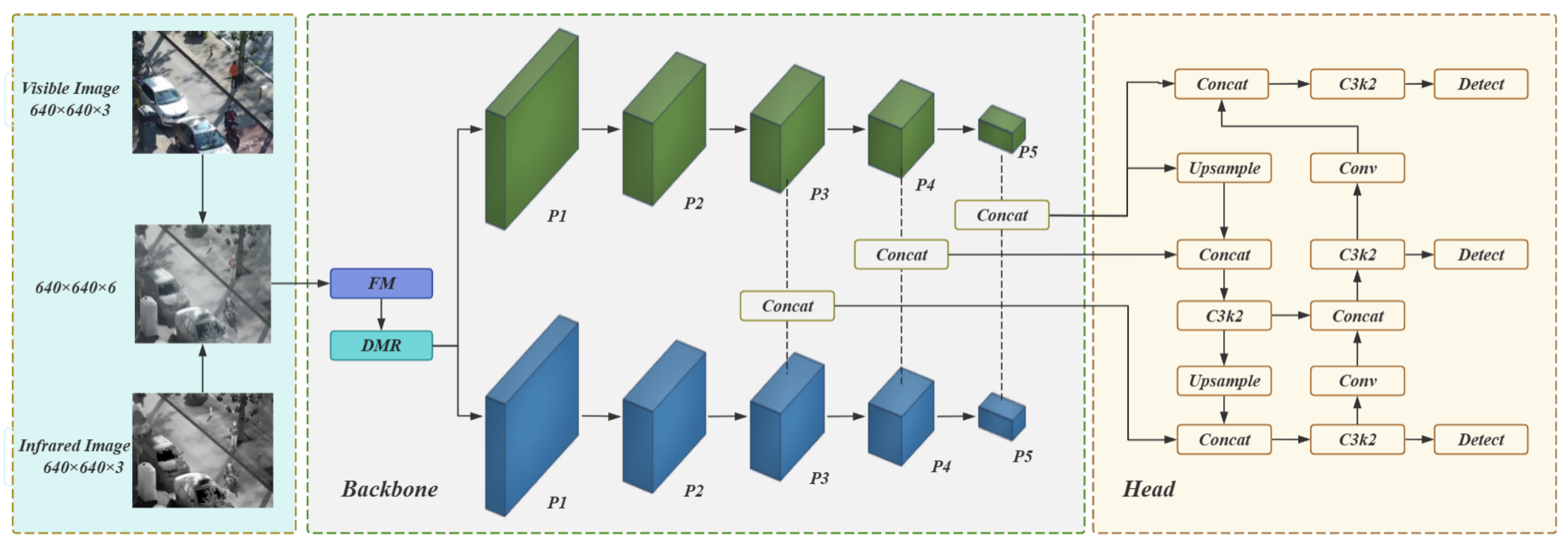

- Construct an early-fusion dual-branch architecture: Based on YOLOv11, a dual-branch structure is designed in which the fusion node is moved forward to the modality input stage. The two modalities therefore interact before their features attenuate in deeper layers, which preserves modality-specific learning while strengthening cross-modal complementarity in complex scenes.

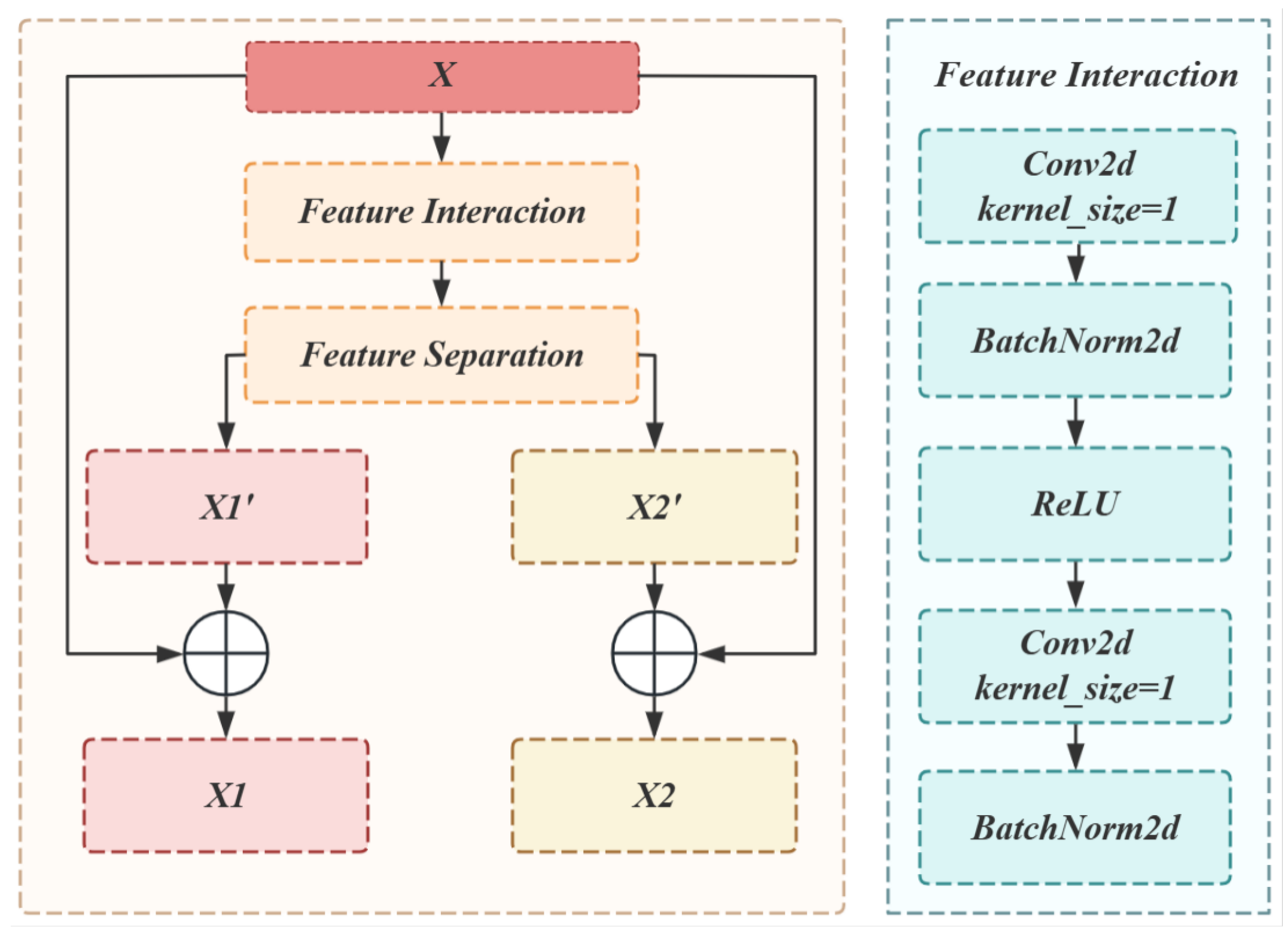

- Propose a global–local collaborative mechanism: The FM module optimizes global features through an “interaction–enhancement–attention” pipeline, while the DMR module refines local interactions via “slice separation + residual connection.” Used together, the two modules deepen the fusion and make it more precise; in the ablation table, adding both raises mAP@50-95 from 62.9% to 65.9% over the dual-branch YOLOv11n baseline.

- Verify the effectiveness of the lightweight framework: Systematic experiments on the LLVIP and processed KAIST datasets show that the framework improves mAP@50-95 while the model size stays nearly unchanged (8.16 to 8.18 in the ablation table), providing an efficient and practical approach for multimodal object detection.
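The ideas named in the contributions above (fusion at the input stage, "slice separation + residual connection") can be illustrated with a toy sketch. This is not the authors' implementation: the function names, the channel-list data layout, and the placeholder refinement functions are all assumptions made for illustration, and the real modules use learned convolutional layers.

```python
from typing import Callable, List

Channel = List[float]        # one flattened feature channel (toy layout)
FeatureMap = List[Channel]   # channels-first toy feature map


def early_fuse(rgb: FeatureMap, ir: FeatureMap) -> FeatureMap:
    """Concatenate both modalities along the channel axis at the input stage."""
    return rgb + ir


def slice_refine_residual(
    fused: FeatureMap,
    n_rgb: int,
    refine_rgb: Callable[[Channel], Channel],
    refine_ir: Callable[[Channel], Channel],
) -> FeatureMap:
    """Split the fused channels back into modality slices, refine each slice
    separately, then add the refined result to the input (residual connection)."""
    rgb_slice, ir_slice = fused[:n_rgb], fused[n_rgb:]
    refined = [refine_rgb(c) for c in rgb_slice] + [refine_ir(c) for c in ir_slice]
    return [[x + r for x, r in zip(ch, rch)] for ch, rch in zip(fused, refined)]


# Toy inputs: 3 RGB channels and 1 infrared channel, 2 "pixels" each.
rgb = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
ir = [[10.0, 20.0]]

fused = early_fuse(rgb, ir)
out = slice_refine_residual(
    fused,
    n_rgb=3,
    refine_rgb=lambda c: [0.5 * v for v in c],  # hypothetical stand-in for a learned layer
    refine_ir=lambda c: [0.1 * v for v in c],   # hypothetical stand-in for a learned layer
)
print(len(fused), out)
```

The residual addition means that each slice keeps its original signal even if its refinement branch contributes little, which is one common motivation for this pattern.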

2. Related Work

3. Research Methodology

3.1. GLNet-YOLO: Improved Network Framework

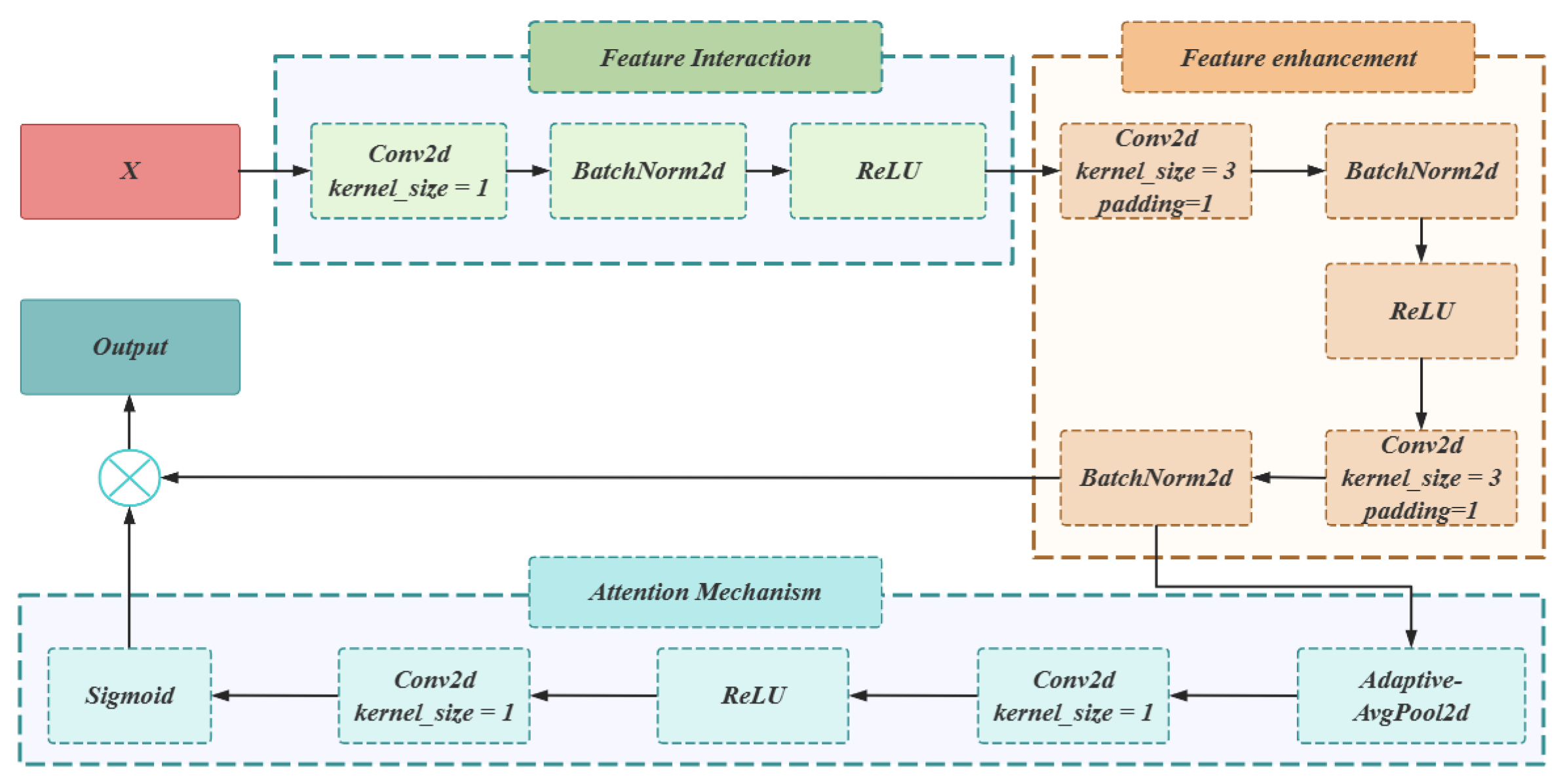

3.2. FM Module: Global Feature Fusion and Enhancement

- Convolution operation (Conv2d):

- Batch normalization (BatchNorm2d):

- ReLU activation function:
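The formulas for these three operations are not reproduced here. As a reminder, their standard textbook definitions are given below, assuming stride 1, no padding, and a $k \times k$ kernel. The notation ($x$, $W$, $b$, $\gamma$, $\beta$, $\epsilon$) is chosen for illustration and may differ from the paper's; nothing here specifies the FM module's actual layer configuration.

$$
\begin{aligned}
\text{Conv2d:}\quad & y_{o}(i,j) = b_{o} + \sum_{c=1}^{C_{\text{in}}} \sum_{u=0}^{k-1} \sum_{v=0}^{k-1} W_{o,c}(u,v)\, x_{c}(i+u,\, j+v) \\
\text{BatchNorm2d:}\quad & \hat{y}_{o} = \gamma_{o}\, \frac{y_{o} - \mu_{o}}{\sqrt{\sigma_{o}^{2} + \epsilon}} + \beta_{o} \\
\text{ReLU:}\quad & z_{o} = \max\left(0,\, \hat{y}_{o}\right)
\end{aligned}
$$

Here $\mu_{o}$ and $\sigma_{o}^{2}$ are the mean and variance of output channel $o$ computed over the batch and spatial positions, and $\gamma_{o}$, $\beta_{o}$ are learned per-channel scale and shift parameters.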

3.3. DMR Module: Local Processing and Mode Separation

4. Experimental Results and Analysis

4.1. Experimental Environment

4.2. Dataset

4.3. Evaluation Indicators

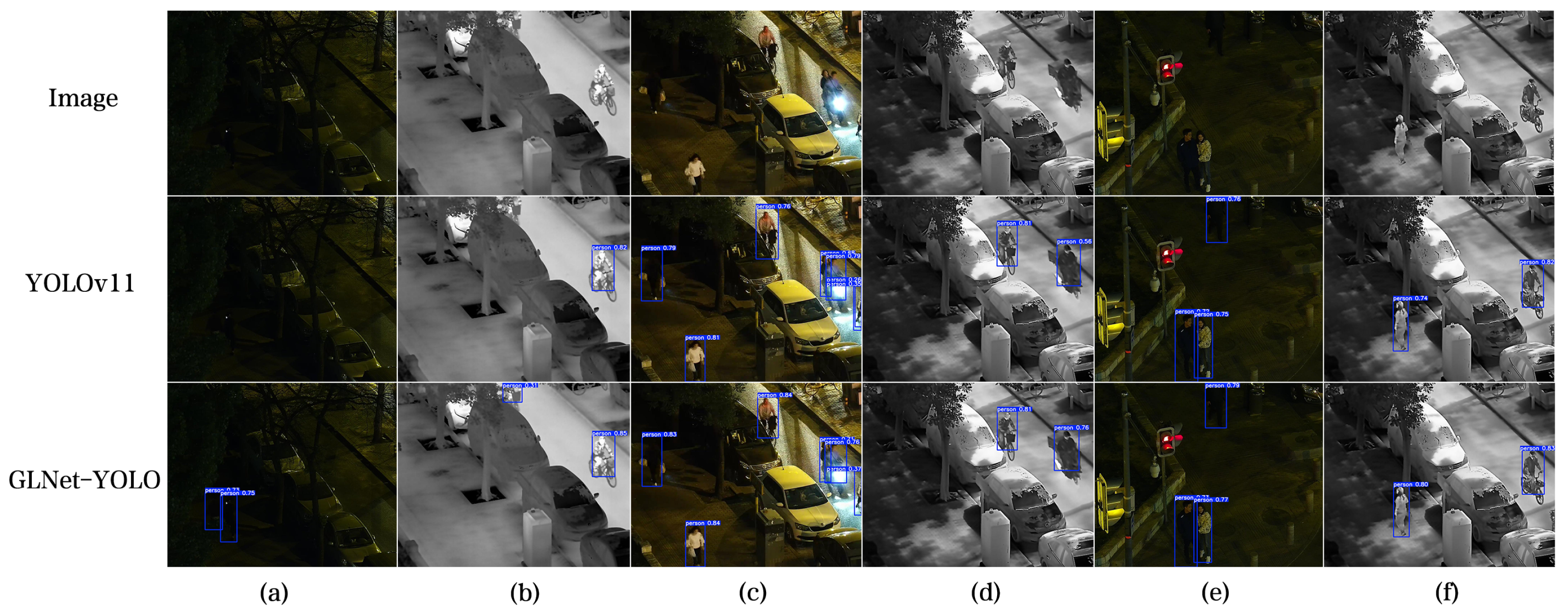
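The result tables report mAP@50 and mAP@50-95. The following is a minimal sketch of how these metrics are commonly computed for a single class such as pedestrians. It assumes axis-aligned `(x1, y1, x2, y2)` boxes, greedy score-ordered matching, and all-point interpolation of the precision-recall curve. The function names are illustrative, and evaluation toolkits differ in details (COCO-style evaluation, for example, uses 101-point interpolation), so values from this sketch are not directly comparable to the reported numbers.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(
    dets: Sequence[Tuple[float, Box]], gts: Sequence[Box], thr: float
) -> List[Tuple[float, bool]]:
    """Label each detection as hit/miss; every ground-truth box is claimed at most once."""
    used = set()
    labelled = []
    for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in used:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            used.add(best_j)
        labelled.append((score, best_j >= 0))
    return labelled


def average_precision(labelled: Sequence[Tuple[float, bool]], n_gt: int) -> float:
    """Area under the precision-recall curve (all-point interpolation)."""
    if n_gt == 0:
        return 0.0
    ranked = sorted(labelled, key=lambda t: t[0], reverse=True)
    tp, precisions, recalls = 0, [], []
    for k, (_, hit) in enumerate(ranked, start=1):
        tp += int(hit)
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def ap_at(dets, gts, thr: float) -> float:
    return average_precision(match_detections(dets, gts, thr), len(gts))


def ap_50_95(dets, gts) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [t / 100 for t in range(50, 100, 5)]
    return sum(ap_at(dets, gts, t) for t in thresholds) / len(thresholds)


# Toy example: two ground-truth pedestrians, three detections.
gts = [(0.0, 0.0, 10.0, 10.0), (20.0, 20.0, 30.0, 30.0)]
dets = [
    (0.9, (0.0, 0.0, 10.0, 10.0)),    # perfect match, IoU = 1.0
    (0.8, (20.0, 20.0, 29.0, 28.0)),  # loose match, IoU = 0.72
    (0.3, (50.0, 50.0, 60.0, 60.0)),  # false positive
]
print(ap_at(dets, gts, 0.5), ap_50_95(dets, gts))
```

In the toy example the loosely localized box counts as a hit at IoU 0.5 but not at 0.75 and above, so AP@50-95 comes out lower than AP@50. This is why mAP@50-95 is the more sensitive of the two metrics to localization quality.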

4.4. Model Testing and Evaluation

4.5. Summary of Experimental Results

5. Discussion

5.1. The Trade-Off Between Detection Quantity and Quality

5.2. Synergistic Value of FM and DMR Modules

5.3. Model Limitations and Deployment Challenges

5.4. Broader Context and Future Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

1. Lee, W.-Y.; Jovanov, L.; Philips, W. Multimodal pedestrian detection based on cross-modality reference search. IEEE Sens. J. 2024, 24, 17291–17306.

2. Dasgupta, K.; Das, A.; Yogamani, S. Spatio-contextual deep network-based multimodal pedestrian detection for autonomous driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15940–15950.

3. Zhao, R.; Hao, J.; Huo, H. Research on Multi-Modal Pedestrian Detection and Tracking Algorithm Based on Deep Learning. Future Internet 2024, 16, 194.

4. Hamdi, S.; Sghaier, S.; Faiedh, H.; Souani, C. Robust pedestrian detection for driver assistance systems using machine learning. Int. J. Veh. Des. 2020, 83, 140–170.

5. Chen, J.; Ding, J.; Ma, J. HitFusion: Infrared and visible image fusion for high-level vision tasks using transformer. IEEE Trans. Multimed. 2024, 26, 10145–10159.

6. Gupta, I.; Gupta, S.; Mishra, A.K.; Diwakar, M.; Singh, P.; Pandey, N.K. Deep learning-enabled infrared and visual image fusion. In Proceedings of the International Conference Machine Learning, Advances in Computing, Renewable Energy and Communication; Springer: Singapore, 2024.

7. Sun, X.; Yu, Y.; Cheng, Q. Adaptive multimodal feature fusion with frequency domain gate for remote sensing object detection. Remote Sens. Lett. 2024, 15, 133–144.

8. Chen, J.; Yang, L.; Liu, W.; Tian, X.; Ma, J. LENFusion: A joint low-light enhancement and fusion network for nighttime infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2024, 73, 5018715.

9. Du, K.; Li, H.; Zhang, Y.; Yu, Z. CHITNet: A complementary to harmonious information transfer network for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2025, 74, 5005917.

10. Tang, L.; Yuan, J.; Zhang, H.; Jiang, X.; Ma, J. PIAFusion: A progressive infrared and visible image fusion network based on illumination aware. Inf. Fusion 2022, 83–84, 79–92.

11. Meng, S.; Liu, Y. Multimodal Feature Fusion YOLOv5 for RGB-T Object Detection. In Proceedings of the 2022 China Automation Congress (CAC), Xiamen, China, 25–27 November 2022; pp. 2333–2338.

12. Rao, D.; Xu, T.; Wu, X.-J. TGFuse: An infrared and visible image fusion approach based on transformer and generative adversarial network. IEEE Trans. Image Process. 2023; early access.

13. Hui, L.; Wu, X.-J. DenseFuse: A fusion approach to infrared and visible images. IEEE Trans. Image Process. 2018, 28, 2614–2623.

14. Tang, W.; He, F.; Liu, Y. YDTR: Infrared and visible image fusion via Y-shape dynamic transformer. IEEE Trans. Multimed. 2023, 25, 5413–5428.

15. Hwang, S.; Han, D.; Jeon, M. Multispectral Detection Transformer with Infrared-Centric Feature Fusion. arXiv 2025, arXiv:2505.15137.

16. Zhang, H.; Zuo, X.; Jiang, J.; Guo, C.; Ma, J. MRFS: Mutually Reinforcing Image Fusion and Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–18 June 2024; pp. 26964–26973.

17. Hu, Z.; Kong, Q.; Liao, Q. Multi-Level Adaptive Attention Fusion Network for Infrared and Visible Image Fusion. IEEE Signal Process. Lett. 2025, 32, 366–370.

18. Chen, Y.; Yuan, X.; Wang, J.; Wu, R.; Li, X.; Hou, Q.; Cheng, M.-M. YOLO-MS: Rethinking Multi-Scale Representation Learning for Real-Time Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 4240–4252.

19. Wang, Z.; Su, Y.; Kang, F.; Wang, L.; Lin, Y.; Wu, Q.; Li, H.; Cai, Z. PC-YOLO11s: A lightweight and effective feature extraction method for small target image detection. Sensors 2025, 25, 348.

20. Xie, Y.; Zhang, L.; Yu, X.; Xie, W. YOLO-MS: Multispectral object detection via feature interaction and self-attention guided fusion. IEEE Trans. Cogn. Dev. Syst. 2023, 15, 2132–2143.

21. Deng, J.; Bei, S.; Su, S.; Zuo, Z. Feature fusion methods in deep-learning generic object detection: A survey. In Proceedings of the IEEE 9th Joint International Conference IT Artificial Intelligence (ITAIC), Chongqing, China, 11–13 December 2020; pp. 431–437.

22. Pan, L.; Diao, J.; Wang, Z.; Peng, S.; Zhao, C. HF-YOLO: Advanced Pedestrian Detection Model with Feature Fusion and Imbalance Resolution. Neural Process. Lett. 2024, 56, 90.

23. Du, Q.; Wang, Y.; Tian, L. Attention module based on feature normalization. In Proceedings of the 4th International Conference on Intelligent Computing Human-Computer Interaction (ICHCI), Guangzhou, China, 4–6 August 2023; pp. 438–442.

24. Sun, J.; Yin, M.; Wang, Z.; Xie, T.; Bei, S. Multispectral Object Detection Based on Multilevel Feature Fusion and Dual Feature Modulation. Electronics 2024, 13, 443.

25. Li, Y.; Sun, L. FocusNet: An infrared and visible image fusion network based on feature block separation and fusion. In Proceedings of the 17th International Conference on Advanced Computer Theory and Engineering (ICACTE), Hefei, China, 13–15 September 2024; pp. 236–240.

26. Liu, J.; Zhang, W.; Tang, Y.; Tang, J.; Wu, G. Residual feature aggregation network for image super-resolution. In Proceedings of the IEEE/CVF Conference Computer Vision Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 2356–2365.

27. Jia, J.X.; Zhu, C.; Li, M.; Tang, W.; Liu, S.; Zhou, W. LLVIP: A Visible-infrared Paired Dataset for Low-light Vision. arXiv 2021, arXiv:2108.10831.

28. Li, C.; Song, D.; Tong, R.; Tang, M. Multispectral Pedestrian Detection via Simultaneous Detection and Segmentation. arXiv 2018, arXiv:1808.04818.

29. Zheng, X.; Zheng, W.; Xu, C. A multi-modal fusion YoLo network for traffic detection. Comput. Intell. 2024, 40, e12615.

30. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

31. Khanam, R.; Hussain, M. What is YOLOv5: A deep look into the internal features of the popular object detector. arXiv 2024, arXiv:2407.20892.

32. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

33. Chen, Y.-T.; Shi, J.; Ye, Z.; Mertz, C.; Ramanan, D.; Kong, S. Multimodal Object Detection via Probabilistic Ensembling. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 139–158.

34. Cao, Y.; Bin, J.; Hamari, J.; Blasch, E.; Liu, Z. Multimodal Object Detection by Channel Switching and Spatial Attention. In Proceedings of the IEEE/CVF Conference Computer Vision Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 403–411.

35. Zhao, T.; Yuan, M.; Jiang, F.; Wang, N.; Wei, X. Removal then Selection: A Coarse-to-Fine Fusion Perspective for RGB-Infrared Object Detection. arXiv 2024, arXiv:2401.10731.

36. Ma, J.; Zhang, H.; Shao, Z.; Liang, P.; Xu, H. GANMcC: A Generative Adversarial Network With Multiclassification Constraints for Infrared and Visible Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 5005014.

37. Zhang, H.; Ma, J. SDNet: A Versatile Squeeze-and-Decomposition Network for Real-Time Image Fusion. Int. J. Comput. Vis. 2021, 129, 2761–2785.

38. Tang, L.; Xiang, X.; Zhang, H.; Gong, M.; Ma, J. DIVFusion: Darkness-free infrared and visible image fusion. Inf. Fusion 2022, 91, 477–493.

39. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

40. Wu, M.; Yao, Z.; Verbeke, M.; Karsmakers, P.; Gorissen, B.; Reynaerts, D. Data-driven models with physical interpretability for real-time cavity profile prediction in electrochemical machining processes. Eng. Appl. Artif. Intell. 2025, 160, 111807.

41. Ge, J.; Yao, Z.; Wu, M.; Almeida, J.H.S., Jr.; Jin, Y.; Sun, D. Tackling data scarcity in machine learning-based CFRP drilling performance prediction through a broad learning system with virtual sample generation (BLS-VSG). Compos. Part B Eng. 2025, 305, 112701.

| Model | Input | mAP@50 (%) | mAP@50-95 (%) |
|---|---|---|---|
| YOLOv3 (Darknet53) [30] | RGB | 85.9 | 43.3 |
| YOLOv3 (Darknet53) [30] | IR | 89.7 | 52.8 |
| YOLOv5 (CSPD53) [31] | RGB | 90.8 | 50.0 |
| YOLOv5 (CSPD53) [31] | IR | 94.6 | 61.9 |
| Faster R-CNN [32] | RGB | 91.4 | 49.2 |
| Faster R-CNN [32] | IR | 96.1 | 61.1 |
| YOLOv8n | RGB | 88.0 | 49.9 |
| YOLOv8n | IR | 96.3 | 65.2 |
| YOLOv11n | RGB | 87.4 | 49.7 |
| YOLOv11n | IR | 95.9 | 63.1 |
| ProbEn [33] | RGB + IR | 93.4 | 51.5 |
| CSAA [34] | RGB + IR | 94.3 | 59.2 |
| RSDet [35] | RGB + IR | 95.8 | 61.3 |
| GANMcC [36] | RGB + IR | 87.8 | 49.8 |
| DenseFuse [13] | RGB + IR | 88.5 | 50.4 |
| SDNet [37] | RGB + IR | 86.6 | 50.8 |
| DIVFusion [38] | RGB + IR | 89.8 | 52.0 |
| YOLOv11n | RGB + IR | 96.9 | 62.9 |
| GLNet-YOLO (ours) | RGB + IR | 96.6 | 65.9 |

| Model Configuration | mAP@50 | mAP@50-95 | Model Size | Core Changes |
|---|---|---|---|---|
| YOLOv11n (RGB + IR) | 96.9% | 62.9% | 8.16 | Basic dual branch |
| +FM Module | 96.8% (−0.1%) | 65.7% (+2.8%) | 8.17 | Verify FM module |
| +DMR Module | 96.5% (−0.4%) | 65.0% (+2.1%) | 8.17 | Verify DMR module |
| +FM+DMR Module | 96.6% (−0.3%) | 65.9% (+3.0%) | 8.18 | Verify FM+DMR synergy |
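The bracketed deltas in the ablation table are absolute differences from the baseline row, that is, percentage points rather than relative changes. A short sketch that recomputes them from the tabulated values follows; the dictionary layout and function name are illustrative only.

```python
# Values copied from the ablation table (percent).
baseline = {"mAP@50": 96.9, "mAP@50-95": 62.9}
variants = {
    "+FM": {"mAP@50": 96.8, "mAP@50-95": 65.7},
    "+DMR": {"mAP@50": 96.5, "mAP@50-95": 65.0},
    "+FM+DMR": {"mAP@50": 96.6, "mAP@50-95": 65.9},
}


def deltas_vs_baseline(row: dict, base: dict) -> dict:
    """Absolute difference to the baseline, in percentage points."""
    return {metric: round(row[metric] - base[metric], 1) for metric in base}


ablation_deltas = {name: deltas_vs_baseline(row, baseline) for name, row in variants.items()}
for name, d in ablation_deltas.items():
    print(f"{name:8s} mAP@50 {d['mAP@50']:+.1f}  mAP@50-95 {d['mAP@50-95']:+.1f}")
```

Read this way, each configuration gives up at most 0.4 points of mAP@50 and gains between 2.1 and 3.0 points of mAP@50-95 compared with the dual-branch baseline.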

| Model | Input | mAP@50 (%) | mAP@50-95 (%) |
|---|---|---|---|
| YOLOv11 | RGB | 43.6 | 13.7 |
| YOLOv11 | IR | 50.7 | 17.2 |
| GLNet-YOLO (ours) | RGB + IR | 54.4 | 18.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Zhao, Q.; Xie, X.; Shen, Y.; Ran, J.; Gui, S.; Zhang, H.; Li, X.; Zhang, Z. GLNet-YOLO: Multimodal Feature Fusion for Pedestrian Detection. AI 2025, 6, 229. https://doi.org/10.3390/ai6090229
