Long Short-Term Memory Networks: A Comprehensive Survey

Abstract

1. Introduction

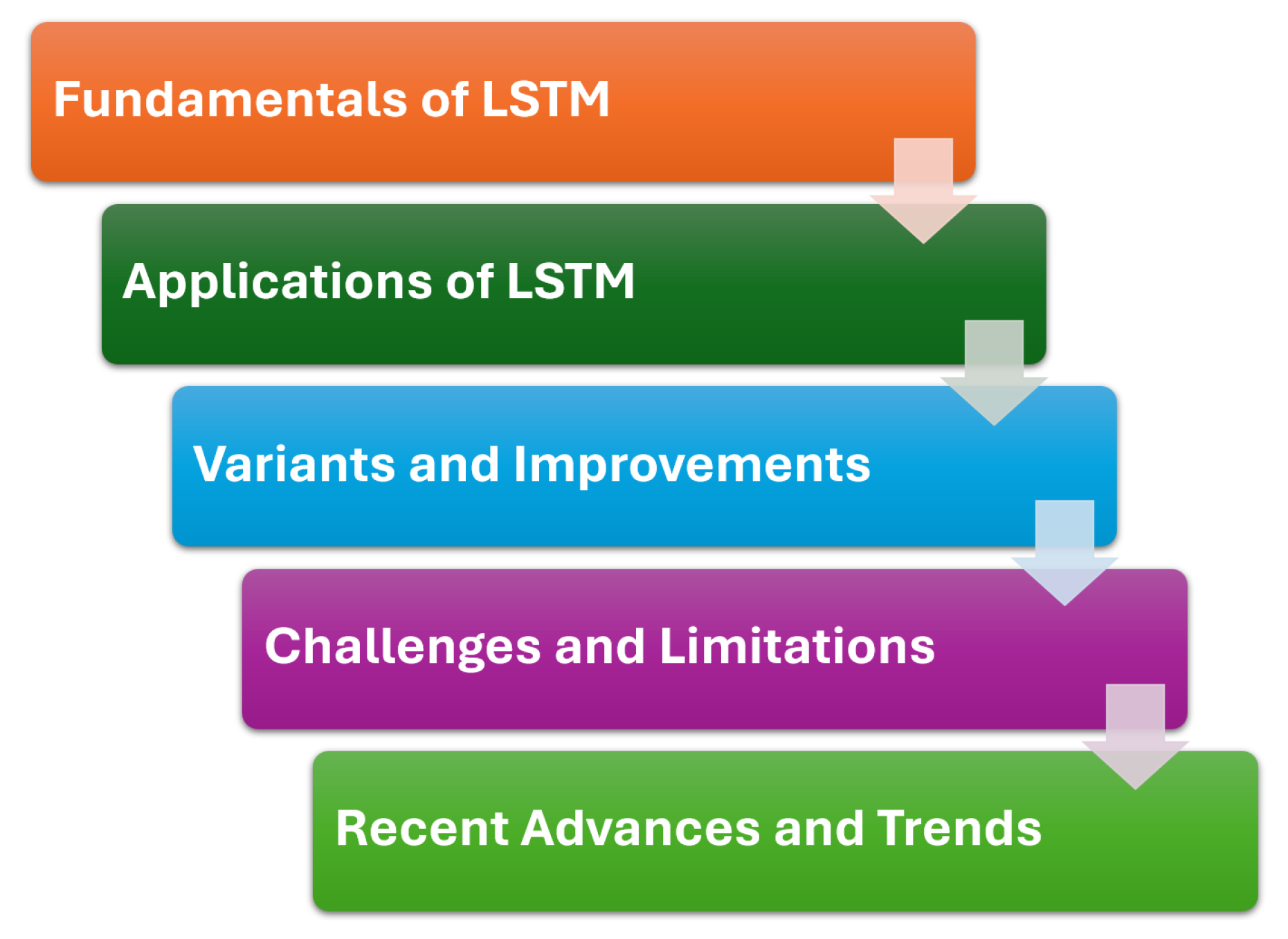

- Providing a clear and comprehensive explanation of the fundamental principles and architecture of LSTM networks, thereby facilitating a solid understanding for researchers and practitioners new to the topic.

- Presenting a systematic review of LSTM applications and highlighting domains where LSTMs have demonstrated significant effectiveness.

- Summarizing and comparing the different enhancements and variants of the LSTM architecture reported in the recent literature.

- Identifying and critically analyzing the challenges and limitations that arise when LSTMs are used in practical implementations.

- Highlighting recent research trends and outlining future research directions, thus offering guidance for subsequent work in the field.

2. Fundamentals of LSTM

2.1. LSTM Architecture and Mechanism

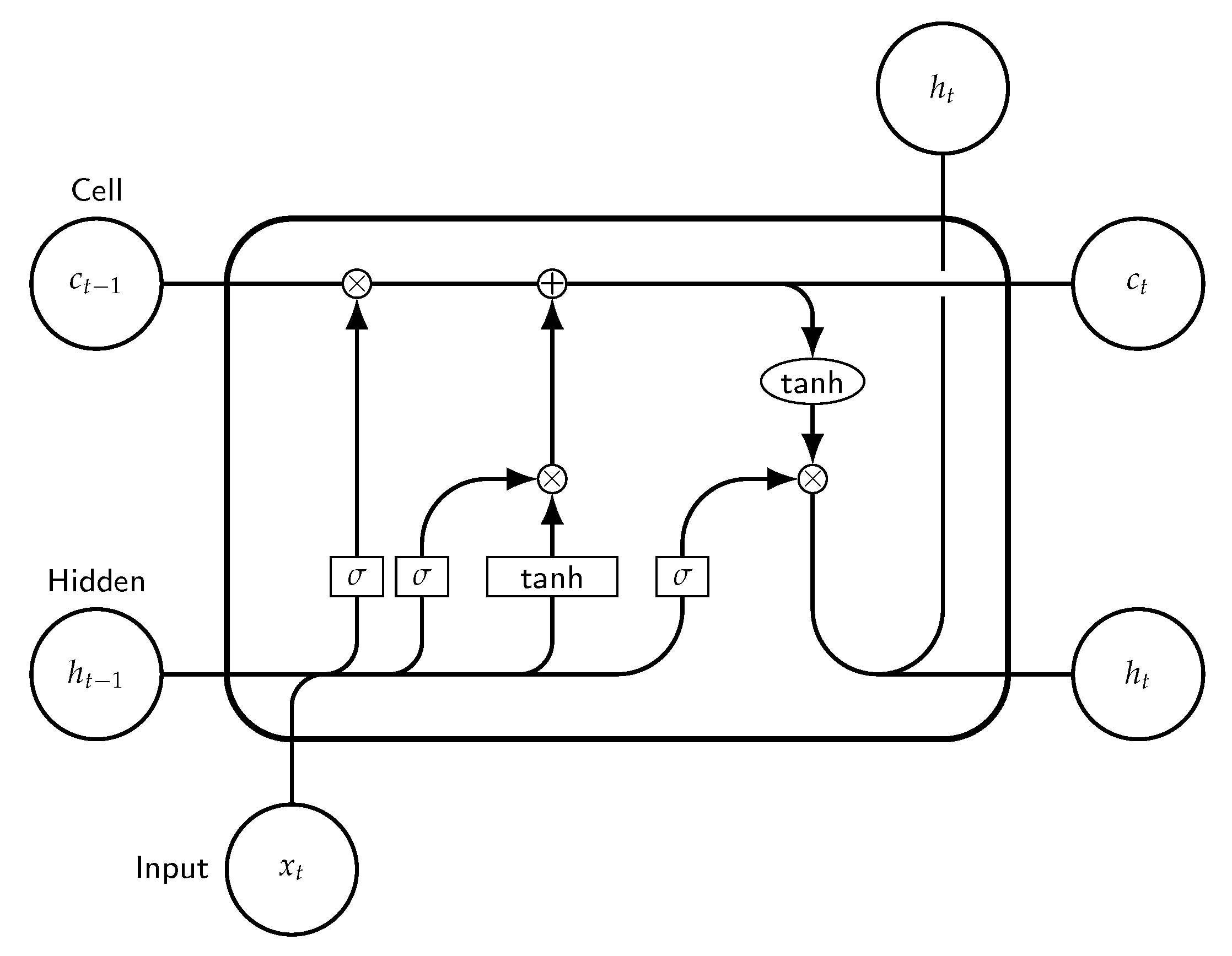

- Cell State ($C_t$): The cell state serves as the memory of the LSTM, carrying relevant information through the sequence. It is updated at each time step, but largely additively: the forget gate scales down the previous state and the input gate adds new content. As a result, information can persist across many time steps without significant degradation. This property lets LSTMs retain essential details over long sequences, making them suitable for applications such as language modeling and time series prediction.

- Hidden State ($h_t$): The hidden state is the output of the LSTM at time step $t$ and is used for making predictions. It summarizes the input sequence up to time $t$ and is passed both to the next time step and, in multi-layer networks, to the next LSTM layer. The hidden state can be interpreted as a filtered version of the cell state that exposes the information relevant to the current prediction. This allows the model to adapt its outputs as the context of the input sequence evolves.

- Gates: LSTMs utilize three types of gates to control the flow of information, each serving a distinct purpose:

  - Input Gate ($i_t$): This gate determines how much of the new information from the current input should be added to the cell state. It uses a sigmoid activation function to output values between 0 and 1, effectively acting as a filter. The input gate is computed as $i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$ (1). A value obtained by Formula (1) close to one indicates that the information should be fully added to the cell state, whereas a value close to zero implies that little to no information should be added. Here, $i_t$ is the input gate activation, $W_i$ is the weight matrix, $h_{t-1}$ is the hidden state from the previous time step, $x_t$ is the current input, $[h_{t-1}, x_t]$ denotes their concatenation, and $b_i$ is the bias term.

  - Forget Gate ($f_t$): The forget gate decides which information from the cell state should be discarded. Like the input gate, it employs a sigmoid function: $f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$ (2). Each component of $f_t$ multiplies the corresponding component of the previous cell state, so a value near zero erases it and a value near one keeps it. The forget gate obtained by Formula (2) thus enables the LSTM to remove irrelevant information and prevents the cell state from becoming cluttered, which is essential for maintaining the model’s ability to focus on relevant patterns over time. In this formula, $f_t$ is the forget gate activation, $W_f$ is the weight matrix, $b_f$ is the bias term, and the other variables are as previously defined.

  - Output Gate ($o_t$): This gate controls which part of the cell state is exposed as the hidden state, and hence what information is passed to the next LSTM cell: $o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$ (3). The output gate calculated by applying Formula (3) ensures that the hidden state reflects only the most pertinent information from the cell state, which is crucial for making accurate predictions at each time step. Here, $o_t$ is the output gate activation, $W_o$ is the weight matrix, $b_o$ is the bias term, and the other variables are as defined earlier.

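After the gates are computed, the candidate cell state, the cell-state update, and the new hidden state follow the standard LSTM formulation:

$$\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C)$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

$$h_t = o_t \odot \tanh(C_t)$$

where:

- $\tilde{C}_t$ is the candidate cell state and $C_t$ is the updated cell state.

- $h_t$ is the updated hidden state.

- $\sigma$ is the sigmoid activation function, which outputs values in the range (0, 1), and $\odot$ denotes element-wise multiplication.

- $W_i$, $W_f$, $W_o$, $W_C$ are weight matrices, and $b_i$, $b_f$, $b_o$, $b_C$ are bias vectors, which are learned during training.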
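To make the gate equations concrete, the following is a minimal pure-Python sketch of one LSTM time step. It assumes the concatenated-input form of Formulas (1)–(3) and the standard update $C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$, $h_t = o_t \odot \tanh(C_t)$. The helper names (`lstm_step`, `init_params`, `lstm_forward`) and the parameter-dictionary layout are hypothetical illustrations, not an API from any library; practical work would use a framework layer such as PyTorch's `torch.nn.LSTM`.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Params = Dict[str, list]  # hypothetical layout: "W_i", "b_i", ..., "W_C", "b_C"


def sigmoid(z: float) -> float:
    """Logistic sigmoid, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, v: Sequence[float], b: Sequence[float]) -> Vector:
    """Compute W . v + b for a row-major weight matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t: Sequence[float], h_prev: Sequence[float],
              c_prev: Sequence[float], params: Params) -> Tuple[Vector, Vector]:
    """One LSTM time step; returns (h_t, C_t)."""
    z = list(h_prev) + list(x_t)  # concatenation [h_{t-1}, x_t]
    i_t = [sigmoid(a) for a in affine(params["W_i"], z, params["b_i"])]  # input gate, Formula (1)
    f_t = [sigmoid(a) for a in affine(params["W_f"], z, params["b_f"])]  # forget gate, Formula (2)
    o_t = [sigmoid(a) for a in affine(params["W_o"], z, params["b_o"])]  # output gate, Formula (3)
    c_tilde = [math.tanh(a) for a in affine(params["W_C"], z, params["b_C"])]  # candidate state
    # C_t = f_t * C_{t-1} + i_t * candidate (element-wise)
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]
    # h_t = o_t * tanh(C_t) (element-wise), so every |h_t| component is below 1
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


def init_params(input_size: int, hidden_size: int, seed: int = 0) -> Params:
    """Small random weights and zero biases (illustrative, not a recommended initializer)."""
    rng = random.Random(seed)
    params: Params = {}
    for gate in ("i", "f", "o", "C"):
        params["W_" + gate] = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size + input_size)]
                               for _ in range(hidden_size)]
        params["b_" + gate] = [0.0] * hidden_size
    return params


def lstm_forward(xs: Sequence[Sequence[float]], params: Params,
                 hidden_size: int) -> Tuple[List[Vector], Vector]:
    """Run the cell over a whole sequence, starting from zero hidden and cell states."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    hidden_states = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, params)
        hidden_states.append(h)
    return hidden_states, c


params = init_params(input_size=2, hidden_size=3)
hs, c_last = lstm_forward([[1.0, 0.5], [0.2, -0.3], [0.0, 1.0]], params, hidden_size=3)
```

The loop in `lstm_forward` makes the sequential dependency explicit: step $t$ cannot start until $h_{t-1}$ and $C_{t-1}$ are available.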

2.2. Comparison with Traditional RNNs

2.3. Feature Selection and Hyperparameter Optimization

3. Applications of LSTM

3.1. Natural Language Processing

- Machine Translation [31]: LSTMs are employed in translating text from one language to another. For instance, Google’s Neural Machine Translation (GNMT) system, deployed in Google Translate in 2016, used deep LSTM encoder and decoder stacks to process word sequences and improved translation quality across many language pairs. By capturing contextual relationships between words, such models can better handle idiomatic expressions and complex grammatical structures, producing translations that are both accurate and contextually appropriate.

- Sentiment Analysis [32,33,34]: LSTMs analyze sentiments in text data, such as product reviews or social media posts, and are frequently evaluated on public benchmark datasets such as the IMDB movie review corpus. Consider a scenario where a company analyzes Twitter data to gauge customer sentiment about a new product launch. Using an LSTM model, the company can classify tweets as positive, negative, or neutral at scale, enabling it to respond promptly to customer feedback and adjust marketing strategies accordingly.

- Text Generation [35,36]: In applications such as creative writing and chatbots, LSTMs are used to generate coherent and contextually relevant text. Before Transformer-based models became dominant, many text generation systems relied on Stacked LSTM architectures to model long-range dependencies in language. More recent models, such as OpenAI’s GPT-2, are built on the Transformer architecture rather than on LSTMs, but they address the same sequence-learning problem of generating human-like text from a given prompt. Businesses can leverage these capabilities to create automated customer support chatbots that provide accurate and contextually appropriate responses to user inquiries.

- Named Entity Recognition [37,38,39,40]: LSTMs help identify and classify entities in text, such as names of people, organizations, and locations. For example, in healthcare, extracting patient information from clinical notes is crucial. An LSTM-based model can accurately identify and extract entities like drug names, dosages, and medical conditions, facilitating better data management and research.

3.2. Time Series Analysis

- Financial Forecasting [42,43]: LSTMs are widely used to predict stock prices and market trends from historical data. For instance, hedge funds may employ LSTM models to forecast stock prices based on historical trading data, economic indicators, and news sentiment. By learning patterns from past data, LSTMs can support traders’ decisions about when to buy or sell, although financial time series are noisy and such forecasts remain uncertain.

- Weather Forecasting [44,45,46]: Meteorological models utilize LSTMs to predict weather conditions by analyzing historical weather data. An example is using LSTMs to forecast temperature and precipitation based on past climatic data, enabling more accurate predictions. This capability is crucial for agriculture, where farmers can better plan planting and harvesting times based on expected weather patterns.

- Anomaly Detection [47,48]: In fields such as manufacturing and finance, LSTMs can identify anomalies in time series data, such as fraudulent transactions or equipment failures. For example, a bank might use LSTMs to monitor transaction patterns and detect unusual activities that could indicate fraud. The system can automatically flag these transactions for further investigation, enhancing security and reducing losses.
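The forecasting and anomaly-detection uses above share two data-handling steps that can be sketched independently of the model: framing a series as (past window, future target) training pairs, and flagging time points whose forecast error is unusually large. The sketch below is a minimal illustration under stated assumptions. The function names are hypothetical. The `forecast` values stand in for the output of a trained LSTM forecaster. The mean-plus-k-standard-deviations rule is one simple thresholding choice among many.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], window: int,
                 horizon: int = 1) -> List[Tuple[List[float], float]]:
    """Split a univariate series into (past window, future target) training pairs.

    Each input holds `window` consecutive values; the target is the value
    `horizon` steps after the last value in the window.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = list(series[start:start + window])
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs


def flag_anomalies(actual: Sequence[float], predicted: Sequence[float],
                   k: float = 3.0) -> List[int]:
    """Return indices whose absolute forecast error exceeds mean + k * std of the errors."""
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    threshold = mean(errors) + k * pstdev(errors)
    return [idx for idx, err in enumerate(errors) if err > threshold]


pairs = make_windows([1.0, 2.0, 3.0, 4.0, 5.0], window=3)
# pairs == [([1.0, 2.0, 3.0], 4.0), ([2.0, 3.0, 4.0], 5.0)]

observed = [10.0] * 20
observed[7] = 50.0        # an injected spike, e.g. an unusual transaction amount
forecast = [10.0] * 20    # stand-in for a trained LSTM forecaster's predictions
suspicious = flag_anomalies(observed, forecast)
# suspicious == [7]
```

In practice the threshold is usually estimated from errors on data known to be normal (for example a validation period) rather than from the same window being screened, so that large anomalies do not inflate the threshold they are tested against.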

3.3. Speech Recognition

- Voice Assistants [49]: Voice assistants such as Siri and Google Assistant have used LSTM-based models to recognize and process spoken commands. For instance, when a user asks a voice assistant to set a reminder, an LSTM-based acoustic model processes the audio frame by frame, carrying context from earlier frames forward so that each sound is interpreted in light of what preceded it. This enhances the user experience by enabling more natural interactions.

- Transcription Services [50]: LSTMs facilitate the conversion of spoken language into written text. Companies like Otter.ai utilize LSTMs to provide real-time transcription services for meetings and lectures, and LibriSpeech is one of the most commonly used benchmark datasets for training and evaluating such systems. Because the model can learn to handle various accents and speech patterns, it can produce accurate and contextually relevant transcriptions, which is invaluable for professionals who need precise records of discussions.

- Emotion Recognition [51,52]: By analyzing speech patterns and intonations, LSTMs can identify emotional states, which is useful in applications such as mental health monitoring and customer service. Understanding emotions can lead to improved user experiences and interactions, as customer service representatives can tailor their responses based on the detected emotional tone.

3.4. Other Emerging Applications

- Healthcare [53,54,55,56]: LSTMs analyze patient data over time, predicting health outcomes and personalizing treatment plans. For instance, hospitals can use LSTM models to track patients’ vital signs in real time and predict potential health deterioration. This enables timely interventions that can significantly improve patient outcomes, such as early detection of sepsis.

- Robotics [57,58,59]: In robotics, LSTMs help in path planning and decision-making by predicting future states based on past movements and sensory inputs. Consider autonomous vehicles, which use LSTMs to analyze traffic patterns and make real-time navigation decisions. This enhances the vehicle’s ability to operate safely and efficiently in dynamic environments.

- Video Analysis [60,61,62,63]: LSTMs are used in analyzing video sequences for applications such as action recognition and event prediction. For example, security systems can utilize LSTMs to detect unusual activities in surveillance footage. By analyzing sequences of frames, the system can identify potential security threats, enhancing safety in public spaces.

4. Variants and Improvements

4.1. Bidirectional LSTMs

- Architecture: A BiLSTM consists of two LSTM layers that read the same input sequence in opposite directions: one processes it from beginning to end (forward) and the other from end to beginning (backward). At each time step, the outputs of the two layers are concatenated or otherwise combined, providing a richer representation that captures context from both sides. This architecture is particularly useful in scenarios where the context surrounding a word is critical for understanding its meaning.

- Example: In a phrase like “The bank of the river,” the word that tells us “bank” refers to the side of a river rather than a financial institution, “river,” appears after “bank.” A purely forward LSTM has not yet seen “river” when it processes “bank,” whereas a BiLSTM combines the forward layer’s context from preceding words with the backward layer’s context from subsequent words, enhancing its ability to disambiguate meaning.

- Applications: This approach is particularly useful in tasks such as named entity recognition, where the model needs to identify proper nouns in context, and machine translation, where the encoder can read the entire source sentence before translation begins. For instance, Google’s GNMT system used a bidirectional LSTM in the bottom layer of its encoder so that each source word was represented with both its left and right context.

- Benefits: By leveraging information from both past and future states, BiLSTMs improve the model’s ability to make accurate predictions. This dual-context learning often results in better performance on tasks that require a comprehensive understanding of input sequences, such as sentiment analysis and sequence labeling. The trade-off is that the backward layer needs the entire sequence before it can produce any output, so BiLSTMs suit offline processing of complete inputs rather than streaming prediction or word-by-word text generation.
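The forward/backward arrangement can be sketched with a toy scalar LSTM cell (input and hidden size 1, chosen only to keep the code short). The backward direction is simply the same kind of recurrence run over the reversed sequence, with its outputs reversed again so that position $t$ lines up in both directions. The weights and helper names (`make_cell`, `run`, `bilstm`) are hypothetical; in PyTorch the equivalent layer is obtained with `torch.nn.LSTM(..., bidirectional=True)`.

```python
import math
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, float]            # (h, c) for a hidden size of 1
Cell = Callable[[float, State], State]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def make_cell(w: Sequence[float]) -> Cell:
    """Toy scalar LSTM cell.

    `w` holds 12 hypothetical weights: (weight on h, weight on x, bias) for the
    input, forget and output gates and the candidate, in that order.
    """
    def step(x: float, state: State) -> State:
        h, c = state
        pre = [w[3 * k] * h + w[3 * k + 1] * x + w[3 * k + 2] for k in range(4)]
        i, f, o = (sigmoid(p) for p in pre[:3])
        c_new = f * c + i * math.tanh(pre[3])
        return o * math.tanh(c_new), c_new
    return step


def run(cell: Cell, xs: Sequence[float]) -> List[float]:
    """Hidden state after every step, starting from a zero state."""
    state: State = (0.0, 0.0)
    outputs = []
    for x in xs:
        state = cell(x, state)
        outputs.append(state[0])
    return outputs


def bilstm(fwd: Cell, bwd: Cell, xs: List[float]) -> List[Tuple[float, float]]:
    forward = run(fwd, xs)                # position t has seen x_1 .. x_t
    backward = run(bwd, xs[::-1])[::-1]   # re-aligned: position t has seen x_t .. x_T
    return list(zip(forward, backward))   # concatenate both directions per position


fwd_cell = make_cell([0.1] * 12)
bwd_cell = make_cell([0.2] * 12)
tokens = [0.5, -1.0, 2.0, 0.3]            # stand-ins for embedded words
changed = tokens[:-1] + [5.0]             # alter only the last "word"
a = bilstm(fwd_cell, bwd_cell, tokens)
b = bilstm(fwd_cell, bwd_cell, changed)
# a[0][0] == b[0][0]: the forward state at position 0 cannot see the last word
# a[0][1] != b[0][1]: the backward state at position 0 can
```

The usage lines mirror the “bank … river” example: only the backward half of the representation at an early position reacts to a change later in the sentence, which is also why the full input must be available before any output is produced.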

4.2. Stacked LSTMs

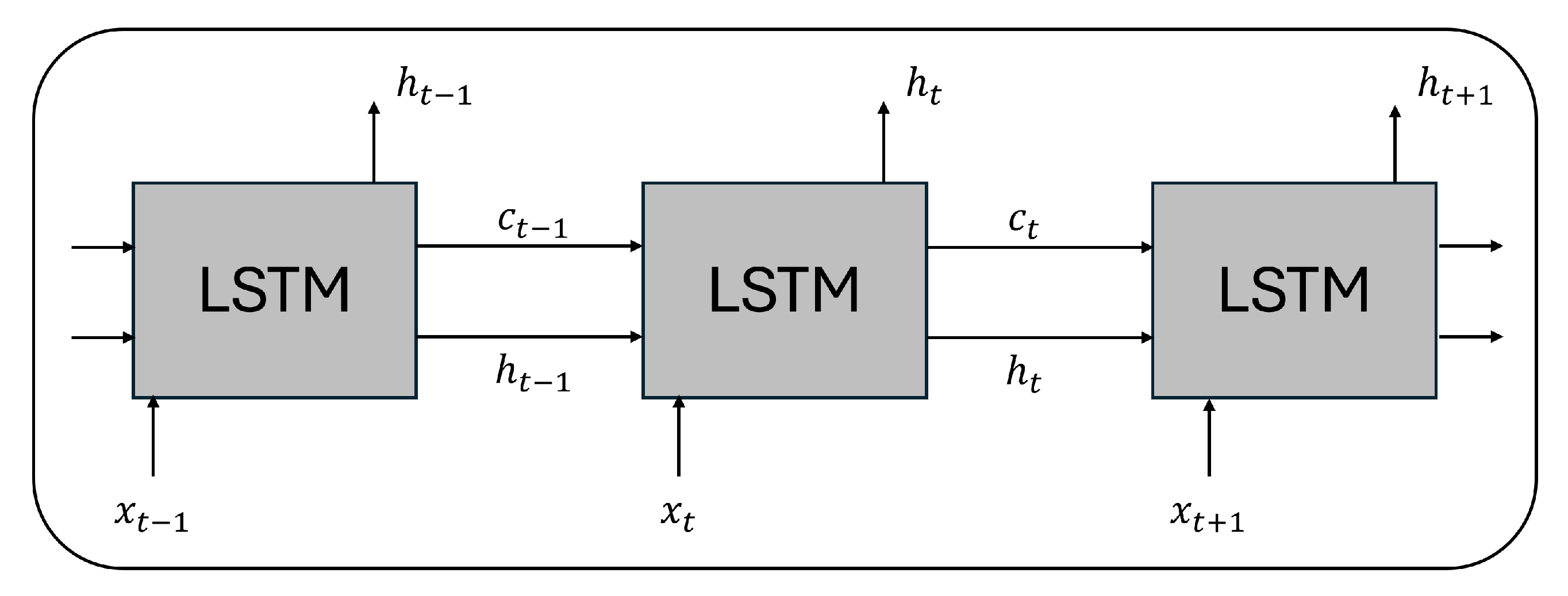

- Architecture: In a Stacked LSTM, the output of one LSTM layer serves as the input to the next layer. This hierarchical structure enables the model to capture high-level features at deeper layers while retaining the temporal dynamics in the lower layers. Typically, the first few layers might focus on capturing simple patterns, while deeper layers can learn more complex relationships in the data.

- Example: In speech recognition, lower layers of a Stacked LSTM might focus on phonetic features, such as phonemes and intonation, while higher layers can capture linguistic structures and context. For instance, a model might first learn to recognize the sounds of words and then understand them in the context of sentences, leading to more accurate transcriptions.

- Applications: Stacked LSTMs are effective in tasks requiring intricate feature extraction, such as video analysis, natural language processing, and handwriting recognition. For example, in video analysis, lower layers might extract motion patterns, while higher layers could recognize actions like running or jumping. This capability allows for sophisticated applications, such as action recognition in sports or surveillance footage analysis.

- Benefits: The increased capacity of Stacked LSTMs allows them to model more complex relationships within the data, leading to improved performance on challenging tasks. However, this complexity requires careful tuning of hyperparameters to avoid overfitting, particularly when working with smaller datasets.
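The layering described above can be sketched by feeding each layer’s full hidden-state sequence into the next layer as its input sequence. As before, a toy scalar LSTM cell keeps the code short; the weights and the helper names (`make_cell`, `run`, `stacked_lstm`) are hypothetical. In PyTorch the same wiring is configured through the `num_layers` argument of `torch.nn.LSTM`.

```python
import math
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, float]            # (h, c) for a hidden size of 1
Cell = Callable[[float, State], State]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def make_cell(w: Sequence[float]) -> Cell:
    """Toy scalar LSTM cell; `w` = (w_h, w_x, bias) for gates i, f, o and the candidate."""
    def step(x: float, state: State) -> State:
        h, c = state
        pre = [w[3 * k] * h + w[3 * k + 1] * x + w[3 * k + 2] for k in range(4)]
        i, f, o = (sigmoid(p) for p in pre[:3])
        c_new = f * c + i * math.tanh(pre[3])
        return o * math.tanh(c_new), c_new
    return step


def run(cell: Cell, xs: Sequence[float]) -> List[float]:
    """Hidden state after every step, starting from a zero state."""
    state: State = (0.0, 0.0)
    outputs = []
    for x in xs:
        state = cell(x, state)
        outputs.append(state[0])
    return outputs


def stacked_lstm(layers: Sequence[Cell], xs: Sequence[float]) -> List[float]:
    """Layer l+1 consumes the full hidden-state sequence produced by layer l."""
    seq = list(xs)
    for cell in layers:
        seq = run(cell, seq)
    return seq


layers = [make_cell([0.3] * 12), make_cell([-0.2] * 12), make_cell([0.1] * 12)]
inputs = [1.0, 0.0, -1.0, 0.5]
top = stacked_lstm(layers, inputs)   # one top-layer hidden state per input step
```

Each layer keeps the time axis intact, so the top layer still emits one state per input step; what changes with depth is how many nonlinear transformations separate that state from the raw input.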

4.3. Attention Mechanisms

- Concept: Instead of treating all input tokens equally, Attention Mechanisms weigh the importance of different tokens for each output token. This selective focus enables the model to capture relevant context more effectively, which is particularly beneficial in long sequences where not all parts of the input are equally important for every output.

- Example: In machine translation, when translating a long sentence, the model can focus on the source words that contribute most to the current output word. For instance, when translating “The cat sat on the mat” into French (“Le chat s’est assis sur le tapis”), the model would be expected to attend mainly to “cat” when generating “chat” and to “mat” when generating “tapis.” This capability helps in generating more fluent and accurate translations, particularly for long and complex sentences.

- Applications: Attention Mechanisms are widely used in tasks such as machine translation, text summarization, image captioning, and even video analysis. For example, in text summarization, attention helps the model identify key sentences in a document to create concise summaries that encapsulate the main ideas without losing important context.

- Benefits: The integration of attention with LSTMs leads to significant improvements in performance, particularly for long sequences. Because the decoder can consult every encoder state directly, the encoder no longer has to compress the whole input into a single fixed-length vector, and the model can concentrate on relevant information dynamically. This line of work led to the Transformer, which dispenses with recurrence altogether and relies entirely on Attention Mechanisms to achieve state-of-the-art results in various NLP tasks.
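The weighting step can be sketched in a few lines: score each encoder hidden state against the current decoder state, turn the scores into weights with a softmax, and take the weighted average of the encoder states as the context vector. This sketch assumes dot-product scoring, one of several common scoring functions (additive scoring is a frequent alternative). The 2-dimensional state vectors are hypothetical values chosen for illustration, not outputs of a trained model.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def attend(decoder_state: Sequence[float],
           encoder_states: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Dot-product attention over LSTM encoder states.

    Returns (weights, context): one weight per source position, summing to 1,
    and the weighted average of the encoder states.
    """
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states)) for d in range(dim)]
    return weights, context


# Hypothetical encoder states for the source words "The", "cat", "sat".
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_state = [0.0, 2.0]   # hypothetical decoder state while emitting "chat"
weights, context = attend(decoder_state, encoder_states)
# weights is approximately [0.090, 0.665, 0.245]: most of the mass falls on "cat"
```

The context vector is recomputed for every output word, which is how the decoder can shift its focus from “cat” to “mat” as the translation proceeds.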

5. Challenges and Limitations

5.1. Computational Complexity

- High Resource Consumption: LSTMs require significant computational resources, especially for large datasets and deep architectures. Training an LSTM model can demand substantial CPU or GPU time, and because each time step depends on the hidden state of the previous one, the computation cannot be parallelized across the time steps of a sequence. For instance, training a state-of-the-art LSTM for natural language processing tasks may require specialized hardware, such as TPUs, which can be costly.

- Scalability Issues: As the size of the network increases, particularly when stacking multiple LSTM layers, scalability becomes a concern. The number of parameters and the computation per time step grow quadratically with the hidden size, while training time and activation memory also grow linearly with the number of layers and the sequence length, since backpropagation through time must store activations for every step. This limits the model’s applicability in resource-constrained environments and can hinder the deployment of LSTM-based models in mobile or embedded systems where computational power is limited.

- Inference Speed: The complexity of LSTMs can also affect inference speed, which is critical in applications like real-time speech recognition or chatbots. Slower inference can hinder user experience and system performance, leading to delays that may frustrate users. For example, in a customer support chatbot, any lag in response time can diminish the perceived quality of the service.
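The growth rates mentioned above can be made concrete by counting parameters and multiplications. The sketch assumes the single-bias parameterization of Formulas (1)–(3) plus the candidate layer: four affine maps per layer, each with a weight matrix of shape hidden × (hidden + input). The `two_biases` flag reflects the fact that `torch.nn.LSTM` keeps two bias vectors per gate (`bias_ih` and `bias_hh`). The function names are hypothetical, and the multiplication count covers only the gate matrix-vector products, ignoring element-wise operations.

```python
def lstm_layer_params(input_size: int, hidden_size: int, two_biases: bool = False) -> int:
    """Trainable parameters in one LSTM layer (input, forget, output gates and candidate)."""
    per_map = hidden_size * (hidden_size + input_size) + hidden_size
    if two_biases:
        per_map += hidden_size  # second bias vector per map, as in torch.nn.LSTM
    return 4 * per_map


def stacked_lstm_params(input_size: int, hidden_size: int, num_layers: int,
                        two_biases: bool = False) -> int:
    # Layer 1 reads the raw features; every later layer reads the previous layer's hidden state.
    first = lstm_layer_params(input_size, hidden_size, two_biases)
    rest = (num_layers - 1) * lstm_layer_params(hidden_size, hidden_size, two_biases)
    return first + rest


def matvec_multiplies(input_size: int, hidden_size: int, num_layers: int, seq_len: int) -> int:
    """Multiplications in the gate matrix-vector products for one forward pass.

    Linear in seq_len and num_layers, quadratic in hidden_size; the seq_len steps
    must also run one after another because step t needs h_{t-1}.
    """
    per_step = 4 * hidden_size * (hidden_size + input_size)
    per_step += (num_layers - 1) * 4 * hidden_size * (2 * hidden_size)
    return per_step * seq_len


print(stacked_lstm_params(10, 20, 1))                   # 2480
print(stacked_lstm_params(10, 20, 2, two_biases=True))  # 5920, as for torch.nn.LSTM(10, 20, num_layers=2)
print(matvec_multiplies(10, 20, 2, 100))                # 560000
```

Doubling the hidden size roughly quadruples both numbers, whereas doubling the sequence length doubles the work but leaves the parameter count unchanged.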

5.2. Data Requirements

- Large Datasets: LSTMs typically perform well when trained on large datasets, which may not always be available. In domains with limited data, such as specialized medical applications or niche markets, LSTMs can overfit, leading to poor generalization on unseen data. This issue can result in models that perform well on training data but fail to deliver accurate predictions in real-world scenarios.

- Data Quality: The quality of the training data significantly impacts model performance. Noisy, unstructured, or biased data can result in suboptimal learning outcomes. For example, in natural language processing, poorly annotated datasets can lead to models that misunderstand context or semantics, thereby generating irrelevant or incorrect outputs.

- Domain-Specific Data: Training LSTMs for specialized tasks often requires domain-specific data, which can be difficult and time-consuming to collect. In fields like healthcare, obtaining annotated datasets can involve extensive collaboration with experts and adherence to regulatory standards, complicating the data collection process and extending project timelines.

5.3. Training Difficulties

- Long Training Times: Due to their complexity, LSTMs can take a long time to train, especially with large datasets. This extended training period can be a barrier to rapid prototyping and experimentation. Researchers may find it difficult to iterate quickly on model designs, slowing down the overall development process and delaying the deployment of solutions.

- Hyperparameter Tuning: LSTMs have numerous hyperparameters (e.g., learning rate, number of layers, hidden units) that need to be carefully tuned for optimal performance. Finding the right combination can be challenging and often requires extensive experimentation. This tuning process can be resource-intensive, requiring multiple training runs and potentially consuming significant computational resources.

- Vanishing and Exploding Gradients: Although LSTMs are designed to mitigate the vanishing gradient problem, they do not eliminate it on very long sequences, and they remain susceptible to exploding gradients during training. Exploding gradients can lead to unstable learning and hinder convergence; if not properly managed, they can cause the model to diverge, producing NaN values during training. Gradient clipping and other stabilizing techniques are therefore commonly used.
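Gradient clipping by global norm, the most common form of the clipping mentioned above, rescales all gradients together whenever their combined L2 norm exceeds a chosen limit. This preserves the update direction while bounding its size. The sketch below is a minimal pure-Python illustration with flat lists standing in for parameter gradients; the function name and the choice to raise on non-finite norms are assumptions. PyTorch users would call `torch.nn.utils.clip_grad_norm_` instead.

```python
import math
from typing import List, Sequence, Tuple


def clip_by_global_norm(grads: Sequence[Sequence[float]],
                        max_norm: float) -> Tuple[List[List[float]], float]:
    """Rescale all gradients so that their combined L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm measured before clipping.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if not math.isfinite(total_norm):
        # NaN or inf is already present; clipping cannot repair it, so the caller should skip this update.
        raise FloatingPointError("non-finite gradient norm")
    if total_norm <= max_norm:
        return [list(vec) for vec in grads], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in vec] for vec in grads], total_norm


clipped, norm_before = clip_by_global_norm([[3.0, 4.0], [0.0]], max_norm=1.0)
# norm_before == 5.0; clipped is approximately [[0.6, 0.8], [0.0]]
```

Clipping is applied after backpropagation and before the optimizer step. Monitoring the returned pre-clipping norm is a cheap way to see how often gradients are actually exploding.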

6. Recent Advances and Trends

6.1. Innovations in LSTM Architectures

6.2. Performance Comparisons

6.3. Emerging Research Areas

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- [2] Taye, M.M. Understanding of machine learning with deep learning: Architectures, workflow, applications and future directions. Computers 2023, 12, 91.

- [3] Gasmi, K.; Ben Ltaifa, I.; Eltoum Abdalrahman, A.; Hamid, O.; Othman Altaieb, M.; Ali, S.; Ben Ammar, L.; Mrabet, M. Hybrid Feature and Optimized Deep Learning Model Fusion for Detecting Hateful Arabic Content. IEEE Access 2025, 13, 131411–131431.

- [4] Gasmi, K.; Hrizi, O.; Ben Aoun, N.; Alrashdi, I.; Alqazzaz, A.; Hamid, O.; Othman Altaieb, M.; Abdalrahman, A.E.M.; Ben Ammar, L.; Mrabet, M.; et al. Enhanced Multimodal Physiological Signal Analysis for Pain Assessment Using Optimized Ensemble Deep Learning. Comput. Model. Eng. Sci. 2025, 143, 2459–2489.

- [5] Mienye, I.D.; Swart, T.G.; Obaido, G. Recurrent neural networks: A comprehensive review of architectures, variants, and applications. Information 2024, 15, 517.

- [6] Ullah, I.; Mahmoud, Q.H. Design and development of RNN anomaly detection model for IoT networks. IEEE Access 2022, 10, 62722–62750.

- [7] Van Houdt, G.; Mosquera, C.; Nápoles, G. A review on the long short-term memory model. Artif. Intell. Rev. 2020, 53, 5929–5955.

- [8] Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

- [9] Halbouni, A.; Gunawan, T.S.; Habaebi, M.H.; Halbouni, M.; Kartiwi, M.; Ahmad, R. Machine learning and deep learning approaches for cybersecurity: A review. IEEE Access 2022, 10, 19572–19585.

- [10] Behera, R.K.; Jena, M.; Rath, S.K.; Misra, S. Co-LSTM: Convolutional LSTM model for sentiment analysis in social big data. Inf. Process. Manag. 2021, 58, 102435.

- [11] Edara, D.C.; Vanukuri, L.P.; Sistla, V.; Kolli, V.K.K. Sentiment analysis and text categorization of cancer medical records with LSTM. J. Ambient Intell. Humaniz. Comput. 2023, 14, 5309–5325.

- [12] Lindemann, B.; Maschler, B.; Sahlab, N.; Weyrich, M. A survey on anomaly detection for technical systems using LSTM networks. Comput. Ind. 2021, 131, 103498.

- [13] Hua, Y.; Zhao, Z.; Li, R.; Chen, X.; Liu, Z.; Zhang, H. Deep learning with long short-term memory for time series prediction. IEEE Commun. Mag. 2019, 57, 114–119.

- [14] Oruh, J.; Viriri, S.; Adegun, A. Long short-term memory recurrent neural network for automatic speech recognition. IEEE Access 2022, 10, 30069–30079.

- [15] Agarwal, P.; Kumar, S. Electroencephalography-based imagined speech recognition using deep long short-term memory network. ETRI J. 2022, 44, 672–685.

- [16] Fang, K.; Kifer, D.; Lawson, K.; Shen, C. Evaluating the potential and challenges of an uncertainty quantification method for long short-term memory models for soil moisture predictions. Water Resour. Res. 2020, 56, e2020WR028095.

- [17] Zhang, J.; Wang, P.; Yan, R.; Gao, R.X. Long short-term memory for machine remaining life prediction. J. Manuf. Syst. 2018, 48, 78–86.

- [18] Fernando, T.; Denman, S.; McFadyen, A.; Sridharan, S.; Fookes, C. Tree memory networks for modelling long-term temporal dependencies. Neurocomputing 2018, 304, 64–81.

- [19] Muhuri, P.S.; Chatterjee, P.; Yuan, X.; Roy, K.; Esterline, A. Using a long short-term memory recurrent neural network (LSTM-RNN) to classify network attacks. Information 2020, 11, 243.

- [20] Bischl, B.; Binder, M.; Lang, M.; Pielok, T.; Richter, J.; Coors, S.; Thomas, J.; Ullmann, T.; Becker, M.; Boulesteix, A.L.; et al. Hyperparameter optimization: Foundations, algorithms, best practices, and open challenges. WIREs Data Min. Knowl. Discov. 2023, 13, e1484.

- [21] Kazemi, F.; Asgarkhani, N.; Shafighfard, T.; Jankowski, R.; Yoo, D.Y. Machine-Learning Methods for Estimating Performance of Structural Concrete Members Reinforced with Fiber-Reinforced Polymers. Arch. Comput. Methods Eng. 2025, 32, 571–603.

- [22] Huang, L.; Zhou, X.; Shi, L.; Gong, L. Time Series Feature Selection Method Based on Mutual Information. Appl. Sci. 2024, 14, 1960.

- [23] Alalhareth, M.; Hong, S.C. An Improved Mutual Information Feature Selection Technique for Intrusion Detection Systems in the Internet of Medical Things. Sensors 2023, 23, 4971.

- [24] Berahmand, K.; Daneshfar, F.; Salehi, E.S.; Li, Y.; Xu, Y. Autoencoders and their applications in machine learning: A survey. Artif. Intell. Rev. 2024, 57, 28.

- [25] Sakib, S.; Mahadi, M.K.; Abir, S.R.; Moon, A.M.; Shafiullah, A.; Ali, S.; Faisal, F.; Nishat, M.M. Attention-Based Models for Multivariate Time Series Forecasting: Multi-step Solar Irradiation Prediction. Heliyon 2024, 10, e27795.

- [26] Vincent, A.M.; Jidesh, P. An improved hyperparameter optimization framework for AutoML systems using evolutionary algorithms. Sci. Rep. 2023, 13, 4737.

- [27] Yang, X.; Li, J.; Jiang, X. Research on information leakage in time series prediction based on empirical mode decomposition. Sci. Rep. 2024, 14, 28362.

- [28] Liu, S.; Zhou, D.J. Using cross-validation methods to select time series models: Promises and pitfalls. Br. J. Math. Stat. Psychol. 2024, 77, 337–355.

- [29] Dhake, H.; Kashyap, Y.; Kosmopoulos, P. Algorithms for Hyperparameter Tuning of LSTMs for Time Series Forecasting. Remote Sens. 2023, 15, 2076.

- [30] Purba, M.R.; Akter, M.; Ferdows, R.; Ahmed, F. A hybrid convolutional long short-term memory (CNN-LSTM) based natural language processing (NLP) model for sentiment analysis of customer product reviews in Bangla. J. Discrete Math. Sci. Cryptogr. 2022, 25, 2111–2120.

- [31] Su, C.; Huang, H.; Shi, S.; Jian, P.; Shi, X. Neural machine translation with Gumbel Tree-LSTM based encoder. J. Vis. Commun. Image Represent. 2020, 71, 102811.

- [32] Gondhi, N.K.; Chaahat; Sharma, E.; Alharbi, A.H.; Verma, R.; Shah, M.A. Efficient Long Short-Term Memory-Based Sentiment Analysis of E-Commerce Reviews. Comput. Intell. Neurosci. 2022, 2022, 3464524.

- [33] Al-Smadi, M.; Talafha, B.; Al-Ayyoub, M.; Jararweh, Y. Using long short-term memory deep neural networks for aspect-based sentiment analysis of Arabic reviews. Int. J. Mach. Learn. Cybern. 2019, 10, 2163–2175.

- [34] Gandhi, U.D.; Malarvizhi Kumar, P.; Chandra Babu, G.; Karthick, G. Sentiment analysis on twitter data by using convolutional neural network (CNN) and long short term memory (LSTM). Wirel. Pers. Commun. 2021, 1–10.

- [35] Perez-Castro, A.; Martínez-Torres, M.d.R.; Toral, S. Efficiency of automatic text generators for online review content generation. Technol. Forecast. Soc. Change 2023, 189, 122380.

- [36] Hajipoor, O.; Nickabadi, A.; Homayounpour, M.M. GPTGAN: Utilizing the GPT language model and GAN to enhance adversarial text generation. Neurocomputing 2025, 617, 128865.

- [37] Ma, P.; Jiang, B.; Lu, Z.; Li, N.; Jiang, Z. Cybersecurity named entity recognition using bidirectional long short-term memory with conditional random fields. Tsinghua Sci. Technol. 2020, 26, 259–265.

- [38] Khalifa, M.; Shaalan, K. Character convolutions for Arabic named entity recognition with long short-term memory networks. Comput. Speech Lang. 2019, 58, 335–346.

- [39] Santoso, J.; Setiawan, E.I.; Purwanto, C.N.; Yuniarno, E.M.; Hariadi, M.; Purnomo, M.H. Named entity recognition for extracting concept in ontology building on Indonesian language using end-to-end bidirectional long short term memory. Expert Syst. Appl. 2021, 176, 114856.

- [40] Lyu, C.; Chen, B.; Ren, Y.; Ji, D. Long short-term memory RNN for biomedical named entity recognition. BMC Bioinform. 2017, 18, 1–11.

- [41] Cheng, Q.; Chen, Y.; Xiao, Y.; Yin, H.; Liu, W. A dual-stage attention-based Bi-LSTM network for multivariate time series prediction. J. Supercomput. 2022, 78, 16214–16235.

- [42] Bukhari, A.H.; Raja, M.A.Z.; Sulaiman, M.; Islam, S.; Shoaib, M.; Kumam, P. Fractional neuro-sequential ARFIMA-LSTM for financial market forecasting. IEEE Access 2020, 8, 71326–71338.

- [43] Cao, J.; Li, Z.; Li, J. Financial time series forecasting model based on CEEMDAN and LSTM. Phys. A Stat. Mech. Its Appl. 2019, 519, 127–139.

- [44] Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by LSTM. Energy 2018, 148, 461–468.

- [45] Tukymbekov, D.; Saymbetov, A.; Nurgaliyev, M.; Kuttybay, N.; Dosymbetova, G.; Svanbayev, Y. Intelligent autonomous street lighting system based on weather forecast using LSTM. Energy 2021, 231, 120902.

- [46] Hossain, M.S.; Mahmood, H. Short-term photovoltaic power forecasting using an LSTM neural network and synthetic weather forecast. IEEE Access 2020, 8, 172524–172533.

- [47] Nguyen, H.D.; Tran, K.P.; Thomassey, S.; Hamad, M. Forecasting and Anomaly Detection approaches using LSTM and LSTM Autoencoder techniques with the applications in supply chain management. Int. J. Inf. Manag. 2021, 57, 102282.

- [48] Ergen, T.; Kozat, S.S. Unsupervised anomaly detection with LSTM neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3127–3141.

- [49] Wubet, Y.A.; Lian, K.Y. Voice conversion based augmentation and a hybrid CNN-LSTM model for improving speaker-independent keyword recognition on limited datasets. IEEE Access 2022, 10, 89170–89180.

- [50] Orosoo, M.; Raash, N.; Treve, M.; Lahza, H.F.M.; Alshammry, N.; Ramesh, J.V.N.; Rengarajan, M. Transforming English language learning: Advanced speech recognition with MLP-LSTM for personalized education. Alex. Eng. J. 2025, 111, 21–32.

- [51] Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323.

- [52] Du, X.; Ma, C.; Zhang, G.; Li, J.; Lai, Y.K.; Zhao, G.; Deng, X.; Liu, Y.J.; Wang, H. An efficient LSTM network for emotion recognition from multichannel EEG signals. IEEE Trans. Affect. Comput. 2020, 13, 1528–1540.

- [53] Guo, A.; Beheshti, R.; Khan, Y.M.; Langabeer, J.R.; Foraker, R.E. Predicting cardiovascular health trajectories in time-series electronic health records with LSTM models. BMC Med. Inform. Decis. Mak. 2021, 21, 5.

- [54] Yin, J.; Han, J.; Xie, R.; Wang, C.; Duan, X.; Rong, Y.; Zeng, X.; Tao, J. MC-LSTM: Real-time 3D human action detection system for intelligent healthcare applications. IEEE Trans. Biomed. Circuits Syst. 2021, 15, 259–269.

- [55] Srivastava, S.; Sharma, S.; Tanwar, P.; Dubey, G.; Memoria, M. Improving Health Care Analytics: LSTM Networks for Enhanced Risk Assessment. Procedia Comput. Sci. 2025, 259, 11–22.

- [56] Rashid, T.A.; Hassan, M.K.; Mohammadi, M.; Fraser, K. Improvement of variant adaptable LSTM trained with metaheuristic algorithms for healthcare analysis. In Research Anthology on Artificial Intelligence Applications in Security; IGI Global: Hershey, PA, USA, 2021; pp. 1031–1051.

- [57] Aslan, S.N.; Özalp, R.; Uçar, A.; Güzeliş, C. New CNN and hybrid CNN-LSTM models for learning object manipulation of humanoid robots from demonstration. Clust. Comput. 2022, 25, 1575–1590.

- [58] Molina-Leal, A.; Gómez-Espinosa, A.; Escobedo Cabello, J.A.; Cuan-Urquizo, E.; Cruz-Ramírez, S.R. Trajectory planning for a mobile robot in a dynamic environment using an LSTM neural network. Appl. Sci. 2021, 11, 10689.

- [59] Park, D.; Hoshi, Y.; Kemp, C.C. A multimodal anomaly detector for robot-assisted feeding using an lstm-based variational autoencoder. IEEE Robot. Autom. Lett. 2018, 3, 1544–1551.

- [60] Bin, Y.; Yang, Y.; Shen, F.; Xie, N.; Shen, H.T.; Li, X. Describing video with attention-based bidirectional LSTM. IEEE Trans. Cybern. 2018, 49, 2631–2641.

- [61] Ge, H.; Yan, Z.; Yu, W.; Sun, L. An attention mechanism based convolutional LSTM network for video action recognition. Multimed. Tools Appl. 2019, 78, 20533–20556.

- [62] Hussain, T.; Muhammad, K.; Ullah, A.; Cao, Z.; Baik, S.W.; De Albuquerque, V.H.C. Cloud-assisted multiview video summarization using CNN and bidirectional LSTM. IEEE Trans. Ind. Inform. 2019, 16, 77–86.

- [63] Ullah, A.; Ahmad, J.; Muhammad, K.; Sajjad, M.; Baik, S.W. Action recognition in video sequences using deep bi-directional LSTM with CNN features. IEEE Access 2017, 6, 1155–1166.

- [64] Imrana, Y.; Xiang, Y.; Ali, L.; Abdul-Rauf, Z. A bidirectional LSTM deep learning approach for intrusion detection. Expert Syst. Appl. 2021, 185, 115524.

- [65] Abduljabbar, R.L.; Dia, H.; Tsai, P.W. Unidirectional and bidirectional LSTM models for short-term traffic prediction. J. Adv. Transp. 2021, 2021, 5589075.

- [66] Huang, C.G.; Huang, H.Z.; Li, Y.F. A bidirectional LSTM prognostics method under multiple operational conditions. IEEE Trans. Ind. Electron. 2019, 66, 8792–8802.

- [67] Mahadevaswamy, U.; Swathi, P. Sentiment analysis using bidirectional LSTM network. Procedia Comput. Sci. 2023, 218, 45–56.

- [68] Ma, M.; Liu, C.; Wei, R.; Liang, B.; Dai, J. Predicting machine’s performance record using the stacked long short-term memory (LSTM) neural networks. J. Appl. Clin. Med. Phys. 2022, 23, e13558.

- [69] Ghimire, S.; Deo, R.C.; Wang, H.; Al-Musaylh, M.S.; Casillas-Pérez, D.; Salcedo-Sanz, S. Stacked LSTM sequence-to-sequence autoencoder with feature selection for daily solar radiation prediction: A review and new modeling results. Energies 2022, 15, 1061.

- [70] Wang, J.; Peng, B.; Zhang, X. Using a stacked residual LSTM model for sentiment intensity prediction. Neurocomputing 2018, 322, 93–101.

- [71] Yu, L.; Qu, J.; Gao, F.; Tian, Y. A novel hierarchical algorithm for bearing fault diagnosis based on stacked LSTM. Shock Vib. 2019, 2019, 2756284.

- [72] Sang, S.; Li, L. A novel variant of LSTM stock prediction method incorporating attention mechanism. Mathematics 2024, 12, 945.

- [73] Lin, J.; Ma, J.; Zhu, J.; Cui, Y. Short-term load forecasting based on LSTM networks considering attention mechanism. Int. J. Electr. Power Energy Syst. 2022, 137, 107818.

- Xiang, L.; Wang, P.; Yang, X.; Hu, A.; Su, H. Fault detection of wind turbine based on SCADA data analysis using CNN and LSTM with attention mechanism. Measurement 2021, 175, 109094. [Google Scholar] [CrossRef]

- Yang, Y.; Xiong, Q.; Wu, C.; Zou, Q.; Yu, Y.; Yi, H.; Gao, M. A study on water quality prediction by a hybrid CNN-LSTM model with attention mechanism. Environ. Sci. Pollut. Res. 2021, 28, 55129–55139. [Google Scholar] [CrossRef] [PubMed]

- Ran, X.; Shan, Z.; Fang, Y.; Lin, C. An LSTM-based method with attention mechanism for travel time prediction. Sensors 2019, 19, 861. [Google Scholar] [CrossRef] [PubMed]

- Liu, G.; Guo, J. Bidirectional LSTM with attention mechanism and convolutional layer for text classification. Neurocomputing 2019, 337, 325–338. [Google Scholar] [CrossRef]

- Wei, L.; Chen, S.; Lin, J.; Shi, L. Enhancing return forecasting using LSTM with agent-based synthetic data. Decis. Support Syst. 2025, 193, 114452. [Google Scholar] [CrossRef]

- Azkue, M.; Miguel, E.; Martinez-Laserna, E.; Oca, L.; Iraola, U. Creating a Robust SoC Estimation Algorithm Based on LSTM Units and Trained with Synthetic Data. World Electr. Veh. J. 2023, 14, 197. [Google Scholar] [CrossRef]

- Brophy, E.; Wang, Z.; She, Q.; Ward, T. Generative Adversarial Networks in Time Series: A Systematic Literature Review. ACM Comput. Surv. 2023, 55, 1–31. [Google Scholar] [CrossRef]

- Takahashi, S.; Chen, Y.; Tanaka-Ishii, K. Modeling financial time-series with generative adversarial networks. Phys. A Stat. Mech. Its Appl. 2019, 527, 121261. [Google Scholar] [CrossRef]

- Chatterjee, S.; Hazra, D.; Byun, Y.C. GAN-based synthetic time-series data generation for improving prediction of demand for electric vehicles. Expert Syst. Appl. 2025, 264, 125838. [Google Scholar] [CrossRef]

- Deng, F.; Chen, Z.; Liu, Y.; Yang, S.; Hao, R.; Lyu, L. A Novel Combination Neural Network Based on ConvLSTM-Transformer for Bearing Remaining Useful Life Prediction. Machines 2022, 10, 1226. [Google Scholar] [CrossRef]

- Bounoua, I.; Saidi, Y.; Yaagoubi, R.; Bouziani, M. Deep Learning Approaches for Water Stress Forecasting in Arboriculture Using Time Series of Remote Sensing Images: Comparative Study between ConvLSTM and CNN-LSTM Models. Technologies 2024, 12, 77. [Google Scholar] [CrossRef]

- da Silva, D.G.; Geller, M.T.B.; dos Santos Moura, M.S.; de Moura Meneses, A.A. Performance evaluation of LSTM neural networks for consumption prediction. e-Prime Adv. Electr. Eng. Electron. Energy 2022, 2, 100030. [Google Scholar] [CrossRef]

- Haque, S.T.; Debnath, M.; Yasmin, A.; Mahmud, T.; Ngu, A.H.H. Experimental Study of Long Short-Term Memory and Transformer Models for Fall Detection on Smartwatches. Sensors 2024, 24, 6235. [Google Scholar] [CrossRef] [PubMed]

- Trujillo-Guerrero, M.F.; Román-Niemes, S.; Jaén-Vargas, M.; Cadiz, A.; Fonseca, R.; Serrano-Olmedo, J.J. Accuracy Comparison of CNN, LSTM, and Transformer for Activity Recognition Using IMU and Visual Markers. IEEE Access 2023, 11, 106650–106669. [Google Scholar] [CrossRef]

- Curreri, F.; Patanè, L.; Xibilia, M.G. RNN- and LSTM-Based Soft Sensors Transferability for an Industrial Process. Sensors 2021, 21, 823. [Google Scholar] [CrossRef]

- Yunita, A.; Pratama, M.I.; Almuzakki, M.Z.; Ramadhan, H.; Akhir, E.A.P.; Firdausiah Mansur, A.B.; Basori, A.H. Performance analysis of neural network architectures for time series forecasting: A comparative study of RNN, LSTM, GRU, and hybrid models. MethodsX 2025, 15, 103462. [Google Scholar] [CrossRef]

- Crisóstomo de Castro Filho, H.; Abílio de Carvalho Júnior, O.; Ferreira de Carvalho, O.L.; Pozzobon de Bem, P.; dos Santos de Moura, R.; Olino de Albuquerque, A.; Rosa Silva, C.; Guimarães Ferreira, P.H.; Fontes Guimarães, R.; Trancoso Gomes, R.A. Rice Crop Detection Using LSTM, Bi-LSTM, and Machine Learning Models from Sentinel-1 Time Series. Remote Sens. 2020, 12, 2655. [Google Scholar] [CrossRef]

- Jiang, J.; Wan, X.; Zhu, F.; Xiang, D.; Hu, Z.; Mu, S. A deep learning framework integrating Transformer and LSTM architectures for pipeline corrosion rate forecasting. Comput. Chem. Eng. 2025, 204, 109365. [Google Scholar] [CrossRef]

- Gao, S.; Chen, M.; Yang, W.; Zhu, H. TFF-TL: Transformer based on temporal feature fusion and LSTM for dynamic soft sensor modeling of industrial processes. J. Taiwan Inst. Chem. Eng. 2025, 176, 106328. [Google Scholar] [CrossRef]

- Pantopoulou, S.; Cilliers, A.; Tsoukalas, L.H.; Heifetz, A. Transformers and Long Short-Term Memory Transfer Learning for GenIV Reactor Temperature Time Series Forecasting. Energies 2025, 18, 2286. [Google Scholar] [CrossRef]

- Casola, S.; Lauriola, I.; Lavelli, A. Pre-trained transformers: An empirical comparison. Mach. Learn. Appl. 2022, 9, 100334. [Google Scholar] [CrossRef]

- Papa, L.; Russo, P.; Amerini, I.; Zhou, L. A Survey on Efficient Vision Transformers: Algorithms, Techniques, and Performance Benchmarking. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 7682–7700. [Google Scholar] [CrossRef] [PubMed]

- Wang, R.; Ma, L.; He, G.; Johnson, B.A.; Yan, Z.; Chang, M.; Liang, Y. Transformers for Remote Sensing: A Systematic Review and Analysis. Sensors 2024, 24, 3495. [Google Scholar] [CrossRef] [PubMed]

- Zhang, N.; Song, Y.; Fang, D.; Gao, Z.; Yan, Y. An Improved Deep Convolutional LSTM for Human Activity Recognition Using Wearable Sensors. IEEE Sens. J. 2024, 24, 1717–1729. [Google Scholar] [CrossRef]

- Khatun, M.A.; Yousuf, M.A.; Ahmed, S.; Uddin, M.Z.; Alyami, S.A.; Al-Ashhab, S.; Akhdar, H.F.; Khan, A.; Azad, A.; Moni, M.A. Deep CNN-LSTM With Self-Attention Model for Human Activity Recognition Using Wearable Sensor. IEEE J. Transl. Eng. Health Med. 2022, 10, 1–16. [Google Scholar] [CrossRef]

- Zhao, Y.; Yang, R.; Chevalier, G.; Xu, X.; Zhang, Z. Deep Residual Bidir-LSTM for Human Activity Recognition Using Wearable Sensors. Math. Probl. Eng. 2018, 2018, 7316954. [Google Scholar] [CrossRef]

- Wu, Z.; Hu, P.; Liu, S.; Pang, T. Attention Mechanism and LSTM Network for Fingerprint-Based Indoor Location System. Sensors 2024, 24, 1398. [Google Scholar] [CrossRef]

- Cao, M.; Yao, R.; Xia, J.; Jia, K.; Wang, H. LSTM Attention Neural-Network-Based Signal Detection for Hybrid Modulated Faster-Than-Nyquist Optical Wireless Communications. Sensors 2022, 22, 8992. [Google Scholar] [CrossRef]

- Chen, H.; Yang, J.; Fu, X.; Zheng, Q.; Song, X.; Fu, Z.; Wang, J.; Liang, Y.; Yin, H.; Liu, Z.; et al. Water Quality Prediction Based on LSTM and Attention Mechanism: A Case Study of the Burnett River, Australia. Sustainability 2022, 14, 13231. [Google Scholar] [CrossRef]

- Xie, T.; Ding, W.; Zhang, J.; Wan, X.; Wang, J. Bi-LS-AttM: A Bidirectional LSTM and Attention Mechanism Model for Improving Image Captioning. Appl. Sci. 2023, 13, 7916. [Google Scholar] [CrossRef]

- Hassan, N.; Miah, A.; Shin, J. A Deep Bidirectional LSTM Model Enhanced by Transfer-Learning-Based Feature Extraction for Dynamic Human Activity Recognition. Appl. Sci. 2024, 14, 603. [Google Scholar] [CrossRef]

- Lin, Y.; Zhang, Z.; Tan, Y.; Fu, H.; Min, H. Efficient TD3-based path planning of mobile robot in dynamic environments using prioritized experience replay and LSTM. Sci. Rep. 2025, 15, 18331. [Google Scholar] [CrossRef]

- S.K.B., S.; Mathivanan, S.K.; Rajadurai, H.; Cho, J.; Easwaramoorthy, S.V. A multi-modal geospatial–temporal LSTM based deep learning framework for predictive modeling of urban mobility patterns. Sci. Rep. 2024, 14, 31579. [Google Scholar] [CrossRef]

- Zhou, H.; Zhao, Y.; Liu, Y.; Lu, S.; An, X.; Liu, Q. Multi-Sensor Data Fusion and CNN-LSTM Model for Human Activity Recognition System. Sensors 2023, 23, 4750. [Google Scholar] [CrossRef]

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A Survey of Methods for Explaining Black Box Models. ACM Comput. Surv. 2018, 51, 1–42. [Google Scholar] [CrossRef]

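| Feature | Vanilla RNNs | LSTMs |
|---|---|---|
| Memory Retention | Short-term | Long-term |
| Vanishing Gradient Problem | Severe | Mitigated |
| Complexity | Simpler | More complex (four times the parameters of an RNN with the same input and hidden sizes) |
| Gates | None | Three (Input, Forget, Output) |
| Learning Capability | Limited for long-range dependencies | Enhanced for long-range dependencies |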
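
The Gates and Memory Retention rows of the table above can be made concrete with a small NumPy sketch. It is an illustration under stated assumptions, not code from the surveyed works: `lstm_step` follows the standard LSTM update with separate forget, input and output gates, and `rnn_step` is a plain tanh RNN. The sizes, the random weights and the hand-set gate biases (forget bias +10, input bias -10, chosen to imitate a cell that has learned to hold its contents) are all illustrative assumptions. Each model is run twice over the same inputs from different initial states and the remaining difference is measured.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def rnn_step(x, h_prev, W, b):
    """Vanilla RNN: one tanh update of the hidden state, no gates."""
    return np.tanh(W @ np.concatenate([h_prev, x]) + b)

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step with forget, input and output gates."""
    hx = np.concatenate([h_prev, x])
    f = sigmoid(p["W_f"] @ hx + p["b_f"])        # forget gate: fraction of c_prev to keep
    i = sigmoid(p["W_i"] @ hx + p["b_i"])        # input gate: fraction of new content to write
    c_tilde = np.tanh(p["W_c"] @ hx + p["b_c"])  # candidate cell content
    o = sigmoid(p["W_o"] @ hx + p["b_o"])        # output gate: fraction of the cell to expose
    c = f * c_prev + i * c_tilde                 # additive cell-state update
    h = o * np.tanh(c)                           # hidden state: filtered view of the cell state
    return h, c

rng = np.random.default_rng(0)
d, n, T = 2, 4, 100  # input size, hidden size, sequence length (illustrative choices)
xs = rng.normal(size=(T, d))

# Vanilla RNN with small random weights, run from two different initial states.
W_rnn, b_rnn = rng.normal(0, 0.1, size=(n, n + d)), np.zeros(n)
h_a, h_b = np.ones(n), -np.ones(n)
for x in xs:
    h_a, h_b = rnn_step(x, h_a, W_rnn, b_rnn), rnn_step(x, h_b, W_rnn, b_rnn)
rnn_gap = np.abs(h_a - h_b).max()

# LSTM with hand-set biases (forget gate near 1, input gate near 0), two initial cell states.
p = {k: rng.normal(0, 0.1, size=(n, n + d)) for k in ("W_f", "W_i", "W_c", "W_o")}
p.update(b_f=np.full(n, 10.0), b_i=np.full(n, -10.0), b_c=np.zeros(n), b_o=np.zeros(n))
h1, c1 = np.zeros(n), np.ones(n)
h2, c2 = np.zeros(n), np.zeros(n)
for x in xs:
    h1, c1 = lstm_step(x, h1, c1, p)
    h2, c2 = lstm_step(x, h2, c2, p)
lstm_gap = np.abs(c1 - c2).min()

print(f"RNN, largest remaining difference: {rnn_gap:.1e}")
print(f"LSTM, smallest remaining cell-state difference: {lstm_gap:.3f}")
```

With small random weights the RNN's dependence on its starting state decays geometrically and is effectively gone after 100 steps, while the gated cell state still carries most of its initial value. This is a forward-pass illustration rather than a gradient computation, but it reflects the same effect as the Vanishing Gradient row: an RNN's sensitivity to early states is a product of per-step Jacobians, whereas along the LSTM cell-state path it is a product of forget-gate values that can stay close to 1.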

| Application Domain | Key Tasks / Use-Cases | Benefits |
|---|---|---|
| Natural Language Processing | Machine Translation, Sentiment Analysis, Text Generation, Named Entity Recognition | Captures long-term dependencies in text and improves contextual understanding and generation quality |
| Time Series Analysis | Financial Forecasting, Weather Forecasting, Anomaly Detection | Learns temporal patterns for better forecasting and detects unusual behavior in sequential data |
| Speech Processing | Voice Assistants, Speech-to-Text Transcription, Speech Emotion Recognition | Maintains context across spoken input and improves sequence-to-sequence prediction accuracy |
| Healthcare | Patient Monitoring, Outcome Prediction | Enables real-time prediction from evolving physiological signals and supports early intervention |
| Robotics | Path Planning, Navigation, Decision-Making | Predicts future states from sensor data and improves autonomous decision-making |
| Video Analysis | Action Recognition, Event Prediction | Models temporal dynamics in visual sequences to recognize complex activities |

| Variant | Key Features | Applications | Benefits |
|---|---|---|---|
| Bidirectional LSTMs | Processes sequences in both directions | Named Entity Recognition, Machine Translation | Improved context understanding, better disambiguation |
| Stacked LSTMs | Multiple LSTM layers for deeper learning | Speech Recognition, Video Analysis | Enhanced feature extraction, ability to model complex patterns |
| Attention Mechanisms | Focuses on specific input parts for each output | Machine Translation, Text Summarization | Better handling of long sequences, dynamic context focus |
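
The three variants in the table compose naturally. The NumPy sketch below, with hypothetical helper names and random, untrained weights, builds a two-layer stacked bidirectional LSTM and pools its outputs with a simple attention layer that scores each time step with a single vector `v` and takes a softmax-weighted sum. This attention-pooling form is one of several attention designs and is an assumption here; the encoder-decoder attention used in machine translation computes a separate weighting for every output step. The four gate blocks are packed into one matrix in the order i, f, o, g, which is an arbitrary choice (frameworks pick their own layout).

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_lstm(input_size, hidden_size, rng):
    """Random weights for one LSTM direction; rows hold the i, f, o, g blocks."""
    W = rng.normal(0, 0.1, size=(4 * hidden_size, input_size + hidden_size))
    return W, np.zeros(4 * hidden_size)

def run_lstm(xs, params):
    """Unidirectional LSTM over xs (T x input_size); returns every hidden state (T x hidden)."""
    W, b = params
    hidden = W.shape[0] // 4
    h, c = np.zeros(hidden), np.zeros(hidden)
    outputs = []
    for x in xs:
        i, f, o, g = np.split(W @ np.concatenate([x, h]) + b, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)

def bidirectional(xs, fwd, bwd):
    """A second LSTM reads the sequence reversed; its outputs are re-aligned and concatenated."""
    h_fwd = run_lstm(xs, fwd)
    h_bwd = run_lstm(xs[::-1], bwd)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

def attention_pool(hs, v):
    """Score each time step with v, normalize with a softmax, return the weighted sum."""
    scores = hs @ v
    weights = np.exp(scores - scores.max())  # subtract the max for numerical stability
    weights /= weights.sum()
    return weights @ hs, weights

rng = np.random.default_rng(0)
T, d, n = 12, 3, 8  # sequence length, input size, hidden size per direction (illustrative)
xs = rng.normal(size=(T, d))
layer1 = (init_lstm(d, n, rng), init_lstm(d, n, rng))
layer2 = (init_lstm(2 * n, n, rng), init_lstm(2 * n, n, rng))  # stacked: reads layer-1 outputs
v = rng.normal(size=2 * n)

def encode(seq):
    h1 = bidirectional(seq, *layer1)
    h2 = bidirectional(h1, *layer2)
    context, weights = attention_pool(h2, v)
    return h2, context, weights

states, context, weights = encode(xs)
print(states.shape, context.shape)  # (12, 16) (16,)

# Perturb only the last input: the forward half at t=0 cannot see it, the backward half can.
xs_late = xs.copy()
xs_late[-1] += 1.0
base, late = bidirectional(xs, *layer1), bidirectional(xs_late, *layer1)
print(np.abs(base[0, :n] - late[0, :n]).max(), np.abs(base[0, n:] - late[0, n:]).max() > 0)  # 0.0 True
```

Because the backward direction reads the reversed sequence, each time step's output depends on both earlier and later inputs, which is the disambiguation benefit listed for bidirectional LSTMs. The second layer consumes the 2n-dimensional concatenated outputs of the first, which is all that stacking means here; the attention weights then decide how much each time step contributes to the final summary vector.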

| Challenge | Description | Implications |
|---|---|---|
| Computational Complexity | High resource consumption and scalability issues | Slower training and inference, limited applicability in real-time systems |
| Data Requirements | Need for large, high-quality datasets | Risk of overfitting, poor generalization, and limited domain applicability |
| Training Difficulties | Long training times and hyperparameter tuning challenges | Increased development time and potential instability in learning |
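
The Computational Complexity row can be quantified by simple counting. The sketch below (hypothetical function names) counts the trainable parameters of vanilla RNN and LSTM layers and the matrix-vector multiply-accumulates (MACs) needed to run an LSTM over a sequence. It assumes the standard four-block LSTM (input, forget and output gates plus the candidate cell). Setting `bias_vectors=2` matches PyTorch's `nn.LSTM`, which keeps separate `b_ih` and `b_hh` biases, while Keras's `LSTM` layer uses a single bias. Elementwise operations are ignored, so the MAC count is a lower bound on the work.

```python
def rnn_layer_params(input_size, hidden, bias_vectors=1):
    """Vanilla RNN layer: one block of input weights, recurrent weights and bias."""
    return hidden * (input_size + hidden) + bias_vectors * hidden

def lstm_layer_params(input_size, hidden, bias_vectors=1):
    """LSTM layer: four such blocks (input, forget, output gates and candidate cell)."""
    return 4 * rnn_layer_params(input_size, hidden, bias_vectors)

def stacked_lstm_params(input_size, hidden, num_layers, bidirectional=False, bias_vectors=1):
    """Total parameters of a stacked, optionally bidirectional LSTM."""
    directions = 2 if bidirectional else 1
    total, layer_input = 0, input_size
    for _ in range(num_layers):
        total += directions * lstm_layer_params(layer_input, hidden, bias_vectors)
        layer_input = directions * hidden  # the next layer reads the concatenated directions
    return total

def lstm_macs_per_sequence(seq_len, input_size, hidden, num_layers=1, bidirectional=False):
    """Multiply-accumulates for the gate pre-activations over a whole sequence."""
    directions = 2 if bidirectional else 1
    total, layer_input = 0, input_size
    for _ in range(num_layers):
        # each step: a (4*hidden) x (layer_input + hidden) matrix-vector product per direction
        total += directions * seq_len * 4 * hidden * (layer_input + hidden)
        layer_input = directions * hidden
    return total

print(rnn_layer_params(10, 20), lstm_layer_params(10, 20))                 # 620 2480
print(stacked_lstm_params(10, 20, 2, bidirectional=True, bias_vectors=2))  # 15040
print(lstm_macs_per_sequence(100, 10, 20))                                 # 240000
```

For the same input and hidden sizes, an LSTM layer has exactly four times the parameters of a vanilla RNN layer, and the per-sequence cost grows linearly with sequence length, number of layers and directions. In addition, the recurrent part of each step depends on the previous hidden state, so the time steps must be processed one after another, which accounts for much of the slow training and inference noted in the table.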

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Krichen, M.; Mihoub, A. Long Short-Term Memory Networks: A Comprehensive Survey. AI 2025, 6, 215. https://doi.org/10.3390/ai6090215

AMA Style: Krichen M, Mihoub A. Long Short-Term Memory Networks: A Comprehensive Survey. AI. 2025; 6(9):215. https://doi.org/10.3390/ai6090215

Chicago/Turabian Style: Krichen, Moez, and Alaeddine Mihoub. 2025. "Long Short-Term Memory Networks: A Comprehensive Survey" AI 6, no. 9: 215. https://doi.org/10.3390/ai6090215

APA Style: Krichen, M., & Mihoub, A. (2025). Long Short-Term Memory Networks: A Comprehensive Survey. AI, 6(9), 215. https://doi.org/10.3390/ai6090215