Large Language Models in Cybersecurity: A Survey of Applications, Vulnerabilities, and Defense Techniques

Abstract

1. Introduction

1.1. Research Questions

1.2. Contributions

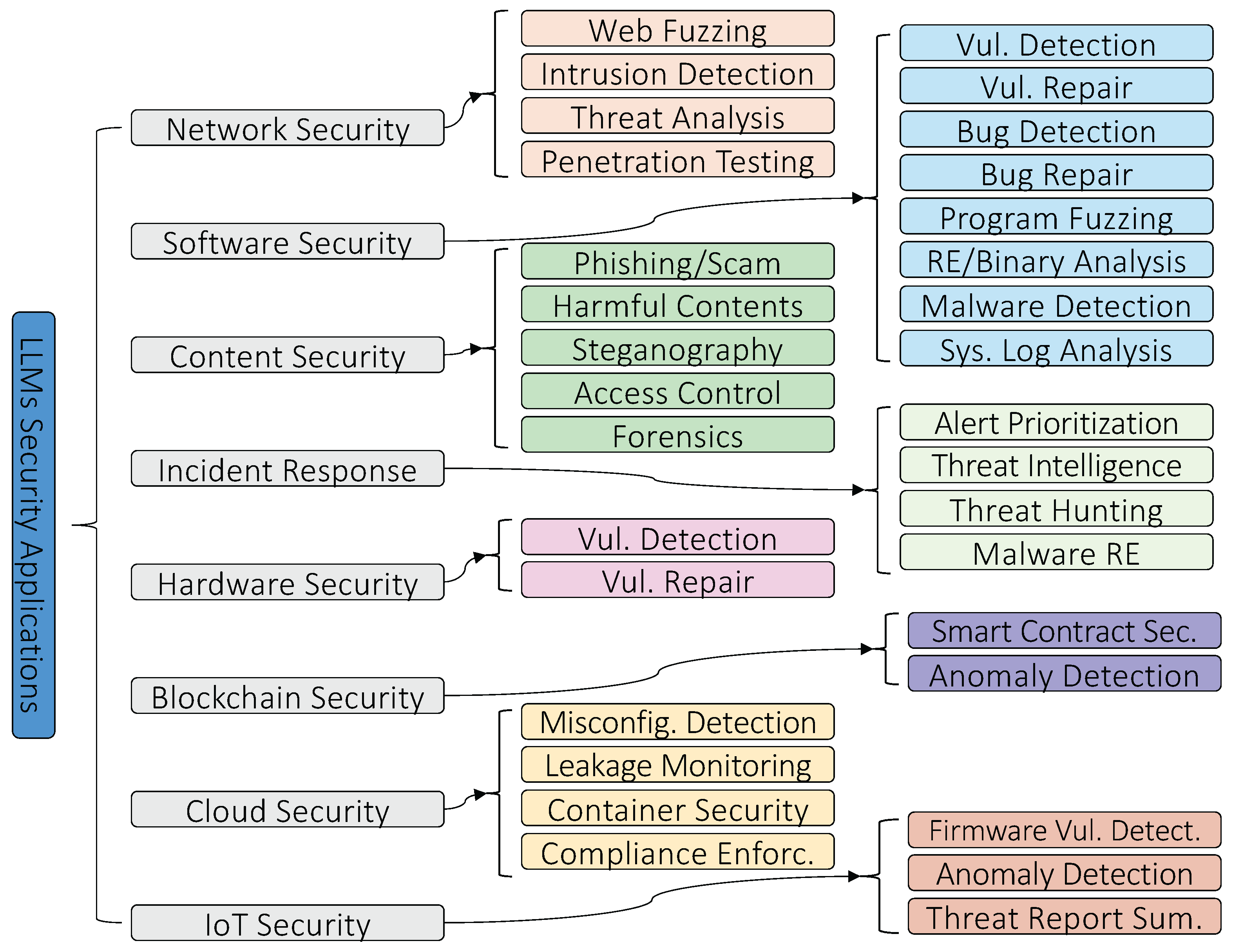

- Applications: We enumerate and evaluate the diverse applications of LLMs in cybersecurity, including hardware design security, intrusion detection, malware detection, and phishing prevention, while analyzing their capabilities in these different contexts to address how LLMs are used.

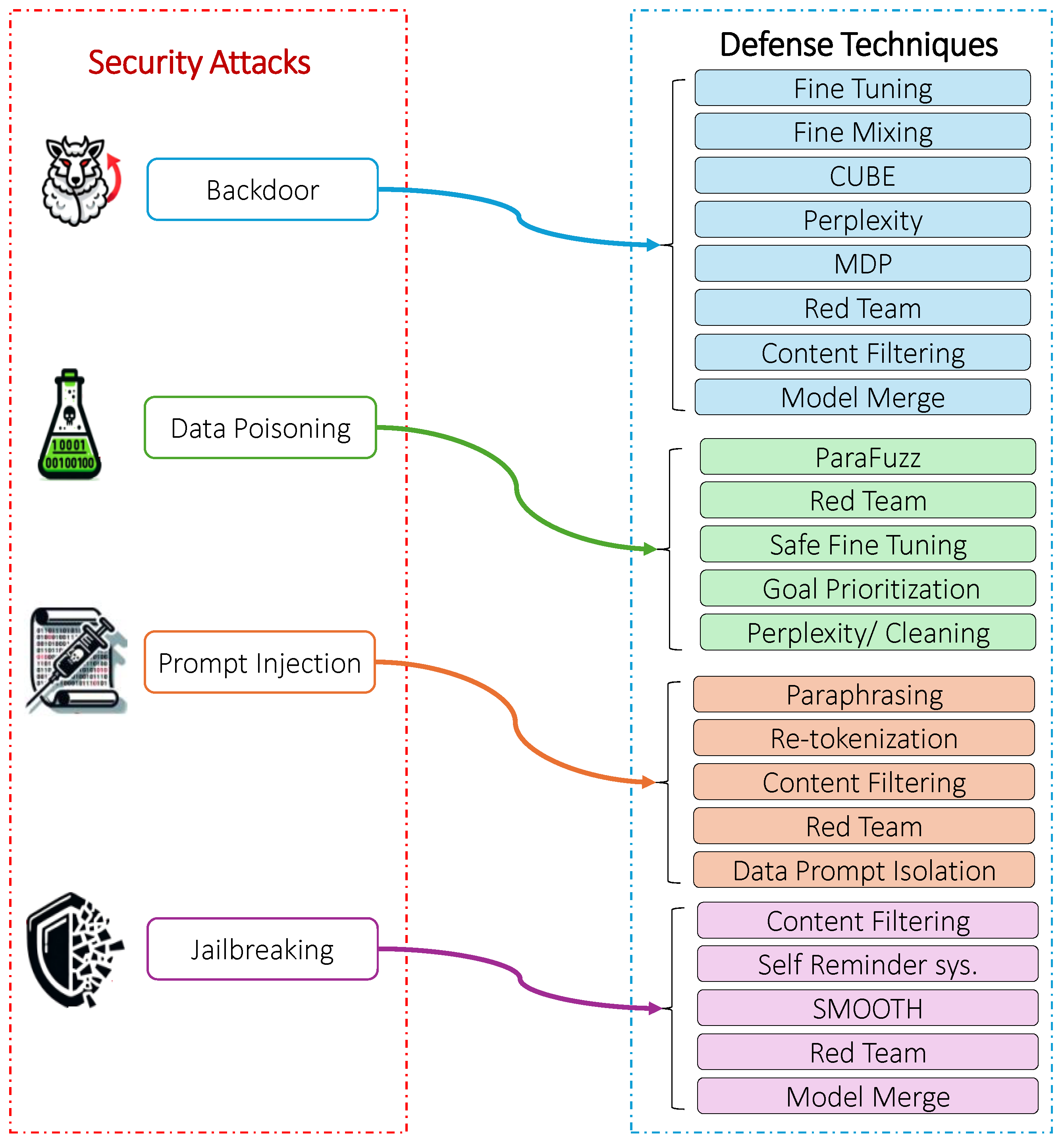

- Vulnerabilities and Mitigation: We systematically enumerate and examine the vulnerabilities of LLMs and their implications for security applications. The attack surface we cover includes prompt injection, jailbreaking attacks, data poisoning, and backdoor attacks. We also enumerate and assess the defense techniques that are in place, or could be further deployed, to reduce these risks and improve LLM security in critical applications.

- Potential Challenges: We identify the challenges that arise when LLMs are applied to specific cybersecurity tasks and that call for further attention and research from the community.

1.3. Organization

2. Methodology

- Identification phase: In this phase, we systematically searched six major electronic databases and scientific repositories, including IEEE Xplore, ACM Digital Library, SpringerLink, ScienceDirect, MDPI, and arXiv. Search queries were carefully designed using keyword combinations explicitly targeting our scope: “Large Language Models,” “LLMs,” “Cybersecurity,” “Applications,” “Vulnerabilities,” and “Defense Techniques.” To reflect recent advancements, our searches were limited to studies published within the five-year period from 2021 to 2025, with particular emphasis on the most recent developments in the field. The process yielded 1021 records from databases, with no records identified from registers.

- Screening phase: Before screening, 412 duplicate records were removed, leaving 609 records for title/abstract screening. Two independent reviewers screened each record, excluding 342 articles for the following reasons: off-topic content unrelated to cybersecurity-focused LLM applications (n = 256), publication types limited to editorials, commentaries, or book chapters (n = 48), and non-peer-reviewed or preprint-only sources lacking formal publication (n = 38). Disagreements between reviewers were resolved through discussion or consultation with a third reviewer.

- Eligibility phase: The remaining 267 reports were sought for full-text retrieval, all of which were accessible. These were assessed against predefined inclusion and exclusion criteria:

- (a) Inclusion criteria: Studies were required to (1) explicitly discuss applications of LLMs in cybersecurity, (2) examine vulnerabilities or attacks targeting LLMs within cybersecurity contexts, (3) evaluate or propose defense techniques to secure LLMs, or (4) demonstrate empirical, analytical, or technical methodological rigor.

- (b) Exclusion criteria: These comprised (1) studies not clearly focused on the intersection of LLMs and cybersecurity, (2) purely theoretical articles without empirical validation or analytical evaluation, (3) articles lacking methodological transparency or reproducibility, and (4) short papers (<6 pages) lacking comprehensive evaluation. Based on these criteria, 44 reports were excluded for the following reasons: no empirical validation (n = 19), theoretical-only without methodological detail (n = 15), and insufficient length (n = 10). This phase was also conducted independently by two reviewers, with all disagreements resolved by consensus or through a third-party adjudicator to ensure consistency and precision.

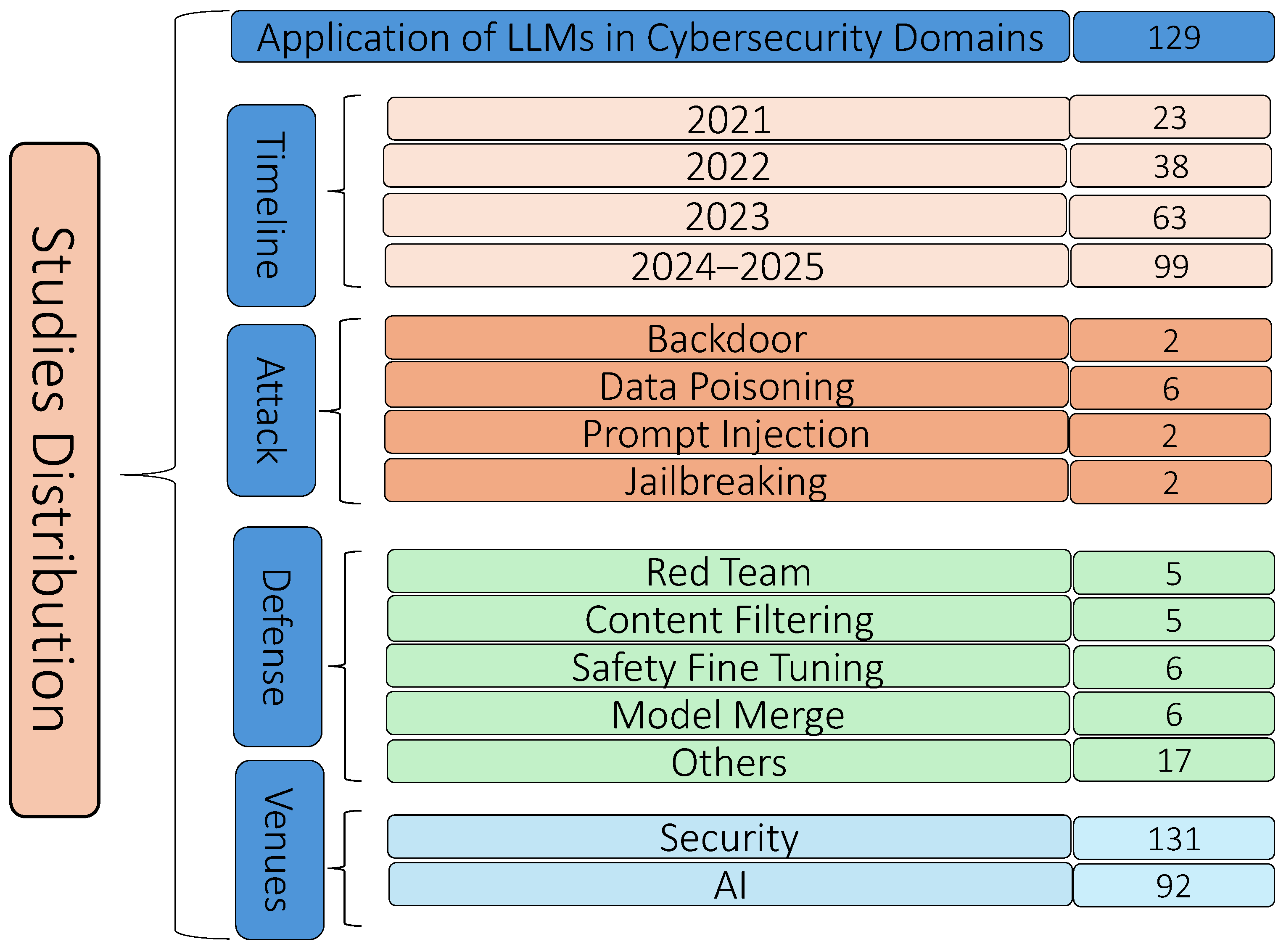

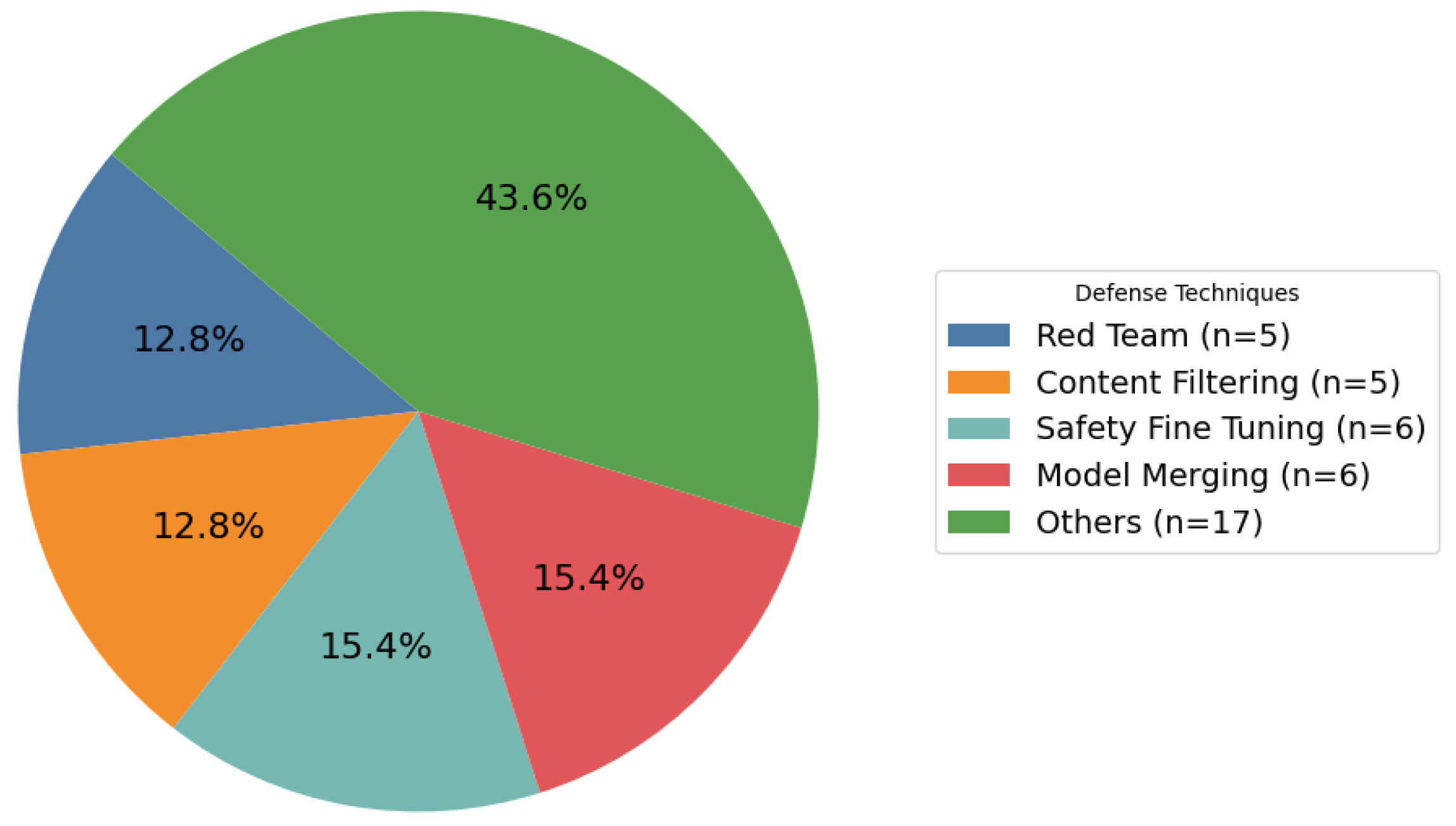

- Inclusion phase: After applying these criteria, a total of 223 studies were selected for comprehensive analysis and inclusion in our final review. Of these, 129 studies focus on the application of LLMs in cybersecurity domains. By timeline, the set comprises 23 studies from 2021, 38 from 2022, 63 from 2023, and 99 from 2024 to 2025. By attack type, it covers backdoor (2 studies), data poisoning (6), prompt injection (2), and jailbreaking (2). By defense technique, it encompasses red teaming (5), content filtering (5), safety fine-tuning (6), model merging (6), and other defenses (17). By publication venue, 131 studies were published in security-focused venues and 92 in artificial intelligence venues. Figure 2 presents the distribution of the 223 studies included in this survey, categorized by publication timeline, attack type, defense techniques, and publication venue. This classification highlights research trends, the variety of attack and defense techniques examined, and their distribution across the security and AI research domains, providing an organized summary of the literature at the intersection of LLMs and cybersecurity.
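
The record counts reported across the four phases can be cross-checked with simple arithmetic. The sketch below is only an illustrative consistency check of the numbers stated above; the function name `prisma_flow` and the dictionary keys are hypothetical labels introduced here, not part of the review protocol.

```python
# Counts as reported in the Methodology section.
IDENTIFIED = 1021
DUPLICATES_REMOVED = 412
SCREENING_EXCLUSIONS = {"off_topic": 256, "editorial_or_chapter": 48, "non_peer_reviewed": 38}
ELIGIBILITY_EXCLUSIONS = {"no_empirical_validation": 19, "theoretical_only": 15, "short_paper": 10}
INCLUDED_BY_PERIOD = {"2021": 23, "2022": 38, "2023": 63, "2024-2025": 99}
INCLUDED_BY_VENUE = {"security": 131, "artificial_intelligence": 92}


def prisma_flow(identified, duplicates, screening, eligibility):
    """Return the number of records remaining after each phase."""
    screened = identified - duplicates                      # title/abstract screening
    full_text = screened - sum(screening.values())          # sought for full-text review
    included = full_text - sum(eligibility.values())        # final included studies
    return {"screened": screened, "full_text": full_text, "included": included}


flow = prisma_flow(IDENTIFIED, DUPLICATES_REMOVED, SCREENING_EXCLUSIONS, ELIGIBILITY_EXCLUSIONS)
print(flow)  # {'screened': 609, 'full_text': 267, 'included': 223}
```

The per-period and per-venue breakdowns each sum to the same 223 included studies, which matches the flow totals.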

3. Related Reviews

| Ref | Year | Study Area | Contribution | D | V | O | Domain ➀ | Domain ➁ | Domain ➂ | Domain ➃ | Domain ➄ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| [14] | 2023 | LLMs in SoC | Surveys LLM potential, challenges, and outlook for SoC security. | ✕ | ✓ | ✓ | ✕ | ✕ | ✓ | ✕ | ✓ |
| [15] | 2024 | LLMs for blockchain | Covers LLM use in auditing, anomaly detection, and vulnerability repair. | ✕ | ✓ | ✓ | ✕ | ✕ | ✕ | ✓ | ✕ |
| [12] | 2024 | LLM applications | Explores use cases, dataset limitations, and mitigation strategies. | ✓ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✓ |
| [13] | 2024 | GenAI in cybersecurity | Reviews GenAI attack applications in cybersecurity. | ✓ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ |
| [16] | 2024 | LLM in cybersecurity | Highlights LLM capabilities for solving key cybersecurity issues. | ✓ | ✓ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ |
| [17] | 2024 | Survey | Surveys 42 models, their roles in cybersecurity, and known flaws. | ✓ | ✓ | ✓ | ✕ | ✓ | ✓ | ✕ | ✓ |
| Ours | 2025 | Apps, vulns., and defenses | Presents LLM use across domains, identifies vulnerabilities, and proposes defenses. | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

4. Security Domains and Tasks Empowered by LLM-Based Innovations

4.1. LLMs in Network Security

4.1.1. Web Fuzzing

| Author | Ref. | Year | Dataset | Task | Key Contributions | Challenges | Optimized Technique |
|---|---|---|---|---|---|---|---|
| Meng et al. | [20] | 2024 | PROFUZZ | Web Fuzzing | Enhanced state transitions and vuln. discovery. | Weak for closed protocols. | GPT-3.5 for grammar and seed tuning. |
| Liu et al. | [21] | 2023 | GramBeddings, Mendeley | Traffic Detection | CharBERT improves URL detection. | High compute, few adversarial tests. | CharBERT + attention + pooling. |
| Moskal et al. | [22] | 2023 | Sim. forensic exp. | Threat Analysis | Refined ACT loop via sandboxed LLM agents. | Weakness with complex net/env. | FSM + chained prompts. |
| Temara et al. | [23] | 2023 | None | Pentesting | Multi-tool consolidation via LLM. | Pre-2021 limit, prompt sensitivity. | Case-driven extraction. |
| Tihanyi et al. | [24] | 2023 | FormAI | Vuln. Detection | Benchmarked FormAI dataset. | Limited scope, costly verification. | Zero-shot + ESBMC. |
| Sun et al. | [25] | 2024 | Solidity, Java CWE | Firmware Vuln. | LLM4Vuln detects misuse + zero-days. | No cross-language support. | Prompt + retrieval + reasoning. |
| Meng et al. | [26] | 2023 | RISC, MIPS | HW Vuln. | NSPG w/HS-BERT for spec extraction. | Doc scarcity, manual labels. | Fine-tuned HS-BERT + MLM. |
| Du et al. | [27] | 2023 | Synthetic SFG | Bug Localization | Graph-based CodeBERT + contrastive loss. | Low scale, limited eval. | HMCBL w/neg. sampling. |
| Joyce et al. | [28] | 2023 | VirusTotal (2006–2023) | Malware Feature Learn. | AVScan2Vec for AV task embedding. | Mix of benign/malware, retrain needs. | Token + label masking w/Siamese fine-tuning. |
| Labonne et al. | [29] | 2023 | Email spam datasets | Phishing Detect. | Spam-T5 outperforms baseline. | Costly training, weak domain generality. | Prefix-tuned Flan-T5. |
| Malul et al. | [30] | 2024 | KCFs | Misconfig. Detection | GenKubeSec w/UMI for high accuracy. | Unseen configs, labeled data, scaling. | Fine-tuned LLM + few-shot. |
| Kasula et al. | [31] | 2024 | NSL-KDD, cloud logs | Data Leakage | Real-time detection in dynamic clouds. | Low generalization. | RF + LSTM. |
| Bandara et al. | [32] | 2024 | PBOMs | Container Sec. | DevSec-GPT tracks vulns via blockchain. | LLM overhead. | Llama2 + JSON schema. |
| Nguyen et al. | [33] | 2024 | Compliance data | Compliance | OllaBench tests LLM reasoning. | Scalability, adaptability. | KG + SEM. |
| Ji et al. | [34] | 2024 | Incident reports | Alert Prioritization | SEvenLLM for multi-task response. | Limited multilinguality. | Prompt-tuned LLM. |
| Gai et al. | [35] | 2023 | Ethereum txns | Anomaly Detect. | BLOCKGPT ranks real-time anomalies. | False positives. | EVM tree + transformer. |
| Ahmad et al. | [36] | 2023 | MITRE, OpenTitan | HW Bug Repair | LLM repair in Verilog, beats CirFix. | Multi-line bug limits. | Prompt tuning + testbench. |
| Tseng et al. | [37] | 2024 | CTI Reports | Threat Intel | GPT-4 extracts IoCs + Regex rules. | Extraction accuracy. | Segmentation + GPT-4. |
| Scanlon et al. | [38] | 2023 | Forensics | Forensic Tasks | GPT-4 used in education + analysis. | Output inconsistency. | Prompt + expert check. |
| Martin et al. | [39] | 2023 | OpenAI corpus | Bias Detect. | ChatGPT shows political bias. | Ethical balance. | GSS-based bias reverse. |

4.1.2. Traffic and Intrusion Detection

4.1.3. Cyber Threat Intelligence (CTI)

4.1.4. Penetration Testing

4.2. LLMs in Software and System Security

4.2.1. Vulnerability Detection

4.2.2. Vulnerability Repair

4.2.3. Bug Detection

4.2.4. Bug Repair

4.2.5. Program Fuzzing

- Identify vulnerabilities: LLMs can analyze previous bug reports to create inputs that uncover similar issues in new or updated systems [81].

- Create variations: They generate various test cases related to sample inputs, ensuring coverage of potential edge cases [82].

- Optimize compilers: By examining compiler code, LLMs craft programs that trigger specific optimizations, revealing compilation process flaws [83].

- Divide testing tasks: A dual-model interaction lets LLMs separate tasks like test case generation and requirements analysis for efficient parallel processing.
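
The "create variations" role above can be pictured as a generator that proposes test inputs for a harness to execute. The sketch below is a minimal illustration under stated assumptions: `propose_variants` is a hypothetical stand-in for an LLM call (here it returns a few fixed mutations so the example runs offline), `parse_pair` is a toy target, and none of this reproduces the tools cited in [81,82,83].

```python
from typing import Callable, List, Tuple


def propose_variants(seed: str) -> List[str]:
    """Hypothetical stand-in for an LLM that returns variations of a seed input.

    A real system would prompt a model; here we return deterministic edge cases.
    """
    return [
        seed,                    # the original sample input
        "",                      # empty input
        seed * 2,                # duplicated content
        seed.replace("=", ""),   # delimiter removed
        seed + "=",              # trailing delimiter
    ]


def parse_pair(text: str) -> Tuple[str, str]:
    """Toy target: parse 'key=value'. Raises ValueError on malformed input."""
    key, value = text.split("=")  # unpacking fails unless there is exactly one '='
    return key, value


def fuzz(target: Callable[[str], object],
         seeds: List[str],
         generator: Callable[[str], List[str]]) -> List[Tuple[str, str]]:
    """Run every generated candidate and record (input, exception name) on failure."""
    failures = []
    for seed in seeds:
        for candidate in generator(seed):
            try:
                target(candidate)
            except Exception as exc:  # a fuzzer records any failure, not one type
                failures.append((candidate, type(exc).__name__))
    return failures


failures = fuzz(parse_pair, ["user=alice"], propose_variants)
for candidate, error in failures:
    print(repr(candidate), error)
```

Keeping the generator behind a plain callable means a model-backed proposer could replace the fixed mutations without changing the harness.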

4.2.6. Reverse Engineering and Binary Analysis

- DexBERT: Proposed by Sun et al. [87], this tool characterizes Android binary bytecode, improving binary analysis tailored to the Android ecosystem.

- SYMC Framework: Developed by Pei et al. [88], this framework uses group theory to preserve the semantic symmetry of the code during analysis. The approach has demonstrated exceptional generalization and robustness in diverse binary analysis tasks.

- Authorship Analysis: Song et al. [89] applied LLMs to address software authorship analysis challenges, enabling effective organization-level verification of Advanced Persistent Threat (APT) malicious software.

4.2.7. Malware Detection

4.2.8. System Log Analysis

4.3. LLMs in Information and Content Security

4.3.1. Phishing and Scam Detection

4.3.2. Harmful Contents Detection

4.3.3. Steganography

4.3.4. Access Control

4.3.5. Forensics

4.4. LLMs in Hardware Security

4.4.1. Hardware Vulnerability Detection

4.4.2. Hardware Vulnerability Repair

4.5. LLMs in Blockchain Security

4.5.1. Smart Contract Security

4.5.2. Transaction Anomaly Detection

4.6. LLMs in Cloud Security

4.6.1. Misconfiguration Detection

4.6.2. Data Leakage Monitoring

4.6.3. Container Security

4.6.4. Compliance Enforcement

4.7. LLMs in Incident Response and Threat Intelligence

4.7.1. Alert Prioritization

4.7.2. Automated Threat Intelligence Analysis

4.7.3. Threat Hunting

4.7.4. Malware Reverse Engineering

4.8. LLMs in IoT Security

4.8.1. Firmware Vulnerability Detection

4.8.2. Behavioral Anomaly Detection

4.8.3. Automated Threat Report Summarization

5. Vulnerabilities and Defense Techniques in LLMs

5.1. Defense Techniques Against Security Attacks on LLMs

- ➀ Red Team Defenses: Red teaming simulates real-world attack scenarios to identify LLM vulnerabilities [146]. The process begins with attack scenario simulation, in which researchers test LLM responses to issues such as abusive language. This is followed by test case generation, which uses classifiers to create scenarios that help eliminate harmful outputs. Finally, attack detection assesses the susceptibility of LLMs to adversarial attacks. Continuous updates to security policies, refinement of procedures, and strengthening of technical defenses help keep LLMs robust and secure against evolving threats. Ganguli et al. [147] proposed an efficient AI-assisted interface to facilitate large-scale Red Team data collection for further analysis. Their research also explored how different LLM types and sizes scale under Red Team attacks and how well they reject various threats.

  Challenges: This technique is resource-intensive and requires skilled experts to simulate complex attack strategies effectively [148]. Red Teaming is still in its early stages, with limited statistical data. However, recent advancements, such as automated approaches for test case generation and classification, have improved scalability and diversity in Red Teaming efforts [147,149].

- ➁ Content Filtering: This technique filters both inputs and outputs to protect the integrity and appropriateness of LLM interactions, identifying and intercepting harmful content in real time. Recent work follows two main approaches: rule-based and learning-based systems. Rule-based systems rely on predefined rules or patterns, such as detecting adversarial prompts with high perplexity values [150]. To neutralize semantically sensitive attacks, Jain et al. [151] use paraphrasing and re-tokenization techniques. Learning-based systems, by contrast, employ methods such as the alignment-checking function designed by Cao et al. [152], which detects and blocks alignment-breaking attacks.

  Challenges: Despite these advancements, content filtering still has significant limitations that hinder its effectiveness and robustness [153]. One key issue is that adversarial prompts keep evolving to bypass detection mechanisms. Rule-based filters, while simple, struggle to remain effective against increasingly sophisticated attacks. Learning-based systems, which rely on large and diverse datasets, may fail to capture the full range of harmful content and struggle to balance sensitivity and specificity [154]. This imbalance can lead to false positives (flagging benign content) or false negatives (missing harmful content). Additionally, the lack of explainability in machine learning-based filtering decisions complicates transparency and acceptance, underscoring the need for more interpretable and trustworthy models.

- ➂ Safety Fine-Tuning: This widely used technique customizes pre-trained LLMs for specific downstream tasks, offering flexibility and adaptability. Recent research, such as that presented by Xiangyu et al. [155], found that fine-tuning with a few adversarially designed training examples can compromise the safety alignment of LLMs. Even benign, commonly used datasets can inadvertently degrade this alignment, highlighting the risks of unregulated fine-tuning. To address these challenges, researchers have proposed data augmentation and constrained optimization objectives [156]. Data augmentation enriches the training dataset with a diverse range of samples, including adversarial and edge cases, helping the model generalize safety principles more effectively. Constrained optimization applies additional loss functions or restrictions during training to guide the model toward prioritizing safety without sacrificing task performance. Bianchi et al. [157] and Zhao et al. [158] validate the effectiveness of this technique, suggesting that incorporating a small number of safety-related examples during fine-tuning improves the safety of LLMs without reducing their practical effectiveness. Together, these defensive techniques enhance safety throughout the generative process, reducing the risk of adversarial attacks and unintentional misalignments while preserving the adaptability and effectiveness of fine-tuned LLMs for real-world tasks.

  Challenges: Although widely used, integrating safety-related examples into fine-tuning can limit the generalization of LLMs to benign, non-malicious inputs or degrade performance on specific tasks [159]. The reliance on manually curated safety examples or prompt templates also demands considerable human expertise and is subject to subjective biases, leading to inconsistencies and affecting model reproducibility across different use cases [160]. Moreover, fine-tuning cuts both ways: it can enhance safety when safety-focused examples are introduced, and it can expose the model to new vulnerabilities when adversarial examples are incorporated.

- ➃ Model Merging: The model merging technique combines multiple models to enhance robustness and improve performance [161]. This method complements other defense strategies by strengthening the resilience of LLMs against adversarial manipulations. By leveraging the diversity of multiple models, it creates a more robust system capable of handling adversarial inputs while maintaining generalization across various tasks [162]. Fine-tuned models that are initialized from the same pre-trained backbone share optimization trajectories while diverging in specific parameters tailored to different tasks. These diverging parameters can be merged through arithmetic averaging, allowing the model to generalize better across domain inputs and perform multi-task learning. This idea has proven effective in fields like Federated Learning (FL) and Continual Learning (CL), where model parameters from different tasks are combined to mitigate conflicts. Zou et al. [163] and Kadhe et al. [164] applied model merging techniques to balance unlearning unsafe responses while minimizing over-defensiveness.

  Challenges: Despite its potential, model merging faces significant challenges due to a lack of deep theoretical investigation in two key areas [165]. First, the relationship between adversarially updated model parameters derived from unlearning objectives and the embeddings associated with safe responses remains unclear. There is a risk that adversarial training with a limited set of harmful response texts could lead to overfitting, making the model more susceptible to new, unseen jailbreaking prompts. Such a narrow training set may fail to generalize to novel adversarial threats, undermining the robustness of LLMs. Second, controlling the over-defensiveness of merged model parameters is difficult. While merging aims to improve resilience, it does not provide a clear methodology for preventing over-defensive behavior, where the model excessively restricts certain types of outputs, limiting its usability and flexibility. This lack of control could hinder the model’s ability to balance safety with task performance, as overly defensive behavior may result in missed or overly cautious responses, impacting user experience and task accuracy [166]. These challenges highlight the need for further research into the theoretical foundations of model merging to ensure its effectiveness and versatility as a defense mechanism.
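
The arithmetic averaging step described under Model Merging can be sketched in a few lines. This is a minimal illustration, assuming that each model is represented as a dictionary mapping parameter names to flat lists of floats and that all models share the same backbone (identical names and shapes). The function `merge_by_averaging` and the parameter names are hypothetical; the sketch shows plain weighted averaging only, not the specific procedures of [163] or [164].

```python
from typing import Dict, List, Optional, Sequence

Params = Dict[str, List[float]]


def merge_by_averaging(models: Sequence[Params],
                       weights: Optional[Sequence[float]] = None) -> Params:
    """Merge models by a weighted arithmetic mean of each parameter."""
    if not models:
        raise ValueError("at least one model is required")
    if weights is None:
        weights = [1.0 / len(models)] * len(models)  # uniform average by default
    if len(weights) != len(models):
        raise ValueError("one weight per model is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")

    names = models[0].keys()
    for model in models:
        if model.keys() != names:
            raise ValueError("models must share the same parameter names")

    merged: Params = {}
    for name in names:
        size = len(models[0][name])
        if any(len(model[name]) != size for model in models):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            sum(w * model[name][i] for w, model in zip(weights, models))
            for i in range(size)
        ]
    return merged


# Two toy "fine-tuned" models that diverge from a shared backbone.
task_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
task_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

merged = merge_by_averaging([task_a, task_b])
print(merged)  # {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Non-uniform weights give one way to trade off the contribution of a safety-oriented model against a task-oriented one, although choosing them in a principled way is exactly the open question raised in the challenges above.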

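The rule-based filter mentioned under Content Filtering flags prompts whose perplexity is unusually high. The sketch below illustrates the idea under loud assumptions: a toy add-one-smoothed unigram model stands in for the language model that a real filter such as [150] would use, and the reference corpus, the helper names, and the value of `THRESHOLD` are all hypothetical. Perplexity is computed as the exponential of the negative mean token log-probability.

```python
import math
from collections import Counter
from typing import Callable, List


def train_unigram(corpus: List[str]) -> Callable[[str], float]:
    """Build a toy unigram model with add-one smoothing; returns a log-prob function."""
    counts = Counter(tok for line in corpus for tok in line.lower().split())
    total = sum(counts.values())
    vocab = len(counts) + 1  # one extra slot for unseen tokens

    def log_prob(token: str) -> float:
        return math.log((counts[token.lower()] + 1) / (total + vocab))

    return log_prob


def perplexity(text: str, log_prob: Callable[[str], float]) -> float:
    """exp(-mean log p(token)); an empty text has nothing to score and returns 1.0."""
    tokens = text.split()
    if not tokens:
        return 1.0
    mean_log_prob = sum(log_prob(tok) for tok in tokens) / len(tokens)
    return math.exp(-mean_log_prob)


def is_suspicious(text: str, log_prob: Callable[[str], float], threshold: float) -> bool:
    """Rule: flag the prompt when its perplexity exceeds the threshold."""
    return perplexity(text, log_prob) > threshold


# Hypothetical reference corpus of benign prompts and an illustrative threshold.
corpus = [
    "please summarize this security report",
    "explain this firewall rule",
    "summarize the incident report please",
]
log_prob = train_unigram(corpus)
THRESHOLD = 15.0

benign = "please summarize this report"
gibberish = "zx!! describing.\\ similarlyNow oppositeley"

print(is_suspicious(benign, log_prob, THRESHOLD))     # False
print(is_suspicious(gibberish, log_prob, THRESHOLD))  # True
```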
5.2. Adversarial Attacks

5.2.1. Data Poisoning

5.2.2. Backdoor Attacks

5.3. Prompt Hacking

5.3.1. Jailbreaking Attacks

5.3.2. Prompt Injection

5.3.3. Advanced Defense Tactics: Model Merging, Adversarial Training, and Unlearning

5.4. Real-Life Case Studies and Benchmarking

6. Limitations of Existing Works and Future Research Directions

Ethical and Privacy Considerations

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABAC | Attribute-Based Access Control |
| ACI-IoT | Army Cyber Institute Internet-of-Things dataset |
| AI | Artificial Intelligence |
| APT | Advanced Persistent Threat |
| BMC | Bounded Model Checking |
| BNN | Binary Neural Network |
| CUBE | Clustering-Based Unsupervised Backdoor Elimination |
| CTI | Cyber Threat Intelligence |
| CVE | Common Vulnerabilities and Exposures |
| CWE | Common Weakness Enumeration |
| DHR | Data Handling Requirements |
| FedRAMP | Federal Risk and Authorization Management Program |
| GAN | Generative Adversarial Network |
| IDS | Intrusion Detection System |
| IoT | Internet of Things |
| KG | Knowledge Graph |
| LLM | Large Language Model |
| MDP | Masking Differential Prompting |
| MIPS | Microprocessor without Interlocked Pipeline Stages |
| NIDS | Network Intrusion Detection System |
| NIST | National Institute of Standards and Technology |
| NLP | Natural Language Processing |
| PBOM | Pipeline Bill of Materials |
| PCA | Principal Component Analysis |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| RAG | Retrieval-Augmented Generation |
| RCE | Remote Code Execution |
| RISC-V | Reduced Instruction Set Computer V (open ISA) |
| RTA | Represent Them All |
| SIEM | Security Information and Event Management |
| SOC | Security Operations Center |
| SoC | System-on-Chip |
| SQLi | SQL Injection |
| SSL | Secure Sockets Layer |
| XAI | Explainable Artificial Intelligence |
| XSS | Cross-Site Scripting |
| ZTA | Zero Trust Architecture |

References

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019.

- OpenAI. GPT-3.5. 2022. Available online: https://platform.openai.com/docs/models/gpt-3-5 (accessed on 11 January 2025).

- OpenAI. GPT-4. 2023. Available online: https://platform.openai.com/docs/models/gpt-4-and-gpt-4-turbo (accessed on 11 January 2025).

- Chen, C.; Su, J.; Chen, J.; Wang, Y.; Bi, T.; Yu, J.; Wang, Y.; Lin, X.; Chen, T.; Zheng, Z. When ChatGPT Meets Smart Contract Vulnerability Detection: How Far Are We? arXiv 2024.

- Bai, Y.; Kadavath, S.; Kundu, S.; Askell, A.; Kernion, J.; Jones, A.; Chen, A.; Goldie, A.; Mirhoseini, A.; McKinnon, C.; et al. Constitutional AI: Harmlessness from AI Feedback. arXiv 2022.

- Houssel, P.R.B.; Singh, P.; Layeghy, S.; Portmann, M. Towards Explainable Network Intrusion Detection using Large Language Models. arXiv 2024.

- Abdallah, A.; Jatowt, A. Generator-Retriever-Generator Approach for Open-Domain Question Answering. arXiv 2024.

- Ou, J.; Lu, J.; Liu, C.; Tang, Y.; Zhang, F.; Zhang, D.; Gai, K. DialogBench: Evaluating LLMs as Human-like Dialogue Systems. arXiv 2024.

- Aguina-Kang, R.; Gumin, M.; Han, D.H.; Morris, S.; Yoo, S.J.; Ganeshan, A.; Jones, R.K.; Wei, Q.A.; Fu, K.; Ritchie, D. Open-Universe Indoor Scene Generation using LLM Program Synthesis and Uncurated Object Databases. arXiv 2024.

- Shayegani, E.; Mamun, M.A.A.; Fu, Y.; Zaree, P.; Dong, Y.; Abu-Ghazaleh, N. Survey of vulnerabilities in large language models revealed by adversarial attacks. arXiv 2023, arXiv:2310.10844.

- Xu, H.; Wang, S.; Li, N.; Wang, K.; Zhao, Y.; Chen, K.; Yu, T.; Liu, Y.; Wang, H. Large Language Models for Cyber Security: A Systematic Literature Review. arXiv 2024.

- Yigit, Y.; Buchanan, W.J.; Tehrani, M.G.; Maglaras, L. Review of Generative AI Methods in Cybersecurity. arXiv 2024.

- Saha, D.; Tarek, S.; Yahyaei, K.; Saha, S.K.; Zhou, J.; Tehranipoor, M.; Farahmandi, F. LLM for SoC Security: A Paradigm Shift. arXiv 2023.

- He, Z.; Li, Z.; Yang, S.; Qiao, A.; Zhang, X.; Luo, X.; Chen, T. Large Language Models for Blockchain Security: A Systematic Literature Review. arXiv 2024.

- Divakaran, D.M.; Peddinti, S.T. LLMs for Cyber Security: New Opportunities. arXiv 2024.

- Ferrag, M.A.; Alwahedi, F.; Battah, A.; Cherif, B.; Mechri, A.; Tihanyi, N. Generative AI and Large Language Models for Cyber Security: All Insights You Need. arXiv 2024.

- Liang, H.; Li, X.; Xiao, D.; Liu, J.; Zhou, Y.; Wang, A.; Li, J. Generative Pre-Trained Transformer-Based Reinforcement Learning for Testing Web Application Firewalls. IEEE Trans. Dependable Secur. Comput. 2024, 21, 309–324.

- Liu, M.; Li, K.; Chen, T.A. DeepSQLi: Deep Semantic Learning for Testing SQL Injection. In Proceedings of the 29th ACM SIGSOFT International Symposium on Software Testing and Analysis (ISSTA’20), Virtual Event, USA, 18–22 July 2020; Association for Computing Machinery: New York, NY, USA; pp. 286–297.

- Meng, R.; Mirchev, M.; Böhme, M.; Roychoudhury, A. Large Language Model–Guided Protocol Fuzzing. In Proceedings of the 31st Annual Network and Distributed System Security Symposium (NDSS 2024), San Diego, CA, USA, 26 February–1 March 2024; The Internet Society: Reston, VA, USA, 2024.

- Liu, R.; Wang, Y.; Xu, H.; Qin, Z.; Liu, Y.; Cao, Z. Malicious URL Detection via Pretrained Language Model Guided Multi-Level Feature Attention Network. arXiv 2023.

- Moskal, S.; Laney, S.; Hemberg, E.; O’Reilly, U. LLMs Killed the Script Kiddie: How Agents Supported by Large Language Models Change the Landscape of Network Threat Testing. arXiv 2023.

- Temara, S. Maximizing Penetration Testing Success with Effective Reconnaissance Techniques using ChatGPT. arXiv 2023.

- Tihanyi, N.; Bisztray, T.; Jain, R.; Ferrag, M.A.; Cordeiro, L.C.; Mavroeidis, V. The FormAI Dataset: Generative AI in Software Security through the Lens of Formal Verification. In Proceedings of the 19th International Conference on Predictive Models and Data Analytics in Software Engineering, PROMISE 2023, San Francisco, CA, USA, 8 December 2023; ACM: New York, NY, USA, 2023; pp. 33–43.

- Sun, Y.; Wu, D.; Xue, Y.; Liu, H.; Wang, H.; Xu, Z.; Xie, X.; Liu, Y. GPTScan: Detecting Logic Vulnerabilities in Smart Contracts by Combining GPT with Program Analysis. In Proceedings of the 46th IEEE/ACM International Conference on Software Engineering, ICSE 2024, Lisbon, Portugal, 14–20 April 2024; ACM: New York, NY, USA, 2024; pp. 166:1–166:13.

- Meng, X.; Srivastava, A.; Arunachalam, A.; Ray, A.; Silva, P.H.; Psiakis, R.; Makris, Y.; Basu, K. Unlocking Hardware Security Assurance: The Potential of LLMs. arXiv 2023.

- Du, Y.; Yu, Z. Pre-training Code Representation with Semantic Flow Graph for Effective Bug Localization. In Proceedings of the 31st ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, ESEC/FSE 2023, San Francisco, CA, USA, 3–9 December 2023; ACM: New York, NY, USA, 2023; pp. 579–591.

- Joyce, R.J.; Patel, T.; Nicholas, C.; Raff, E. AVScan2Vec: Feature Learning on Antivirus Scan Data for Production-Scale Malware Corpora. In Proceedings of the 16th ACM Workshop on Artificial Intelligence and Security, AISec 2023, Copenhagen, Denmark, 30 November 2023; ACM: New York, NY, USA, 2023; pp. 185–196.

- Labonne, M.; Moran, S. Spam-T5: Benchmarking Large Language Models for Few-Shot Email Spam Detection. arXiv 2023.

- Malul, E.; Meidan, Y.; Mimran, D.; Elovici, Y.; Shabtai, A. GenKubeSec: LLM-Based Kubernetes Misconfiguration Detection, Localization, Reasoning, and Remediation. arXiv 2024.

- Kasula, V.K.; Yadulla, A.R.; Konda, B.; Yenugula, M. Fortifying cloud environments against data breaches: A novel AI-driven security framework. World J. Adv. Res. Rev. 2024, 24, 1613–1626.

- Bandara, E.; Shetty, S.; Mukkamala, R.; Rahman, A.; Foytik, P.B.; Liang, X.; De Zoysa, K.; Keong, N.W. DevSec-GPT: Generative-AI (with Custom-Trained Meta’s Llama2 LLM), Blockchain, NFT and PBOM Enabled Cloud Native Container Vulnerability Management and Pipeline Verification Platform. In Proceedings of the 2024 IEEE Cloud Summit, Washington, DC, USA, 27–28 June 2024; pp. 28–35.

- Nguyen, T.N. OllaBench: Evaluating LLMs’ Reasoning for Human-Centric Interdependent Cybersecurity. arXiv 2024.

- Ji, H.; Yang, J.; Chai, L.; Wei, C.; Yang, L.; Duan, Y.; Wang, Y.; Sun, T.; Guo, H.; Li, T.; et al. SEvenLLM: Benchmarking, Eliciting, and Enhancing Abilities of Large Language Models in Cyber Threat Intelligence. arXiv 2024, arXiv:2405.03446.

- Luo, H.; Luo, J.; Vasilakos, A.V. BC4LLM: A perspective of trusted artificial intelligence when blockchain meets large language models. Neurocomputing 2024, 599, 128089.

- Ahmad, B.; Thakur, S.; Tan, B.; Karri, R.; Pearce, H. Fixing Hardware Security Bugs with Large Language Models. arXiv 2023.

- Tseng, P.; Yeh, Z.; Dai, X.; Liu, P. Using LLMs to Automate Threat Intelligence Analysis Workflows in Security Operation Centers. arXiv 2024, arXiv:2407.13093.

- Scanlon, M.; Breitinger, F.; Hargreaves, C.; Hilgert, J.; Sheppard, J. ChatGPT for digital forensic investigation: The good, the bad, and the unknown. Forensic Sci. Int. Digit. Investig. 2023, 46, 301609.

- Martin, J.L. The Ethico-Political Universe of ChatGPT. J. Soc. Comput. 2023, 4, 1–11.

- López Delgado, J.L.; López Ramos, J.A. A Comprehensive Survey on Generative AI Solutions in IoT Security. Electronics 2024, 13, 4965.

- Zhang, H.; Sediq, A.B.; Afana, A.; Erol-Kantarci, M. Large Language Models in Wireless Application Design: In-Context Learning-enhanced Automatic Network Intrusion Detection. arXiv 2024.

- Pazho, A.D.; Noghre, G.A.; Purkayastha, A.A.; Vempati, J.; Martin, O.; Tabkhi, H. A survey of graph-based deep learning for anomaly detection in distributed systems. IEEE Trans. Knowl. Data Eng. 2023, 36, 1–20.

- Chen, P.; Desmet, L.; Huygens, C. A Study on Advanced Persistent Threats. In Proceedings of the IFIP International Conference on Communications and Multimedia Security, Aveiro, Portugal, 25–26 September 2014; pp. 63–72.

- Aghaei, E.; Al-Shaer, E.; Shadid, W.; Niu, X. Automated CVE Analysis for Threat Prioritization and Impact Prediction. arXiv 2023.

- Cuong Nguyen, H.; Tariq, S.; Baruwal Chhetri, M.; Quoc Vo, B. Towards effective identification of attack techniques in cyber threat intelligence reports using large language models. In Proceedings of the Companion Proceedings of the ACM on Web Conference 2025, Sydney, Australia, 28 April–2 May 2025; pp. 942–946.

- Charan, P.V.S.; Chunduri, H.; Anand, P.M.; Shukla, S.K. From Text to MITRE Techniques: Exploring the Malicious Use of Large Language Models for Generating Cyber Attack Payloads. arXiv 2023.

- Grinbaum, A.; Adomaitis, L. Dual use concerns of generative AI and large language models. J. Responsible Innov. 2024, 11, 2304381.

- Ienca, M.; Vayena, E. Dual use in the 21st century: Emerging risks and global governance. Swiss Med. Wkly. 2018, 148, w14688.

- Happe, A.; Kaplan, A.; Cito, J. LLMs as Hackers: Autonomous Linux Privilege Escalation Attacks. arXiv 2024.

- Deng, G.; Liu, Y.; Mayoral-Vilches, V.; Liu, P.; Li, Y.; Xu, Y.; Zhang, T.; Liu, Y.; Pinzger, M.; Rass, S. PentestGPT: An LLM-empowered Automatic Penetration Testing Tool. arXiv 2024.

- Xu, M.; Fan, J.; Huang, X.; Zhou, C.; Kang, J.; Niyato, D.; Mao, S.; Han, Z.; Shen, X.; Lam, K.Y. Forewarned is forearmed: A survey on large language model-based agents in autonomous cyberattacks. arXiv 2025, arXiv:2505.12786.

- Quan, V.L.A.; Phat, C.T.; Nguyen, K.V.; Duy, P.T.; Pham, V.H. XGV-BERT: Leveraging Contextualized Language Model and Graph Neural Network for Efficient Software Vulnerability Detection. arXiv 2023.

- Thapa, C.; Jang, S.I.; Ahmed, M.E.; Camtepe, S.; Pieprzyk, J.; Nepal, S. Transformer-Based Language Models for Software Vulnerability Detection. arXiv 2022.

- Ullah, S.; Han, M.; Pujar, S.; Pearce, H.; Coskun, A.; Stringhini, G. LLMs Cannot Reliably Identify and Reason About Security Vulnerabilities (Yet?): A Comprehensive Evaluation, Framework, and Benchmarks. arXiv 2024.

- Khare, A.; Dutta, S.; Li, Z.; Solko-Breslin, A.; Alur, R.; Naik, M. Understanding the Effectiveness of Large Language Models in Detecting Security Vulnerabilities. arXiv 2024.

- Liu, X.; Tan, Y.; Xiao, Z.; Zhuge, J.; Zhou, R. Not the End of Story: An Evaluation of ChatGPT-Driven Vulnerability Description Mappings. In Findings of the Association for Computational Linguistics: ACL 2023, Toronto, Canada, 9–14 July 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 3724–3731. Available online: https://aclanthology.org/2023.findings-acl.229/ (accessed on 11 January 2025).

- Zhang, C.; Liu, H.; Zeng, J.; Yang, K.; Li, Y.; Li, H. Prompt-Enhanced Software Vulnerability Detection Using ChatGPT. arXiv 2024. [Google Scholar] [CrossRef]

- Liu, P.; Sun, C.; Zheng, Y.; Feng, X.; Qin, C.; Wang, Y.; Xu, Z.; Li, Z.; Di, P.; Jiang, Y.; et al. Harnessing the Power of LLM to Support Binary Taint Analysis. arXiv 2024. [Google Scholar] [CrossRef]

- Zhang, Z.; Yang, J.; Ke, P.; Mi, F.; Wang, H.; Huang, M. Defending Large Language Models Against Jailbreaking Attacks Through Goal Prioritization. arXiv 2024. [Google Scholar] [CrossRef]

- Fu, M.; Tantithamthavorn, C.; Le, T.; Nguyen, V.; Phung, D. VulRepair: A T5-Based Automated Software Vulnerability Repair. In Proceedings of the 30th ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering (ESEC/FSE 2022), Singapore, 14–18 November 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 935–947. [Google Scholar] [CrossRef]

- Zhang, Q.; Fang, C.; Yu, B.; Sun, W.; Zhang, T.; Chen, Z. Pre-trained Model-based Automated Software Vulnerability Repair: How Far are We? arXiv 2023. [Google Scholar] [CrossRef]

- Pearce, H.; Tan, B.; Ahmad, B.; Karri, R.; Dolan-Gavitt, B. Examining Zero-Shot Vulnerability Repair with Large Language Models. arXiv 2022. [Google Scholar] [CrossRef]

- Wu, Y.; Jiang, N.; Pham, H.V.; Lutellier, T.; Davis, J.; Tan, L.; Babkin, P.; Shah, S. How Effective Are Neural Networks for Fixing Security Vulnerabilities. In Proceedings of the 32nd ACM SIGSOFT International Symposium on Software Testing and Analysis, ISSTA’23, Seattle, WA, USA, 17–21 July 2023; ACM: New York, NY, USA, 2023; pp. 1282–1294. [Google Scholar] [CrossRef]

- Alrashedy, K.; Aljasser, A.; Tambwekar, P.; Gombolay, M. Can LLMs Patch Security Issues? arXiv 2024. [Google Scholar] [CrossRef]

- Tol, M.C.; Sunar, B. ZeroLeak: Using LLMs for Scalable and Cost Effective Side-Channel Patching. arXiv 2023. [Google Scholar] [CrossRef]

- Charalambous, Y.; Tihanyi, N.; Jain, R.; Sun, Y.; Ferrag, M.A.; Cordeiro, L.C. Dataset for: A New Era in Software Security: Towards Self-Healing Software via Large Language Models and Formal Verification; Version 1; Zenodo: Genève, Switzerland, 2023. [Google Scholar] [CrossRef]

- Qin, F.; Tucek, J.; Sundaresan, J.; Zhou, Y. Rx: Treating bugs as allergies—A safe method to survive software failures. In Proceedings of the Twentieth ACM Symposium on Operating Systems Principles, Brighton, UK, 23–26 October 2005; pp. 235–248. [Google Scholar]

- Jin, M.; Shahriar, S.; Tufano, M.; Shi, X.; Lu, S.; Sundaresan, N.; Svyatkovskiy, A. InferFix: End-to-End Program Repair with LLMs. arXiv 2023. [Google Scholar] [CrossRef]

- Li, H.; Hao, Y.; Zhai, Y.; Qian, Z. The Hitchhiker’s Guide to Program Analysis: A Journey with Large Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Lee, J.; Han, K.; Yu, H. A Light Bug Triage Framework for Applying Large Pre-trained Language Model. In Proceedings of the 37th IEEE/ACM International Conference on Automated Software Engineering, ASE’22, Rochester, MI, USA, 10–14 October 2022. [Google Scholar] [CrossRef]

- Yang, A.Z.H.; Martins, R.; Goues, C.L.; Hellendoorn, V.J. Large Language Models for Test-Free Fault Localization. arXiv 2023. [Google Scholar] [CrossRef]

- Li, T.O.; Zong, W.; Wang, Y.; Tian, H.; Wang, Y.; Cheung, S.C.; Kramer, J. Nuances are the Key: Unlocking ChatGPT to Find Failure-Inducing Tests with Differential Prompting. arXiv 2023. [Google Scholar] [CrossRef]

- Fang, S.; Zhang, T.; Tan, Y.; Jiang, H.; Xia, X.; Sun, X. RepresentThemAll: A Universal Learning Representation of Bug Reports. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, Australia, 14–20 May 2023; pp. 602–614. [Google Scholar]

- Perry, N.; Srivastava, M.; Kumar, D.; Boneh, D. Do Users Write More Insecure Code with AI Assistants? In Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security, CCS’23, Copenhagen, Denmark, 26–30 November 2023; ACM: New York, NY, USA, 2023; pp. 2785–2799. [Google Scholar] [CrossRef]

- Wei, Y.; Xia, C.S.; Zhang, L. Copiloting the Copilots: Fusing Large Language Models with Completion Engines for Automated Program Repair. In Proceedings of the 31st ACM Joint European Software Engineering Conference and Symposium on the Foundations of Software Engineering, ESEC/FSE’23, San Francisco, CA, USA, 5–7 December 2023; ACM: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Xia, C.S.; Zhang, L. Automated Program Repair via Conversation: Fixing 162 out of 337 Bugs for $0.42 Each using ChatGPT. In Proceedings of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis, ISSTA’24, Vienna, Austria, 16–20 September 2024; ACM: New York, NY, USA, 2024; pp. 819–831. [Google Scholar] [CrossRef]

- Xia, C.S.; Zhang, L. Conversational Automated Program Repair. arXiv 2023. [Google Scholar] [CrossRef]

- Boehme, M.; Cadar, C.; Roychoudhury, A. Fuzzing: Challenges and Reflections. IEEE Softw. 2020, 38, 79–86. [Google Scholar] [CrossRef]

- Deng, Y.; Xia, C.S.; Peng, H.; Yang, C.; Zhang, L. Large Language Models are Zero-Shot Fuzzers: Fuzzing Deep-Learning Libraries via Large Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Zhang, C.; Zheng, Y.; Bai, M.; Li, Y.; Ma, W.; Xie, X.; Li, Y.; Sun, L.; Liu, Y. How Effective Are They? Exploring Large Language Model Based Fuzz Driver Generation. In Proceedings of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis, ISSTA’24, Vienna, Austria, 16–20 September 2024; ACM: New York, NY, USA, 2024; pp. 1223–1235. [Google Scholar] [CrossRef]

- Deng, Y.; Xia, C.S.; Yang, C.; Zhang, S.D.; Yang, S.; Zhang, L. Large Language Models are Edge-Case Fuzzers: Testing Deep Learning Libraries via FuzzGPT. arXiv 2023. [Google Scholar] [CrossRef]

- Hu, S.; Huang, T.; Ilhan, F.; Tekin, S.F.; Liu, L. Large Language Model-Powered Smart Contract Vulnerability Detection: New Perspectives. In Proceedings of the 5th IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications, TPS-ISA 2023, Atlanta, GA, USA, 1–4 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 297–306. [Google Scholar] [CrossRef]

- Yang, C.; Deng, Y.; Lu, R.; Yao, J.; Liu, J.; Jabbarvand, R.; Zhang, L. WhiteFox: White-Box Compiler Fuzzing Empowered by Large Language Models. Proc. ACM Program. Lang. 2024, 8, 709–735. [Google Scholar] [CrossRef]

- Nurgaliyev, A. Analysis of Reverse Engineering and Cyber Assaults. Ph.D. Thesis, Victoria University, Melbourne, Australia, 2023. [Google Scholar]

- Xu, X.; Zhang, Z.; Su, Z.; Huang, Z.; Feng, S.; Ye, Y.; Jiang, N.; Xie, D.; Cheng, S.; Tan, L.; et al. Symbol Preference Aware Generative Models for Recovering Variable Names from Stripped Binary. arXiv 2024. [Google Scholar] [CrossRef]

- Armengol-Estapé, J.; Woodruff, J.; Cummins, C.; O’Boyle, M.F.P. SLaDe: A Portable Small Language Model Decompiler for Optimized Assembly. arXiv 2024. [Google Scholar] [CrossRef]

- Sun, T.; Allix, K.; Kim, K.; Zhou, X.; Kim, D.; Lo, D.; Bissyandé, T.F.; Klein, J. DexBERT: Effective, Task-Agnostic and Fine-grained Representation Learning of Android Bytecode. arXiv 2023. [Google Scholar] [CrossRef]

- Pei, K.; Li, W.; Jin, Q.; Liu, S.; Geng, S.; Cavallaro, L.; Yang, J.; Jana, S. Exploiting Code Symmetries for Learning Program Semantics. arXiv 2024. [Google Scholar] [CrossRef]

- Song, Q.; Zhang, Y.; Ouyang, L.; Chen, Y. BinMLM: Binary Authorship Verification with Flow-aware Mixture-of-Shared Language Model. arXiv 2022. [Google Scholar] [CrossRef]

- Manuel, D.; Islam, N.T.; Khoury, J.; Nunez, A.; Bou-Harb, E.; Najafirad, P. Enhancing Reverse Engineering: Investigating and Benchmarking Large Language Models for Vulnerability Analysis in Decompiled Binaries. arXiv 2024, arXiv:2411.04981. [Google Scholar] [CrossRef]

- Faruki, P.; Bhan, R.; Jain, V.; Bhatia, S.; El Madhoun, N.; Pamula, R. A survey and evaluation of android-based malware evasion techniques and detection frameworks. Information 2023, 14, 374. [Google Scholar] [CrossRef]

- Botacin, M. GPThreats-3: Is Automatic Malware Generation a Threat? In Proceedings of the 2023 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 25–25 May 2023; pp. 238–254. [Google Scholar] [CrossRef]

- Jakub, B.; Branišová, J. A Dynamic Rule Creation Based Anomaly Detection Method for Identifying Security Breaches in Log Records. Wirel. Pers. Commun. 2017, 94, 497–511. [Google Scholar] [CrossRef]

- Shan, S.; Huo, Y.; Su, Y.; Li, Y.; Li, D.; Zheng, Z. Face It Yourselves: An LLM-Based Two-Stage Strategy to Localize Configuration Errors via Logs. In Proceedings of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis, ISSTA’24, Vienna, Austria, 16–20 September 2024; ACM: New York, NY, USA, 2024; pp. 13–25. [Google Scholar] [CrossRef]

- Karlsen, E.; Luo, X.; Zincir-Heywood, N.; Heywood, M. Benchmarking Large Language Models for Log Analysis, Security, and Interpretation. arXiv 2023. [Google Scholar] [CrossRef]

- Han, X.; Yuan, S.; Trabelsi, M. LogGPT: Log Anomaly Detection via GPT. arXiv 2023. [Google Scholar] [CrossRef]

- Kasri, W.; Himeur, Y.; Alkhazaleh, H.A.; Tarapiah, S.; Atalla, S.; Mansoor, W.; Al-Ahmad, H. From vulnerability to defense: The role of large language models in enhancing cybersecurity. Computation 2025, 13, 30. [Google Scholar] [CrossRef]

- Kamath, U.; Keenan, K.; Somers, G.; Sorenson, S. Prompt-based Learning. In Large Language Models: A Deep Dive: Bridging Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2024; pp. 83–133. [Google Scholar]

- Heiding, F.; Schneier, B.; Vishwanath, A.; Bernstein, J.; Park, P.S. Devising and Detecting Phishing: Large Language Models vs. Smaller Human Models. arXiv 2023. [Google Scholar] [CrossRef]

- Cambiaso, E.; Caviglione, L. Scamming the Scammers: Using ChatGPT to Reply Mails for Wasting Time and Resources. arXiv 2023. [Google Scholar] [CrossRef]

- Hanley, H.W.A.; Durumeric, Z. Twits, Toxic Tweets, and Tribal Tendencies: Trends in Politically Polarized Posts on Twitter. arXiv 2024. [Google Scholar] [CrossRef]

- Cai, Z.; Tan, Z.; Lei, Z.; Zhu, Z.; Wang, H.; Zheng, Q.; Luo, M. LMBot: Distilling Graph Knowledge into Language Model for Graph-less Deployment in Twitter Bot Detection. arXiv 2024. [Google Scholar] [CrossRef]

- Anderson, R.; Petitcolas, F. On The Limits of Steganography. IEEE J. Sel. Areas Commun. 1998, 16, 474–481. [Google Scholar] [CrossRef]

- Wang, H.; Yang, Z.; Yang, J.; Chen, C.; Huang, Y. Linguistic Steganalysis in Few-Shot Scenario. IEEE Trans. Inf. Forensics Secur. 2023, 18, 4870–4882. [Google Scholar] [CrossRef]

- Bauer, L.A.; IV, J.K.H.; Markelon, S.A.; Bindschaedler, V.; Shrimpton, T. Covert Message Passing over Public Internet Platforms Using Model-Based Format-Transforming Encryption. arXiv 2021. [Google Scholar] [CrossRef]

- Paterson, K.; Stebila, D. One-Time-Password-Authenticated Key Exchange. In Proceedings of the Australasian Conference on Information Security and Privacy, Sydney, Australia, 5–7 July 2010. [Google Scholar] [CrossRef]

- Rando, J.; Perez-Cruz, F.; Hitaj, B. PassGPT: Password Modeling and (Guided) Generation with Large Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Selamat, S.R.; Robiah, Y.; Sahib, S. Mapping Process of Digital Forensic Investigation Framework. IJCSNS Int. J. Comput. Sci. Netw. Secur. 2008, 8, 163–169. [Google Scholar]

- Huang, Z.; Wang, Q. Enhancing Architecture-level Security of SoC Designs via the Distributed Security IPs Deployment Methodology. J. Inf. Sci. Eng. 2020, 36, 387–421. [Google Scholar]

- Paria, S.; Dasgupta, A.; Bhunia, S. DIVAS: An LLM-based End-to-End Framework for SoC Security Analysis and Policy-based Protection. arXiv 2023. [Google Scholar] [CrossRef]

- Nair, M.; Sadhukhan, R.; Mukhopadhyay, D. How Hardened is Your Hardware? Guiding ChatGPT to Generate Secure Hardware Resistant to CWEs. In Proceedings of the Cyber Security, Cryptology, and Machine Learning: 7th International Symposium, CSCML 2023, Be’er Sheva, Israel, 29–30 June 2023; pp. 320–336. [Google Scholar] [CrossRef]

- Ahmad, B.; Thakur, S.; Tan, B.; Karri, R.; Pearce, H. On Hardware Security Bug Code Fixes by Prompting Large Language Models. IEEE Trans. Inf. Forensics Secur. 2024, 19, 4043–4057. [Google Scholar] [CrossRef]

- Mohammed, A. Blockchain and Distributed Ledger Technology (DLT): Investigating the use of blockchain for secure transactions, smart contracts, and fraud prevention. Int. J. Adv. Eng. Manag. (IJAEM) 2025, 7, 1057–1068. Available online: https://ijaem.net/issue_dcp/Blockchain%20and%20Distributed%20Ledger%20Technology%20DLT%20Investigating%20the%20use%20of%20blockchain%20for%20secure%20transactions%2C%20smart%20contracts%2C%20and%20fraud%20prevention.pdf (accessed on 10 May 2025). [CrossRef]

- David, I.; Zhou, L.; Qin, K.; Song, D.; Cavallaro, L.; Gervais, A. Do you still need a manual smart contract audit? arXiv 2023. [Google Scholar] [CrossRef]

- Howard, J.D.; Kahnt, T. To be specific: The role of orbitofrontal cortex in signaling reward identity. Behav. Neurosci. 2021, 135, 210. [Google Scholar] [CrossRef]

- Rodler, M.; Li, W.; Karame, G.O.; Davi, L. Sereum: Protecting Existing Smart Contracts Against Re-Entrancy Attacks. In Proceedings of the 26th Annual Network and Distributed System Security Symposium, NDSS 2019, San Diego, CA, USA, 24–27 February 2019; The Internet Society: Reston, VA, USA, 2019. [Google Scholar]

- Rathod, V.; Nabavirazavi, S.; Zad, S.; Iyengar, S.S. Privacy and security challenges in large language models. In Proceedings of the 2025 IEEE 15th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 746–752. [Google Scholar]

- Mitchell, B.S.; Mancoridis, S.; Kashyap, J. On the Automatic Identification of Misconfiguration Errors in Cloud Native Systems. In Proceedings of the 2024 7th Artificial Intelligence and Cloud Computing Conference, Tokyo, Japan, 14–16 December 2024. [Google Scholar]

- Pranata, A.A.; Barais, O.; Bourcier, J.; Noirie, L. Misconfiguration discovery with principal component analysis for cloud-native services. In Proceedings of the 2020 IEEE/ACM 13th International Conference on Utility and Cloud Computing (UCC), Leicester, UK, 7–10 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 269–278. [Google Scholar]

- Ariffin, M.A.M.; Rahman, K.A.; Darus, M.Y.; Awang, N.; Kasiran, Z. Data leakage detection in cloud computing platform. Int. J. Adv. Trends Comput. Sci. Eng. 2019, 8, S1. [Google Scholar] [CrossRef]

- Vaidya, C.; Khobragade, P.K.; Golghate, A.A. Data Leakage Detection and Security Using Cloud Computing. Int. J. Eng. Res. Appl. 2016, 6, 1–4. [Google Scholar]

- Lanka, P.; Gupta, K.; Varol, C. Intelligent threat detection—AI-driven analysis of honeypot data to counter cyber threats. Electronics 2024, 13, 2465. [Google Scholar] [CrossRef]

- Henze, M.; Matzutt, R.; Hiller, J.; Mühmer, E.; Ziegeldorf, J.H.; van der Giet, J.; Wehrle, K. Complying with data handling requirements in cloud storage systems. IEEE Trans. Cloud Comput. 2020, 10, 1661–1674. [Google Scholar] [CrossRef]

- Dye, O.; Heo, J.; Cankaya, E.C. Reflection of Federal Data Protection Standards on Cloud Governance. arXiv 2024, arXiv:2403.07907. [Google Scholar] [CrossRef]

- Hassanin, M.; Moustafa, N. A comprehensive overview of large language models (llms) for cyber defences: Opportunities and directions. arXiv 2024, arXiv:2405.14487. [Google Scholar] [CrossRef]

- Molleti, R.; Goje, V.; Luthra, P.; Raghavan, P. Automated threat detection and response using LLM agents. World J. Adv. Res. Rev. 2024, 24, 79–90. [Google Scholar] [CrossRef]

- Fieblinger, R.; Alam, M.T.; Rastogi, N. Actionable cyber threat intelligence using knowledge graphs and large language models. In Proceedings of the 2024 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Vienna, Austria, 8–12 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 100–111. [Google Scholar]

- Ali, T.; Kostakos, P. HuntGPT: Integrating machine learning-based anomaly detection and explainable AI with large language models (LLMs). arXiv 2023, arXiv:2309.16021. [Google Scholar]

- Schwartz, Y.; Benshimol, L.; Mimran, D.; Elovici, Y.; Shabtai, A. LLMCloudHunter: Harnessing LLMs for Automated Extraction of Detection Rules from Cloud-Based CTI. arXiv 2024. [Google Scholar] [CrossRef]

- Mitra, S.; Neupane, S.; Chakraborty, T.; Mittal, S.; Piplai, A.; Gaur, M.; Rahimi, S. LOCALINTEL: Generating Organizational Threat Intelligence from Global and Local Cyber Knowledge. arXiv 2024. [Google Scholar] [CrossRef]

- Rong, H.; Duan, Y.; Zhang, H.; Wang, X.; Chen, H.; Duan, S.; Wang, S. Disassembling Obfuscated Executables with LLM. arXiv 2024. [Google Scholar] [CrossRef]

- Lu, H.; Peng, H.; Nan, G.; Cui, J.; Wang, C.; Jin, W.; Wang, S.; Pan, S.; Tao, X. MALSIGHT: Exploring Malicious Source Code and Benign Pseudocode for Iterative Binary Malware Summarization. arXiv 2024. [Google Scholar] [CrossRef]

- Patsakis, C.; Casino, F.; Lykousas, N. Assessing LLMs in Malicious Code Deobfuscation of Real-world Malware Campaigns. arXiv 2024. [Google Scholar] [CrossRef]

- Bouzidi, M.; Gupta, N.; Cheikh, F.A.; Shalaginov, A.; Derawi, M. A novel architectural framework on IoT ecosystem, security aspects and mechanisms: A comprehensive survey. IEEE Access 2022, 10, 101362–101384. [Google Scholar] [CrossRef]

- Zhao, B.; Ji, S.; Zhang, X.; Tian, Y.; Wang, Q.; Pu, Y.; Lyu, C.; Beyah, R. UVSCAN: Detecting Third-Party Component Usage Violations in IoT Firmware. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 3421–3438. [Google Scholar]

- Li, S.; Min, G. On-Device Learning Based Vulnerability Detection in Iot Environment. J. Ind. Inf. Integr. 2025, 47, 100900. [Google Scholar] [CrossRef]

- Li, Y.; Xiang, Z.; Bastian, N.D.; Song, D.; Li, B. IDS-Agent: An LLM Agent for Explainable Intrusion Detection in IoT Networks. In Proceedings of the NeurIPS 2024 Workshop on Open-World Agents, Vancouver, BC, Canada, 14 December 2024. [Google Scholar]

- Su, J.; Jiang, C.; Jin, X.; Qiao, Y.; Xiao, T.; Ma, H.; Wei, R.; Jing, Z.; Xu, J.; Lin, J. Large Language Models for Forecasting and Anomaly Detection: A Systematic Literature Review. arXiv 2024. [Google Scholar] [CrossRef]

- Feng, X.; Liao, X.; Wang, X.; Wang, H.; Li, Q.; Yang, K.; Zhu, H.; Sun, L. Understanding and securing device vulnerabilities through automated bug report analysis. In Proceedings of the SEC’19: Proceedings of the 28th USENIX Conference on Security Symposium, Santa Clara, CA, USA, 14–16 August 2019. [Google Scholar]

- Baral, S.; Saha, S.; Haque, A. An Adaptive End-to-End IoT Security Framework Using Explainable AI and LLMs. In Proceedings of the 2024 IEEE 10th World Forum on Internet of Things (WF-IoT), Ottawa, ON, Canada, 10–13 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 469–474. [Google Scholar]

- Pathak, P.N. How Do You Protect Machine Learning from Attacks? 2023. Available online: https://www.linkedin.com/advice/1/how-do-you-protect-machine-learning-from-attacks (accessed on 28 January 2024).

- Kaddour, J.; Harris, J.; Mozes, M.; Bradley, H.; Raileanu, R.; McHardy, R. Challenges and Applications of Large Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Tornede, A.; Deng, D.; Eimer, T.; Giovanelli, J.; Mohan, A.; Ruhkopf, T.; Segel, S.; Theodorakopoulos, D.; Tornede, T.; Wachsmuth, H.; et al. AutoML in the Age of Large Language Models: Current Challenges, Future Opportunities and Risks. arXiv 2024. [Google Scholar] [CrossRef]

- Computer Security Resource Center. Information Systems Security (INFOSEC). 2023. Available online: https://csrc.nist.gov/glossary/term/information_systems_security (accessed on 28 January 2024).

- CLOUDFLARE. What Is Data Privacy? 2023. Available online: https://www.cloudflare.com/learning/privacy/what-is-data-privacy/ (accessed on 28 January 2024).

- Phanireddy, S. LLM security and guardrail defense techniques in web applications. Int. J. Emerg. Trends Comput. Sci. Inf. Technol. 2025, 221–224. [Google Scholar] [CrossRef]

- Ganguli, D.; Lovitt, L.; Kernion, J.; Askell, A.; Bai, Y.; Kadavath, S.; Mann, B.; Perez, E.; Schiefer, N.; Ndousse, K.; et al. Red Teaming Language Models to Reduce Harms: Methods, Scaling Behaviors, and Lessons Learned. arXiv 2022. [Google Scholar] [CrossRef]

- Röttger, P.; Vidgen, B.; Nguyen, D.; Waseem, Z.; Margetts, H.; Pierrehumbert, J. HateCheck: Functional Tests for Hate Speech Detection Models. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Bangkok, Thailand, 1–6 August 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021. [Google Scholar] [CrossRef]

- Perez, F.; Ribeiro, I. Ignore Previous Prompt: Attack Techniques For Language Models. arXiv 2022. [Google Scholar] [CrossRef]

- Alon, G.; Kamfonas, M. Detecting Language Model Attacks with Perplexity. arXiv 2023. [Google Scholar] [CrossRef]

- Jain, N.; Schwarzschild, A.; Wen, Y.; Somepalli, G.; Kirchenbauer, J.; yeh Chiang, P.; Goldblum, M.; Saha, A.; Geiping, J.; Goldstein, T. Baseline Defenses for Adversarial Attacks Against Aligned Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Cao, Z.; Xu, Y.; Huang, Z.; Zhou, S. ML4CO-KIDA: Knowledge Inheritance in Dataset Aggregation. arXiv 2022. [Google Scholar] [CrossRef]

- Marsoof, A.; Luco, A.; Tan, H.; Joty, S. Content-filtering AI systems–limitations, challenges and regulatory approaches. Inf. Commun. Technol. Law 2023, 32, 64–101. [Google Scholar] [CrossRef]

- Manzoor, H.U.; Shabbir, A.; Chen, A.; Flynn, D.; Zoha, A. A survey of security strategies in federated learning: Defending models, data, and privacy. Future Internet 2024, 16, 374. [Google Scholar] [CrossRef]

- Qi, X.; Panda, A.; Lyu, K.; Ma, X.; Roy, S.; Beirami, A.; Mittal, P.; Henderson, P. Safety Alignment Should Be Made More Than Just a Few Tokens Deep. arXiv 2024. [Google Scholar] [CrossRef]

- Fayaz, S.; Ahmad Shah, S.Z.; ud din, N.M.; Gul, N.; Assad, A. Advancements in data augmentation and transfer learning: A comprehensive survey to address data scarcity challenges. Recent Adv. Comput. Sci. Commun. (Former. Recent Pat. Comput. Sci.) 2024, 17, 14–35. [Google Scholar] [CrossRef]

- Bianchi, F.; Suzgun, M.; Attanasio, G.; Röttger, P.; Jurafsky, D.; Hashimoto, T.; Zou, J. Safety-Tuned LLaMAs: Lessons From Improving the Safety of Large Language Models that Follow Instructions. In Proceedings of the The Twelfth International Conference on Learning Representations, ICLR 2024, Vienna, Austria, 7–11 May 2024; OpenReview: Hanover, NH, USA, 2024. [Google Scholar]

- Zhao, J.; Deng, Z.; Madras, D.; Zou, J.; Ren, M. Learning and Forgetting Unsafe Examples in Large Language Models. In Proceedings of the Forty-first International Conference on Machine Learning, ICML 2024, Vienna, Austria, 21–27 July 2024; OpenReview: Hanover, NH, USA, 2024. [Google Scholar]

- Qi, X.; Zeng, Y.; Xie, T.; Chen, P.Y.; Jia, R.; Mittal, P.; Henderson, P. Fine-tuning aligned language models compromises safety, even when users do not intend to! arXiv 2023, arXiv:2310.03693. [Google Scholar] [CrossRef]

- Papakyriakopoulos, O.; Choi, A.S.G.; Thong, W.; Zhao, D.; Andrews, J.; Bourke, R.; Xiang, A.; Koenecke, A. Augmented datasheets for speech datasets and ethical decision-making. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 881–904. [Google Scholar]

- Wortsman, M.; Ilharco, G.; Gadre, S.Y.; Roelofs, R.; Gontijo-Lopes, R.; Morcos, A.S.; Namkoong, H.; Farhadi, A.; Carmon, Y.; Kornblith, S.; et al. Model soups: Averaging weights of multiple fine-tuned models improves accuracy without increasing inference time. arXiv 2022. [Google Scholar] [CrossRef]

- Lu, W.; Luu, R.K.; Buehler, M.J. Fine-tuning large language models for domain adaptation: Exploration of training strategies, scaling, model merging and synergistic capabilities. npj Comput. Mater. 2025, 11, 84. [Google Scholar] [CrossRef]

- Zou, A.; Wang, Z.; Carlini, N.; Nasr, M.; Kolter, J.Z.; Fredrikson, M. Universal and Transferable Adversarial Attacks on Aligned Language Models. arXiv 2023. [Google Scholar] [CrossRef]

- Kadhe, S.R.; Ahmed, F.; Wei, D.; Baracaldo, N.; Padhi, I. Split, Unlearn, Merge: Leveraging Data Attributes for More Effective Unlearning in LLMs. arXiv 2024. [Google Scholar] [CrossRef]

- Yadav, P.; Vu, T.; Lai, J.; Chronopoulou, A.; Faruqui, M.; Bansal, M.; Munkhdalai, T. What Matters for Model Merging at Scale? arXiv 2024, arXiv:2410.03617. [Google Scholar] [CrossRef]

- Xing, W.; Li, M.; Li, M.; Han, M. Towards robust and secure embodied ai: A survey on vulnerabilities and attacks. arXiv 2025, arXiv:2502.13175. [Google Scholar]

- Xu, D.; Fan, S.; Kankanhalli, M. Combating misinformation in the era of generative AI models. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 9291–9298. [Google Scholar]

- Schwarzschild, A.; Goldblum, M.; Gupta, A.; Dickerson, J.P.; Goldstein, T. Just How Toxic is Data Poisoning? A Unified Benchmark for Backdoor and Data Poisoning Attacks. arXiv 2021. [Google Scholar] [CrossRef]

- Kurita, K.; Michel, P.; Neubig, G. Weight Poisoning Attacks on Pre-trained Models. arXiv 2020. [Google Scholar] [CrossRef]

- Shah, V. Machine Learning Algorithms for Cybersecurity: Detecting and Preventing Threats. Rev. Esp. Doc. Cient. 2021, 15, 42–66. [Google Scholar]

- Yan, L.; Zhang, Z.; Tao, G.; Zhang, K.; Chen, X.; Shen, G.; Zhang, X. ParaFuzz: An Interpretability-Driven Technique for Detecting Poisoned Samples in NLP. arXiv 2023. [Google Scholar] [CrossRef]

- Continella, A.; Fratantonio, Y.; Lindorfer, M.; Puccetti, A.; Zand, A.; Krügel, C.; Vigna, G. Obfuscation-Resilient Privacy Leak Detection for Mobile Apps Through Differential Analysis. In Proceedings of the Network and Distributed System Security Symposium, San Diego, CA, USA, 26 February–1 March 2017. [Google Scholar]

- Shu, M.; Wang, J.; Zhu, C.; Geiping, J.; Xiao, C.; Goldstein, T. On the Exploitability of Instruction Tuning. arXiv 2023. [Google Scholar] [CrossRef]

- Aghakhani, H.; Dai, W.; Manoel, A.; Fernandes, X.; Kharkar, A.; Kruegel, C.; Vigna, G.; Evans, D.; Zorn, B.; Sim, R. TrojanPuzzle: Covertly Poisoning Code-Suggestion Models. arXiv 2024. [Google Scholar] [CrossRef]

- Liu, K.; Dolan-Gavitt, B.; Garg, S. Fine-Pruning: Defending Against Backdooring Attacks on Deep Neural Networks. arXiv 2018. [Google Scholar] [CrossRef]

- Chen, Z.; Xiang, Z.; Xiao, C.; Song, D.; Li, B. AgentPoison: Red-teaming LLM Agents via Poisoning Memory or Knowledge Bases. arXiv 2024. [Google Scholar] [CrossRef]

- Chen, C.; Sun, Y.; Gong, X.; Gao, J.; Lam, K.Y. Neutralizing Backdoors through Information Conflicts for Large Language Models. arXiv 2024, arXiv:2411.18280. [Google Scholar] [CrossRef]

- Chowdhury, A.G.; Islam, M.M.; Kumar, V.; Shezan, F.H.; Jain, V.; Chadha, A. Breaking down the defenses: A comparative survey of attacks on large language models. arXiv 2024, arXiv:2403.04786. [Google Scholar] [CrossRef]

- Zhang, Z.; Lyu, L.; Ma, X.; Wang, C.; Sun, X. Fine-mixing: Mitigating Backdoors in Fine-tuned Language Models. arXiv 2022. [Google Scholar] [CrossRef]

- Cui, G.; Yuan, L.; He, B.; Chen, Y.; Liu, Z.; Sun, M. A Unified Evaluation of Textual Backdoor Learning: Frameworks and Benchmarks. arXiv 2022. [Google Scholar] [CrossRef]

- Xi, Z.; Du, T.; Li, C.; Pang, R.; Ji, S.; Chen, J.; Ma, F.; Wang, T. Defending Pre-trained Language Models as Few-shot Learners against Backdoor Attacks. arXiv 2023. [Google Scholar] [CrossRef]

- Wolk, M.H. The iphone jailbreaking exemption and the issue of openness. Cornell J. Law Public Policy 2009, 19, 795. [Google Scholar]

- Mondillo, G.; Colosimo, S.; Perrotta, A.; Frattolillo, V.; Indolfi, C.; del Giudice, M.M.; Rossi, F. Jailbreaking large language models: Navigating the crossroads of innovation, ethics, and health risks. J. Med. Artif. Intell. 2025, 8, 6. [Google Scholar] [CrossRef]

- Kumar, A.; Agarwal, C.; Srinivas, S.; Li, A.J.; Feizi, S.; Lakkaraju, H. Certifying LLM Safety against Adversarial Prompting. arXiv 2024. [Google Scholar] [CrossRef]

- Wu, F.; Xie, Y.; Yi, J.; Shao, J.; Curl, J.; Lyu, L.; Chen, Q.; Xie, X. Defending chatgpt against jailbreak attack via self-reminder. Nat. Mach. Intell. 2023, 5, 1486–1496. [Google Scholar] [CrossRef]

- Jin, H.; Hu, L.; Li, X.; Zhang, P.; Chen, C.; Zhuang, J.; Wang, H. JailbreakZoo: Survey, Landscapes, and Horizons in Jailbreaking Large Language and Vision-Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Robey, A.; Wong, E.; Hassani, H.; Pappas, G.J. SmoothLLM: Defending Large Language Models Against Jailbreaking Attacks. arXiv 2024. [Google Scholar] [CrossRef]

- Crothers, E.; Japkowicz, N.; Viktor, H. Machine Generated Text: A Comprehensive Survey of Threat Models and Detection Methods. arXiv 2023. [Google Scholar] [CrossRef]

- Rababah, B.; Wu, S.T.; Kwiatkowski, M.; Leung, C.K.; Akcora, C.G. SoK: Prompt Hacking of Large Language Models. arXiv 2024, arXiv:2410.13901. [Google Scholar] [CrossRef]

- Learn Prompting. Your Guide to Generative AI. 2023. Available online: https://learnprompting.org (accessed on 28 January 2024).

- Schulhoff, S. Instruction Defense. 2024. Available online: https://learnprompting.org/docs/prompt_hacking/defensive_measures/instruction (accessed on 11 October 2024).

- Gonen, H.; Iyer, S.; Blevins, T.; Smith, N.A.; Zettlemoyer, L. Demystifying Prompts in Language Models via Perplexity Estimation. arXiv 2024.

- Liu, Y.; Jia, Y.; Geng, R.; Jia, J.; Gong, N.Z. Formalizing and Benchmarking Prompt Injection Attacks and Defenses. arXiv 2024.

- Shi, J.; Yuan, Z.; Liu, Y.; Huang, Y.; Zhou, P.; Sun, L.; Gong, N.Z. Optimization-based Prompt Injection Attack to LLM-as-a-Judge. arXiv 2024.

- Yang, H.; Xiang, K.; Ge, M.; Li, H.; Lu, R.; Yu, S. A comprehensive overview of backdoor attacks in large language models within communication networks. IEEE Netw. 2024, 38, 211–218.

- Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Wang, S.; Yin, D.; Du, M. Explainability for large language models: A survey. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–38.

- Koloskova, A.; Allouah, Y.; Jha, A.; Guerraoui, R.; Koyejo, S. Certified Unlearning for Neural Networks. arXiv 2025.

- Liu, Y.; Yao, Y.; Ton, J.F.; Zhang, X.; Guo, R.; Cheng, H.; Klochkov, Y.; Taufiq, M.F.; Li, H. Trustworthy LLMs: A Survey and Guideline for Evaluating Large Language Models’ Alignment. arXiv 2024.

- Raiaan, M.A.K.; Mukta, M.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J.; Ahmad, J.; Ali, M.E.; Azam, S. A review on large language models: Architectures, applications, taxonomies, open issues and challenges. IEEE Access 2024, 12, 26839–26874.

- Mishra, M.; Stallone, M.; Zhang, G.; Shen, Y.; Prasad, A.; Soria, A.M.; Merler, M.; Selvam, P.; Surendran, S.; Singh, S.; et al. Granite code models: A family of open foundation models for code intelligence. arXiv 2024, arXiv:2405.04324.

- Moore, E.; Imteaj, A.; Rezapour, S.; Amini, M.H. A survey on secure and private federated learning using blockchain: Theory and application in resource-constrained computing. IEEE Internet Things J. 2023, 10, 21942–21958.

- Cai, X.; Xu, H.; Xu, S.; Zhang, Y.; Yuan, X. BadPrompt: Backdoor attacks on continuous prompts. Adv. Neural Inf. Process. Syst. 2022, 35, 37068–37080.

- Huang, F. Data cleansing. In Encyclopedia of Big Data; Springer: Berlin/Heidelberg, Germany, 2022; pp. 275–279.

- Wan, A.; Wallace, E.; Shen, S.; Klein, D. Poisoning language models during instruction tuning. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; PMLR: New York, NY, USA, 2023; pp. 35413–35425.

- Wang, B.; Yao, Y.; Shan, S.; Li, H.; Viswanath, B.; Zheng, H.; Zhao, B.Y. Neural cleanse: Identifying and mitigating backdoor attacks in neural networks. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 20–22 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 707–723.

- Das, B.C.; Amini, M.H.; Wu, Y. Security and privacy challenges of large language models: A survey. ACM Comput. Surv. 2024, 57, 1–39.

- Yao, H.; Lou, J.; Qin, Z. PoisonPrompt: Backdoor attack on prompt-based large language models. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 7745–7749.

- Charfeddine, M.; Kammoun, H.M.; Hamdaoui, B.; Guizani, M. ChatGPT’s security risks and benefits: Offensive and defensive use-cases, mitigation measures, and future implications. IEEE Access 2024, 12, 30263–30310.

- Sun, R.; Chang, J.; Pearce, H.; Xiao, C.; Li, B.; Wu, Q.; Nepal, S.; Xue, M. SoK: Unifying Cybersecurity and Cybersafety of Multimodal Foundation Models with an Information Theory Approach. arXiv 2024.

- Kshetri, N. Transforming cybersecurity with agentic AI to combat emerging cyber threats. Telecommun. Policy 2025, 49, 102976.

- Zhang, S.; Li, H.; Sun, K.; Chen, H.; Wang, Y.; Li, S. Security and Privacy Challenges of AIGC in Metaverse: A Comprehensive Survey. ACM Comput. Surv. 2025, 57, 1–37.

- Brundage, M.; Avin, S.; Wang, J.; Belfield, H.; Krueger, G.; Hadfield, G.; Khlaaf, H.; Yang, J.; Toner, H.; Fong, R.; et al. Toward trustworthy AI development: Mechanisms for supporting verifiable claims. arXiv 2020, arXiv:2004.07213.

- Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI—Explainable artificial intelligence. Sci. Robot. 2019, 4, eaay7120.

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–35.

- Caruana, M.M.; Borg, R.M. Regulating Artificial Intelligence in the European Union. In The EU Internal Market in the Next Decade–Quo Vadis? Brill: Singapore, 2025; p. 108.

- Protection, F.D. General data protection regulation (GDPR). In Intersoft Consulting; European Union: Brussels, Belgium, 2018; Volume 24.

- NIST AI. Artificial Intelligence Risk Management Framework (AI RMF 1.0); NIST AI 100-1. 2023. Available online: https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf (accessed on 10 May 2025).

| Security Domains | Security Tasks | Total |
|---|---|---|
| Network Security | Web fuzzing (4); Traffic and intrusion detection (5); Cyber threat analysis (4); Penetration testing (7) | 20 |
| Software and System Security | Vulnerability detection (7); Vulnerability repair (7); Bug detection (8); Bug repair (4); Program fuzzing (6); Reverse engineering and binary analysis (7); Malware detection (4); System log analysis (5) | 47 |
| Information and Content Security | Phishing and scam detection (4); Harmful content detection (4); Steganography (3); Access control (3); Forensics (3) | 17 |
| Hardware Security | Hardware vulnerability detection (3); Hardware vulnerability repair (3) | 6 |
| Blockchain Security | Smart contract security (4); Transaction anomaly detection (4) | 8 |
| Cloud Security | Misconfiguration detection (3); Data leakage monitoring (3); Container security (3); Compliance enforcement (3) | 12 |
| Incident Response and Threat Intel. | Alert prioritization (2); Automated threat intelligence analysis (3); Threat hunting (3); Malware reverse engineering (3) | 11 |
| IoT Security | Firmware vulnerability detection (3); Behavioral anomaly detection (2); Automated threat report summarization (3) | 8 |

| Application Domain | Representative Model | Dataset | Accuracy/Key Metric | Pros (Strengths) | Cons (Limitations) |
|---|---|---|---|---|---|
| Network Security | GPTFuzzer [18] | PROFUZZ | High success in SQLi/XSS/RCE payload generation | Generates targeted payloads using RL; bypasses WAFs | Limited to certain protocols; needs fine-tuning for closed systems |
| Software and System Security | LATTE [58] | Real firmware dataset | Discovered 37 zero-day vulnerabilities | Combines LLM with automated taint analysis | Risk of false positives; high compute cost |
| Software and System Security | ZeroLeak [65] | Side-channel apps dataset | Effective side-channel mitigation | Specialized for side-channel vulnerabilities | Limited cross-language applicability |
| Blockchain Security | GPTLENS [82] | Ethereum transactions | Reduced false positives in smart contract analysis | Dual-phase vulnerability scenario generation | Contextual understanding still limited |
| Cloud Security | GenKubeSec [30] | Kubernetes configs | Precision: 0.990, Recall: 0.999 | Automated reasoning; surpasses rule-based tools | Limited cross-platform generality |
| Cloud Security | Secure Cloud AI [31] | NSL-KDD, cloud logs | Accuracy: 94.78% | Hybrid RF + LSTM for real-time detection | Needs scaling for large environments |
| Incident Response and Threat Intel. | SEVENLLM [34] | Incident reports | Improved IoC detection, reduced false positives | Multitask alert prioritization | Limited multilingual coverage |
| IoT Security | LLM4Vuln [25] | Firmware in Solidity and Java | Enhanced detection across languages | RAG and prompt engineering improve reasoning | Needs greater architecture diversity |
| IoT Security | IDS-Agent [137] | ACI-IOT’23, CIC-IOT’23 | Recall: 61% for zero-day attacks | Combines reasoning, memory retrieval, and external knowledge | Moderate precision; room for higher automation |

| Technique | Backdoor | Jailbreaking | Data Poisoning | Prompt Injection |
|---|---|---|---|---|
| ParaFuzz | ✕ | ✕ | ✓ | ✕ |
| CUBE | ✓ | ✕ | ✕ | ✕ |
| Masking Differential Prompting | ✓ | ✕ | ✕ | ✕ |
| Self Reminder System | ✕ | ✓ | ✕ | ✕ |
| Content Filtering | ✓ | ✓ | ✓ | ✓ |
| Red Team | ✓ | ✓ | ✓ | ✓ |
| Safety Fine-Tuning | ✓ | ✕ | ✓ | ✕ |
| Goal Prioritization | ✕ | ✓ | ✕ | ✕ |
| Model Merge | ✓ | ✕ | ✓ | ✕ |
| Prompt Engineering | ✓ | ✓ | ✓ | ✓ |
| Smooth | ✕ | ✓ | ✕ | ✕ |

| Author | Ref. | RT | CF | SFT | MM | CE | GP | FM | P | PF | SF | S | DPI | PH | SR | C | CU | MDP | R | PI |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Alone et al. | [150] | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Bianchi et al. | [157] | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Cao et al. | [152] | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Contiella et al. | [172] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ |

| Cui et al. | [180] | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Ribeiroet et al. | [147] | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Ganguali et al. | [147] | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Gonen et al. | [192] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Jain et al. | [151] | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ |

| Jin et al. | [186] | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Kupmar et al. | [184] | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Liu et al. | [198] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Perez et al. | [149] | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Xiangyu et al. | [155] | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Robey et al. | [187] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Schulhoff et al. | [191] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Shan et al. | [170] | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ |

| Wang et al. | [104] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ |

| Wu et al. | [185] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Xi et al. | [181] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ |

| Yan et al. | [171] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Zhang et al. | [179] | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ |

| Zhao et al. | [158] | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

| Zou et al. | [163] | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✓ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jaffal, N.O.; Alkhanafseh, M.; Mohaisen, D. Large Language Models in Cybersecurity: A Survey of Applications, Vulnerabilities, and Defense Techniques. AI 2025, 6, 216. https://doi.org/10.3390/ai6090216
