1. Introduction

Cybersecurity research has traditionally relied on diverse data sources to understand, detect, and mitigate threats. These include network traffic data [1,2,3,4], simulation environments [5], open-source anti-virus vendor databases [6], surveys [7,8], and even social media [9]. More recently, global news media has emerged as a promising source of cyber intelligence, providing real-time and contextual insights into cyber incidents. For instance, Ref. [10] examined 25 years of articles from Swedish newspapers without using any Natural Language Processing (NLP) techniques. Ref. [11] used data from 14 news portals and applied preliminary NLP techniques such as LDA-based topic modeling, while Ref. [12] employed both sentiment analysis and LDA. However, these approaches relied predominantly on keyword-based extraction, which often fails to capture semantically relevant content that lacks explicit keywords. For example, headlines such as “Data Breach at Global Bank Affects Millions” or “Hackers Exploit Zero-Day Vulnerability” are directly related to cybersecurity but may be overlooked because they do not contain predefined keywords such as “Cybersecurity” or “Cyber Safety”. These limitations call for more advanced techniques capable of extracting meaningful insights from large, heterogeneous datasets.
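The gap can be seen with a deliberately naive keyword filter. The Python sketch below is illustrative only: the keyword tuple `CYBER_KEYWORDS` and the third headline are hypothetical, and the cited studies [10,11,12] may have used richer keyword lists.

```python
# Hypothetical keyword list of the kind a keyword-based extractor might use.
CYBER_KEYWORDS = ("cybersecurity", "cyber safety")


def keyword_match(headline: str, keywords=CYBER_KEYWORDS) -> bool:
    """Return True if any keyword occurs (case-insensitively) in the headline."""
    text = headline.lower()
    return any(kw in text for kw in keywords)


headlines = [
    "Data Breach at Global Bank Affects Millions",   # cyber-related, no keyword
    "Hackers Exploit Zero-Day Vulnerability",        # cyber-related, no keyword
    "New Cybersecurity Rules Announced",             # hypothetical headline with a keyword
]
for h in headlines:
    print(keyword_match(h), h)
```

Both incident headlines are rejected because neither contains a listed keyword, which is the failure mode that motivates semantic classification.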

Industrial cyber–physical systems (ICPSs), which integrate computational and physical processes, are foundational to modern industries such as manufacturing, energy, and transportation [8,13]. While ICPSs enable operational efficiency and innovation, their interconnected nature also exposes them to sophisticated cyber threats, including ransomware attacks and Advanced Persistent Threats (APTs) [14]. The growing reliance on ICPSs underscores the need for effective threat detection and mitigation strategies. Traditional cybersecurity tools often lack the scalability, semantic depth, and analytical rigor required to process and analyze the vast volumes of data that ICPSs generate, leaving these systems vulnerable to operational disruptions and data breaches [15]. Achieving real-time monitoring, accurate classification, and actionable insights for ICPS security therefore calls for a comprehensive, innovative approach.

To address these challenges, this study poses the following research question: “How can a GPT-based framework enhance the accuracy and efficiency of cyber threat detection and analysis in industrial cyber–physical systems?” To answer this question, we propose a Generative Pretrained Transformer (GPT)-based framework that integrates multiple advanced technologies into a cohesive system for cyber threat detection and analysis. Unlike prior studies that focused on isolated techniques such as keyword-based topic extraction [10,11,12], clustering [16], or anomaly detection [1,2], our framework combines semantic classification using GPT, knowledge graph construction [17], clustering, regression analysis [18], and anomaly detection using spectral residual (SR) transformation and Convolutional Neural Networks (CNNs) [19]. Evaluated on a dataset of 9018 cyber events from 44 global news sources, the system achieved a precision of 0.999, a recall of 0.998, and an F1-score of 0.998. This work not only advances the theoretical understanding of applying AI in cybersecurity but also provides a scalable, actionable tool for improving ICPS resilience, setting a new benchmark in cyber threat intelligence and analysis.

In summary, the following are the core contributions:

The introduction of a comprehensive framework for delivering actionable intelligence, including visualization of prominent attack types and affected industries, the identification of correlated factors and unique event clusters, and anomaly detection in ICPS data, supporting enhanced cyber resilience and proactive threat management.

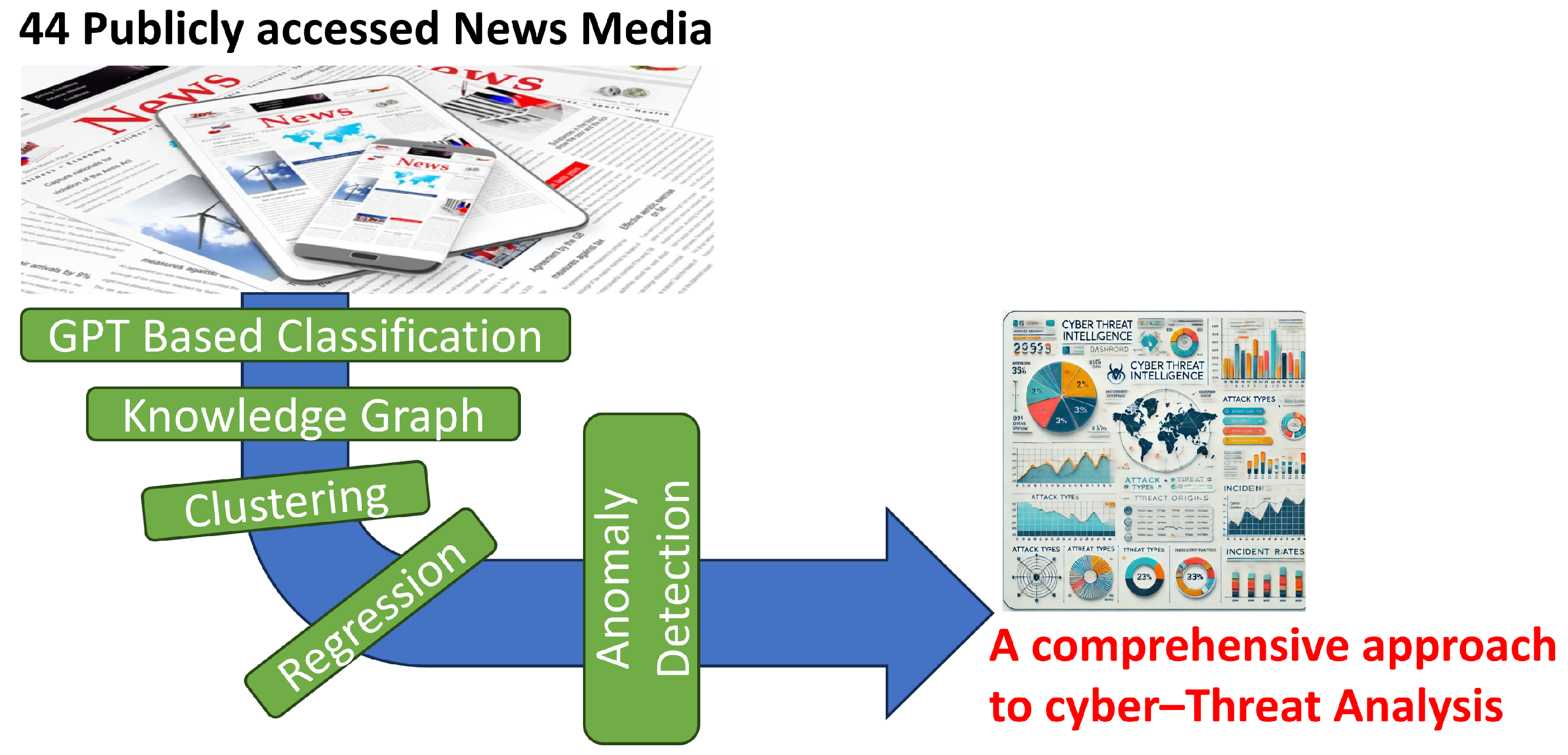

With mathematical rigor, this study lays the theoretical foundation for a novel framework that integrates GPT with advanced AI techniques, including semantic classification, knowledge graph construction, clustering, regression analysis, and anomaly detection, to provide a comprehensive approach to cyber threat analysis (as shown in Figure 1).

The production of extensive cyber intelligence data from 44 news media sources through a fully automated process over an extended period, from 25 September 2023 to 25 November 2024 (14 months), demonstrating practical applicability.

A comprehensive evaluation of the proposed system, comprising a fully automated GPT-based categorization of 9018 cyber events into four categories (with precision, recall, and F1-score of 0.999, 0.998, and 0.998, respectively); visualizations of the most prominent attack types and affected industries, along with their intricate relationships, via knowledge graphs; the identification of 11 correlated factors associated with significant cyber events; the detection of five unique clusters with AI-driven insights on significant cyber events; and the automated identification of six anomalies in time-series cyber data.

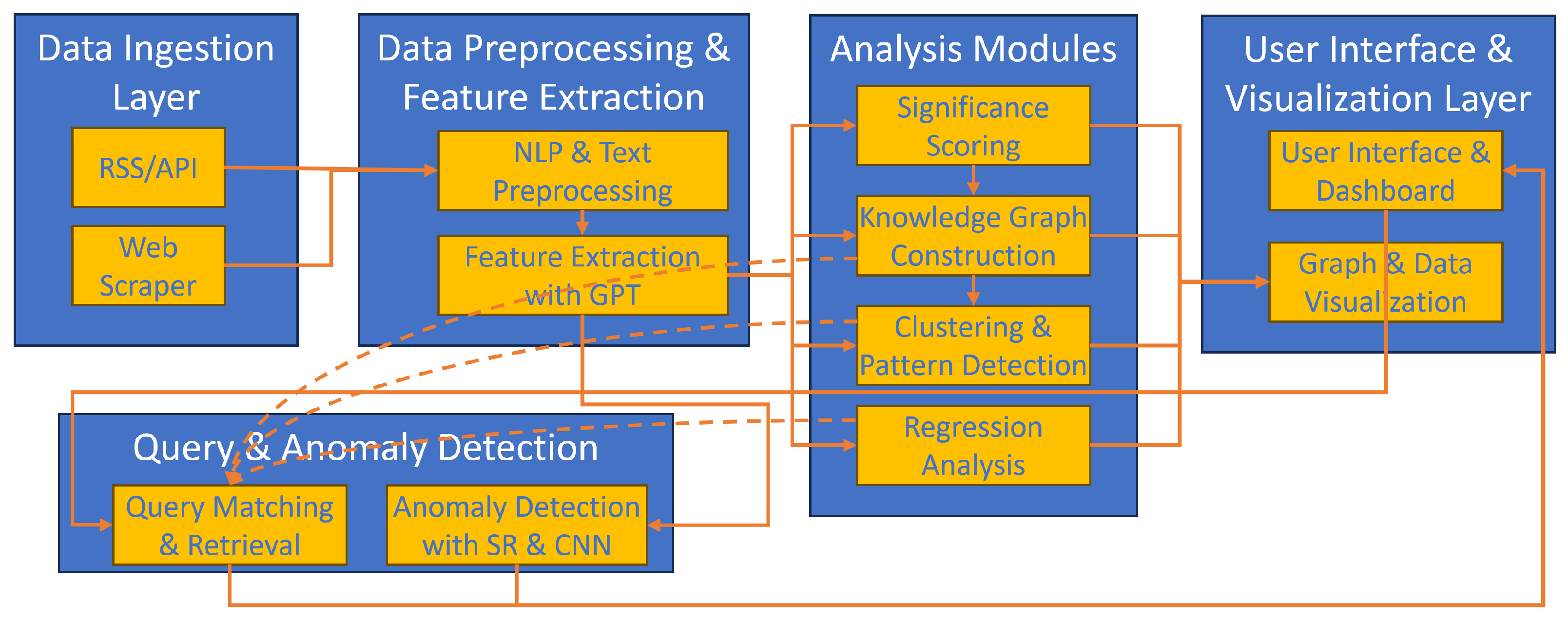

Section 2 details the system architecture, explaining each layer involved in the cyber analytics system.

Section 3 discusses the mathematical modeling techniques employed for cyber threat categorization and analysis.

Section 4 outlines our experimentation process and data collection methodologies.

Section 5 presents the results, including detailed analyses of knowledge graphs, regression, clustering, and anomaly detection.

In Section 6, the discussion synthesizes the findings and contextualizes them within the broader field of cyber threat intelligence.

Finally, Section 7 concludes the paper by summarizing the research contributions and suggesting directions for future research.

3. Mathematical Modeling

This section presents the mathematical foundations of our cyber analytics system, which processes, categorizes, and analyzes cyber-related news articles. Key components include classification, significance scoring, knowledge graph construction, clustering, query matching, regression analysis, and anomaly detection. The notation used throughout is summarized in Table 1.

3.1. Industry and Attack Type Classification

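Given each article $i$ with content $C_i$, we classify it by industry and attack type using probabilistic classification. Define the set of industry categories $\mathcal{I} = \{I_1, I_2, \dots, I_n\}$ and the set of attack types $\mathcal{A} = \{A_1, A_2, \dots, A_m\}$. For each $C_i$, classification maximizes the conditional probabilities:

$$
\hat{I}_i = \arg\max_{I_j \in \mathcal{I}} P(I_j \mid C_i), \qquad \hat{A}_i = \arg\max_{A_k \in \mathcal{A}} P(A_k \mid C_i).
$$

Using GPT-based embeddings, each probability $P(I_j \mid C_i)$ and $P(A_k \mid C_i)$ is computed within a high-dimensional feature space. This classification has a per-article complexity of $O((n + m)\,d)$, where $d$ is the dimensionality of the embedding space.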
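A minimal Python sketch of the two arg max rules follows. It assumes the per-label probabilities have already been obtained from the model as dictionaries; how GPT produces them is not shown, and the numbers are hypothetical.

```python
from typing import Dict, Tuple


def classify(industry_probs: Dict[str, float],
             attack_probs: Dict[str, float]) -> Tuple[str, str]:
    """Return the arg max industry I_j and the arg max attack type A_k for one article."""
    best_industry = max(industry_probs, key=industry_probs.get)
    best_attack = max(attack_probs, key=attack_probs.get)
    return best_industry, best_attack


# Hypothetical P(I_j | C_i) and P(A_k | C_i) for a single article (not real model outputs).
industry_probs = {
    "Financial Services": 0.71,
    "Information Technology and Telecommunications": 0.22,
    "Healthcare and Public Health": 0.07,
}
attack_probs = {"Malware": 0.15, "Zero-Day Exploits": 0.64, "Phishing": 0.21}

print(classify(industry_probs, attack_probs))
# ('Financial Services', 'Zero-Day Exploits')
```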

3.2. Significance Score Calculation

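To evaluate the importance of each event, we define a significance score $S_i$ based on the affected countries and their geopolitical strengths:

$$
S_i = \sum_{c \in \mathcal{C}_i} g_c,
$$

where $\mathcal{C}_i$ is the set of countries affected by event $i$ and $g_c$ is the geopolitical strength of country $c$. The score is then mapped to a discrete level $L_i$ based on thresholds $\tau_1 < \tau_2 < \dots < \tau_{H-1}$:

$$
L_i = k \quad \text{if } \tau_{k-1} \le S_i < \tau_k, \qquad k = 1, \dots, H,
$$

with the convention $\tau_0 = -\infty$ and $\tau_H = +\infty$.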
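The sketch below implements one reading of the score and level definitions in this subsection: $S_i$ as a sum of the geopolitical strengths of the distinct affected countries, and $L_i$ as the level $k$ with $\tau_{k-1} \le S_i < \tau_k$. The country weights in `GEO_STRENGTH`, the `default` weight for unlisted countries, and the thresholds are hypothetical placeholders, since the study's actual values are not given here.

```python
from bisect import bisect_right
from typing import Dict, Iterable, Sequence

# Hypothetical geopolitical-strength weights g_c (illustrative values only).
GEO_STRENGTH: Dict[str, float] = {
    "United States": 1.0,
    "China": 1.0,
    "Germany": 0.75,
    "Estonia": 0.25,
}


def significance_score(countries: Iterable[str], default: float = 0.25) -> float:
    """S_i: sum of g_c over the distinct affected countries (unlisted countries get `default`)."""
    return sum(GEO_STRENGTH.get(c, default) for c in set(countries))


def significance_level(score: float, thresholds: Sequence[float]) -> int:
    """Map S_i to L_i = k such that tau_{k-1} <= S_i < tau_k.

    `thresholds` holds tau_1 < ... < tau_{H-1} in ascending order, giving levels 1..H.
    """
    return bisect_right(thresholds, score) + 1


thresholds = [0.5, 1.0, 1.5, 2.0]  # hypothetical tau values -> five levels
s = significance_score(["United States", "Germany"])
print(s, significance_level(s, thresholds))  # 1.75 4
```

`bisect_right` counts how many thresholds are less than or equal to the score, so a score that lands exactly on a threshold moves up to the next level, matching the half-open intervals in the definition.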

3.3. Knowledge Graph Construction

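The knowledge graph represents relationships between attack types $A_k \in \mathcal{A}$ and industries $I_j \in \mathcal{I}$. The weight $w_{jk}$ of each edge is calculated from the aggregated significance levels:

$$
w_{jk} = \sum_{i \in \mathcal{D}_{jk}} L_i,
$$

where $\mathcal{D}_{jk}$ is the set of articles linking $I_j$ and $A_k$. Sparse matrix representations and regularization via a penalty term can be used to stabilize the $w_{jk}$ values.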
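Aggregating edge weights amounts to a grouped sum of significance levels. The sketch assumes each article has already been reduced to one (attack type, industry, level) triple per edge it supports; the triples are hypothetical, and the regularization step is omitted.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def edge_weights(links: Iterable[Tuple[str, str, int]]) -> Dict[Tuple[str, str], int]:
    """w_jk = sum of L_i over the articles i linking an attack type and an industry."""
    weights: Dict[Tuple[str, str], int] = defaultdict(int)
    for attack, industry, level in links:
        weights[(attack, industry)] += level
    return dict(weights)


# Hypothetical (attack type, industry, significance level L_i) triples.
links = [
    ("Malware", "Financial Services", 3),
    ("Malware", "Financial Services", 2),
    ("Phishing", "Healthcare and Public Health", 1),
]
print(edge_weights(links))
# {('Malware', 'Financial Services'): 5, ('Phishing', 'Healthcare and Public Health'): 1}
```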

3.4. Clustering Analysis

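Each article $i$ is represented by a feature vector $\mathbf{x}_i \in \mathbb{R}^d$. Clustering minimizes the within-cluster variance using K-means:

$$
J = \sum_{k=1}^{K} \sum_{i \in \mathcal{G}_k} \lVert \mathbf{x}_i - \boldsymbol{\mu}_k \rVert^2,
$$

where $\mathcal{G}_k$ is the set of articles assigned to cluster $k$ and $\boldsymbol{\mu}_k$ is the centroid of cluster $k$. The complexity is $O(NdKT)$, where $N$ is the number of articles, $d$ is the feature dimension, $K$ is the number of clusters, and $T$ is the number of iterations.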
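For illustration, a plain-Python version of Lloyd's algorithm, the standard heuristic for the K-means objective $J$, is sketched below. The paper's clustering was run in Power BI, so this is not that implementation; initializing from randomly sampled points and the toy feature vectors are assumptions.

```python
import random
from typing import List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance ||a - b||^2."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Sequence[float]], k: int, max_iter: int = 100,
           seed: int = 0) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's algorithm: each pass costs O(N d K); at most `max_iter` (T) passes."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # k sampled starting points
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: attach every x_i to its nearest centroid mu_k.
        new_labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        if new_labels == labels:  # assignments stable -> J can no longer decrease
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster empties
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


points = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]  # hypothetical 2-D features
labels, centroids = kmeans(points, k=2)
print(labels)
```

Cluster ids depend on the random initialization, but the two nearby pairs of points always end up in separate clusters for this toy input.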

3.5. Cosine Similarity for Query Matching

To match a user query $q$, cosine similarity is calculated between the query vector $\mathbf{q}$ and each article vector $\mathbf{x}_i$:

$$
\cos(\mathbf{q}, \mathbf{x}_i) = \frac{\mathbf{q} \cdot \mathbf{x}_i}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{x}_i \rVert}.
$$

Cosine similarity was chosen primarily for its efficiency and its suitability for relatively short texts, such as news titles and descriptions, which are typically under 800 words. The metric captures the angular similarity between text vectors, which suits our dataset because document length varies little, minimizing the impact of its sensitivity to document length. However, we acknowledge that cosine similarity may not fully capture deeper semantic meaning and is susceptible to problems arising from high-dimensional sparsity [22].
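The following sketch shows exhaustive (exact) cosine-similarity ranking, which is the baseline that an ANN index such as Annoy approximates for large collections. The article vectors are hypothetical low-dimensional stand-ins for real embeddings.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(q: Sequence[float], x: Sequence[float]) -> float:
    """cos(q, x) = (q . x) / (||q|| ||x||); returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(q, x))
    norm = math.sqrt(sum(a * a for a in q)) * math.sqrt(sum(b * b for b in x))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], articles: Dict[str, Sequence[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Score every article against the query and return the k most similar."""
    scored = [(aid, cosine(query, vec)) for aid, vec in articles.items()]
    return sorted(scored, key=lambda t: t[1], reverse=True)[:k]


articles = {"a1": [1.0, 0.0, 1.0], "a2": [0.0, 1.0, 0.0], "a3": [2.0, 0.0, 2.0]}
print(top_k([1.0, 0.0, 1.0], articles))
```

Because `a3` is `a1` scaled by two, both receive effectively the same score (up to floating-point rounding): cosine similarity ignores vector magnitude and compares only direction.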

For large datasets, Approximate Nearest Neighbor (ANN) search reduces the number of comparisons, keeping retrieval efficient even with high-dimensional vectors. To mitigate high-dimensional sparsity in our cyber threat assessment framework, we integrated the Annoy library, which is designed for memory-efficient, fast querying in high-dimensional spaces. Annoy uses a forest of trees to approximate the nearest neighbors, significantly reducing query time with minimal loss of accuracy. We tuned parameters such as the number of trees and the search-k parameter through empirical tests that optimized for both speed and memory usage. This approach allowed us to handle sparse data efficiently, improving the scalability and responsiveness of the system without resorting to exhaustive search [23].

FetchXML Query: In the context of our research, it is necessary to retrieve a precise subset of records from the dfs_newsanalytics entity of the news database. These records must be unprocessed and related to specific types of cyber events. To formalize this, we define Equation (7) as follows:

$$
R = \{\, x \in E \mid \mathbb{1}_{\text{unprocessed}}(x) \cdot \mathbb{1}_{\text{cyber}}(x) = 1 \,\}, \tag{7}
$$

where the following are denoted:

$E$ represents the entity dfs_newsanalytics, from which records are fetched.

$\mathbb{1}_{\text{unprocessed}}(x)$ is an indicator function that equals 1 if record $x$ has not been processed (i.e., its crd69_cyberprocessed flag is not set), and 0 otherwise.

$\mathbb{1}_{\text{cyber}}(x)$ is an indicator function that equals 1 if the event code of record $x$ falls within the critical cyber event categories {Nation State Hacking, Globally Disruptive Cyber Attack, Ransomware Attack News, Cybersecurity News}, and 0 otherwise.

This equation captures the filtering logic used to select records for analysis, ensuring that only pertinent, unprocessed data are considered in the subsequent stages of our cyber threat assessment framework. A FetchXML implementation of the model in Equation (7) is shown in Appendix A.
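The filtering logic of Equation (7) can be mirrored in Python as a predicate over records. This is a sketch, not the FetchXML query in Appendix A: records are plain dictionaries, the `event_code` field name is hypothetical, and treating a missing crd69_cyberprocessed value as unprocessed is an assumption.

```python
from typing import Dict, Iterable, List

# Critical cyber event categories named in the filter.
CRITICAL_EVENTS = {
    "Nation State Hacking",
    "Globally Disruptive Cyber Attack",
    "Ransomware Attack News",
    "Cybersecurity News",
}


def is_unprocessed(x: Dict) -> int:
    """Indicator 1_unprocessed(x): 1 if the crd69_cyberprocessed flag is not set."""
    return int(not x.get("crd69_cyberprocessed", False))


def is_cyber_event(x: Dict) -> int:
    """Indicator 1_cyber(x): 1 if the (hypothetical) 'event_code' field is a critical category."""
    return int(x.get("event_code") in CRITICAL_EVENTS)


def select_records(entity: Iterable[Dict]) -> List[Dict]:
    """R = {x in E : 1_unprocessed(x) * 1_cyber(x) = 1}."""
    return [x for x in entity if is_unprocessed(x) * is_cyber_event(x) == 1]


E = [
    {"id": 1, "crd69_cyberprocessed": False, "event_code": "Ransomware Attack News"},
    {"id": 2, "crd69_cyberprocessed": True, "event_code": "Nation State Hacking"},
    {"id": 3, "crd69_cyberprocessed": False, "event_code": "Sports"},
    {"id": 4, "event_code": "Cybersecurity News"},  # flag missing -> treated as unprocessed
]
print([x["id"] for x in select_records(E)])  # [1, 4]
```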

3.6. Regression Analysis for Significance Prediction

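Regression models quantify relationships between features (e.g., industry, country) and the significance level $L_i$.

Linear Regression: We can model the significance level $L_i$ as a linear function of factors such as the industry $I_i$, attack type $A_i$, and country $c_i$ of event $i$:

$$
L_i = \beta_0 + \beta_1 I_i + \beta_2 A_i + \beta_3 c_i + \epsilon_i,
$$

where $\beta_0, \dots, \beta_3$ are regression coefficients and $\epsilon_i$ is the error term.

Logistic Regression: To estimate the probability $p_i$ of a significant attack, we use logistic regression:

$$
p_i = \frac{1}{1 + \exp\!\big(-(\gamma_0 + \gamma_1 I_i + \gamma_2 A_i + \gamma_3 c_i)\big)},
$$

where $\gamma_0, \dots, \gamma_3$ are logistic regression coefficients.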

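Time-Series Regression for Trend Analysis: To analyze temporal trends, we apply a time-series regression model to the significance scores $S_t$ aggregated at time step $t$:

$$
S_t = \alpha_0 + \alpha_1 t + \alpha_2 \sin\!\left(\frac{2\pi t}{T}\right) + \alpha_3 \cos\!\left(\frac{2\pi t}{T}\right) + \epsilon_t,
$$

where $T$ is the period, $\alpha_0, \dots, \alpha_3$ are parameters, and $\epsilon_t$ is the error term.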
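As an illustration of the logistic model, the sketch below evaluates the probability of a significant attack for one event whose categorical attributes are dummy-coded (one 0/1 indicator per category). The coefficient values are hypothetical and are not the ones estimated in Power BI in Section 5.2.

```python
import math
from typing import Dict


def logistic_probability(event: Dict[str, int], coef: Dict[str, float],
                         intercept: float) -> float:
    """p = 1 / (1 + exp(-(gamma_0 + sum_j gamma_j * x_j))) over dummy-coded features x_j."""
    z = intercept + sum(coef.get(name, 0.0) * value for name, value in event.items())
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients gamma_j for one-hot (dummy) encoded categories.
coef = {
    "industry=Critical Infrastructure": 1.2,
    "attack=Advanced Persistent Threats (APTs)": 0.8,
    "country=Iran": 0.5,
}
event = {
    "industry=Critical Infrastructure": 1,
    "attack=Advanced Persistent Threats (APTs)": 1,
    "country=Iran": 0,
}
print(logistic_probability(event, coef, intercept=-2.0))  # 0.5
```

Dummy coding is what allows categorical variables such as industry or country to enter a linear predictor; each coefficient then shifts the log-odds for events in that category.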

3.7. Anomaly Detection Using SR + CNN

Anomaly detection in time-series data uses SR and CNN to detect spikes or dips in cyber incident frequencies.

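Spectral Residual Transformation: Given $x(t)$, $t = 0, \dots, M-1$, as the incident frequency over time, the Discrete Fourier Transform (DFT) is applied:

$$
F(f) = \sum_{t=0}^{M-1} x(t)\, e^{-i 2\pi f t / M}, \qquad A(f) = |F(f)|, \qquad P(f) = \arg F(f).
$$

The log-transformed amplitude and the spectral residual are calculated as

$$
\Lambda(f) = \log A(f), \qquad R(f) = \Lambda(f) - h_q(f) * \Lambda(f),
$$

where $h_q(f)$ is a $q$-point averaging filter and $*$ denotes convolution. The reconstructed spectrum $\tilde{F}(f)$ combines $R(f)$ with the original phase:

$$
\tilde{F}(f) = \exp\big(R(f) + i P(f)\big).
$$

Applying the inverse DFT generates the saliency map $\mathcal{S}(t)$:

$$
\mathcal{S}(t) = \big| \mathcal{F}^{-1}\{\tilde{F}\}(t) \big|.
$$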
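The SR pipeline can be sketched in pure Python as follows. To stay self-contained it uses a naive quadratic-time DFT where a production system would use an FFT, and the filter width `q`, the `eps` guard against $\log 0$, and the truncated edge handling of the averaging filter are assumptions; the incident counts are hypothetical. This is not the Power BI implementation used in the study.

```python
import cmath
import math
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(M^2) discrete Fourier transform F(f)."""
    m = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * f * t / m) for t in range(m))
            for f in range(m)]


def idft(spec: Sequence[complex]) -> List[complex]:
    """Naive inverse DFT."""
    m = len(spec)
    return [sum(spec[f] * cmath.exp(2j * math.pi * f * t / m) for f in range(m)) / m
            for t in range(m)]


def moving_average(v: Sequence[float], q: int) -> List[float]:
    """h_q * v: centred mean over q points (q odd), truncated at the edges."""
    half = q // 2
    out = []
    for i in range(len(v)):
        window = v[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def saliency_map(x: Sequence[float], q: int = 3, eps: float = 1e-8) -> List[float]:
    spec = dft(x)
    log_amp = [math.log(abs(c) + eps) for c in spec]   # Lambda(f) = log A(f)
    phase = [cmath.phase(c) for c in spec]              # P(f)
    residual = [a - b for a, b in zip(log_amp, moving_average(log_amp, q))]  # R(f)
    recon = [cmath.exp(r + 1j * p) for r, p in zip(residual, phase)]         # F~(f)
    return [abs(v) for v in idft(recon)]                # S(t) = |F^{-1}{F~}(t)|


counts = [5.0] * 32
counts[20] = 40.0  # hypothetical spike in daily incident counts
sal = saliency_map(counts)
print(max(range(len(sal)), key=sal.__getitem__))  # 20
```

The saliency map peaks at the injected spike (index 20), which is the kind of point the CNN stage then classifies.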

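CNN-Based Anomaly Classification: A CNN classifies segments of $\mathcal{S}$ as normal or anomalous, using overlapping windows $W_j$ of length $w$:

$$
W_j = \big[\mathcal{S}(t_j), \mathcal{S}(t_j + 1), \dots, \mathcal{S}(t_j + w - 1)\big].
$$

The CNN produces an anomaly score $a_j = \mathrm{CNN}(W_j) \in [0, 1]$, with threshold $\theta$:

$$
\text{label}(W_j) = \begin{cases} \text{anomalous}, & a_j > \theta, \\ \text{normal}, & \text{otherwise}. \end{cases}
$$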

3.8. Unified Loss Function for Optimization

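To optimize the system, a combined loss function $\mathcal{L}$ incorporates feature extraction, relationship modeling, and anomaly detection:

$$
\mathcal{L} = \lambda_1 \mathcal{L}_{\text{feat}} + \lambda_2 \mathcal{L}_{\text{rel}} + \lambda_3 \mathcal{L}_{\text{anom}},
$$

where $\mathcal{L}_{\text{feat}}$, $\mathcal{L}_{\text{rel}}$, and $\mathcal{L}_{\text{anom}}$ are the losses of the feature extraction, relationship modeling, and anomaly detection components, and $\lambda_1, \lambda_2, \lambda_3 \ge 0$ are their weights. The weights are optimized via cross-validation, ensuring balanced performance across tasks.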

4. Experimentation

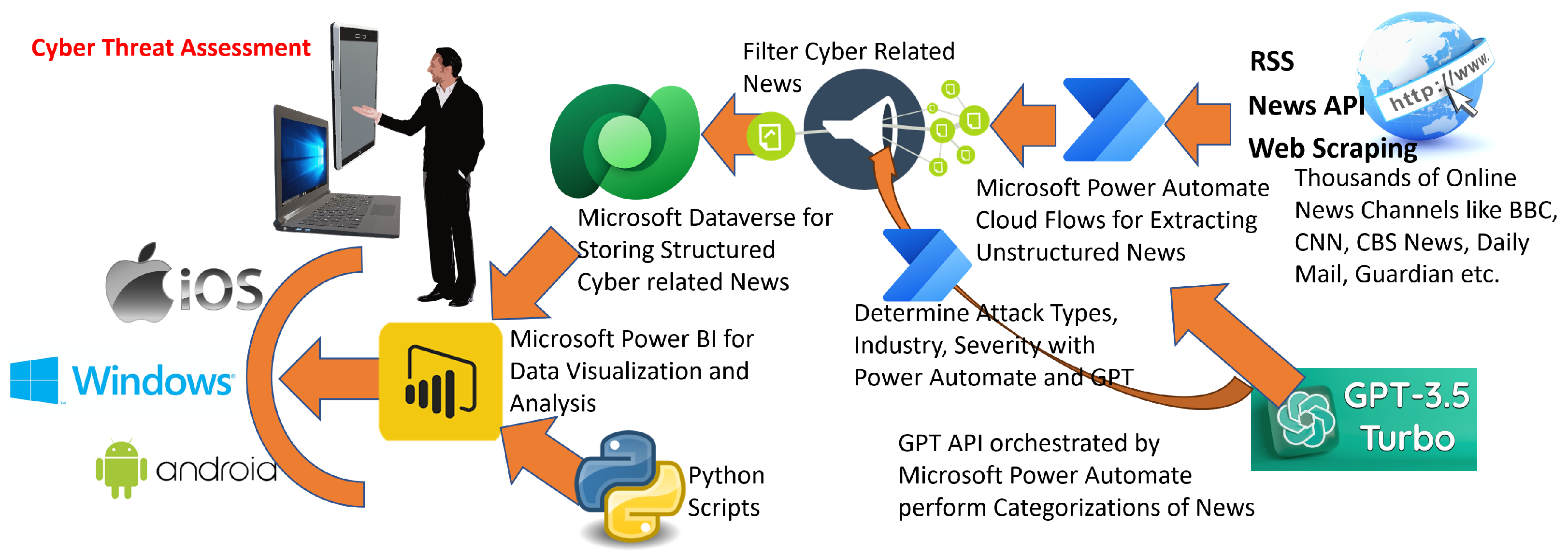

To assess the efficacy of the proposed GPT-based cyber analytics framework, an extensive monitoring initiative was conducted across 44 prominent news portals, including BBC, CNN, Dark Reading, The Hacker News, and Security Weekly, from 25 September 2023 to 25 November 2024. These news sources were added to the monitoring list incrementally, using Web Scraping, Application Programming Interface (API), and Really Simple Syndication (RSS) methods for efficient and comprehensive news data extraction. As depicted in Figure 3, Microsoft Power Automate was used throughout the data acquisition phase of approximately 14 months, functioning primarily as an orchestrator that managed the retrieval of news articles and their processing through API calls to OpenAI’s GPT-3.5 Turbo model. Although the term “GPT model” can refer broadly to the class of Generative Pretrained Transformer architectures, including open-source variants such as GPT-J and GPT-NeoX, all references to “GPT model” in this paper denote GPT-3.5 Turbo (OpenAI).

Because news articles are inherently unstructured, conventional analytical techniques such as knowledge graph construction, regression analysis, and anomaly detection are typically not directly applicable to them. To address this limitation, the GPT-3.5 Turbo model was employed to transform these unstructured articles into structured data, which then enabled the knowledge graph, regression, and anomaly detection analyses. While prior research has applied analytical models such as anomaly detection and regression primarily to structured cybersecurity datasets [18,20], this research extends these analytical capabilities to unstructured news articles through the proposed GPT-based analytic framework.

After conversion into structured datasets, the news articles pertinent to cybersecurity, hacking, or information warfare were filtered to produce a dedicated cybersecurity dataset. This dataset, developed specifically for this research, is publicly available at https://github.com/DrSufi/CyberNews (accessed on 15 March 2025). The compiled dataset was then analyzed in line with the objectives of this study. As illustrated in Figure 3, the dataset was securely stored in Microsoft Dataverse, and FetchXML queries (such as the one shown in Appendix A) were used to retrieve data from its tables. Microsoft Power BI was then used for data analysis and visualization, with the analytical outcomes derived by executing Python scripts embedded within the Power BI environment.

Finally, leveraging the versatile capabilities of Microsoft Power BI for data visualization and analysis ensured that the resultant dashboards and analytical reports could be conveniently disseminated to diverse decision-making stakeholders concerned with cybersecurity issues. This accessibility extended across various operating environments (including Windows, iOS, and Android) and multiple device form factors, such as desktops, tablets, and mobile devices.

In regard to data protection, news records were sanitized before transmission to the data pipeline to remove IP addresses, user credentials, identifiable organization-specific information, and any confidential metadata. Only news headlines and descriptions were passed to the GPT-3.5 Turbo model for classification. On the OpenAI side, data privacy was covered by OpenAI’s data privacy agreement, available at https://openai.com/enterprise-privacy/ (accessed on 18 April 2025). After classification and categorization, the outputs were stored in a compliance-hardened Microsoft Dataverse environment with controlled user access, role-based permissions, and activity logging. Together, these measures help ensure the ethical and secure use of external generative models and mitigate data exposure risks during semantic classification.

5. Results

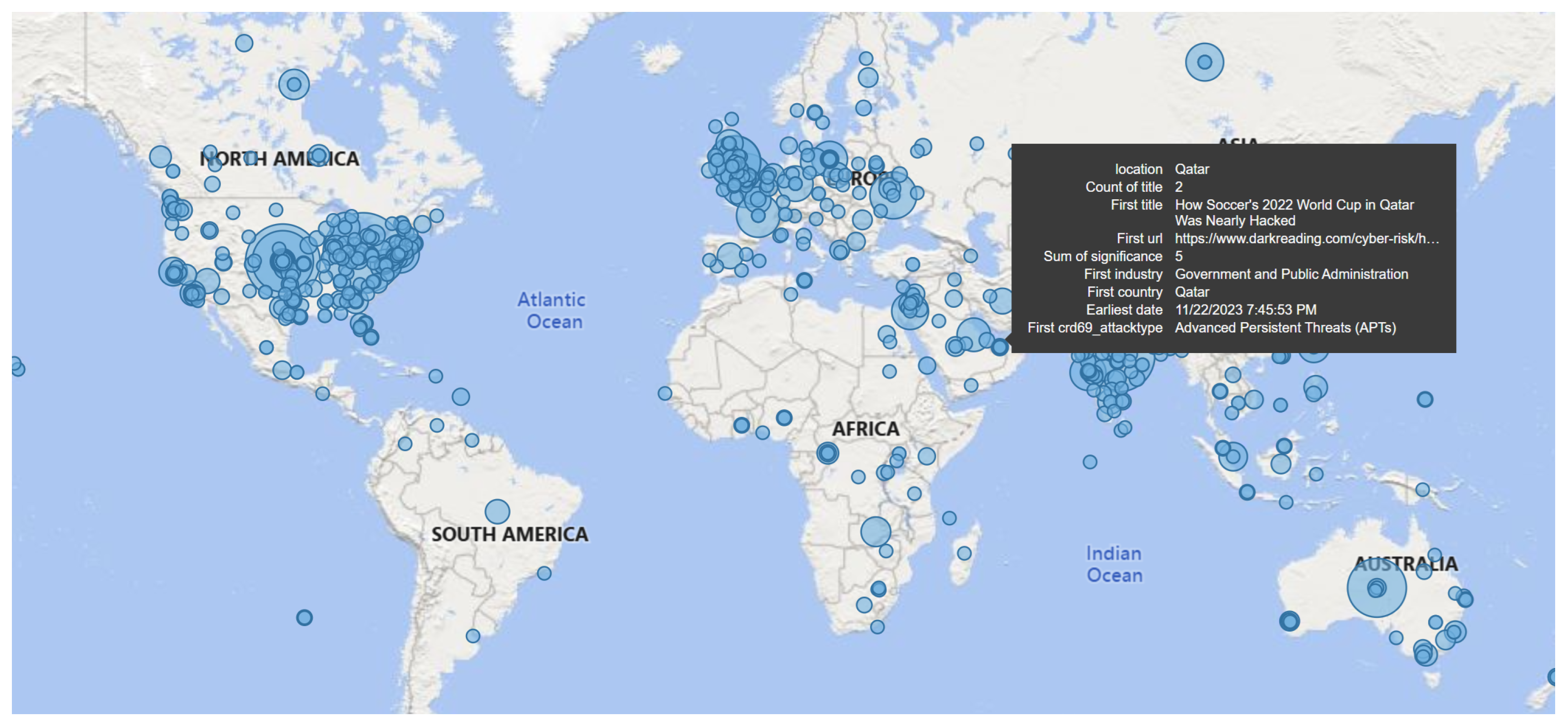

GPT APIs classified and categorized the news reports into four main categories, as shown in Table 2. Furthermore, GPT APIs classified each of these cyber events into 15 affected industries (i.e., ‘Critical Infrastructure’, ‘Healthcare and Public Health’, ‘Financial Services’, ‘Government and Public Administration’, ‘Information Technology and Telecommunications’, ‘Manufacturing and Industrial’, ‘Retail and E-commerce’, ‘Education and Research’, ‘Media and Entertainment’, ‘Hospitality and Tourism’, ‘Energy and Utilities’, ‘Transportation and Logistics’, ‘Agriculture and Food Services’, ‘Real Estate and Construction’, ‘Professional Services’) and 15 attack types (i.e., ‘Malware’, ‘Phishing’, ‘Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) Attacks’, ‘Man-in-the-Middle (MitM) Attacks’, ‘SQL Injection (SQLi)’, ‘Cross-Site Scripting (XSS)’, ‘Password Attacks’, ‘Insider Threats’, ‘Advanced Persistent Threats (APTs)’, ‘Zero-Day Exploits’, ‘Social Engineering Attacks’, ‘Business Email Compromise (BEC)’, ‘Supply Chain Attacks’, ‘Drive-By Downloads’, ‘Cryptojacking’). GPT also extracted the locations of these events, as portrayed in Figure 4. These 9018 cyber-related events were then used to evaluate the knowledge graphs, regression-based correlation, clustering-based pattern matching, and CNN-based anomaly detection.

5.1. Comprehensive Insights with Knowledge Graph

Knowledge graphs in the cyber domain have been shown to be highly effective in elucidating complex relationships for scientists, analysts, strategists, and policymakers [17]. However, current research has yet to explore the direct generation of knowledge graphs from cyber-related news reports.

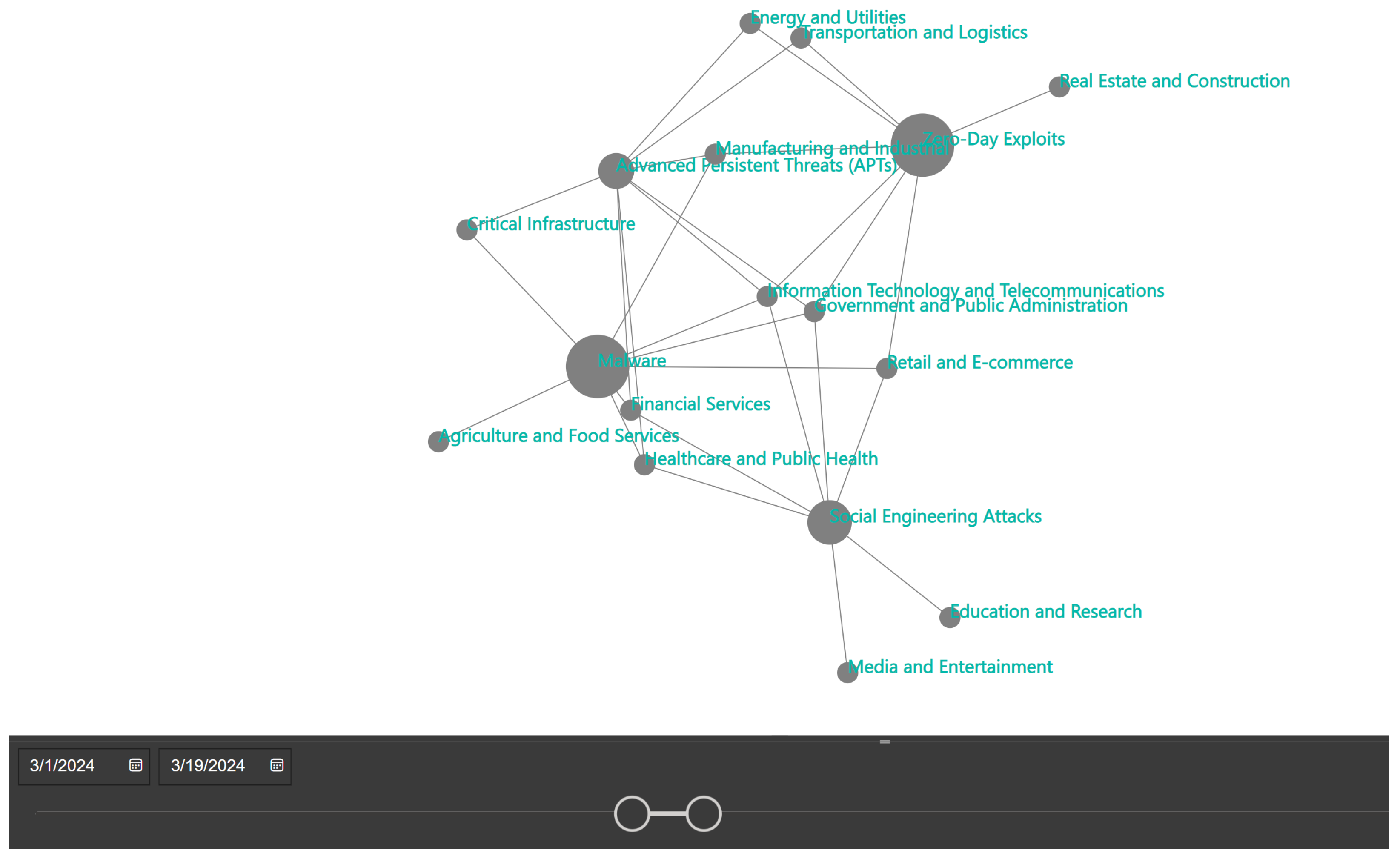

Figure 5 and Figure 6 illustrate nuanced relationships between various attack types and the impacted industries within a specific timeframe, from 1 March 2024 to 19 March 2024.

During this period, the “Information Technology and Telecommunications” industry experienced the highest incidence of cyber-related events, followed by “Government and Public Administration”, “Financial Services”, and “Healthcare and Public Health” (Figure 5). Concurrently, attack types such as “Zero-Day Exploits”, “Malware”, “Social Engineering Attacks”, “Advanced Persistent Threats (APTs)”, “Supply Chain Attacks”, and “Ransomware” emerged as the most prevalent (Figure 6). These visualizations underscore the role of knowledge graphs in capturing intricate patterns and trends in the cyber threat landscape within any given timeframe.

To ensure robustness in knowledge graph construction, particularly when aggregated significance levels exhibit high variability or sparsity, the proposed system implements several methodological safeguards. First, normalization techniques such as min–max scaling or z-score normalization are applied to stabilize the variability of significance scores across diverse cyber events, maintaining consistency in edge weighting. Second, thresholding mechanisms mitigate the impact of sparse connections by retaining only relationships that exceed statistically validated significance thresholds. Third, frequency-based filtering further enhances robustness: nodes or edges that consistently show sparse or erratic significance scores across the dataset are systematically evaluated or excluded. Collectively, these strategies support the structural stability, reliability, and interpretability of the resulting knowledge graph.
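Min–max scaling followed by thresholding can be sketched as below. The fixed `threshold` is a hypothetical stand-in for the statistically validated thresholds described above, and the edge weights are illustrative.

```python
from typing import Dict, Tuple

Edge = Tuple[str, str]


def normalise_and_prune(weights: Dict[Edge, float], threshold: float) -> Dict[Edge, float]:
    """Min-max scale edge weights to [0, 1], then keep edges whose scaled weight >= threshold."""
    lo, hi = min(weights.values()), max(weights.values())
    span = hi - lo
    # If all weights are equal, every edge is treated as maximal (scaled weight 1.0).
    scaled = {e: ((w - lo) / span if span else 1.0) for e, w in weights.items()}
    return {e: s for e, s in scaled.items() if s >= threshold}


# Hypothetical aggregated weights w_jk keyed by (attack type, industry).
weights = {
    ("Malware", "Financial Services"): 12.0,
    ("Phishing", "Healthcare and Public Health"): 2.0,
    ("Zero-Day Exploits", "Government and Public Administration"): 7.0,
}
print(normalise_and_prune(weights, threshold=0.4))
```

Here the phishing edge scales to 0.0 and is pruned, while the other two edges survive with scaled weights 1.0 and 0.5.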

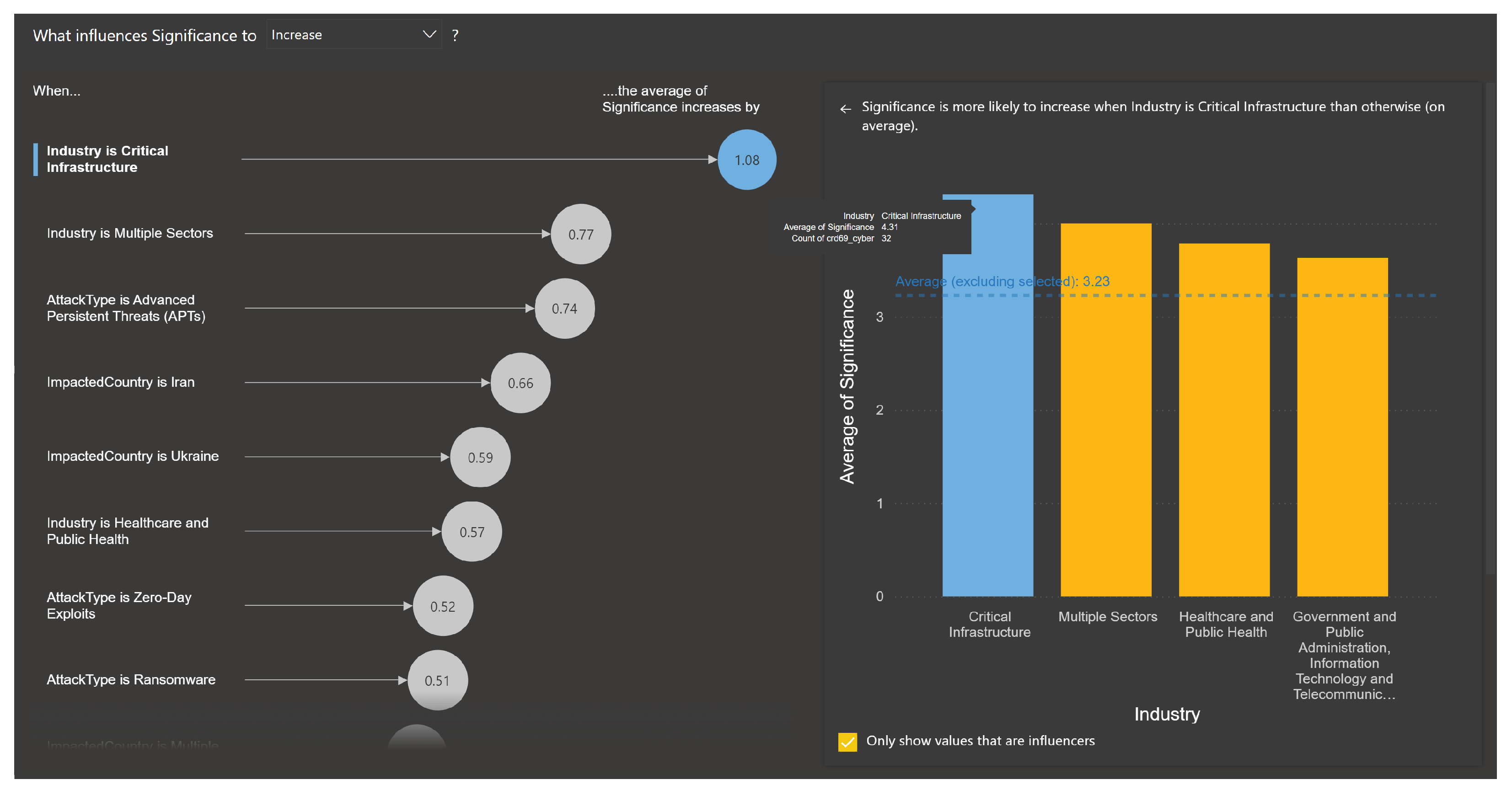

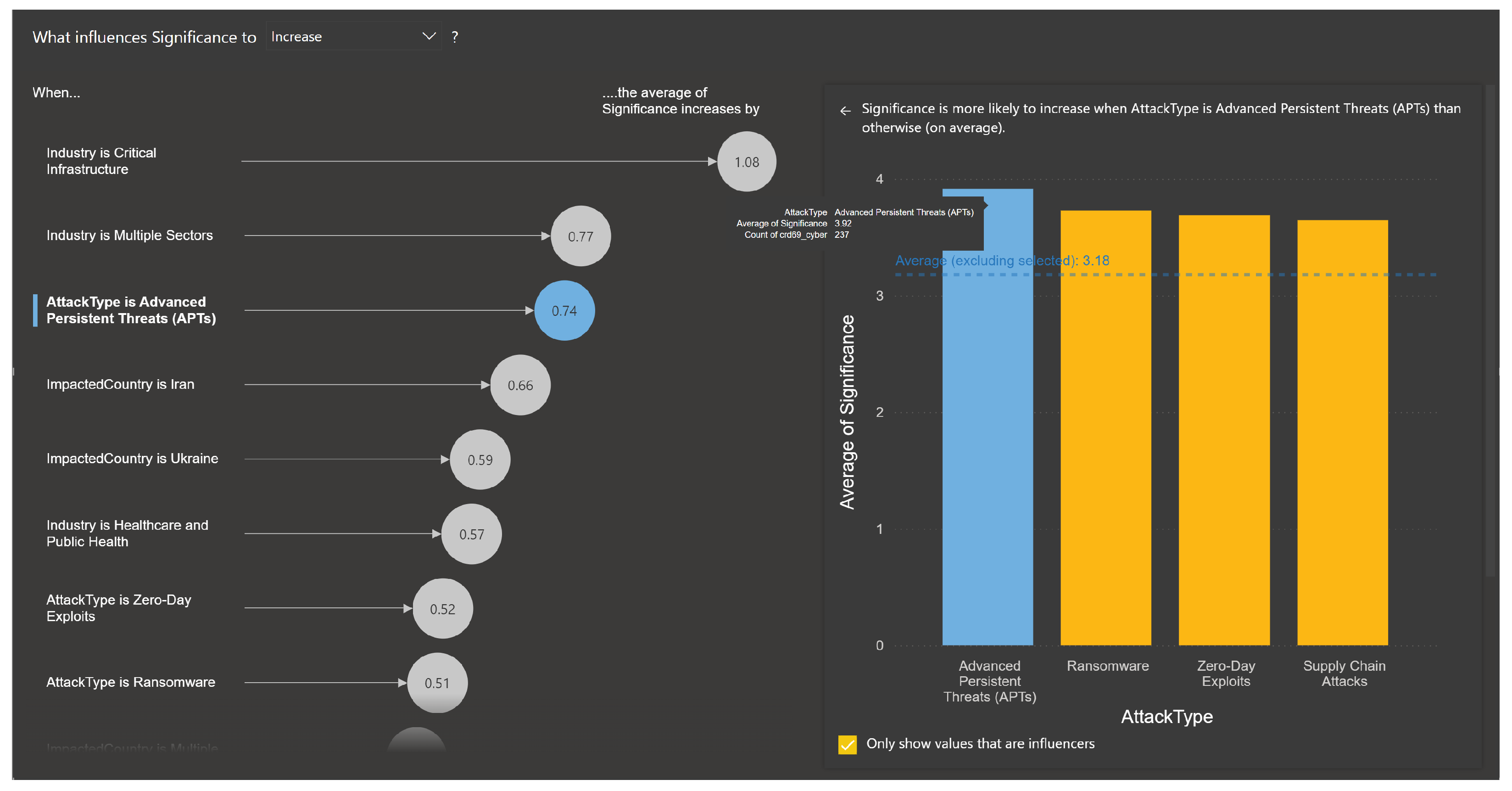

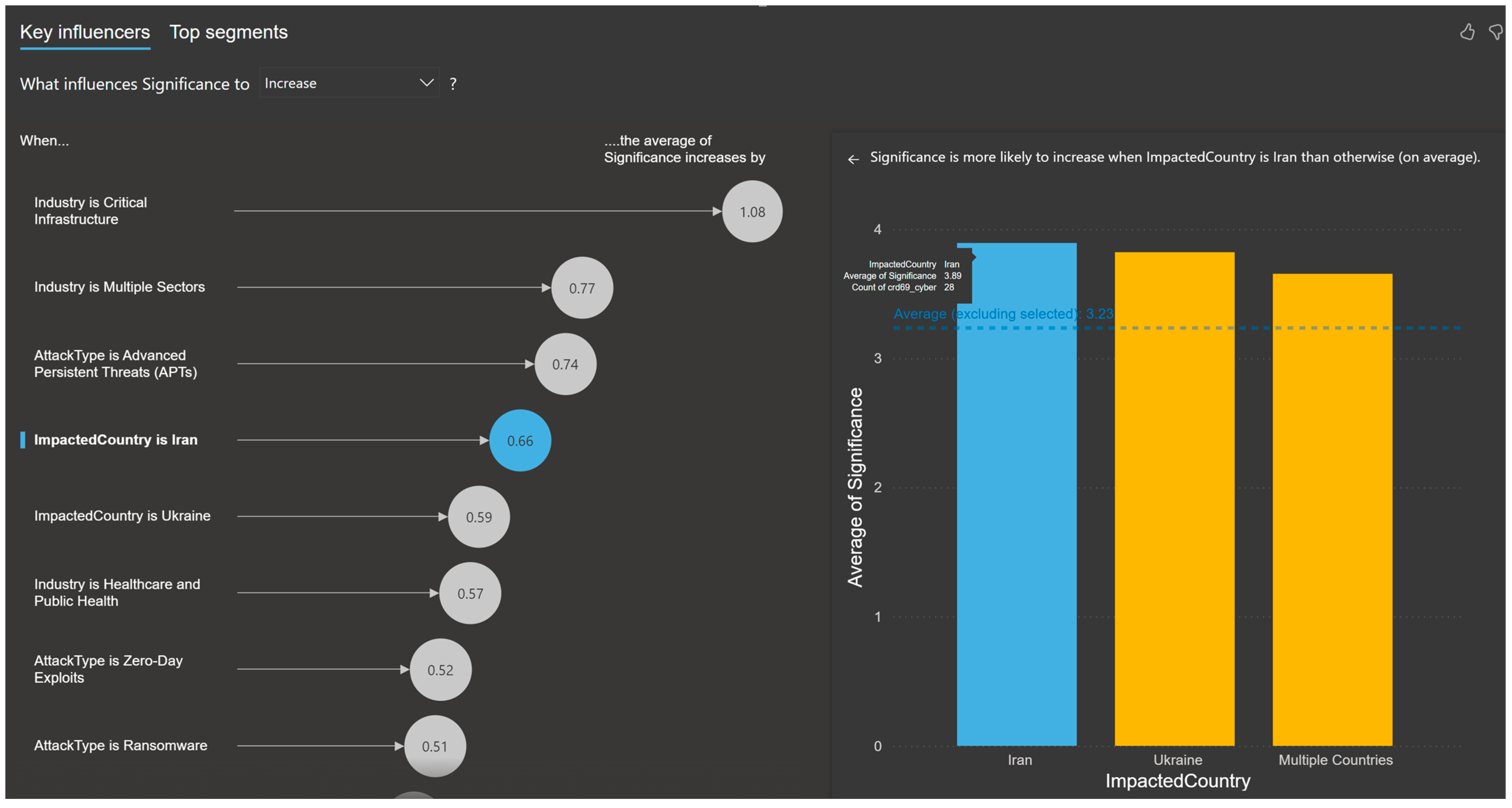

5.2. Regression Analysis to Identify Key Influencers

The regression analysis module identifies the factors influencing the significance (S) of events by quantifying the relationship between event attributes (industries in Figure 7, attack types in Figure 8, and impacted countries in Figure 9) and their average significance score (S). Logistic regression was applied to all categorical variables within the Microsoft Power BI environment to determine the key factors driving significance, as shown in Figure 7, Figure 8 and Figure 9. Given the diverse set of explanatory variables (e.g., industry categories, attack types, impacted countries), potential multicollinearity may affect the robustness and interpretability of our regression model. To mitigate this risk, the Variance Inflation Factor (VIF) was computed to detect multicollinearity among predictors. Variables with VIF values greater than five were considered highly collinear, prompting either feature selection or regularization techniques such as ridge regression. These measures supported reliable inference of each factor’s impact on event significance.
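In general, $\mathrm{VIF}_j = 1/(1 - R_j^2)$, where $R_j^2$ is obtained by regressing predictor $j$ on all other predictors. The sketch below covers only the two-predictor case, in which $R_j^2$ equals the squared Pearson correlation; the dummy-coded data are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def vif_two_predictors(a: Sequence[float], b: Sequence[float]) -> float:
    """With exactly two predictors, R^2 of one regressed on the other is r^2,
    so VIF = 1 / (1 - r^2), identical for both predictors."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r * r)


# Hypothetical 0/1 dummies for ten events, e.g. "industry = Government" and "country = US".
x1 = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
x2 = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
vif = vif_two_predictors(x1, x2)
print(round(vif, 3), "collinear" if vif > 5 else "acceptable")  # 3.0 acceptable
```

A VIF of about 3 falls below the threshold of five used here; perfectly correlated predictors ($r = \pm 1$) would make the VIF infinite, which shows up as a division by zero in this sketch.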

Through this process, we examined how changes in attributes (independent variables) influence S (the dependent variable). The regression model generated insights by measuring the average increase in significance associated with specific factors. The relationships are presented in a structured manner using the notation system shown in Table 3.

The key relationships derived from the regression analysis are presented in two tables (Table 4 and Table 5): one for the descriptive factors and another for the corresponding equations, which capture the factors that increase S.

The regression analysis highlights the significant influence of specific factors on event significance. Industries such as critical infrastructure and attack types such as Advanced Persistent Threats () exhibit the highest increases in S. Similarly, geopolitical contexts, as seen in events affecting countries like Iran (), also show strong impacts.

These insights provide actionable knowledge for prioritizing response strategies and resource allocation, focusing efforts on high-impact factors. Regression techniques have been used in previous cyber-related studies [18]; however, according to the existing literature, this study represents the first documented application of regression analysis to cyber data aggregated from open-source news media.

5.3. Clustering Intricate Patterns on Cyber Events

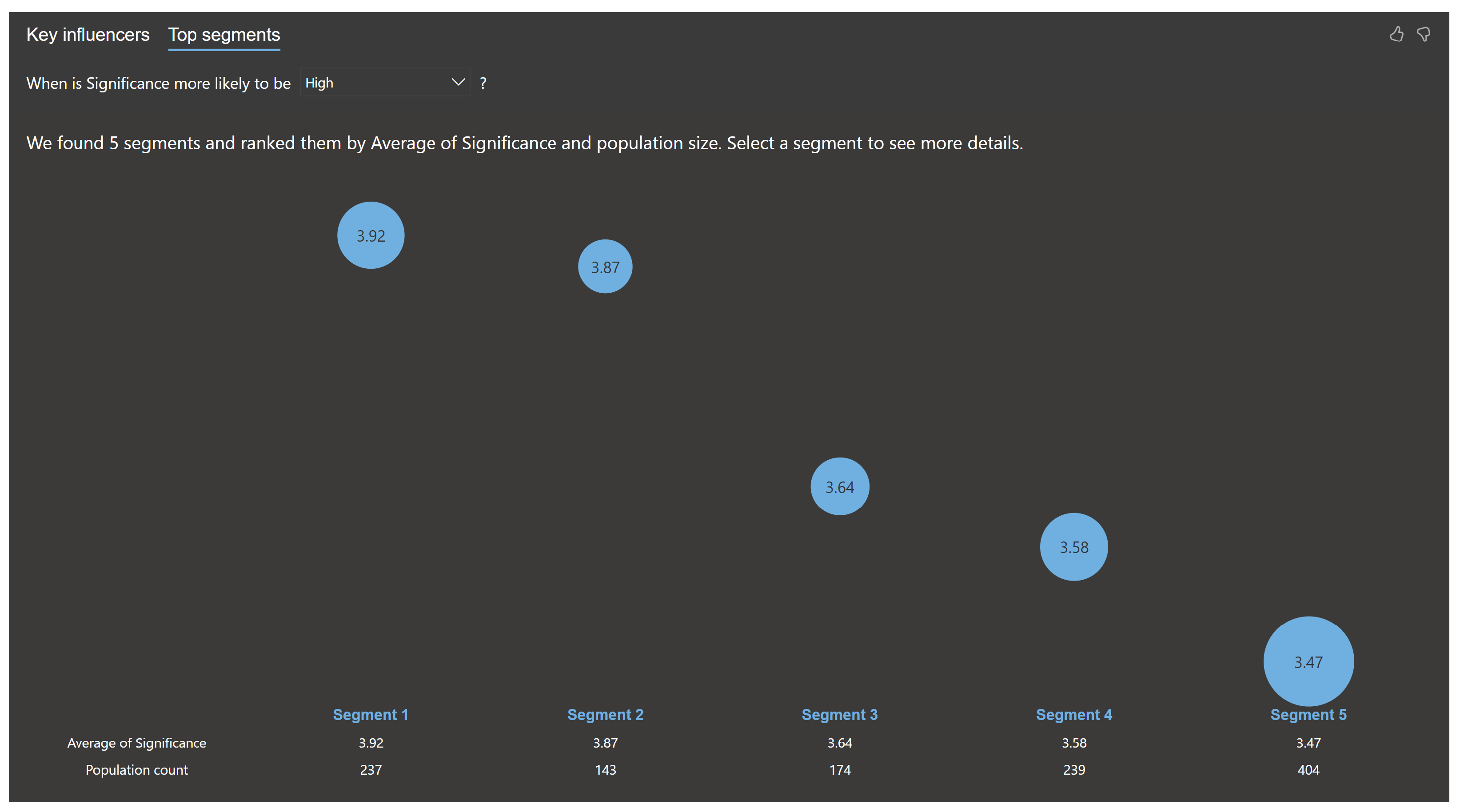

Cluster analysis was conducted to identify the top segments based on factors influencing the significance (S) of events. Using Power BI’s visualization of top segments (Figure 10), five distinct clusters were identified, each representing a group of factors contributing to high significance scores. The results are summarized below.

Table 6 highlights the segmentation of high-significance events based on attack types, impacted countries, and industries. Segment 1 emphasizes the criticality of Advanced Persistent Threats (APTs), while segment 2 showcases the compound impact of Zero-Day Exploits and multiple impacted countries.

To provide a structured, quantitative representation, the relationships in each cluster are expressed mathematically in Table 7, which defines the conditions under which the average significance (S) of each cluster is observed.

This K-means clustering analysis segments high-significance events by attack type, impacted country, and industry. The optimal number of clusters (K) was determined using Silhouette analysis: the average Silhouette width was calculated for K ranging from 2 to 10, and the K with the highest average Silhouette score was selected, favoring high cohesion within clusters and clear separation between them. This systematic choice of K improved the interpretability and robustness of our clustering analysis, although slight variations in K could moderately influence the cluster characteristics.
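The selection rule can be sketched with a small silhouette implementation. In the study, the candidate labelings would come from K-means runs for K = 2 to 10; here the points and the two candidate labelings are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Point = Sequence[float]


def silhouette(points: Sequence[Point], labels: Sequence[int]) -> float:
    """Mean silhouette width s(i) = (b - a) / max(a, b); requires at least two clusters."""
    clusters = set(labels)
    scores = []
    for i, p in enumerate(points):
        same = [math.dist(p, q) for j, q in enumerate(points)
                if labels[j] == labels[i] and j != i]
        if not same:  # singleton cluster: s(i) = 0 by convention
            scores.append(0.0)
            continue
        a = sum(same) / len(same)  # mean distance to the point's own cluster
        b = min(                   # mean distance to the nearest other cluster
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == c)
            / sum(1 for lab in labels if lab == c)
            for c in clusters if c != labels[i]
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


points = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
# Hypothetical candidate labelings, e.g. from K-means runs with K = 2 and K = 3.
candidates: Dict[int, List[int]] = {2: [0, 0, 1, 1], 3: [0, 1, 2, 2]}
best_k = max(candidates, key=lambda k: silhouette(points, candidates[k]))
print(best_k)  # 2
```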

In segment 1, events involving Advanced Persistent Threats (APTs) exhibit the highest significance, with an average , surpassing the overall average by 0.67 units. In segment 2, events with Zero-Day Exploits and multiple countries impacted demonstrate high significance (), 0.63 units above the average. In segment 3, events where attack types are specified (not None), the United States is impacted, and the industry is neither IT nor Government result in , 0.40 units above the average. In segment 4, events with Zero-Day Exploits but not involving multiple impacted countries score , 0.34 units higher than the average. In segment 5, events excluding specific attack types (e.g., Social Engineering, Zero-Day Exploits, or APTs), countries (e.g., United States), and industries (e.g., IT or Government) have , 0.22 units above the average.
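Each "units above the average" figure is a difference of means: the average significance inside a segment minus the overall average. A minimal sketch, with hypothetical events and significance values that are not the study's data:

```python
from statistics import mean
from typing import Dict, List


def segment_uplift(events: List[Dict], in_segment) -> float:
    """Average significance S inside a segment minus the overall average S."""
    overall = mean(e["S"] for e in events)
    inside = [e["S"] for e in events if in_segment(e)]
    return mean(inside) - overall


# Hypothetical events; not the study's data.
events = [
    {"attack": "Advanced Persistent Threats (APTs)", "S": 3.0},
    {"attack": "Advanced Persistent Threats (APTs)", "S": 3.0},
    {"attack": "Phishing", "S": 1.0},
    {"attack": "Malware", "S": 1.0},
    {"attack": "Malware", "S": 2.0},
]


def is_apt(e: Dict) -> bool:
    return e["attack"] == "Advanced Persistent Threats (APTs)"


print(segment_uplift(events, is_apt))  # 1.0
```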

Insights from clustering can guide the prioritization of resources and risk mitigation strategies in cyber incident management; accordingly, clustering has been used by other researchers in the cyber domain [16]. However, as identified in the existing body of literature, this study is the first to report the application of clustering analysis to cyber data synthesized from open-source news media.

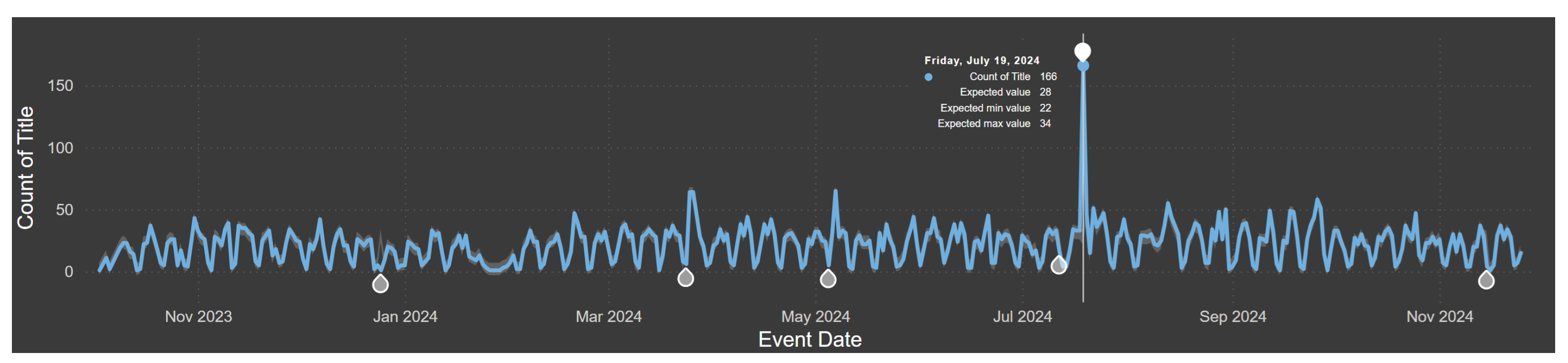

5.4. Anomaly Detection for Identifying Abnormal Patterns in Cyber Events

An anomaly detection algorithm was applied to the cyber-related event data with a sensitivity setting of 75%. The analysis identified significant deviations from the expected event counts over the observation period, as shown in Figure 11. Notably, a pronounced spike was detected on 19 July 2024, when the observed count reached 166, far exceeding the expected range of 22 to 34. Such anomalies provide critical insights into unusual activity patterns, potentially indicating heightened cyber threats or significant events within that timeframe. This result highlights the effectiveness of anomaly detection in isolating periods of abnormal activity, which can guide further investigation into underlying causes and facilitate timely responses. As depicted in Figure 11, the SR + CNN algorithm [19] identified six anomalies during the selected timeframe. Although anomaly detection algorithms have been used to derive insights from cyber data [1,2], this study marks the first instance in the literature in which anomaly detection has been applied to cyber data aggregated from open-source news media.

In this anomaly detection approach, overlapping windows over the saliency map ensure that transient anomalies are not missed. A sliding window of length $w$ with 50% overlap was employed, balancing detection sensitivity against computational cost. The CNN classification step has complexity $O(N w f)$, where $N$ is the number of window segments, $w$ is the window length, and $f$ is the CNN’s feature dimension. Larger overlaps provide finer temporal resolution but increase $N$ and hence the computation; the 50% overlap was therefore chosen as a practical trade-off between accuracy and computational overhead.
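The windowing scheme can be sketched as follows. The `score` argument is a stand-in for the trained CNN, which is not reproduced here (the example simply uses `max`); the window length, threshold, and saliency values are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def overlapping_windows(series: Sequence[float], w: int,
                        overlap: float = 0.5) -> List[Tuple[int, List[float]]]:
    """Windows of length w whose start indices are w * (1 - overlap) apart."""
    step = max(1, int(w * (1 - overlap)))
    return [(start, list(series[start:start + w]))
            for start in range(0, len(series) - w + 1, step)]


def flag_windows(series: Sequence[float], w: int,
                 score: Callable[[List[float]], float], theta: float) -> List[int]:
    """Start indices of windows W_j whose anomaly score a_j exceeds theta."""
    return [start for start, win in overlapping_windows(series, w)
            if score(win) > theta]


saliency = [0.1] * 12
saliency[7] = 1.0  # hypothetical salient point
# `max` is a stand-in for the trained CNN scorer.
print(flag_windows(saliency, w=4, score=max, theta=0.5))  # [4, 6]
```

With $w = 4$ and 50% overlap the stride is 2, so the salient point at index 7 is covered by two windows, and the number of windows $N$ (and hence the $O(Nwf)$ cost) roughly doubles relative to non-overlapping windows.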

6. Discussion

This section evaluates the system’s ability to categorize and identify cyber-related events using a novel dataset created specifically for this study, consisting of 9018 cyber events. To establish a robust ground truth, 2000 records were selected for detailed evaluation to test the system’s effectiveness across varied cyber threat scenarios. This subset approach allows for a concentrated analysis while maintaining the integrity of ongoing and future validations.

To assess the efficacy of the proposed system in categorizing and identifying cyber-related events, the performance metrics (precision, recall, and F1-score) were evaluated across four primary categories: Cybersecurity News, Nation State Hacking, Ransomware Attack News, and Globally Disruptive Cyber Attacks. The evaluation results are summarized in Table 8.

Precision, recall, and F1-score were computed using the following equations:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}.
$$

Here, TP denotes the true positives, TN the true negatives, FP the false positives, and FN the false negatives. These metrics evaluate the system’s ability to correctly classify and identify relevant cyber-related incidents while minimizing false positives and false negatives.
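The three formulas translate directly into code; the counts below are hypothetical and are not taken from Table 8.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN), F1 = harmonic mean of the two."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts for one category (not the Table 8 values).
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")  # precision=0.900 recall=0.750 f1=0.818
```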

The system demonstrated high accuracy, as evidenced by the overall precision, recall, and F1-score values of 0.999, 0.998, and 0.998, respectively. Among the individual categories, the Nation State Hacking category achieved the highest recall of 0.999, indicating the system’s ability to correctly identify nearly all relevant instances of this event type. Meanwhile, the Globally Disruptive Cyber Attack category achieved a perfect precision score of 1.000, reflecting its exceptional ability to avoid false positives.

Although the Ransomware Attack News category exhibited slightly lower performance metrics compared to the others, its precision (0.978), recall (0.971), and F1-score (0.975) remained consistently high, highlighting the robustness of the classification process. The marginally lower scores in this category can be attributed to the more nuanced characteristics of ransomware-related events, which pose additional challenges for accurate classification.

Figure 12 shows a 3D surface plot of recall, precision, and F1-score for four types of cyber attacks: Zero-Day Exploits, APTs, Malware, and Social Engineering Attacks. It offers a comprehensive view of how effectively the GPT-based classification performs across these attack scenarios.

Similarly, the 3D surface plot in Figure 13 presents a detailed visualization of recall, precision, and F1-score across four key industries vulnerable to cyber attacks: Information Technology and Telecom, Financial Services, Government and Public Administration, and Healthcare and Public Health.

The GPT-based categorization and classification method demonstrated in this research achieved an F1-score of up to 98%. To mitigate potential hallucinations or inconsistencies in GPT-3.5 Turbo outputs (for categorization and classification), Schema-Constrained Prompt Engineering was integrated into the system. The prompts submitted to GPT used a constrained vocabulary of 15 industry sectors and 15 attack types, guiding the model to choose from a closed label space. As shown in Table 8, this mechanism contributed to the high observed precision (between 0.979 and 1) and recall (between 0.933 and 0.999), supporting the reliability of GPT-assisted classification for cyber threat analysis. These results are substantially higher than those reported for other NLP-based methods such as topic extraction [24,25]. This study leverages Microsoft Power BI’s visualization tools for regression, clustering, and anomaly detection. Power BI complements these methods with explainable AI features that give users natural language explanations of how insights are derived, including confidence levels and the specific data subsets used from the total of 9018 records. This approach enhances transparency and allows users to evaluate precisely, and decide how far to trust, the decision-making process facilitated by the framework.
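One way to enforce a closed label space on the output side is to validate each reply against the two vocabularies and reject anything else. The sketch assumes the model is asked to reply with a JSON object containing `industry` and `attack_type` keys; that reply format is a hypothetical choice, not necessarily the prompt schema used in this study.

```python
import json
from typing import Dict, Optional

# Closed label space: the 15 industries and 15 attack types used for classification.
INDUSTRIES = {
    "Critical Infrastructure", "Healthcare and Public Health", "Financial Services",
    "Government and Public Administration", "Information Technology and Telecommunications",
    "Manufacturing and Industrial", "Retail and E-commerce", "Education and Research",
    "Media and Entertainment", "Hospitality and Tourism", "Energy and Utilities",
    "Transportation and Logistics", "Agriculture and Food Services",
    "Real Estate and Construction", "Professional Services",
}
ATTACK_TYPES = {
    "Malware", "Phishing",
    "Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) Attacks",
    "Man-in-the-Middle (MitM) Attacks", "SQL Injection (SQLi)", "Cross-Site Scripting (XSS)",
    "Password Attacks", "Insider Threats", "Advanced Persistent Threats (APTs)",
    "Zero-Day Exploits", "Social Engineering Attacks", "Business Email Compromise (BEC)",
    "Supply Chain Attacks", "Drive-By Downloads", "Cryptojacking",
}


def parse_labels(raw: str) -> Optional[Dict[str, str]]:
    """Accept a reply only if it is a JSON object whose labels come from the closed sets."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    industry, attack = data.get("industry"), data.get("attack_type")
    if not (isinstance(industry, str) and isinstance(attack, str)):
        return None
    if industry in INDUSTRIES and attack in ATTACK_TYPES:
        return {"industry": industry, "attack_type": attack}
    return None  # out-of-vocabulary label: reject (e.g. re-prompt the model)


print(parse_labels('{"industry": "Financial Services", "attack_type": "Phishing"}'))
print(parse_labels('{"industry": "Financial Services", "attack_type": "Quantum Hacking"}'))
print(parse_labels("not json"))
```

A rejected reply can be re-requested, so that every stored label is guaranteed to belong to the predefined vocabulary.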

The comprehensive evaluation confirms the robustness and reliability of the system in identifying and categorizing diverse cyber-related incidents. These metrics underscore the potential of the proposed approach for real-time monitoring and analysis of cyber events, providing actionable insights for stakeholders.

7. Conclusions

In cyber-related studies, GPT was used in [20], the aggregation of news articles in [10,11,12], anomaly detection in [1,2], regression in [18], clustering in [16], and knowledge graph construction in [17]. However, this paper introduces the first fully automated solution integrating these technologies. Beyond proposing a robust framework underpinned by mathematical rigor, this research also demonstrates the extraction and evaluation of cyber intelligence from open-source news articles, leveraging AI-driven analytical insights.

The primary users of this cyber threat assessment framework are cybersecurity teams, risk management professionals, and strategic decision-makers in sectors utilizing industrial cyber–physical systems (ICPS) such as manufacturing, energy, and transportation. These stakeholders use the framework to proactively detect and mitigate cyber threats, inform strategic planning, and ensure regulatory compliance. Additionally, researchers in cybersecurity can leverage the system’s analytical outputs to advance the development of new security technologies and methodologies.

This study, while offering a comprehensive GPT-based framework for cyber threat analysis, is limited by its reliance on predefined attack and industry categories, potentially overlooking emerging or unconventional threats [26,27]. Future research will aim to enhance the framework by incorporating dynamic learning mechanisms that adapt to novel attack patterns and by expanding the temporal and contextual scope of data sources for greater applicability. Moreover, future research should investigate innovative uses of language models (e.g., [28,29,30,31]) for better categorization and classification of cyber-related news articles.