1. Introduction

Full-body human motion reconstruction and editing have attracted significant attention across a wide range of domains, including entertainment, sports analysis, training, education, and collaborative work. In professional settings such as film production or athletic performance evaluation, motion capture typically relies on high-end optical systems or dense arrays of inertial measurement units (IMUs). Although these systems offer high accuracy and detailed motion data, their high cost, complex calibration requirements, and need for technical expertise limit their broader adoption for consumer-facing applications. In contrast, consumer-level solutions seek to increase accessibility by leveraging sparse sensor configurations that avoid dependence on dedicated motion capture hardware, thereby enabling deployment across a wider range of VR/AR and related applications. However, such systems face two fundamental challenges: the severely under-constrained nature of sparse input, particularly due to the lack of lower-limb tracking, and the limited computational capacity of mobile platforms. These dual constraints jointly hinder real-time generation of high-fidelity full-body motion with both physical plausibility and temporal coherence.

Existing solutions can be broadly categorized into three groups. IMU-based methods attach six 3DoF sensors to key body parts [1,2], enabling portable motion capture but lacking global positional references and suffering from cumulative drift. Methods based on consumer VR devices utilize 6DoF signals from head-mounted displays (HMDs) and controllers [3,4], offering lightweight deployment but often encountering lower-body ambiguity due to limited observations below the torso. To address these issues, HMD-Poser [5] integrates VR and optional IMU inputs in a unified deep learning framework with real-time capability. However, its Transformer-centric design, which applies global self-attention across all spatiotemporal tokens, introduces substantial computational and memory overhead and entangles spatial semantics across body parts, making it difficult to impose anatomical constraints or modular control over specific regions. Other methods incorporate additional 6DoF trackers [6,7] to improve accuracy, but their reliance on external base stations reduces portability and limits applicability in untethered setups. Despite these limitations, one of these methods, DragPoser [7], highlights the practical importance of accurate end-effector localization, offering valuable insights for region-aware optimization in VR/AR motion reconstruction.

This work introduces LGSIK-Poser, a lightweight and skeleton-aware framework that extends the scalable design of HMD-Poser and incorporates constraint-inspired refinement strategies from DragPoser. The framework integrates grouped temporal modeling via LSTM, masked graph convolution (GConv) for anatomically informed spatial reasoning, and region-specific inverse kinematics (IK) optimization guided by original input cues to improve end-effector localization, particularly for the hands. In addition, user-specific anthropometric priors are incorporated to enable personalized SMPL shape estimation. LGSIK-Poser demonstrates competitive or superior reconstruction accuracy, enhanced precision for hand and foot motion, and significantly improved computational efficiency, making it well-suited for real-time deployment under sparse and practical input configurations.

2. Related Work

Recent research in human motion reconstruction (HMR) has increasingly focused on sparse-input scenarios, where only partial observations from limited sensors such as head-mounted displays (HMDs), inertial measurement units (IMUs), or 6DoF trackers are available. Compared to camera-based approaches that require external viewpoints, sensor-based methods offer improved portability, robustness to occlusion, and real-time performance, making them especially suitable for VR/AR applications. This section reviews representative sensor-based HMR methods, grouped by input modality.

2.1. HMR from Sparse IMU Sensors

Sparse IMU-based systems typically use six 3DoF sensors attached to the head, pelvis, forearms, and lower legs to reconstruct full-body motion. These systems are portable, robust to occlusion and lighting, and do not require external cameras. SIP [8] first demonstrated feasibility with an optimization-based offline method. Later works [1,9] adopted deep recurrent models to improve runtime performance but still lacked global translation estimation.

Recent methods introduced structural and physical priors to mitigate drift. TransPose [2] combined RNN-based regression with supporting-foot constraints, TIP [10] employed a Transformer with stationary-point prediction, PIP [11] integrated a physics-aware optimizer, and PNP [12] modeled non-inertial effects by compensating fictitious forces in IMU signals. Despite these advances, the absence of absolute spatial anchors still leads to cumulative position drift.

2.2. HMR from Sparse VR Inputs

Sparse VR signals typically include 6DoF poses (position and orientation) from head-mounted displays (HMDs) and handheld controllers. These signals provide partial yet widely accessible motion cues in consumer VR setups, especially in untethered, lightweight systems. Existing reconstruction methods can be broadly categorized into physics-based simulation and learning-based modeling.

Physics-based methods such as QuestSim [13] and QuestEnvSim [14] leverage reinforcement learning with NVIDIA IsaacGym [15] to synthesize physically plausible full-body motion. However, their reliance on non-differentiable simulation and GPU-intensive computation makes them impractical for real-time deployment on HMDs with limited resources. In contrast, learning-based approaches directly regress full-body motion from sparse VR inputs. Deterministic models [4,16] and generative frameworks such as VAEs [17], normalizing flows [18], and diffusion models [3,19] have shown promising performance in offline settings. Nevertheless, they often struggle with ambiguity in lower-body motion due to the absence of informative signals below the torso, resulting in unstable or anatomically inconsistent predictions.

To address these limitations, HMD-Poser [5] introduces a hybrid framework that combines sparse HMD/controller signals and optional IMU inputs. It employs an LSTM–Transformer architecture for spatiotemporal modeling and includes an online shape regression module for user-specific adaptation. Despite its effectiveness, the monolithic Transformer introduces considerable computational overhead and lacks architectural modularity, restricting interpretability and hindering region-specific motion reasoning.

Building on the scalability and personalization capabilities of HMD-Poser, LGSIK-Poser is proposed as a skeleton-aware and lightweight framework that enhances structural interpretability and shape adaptability. The body is decomposed into semantically grouped partitions, each processed independently through group-specific LSTM modeling. Masked graph convolution is applied to capture spatial relationships among groups, enabling modular spatial reasoning aligned with human anatomy. This design contributes to better preservation of anatomical semantics. In addition, user-specific anthropometric priors, including height, gender, and limb lengths, are incorporated to regress personalized SMPL shape parameters. Compared to Transformer-based designs such as HMD-Poser, which applies self-attention over long temporal sequences, this modular approach reduces computational and memory overhead and supports anatomically structured modeling.

2.3. HMR from Sparse 6DoF Tracker Inputs

Some commercial VR/AR systems support additional 6DoF trackers (e.g., HTC VIVE Trackers) that provide absolute position and orientation measurements to enhance full-body tracking beyond the HMD and controllers. SparsePoser [6] reconstructs high-quality full-body motion from six 6DoF trackers typically placed on the pelvis, feet, and hands, using a convolutional autoencoder trained on motion capture data and learned IK modules for end-effector refinement. DragPoser [7] extends this with a latent space optimization framework that enforces hard kinematic constraints focused on precise end-effector positioning, together with a temporal predictor for motion continuity. It is robust to missing data and supports variable sensor configurations.

While SparsePoser and DragPoser demonstrate strong reconstruction accuracy and flexibility through the use of additional 6DoF trackers, their effectiveness is closely tied to outside-in tracking systems such as Lighthouse. These systems estimate tracker positions via structured light or laser sweeps emitted from external base stations. This reliance imposes additional hardware requirements, reduces portability, and limits compatibility with standard VR and IMU-only setups. Moreover, their performance can degrade significantly when root position input is unreliable or unavailable—a condition that frequently arises in lightweight consumer scenarios where only head-mounted displays and handheld controllers are used.

Despite these limitations, DragPoser provides valuable insights by demonstrating the importance of satisfying hard kinematic constraints through optimization to improve local pose accuracy, especially for end-effectors. Inspired by this, the method proposed here integrates a region-specific inverse kinematics (IK) optimization module directly into the network. Guided by original input cues, this embedded IK module enhances end-effector localization—particularly for the hands—while maintaining real-time performance. Unlike DragPoser’s iterative latent-space optimization, the proposed approach is non-iterative, avoiding costly optimization loops and enabling efficient, end-to-end refinement within the model.

3. Method

The goal of this work is to reconstruct full-body human motion—including joint rotations, global positions, and mesh vertices—based on sparse and heterogeneous input signals typically available in VR/AR settings. These inputs may include head-mounted displays (HMDs), handheld controllers, and a small number of optional IMUs. The task involves estimating SMPL pose and shape parameters in a unified and temporally coherent manner, despite varying input configurations.

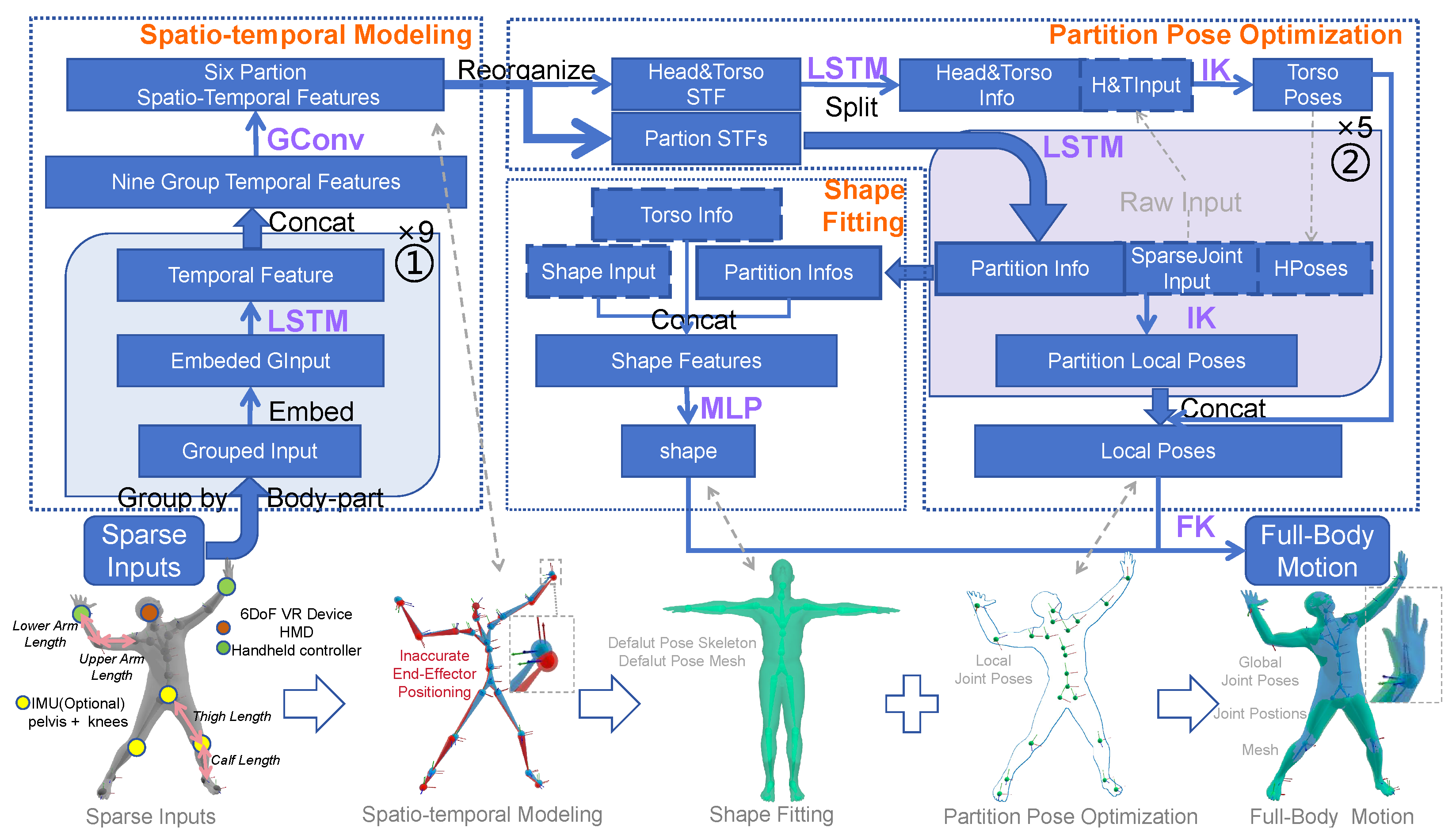

To this end, the proposed framework consists of three main modules: grouped spatio-temporal modeling, partition-based pose optimization, and shape fitting. An overview of the entire pipeline is shown in Figure 1. The top portion of the figure illustrates how features are processed through each module, including LSTM-based temporal encoding, spatial fusion using graph convolution, local joint rotation refinement, and final mesh reconstruction via forward kinematics. The bottom row complements the diagram with 3D visualizations of key stages, namely the sparse input, outputs from intermediate modules, and the final mesh result. Dashed lines connect each 3D visualization to its corresponding stage in the processing flow.

3.1. Grouped Spatio-Temporal Modeling

The same input representation as HMD-Poser [5] is adopted, where each joint is encoded as a concatenation of 6D rotation, angular velocity, position, and linear velocity. For joints equipped with IMUs, acceleration is also included. To enhance the accuracy of hand motion, HMD-Poser further incorporates hand poses relative to the head coordinate frame, which are also retained here. To ensure compatibility across diverse capture scenarios under a unified framework, the input dimensionality remains fixed across all settings, with missing observations zero-padded accordingly.
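The per-joint encoding and zero-padding can be sketched in plain Python as below. This is a minimal sketch under stated assumptions, not the HMD-Poser or LGSIK-Poser code: the sensor names in the hypothetical `SENSOR_ORDER` list, the choice of the first two matrix columns as the 6D rotation, the representation of angular velocity as the 6D form of the relative rotation between consecutive frames, and the use of per-frame differences for linear velocity are all illustrative choices. The head-relative hand poses are omitted for brevity.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Mat3 = Sequence[Sequence[float]]
Vec3 = Sequence[float]

# Hypothetical fixed sensor order: keeping it constant keeps the input size fixed.
SENSOR_ORDER = ["head", "lhand", "rhand", "pelvis", "lknee", "rknee"]
# 6D rotation + 6D angular velocity + position + linear velocity + acceleration
FEAT_DIM = 6 + 6 + 3 + 3 + 3


def rot6d(r: Mat3) -> List[float]:
    """6D rotation: the first two columns of a 3x3 rotation matrix."""
    return [r[i][0] for i in range(3)] + [r[i][1] for i in range(3)]


def matmul_t(a: Mat3, b: Mat3) -> List[List[float]]:
    """Return a^T @ b for 3x3 matrices."""
    return [[sum(a[k][i] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def joint_features(rot: Mat3, prev_rot: Mat3, pos: Vec3, prev_pos: Vec3,
                   acc: Optional[Vec3] = None) -> List[float]:
    """Per-joint feature vector (assumed layout, see lead-in)."""
    ang_vel = rot6d(matmul_t(prev_rot, rot))          # assumed: 6D of R_{t-1}^T R_t
    lin_vel = [p - q for p, q in zip(pos, prev_pos)]  # assumed: per-frame displacement
    acc_vec = list(acc) if acc is not None else [0.0, 0.0, 0.0]  # no IMU -> zeros
    return rot6d(rot) + ang_vel + list(pos) + lin_vel + acc_vec


def frame_input(observed: Dict[str, Tuple]) -> List[float]:
    """Concatenate all sensors in a fixed order, zero-padding missing ones."""
    feats: List[float] = []
    for name in SENSOR_ORDER:
        if name in observed:
            feats += joint_features(*observed[name])
        else:
            feats += [0.0] * FEAT_DIM  # missing sensor: zero padding
    return feats
```

With only the HMD observed, the vector still has `6 * FEAT_DIM` entries, and every slot belonging to an absent sensor is zero, which is what lets one network serve all sensor configurations.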

Building upon this input design, a novel semantic grouping strategy is introduced to organize the full-body input into nine anatomically meaningful regions: torso, head, left and right arms, left and right legs, upper and lower body, and shape. Each group reorganizes joint-level information based on anatomical or functional affinity. The torso group captures holistic motion by integrating signals from the pelvis, head, arms, and legs. The head group includes absolute head states along with the dynamic representations of both hands expressed in the head coordinate frame, which serve as auxiliary cues to enhance head motion modeling.

The left and right arm groups combine 6DoF representations from the head and corresponding hands, incorporating both global and head-relative components. The leg groups consist of IMU-derived rotations and accelerations from the pelvis and respective knees, complemented by head positional cues to address the lack of direct spatial measurements in the lower limbs. The upper body group emphasizes coordinated movement by fusing signals from the head, pelvis, and hand dynamics relative to the head. In contrast, the lower body group incorporates pelvis and bilateral knee data along with head-referenced positional cues for leg modeling. Finally, the shape group encodes static anthropometric priors, including body height and segmental limb lengths such as upper/lower arms and legs.
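In code, this grouping amounts to selecting and concatenating channels per group. The sketch below uses hypothetical channel keys (`*_rel` for hand states in the head frame, `head_pos` for the head position alone, `priors` for the anthropometric data) and a simplified channel list per group inferred from the description above. The exact per-group channels, for example which IMU components feed the leg groups, are assumptions.

```python
from typing import Dict, List

# Hypothetical channel keys; "*_rel" = hand state in the head frame,
# "head_pos" = head position only, "priors" = anthropometric priors.
GROUPS: Dict[str, List[str]] = {
    "torso":      ["pelvis", "head", "lhand", "rhand", "lknee", "rknee"],
    "head":       ["head", "lhand_rel", "rhand_rel"],
    "left_arm":   ["head", "lhand", "lhand_rel"],
    "right_arm":  ["head", "rhand", "rhand_rel"],
    "left_leg":   ["pelvis", "lknee", "head_pos"],
    "right_leg":  ["pelvis", "rknee", "head_pos"],
    "upper_body": ["head", "pelvis", "lhand_rel", "rhand_rel"],
    "lower_body": ["pelvis", "lknee", "rknee", "head_pos"],
    "shape":      ["priors"],
}


def group_inputs(channels: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Concatenate the selected channels for each group.

    The length of each output vector is that group's dimensionality d_i,
    which differs between groups.
    """
    return {g: [v for key in keys for v in channels[key]]
            for g, keys in GROUPS.items()}
```

Because a channel may appear in several groups (the head state, for instance), the same observation is deliberately reused under different anatomical contexts.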

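Formally, the grouped input over a sequence of $T$ frames is expressed as follows:

$$\mathcal{X} = \{\mathbf{X}_1, \mathbf{X}_2, \ldots, \mathbf{X}_9\}, \qquad \mathbf{X}_i \in \mathbb{R}^{T \times d_i}, \quad (1)$$

where each $\mathbf{X}_i$ corresponds to one group with dimensionality $d_i$ depending on the number of selected channels.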

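Each group feature is first embedded via a learnable linear layer and then temporally encoded by a group-specific LSTM:

$$\mathbf{H}_i = \mathrm{LSTM}_i\big(\mathrm{Linear}_i(\mathbf{X}_i)\big), \qquad i = 1, \ldots, 9. \quad (2)$$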

To model inter-group relationships in a structured manner, these temporal features are fused using a graph convolution that respects anatomical topology. The high-level output feature $\mathbf{F}$ is computed as follows:

$$\mathbf{F} = (\mathbf{M} \odot \mathbf{W})\,\mathbf{H} + \mathbf{b}, \quad (3)$$

where $\mathbf{H}$ is the stacked group-wise temporal representation, $\mathbf{W}$ is a learnable weight matrix, $\mathbf{M}$ is a fixed binary mask encoding anatomical connectivity, $\odot$ denotes element-wise multiplication, and $\mathbf{b}$ is a learnable bias term. The output dimension is chosen to preserve group-wise structural consistency.
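A masked graph convolution of this form can be written as a linear layer whose weight is multiplied element-wise by a fixed binary mask built from a group adjacency matrix. The pure-Python sketch below assumes that the stacked input is the concatenation of equally sized per-group feature vectors and that the mask is the group adjacency expanded into blocks; `block_mask` and `MaskedGraphLinear` are hypothetical names, not the authors' code.

```python
import random
from typing import List


def block_mask(adj: List[List[int]], d_in: int, d_out: int) -> List[List[int]]:
    """Expand a G x G group adjacency into a (G*d_out) x (G*d_in) weight mask.

    adj[i][j] = 1 means group j may send information to group i.
    """
    G = len(adj)
    return [[adj[r // d_out][c // d_in] for c in range(G * d_in)]
            for r in range(G * d_out)]


class MaskedGraphLinear:
    """Sketch of F = (M * W) H + b with a fixed binary mask M."""

    def __init__(self, adj: List[List[int]], d_in: int, d_out: int, seed: int = 0):
        rng = random.Random(seed)
        self.mask = block_mask(adj, d_in, d_out)
        rows, cols = len(self.mask), len(self.mask[0])
        self.weight = [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                       for _ in range(rows)]
        self.bias = [0.0] * rows

    def __call__(self, h: List[float]) -> List[float]:
        # Masked entries multiply by 0, so disconnected groups never interact.
        return [sum(m * w * x for m, w, x in zip(m_row, w_row, h)) + b
                for m_row, w_row, b in zip(self.mask, self.weight, self.bias)]
```

With a three-group chain, a signal placed only in the third group reaches the second group's output but leaves the first group's output at its bias. In a PyTorch module, the mask would typically be stored with `register_buffer`, so it follows the model across devices without being trained.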

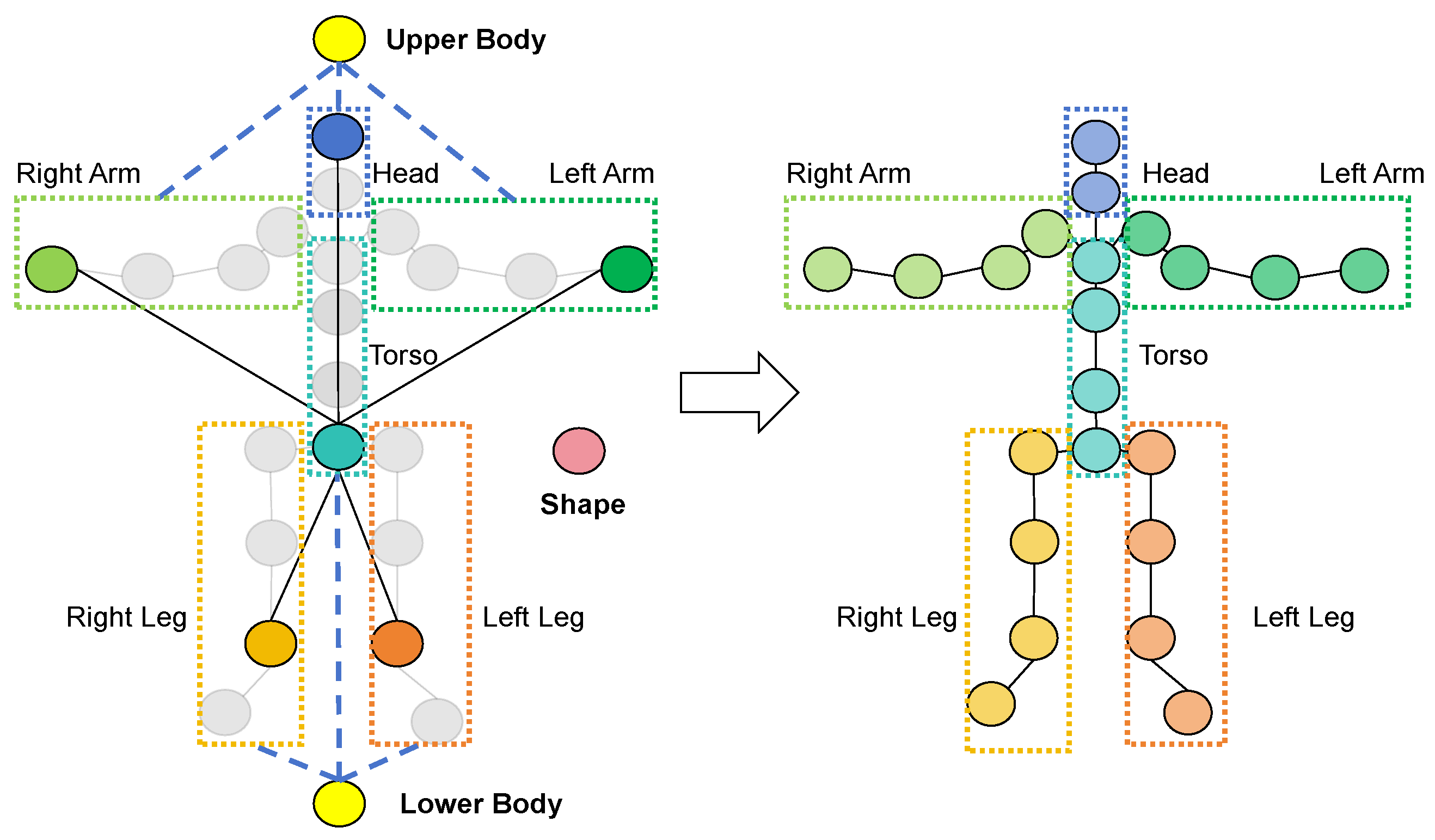

Figure 2 provides a visual abstraction of the anatomical grouping and information flow patterns regulated by the adjacency mask $\mathbf{M}$. The figure conveys its functional structure by depicting the topology of semantic groups and their representative joints. The torso group serves as a central integrator, directly linked to all functional regions to aggregate full-body context. Limb and head groups are connected only to the torso and their associated upper or lower body regions to maintain semantic locality. The shape group broadcasts anthropometric priors to all other groups without receiving input in return. To improve readability, its outward connections are omitted from the diagram but are fully active in the underlying computational graph. This design ensures anatomically grounded and computationally efficient message propagation.
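The connectivity described above can be encoded as a 9 × 9 group adjacency matrix, which a masked layer would then expand into its weight mask. In the sketch below, `adj[dst][src] = 1` means group `src` may send information to group `dst`. The self-loops, the linking of the torso to the composite upper- and lower-body groups, and the absence of a direct upper-to-lower-body edge are assumptions that the text does not confirm; the function name is hypothetical.

```python
from typing import List

GROUP_NAMES = ["torso", "head", "left_arm", "right_arm", "left_leg",
               "right_leg", "upper_body", "lower_body", "shape"]


def build_group_adjacency() -> List[List[int]]:
    idx = {name: i for i, name in enumerate(GROUP_NAMES)}
    G = len(GROUP_NAMES)
    adj = [[0] * G for _ in range(G)]  # adj[dst][src] = 1: src feeds dst

    def link(a: str, b: str) -> None:
        """Bidirectional edge between two groups."""
        adj[idx[a]][idx[b]] = adj[idx[b]][idx[a]] = 1

    for i in range(G):
        adj[i][i] = 1  # assumption: every group keeps its own features
    for name in GROUP_NAMES[1:8]:
        link("torso", name)  # torso acts as the central integrator
    for name in ["head", "left_arm", "right_arm"]:
        link(name, "upper_body")
    for name in ["left_leg", "right_leg"]:
        link(name, "lower_body")
    for i in range(G):
        adj[i][idx["shape"]] = 1  # shape broadcasts to every group...
    return adj  # ...while its own row keeps only the self-loop
```

The asymmetry of the shape column versus the shape row is what implements "broadcast without receiving input in return".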

To support hierarchical reasoning, the GConv module stacks three such layers. The first two layers operate on all nine groups to extract rich inter-group representations, while the final layer outputs fused features for six anatomical regions, excluding auxiliary groupings such as shape and composite upper/lower body. This progressive abstraction facilitates accurate and interpretable motion reconstruction across both global and local scales.

3.2. Partition-Based Pose Optimization

The six fused anatomical region features produced by the final GConv layer serve as holistic spatial representations that form the basis for more detailed local pose refinement. Each partition is further processed independently by a dedicated unidirectional LSTM, which enriches the spatial features with temporal dynamics by associating the current partition state with its historical context.

Building on these refined partition-level spatio-temporal features, partition-based inverse kinematics (IK) optimization modules hierarchically refine joint rotations within each anatomical region. As illustrated in the pose optimization component of Figure 1, the root and head partitions are jointly optimized: their LSTM outputs are concatenated with the original sparse inputs corresponding to these partitions and passed through a multi-layer perceptron (MLP) to produce updated root (pelvis) joint rotations, anchoring the global skeleton pose.

Similarly, for each of the remaining four limb partitions, the corresponding MLP takes as input (1) the partition’s LSTM-refined features, (2) the raw sparse input from the end-effector (serving as a skip connection), and (3) the predicted root rotation features to ensure consistency and coordination. This hierarchical refinement scheme enables precise local joint rotation adjustment guided by both global context and direct sensor signals, supporting accurate and natural full-body pose reconstruction necessary for immersive VR/AR applications.
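The two-stage data flow of this partition-based refinement can be sketched as follows. The MLPs are passed in as callables so that the example stays framework-agnostic. The partition keys, feature sizes, and the `refine_pose` function are hypothetical, and only the root rotation is produced by the root/head stage, following the description above.

```python
from typing import Callable, Dict, List

Vec = List[float]
LIMBS = ["left_arm", "right_arm", "left_leg", "right_leg"]  # hypothetical keys


def refine_pose(feats: Dict[str, Vec],
                sparse: Dict[str, Vec],
                root_mlp: Callable[[Vec], Vec],
                limb_mlps: Dict[str, Callable[[Vec], Vec]]) -> Dict[str, Vec]:
    """feats: LSTM-refined partition features; sparse: raw sparse inputs."""
    # Stage 1: root and head partitions are refined jointly to anchor the skeleton.
    root_in = feats["root"] + feats["head"] + sparse["root"] + sparse["head"]
    root_rot = root_mlp(root_in)
    out = {"root": root_rot}
    # Stage 2: each limb sees its own features, its raw end-effector input
    # (a skip connection), and the predicted root rotation for consistency.
    for limb in LIMBS:
        out[limb] = limb_mlps[limb](feats[limb] + sparse[limb] + root_rot)
    return out
```

Feeding the predicted root rotation into every limb head is what makes the scheme hierarchical: the limbs are refined conditioned on an already-anchored global pose.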

3.3. Shape Fitting via SMPL Parameter Regression

Previous methods estimate body shape using limited sources of information. For example, HMD-Poser [5] relies solely on pose features, while SparsePoser [6] and DragPoser [7] depend on ground truth SMPL annotations obtained through optimization or optical scanning. In contrast, our method incorporates lightweight anthropometric priors, including gender, height, and limb lengths, as additional inputs to the shape estimation module. These cues provide a practical and scalable alternative to SMPL annotations, which typically require expensive equipment, time-consuming procedures, and offline processing. Anthropometric data, by comparison, can be intuitively measured or user-reported, making them more accessible and suitable for rapid personalization in real-world VR/AR scenarios. This design choice improves applicability without sacrificing accuracy.

As illustrated in the “Shape Fitting” component of Figure 1, the module receives temporally refined features from the grouped LSTM stage, prior to pose-specific MLP refinements. These features are concatenated with the anthropometric priors and passed through a dedicated multi-layer perceptron (MLP) to regress the SMPL shape parameters $\boldsymbol{\beta}$. Using features extracted before pose refinement preserves global skeletal context, such as posture and inter-joint correlations, which facilitates more reliable shape estimation. The predicted $\boldsymbol{\beta}$ is then combined with the optimized joint rotations and passed through the SMPL forward kinematics module to obtain the final 3D joint locations and full-body mesh.

To enable online inference, per-frame shape parameters are predicted within a sliding window of consecutive frames. Although each frame yields an individual estimate, temporal coherence is encouraged during training by applying a shape consistency loss across the window. This regularization promotes stable and smooth shape predictions over time, suppressing unrealistic fluctuations and ensuring visually consistent avatar rendering in real-time applications.
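A shape consistency term of this kind can be computed as the mean squared deviation of the per-frame shape vectors from their mean over the window. The normalization by the number of frames and shape coefficients is an assumption, and `shape_consistency_loss` is a hypothetical name.

```python
from typing import List


def shape_consistency_loss(betas: List[List[float]]) -> float:
    """Mean squared deviation of per-frame shape vectors from their window mean.

    betas: one shape-parameter vector per frame, all of the same length.
    """
    T, D = len(betas), len(betas[0])
    mean = [sum(b[d] for b in betas) / T for d in range(D)]
    return sum((b[d] - mean[d]) ** 2 for b in betas for d in range(D)) / (T * D)
```

The loss is zero exactly when every frame predicts the same shape, so it pushes the per-frame estimates toward a single body shape without fixing which shape that is.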

3.4. Training LGSIK-Poser

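The overall loss function $\mathcal{L}$ is defined as a weighted sum of multiple components: root orientation loss $\mathcal{L}_{\text{ori}}$, local pose loss $\mathcal{L}_{\text{lrot}}$, global pose loss $\mathcal{L}_{\text{grot}}$, joint position loss $\mathcal{L}_{\text{pos}}$, sparse joint position loss $\mathcal{L}_{\text{sparse}}$, shape consistency loss $\mathcal{L}_{\text{shape}}$, and multi-scale velocity loss $\mathcal{L}_{\text{vel}}$:

$$\mathcal{L} = \lambda_{\text{ori}}\mathcal{L}_{\text{ori}} + \lambda_{\text{lrot}}\mathcal{L}_{\text{lrot}} + \lambda_{\text{grot}}\mathcal{L}_{\text{grot}} + \lambda_{\text{pos}}\mathcal{L}_{\text{pos}} + \lambda_{\text{sparse}}\mathcal{L}_{\text{sparse}} + \lambda_{\text{shape}}\mathcal{L}_{\text{shape}} + \lambda_{\text{vel}}\mathcal{L}_{\text{vel}}. \quad (4)$$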

All position- and rotation-related losses are computed as the mean squared error (MSE) between predicted and ground truth values. The sparse joint position loss is applied only to a subset of key joints, specifically the root and both hands, which are directly observed in typical VR configurations. This term enforces higher accuracy at locations with direct sensor input, helping to anchor visible joints and reduce the solution space during optimization. The shape consistency loss promotes temporal stability of the SMPL shape parameters by minimizing their deviation from the per-sequence mean.
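The sparse joint position loss is the same MSE restricted to a few joint indices. The sketch assumes positions stored as `[frame][joint][xyz]` lists and takes SMPL joint 0 (pelvis) and joints 20 and 21 (left and right wrists) as the root and hand joints. That index choice is an assumption, since the text does not specify whether wrist or hand joints are used; the function names are hypothetical.

```python
from typing import Optional, Sequence

# Assumption: SMPL pelvis (0), left wrist (20), right wrist (21).
SPARSE_JOINTS = (0, 20, 21)


def mse_positions(pred, gt, joints: Optional[Sequence[int]] = None) -> float:
    """MSE between predicted and ground-truth 3D joint positions.

    pred, gt: [T][J][3] nested lists; joints: optional subset of joint indices.
    """
    idx = range(len(pred[0])) if joints is None else joints
    errs = [(p - g) ** 2
            for t in range(len(pred)) for j in idx
            for p, g in zip(pred[t][j], gt[t][j])]
    return sum(errs) / len(errs)


def sparse_joint_loss(pred, gt) -> float:
    """Joint position MSE restricted to the directly observed joints."""
    return mse_positions(pred, gt, SPARSE_JOINTS)
```

An error on an unobserved joint contributes to the full joint position loss but not to the sparse term, which is why the two losses can be weighted independently.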

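To enforce temporal smoothness and mitigate accumulated motion drift, a multi-scale velocity loss $\mathcal{L}_{\text{vel}}$ is adopted following [2]. It is defined as follows:

$$\mathcal{L}_{\text{vel}} = \sum_{n} \sum_{t=n+1}^{T} \left\| \left(\mathbf{p}_t - \mathbf{p}_{t-n}\right) - \left(\hat{\mathbf{p}}_t - \hat{\mathbf{p}}_{t-n}\right) \right\|_2^2, \quad (5)$$

where $T$ denotes the sequence length, $n$ is the frame interval, $\mathbf{p}_t$ represents the predicted joint positions at frame $t$, $\hat{\mathbf{p}}_t$ represents the corresponding ground truth positions, and $\|\cdot\|_2$ is the Euclidean norm. This loss penalizes discrepancies in joint displacements over multiple time scales, promoting smooth and consistent motion trajectories.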
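The multi-scale velocity loss compares, for several frame intervals n, the displacement of the predicted joint positions between frames t − n and t with the corresponding ground-truth displacement, and sums the squared differences. The sketch below assumes the intervals (1, 3, 9) and unnormalized summation; both are illustrative choices rather than the settings used in the paper, and the function name is hypothetical.

```python
from typing import Sequence


def multiscale_velocity_loss(pred, gt, intervals: Sequence[int] = (1, 3, 9)) -> float:
    """Sum of squared displacement errors over several frame intervals.

    pred, gt: [T][J][3] nested lists of joint positions.
    """
    T = len(pred)
    total = 0.0
    for n in intervals:
        for t in range(n, T):  # 0-indexed frames t-n .. t
            for j in range(len(pred[t])):
                for k in range(3):
                    d_pred = pred[t][j][k] - pred[t - n][j][k]
                    d_gt = gt[t][j][k] - gt[t - n][j][k]
                    total += (d_pred - d_gt) ** 2
    return total
```

Because only displacements enter the loss, a prediction offset from the ground truth by a constant translation incurs no velocity penalty; that offset is handled by the position terms instead.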

Each loss term is weighted by a corresponding coefficient during training, and jointly optimized to ensure spatial accuracy, temporal stability, and structural consistency.

4. Experiments

The evaluation protocol established in recent works [4,5,16] is followed, and all experiments are conducted on the AMASS dataset [20]. The same data split as HMD-Poser [5] is adopted, utilizing three subsets (CMU [21], BMLrub [22], and HDM05 [23]), each randomly partitioned into 90% for training and 10% for testing.

Rather than adopting a shared default shape across all motion sequences, as is common in prior works [4,16], a more realistic strategy introduced by HMD-Poser [5] is followed. Specifically, ground truth body shape parameters are used solely to generate reference joint positions via the SMPL model [24] during training. These parameters are neither supplied to the model nor used as supervision targets. Instead, the shape is implicitly inferred, allowing both body shape and joint positions to be predicted simultaneously. This setup better reflects real-world usage scenarios, where precise user-specific shape annotations are typically unavailable.

Implementation details. The weights of the root orientation, local pose, global pose, joint position, sparse joint position, shape consistency, and multi-scale velocity losses in Equation (4) ($\lambda_{\text{ori}}$, $\lambda_{\text{lrot}}$, $\lambda_{\text{grot}}$, $\lambda_{\text{pos}}$, $\lambda_{\text{sparse}}$, $\lambda_{\text{shape}}$, and $\lambda_{\text{vel}}$) are set to 10, 50, 10, 1000, 1000, 10, and 5, respectively. These values are empirically chosen to balance the scales of different loss terms according to their typical magnitudes under accurate reconstruction and the relative importance of each component. The larger weights on the joint position and sparse joint position losses emphasize precise localization of both global and sparse keypoints, which is crucial for applications such as VR rendering and physical interaction. Position-related weights enhance joint accuracy, while orientation and smoothness terms promote temporal coherence and natural articulation. This configuration was determined through preliminary experiments to balance accuracy and motion stability, though more fine-grained tuning is possible for specific inputs or tasks.
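With the weights above, the total objective is a plain weighted sum. The sketch assumes that the seven weights map to the loss terms in the order listed (root orientation, local pose, global pose, joint position, sparse joint position, shape consistency, multi-scale velocity). The dictionary keys and the `total_loss` function are hypothetical labels.

```python
from typing import Dict

# Assumed mapping of the reported weights to the loss terms, in listed order.
LOSS_WEIGHTS: Dict[str, float] = {
    "ori": 10, "lrot": 50, "grot": 10, "pos": 1000,
    "sparse": 1000, "shape": 10, "vel": 5,
}


def total_loss(terms: Dict[str, float]) -> float:
    """Weighted sum of the individual loss values."""
    missing = LOSS_WEIGHTS.keys() - terms.keys()
    if missing:
        raise KeyError(f"missing loss terms: {sorted(missing)}")
    return sum(LOSS_WEIGHTS[k] * terms[k] for k in LOSS_WEIGHTS)
```

Raising on a missing term, rather than silently treating it as zero, guards against accidentally dropping a component when the loss dictionary is assembled.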

The model is implemented in PyTorch [25] v2.1.2 and trained using the AdamW optimizer [26] with a batch size of 64 and an initial learning rate of …, and a ReduceLROnPlateau scheduler is used to dynamically adjust the learning rate, with a decay factor of 0.1 and a patience of 5 epochs. The training data are segmented using a sliding window of 40 frames with a stride of 20, so that consecutive segments overlap by 20 frames, which helps capture diverse temporal dynamics. Training runs for 150 epochs.
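The 40-frame, stride-20 segmentation can be sketched as a list of index ranges. Dropping tail frames that do not fill a complete window is an assumption; padding them would be an equally plausible choice. The function name is hypothetical.

```python
from typing import List, Tuple


def sliding_windows(num_frames: int, window: int = 40,
                    stride: int = 20) -> List[Tuple[int, int]]:
    """[start, end) index pairs of training segments.

    Assumption: trailing frames that do not fill a full window are dropped.
    """
    return [(s, s + window) for s in range(0, num_frames - window + 1, stride)]
```

For a 100-frame sequence this yields four segments starting at frames 0, 20, 40, and 60, each sharing half of its frames with its neighbor.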

Evaluation Metrics. Following [5], performance is quantitatively measured using a comprehensive set of metrics. The first category measures tracking accuracy and includes MPJRE (Mean Per-Joint Rotation Error [degrees]), MPJPE (Mean Per-Joint Position Error [cm]), and the region-specific position errors H-PE (Hand), U-PE (Upper body), L-PE (Lower body), and R-PE (Root). The second category reflects the smoothness of the generated motions and includes MPJVE (Mean Per-Joint Velocity Error [cm/s]) and Jitter [m/s³]. These metrics jointly capture rotational and positional accuracy, temporal stability, and localized joint reconstruction errors, thereby providing a thorough assessment of reconstruction quality.
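For reference, MPJPE and jitter can be computed as below. This is a sketch rather than the evaluation code of [5]. It assumes positions stored as `[frame][joint][xyz]` lists, measures jitter as the mean norm of the third finite difference of position scaled by `fps**3`, and leaves unit conversion (e.g., meters to centimeters) to the caller. Both function names are hypothetical.

```python
import math


def mpjpe(pred, gt) -> float:
    """Mean Euclidean joint distance over all frames and joints (input units)."""
    dists = [math.dist(p, g)
             for pf, gf in zip(pred, gt) for p, g in zip(pf, gf)]
    return sum(dists) / len(dists)


def jitter(pred, fps: float) -> float:
    """Mean norm of the third time derivative of joint positions.

    Uses the finite difference p[t] - 3 p[t-1] + 3 p[t-2] - p[t-3],
    scaled by fps**3 to convert per-frame units to per-second units.
    """
    vals = []
    for t in range(3, len(pred)):
        for j in range(len(pred[t])):
            d3 = [pred[t][j][k] - 3 * pred[t - 1][j][k]
                  + 3 * pred[t - 2][j][k] - pred[t - 3][j][k] for k in range(3)]
            vals.append(math.sqrt(sum(v * v for v in d3)) * fps ** 3)
    return sum(vals) / len(vals)
```

Jitter vanishes for any trajectory that is at most quadratic in time, so it isolates abrupt changes in acceleration rather than smooth motion.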

4.1. Comparisons

To ensure a fair and comprehensive evaluation, the proposed method is assessed both quantitatively and qualitatively against state-of-the-art approaches. Quantitative results measure reconstruction accuracy under standardized protocols and varying sensor configurations, while qualitative visualizations emphasize motion continuity, realism, and generalizability across subjects. Together, these comparisons demonstrate the robustness and effectiveness of the approach in diverse settings.

4.1.1. Quantitative Results

To ensure fair comparison, each method is evaluated under sensor configurations aligned with its design. TransPose [2] and PIP [11] are based on six inertial measurement units (IMUs); in this study, both implementations are extended by incorporating positional information of the head and hands derived from VR devices. In contrast, AvatarPoser [4] and AvatarJLM [16] rely exclusively on VR inputs, including a head-mounted display (HMD) and two handheld controllers.

For HMD-Poser [5] and the proposed method, evaluations are conducted under three sensor configurations: (1) VR-only, using an HMD and two handheld controllers; (2) VR+2IMUs, with two additional IMUs placed near the knees; and (3) VR+3IMUs, adding a third IMU near the pelvis. Quantitative results under each setting are reported in the following tables.

Offline Full-Sequence Evaluation

Following the evaluation protocol of HMD-Poser, an offline setting is adopted in which the entire test sequence is provided to each method as input. Performance metrics are computed for each sequence individually and then averaged across all sequences. The corresponding results are presented in Table 1.

While the standard offline evaluation protocol is widely adopted, it introduces potential bias when sequences vary significantly in length, particularly when a small number of long sequences account for a large share of the frames. To mitigate this issue, a length-weighted variant of the protocol is additionally reported, in which each sequence contributes in proportion to its frame count. The corresponding results are presented in Table 2, providing a more reliable estimate of frame-level performance across the entire dataset.
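The difference between the two averaging schemes is easy to see on a toy example. With per-sequence mean errors of 1.0 and 3.0 over sequences of 100 and 300 frames, equal weighting gives 2.0, while length weighting gives 2.5, which equals the mean error over all 400 frames. The function names below are hypothetical.

```python
from typing import Sequence


def equal_weighted(per_seq_errors: Sequence[float]) -> float:
    """Every sequence counts once, regardless of its length."""
    return sum(per_seq_errors) / len(per_seq_errors)


def length_weighted(per_seq_errors: Sequence[float],
                    lengths: Sequence[int]) -> float:
    """Each sequence counts in proportion to its frame count.

    If each per-sequence error is itself a mean over that sequence's frames,
    this equals the plain mean over all frames in the dataset.
    """
    return (sum(e * n for e, n in zip(per_seq_errors, lengths))
            / sum(lengths))
```

Under equal weighting, a short but noisy sequence can shift the reported average as much as a long one, which is the bias the length-weighted protocol removes.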

Table 1 and Table 2 report results under the two sequence-averaging strategies. Under both, the proposed method consistently outperforms the baseline in MPJPE, H-PE, U-PE, L-PE, and R-PE. These improvements reflect more accurate reconstruction of both global structure and fine-grained limb motion, with particularly notable gains in critical regions such as the hands and root, which are vital for precise interaction in VR/AR environments.

However, the proposed method yields higher MPJRE and Jitter values than HMD-Poser. This discrepancy is likely attributable to the explicit optimization of end-effector positions (e.g., those of the handheld controllers), which enhances endpoint accuracy but may compromise pose smoothness and rotational consistency. Such behavior reflects an inherent trade-off in modeling flexibility: spatial constraints imposed on critical joints improve global alignment but reduce degrees of freedom, potentially affecting temporal continuity.

Nonetheless, as shown in Table 2, where sequence lengths are taken into account during averaging, the performance differences in MPJRE and Jitter become negligible. This indicates that the increased jitter observed in the equal-weighted evaluation is likely amplified by shorter or noisier sequences. Under more realistic conditions, the proposed method maintains comparable continuity.

Online Sliding Window Evaluation

The results discussed above are obtained under an offline evaluation protocol, in which the entire input sequence is made available to the model. This enables full temporal context to be leveraged when predicting each frame, thereby facilitating comprehensive error analysis and upper-bound performance estimation. However, such a setting does not reflect the requirements of real-time deployment. In practical scenarios, motion reconstruction systems are expected to operate causally, producing each frame based solely on current and past observations without access to future frames. In addition, constraints on latency and memory impose the need to function within a limited temporal window.

To more accurately simulate deployment conditions, an online evaluation is conducted using a sliding window strategy. At each time step, the model is provided with a fixed-length window of 40 frames (39 past frames and the current frame) and is required to predict only the current frame. This formulation imposes stricter temporal constraints and processing delays, offering a more realistic assessment of the method’s suitability for real-time applications. The quantitative results of this evaluation are summarized in Table 3.
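The causal protocol can be sketched as a loop that feeds each 40-frame window to the model and keeps only its last output. Here `model` is a hypothetical callable returning one prediction per input frame. How the first 39 frames, which lack a full history, are handled is not specified above; the sketch simply skips them.

```python
from typing import Callable, List, Sequence


def online_predictions(frames: Sequence,
                       model: Callable[[Sequence], List],
                       window: int = 40) -> List:
    """Causal sliding-window inference.

    Each step sees `window - 1` past frames plus the current frame and keeps
    only the prediction for the current (last) frame. Assumption: frames
    without a full history are skipped.
    """
    preds = []
    for t in range(window - 1, len(frames)):
        window_frames = frames[t - window + 1: t + 1]  # frames t-39 .. t
        preds.append(model(window_frames)[-1])         # current frame only
    return preds
```

This makes the cost explicit: every output frame requires a full forward pass over 40 frames, which is why buffering several frames per pass can reduce computation in latency-tolerant settings.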

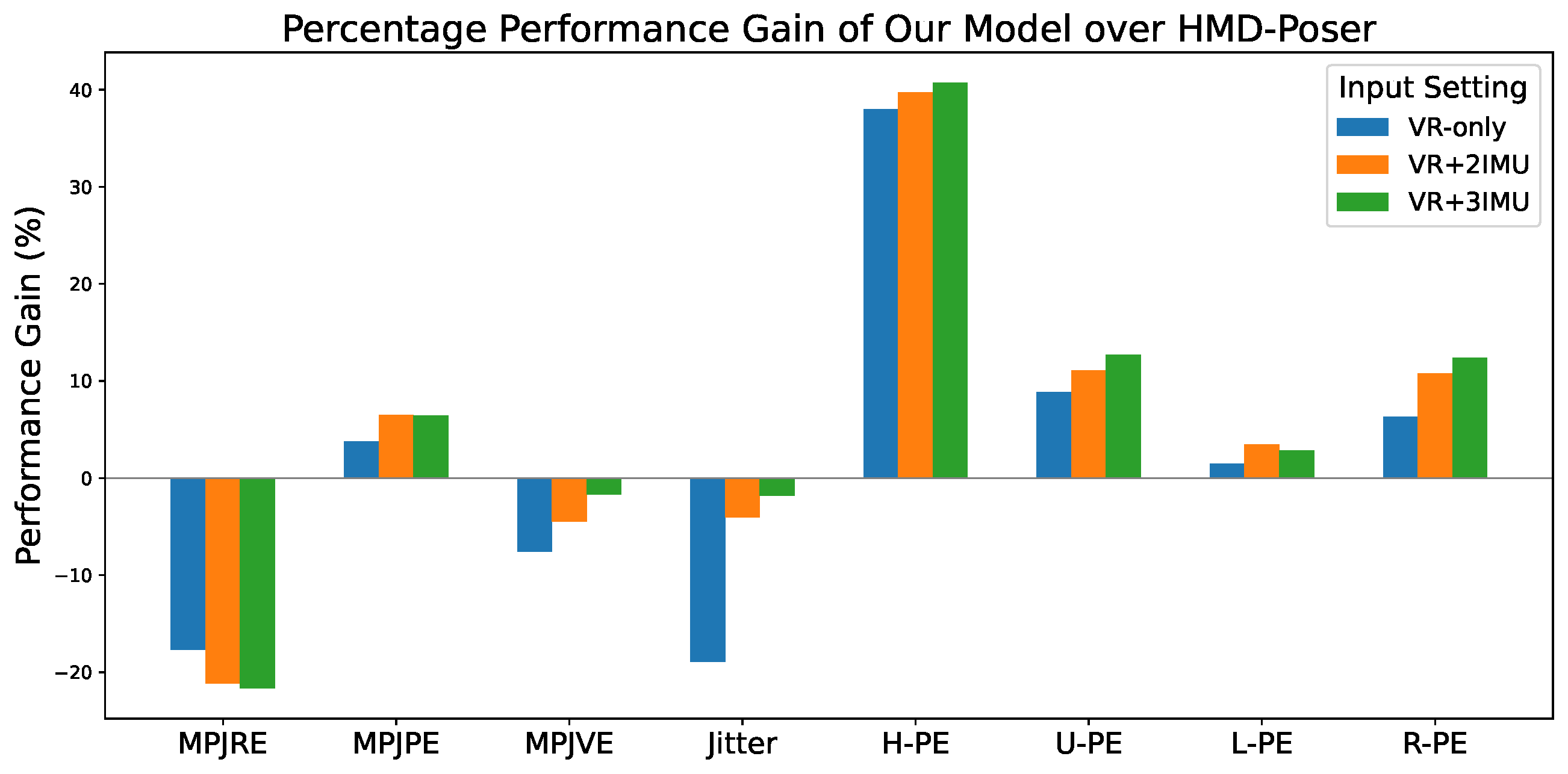

The results in Table 3 reveal performance trends that are consistent with those observed in the offline evaluations (Table 1 and Table 2), showing clear improvements in localization accuracy with competitive continuity. To complement the tabular results and facilitate comparison, the relative gains of the proposed method over HMD-Poser are further visualized in Figure 3.

As shown in Figure 3, the proposed method trades some rotational precision and temporal continuity for improved positional accuracy. Notably, the approach achieves substantial improvements over HMD-Poser in hand localization accuracy. Furthermore, the gap in continuity-related metrics between the proposed method and HMD-Poser progressively narrows as the number of IMUs increases, becoming almost negligible, which further underscores the robustness of the design.

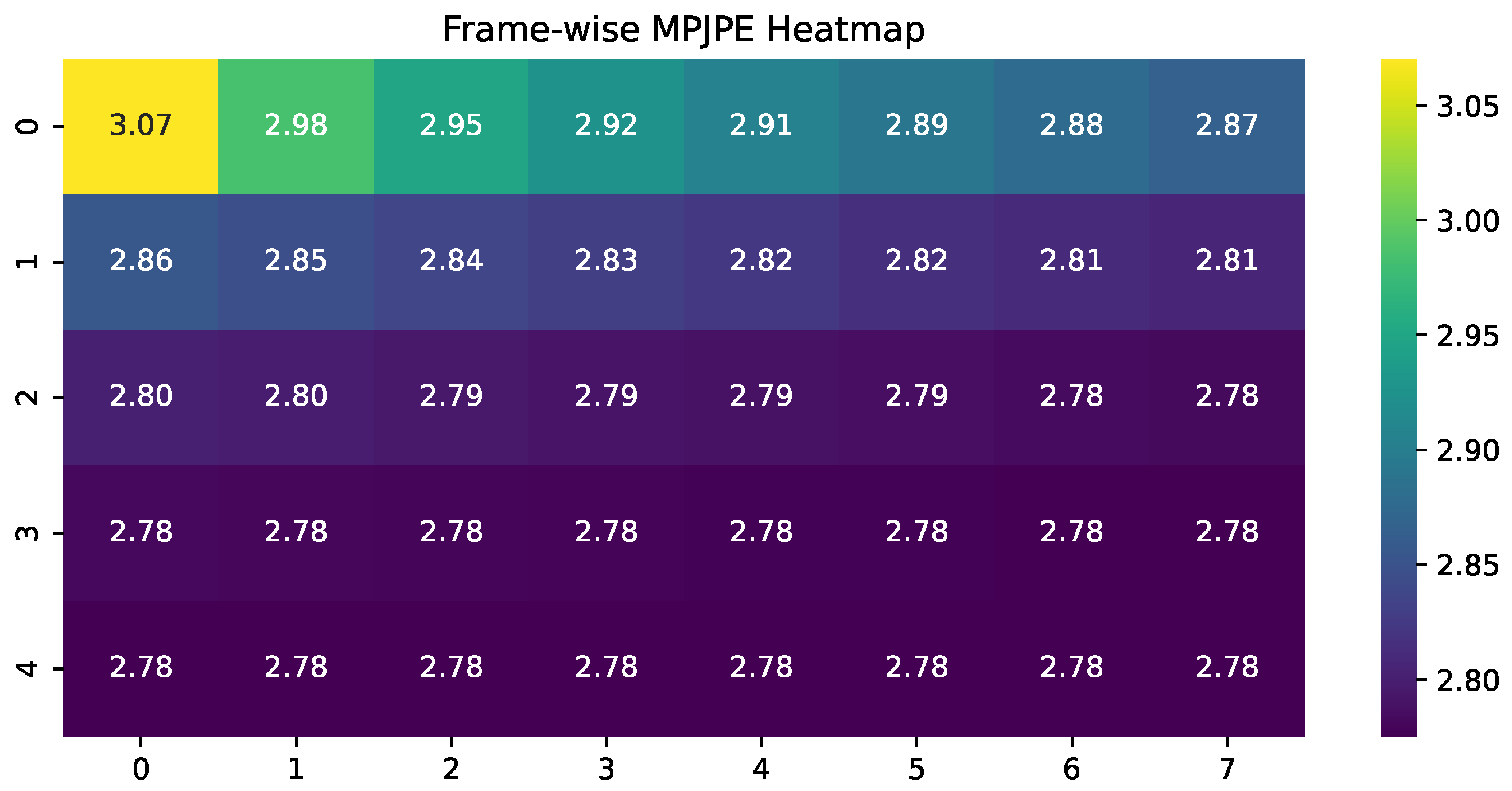

On the other hand, the online evaluation shows improved MPJPE and regional accuracy compared to the offline results. This improvement is primarily attributed to the evaluation strategy, which considers only the last frame of each input window. Because the model processes input sequences causally with a unidirectional LSTM, predictions become progressively more accurate as more past context accumulates. As a result, early-frame predictions typically exhibit higher errors, while the final frame achieves the lowest error, reflecting the model’s strength in real-time inference where future information is unavailable. To illustrate this temporal trend, Figure 4 visualizes the frame-wise MPJPE across the sliding window.

As shown in Figure 4, the model’s prediction error starts at approximately 3.07 cm and decreases steadily over the first 20 frames, reflecting continuous improvement in accuracy as temporal context accumulates. In the final 16 frames, the MPJPE stabilizes around 2.78 cm with negligible variation, indicating that once sufficient past information is integrated, the model reaches a reliable level of performance. This trend highlights the effectiveness of the model’s temporal encoding and suggests a practical deployment strategy: in latency-tolerant scenarios, buffering a few frames for joint inference can reduce the number of forward passes while maintaining high accuracy, which is especially advantageous in resource-constrained or high-frequency applications.

4.1.2. Qualitative Results

To complement the quantitative results, qualitative comparisons are provided to highlight the effectiveness of LGSIK-Poser in reconstructing full-body motion from sparse input. Visualizations were generated using aitviewer [27], an open-source tool for rendering SMPL-based human models. Corresponding video results for each qualitative comparison, covering different poses, motion continuity, and subject diversity, are available at https://github.com/Lucifer-G0/LGSIK-Poser (accessed on 6 August 2025).

Figure 5 presents comparisons between LGSIK-Poser and HMD-Poser on four challenging postures. In the badminton racket swing (Figure 5a), LGSIK-Poser yields more accurate wrist position and rotation than HMD-Poser. In the fast kicking motion (Figure 5b), HMD-Poser produces visibly implausible leg configurations, while LGSIK-Poser outputs exhibit smaller deviations and improved realism. For the standing posture in a card-playing motion performed by a female subject (Figure 5c), HMD-Poser severely distorts the body shape and fails to match correct proportions, whereas LGSIK-Poser more closely resembles the ground truth in both pose and silhouette. In the final case, involving a tilted-head, arms-akimbo posture (Figure 5d), HMD-Poser shows large pelvis shifts and incorrect shape estimates, while LGSIK-Poser maintains more plausible shape and pose fidelity.

In most frames of the static comparisons, both methods appear similarly stable. However, the corresponding videos reveal that HMD-Poser occasionally produces noticeable pose discontinuities or unnatural deformations, particularly in the kicking motion (Figure 5b). In contrast, LGSIK-Poser maintains more coherent and physically plausible motion in such challenging scenarios. This improved robustness is likely due to the use of L2-based loss functions. Because these losses penalize errors quadratically, they constrain large errors more strongly during training and promote smoother predictions.
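The intuition that an L2 penalty constrains large errors more strongly than an L1 penalty can be checked with a small, generic Python sketch. It is not the paper’s loss function. Two hypothetical residual vectors have the same total absolute error: one spreads the error evenly across joints, and the other concentrates it in a single joint.

```python
from typing import Sequence

def l1_loss(residuals: Sequence[float]) -> float:
    """Mean absolute error: every unit of error costs the same."""
    return sum(abs(r) for r in residuals) / len(residuals)

def l2_loss(residuals: Sequence[float]) -> float:
    """Mean squared error: large residuals dominate quadratically."""
    return sum(r * r for r in residuals) / len(residuals)

spread = [1.0, 1.0, 1.0, 1.0]  # four moderate per-joint errors
spike = [0.0, 0.0, 0.0, 4.0]   # the same total error in one joint

print(l1_loss(spread), l1_loss(spike))  # 1.0 1.0
print(l2_loss(spread), l2_loss(spike))  # 1.0 4.0
```

Both vectors cost the same under L1, whereas L2 charges the concentrated error four times as much. The gradient of the squared term, 2r, also grows with the residual, so large per-joint deviations receive proportionally stronger corrections during training.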

To evaluate temporal consistency and motion realism, Figure 6 shows reconstructed sequences of a badminton hitting motion. Both methods demonstrate plausible continuity, but LGSIK-Poser predictions align more closely with the ground truth, particularly in hand and lower-body positions, which tend to drift in HMD-Poser results.

Figure 7 highlights the robustness of LGSIK-Poser across subjects with diverse body shapes and genders. Nine subjects with varying height, body proportions, and clothing perform multiple actions. Ground truth poses (blue) are overlaid with LGSIK-Poser predictions (colored by subject). Across different actions and morphologies, the predictions appear visually plausible and consistent with physical proportions, with no significant deviations observed in pose or shape.

4.2. Ablation Studies

Table 4 reports the average results under three typical sparse input configurations. Each variant removes or alters a specific component of our model (Ours), illustrating the impact of individual modules on accuracy and motion quality. Metrics include joint rotation error (MPJRE), joint position error (MPJPE), motion smoothness (MPJVE and Jitter), and per-region position errors (H-PE, U-PE, L-PE, R-PE).
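For reference, the position- and motion-based metrics can be sketched under commonly used definitions:

- MPJPE: the mean per-joint Euclidean position error.
- MPJVE: the same error applied to finite-difference velocities.
- Jitter: the mean magnitude of the third time derivative (jerk) of the predicted positions.

This pure-Python sketch assumes positions ordered as [frame][joint][xyz] and a 60 Hz frame rate. MPJRE, a rotation error, is omitted for brevity. The exact definitions, units, and scaling behind the paper’s tables are not restated in this section and may differ.

```python
import math
from typing import List, Optional, Sequence

Vec = Sequence[float]
Seq = Sequence[Sequence[Vec]]  # indexed as [frame][joint] -> (x, y, z)

def mpjpe(pred: Seq, gt: Seq, joints: Optional[Sequence[int]] = None) -> float:
    """Mean per-joint Euclidean error, optionally over a subset of joints."""
    idx = range(len(gt[0])) if joints is None else joints
    errs = [math.dist(pf[j], gf[j]) for pf, gf in zip(pred, gt) for j in idx]
    return sum(errs) / len(errs)

def finite_diff(seq: Seq, fps: float) -> List[List[List[float]]]:
    """Forward-difference time derivative; the result has one frame fewer."""
    return [[[(b - a) * fps for a, b in zip(p0, p1)] for p0, p1 in zip(f0, f1)]
            for f0, f1 in zip(seq[:-1], seq[1:])]

def mpjve(pred: Seq, gt: Seq, fps: float = 60.0) -> float:
    """Mean per-joint velocity error (position error of the velocities)."""
    return mpjpe(finite_diff(pred, fps), finite_diff(gt, fps))

def jitter(pred: Seq, fps: float = 60.0) -> float:
    """Mean jerk magnitude of the predicted positions (needs >= 4 frames)."""
    jerk = finite_diff(finite_diff(finite_diff(pred, fps), fps), fps)
    mags = [math.hypot(*v) for frame in jerk for v in frame]
    return sum(mags) / len(mags)
```

A per-region error in the spirit of H-PE could then be obtained by restricting MPJPE to a subset of joints, for example `mpjpe(pred, gt, joints=HAND_JOINTS)`, where `HAND_JOINTS` is a hypothetical list of joint indices.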

Removing graph convolution (noG) slightly improves motion continuity, with lower MPJVE and Jitter. This is likely because the absence of structured connectivity relaxes spatial constraints and lets information propagate freely across joint embeddings. The greater freedom yields smoother outputs but degrades both position and rotation accuracy (MPJPE rises from 2.38 to 2.54 cm, and MPJRE from 2.40 to 2.44). This trade-off suggests that graph convolution effectively encodes skeletal topology and local dependencies. These are essential for precise pose reconstruction but may restrict motion flexibility.

Disabling the pose optimization module (noIK) degrades both joint rotation and position accuracy. The effect is most pronounced for the hands, where H-PE increases from 0.84 to 1.11, which validates the necessity of our partition-based optimization design. When the shape-related embedding group is removed (noShape), stability and smoothness deteriorate (MPJVE 17.94 vs. 15.71; Jitter 9.23 vs. 7.63). This suggests that even coarse anthropometric priors such as limb length and body height help regularize pose and shape predictions.

Replacing our task-specific loss with the original loss formulation from HMD-Poser (hploss) yields the lowest MPJRE (2.03) but significantly degrades joint positioning. The degradation is largest for the hands (H-PE 2.12 vs. 0.84) and smaller for the root (R-PE 2.54 vs. 2.38). This variant also noticeably increases MPJVE and Jitter. These results highlight a trade-off between rotation precision and spatial accuracy. Our design prioritizes reliable end-effector placement over marginal gains in joint rotation, which better matches the perceptual demands of VR/AR applications: users are highly sensitive to inaccuracies in hand and root location, while minor rotation errors often go unnoticed. Overall, the ablation results show that each component of our method contributes meaningfully to balancing accuracy, stability, and motion plausibility. These components are graph-based spatial modeling, hierarchical pose refinement, shape fitting, and the task-oriented loss.

4.3. Inference Efficiency

To assess the computational efficiency of the proposed method, it is compared with several representative approaches: AvatarPoser [4], AvatarJLM [16], and HMD-Poser [5]. All methods are implemented and evaluated within a unified PyTorch framework on the same hardware (an NVIDIA RTX 3090 GPU), using the same 40-frame sliding window for temporal modeling during inference. The input sampling rate is fixed at 60 Hz for all methods to ensure a fair runtime comparison.
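A latency measurement of this kind can be sketched as a timing loop over causal 40-frame windows. The sketch below is an assumption about the protocol rather than the authors’ benchmarking code:

- `model` is any hypothetical callable that consumes a window of frames.
- The first few full-window calls are discarded as warm-up.
- Latency is averaged per call.

When the model runs on a GPU through PyTorch, `torch.cuda.synchronize()` should be called before each clock reading. CUDA kernels are launched asynchronously, so the timer would otherwise stop early.

```python
import time
from collections import deque
from typing import Callable, List, Sequence

Frame = Sequence[float]  # one frame of sparse sensor features (hypothetical layout)

def benchmark(model: Callable[[List[Frame]], object],
              frames: Sequence[Frame],
              window: int = 40,
              warmup: int = 10) -> float:
    """Mean per-call latency (ms) of causal sliding-window inference.

    Every call receives the most recent `window` frames, mimicking online use;
    the first `warmup` full-window calls are timed but discarded.
    """
    buf: deque = deque(maxlen=window)  # keeps only the latest `window` frames
    timings: List[float] = []
    full_calls = 0
    for frame in frames:
        buf.append(frame)
        if len(buf) < window:
            continue  # no complete window yet
        start = time.perf_counter()
        model(list(buf))
        # On a GPU, synchronize the device here before reading the clock.
        elapsed = time.perf_counter() - start
        full_calls += 1
        if full_calls > warmup:
            timings.append(elapsed)
    if not timings:
        raise ValueError("not enough frames for the requested window and warm-up")
    return 1000.0 * sum(timings) / len(timings)
```

With 60 input frames and the defaults, 21 full windows are formed and the last 11 are averaged.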

As shown in Table 5, our method achieves a favorable balance between model complexity and runtime efficiency. It contains only 3.74 M parameters and requires 0.15 GFLOPs per inference. This is significantly lower than AvatarJLM (25.85 M, 1.04 GFLOPs) and HMD-Poser (9.55 M, 0.38 GFLOPs), and its model size (48.8 MB) remains compact. In terms of latency, our approach achieves an inference delay of 15.71 ms and a throughput of 63.65 FPS. Because this delay is below the 16.67 ms frame interval of a 60 Hz input stream, the method meets the real-time requirement at that sampling rate. AvatarPoser runs faster (104.28 FPS), mainly owing to its simpler model pipeline. However, it is designed for a simpler input setup and does not generalize to more complex sensor configurations. These results demonstrate that our method offers competitive efficiency and is well suited for real-time human motion reconstruction in practical VR applications.
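The FPS column of Table 5 matches the reciprocal of the per-call delay. The real-time criterion amounts to comparing that delay with the frame interval of the input stream (1000/60 ≈ 16.67 ms at 60 Hz). The minimal Python check below uses the delays reported in Table 5; the helper names are hypothetical.

```python
from typing import Dict

def fps_from_delay(delay_ms: float) -> float:
    """Throughput implied by a per-call delay in milliseconds."""
    return 1000.0 / delay_ms

def meets_realtime(delay_ms: float, rate_hz: float = 60.0) -> bool:
    """True if one inference finishes within one input frame interval."""
    return delay_ms <= 1000.0 / rate_hz

# Delays (ms) as reported in Table 5.
DELAYS: Dict[str, float] = {
    "AvatarPoser": 9.59,
    "AvatarJLM": 29.11,
    "HMD-Poser": 20.20,
    "Ours": 15.71,
}

for name, delay in DELAYS.items():
    print(f"{name}: {fps_from_delay(delay):.2f} FPS, real-time at 60 Hz: {meets_realtime(delay)}")
# AvatarPoser: 104.28 FPS, real-time at 60 Hz: True
# AvatarJLM: 34.35 FPS, real-time at 60 Hz: False
# HMD-Poser: 49.50 FPS, real-time at 60 Hz: False
# Ours: 63.65 FPS, real-time at 60 Hz: True
```

The same reciprocal relation gives the FK-only ceiling of roughly 153 FPS implied by a 6.54 ms FK stage.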

It is worth noting that the HMD-Poser paper reports an inference speed of 205.7 FPS on an NVIDIA RTX 3080 GPU. To ensure fairness, all models (AvatarPoser, AvatarJLM, and HMD-Poser) were implemented within the same PyTorch framework. They share a unified forward kinematics (FK) implementation consistent with HMD-Poser’s publicly available code. Under this setup, AvatarPoser and AvatarJLM achieved performance comparable to the results reported in their respective papers, whereas HMD-Poser showed a significant discrepancy. To investigate this, the runtime of the FK stage alone was measured with HMD-Poser’s public implementation, yielding an average of 6.54 ms per frame. Even without network inference time, this caps the achievable frame rate at approximately 1000/6.54 ≈ 153 FPS, notably lower than the 205.7 FPS reported in the paper. The discrepancy may stem from differences in the FK implementation or from other pipeline variations not publicly documented in the original work. Regardless, under consistent evaluation settings our method shows clear advantages in parameter count and computational cost, confirming its lightweight nature.
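To make the FK stage concrete, the sketch below computes global joint positions on a kinematic tree using the standard recursion for SMPL-style skeletons:

1. Compose each joint’s local rotation with its parent’s global rotation.
2. Offset the joint from its parent by the rest-pose bone vector, rotated by the parent’s global rotation.

This is a minimal pure-Python illustration, not HMD-Poser’s or the authors’ implementation. It assumes that parents precede children in the joint order and that rotations are 3×3 matrices. The three-joint chain used for testing is hypothetical. Because each joint depends on its parent, the recursion is sequential along every chain. Implementation choices such as per-joint loops versus batched tensor operations can therefore plausibly change FK runtime substantially.

```python
import math
from typing import List, Sequence

Mat3 = List[List[float]]
Vec3 = List[float]

def matmul(a: Mat3, b: Mat3) -> Mat3:
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(a: Mat3, v: Sequence[float]) -> Vec3:
    """3x3 matrix times 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]

def rot_z(angle: float) -> Mat3:
    """Rotation about the z axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def forward_kinematics(parents: Sequence[int], local_rots: Sequence[Mat3],
                       offsets: Sequence[Sequence[float]],
                       root_pos: Sequence[float]) -> List[Vec3]:
    """Global joint positions from local rotations on a kinematic tree.

    parents[j] is the parent of joint j (-1 for the root) and must precede j;
    offsets[j] is the rest-pose bone vector from the parent to joint j.
    """
    n = len(parents)
    glob_rots: List[Mat3] = []
    positions: List[Vec3] = []
    for j in range(n):
        p = parents[j]
        if p < 0:
            glob_rots.append(local_rots[j])
            positions.append(list(root_pos))
        else:
            glob_rots.append(matmul(glob_rots[p], local_rots[j]))
            bone = matvec(glob_rots[p], offsets[j])  # bone rotated by parent's global rotation
            positions.append([a + b for a, b in zip(positions[p], bone)])
    return positions
```

Because the lists are filled in joint order, `glob_rots[p]` and `positions[p]` are always available when joint j is processed.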

5. Discussion

LGSIK-Poser achieves accurate and efficient full-body motion reconstruction across diverse sparse input configurations by combining anatomically guided body partitioning with a lightweight, skeleton-aware graph reasoning module. This module employs a hand-crafted adjacency matrix aligned with true skeletal connectivity, restricting feature interactions to anatomically relevant neighbors and thereby enhancing interpretability and modularity. Unlike Transformer-based methods with dense, entangled attention maps that obscure feature dependencies, this structured design enables transparent, anatomically meaningful information flow. With fewer parameters and lower computational cost than monolithic Transformers, LGSIK-Poser effectively balances accuracy, interpretability, and real-time suitability for deployment on resource-constrained platforms such as standalone VR devices.
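Mask-restricted message passing of this kind can be sketched in a few lines. In this generic illustration, node i aggregates transformed features only from nodes j with mask[i][j] = 1 (self-loops included). The aggregated features are averaged over the node’s neighbours and passed through a ReLU. The four groups and the mask are hypothetical and much smaller than the nine semantic groups in the paper. They only mimic the pattern of Figure 2, in which the torso integrates all groups and a shape node broadcasts to the others. This is not the authors’ layer.

```python
from typing import List, Sequence

Matrix = List[List[float]]

# Hypothetical groups: 0 = torso, 1 = left arm, 2 = right arm, 3 = shape.
# MASK[i][j] == 1 means node i receives messages from node j.
MASK: List[List[int]] = [
    [1, 1, 1, 1],  # torso integrates every group
    [1, 1, 0, 1],  # left arm: itself, torso, shape prior
    [1, 0, 1, 1],  # right arm: itself, torso, shape prior
    [0, 0, 0, 1],  # shape node only keeps its own static prior
]

def linear(weight: Matrix, x: Sequence[float]) -> List[float]:
    """Multiply an (out_dim x in_dim) weight matrix by one feature vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]

def masked_graph_conv(features: Sequence[Sequence[float]],
                      mask: Sequence[Sequence[int]],
                      weight: Matrix) -> List[List[float]]:
    """One round of message passing restricted to the mask's edges."""
    transformed = [linear(weight, h) for h in features]
    out_dim = len(weight)
    out: List[List[float]] = []
    for row in mask:
        nbrs = [j for j, m in enumerate(row) if m]
        mean = [sum(transformed[j][d] for j in nbrs) / len(nbrs)
                for d in range(out_dim)]
        out.append([max(0.0, v) for v in mean])  # ReLU
    return out
```

Changing the right-arm feature leaves the left-arm output unchanged, because MASK[1][2] is 0. Under dense global self-attention, by contrast, every token can influence every other.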

The region-specific inverse kinematics refinement module significantly improves local pose quality, particularly in hand localization. Across all sensor configurations, LGSIK-Poser consistently reduces hand position errors compared to HMD-Poser, achieving up to 48% relative improvement in the VR-only setting. Accurate hand tracking is critical for immersive VR and AR applications because hands are the main interface for interaction and visual feedback. Improved hand tracking not only leads to better quantitative results but also enhances perceptual realism and user embodiment.
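The relative gains quoted here can be reproduced with the usual relative-reduction formula for lower-is-better metrics, 100 × (baseline − ours) / baseline. The sketch below applies it to the H-PE values of Table 1. Treating this as the exact formula behind Figure 3 is an assumption.

```python
from typing import Dict, Tuple

def relative_improvement(baseline: float, ours: float) -> float:
    """Percentage reduction of a lower-is-better error (positive = better)."""
    return 100.0 * (baseline - ours) / baseline

# H-PE from Table 1 (equal-weight offline evaluation): (HMD-Poser, Ours).
HAND_PE: Dict[str, Tuple[float, float]] = {
    "VR-only": (1.65, 0.85),
    "VR+2IMU": (1.39, 0.82),
    "VR+3IMU": (1.27, 0.83),
}

for setting, (base, ours) in HAND_PE.items():
    print(f"{setting}: {relative_improvement(base, ours):.1f}%")
# VR-only: 48.5%
# VR+2IMU: 41.0%
# VR+3IMU: 34.6%
```

The VR-only value agrees with the roughly 48% improvement stated above.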

Root joint localization also benefits from targeted optimization, but improvements in lower-limb accuracy are less pronounced. This limitation stems from the scarcity of position-guided inputs and the small number of IMUs placed on the legs. Complex leg dynamics, such as asymmetric gait patterns, rapid directional changes, and foot–ground contact interactions, are difficult to infer from sparse orientation data alone. Weak constraints on the global root position further complicate the problem. These challenges highlight the difficulty of resolving lower-body ambiguities under sparse input conditions. To address this, future work could leverage physical contact cues or explore generative modeling approaches to better constrain plausible leg motion.

LGSIK-Poser shows higher jitter than HMD-Poser in the equal-weighted offline evaluation. Under length-weighted metrics, however, its jitter is comparable when IMU inputs are available. It also achieves consistently lower joint position errors during online inference with causal sliding windows. These results support the model’s robustness under temporally constrained and partially observable conditions and indicate its practical suitability for real-time deployment in consumer-grade VR systems.
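The two offline protocols in Tables 1 and 2 differ only in how per-sequence errors are pooled. A minimal sketch with hypothetical numbers shows how this choice can shift the average. Under equal weighting, a short sequence counts as much as a long one, whereas length weighting lets long sequences dominate.

```python
from typing import Sequence

def equal_weight_mean(seq_errors: Sequence[float]) -> float:
    """Each sequence counts once, regardless of its length (Table 1 style)."""
    return sum(seq_errors) / len(seq_errors)

def length_weighted_mean(seq_errors: Sequence[float], seq_frames: Sequence[int]) -> float:
    """Each sequence counts in proportion to its frame count (Table 2 style)."""
    return sum(e * n for e, n in zip(seq_errors, seq_frames)) / sum(seq_frames)

errors = [2.0, 4.0]  # hypothetical per-sequence mean errors
frames = [300, 100]  # a long and a short sequence

print(equal_weight_mean(errors))             # 3.0
print(length_weighted_mean(errors, frames))  # 2.5
```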

6. Conclusions

This paper introduces LGSIK-Poser, a real-time, skeleton-aware framework for full-body human motion reconstruction from sparse VR/AR inputs. The model accommodates diverse sensor configurations without requiring architecture changes, including head-mounted displays, controllers, and up to three optional inertial measurement units. LGSIK-Poser combines grouped temporal modeling, masked graph convolution, and region-specific inverse kinematics refinement. Together, these achieve accurate, consistent, and interpretable pose reconstruction while significantly improving runtime efficiency over state-of-the-art baselines such as HMD-Poser and AvatarJLM. This capability is especially valuable for VR and AR applications such as fitness, virtual meetings, and gaming, where precise and efficient full-body tracking enhances immersion and interaction quality.

Although the method performs well across various inputs, modeling complex lower-limb motions remains challenging under sparse setups. In particular, the method struggles to capture ambiguous leg actions such as fast kicks and to distinguish slow movements from slight tremors. These challenges stem from limited positional input and the inherent difficulty of resolving subtle leg dynamics from mainly sparse orientation data. In addition, the slightly higher jitter indicates room to improve temporal stability during rapid motions.

Future work will focus on enhancing lower-limb motion fidelity through improved task-specific constraints and scene-aware priors. In particular, foot–ground contact constraints derived from environmental geometry will be incorporated to enforce more physically plausible leg motion. Additionally, diffusion-based generative models will be investigated to better model lower-body dynamics and reduce ambiguities in under-constrained scenarios.

Author Contributions

Conceptualization, L.L.; methodology, L.L. and J.L.; software, L.L.; validation, L.L. and J.L.; formal analysis, L.L.; investigation, L.L. and J.L.; data curation, J.L.; writing—original draft preparation, L.L.; writing—review and editing, J.L. and W.Z.; visualization, L.L.; supervision, W.Z.; project administration, W.Z.; funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China Regional Project (grant number 61966007) and the Guangxi Natural Science Foundation General Program (grant number 2022GXNSFAA035629). The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Huang, Y.; Kaufmann, M.; Aksan, E.; Black, M.J.; Hilliges, O.; Pons-Moll, G. Deep inertial poser: Learning to reconstruct human pose from sparse inertial measurements in real time. ACM Trans. Graph. 2018, 37, 185.

- Yi, X.; Zhou, Y.; Xu, F. TransPose: Real-time 3D human translation and pose estimation with six inertial sensors. ACM Trans. Graph. 2021, 40, 86.

- Du, Y.; Kips, R.; Pumarola, A.; Starke, S.; Thabet, A.; Sanakoyeu, A. Avatars Grow Legs: Generating Smooth Human Motion from Sparse Tracking Inputs with Diffusion Model. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Los Alamitos, CA, USA, 17–24 June 2023; pp. 481–490.

- Jiang, J.; Streli, P.; Qiu, H.; Fender, A.; Laich, L.; Snape, P.; Holz, C. AvatarPoser: Articulated Full-Body Pose Tracking from Sparse Motion Sensing. In Computer Vision—ECCV 2022: Proceedings of the 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part V; Springer: Berlin/Heidelberg, Germany, 2022; pp. 443–460.

- Dai, P.; Zhang, Y.; Liu, T.; Fan, Z.; Du, T.; Su, Z.; Zheng, X.; Li, Z. HMD-Poser: On-Device Real-time Human Motion Tracking from Scalable Sparse Observations. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 874–884.

- Ponton, J.L.; Yun, H.; Aristidou, A.; Andujar, C.; Pelechano, N. SparsePoser: Real-time Full-body Motion Reconstruction from Sparse Data. ACM Trans. Graph. 2023, 43, 5.

- Ponton, J.L.; Pujol, E.; Aristidou, A.; Andujar, C.; Pelechano, N. DragPoser: Motion reconstruction from variable sparse tracking signals via latent space optimization. Comput. Graph. Forum 2024, 44, e70026.

- von Marcard, T.; Rosenhahn, B.; Black, M.J.; Pons-Moll, G. Sparse Inertial Poser: Automatic 3D Human Pose Estimation from Sparse IMUs. Comput. Graph. Forum 2017, 36, 349–360.

- Nagaraj, D.; Schake, E.; Leiner, P.; Werth, D. An RNN-Ensemble approach for Real Time Human Pose Estimation from Sparse IMUs. In Proceedings of the 3rd International Conference on Applications of Intelligent Systems (APPIS 2020), Las Palmas de Gran Canaria, Spain, 7–9 January 2020.

- Jiang, Y.; Ye, Y.; Gopinath, D.; Won, J.; Winkler, A.W.; Liu, C.K. Transformer Inertial Poser: Real-time Human Motion Reconstruction from Sparse IMUs with Simultaneous Terrain Generation. In SA ’22: SIGGRAPH Asia 2022 Conference Papers; Association for Computing Machinery: New York, NY, USA, 2022.

- Yi, X.; Zhou, Y.; Habermann, M.; Shimada, S.; Golyanik, V.; Theobalt, C.; Xu, F. Physical Inertial Poser (PIP): Physics-aware Real-time Human Motion Tracking from Sparse Inertial Sensors. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13157–13168.

- Yi, X.; Zhou, Y.; Xu, F. Physical Non-inertial Poser (PNP): Modeling Non-inertial Effects in Sparse-inertial Human Motion Capture. In SIGGRAPH ’24: ACM SIGGRAPH 2024 Conference Papers; Association for Computing Machinery: New York, NY, USA, 2024.

- Winkler, A.; Won, J.; Ye, Y. QuestSim: Human Motion Tracking from Sparse Sensors with Simulated Avatars. In SA ’22: SIGGRAPH Asia 2022 Conference Papers; Association for Computing Machinery: New York, NY, USA, 2022.

- Lee, S.; Starke, S.; Ye, Y.; Won, J.; Winkler, A. QuestEnvSim: Environment-Aware Simulated Motion Tracking from Sparse Sensors. In SIGGRAPH ’23: ACM SIGGRAPH 2023 Conference Proceedings; Association for Computing Machinery: New York, NY, USA, 2023.

- Makoviychuk, V.; Wawrzyniak, L.; Guo, Y.; Lu, M.; Storey, K.; Macklin, M.; Hoeller, D.; Rudin, N.; Allshire, A.; Handa, A.; et al. Isaac Gym: High Performance GPU-Based Physics Simulation for Robot Learning. arXiv 2021, arXiv:2108.10470.

- Zheng, X.; Su, Z.; Wen, C.; Xue, Z.; Jin, X. Realistic Full-Body Tracking from Sparse Observations via Joint-Level Modeling. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 14632–14642.

- Dittadi, A.; Dziadzio, S.; Cosker, D.; Lundell, B.; Cashman, T.; Shotton, J. Full-Body Motion from a Single Head-Mounted Device: Generating SMPL Poses from Partial Observations. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 11667–11677.

- Aliakbarian, S.; Cameron, P.; Bogo, F.; Fitzgibbon, A.; Cashman, T.J. FLAG: Flow-based 3D Avatar Generation from Sparse Observations. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13243–13252.

- Tang, J.; Wang, J.; Ji, K.; Xu, L.; Yu, J.; Shi, Y. A Unified Diffusion Framework for Scene-aware Human Motion Estimation from Sparse Signals. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 21251–21262.

- Mahmood, N.; Ghorbani, N.; Troje, N.F.; Pons-Moll, G.; Black, M. AMASS: Archive of Motion Capture As Surface Shapes. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5441–5450.

- Carnegie Mellon University. CMU MoCap Dataset. Available online: http://mocap.cs.cmu.edu (accessed on 6 August 2025).

- Troje, N.F. Decomposing Biological Motion: A Framework for Analysis and Synthesis of Human Gait Patterns. J. Vis. 2002, 2, 2.

- Müller, M.; Röder, T.; Clausen, M.; Eberhardt, B.; Krüger, B.; Weber, A. Documentation Mocap Database HDM05; Technical Report CG-2007-2; Universität Bonn: Bonn, Germany, 2007.

- Loper, M.; Mahmood, N.; Romero, J.; Pons-Moll, G.; Black, M.J. SMPL: A skinned multi-person linear model. ACM Trans. Graph. 2015, 34, 248.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

- Kaufmann, M.; Vechev, V.; Mylonopoulos, D. AITViewer. 2022. Available online: https://github.com/eth-ait/aitviewer (accessed on 6 August 2025).

Figure 1.

Overview of the proposed framework. The model comprises three core modules: (1) grouped spatio-temporal modeling using LSTMs and a graph convolutional network (GConv) with mask-based message passing; (2) partition-based pose optimization that refines joint rotations per anatomical group; and (3) shape fitting that estimates SMPL shape parameters and reconstructs the full-body mesh via forward kinematics. ① ×9 denotes processing across nine semantic groups, and ② ×5 applies to five local joint partitions. Bottom row: 3D visualizations of key stages, aligned with each module through dashed connectors.

Figure 2.

Visualization of group-wise message passing topology inspired by anatomical adjacency. Each super-node denotes a semantic group located at a representative joint. The illustrated connections reflect the structure induced by the fixed binary mask , which governs inter-group communication in the graph convolution. The torso acts as a global integrator, while the shape group distributes static anthropometric priors to other groups. For clarity, edges from the shape node are not shown but are present in the model implementation.

Figure 3.

Relative performance gain (%) of the proposed method over HMD-Poser across multiple metrics under online evaluation. Positive values indicate better performance.

Figure 4.

Heatmap of average MPJPE for each frame in the 40-frame sliding window, rearranged as a grid. Later frames show lower errors, indicating improved prediction with accumulated temporal context.

Figure 5.

Qualitative comparisons with HMD-Poser [5] on challenging poses. Each block shows the ground truth (GT, blue), the prediction from HMD-Poser (yellow), and LGSIK-Poser (green), with each prediction rendered together with GT for direct comparison. Sub-figures: (a) badminton racket swing; (b) fast kicking motion; (c) female subject standing while playing cards; (d) female subject standing while playing cards, with tilted head and arms akimbo.

Figure 6.

Sequence visualization of a badminton motion. Rows correspond to the ground truth (GT), HMD-Poser + GT overlay, and LGSIK-Poser + GT overlay. Colors progress from lighter to darker from left to right, indicating temporal progression from earlier to later frames.

Figure 7.

Visualization of different subjects across multiple actions. Each column corresponds to a different body shape and gender; rows show various actions. Ground truth is visualized in blue, and LGSIK-Poser predictions are color-coded per subject.

Table 1.

Offline evaluation with equal-weight averaging. All sequences contribute equally regardless of length. Bold values indicate the best performance for each input setting.

| Input | Method | MPJRE | MPJPE | MPJVE | Jitter | H-PE | U-PE | L-PE | R-PE |
|---|---|---|---|---|---|---|---|---|---|
| 6IMU | TransPose [2] | 3.05 | 4.57 | 22.41 | 7.98 | 3.83 | 3.05 | 6.76 | 4.62 |
| | PIP [11] | 2.45 | 4.54 | 19.02 | 8.13 | 4.54 | 3.15 | 6.53 | 4.54 |
| VR-only | AvatarPoser [4] | 2.94 | 5.84 | 26.60 | 13.97 | 4.58 | 3.24 | 9.59 | 5.05 |
| | AvatarJLM [16] | 2.81 | 5.03 | 20.91 | 6.94 | 2.01 | 3.00 | 7.96 | 4.58 |
| | HMD-Poser [5] | 2.28 | 3.19 | 17.47 | 6.07 | 1.65 | 1.67 | 5.40 | 3.02 |
| | Ours | 2.71 | 3.05 | 19.32 | 8.29 | 0.85 | 1.43 | 5.38 | 2.66 |
| VR+2IMU | HMD-Poser [5] | 1.83 | 2.27 | 13.28 | 5.96 | 1.39 | 1.51 | 3.35 | 2.74 |
| | Ours | 2.27 | 2.15 | 14.14 | 7.13 | 0.82 | 1.35 | 3.31 | 2.37 |
| VR+3IMU | HMD-Poser [5] | 1.73 | 1.89 | 11.03 | 5.35 | 1.27 | 1.46 | 2.46 | 2.37 |
| | Ours | 2.18 | 1.88 | 13.10 | 7.25 | 0.83 | 1.31 | 2.71 | 2.04 |

Table 2.

Offline evaluation with length-weighted averaging. Each sequence contributes proportionally to its frame count. Bold values indicate the best performance for each input setting.

| Input | Method | MPJRE | MPJPE | MPJVE | Jitter | H-PE | U-PE | L-PE | R-PE |
|---|---|---|---|---|---|---|---|---|---|
| VR-only | HMD-Poser [5] | 2.72 | 3.91 | 18.62 | 5.97 | 1.56 | 1.82 | 6.93 | 3.47 |
| | Ours | 2.94 | 3.58 | 18.76 | 8.29 | 0.93 | 1.60 | 6.43 | 3.14 |
| VR+2IMU | HMD-Poser [5] | 2.19 | 2.76 | 13.44 | 5.64 | 1.55 | 1.76 | 4.21 | 3.20 |
| | Ours | 2.42 | 2.47 | 13.08 | 5.81 | 0.91 | 1.51 | 3.85 | 2.79 |
| VR+3IMU | HMD-Poser [5] | 2.07 | 2.42 | 12.51 | 5.73 | 1.60 | 1.73 | 3.42 | 2.78 |
| | Ours | 2.31 | 2.13 | 11.88 | 5.76 | 0.91 | 1.46 | 3.10 | 2.37 |

Table 3.

Online evaluation using sliding window input (window size = 40, step = 1). Only the last frame of each window is used for evaluation. Bold values indicate the best performance for each input setting.

| Input | Method | MPJRE | MPJPE | MPJVE | Jitter | H-PE | U-PE | L-PE | R-PE |
|---|---|---|---|---|---|---|---|---|---|
| VR-only | HMD-Poser [5] | 2.32 | 3.20 | 18.23 | 7.13 | 1.37 | 1.58 | 5.54 | 2.86 |
| | Ours | 2.73 | 3.08 | 19.61 | 8.48 | 0.85 | 1.44 | 5.46 | 2.68 |
| VR+2IMU | HMD-Poser [5] | 1.89 | 2.32 | 13.69 | 6.88 | 1.36 | 1.53 | 3.47 | 2.69 |
| | Ours | 2.29 | 2.17 | 14.30 | 7.16 | 0.82 | 1.36 | 3.35 | 2.40 |
| VR+3IMU | HMD-Poser [5] | 1.80 | 2.03 | 13.00 | 7.13 | 1.40 | 1.50 | 2.81 | 2.34 |
| | Ours | 2.19 | 1.90 | 13.22 | 7.26 | 0.83 | 1.31 | 2.73 | 2.05 |

Table 4.

Ablation study results. Each variant disables or modifies one component of the full model, and the performance is averaged over three sparse input settings. Bold values indicate the best performance under each metric across the methods.

| Method | MPJRE | MPJPE | MPJVE | Jitter | H-PE | U-PE | L-PE | R-PE |
|---|---|---|---|---|---|---|---|---|
| noG | 2.44 | 2.54 | 15.41 | 7.15 | 0.89 | 1.45 | 4.11 | 2.55 |
| noIK | 2.47 | 2.60 | 16.89 | 7.18 | 1.11 | 1.47 | 4.24 | 2.48 |
| noShape | 2.74 | 2.70 | 17.94 | 9.23 | 0.96 | 1.63 | 4.23 | 2.73 |
| hploss | 2.03 | 2.64 | 20.02 | 9.79 | 2.12 | 1.68 | 4.02 | 2.54 |
| Ours | 2.40 | 2.38 | 15.71 | 7.63 | 0.84 | 1.37 | 3.85 | 2.38 |

Table 5.

Comparison of runtime efficiency and model complexity between representative methods and our method.

| Method | Params (M) | FLOPs (GFLOPs) | Size (MB) | Delay (ms) | FPS |
|---|---|---|---|---|---|
| AvatarPoser [4] | 3.34 | 0.13 | 15.8 | 9.59 | 104.28 |
| AvatarJLM [16] | 25.85 | 1.04 | 243.5 | 29.11 | 34.35 |
| HMD-Poser [5] | 9.55 | 0.38 | 98.6 | 20.20 | 49.50 |
| Ours | 3.74 | 0.15 | 48.8 | 15.71 | 63.65 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).