1. Introduction and Research Contribution

The integration of generative artificial intelligence (GAI) into educational contexts has reignited enduring debates about AI’s pedagogical roles. While initial discussions emphasized its capacity to support higher-order thinking [1], more recent perspectives highlight GAI’s potential to foster dialogic interaction and metacognitive engagement [2,3]. In this view, GAI emerges not merely as a cognitive aid, but as a facilitator of interpretive and ethical inquiry. Recent classroom studies further demonstrate that GAI can scaffold vocabulary development, narrative production, and multimodal engagement among young learners, particularly when integrated into culturally and developmentally responsive design frameworks [4,5].

Nonetheless, we acknowledge that a growing body of critical scholarship questions whether generative AI in education promotes ethical and epistemically diverse learning or merely reinforces algorithmic monocultures, commercial logics, and cognitive reductionism [6]. In particular, concerns have been raised that GAI may dehumanize learning, marginalize sociocultural plurality, and perpetuate extractive data practices. This paper does not dismiss such critiques; rather, it takes them seriously and proposes a design-based pedagogical response, one that aims to reframe AI as a culturally situated, ethically constrained, and educator-mediated tool for interpretive growth in the humanities.

Critical scholarship further underscores the reductive application of AI technologies in education, which privileges behavioral compliance, efficiency, and quantifiable metrics [7,8,9]. These tendencies risk marginalizing the affective, interpretive, and culturally embedded dimensions of learning. Such concerns are especially salient in the humanities, where symbolic reasoning and narrative complexity are integral to meaning-making [10,11]. Emerging assistive technologies, such as the Smart AI Reading Assistant (SARA), attempt to address these concerns by incorporating visual tracking and individualized feedback to support ethical and affective reading comprehension [12].

Despite the expanding literature on AI in education, the domain of literary comprehension in primary schooling remains underexplored, particularly in relation to normative, developmental, and semantic safeguards for AI-generated content. This paper addresses this gap by introducing a modular, design-informed framework that supports interpretive engagement with literary texts among learners aged 7–12. At this stage, students transition from literal decoding to symbolic understanding [13], acquiring competencies such as recognizing metaphor, irony, narrative stance, and intertextual connections. These processes align with the multiliteracies framework [14,15], which foregrounds meaning-making across semiotic modes and diverse cultural contexts.

Canonical texts, such as Shakespearean works commonly featured in Anglophone curricula, pose significant challenges to young readers due to their syntactic density, historical idioms, and abstract imagery [16]. Yet these works offer unique opportunities for symbolic and intercultural exploration, particularly when read alongside postcolonial, oral, or regional literary traditions [17]. Commercial Large Language Models (LLMs), however, frequently produce oversimplified or developmentally inappropriate outputs [2,3], leaving educators without reliable mechanisms to mediate AI use in pedagogically sound ways [18].

Meanwhile, recent advances such as GenAIReading illustrate how large language models, when combined with image generation and interactive textbooks, can improve comprehension and symbolic access to abstract literary content [19].

To address these limitations, we propose a modular framework that leverages the ChatGPT API, augmented by DALL·E for visual scaffolding. Grounded in design-based research and multimodal pedagogy [15,20], the system enables age-aligned transformations of literary texts, such as simplification, visual-narrative retellings, lexical variation, and multilingual augmentation. At its core lies the Ethical–Pedagogical Validation Layer (EPVL), a real-time auditing component that evaluates AI outputs along four dimensions: (1) developmental suitability, (2) semantic fidelity, (3) cultural sensitivity, and (4) ethical transparency [21,22].

Each dimension is implemented through embedded prompting logic and curated criteria. Semantic fidelity entails preserving not only lexical meaning but also tone and interpretive nuance [23]. Cultural sensitivity involves identifying context-deficient, biased, or Eurocentric metaphors [24]. Ethical transparency encompasses traceability of generative processes and detection of problematic reductions or omissions [25].

While the framework ensures developmental alignment and semantic fidelity, it also directly addresses the critical need for systematic bias detection and trust calibration in generative AI–mediated learning [26]. LLMs are known to replicate cultural stereotypes and ideological assumptions embedded in training data, which can lead to distortions in literary content, subtly reinforcing Eurocentric metaphors, gendered tropes, or ideological framing that may contradict curricular goals [27,28]. Such risks are especially pronounced in primary humanities education, where nuanced symbolic reasoning and intercultural perspectives are central to learning outcomes. To mitigate these threats and reinforce educators’ trust in AI-assisted adaptations, the EPVL embeds an explicit bias-auditing mechanism alongside interpretive and ethical checks, ensuring that each AI-generated output aligns with normative expectations for cultural sensitivity and epistemic fairness [29]. This bias-aware layer transforms the system into an accountable co-author rather than an unchecked generator, supporting educators’ professional judgment while guarding against subtle automation biases that could otherwise go unnoticed.

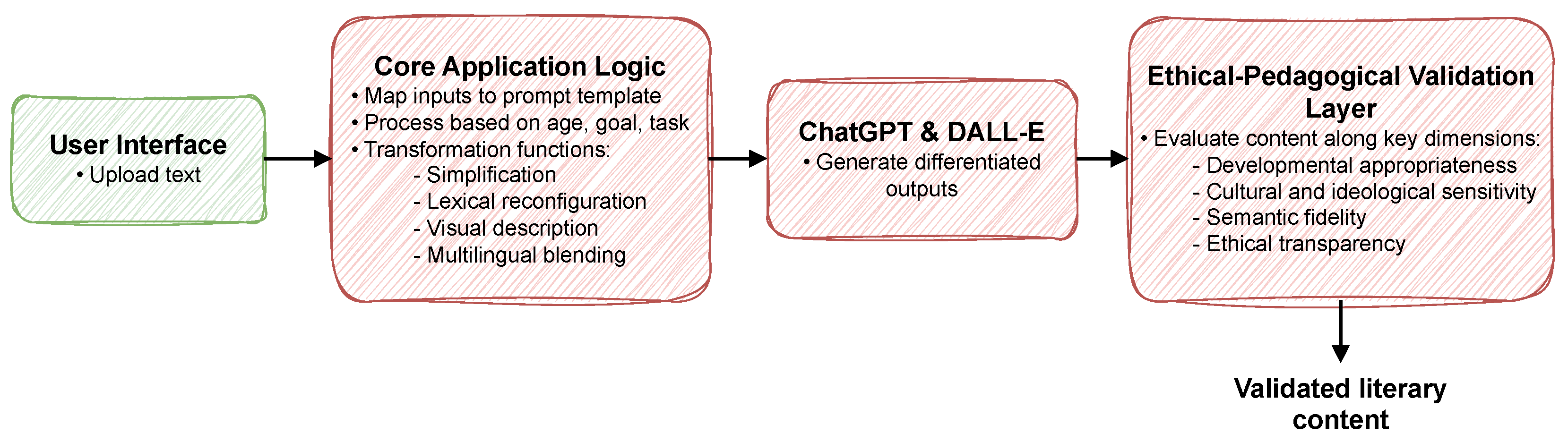

Figure 1 illustrates the system architecture. The framework acts as an intermediary between educators and commercial GAI platforms, enabling pedagogically oriented queries without requiring technical expertise. It incorporates evolving curricular and ethical guidelines, such as those from ministries or expert bodies, and routes each query through the EPVL before returning validated, developmentally appropriate responses. This design sustains educator agency and institutional oversight while decoupling pedagogical governance from proprietary infrastructures.

This study explores how GAI, when embedded within a pedagogically structured and ethically regulated infrastructure, can enhance the accessibility, emotional resonance, and interpretive depth of literary texts for young learners. A comprehensive overview of the pedagogical theories and instructional frameworks underpinning this work is provided in Appendix A.

To inform the system’s refinement, a pilot evaluation was conducted with eight primary school educators, who interacted directly with the implemented framework across all core modules. While limited in scope, this preliminary study provided formative feedback on usability, interpretive scaffolding, and alignment with instructional objectives, laying the groundwork for broader empirical validation.

Rather than treating generative AI as inherently harmful or naively beneficial, this paper advances a middle path: a normative and pedagogically grounded approach that directly responds to critical concerns while harnessing the potential of AI to foster symbolic reasoning, cultural sensitivity, and developmental alignment in literary education.

Summary of Contributions

This paper makes both conceptual and infrastructural contributions to the field of AI in education, with a focus on primary literary pedagogy. Specifically, it offers:

Overall, the framework advances a scalable, ethically grounded, and pedagogically rich approach to GAI integration in primary literary education, anchored in teacher agency and normative accountability.

2. System Architecture

The architecture of the framework is guided by four principal design imperatives. First, it prioritizes the preservation of literary meaning and emotional resonance during all transformations, ensuring that simplification or translation does not result in semantic erosion [17]. Second, it upholds the agency and ethical discernment of the educator, positioning them not as passive users of algorithmic output, but as reflective co-authors within a pedagogical dialogue [33]. Third, it commits to inclusive access by enabling all educators, regardless of technical background, to engage with AI meaningfully, without requiring prompt engineering or programming expertise [31]. Fourth, it embeds ethical evaluation within the generative process itself, rather than as an external filter, aligning AI behavior with pedagogical intention and normative responsibility. The architecture of the proposed framework is illustrated in Figure 2.

Technically, the system unfolds across four interconnected layers. The user interface layer is implemented as a browser-based graphical interface, accessible to non-technical users, particularly teachers in primary and lower secondary settings. Here, educators can upload texts, activate desired transformation functions, preview outputs, and export finalized content. The frontend is built using React.js, with role-specific dashboards enabling differentiated access for educators, curriculum designers, and institutional reviewers.

The core application logic maps these inputs to curated prompt templates and organizes them into instructional workflows. Prompt handling is mediated through a controlled pipeline that standardizes the request structure before forwarding it to the underlying generative engine. This middleware is implemented as a set of modular microservices, orchestrated using Docker containers and managed via a RESTful API gateway. Workflow coordination is supported by Node.js and Express.js, enabling asynchronous processing and modular extension.
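To make the mapping from educator selections to curated prompt templates more concrete, the following is a minimal Python sketch. The template wording, the names `build_request` and `GenerationRequest`, and the default model identifier are illustrative assumptions rather than the framework's actual templates; since the deployed middleware is described as Node.js/Express microservices, this mirrors only the logic, not the stack.

```python
from dataclasses import dataclass
from string import Template

# Hypothetical curated templates, keyed by the transformation an educator selects.
PROMPT_TEMPLATES = {
    "simplify": Template(
        "Rewrite the following literary excerpt for learners aged $age, "
        "preserving its tone and core meaning.\n\n$text"
    ),
    "remove_adjectives": Template(
        "Remove the adjectives from the following excerpt while keeping it "
        "grammatical.\n\n$text"
    ),
}


@dataclass(frozen=True)
class GenerationRequest:
    """Standardized request structure forwarded to the generative engine."""

    function: str
    model: str
    prompt: str


def build_request(function: str, text: str, age: int, model: str = "gpt-4-turbo") -> GenerationRequest:
    """Map an educator's selection to a curated template and a uniform request."""
    template = PROMPT_TEMPLATES.get(function)
    if template is None:
        raise ValueError(f"unknown transformation function: {function}")
    # substitute() raises KeyError if a placeholder is left unfilled;
    # extra keyword arguments (e.g. age for remove_adjectives) are ignored.
    prompt = template.substitute(age=age, text=text)
    return GenerationRequest(function=function, model=model, prompt=prompt)
```

Keeping every request in one frozen structure is what lets the pipeline standardize requests before they reach the model, regardless of which transformation was chosen.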

The generative engine layer utilizes the ChatGPT API and, when visual output is requested, integrates with DALL·E to produce AI-generated illustrations that align with the pedagogical intent of the task. To minimize latency and ensure auditability, the system includes support for on-premise or regionally hosted inference endpoints via OpenAI-compatible interfaces. In test environments, LLaMA2 and Mistral-7B are used for benchmarking alternative deployments.

Finally, the EPVL audits each generative output in real time and produces an interpretive report indicating whether the output meets predefined criteria of developmental appropriateness, cultural and linguistic sensitivity, semantic fidelity, and ethical transparency. If violations are flagged, the educator is alerted with suggested revisions or cautions, preserving their agency while ensuring alignment with normative expectations.

To support scalability, all validated artifacts are stored in a version-controlled NoSQL database (MongoDB), while metadata logs are indexed in Elasticsearch for retrospective auditing and longitudinal analysis.

Unlike conventional automation pipelines, this dual-loop model, combining generative action with reflective audit, echoes a socio-constructivist orientation [34], where the machine is not positioned as an instructional authority but rather as a responsive mediator that participates in the learner’s interpretive process. By embedding evaluation within generation, the system fosters a dynamic feedback loop akin to dialogic scaffolding, aligning technological action with pedagogical responsiveness.

2.1. The Ethical–Pedagogical Validation Layer (EPVL)

At the heart of the framework lies the EPVL, a meta-level component designed to audit all AI-generated content prior to classroom use. Its operation follows a four-stage pipeline: ingestion (capturing generated output), assessment (evaluating content across defined normative dimensions), annotation (adding interpretive feedback and suggested revisions), and export (delivering the validated content to the educator interface). This process enables transparent oversight while preserving the teacher’s role as the ultimate pedagogical authority.
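The four-stage pipeline can be sketched as a chain of small functions. This is a hypothetical illustration: the `Finding` and `Annotated` types and the pluggable `assessors` mapping are assumptions made for clarity. In the described system, the assessment stage would call the GPT-based validator rather than a local function.

```python
from dataclasses import asdict, dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Finding:
    dimension: str
    rationale: str
    suggested_revision: Optional[str] = None


@dataclass
class Annotated:
    text: str
    findings: List[Finding] = field(default_factory=list)


# An assessor inspects text for one normative dimension and returns findings.
Assessor = Callable[[str], List[Finding]]


def ingest(raw_output: str) -> str:
    """Stage 1: capture the generated output (here it only trims whitespace)."""
    return raw_output.strip()


def assess(text: str, assessors: Dict[str, Assessor]) -> List[Finding]:
    """Stage 2: evaluate the text against every configured dimension."""
    findings: List[Finding] = []
    for assessor in assessors.values():
        findings.extend(assessor(text))
    return findings


def annotate(text: str, findings: List[Finding]) -> Annotated:
    """Stage 3: attach feedback; the text itself is never rewritten here."""
    return Annotated(text=text, findings=findings)


def export(artifact: Annotated) -> dict:
    """Stage 4: serialize for the educator interface, which makes the final call."""
    return {"text": artifact.text, "flags": [asdict(f) for f in artifact.findings]}


def run_pipeline(raw_output: str, assessors: Dict[str, Assessor]) -> dict:
    text = ingest(raw_output)
    return export(annotate(text, assess(text, assessors)))
```

Keeping `annotate` free of rewrites reflects the stated design: the EPVL adds feedback, and the educator decides what happens to it.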

Importantly, the EPVL is not merely a technical layer; it is an infrastructural manifestation of ethical intentionality. It enacts the principle that ethical scrutiny should be embedded within the generative process, not retrofitted after deployment.

Provenance of Validation Criteria and Prompt Templates: The EPVL is designed as a modular and extensible architecture, capable of embedding pedagogical, cultural, and ethical criteria directly within the generative loop. This study does not offer a final or universal set of validation standards. Instead, it demonstrates the architectural affordance of the system, its capacity to translate evolving normative directives into executable validation logic across diverse educational environments.

As part of this normative logic, the EPVL specifies how different forms of bias should be detected and annotated, operationalizing cultural and ideological sensitivity through a practical categorization scheme grounded in current NLP bias scholarship [27,29,35]. In the current prototype, embedded validation templates and policy guides direct the GPT-based assessment to identify language features in three categories: (1) Data Bias, arising from culturally dominant corpora; (2) Representational Bias, involving stereotypical or reductive portrayals; and (3) Ideological Bias, concerning unintended moral or political framings. While this taxonomy informs the EPVL’s design logic and teacher-facing feedback, fully automated multi-class bias detection remains an objective for future development and empirical validation. These annotation categories are summarized in Table 1, which outlines the EPVL’s normative dimensions along with examples of flagged content.
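One way to keep model-produced bias labels within the three-category taxonomy is to normalize them against an enumeration and surface anything unrecognized instead of guessing. The sketch below assumes the validator returns a free-text label. `BiasCategory` and `parse_bias_label` are hypothetical names.

```python
from enum import Enum
from typing import Optional


class BiasCategory(Enum):
    DATA = "Data Bias"                          # culturally dominant corpora
    REPRESENTATIONAL = "Representational Bias"  # stereotypical or reductive portrayals
    IDEOLOGICAL = "Ideological Bias"            # unintended moral or political framings


def parse_bias_label(label: str) -> Optional[BiasCategory]:
    """Map a model-produced label onto the taxonomy; return None if it does not fit."""
    normalized = label.strip().lower()
    for category in BiasCategory:
        if normalized in (category.name.lower(), category.value.lower()):
            return category
    # Unknown labels are passed to the educator as-is rather than coerced.
    return None
```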

At the proof-of-concept stage, the system was configured using publicly available international documents, including the UNESCO Recommendation on the Ethics of Artificial Intelligence [22], the OECD Principles on AI [30], the PACE Guidelines for AI in Education, and the European Commission’s draft AI Literacy Framework [36]. These documents provided a normative substrate for simulating ethical evaluation and illustrated the system’s ability to operationalize abstract guidelines.

However, the design explicitly anticipates localized adaptation. Ministries of education, curriculum agencies, and other institutional actors can define country-specific rules for developmental appropriateness, cultural sensitivity, and representational norms. For instance, a national authority may embed rules to flag Eurocentric metaphors in postcolonial settings, or to ensure modest visual depictions of women in conservative cultural contexts.

This normative modularity distinguishes the EPVL from general-purpose content filters or fixed compliance systems. It allows sovereign, transparent, and interpretable ethical mediation at the point of generation, without modifying the underlying commercial LLM infrastructure. In doing so, it supports what Biesta [37] terms subjectification: preserving the teacher’s role as a moral and interpretive agent in educational practice.

Instructional Integration of Validation Rules: Unlike general-purpose platforms such as ChatGPT, which offer limited capacity for sovereign customization, the EPVL enables institutions to embed their own normative criteria directly into the validation process. This is achieved through editable prompt templates and hierarchical rubrics structured in machine-readable formats (e.g., JSON, YAML). These templates allow for the insertion of evaluative logic concerning age-appropriateness, linguistic register, ideological stance, or culturally sensitive representation.

For example, a ministry of education may define a prohibited lexicon, prescribe visual modesty guidelines, or flag metaphors incompatible with local discourse norms. These rule sets are ingested dynamically at runtime, without requiring model retraining or external API dependencies. As a result, the validator supports multilingual, culturally contextualized, and policy-compliant operation, offering an institutional layer of control that would be infeasible within standard LLM deployments.
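To illustrate runtime ingestion, the sketch below loads a hypothetical institution-supplied rule set from JSON and performs a deterministic whole-word check for prohibited terms and flagged metaphors. The field names (`prohibited_lexicon`, `flagged_metaphors`) and the example terms are assumptions. In the described EPVL, such rules would also be inserted into the validation prompts, which this sketch does not show.

```python
import json
import re
from typing import Dict, List

# Hypothetical institution-supplied rule set; field names are illustrative only.
EXAMPLE_RULESET = """
{
  "ruleset_id": "example-ministry",
  "prohibited_lexicon": ["primitive", "savage"],
  "flagged_metaphors": ["dark continent"]
}
"""

REQUIRED_KEYS = {"ruleset_id", "prohibited_lexicon", "flagged_metaphors"}


def load_ruleset(raw: str) -> Dict:
    """Parse and minimally validate a rule set at runtime (no retraining involved)."""
    rules = json.loads(raw)
    missing = REQUIRED_KEYS - set(rules)
    if missing:
        raise ValueError(f"rule set is missing keys: {sorted(missing)}")
    return rules


def lexical_flags(text: str, rules: Dict) -> List[str]:
    """Return rule-set terms that occur in the text as whole words or phrases."""
    hits = []
    for term in rules["prohibited_lexicon"] + rules["flagged_metaphors"]:
        if re.search(r"\b" + re.escape(term) + r"\b", text, flags=re.IGNORECASE):
            hits.append(term)
    return hits
```

A deterministic pre-check of this kind complements, rather than replaces, the model-based assessment: it catches exact terms cheaply and predictably, while context-dependent judgments remain with the validator and the educator.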

Technical Backbone: The EPVL operates via a controlled invocation of GPT-4-turbo, selected for its extended context window and capacity for structured linguistic evaluation. Rather than enforcing rigid, rule-based filters, it operationalizes pedagogical rubrics through prompt-based conditioning, supporting context-aware analysis across multiple genres and educational levels.

Internally, the validator examines four core dimensions:

Developmental Appropriateness: Is the output aligned with the cognitive and emotional maturity of the target age group? For instance, abstract notions such as “existential dread” are flagged as developmentally misaligned for learners under ten.

Cultural and Ideological Sensitivity: Does the content reflect implicit biases, exclusionary framings, or ethnocentric narratives? Phrases that exoticize rural communities as “primitive” are highlighted for revision.

Semantic Fidelity: Does the output retain the interpretive core, tone, and narrative coherence of the source material? Misrepresenting a tragic scene as humorous, for example, is flagged for distortion.

Ethical Transparency: Are emotionally charged claims or ideological generalizations framed with care and contextual nuance? Oversimplified statements such as “rich people are evil” trigger pedagogical reconsideration.

Each flagged segment appears within an interactive validation panel adjacent to the generated output. Every annotation includes a descriptive label, contextual rationale, and, when applicable, a suggested revision. Educators can accept, modify, or override each suggestion and may annotate their decisions for professional reflection or institutional review. This ensures that while validation is AI-assisted, interpretive responsibility remains firmly human-centered.

Future Integration and Metadata Structuring: To facilitate integration with Learning Management Systems (LMS), institutional repositories, or national educational infrastructures, the EPVL is designed with structured metadata output. Each validation report is formatted using a JSON schema, enabling machine-readable entries for: flag_label, description, confidence_score, suggested_revision, and educator_response. This design supports version control, audit trails, and downstream integration into educator dashboards, data governance frameworks, and research on AI-assisted pedagogy.
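A minimal Python rendering of the per-entry report fields listed above might look as follows. The field names come from the text. The `[0, 1]` range for `confidence_score` and the `educator_response` vocabulary (mirroring the accept, modify, and override actions) are assumptions.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Optional

# Assumed vocabulary mirroring the accept / modify / override actions.
EDUCATOR_RESPONSES = {"pending", "accepted", "modified", "overridden"}


@dataclass
class ValidationEntry:
    flag_label: str
    description: str
    confidence_score: float  # assumed to lie in [0, 1]
    suggested_revision: Optional[str] = None
    educator_response: str = "pending"

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence_score <= 1.0:
            raise ValueError("confidence_score must lie in [0, 1]")
        if self.educator_response not in EDUCATOR_RESPONSES:
            raise ValueError(f"unknown educator_response: {self.educator_response}")


def report_to_json(entries: List[ValidationEntry]) -> str:
    """Serialize a validation report as a JSON array of flat records."""
    return json.dumps([asdict(e) for e in entries], ensure_ascii=False, indent=2)
```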

2.2. Runtime Workflow and Evaluation Strategy

While the previous section outlined the architecture and normative scaffolding of the EPVL, this section describes its runtime behavior and evaluation logic. Once a generative model (e.g., GPT-4-turbo) produces an educational transformation (e.g., a simplified narrative or adapted dialogue), the EPVL initiates a secondary validation pass. This is conducted via a model-as-critic protocol: a prompt-based evaluation sequence issued either to the same model instance or to a distinct inference endpoint. The system uses dimension-specific prompts, e.g., “Assess whether this text is developmentally appropriate for learners aged 8–10”, to elicit validation responses.
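The model-as-critic pass can be sketched as building a chat-style message list and parsing a JSON verdict. This sketch assumes the critic is instructed to reply in a fixed JSON shape. `call_model` is a hypothetical stand-in for whatever client reaches the configured endpoint, and the instruction texts are illustrative. Treating unparseable output as a flag is consistent with the validator's role as a recommendation system subject to human review.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical dimension-specific critic instructions.
CRITIC_INSTRUCTIONS = {
    "developmental_appropriateness": (
        "Assess whether this text is developmentally appropriate for learners aged {ages}."
    ),
    "semantic_fidelity": (
        "Assess whether the adaptation preserves the meaning, tone, and narrative "
        "coherence of the source."
    ),
}

SYSTEM_PROMPT = (
    "You are a pedagogical reviewer. Reply only with a JSON object "
    'of the form {"flag": true|false, "rationale": "..."}.'
)


def critic_messages(dimension: str, source: str, adaptation: str, ages: str = "8–10") -> List[Message]:
    instruction = CRITIC_INSTRUCTIONS[dimension].format(ages=ages)
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"{instruction}\n\nSOURCE:\n{source}\n\nADAPTATION:\n{adaptation}"},
    ]


def run_critic(call_model: Callable[[List[Message]], str], dimension: str, source: str, adaptation: str) -> Dict:
    """Secondary pass: ask a critic model for a verdict and parse it defensively."""
    raw = call_model(critic_messages(dimension, source, adaptation))
    try:
        verdict = json.loads(raw)
    except json.JSONDecodeError:
        verdict = None
    if not isinstance(verdict, dict) or "flag" not in verdict:
        # Unparseable critic output is routed to human review rather than trusted.
        return {"dimension": dimension, "flag": True, "rationale": "unparseable critic output"}
    return {"dimension": dimension, "flag": bool(verdict["flag"]), "rationale": str(verdict.get("rationale", ""))}
```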

Each evaluation is processed asynchronously and in parallel across the four core dimensions (developmental appropriateness, semantic fidelity, cultural sensitivity, and ethical transparency). Depending on the system configuration, these validations may be:

Zero-shot: Direct prompting with abstract criteria,

Few-shot: Prompting with 1–3 exemplars per dimension,

Chain-of-Thought (CoT): Prompting that elicits stepwise justification before final verdict.
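The three prompting strategies and the parallel, per-dimension dispatch could be combined as in the sketch below. It assumes an asynchronous `ask` callable supplied by the deployment (hypothetical here) and uses `asyncio.gather`, which returns results in the order the awaitables were passed, so each response can be keyed back to its dimension.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Optional, Sequence


def build_prompt(criterion: str, text: str, strategy: str, exemplars: Sequence[str] = ()) -> str:
    """Compose a validation prompt under one of the three prompting strategies."""
    if strategy == "zero-shot":
        return f"{criterion}\n\nText:\n{text}"
    if strategy == "few-shot":
        if not 1 <= len(exemplars) <= 3:
            raise ValueError("few-shot prompting expects 1-3 exemplars")
        shots = "\n\n".join(f"Example:\n{e}" for e in exemplars)
        return f"{criterion}\n\n{shots}\n\nText:\n{text}"
    if strategy == "cot":
        return (f"{criterion}\nExplain your reasoning step by step, "
                f"then state a final verdict on the last line.\n\nText:\n{text}")
    raise ValueError(f"unknown strategy: {strategy}")


async def validate_all(
    text: str,
    criteria: Dict[str, str],
    ask: Callable[[str], Awaitable[str]],
    strategy: str = "zero-shot",
    exemplars: Optional[Dict[str, Sequence[str]]] = None,
) -> Dict[str, str]:
    """Issue one validation request per dimension concurrently, keyed by dimension."""
    dimensions = list(criteria)
    prompts = [
        build_prompt(criteria[d], text, strategy, (exemplars or {}).get(d, ()))
        for d in dimensions
    ]
    responses = await asyncio.gather(*(ask(p) for p in prompts))
    return dict(zip(dimensions, responses))
```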

Table 2 presents illustrative examples of how each validation dimension is operationalized through specific prompting strategies and representative model outputs.

The evaluation is non-deterministic and multi-pass, allowing the validator to issue layered feedback. To mitigate variability across validation runs, temperature settings can be fixed and outputs averaged or rerun with consensus scoring when necessary. Each flagged issue includes a rationale, a severity label (e.g., “minor deviation”, “critical misalignment”), and, where applicable, a revision suggestion. These annotations are not enforced or auto-applied; they appear adjacent to the original output within the educator interface. The teacher remains the final arbiter, with the ability to accept, dismiss, or rewrite flagged segments. This workflow balances transparency, auditability, and professional autonomy.
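Consensus scoring over repeated runs could be as simple as a majority vote over severity labels. The ordinal scale below extends the two labels named in the text with a hypothetical `"no issue"` label. Breaking ties toward the more severe label is a design assumption that favors surfacing potential issues to the educator.

```python
from collections import Counter
from typing import Sequence

# Assumed ordinal scale; "no issue" is a hypothetical label for a clean run.
SEVERITY_ORDER = ["no issue", "minor deviation", "critical misalignment"]


def consensus_severity(labels: Sequence[str]) -> str:
    """Majority vote over repeated validation runs, breaking ties toward greater severity."""
    if not labels:
        raise ValueError("at least one validation run is required")
    unknown = set(labels) - set(SEVERITY_ORDER)
    if unknown:
        raise ValueError(f"unknown severity labels: {sorted(unknown)}")
    counts = Counter(labels)
    top = max(counts.values())
    tied = [label for label, n in counts.items() if n == top]
    # Conservative tie-break: report the most severe of the tied labels.
    return max(tied, key=SEVERITY_ORDER.index)
```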

In scenarios where validations conflict, for instance, an output is semantically faithful but ideologically controversial, the EPVL surfaces a structured trade-off report. This report includes a matrix identifying the conflicting dimensions, severity ratings per dimension, a concise textual summary of the tension, and, where applicable, alternative reformulations. The educator is prompted to reflect and decide, without automatic prioritization, as pedagogical intent and local values vary across learning settings.
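A trade-off report of the kind described could be assembled from per-dimension severities. In this hypothetical sketch, a conflict means that at least one dimension passes while another is flagged. The field names are assumptions, and `priority` is deliberately left empty so that no dimension is ranked automatically.

```python
from typing import Dict, List, Optional

PASS = "no issue"  # hypothetical label for a dimension with no flag


def trade_off_report(severities: Dict[str, str], alternatives: Optional[List[str]] = None) -> Optional[Dict]:
    """Build a report when some dimensions pass and others are flagged; otherwise None."""
    passing = sorted(d for d, s in severities.items() if s == PASS)
    flagged = sorted(d for d, s in severities.items() if s != PASS)
    if not passing or not flagged:
        return None  # no tension between dimensions to report
    return {
        "matrix": [{"dimension": d, "severity": severities[d]} for d in sorted(severities)],
        "summary": f"Satisfies {', '.join(passing)} but raises concerns on {', '.join(flagged)}.",
        "alternatives": list(alternatives or []),
        "priority": None,  # not decided automatically; the educator chooses
    }
```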

It is acknowledged that the model-as-critic protocol inherits the epistemic limitations of the underlying LLM, including the risk of hallucinated evaluations, false positives, or inconsistent judgments across runs. For this reason, the validator is designed as a recommendation system, not a gatekeeping layer, and all outputs are subject to human review.

Technically, each validation interaction is logged using a structured metadata schema, including fields such as: dimension, prompt, LLM_response, flag_type, confidence_estimate, suggested_revision, and educator_action. Metadata can be stored in JSON or interoperable formats for export to Learning Management Systems (LMS), audit dashboards, or research environments. Future versions may support differential analysis over time to track instructional adaptation patterns or flag recurrent validation mismatches across classroom contexts.

This evaluation strategy operationalizes ethical mediation as an iterative, educator-centered process, prioritizing interpretive transparency, pedagogical fit, and institutional accountability in the use of generative AI for education.

3. Integrated Module Suite for Pedagogical Transformation

This section presents the four core modules of the proposed AI-supported framework, each addressing a distinct pedagogical challenge in literary education for primary learners. Designed to work in synergy, the modules are grounded in established instructional theories and operate under the oversight of the EPVL. Module 1 enables age-appropriate simplification of complex literary texts, maintaining emotional and semantic fidelity. Module 2 invites learners into active co-construction of meaning through adjective removal and reinvention, cultivating stylistic awareness and critical literacy. Module 3 implements a multilingual augmentation strategy, gradually embedding German keywords to foster translanguaging and intercultural competence. Module 4 leverages visual generation to recast literary excerpts into semiotic narratives, enhancing multimodal comprehension and interpretive depth. Together, these modules operationalize a responsive and educator-centered approach to AI integration, aligning technological transformation with ethical, developmental, and curricular priorities.

3.1. Module 1: Age-Based Text Simplification

Canonical literary texts often pose significant challenges to young learners due to their dense syntax, metaphorical complexity, and culturally specific references. The Age-Based Text Simplification module addresses these barriers by leveraging LLMs to generate differentiated versions tailored to specific age ranges, preserving semantic and affective depth while reducing linguistic complexity.

Figure 3 illustrates the interface walkthrough of the Age-Based Text Simplification module, including input selection, AI-generated output, and validator feedback.

Educators input a literary excerpt and specify the learner’s age. The system then returns a version aligned to the target developmental stage, accompanied by an EPVL audit report. The EPVL ensures compliance with ethical and pedagogical criteria, including developmental appropriateness, semantic fidelity, cultural sensitivity, and emotional safety. For example, the line “led them closer to death” may be adapted to “made choices that were not safe” for younger audiences.

The module’s pedagogical foundation draws on Vygotsky’s zone of proximal development [34], cognitive load theory [38], and the multiliteracies framework [15], supporting scaffolded access to texts beyond independent comprehension. It offers two main simplification trajectories: for ages 10–12, syntactic and idiomatic simplification; for ages 6–8, reconstruction using high-frequency vocabulary and linear narrative.
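The two simplification trajectories can be expressed as an age-to-instruction lookup. This is a sketch under assumptions: the instruction wording is a paraphrase. Because the text defines trajectories only for ages 6–8 and 10–12, the hypothetical `select_trajectory` function returns `None` for other ages (for example, 9) instead of guessing, leaving the choice to the educator.

```python
from typing import Optional

# Hypothetical paraphrases of the two trajectories described above.
TRAJECTORIES = {
    "older": "Simplify syntax and replace archaic idioms, keeping the original imagery.",
    "younger": "Retell the passage in order, using high-frequency words and short sentences.",
}


def select_trajectory(age: int) -> Optional[str]:
    """Return the trajectory key for an age, or None where none is defined."""
    if 10 <= age <= 12:
        return "older"
    if 6 <= age <= 8:
        return "younger"
    return None  # e.g. age 9: left to the educator's judgment


def simplification_prompt(excerpt: str, age: int) -> str:
    key = select_trajectory(age)
    if key is None:
        raise ValueError(f"no simplification trajectory defined for age {age}; choose one explicitly")
    return f"{TRAJECTORIES[key]} Target age: {age}.\n\n{excerpt}"
```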

Sample transformations demonstrate age alignment. From The Tempest, the line “All lost! To prayers, to prayers! All lost!” is rendered as “We’ve lost everything! Pray quickly!” for older children and “Everything is gone! Let’s pray now!” for younger readers. The EPVL verifies these for affective continuity, cultural respect, and readability.

In another case, a Macbeth excerpt flagged for distressing content was revised from “led them closer to death” to “led them closer to their final day”, balancing semantic coherence and emotional appropriateness. Each decision by the validator is documented with explanatory metadata.

Assessment tools include cloze completions, emotion-matching tasks, and reflective rewording prompts. These enhance engagement and metalinguistic awareness while the EPVL ensures transparency and traceability.

Simplification is framed not as content dilution but as ethically accountable, developmentally guided interpretive reconfiguration. By offering parallel views of source and simplified texts, the system cultivates critical literacy and interpretive agency.

3.2. Module 2: Lexical Reinvention Through Adjective Removal

This module implements a scaffolded activity that reframes learners as co-constructors of meaning by engaging them in the removal and reinvention of adjectives within literary excerpts. By eliminating descriptive qualifiers and inviting users to reinsert them based on context and tone, the module fosters metalinguistic awareness, emotional literacy, and stylistic sensitivity.

Grounded in reader-response theory [39], critical literacy frameworks [40,41], and lexical development research [42], the activity treats adjectives not as isolated lexical units but as semantically and ideologically charged expressions. The system selects passages rich in description, prompts the language model to remove adjectives while preserving grammaticality, and presents the modified text to users. Learners then propose reinsertion candidates guided by semantic inference and narrative cues.

For example, from The Tempest, Act I:

Original: “Now would I give a thousand furlongs of sea for an acre of barren ground, long heath, brown furze, anything.”

Stripped: “Now would I give a thousand furlongs of sea for an acre of ground, heath, furze, anything.”

Reinventions such as “safe ground,” “dry heath,” or “tangled furze” require attentiveness to the character’s psychological state, narrative context, and atmospheric tone. This process supports critical engagement with language and its rhetorical effects.
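Although the module delegates adjective removal to the language model, the stripped text and an answer key can also be produced deterministically once the adjectives are known (for instance, from the model's output or a part-of-speech tagger). The sketch below assumes a supplied adjective list and handles only attributive adjectives followed by another word. `strip_adjectives` is a hypothetical helper.

```python
import re
from typing import Iterable, List, Tuple


def strip_adjectives(text: str, adjectives: Iterable[str]) -> Tuple[str, List[str]]:
    """Remove the given attributive adjectives; return the stripped text and an answer key."""
    words = sorted(set(adjectives), key=len, reverse=True)
    if not words:
        return text, []
    removed: List[str] = []

    def drop(match: "re.Match[str]") -> str:
        removed.append(match.group(1))
        return ""

    # Match each adjective as a whole word plus the whitespace that follows it.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, words)) + r")\b\s+", re.IGNORECASE)
    return pattern.sub(drop, text), removed
```

Applied to the excerpt above with `["barren", "long", "brown"]`, this yields the stripped line shown, and the returned list preserves the original adjectives so that learners' reinventions can be compared with Shakespeare's choices.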

The EPVL audits both the stripped base and reinsertion attempts for developmental appropriateness, semantic coherence, and ethical framing. For instance, the use of “ugly” to describe a female character’s clothing was flagged for Stereotypical Association, prompting a revision to “unusual attire”, a shift preserving descriptive deviation without reinforcing bias. Such interventions reflect the validator’s role as a dialogic scaffold rather than a prescriptive censor.

Pedagogically, the module advances three goals: enhancing lexical repertoires through generative usage; strengthening interpretive engagement with tone and character; and fostering critical literacy by treating language as a site of power and perspective. These aims correspond with curricular benchmarks such as the Common Core standard CCSS.ELA-LITERACY.L.4.5, which addresses figurative language, word relationships, and nuances in word meanings.

Ultimately, adjective reinvention is positioned not as a reductive language task, but as a dialogic literary intervention. It transforms the seemingly mechanical act of adjective removal into an opportunity for ethical reasoning and interpretive agency, with AI serving as a guide toward deeper textual awareness.

3.3. Module 3: Multilingual Integration Through Incremental German Keywords

This module implements a staged multilingual strategy that cultivates cross-linguistic awareness, semantic flexibility, and intercultural resonance. By incrementally embedding German keywords into canonical English texts, particularly Shakespearean excerpts, it enacts a form of translanguaging pedagogy where linguistic hybridity is treated as an interpretive affordance rather than a cognitive burden.

The design is grounded in translanguaging theory [43], which views bilinguals as drawing on a unified linguistic repertoire, and in Cummins’ Interdependence Hypothesis [44], which posits that conceptual knowledge in one language supports transfer to another. Within this paradigm, German terms with symbolic, emotional, or narrative salience serve as cognitive anchors for meaning reconstruction.

Implementation unfolds across three levels of multilingual density. In Stage 1, high-frequency nouns or adjectives (e.g., Meer, Himmel) are introduced into English phrases to encourage inferential reasoning. Stage 2 adds verbs and modifiers (e.g., zittern, Flammen), increasing syntactic and semantic complexity. Stage 3 presents hybrid passages with intensified code-switching, supported by glossaries or visual cues.
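At its simplest, the staged density could be approximated by cumulative glossary substitution, where each stage adds terms to those of earlier stages. The glossaries below are hypothetical and drawn from keywords in the example that follows. The sketch performs only word-level substitution, so the rephrasing and German inflection visible in the stage examples (e.g., zittert) would still need to come from the language model or a hand-built glossary.

```python
import re
from typing import Dict, List

# Hypothetical cumulative glossaries; stage n uses every term from stages 1..n.
STAGE_GLOSSARIES: List[Dict[str, str]] = [
    {"sky": "Himmel", "sea": "Meer"},            # Stage 1: high-frequency nouns
    {"fire": "Flammen", "trembles": "zittert"},  # Stage 2: adds verbs and further nouns
    {"storm": "Sturm", "face": "Gesicht"},       # Stage 3: denser code-switching
]


def embed_keywords(text: str, stage: int) -> str:
    """Replace English words with German keywords up to the requested density stage."""
    if not 1 <= stage <= len(STAGE_GLOSSARIES):
        raise ValueError(f"stage must be between 1 and {len(STAGE_GLOSSARIES)}")
    glossary: Dict[str, str] = {}
    for level in STAGE_GLOSSARIES[:stage]:
        glossary.update(level)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, glossary)) + r")\b", re.IGNORECASE)
    # Look up the lower-cased match so "Sky" and "sky" map to the same keyword.
    return pattern.sub(lambda m: glossary[m.group(1).lower()], text)
```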

An example based on The Tempest illustrates the progression:

Original: “The sky, it seems, would pour down stinking pitch, but that the sea, mounting to the welkin’s cheek, dashes the fire out.”

Stage 1: “The Himmel would pour down pitch, but the Meer rises to stop the fire.”

Stage 2: “The Himmel pours pitch as the Meer zittert, climbing to stop the Flammen.”

Stage 3: “In this Sturm, the Himmel darkens and the Meer brüllt, rising to the Gesicht of the sky to drown the Flammen with rage.”

Each stage intensifies the cognitive and linguistic load, prompting learners to reconstruct meaning through phonetic, syntactic, and contextual cues. The EPVL audits these insertions to ensure lexical accuracy, contextual fit, and intercultural appropriateness. For example, the term Zorn (“rage”) was flagged for Emotional Overextension, and replaced by Unruhe (“unease”), which preserved affective tension while mitigating stereotyping risks.

The module pursues three pedagogical aims:

Receptive Bilingualism: embedding target-language terms within meaningful narrative frames.

Metalinguistic Agility: encouraging learners to negotiate and contrast meaning across languages.

Intercultural Empathy: exposing students to emotionally salient lexical items from other linguistic traditions.

These aims align with the Council of Europe’s Language Education Policy [45] and UNESCO’s Global Citizenship Education framework [46], both of which promote plurilingual competence and intercultural understanding.

Assessment is formative and embedded in reading tasks, such as maintaining personal glossaries, annotating texts with semantic predictions, and reflecting on tonal or atmospheric shifts induced by linguistic blending. These activities foster a deeper awareness of how multilingual integration reshapes literary experience.

In sum, this module reframes translation as a generative act of literary co-construction. By leveraging AI-generated modulation and validator-informed feedback, it treats literature as a plural medium, resonant across linguistic, cultural, and symbolic dimensions.

3.4. Module 4: Semiotic Recasting Through Visual Re-Narration

This module addresses a recurring barrier in early literary education: the semantic opacity of canonical texts, often due to abstract diction, historical idiom, and a lack of sensory grounding. To bridge this gap, the Semiotic Recasting module transforms selected literary excerpts into AI-generated visual narratives. These images do not merely illustrate; they reinterpret the text semiotically, foregrounding metaphor, affect, and structure through visual composition. The aim is to support multimodal comprehension and foster interpretive engagement across diverse learner profiles.

The pedagogical rationale draws on multimodal literacy theory [20], which views meaning as distributed across semiotic systems, and on the multiliteracies framework [15], which advocates representational diversity in globalized classrooms.

The module operates in three stages:

Text-to-Prompt Conversion: A literary passage is translated into structured prompts encoding affective tone, metaphorical density, and symbolic markers.

Image Generation: AI systems (e.g., DALL·E) produce a visual sequence aligned with the encoded semantics. For example, “Life’s but a walking shadow” may be rendered as a blurred figure crossing a dissolving corridor, evoking transience and existential fragility.

Reconstructive Engagement: Learners analyze and interpret the generated visuals through reverse narration, metaphor decoding, and fidelity evaluation.
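The text-to-prompt conversion might be represented as a small structured record rendered into a prompt string. In this sketch the class `PassageEncoding`, its field names, the three-level density scale, and the prompt wording are all assumptions made for illustration; no particular image model's API is called.

```python
from dataclasses import dataclass, field
from typing import List

DENSITY_LEVELS = {"low", "medium", "high"}  # assumed scale for metaphorical density


@dataclass
class PassageEncoding:
    """Hypothetical structured encoding of a literary passage for image generation."""
    passage: str
    affective_tone: str                       # e.g. "melancholic", "turbulent"
    metaphorical_density: str                 # one of DENSITY_LEVELS
    symbolic_markers: List[str] = field(default_factory=list)
    audience: str = "primary-school readers"

    def __post_init__(self) -> None:
        if self.metaphorical_density not in DENSITY_LEVELS:
            raise ValueError("metaphorical_density must be low, medium, or high")


def to_image_prompt(enc: PassageEncoding) -> str:
    """Render the encoding as a plain-text prompt for an image model."""
    markers = ", ".join(enc.symbolic_markers) if enc.symbolic_markers else "none"
    return (
        f"Create an illustration for {enc.audience} that reinterprets, rather than "
        f"literally depicts, the line: \"{enc.passage}\". "
        f"Affective tone: {enc.affective_tone}. "
        f"Metaphorical density: {enc.metaphorical_density}. "
        f"Foreground these symbolic elements: {markers}. "
        "Avoid text in the image and avoid frightening or stereotyped imagery."
    )


if __name__ == "__main__":
    enc = PassageEncoding(
        "Life's but a walking shadow", "melancholic", "high",
        ["blurred walking figure", "dissolving corridor"],
    )
    print(to_image_prompt(enc))
```

Validating the density value at construction time means a malformed encoding fails before any generation request is made, which keeps the EPVL's later audit focused on the image rather than on the prompt.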

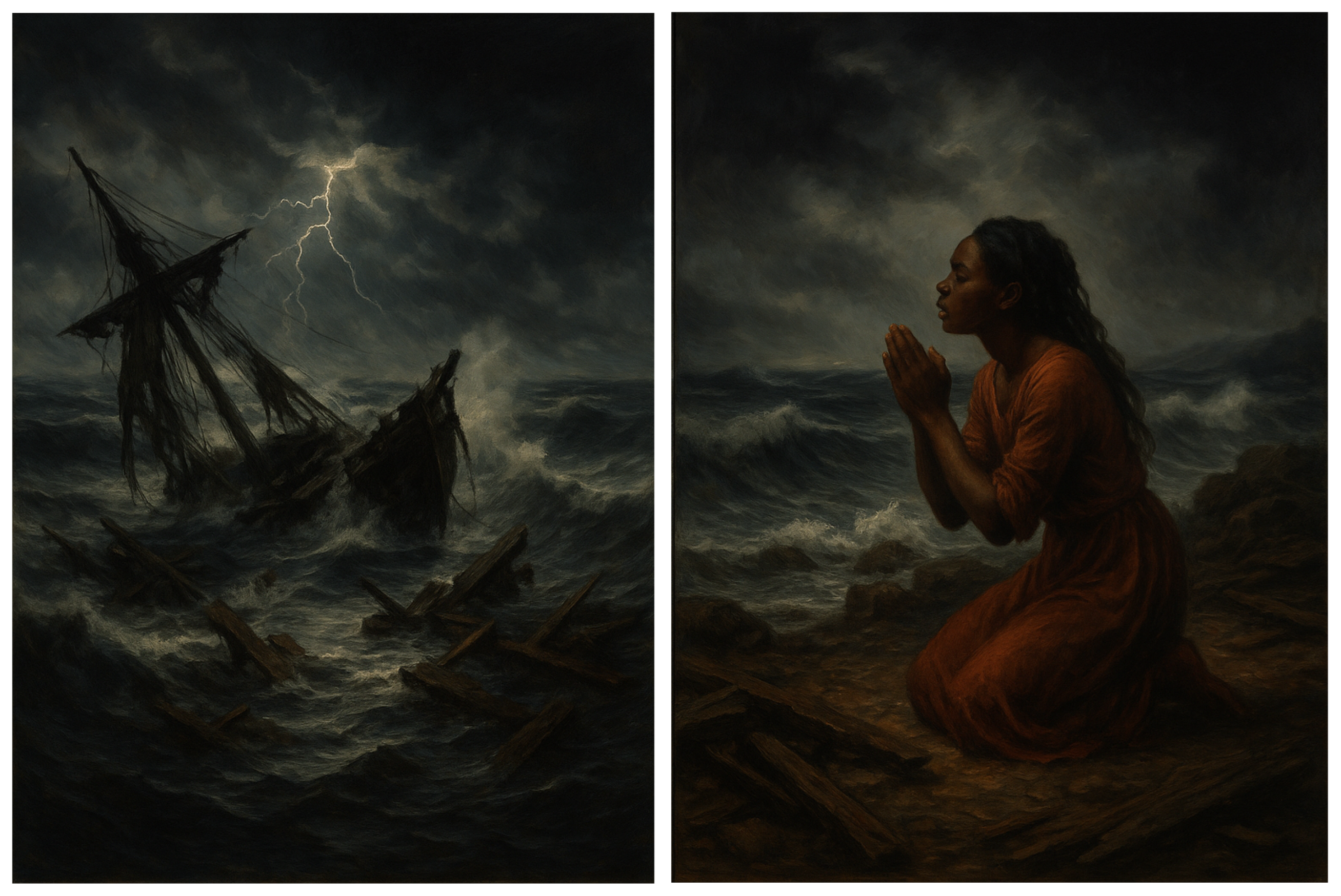

Figure 4 presents a sample output based on The Tempest, showing storm debris and a praying figure. The image re-narrates the line “Now would I give a thousand furlongs of sea for an acre of barren ground.”

All images pass through the EPVL, which audits for cultural sensitivity, symbolic coherence, and developmental alignment. For example, a generated image of a kneeling, long-haired figure in chiaroscuro was flagged for (i) Gender-Coded Representation, implying passive femininity; and (ii) Ambiguous Moral Othering, due to shadow saturation. Based on UNESCO’s emotional safety guidelines [22], the validator suggested neutralizing contrast gradients and postural cues. The revised image preserved symbolic force while avoiding stereotype activation.

The module supports three pedagogical goals:

Cross-modal Interpretation: Enhancing figurative reasoning through multisemiotic decoding.

Affective Entry Points: Leveraging emotionally resonant imagery to support learners challenged by textual abstraction.

Inclusive Comprehension: Scaffolding access for neurodiverse, multilingual, or early-stage readers.

Formative evaluation is embedded via journaling, image-to-text reconstructions, and alternative renderings. Learners reflect on visual fidelity and develop representational awareness.

In sum, this module reframes literary access not through simplification but through interpretive amplification. By integrating ethical validation with visual re-narration, it positions literature as a multimodal and inclusive experience.

4. Pilot Feedback from Educators

As part of the formative development cycle, a pilot study was conducted to gather preliminary insights from educators regarding the proposed AI-powered framework. The objective was to evaluate usability, perceived pedagogical alignment, and integration feasibility prior to learner-facing implementation. Importantly, the pilot focused exclusively on educator experience; no students were involved.

This pilot study was not intended to yield generalizable findings but to function as an initial design probe exploring the usability and pedagogical plausibility of the proposed framework. We acknowledge the limitations in both sample size and geographic scope—only eight educators, all based in Cyprus, participated—and we did not apply any formal sampling or stratification criteria. Our intention was not to evaluate efficacy at scale, but rather to collect early impressions from practitioners familiar with the local primary curriculum, in order to refine the system’s instructional scaffolds and interface dynamics prior to classroom deployment.

Eight primary school teachers in Cyprus explored the system independently and completed a structured feedback instrument. Participants rated five core dimensions on a five-point Likert scale; the mean scores were as follows:

Pedagogical relevance (Q1): 4.4;

Ease of use and navigation (Q2): 4.3;

Differentiated instruction support (Q3): 4.6;

Curricular alignment (Q4): 4.1;

Perceived student value (Q5, hypothetical): 4.5.
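Because only mean ratings are reported, the pilot data support descriptive summaries but not variance estimates or inferential tests. The sketch below uses a hypothetical helper, `summarize`, to compute the unweighted average of the five reported means (4.38) and to identify curricular alignment (4.1) as the lowest-rated and differentiated instruction support (4.6) as the highest-rated dimension.

```python
from typing import Dict

# Mean ratings reported for the eight-educator pilot (five-point Likert scale).
PILOT_MEANS: Dict[str, float] = {
    "Q1 Pedagogical relevance": 4.4,
    "Q2 Ease of use and navigation": 4.3,
    "Q3 Differentiated instruction support": 4.6,
    "Q4 Curricular alignment": 4.1,
    "Q5 Perceived student value (hypothetical)": 4.5,
}


def summarize(means: Dict[str, float]) -> dict:
    """Descriptive summary of dimension means.

    No standard deviation or significance test is computed, because
    per-participant ratings are not available.
    """
    overall = sum(means.values()) / len(means)
    lowest = min(means, key=lambda k: means[k])
    highest = max(means, key=lambda k: means[k])
    return {
        "overall": round(overall, 2),
        "lowest": lowest,
        "highest": highest,
        "spread": round(means[highest] - means[lowest], 2),
    }


if __name__ == "__main__":
    print(summarize(PILOT_MEANS))
```

The half-point spread between the highest and lowest dimensions is the only contrast the reported data allow; with N = 8 and no dispersion measures, it should be read as a design signal rather than a measured difference.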

While these scores suggest high initial acceptance, the absence of inferential statistics and limited participant diversity caution against strong conclusions.

Participants also provided open-ended comments. A light-touch thematic analysis was performed using inductive coding to identify recurring themes:

Multimodal engagement: “The visual transformation capabilities could offer an entry point for students who struggle with text-heavy formats.”

Creative stimulation: “Even without classroom use, I can see how the structure invites student creativity and analytical thinking.”

Interface accessibility: the interface was described as “intuitive” and “well scaffolded,” particularly for educators unfamiliar with AI tools.

Suggested refinements: Templates, curriculum-specific paths (e.g., myths, folk tales), multilingual or voice-over supports.

These comments were predominantly positive, and no critical dissent or negative reactions were recorded, which limits interpretive balance. Future iterations should systematically elicit critical perspectives and apply more rigorous qualitative analysis, for example with software such as NVivo.

Therefore, this feedback should be considered context-specific and exploratory, offering design-oriented insights rather than evaluative evidence. Its primary function was to inform interface refinement and pedagogical alignment at the pre-deployment stage.

Overall, the pilot functioned as a formative design probe, yielding early indications of conceptual fit and usability. Yet its limitations are clear: absence of classroom deployment, small sample (N = 8), no triangulation, and lack of diversity in participant background. Further large-scale, classroom-embedded studies will be required to assess learner impact, teacher-AI interaction dynamics, and long-term pedagogical efficacy.

Although these initial results are encouraging, they must be interpreted with caution due to several methodological and contextual limitations, as outlined below.

5. Limitations and Future Research Directions

The proposed framework introduces a pedagogically grounded and ethically regulated approach to literary text transformation through generative AI. While conceptually robust and technically promising, its current instantiation reveals a number of design boundaries that simultaneously highlight important trajectories for future investigation, refinement, and scaling.

A first set of limitations concerns the architectural structure and epistemic behavior of the system. As modular middleware operating between educators and commercial LLMs, the framework preserves pedagogical agency but inherits the opacity, non-determinism, and stochastic inconsistencies of black-box generative models [23,25]. The EPVL functions not as a rule-enforcing filter but as a dialogic interpretive aid. Nevertheless, its reliance on large-context models, such as GPT-4 Turbo, raises challenges of verifiability, symbolic traceability, and consistency. These issues may prove particularly restrictive under regulatory regimes that demand high levels of explainability and auditability, such as the EU AI Act. Further research is needed on whether interpretive fidelity can be strengthened through ensemble prompting strategies, teacher-in-the-loop feedback mechanisms, and context-aware regeneration. Additional technical work should explore semi-symbolic or hybrid architectures that increase transparency without sacrificing generative capacity. Moreover, the framework’s compliance with data protection standards (e.g., GDPR) and national protocols on educational data use must be ensured, especially when handling persistent learner annotations.

Closely linked to these concerns is the question of infrastructural sovereignty. While the current implementation depends on third-party LLMs, future iterations might benefit from the deployment of nationally governed or sovereign models trained on localized corpora and subject to domestic regulatory oversight. Such architectures could support greater cultural alignment, stronger institutional trust, and stricter compliance with privacy standards. However, they would not eliminate the inherent limitations of generative systems, such as output variability or the necessity of human interpretive mediation. Investigating the feasibility, cost, and pedagogical impact of national LLM infrastructures thus constitutes an important future direction.

A second class of limitations relates to the current interaction model. The system assumes a linear workflow in which educators submit a source text, receive an AI-transformed output, and review validation suggestions. This unidirectionality restricts opportunities for responsive refinement, dialogic engagement, and student-informed revision. Future iterations should consider bidirectional interfaces that support live editing, iterative improvement, or scaffolded co-creation, better reflecting constructivist pedagogical paradigms [33,34].
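The contrast between the current linear workflow and a bidirectional alternative can be sketched in Python. The callables `transform`, `validate`, and `review` are hypothetical stand-ins for the generative backend, the EPVL, and the educator; the toy implementations exist only to make the sketch runnable, and the iterative loop is one possible design rather than a feature of the current system.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical signatures for the backend, the EPVL, and the educator.
Transform = Callable[[str, Optional[str]], str]     # (source, revision note) -> output
Validate = Callable[[str], List[str]]               # output -> validator flags
Review = Callable[[str, List[str]], Optional[str]]  # note for another round, or None to accept


def linear_workflow(source: str, transform: Transform,
                    validate: Validate) -> Tuple[str, List[str]]:
    """Current model: one transformation and one validation pass, no feedback loop."""
    output = transform(source, None)
    return output, validate(output)


def iterative_workflow(source: str, transform: Transform, validate: Validate,
                       review: Review, max_rounds: int = 3) -> Tuple[str, List[str]]:
    """Bidirectional variant: the educator's note is fed into the next transformation."""
    note: Optional[str] = None
    output: str = ""
    flags: List[str] = []
    for _ in range(max_rounds):
        output = transform(source, note)
        flags = validate(output)
        note = review(output, flags)
        if note is None:  # educator accepts the current version
            break
    return output, flags


# Toy stand-ins so the sketch runs without any model or service.
def toy_transform(source: str, note: Optional[str]) -> str:
    text = source.lower()
    return text.replace("rage", "unease") if note else text


def toy_validate(output: str) -> List[str]:
    return ["Emotional Overextension"] if "rage" in output else []


def toy_review(output: str, flags: List[str]) -> Optional[str]:
    return "soften the emotional tone" if flags else None


if __name__ == "__main__":
    src = "The sea rises in RAGE"
    print(linear_workflow(src, toy_transform, toy_validate))
    print(iterative_workflow(src, toy_transform, toy_validate, toy_review))
```

With the toy stand-ins, the linear workflow returns the flagged output unchanged, whereas the iterative workflow feeds the educator's note back and stops once the validator raises no flags. Capping `max_rounds` keeps the loop bounded when an educator never accepts an output.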

Additional limitations stem from the lack of integration with learning analytics ecosystems and student-facing platforms. Without compatibility with standards such as xAPI or LMS interoperability protocols (e.g., LTI), the framework cannot yet support personalized scaffolding, longitudinal learner tracking, or formative feedback loops. Future development should prioritize interoperability and the definition of operational indicators, such as educator revision rates, annotation convergence, or interpretive consistency, to assess the framework’s educational efficacy.
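The operational indicators named above could be computed in several ways. The sketch assumes two simple operationalizations that the framework does not itself specify: revision rate as the share of AI outputs an educator edited before use, and annotation convergence as the mean pairwise Jaccard similarity between learners' annotation sets. All function names are hypothetical.

```python
from itertools import combinations
from typing import List, Set


def revision_rate(outputs_revised: List[bool]) -> float:
    """Share of AI outputs that an educator edited before classroom use."""
    if not outputs_revised:
        raise ValueError("no outputs recorded")
    return sum(outputs_revised) / len(outputs_revised)


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two annotation sets (defined as 1.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def annotation_convergence(annotations: List[Set[str]]) -> float:
    """Mean pairwise Jaccard similarity across learners' annotation sets."""
    pairs = list(combinations(annotations, 2))
    if not pairs:
        raise ValueError("need at least two annotation sets")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    print(revision_rate([True, False, False, True]))
    print(annotation_convergence([{"storm", "anger"}, {"storm", "fear"}, {"storm"}]))
```

For instance, the three annotation sets in the usage example give a convergence of 4/9 (about 0.44): one pair overlaps in one of three tags and the other two pairs in one of two. Whether high convergence is pedagogically desirable for interpretive tasks is itself an open question that such a metric cannot settle.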

Despite being model-agnostic by design, the system has so far been tested only with models from a narrow set of providers (OpenAI, Meta). Broader empirical benchmarking, including open-source, low-resource, and culturally diverse models, is necessary to evaluate performance robustness, narrative variation, and potential interpretive biases, particularly in metaphorical or emotionally charged texts.

While the EPVL integrates bias-auditing aligned with normative frameworks, detecting and interpreting bias in LLM-generated outputs is inherently complex and context-dependent. Current methods may yield false positives, flagging innocuous phrases as biased, or may fail to capture subtle ideological framings embedded in narrative structures. As cultural and ideological sensitivity requires local contextualization, bias detection should be viewed as a reflective aid rather than a definitive arbiter, with human-centered interpretation remaining central to pedagogical decision-making. Ongoing refinement and educator feedback will be necessary to align system outputs with diverse classroom realities and cultural perspectives.

Moreover, although the validation schema draws on international guidelines (UNESCO, OECD, PACE), it has not yet been localized to reflect specific curricula, age-tiered learning goals, or sociocultural contexts. Future work should explore how customizable prompt templates, community-informed ontologies, and rule-layer modularity can support normative adaptation across diverse educational environments. Without such localization, the framework risks misalignment with national instructional standards or community values.

Finally, the broader scalability of the framework is contingent not only on technical design but also on institutional readiness and policy alignment. Cross-system implementation will require investment in teacher professional development, AI literacy, platform integration, and regulatory harmonization. Initial deployments should follow a Design-Based Research (DBR) methodology within pilot schools to assess feasibility, cultural fit, and curricular compatibility under authentic instructional conditions.

At the time of writing, the framework has been tested in simulated environments and is entering a pilot design phase under institutional oversight. The limitations outlined above do not undermine the conceptual integrity of the system, but rather define the boundaries of its current implementation. We view them not as fixed constraints but as design frontiers, each inviting empirical inquiry, technical refinement, and collaborative adaptation. Advancing this agenda will require a combination of architectural innovation, ethical accountability, and co-developed deployment protocols grounded in educational practice.

6. Potential Applications in Other Educational Domains

While this study focuses on literary text transformation in primary education, the modular architecture and pedagogical foundations of the proposed framework enable adaptation across multiple educational domains. By customizing the prompt structure, validation criteria, and representational outputs, the same system can support ethically regulated, teacher-guided AI interventions in diverse curricular contexts. Each application domain may require tailored adjustments to the EPVL, localized policy alignment, and subject-specific knowledge representations.

6.1. History and Civic Education

The framework can be adapted to scaffold critical engagement with historical narratives, political texts, and civic discourse. For instance, simplified versions of foundational documents (e.g., the Universal Declaration of Human Rights or national constitutions) can be generated for younger learners. Validation modules can be extended to detect biased, revisionist, or ideologically skewed framings, using history-specific markers such as contextual omissions or loaded metaphors. The EPVL would need to include discipline-aware criteria, e.g., sensitivity to contested events or colonial terminology, and align with national history curriculum standards.

6.2. Science and Environmental Education

In science education, the framework can support conceptual development through controlled simplification of technical content and the generation of multimodal representations (e.g., labeled diagrams, cause-effect sequences). The EPVL would need to incorporate domain-specific thresholds for accuracy and flag oversimplified analogies that may introduce misconceptions. For example, a prompt might convert a complex explanation of photosynthesis into a visual-narrative scaffold for ages 8–10, while validation logic checks that the result retains core scientific integrity. In environmental education, the system could help adapt policy briefs or global sustainability reports (e.g., IPCC summaries) for school-level discussion.

6.3. Multilingual and Heritage Language Education

Given the system’s support for translanguaging and lexical reinvention, it can be applied to heritage language revitalization and multilingual instruction. Prompts can be designed to incorporate controlled code-switching or dual-language text generation, while validation logic ensures phonological appropriateness, grammatical consistency, and cultural respect. In Indigenous or minoritized language contexts, the EPVL could be extended to flag loanwords, misused idioms, or culturally misaligned analogies. Integration with community-based lexicons or teacher-supplied phrase banks would further support local adaptation.

6.4. Ethics, Philosophy, and Socioemotional Learning

The framework can also facilitate classroom engagement with ethical dilemmas, philosophical questions, or SEL (Social–Emotional Learning) scenarios. Teachers might input morally complex narratives or perspective-based conflicts, and the system could output simplified variants with annotated framings (e.g., “from the child’s point of view”, “from a community lens”). The EPVL would need to include affective balance checks, cultural sensitivity tags, and tone detection to prevent emotional overexposure or misframing of ethical conflicts. For example, a scenario about sharing resources could be rendered with differing emotional tones for class debate.

6.5. Special Education and Neurodiverse Learners

The modularity and multimodal affordances of the system make it suitable for differentiated instruction. Prompts can be adapted to produce texts with reduced syntactic complexity, explicit discourse markers, and pictorial scaffolds for learners with cognitive or linguistic differences. Teachers might request metaphor-free explanations or structured storyboards for visual learners. The EPVL would need to be customized to validate against Universal Design for Learning (UDL) principles, ensuring accessibility across neurotypes. In this domain, feedback from occupational therapists or special educators could be incorporated into prompt tuning or validation thresholds.

These examples illustrate the potential of the framework to function as a generalizable pedagogical mediator across disciplines and learner profiles. What remains consistent is the system’s commitment to ethical transparency, local relevance, and the irreplaceable role of the teacher as an interpretive and moral agent. Broad deployment will require ongoing empirical validation, domain-specific prompt engineering, and close alignment with institutional guidelines, curricular standards, and cultural contexts.

7. Conclusions

This paper has presented a modular, ethically anchored framework for the pedagogical transformation of literary texts in primary education through generative AI. Rather than advancing a new algorithm, the contribution lies in the specification and implementation of a functional system architecture, guided by normative principles and designed for real-world adaptability.

Central to the system is the EPVL: a meta-evaluative layer that governs all AI-generated outputs through transparent and editable criteria. This allows educational institutions to tailor content transformations to cultural, developmental, and ethical constraints without modifying the generative backend. This mechanism not only supports developmental and semantic alignment but also foregrounds systematic bias-aware auditing and ethical oversight. By embedding a practical bias taxonomy and policy-based validation directly within the generative loop, the EPVL aims to mitigate risks of cultural stereotyping and ideological distortion, fostering calibrated trust in AI-assisted adaptations.

The framework has now been fully implemented and subjected to an initial pilot with educators. While not yet tested in classroom conditions, the pilot offers early indications of the system’s usability and alignment with teacher expectations, lending preliminary support to the conceptual design. Educator feedback has already been integrated into the platform, refining its templates, scaffolds, and instructional flow.

Critically, the system does not burden teachers with technical prompt design. Instead, it offers a structured, bias-aware interface that encapsulates generative potential within clear pedagogical and ethical boundaries, avoiding both over-reliance on proprietary systems and unsustainable complexity.

The limitations outlined above should guide future research and practical implementations. Future work must focus on empirical deployment across diverse classrooms, evaluating impact on student engagement, interpretive development, and teacher agency, as well as the accuracy and reliability of embedded bias auditing. Nevertheless, this pilot-informed implementation positions the framework as a promising response to global calls for ethical, human-centered AI integration in education.