Artificial Intelligence Empowering Dynamic Spectrum Access in Advanced Wireless Communications: A Comprehensive Overview

Abstract

1. Introduction

- This study presents a structured overview of AI methodologies applied to DSA, including deep learning (DL), supervised and unsupervised learning, reinforcement learning (RL), and metaheuristic algorithms. A systematic classification helps researchers and practitioners identify which intelligent techniques perform well for spectrum sensing, prediction, and allocation.

- The paper provides an in-depth comparison of AI-based techniques and traditional spectrum management protocols. The comparison evaluates each approach in terms of accuracy, adaptability, latency, computational complexity, and scalability, and highlights the operational weaknesses that emerge under practical deployment conditions.

- This review connects AI implementations with modern wireless ecosystems, from 5G to 6G, and with Internet of Things (IoT)-based networks. On the technical side, it highlights how AI can enable real-time spectrum perception and dynamic resource allocation in ultra-dense, heterogeneous, and mobile network environments.

2. Background and Related Work

2.1. Dynamic Frequency Assignment and ML Approaches

2.2. RL-Based DSA in 5G Networks

2.3. Challenges and Opportunities in Intelligent 5G Networks

2.4. Spectrum Sharing (SS) in Integrated TNs and NTNs

2.5. AI-Driven Spectrum Sharing Approaches

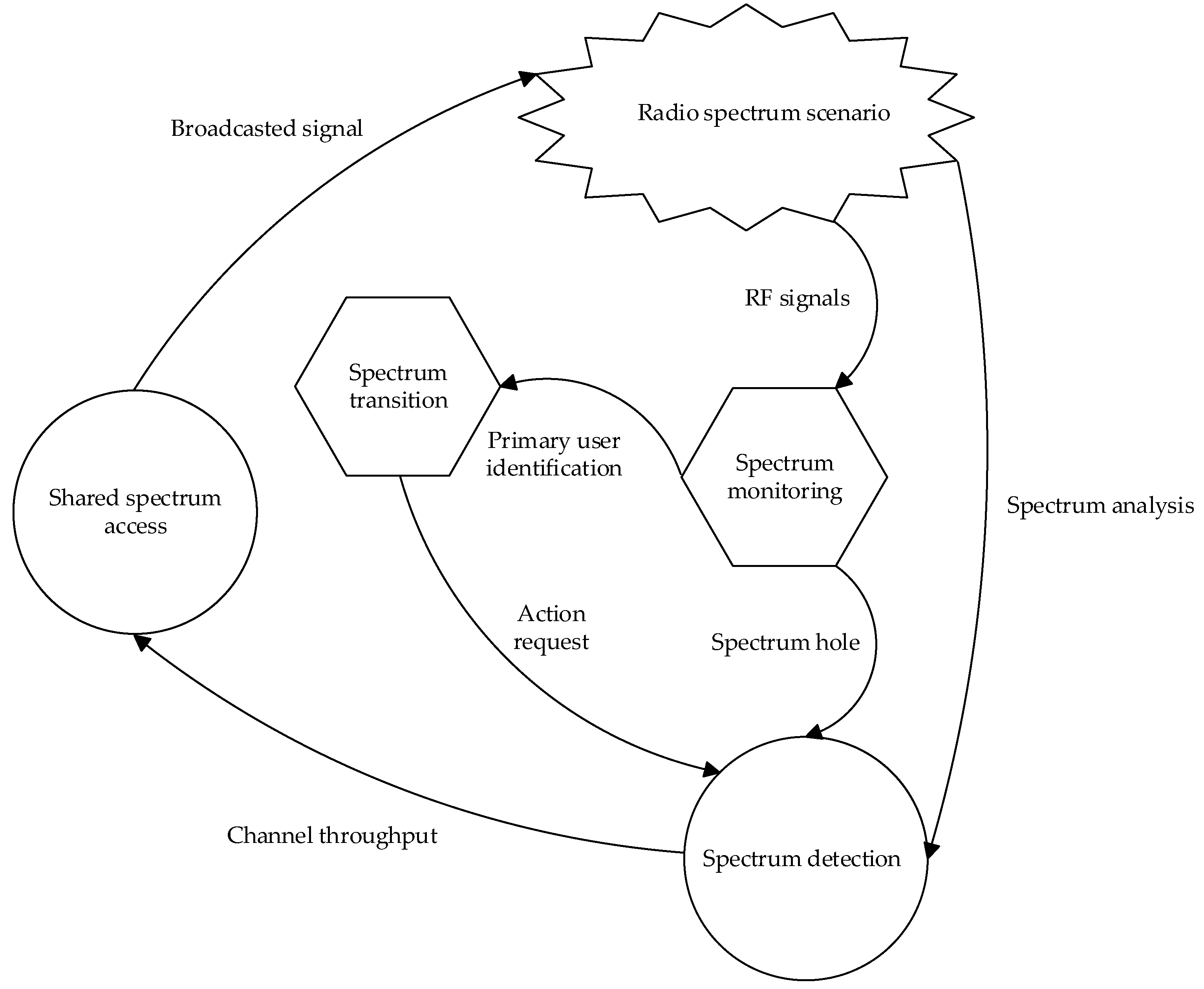

3. Fundamentals of DSA and Allocation

3.1. DSA for 5G and Beyond

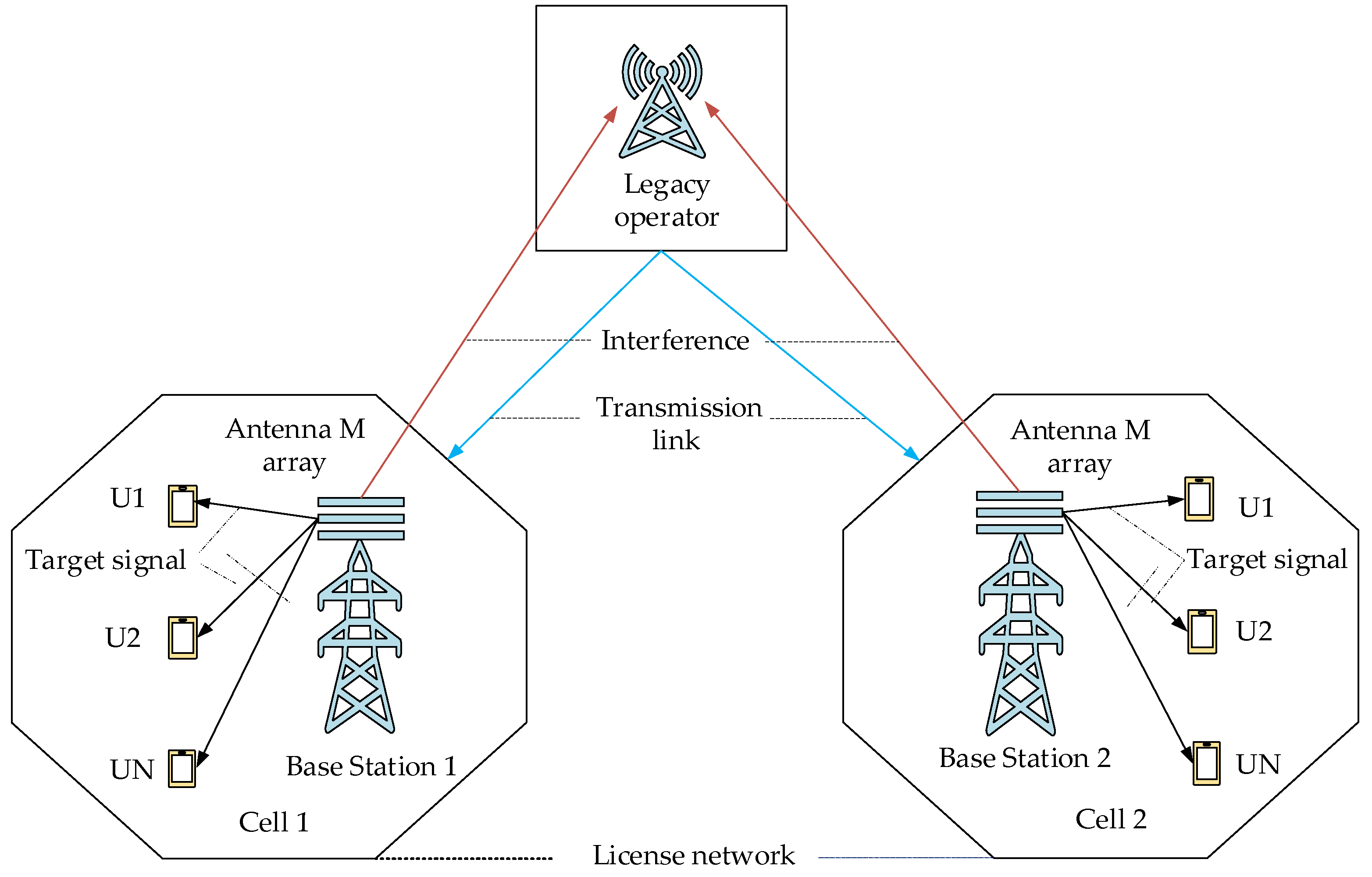

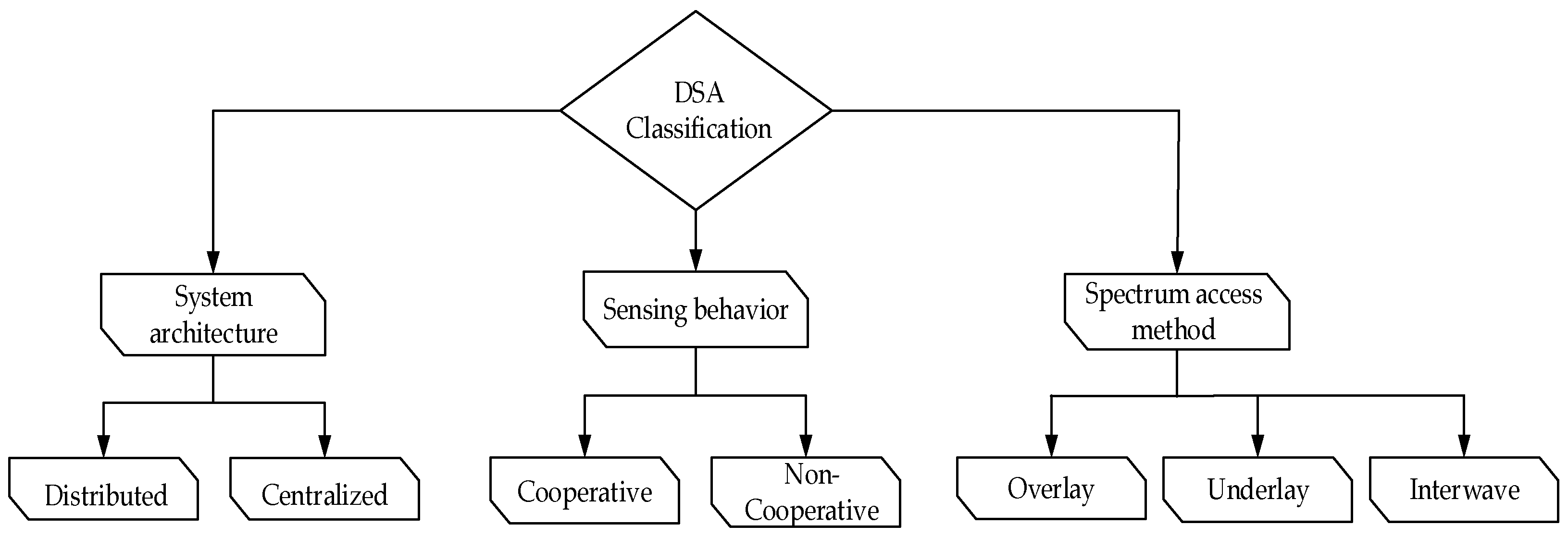

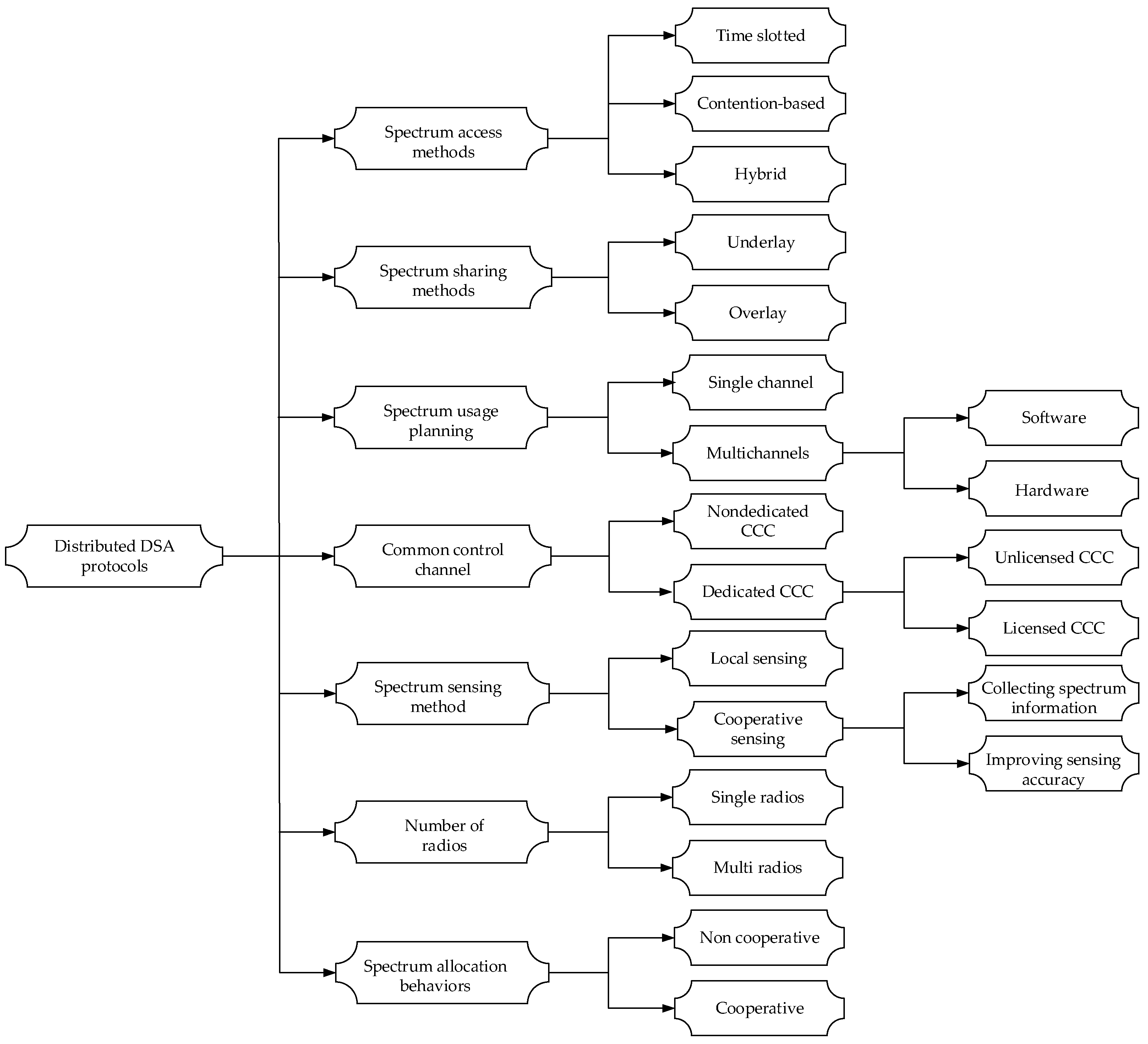

3.2. Different Classifications of DSA

3.2.1. Architecture-Based Classification

3.2.2. Spectrum Sensing Behavior-Based Classification

3.2.3. Spectrum Access Method-Based Classification

3.3. Regulatory and Policy Considerations

3.4. Key Challenges in DSA

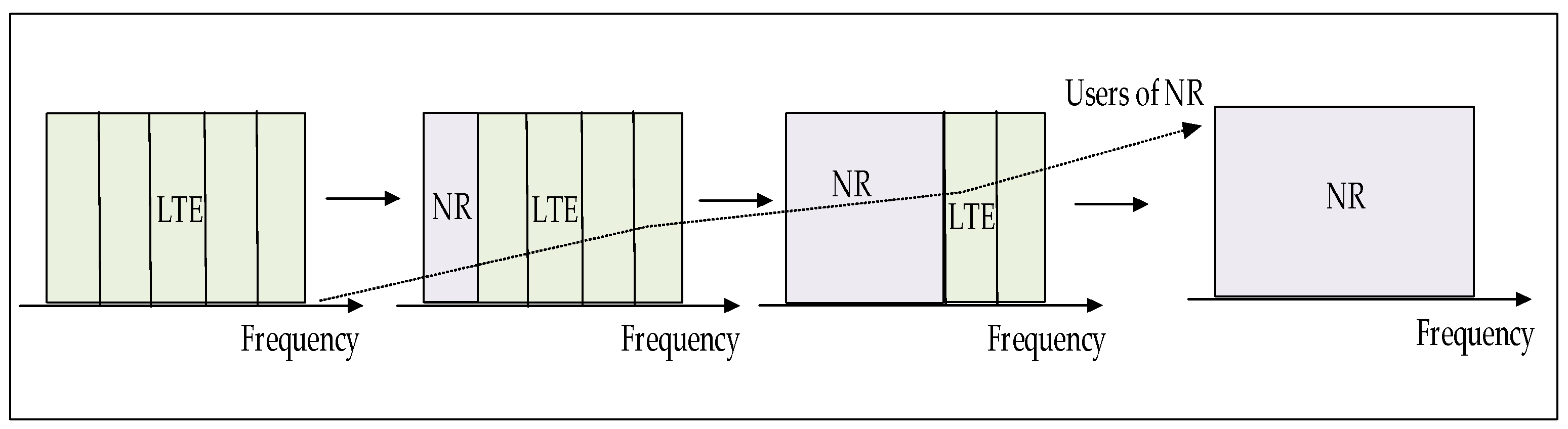

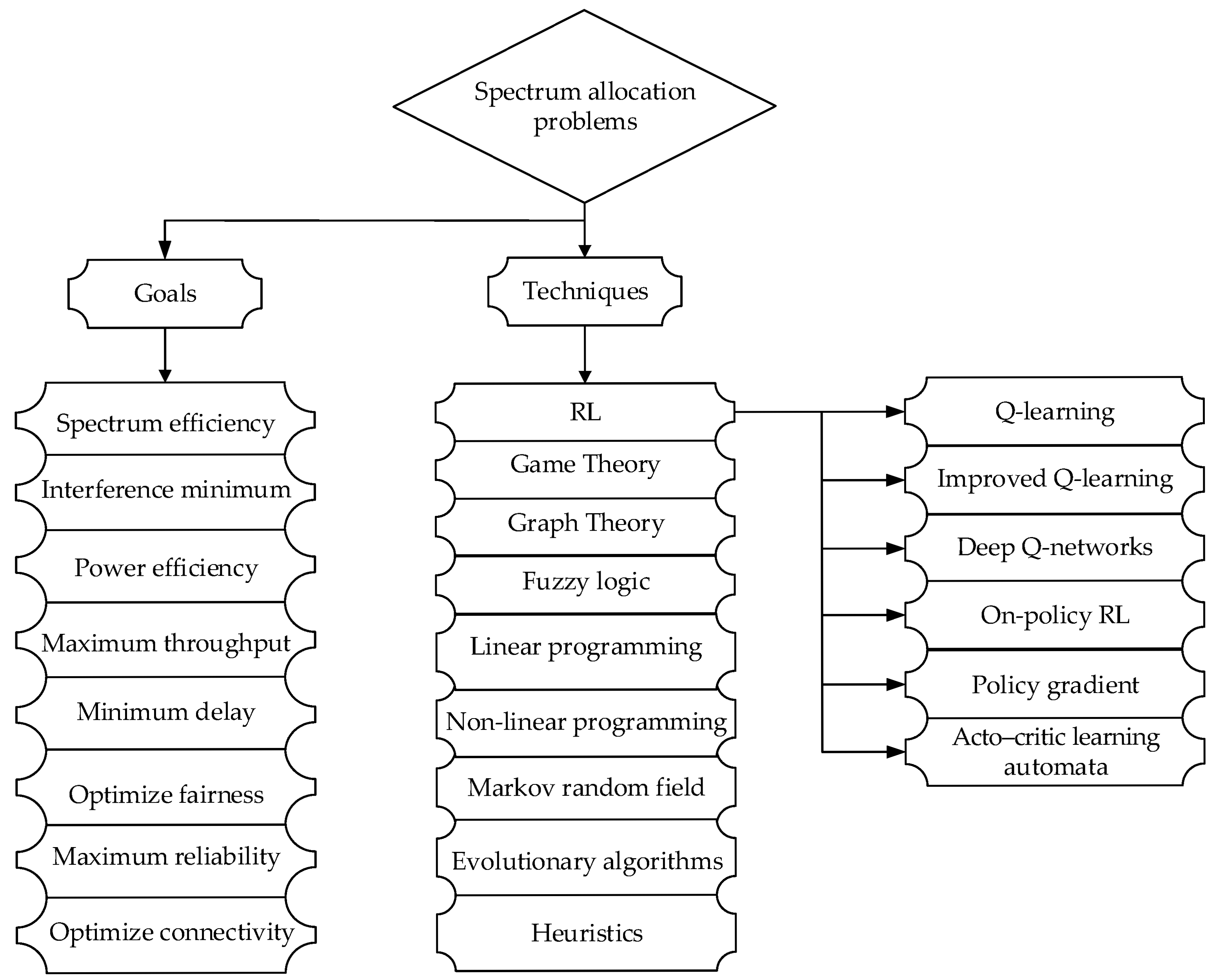

4. AI Techniques in DSA

4.1. ML Techniques

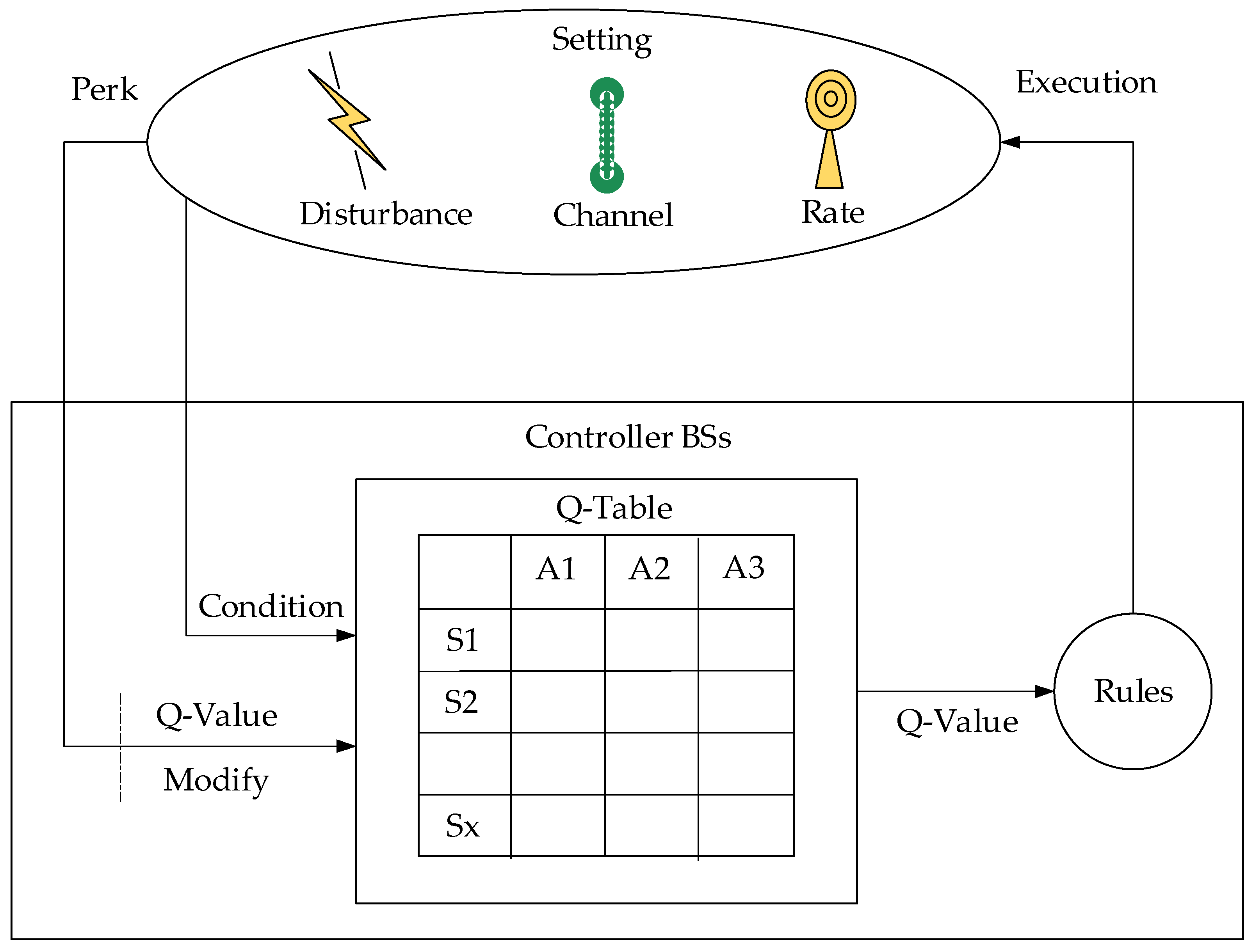

RL for DSA

- Q-Learning-Based DSA

- 2.

- Deep Q Network (DQN)-Based DSA

- 3.

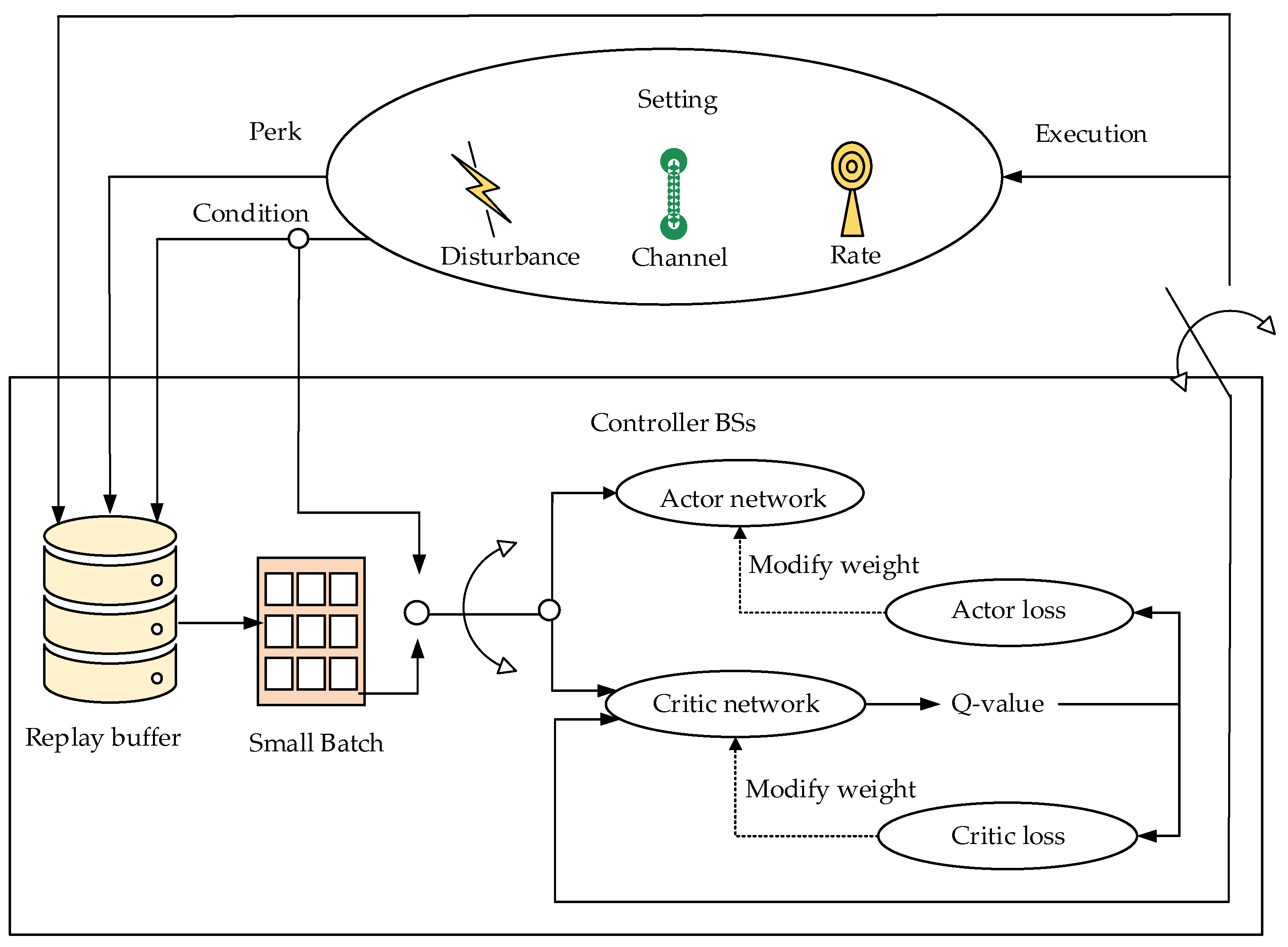

- Deep Deterministic Policy Gradient (DDPG)-Based DSA

- 4.

- Twin Delayed Deep Deterministic (TD3)-Based DSA

- 5.

- Deep Reinforcement Learning (DRL)-Based DSA
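The first technique in this list, tabular Q-learning, can be illustrated with a minimal sketch. It assumes a hypothetical setting in which a single secondary user (SU) picks one of `num_channels` channels in each time slot while primary users (PUs) occupy channels in a fixed periodic pattern (`make_schedule` is an illustrative assumption, not a model taken from the surveyed works). The state is the slot index within the period, the reward is +1 for accessing an idle channel and -1 for colliding with a PU, and each step applies the standard update Q(s, a) <- Q(s, a) + alpha * [r + gamma * max_a' Q(s', a') - Q(s, a)].

```python
import random

def make_schedule(num_channels, period):
    """Hypothetical PU pattern: in slot s, channel s % num_channels is busy."""
    return [{s % num_channels} for s in range(period)]

def train_q_learning(num_channels=4, period=4, episodes=1000, steps=50,
                     alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    busy = make_schedule(num_channels, period)
    # Q-table: one row per state (slot index within the period), one column per channel.
    q = [[0.0] * num_channels for _ in range(period)]
    for _ in range(episodes):
        s = rng.randrange(period)
        for _ in range(steps):
            # Epsilon-greedy channel selection.
            if rng.random() < epsilon:
                a = rng.randrange(num_channels)
            else:
                a = max(range(num_channels), key=lambda c: q[s][c])
            # -1 for a collision with a PU, +1 for a successful access.
            reward = -1.0 if a in busy[s] else 1.0
            s_next = (s + 1) % period
            # Q-learning update toward the bootstrapped temporal-difference target.
            td_target = reward + gamma * max(q[s_next])
            q[s][a] += alpha * (td_target - q[s][a])
            s = s_next
    return q, busy

def greedy_policy(q):
    """Channel with the highest Q-value in each state."""
    return [max(range(len(row)), key=lambda c: row[c]) for row in q]

if __name__ == "__main__":
    q, busy = train_q_learning()
    for s, a in enumerate(greedy_policy(q)):
        print(f"slot {s}: access channel {a} (busy: {sorted(busy[s])})")
```

Because the PU pattern repeats, the learned greedy policy avoids the busy channel in every slot. DQN-based schemes, the second item above, replace the table `q` with a neural network trained on replayed transitions, which is what lets them handle state spaces too large to tabulate.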

4.2. DL Techniques

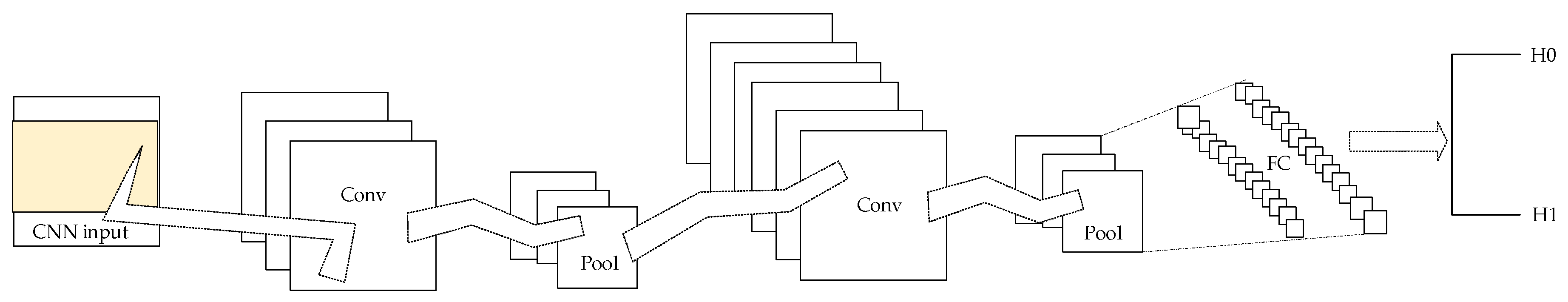

4.2.1. CNN-Based DSA

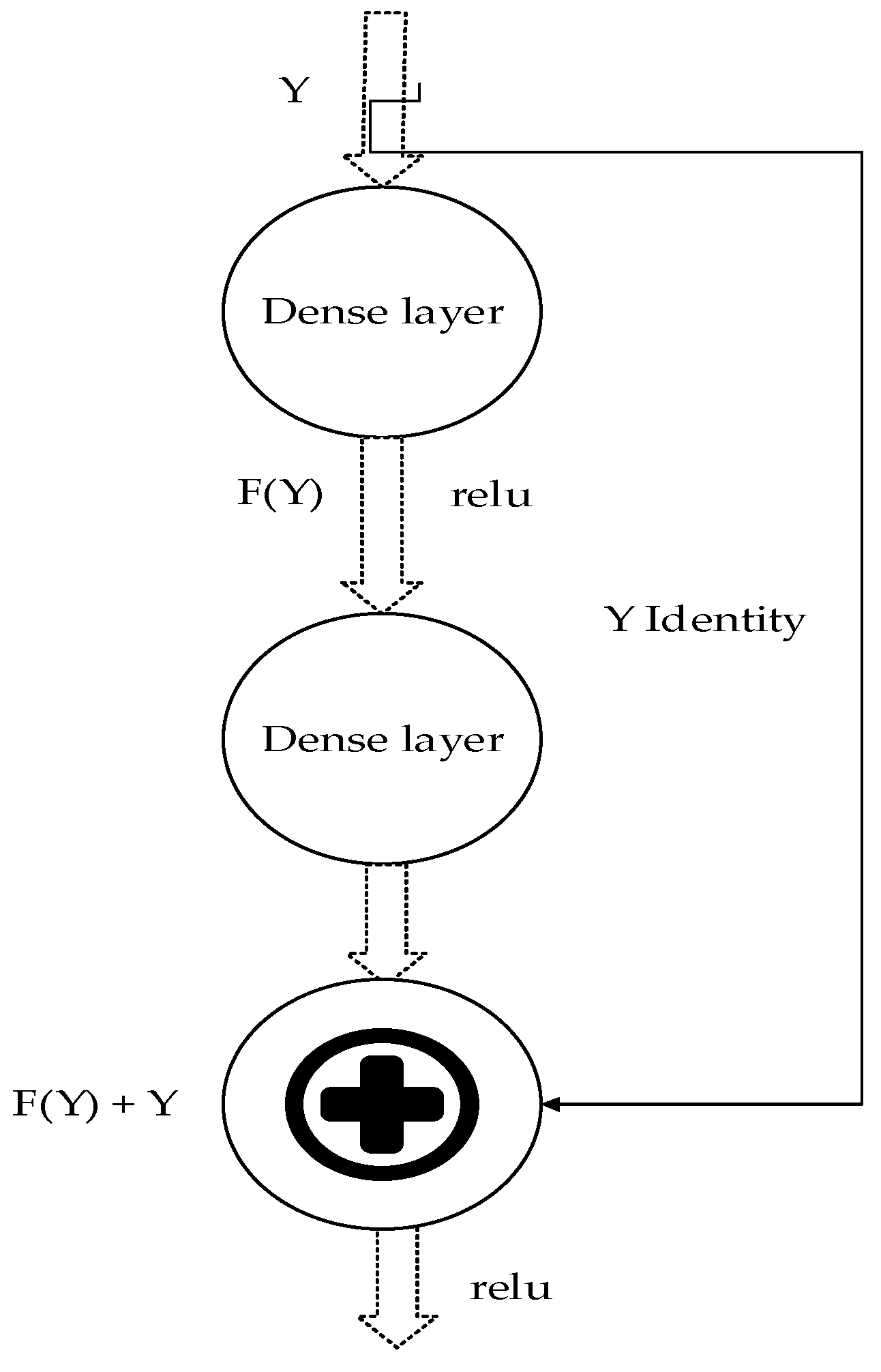
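One common input representation for CNN-based spectrum sensing is the short-time Fourier transform (STFT) spectrogram, which turns received samples into a 2-D time-frequency "image". The sketch below computes a log-magnitude spectrogram in plain Python. The frame length, hop size, and Hann window are illustrative assumptions, and the direct DFT is only adequate for short frames; a practical pipeline would use an FFT library before passing the result to the CNN.

```python
import cmath
import math

def hann_window(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]

def stft_log_magnitude(x, frame_len=64, hop=32, eps=1e-12):
    """Return a log-magnitude spectrogram (dB) as a list of frames.

    Each frame holds frame_len // 2 + 1 non-negative frequency bins, so the
    result has shape (num_frames, frame_len // 2 + 1).
    """
    window = hann_window(frame_len)
    spectrogram = []
    for start in range(0, len(x) - frame_len + 1, hop):
        segment = [x[start + n] * window[n] for n in range(frame_len)]
        row = []
        for k in range(frame_len // 2 + 1):
            # Direct DFT of the windowed frame at bin k.
            coeff = sum(segment[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n in range(frame_len))
            row.append(20.0 * math.log10(abs(coeff) + eps))
        spectrogram.append(row)
    return spectrogram

if __name__ == "__main__":
    # Hypothetical test signal: a tone centred exactly on frequency bin 8.
    tone = [math.cos(2.0 * math.pi * 8 * n / 64) for n in range(256)]
    spec = stft_log_magnitude(tone)
    print(len(spec), len(spec[0]))
    print([max(range(len(row)), key=row.__getitem__) for row in spec])
```

For the 256-sample test tone this yields 7 frames of 33 bins, with the peak of every frame in bin 8.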

4.2.2. Residual Networks (ResNets)-Based DSA

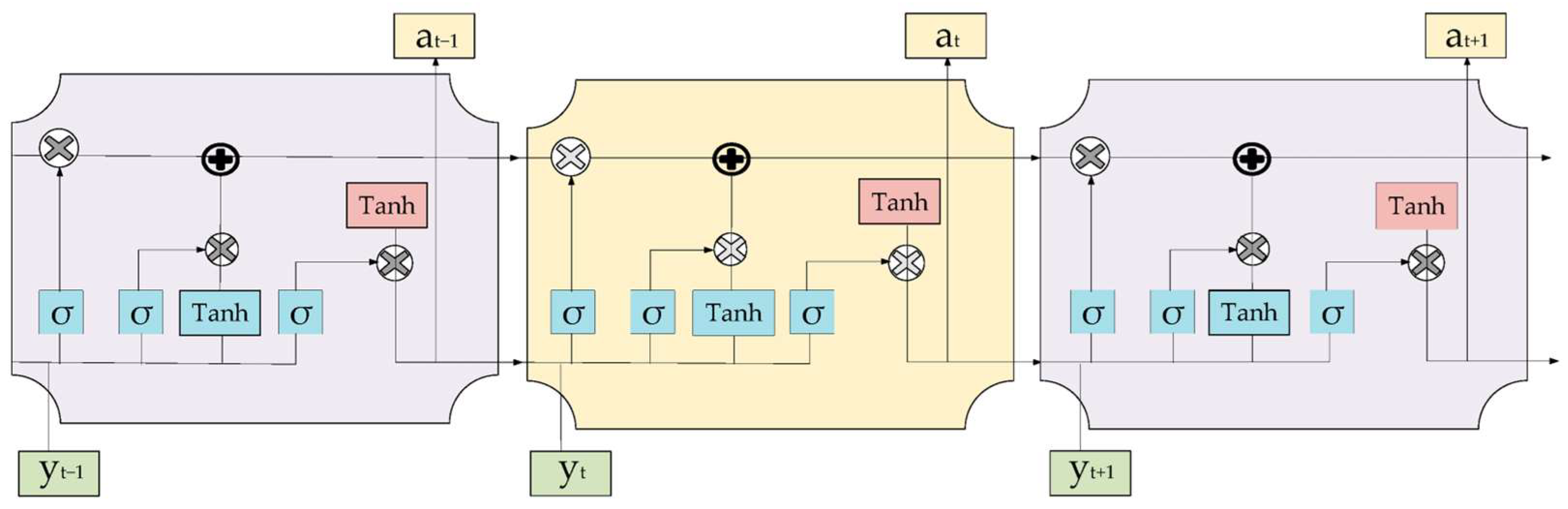

4.2.3. LSTM-Based DSA
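LSTM networks are commonly used in DSA to predict future channel occupancy from a history of sensing results. The gating equations of a single LSTM cell can be sketched in plain Python as follows. The weights produced by `random_params` are hypothetical, untrained placeholders; a practical predictor would learn them by backpropagation through time.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W x + U h + b, with matrices stored as lists of rows."""
    return [sum(w * xi for w, xi in zip(w_row, x))
            + sum(u * hi for u, hi in zip(u_row, h)) + b_j
            for w_row, u_row, b_j in zip(W, U, b)]

def lstm_step(x, h, c, params):
    """One LSTM time step; params maps gate names 'i', 'f', 'o', 'g' to (W, U, b)."""
    i = [sigmoid(z) for z in affine(*params["i"], x, h)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h)]  # candidate cell update
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new

def random_params(input_size, hidden_size, seed=0):
    """Hypothetical untrained weights drawn from [-0.5, 0.5]; biases set to zero."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    return {gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
                   [0.0] * hidden_size)
            for gate in ("i", "f", "o", "g")}

def run_lstm(sequence, params, hidden_size):
    """Feed a sequence of input vectors through the cell; return the final (h, c)."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h, c

if __name__ == "__main__":
    # Hypothetical sensing history for one channel: 1 = busy, 0 = idle in each slot.
    history = [[1.0], [0.0], [1.0], [1.0], [0.0]]
    h, c = run_lstm(history, random_params(input_size=1, hidden_size=3), hidden_size=3)
    print(h)
```

In a spectrum-prediction model, the final hidden state `h` would feed a small output layer (for example, a sigmoid unit giving the probability that the channel is busy in the next slot); that layer and the training loop are omitted here.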

4.2.4. Alternative Neural Network (NN) Approaches for Spectrum Sensing

4.3. Large Language Model (LLM)-Based DSA

5. Effectiveness of AI in Addressing Spectrum Management (SM) Challenges

5.1. AI Techniques in MA

5.2. Spectrum Sensing and Interference

5.3. Natural Language Processing (NLP) in Network Security & Automation

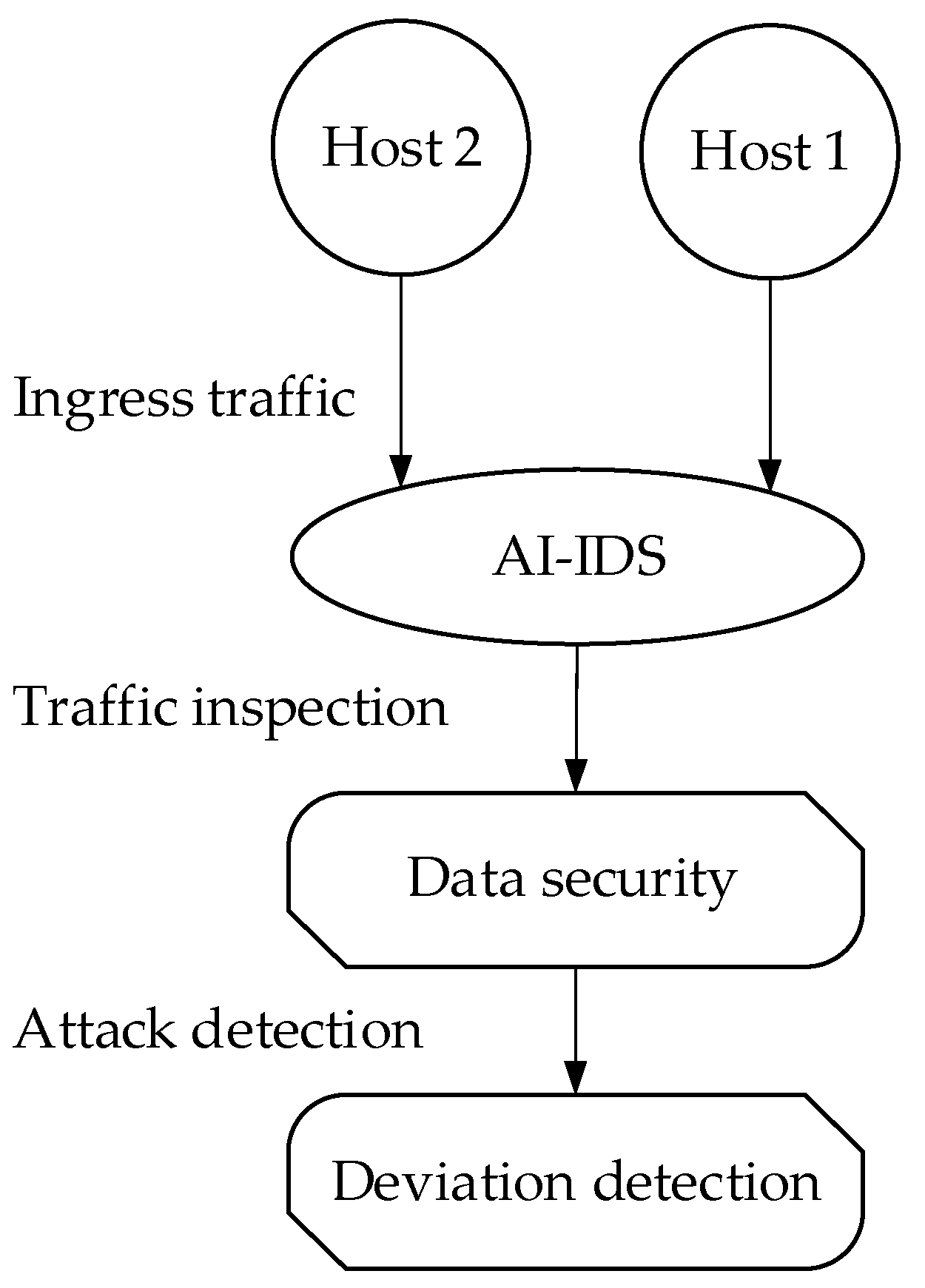

5.4. Detection Techniques for Security and Privacy

5.5. Comparison of AI and Traditional Methods
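Energy detection (ED) is a common non-learning baseline in such comparisons: it requires no training data and only an estimate of the noise variance, but its threshold must be set for a target false-alarm probability, and its reliability is known to degrade at low SNR and under noise-variance uncertainty. The sketch below is a minimal real-valued energy detector, assuming zero-mean Gaussian noise of known variance and a Gaussian (central-limit) approximation for the threshold; `ed_threshold` and `energy_detect` are hypothetical names.

```python
import math
import random
from statistics import NormalDist

def ed_threshold(noise_var, num_samples, p_fa):
    """Energy-detection threshold for real-valued samples (Gaussian/CLT approximation).

    Under H0 (zero-mean Gaussian noise of variance noise_var), the statistic
    T = mean(y**2) has mean noise_var and variance 2 * noise_var**2 / num_samples,
    so the threshold for a target false-alarm probability p_fa is
    noise_var * (1 + Qinv(p_fa) * sqrt(2 / num_samples)).
    """
    q_inv = NormalDist().inv_cdf(1.0 - p_fa)  # inverse Gaussian tail function Q^-1(p_fa)
    return noise_var * (1.0 + q_inv * math.sqrt(2.0 / num_samples))

def energy_detect(samples, noise_var, p_fa=0.05):
    """Declare the channel busy (True) if the average sample energy exceeds the threshold."""
    n = len(samples)
    statistic = sum(y * y for y in samples) / n
    return statistic > ed_threshold(noise_var, n, p_fa)

if __name__ == "__main__":
    rng = random.Random(1)
    noise = [rng.gauss(0.0, 1.0) for _ in range(1000)]
    # Hypothetical PU signal: a sinusoid of power 2 added to unit-variance noise.
    received = [2.0 * math.cos(0.3 * n) + w for n, w in enumerate(noise)]
    print(ed_threshold(1.0, 1000, 0.05))
    print(energy_detect(noise, 1.0), energy_detect(received, 1.0))
```

With N = 1000 samples and P_fa = 0.05, the threshold sits only about 7% above the noise floor, which illustrates why ED struggles once the PU signal power falls well below the noise power.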

6. Applications of AI in DSA for 5G, 6G, and Beyond

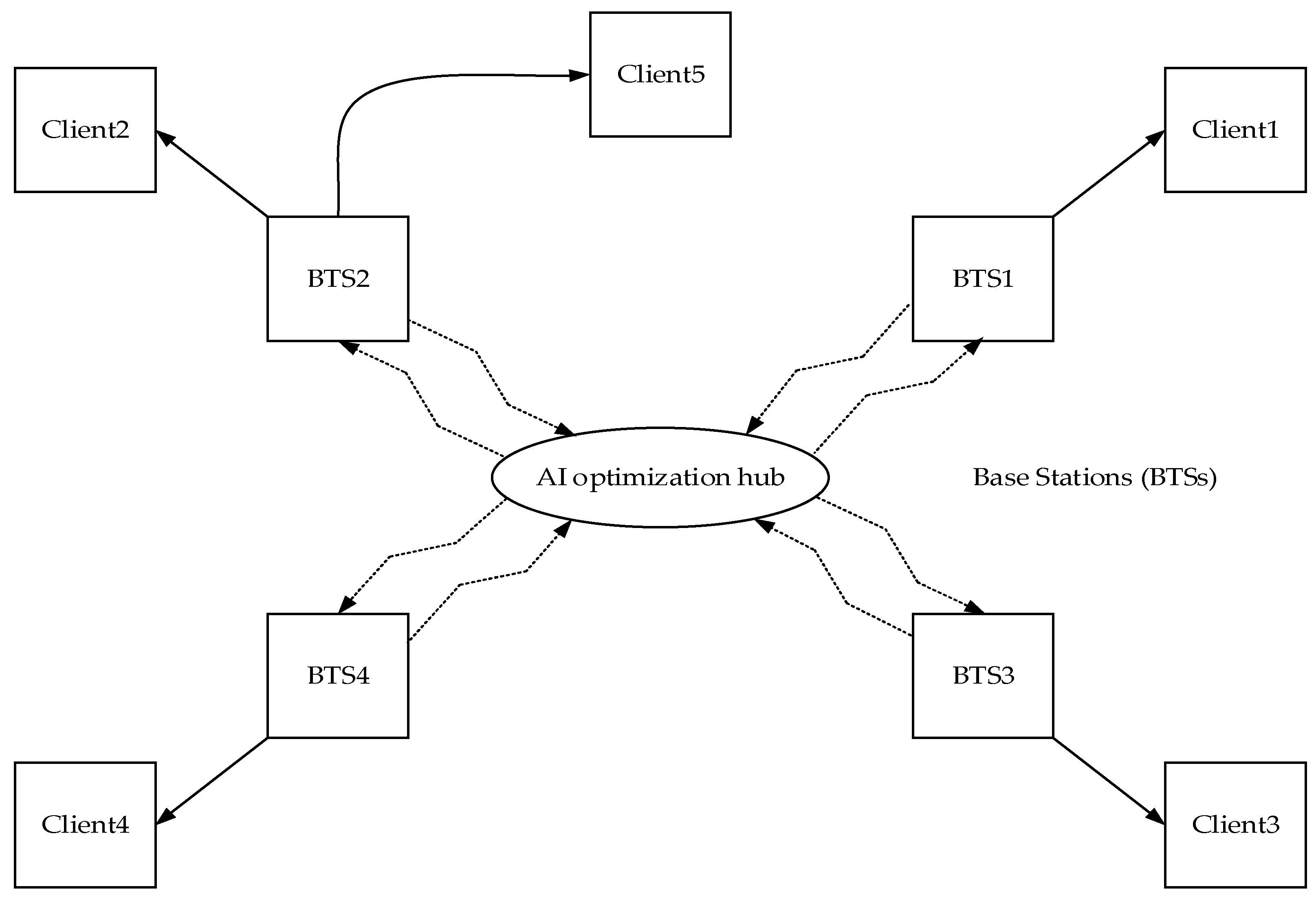

6.1. AI-Driven Connectivity in Dense Urban 5G/6G Networks

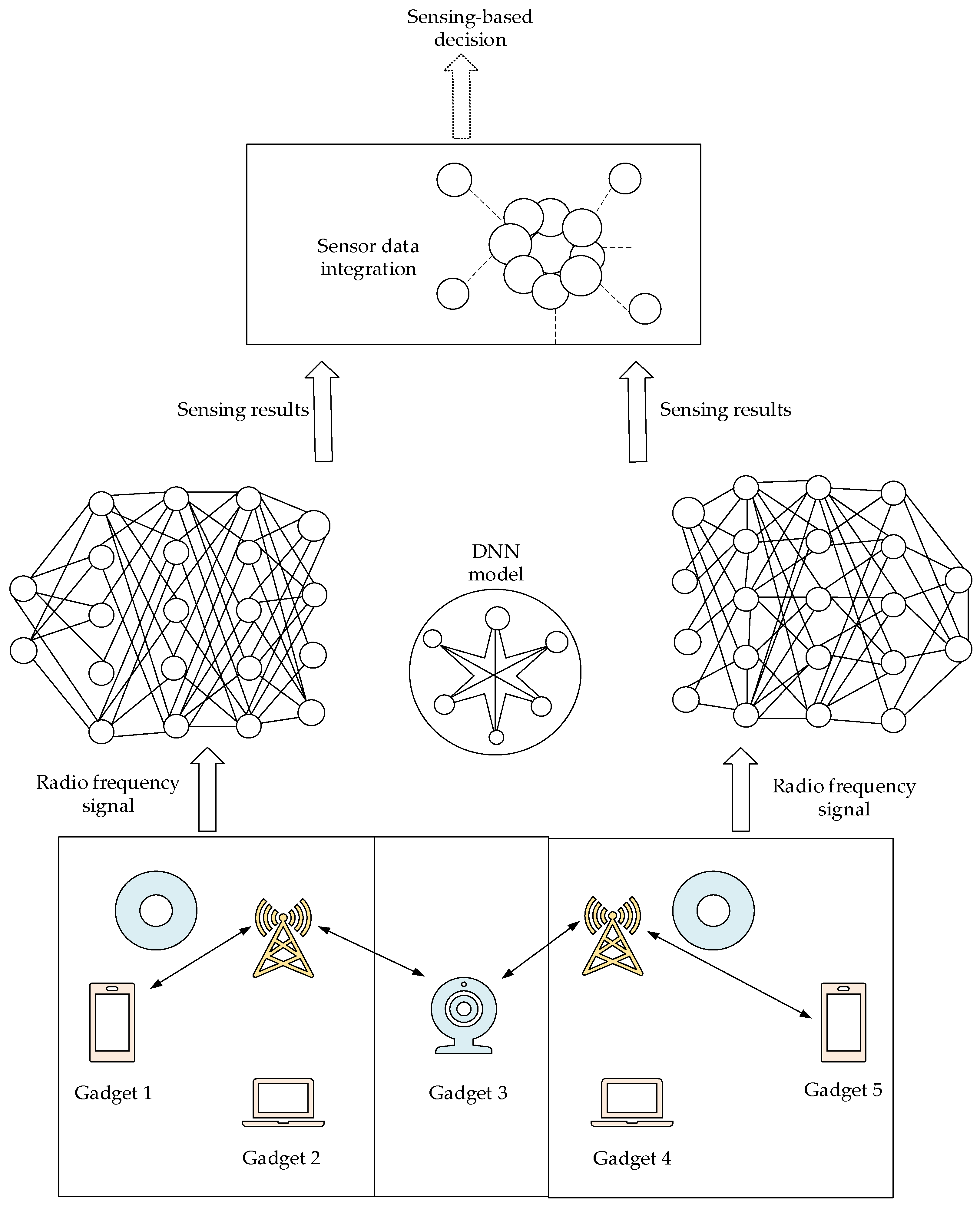

6.2. AI for IoT and Edge Network Management and Security

6.3. AI-Driven Network Security for Cloud Communication Systems

6.4. Core Applications of Network Slicing

6.5. Use of Generative AI Models

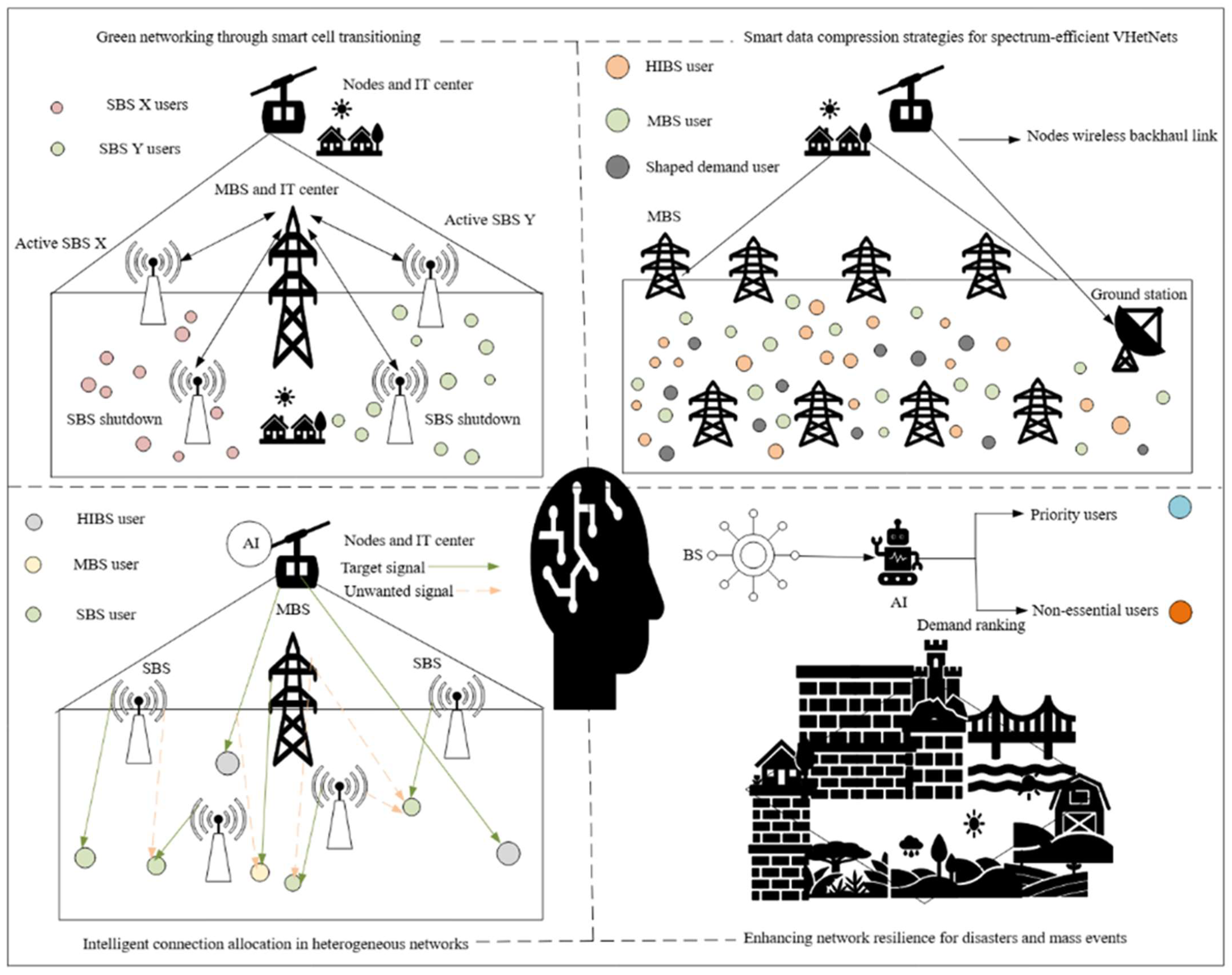

6.5.1. Green Networking Through Smart Cell Transitioning

6.5.2. Intelligent Connection Allocation in Heterogeneous Networks

6.5.3. Smart Data Compression Strategies for Spectrum-Efficient VHetNets

6.5.4. Enhancing Network Resilience for Disasters and Mass Events

6.5.5. Exploring New Revenue Streams with Generative AI (GenAI) in Telecom

6.6. Open-Source Datasets for Wireless Communication Research

7. Challenges and Limitations of DSA

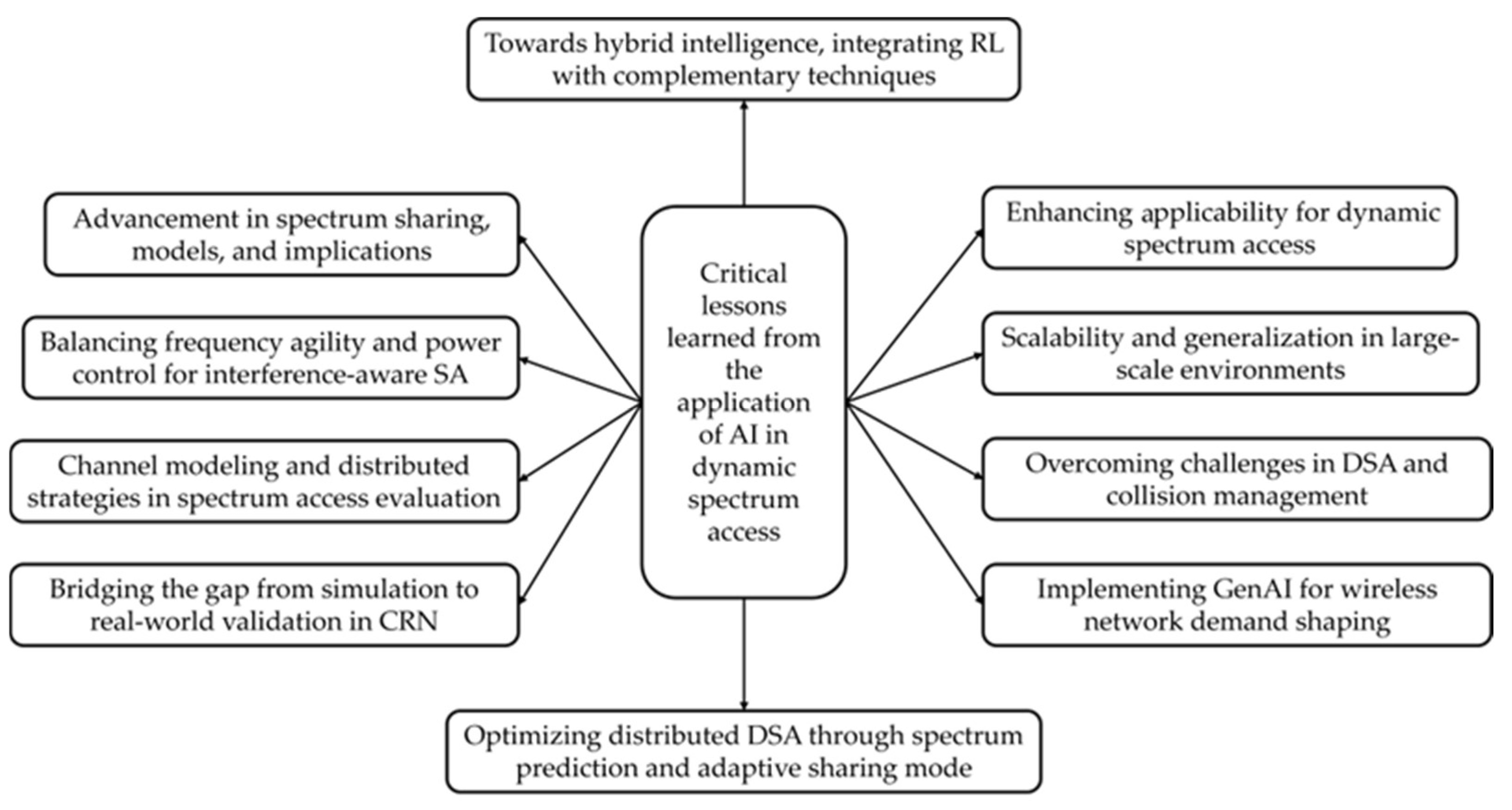

8. Recent Trends, Future Research Directions, and Lessons Learned

8.1. Recent Trends

8.2. Future Research Directions

8.3. Lessons Learned

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| 3GPP | 3rd Generation Partnership Project |
| 4G | Fourth Generation |
| 5G | Fifth Generation |
| 6G | Sixth Generation |
| AI | Artificial Intelligence |
| AMC | Automatic Modulation Classification |
| ANNs | Artificial Neural Networks |
| APs | Access Points |
| AR/VR | Augmented/Virtual Reality |
| B5G | Beyond 5G |
| BGP | Border Gateway Protocol |
| BS | Base Station |
| BW | Bandwidth |
| CBRS | Citizens Broadband Radio Service |
| CNNs | Convolutional Neural Networks |
| CR | Cognitive Radio |
| CRNs | Cognitive Radio Networks |
| CRS | Cell-Specific Reference Signal |
| CSI | Channel State Information |
| CTDE | Centralized Training and Decentralized Execution |
| CUS | Citizens’ Use Spectrum |
| CWNs | Cognitive Wireless Networks |
| DA | Data Augmentation |
| DCS | Digital Cellular System |
| DCSS | Dynamic and Collaborative Spectrum Sharing |
| DDPG | Deep Deterministic Policy Gradient |
| DDQN | Double Deep Q-Network |
| Dec-POMDP | Decentralized Partially Observable Markov Decision Process |
| DL | Deep Learning |
| DNNs | Deep Neural Networks |
| DQN | Deep Q-Network |
| DRL | Deep Reinforcement Learning |
| DSA | Dynamic Spectrum Access |
| ED | Energy Detection |
| E-GSM-900 | Extended Global System for Mobile Communications 900 MHz |
| eMBB | Enhanced Mobile Broadband |
| FCC | Federal Communications Commission |
| FL | Federated Learning |
| FM | Frequency Modulation |
| FR | Frequency Range |
| GANs | Generative Adversarial Networks |
| GDPR | General Data Protection Regulation |
| GenAI | Generative AI |
| HCSSA | Hierarchical Cognitive Spectrum Sharing Architecture |
| HD | Half Duplex |
| HDRL | Hierarchical Deep Reinforcement Learning |
| HetNets | Heterogeneous Networks |
| HIBS | High-Altitude Platform Station as IMT Base Station |
| IBFD | In-Band Full Duplex |
| IDS | Intrusion Detection Systems |
| IEEE | Institute of Electrical and Electronics Engineers |
| IoT | Internet of Things |
| ITU | International Telecommunication Union |
| KNN | k-Nearest Neighbors |
| LEO | Low Earth Orbit |
| LLMs | Large Language Models |
| LoRa | Long Range |
| LoRaWAN | Long Range Wide Area Network |
| LP | Linear Programming |
| LPEES | Lightweight Policy Enforcement and Evaluation System |
| LSA | Licensed Shared Access |
| LSTM | Long Short-Term Memory |
| LTE | Long Term Evolution |
| MA | Multiple Access |
| MAB | Multi-Armed Bandit |
| MAC | Medium Access Control |
| MADRL | Multi-Agent Deep Reinforcement Learning |
| MARL | Multi-Agent Reinforcement Learning |
| MBN | Mobile Broadband Network |
| MIMO | Multiple-Input Multiple-Output |
| ML | Machine Learning |
| MLPs | Multi-Layer Perceptrons |
| mMTC | Massive Machine-Type Communication |
| mmWave | Millimeter Wave |
| MSBT | Multicast Source-Based Tree |
| NARNET | Nonlinear Autoregressive Neural Network |
| NFV | Network Functions Virtualization |
| NLP | Natural Language Processing |
| NN | Neural Network |
| NOMA | Non-Orthogonal Multiple Access |
| NR | New Radio |
| NTNs | Non-Terrestrial Networks |
| OTFS | Orthogonal Time-Frequency Space |
| PA | Power Allocation |
| PAL | Priority Access License (CBRS) |
| PCA | Principal Component Analysis |
| PCAST | President’s Council of Advisors on Science and Technology |
| PIBF | Priority Index-Based Framework |
| PLA | Physical Layer Authentication |
| PLS | Physical Layer Security |
| PUs | Primary Users |
| QF | Quality Function |
| QL | Quality Learning |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| QT | Quality Table |
| QV | Quality Value |
| RA | Resource Allocation |
| RAN | Radio Access Network |
| ResNet | Residual Networks |
| RFR | Random Forest Regressor |
| RIS | Reconfigurable Intelligent Surfaces |
| RL | Reinforcement Learning |
| RNNs | Recurrent Neural Networks |
| SA | Spectrum Allocation |
| SAGINs | Space–Air–Ground Integrated Networks |
| SCF | Spectral Correlation Function |
| SDN | Software-Defined Networking |
| SE | Spectral Efficiency |
| SIM | Spectrum Interference Management |
| SINR | Signal-to-Interference-Plus-Noise Ratio |
| SM | Spectrum Management |
| SMPC | Secure Multi-Party Computation |
| SNR | Signal-to-Noise Ratio |
| SS | Spectrum Sharing |
| STFT | Short-Time Fourier Transform |
| SUs | Secondary Users |
| SVM | Support Vector Machines |
| TD3 | Twin Delayed Deep Deterministic Policy Gradient |
| TL | Transfer Learning |
| TNs | Terrestrial Networks |
| TPAAD | Two-Phase Authentication for Attack Detection |
| TV | Television |
| TVWS | TV White Spaces |
| UAV | Unmanned Aerial Vehicle |
| URLLC | Ultra-Reliable Low-Latency Communications |
| V2X | Vehicle-to-Everything |
| VANETs | Vehicular Ad Hoc Networks |
| VHetNets | Vertical Heterogeneous Networks |
| Wi-Fi | Wireless Fidelity |
| WNs | Wireless Networks |
| WRAN | Wireless Regional Area Network |
| WRC | World Radiocommunication Conference |
| WT | Wavelet Transform |
| XAI | Explainable AI |
| ZKPs | Zero-Knowledge Proofs |

References

- Singh, S.; Anand, V. Load balancing clustering and routing for IoT-enabled wireless sensor networks. Int. J. Netw. Manag. 2023, 33, e2244. [Google Scholar] [CrossRef]

- dos Santos Junior, E.; Souza, R.D.; Rebelatto, J.L. Hybrid multiple access for channel allocation-aided eMBB and URLLC slicing in 5G and beyond systems. Internet Technol. Lett. 2021, 4, e294. [Google Scholar] [CrossRef]

- Jawad, A.T.; Maaloul, R.; Chaari, L. A comprehensive survey on 6G and beyond: Enabling technologies, opportunities of machine learning and challenges. Comput. Netw. 2023, 237, 110085. [Google Scholar] [CrossRef]

- Ravi, B.; Kumar, M.; Hu, Y.; Hassan, S.; Kumar, B. Stochastic modeling and performance analysis in balancing load and traffic for vehicular ad hoc networks: A review. Int. J. Netw. Manag. 2023, 33, e2224. [Google Scholar] [CrossRef]

- Sun, R.; Cheng, N.; Li, C.; Chen, F.; Chen, W. Knowledge-Driven Deep Learning Paradigms for Wireless Network Optimization in 6G. IEEE Netw. 2024, 38, 70–78. [Google Scholar] [CrossRef]

- Taleb, T.; Benzaïd, C.; Addad, R.A.; Samdanis, K. AI/ML for beyond 5G systems: Concepts, technology enablers & solutions. Comput. Netw. 2023, 237, 110044. [Google Scholar] [CrossRef]

- Sabur, A. Lightweight Flow-Based Policy Enforcement for SDN-Based Multi-Domain Communication. Int. J. Netw. Manag. 2025, 35, e2312. [Google Scholar] [CrossRef]

- Geraci, G.; López-Pérez, D.; Benzaghta, M.; Chatzinotas, S. Integrating Terrestrial and Non-Terrestrial Networks: 3D Opportunities and Challenges. IEEE Commun. Mag. 2022, 61, 42–48. [Google Scholar] [CrossRef]

- Çiloğlu, B.; Koç, G.B.; Shamsabadi, A.A.; Ozturk, M.; Yanikomeroglu, H. Strategic Demand-Planning in Wireless Networks: Can Generative-AI Save Spectrum and Energy? IEEE Commun. Mag. 2025, 63, 134–141. [Google Scholar] [CrossRef]

- Khanh, Q.V.; Hoai, N.V.; Manh, L.D.; Le, A.N.; Jeon, G. Wireless Communication Technologies for IoT in 5G: Vision, Applications, and Challenges. Wirel. Commun. Mob. Comput. 2022, 2022, 3229294. [Google Scholar] [CrossRef]

- Frieden, R. The evolving 5G case study in United States unilateral spectrum planning and policy. Telecommun. Policy 2020, 44, 102011. [Google Scholar] [CrossRef]

- Biswas, S.; Bishnu, A.; Khan, F.A.; Ratnarajah, T. In-Band Full-Duplex Dynamic Spectrum Sharing in Beyond 5G Networks. IEEE Commun. Mag. 2021, 59, 54–60. [Google Scholar] [CrossRef]

- Obite, F.; Usman, A.D.; Okafor, E. An overview of deep reinforcement learning for spectrum sensing in cognitive radio networks. Digit. Signal Process. 2021, 113, 103014. [Google Scholar] [CrossRef]

- Doshi, A.; Yerramalli, S.; Ferrari, L.; Yoo, T.; Andrews, J.G. A deep reinforcement learning framework for conten-tion-based spectrum sharing. IEEE J. Sel. Areas Commun. 2021, 39, 2526–2540. [Google Scholar] [CrossRef]

- Imoize, A.L.; Obakhena, H.I.; Anyasi, F.I.; Adelabu, M.A.; Kavitha, K.; Faruk, N. Spectral Efficiency Bounds of Cell-Free Massive MIMO Assisted UAV Cellular Communication. In Proceedings of the 2022 IEEE Nigeria 4th International Conference on Disruptive Technologies for Sustainable Development (NIGERCON), Lagos, Nigeria, 5–7 April 2022; pp. 1–5. [Google Scholar]

- Elhachmi, J. Distributed reinforcement learning for dynamic spectrum allocation in cognitive radio-based internet of things. IET Netw. 2022, 11, 207–220. [Google Scholar] [CrossRef]

- He, X.; Luo, M.; Hu, Y.; Xiong, F. Data sharing mode of dispatching automation system based on distributed machine learning. Int. J. Netw. Manag. 2024, 35, e2269. [Google Scholar] [CrossRef]

- Nisa, N.; Khan, A.S.; Ahmad, Z.; Abdullah, J. TPAAD: Two-phase authentication system for denial of service attack detection and mitigation using machine learning in software-defined network. Int. J. Netw. Manag. 2024, 34, e2258. [Google Scholar] [CrossRef]

- Rahman, A.; Khan, M.S.I.; Montieri, A.; Islam, M.J.; Karim, M.R.; Hasan, M.; Kundu, D.; Nasir, M.K.; Pescapè, A. BlockSD-5GNet: Enhancing security of 5G network through blockchain-SDN with ML-based bandwidth prediction. Trans. Emerg. Telecommun. Technol. 2024, 35, e4965. [Google Scholar] [CrossRef]

- Saha, R.K.; Cioffi, J.M. Dynamic Spectrum Sharing for 5G NR and 4G LTE Coexistence—A Comprehensive Review. IEEE Open J. Commun. Soc. 2024, 5, 795–835. [Google Scholar] [CrossRef]

- Khan, A.; Ahmad, S.; Ali, I.; Hayat, B.; Tian, Y.; Liu, W. Dynamic mobility and handover management in software-defined networking-based fifth-generation heterogeneous networks. Int. J. Netw. Manag. 2025, 35, e2268. [Google Scholar] [CrossRef]

- Yu, P.; Zhou, F.; Zhang, X.; Qiu, X.; Kadoch, M.; Cheriet, M. Deep Learning-Based Resource Allocation for 5G Broadband TV Service. IEEE Trans. Broadcast. 2020, 66, 800–813. [Google Scholar] [CrossRef]

- Kurunathan, H.; Huang, H.; Li, K.; Ni, W.; Hossain, E. Machine Learning-Aided Operations and Communications of Unmanned Aerial Vehicles: A Contemporary Survey. IEEE Commun. Surv. Tutorials 2023, 26, 496–533. [Google Scholar] [CrossRef]

- Matinmikko-Blue, M.; Yrjola, S.; Ahokangas, P. Spectrum Management in the 6G Era: The Role of Regulation and Spectrum Sharing. In Proceedings of the 2020 2nd 6G Wireless Summit (6G SUMMIT), Levi, Finland, 17–20 March 2020; pp. 1–5. [Google Scholar]

- Inamdar, M.A.; Kumaraswamy, H.V. Energy efficient 5G networks: Techniques and challenges. In Proceedings of the 2020 International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 10–12 September 2020; pp. 1317–1322. [Google Scholar]

- Sharma, H.; Kumar, N. Deep learning based physical layer security for terrestrial communications in 5G and beyond networks: A survey. Phys. Commun. 2023, 57, 102002. [Google Scholar] [CrossRef]

- Cao, Y.; Lien, S.-Y.; Liang, Y.-C.; Niyato, D. Multi-Tier Deep Reinforcement Learning for Non-Terrestrial Networks. IEEE Wirel. Commun. 2024, 31, 194–201. [Google Scholar] [CrossRef]

- Heydarishahreza, N.; Han, T.; Ansari, N. Spectrum Sharing and Interference Management for 6G LEO Satellite-Terrestrial Network Integration. IEEE Commun. Surv. Tutorials 2024. [Google Scholar] [CrossRef]

- Zhang, L.; Wei, Z.; Wang, L.; Yuan, X.; Wu, H.; Xu, W. Spectrum Sharing in the Sky and Space: A Survey. Sensors 2022, 23, 342. [Google Scholar] [CrossRef]

- Khalid, M.; Ali, J.; Roh, B.-H. Artificial Intelligence and Machine Learning Technologies for Integration of Terrestrial in Non-Terrestrial Networks. IEEE Internet Things Mag. 2024, 7, 28–33. [Google Scholar] [CrossRef]

- Si, J.; Huang, R.; Li, Z.; Hu, H.; Jin, Y.; Cheng, J.; Al-Dhahir, N. When Spectrum Sharing in Cognitive Networks Meets Deep Reinforcement Learning: Architecture, Fundamentals, and Challenges. IEEE Netw. 2023, 38, 187–195. [Google Scholar] [CrossRef]

- Tang, C.; Chen, Y.; Chen, G.; Du, L.; Liu, H. A Dynamic and Collaborative Spectrum Sharing Strategy Based on Multi-Agent DRL in Satellite-Terrestrial Converged Networks. IEEE Trans. Veh. Technol. 2024, 74, 7969–7984. [Google Scholar] [CrossRef]

- Zhou, Z.; Zhang, Q.; Ge, J.; Liang, Y.-C. Hierarchical Cognitive Spectrum Sharing in Space-Air-Ground Integrated Networks. IEEE Trans. Wirel. Commun. 2024, 24, 1430–1447. [Google Scholar] [CrossRef]

- Mehmood Mughal, D.; Mahboob, T.; Tariq Shah, S.; Kim, S.H.; Young Chung, M. Deep learning-based spectrum sharing in next generation multi-operator cellular networks. Int. J. Commun. Syst. 2025, 38, e5964. [Google Scholar] [CrossRef]

- Zhao, Q.; Zou, H.; Tian, Y.; Bariah, L.; Mouhouche, B.; Bader, F.; Almazrouei, E.; Debbah, M. Artificial Intelligence-Enabled Dy-namic Spectrum Management. In Intelligent Spectrum Management Towards 6G; Wiley & Sons: Hoboken, NJ, USA, 2025; pp. 73–89. [Google Scholar] [CrossRef]

- Brown, C.; Ghasemi, A. Evolution Toward Data-Driven Spectrum Sharing: Opportunities and Challenges. IEEE Access 2023, 11, 99680–99692. [Google Scholar] [CrossRef]

- Li, F.; Shen, B.; Guo, J.; Lam, K.-Y.; Wei, G.; Wang, L. Dynamic Spectrum Access for Internet-of-Things Based on Federated Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2022, 71, 7952–7956. [Google Scholar] [CrossRef]

- Das, S.K.; Rahman, S.; Mohjazi, L.; Imran, M.A.; Rabie, K.M. Reinforcement Learning-Based Resource Allocation for M2M Communications over Cellular Networks. In Proceedings of the 2022 IEEE Wireless Communications and Networking Conference (WCNC), Austin, TX, USA, 10–13 April 2022; pp. 1473–1478. [Google Scholar]

- Zhang, Y.; He, D.; He, W.; Xu, Y.; Guan, Y.; Zhang, W. Dynamic spectrum allocation by 5G base station. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; pp. 1463–1467. [Google Scholar]

- Srinivasan, M.; Kotagi, V.J.; Murthy, C.S.R. A Q-Learning Framework for User QoE Enhanced Self-Organizing Spectrally Efficient Network Using a Novel Inter-Operator Proximal Spectrum Sharing. IEEE J. Sel. Areas Commun. 2016, 34, 2887–2901. [Google Scholar] [CrossRef]

- Ahmad, W.S.H.M.W.; Radzi, N.A.M.; Samidi, F.S.; Ismail, A.; Abdullah, F.; Jamaludin, M.Z.; Zakaria, M.N. 5G Technology: Towards Dynamic Spectrum Sharing Using Cognitive Radio Networks. IEEE Access 2020, 8, 14460–14488. [Google Scholar] [CrossRef]

- Haldorai, A.; Sivaraj, J.; Nagabushanam, M.; Roberts, M.K.; Karras, D.A. Cognitive Wireless Networks Based Spectrum Sensing Strategies: A Comparative Analysis. Appl. Comput. Intell. Soft Comput. 2022, 2022, 6988847. [Google Scholar] [CrossRef]

- Parvini, M.; Zarif, A.H.; Nouruzi, A.; Mokari, N.; Javan, M.R.; Abbasi, B.; Ghasemi, A.; Yanikomeroglu, H. Spectrum Sharing Schemes From 4G to 5G and Beyond: Protocol Flow, Regulation, Ecosystem, Economic. IEEE Open J. Commun. Soc. 2023, 4, 464–517. [Google Scholar] [CrossRef]

- Zheleva, M.; Anderson, C.R.; Aksoy, M.; Johnson, J.T.; Affinnih, H.; DePree, C.G. Radio Dynamic Zones: Motivations, Challenges, and Opportunities to Catalyze Spectrum Coexistence. IEEE Commun. Mag. 2023, 61, 156–162. [Google Scholar] [CrossRef]

- Alkhayyat, A.; Abedi, F.; Bagwari, A.; Joshi, P.; Jawad, H.M.; Mahmood, S.N.; Yousif, Y.K. Fuzzy logic, genetic algorithms, and artificial neural networks applied to cognitive radio networks: A review. Int. J. Distrib. Sens. Netw. 2022, 18, 15501329221113508. [Google Scholar] [CrossRef]

- Hindia, M.N.; Qamar, F.; Ojukwu, H.; Dimyati, K.; Al-Samman, A.M.; Amiri, I.S. On platform to enable the cog-nitive radio over 5G networks. Wirel. Pers. Commun. 2020, 113, 1241–1262. [Google Scholar] [CrossRef]

- Bhattarai, S.; Park, J.-M.; Lehr, W. Dynamic Exclusion Zones for Protecting Primary Users in Database-Driven Spectrum Sharing. IEEE/ACM Trans. Netw. 2020, 28, 1506–1519. [Google Scholar] [CrossRef]

- Wei, M.; Li, X.; Xie, W.; Hu, C. Practical Performance Analysis of Interference in DSS System. Appl. Sci. 2023, 13, 1233. [Google Scholar] [CrossRef]

- Al-Dulaimi, O.M.K.; Al-Dulaimi, M.K.H.; Alexandra, M.O.; Al-Dulaimi, A.M.K. Performing strategic spectrum sensing study for the cognitive radio networks. In Proceedings of the 2022 International Conference on Communications, Information, Electronic and Energy Systems (CIEES), Veliko Tarnovo, Bulgaria, 24–26 November 2022; pp. 1–6. [Google Scholar]

- Perera, L.; Ranaweera, P.; Kusaladharma, S.; Wang, S.; Liyanage, M. A Survey on Blockchain for Dynamic Spectrum Sharing. IEEE Open J. Commun. Soc. 2024, 5, 1753–1802. [Google Scholar] [CrossRef]

- Barb, G.; Alexa, F.; Otesteanu, M. Dynamic Spectrum Sharing for Future LTE-NR Networks. Sensors 2021, 21, 4215. [Google Scholar] [CrossRef]

- Ivanov, A.; Tonchev, K.; Poulkov, V.; Manolova, A. Probabilistic Spectrum Sensing Based on Feature Detection for 6G Cognitive Radio: A Survey. IEEE Access 2021, 9, 116994–117026. [Google Scholar] [CrossRef]

- El Azaly, N.M.; Badran, E.F.; Kheirallah, H.N.; Farag, H.H. Performance analysis of centralized dynamic spectrum access via channel reservation mechanism in cognitive radio networks. Alex. Eng. J. 2021, 60, 1677–1688. [Google Scholar] [CrossRef]

- Kakkavas, G.; Tsitseklis, K.; Karyotis, V.; Papavassiliou, S. A Software Defined Radio Cross-Layer Resource Allocation Approach for Cognitive Radio Networks: From Theory to Practice. IEEE Trans. Cogn. Commun. Netw. 2020, 6, 740–755. [Google Scholar] [CrossRef]

- Babu, R.G.; Amudha, V.; Karthika, P. Architectures and Protocols for Next-Generation Cognitive Networking. Mach. Learn. Cogn. Comput. Mob. Commun. Wirel. Netw. 2020, 155–177. [Google Scholar] [CrossRef]

- Zuo, J.; Joe-Wong, C. Combinatorial multi-armed bandits for resource allocation. In Proceedings of the 2021 55th Annual Con-ference on Information Sciences and Systems (CISS), Baltimore, MD, USA, 24–26 March 2021; pp. 1–4. [Google Scholar]

- Kang, S.; Joo, C. Low-Complexity Learning for Dynamic Spectrum Access in Multi-User Multi-Channel Networks. IEEE Trans. Mob. Comput. 2020, 20, 3267–3281. [Google Scholar] [CrossRef]

- Moos, J.; Hansel, K.; Abdulsamad, H.; Stark, S.; Clever, D.; Peters, J. Robust Reinforcement Learning: A Review of Foundations and Recent Advances. Mach. Learn. Knowl. Extr. 2022, 4, 13. [Google Scholar] [CrossRef]

- Trabelsi, N.; Fourati, L.C.; Chen, C.S. Interference management in 5G and beyond networks: A comprehensive survey. Comput. Netw. 2023, 239, 110159. [Google Scholar] [CrossRef]

- Mughees, A.; Tahir, M.; Sheikh, M.A.; Ahad, A. Towards Energy Efficient 5G Networks Using Machine Learning: Taxonomy, Research Challenges, and Future Research Directions. IEEE Access 2020, 8, 187498–187522. [Google Scholar] [CrossRef]

- Alhammadi, A.; Shayea, I.; El-Saleh, A.A.; Azmi, M.H.; Ismail, Z.H.; Kouhalvandi, L.; Saad, S.A. Artificial intel-ligence in 6G wireless networks: Opportunities, applications, and challenges. Int. J. Intell. Syst. 2024, 2024, 8845070. [Google Scholar] [CrossRef]

- Li, F.; Nie, W.; Lam, K.-Y.; Wang, L. Network traffic prediction based on PSO-LightGBM-TM. Comput. Netw. 2024, 254, 110810. [Google Scholar] [CrossRef]

- Pisa, P.S.; Costa, B.; Gonçalves, J.A.; de Medeiros, D.S.V.; Mattos, D.M.F. A Private Strategy for Workload Forecasting on Large-Scale Wireless Networks. Information 2021, 12, 488. [Google Scholar] [CrossRef]

- Fourati, H.; Maaloul, R.; Chaari, L.; Jmaiel, M. Comprehensive survey on self-organizing cellular network approaches applied to 5G networks. Comput. Netw. 2021, 199, 108435. [Google Scholar] [CrossRef]

- Jiang, W.; Zhang, Y.; Han, H.; Huang, Z.; Li, Q.; Mu, J. Mobile traffic prediction in consumer applications: A mul-timodal deep learning approach. IEEE Trans. Consum. Electron. 2024, 70, 3425–3435. [Google Scholar] [CrossRef]

- Zhang, G.; Zhou, H.; Wang, C.; Xue, H.; Wang, J.; Wan, H. Forecasting time series albedo using NARnet based on EEMD decomposition. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3544–3557. [Google Scholar] [CrossRef]

- Sarma, S.S.; Hazra, R.; Mukherjee, A. Symbiosis Between D2D Communication and Industrial IoT for Industry 5.0 in 5G mm-Wave Cellular Network: An Interference Management Approach. IEEE Trans. Ind. Inform. 2021, 18, 5527–5536. [Google Scholar] [CrossRef]

- Albinsaid, H.; Singh, K.; Biswas, S.; Li, C.-P. Multi-Agent Reinforcement Learning-Based Distributed Dynamic Spectrum Access. IEEE Trans. Cogn. Commun. Netw. 2021, 8, 1174–1185. [Google Scholar] [CrossRef]

- Albinsaid, H.; Singh, K.; Biswas, S.; Li, C.-P.; Alouini, M.-S. Block Deep Neural Network-Based Signal Detector for Generalized Spatial Modulation. IEEE Commun. Lett. 2020, 24, 2775–2779. [Google Scholar] [CrossRef]

- Pan, L.; Rashid, T.; Peng, B.; Huang, L.; Whiteson, S. Regularized softmax deep multi-agent q-learning. Adv. Neural Inf. Process. Syst. 2021, 34, 1365–1377. [Google Scholar]

- Naous, T.; Itani, M.; Awad, M.; Sharafeddine, S. Reinforcement Learning in the Sky: A Survey on Enabling Intelligence in NTN-Based Communications. IEEE Access 2023, 11, 19941–19968. [Google Scholar] [CrossRef]

- Alabi, C.A.; Idakwo, M.A.; Imoize, A.L.; Adamu, T.; Sur, S.N. AI for spectrum intelligence and adaptive resource management. In Artificial Intelligence for Wireless Communication Systems; CRC Press: Boca Raton, FL, USA, 2024; pp. 57–83. [Google Scholar]

- Ma, J.; Ushiku, Y.; Sagara, M. The Effect of Improving Annotation Quality on Object Detection Datasets: A Preliminary Study. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 4849–4858. [Google Scholar]

- Ienca, M. Don’t pause giant AI for the wrong reasons. Nat. Mach. Intell. 2023, 5, 470–471. [Google Scholar] [CrossRef]

- Kocoń, J.; Cichecki, I.; Kaszyca, O.; Kochanek, M.; Szydło, D.; Baran, J.; Bielaniewicz, J.; Gruza, M.; Janz, A.; Kanclerz, K.; et al. ChatGPT: Jack of all trades, master of none. Inf. Fusion 2023, 99, 101861. [Google Scholar] [CrossRef]

- Ozpoyraz, B.; Dogukan, A.T.; Gevez, Y.; Altun, U.; Basar, E. Deep Learning-Aided 6G Wireless Networks: A Comprehensive Survey of Revolutionary PHY Architectures. IEEE Open J. Commun. Soc. 2022, 3, 1749–1809. [Google Scholar] [CrossRef]

- Chen, Z.; Xu, Y.-Q.; Wang, H.; Guo, D. Deep STFT-CNN for Spectrum Sensing in Cognitive Radio. IEEE Commun. Lett. 2020, 25, 864–868. [Google Scholar] [CrossRef]

- El-Shafai, W.; Fawzi, A.; Sedik, A.; Zekry, A.; El-Banby, G.M.; Khalaf, A.A.M.; El-Samie, F.E.A.; Abd-Elnaby, M. Convolutional neural network model for spectrum sensing in cognitive radio systems. Int. J. Commun. Syst. 2022, 35, e5072. [Google Scholar] [CrossRef]

- Cai, L.; Cao, K.; Wu, Y.; Zhou, Y. Spectrum Sensing Based on Spectrogram-Aware CNN for Cognitive Radio Network. IEEE Wirel. Commun. Lett. 2022, 11, 2135–2139. [Google Scholar] [CrossRef]

- Wang, Q.; Guo, B. CNN-SVM Spectrum Sensing in Cognitive Radio Based on Signal Covariance Matrix. J. Phys. Conf. Ser. 2022, 2395, 012052. [Google Scholar] [CrossRef]

- Duan, Y.; Huang, F.; Xu, L.; Gulliver, T.A. Intelligent spectrum sensing algorithm for cognitive internet of vehicles based on KPCA and improved CNN. Peer-to-Peer Netw. Appl. 2023, 16, 2202–2217. [Google Scholar] [CrossRef]

- Chae, K.; Kim, Y. DS2MA: A Deep Learning-Based Spectrum Sensing Scheme for a Multi-Antenna Receiver. IEEE Wirel. Commun. Lett. 2023, 12, 952–956. [Google Scholar] [CrossRef]

- Suriya, M.; Sumithra, M.G. Enhancing cooperative spectrum sensing in flying cell towers for disaster management using convolutional neural networks. In Proceedings of the EAI International Conference on Big Data Innovation for Sustainable Cognitive Computing: BDCC 2018, Coimbatore, India, 13–15 December 2018; pp. 181–190. [Google Scholar]

- Shafiq, M.; Gu, Z. Deep Residual Learning for Image Recognition: A Survey. Appl. Sci. 2022, 12, 8972. [Google Scholar] [CrossRef]

- Chandra, S.S.; Upadhye, A.; Saravanan, P.; Gurugopinath, S.; Muralishankar, R. Deep Neural Network Architectures for Spectrum Sensing Using Signal Processing Features. In Proceedings of the 2021 IEEE International Conference on Distributed Computing, VLSI, Electrical Circuits and Robotics (DISCOVER), Nitte, India, 19–20 November 2021; pp. 129–134. [Google Scholar]

- Ren, X.; Mosavat-Jahromi, H.; Cai, L.; Kidston, D. Spatio-Temporal Spectrum Load Prediction Using Convolutional Neural Network and ResNet. IEEE Trans. Cogn. Commun. Netw. 2021, 8, 502–513. [Google Scholar] [CrossRef]

- Gai, J.; Zhang, L.; Wei, Z. Spectrum Sensing Based on STFT-ImpResNet for Cognitive Radio. Electronics 2022, 11, 2437. [Google Scholar] [CrossRef]

- Zhen, P.; Zhang, B.; Chen, Z.; Guo, D.; Ma, W. Spectrum Sensing Method Based on Wavelet Transform and Residual Network. IEEE Wirel. Commun. Lett. 2022, 11, 2517–2521. [Google Scholar] [CrossRef]

- Xiao, J.; Zhou, Z. Research progress of RNN language model. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 27–29 June 2020; pp. 1285–1288. [Google Scholar]

- Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) Network. Phys. D Nonlinear Phenom. 2020, 404, 132306. [Google Scholar] [CrossRef]

- Roopa, V.; Pradhan, H.S. Deep Learning Based Intelligent Spectrum Sensing in Cognitive Radio Networks. IETE J. Res. 2024, 70, 8425–8445. [Google Scholar] [CrossRef]

- Bkassiny, M. A Deep Learning-based Signal Classification Approach for Spectrum Sensing using Long Short-Term Memory (LSTM) Networks. In Proceedings of the 2022 6th International Conference on Information Technology, Information Systems and Electrical Engineering (ICITISEE), Yogyakarta, Indonesia, 13–14 December 2022; pp. 667–672. [Google Scholar]

- Chen, W.; Wu, H.; Ren, S. CM-LSTM Based Spectrum Sensing. Sensors 2022, 22, 2286. [Google Scholar] [CrossRef]

- Wang, L.; Hu, J.; Jiang, R.; Chen, Z. A Deep Long-Term Joint Temporal–Spectral Network for Spectrum Prediction. Sensors 2024, 24, 1498. [Google Scholar] [CrossRef]

- Arunachalam, G.; SureshKumar, P. Optimized Deep Learning Model for Effective Spectrum Sensing in Dynamic SNR Scenario. Comput. Syst. Sci. Eng. 2023, 45, 1279–1294. [Google Scholar] [CrossRef]

- Al Daweri, M.S.; Abdullah, S.; Ariffin, K.A.Z. A Migration-Based Cuttlefish Algorithm With Short-Term Memory for Optimization Problems. IEEE Access 2020, 8, 70270–70292. [Google Scholar] [CrossRef]

- Zhang, Y.; Luo, Z. A Review of Research on Spectrum Sensing Based on Deep Learning. Electronics 2023, 12, 4514. [Google Scholar] [CrossRef]

- Nasser, A.; Chaitou, M.; Mansour, A.; Yao, K.C.; Charara, H. A Deep Neural Network Model for Hybrid Spectrum Sensing in Cognitive Radio. Wirel. Pers. Commun. 2021, 118, 281–299. [Google Scholar] [CrossRef]

- Wang, Y.; Xu, W.; Qin, Z.; Zhang, Y.; Gao, H.; Pan, M.; Lin, J. Deep Neural Network-Based Robust Spectrum Sensing: Exploiting Phase Difference Distribution. In Proceedings of the ICC 2021—IEEE International Conference on Communications, Montreal, QC, Canada, 4–23 June 2021; pp. 1–7. [Google Scholar]

- Zhang, X.; Ma, Y.; Liu, Y.; Wu, S.; Jiao, J.; Gao, Y.; Zhang, Q. Robust DNN-Based Recovery of Wideband Spectrum Signals. IEEE Wirel. Commun. Lett. 2023, 12, 1712–1715. [Google Scholar] [CrossRef]

- Zhao, R.; Ruan, Y.; Li, Y.; Li, T.; Zhang, R. CCD-GAN for Domain Adaptation in Time-Frequency Localization-Based Wideband Spectrum Sensing. IEEE Commun. Lett. 2023, 27, 2521–2525. [Google Scholar] [CrossRef]

- Li, X.; Hu, Z.; Shen, C.; Wu, H.; Zhao, Y. TFF_aDCNN: A Pre-Trained Base Model for Intelligent Wideband Spectrum Sensing. IEEE Trans. Veh. Technol. 2023, 72, 12912–12926. [Google Scholar] [CrossRef]

- Huang, C.; Zhou, H.; Zaïane, O.R.; Mou, L.; Li, L. Non-autoregressive Translation with Layer-Wise Prediction and Deep Supervision. Proc. AAAI Conf. Artif. Intell. 2022, 36, 10776–10784. [Google Scholar] [CrossRef]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- Rubenstein, P.K.; Asawaroengchai, C.; Nguyen, D.D.; Bapna, A.; Borsos, Z.; Quitry, F.D.C.; Chen, P.; Badawy, D.E.; Han, W.; Kharitonov, E.; et al. AudioPaLM: A large language model that can speak and listen. arXiv 2023, arXiv:2306.12925. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971. [Google Scholar]

- Szott, S.; Kosek-Szott, K.; Gawlowicz, P.; Gomez, J.T.; Bellalta, B.; Zubow, A.; Dressler, F. Wi-Fi Meets ML: A Survey on Improving IEEE 802.11 Performance With Machine Learning. IEEE Commun. Surv. Tutor. 2022, 24, 1843–1893. [Google Scholar] [CrossRef]

- Yang, B.; Cao, X.; Huang, C.; Guan, Y.L.; Yuen, C.; Di Renzo, M.; Niyato, D.; Debbah, M.; Hanzo, L. Spectrum-learning-aided reconfigurable intelligent surfaces for “green” 6G networks. IEEE Netw. 2022, 35, 20–26. [Google Scholar] [CrossRef]

- Bosso, C.; Sen, P.; Cantos-Roman, X.; Parisi, C.; Thawdar, N.; Jornet, J.M. Ultrabroadband Spread Spectrum Techniques for Secure Dynamic Spectrum Sharing Above 100 GHz Between Active and Passive Users. In Proceedings of the 2021 IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Los Angeles, CA, USA, 13–15 December 2021; pp. 45–52. [Google Scholar]

- Tekbıyık, K.; Akbunar, O.; Ekti, A.R.; Gorcin, A.; Kurt, G.K.; Qaraqe, K.A. Spectrum Sensing and Signal Identification With Deep Learning Based on Spectral Correlation Function. IEEE Trans. Veh. Technol. 2021, 70, 10514–10527. [Google Scholar] [CrossRef]

- Sharma, S.; Arjunan, T. Natural language processing for detecting anomalies and intrusions in unstructured cyber-security data. Int. J. Inf. Cybersecur. 2023, 7, 1–24. [Google Scholar]

- Ali, Z.; Tiberti, W.; Marotta, A.; Cassioli, D. Empowering Network Security: BERT Transformer Learning Approach and MLP for Intrusion Detection in Imbalanced Network Traffic. IEEE Access 2024, 12, 137618–137633. [Google Scholar] [CrossRef]

- Kuznetsov, O.; Frontoni, E.; Kryvinska, N.; Smirnov, O.; Imoize, A.L. Computational Modeling of Enhanced Spread Spectrum Codes for Asynchronous Wireless Communication. In Computational Modeling and Simulation of Advanced Wireless Communication Systems; CRC Press: Boca Raton, FL, USA, 2024; pp. 403–447. [Google Scholar]

- Ali, S.; Rehman, S.U.; Imran, A.; Adeem, G.; Iqbal, Z.; Kim, K.-I. Comparative Evaluation of AI-Based Techniques for Zero-Day Attacks Detection. Electronics 2022, 11, 3934. [Google Scholar] [CrossRef]

- Wu, Y.; Zou, B.; Cao, Y. Current Status and Challenges and Future Trends of Deep Learning-Based Intrusion Detection Models. J. Imaging 2024, 10, 254. [Google Scholar] [CrossRef]

- Drîngă, B.; Elhajj, M. Performance and Security Analysis of Privacy-Preserved IoT Applications. In Proceedings of the 2023 IEEE International Conference on Communication, Networks and Satellite (COMNETSAT), Malang, Indonesia, 23–25 November 2023; pp. 549–556. [Google Scholar]

- Stahl, B.C.; Antoniou, J.; Bhalla, N.; Brooks, L.; Jansen, P.; Lindqvist, B.; Kirichenko, A.; Marchal, S.; Rodrigues, R.; Santiago, N.; et al. A systematic review of artificial intelligence impact assessments. Artif. Intell. Rev. 2023, 56, 12799–12831. [Google Scholar] [CrossRef]

- Shafin, R.; Liu, L.; Chandrasekhar, V.; Chen, H.; Reed, J.; Zhang, J.C. Artificial Intelligence-Enabled Cellular Networks: A Critical Path to Beyond-5G and 6G. IEEE Wirel. Commun. 2020, 27, 212–217. [Google Scholar] [CrossRef]

- Moustafa, N. A new distributed architecture for evaluating AI-based security systems at the edge: Network TON_IoT datasets. Sustain. Cities Soc. 2021, 72, 102994. [Google Scholar] [CrossRef]

- Olabanji, S.O.; Marquis, Y.A.; Adigwe, C.S.; Ajayi, S.A.; Oladoyinbo, T.O.; Olaniyi, O.O. AI-Driven Cloud Security: Examining the Impact of User Behavior Analysis on Threat Detection. Asian J. Res. Comput. Sci. 2024, 17, 57–74. [Google Scholar] [CrossRef]

- Khan, M.J.; Khan, M.A.; Beg, A.; Malik, S.; El-Sayed, H. An overview of the 3GPP identified Use Cases for V2X Services. Procedia Comput. Sci. 2022, 198, 750–756. [Google Scholar] [CrossRef]

- Walia, J.S.; Hämmäinen, H.; Kilkki, K.; Flinck, H.; Yrjölä, S.; Matinmikko-Blue, M. A Virtualization Infrastructure Cost Model for 5G Network Slice Provisioning in a Smart Factory. J. Sens. Actuator Netw. 2021, 10, 51. [Google Scholar] [CrossRef]

- Liu, J.; Shu, L.; Lu, X.; Liu, Y. Survey of Intelligent Agricultural IoT Based on 5G. Electronics 2023, 12, 2336. [Google Scholar] [CrossRef]

- Zhou, F.; Yu, P.; Feng, L.; Qiu, X.; Wang, Z.; Meng, L.; Kadoch, M.; Gong, L.; Yao, X. Automatic Network Slicing for IoT in Smart City. IEEE Wirel. Commun. 2020, 27, 108–115. [Google Scholar] [CrossRef]

- Çiloğlu, B.; Koç, G.B.; Ozturk, M.; Yanikomeroglu, H. Cell Switching in HAPS-Aided Networking: How the Obscurity of Traffic Loads Affects the Decision. IEEE Trans. Veh. Technol. 2024, 73, 17782–17787. [Google Scholar] [CrossRef]

- Shamsabadi, A.A.; Yadav, A.; Yanikomeroglu, H. Enhancing Next-Generation Urban Connectivity: Is the Integrated HAPS-Terrestrial Network a Solution? IEEE Commun. Lett. 2024, 28, 1112–1116. [Google Scholar] [CrossRef]

- Datasets. IEEE Dataport. Available online: https://ieee-dataport.org/datasets (accessed on 20 February 2025).

- Zarif, A.H. AoI Minimization in Energy Harvesting and Spectrum Sharing Enabled 6G Networks. IEEE Trans. Green Commun. Netw. 2022, 6, 2043–2054. [Google Scholar] [CrossRef]

- Tekbiyik, K.; Akbunar, Ö.; Ekti, A.R.; Görçin, A.; Kurt, G.K. COSINE: Cellular Communication SIgNal Dataset. IEEE Dataport, 20 January 2020. Available online: https://ieee-dataport.org/open-access/cosine-cellular-communication-signal-dataset (accessed on 22 May 2025).

- KU Leuven LTE Dataset. Available online: https://www.esat.kuleuven.be/wavecorearenberg/research/NetworkedSystems/projects/massive-mimo (accessed on 26 May 2025).

- Everett, E.; Shepard, C.; Zhong, L.; Sabharwal, A. SoftNull: Many-Antenna Full-Duplex Wireless via Digital Beamforming. IEEE Trans. Wirel. Commun. 2016, 15, 8077–8092. [Google Scholar] [CrossRef]

- El-Hajj, M. Enhancing Communication Networks in the New Era with Artificial Intelligence: Techniques, Applications, and Future Directions. Network 2025, 5, 1. [Google Scholar] [CrossRef]

- Alabi, C.A.; Imoize, A.L.; Giwa, M.A.; Faruk, N.; Tersoo, S.T.; Ehime, A.E. Artificial Intelligence in Spectrum Management: Policy and Regulatory Considerations. In Proceedings of the 2023 2nd International Conference on Multidisciplinary Engineering and Applied Science (ICMEAS), Abuja, Nigeria, 1–3 November 2023; pp. 1–6. [Google Scholar]

- Gupta, A.; Kausar, R.; Tanwar, S.; Alabdulatif, A.; Vimal, V.; Aluvala, S. Efficient spectrum sharing in 5G and beyond network: A survey. Telecommun. Syst. 2025, 88, 1–37. [Google Scholar] [CrossRef]

- Panda, S.B.; Swain, P.K.; Imoize, A.L.; Tripathy, S.S.; Lee, C. A Robust Spectrum Allocation Framework Towards Inference Management in Multichannel Cognitive Radio Networks. Int. J. Commun. Syst. 2025, 38, e6057. [Google Scholar] [CrossRef]

- Alozie, E.; Faruk, N.; Oloyede, A.; Sowande, O.; Imoize, A.; Abdulkarim, A. Intelligent process of spectrum handoff in cognitive radio network. SLU J. Sci. Technol. 2022, 4, 205. [Google Scholar] [CrossRef]

- Behera, J.R.; Imoize, A.L.; Singh, S.S.; Tripathy, S.S.; Bebortta, S. Optimizing Priority Queuing Systems with Server Reservation and Temporal Blocking for Cognitive Radio Networks. Telecom 2024, 5, 21. [Google Scholar] [CrossRef]

- Bovenzi, G.; Cerasuolo, F.; Ciuonzo, D.; Di Monda, D.; Guarino, I.; Montieri, A.; Persico, V.; Pescapé, A. Mapping the Landscape of Generative AI in Network Monitoring and Management. IEEE Trans. Netw. Serv. Manag. 2025, 22, 2441–2472. [Google Scholar] [CrossRef]

| Reference | Methodology | Findings | Limitations | Future Scope |

|---|---|---|---|---|

| [7] | Proposed the lightweight policy enforcement and evaluation system (LPEES), a framework that confines the border gateway protocol (BGP) to the control plane, enabling SDN-based data plane packet switching. Implemented a trust-based routing policy and ensured conflict-free flow rules. | Achieved 27 Gbps throughput, a 22.7% improvement over traditional BGP’s 22 Gbps, and reduced communication delay by an average of 17%. In a 32-domain system, LPEES’s control plane converged in about 52 s, whereas the traditional approach required 120 s (a 56% improvement), and conflicts in flow rules were detected within 9 milliseconds for 1400 rules. | Potential challenges include ensuring compatibility with existing infrastructures, managing policy enforcement complexity across domains, and maintaining security and privacy in inter-domain communications. | Plans to enhance LPEES by incorporating formal models for traffic engineering and conducting further evaluations to assess how various communication parameters affect trust between domains. |

| [9] | Strategic demand planning using GenAI for demand labeling, shaping, and rescheduling in WNs | GenAI enhances spectrum and energy efficiency by compressing and converting content, optimizing user association, load balancing, and interference management. | Implementation feasibility, AI hardware requirements, and real-time adaptability. | Expanding GenAI applications in WNs, optimizing AI-based demand-shaping for large-scale deployments. |

| [10] | Survey on wireless communication technologies for IoT in 5G, covering vision, applications, and challenges. | IoT in 5G enables massive connectivity with low latency, supporting applications like smart cities and intelligent transportation. Technologies like SigFox, Long Range (LoRa), Wireless Fidelity (Wi-Fi), and Long Range Wide Area Network (LoRaWAN) offer extensive coverage and low energy consumption. | Security vulnerabilities in IoT devices, gateways, edge, and cloud servers; high energy consumption. | Research on security-aware IoT frameworks, energy-efficient communication, and cloud-edge hybrid solutions for optimized RA. |

| [11] | Case study on U.S. unilateral 5G spectrum planning and its impact on global SA. | The U.S. Federal Communications Commission (FCC) has aggressively auctioned 5G spectrum without International Telecommunication Union (ITU) consensus, prioritizing national interests over global coordination. This strategy provides short-term benefits but risks long-term industry challenges. | Potential ITU deadlock on spectrum planning, trade disputes, retaliation from other nations, and compatibility issues for wireless devices. | A balanced approach between national spectrum policies and international coordination is needed to prevent market fragmentation and ensure global interoperability. |

| [12] | Surveyed existing dynamic spectrum sharing (DSS) approaches, proposed an in-band full-duplex (IBFD) CBRS network architecture, and designed joint beamformers for interference mitigation; numerical analysis of IBFD vs. half-duplex (HD) CBRS systems. | IBFD-assisted CBRS improves the performance of priority access license (PAL) and general authorized access (GAA) users while reducing interference toward radar systems compared to half-duplex solutions. The proposed two-step beamformer design enhances detection probability while maintaining QoS constraints. | Trade-offs between radar/mobile broadband network (MBN) transmit power, antenna count, SI cancellation, detection probability, and QoS requirements; complexity of IBFD implementation and real-world integration with DSS. | Further research on IBFD implementation in DSS, optimization of beamforming techniques, and addressing interference management for large-scale networks. |

| [13] | Surveyed DRL applications in spectrum sensing for CRNs, proposing a theoretical model for cooperative spectrum sensing using DRL to enhance detection. | DRL can effectively address noise uncertainty and reduce reliance on prior knowledge of PUs, potentially overcoming limitations of traditional spectrum sensing methods. | Noted challenges include the complexity of DRL models, the need for extensive training data, and the difficulty in real-time implementation within dynamic radio environments. | Encouraged future research to focus on developing efficient DRL algorithms tailored for spectrum sensing, improving model training techniques, and exploring real-world deployments in CRNs. |

| [14] | Formulated decentralized medium access as a partially observable Markov decision process; developed a distributed DRL algorithm with recurrent Q-learning for base stations. | The proposed framework achieves proportional fairness in throughput, matching the performance of adaptive energy detection (ED) thresholds, and is robust to channel fading. | Individual deep Q-networks (DQNs) are deployed at each base station without parameter sharing; scalability and convergence in large networks remain to be addressed. | Suggested extending the framework to other decision-making problems like rate control, beam selection, and coordinated scheduling in WNs. |

| [15] | Utilized stochastic modeling to analyze load balancing and traffic distribution in vehicular ad hoc networks (VANETs). Applied queueing theory and probabilistic models for network performance assessment. | Stochastic models improve load balance, reduce congestion, and enhance QoS in VANETs. Found that mobility-aware stochastic techniques optimize network RA. | High computational complexity in real-time implementations. Limited evaluation in heterogeneous VANET environments. | Enhancing stochastic models for real-time applications and integrating AI-driven mobility prediction for better load balancing and congestion control. |

| [16] | Deep multi-user RL using deep neural networks (DNN), Q-learning, and cooperative multi-agent systems for CR-based IoT. | Enhanced spectrum utilization, improved user satisfaction, and reduced network interference. | Preliminary implementation lacks security considerations and scalability to larger networks. | Integration with 6G CRNs, blockchain for security, fog computing for IoT, and quantum computing for large-scale data processing. |

| [17] | Multicast source-based tree (MSBT) for point-to-multipoint transmissions; cloud scheduling with AI and big data. | Improved efficiency in large data transfers; concurrent data reception enhances scheduling performance. | Scalability and adaptability across different data center environments have not been fully explored. | Extend to diverse applications, optimize real-world deployment, and enhance communication protocols. |

| [18] | Two-phase authentication for attack detection (TPAAD) using SVM and KNN on CICDoS 2017 dataset in SDN environment. | Improved DoS attack detection with reduced false positives, lower CPU utilization, optimized control channel BW, and better packet delivery ratio. | Tested only in a simulated SDN environment, not validated on real networks. | Implement and evaluate in a real-world SDN environment. |

| [19] | BlockSD-5GNet: blockchain-SDN-network functions virtualization (NFV)-based 5G security framework with ML-based BW prediction (using random forest regressor (RFR)) in an IoT scenario. | Improved BW estimation, enhanced security against threats, better RA, and robustness against failures. | Limited scalability due to fewer nodes, reliance on traditional ML models, lack of real-world implementation, and blockchain overhead. | Implement real-world deployment, integrate advanced ML/DL models, enhance blockchain consensus mechanisms, and optimize energy consumption for IoT devices. |

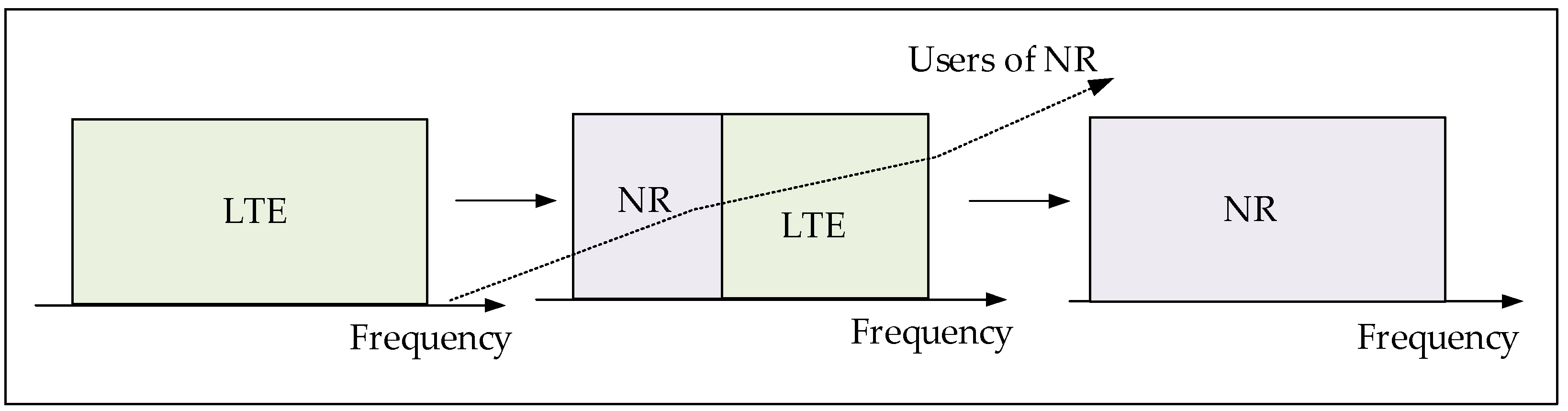

| [20] | Literature review and comparative analysis of DSS for LTE-NR coexistence. | Identifies key DSS challenges, deployment options, and the 3rd Generation Partnership Project (3GPP) standardization efforts; discusses interference, mixed numerology, and backward compatibility issues. | System complexity, regulatory challenges, interference, and increased power consumption. | Enhancing DSS flexibility, optimizing RA, improving scheduling, reducing control overhead, and addressing cell-specific reference signal (CRS) interference. |

| [21] | Proposed an SDN-based cell selection scheme using linear programming (LP) and multi-attribute decision-making for optimized handover management in 5G/beyond-5G (B5G) heterogeneous networks (HetNets). | Reduced handovers by 39%, minimized ping-pong effect, improved system throughput, and enhanced load balancing. | Focused only on LP optimization, lacking predictive analytics; real-world deployment challenges not addressed. | Plans to integrate ML with LP for better mobility management, improved throughput, and reduced latency. |

| [22] | Proposed a DL-based RA framework for 5G television (TV) broadcasting using long short-term memory (LSTM) for demand prediction and DRL with convex optimization for BW and PA. | Improved energy efficiency while maintaining QoS, accurate multicast service demand prediction, and optimized power and BW allocation. | Limited by the complexity of DL models and the need for improved demand prediction accuracy. | Plans to enhance the DL model with more layers and explore 5G techniques like millimeter-wave (mmWave) beamforming and SDN/NFV for better TV service delivery. |

| [23] | Survey of ML techniques in unmanned aerial vehicle (UAV) operations and communications, categorizing ML applications into feature extraction, environment modeling, planning, and control. Analyzed existing research and identified gaps. | ML enhances UAV automation by improving feature extraction, prediction, planning, and control. CNNs are widely used for image processing, while DRL is effective for UAV control and scheduling. Integration of ML models is increasing. | Lack of end-to-end ML-based UAV solutions. Security, reliability, and trustworthiness of ML applications remain unresolved challenges. | Future research should focus on developing an end-to-end ML framework for UAV operations and improving security, reliability, and trust for UAV automation. |

| [24] | Analysis of SM in 5G and its evolution towards 6G, focusing on regulatory frameworks and SS models. | 6G will require complex SM due to diverse frequency bands and increased SS. Localized and unlicensed spectrum use will grow alongside traditional mobile networks. | Current regulatory frameworks may not keep up with rapid technological advancements, posing challenges for dynamic SA. | Research should explore adaptive SM models for 6G, integrating dynamic sharing mechanisms and flexible regulatory approaches. |

| [26] | Comprehensive survey on DL-based physical layer security (PLS) techniques for 5G and beyond networks, analyzing threats, DL applications, and performance metrics. | DL enhances PLS by detecting attacks, improving physical layer authentication (PLA), secure beamforming, and automatic modulation classification (AMC). DL-based models can predict evolving security threats. | Existing DL-based PLS techniques need improvements in real-time adaptability, robustness to adversarial attacks, and computational efficiency. | Future research should focus on integrating DL with advanced wireless technologies, such as non-orthogonal multiple access (NOMA), massive multiple-input multiple-output (MIMO), mmWave, and quantum communications, for enhanced security. |

| [27] | Developed a multi-tier DRL framework for NTNs, optimizing SA, trajectory planning, and user association across space, air, and ground tiers. | Demonstrated improved overall throughput, reduced signaling overhead, and enhanced handover performance in NTNs through adaptive DRL configurations. | High computational complexity, challenges in orchestration across different tiers, and a lack of a unified optimization approach. | Integrating advanced learning techniques like split learning for distributed computing and adversarial learning for enhanced model training in dynamic NTN environments. |

| [28] | Explores SS and interference management in low Earth orbit (LEO) satellite-TNs using cognitive SS, exclusion zones, beam-power optimization, reconfigurable intelligent surfaces (RIS), and AI-driven techniques. | Enhances spectrum efficiency, interference control, and adaptability through various mitigation strategies. | High computational complexity, regulatory issues, real-time processing constraints, and RIS/AI implementation challenges. | Integrating large language models (LLMs) with DRL for adaptive SM, orthogonal time frequency space (OTFS) for link stability, AI for interference detection, and RIS for optimized signal control. |

| [29] | Reviews SS in aerial/space-ground networks, analyzing spectrum utilization rules, sharing modes (interweave, underlay, overlay), and key technologies like cooperative sensing and beam management. | Identifies challenges such as spectrum instability, beam misalignment, and interference in aerial/space networks, emphasizing the need for efficient SA. | High mobility leads to dynamic spectrum conditions, beam misalignment reduces communication quality, and cooperative sensing integration remains complex. | Develop fast spectrum sensing methods, energy-efficient algorithms, and advanced beam management techniques to improve SS in integrated networks. |

| [30] | Investigates AI/ML-driven approaches for integrating TN and NTN in 6G, focusing on adaptive RA, intelligent routing, autonomous network operation, and SM. | AI/ML enhances NTN performance by optimizing resource utilization, improving connectivity, and enabling autonomous decision-making for efficient SA. | Cross-layer optimization, efficient handover management, and regulatory/standardization challenges in global 6G-NTN deployment. | Future research includes ML-based link-level enhancements, mmWave for higher data rates, ultra-low latency IoT applications, and regulatory frameworks for standardized NTN integration. |

| [31] | Introduces a multi-agent reinforcement learning (MARL)-based SS scheme for cognitive networks (CNs) to enhance decision-making and spectrum efficiency. Applies explainable DRL to improve convergence efficiency. | MARL optimizes spectrum utilization with self-decision-making in dynamic environments, reducing latency and improving efficiency. Explainable DRL enhances transparency in decision-making. | Key challenges include multi-objective function formulation, multi-dimensional action space, partial channel state information, and communication overhead in MARL-based SS. | Future work should focus on macro- and micro-strategic planning for heterogeneous networks, multi-agent transmission protocol design, and enhancing DRL explainability for real-world SS applications. |

| [32] | Proposes a cooperative multichannel SS framework for satellite-TN using a dynamic and collaborative SS (DCSS) algorithm. Utilizes multi-agent deep reinforcement learning (MADRL) with double deep Q-network (DDQN) for multichannel assignment and deep deterministic policy gradient (DDPG) for PA under a centralized training and decentralized execution (CTDE) framework. | DCSS enhances spectrum efficiency by optimizing multichannel allocation and power distribution. Simulation results confirm its superiority over existing approaches. | Co-channel interference from inter-system, inter-cell, and intra-cell interactions; real-time global information acquisition; decentralized decision-making in dynamic spectrum environments. | Further refinement of decentralized partially observable Markov decision process (Dec-POMDP) frameworks to improve the adaptability of learning models and enhance spectrum efficiency in large-scale networks. |

| [33] | Hierarchical cognitive spectrum sharing architecture (HCSSA) framework with policy iteration–based beamforming (PIBF) and low-complexity beamforming schemes for SS in space-air-ground integrated networks (SAGINs). | Enhances spectrum efficiency by prioritizing aerial networks over terrestrial networks, improving overall network performance. | Non-convex optimization, channel estimation errors, and interference management. | Refining robust beamforming strategies and optimizing performance under dynamic CSI conditions. |

| Current Paper | Review of papers (2020–2025) from major databases, categorized by AI type, task, and use case; compared techniques on performance and feasibility, and identified trends and research gaps. | RL (e.g., DQN), CNNs, and LSTMs are widely used. AI improves spectrum efficiency and adaptability, supports real-time, cross-layer decisions, and shows promising results in Edge AI. | Lack of model transparency, limited training data, high resource demands, poor scalability and generalization, and few standard benchmarks. | Develop explainable AI (XAI), use federated and edge learning, apply transfer/meta-learning, explore neuromorphic models, and create open datasets and standards. |

| Classification | Architecture | Spectrum Sensing Behavior | Spectrum Access Method | Advantages | Challenges |

|---|---|---|---|---|---|

| Non-Cooperative DSA | Decentralized | Devices individually sense the spectrum and make independent decisions. | Spectrum access is based solely on local sensing results. No information sharing occurs. | Reduced communication overhead; faster decision-making. | Higher risk of false detection; susceptible to interference and spectrum sensing errors. |

| Cooperative DSA | Distributed or centralized | Devices collaborate by sharing sensing data to improve accuracy. | Spectrum access decisions are made collectively, using data fusion (raw data sharing) or decision fusion (final decision sharing). | Enhanced detection accuracy, fewer false alarms, and more reliable spectrum sensing. | Increased complexity, need for a dedicated control channel, and synchronization issues. |

| Interweave DSA | Decentralized or hybrid | Detects and utilizes spectrum holes when PUs are inactive. | Opportunistic access: SUs can transmit only when a spectrum hole is detected, and transmission stops when the PUs return. | Efficient spectrum utilization; no interference with PUs. | Requires precise sensing; spectrum holes may be scarce, limiting transmission opportunities. |

| Overlay DSA | Decentralized or centralized | Knowledge of PU signals is required to minimize interference. | Allows simultaneous transmissions by employing interference cancellation or relaying PUs’ messages. | Supports concurrent transmission; more efficient than interweave DSA. | High computational complexity; requires knowledge of PU signals. |

| Underlay DSA | Decentralized or hybrid | No explicit spectrum hole detection; operates under strict interference constraints. | SUs transmit simultaneously with PUs but must control power levels to avoid exceeding interference thresholds. | Continuous transmission without waiting for spectrum holes; better spectrum efficiency. | Transmission power limitations; requires advanced interference control mechanisms. |
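The access rules in the table above can be made concrete in a few lines of code. The sketch below is illustrative only: the function names, the dBm thresholds, and the simple dB-domain path-loss interference model are assumptions, not taken from any cited work. It covers interweave access (transmit only into a detected hole), cooperative decision fusion with a k-out-of-n rule, and underlay power control; overlay access is omitted because it requires knowledge of the PU's signal or message.

```python
def interweave_access(pu_energy_dbm, threshold_dbm):
    """Interweave DSA: the SU may transmit only when the sensed PU energy is
    below the detection threshold, i.e., a spectrum hole is declared."""
    return pu_energy_dbm < threshold_dbm


def cooperative_decision(local_detections, k):
    """Cooperative DSA with decision fusion: each SU reports True if it detected
    the PU. The fusion center declares the PU present if at least k of the n
    reports say so (k = 1 is the OR rule, k = n is the AND rule)."""
    return sum(local_detections) >= k


def underlay_max_power_dbm(interference_limit_dbm, path_loss_db, p_max_dbm):
    """Underlay DSA: interference at the PU receiver is P_tx - path_loss (dB
    domain), so the SU must keep P_tx <= limit + path_loss, capped by its own
    hardware maximum."""
    return min(p_max_dbm, interference_limit_dbm + path_loss_db)


if __name__ == "__main__":
    print(interweave_access(-100.0, -95.0))                  # hole detected -> True
    print(cooperative_decision([True, False, False], k=2))   # 1 of 3 detections -> False
    print(underlay_max_power_dbm(-110.0, 120.0, 23.0))       # capped at 10.0 dBm
```

Raising k makes the fusion center report the PU less often, which lowers false alarms at the cost of more missed detections (and hence more interference risk); this is the accuracy and reliability trade-off listed in the Cooperative DSA row.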

| Service Type | Frequency Range |

|---|---|

| E-GSM-900 (Mobile Communication) | 880–915 MHz, 925–960 MHz |

| DCS (Mobile Communication) | 1710–1785 MHz, 1805–1880 MHz |

| FM Radio (Broadcasting) | 88–108 MHz |

| Standard C Band (Satellite Communication) | 5.850–6.425 GHz, 3.625–4.200 GHz |

| Non-Directional Radio Beacon (Navigation) | 190–1535 kHz |
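A basic step in database-assisted DSA is checking which incumbent allocation covers a candidate frequency. The sketch below transcribes the ranges from the table above (paired bands stored as two entries) and answers that query. The helper names are hypothetical, and a real system would consult the full national allocation table rather than these five services.

```python
# Allocations transcribed from the table above: (service, low_hz, high_hz).
# Paired (uplink/downlink) bands are stored as two separate entries.
ALLOCATIONS = [
    ("E-GSM-900", 880e6, 915e6),
    ("E-GSM-900", 925e6, 960e6),
    ("DCS", 1710e6, 1785e6),
    ("DCS", 1805e6, 1880e6),
    ("FM Radio", 88e6, 108e6),
    ("Standard C Band", 5.850e9, 6.425e9),
    ("Standard C Band", 3.625e9, 4.200e9),
    ("Non-Directional Radio Beacon", 190e3, 1535e3),
]


def services_at(freq_hz):
    """Services whose listed range contains freq_hz (band edges inclusive)."""
    return sorted({name for name, low, high in ALLOCATIONS if low <= freq_hz <= high})


def total_bandwidth_mhz(service):
    """Total listed bandwidth of a service in MHz, summed over its sub-bands."""
    return sum(high - low for name, low, high in ALLOCATIONS if name == service) / 1e6


if __name__ == "__main__":
    print(services_at(900e6))               # inside the E-GSM-900 uplink band
    print(services_at(920e6))               # duplex gap between uplink and downlink
    print(total_bandwidth_mhz("E-GSM-900"))
```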

| ML Techniques | Learning Type | Key Features | Advantages | Limitations | Future Directions |

|---|---|---|---|---|---|

| Support Vector Machines (SVM) | Supervised learning | Classify data by finding optimal hyperplanes | High accuracy for classification; adequate with small datasets | Computationally expensive with large datasets; sensitive to noise | Integration with DL for feature extraction |

| K-Means Clustering | Unsupervised learning | Group data points based on similarity | Scalable and straightforward; efficient for large datasets | Requires a predefined number of clusters; sensitive to initialization | Adaptive clustering for real-time SM |

| Gradient Follower (GF) | Supervised learning | Optimize decisions using gradient-based methods | Fast convergence; widely used in optimization problems | May get stuck in local minima; requires careful parameter tuning | Hybrid approaches with metaheuristic optimization |

| Artificial Neural Networks (ANNs) | Supervised learning | Mimics human brain structure for pattern recognition | Highly effective in modeling complex, nonlinear relationships | Requires large datasets; computationally intensive | Application in intelligent spectrum sensing |

| Long Short-Term Memory (LSTM) | Supervised learning | A type of recurrent neural network (RNN) designed for sequential data | Addresses long-term dependencies; effective for time-series forecasting | High computational complexity; requires significant training data | Lightweight LSTM models for edge devices |

| Nonlinear Autoregressive Neural Network (NARNET) | Supervised learning | Forecasts time-series data using past values | Excellent for nonlinear time-series prediction; high accuracy | Limited to autoregressive modeling; requires careful tuning | Integration with hybrid statistical-ML approaches |

| Reinforcement Learning (RL) | Reinforcement learning | Learns optimal decisions through rewards and penalties | Adaptable in dynamic environments; effective for RA | High training time; may struggle with exploration–exploitation balance | Federated RL for decentralized SM |
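The K-Means row above describes grouping measurements by similarity without labels. A minimal sketch of that idea, assuming scalar per-channel energy readings in dBm and k = 2 (idle vs. occupied), is plain Lloyd's k-means in one dimension; the readings and function names are hypothetical and chosen only for illustration.

```python
import random


def _assign(values, centroids):
    """Assignment step: index of the nearest centroid for every value."""
    return [min(range(len(centroids)), key=lambda c: abs(v - centroids[c])) for v in values]


def kmeans_1d(values, k=2, iters=100, seed=0):
    """Lloyd's k-means on scalar measurements.

    Returns (centroids sorted ascending, labels), where label 0 is the cluster
    with the lowest centroid, so with k=2 label 0 = idle and 1 = occupied.
    """
    rng = random.Random(seed)
    centroids = rng.sample(values, k)  # random init: k-means is sensitive to this
    for _ in range(iters):
        labels = _assign(values, centroids)
        new_centroids = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            # Update step: move each centroid to the mean of its members
            # (keep the old centroid if its cluster became empty).
            new_centroids.append(sum(members) / len(members) if members else centroids[c])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    labels = _assign(values, centroids)
    order = sorted(range(k), key=lambda c: centroids[c])
    rank = {c: i for i, c in enumerate(order)}
    return [centroids[c] for c in order], [rank[lab] for lab in labels]


if __name__ == "__main__":
    # Hypothetical per-channel energy readings in dBm (noise floor near -100 dBm).
    energies = [-101.2, -99.8, -62.5, -100.5, -58.9, -61.0, -98.7, -60.2]
    centroids, labels = kmeans_1d(energies)
    print(centroids)
    print(labels)  # 0 = idle, 1 = occupied
```

Because k must be fixed in advance, this sketch also shows the "predefined number of clusters" limitation from the table: with more than two occupancy levels, k = 2 would merge them.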

| Technique | Type | Key Components | Strengths | Limitations | Future Directions |

|---|---|---|---|---|---|

| Q-Learning (QL) | Model-free RL | QT, state–action pairs | Simple, effective for small state–action spaces | Computationally infeasible for large state/action spaces | Integrating function approximation (e.g., NNs) to handle large state–action spaces |

| Deep Q-Network (DQN) | Value-based Deep RL | NN, experience replay | Handles large state spaces; experience replay reduces training instability | Struggles with continuous action spaces, overestimates QVs | Exploring distributional RL and improved target update mechanisms to mitigate overestimation |

| Deep Deterministic Policy Gradient (DDPG) | Actor–Critic RL | Actor network, critic network, experience replay | Handles continuous action spaces, learns deterministic policies | Sensitive to hyperparameters, suffers from QV overestimation | Enhancing exploration strategies using entropy regularization and hybrid models |

| Twin Delayed Deep Deterministic Policy Gradient (TD3) | Improved Actor–Critic RL | Dual critic networks, delayed policy updates, clipped Gaussian noise | More stable learning; reduces overestimation and overfitting | Higher computational cost, slower training due to delayed updates | Reducing computational complexity through lightweight architectures and efficient parallelization |

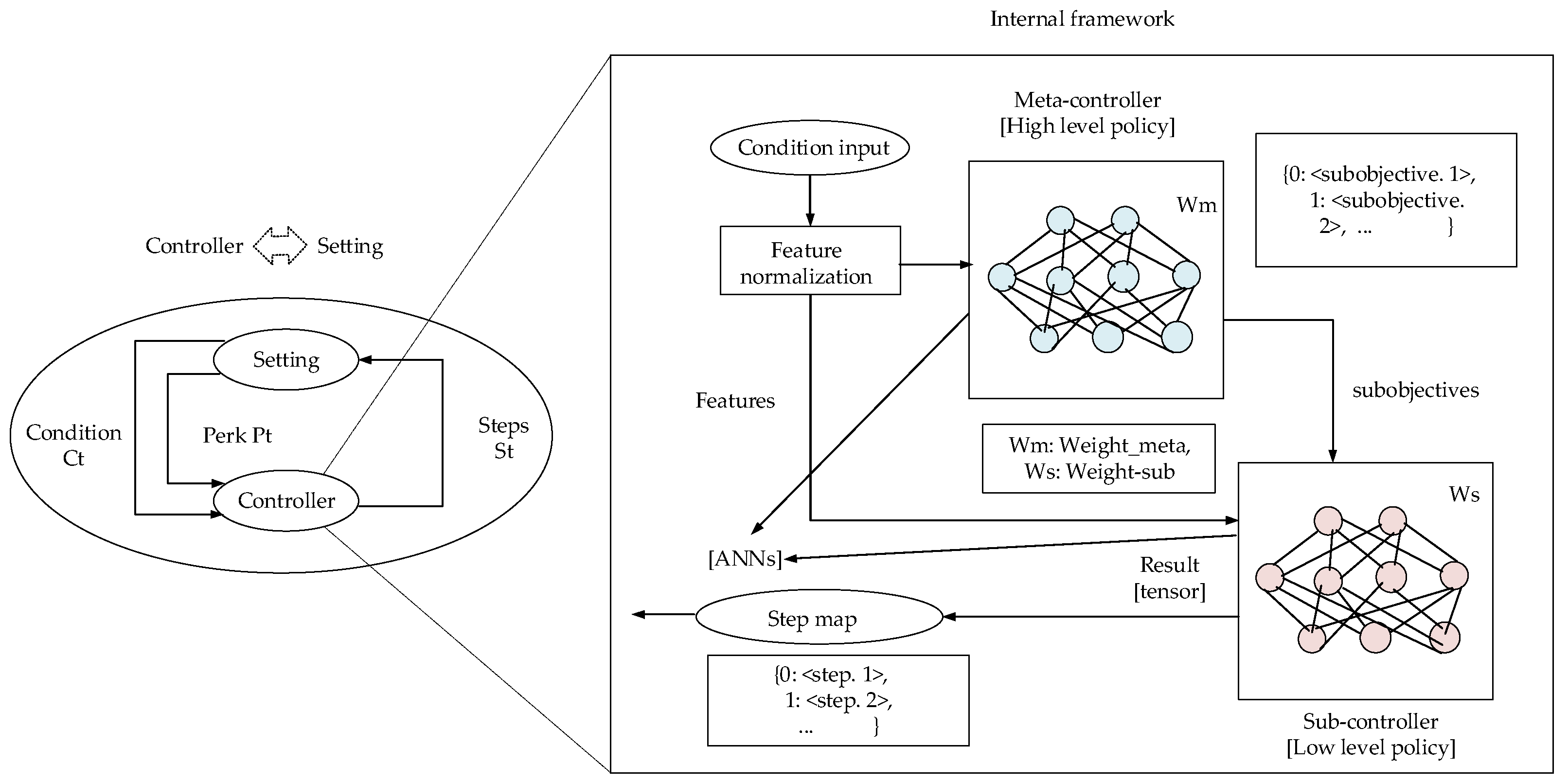

| HDRL (Hierarchical Deep Reinforcement Learning) | Hierarchical | Uses multiple hierarchical policies for decision-making | Scalable, efficient in complex environments | Requires careful reward design, increased training complexity | Extend the multi-level hierarchy, apply it to large-scale multi-agent systems |

| DRL (Deep Reinforcement Learning) | Hybrid (Value and Policy-based) | Combines DL with RL for feature extraction and decision-making | Learning directly from high-dimensional inputs, eliminating manual feature engineering | Long training times; requires large datasets | Improve sample efficiency, enhance interpretability, and integrate with neuromorphic computing |
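The Q-Learning row above lists a Q-table over state–action pairs. The sketch below is a toy tabular Q-learning agent for secondary-user channel selection. The environment is an assumption made for illustration: the state is the channel used in the previous slot, the action is the channel to use next, and the reward is +1 if the PU is idle on that channel and -1 on a collision, with PU occupancy drawn from hypothetical per-channel probabilities that the agent does not know.

```python
import random


def q_learning_channel_selection(busy_prob, steps=20000, alpha=0.05, gamma=0.5,
                                 epsilon=0.2, seed=1):
    """Tabular Q-learning for secondary-user channel selection (toy model).

    State  s: channel the SU used in the previous slot.
    Action a: channel to use in the current slot.
    Reward  : +1 if the PU is idle on channel a, -1 on a collision.
    busy_prob[i] is the (hidden) probability that the PU occupies channel i.
    """
    rng = random.Random(seed)
    n = len(busy_prob)
    q = [[0.0] * n for _ in range(n)]  # Q-table indexed as q[state][action]
    state = 0
    for _ in range(steps):
        # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
        if rng.random() < epsilon:
            action = rng.randrange(n)
        else:
            action = max(range(n), key=lambda a: q[state][a])
        reward = -1.0 if rng.random() < busy_prob[action] else 1.0
        next_state = action
        # Q-learning update: Q(s,a) <- Q(s,a) + alpha * [r + gamma * max_a' Q(s',a') - Q(s,a)]
        td_target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (td_target - q[state][action])
        state = next_state
    return q


def greedy_policy(q):
    """Best channel for each state according to the learned Q-table."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]


if __name__ == "__main__":
    q = q_learning_channel_selection([0.9, 0.1, 0.8])
    print(greedy_policy(q))
```

The table grows as n × n here, which is why the QL row flags large state–action spaces as infeasible; DQN and its successors replace `q` with a neural network approximator.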

| DL Techniques | Key Features | Advantages | Limitations | Future Directions |
|---|---|---|---|---|
| Recurrent Neural Networks (RNNs) | Process sequential spectrum data and capture temporal dependencies. | Effective for dynamic spectrum sensing. | Struggle with long-term dependencies due to vanishing gradients. | Optimization with attention mechanisms. |
| Long Short-Term Memory (LSTM) | Retains long-term dependencies through gating mechanisms. | High accuracy in dynamic spectrum environments. | Computationally expensive. | Hybrid models with RL for adaptive sensing. |
| Artificial Neural Networks (ANNs) | Wavelet transform for noise reduction and feature extraction. | High fault tolerance; adaptive learning. | Performance degrades at low SNR. | Improved feature extraction and hybrid models. |
| Hybrid Spectrum Sensing (HSS) with ANN | Uses multiple detectors for feature extraction. | Detection probability increases with the number of detectors. | Requires significant computational resources. | Optimization for real-time applications. |
| Deep Neural Networks (DNNs) with Phase Difference (PD) | Handles noise uncertainty and carrier frequency mismatch. | Improved detection in low-SNR conditions. | Dependent on training data quality. | Enhancing robustness with adaptive learning. |
| DNN with Robust Alternating Direction Method of Multipliers (RADMM) | Stable spectrum recovery using numerical differential gradients. | Efficient at low SNR. | High computational complexity. | Reducing computational overhead for real-time applications. |
| Generative Adversarial Networks (GANs) | Consistency constraints and TL for domain adaptation. | Improved classification accuracy. | Requires extensive training data. | Applying GANs to real-world noisy spectrum environments. |
| Deep CNN with Time–Frequency Fusion | Uses PCA and TL for feature extraction. | High accuracy in low-SNR conditions. | Increased model complexity. | Exploring lightweight CNN architectures for real-time processing. |
| Residual Neural Networks (ResNet) | Uses residual connections to overcome vanishing gradients. | Efficient feature extraction with deeper networks. | High computational cost. | Reducing complexity through model pruning and quantization. |
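The LSTM row credits gating mechanisms with retaining long-term dependencies. As a sketch, assuming a single-unit scalar cell and untrained, hand-picked weights (both hypothetical), one LSTM step can be written in pure Python as follows; the additive update of the cell state `c` is what lets information persist across many steps when the forget gate stays near 1.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit (scalar) LSTM cell.

    params maps each gate name ('f', 'i', 'o', 'g') to a tuple
    (w_x, w_h, b): input weight, recurrent weight, bias.
    """
    def pre_activation(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation('f'))    # forget gate: share of old cell state kept
    i = sigmoid(pre_activation('i'))    # input gate: share of new candidate written
    o = sigmoid(pre_activation('o'))    # output gate: share of cell state exposed
    g = math.tanh(pre_activation('g'))  # candidate cell content
    c = f * c_prev + i * g              # additive cell-state update
    h = o * math.tanh(c)                # hidden state passed to the next step
    return h, c


def run_lstm(sequence, params, h0=0.0, c0=0.0):
    """Run the cell over a sequence; return all hidden states and the final cell state."""
    h, c = h0, c0
    hidden_states = []
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
        hidden_states.append(h)
    return hidden_states, c


# Hypothetical per-slot occupancy readings on one channel (1.0 = busy, 0.0 = idle).
readings = [1.0, 1.0, 0.0, 1.0, 0.0]
# Hypothetical, untrained weights chosen only to exercise the gates.
params = {'f': (0.0, 0.0, 2.0), 'i': (1.0, 0.5, 0.0),
          'o': (0.5, 0.0, 0.0), 'g': (1.0, 0.5, 0.0)}
hidden_states, final_cell = run_lstm(readings, params)
```

With all weights set to zero every gate equals 0.5, so the cell simply halves its previous state; a large positive forget-gate bias instead keeps the cell state almost unchanged, which is the mechanism a plain RNN lacks.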

| Aspect | Traditional ML | DL Techniques | LLMs |
|---|---|---|---|
| Architecture | Rule-based or statistical models (e.g., SVM, RF). | Neural networks (CNNs, RNNs). | Transformer-based, large-scale (e.g., GPT, BERT). |
| Feature Engineering | Manual and domain-specific. | Partially automated (learns features from data). | Fully automated through self-attention and large-scale pretraining. |
| Training Paradigm | Supervised learning on task-specific data. | Supervised or semi-supervised learning. | Pretraining on large corpora, then adaptation (e.g., instruction tuning, prompt engineering). |
| Adaptability to New Tasks | Requires retraining from scratch. | Requires fine-tuning with labeled data. | Few-shot and in-context learning; task adaptation without parameter updates. |
| Data Requirement | Task-specific labeled datasets. | Large labeled datasets for each task. | Massive unlabeled data for pretraining; minimal labeled data for adaptation. |
| Reasoning Ability | Limited (rule-based inference). | Pattern recognition but limited logical reasoning. | Capable of multi-step reasoning (e.g., chain-of-thought). |
| Multimodal Capability | Minimal (needs specialized models). | Requires separate architectures (e.g., CNN for vision, RNN for text). | Unified multimodal models (e.g., CLIP, AudioPaLM) for text, vision, and audio. |
| Interpretability | High (simple models are interpretable). | Moderate (black box, but visualizable). | Low (black-box nature; decisions are difficult to explain). |
| Inference Time | Fast and lightweight. | Moderate (depends on model size). | Often slow due to large size and complexity. |
| On-device Feasibility | High (lightweight models). | Possible with optimized models. | Challenging; requires compression or distillation. |
| Energy Efficiency | High (low compute demands). | Moderate (training can be energy-intensive). | Low (training can consume hundreds of MWh). |
| Application in DSA | Basic spectrum prediction, anomaly detection. | Spectrum sensing, modulation classification. | Intelligent, context-aware SA, spoofing detection, cooperative reasoning. |

| Technique | Description | Key Features | Applications |
|---|---|---|---|
| Federated Learning | A decentralized learning technique in which models are trained on local devices without sharing raw data. | Data remain on local devices and only model updates are shared, enhancing privacy and reducing data transmission. | Mobile networks, IoT systems, healthcare, and finance. |
| Differential Privacy | A formal framework guaranteeing that the output of an analysis reveals little about whether any individual's record is included in the dataset. | Adds calibrated noise to data or query results, protecting individual privacy while preserving overall utility. | Data analytics, machine learning, and statistical reporting. |
| Homomorphic Encryption | Enables computations on encrypted data without first decrypting it. | Allows encrypted data to be analyzed while maintaining confidentiality. | Secure data processing, cloud computing, and privacy-preserving analytics. |
| Secure Multi-Party Computation (SMPC) | Protocols that let multiple parties jointly compute a function over their data while keeping each party's input confidential. | Ensures that no party can access another party's data while still enabling computation on the combined data. | Collaborative data analysis, joint research, and financial services. |
| Homomorphic Encryption-Based ML | Combines homomorphic encryption with ML to allow secure data analysis without exposing sensitive information. | Performs ML on encrypted data, preserving privacy during data analysis and model training. | Healthcare, finance, IoT, and secure AI models. |
| Privacy-Preserving Data Mining | Techniques that preserve data privacy while performing mining operations such as clustering or classification. | Applies privacy-preserving algorithms such as secure k-means or secure decision trees. | Data mining, customer behavior analysis, and mining of sensitive data. |
| Zero-Knowledge Proofs (ZKPs) | A cryptographic method for proving the validity of a statement without revealing the underlying data. | Ensures privacy by proving knowledge of a secret without revealing the secret itself. | Authentication, blockchain, and identity verification. |
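Two rows of the privacy table lend themselves to short sketches: the sample-weighted model averaging commonly used to aggregate federated learning updates (FedAvg-style aggregation, one common choice among several), and the Laplace mechanism, one standard way to realize differential privacy for a numeric query. The sensing-node scenario, parameter values, and function names below are hypothetical illustrations, not a scheme taken from the surveyed works.

```python
import random


def fed_avg(client_weights, client_sizes):
    """Federated averaging: combine client parameter vectors, weighting each
    client by its number of local training samples. Only parameters are
    exchanged; raw data never leaves the clients."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
            for k in range(dim)]


def laplace_mechanism(true_value, sensitivity, epsilon, rng=random):
    """Release a numeric query answer with epsilon-differential privacy by
    adding Laplace noise of scale b = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = sensitivity / epsilon
    # The difference of two independent Exp(1) draws is Laplace(0, 1).
    noise = scale * (rng.expovariate(1.0) - rng.expovariate(1.0))
    return true_value + noise


# Hypothetical example: three sensing nodes train a 2-parameter model locally.
global_model = fed_avg([[0.2, 1.0], [0.4, 0.8], [0.3, 0.9]], [100, 50, 50])

# Hypothetical example: privately report how many of 20 nodes saw a busy channel.
# A counting query has sensitivity 1 (adding or removing one node changes it by at most 1).
noisy_count = laplace_mechanism(true_value=12, sensitivity=1.0, epsilon=0.5,
                                rng=random.Random(42))
```

A smaller epsilon gives a stronger privacy guarantee at the cost of larger noise, since the noise scale grows as sensitivity divided by epsilon; this is the privacy-utility trade-off summarized in the Differential Privacy row.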

| Aspect | AI Techniques | Conventional Methods |
|---|---|---|
| Latency | Low latency (e.g., ~10 ms for DL) | Higher latency (e.g., ~25 ms) |
| Accuracy | High, especially for complex and dynamic data | Moderate to low; suitable for static conditions |
| Computational Power | Requires GPUs/TPUs and parallel processing | Minimal hardware requirements |
| Energy Consumption | High, due to intensive training and inference | Low; suitable for battery-powered devices |
| Scalability | Easily scalable in distributed systems | Limited scalability due to rule-based designs |
| Data Requirements | Requires large, labeled datasets for training | Minimal data; mostly rule- or heuristic-based |
| Interpretability | Often low (black-box models) | High (easy-to-understand decision process) |
| Adaptability | Self-adapts through continuous learning | Requires manual updates for changes |
| Resource Requirements | High memory and storage needs | Optimized for constrained environments |
| Regulatory Compliance | Complex, especially in privacy-sensitive domains | Easier to control and audit |
| Deployment Complexity | High; requires training pipelines and updates | Low; often plug-and-play |
| Learning Capability | Learns from past and real-time data | Static behavior; no learning ability |
| Maintenance | Requires frequent updates, monitoring, and retraining | Low maintenance once the rules are defined |
| Security | Vulnerable to adversarial attacks and poisoning | More predictable but limited in attack detection |
| Use Cases | Dynamic SM, anomaly detection, traffic prediction, intrusion detection | Fixed routing, static traffic shaping, threshold-based monitoring |

| Dataset | Access | Area | Technology | Key Data | Description |
|---|---|---|---|---|---|
| IEEE DataPort [127] | Open | Varies | Various | Multi-modal datasets across wireless domains | A central repository of IEEE-hosted datasets covering wireless sensing, 5G/6G, V2X, CSI, radar, and ML-focused experiments. Supports benchmarking, reproducibility, and publication-linked data access. |
| Tarbiat Modares University [128] | Open | Simulation | 6G networks | Artificial intelligence and machine learning in spectrum sharing | A neural network is used to approximate the Q-value function: the state is given as the input, and the Q-values of all possible actions are generated as the output. |
| COSINE (2020) [129] | Open | Laboratory | Cellular | SNR, temporal signal patterns | A 55 GB cellular dataset from laboratory tests, useful for 5G/6G ML. |
| KU Leuven LTE Dataset [130] | Partly Open | Campus | LTE | SINR, throughput | University testbed data focused on LTE performance under realistic conditions. |
| RICE Wireless Dataset (2016) [131] | Open | Laboratory | Experimental | Channel characteristics, signal logs | Dataset for physical-layer and experimental protocol studies using testbed radio hardware. |
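The Tarbiat Modares University entry describes a neural network that takes the state as input and outputs the Q-values of all possible actions. A minimal, untrained sketch of that input/output structure is shown below, assuming a hypothetical state made of per-channel occupancy flags and one action per channel, with ε-greedy channel selection on top; it illustrates the mapping only and is not the model or data distributed with [128].

```python
import random


class TinyQNetwork:
    """One-hidden-layer MLP that maps a state vector to one Q-value per action."""

    def __init__(self, state_dim, hidden_dim, n_actions, seed=0):
        rng = random.Random(seed)
        # Small random weights; a real agent would train these with DQN updates.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(state_dim)]
                   for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)]
                   for _ in range(n_actions)]
        self.b2 = [0.0] * n_actions

    def q_values(self, state):
        """Forward pass: state in, Q(state, a) for every action a out."""
        hidden = [max(0.0, sum(w * s for w, s in zip(row, state)) + b)  # ReLU
                  for row, b in zip(self.w1, self.b1)]
        return [sum(w * h for w, h in zip(row, hidden)) + b
                for row, b in zip(self.w2, self.b2)]


def epsilon_greedy(q, epsilon, rng=random):
    """Explore with probability epsilon; otherwise pick the highest-Q action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q))
    return max(range(len(q)), key=lambda a: q[a])


# Hypothetical state: occupancy (1 = busy) of 4 channels in the last sensing slot.
state = [1.0, 0.0, 1.0, 0.0]
net = TinyQNetwork(state_dim=4, hidden_dim=8, n_actions=4)
q = net.q_values(state)
channel = epsilon_greedy(q, epsilon=0.1)
```

Producing all Q-values in a single forward pass is what makes greedy action selection cheap: the agent evaluates the network once per decision instead of once per candidate channel.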

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gbenga-Ilori, A.; Imoize, A.L.; Noor, K.; Adebolu-Ololade, P.O. Artificial Intelligence Empowering Dynamic Spectrum Access in Advanced Wireless Communications: A Comprehensive Overview. AI 2025, 6, 126. https://doi.org/10.3390/ai6060126

Gbenga-Ilori A, Imoize AL, Noor K, Adebolu-Ololade PO. Artificial Intelligence Empowering Dynamic Spectrum Access in Advanced Wireless Communications: A Comprehensive Overview. AI. 2025; 6(6):126. https://doi.org/10.3390/ai6060126

Chicago/Turabian Style: Gbenga-Ilori, Abiodun, Agbotiname Lucky Imoize, Kinzah Noor, and Paul Oluwadara Adebolu-Ololade. 2025. "Artificial Intelligence Empowering Dynamic Spectrum Access in Advanced Wireless Communications: A Comprehensive Overview" AI 6, no. 6: 126. https://doi.org/10.3390/ai6060126

APA Style: Gbenga-Ilori, A., Imoize, A. L., Noor, K., & Adebolu-Ololade, P. O. (2025). Artificial Intelligence Empowering Dynamic Spectrum Access in Advanced Wireless Communications: A Comprehensive Overview. AI, 6(6), 126. https://doi.org/10.3390/ai6060126