YOLOv11-4ConvNeXtV2: Enhancing Persimmon Ripeness Detection Under Visual Challenges

Abstract

1. Introduction

- An enhanced YOLOv11 architecture is proposed that integrates a ConvNeXtV2 backbone with FCMAE pretraining and Global Response Normalization (GRN). FCMAE pretraining teaches the backbone to reconstruct masked image regions, which helps with occluded fruit, and GRN sharpens channel-wise feature discrimination. Together they improve mAP@0.5:0.95 by 3.8 to 6.3 percentage points over baseline YOLO variants for persimmon detection under adverse conditions.

- A comprehensive persimmon dataset is constructed that covers diverse orchard conditions, including occlusion, varying illumination, and blur. It contains 4921 annotated images, and its sub-block segmentation preserves spatial relationships and local structure within each block, which supports robust model training.

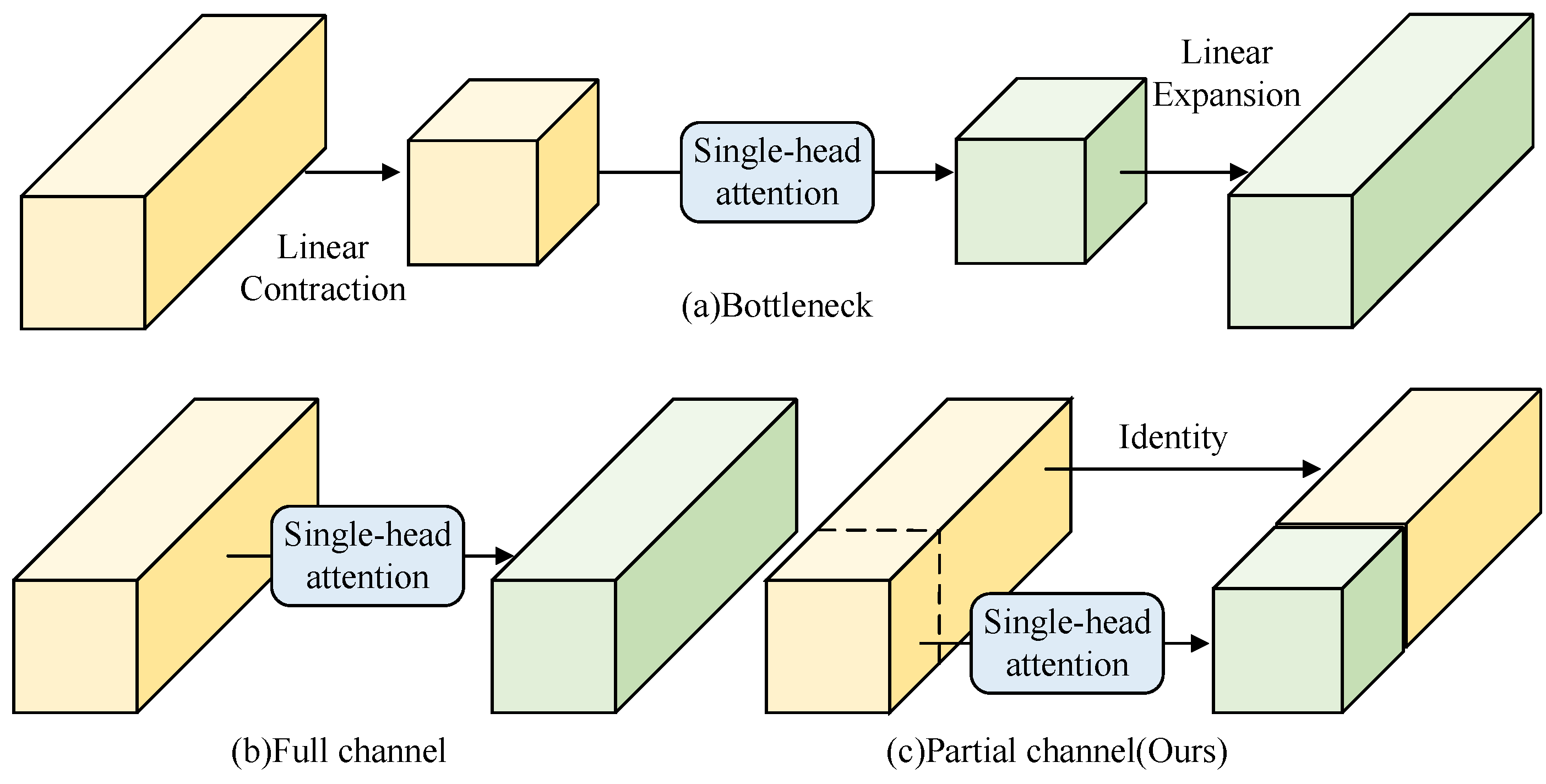

- A detection head based on single-head self-attention (SHSA) is constructed to efficiently capture and integrate multi-scale global and local features, improving detection accuracy for blurred and occluded persimmons.

2. Materials and Methods

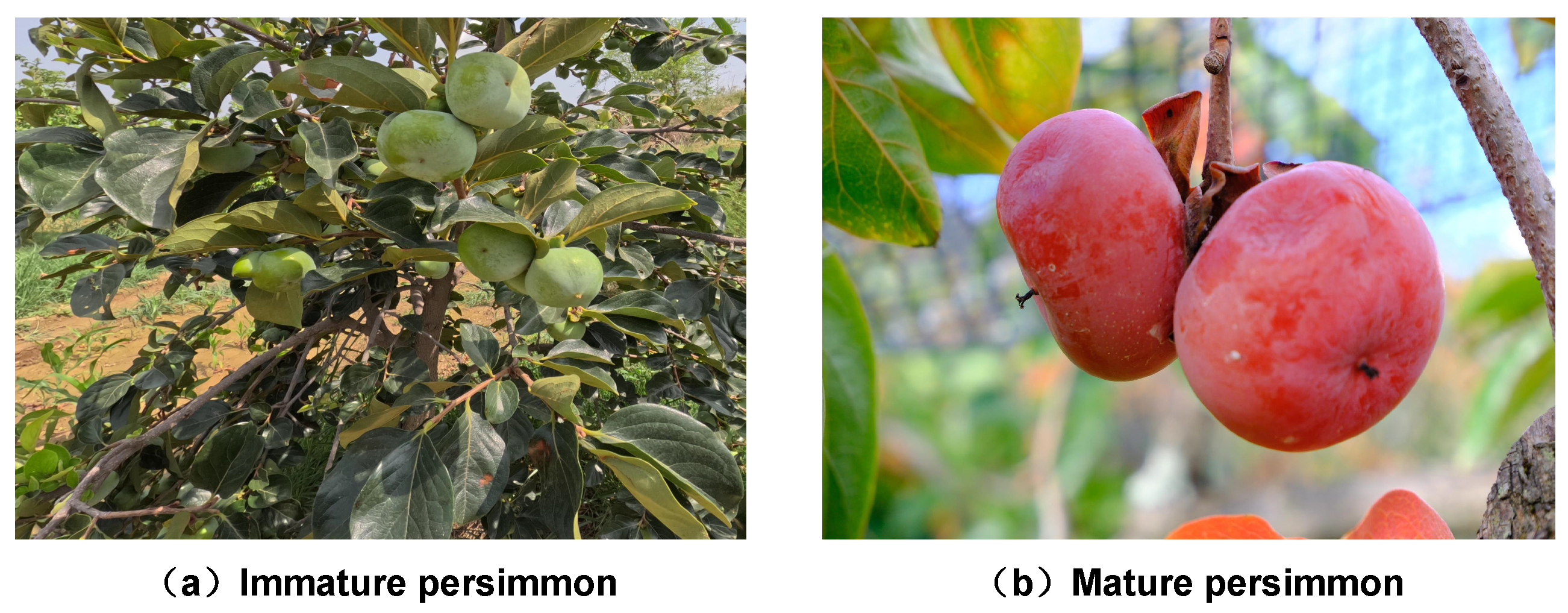

2.1. Dataset

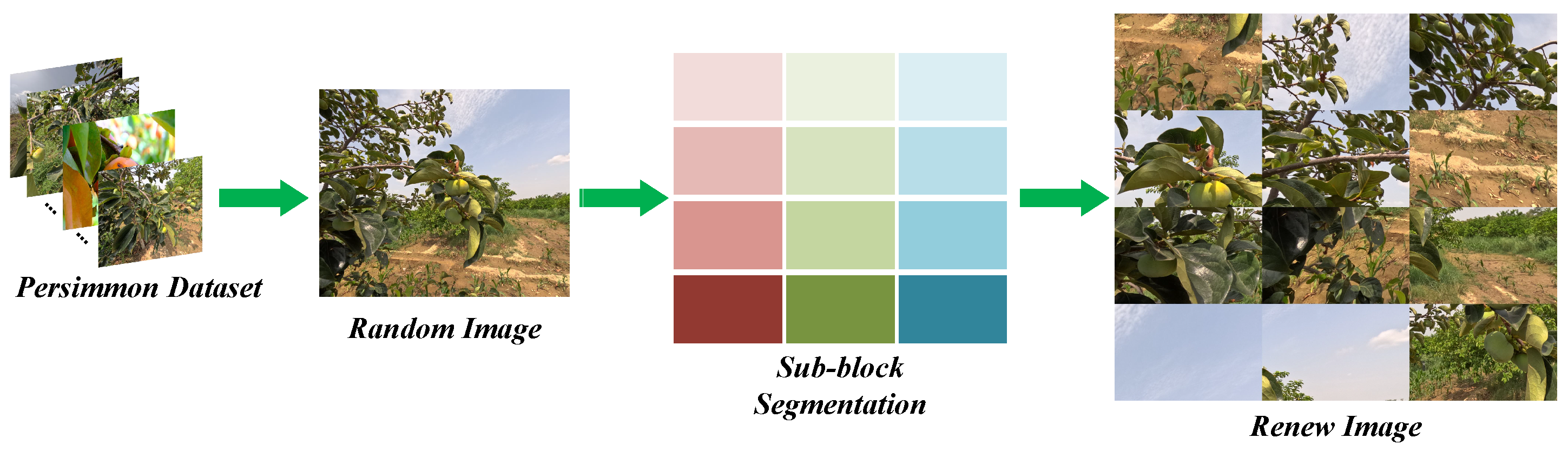

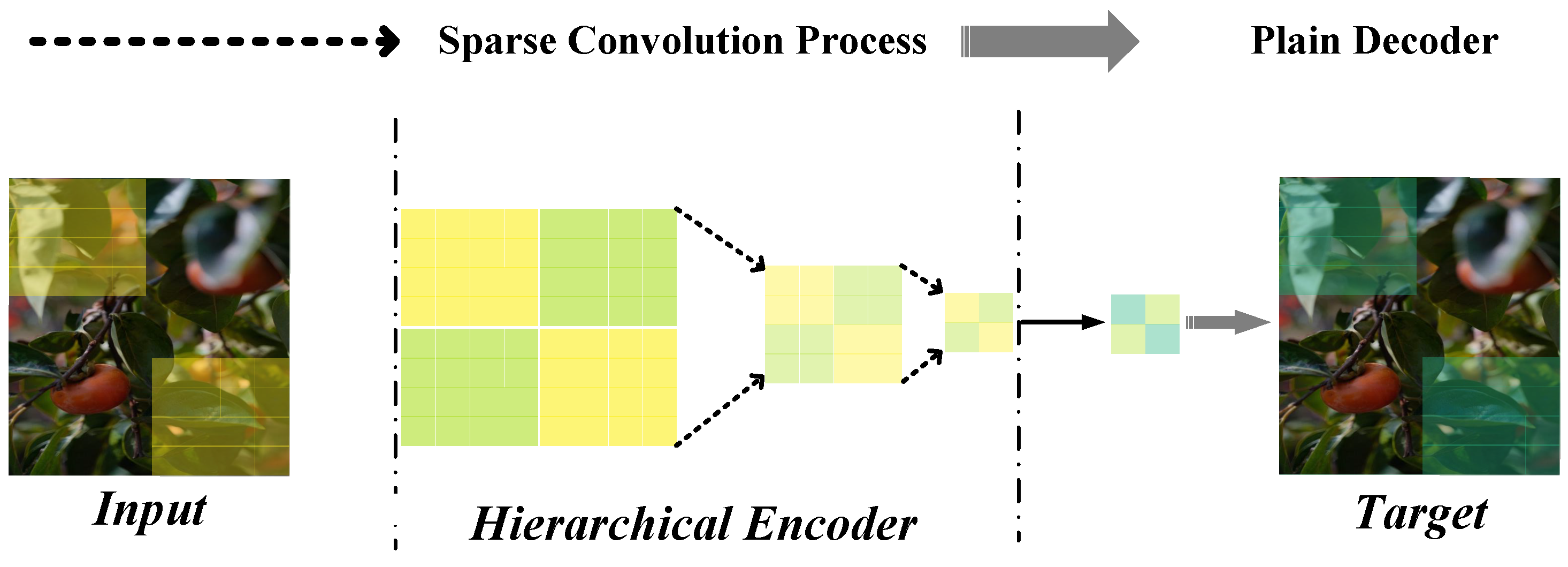

2.1.1. The Algorithm Principle of Sub-Block Segmentation
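Sub-block segmentation is characterized here only as splitting an original image into sub-blocks and recombining them while preserving local structure. The following is a minimal sketch under explicit assumptions: a non-overlapping grid and a random permutation of block positions. The grid size, the permutation rule, and the helper names `split_blocks`, `recombine`, and `sub_block_shuffle` are hypothetical and are not taken from the paper.

```python
import random
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def split_blocks(img: Image, rows: int, cols: int) -> List[Image]:
    """Split an image into rows*cols non-overlapping blocks in row-major order.

    Assumes the height is divisible by rows and the width by cols.
    """
    bh, bw = len(img) // rows, len(img[0]) // cols
    return [
        [row[c * bw:(c + 1) * bw] for row in img[r * bh:(r + 1) * bh]]
        for r in range(rows)
        for c in range(cols)
    ]


def recombine(blocks: List[Image], rows: int, cols: int, order: List[int]) -> Image:
    """Reassemble blocks on a rows x cols grid; grid slot i receives blocks[order[i]]."""
    bh = len(blocks[0])
    out: Image = []
    for r in range(rows):
        for y in range(bh):
            line: List[int] = []
            for c in range(cols):
                line.extend(blocks[order[r * cols + c]][y])
            out.append(line)
    return out


def sub_block_shuffle(img: Image, rows: int = 2, cols: int = 2, seed: int = 0) -> Image:
    """Hypothetical augmentation: permute block positions, keep each block's pixels intact."""
    blocks = split_blocks(img, rows, cols)
    order = list(range(len(blocks)))
    random.Random(seed).shuffle(order)
    return recombine(blocks, rows, cols, order)
```

Because each block is copied unchanged, the local structure inside every block survives, while the global layout of the image changes.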

2.1.2. Dataset Construction

2.2. Methods

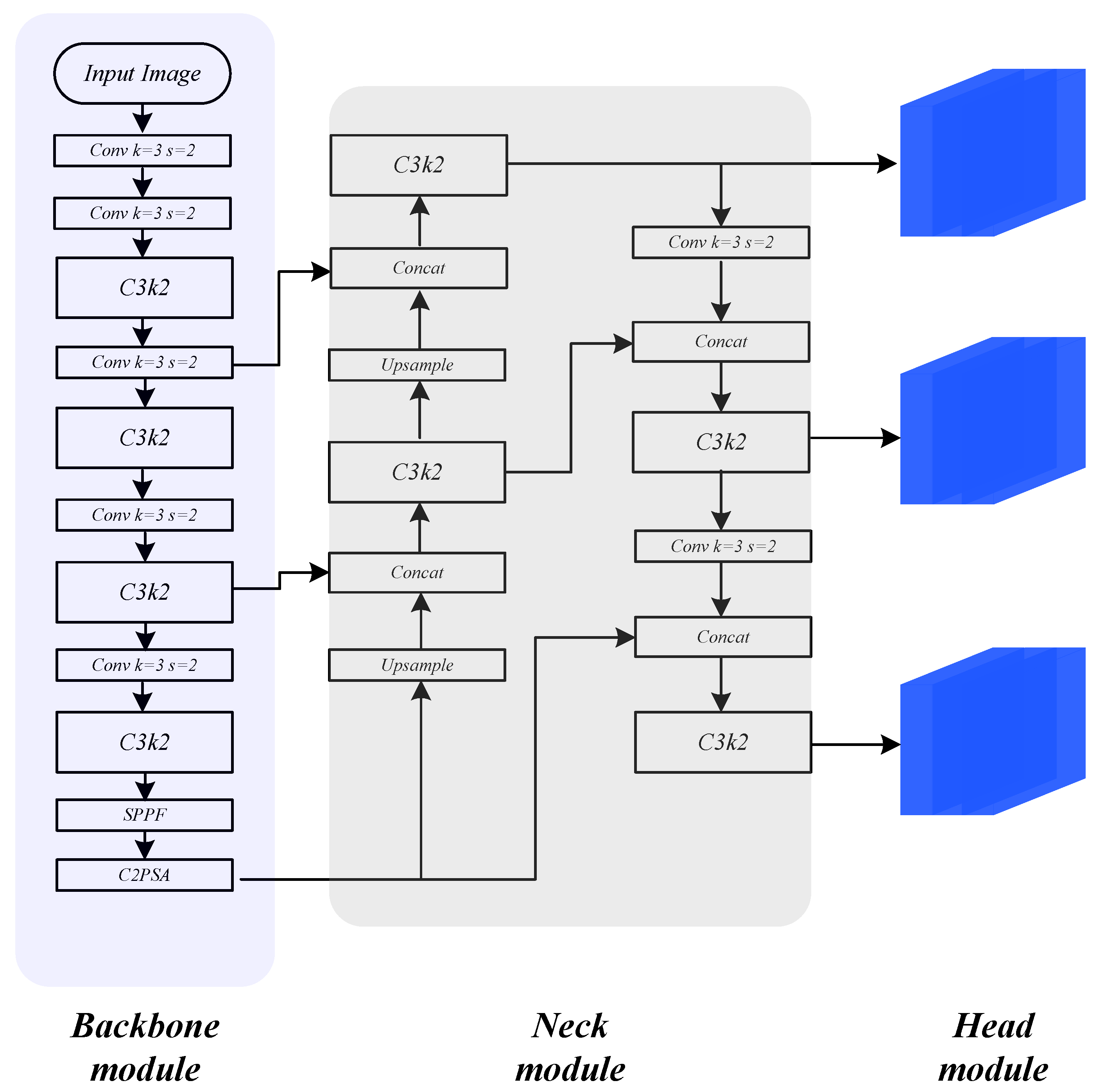

2.2.1. The Algorithm Principle of YOLOv11
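The abbreviation list includes BCE, GIoU, and CIoU. In the Ultralytics implementation of YOLOv8 and YOLOv11, the training loss uses BCE for classification and CIoU plus distribution focal loss for box regression. Any changes the paper makes to that loss are not reproduced here. The sketch below computes GIoU = IoU - (|C| - |A ∪ B|)/|C| and CIoU = IoU - ρ²/c² - αv for axis-aligned boxes. Here v = (4/π²)(arctan(w_gt/h_gt) - arctan(w/h))² and α = v/((1 - IoU) + v), so the box loss is 1 - CIoU. The function names are illustrative.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def inter_union(a: Box, b: Box) -> Tuple[float, float]:
    """Return (intersection area, union area) of two boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter, area_a + area_b - inter


def giou(a: Box, b: Box) -> float:
    inter, union = inter_union(a, b)
    # Area of the smallest axis-aligned box enclosing both inputs.
    c_area = (max(a[2], b[2]) - min(a[0], b[0])) * (max(a[3], b[3]) - min(a[1], b[1]))
    return inter / union - (c_area - union) / c_area


def ciou(pred: Box, gt: Box, eps: float = 1e-7) -> float:
    inter, union = inter_union(pred, gt)
    iou = inter / (union + eps)
    # rho^2: squared distance between the two box centres.
    rho2 = (((pred[0] + pred[2]) - (gt[0] + gt[2])) ** 2
            + ((pred[1] + pred[3]) - (gt[1] + gt[3])) ** 2) / 4
    # c^2: squared diagonal of the smallest enclosing box.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # v penalises aspect-ratio mismatch; alpha weights it more as IoU grows.
    w_p, h_p = pred[2] - pred[0], pred[3] - pred[1]
    w_g, h_g = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(w_g / h_g) - math.atan(w_p / h_p)) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return iou - rho2 / c2 - alpha * v
```

For two 2×2 boxes offset by one unit horizontally, IoU is 1/3, the centre term is 1/13, and v is 0, so CIoU is about 0.256.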

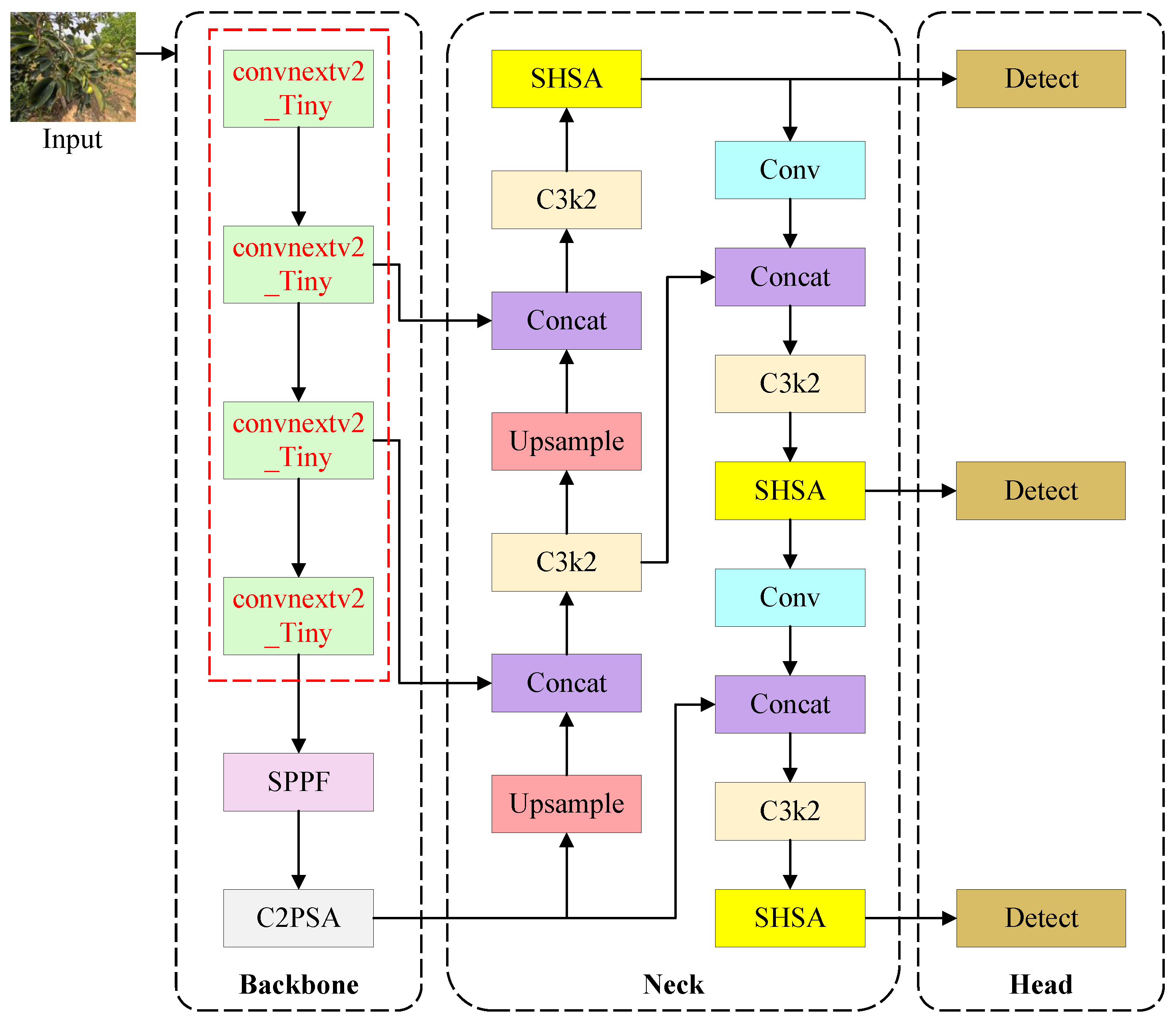

2.2.2. Overall Architecture of the YOLOv11-4ConvNeXtV2 Model

2.3. Description of Key Modules

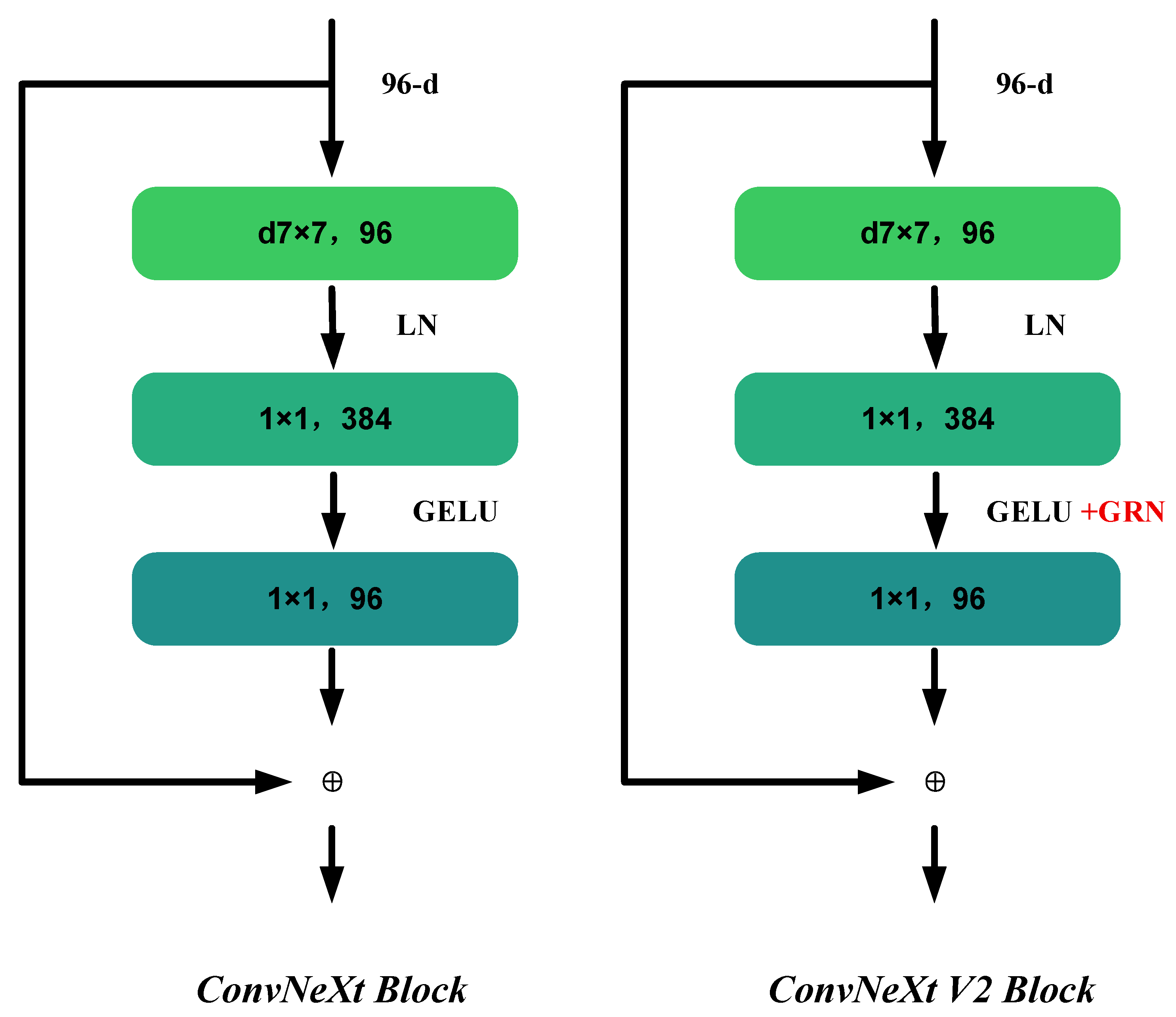

2.3.1. ConvNeXtV2 Backbone with Global Response Normalization

- Global Response Aggregation:

- Channel Competition Normalization:

- Feature Calibration:
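The three steps listed above correspond to the GRN unit published with ConvNeXt V2. The first step aggregates each channel's spatial response with an L2 norm, G(X)_c = ||X_c||. The second normalizes each channel's norm by the mean over channels, N_c = G_c / mean(G), so that channels compete. The third calibrates the input as X_out = γ · (X ⊙ N) + β + X. The sketch below applies this to a single C × H × W feature map stored as nested lists. It is a plain-Python illustration, not the paper's code.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [channel][row][col]


def grn(x: FeatureMap, gamma: List[float], beta: List[float], eps: float = 1e-6) -> FeatureMap:
    """Global Response Normalization on one C x H x W feature map."""
    # 1) Global response aggregation: L2 norm of each channel over all positions.
    g = [math.sqrt(sum(v * v for row in ch for v in row)) for ch in x]
    # 2) Channel competition normalization: divide by the mean norm across channels.
    mean_g = sum(g) / len(g)
    n = [gi / (mean_g + eps) for gi in g]
    # 3) Feature calibration: rescale by the normalized response, add learnable
    #    per-channel gamma/beta terms and the residual input.
    return [
        [[gamma[c] * (v * n[c]) + beta[c] + v for v in row] for row in ch]
        for c, ch in enumerate(x)
    ]
```

With γ = β = 0 the unit reduces to an identity mapping, which is how the ConvNeXt V2 reference code initializes it. A channel whose response is above the channel average (N_c > 1) is amplified relative to weaker channels once γ is learned.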

2.3.2. Fully Convolutional Masked Auto-Encoder
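FCMAE pretraining, as described for ConvNeXt V2, hides a random subset of 32 × 32 patches (a masking ratio of 0.6), encodes only the visible context, and computes the reconstruction loss on the masked patches. The sketch below shows only the masking and the masked-only loss on a grayscale image. Its simplifications are assumptions: masked pixels are zeroed instead of using the sparse convolutions of the real encoder, and the encoder and decoder are omitted. The helper names are hypothetical, and the sketch does not reproduce the authors' pretraining setup.

```python
import random
from typing import List

Image = List[List[float]]
Mask = List[List[int]]  # 1 = masked pixel


def random_patch_mask(h: int, w: int, patch: int, ratio: float, seed: int = 0) -> Mask:
    """Pixel-level mask built from a random subset of patch x patch cells."""
    gh, gw = h // patch, w // patch
    n_cells = gh * gw
    cells = set(random.Random(seed).sample(range(n_cells), int(n_cells * ratio)))
    return [[1 if (y // patch) * gw + (x // patch) in cells else 0 for x in range(w)]
            for y in range(h)]


def apply_mask(img: Image, mask: Mask) -> Image:
    """Zero out masked pixels so only the visible context remains."""
    return [[0.0 if m else v for v, m in zip(row, mrow)] for row, mrow in zip(img, mask)]


def masked_mse(pred: Image, target: Image, mask: Mask) -> float:
    """Mean squared reconstruction error over masked pixels only."""
    errs = [(p - t) ** 2
            for prow, trow, mrow in zip(pred, target, mask)
            for p, t, m in zip(prow, trow, mrow) if m]
    return sum(errs) / max(len(errs), 1)
```

Restricting the loss to hidden regions forces the network to infer missing content from its surroundings. This is the context-completion behaviour the paper relies on for occluded fruit.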

2.3.3. SHSA Detection Head
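A minimal sketch of single-head self-attention follows. It assumes the SHViT-style design, in which one attention head runs on only a fraction of the channels and the remaining channels pass through unchanged. Tokens are the flattened spatial positions of a feature map. The channel split `c_att`, the projection matrices, and the omission of the final output projection and normalization are illustrative assumptions, not details of the paper's head.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        mx = max(row)  # subtract the row max for numerical stability
        e = [math.exp(v - mx) for v in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out


def shsa(tokens: Matrix, c_att: int, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Single-head attention on the first c_att channels; other channels pass through.

    tokens: N x C (one row per spatial position); wq, wk: c_att x d_qk; wv: c_att x c_att.
    """
    x_att = [row[:c_att] for row in tokens]
    x_res = [row[c_att:] for row in tokens]
    q, k, v = matmul(x_att, wq), matmul(x_att, wk), matmul(x_att, wv)
    d_qk = len(wq[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_qk) for s in row] for row in matmul(q, k_t)]
    attended = matmul(softmax_rows(scores), v)
    # Concatenate attended and untouched channels (SHViT then applies a projection).
    return [a + r for a, r in zip(attended, x_res)]
```

Because every token attends to every other token, each output mixes global context. Restricting attention to a subset of channels keeps the cost of that global mixing low.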

3. Experimental Setup and Evaluation Metrics

3.1. Experimental Environment

3.2. Evaluation Metrics
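The reported metrics (precision, recall, mAP@0.5, and mAP@0.5:0.95) all rest on IoU-based matching. The sketch below covers one class and one image. It uses greedy matching in confidence order and all-point interpolated AP, and it averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95 to obtain mAP@0.5:0.95. Evaluation toolkits such as the COCO API and Ultralytics aggregate over images and classes and use a 101-point interpolation, so their values can differ slightly. The function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Pred = Tuple[float, Box]                 # (confidence, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(preds: Sequence[Pred], gts: Sequence[Box], thr: float) -> float:
    """All-point interpolated AP for one class, greedy matching in score order."""
    matched = [False] * len(gts)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, box in sorted(preds, key=lambda p: -p[0]):
        # Match to the best-overlapping ground truth that is still unmatched.
        best_j, best = -1, 0.0
        for j, gt in enumerate(gts):
            o = iou(box, gt)
            if not matched[j] and o > best:
                best_j, best = j, o
        if best_j >= 0 and best >= thr:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
        p, r = precision_recall(tp, fp, len(gts) - tp)
        precisions.append(p)
        recalls.append(r)
    # Sum recall increments weighted by the best precision at that recall or beyond.
    ap, prev_r = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_r) * max(precisions[i:])
        prev_r = r
    return ap


def map_50_95(preds: Sequence[Pred], gts: Sequence[Box]) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)
```

A prediction with IoU 0.68 counts as correct at thresholds 0.50 to 0.65 but not above. It therefore contributes full AP at only 4 of the 10 thresholds, which is why mAP@0.5:0.95 penalizes loose boxes far more than mAP@0.5 does.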

4. Results and Analysis

4.1. Backbone Network Ablation Study

4.2. Comparative Experiments with Different Detection Models

5. Discussion

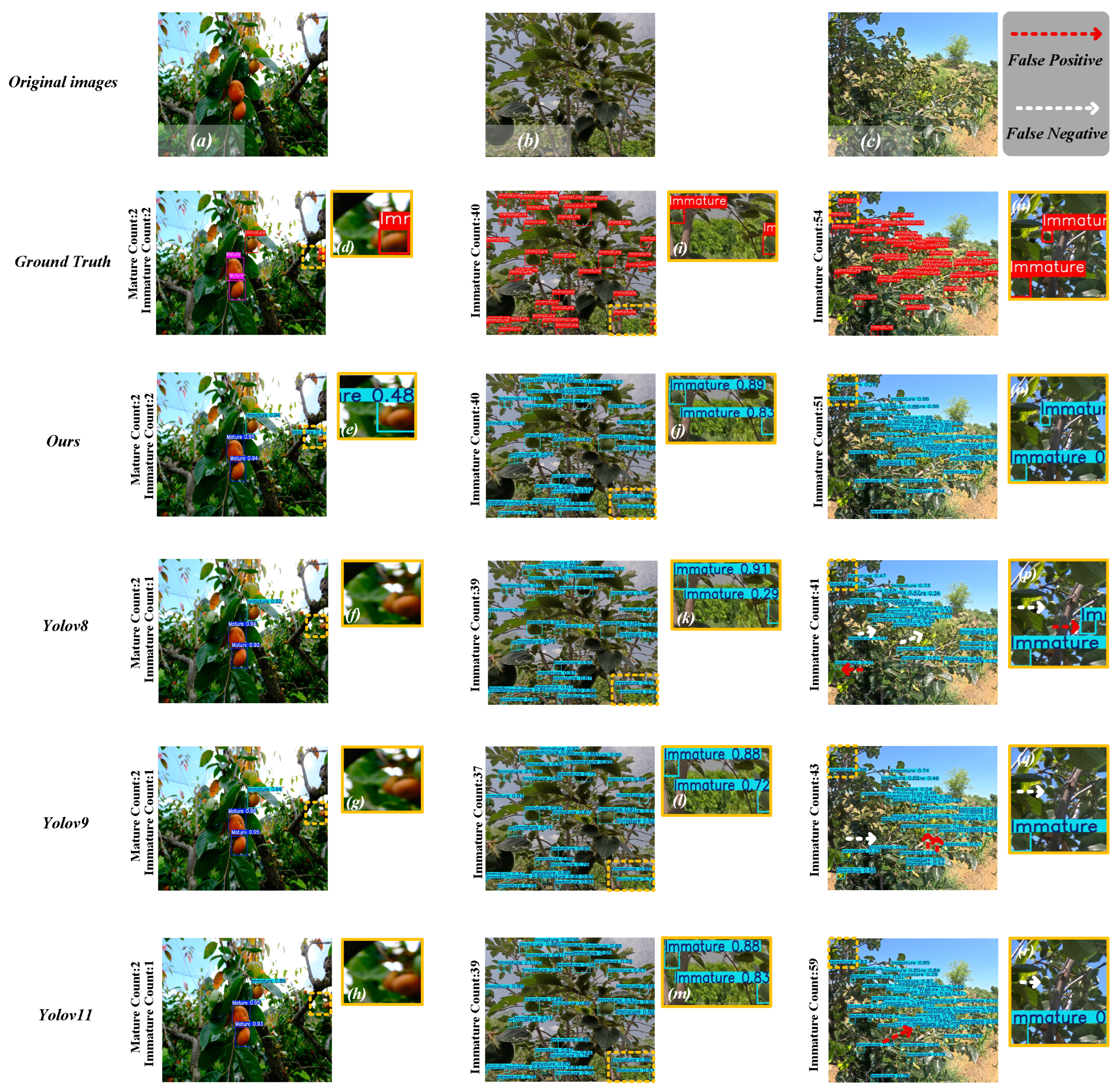

5.1. Quantitative Results and Robustness Analysis

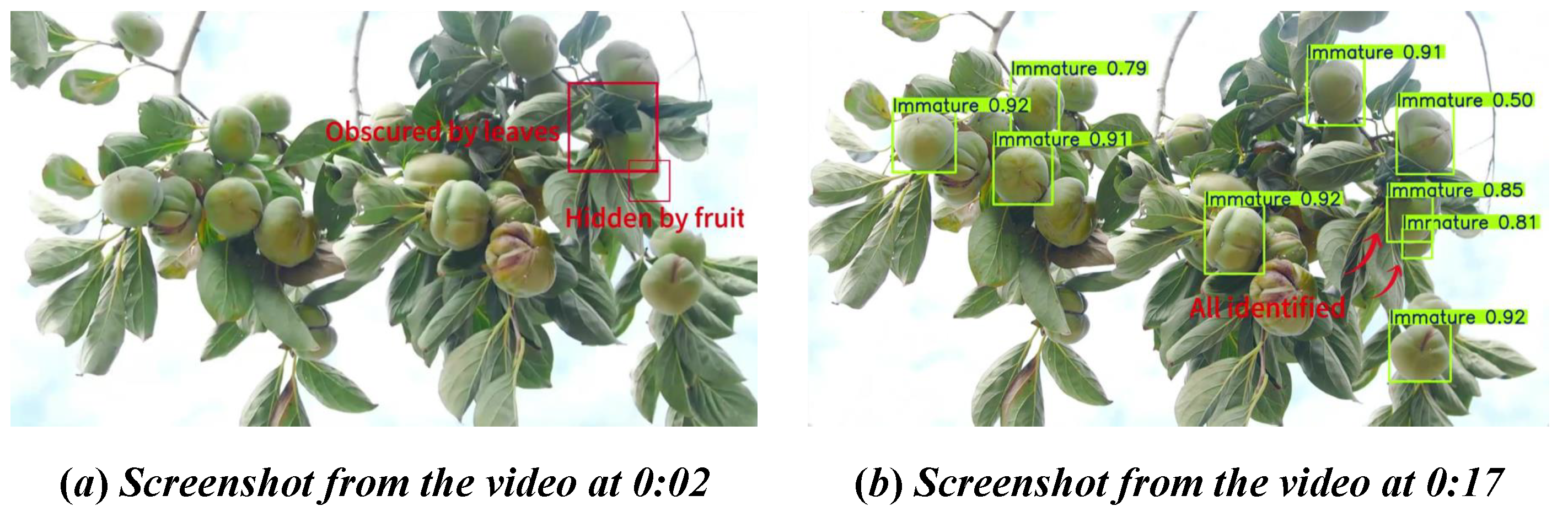

5.1.1. Qualitative Comparison Under Challenging Orchard Scenarios

5.1.2. Comparison of Small-Object Detection Results

5.2. Real-Time Video Evaluation
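To illustrate how an FPS figure can be obtained, here is a minimal timing sketch. `infer` is a hypothetical stand-in for the detector. Real measurements depend on the hardware, the batch size, and whether frame decoding, pre-processing, and non-maximum suppression fall inside the timed region.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], frames: Sequence[object], warmup: int = 5) -> float:
    """Average frames per second of `infer` over `frames`, excluding warm-up runs."""
    for f in frames[:warmup]:
        infer(f)  # untimed warm-up: lets caches and lazy initialisation settle
    start = time.perf_counter()
    for f in frames:
        infer(f)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")
```

Warm-up runs are excluded because the first calls to a model often include one-off costs that would understate steady-state throughput.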

6. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| BCE | Binary Cross-Entropy |
| CIoU | Complete Intersection over Union |
| CNN | Convolutional Neural Network |
| ConvNeXt V2 | Convolutional Next Version 2 |
| FCMAE | Fully Convolutional Masked Auto-Encoder |
| FLOPs | Floating Point Operations |
| FPN | Feature Pyramid Network |
| FPS | Frames Per Second |
| GIoU | Generalized Intersection over Union |
| GRN | Global Response Normalization |
| IoU | Intersection over Union |
| mAP | Mean Average Precision |
| RGB | Red Green Blue |
| SHSA | Single-Head Self-Attention |
| YOLO | You Only Look Once |


| Augmentation Method | Description | Images Generated |
|---|---|---|
| Contrast Enhancement | Linear pixel transform with gain and offset parameters; simulates lighting variation | 703 |
| Gaussian Noise Injection | Additive noise to mimic sensor and compression artifacts | 703 |
| Random Rotation | Random-angle rotation to simulate changes in camera viewing angle | 703 |
| Horizontal Flipping | Mirroring for object orientation diversity | 703 |
| Random Erasing | Occlusion simulation by zeroing out random patches | 703 |
| Sub-Block Segmentation Method | Sub-block segmentation and recombination of the original images | 703 |
| Total | Original dataset + 6 augmentation methods | 4921 |
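The table totals are consistent with 703 original images plus one generated image per original for each of the six methods: 703 × 7 = 4921. The sketch below illustrates four of the pixel-level operations on an 8-bit grayscale image stored as nested lists and checks that count. The contrast gain and offset, the noise σ, the erased patch size, and all function names are hypothetical, since the paper's parameter values are not given here. Rotation is omitted because it requires interpolation.

```python
import random
from typing import List

Image = List[List[int]]  # 8-bit grayscale, rows of pixel values


def clip(v: float) -> int:
    """Round and clamp to the valid 8-bit range."""
    return max(0, min(255, round(v)))


def contrast(img: Image, alpha: float, beta: float) -> Image:
    """Linear pixel transform g = alpha * f + beta (alpha = gain, beta = offset)."""
    return [[clip(alpha * v + beta) for v in row] for row in img]


def gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Additive zero-mean Gaussian noise."""
    return [[clip(v + rng.gauss(0.0, sigma)) for v in row] for row in img]


def hflip(img: Image) -> Image:
    """Mirror each row left-to-right."""
    return [row[::-1] for row in img]


def random_erase(img: Image, eh: int, ew: int, rng: random.Random) -> Image:
    """Zero out one randomly placed eh x ew patch to simulate occlusion."""
    y0 = rng.randrange(len(img) - eh + 1)
    x0 = rng.randrange(len(img[0]) - ew + 1)
    return [[0 if y0 <= y < y0 + eh and x0 <= x < x0 + ew else v
             for x, v in enumerate(row)]
            for y, row in enumerate(img)]


# Dataset size: originals plus one generated image per original for each method.
N_ORIGINAL = 703
N_METHODS = 6
TOTAL = N_ORIGINAL * (1 + N_METHODS)  # 4921
```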

| Number of ConvNeXtV2 Modules | Precision | Recall | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| 0 | 0.895 | 0.655 | 0.864 | 0.685 |
| 1 | 0.896 | 0.677 | 0.794 | 0.587 |
| 3 | 0.848 | 0.710 | 0.789 | 0.576 |
| 4 | 0.959 | 0.837 | 0.884 | 0.748 |
| 5 | 0.871 | 0.698 | 0.782 | 0.561 |
| 7 | 0.957 | 0.806 | 0.866 | 0.714 |

| Method | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|
| YOLOv5n | 0.864 | 0.685 |
| YOLOv8n | 0.857 | 0.697 |
| YOLOv9t | 0.851 | 0.693 |
| YOLOv10n | 0.870 | 0.710 |
| YOLOv11n | 0.855 | 0.689 |
| YOLOv12n | 0.862 | 0.698 |
| YOLOv11-4ConvNeXtV2 (ours) | 0.884 | 0.748 |
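The mAP@0.5:0.95 gains cited among the contributions can be recomputed directly from this table. The short script below subtracts each baseline from the proposed model's 0.748 and expresses the difference in percentage points.

```python
# mAP@0.5:0.95 values copied from the comparison table.
baselines = {
    "YOLOv5n": 0.685,
    "YOLOv8n": 0.697,
    "YOLOv9t": 0.693,
    "YOLOv10n": 0.710,
    "YOLOv11n": 0.689,
    "YOLOv12n": 0.698,
}
ours = 0.748

# Gain in percentage points, rounded to one decimal to suppress float noise.
gains_pp = {name: round((ours - v) * 100, 1) for name, v in baselines.items()}

for name, gain in sorted(gains_pp.items(), key=lambda kv: kv[1]):
    print(f"{name}: +{gain} pp")
print(f"range: {min(gains_pp.values())} to {max(gains_pp.values())} pp")
```

The smallest gain is over YOLOv10n (3.8 points) and the largest is over YOLOv5n (6.3 points). Against the unmodified YOLOv11n baseline, the gain is 5.9 points.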

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, B.; Zhang, Z.; Zhang, X. YOLOv11-4ConvNeXtV2: Enhancing Persimmon Ripeness Detection Under Visual Challenges. AI 2025, 6, 284. https://doi.org/10.3390/ai6110284


Chicago/Turabian Style: Zhang, Bohan, Zhaoyuan Zhang, and Xiaodong Zhang. 2025. "YOLOv11-4ConvNeXtV2: Enhancing Persimmon Ripeness Detection Under Visual Challenges" AI 6, no. 11: 284. https://doi.org/10.3390/ai6110284
