From Black Box to Glass Box: A Practical Review of Explainable Artificial Intelligence (XAI)

Abstract

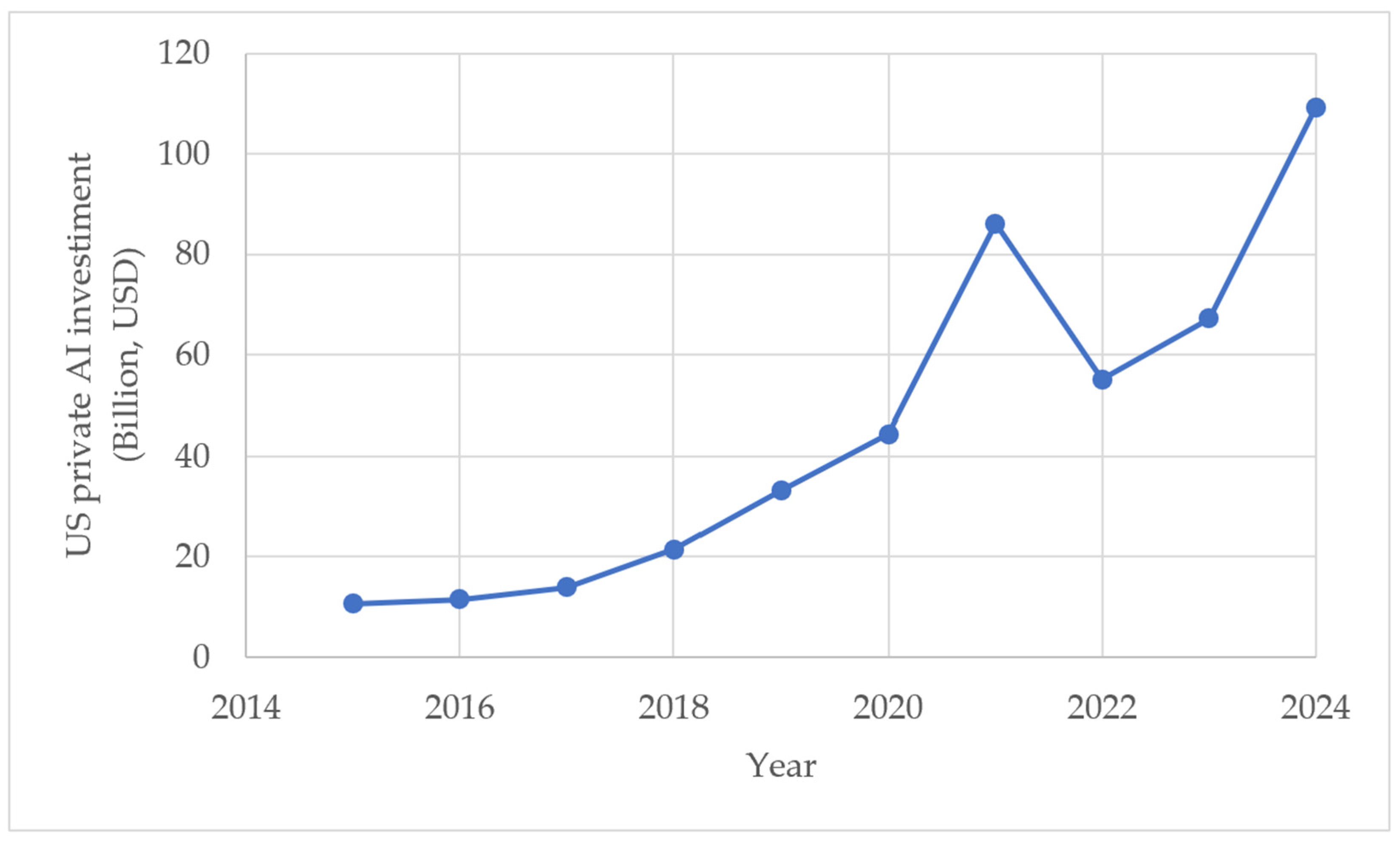

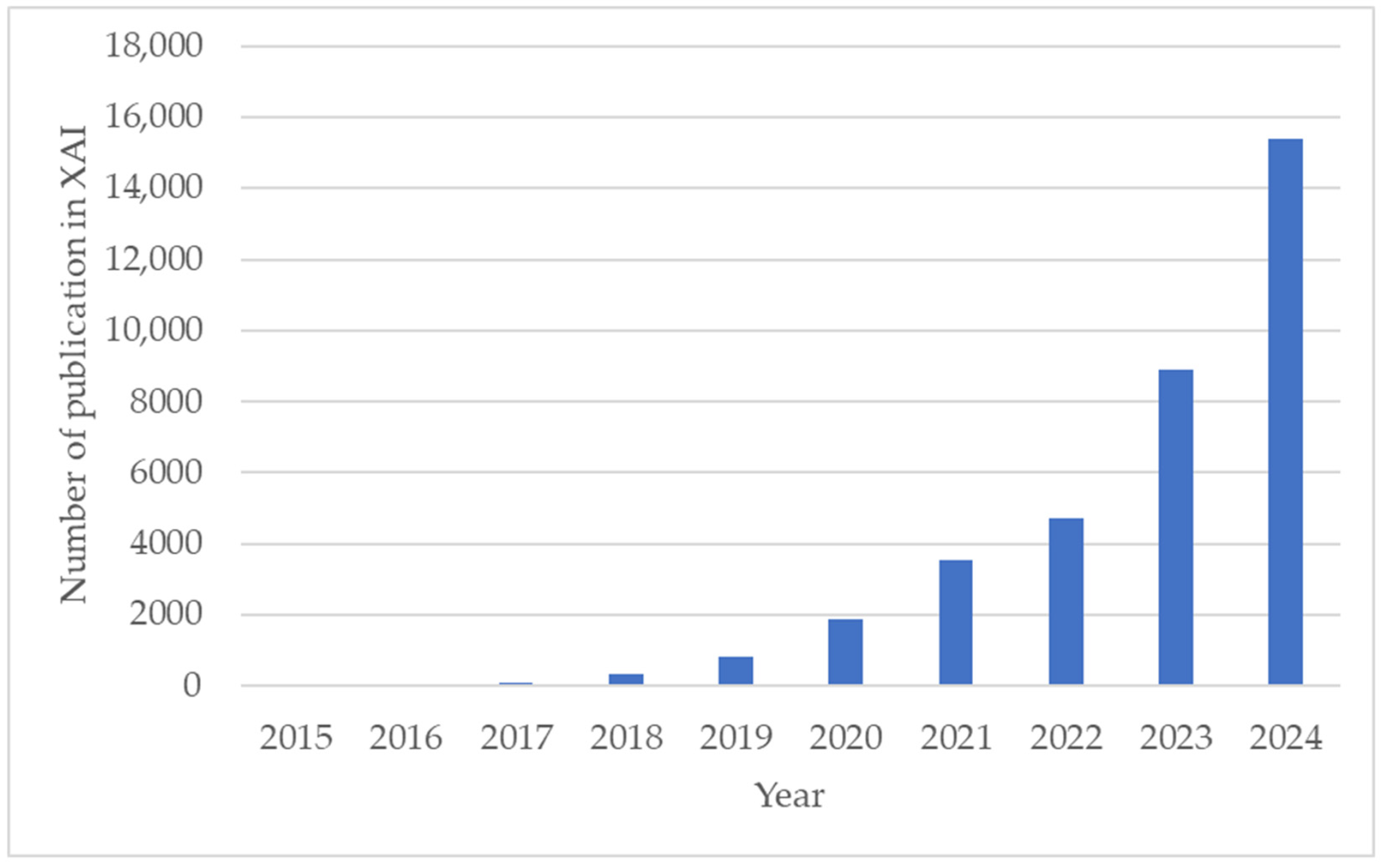

1. Introduction

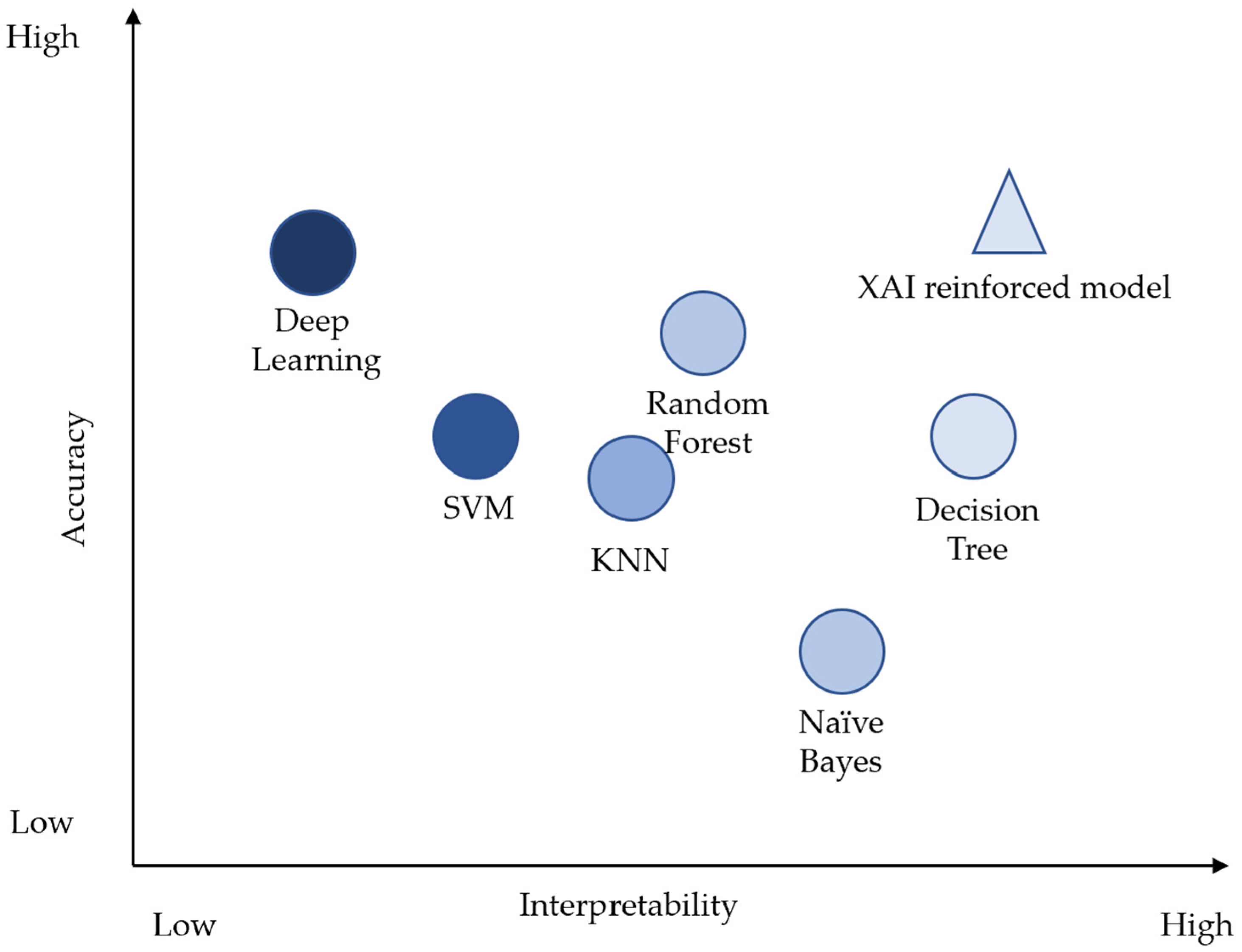

2. Key Concepts

2.1. Transparency

2.2. Interpretability

2.3. Marginal Transparency

2.4. Marginal Interpretability

3. Key Methods

3.1. Summary of Open-Source XAI Toolkits

3.2. Model-Agnostic Methods

3.2.1. Local Interpretable Model-Agnostic Explanations (LIME)

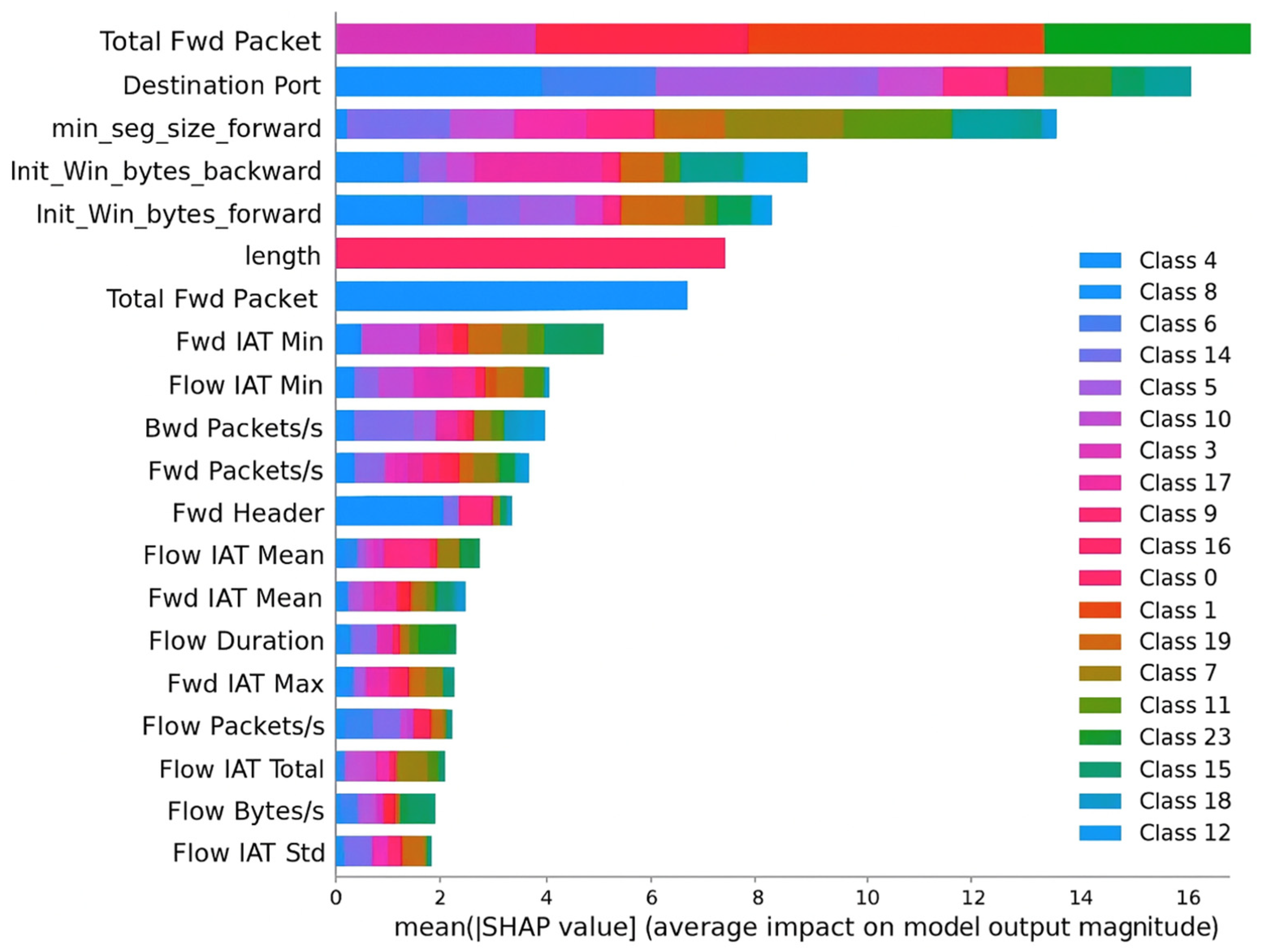
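
The core idea of LIME, as introduced by Ribeiro et al. [21], is to sample perturbations around a single instance, weight them by their proximity to it, and fit a simple linear surrogate whose coefficients serve as the local explanation. The following is a minimal pure-Python sketch of that idea. It is not the `lime` package and it simplifies that package's tabular sampling. It assumes continuous features perturbed with Gaussian noise (`sigma`), an exponential proximity kernel (`kernel_width`), and a black box `f` that returns one score per input. All of these names and defaults are illustrative assumptions.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_explain(f: Callable[[Sequence[float]], float], x: Sequence[float],
                 num_samples: int = 500, sigma: float = 1.0,
                 kernel_width: float = 1.0, seed: int = 0) -> Tuple[float, List[float]]:
    """Return (intercept, per-feature weights) of a proximity-weighted linear surrogate."""
    rng = random.Random(seed)
    rows, ys, ws = [], [], []
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, sigma) for xi in x]       # perturb around x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        ws.append(math.exp(-dist2 / kernel_width ** 2))    # closer samples count more
        rows.append([1.0] + z)                             # leading 1.0 = intercept term
        ys.append(f(z))                                    # query the black box
    p = len(x) + 1
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, ws)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, ws, ys)) for i in range(p)]
    beta = _solve(xtwx, xtwy)
    return beta[0], beta[1:]
```

If the black box happens to be linear, the surrogate recovers its coefficients. For a nonlinear black box such as `lambda z: z[0] ** 2` explained at `x = [1.0, 0.0]`, the first weight should land near the local slope of 2 and the second near 0, with sampling noise that shrinks as `num_samples` grows.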

3.2.2. Shapley Additive Explanations (SHAP)
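
SHAP, as proposed by Lundberg and Lee [22], attributes a prediction to input features using Shapley values from cooperative game theory. Each feature's attribution is its marginal contribution averaged over all coalitions S of the other features, with weight |S|! (n - |S| - 1)! / n!. The sketch below computes these values exactly by enumeration, which is feasible only for a handful of features. It is not the `shap` library, whose explainers approximate this quantity or exploit model structure instead. It assumes that a feature "absent" from a coalition takes its value from a single reference vector `baseline`, which is one of several possible value-function choices.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(f: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attributions of f(x) relative to f(baseline)."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition keep their value from x; the rest come from baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi
```

By the efficiency property, the attributions sum to `f(x) - f(baseline)`. For a linear model each attribution reduces to `w_i * (x_i - baseline_i)`, and for a pure interaction such as `z[0] * z[1]` the credit is split evenly between the two features. The number of model calls grows exponentially with n, which is why practical SHAP explainers rely on approximations.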

3.3. Model-Specific Methods

3.3.1. Decision Trees
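
Decision trees are commonly described as transparent because every root-to-leaf path can be read as an IF-THEN rule. To make that concrete, the sketch below grows a small CART-style tree and prints its paths as rules. It uses Gini impurity, which is an assumed split criterion since the text does not prescribe one. The feature names, the depth limit `max_depth`, and the toy credit-style data are hypothetical and chosen only for illustration.

```python
from collections import Counter
from typing import Dict, List, Optional, Sequence, Tuple

Node = Dict[str, object]


def gini(labels: Sequence[str]) -> float:
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X: List[List[float]], y: List[str]) -> Optional[Tuple[float, int, float]]:
    """Return (weighted impurity, feature index, threshold) of the best `<=` split."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build(X: List[List[float]], y: List[str], depth: int = 0, max_depth: int = 2) -> Node:
    """Recursively grow the tree; stop at max_depth or when a node is pure."""
    majority = Counter(y).most_common(1)[0][0]
    split = None if depth == max_depth or len(set(y)) == 1 else best_split(X, y)
    if split is None:
        return {"leaf": majority}
    _, f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return {"feature": f, "threshold": t,
            "left": build([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
            "right": build([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth)}


def extract_rules(node: Node, names: Sequence[str], conds: Tuple[str, ...] = ()) -> List[str]:
    """Turn every root-to-leaf path into a human-readable IF-THEN rule."""
    if "leaf" in node:
        premise = " AND ".join(conds) if conds else "TRUE"
        return [f"IF {premise} THEN {node['leaf']}"]
    name, t = names[node["feature"]], node["threshold"]
    return (extract_rules(node["left"], names, conds + (f"{name} <= {t}",))
            + extract_rules(node["right"], names, conds + (f"{name} > {t}",)))


def predict(node: Node, row: Sequence[float]) -> str:
    """Follow the thresholds from the root down to a leaf label."""
    while "leaf" not in node:
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node["leaf"]


# Hypothetical toy data: [income in thousands, debt-to-income ratio] -> decision.
X = [[20, 0.6], [25, 0.5], [40, 0.2], [60, 0.1], [55, 0.7], [30, 0.3]]
y = ["deny", "deny", "approve", "approve", "deny", "approve"]
tree = build(X, y)
for rule in extract_rules(tree, ["income_k", "debt_ratio"]):
    print(rule)
```

On this toy data a single split on `debt_ratio` separates the classes perfectly, so the printed explanation is just two rules. Because `predict` follows exactly the same thresholds, the rules are a complete and faithful description of the model rather than an approximation of it.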

3.3.2. Interpretable Neural Networks

3.3.3. Explainability in Large Language Models (LLMs)

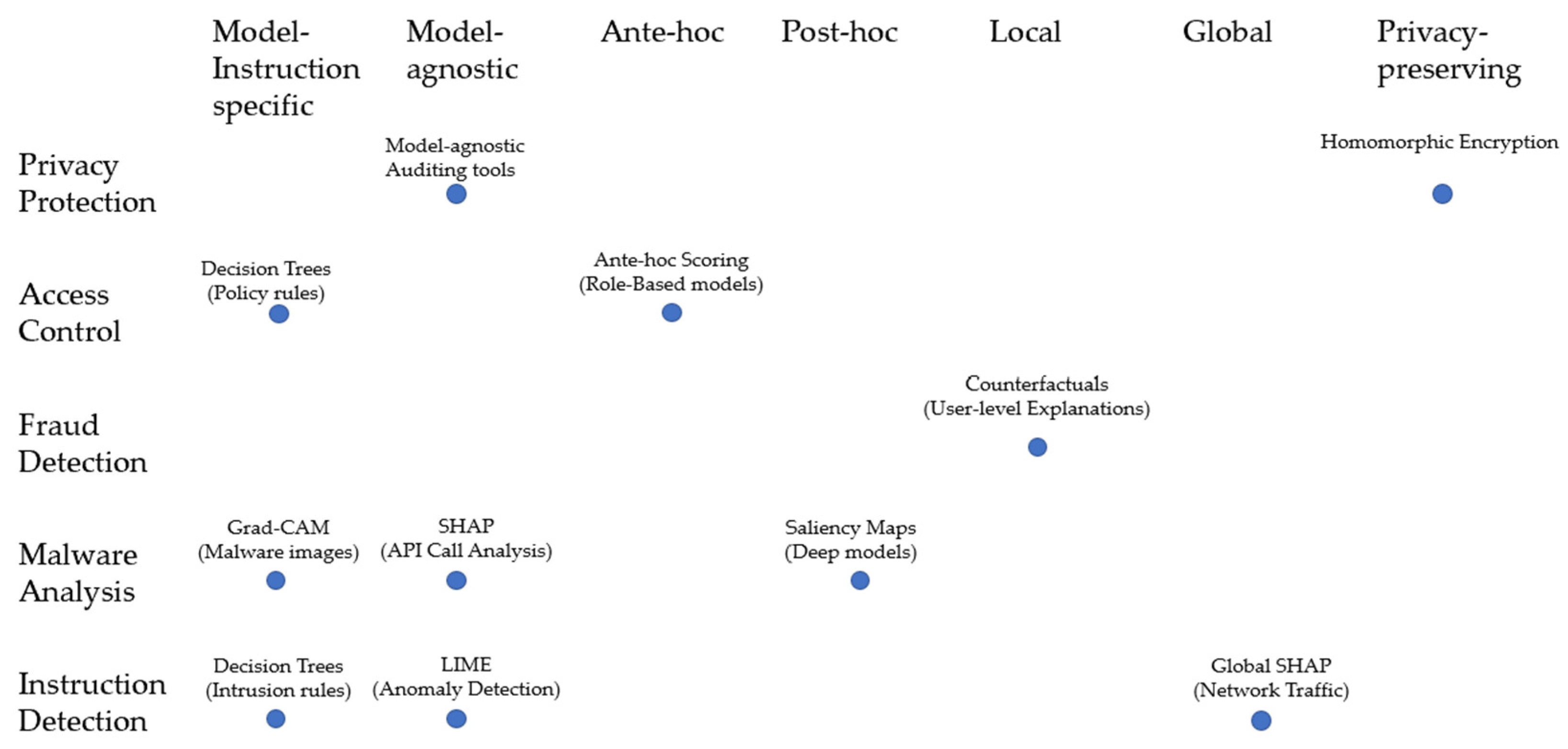

4. Taxonomy of Explainable AI

4.1. Ante-Hoc vs. Post-Hoc Approaches

4.2. Local vs. Global Explanations

4.3. Privacy-Preserving Explainability

4.4. Visual Taxonomy of XAI Applications

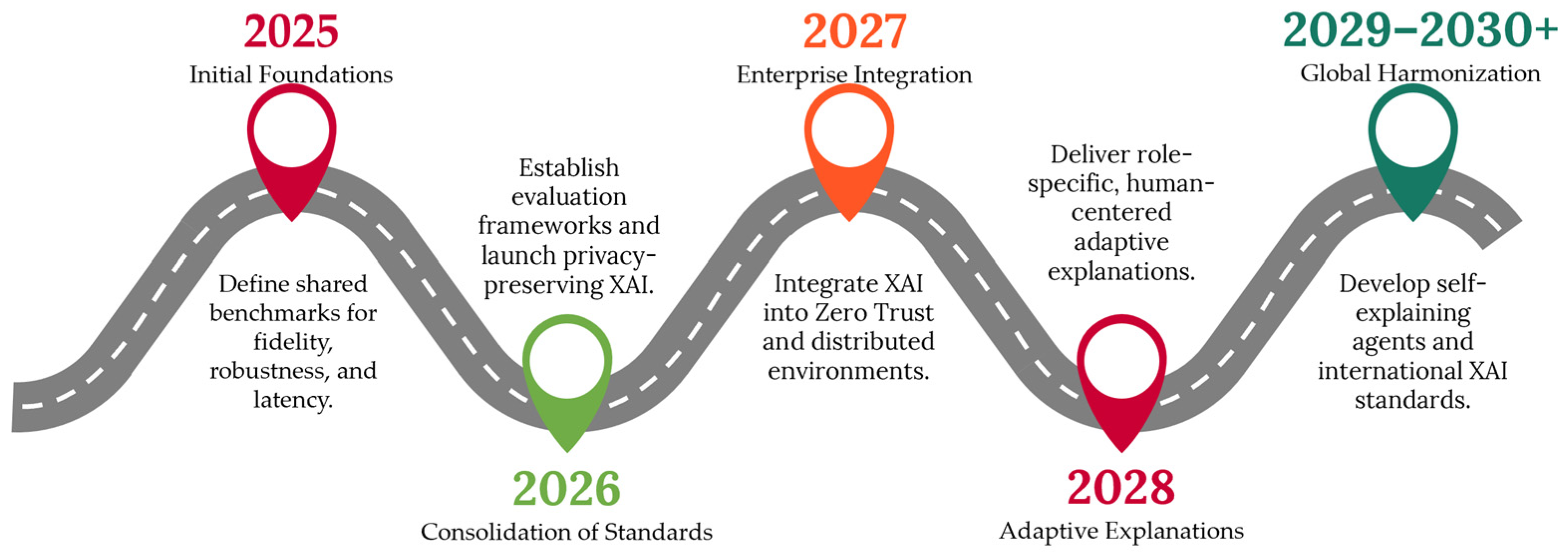

5. Research Roadmap for 2025–2030+

5.1. Short-Term (2025–2026): Establishing Foundations

5.2. Mid-Term (2027–2028): Integration and Expansion

5.3. Long-Term (2029–2030+): Towards Global Explainable Security

5.4. Synthesis

6. Applications of Explainable AI in Data Privacy and Security

6.1. Enhancing Transparency in Security Decision-Making

6.2. Privacy-Preserving Explanations

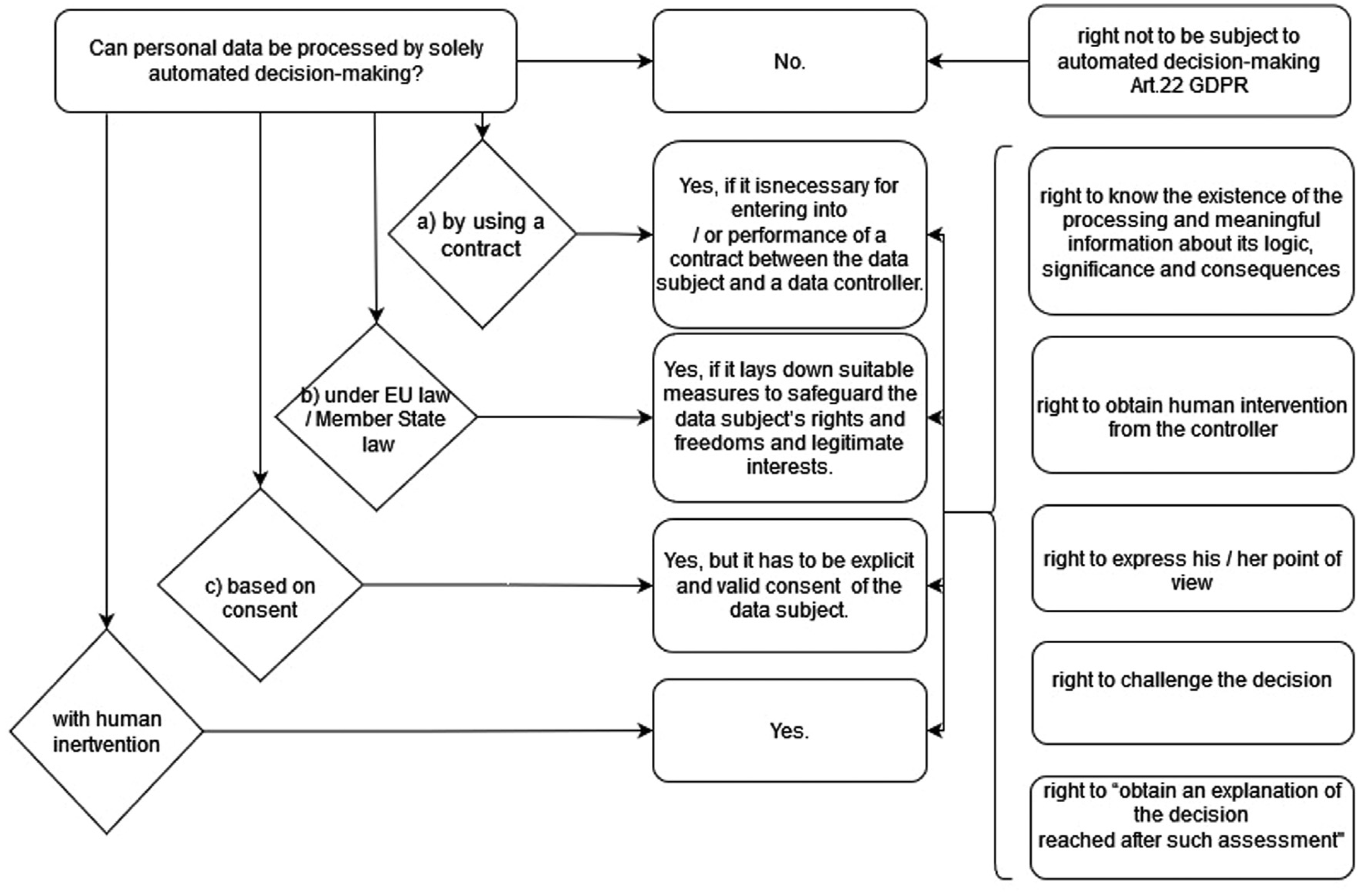

6.3. Trustworthy AI for Compliance and Regulation

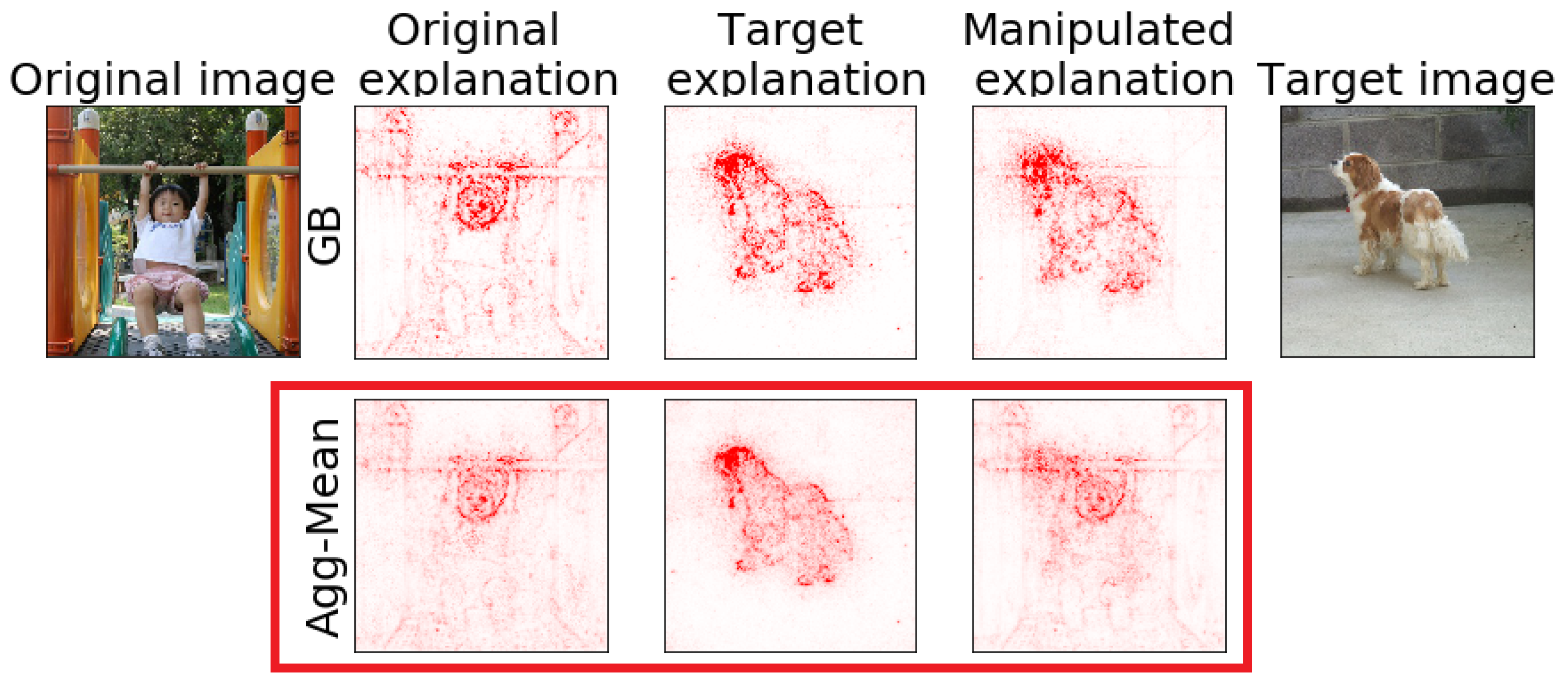

6.4. Securing AI Models Against Adversarial Threats

6.5. Human-in-the-Loop Security Systems

6.6. Other Application Examples

7. Limitations, Synthesis and Conclusion

7.1. Limitations

7.2. Synthesis and Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Selmy, H.A.; Mohamed, H.K.; Medhat, W. Big data analytics deep learning techniques and applications: A survey. Inf. Syst. 2024, 120, 102318.

2. Bhattacherjee, A.; Badhan, A.K. Convergence of data analytics, big data, and machine learning: Applications, challenges, and future direction. In Data Analytics and Machine Learning: Navigating the Big Data Landscape; Springer: Singapore, 2024; pp. 317–334.

3. Wang, Y.; He, Y.; Wang, J.; Li, K.; Sun, L.; Yin, J.; Zhang, M.; Wang, X. Enhancing intent understanding for ambiguous prompt: A human-machine co-adaption strategy. Neurocomputing 2025, 646, 130415.

4. He, Y.; Li, S.; Wang, J.; Li, K.; Song, X.; Yuan, X.; Li, K.; Lu, K.; Huo, M.; Tang, J.; et al. Enhancing low-cost video editing with lightweight adaptors and temporal-aware inversion. arXiv 2025, arXiv:2501.04606.

5. Xin, Y.; Luo, S.; Liu, X.; Zhou, H.; Cheng, X.; Lee, C.E.; Du, J.; Wang, H.; Chen, M.; Liu, T.; et al. V-PETL Bench: A unified visual parameter-efficient transfer learning benchmark. Adv. Neural Inf. Process. Syst. 2024, 37, 80522–80535.

6. Zhou, Y.; Fan, Z.; Cheng, D.; Yang, S.; Chen, Z.; Cui, C.; Wang, X.; Li, Y.; Zhang, L.; Yao, H. Calibrated self-rewarding vision language models. Adv. Neural Inf. Process. Syst. 2024, 37, 51503–51531.

7. Fan, Y.; Hu, Z.; Fu, L.; Cheng, Y.; Wang, L.; Wang, Y. Research on Optimizing Real-Time Data Processing in High-Frequency Trading Algorithms using Machine Learning. In Proceedings of the 2024 6th International Conference on Intelligent Control, Measurement and Signal Processing (ICMSP), Xi’an, China, 29 November–1 December 2024; pp. 774–777.

8. Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting black-box models: A review on explainable artificial intelligence. Cogn. Comput. 2024, 16, 45–74.

9. Doshi-Velez, F.; Kim, B. Towards a rigorous science of interpretable machine learning. arXiv 2017, arXiv:1702.08608.

10. Our World in Data. Annual Private Investment in Artificial Intelligence. Available online: https://ourworldindata.org/grapher/private-investment-in-artificial-intelligence (accessed on 15 September 2025).

11. Van Der Waa, J.; Nieuwburg, E.; Cremers, A.; Neerincx, M. Evaluating XAI: A comparison of rule-based and example-based explanations. Artif. Intell. 2021, 291, 103404.

12. Lipton, Z.C. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 16, 31–57.

13. Gunning, D.; Aha, D. DARPA’s explainable artificial intelligence (XAI) program. AI Mag. 2019, 40, 44–58.

14. Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

15. Gilpin, L.H.; Bau, D.; Yuan, B.Z.; Bajwa, A.; Specter, M.; Kagal, L. Explaining explanations: An overview of interpretability of machine learning. In Proceedings of the 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), Turin, Italy, 1–3 October 2018; pp. 80–89.

16. Carvalho, D.V.; Pereira, E.M.; Cardoso, J.S. Machine learning interpretability: A survey on methods and metrics. Electronics 2019, 8, 832.

17. Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A survey of methods for explaining black box models. ACM Comput. Surv. (CSUR) 2018, 51, 1–42.

18. Kadir, M.A.; Mosavi, A.; Sonntag, D. Evaluation metrics for XAI: A review, taxonomy, and practical applications. In Proceedings of the 2023 IEEE 27th International Conference on Intelligent Engineering Systems (INES), Nairobi, Kenya, 26–28 July 2023; pp. 000111–000124.

19. Page-Hoongrajok, A.; Mamunuru, S.M. Approaches to intermediate microeconomics. East. Econ. J. 2023, 49, 368–390.

20. Varian, H.R. Intermediate Microeconomics: A Modern Approach, 9th ed.; Elsevier: New York, NY, USA, 2014; p. 356. ISBN 978-0393934243.

21. Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

22. Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30.

23. Arya, V.; Bellamy, R.K.; Chen, P.-Y.; Dhurandhar, A.; Hind, M.; Hoffman, S.C.; Houde, S.; Liao, Q.V.; Luss, R.; Mojsilović, A.; et al. AI Explainability 360 toolkit. In Proceedings of the 3rd ACM India Joint International Conference on Data Science & Management of Data (8th ACM IKDD CODS & 26th COMAD), Bangalore, India, 2–4 January 2021; pp. 376–379.

24. Klaise, J.; Van Looveren, A.; Vacanti, G.; Coca, A. Alibi: Algorithms for Monitoring and Explaining Machine Learning Models. 2020. Available online: https://github.com/SeldonIO/alibi (accessed on 15 September 2025).

25. Dion, K. Designing an Interactive Interface for FACET: Personalized Explanations in XAI; Worcester Polytechnic Institute: Worcester, MA, USA, 2024.

26. Yang, W.; Le, H.; Laud, T.; Savarese, S.; Hoi, S.C. OmniXAI: A library for explainable AI. arXiv 2022, arXiv:2206.01612.

27. Robeer, M.; Bron, M.; Herrewijnen, E.; Hoeseni, R.; Bex, F. The Explabox: Model-Agnostic Machine Learning Transparency & Analysis. arXiv 2024, arXiv:2411.15257.

28. Fel, T.; Hervier, L.; Vigouroux, D.; Poche, A.; Plakoo, J.; Cadene, R.; Chalvidal, M.; Colin, J.; Boissin, T.; Bethune, L.; et al. Xplique: A deep learning explainability toolbox. arXiv 2022, arXiv:2206.04394.

29. Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man Cybern. 1991, 21, 660–674.

30. Song, Y.-Y.; Lu, Y. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130–135.

31. Caruana, R.; Lou, Y.; Gehrke, J.; Koch, P.; Sturm, M.; Elhadad, N. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day readmission. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, NSW, Australia, 10–13 August 2015; pp. 1721–1730.

32. Shaik, T.; Tao, X.; Xie, H.; Li, L.; Higgins, N.; Velásquez, J.D. Towards Transparent Deep Learning in Medicine: Feature Contribution and Attention Mechanism-Based Explainability. Hum.-Centric Intell. Syst. 2025, 5, 209–229.

33. Zhang, Q.; Wu, Y.N.; Zhu, S.-C. Interpretable convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8827–8836.

34. Singh, C.; Inala, J.P.; Galley, M.; Caruana, R.; Gao, J. Rethinking interpretability in the era of large language models. arXiv 2024, arXiv:2402.01761.

35. Rai, D.; Zhou, Y.; Feng, S.; Saparov, A.; Yao, Z. A practical review of mechanistic interpretability for transformer-based language models. arXiv 2024, arXiv:2407.02646.

36. Almenwer, S.; El-Sayed, H.; Sarker, M.K. Classification Method in Vision Transformer with Explainability in Medical Images for Lung Neoplasm Detection. In Proceedings of the International Conference on Medical Imaging and Computer-Aided Diagnosis, Manchester, UK, 19–21 November 2024; pp. 85–99.

37. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

38. Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and explainability of artificial intelligence in medicine. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, e1312.

39. Molnar, C. Interpretable Machine Learning; Lulu.com: Morrisville, NC, USA, 2020.

40. Puiutta, E.; Veith, E.M. Explainable reinforcement learning: A survey. In International Cross-Domain Conference for Machine Learning and Knowledge Extraction; Springer: Cham, Switzerland, 2020; pp. 77–95.

41. Bhatt, U.; Xiang, A.; Sharma, S.; Weller, A.; Taly, A.; Jia, Y.; Ghosh, J.; Puri, R.; Moura, J.M.; Eckersley, P. Explainable machine learning in deployment. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 648–657.

42. Truex, S.; Baracaldo, N.; Anwar, A.; Steinke, T.; Ludwig, H.; Zhang, R.; Zhou, Y. A hybrid approach to privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 1–11.

43. Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and open problems in federated learning. Found. Trends® Mach. Learn. 2021, 14, 1–210.

44. Xin, Y.; Luo, S.; Jin, P.; Du, Y.; Wang, C. Self-training with label-feature-consistency for domain adaptation. In International Conference on Database Systems for Advanced Applications; Springer: Cham, Switzerland, 2023; pp. 84–99.

45. Wang, J.; He, Y.; Li, K.; Li, S.; Zhao, L.; Yin, J.; Zhang, M.; Shi, T.; Wang, X. MDANet: A multi-stage domain adaptation framework for generalizable low-light image enhancement. Neurocomputing 2025, 627, 129572.

46. Xin, Y.; Du, J.; Wang, Q.; Lin, Z.; Yan, K. VMT-Adapter: Parameter-efficient transfer learning for multi-task dense scene understanding. Proc. AAAI Conf. Artif. Intell. 2024, 38, 16085–16093.

47. Yi, M.; Li, A.; Xin, Y.; Li, Z. Towards understanding the working mechanism of text-to-image diffusion model. Adv. Neural Inf. Process. Syst. 2024, 37, 55342–55369.

48. Xin, Y.; Du, J.; Wang, Q.; Yan, K.; Ding, S. MMAP: Multi-modal alignment prompt for cross-domain multi-task learning. Proc. AAAI Conf. Artif. Intell. 2024, 38, 16076–16084.

49. Wang, R.; He, Y.; Sun, T.; Li, X.; Shi, T. UniTMGE: Uniform Text-Motion Generation and Editing Model via Diffusion. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 6104–6114.

50. Stafford, V. Zero trust architecture. NIST Spec. Publ. 2020, 800, 59p.

51. Khan, M.J. Zero trust architecture: Redefining network security paradigms in the digital age. World J. Adv. Res. Rev. 2023, 19, 105–116.

52. Allendevaux, S. How US State Data Protection Statutes Compare in Scope to Safeguard Information and Protect Privacy Using ISO/IEC 27001:2013 and ISO/IEC 27701:2019 Security and Privacy Management System Requirements as an Adequacy Baseline. Ph.D. Thesis, Northeastern University, Boston, MA, USA, 2021.

53. Singh, K.; Kashyap, A.; Cherukuri, A.K. Interpretable Anomaly Detection in Encrypted Traffic Using SHAP with Machine Learning Models. arXiv 2025, arXiv:2505.16261.

54. Lukács, A.; Váradi, S. GDPR-compliant AI-based automated decision-making in the world of work. Comput. Law Secur. Rev. 2023, 50, 105848.

55. Baniecki, H.; Biecek, P. Adversarial attacks and defenses in explainable artificial intelligence: A survey. Inf. Fusion 2024, 107, 102303.

56. Amershi, S.; Weld, D.; Vorvoreanu, M.; Fourney, A.; Nushi, B.; Collisson, P.; Suh, J.; Iqbal, S.; Bennett, P.N.; Inkpen, K.; et al. Guidelines for human-AI interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–13.

57. Patil, S.; Varadarajan, V.; Mazhar, S.M.; Sahibzada, A.; Ahmed, N.; Sinha, O.; Kumar, S.; Shaw, K.; Kotecha, K. Explainable artificial intelligence for intrusion detection system. Electronics 2022, 11, 3079.

58. Galli, A.; La Gatta, V.; Moscato, V.; Postiglione, M.; Sperlì, G. Explainability in AI-based behavioral malware detection systems. Comput. Secur. 2024, 141, 103842.

59. Gupta, S.; Singh, B. An intelligent multi-layer framework with SHAP integration for botnet detection and classification. Comput. Secur. 2024, 140, 103783.

60. Nazim, S.; Alam, M.M.; Rizvi, S.S.; Mustapha, J.C.; Hussain, S.S.; Suud, M.M. Advancing malware imagery classification with explainable deep learning: A state-of-the-art approach using SHAP, LIME and Grad-CAM. PLoS ONE 2025, 20, e0318542.

| Evaluation Item | Description |
|---|---|
| Research Question | How explainable AI methods (model-agnostic and model-specific) contribute to trustworthy and transparent AI systems across domains |
| Time Period | 2015–2024 |
| Databases Searched | Scopus, IEEE Xplore, Web of Science |
| Inclusion Criteria | Peer-reviewed English-language journal and conference papers on XAI methods or applications |
| Performance Measures | Accuracy, F1-score, fidelity, latency, interpretability |
| Qualitative Evaluation | Thematic analysis of advantages, limitations, and emerging research gaps |

| Organization/Project | Tool | Highlights | Reference |
|---|---|---|---|
| IBM | AIX360 (v0.3.0) | Broad library of interpretability and explanation methods | [23] |
| SeldonIO | Alibi (v0.9.6) | Global/local explainers including counterfactuals and confidence metrics | [24] |
| BCG X | Facet (v2.0) | Geometric inference of feature interactions and dependencies | [25] |
| OmniXAI | OmniXAI (v1.2.2) | Multi-data, multi-model XAI toolkit with GUI | [26] |
| Explabox | Explabox (v0.7.1) | Auditing pipeline for explainability, fairness, robustness | [27] |
| Xplique | Xplique (v1.3.3) | Toolkit for deep-learning model introspection | [28] |

| Model Type | Typical Domain | Example Study | Accuracy (%) | Interpretability Level¹ |
|---|---|---|---|---|
| Decision Tree | Credit Scoring/Risk Analysis | [31] | 85–90 | ★★★★★ |
| Random Forest | Drug Discovery/Genomics | [16] | 88–93 | ★★★★☆ |
| CNN (Grad-CAM) | Medical Imaging | [36] | 90–95 | ★★★☆☆ |
| Attention-based NN | NLP/Healthcare | [37] | 92–96 | ★★★☆☆ |
| Interpretable NN (Glass-box) | Finance/Tabular Data | [33] | 87–94 | ★★★★☆ |

| Study | XAI Method | Application Domain | Main Performance Metric(s) | Interpretability Metric |
|---|---|---|---|---|
| [57] | LIME on DT/RF/SVM | Intrusion detection | Demonstrated high detection accuracy and balanced precision–recall across multiple classifiers | Qualitative per-class LIME analyses |
| [58] | SHAP, LIME, LRP, Grad-CAM | Behavioral malware detection | Reported consistently high detection rates and AUC values across evaluated detectors | Comparative usefulness of four XAI techniques |
| [59] | SHAP integrated in a multilayer pipeline | Botnet detection and classification | Achieved competitive F1-scores and low false-positive rates on benchmark datasets | Global and local SHAP attributions |
| [60] | SHAP, LIME, Grad-CAM | Malware classification | Outperformed conventional CNN baselines in accuracy and robustness | Visual and feature-level explanations |
| [55] | Robust/adversarial XAI | Adversarial settings for XAI | Synthesizes empirical studies on model robustness and explanation fidelity | Stability and robustness of explanations |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Huang, D.; Yao, J.; Dong, J.; Song, L.; Wang, H.; Yao, C.; Chu, W. From Black Box to Glass Box: A Practical Review of Explainable Artificial Intelligence (XAI). AI 2025, 6, 285. https://doi.org/10.3390/ai6110285