Abstract

The increasing use of artificial intelligence (AI) in athlete health monitoring and injury prediction presents both technological opportunities and complex ethical challenges. This narrative review critically examines 24 empirical and conceptual studies focused on AI-driven injury forecasting systems across diverse sports disciplines, including professional, collegiate, youth, and Paralympic contexts. Applying an IMRAD framework, the analysis identifies five dominant ethical concerns: privacy and data protection, algorithmic fairness, informed consent, athlete autonomy, and long-term data governance. While studies commonly report the effectiveness of AI models (such as those employing decision trees, neural networks, and explainability tools like SHAP and HiPrCAM), few offer robust ethical safeguards or athlete-centered governance structures. Power asymmetries persist between athletes and institutions, with limited recognition of data ownership, transparency, and the right to contest predictive outputs. The findings highlight that ethical risks vary by sport type and competitive level, underscoring the need for sport-specific frameworks. Recommendations include establishing enforceable data rights, participatory oversight mechanisms, and regulatory protections to ensure that AI systems align with principles of fairness, transparency, and athlete agency. Without such frameworks, the integration of AI in sports medicine risks reinforcing structural inequalities and undermining the autonomy of those it intends to support.

1. Introduction

Artificial intelligence (AI) has transformed modern sports science, fundamentally reshaping how athlete performance, injury risk, and health outcomes are monitored and managed. AI systems are now integrated into elite and amateur sports environments, powering applications that range from injury risk forecasting and tactical analysis to load optimization and medical diagnostics. Their capabilities are enabled by access to large-scale physiological, biomechanical, psychological, and contextual data collected through wearable sensors, imaging, and real-time location tracking [1]. With these systems now increasingly embedded into daily training and competition routines, the promise of AI lies in its potential to enhance performance, improve decision-making, and reduce injury-related absences.

However, these technological advancements have introduced complex ethical dilemmas. Integrating AI into athlete health monitoring raises urgent concerns about data privacy, algorithmic bias, informed consent, and the erosion of player autonomy [2,3]. Many AI systems deployed in sports medicine rely on opaque machine learning algorithms trained on datasets that may reflect underlying social, physiological, or gender-based disparities. These biases can lead to unfair decision-making, unequal treatment, or an over-reliance on predictive outputs without human oversight [4]. Moreover, biometric surveillance technologies—such as smart mouthguards, real-time biosensors, and wearable ECG monitors—have blurred the lines between voluntary participation and professional compliance, especially in high-performance or contract-sensitive environments [5].

Despite these challenges, there is currently no unified ethical framework governing the use of AI in the context of athlete health. Existing legal protections, such as HIPAA, FERPA, or GDPR, provide only partial safeguards and are inconsistently applied across national jurisdictions and sports disciplines [6]. Furthermore, collective bargaining agreements (CBAs) in professional leagues often lack explicit provisions for data ownership, long-term health impacts, or the right to opt out of AI-driven monitoring [2]. These regulatory gaps are particularly concerning in high-risk sports, such as contact and combat disciplines, youth athletics, and Paralympic contexts, where the ethical stakes are elevated [7,8].

Recent studies have also highlighted a methodological limitation in the current literature: many investigations focus on algorithmic performance (e.g., accuracy or AUC) without incorporating broader ethical evaluations [9]. Even when ethical concerns are mentioned, they are often addressed only superficially, lacking engagement with practical implications for athlete agency, medical decision-making, or data governance [10,11]. Given the growing adoption of AI in sports medicine and the significant ethical, legal, and social implications accompanying it, a comprehensive interdisciplinary analysis is urgently needed. Such an approach must consider the technical efficacy of AI systems and the ethical principles that govern their development, deployment, and evaluation in real-world athletic environments.

Objectives of the Study

This review aims to provide an evidence-informed analysis of the ethical considerations involved in applying AI technologies for athlete health monitoring and injury prediction across various sports disciplines. Specifically, the study seeks to:

- Map the ethical debate concerning AI-driven health monitoring tools in professional and amateur sports.

- Identify and categorize ethical concerns about AI usage, including privacy, autonomy, informed consent, and bias.

- Evaluate the bias mitigation strategies proposed in the literature and assess their relevance to sports-specific contexts.

- Analyze regulatory frameworks and implementation challenges, especially in high-stakes domains such as youth, contact, or Paralympic sports.

- Propose actionable recommendations for AI’s ethical development and application in sports medicine.

2. Methods

2.1. Research Design and Scope

This study employs a narrative review guided by PRISMA methodology, drawing on empirical and conceptual academic sources to examine the ethical dimensions of artificial intelligence (AI) applications in athlete health data collection and injury prediction. The analysis focuses on AI systems implemented or proposed within professional and amateur sports settings, with an emphasis on machine learning-based monitoring tools that process biometric, physiological, and performance-related data. The goal is to synthesize cross-disciplinary insights into bias mitigation, informed consent, privacy, and autonomy across diverse sports contexts.

The review includes:

- Empirical studies that report on bias detection or mitigation strategies in AI health applications.

- Conceptual or theoretical frameworks that explore ethics in sports AI.

- Systematic reviews or meta-analyses in sports science, AI ethics, or medical informatics.

- Case studies focusing on professional, youth, or high-risk sports.

2.2. Search Strategy and Data Sources

To ensure a comprehensive and transparent literature search, we developed a structured query using relevant terms related to artificial intelligence, ethical considerations, bias mitigation, and athlete health data. The search was conducted in January 2025, guided by PRISMA principles of transparency in reporting. Searches were carried out across the major academic databases (PubMed, Web of Science, Scopus, SPORTDiscus, and Semantic Scholar) to ensure coverage of both biomedical and sport science domains. Given the rapidly evolving nature of AI research, additional screening of preprint servers (e.g., medRxiv, SSRN) was performed to capture emerging contributions. The following Boolean search string was used:

(“artificial intelligence” OR “AI” OR “machine learning”) AND (“injury prediction” OR “health monitoring”) AND (“sport” OR “athlete”) AND (“ethic” OR “bias” OR “privacy” OR “consent” OR “autonomy” OR “data governance”)

The search was limited to:

- Peer-reviewed studies (empirical, theoretical, or reviews)

- English-language publications

- Studies with accessible abstracts or full texts

- Publications involving amateur or professional athletes in any sports discipline

No restrictions were placed on publication year, sport type, or level of competition. Approximately 500 records were initially retrieved. Trained reviewers screened titles and abstracts against the inclusion criteria (outlined above), after which 24 studies were retained for full-text analysis. Additional full texts were obtained through open-access sources or institutional subscriptions when available.
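The Boolean search string in this section combines four concept groups with AND and the alternatives inside each group with OR. As a rough illustration only, the sketch below applies that logic to a record's title or abstract. The function name `matches_query`, the regular-expression patterns, and the decision to match word stems (for example, "ethic" also matching "ethical") are assumptions made for this example. They do not describe how the databases themselves evaluated the query.

```python
import re

# One inner list per AND-group; alternatives within a group are OR-ed.
# Stem matching via \w* is an assumption of this sketch, not a database rule.
QUERY_GROUPS = [
    [r"\bartificial intelligence\b", r"\bAI\b", r"\bmachine learning\b"],
    [r"\binjury prediction\b", r"\bhealth monitoring\b"],
    [r"\bsport\w*", r"\bathlete\w*"],
    [r"\bethic\w*", r"\bbias\w*", r"\bprivacy\b", r"\bconsent\b",
     r"\bautonomy\b", r"\bdata governance\b"],
]

_COMPILED = [
    [re.compile(pattern, re.IGNORECASE) for pattern in group]
    for group in QUERY_GROUPS
]


def matches_query(text: str) -> bool:
    """Return True if every concept group has at least one matching term."""
    return all(
        any(pattern.search(text) for pattern in group)
        for group in _COMPILED
    )


if __name__ == "__main__":
    # Hypothetical titles, used only to exercise the function.
    examples = [
        "Machine learning for injury prediction in elite athletes: privacy and consent",
        "Training load and injury prediction in sport: an ethical view",
    ]
    for title in examples:
        print(matches_query(title), "-", title)
```

The word boundaries around "AI" matter: without them, the letters "ai" inside words such as "training" would satisfy the first group and inflate the number of retrieved records.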

2.3. Inclusion and Exclusion Criteria

Studies were included if they met the following criteria (Table 1):

Table 1.

Analytical categories for included studies.

- AI Focus: Research must use artificial intelligence, machine learning, or data-driven analytics in health monitoring, injury prediction, or medical decision support in sports contexts.

- Bias or Ethics Component: The study must address algorithmic bias or ethical concerns, including privacy, informed consent, data ownership, transparency, or athlete autonomy.

- Athletic Population: Participants must be amateur or professional athletes in any sports discipline (e.g., football, athletics, combat sports).

- Study Type: Accepted formats included empirical studies (cross-sectional, experimental, or cohort), theoretical essays, narrative reviews, or ethical analyses with transparent methodology.

- Language and Accessibility: Only English-language studies with full-text access were considered for detailed analysis.

Studies were excluded if:

- They focused solely on performance analytics without health-related AI applications.

- Ethical concerns were not explicitly discussed or derivable from the methodology.

- They applied AI outside sports contexts (e.g., in general clinical populations).

- They were editorials or lacked a structured methods section.

2.4. Consistency with PRISMA 2020

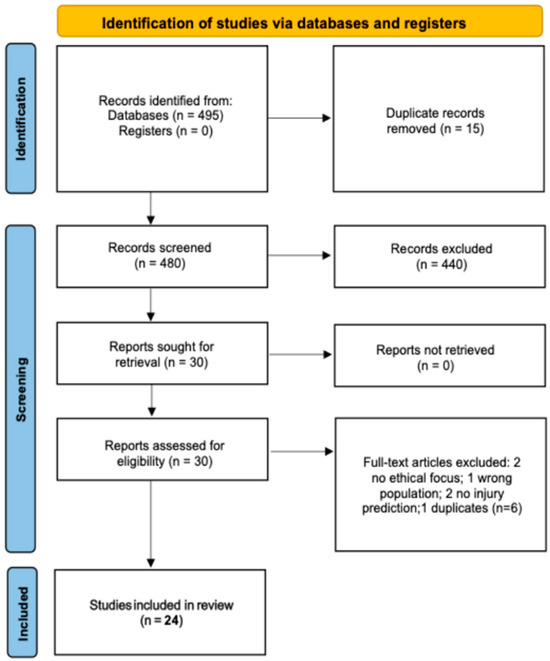

To ensure methodological transparency, the selection procedure followed the PRISMA 2020 framework. The process involved three consecutive stages:

- (1) Identification: 495 records were retrieved from five academic databases and two preprint servers.

- (2) Screening: titles and abstracts were evaluated against predefined inclusion criteria.

- (3) Eligibility: full-text articles were assessed in detail based on ethical relevance, methodological transparency, and athlete population.

Studies were excluded during abstract screening if they lacked relevance to AI in sports health, failed to mention ethical dimensions, or applied AI outside the context of sport. At the full-text stage, common reasons for exclusion included missing methodological sections, absence of bias-related discussion, or lack of access to full content. The selection flow is documented in Figure 1, which summarizes the number of records excluded at each step.

Figure 1.

PRISMA 2020 flow diagram of study selection.

2.5. Data Extraction Process

To guide the synthesis, we organized each included study around a set of recurring thematic categories. Rather than coding exhaustively as in a systematic review, these categories served as analytical lenses to ensure consistency in how key dimensions were discussed across diverse study types. Table 1 outlines the categories used.

Two coders independently reviewed each article. Disagreements were resolved via consensus, and selected quotes were extracted for qualitative synthesis, particularly in studies where bias or ethical trade-offs were discussed in narrative form [2,9].

2.6. Analytical Framework

After extraction, data were analyzed using a thematic synthesis approach, integrating inductive and deductive coding strategies. Ethical themes were first mapped based on predefined categories informed by prior AI ethics frameworks (e.g., privacy, fairness, explainability, consent). Emerging themes—such as “data commercialization pressure” or “athlete passivity in decision-making chains”—were also incorporated.

2.7. Quality Assessment

To enhance the rigor of this narrative review, the quality of included studies was appraised using established critical appraisal tools. Empirical studies were evaluated with the Critical Appraisal Skills Programme (CASP) checklists appropriate to their design (cross-sectional, cohort, or qualitative). Mixed-methods papers were additionally assessed with the Mixed Methods Appraisal Tool (MMAT, 2018 version). Conceptual and ethical analyses, which lack standardized appraisal instruments, were evaluated based on transparency of argumentation, explicit engagement with established AI ethics frameworks, and clarity of methodological positioning. This structured appraisal did not serve as a basis for exclusion; instead, it informed the narrative synthesis by giving greater weight to higher-quality and more transparent studies in the interpretation of themes.

2.8. Rationale for Narrative Review and Study Diversity

The present review adopted a narrative format to accommodate the high heterogeneity of the included literature. The studies encompassed a wide spectrum of empirical, conceptual, and methodological contributions, ranging from machine learning model validations to normative discussions on data ethics, athlete autonomy, and governance frameworks. This diversity in study type, focus, and methodological rigor made a purely systematic synthesis impractical. As a result, the narrative approach allowed for a more flexible integration of technical and ethical insights while preserving thematic coherence. The structured appraisal tools helped organize the material and ensure transparency but did not serve as grounds for exclusion. Instead, studies were weighted in the synthesis according to their methodological transparency and conceptual depth.

3. Results

This section presents the key findings from the 24 included studies, encompassing technical evaluations of AI-driven injury prediction models and in-depth ethical considerations specific to various sports contexts. Through a thematic analysis of empirical data and conceptual discussions, the results illuminate methodological heterogeneity, uneven data quality, and varied definitions of injury risk, converging with broader concerns regarding privacy, fairness, and athlete autonomy. These findings underscore the complexity of integrating AI into sports medicine, highlighting both the potential benefits for injury prevention and the pressing need for robust governance frameworks.

To contextualize these findings, Table 2 provides an overview of the 24 included studies, combining methodological profiles with a structured quality appraisal. This summary highlights the heterogeneity of the evidence base, ranging from high-quality empirical studies grounded in robust datasets to conceptual analyses that offer normative and theoretical perspectives.

Table 2.

Overview and Critical Appraisal of Included Studies on AI, Ethics, and Athlete Health.

3.1. Study Quality and Characteristics

Empirical studies based on machine learning methods and sports data (Van Eetvelde, Phatak, Gabbett, Nassis, aus der Fünten, Hecksteden) are characterized by relatively high methodological quality (Table 2). Large datasets and transparency of study design particularly enhance their credibility, although the ethical dimension often remains limited, with quality ratings ranging from high to moderate. More recent works combine empirical analysis with ethical reflection (West, Impellizzeri, Yung, Morgulev, MacLean, Akpınar Kocakulak & Saygin, Musat). They demonstrate considerable innovation, such as federated learning or load-management tools, but also show limitations arising from immature data or weaker peer review. Their quality has been assessed as highly variable, from high to low. Ethical and neuroethical foundations, represented by Purcell and Rommelfanger, have provided a framework for reflecting on privacy and neuroethics in sport. Although these do not include empirical research, their theoretical contribution has been recognized as significant, justifying a high rating. Systematic reviews and protocols (Bullock, Claudino, Collins, Diniz, Elstak) offer broad perspectives and methodological standards. TRIPOD-AI and PROBAST-AI are particularly important as reference points for future research, even though ethical issues are still addressed only marginally. This group was predominantly rated high or moderate. Studies integrating applied ethics and multidisciplinary approaches (Gibson & Nelson, Hudson, Mateus, Palermi, Rossi) highlight the growing importance of transparent models and ethical frameworks in the implementation of AI. While their overall quality is primarily moderate, Rossi’s classic work stands out with a high rating. Overall, the qualitative assessment indicates that empirical ML studies are the most methodologically robust. In contrast, conceptual works and preprints, though valuable for ethical debate, receive lower ratings due to their limited evidential basis.

3.2. Diversity of Methodologies and Sports Contexts

The included studies encompass various designs—from systematic reviews mapping early applications of machine learning (ML) in sports [12,23,27] to empirical papers developing predictive models for injuries [26], as well as conceptual and theoretical essays [2,29]. Despite this breadth, several overarching observations emerge. First, researchers commonly employ a range of techniques—regression analyses [14], ensemble ML and neural networks [26], and even bespoke algorithms [13]—yet consistently report high variability in predictive accuracy. This discrepancy is often attributed to limited sample sizes, unrepresentative datasets [23], or inconsistent definitions of injury severity [12].

Moreover, the sports covered vary significantly in terms of data availability and risk profiles. Elite soccer [15,26], rugby [7,13], basketball [10], endurance running [20], power-based track events [17], and Paralympic contexts [8] all pose distinct data challenges. Some rely on rich GPS metrics [27], while others turn to wearable sensors or subjective wellness surveys [11,22]. In each case, the level of competition—youth, amateur, or professional [2]—can further shift both the predictive focus and the ethical stakes [5].

What emerges from this heterogeneity is not only a portrait of methodological creativity but also a challenge for comparison across contexts. On the one hand, the use of neural networks and ensemble models demonstrates the field’s ambition to embrace advanced computational techniques. On the other hand, the continued reliance on regression approaches in foundational works such as Gabbett [14] reflects the persistence of simpler, more interpretable methods that remain influential in applied sport science. This duality shows that sports AI research is situated between innovation and pragmatism, with empirical practice often constrained by the realities of available datasets and measurement tools.

The variation across sports disciplines also underscores that AI cannot be meaningfully separated from its domain of application. Injury risks in rugby or American football are embedded in collision dynamics that demand rapid, real-time monitoring solutions, whereas endurance sports prioritize longitudinal load management, and Paralympic sports raise distinct ethical and inclusivity concerns. These differences caution against the use of universal models for injury prediction and underscore the need to tailor AI solutions to the unique physiological, organizational, and ethical characteristics of each sport. In this sense, methodological diversity is not a weakness but a reflection of contextual specificity. It strengthens the argument that ethical evaluation must accompany technical design from the outset, as the tools developed for one setting may introduce bias or harm in another.

3.3. Methodological Transparency and Reporting Gaps in Empirical AI Research

A closer inspection of the empirical studies reveals varying levels of detail regarding AI model architecture, training protocols, and performance metrics. Van Eetvelde et al. [12] implemented ensemble classifiers, including Random Forest and XGBoost, with hyperparameter optimization performed via grid search and 10-fold cross-validation. The reported performance reached an AUC of 0.81 and a recall of 0.75. Yung et al. [20] developed a Bayesian injury prediction model, which was evaluated using log-loss and posterior predictive checks. However, their work remained at the preprint stage with limited replication.
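To make the reported quantities concrete, the following minimal sketch shows how AUC, recall, and a k-fold split can be computed from scratch. It is an illustration under stated assumptions, not the pipeline of any reviewed study: the function names, the 0.5 decision threshold, and the toy labels and scores are hypothetical, and the split is unshuffled and unstratified for brevity.

```python
from typing import List, Sequence, Tuple


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the share of (injured, non-injured) pairs ranked correctly.

    Ties between an injured and a non-injured score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def recall(labels: Sequence[int], scores: Sequence[float],
           threshold: float = 0.5) -> float:
    """Share of true injuries flagged at the given (assumed) threshold."""
    flagged = [s >= threshold for y, s in zip(labels, scores) if y == 1]
    if not flagged:
        raise ValueError("recall needs at least one positive case")
    return sum(flagged) / len(flagged)


def k_fold_indices(n_samples: int, k: int = 10) -> List[Tuple[List[int], List[int]]]:
    """Return (train, test) index lists for k contiguous folds.

    Real pipelines usually shuffle and stratify first; omitted here.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        folds.append((train, test))
        start += size
    return folds


if __name__ == "__main__":
    y = [0, 0, 1, 1]               # hypothetical injury labels
    s = [0.1, 0.4, 0.35, 0.8]      # hypothetical predicted risks
    print(roc_auc(y, s), recall(y, s), len(k_fold_indices(25, k=10)))
```

Because AUC is threshold-free while recall depends on the chosen cut-off, a study that reports both should also state the threshold used, which is one of the reporting details that allows results to be compared across models.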

In contrast, several studies failed to report essential validation metrics—such as calibration accuracy, class balance performance, or sensitivity-specificity trade-offs—making comparisons difficult. Moreover, only a minority disclosed the exact input feature sets or addressed potential overfitting through regularization or dropout. These inconsistencies highlight a broader issue in sports AI research: while algorithmic performance is frequently cited, methodological transparency remains limited, impeding model interpretability, reproducibility, and ethical appraisal.

Although performance metrics such as accuracy or AUC are frequently cited in studies applying AI to injury prediction, a closer examination reveals substantial inconsistencies in how models are reported, validated, and interpreted. Critical details—including model architecture, input feature selection, validation schemes, or sensitivity-specificity trade-offs—are often underreported or omitted entirely. These omissions hinder not only the reproducibility and comparability of results but also limit ethical and clinical appraisals, particularly in high-stakes domains such as elite sport.
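The validation details that are often missing can be illustrated with a short sketch. It computes a Brier score as one simple calibration measure and tabulates sensitivity and specificity at several thresholds. The choice of the Brier score, the function names, and the toy data are assumptions of this example; the reviewed studies do not prescribe a specific calibration metric.

```python
from typing import List, Sequence, Tuple


def brier_score(labels: Sequence[int], probs: Sequence[float]) -> float:
    """Mean squared difference between predicted risk and observed outcome.

    Lower is better; 0.0 means perfectly calibrated and perfectly sharp.
    """
    if not labels:
        raise ValueError("at least one observation is required")
    return sum((p - y) ** 2 for y, p in zip(labels, probs)) / len(labels)


def sensitivity_specificity(labels: Sequence[int], probs: Sequence[float],
                            threshold: float) -> Tuple[float, float]:
    """Sensitivity and specificity when risk >= threshold is flagged."""
    tp = fn = tn = fp = 0
    for y, p in zip(labels, probs):
        flagged = p >= threshold
        if y == 1 and flagged:
            tp += 1
        elif y == 1:
            fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


def threshold_report(labels: Sequence[int], probs: Sequence[float],
                     thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """One (threshold, sensitivity, specificity) row per candidate cut-off."""
    return [(t, *sensitivity_specificity(labels, probs, t)) for t in thresholds]


if __name__ == "__main__":
    y = [0, 0, 1, 1]               # hypothetical injury labels
    p = [0.1, 0.4, 0.35, 0.8]      # hypothetical predicted risks
    print("Brier score:", brier_score(y, p))
    for row in threshold_report(y, p, [0.3, 0.5]):
        print(row)
```

Reporting the full threshold table rather than a single accuracy figure shows readers how many injuries are missed for each level of false alarms, which is the trade-off that matters when predictions influence selection or return-to-play decisions.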

To illustrate the heterogeneity in methodological rigor, Table 3 summarizes the key methodological and technical characteristics of four fully verified empirical studies that implemented machine learning models for injury prediction in sport. These studies were selected because they provide complete information on model architecture, validation design, and performance metrics, while also addressing (to varying degrees) issues of explainability and overfitting control. This focused comparison captures the most transparent and methodologically mature examples currently available in the field, highlighting the persistent lack of standardization in how AI-driven injury prediction tools are developed, validated, and reported in sports science.

Table 3.

Summary of Methodological Rigor in Selected AI-Based Injury Prediction Studies.

3.4. Overarching Ethical Themes in AI-Driven Injury Prediction

Across these studies, four recurring ethical dimensions become prominent: privacy and data protection, fairness and inclusivity, transparency and explainability, and human oversight and autonomy. Table 4 summarizes how each dimension is represented in the literature, along with representative studies. The concern for privacy and data protection is particularly acute in contexts where athletes have little say over invasive monitoring practices [3,5]. At the same time, fairness and inclusivity issues arise when models are developed predominantly with male, elite athlete datasets [12] or fail to account for sex-specific or disability-related differences [8]. Researchers also emphasize the importance of transparency and explainability for validating predictive outputs and building trust among coaches, medical staff, and the athletes themselves [29,33]. Finally, there is broad agreement that human oversight must remain central to deploying AI: even sophisticated models can mislabel or overestimate risk without contextual, expert input [17].

Table 4.

Ethical dimensions in AI-driven injury prediction with representative studies.

These four dimensions are not isolated concerns but interdependent aspects of ethical AI deployment in sport. For example, the question of fairness cannot be divorced from privacy: underrepresented groups are not only more vulnerable to biased predictions but also less likely to benefit from strong data governance protections. Similarly, transparency overlaps with autonomy, since athletes cannot meaningfully exercise agency if they lack access to understandable explanations of how AI-generated risk scores are derived. This interconnection suggests that ethical evaluation should not treat privacy, fairness, transparency, and autonomy as separate silos but as mutually reinforcing principles.

Another critical observation is the asymmetry of power implicit in these dimensions. Studies such as MacLean [2] and Karkazis & Fishman [3] emphasize that athletes are frequently positioned as passive data subjects, while teams, leagues, and commercial technology vendors wield control over both the collection and interpretation of biometric data. This structural imbalance exacerbates the risks of privacy breaches, biased algorithms, and opaque decision-making, because the individuals most affected by AI predictions, the athletes themselves, are the least empowered to contest or shape those outcomes. Ethical themes, therefore, cannot be reduced to technical challenges; they are embedded in the organizational and contractual architecture of modern sport.

Moreover, the literature demonstrates that these four dimensions manifest differently depending on the sporting context. In professional soccer, the scale and granularity of data collection make privacy a dominant concern, whereas in Paralympic sport, inclusivity and fairness emerge as particularly pressing. In collision sports such as rugby, the urgency of concussion management amplifies debates over autonomy and oversight, since decisions often need to be made rapidly and with potentially career-altering consequences. Such contextual variation illustrates that ethical dimensions are not abstract or universal categories but living tensions that take shape within specific sports, athlete populations, and institutional settings.

Finally, it is worth noting that the emphasis placed on these themes has shifted over time. Earlier reviews [12] acknowledged issues of fairness and data quality; however, more recent conceptual contributions [19,33] have brought transparency and autonomy into sharper focus. This evolution reflects both advances in technical tools, such as explainable AI modules, and heightened awareness of governance failures in athlete data management. Together, these trends suggest that future research will need to move beyond identifying ethical risks toward developing concrete, sport-specific frameworks that operationalize privacy, fairness, transparency, and autonomy as enforceable standards rather than aspirational values.

3.5. Manifestations of Bias

In parallel with these ethical themes, the literature identifies multiple forms of bias that can skew AI systems toward unfair or erroneous conclusions [23,25]. Table 5 outlines the most frequently discussed bias types, their typical sources, and potential consequences for injury prediction. Sampling bias remains especially pervasive in sports settings with limited multi-team collaboration (Rossi et al., 2018), while inconsistent injury definitions often drive label bias [12]. Feature bias similarly surfaces when tools rely heavily on physical load metrics at the expense of overlooked psychosocial or environmental factors [15]. Meanwhile, although less systematically documented, confirmation and reporting bias are also evident in proprietary “trade secret” models that lack transparent peer review [19,23].

Table 5.

Bias types in AI injury prediction with representative studies.

These different manifestations of bias highlight that technical imperfections in algorithm design are only one part of the problem. Many biases originate upstream, at the level of dataset composition, measurement practices, and definitional conventions. For instance, sampling bias reflects structural inequalities in sports science research, where elite male athletes are consistently overrepresented in available datasets, leaving youth, female, and Paralympic populations understudied. This imbalance means that algorithms trained on such data risk systematically underperforming—or even misclassifying risk—in populations that differ from the training sample. Thus, what may appear as a purely statistical limitation is, in fact, a reflection of broader social inequities.
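One way to surface this kind of underperformance is to report recall separately for each athlete subgroup instead of a single pooled figure. The sketch below is a minimal illustration; the group labels, the 0.5 threshold, and all records are hypothetical and chosen only to show the calculation.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, int, float]  # (subgroup, injury label, predicted risk)


def recall_by_group(records: Iterable[Record],
                    threshold: float = 0.5) -> Dict[str, float]:
    """Recall computed separately for each subgroup that has injured cases."""
    hits: Dict[str, int] = defaultdict(int)
    misses: Dict[str, int] = defaultdict(int)
    for group, label, score in records:
        if label != 1:
            continue  # recall only considers athletes who were injured
        if score >= threshold:
            hits[group] += 1
        else:
            misses[group] += 1
    groups = set(hits) | set(misses)
    return {g: hits[g] / (hits[g] + misses[g]) for g in groups}


def recall_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best- and worst-served subgroup."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Hypothetical records: the larger group dominates the pooled result.
    data = [
        ("elite_male", 1, 0.9), ("elite_male", 1, 0.7),
        ("elite_male", 1, 0.6), ("elite_male", 1, 0.4),
        ("elite_male", 0, 0.2),
        ("youth_female", 1, 0.3), ("youth_female", 1, 0.6),
        ("youth_female", 0, 0.1),
    ]
    per_group = recall_by_group(data)
    print(per_group, recall_gap(per_group))
```

A pooled recall over these records would hide the gap, because the overrepresented group contributes most of the injured cases. Disaggregated reporting makes the disparity visible before a model is deployed in an understudied population.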

Label bias also underscores how seemingly technical choices are ethically consequential. Injury definitions vary across sports and even across institutions within the same sport, leading to discrepancies in what counts as an “injury event.” Van Eetvelde et al. [12] show how such definitional inconsistencies undermine the comparability of prediction models, since the same physiological outcome may be coded differently depending on local practices. This highlights the need for consensus-driven, standardized definitions of injury categories if AI models are to achieve meaningful validity across contexts.
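The effect of definitional inconsistency can be shown with a small sketch in which the same complaints are labeled under two different rules. The two rules used here, a time-loss rule and a medical-attention rule, along with the `Complaint` fields and the example records, are assumptions introduced for illustration and are not taken from the reviewed studies.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Complaint:
    athlete_id: str
    days_lost: int                  # training or match days missed
    needed_medical_attention: bool  # seen by medical staff


def label_time_loss(c: Complaint, min_days: int = 1) -> int:
    """Injury = at least `min_days` of missed participation (assumed rule)."""
    return int(c.days_lost >= min_days)


def label_medical_attention(c: Complaint) -> int:
    """Injury = any complaint assessed by medical staff (assumed rule)."""
    return int(c.needed_medical_attention)


def disagreement_rate(complaints: Sequence[Complaint]) -> float:
    """Share of complaints that the two rules code differently."""
    if not complaints:
        raise ValueError("at least one complaint is required")
    differing = sum(
        label_time_loss(c) != label_medical_attention(c) for c in complaints
    )
    return differing / len(complaints)


if __name__ == "__main__":
    # Hypothetical complaints used only to exercise the two rules.
    cohort = [
        Complaint("A", days_lost=0, needed_medical_attention=True),
        Complaint("B", days_lost=3, needed_medical_attention=True),
        Complaint("C", days_lost=0, needed_medical_attention=False),
        Complaint("D", days_lost=1, needed_medical_attention=False),
    ]
    print("time-loss labels:", [label_time_loss(c) for c in cohort])
    print("medical-attention labels:", [label_medical_attention(c) for c in cohort])
    print("disagreement rate:", disagreement_rate(cohort))
```

Two models trained on the same athletes but with different labeling rules would learn different targets, so their accuracy figures cannot be compared directly unless the definition is reported alongside the result.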

Feature bias demonstrates another critical tension between what is easy to measure and what is most relevant for injury prediction. While load metrics, such as GPS-derived running distances or accelerometry, are readily available, psychosocial variables like stress, sleep, and recovery quality are more challenging to quantify. As Nassis et al. [15] argue, this imbalance creates blind spots that reduce predictive power and risk excluding essential determinants of athlete health. It also illustrates the danger of technological determinism: the assumption that what can be measured most efficiently is necessarily what matters most.

Finally, confirmation and reporting biases shed light on the influence of commercial interests in shaping AI applications in sport. Proprietary models, often marketed as competitive advantages, may conceal methodological weaknesses behind claims of predictive accuracy. Bullock et al. [23] and Impellizzeri et al. [19] both highlight that without transparent peer review, such models remain effectively unverifiable, raising concerns about accountability. This not only weakens trust in AI applications but also exposes athletes to the risk of decisions being made based on flawed or untested algorithms.

Together, these forms of bias reveal that fairness in AI-driven injury prediction cannot be achieved solely through technical refinements. Addressing them requires structural reforms in how datasets are collected, standardized, and shared, as well as cultural shifts toward transparency and open science. In this way, the literature demonstrates that bias is not an incidental flaw but a systemic challenge that reflects the intersection of technology, ethics, and institutional practice.

3.6. Sport-Specific Risk Profiles and Contextual Differences

One strength of the data corpus is that it allows examination of how each sport’s unique demands shape AI’s ethical and technical challenges. Table 6 summarizes variations in commonly used data, risk factors, and moral priorities across different contexts. As shown, professional men’s soccer benefits from highly detailed performance logs yet also faces ethical pitfalls regarding data privacy in contract negotiations [27]. By contrast, youth and amateur athletes often lack access to sophisticated tracking tools, which compounds sampling biases [11,21]. In collision sports, the urgency of potential concussive injuries underscores the importance of real-time AI alerts but also heightens privacy concerns [7]. Altogether, these contextual differences reinforce the caution that universal, monolithic AI solutions risk overlooking sport- or demographic-specific nuances [8,17].

Table 6.

Sport-specific contexts of AI implementation with representative studies.

This diversity of contexts illustrates that AI systems cannot be treated as neutral, universally applicable tools. Instead, they are embedded in the specific risk structures, cultural expectations, and organizational priorities of each sport. For example, soccer’s extensive use of GPS and biometric monitoring makes it a fertile ground for predictive modeling but also exposes players to contractual risks when data are used in negotiations, as Elstak et al. [27] emphasize. In contrast, youth athletes, whose participation is often framed in terms of development and enjoyment rather than financial reward, face unique ethical considerations when subjected to monitoring technologies that they may not fully understand or be able to consent to.

Collision sports such as rugby and American football present yet another ethical layer. Here, the immediacy of concussive risk has driven the adoption of real-time monitoring devices, including smart mouthguards [7]. While these tools offer the promise of rapid intervention, they also intensify debates about data ownership and the right of athletes to limit or refuse intrusive monitoring. The urgency of health protection in collision sports may justify greater surveillance, but this justification must be carefully balanced against athletes’ autonomy and dignity.

Paralympic contexts offer a critical lens for understanding inclusivity in AI development. Palermi et al. [8] show that athletes with disabilities often face dual vulnerabilities: heightened medical risks and underrepresentation in the datasets that train AI models. When AI fails to account for these unique physiological profiles, it results not only in reduced predictive accuracy but also in an ethical failure to treat these athletes equitably. This underscores the need for sport-specific frameworks that explicitly include marginalized groups, rather than assuming that models trained on able-bodied athletes will generalize.

The comparative evidence also reveals how different levels of competition reshape ethical and technical priorities. In elite professional settings, the sheer volume and precision of data invite sophisticated AI applications but also elevate concerns about surveillance and control. In amateur and developmental contexts, the scarcity of reliable data creates methodological obstacles while amplifying fairness issues, as limited resources often preclude access to advanced monitoring systems. These asymmetries reflect broader inequalities in sport, reminding us that AI is not only a technological innovation but also a reflection of existing structural disparities.

Altogether, the literature suggests that contextual differences are not secondary details but defining conditions for both the effectiveness and the ethics of AI in sports medicine. Universal, one-size-fits-all approaches are therefore inadequate. Instead, effective governance and ethical evaluation must be sport-specific, recognizing that what counts as appropriate monitoring in one discipline may be intrusive, unfair, or even harmful in another. In this way, Table 6 provides not just a descriptive overview of contexts but also a roadmap for understanding why AI in sport must always be situated, negotiated, and adapted rather than universally imposed.

3.7. Mitigation Strategies and Best Practices

In response to these challenges, authors propose various approaches aimed at aligning AI-driven injury prediction with clinical effectiveness and ethical standards [18,23]. A central theme is improving data breadth and quality, including multi-club or multi-league data-sharing initiatives [15], open science practices [19], and more robust sampling protocols that incorporate female, youth, or Paralympic athletes [8]. Some studies advocate for federated learning, which enables training on distributed data without requiring centralized collection, thereby protecting privacy [22]. Equally significant is the push for model transparency and thorough validation. Researchers underscore the importance of user-friendly explainability modules, such as SHAP values or anomaly detection readouts, to demystify AI outputs for coaches and athletes alike [29,33]. Yet, these technical measures must be paired with human oversight policies, including clear guidelines on when AI “red flags” should prompt further medical evaluation [17] rather than trigger automatic benching decisions [2]. Overall, these mitigation strategies underscore a shared recognition: no single technical fix can address the multifaceted ethical concerns that arise. Instead, AI-driven injury prediction must be contextualized within robust governance frameworks [3,5] and anchored in open, collaborative practices among sports scientists, medical staff, and athletes themselves [16,18]. These strategies and their representative studies are summarized in Table 7.

Table 7.

Mitigation strategies and best practices with representative studies.

What becomes evident from this synthesis is that mitigation strategies can be categorized into two interrelated areas: technical safeguards and institutional governance mechanisms. Technical approaches include the development of privacy-preserving methods, such as federated learning, data anonymization, and explainable AI modules. These solutions aim to improve predictive performance while reducing ethical risks. However, as several authors note, technical safeguards alone cannot resolve deeper issues of fairness or power asymmetry [29,33]. Without supportive governance frameworks, even the most advanced algorithms risk reproducing the very inequities they are designed to avoid.

Open science emerges as a critical best practice. Impellizzeri et al. [19] emphasize that transparency in data access, model validation, and publication of results is essential for fostering accountability. This approach aligns AI development with broader scientific norms of reproducibility and peer scrutiny, countering the secrecy of proprietary “black-box” models. Open science also has a democratizing effect, as smaller clubs or research institutions gain the ability to evaluate and adapt AI systems without depending on commercial vendors. In this way, data sharing and open access practices serve both ethical and practical ends, enabling more equitable distribution of technological benefits across the sporting landscape.

Another promising avenue is the diversification of datasets to include female athletes, youth players, and Paralympic competitors. Palermi et al. [8] argue that inclusivity must be built into the design of AI systems from the outset, rather than treated as an afterthought. Without such efforts, predictive models will continue to overrepresent elite male athletes and produce biased outputs that disadvantage underrepresented groups. Inclusivity, therefore, is not simply a matter of fairness but also of accuracy: diverse datasets lead to more generalizable and reliable predictions.

At the same time, federated learning and related privacy-preserving techniques represent a frontier in AI development for sport. Musat et al. [22] demonstrate how these methods allow distributed datasets to be utilized without requiring centralization, thereby protecting athlete privacy while still benefiting from large-scale training data. Although technically complex and still evolving, such approaches exemplify how ethical and technical imperatives can be aligned. They also suggest a future in which clubs or national federations can collaborate on injury prediction research without surrendering sensitive data to third-party companies.

Human oversight remains the indispensable counterpart to these technical measures. Hecksteden et al. [17] caution against automation bias, where coaches or medical staff defer too readily to AI outputs. To mitigate this risk, MacLean [2] and others argue for explicit policies that clarify when and how AI recommendations should be acted upon. Such guidelines not only preserve the role of expert judgment but also protect athletes from being reduced to passive subjects of algorithmic decision-making. Oversight mechanisms thus function as both a safeguard and a means of preserving the human values—such as care, trust, and accountability—that sport organizations claim to uphold.

Taken together, the literature demonstrates that mitigation strategies and best practices are not optional add-ons, but essential conditions for the responsible deployment of AI in sport. Table 7 illustrates how these strategies align with various technical and ethical challenges, providing a framework for future research and policy development. The overarching lesson is clear: the promise of AI in athlete health cannot be realized without embedding ethical reflection and participatory governance into the very fabric of technological innovation.

3.8. Long-Term Governance Challenges

A recurring topic, highlighted in several conceptual and policy-oriented papers, concerns the long-term ramifications of AI-managed athlete data. Table 9 outlines the governance domains where oversight remains weak or absent. MacLean [2] and Karkazis & Fishman [3] both note that athletes rarely enjoy formal co-ownership over biometric data, enabling teams or commercial vendors to use these data indefinitely and for profit [5,13]. Likewise, post-career data use and predictive labeling can follow athletes into contract negotiations or retirement, raising questions about consent withdrawal and the right to challenge historically generated risk profiles [7,23].

Table 8.

Explainability and transparency challenges with representative studies.

These findings underscore that the governance challenges associated with AI in sport extend far beyond the immediate risks of injury prediction or daily monitoring practices. They touch on the very structures of ownership, accountability, and power within modern sport. The persistent absence of athlete co-ownership rights over biometric data illustrates a fundamental asymmetry: while athletes generate the data through their training and competition, institutions and companies retain the authority to monetize and control it. This imbalance raises serious ethical and legal questions, particularly as data are increasingly seen as commercial assets with enduring value beyond an athlete’s active career.

The issue of consent withdrawal further complicates governance. In many current systems, athletes are asked to provide broad, open-ended consent to data collection as a condition of participation or employment. Yet the long-term storage and reuse of these data, sometimes years after an athlete has retired, means that initial consent is stretched far beyond its reasonable scope. Authors such as Wang et al. [9] highlight that meaningful consent must be dynamic and revocable, allowing athletes to opt out of ongoing data use. While the GDPR (Art. 7(3)) formally provides for the withdrawal of consent, its enforcement in elite sport settings is often hindered by structural power asymmetries, employment contracts, or the pressure to conform to team expectations.

Another long-term challenge concerns the potential for predictive labeling to shape an athlete’s career trajectory in ways that extend beyond the field of play. Once an AI system generates a risk profile, that label may persist in institutional records and influence decisions about team selection, contract negotiations, or insurance coverage. Rush and Osborne [6] caution that such labels can become self-fulfilling prophecies: athletes tagged as “high risk” may receive fewer opportunities, thereby reinforcing the perception that the model’s prediction was accurate. This dynamic not only raises ethical concerns about fairness but also introduces a novel form of structural discrimination into sport. To mitigate this risk, labeling should be accompanied by contextual interpretation from qualified staff rather than used as an automatic exclusionary criterion. Incorporating human-in-the-loop mechanisms, ethical guidelines for label communication, and regular audits of decision outcomes can help ensure that AI-generated risk assessments inform rather than dictate decisions. Moreover, providing athletes with access to explanations and appeals processes can further strengthen fairness and transparency.

Commercialization pressures also loom large in this debate. Companies that design wearable sensors, data platforms, or proprietary algorithms often operate with incentives that prioritize profitability over athlete welfare. As MacLean [2] observes, the absence of strong oversight enables these actors to indefinitely appropriate athlete data, transforming personal health information into a market commodity. This trend risks undermining trust in AI systems, as athletes may perceive that their data are being exploited for corporate gain rather than used to safeguard their health. Addressing this concern will require stronger regulation, potentially modeled on frameworks such as the GDPR, but adapted to the unique conditions of sport.

Finally, the literature suggests that governance challenges cannot be solved solely through technical fixes or individual contracts. What is needed is a structural shift toward multi-level governance frameworks that involve athletes directly in decision-making about their data. Proposals include the creation of independent oversight bodies, athlete-led ethics committees, and collective bargaining provisions that enshrine data rights as a standard component of professional contracts. These initiatives would help rebalance the power asymmetry that currently characterizes the management of athlete data, ensuring that the benefits of AI innovation are distributed more equitably.

Altogether, the evidence demonstrates that long-term governance challenges are among the most pressing issues facing AI in sport. Table 9 provides a structured overview of the domains where oversight is weakest. At the same time, the accompanying literature shows that without enforceable rights and robust institutional mechanisms, athletes will remain vulnerable to exploitation. Governance, therefore, is not an afterthought but a foundational requirement if AI-driven injury prediction is to advance in a way that is ethically sound, socially just, and sustainable over time.

Table 9.

Governance domains and oversight gaps with representative studies.

4. General Discussion

4.1. Ethical Tensions in AI-Driven Athlete Monitoring

Recent advances in AI-driven athlete health monitoring and injury prediction have revealed the promise of data-intensive analytics, as well as the ethical and governance challenges that arise when such systems are deployed in real-world sports environments [12]. On the one hand, numerous studies have demonstrated that machine learning techniques, ranging from classical models such as random forests to deep neural networks and newer explainable methods, can capture complex risk factors more effectively than traditional statistical approaches [23,26]. By integrating large-scale physiological, biomechanical, and contextual data, AI systems can predict elevated injury risk, recommend proactive interventions, and potentially reduce time lost to injury [20,28]. While some machine learning models remain opaque (‘black-box’), others, such as decision trees or logistic regression, are inherently interpretable but may still require large volumes of high-quality data to produce accurate and stable predictions in real-world sport settings. This trade-off between model transparency and data demand reflects a central tension in applied sports analytics: datasets are often limited in size and heterogeneity, so even interpretable models can be difficult to fit reliably in practice. On the other hand, the rapid uptake of these technologies has exposed profound ethical vulnerabilities. Among these are data ownership, privacy concerns, and the risk that overly institution-centric monitoring regimes will undermine athlete autonomy [2,3].

One of the central findings in the current literature is that ethical dilemmas arise from the power asymmetry between athletes and the organizations that collect and manage their data [3,5]. While AI can provide valuable insights for optimizing training loads or return-to-play decisions, institutional stakeholders—teams, leagues, clubs, and technology vendors—often own or control the resulting data sets [2]. This imbalance becomes especially evident in high-performance settings where contracts may require athletes to wear tracking devices or comply with biometric surveillance as a condition of team membership, leaving limited room for genuine informed consent [5]. Without robust governance, athletes can be placed in a precarious position where the performance benefits of AI analytics overshadow their right to privacy or the potential harms of predictive labeling.

A second issue relates to fairness and inclusivity. As multiple authors note, AI systems designed for one context—often elite male sports—may fail to generalize accurately across other populations, such as youth athletes, female players, or Paralympic competitors [8,11,27]. Historically, male and elite-level athletes have been overrepresented in training data, creating the risk of unintentional bias for underrepresented groups [12]. Even subtle data omission (e.g., failing to capture menstrual cycle information or not accounting for growth spurts in youth) may distort injury predictions and result in inequitable outcomes [18,23]. Conceptual frameworks increasingly call for sport-specific guidelines and bias audits to ensure that algorithms do not perpetuate structural disparities or discriminate against demographics [15,24].
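
One simple form such a bias audit could take is a comparison of model sensitivity across athlete subgroups. The sketch below is illustrative only: the group labels, the records, and the helper names `sensitivity_by_group` and `largest_gap` are hypothetical and are not drawn from any of the reviewed studies.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """records: iterable of (group, injured, flagged) with 0/1 outcomes.

    Returns {group: share of actual injuries that the model flagged}.
    Groups with no recorded injuries map to None, because sensitivity
    is undefined when there are no positive cases.
    """
    hits = defaultdict(int)
    injuries = defaultdict(int)
    groups = set()
    for group, injured, flagged in records:
        groups.add(group)
        if injured:
            injuries[group] += 1
            hits[group] += int(bool(flagged))
    return {g: (hits[g] / injuries[g] if injuries[g] else None)
            for g in sorted(groups)}

def largest_gap(rates):
    """Difference between the best- and worst-served groups."""
    defined = [r for r in rates.values() if r is not None]
    return max(defined) - min(defined) if defined else 0.0

# Hypothetical audit data: (group, injured, flagged by the model).
records = [
    ("elite_male", 1, 1), ("elite_male", 1, 1), ("elite_male", 1, 1),
    ("elite_male", 1, 0), ("elite_male", 0, 0),
    ("female", 1, 1), ("female", 1, 0), ("female", 1, 0),
    ("female", 1, 0), ("female", 0, 1),
    ("youth", 0, 0), ("youth", 0, 0),
]
rates = sensitivity_by_group(records)
gap = largest_gap(rates)
```

In this toy example the model detects three of four injuries among elite male athletes but only one of four among female athletes, and no injuries at all were recorded for the youth group, so its sensitivity cannot be assessed. Both patterns (a performance gap and an unassessable group) are the kind of finding a routine audit would need to surface.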

Explainability and transparency also feature prominently in debates about ethical AI in sports. Coaches, trainers, and athletes often express reluctance to trust a “black-box” model whose outputs they cannot interpret [34]. Complex neural networks can mask the factors contributing to a given risk score, making it difficult for stakeholders to validate or contest AI-driven decisions. Several studies highlight how local interpretable models or feature-importance metrics (e.g., SHAP values) can demystify predictions [1,34]. However, the existence of explainability methods does not automatically guarantee that athletes themselves have access to, or agency over, these explanations [5]. The AI’s risk outputs are often delivered exclusively to medical staff or team management. As a result, athletes remain excluded from conversations about their health, perpetuating the notion that their role is merely to comply with data-driven instructions.

Studies also highlight the necessity for human oversight and “human-in-the-loop” approaches. Although advanced algorithms can detect nuanced patterns in athlete load data, they should ideally function as a supplement—rather than a replacement—for clinical judgment and contextual knowledge [16]. Over-reliance on algorithmic outputs poses the danger of automation bias, where staff defers critical decisions to an AI system that might overlook essential qualitative or individualized factors [18,23,25]. Maintaining a balance between data-driven insight and expert discernment ensures that athletes receive personalized care rather than cookie-cutter prescriptions based on population-level patterns. This balance is particularly crucial in contact and youth sports, where the physical risks of injury and the ethical stakes of consent and safety are heightened [7,21].

Although the General Data Protection Regulation (GDPR) is binding across the European Union, its interpretation and enforcement may vary between national data protection authorities, particularly when sports organizations operate across borders or engage with both public and private entities. This creates inconsistencies in how athlete data rights are protected and monitored in practice.

4.2. Algorithmic Fairness and Bias Mitigation

While the preceding discussion highlights the structural and ethical challenges of AI-based injury prediction, addressing these issues also requires concrete methodological interventions. A growing body of empirical and conceptual work proposes technical and procedural strategies to mitigate bias, enhance transparency, and promote equitable model performance. These strategies operate on three levels: data handling and class balance, model interpretability, and privacy-preserving computation. Together, they represent emerging standards for ethically responsible AI deployment in sports.

Ensuring fairness in AI-driven injury prediction models requires deliberate strategies to mitigate algorithmic bias, particularly in the context of small datasets, class imbalance, and heterogeneous athlete profiles. Several studies attempted to address such concerns through both technical and ethical approaches, though with varying degrees of rigor and transparency.

One frequently cited challenge is the class imbalance inherent in injury datasets, where injury cases constitute only a small minority of observations. For instance, Freitas et al. [29] reported that only 0.2% of their observations corresponded to actual injuries, making naive classification methods likely to favor the non-injured class. To address this, they implemented cost-sensitive learning, assigning greater penalties to misclassifying injury cases. In addition, they applied undersampling techniques, such as k-means clustering, to reduce the overrepresentation of non-injured instances, thereby improving class balance. Similarly, Hecksteden et al. [17] employed the ROSE (Random Over-Sampling Examples) technique to synthetically generate balanced training sets within their cross-validation folds, improving sensitivity without compromising specificity.
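
The effect of such extreme imbalance, and the basic idea behind cost-sensitive learning, can be illustrated with a short sketch. The data below are synthetic and only mirror the 0.2% prevalence reported in [29]. The inverse-frequency weighting is a common heuristic that is assumed here for illustration; it is not necessarily the cost scheme used by Freitas et al.

```python
import math

def accuracy_and_sensitivity(y_true, y_pred):
    """Return (accuracy, sensitivity) for binary labels where 1 = injury."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_pos = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    positives = sum(y_true)
    return correct / len(y_true), (true_pos / positives if positives else 0.0)

def balanced_class_weights(y_true):
    """Inverse-frequency weights: n_samples / (n_classes * n_class_samples)."""
    n = len(y_true)
    counts = {c: y_true.count(c) for c in set(y_true)}
    return {c: n / (len(counts) * k) for c, k in counts.items()}

def weighted_log_loss(y_true, p_injury, weights):
    """Cost-sensitive log loss: each error is scaled by its class weight."""
    total = 0.0
    for t, p in zip(y_true, p_injury):
        p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
        total += -weights[t] * (math.log(p) if t == 1 else math.log(1 - p))
    return total / sum(weights[t] for t in y_true)

# Synthetic data: 1000 observations, 2 injuries (0.2% prevalence).
y = [1] * 2 + [0] * 998

# A "model" that never predicts injury: accuracy 0.998, sensitivity 0.0.
always_healthy = [0] * 1000
acc, sens = accuracy_and_sensitivity(y, always_healthy)

# The same near-zero risk estimate looks fine under an unweighted loss
# but is heavily penalized once injuries carry a larger misclassification cost.
weights = balanced_class_weights(y)
uniform = {0: 1.0, 1: 1.0}
naive_probs = [0.002] * 1000
plain = weighted_log_loss(y, naive_probs, uniform)
weighted = weighted_log_loss(y, naive_probs, weights)
```

Here each injury observation receives a weight of 250 and each non-injury observation a weight of roughly 0.5, so the two classes contribute equally to the loss. A model that ignores the injured class can no longer score well simply by matching the majority.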

Another layer of bias mitigation involves controlling model complexity and preventing overfitting, especially given the relatively small sample sizes typical of elite sport. Hecksteden et al. [17] limited their models to 11 pre-defined predictors selected a priori, thereby minimizing the risk of spurious associations and enhancing interpretability. This approach aligns with recent recommendations advocating for feature parsimony and theoretical coherence in high-stakes AI applications [10].

Explainability is another critical dimension of ethical AI deployment. To enhance transparency, several studies incorporated model-agnostic interpretability techniques. In particular, SHAP (SHapley Additive exPlanations) values were used to determine the contribution of individual features to model outputs. Angus and Sirisena [30] applied SHAP to identify key predictors in their AI-SPOT system, such as “team1 accurate shots.” They found that domain-specific features related to gameplay dynamics significantly influenced injury predictions. Similarly, Hecksteden et al. [17] recommended SHAP-based inspection to detect overfitting artifacts and hidden data biases, especially in black-box ensemble models like GBM or XGBoost.
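
To make the underlying idea concrete, the following sketch computes exact Shapley values for a toy risk score by enumerating all feature coalitions. It is not the SHAP library and not the procedure of any reviewed study: the scoring function, feature names, and reference athlete are hypothetical, and "absent" features are simply replaced by a baseline value, which is one of several possible conventions.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) - model(baseline).

    Features outside a coalition are set to their baseline value.
    Cost grows as 2^n, so this is only viable for a handful of features.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        x = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(x)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_feature = value(set(subset) | {name})
                without_feature = value(set(subset))
                total += weight * (with_feature - without_feature)
        phi[name] = total
    return phi

# Hypothetical risk score with an interaction between load and prior injury.
def risk_score(x):
    return (0.02 * x["acute_load"]
            + 0.3 * x["prior_injury"]
            + 0.01 * x["acute_load"] * x["prior_injury"])

athlete = {"acute_load": 10.0, "prior_injury": 1.0, "sleep_hours": 6.0}
reference = {"acute_load": 5.0, "prior_injury": 0.0, "sleep_hours": 8.0}
phi = shapley_values(risk_score, athlete, reference)
```

The attributions sum to the difference between the athlete's score and the reference score, and a feature the model never uses (here `sleep_hours`) receives an attribution of zero. These two properties are what make Shapley-based explanations suitable for checking whether a model relies on implausible inputs.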

Emerging research suggests that federated learning may offer a promising solution to reconcile predictive performance with data privacy and fairness concerns. Rather than pooling athlete data centrally, federated approaches train models locally on decentralized datasets and aggregate updates via secure protocols. This paradigm not only mitigates privacy risks but also reduces sampling bias by preserving institutional heterogeneity [22]. While not yet widespread in sports science, the potential of federated architectures to support cross-club collaboration without compromising sensitive health data merits further exploration. Collectively, these techniques represent a growing methodological toolkit for bias mitigation in AI-based sports injury prediction. However, consistent reporting, empirical benchmarking, and ethical oversight remain essential to ensure that such methods meaningfully contribute to fairness, transparency, and athlete trust.
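
The core mechanism of federated learning, local training followed by aggregation of model parameters, can be sketched in a few lines. The example below is a deliberately simplified federated averaging loop on a one-variable linear model with synthetic data for three hypothetical clubs. It omits the secure aggregation protocols mentioned above and is not the architecture described in [22].

```python
def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few gradient-descent steps on one club's private data.

    weights: (w, b) of a linear model y ~ w*x + b; data: list of (x, y).
    Only the updated weights leave the club, never the raw records.
    """
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def federated_average(updates, sizes):
    """Aggregate client weights, weighting each club by its sample count."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return w, b

def train_federated(clubs, rounds=200):
    """Alternate local training and server-side averaging."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clubs]
        global_weights = federated_average(updates, [len(d) for d in clubs])
    return global_weights

# Synthetic clubs whose data all follow y = 2x + 1 over different x ranges.
clubs = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(0.5, 2.0), (1.5, 4.0)],
    [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (0.0, 1.0)],
]
w, b = train_federated(clubs)
```

In this idealized setting the shared model recovers the common relationship even though no club ever sees another club's observations. Real deployments would additionally have to handle clubs whose data follow different distributions, and would need to protect the transmitted updates themselves, since model parameters can leak information about the underlying records.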

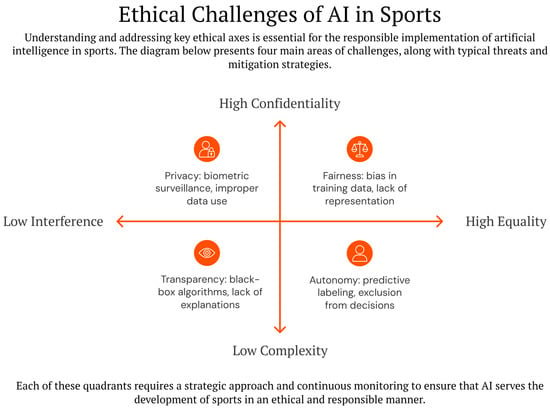

4.3. Ethical Axes and Mitigation Strategies

A cross-cutting analysis of the included studies reveals that ethical concerns surrounding AI in sport cluster into five interrelated axes: privacy, fairness, transparency, autonomy, and governance. Each axis encompasses distinct risks, real-world manifestations, and potential mitigation approaches. To avoid redundancy and synthesize dispersed insights, we consolidate these issues into a unified framework that maps common ethical risks to practical examples and mitigation strategies (Table 10). This tabular approach helps to clarify how abstract ethical principles translate into day-to-day dilemmas for athletes, coaches, and sport organizations. In addition to Table 10, we present a graphical conceptual model (Figure 2) that visually integrates these five ethical dimensions. The figure illustrates the dynamic interplay between these axes and highlights the systemic nature of ethical challenges in AI deployment. For instance, a single application—such as an injury prediction model—may simultaneously affect athlete privacy (via biometric data collection), fairness (due to population-level bias), and autonomy (by limiting return-to-play agency). Similarly, governance failures may exacerbate risks across all other domains.

Table 10.

Ethical Challenges in AI Application in Sports.

Figure 2.

Conceptual Framework of Ethical Challenges in AI Applications in Sport.

Taken together, this synthesis emphasizes the need for interdisciplinary governance models, participatory design processes involving athletes, and transparent technical documentation. It also demonstrates how different stakeholders—data scientists, sports physicians, federations, and policymakers—can intervene at multiple levels to safeguard ethical integrity. Rather than addressing ethical issues in isolation, this approach encourages sport organizations to adopt holistic and proactive strategies that recognize the interdependence of privacy, equity, agency, and accountability in AI-enhanced environments.

These ethical tensions are not new, but AI magnifies their scope and urgency. As McNamee [31] emphasized, elite sport has long struggled with normative ambiguity, particularly when technological innovation outpaces governance capacity. Similarly, Pitsiladis et al. [32], writing in the context of sports genomics, warned against premature implementation of data-intensive technologies without robust privacy safeguards—a lesson directly transferable to biometric AI systems. These earlier contributions offer a crucial foundation upon which to build AI-specific governance strategies in today’s data-driven sporting landscape.

4.4. Integrative Governance and Cross-Domain Relevance of Ethical AI in Sport

The ethical dimension of athlete health monitoring is by no means novel—indeed, as Bernstein et al. [35] argued in their seminal review, many ethical issues in sports medicine come about because the traditional relationship between doctor and patient is altered or absent. Similarly, Testoni et al. [36] observed that elite sports physicians must negotiate not only medical imperatives but also quid pro quo arrangements and institutional performance pressures: physicians must balance issues like protecting versus sharing health information, as well as issues regarding autonomous informed consent versus paternalistic decision-making [36]. These foundational observations underscore how contemporary AI-driven systems in sport—characterized by advanced monitoring, predictive algorithms, and large-scale biometric data streams—amplify rather than replace pre-existing moral tensions around consent, confidentiality, and athlete autonomy.

Finally, a consistent theme in the literature is that mitigating these risks requires long-term governance strategies and multi-stakeholder collaboration. Beyond addressing day-to-day implementation details, sports organizations need frameworks that codify data rights, involve athletes in policymaking, and establish clear lines of accountability [2,5]. Such measures might include contractual reforms in professional leagues, the creation of ethics boards with athlete representation, or the adoption of transparent auditing standards enforced at national or international levels [5,11]. Equally important is an ethos of open science—so that methodologies, data structures, and validation results are shared in ways that permit independent scrutiny and replication [18].

Although this review focuses on the specific context of elite and youth sports, many of the ethical concerns raised—particularly those related to algorithmic bias, informed consent, and data governance—are equally relevant in adjacent fields such as occupational health monitoring, education, or personalized medicine. In each of these domains, predictive analytics intersect with human decision-making, raising similar questions about transparency, autonomy, and structural inequality. The analytical framework proposed here may thus offer a valuable lens for examining ethical risks across broader contexts of AI deployment.

In sum, while AI holds substantial promise for advancing injury prevention and athlete health, the collective evidence underscores that technology alone cannot resolve the deeper structural and ethical tensions in sports environments. Ongoing research emphasizes the need to integrate participatory governance, fair data practices, and meaningful athlete engagement at every stage of AI development and deployment [20]. Achieving this vision requires a shift from top-down management of “sports data subjects” to an athlete-centered model of care, emphasizing that the actual value of AI in sports lies not simply in predictive accuracy, but in fostering well-being, equity, and autonomy for all participants.

Moreover, while the present review focuses specifically on elite sports settings, the ethical concerns and governance tensions identified—such as surveillance asymmetries, model opacity, or consent under institutional pressure—may also apply to adjacent domains. These include military training programs, workplace performance analytics, or consumer-grade health monitoring platforms. Future research could explore whether similar athlete-centered governance frameworks could be adapted for these parallel contexts.

4.5. Practical Implications and Stakeholder Perspectives

The ethical deployment of AI in sports injury prediction demands more than abstract principles; it requires actionable strategies that reflect the diverse realities of stakeholders such as athletes, coaches, sports physicians, club managers, sponsors, insurers, and governing bodies. This section articulates practical pathways for operationalizing core ethical values—privacy, fairness, transparency, and autonomy—within the constraints of real-world settings. One of the most urgent implementation gaps lies in the codification of athletes’ data rights. While legal frameworks such as GDPR and HIPAA establish basic protections, they rarely address domain-specific challenges in elite sport. Practical examples include collective bargaining agreements (CBAs) that enshrine the right to opt out of biometric monitoring or demand transparency about data reuse. However, many such contracts remain vague or outdated. Developing binding, sport-specific data charters, negotiated with athlete unions and implemented across clubs and federations, could provide a stronger foundation for consent, data ownership, and long-term health protections.

A second challenge involves structural inequalities between clubs or federations, particularly concerning technological infrastructure and access to advanced AI tools. Disparities in model accuracy, data quality, and technical staffing often reflect broader financial inequalities, potentially compounding ethical risks. To mitigate these asymmetries, federated learning approaches, whereby clubs retain their local data and contribute only model updates to a jointly trained shared model, could offer privacy-preserving collaboration without requiring full data sharing. Yet their viability depends on robust governance, trusted intermediaries, and a cultural shift toward cooperative innovation even among competitors.

In addition, sponsors and insurers play an increasingly influential role in the governance of athlete data. Performance-based insurance models and sponsor-driven wearable technologies may create new pressures to disclose or analyze health data beyond the athlete’s control. Clarifying the roles and responsibilities of commercial actors through contractual safeguards, data access policies, and ethical oversight mechanisms is essential to prevent conflicts of interest and safeguard athlete autonomy.

At the athlete level, contestability tools (such as interactive dashboards, explainable AI modules like SHAP or LIME, and individual risk reports) can help athletes and support staff understand and challenge algorithmic outputs. These tools must be designed with usability in mind, ensuring that insights are accessible to non-specialists. Criteria for explainability should not be defined solely by technical standards, but also by the capacity of the average athlete to act meaningfully on the information provided.
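As an illustration of what an athlete-facing risk report might contain, the sketch below computes additive feature contributions for a linear risk score. For a linear model with features treated as independent, each feature's SHAP-style contribution reduces to its weight times the athlete's deviation from a baseline value, so no external library is needed here. The feature names, weights, and baseline values are hypothetical and chosen only for readability; real systems would typically use non-linear models and a dedicated explainability package.

```python
# Hypothetical linear risk model on the log-odds scale (all numbers are assumptions).
WEIGHTS = {"acute_chronic_load_ratio": 0.8, "sleep_hours": -0.3, "prior_injuries": 0.5}
INTERCEPT = -1.0
BASELINE = {"acute_chronic_load_ratio": 1.0, "sleep_hours": 7.5, "prior_injuries": 1.0}
ATHLETE = {"acute_chronic_load_ratio": 1.4, "sleep_hours": 6.0, "prior_injuries": 2.0}


def linear_attributions(weights, baseline, athlete):
    """Per-feature contribution: weight * (athlete value - baseline value)."""
    return {name: w * (athlete[name] - baseline[name]) for name, w in weights.items()}


def risk_report(weights, intercept, baseline, athlete):
    """Return the score, the contributions, and a plain-language summary."""
    contributions = linear_attributions(weights, baseline, athlete)
    baseline_score = intercept + sum(w * baseline[n] for n, w in weights.items())
    # Additivity: the contributions account exactly for the gap to the baseline.
    athlete_score = baseline_score + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = [f"Risk score: {athlete_score:.2f} (squad baseline {baseline_score:.2f})"]
    for name, value in ranked:
        direction = "raises" if value > 0 else "lowers"
        lines.append(f"  {name} {direction} the score by {abs(value):.2f}")
    return athlete_score, contributions, "\n".join(lines)


score, contributions, text = risk_report(WEIGHTS, INTERCEPT, BASELINE, ATHLETE)
print(text)
```

Ranking contributions by magnitude and phrasing them as "raises" or "lowers" is one way to meet the usability criterion described above: the athlete can see which input drives the score and can dispute a specific value (for example, an incorrectly recorded injury history) rather than the model as a whole.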

Finally, the harmonization of national and supranational governance structures—such as recommendations from the EU High-Level Expert Group on AI, FIFA, IOC, or UNESCO—is key to ensuring consistent application of ethical principles. Integrating these frameworks into local practices will require not only regulatory coordination but also grassroots engagement to align technical implementation with athlete-centered values.

5. Conclusions

This narrative review examined the ethical landscape of artificial intelligence (AI) applications in athlete health monitoring and injury prediction, analyzing 24 academic studies spanning technical, conceptual, and interdisciplinary domains. The findings reveal a critical disjunction between the growing technical capabilities of AI in sports, especially in predictive accuracy and data integration, and the underdeveloped ethical frameworks governing its use. They can be summarized in the following five conclusions:

- Across professional and amateur sports, AI systems for injury prediction consistently raise issues of data privacy, athlete autonomy, informed consent, algorithmic fairness, and governance gaps.

- Despite the availability of technical methods (e.g., explainable AI), biases often persist due to non-representative datasets and incomplete integration of ethical safeguards in model design.

- Existing frameworks (HIPAA, FERPA, GDPR) provide only partial coverage, especially in domains such as youth sports, contact sports, and Paralympic contexts, where the ethical stakes are higher.

- Tools like SHAP and Bayesian visualizations offer greater model explainability but rarely extend meaningful decision rights to athletes, leaving them with limited avenues to contest AI-generated risk scores.

- Institutional control of athlete data, combined with the absence of enforceable data rights, underscores a persistent disjunction between AI’s expanding predictive capabilities and underdeveloped oversight mechanisms.

6. Recommendations

Artificial intelligence (AI) is becoming increasingly central to modern sports, enabling the prediction of injuries and the monitoring of athlete health. While these advancements promise unprecedented insights into performance and risk management, they also introduce complex ethical dilemmas around privacy, autonomy, and fairness. The following recommendations aim to address these challenges and chart a path toward more responsible and equitable AI adoption in sports medicine:

- Establish Athlete Data Rights. Athletes need enforceable rights over their biometric and health data, including the ability to access, correct, and control its use. These rights should appear in collective bargaining agreements, institutional ethics policies, and national or international laws. Contracts must explicitly allow athletes to consent to or withdraw from AI-based monitoring, ensuring they can challenge data-driven decisions affecting their well-being or career.

- Develop Sport-Specific Ethical Frameworks. Because ethical risks vary across different sports, federations should create tailored guidelines that define risk thresholds, acceptable trade-offs, and inclusive standards for underrepresented groups (e.g., Paralympic athletes, youth players, and women’s leagues). This context-sensitive approach guards against one-size-fits-all policies that overlook unique needs within each sporting domain.

- Institutionalize Participatory Governance. AI deployment must involve stakeholders at every level, including athletes, coaches, medical staff, and AI specialists. Establishing ethics boards within sports organizations ensures shared responsibility for data governance policies, providing mechanisms for regular reviews and iterative improvements. This collaborative model prioritizes athlete welfare and autonomy in the adoption of AI.

- Ensure Transparency, Explainability, and Contestability. AI systems should incorporate clear explainability modules alongside athlete-facing dashboards to prevent unseen biases or unchecked decision-making. Such tools enable athletes and staff to understand, question, or appeal risk scores that can influence crucial decisions, such as team selection or contract negotiations. Standardized appeal procedures further support fairness and trust in AI-driven outcomes.

- Create Independent Oversight Structures. Finally, an independent, interdisciplinary oversight body, potentially modeled on clinical or public health ethics boards, should monitor AI usage, evaluate fairness, and address harm or rights violations. With representatives from sports law, bioethics, data science, and athlete advocacy, this body can recommend corrective actions or even suspend AI tools that fail to meet ethical standards.

7. Future Research

The synthesis of 24 included studies highlights several critical gaps that future research should address. First, empirical investigations remain overly concentrated on elite male athletes, limiting the generalizability of findings to youth, female, and Paralympic populations. Expanding datasets to reflect diverse demographics and sport-specific risk profiles is crucial for reducing sampling and representational bias. Second, the technical development of explainable AI has outpaced its ethical integration. While methods such as SHAP and interpretable Bayesian models are advancing, there is limited evidence that these tools enhance athletes’ agency or decision-making power. Future studies should therefore evaluate not only predictive accuracy but also athlete comprehension, trust, and capacity to contest AI-driven outcomes. Third, there is a need for longitudinal research on the long-term consequences of biometric data use, particularly around consent withdrawal, data portability, and post-career governance. Ultimately, interdisciplinary collaborations—combining sports science, data science, law, and bioethics—will be crucial for developing governance frameworks that can adapt to rapidly evolving technologies.

8. Study Limitations

Several limitations of this review should be acknowledged. First, although the search strategy followed PRISMA principles and encompassed multiple databases, the synthesis was conducted as a narrative review rather than a full systematic review with meta-analysis. This approach, while suitable for mapping conceptual and ethical dimensions, may have introduced selection bias in the inclusion and interpretation of studies. Second, the heterogeneity of the 24 included studies—spanning empirical machine learning applications, conceptual essays, and policy-oriented analyses—limited the possibility of direct quantitative comparison across methodologies and outcomes. Third, only English-language publications were included, potentially excluding relevant perspectives published in other languages. Finally, as AI technologies evolve rapidly, the evidence base analyzed here may quickly become outdated, underscoring the need for continuous monitoring of emerging literature and practices.

Author Contributions

Conceptualization, Z.W.; methodology, Z.W.; visualization, Z.W.; writing—original draft preparation, Z.W., G.J., K.J.S. and T.G.; writing—review and editing, Z.W., G.J., K.J.S. and T.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Higher Education, Republic of Poland, grant number MNiSW/2024/DAP/225.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article material. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mateus, N.; Abade, E.; Coutinho, D.; Gómez, M.-Á.; Peñas, C.L.; Sampaio, J. Empowering the Sports Scientist with Artificial Intelligence in Training, Performance, and Health Management. Sensors 2025, 25, 139.

- MacLean, E. The Case of Tracking Athletes’ Every Move: Biometrics in Professional Sports and the Legal and Ethical Implications of Data Collection. SSRN Electron. J. 2020.

- Karkazis, K.; Fishman, J.R. Tracking U.S. Professional Athletes: The Ethics of Biometric Technologies. Am. J. Bioeth. 2016, 17, 45–60.

- Zhang, J.; Li, J. Mitigating Bias and Error in Machine Learning to Protect Sports Data. Comput. Intell. Neurosci. 2022, 2022, 4777010.

- Purcell, R.H.; Rommelfanger, K.S. Biometric Tracking from Professional Athletes to Consumers. Am. J. Bioeth. 2017, 17, 72–74.

- Rush, C.; Osborne, B. Benefits and Concerns Abound, Regulations Lack in Collegiate Athlete Data Collection. J. Leg. Asp. Sport 2022, 32, 62–94.

- Gibson, R.B.; Nelson, A. Smart Mouthguards and Contact Sport: The Data Ethics Dilemma. J. Med. Ethics 2024, 51, 508–511.

- Palermi, S.; Vecchiato, M.; Saglietto, A.; Niederseer, D.; Oxborough, D.; Ortega-Martorell, S.; Olier, I.; Castelletti, S.; Baggish, A.; Maffessanti, F.; et al. Unlocking the Potential of Artificial Intelligence in Sports Cardiology: Does It Have a Role in Evaluating Athlete’s Heart? Eur. J. Prev. Cardiol. 2024, 31, 470–482.

- Wang, D.-Y.; Ding, J.; Sun, A.-L.; Liu, S.-G.; Jiang, D.; Li, N.; Yu, J.-K. Artificial Intelligence Suppression as a Strategy to Mitigate Artificial Intelligence Automation Bias. J. Am. Med. Inform. Assoc. 2023, 30, 1684–1692.

- Hudson, D.; Den Hartigh, R.J.R.; Meerhoff, L.R.A.; Atzmueller, M. Explainable Multi-Modal and Local Approaches to Modelling Injuries in Sports Data. In Proceedings of the 2023 IEEE International Conference on Data Mining Workshops (ICDMW), Shanghai, China, 1–4 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 949–957.

- Akpinar Kocakulak, N.; Sayagin, A.S. The Importance of Nano Biosensors and Ethical Elements in Sport Performance Analysis. Nat. Appl. Sci. J. 2020, 3, 17–27.

- van Eetvelde, H.; Mendonça, L.D.M.; Ley, C.; Seil, R.; Tischer, T. Machine Learning Methods in Sport Injury Prediction and Prevention: A Systematic Review. J. Exp. Orthop. 2021, 8, 27.

- Phatak, A.A.; Wieland, F.G.; Vempala, K.; White, P.E.; Koch, T.; Narayan, V. Artificial Intelligence Based Body Sensor Network Framework—Narrative Review: Proposing an End-to-End Framework Using Wearable Sensors, Real-Time Location Systems and Artificial Intelligence/Machine Learning Algorithms for Data Collection, Data Mining and Knowledge Discovery in Sports and Healthcare. Sports Med. Open 2021, 7, 79.

- Gabbett, T.J. The Development and Application of an Injury Prediction Model for Noncontact, Soft-Tissue Injuries in Elite Collision Sport Athletes. J. Strength Cond. Res. 2010, 24, 2593–2603.

- Nassis, G.P.; Verhagen, E.; Brito, J.; Figueiredo, P.; Krustrup, P. A Review of Machine Learning Applications in Soccer with an Emphasis on Injury Risk. Biol. Sport 2023, 40, 233–239.

- Hecksteden, A.; Schmartz, G.P.; Egyptien, Y.; aus der Fünten, K.; Keller, A.; Meyer, T. Forecasting Football Injuries by Combining Screening, Monitoring and Machine Learning. Sci. Med. Footb. 2022, 7, 214–228.

- Hecksteden, A.; Keller, N.; Zhang, G.; Meyer, T.; Hauser, T. Why Humble Farmers May in Fact Grow Bigger Potatoes: A Call for Street-Smart Decision-Making in Sport. Sports Med. Open 2023, 9, 94.

- West, S.; Shrier, I.; Impellizzeri, F.M.; Clubb, J.; Ward, P.; Bullock, G. Training-Load Management Ambiguities and Weak Logic: Creating Potential Consequences in Sport Training and Performance. Int. J. Sports Physiol. Perform. 2025, 20, 481–484.

- Impellizzeri, F.M.; Shrier, I.; McLaren, S.J.; Coutts, A.J.; McCall, A.; Slattery, K.; Jeffries, A.C.; Kalkhoven, J.T. Understanding Training Load as Exposure and Dose. Sports Med. 2023, 53, 1667–1679.

- Yung, K.K.Y.; Wu, P.P.Y.; aus der Fünten, K.; Hecksteden, A.; Meyer, T. Using a Bayesian Network to Classify Time to Return to Sport Based on Football Injury Epidemiological Data. PLoS ONE 2025, 20, e0314184.

- Morgulev, E. The Looming Threat of Digital Determinism and Discrimination: Commentary on “The Feudal Glove of Talent-Selection Decisions in Sport–Strengthening the Link between Subjective and Objective Assessments” by Bar-Eli et al. (2023). Asian J. Sport Exerc. Psychol. 2024, 4, 29–30.

- Musat, C.L.; Mereuta, C.; Nechita, A.; Tutunaru, D.; Voipan, A.E.; Voipan, D.; Mereuta, E.; Gurau, T.V.; Gurău, G.; Nechita, L.C. Diagnostic Applications of AI in Sports: A Comprehensive Review of Injury Risk Prediction Methods. Diagnostics 2024, 14, 2516.