Artificial Neural Network, Attention Mechanism and Fuzzy Logic-Based Approaches for Medical Diagnostic Support: A Systematic Review

Abstract

1. Introduction

- Which algorithms or techniques of artificial neural networks, attention mechanisms, and fuzzy logic were selected to be integrated?

- How was the integration between algorithms or techniques of artificial neural networks, attention mechanisms, and fuzzy logic performed?

- What impact does the integration of algorithms or techniques of artificial neural networks, attention mechanisms, and fuzzy logic have on the outcome of the proposal?

- What are the characteristics of the input data and of the data to be predicted, classified or inferred?

- What methods or metrics were used to assess results?

- We identify and summarize the techniques and/or algorithms of artificial neural networks, attention mechanisms, and fuzzy logic that have been integrated and applied to medical diagnostic support.

- We classify and describe the integration of artificial neural networks, attention mechanisms, and fuzzy logic algorithms or techniques.

- We analyze and condense the information on the impact of integrating algorithms or techniques from artificial neural networks, attention mechanisms, and fuzzy logic into the models proposed in the publications.

- We identify future research lines of approaches using artificial neural networks, attention mechanisms, and fuzzy logic algorithms or techniques.

2. Background and Related Work

2.1. Artificial Neural Networks (ANNs)

2.2. Attention Mechanisms

2.3. Fuzzy Logic

3. Methodology

3.1. Eligibility Criteria

3.2. Sources of Information

3.3. Search Strategy

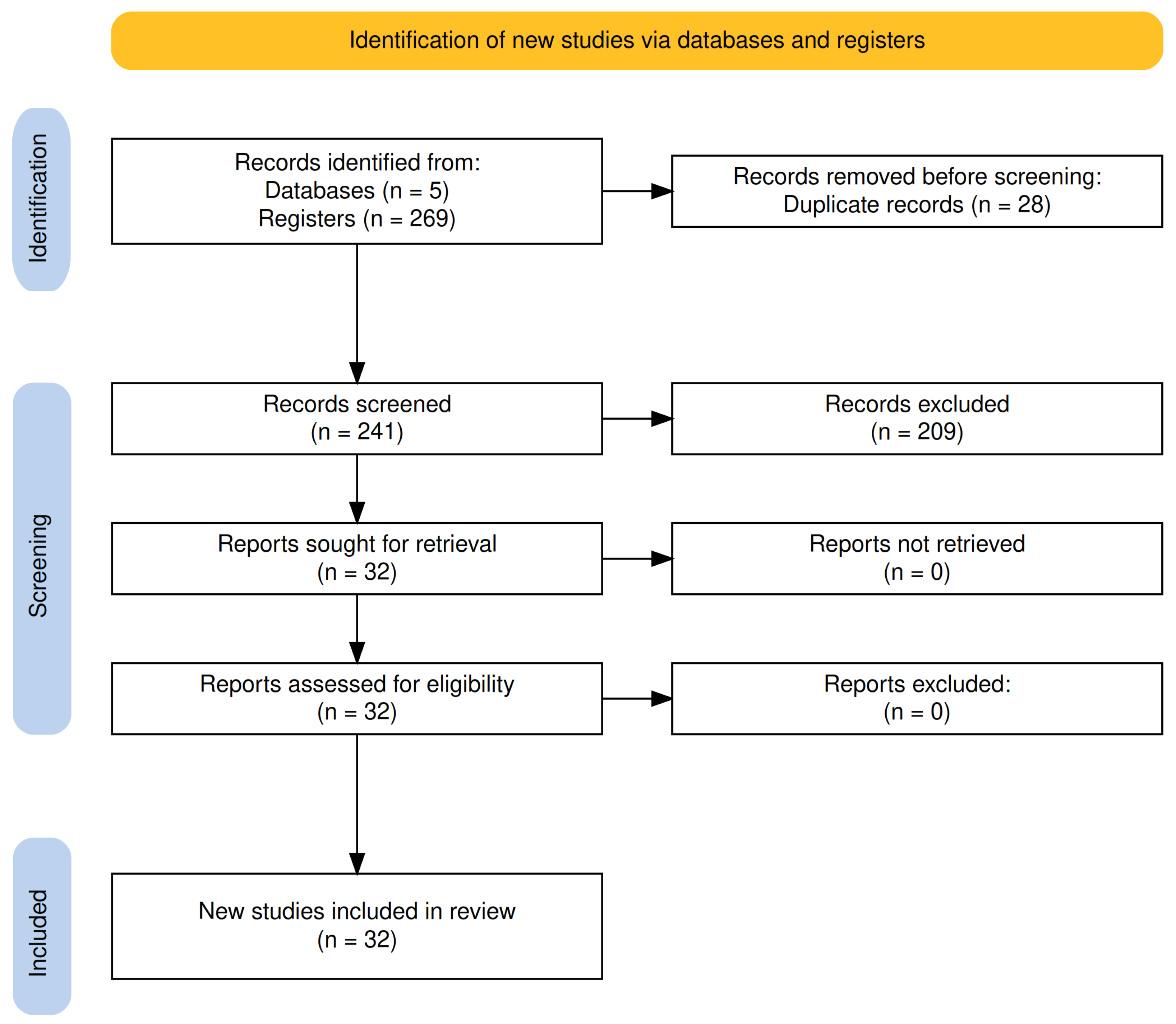

3.4. Selection Process

- Search by source: For each database, we applied the previously defined search string, adapting it according to the particularities of each platform’s search engine. Some inclusion criteria, such as publication period (2020–2025) and language (English), were incorporated directly into the search strings to refine the results from the beginning.

- Title and abstract retrieval: We used a bibliographic reference manager to retrieve the list of publications obtained from each source, including abstracts, when available. This information enabled us to perform an efficient initial filtering of the publications.

- Removal of duplicates: With the help of the bibliographic reference manager, we identified and removed duplicate publications, ensuring that each publication considered in the following stages was unique.

- Review of titles and abstracts: We then reviewed and analyzed the title and abstract of each publication to assess its correspondence with the inclusion and exclusion criteria defined in the protocol of our systematic review. At this stage, we discarded all publications that did not align with the objectives of our systematic review.

- Advanced review of the full text: In cases where the title and abstract did not provide sufficient information to determine the publication’s relevance, we accessed the full text to further evaluate its potential inclusion.

- Quality assessment: To minimize the risk of bias resulting from the methodological quality of the included publications, we conducted a quality assessment for each preselected publication. This analysis allowed us to ensure that the final publications had the level of scientific rigor necessary to answer our research questions.
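Duplicate removal was performed with a bibliographic reference manager. The sketch below only illustrates the kind of matching such tools perform and is not the tool we used. It assumes each exported record is a dictionary with a `title` field and an optional `doi` field (hypothetical field names), and it treats two records as duplicates when they share a DOI or a normalized title.

```python
import re

def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation/whitespace so near-identical titles match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each publication, matching on DOI or normalized title."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        if rec.get("doi"):
            keys.add("doi:" + rec["doi"].strip().lower())
        if keys & seen:
            continue  # shares a DOI or a normalized title with an earlier record
        seen |= keys
        unique.append(rec)
    return unique
```

Matching on either key catches the common case in which one database exports a DOI and another does not.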

3.5. Risk of Bias Assessment (Quality Assessment)

- The question is answered completely = 1.

- The question is answered in a general way = 0.5.

- The question is not answered = 0.

- Q-01: Is the information on the source of the publication clear?

- Q-02: Does the publication have the basic sections of a scientific report?

- Q-03: Do they define the problem they address?

- Q-04: Do they describe what the input (source) data are?

- Q-05: Do they describe what the output data (prediction or classification) are?

- Q-06: Are the artificial neural network, attention mechanism, and fuzzy logic algorithms mentioned?

- Q-07: Is the integration between artificial neural network algorithms, attention mechanisms, and fuzzy logic clearly described?

- Q-08: Do they use metrics to evaluate the results?

- Q-09: Is there any mention of the impact of the integration of the algorithms on the results?

- Q-10: Do they clearly present results?

- Q-11: Does the discussion section consider the implications of the proposal and compare their results with similar ones?
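Under the scoring scheme above, a publication's quality score is the sum of its 11 per-question scores, with a maximum of 11. The sketch below assumes the answers are recorded with the hypothetical labels `complete`, `general`, and `none`; it applies no inclusion threshold, since none is stated here.

```python
# Per-question scores from Section 3.5: answered completely = 1,
# answered in a general way = 0.5, not answered = 0.
SCORE = {"complete": 1.0, "general": 0.5, "none": 0.0}
QUESTIONS = [f"Q-{i:02d}" for i in range(1, 12)]  # Q-01 ... Q-11

def quality_score(answers: dict[str, str]) -> float:
    """Sum the per-question scores for one publication (maximum 11)."""
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"unscored questions: {missing}")
    return sum(SCORE[answers[q]] for q in QUESTIONS)

# Hypothetical publication: everything answered completely except Q-07 and Q-09
example = {q: "complete" for q in QUESTIONS}
example["Q-07"] = "general"
example["Q-09"] = "none"
print(quality_score(example))  # 9.5
```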

3.6. Data Extraction and Synthesis of Results

- General information about the publication.

- Information about the source of the publication, including publication type, journal name, and date.

- Details about the datasets used, including general characteristics, pre-processing techniques, and descriptions of the target data.

- Technical information on the algorithms and approaches applied, specifically artificial neural networks, attention mechanisms, and fuzzy logic.

- Details on the integration between the approaches, including how they were combined, the impact of such integration on the results, the evaluation metrics used, and possible areas of opportunity reported by the authors.

3.7. Information Bias

4. Results

4.1. Study Selection

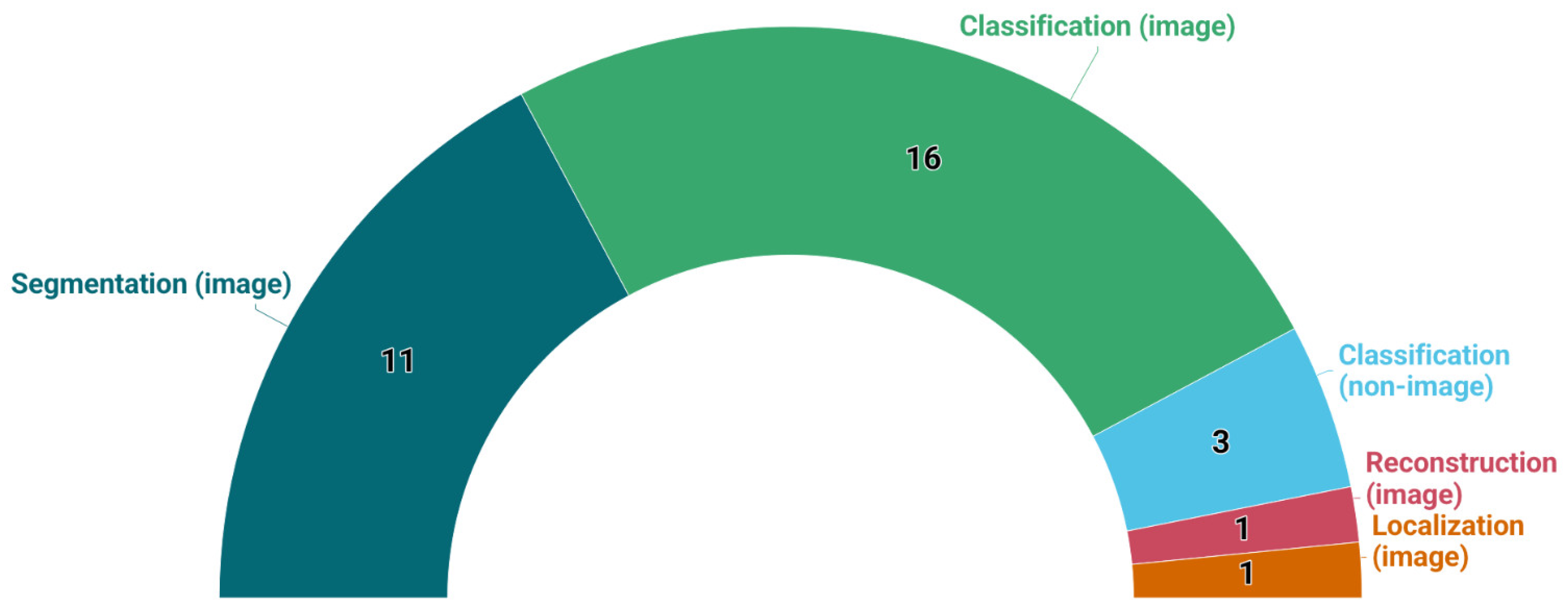

4.2. Study Characteristics

4.3. Risk of Bias Within Studies

4.4. Synthesis of Results

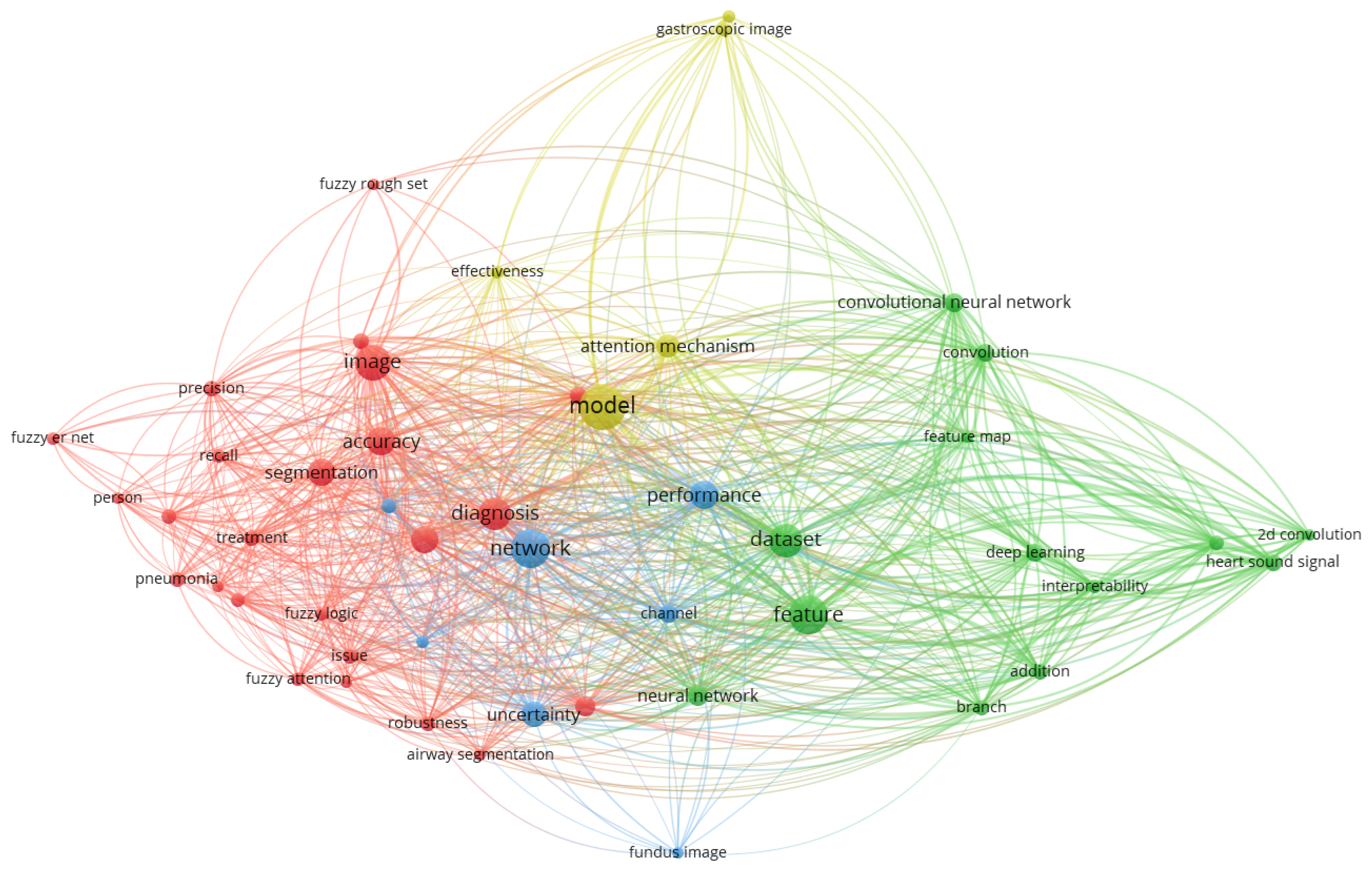

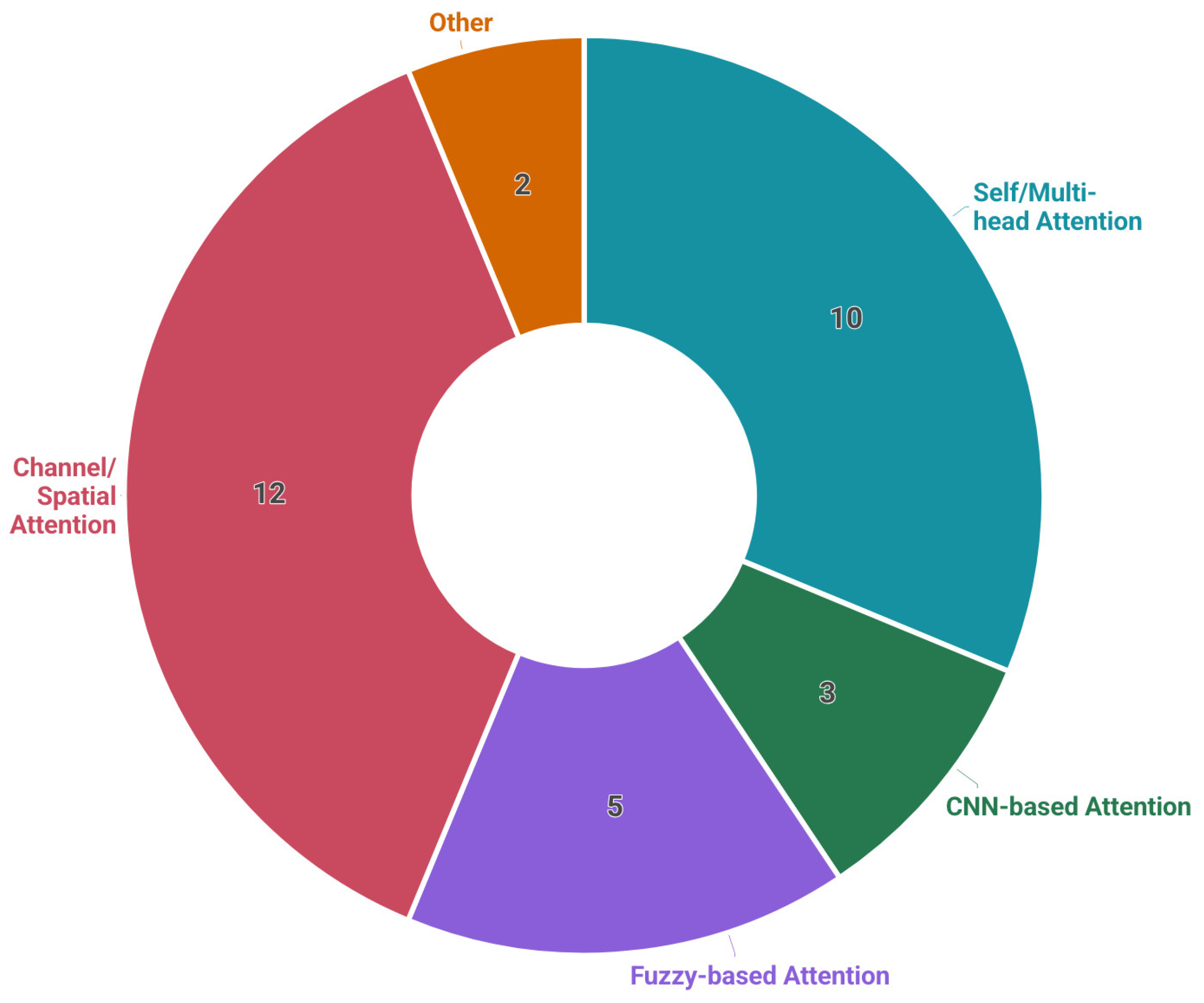

4.4.1. RQ1: What Algorithms or Techniques of Artificial Neural Networks, Attention Mechanisms, and Fuzzy Logic Were Selected to Be Integrated?

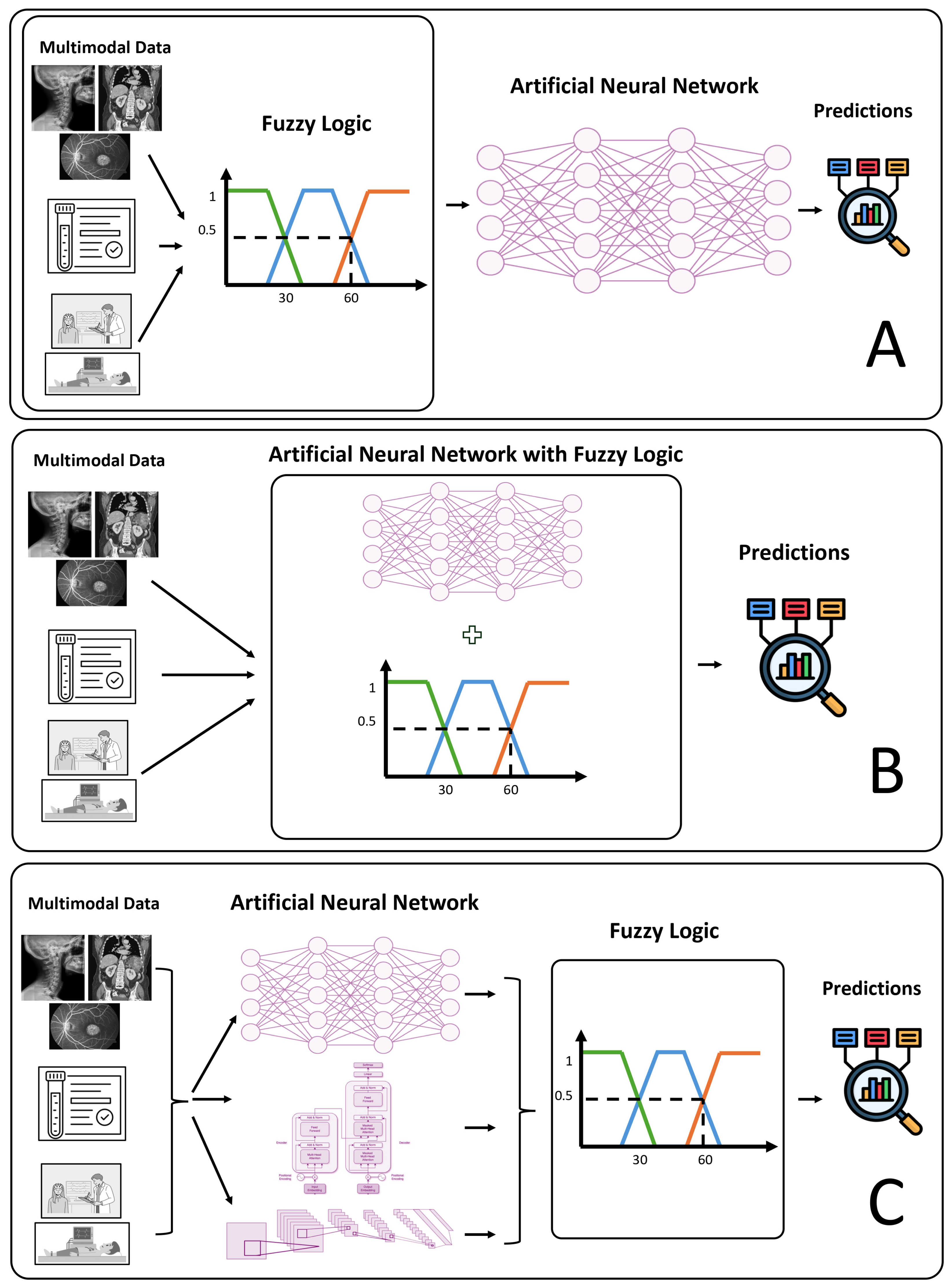

4.4.2. RQ2: How Was the Integration Between Algorithms or Techniques of Artificial Neural Networks, Attention Mechanisms, and Fuzzy Logic Performed?

- (A) Integration of Fuzzy Logic as Pre-processing or Initial Data Transformation

- (B) Integration of Fuzzy Logic Directly into Attention Mechanisms

- (C) Integration of Fuzzy Logic into the Architecture of Artificial Neural Networks

- (D) Integration of Fuzzy Logic in Post-processing Stages or Result Fusion

- (E) Integration of Fuzzy Logic in the Loss Function or for Optimization

- (F) Hybrid Neuro-Fuzzy Systems as Key Components
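To make the category of fuzzy logic integrated directly into attention mechanisms more concrete, the following sketch shows one possible way of injecting a fuzzy membership degree into scaled dot-product attention. It is a hypothetical illustration, not the method of any specific reviewed publication: the Gaussian membership of each key's mean activation in an assumed fuzzy set (parameters `center` and `sigma`) multiplies the softmax attention weights, which are then renormalized.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def gaussian_membership(x, center, sigma):
    """Degree of membership of x in a Gaussian fuzzy set."""
    return math.exp(-((x - center) ** 2) / (2 * sigma ** 2))

def fuzzy_modulated_attention(query, keys, values, center=0.0, sigma=1.0):
    """Single-query attention whose weights are modulated by fuzzy memberships (hypothetical)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Hypothetical fuzzy step: membership of each key's mean activation
    memberships = [gaussian_membership(sum(key) / len(key), center, sigma) for key in keys]
    modulated = [w * mu for w, mu in zip(weights, memberships)]
    total = sum(modulated)
    if total == 0.0:
        raise ValueError("all memberships vanished; widen sigma")
    weights = [m / total for m in modulated]
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output
```

With a very large `sigma`, every membership approaches 1 and the function reduces to ordinary softmax attention, which makes the fuzzy term easy to ablate.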

4.4.3. RQ3: What Impact Does the Integration of Algorithms or Techniques of Artificial Neural Networks, Attention Mechanisms, and Fuzzy Logic Have on the Outcome of the Proposal?

- (A) Impact of Attention Mechanisms

- (B) Impact of Fuzzy Logic Techniques

- (C) Impact of Combining Attention and Fuzzy Logic

4.4.4. RQ4: What Are the Characteristics of the Input Data and of the Data to Be Predicted, Classified, or Inferred?

- (A) Characteristics of Input Data

- Computed tomography (CT): Chest CT scans are used for lung and airway segmentation, as in [27,28]. Abdominal CT scans are also used for organ segmentation, such as the pancreas in [10]. Additionally, CT images are utilized for image reconstruction, as seen in [40], and for lung cancer classification in [32].

- Magnetic resonance imaging (MRI): Brain MRIs are used for the diagnosis of brain tumors in [15,18] and, together with PET, for predicting Alzheimer’s disease in [38]. Cardiac MRIs are used for segmentation in [24,37], and breast MRIs are used for cancer classification in [33]. Refs. [9,39,42] also use MRI as input data.

- Histopathological images: Ref. [13] uses histopathological images of different tissues for classification.

- Other imaging modalities: Ref. [22] uses gastric endoscopy images to locate polyps. Ref. [34] uses peripheral blood smear images for the diagnosis of acute lymphoblastic leukemia. Refs. [19,31] use dermatoscopy images for the detection and classification of skin cancer. Ref. [43] uses images of diabetic foot ulcers for segmentation.

- Data augmentation: Widely applied to improve the robustness and generalization of models; techniques include flips (horizontal and vertical), random rotations, scaling or zooming, noise addition, and data synthesis with generative adversarial networks (GANs), as in [19].

- Audio signals: Ref. [14] applies resampling at 1000 Hz, filtering (bandpass 25–400 Hz), denoising, and extraction of features from the time domain (mean, variance), frequency domain (FFT), and time-frequency domain (MFCCs).

- EEG signals: Ref. [17] applies bandpass filtering, a notch filter to suppress power-line interference, wavelet denoising, frequency band decomposition, and data segmentation.

- mRNA expression data: Ref. [36] uses Z-score and min–max normalization and feature selection with ANOVA and Relief algorithm.

- MRI: The Multioutput Takagi–Sugeno–Kang Fuzzy System in [39] addresses interference and intrinsic noise in rs-fMRI data.
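For readers unfamiliar with Takagi–Sugeno–Kang (TSK) systems such as the one used in [39], the sketch below shows generic first-order, multi-output TSK inference: each rule has one Gaussian antecedent per input dimension, combined with the product t-norm, and one linear consequent per output; the system output is the firing-strength-weighted average of the rule outputs. The rule format is an assumption for illustration; the rule base, membership functions, and training procedure of [39] are not reproduced.

```python
import math

def gaussian(x, center, sigma):
    return math.exp(-((x - center) ** 2) / (2 * sigma ** 2))

def tsk_infer(x, rules):
    """First-order, multi-output Takagi-Sugeno-Kang inference.

    Each rule is (centers, sigmas, consequents): one Gaussian antecedent per input
    dimension, and consequents[k] = [b0, b1, ..., bn] defines output k as
    b0 + b1*x1 + ... + bn*xn.
    """
    firing, rule_outputs = [], []
    for centers, sigmas, consequents in rules:
        strength = 1.0
        for xi, c, s in zip(x, centers, sigmas):
            strength *= gaussian(xi, c, s)  # product t-norm over antecedents
        firing.append(strength)
        rule_outputs.append([b[0] + sum(bi * xi for bi, xi in zip(b[1:], x)) for b in consequents])
    total = sum(firing)
    if total == 0.0:
        raise ValueError("no rule fires for this input")
    n_outputs = len(rule_outputs[0])
    # Output k: firing-strength-weighted average of the rule outputs for k
    return [sum(w * y[k] for w, y in zip(firing, rule_outputs)) / total for k in range(n_outputs)]

# Hypothetical two-rule, one-input system: "x is low" -> y = 0, "x is high" -> y = 2
rules = [([-1.0], [1.0], [[0.0, 0.0]]), ([1.0], [1.0], [[2.0, 0.0]])]
y = tsk_infer([0.0], rules)
```

Because every output is a weighted average of explicit linear rules, the contribution of each rule to a prediction can be inspected, which is the interpretability property attributed to TSK systems.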

- (B) Characteristics of Data to Be Predicted, Classified, or Inferred

- Two classes: For the diagnosis of autism in [39], Parkinson’s disease in [17], breast cancer in [16,33], prostate cancer metastasis in [36], pediatric oral health screening in [44], acute lymphoblastic leukemia in [34], skin cancer in [31], lymph node metastasis in [35], and cardiovascular disease in [14].

- Seven classes: For the classification of dermatoscopy lesions in [19].

- Segmentation masks: Ref. [9] produces image segmentation using contour lines. Ref. [27] generates an edge mask for lung organ segmentation. Ref. [10] produces a mask for pancreatic segmentation, as well as a 3D reconstruction of the organ. Ref. [43] generates segmentation masks for diabetic foot ulcer images. Ref. [28] produces a mask and 3D reconstruction for airway segmentation. Refs. [24,37] focus on segmenting cardiac MRI images to identify regions such as the right ventricle (RV), left ventricle (LV), and left ventricular myocardium (MYO).

- Probability maps: Ref. [25] generates probability maps of segmentation regions for fundus OCT images.

- Specified segment: Ref. [15] performs segmentation of a specified area in brain MRI images.

4.4.5. RQ5: What Methods or Metrics Were Used to Assess Results?

- (A) Classification and Overall Performance Metrics

- Accuracy (ACC): Represents the percentage of correct predictions made by the model out of the total number of predictions.

- Precision (Pre): Measures the proportion of true positives among all instances classified as positive by the model, indicating the reliability of positive predictions.

- Recall (Rec)/Sensitivity (SEN)/true-positive rate (TPR): The proportion of actual positive instances that the model correctly identifies as positive.

- F1-score (F1)/F-measure: The harmonic mean of precision and recall, which balances the two metrics and is especially useful for imbalanced datasets.

- Area under the curve (AUC): Quantifies the ability of a classifier to distinguish between classes, being the area under the receiver operating characteristic (ROC) curve.

- Specificity (SPE)/true-negative rate (TNR): The proportion of actual negative instances that the model correctly identifies as negative.

- Positive predictive value (PPV): The probability that a positive prediction is truly positive; it is equivalent to precision.

- False-positive rate (FPR): Indicates the proportion of false positives out of the total number of actual negative cases.

- Quadratic weighted kappa (kappa): Measures the degree of agreement between two raters (or between the model and the ground truth), corrected for chance agreement, and penalizes distant classification errors more heavily than near misses. It is useful for ordinal classification problems such as disease grading.
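The classification metrics above can be computed directly from confusion-matrix counts. The sketch below is a minimal, dependency-free illustration for the binary case, plus quadratic weighted kappa for ordinal labels encoded as integers from 0 to `n_classes - 1`. It does not guard against zero denominators (for example, a class that never appears), which real evaluations must handle.

```python
def binary_metrics(tp, fp, tn, fn):
    """Confusion-matrix metrics for a binary classifier (no zero-division guards)."""
    precision = tp / (tp + fp)  # also the positive predictive value (PPV)
    recall = tp / (tp + fn)     # sensitivity / true-positive rate (TPR)
    return {
        "acc": (tp + tn) / (tp + fp + tn + fn),
        "pre": precision,
        "rec": recall,
        "spe": tn / (tn + fp),  # true-negative rate (TNR)
        "fpr": fp / (fp + tn),  # equals 1 - specificity
        "f1": 2 * precision * recall / (precision + recall),
    }

def quadratic_weighted_kappa(y_true, y_pred, n_classes):
    """Cohen's kappa with quadratic weights for integer labels 0..n_classes-1."""
    observed = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    n = len(y_true)
    true_hist = [sum(row) for row in observed]
    pred_hist = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    num = den = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = (i - j) ** 2 / (n_classes - 1) ** 2  # quadratic penalty for distance
            expected = true_hist[i] * pred_hist[j] / n     # agreement expected by chance
            num += weight * observed[i][j]
            den += weight * expected
    return 1.0 - num / den
```

Kappa equals 1 for perfect agreement, 0 for chance-level agreement, and can be negative when predictions are systematically reversed.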

- (B) Segmentation and Region Similarity Metrics

- Dice similarity coefficient (DSC/Dice coefficient): A widely used metric for measuring the overlap between the predicted segmentation and the true target area, with higher values indicating greater similarity.

- Jaccard similarity coefficient (JSC/Jaccard coefficient/intersection over union (IoU)): Similar to the Dice coefficient, it measures the overlap between two sets, being the ratio of the intersection area to the union area.

- Volumetric overlap error (VOE): Quantifies the proportion of nonoverlapping volume between the predicted segmentation and the true target region; it equals one minus the Jaccard coefficient and is often expressed as a percentage.

- Relative absolute volume difference (RAVD): Measures the relative volume difference between the predicted and true segmentation, normalized by the volume of the true segmentation.

- Pixel accuracy (PA): The ratio of correctly classified pixels to the total number of pixels in the image.

- Class pixel accuracy (CPA): The pixel accuracy computed separately for each class.

- Mean pixel accuracy (MPA): The per-class pixel accuracies averaged across all classes.
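A minimal sketch of the region similarity metrics above for flattened masks follows. It assumes binary masks given as 0/1 sequences for Dice, IoU, VOE, RAVD, and PA, and integer class labels for CPA and MPA. Here, CPA is computed relative to the ground-truth pixels of each class; some implementations divide by the predicted pixels instead, so reported CPA/MPA values may not be directly comparable across publications.

```python
def overlap_metrics(pred, truth):
    """Dice, IoU, VOE, RAVD and pixel accuracy for flattened binary (0/1) masks."""
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    p_sum, t_sum = sum(pred), sum(truth)
    union = p_sum + t_sum - inter
    iou = inter / union
    return {
        "dice": 2 * inter / (p_sum + t_sum),
        "iou": iou,
        "voe": 1 - iou,                           # volumetric overlap error
        "ravd": abs(p_sum - t_sum) / t_sum,       # relative absolute volume difference
        "pa": sum(1 for p, t in zip(pred, truth) if p == t) / len(truth),
    }

def class_pixel_accuracy(pred, truth, n_classes):
    """Per-class accuracy over ground-truth pixels (assumes every class occurs in truth)."""
    cpa = []
    for c in range(n_classes):
        idx = [i for i, t in enumerate(truth) if t == c]
        cpa.append(sum(1 for i in idx if pred[i] == c) / len(idx))
    return cpa

def mean_pixel_accuracy(pred, truth, n_classes):
    cpa = class_pixel_accuracy(pred, truth, n_classes)
    return sum(cpa) / len(cpa)
```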

- (C) Distance and Surface Metrics

- Hausdorff distance (HD/95HD/HD95): The largest distance from any point in one set to its nearest point in the other set, taken over both directions; it is often used to evaluate the difference between segmentation contours. The 95th-percentile variant (95HD/HD95) reduces sensitivity to outliers.

- Average surface distance (ASD/ASSD): The average distance between the surface of the predicted segmentation and the surface of the true target region. ASSD (average symmetric surface distance) makes explicit that distances are averaged in both directions; in practice, the two abbreviations are often used interchangeably.

- Root mean square (symmetric) surface distance (RMSD): The square root of the mean of the squared distances between the two surfaces.
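The distance metrics above can be illustrated on two small sets of surface points. The sketch below uses a brute-force nearest-neighbour search and a nearest-rank percentile. HD95 conventions vary (some tools take the 95th percentile of the pooled distances rather than the maximum of the two directed percentiles), so this is one assumed variant rather than a reference implementation.

```python
import math

def directed_distances(a, b):
    """Distance from each point in a to its nearest neighbour in b (brute force)."""
    return [min(math.dist(p, q) for q in b) for p in a]

def nearest_rank_percentile(values, q):
    """Nearest-rank percentile (q in 0..100) of a non-empty list."""
    ordered = sorted(values)
    k = max(0, math.ceil(q / 100 * len(ordered)) - 1)
    return ordered[k]

def surface_distance_metrics(a, b):
    """HD, HD95, ASSD and RMSD between two point sets (e.g., contour or surface voxels)."""
    d_ab = directed_distances(a, b)
    d_ba = directed_distances(b, a)
    pooled = d_ab + d_ba  # symmetric: distances in both directions
    return {
        "hd": max(max(d_ab), max(d_ba)),
        "hd95": max(nearest_rank_percentile(d_ab, 95), nearest_rank_percentile(d_ba, 95)),
        "assd": sum(pooled) / len(pooled),
        "rmsd": math.sqrt(sum(d * d for d in pooled) / len(pooled)),
    }
```

For realistic 3D volumes, the quadratic brute-force search would typically be replaced by a distance transform or a spatial index.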

- (D) Specific Image Quality and Reconstruction Metrics

- Peak signal-to-noise ratio (PSNR): A measure of image reconstruction quality where higher values indicate greater fidelity to the original image.

- Structural similarity index (SSIM): Evaluates the similarity of two images in terms of luminance, contrast, and structure, providing a metric that is more closely aligned with human perception than pixel-wise errors such as MSE.

- Mean squared error (MSE): Measures the average of the squares of the errors between the predicted values and the actual values, commonly used in regression and reconstruction.

- Mean absolute error (MAE): Calculates the average of the absolute differences between the predicted values and the actual values, providing a measure of the average magnitude of the errors.
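MSE, MAE, and PSNR follow directly from their definitions above; SSIM requires local windowed statistics and is omitted from this minimal sketch. The sketch assumes flattened intensity sequences and a known maximum intensity `max_val` (255 for 8-bit images).

```python
import math

def reconstruction_metrics(pred, ref, max_val=255.0):
    """MSE, MAE, and PSNR (in dB) between a reconstruction and its reference."""
    n = len(ref)
    mse = sum((p - r) ** 2 for p, r in zip(pred, ref)) / n
    mae = sum(abs(p - r) for p, r in zip(pred, ref)) / n
    # PSNR is infinite for a perfect reconstruction (zero MSE)
    psnr = math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)
    return {"mse": mse, "mae": mae, "psnr": psnr}
```

Because PSNR depends on `max_val`, values reported for images with different intensity ranges (e.g., raw CT Hounsfield units versus normalized images) are not directly comparable.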

- (E) Specific Airway Segmentation Metrics

- Detected length ratio (DLR): Proportion of the total length of the airways that has been correctly detected.

- Detected branch ratio (DBR): Proportion of the branches of the airways that have been correctly detected.

- Continuity and completeness F-score (CCF): Combines two key aspects of airway segmentation: continuity (how well the branches are connected) and completeness (how much of the actual tree was segmented), using an F-score formula (harmonic mean).
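The sketch below shows how DLR, DBR, and CCF could be computed once the reference airway tree has been split into branches. It assumes, for simplicity, that each reference branch is marked as either detected or not, and that continuity and completeness are already available as ratios in [0, 1]. Published implementations may measure detected length along centerlines and define continuity differently.

```python
def airway_ratios(branch_lengths, detected):
    """DLR and DBR for a reference airway tree split into branches.

    branch_lengths: length of each reference branch (e.g., in mm).
    detected: one boolean per branch, True if the branch counts as detected
    (a simplifying assumption; real evaluations may credit partial branches).
    """
    total_length = sum(branch_lengths)
    detected_length = sum(length for length, d in zip(branch_lengths, detected) if d)
    dlr = detected_length / total_length
    dbr = sum(1 for d in detected if d) / len(detected)
    return dlr, dbr

def ccf(continuity, completeness):
    """Harmonic mean (F-score) of continuity and completeness ratios in [0, 1]."""
    if continuity + completeness == 0:
        return 0.0
    return 2 * continuity * completeness / (continuity + completeness)
```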

5. Discussion

5.1. Artificial Neural Network (ANN) Algorithms

5.2. Attention Mechanism Algorithms

- Residual Connection Attention is utilized for refined segmentation of organs, such as the pancreas in [10], as well as for segmentation of 3D medical images in [42]. Its goal is to improve feature representation and suppress irrelevant information or perform iterative fusion of information at different depths of the network.

- Hybrid Attention is employed in medical image reconstruction in [40], where information from different layers or modules is combined to enhance the quality of the reconstructed image.

- Temporal and Spatial Attention is suited to medical time series or data that combine temporal and spatial aspects, such as the skin cancer detection approach proposed by [31], a hybrid network combining a GRU and a CNN in which sequence and temporal dependencies are critical.

5.3. Fuzzy Logic Algorithms

5.4. Integration of Artificial Neural Network Algorithms, Attention Mechanisms, and Fuzzy Logic

5.5. Criteria for Integrating Fuzzy Logic with Artificial Neural Network Models

- If the image data has ambiguous boundaries or significant noise, fuzzy data pre-processing or fuzzy attention could be very advantageous.

- If the model needs to reason explicitly with data uncertainty or provide smoother responses, integration at the architecture level or as a hybrid system may be more appropriate.

- If it is necessary to improve the robustness of final decisions or combine multiple predictions, post-processing or result-fusion techniques are likely to be suitable.

- If the learning process itself is affected by label ambiguity or optimization difficulties, a fuzzy loss function or fuzzy optimization algorithm may be the most appropriate choice.

5.6. Data and Metrics

- Classification and overall performance: Accuracy (ACC), precision (Pre), recall (Rec)/sensitivity (SEN)/true-positive rate (TPR), F1-score (F1), area under the curve (AUC), specificity (SPE)/true-negative rate (TNR), positive predictive value (PPV), false-positive rate (FPR), and quadratic weighted kappa (kappa).

- Segmentation and region similarity: Dice similarity coefficient (DSC), Jaccard similarity coefficient (JSC)/intersection over union (IoU), volumetric overlap error (VOE), relative absolute volume difference (RAVD), pixel accuracy (PA), class pixel accuracy (CPA), and mean pixel accuracy (MPA).

- Distance and surface: Hausdorff distance (HD/95HD), average surface distance (ASD/ASSD), and root mean square symmetric surface distance (RMSD).

- Image/reconstruction quality: Peak signal-to-noise ratio (PSNR), structural similarity index (SSIM), mean squared error (MSE), and mean absolute error (MAE).

- Airway segmentation: Detected length ratio (DLR), detected branch ratio (DBR), and continuity and completeness F-score (CCF).

5.7. Final Remarks on the Findings

5.8. Comparison with Other Reviews

5.9. Strengths and Limitations

- Absence of meta-analysis: Our systematic review was based on a narrative synthesis of the evidence found. This methodological choice means that the review does not include meta-analyses. It is crucial to note that the PRISMA methodology does not require conducting a meta-analysis; therefore, the absence of a quantitative synthesis typical of a meta-analysis should not be interpreted as a methodological weakness or a failure to comply with the PRISMA methodology.

- Clinical, regulatory, and ethical applicability: The study’s focus was strictly limited to the analysis of the integration of computational techniques. Consequently, the analysis of regulatory barriers (such as FDA/CE certification), real-world deployment challenges, or ethical gaps related to algorithmic bias was outside the scope and focus of this systematic review.

- Computational resource considerations: Similarly, a detailed evaluation of computational costs, resource consumption (such as GPU memory or inference latency), and optimization of model parameter counts was not part of the data inclusion or extraction criteria. While these aspects are crucial for real-time applicability, their comprehensive analysis is considered a future line of research.

5.10. Implications for Medical Practice and Research

5.10.1. Implications for Medical Practice

- Improved diagnosis and segmentation: In the included publications, the integration of artificial neural networks (ANNs), attention mechanisms, and fuzzy logic consistently reported improved performance across different medical diagnosis and segmentation tasks. The diversity of these tasks suggests considerable potential for assisting healthcare professionals in decision making, offering greater accuracy in identifying pathologies such as cancer, heart disease, or neurological disorders.

- Versatility in data modalities: Hybrid models are adaptable to a wide range of medical data, including various images (CT, MRI, X-rays, and ultrasound, among others), as well as nonimage data such as sound signals, EEG, and mRNA expression matrices. This versatility enables the techniques to be applied across multiple medical specialties, including cardiology, neurology, oncology, and dermatology, thereby expanding their potential impact.

- Uncertainty and relevance management: Attention mechanisms enable models to focus on the most relevant characteristics, thereby improving diagnostic accuracy and efficiency. Complementarily, fuzzy logic is well suited to managing the ambiguity and granularity inherent in medical data. This handling of ambiguous data is particularly valuable in clinical contexts where the boundary between normal and pathological findings can be blurred or where data are noisy or incomplete, and it could lead to more robust and potentially more interpretable diagnoses for medical professionals.

5.10.2. Implications for Research

- Need for rigorous ablation studies: Future research should prioritize the inclusion of ablation studies to directly evaluate the impact of integrating each technique. Our observation that only 19 of the 32 publications included clear and concise information on the specific impact of attention mechanisms or fuzzy logic in their ablation studies is a significant limitation to definitively attributing performance improvements to these techniques. Evaluating the contribution of each technique is crucial for the internal validity and replicability of the findings.

- Standardization of metrics and clinical evaluation: Heterogeneity in the reporting of performance metrics (percentages or decimal values) makes direct comparisons between publications difficult. Research should move toward standardization in reporting results. In addition, it is essential that, beyond statistical significance, the clinical significance of the observed improvements be thoroughly explored to ensure that computational advances have a real and tangible impact on patient care.

- Generalization of models: The use of in-house or private hospital datasets in some publications raises questions about the generalization of models to more diverse populations or different clinical settings. Future research should consider the use of larger and more diverse public datasets or techniques that ensure better generalizability to data not seen during training.

- Optimization of computational complexity: The integration of multiple networks and techniques, while improving performance, can increase computational complexity in terms of computational resource consumption (CPU and memory). Future research should optimize models by reducing the number of parameters and minimizing processing and memory resource requirements while maintaining accuracy.

5.11. Suggestions for Future Lines of Research

- (A) Improving generalization and robustness

- Explore adversarial learning and transfer learning to improve the application of large models on medical datasets and build models with stronger generalization capabilities, as seen in [13].

- Utilize large-scale samples to evaluate proposed methods and address the high specificity observed among subjects, as seen in [17].

- Consider the generalization of the model to the global population, as seen in [38].

- (B) Multimodal data integration

- Include multimodal data to build models with stronger generalization capabilities, as seen in [13].

- Collate datasets containing both images and related textual information and explore the use of CLIP models to improve generalization and performance, as seen in [11].

- Expand the proposed methodologies to multimodal image datasets, as seen in [23].

- Merge multiple data sources (heart sound signals, images, clinical data, etc.), as seen in [14].

- (C) Real-time applications

- Develop new versions of models for video data, enabling real-time identification of pathological conditions, as seen in [22].

- (D) Exploring generative models and contrastive learning

- Explore generative models for synthesizing images and utilize contrastive learning approaches to address the data imbalance problem, as observed in [11].

- (E) Expansion to new tasks and data types

- Extend the methods to more types of medical imaging tasks, such as tumor segmentation, specific organ segmentation, and lesion detection, as seen in [42].

- Apply the model to the automated classification of skin lesions from digital photographs (particularly facial skin diseases such as acne, rosacea, etc.), as seen in [19].

- Apply the proposed model to 3D medical image processing, as seen in [37].

- (F) Hybrid model optimization

- Optimize individual models to reduce the number of parameters while maintaining prediction accuracy, addressing computational complexity, as observed in [16].

- Explore the use of swarm intelligence optimization algorithms, as seen in [39].

- (G) New fuzzy logic techniques or types of training

6. Conclusions

- Enhance generalization and robustness by exploring adversarial and transfer learning, utilizing larger and more diverse public datasets, and assessing the applicability of models to global populations.

- Expand multimodal data integration, combining diverse sources such as images, clinical data, and text for more comprehensive diagnoses.

- Develop real-time applications, such as identifying pathological conditions in video data.

- Explore generative models and contrastive learning to synthesize images and handle data imbalance.

- Extend methods to new tasks and new types of medical data.

- Optimize hybrid models to minimize computational resource consumption and reduce the number of model parameters without compromising accuracy.

- Investigate new fuzzy logic techniques or training types, such as introducing fuzzy cost functions or semi-supervised and unsupervised learning.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Most Important Key Data Extracted to Answer the Research Questions of Our Systematic Review

| Ref. | Application Area | Input Data | Output Data | ANN Model | Attention Mechanism | Fuzzy Logic Technique | Integration Method | Impact on Results |

|---|---|---|---|---|---|---|---|---|

| [38] | Classification (Alzheimer’s disease) | Image | 3 classes | CNN, MLP | Hybrid Attention Mechanism (HAM) | Fuzzy Color Method and Fuzzy Stacking | They utilize two convolutional modules, combined with a Hybrid Attention module (comprising a Channel Attention module and a Spatial Attention module based on CNN and MLP) and a Fuzzy Supervised Contrastive Loss to alleviate label stiffness and enhance the network’s generalization ability. | The use of the attention technique (Hybrid Attention) improved 3 metrics compared to when the attention technique was not used: ACC: 4.28%, SEN: 4.85%, SPE: 4.99%. The use of the fuzzy logic technique (Fuzzy Supervised Contrastive Loss) improved 3 metrics compared to when the fuzzy logic technique was not used: ACC: 10.74%, EN: 10.05%, SPE: 8.45%. |

| [39] | Classification (Autism) | Image | 2 classes | Transformer, MLP | Multihead Self-Attention (Transformer) | Fuzzy Rough CNN | First, the model utilizes a Multioutput Takagi–Sugeno–Kang Fuzzy System to address the interference caused by inherent human body noise and equipment factors during the rs-fMRI data collection process, thereby providing a highly interpretable feature for subsequent work. Second, they employ an encoder–decoder architecture (MLP + Transformer). The encoder maps input data to a low-dimensional hidden representation, while the decoder maps the hidden representation back to the original data space. Finally, they use an MLP for classification. | The use of the fuzzy logic technique (Multioutput Takagi–Sugeno–Kang Fuzzy System) improved 2 metrics compared to when the fuzzy logic technique was not used: ACC: 24.6%, SPE: 59.9%. |

| [18] | Classification (Brain tumor) | Image | 3 classes | CNN, Random-Coupled Neural Network, Graph Neural Network | Contextual Attention Network and Substructure Aware Graph Neural Network Attention | Multioutput Takagi–Sugeno–Kang Fuzzy System | The workflow of the proposal is A) pre-processing and feature extraction using Contextual Attention Network with Convolutional Auto Encoder (CAN-CAE). B) Segmentation (clustering) using Adaptively Regularized Kernel-based Fuzzy C-Means (ARKFCM). C) Classification using Random-Coupled Neural Network with Substructure Aware Graph Neural Network Attention (RCNN-SAGNNA). | No data |

| [16] | Classification (Breast tumor malignancy) | Image | 2 classes | CNN, Transformer | Multihead Attention | Feature Selective Enhancement Module | They use four models, one CNN-based and 3 Transformer-based; the four models generate four individual malignancy scores. Finally, they fused malignancy scores utilizing learnable fuzzy measures. | No data |

| [33] | Classification (Cancer detection) | Image | 2 classes | MLP | Self-Attention Mechanism | Fuzzy Attention Layer | The architecture has three cascaded components. The Type-2 Fuzzy Subsystem (component 1) takes raw data as input and outputs the corresponding fuzzy samples. In this way, the fuzzy samples are transferred to a better representation through the graph-based Generalized Correntropy Auto-encoder (component 2). The Fuzzy Graph Structure Attention Network (component 3) further models the structural uncertainty or fuzziness and extracts the structural information. | The use of attention and fuzzy logic techniques (Fuzzy Attention layer) improved 2 metrics compared to when the attention and fuzzy logic techniques were not used: ACC: 0.0158, F1: 0.0133 |

| [32] | Classification (Cancer) | Image | NA | CNN, MLP | Channel Attention Module (CAM) and Position Attention Module (PAM) | Adaptive Neuro-Fuzzy Inference System (ANFIS) | The model uses three CNN-based networks (DAV-Net, EfficientNet, and DRN). The first CNN-based network (DAV-Net) used a position attention module and a Channel Attention module for segmentation. For classification, they use a fuzzy-ER net layer based on fuzzy partition, rule generation, and categorization of new patterns. | No data |

| [14] | Classification (Cardiovascular disease) | Sound | 2 classes | 1D-CNN, 2D-CNN, MLP | Attention Mechanism based on Self-Attention | Type-2 Fuzzy Subsystem | They utilize a network comprising 1D-CNN and 2D-CNN with an attention mechanism (transformer-based) and MLP. Then, they use a fuzzy inference system as a classifier. | No data |

| [29] | Classification (Diabetic Retinopathy Grading) | Image | 5 classes | CNN, MLP | Fuzzy-Enhanced Holistic Attention Mechanism | Fuzzy-Enhanced Holistic Attention (FEHA) | First, a multiscale feature encoder based on CNN is applied to encode a set of feature maps from different scales. Then, for each scale feature map, fuzzy-enhanced holistic attention is used to generate channel-spatial attention weights by modeling the interaction of channel information and spatial location (fuzzy membership functions are applied in channel-spatial attention). Then, a fuzzy learning-based cross-scale fusion module is employed to integrate feature representations. Finally, the classifier is implemented using a fully connected layer with a softmax function to predict the grading results. | The use of attention and fuzzy logic techniques (Fuzzy-Enhanced Holistic Attention) improved 4 metrics compared to when the attention and fuzzy logic techniques were not used (averaged): ACC: 2.92%, kappa: 3.73%, Pre: 2.93%, F1-score: 4.08%. |

| [11] | Classification (Glaucoma Stages) | Image | 3 classes | CNN, Transformer, MLP | Self-Attention Mechanism | Fuzzy Layer | They use a CNN for feature extraction and then a Fuzzy Joint Attention Module, which comprises two attention blocks (a Local–Global Channel Attention block and a Local–Global Spatial Attention block, both CNN with self-attention) together with a fuzzy layer that converts feature maps into fuzzy maps. Finally, they use an MLP as the classifier. | The use of the fuzzy logic technique (fuzzy layer) improved 3 metrics compared to when it was not used: ACC: 1.9%, F1: 2.2%, AUC: 0.01%. The use of the attention technique (Channel Attention) improved 3 metrics compared to when it was not used: ACC: 1.9%, F1: 2.2%, AUC: 0.01%. The use of the attention technique (Spatial Attention) improved 3 metrics compared to when it was not used: ACC: 1.7%, F1: 2%, AUC: 0.01%. |
| [13] | Classification (Cell structures) | Image | 5 to 9 classes | CNN, Transformer | Self-Attention Mechanism | Fuzzy-Guided Cross-Attention | They use a CNN for multigranular feature extraction and also extract three fuzzy features from the input images by applying different membership functions. They then use fuzzy-guided cross-attention (an attention mechanism from the Transformer) for feature fusion and processing and, finally, an MLP for classification. | The use of the attention technique (Fuzzy-Guided Cross-Attention) improved 3 metrics compared to when it was not used: ACC: 2.7%, TPR: 2.4%, PPV: 3.15%. The use of the fuzzy logic technique (Universal Fuzzy Feature) improved 3 metrics compared to when it was not used: ACC: 6%, TPR: 5.4%, PPV: 6.3%. |
| [44] | Classification (Oral health) | Image | 2 classes | MLP | Multihead Self-Attention | Fuzzy Color Method | First, they apply image enhancement with a fuzzy color method as a pre-processing step. Then, they use a Swin Transformer to process the data and perform the classification. | The use of the fuzzy logic technique (Fuzzy color method) improved 4 metrics compared to when it was not used: ACC: 0.0262, Pre: 0.0238, Rec: 0.0329, F1: 0.0161. |
| [23] | Classification (Lung Disorder) | Image | 3 classes | CNN, Transformer, MLP | Hybrid Attention Mechanism (Channel Attention and Spatial Attention) | Fuzzy-Rough Set-Based Fitness Function | The architecture uses EfficientNet (a CNN) and a Vision Transformer for feature extraction, then incorporates a Hybrid Attention Mechanism, and finally employs an MLP for class prediction. The Fuzzy-Enhanced Firefly Algorithm uses fuzzy logic to improve the adaptability of the optimization procedure. | The use of the fuzzy logic technique (Fuzzy-Enhanced Firefly Algorithm) improved 4 metrics compared to when it was not used: ACC: 1.7%, F1: 1.8%, Pre: 1.8%, Rec: 1.9%. |
| [35] | Classification (Lymph node metastasis) | Image | 2 classes | CNN, MLP | Spatial-Channel Attention | Fuzzy C-Means Clustering | The model consists of three key parts: (1) a CNN for feature extraction with fuzzy c-means clustering; (2) a Spatial-Channel Attention block, consisting of a spatial branch and a channel branch, applied to the feature map; and (3) a classification network that takes the representations from the previous two parts as input. | The use of the attention technique (Spatial-Channel Attention) improved 3 metrics compared to when it was not used: Pre: 0.022, Rec: 0.022, F1: 0.002. The use of the fuzzy logic technique (Fuzzy C-Means Clustering) improved 3 metrics compared to when it was not used: Pre: 0.032, Rec: 0.008, F1: 0.013. |
| [34] | Classification (Lymphoblastic leukemia) | Image | 2 classes | CNN, MLP | Fuzzy Attention-Based Feature Extraction | Membership Function and Fuzzy Rule Layers | Their model combines a CNN and an MLP and has two pathways whose extracted features are then combined. They add a Feature Selective Enhancement module, which focuses the model's attention on relevant morphological patterns; this module is based on a CNN with a membership function layer and a fuzzy rule layer. | No data |
| [17] | Classification (Parkinson’s disease) | Signal | 2 classes | CNN, GRU, MLP | Dual Attention Network (DANet) | Fuzzy Entropy Algorithm | First, they use a fuzzy entropy algorithm in the pre-processing stage. They then employ a three-module architecture (temporal separable convolution, dual attention network, and GRU network) and perform the classification with an MLP. | The use of the attention technique (Dual Attention Network) improved 4 metrics compared to when it was not used: ACC: 0.836, Pre: 0.853, Rec: 0.80, F1: 0.83. The use of the fuzzy logic technique (Fuzzy Entropy Algorithm) improved 4 metrics compared to when it was not used: ACC: 5.44%, Pre: 5.46%, Rec: 5.24%, F1: 5.44%. |
| [12] | Classification (Pneumonia) | Image | 3 classes | CNN, MLP | One Channel Attention Module (CAM) and two Spatial Attention Modules (SAMavg and SAMmax) | Adaptively Regularized Kernel-Based Fuzzy C-Means | The architecture first uses a CNN for feature extraction. The extracted features then pass through three branches, processed by one Channel Attention Module (CAM) and two Spatial Attention Modules (SAMavg and SAMmax). The resulting feature map serves as input to the Fuzzy Channel Selection (FCS) module, which generates a feature map with the top channels. Finally, the classification layer processes this flattened feature map to predict the output for the image. | The use of the fuzzy logic technique (Fuzzy Channel Selection) improved 5 metrics compared to when it was not used: ACC: 2.55%, Pre: 2.43%, Rec: 1.93%, F1: 3.1%, AUC: 2.18%. |
| [36] | Classification (Prostate cancer) | Data array | 2 classes | MLP, Graph Neural Network | Multihead Attention | Self-Organizing Fuzzy Inference System | They apply a classifier model to assess the discriminative ability of the selected features. This model consists of three layers: a Graph Generator layer, a Graph Fuzzy Attention Network (GFAT) layer, and a Fully Connected (FC) layer. | No data |
| [31] | Classification (Skin cancer) | Image | 2 classes | CNN, GRU | Temporal and Spatial Attention Module | Fuzzy C-Means (DicL-FCM) Clustering | In the pre-processing stage, the region of interest (ROI) is segmented using a fuzzy c-means clustering technique (DicL-FCM). Then, classification is performed by the Convolutional Gated Recurrent Network (TA_CGRNet), which hybridizes a Gated Recurrent Unit (GRU) and a CNN with a temporal and spatial attention module. | No data |
| [19] | Classification (Skin lesion) | Image | 7 classes | GANs, CNN, MLP | Efficient Channel Integrating Spatial Attention Module | Fuzzy Rank-Based Ensemble | Three CNN-based models with channel and spatial attention mechanisms (MLFF-InceptionV3, MLFF-Xception, and MLFF-DenseNet121) compute decision scores. A Fuzzy Rank-Based Ensemble approach then combines these scores to reduce the dispersion of the individual predictions and enhance classification accuracy. | No data |
| [28] | Organ segmentation (Airway) | Image | Mask and 3D reconstruction of organ | CNN | Fuzzy Attention Layer | Learnable Gaussian Membership Function | Encoder–decoder architecture based on a CNN with residual connections between encoder and decoder. Each residual connection includes a fuzzy attention layer, which takes the feature representations from both the encoder and decoder layers and applies a learnable Gaussian membership function to them. | The use of attention and fuzzy logic techniques (Fuzzy Attention Layer) improved 5 metrics compared to when these techniques were not used in a base model: Pre: 0.0015, DLR: 1.39%, DBR: 2.18%, AMR: 0.34%, CCF: 0.0051. |
| [15] | Organ segmentation (Brain tumor) | Image | Area segment | CNN, Transformer, MLP | Multihead Self-Attention (Transformer), Refine Module | Fuzzy Selector | They use a hybrid block that combines Transformer and convolution (Hybrid-Transformer) and then propose a refinement module, which incorporates an attention mechanism based on average and maximum pooling, to preserve and refine the downsampled features. Finally, a fuzzy selector further processes the segmentation results. | No data |
| [9] | Organ segmentation (Cardiac left atrium) | Image | Image with green and red lines | CNN, Transformer | Multihead Self-Attention Mechanism | Anchorwise Fuzziness Module | Encoder–decoder architecture that uses convolution in the contracting path and a Transformer in the expanding path, with a mask matrix between them that incorporates fuzziness elements. | The use of the attention technique (Self-Attention Mechanism) improved 4 metrics compared to when it was not used: DICE: 2.8%, JSC: 3.77%, HD: 2.33, ASD: 1.54. |
| [24] | Organ segmentation (Cardiac) | Image | Image with 3 areas and black background | CNN | Higher-Performance DyConv Structure as an Attention Mechanism | Spatial and Channel Fuzzy Convolutional Layers (SFConv, CFConv) | Encoder–decoder architecture based on a CNN with residual connections between encoder and decoder. They include a spatial fuzzy convolutional layer (SFConv) and a channel fuzzy convolutional layer (CFConv) in the encoder and decoder, and add a CNN-based DyConv structure that computes attention over the feature map in the channel or spatial dimension. | The use of the attention technique (Channel and Spatial Attention) improved 2 metrics compared to when it was not used in a base model: DSC: 1.63%, HD: 1.12 mm. The use of the fuzzy logic technique (fuzzy convolutional module) improved 2 metrics compared to when it was not used in a base model: DSC: 3.05%, HD: 1.72 mm. |
| [37] | Organ segmentation (Cardiac) | Image | Image with segmentation area | CNN | Fuzzy Attention Module (Fuzzy Channel Attention and Fuzzy Spatial Attention) | Fuzzy C-Means Clustering (FCM) | Encoder–decoder architecture based on a CNN with residual connections between encoder and decoder. They add a Fuzzy Channel Attention Module in the encoder and a Fuzzy Spatial Attention Module in the decoder. Within the attention mechanism, fuzzy membership is used to recalibrate the importance of the pixel values in each local area. | No data |
| [43] | Organ segmentation (Diabetic foot ulcers) | Image | Segmentation masks | CNN | Attention Gate Module | Fuzzy Activation Function | They employ an encoder–decoder architecture with a fuzzy logic-based activation function and attention gates. | No data |
| [25] | Organ segmentation (Fundus/eyes) | Image | Probability maps of segmentation regions | CNN | Dual Attention Mechanism (Spatial-Channel), Attention Refinement Module | Rough Fuzzy Set-Based Feature Discretization | First, feature discretization based on a rough fuzzy set is performed. The discretized data then serve as input to an encoder–decoder architecture with skip connections, which introduces a dual attention mechanism combining spatial regions and feature channels. Next, the attention refinement module captures multiscale contextual information as feature maps. Finally, two CNN layers generate probability maps of the segmentation regions. | The use of the fuzzy logic technique (Fuzzy C-Means Clustering) improved 5 metrics compared to when it was not used: DSC: 0.02, HD95: 0.02, ASD: 0.02, SEN: 0.02, SPE: 0.05. |
| [27] | Organ segmentation (Lung) | Image | Border mask | CNN | Fuzzy Attention-Based Module | Fuzzy Attention Gate (FAG) | They propose a Fuzzy Attention-based Transformer-like encoder–decoder architecture. The encoder and decoder are CNN-based and are connected by residual connections. In each residual connection, they add a "fuzzy attention module" that uses four Gaussian membership functions to focus on pertinent regions of the encoder and decoder outputs. Finally, they use a feature fusion module to focus on image borders. | The use of attention and fuzzy logic techniques (Fuzzy Attention) improved 6 metrics compared to when these techniques were not used: IoU: 0.83, Pre: 0.39%, DLR: 4.03, DBR: 4.12, AMR: 0.47, CCF: 2.24. |
| [42] | Organ segmentation (Multiorgan) | Image | Reconstructed image | CNN | Iterative Attention Fusion Module | Fuzzy Learning Module | Encoder–decoder architecture based on a CNN with residual connections between encoder and decoder. Each residual connection uses a fuzzy operation to suppress irrelevant information. The decoder uses a CNN-based target attention mechanism to further enhance the feature representation. The output is a mask. Finally, they use an ensemble of multiview masks to generate a 3D representation of the segmentation. | The use of the attention technique (Attention Fusion) improved 2 metrics compared to when it was not used: DSC: 0.59%; HD95: 0.7 mm. The use of attention and fuzzy logic techniques (Attention Fusion + Fuzzy Learning) improved 2 metrics compared to when these techniques were not used: DSC: 1.24%; HD95: 1.52 mm. |
| [10] | Organ segmentation (Pancreas) | Image | Mask and 3D reconstruction of organ | CNN | Target Attention Mechanism Based on CNN | Fuzzy Skip Connections | Encoder–decoder architecture based on a CNN with residual connections between encoder and decoder. Each residual connection uses a fuzzy operation to suppress irrelevant information. The decoder uses a CNN-based target attention mechanism to further improve the feature representation. The output is a mask. Finally, they use an ensemble of multiview masks to generate a 3D representation of the segmentation. | The use of attention and fuzzy logic techniques (Target Attention + Fuzzy Skip Connections) improved 4 metrics compared to when these techniques were not used: DSC: 7.1%, VOE: 10.24%, ASSD: 0.55 mm, RMSD: 0.17 mm. |
| [41] | Organ segmentation (Subretinal Fluid Lesions) | Image | NA | CNN | Dual Attention Mechanism (Spatial and Channel), and Attention Refinement Module | Fuzzy Clustering | First, they apply fuzzy clustering to OCT fundus images. The fuzzy set is then combined with the rough set in a rough approximation space to construct a fuzzy rough model for feature discretization in SD-OCT fundus images. Finally, the discretized images are used as input data, and deep attention modules capture multiscale information within a fully convolutional neural network architecture. | The use of the attention technique (Dual Attention Mechanism) improved 3 metrics compared to when it was not used: DSC: 0.0376, HD95: 0.0794, ASD: 0.0799. |
| [40] | Reconstruction (COVID-19 CT reconstruction) | Image | Reconstructed image | CNN, LSTM, MLP | Attention Mechanisms Based on CNN and on Conv-LSTM | Fuzzy Network | They use a fuzzy neural information processing block that combines a CNN and a fuzzy network (which matches the outputs of the fuzzy neurons in the membership function layer according to specific rules) and then performs fuzzy logic operations to calculate the uncertainty of the input pixels. They then perform feature extraction with a CNN that incorporates residual connections (MGLDB module) and two attention modules, based on CNN and Conv-LSTM, to reconstruct the image. | No data |
| [22] | Tissue location (Polyp positions) | Image | Area coordinates (square) | CNN, MLP | Hybrid Attention Mechanism | Fuzzy Pooling | The architecture combines a Channel Attention block and a Spatial Attention block with a CNN to compute the coordinates of gastric polyps. They also employ a CNN based on fuzzy pooling to extract image features. The model then integrates both outputs and feeds them into the FCN to obtain the coordinates of gastric polyps. | No data |
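
Fuzzy c-means (FCM) clustering recurs in the table above, for example for ROI segmentation in [31] and alongside CNN feature extraction in [35]. The following is a minimal sketch that assumes the standard FCM formulation with fuzzifier m = 2 and one-dimensional inputs such as pixel intensities. It alternates between updating the centers and the memberships. The function name and parameters are hypothetical, and the sketch is neither the DicL-FCM variant of [31] nor the exact procedure of any reviewed publication.

```python
import random


def fuzzy_c_means(points, n_clusters, m=2.0, max_iter=100, tol=1e-6, seed=0):
    """Standard fuzzy c-means on 1D values (e.g., pixel intensities).

    Returns (centers, memberships), where memberships[i][k] is the degree
    to which points[i] belongs to cluster k; each row sums to 1.
    """
    rng = random.Random(seed)
    n = len(points)
    # Random initial membership matrix, normalized so each row sums to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(n_clusters)]
        total = sum(row)
        u.append([v / total for v in row])

    centers = [0.0] * n_clusters
    p = 2.0 / (m - 1.0)
    for _ in range(max_iter):
        # Center update: mean of the points weighted by membership^m.
        for k in range(n_clusters):
            w = [u[i][k] ** m for i in range(n)]
            centers[k] = sum(wi * x for wi, x in zip(w, points)) / sum(w)

        # Membership update: u_ik = 1 / sum_j (d_ik / d_ij)^(2 / (m - 1)).
        max_change = 0.0
        for i, x in enumerate(points):
            d = [abs(x - c) for c in centers]
            if min(d) == 0.0:
                # The point coincides with a center: crisp membership there.
                hits = [1.0 if dk == 0.0 else 0.0 for dk in d]
                new_row = [h / sum(hits) for h in hits]
            else:
                new_row = [1.0 / sum((d[k] / d[j]) ** p for j in range(n_clusters))
                           for k in range(n_clusters)]
            max_change = max(max_change, max(abs(a - b) for a, b in zip(new_row, u[i])))
            u[i] = new_row

        if max_change < tol:
            break
    return centers, u


if __name__ == "__main__":
    intensities = [0.1, 0.12, 0.09, 0.8, 0.85, 0.82]  # two well-separated groups
    centers, memberships = fuzzy_c_means(intensities, n_clusters=2)
    print([round(c, 3) for c in centers])
```

With m = 2, a point close to one center receives a membership near 1 for that cluster, while a point lying between centers splits its membership among them. Larger values of m produce softer partitions.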

Appendix B. Results of the Risk of Bias Assessment of the Included Publications in Our Systematic Review

| Ref. | Q-01 | Q-02 | Q-03 | Q-04 | Q-05 | Q-06 | Q-07 | Q-08 | Q-09 | Q-10 | Q-11 | Score | Status |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [9] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 9.5 | Included |

| [13] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 11 | Included |

| [40] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 9 | Included |

| [11] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 9.5 | Included |

| [27] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 9.5 | Included |

| [10] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 1 | 0 | 9 | Included |

| [42] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 10 | Included |

| [22] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 9 | Included |

| [29] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 9.5 | Included |

| [41] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 11 | Included |

| [23] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 10.5 | Included |

| [39] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 10.5 | Included |

| [15] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0.5 | 0 | 8.5 | Included |

| [18] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0.5 | 9.5 | Included |

| [43] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0.5 | 0 | 8 | Included |

| [12] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 9.5 | Included |

| [44] | 1 | 1 | 1 | 1 | 1 | 0.5 | 0.5 | 1 | 0.5 | 1 | 1 | 9.5 | Included |

| [28] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 11 | Included |

| [38] | 1 | 1 | 1 | 1 | 0.5 | 1 | 1 | 1 | 0.5 | 1 | 0 | 9 | Included |

| [33] | 0.5 | 0.5 | 1 | 1 | 1 | 1 | 1 | 0.5 | 0.5 | 0.5 | 0 | 7.5 | Included |

| [35] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 1 | 0.5 | 10 | Included |

| [14] | 1 | 1 | 1 | 1 | 0.5 | 0.5 | 0.5 | 1 | 0 | 1 | 0.5 | 8 | Included |

| [34] | 1 | 0.5 | 1 | 1 | 0.5 | 0.5 | 0.5 | 1 | 0 | 1 | 1 | 8 | Included |

| [36] | 1 | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 9.5 | Included |

| [16] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 8.5 | Included |

| [32] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0.5 | 10 | Included |

| [17] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 1 | 1 | 10.5 | Included |

| [24] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 1 | 1 | 10.5 | Included |

| [31] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 10 | Included |

| [25] | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0.5 | 1 | 0.5 | 10 | Included |

| [19] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 9 | Included |

| [37] | 1 | 0.5 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 9 | Included |
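
If the Score column is the sum of the eleven item scores Q-01 to Q-11, a short script can recompute each total from the item columns and flag any row where the two disagree. The sketch below works under that assumption. The item values are copied from three rows of the table, and `total_score` and `check_totals` are hypothetical helpers. Because this appendix does not state an inclusion threshold, the script makes no decision about the Status column.

```python
# Item scores Q-01 ... Q-11 copied from three rows of the table above.
ITEM_SCORES = {
    "[9]": [1, 0.5, 1, 1, 1, 1, 1, 1, 1, 1, 0],
    "[33]": [0.5, 0.5, 1, 1, 1, 1, 1, 0.5, 0.5, 0.5, 0],
    "[44]": [1, 1, 1, 1, 1, 0.5, 0.5, 1, 0.5, 1, 1],
}
REPORTED_SCORES = {"[9]": 9.5, "[33]": 7.5, "[44]": 9.5}

ALLOWED = {0, 0.5, 1}


def total_score(items):
    """Sum the eleven item scores, rejecting malformed rows."""
    if len(items) != 11:
        raise ValueError(f"expected 11 item scores, got {len(items)}")
    if any(s not in ALLOWED for s in items):
        raise ValueError(f"unexpected item score in {items}")
    return sum(items)


def check_totals(item_scores, reported):
    """Return {ref: (recomputed, reported)} for rows whose totals disagree."""
    mismatches = {}
    for ref, items in item_scores.items():
        recomputed = total_score(items)
        if recomputed != reported[ref]:
            mismatches[ref] = (recomputed, reported[ref])
    return mismatches


if __name__ == "__main__":
    print(check_totals(ITEM_SCORES, REPORTED_SCORES))
```

Halves and whole numbers are exactly representable as binary floats, so the direct equality comparison is safe for this scoring scale.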

References

- [1] Vanstone, M.; Monteiro, S.; Colvin, E.; Norman, G.; Sherbino, J.; Sibbald, M.; Dore, K.; Peters, A. Experienced physician descriptions of intuition in clinical reasoning: A typology. Diagnosis 2019, 6, 259–268.

- [2] Wen, X. Deep learning framework for enhanced MRI analysis in healthcare diagnosis. Expert Syst. Appl. 2025, 292, 128487.

- [3] Aggarwal, C.C. Neural Networks and Deep Learning: A Textbook; Springer International Publishing: Cham, Switzerland, 2023.

- [4] Kufel, J.; Bargiel-Laczek, K.; Kocot, S.; Kozlik, M.; Bartnikowska, W.; Janik, M.; Czogalik, L.; Dudek, P.; Magiera, M.; Lis, A.; et al. What Is Machine Learning, Artificial Neural Networks and Deep Learning?—Examples of Practical Applications in Medicine. Diagnostics 2023, 13, 2582.

- [5] Phuong, N.H.; Kreinovich, V. Fuzzy logic and its applications in medicine. Int. J. Med. Inform. 2001, 62, 165–173.

- [6] Bojadziev, G.; Bojadziev, M. Fuzzy Sets, Fuzzy Logic, Applications; Advances in Fuzzy Systems—Applications and Theory; World Scientific: Singapore, 1996; Volume 5.

- [7] Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- [8] Fang, M.; Wang, Z.; Pan, S.; Feng, X.; Zhao, Y.; Hou, D.; Wu, L.; Xie, X.; Zhang, X.Y.; Tian, J.; et al. Large models in medical imaging: Advances and prospects. Chin. Med. J. 2025, 138, 1647–1664.

- [9] Zhang, T.; Wang, X. Anchorwise Fuzziness Modeling in Convolution–Transformer Neural Network for Left Atrium Image Segmentation. IEEE Trans. Fuzzy Syst. 2024, 32, 398–408.

- [10] Chen, Y.; Xu, C.; Ding, W.; Sun, S.; Yue, X.; Fujita, H. Target-aware U-Net with fuzzy skip connections for refined pancreas segmentation. Appl. Soft Comput. 2022, 131, 109818.

- [11] Das, D.; Nayak, D.R. FJA-Net: A Fuzzy Joint Attention Guided Network for Classification of Glaucoma Stages. IEEE Trans. Fuzzy Syst. 2024, 32, 5438–5448.

- [12] Roy, A.; Bhattacharjee, A.; Oliva, D.; Ramos-Soto, O.; Alvarez-Padilla, F.J.; Sarkar, R. FA-Net: A Fuzzy Attention-aided Deep Neural Network for Pneumonia Detection in Chest X-Rays. In Proceedings of the 2024 IEEE 37th International Symposium on Computer-Based Medical Systems (CBMS), Guadalajara, Mexico, 26–28 June 2024; pp. 338–343.

- [13] Ding, W.; Zhou, T.; Huang, J.; Jiang, S.; Hou, T.; Lin, C.T. FMDNN: A Fuzzy-Guided Multigranular Deep Neural Network for Histopathological Image Classification. IEEE Trans. Fuzzy Syst. 2024, 32, 4709–4723.

- [14] Xiao, F.; Liu, H.; Lu, J. A new approach based on a 1D + 2D convolutional neural network and evolving fuzzy system for the diagnosis of cardiovascular disease from heart sound signals. Appl. Acoust. 2024, 216, 109723.

- [15] Wu, D.; Nie, L.; Mumtaz, R.A.; Agarwal, K. A LLM-Based Hybrid-Transformer Diagnosis System in Healthcare. IEEE J. Biomed. Health Inform. 2024, 29, 6428–6439.

- [16] Singh, V.K.; Mohamed, E.M.; Abdel-Nasser, M. Aggregating efficient transformer and CNN networks using learnable fuzzy measure for breast tumor malignancy prediction in ultrasound images. Neural Comput. Appl. 2024, 36, 5889–5905.

- [17] Li, J.; Li, X.; Mao, Y.; Yao, J.; Gao, J.; Liu, X. Classification of Parkinson’s disease EEG signals using 2D-MDAGTS model and multi-scale fuzzy entropy. Biomed. Signal Process. Control 2024, 91, 105872.

- [18] Srinivasan, P.S.; Regan, M. Enhancing Brain Tumor Diagnosis with Substructure Aware Graph Neural Networks and Fuzzy Linguistic Segmentation. In Proceedings of the 2024 Second International Conference on Intelligent Cyber Physical Systems and Internet of Things (ICoICI), Coimbatore, India, 28–30 August 2024; pp. 1613–1618.

- [19] Li, H.; Li, W.; Chang, J.; Zhou, L.; Luo, J.; Guo, Y. Dermoscopy lesion classification based on GANs and a fuzzy rank-based ensemble of CNN models. Phys. Med. Biol. 2022, 67, 185005.

- [20] Amato, F.; López, A.; Peña-Méndez, E.M.; Vaňhara, P.; Hampl, A.; Havel, J. Artificial neural networks in medical diagnosis. J. Appl. Biomed. 2013, 11, 47–58.

- [21] Jones, A. Comprehensive Machine Learning Techniques: A Guide for the Experienced Analyst; Walzone Press: Pittsfield, MA, USA, 2025; ISBN 979-8-230-43387-3.

- [22] Ma, X.; Wang, H.; Ren, X.; Ma, Y. A Hybrid Attention-Based Fuzzy Pooling Network Model for Locating Polyp Positions in Gastroscopic Image in Internet of Medical Things. IEEE Internet Things J. 2025, 1.

- [23] Pearly, A.A.; Karthik, B. HybridNet-X: A Hybrid Deep Learning Network with Fuzzy-Enhanced Firefly Algorithm for Lung Disorder Diagnosis Using X-Ray Images. In Proceedings of the 2024 International Conference on Innovative Computing, Intelligent Communication and Smart Electrical Systems (ICSES), Chennai, India, 12–13 December 2024; pp. 1–7.

- [24] Luo, Y.; Fang, Y.; Zeng, G.; Lu, Y.; Du, L.; Nie, L.; Wu, P.Y.; Zhang, D.; Fan, L. DAFNet: A dual attention-guided fuzzy network for cardiac MRI segmentation. AIMS Math. 2024, 9, 8814–8833.

- [25] Chen, Q.; Zeng, L.; Lin, C. A deep network embedded with rough fuzzy discretization for OCT fundus image segmentation. Sci. Rep. 2023, 13, 328.

- [26] Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.S. SCA-CNN: Spatial and Channel-Wise Attention in Convolutional Networks for Image Captioning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6298–6306.

- [27] Zhang, S.; Fang, Y.; Nan, Y.; Wang, S.; Ding, W.; Ong, Y.S.; Frangi, A.F.; Pedrycz, W.; Walsh, S.; Yang, G. Fuzzy Attention-Based Border Rendering Orthogonal Network for Lung Organ Segmentation. IEEE Trans. Fuzzy Syst. 2024, 32, 5462–5476.

- [28] Nan, Y.; Ser, J.D.; Tang, Z.; Tang, P.; Xing, X.; Fang, Y.; Herrera, F.; Pedrycz, W.; Walsh, S.; Yang, G. Fuzzy Attention Neural Network to Tackle Discontinuity in Airway Segmentation. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 7391–7404.

- [29] Lin, Z.; He, Z.; Wang, X.; Su, W.; Tan, J.; Deng, Y.; Xie, S. Cross-Scale Fuzzy Holistic Attention Network for Diabetic Retinopathy Grading From Fundus Images. IEEE Trans. Emerg. Top. Comput. Intell. 2025, 9, 2164–2178.

- [30] PRISMA-P Group; Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1.

- [31] Rai, A.K.; Agarwal, S.; Gupta, S.; Agarwal, G. An effective fuzzy based segmentation and twin attention based convolutional gated recurrent network for skin cancer detection. Multimed. Tools Appl. 2023, 83, 52113–52140.

- [32] Murthy, N.N.; Thippeswamy, K. Fuzzy-ER Net: Fuzzy-based Efficient Residual Network-based lung cancer classification. Comput. Electr. Eng. 2025, 121, 109891.

- [33] Quan, T.; Yuan, Y.; Song, Y.; Zhou, T.; Qin, J. Fuzzy Structural Broad Learning for Breast Cancer Classification. In Proceedings of the 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), Kolkata, India, 28–31 March 2022; pp. 1–4.

- [34] Zhang, T.; Xue, G. Fuzzy attention-based deep neural networks for acute lymphoblastic leukemia diagnosis. Appl. Soft Comput. 2025, 171, 112810.

- [35] Luo, Y.; Xin, J.; Liu, S.; Feng, J.; Ruan, L.; Cui, W.; Zheng, N. Lymph Node Metastasis Classification Based on Semi-Supervised Multi-View Network. In Proceedings of the 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Republic of Korea, 16–19 December 2020; pp. 675–680.

- [36] Emdadi, M.; Pedram, M.M.; Eshghi, F.; Mirzarezaee, M. Graph Fuzzy Attention Network Model for Metastasis Prediction of Prostate Cancer Based on mRNA Expression Data. Int. J. Fuzzy Syst. 2024, 27, 1702–1711.

- [37] Yang, R.; Yu, J.; Yin, J.; Liu, K.; Xu, S. An FA-SegNet Image Segmentation Model Based on Fuzzy Attention and Its Application in Cardiac MRI Segmentation. Int. J. Comput. Intell. Syst. 2022, 15, 24.

- [38] Chen, Y.; Wang, H.; Zhang, G.; Liu, X.; Huang, W.; Han, X.; Li, X.; Martin, M.; Tao, L. Contrastive Learning for Prediction of Alzheimer’s Disease Using Brain 18F-FDG PET. IEEE J. Biomed. Health Inform. 2023, 27, 1735–1746.

- [39] Zhang, S.; Xiao, L.; Huang, H.; Hu, Z. Attention-stacking adaptive fuzzy neural networks for autism diagnosis. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; pp. 5654–5661.

- [40] Wang, C.; Lv, X.; Shao, M.; Qian, Y.; Zhang, Y. A novel fuzzy hierarchical fusion attention convolution neural network for medical image super-resolution reconstruction. Inf. Sci. 2023, 622, 424–436.

- [41] Chen, Q.; Zeng, L.; Ding, W. FRCNN: A Combination of Fuzzy-Rough-Set-Based Feature Discretization and Convolutional Neural Network for Segmenting Subretinal Fluid Lesions. IEEE Trans. Fuzzy Syst. 2025, 33, 350–364.

- [42] Ding, W.; Geng, S.; Wang, H.; Huang, J.; Zhou, T. FDiff-Fusion: Denoising diffusion fusion network based on fuzzy learning for 3D medical image segmentation. Inf. Fusion 2024, 112, 102540.

- [43] Purwono, P.; Nataliani, Y.; Purnomo, H.D.; Timotius, I.K. Fuzzy Logic and Attention Gate for Improved U-Net with Genetic Algorithm for DFU Image Segmentation. In Proceedings of the 2024 International Conference on Information Technology Research and Innovation (ICITRI), Jakarta, Indonesia, 5–6 September 2024; pp. 135–140.

- [44] Bhat, S.; Birajdar, G.K.; Patil, M.D. Pediatric oral health detection using Swin transformer. In Proceedings of the 2024 Third International Conference on Distributed Computing and Electrical Circuits and Electronics (ICDCECE), Ballari, India, 26–27 April 2024; pp. 1–7.

- [45] Bali, B.; Garba, E.J. Neuro-fuzzy Approach for Prediction of Neurological Disorders: A Systematic Review. SN Comput. Sci. 2021, 2, 307.

- [46] De Campos Souza, P.V. Fuzzy neural networks and neuro-fuzzy networks: A review the main techniques and applications used in the literature. Appl. Soft Comput. 2020, 92, 106275.

- [47] Ravichandran, B.D.; Keikhosrokiani, P. Classification of Covid-19 misinformation on social media based on neuro-fuzzy and neural network: A systematic review. Neural Comput. Appl. 2023, 35, 699–717.

- [48] Lu, J.; Ma, G.; Zhang, G. Fuzzy Machine Learning: A Comprehensive Framework and Systematic Review. IEEE Trans. Fuzzy Syst. 2024, 32, 3861–3878.

| Scientific Electronic Databases | Search Strings |
|---|---|
| IEEE Xplore | ("Abstract":neur* OR "Abstract":net* OR "Abstract":model*) AND ("Abstract":atten*) AND ("Abstract":fuzz*) AND ("Abstract":medic* OR "Abstract":diagn*); Filters applied: 2020–2025 |
| Science Direct | Find articles with these terms: ("neural network" OR model) AND attention AND fuzzy AND (medic OR diagnostic); Year(s): 2020–2025; Title, abstract or author-specified keywords: "neural network" AND attention AND fuzzy AND diagnostic |
| Springer Link | Keywords: ("neural network" OR model) AND attention AND fuzzy AND (medic OR diagnostic); Title: fuzz* AND (neur* OR net* OR model*); Start year: 2020; End year: 2025 |
| Web of Science | AB = (neur* OR net* OR model) AND AB = atten* AND AB = fuzz* AND AB = (medic* OR diagn*); TI = fuzz* AND TI = (neur* OR net* OR model*); Start year: 2020; End year: 2025 |
| ACM Digital Library | Search items from: The ACM Guide to Computing Literature; Title: fuzz* AND (neur* OR net* OR model*); Abstract: ("neur* net*" OR model*) AND atten* AND fuzz* AND (medic* OR diagn*); Publication date: Jan 2020–Dec 2025 |
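
All five strings share one structure. Truncated terms (the `*` wildcard) are joined with OR inside a group, the groups are joined with AND, and each group is tied to a search field. The following sketch assumes the Web of Science field-tag form shown in the table (`AB =` for abstract, `TI =` for title). With that form, a small hypothetical helper can regenerate the strings from the term groups, which makes it easier to keep the groups identical across databases. Other platforms' syntax, such as the `"Abstract":` prefix used for IEEE Xplore, would need its own formatter.

```python
def field_group(field, terms):
    """Tie one OR-group of terms to a field tag, e.g. AB = (a OR b)."""
    if len(terms) == 1:
        return f"{field} = {terms[0]}"
    return f"{field} = ({' OR '.join(terms)})"


def build_query(field, groups):
    """Join the field-tagged OR-groups with AND."""
    return " AND ".join(field_group(field, terms) for terms in groups)


# Term groups as they appear in the Web of Science row of the table.
ABSTRACT_GROUPS = [["neur*", "net*", "model"], ["atten*"], ["fuzz*"], ["medic*", "diagn*"]]
TITLE_GROUPS = [["fuzz*"], ["neur*", "net*", "model*"]]

if __name__ == "__main__":
    print(build_query("AB", ABSTRACT_GROUPS))
    print(build_query("TI", TITLE_GROUPS))
```

For these groups, the two calls reproduce the abstract and title parts of the Web of Science row exactly. Keeping the term lists as data rather than hand-typed strings lowers the chance of an accidental difference between databases. One example of such a difference in the table is `model` versus `model*`.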

| Type of Disease | Condition | References |
|---|---|---|
| Cancer and Tumors | Skin Cancer and Skin Lesions | [19,31] |
| | Lung Cancer | [13,32] |
| | Breast Cancer | [16,33] |
| | Brain Tumor | [15,18] |
| | Colon Cancer | [13] |
| | Lymphoblastic Leukemia | [34] |
| | Lymph Node Metastasis | [35] |
| | Prostate Cancer | [36] |
| Heart Conditions | Heart (Segmentation) | [9,24,37] |
| | Heart Abnormalities | [14] |
| Neurological or Brain Diseases | Alzheimer’s Disease | [38] |
| | Autism | [39] |
| | Parkinson’s Disease | [17] |
| Pulmonary Conditions | COVID-19 | [28,40] |
| | Pneumonia | [12] |
| | Lung Disorders | [23] |
| Ocular Conditions | Glaucoma | [11] |
| | Subretinal Fluid Lesions | [41] |
| | Fundus Segmentation | [25] |
| Gastrointestinal and Abdominal Conditions | Gastric Polyps | [22] |
| | Pancreas | [10] |
| | Abdominal Multiorgan (Segmentation) | [42] |
| Conditions Related to Diabetes | Diabetic Foot Ulcers | [43] |
| | Diabetic Retinopathy | [29] |
| Oral Health | Healthy Teeth | [44] |

| Artificial Neural Network | Details of Artificial Neural Networks |
|---|---|
| Convolutional neural networks (CNNs) | CNNs were used in 28 publications, making them the most widely used type of neural network. They were mainly applied to the classification or segmentation of medical images, such as MRIs, CT scans, and X-rays. In some cases, they were integrated with spatial attention and fuzzy logic mechanisms to better interpret the relevant image regions. |
| Multilayer perceptrons (MLPs) | MLPs were used in 18 publications, primarily because their simplicity makes them easy to combine with other types of neural networks, attention modules, or fuzzy modules. We also identified their use as the final layer in classification tasks. |
| Transformers | Transformers appear in seven publications and stand out for their ability to model complex and temporal relationships between input features. They were often integrated with fuzzy modules so that fuzzy logic guides the attention process. |
| Gated recurrent units (GRUs) | GRUs were used in two publications, mainly for medical time series (e.g., physiological signal monitoring). |
| Graph neural networks (GNNs) | GNNs were used in two publications, as they have become a state-of-the-art method for predicting associations between diseases. |
| Other less common approaches | Long short-term memory (LSTM) networks, generative adversarial networks (GANs), and random-coupled neural networks (RCNNs). |
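
To make the role of an MLP as the final classification layer more concrete, the following minimal pure-Python sketch shows a pooled feature vector passing through one hidden ReLU layer and a softmax over the classes. This might be, for example, the output of a CNN backbone. The sketch is illustrative and is not taken from any reviewed publication. The feature values, layer sizes, and weights are hypothetical. In a real model, the weights would be learned during training.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: y_j = sum_i x_i * W[i][j] + b_j."""
    return [
        sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def softmax(v: Vector) -> Vector:
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def mlp_head(features: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """One hidden ReLU layer followed by a softmax over the output classes."""
    hidden = relu(dense(features, w1, b1))
    return softmax(dense(hidden, w2, b2))


# Hypothetical example: a 4-dimensional pooled feature vector mapped to
# 2 diagnostic classes; all weights are illustrative, not learned.
features = [0.5, -1.0, 2.0, 0.0]
w1 = [[0.2, -0.1, 0.4], [0.0, 0.3, -0.2], [0.5, 0.1, 0.0], [-0.3, 0.2, 0.1]]
b1 = [0.0, 0.1, -0.1]
w2 = [[1.0, -1.0], [0.5, 0.5], [-0.5, 1.0]]
b2 = [0.0, 0.0]

probs = mlp_head(features, w1, b1, w2, b2)
print([round(p, 3) for p in probs])
```

Because the head only needs a fixed-length vector, the same function could sit on top of CNN, Transformer, or fuzzy-layer outputs. This fits the table's observation that MLPs were easy to combine with other modules.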

| Attention Mechanisms | Details of the Attention Mechanisms |
|---|---|
| Self-Attention/Multihead Attention (Transformer style) | This type of attention appears in 10 publications, generally within a Transformer architecture, to focus on contextual or spatial features [9,11,13,14,15,16,33,36,39,44]. |
| Channel-Spatial Attention | This type of attention is used in 12 publications, in variants such as Dual Attention (channel + spatial) [12,19,23,25,35,41], the Hybrid Attention Mechanism (HAM) [22,31,38], and Iterative Attention Fusion Modules [42]. These mechanisms are effective in image-processing models because they prioritize key regions of the human body in segmentation tasks. This type of attention is generally built on convolutional neural networks. |
| Fuzzy Attention modules | Some mechanisms, such as the Fuzzy Attention Gate [28] and Fuzzy-Enhanced Holistic Attention [29], integrate fuzzy logic directly into the attention process. We identified this type of attention in five publications [27,28,29,34,37]. |
| Additional attention mechanisms | We also identified other mechanisms, such as the Attention Gate Module [43], and specialized networks, including the Contextual Attention Network [18]. |
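
The Transformer-style self-attention listed above is usually the scaled dot-product form, softmax(QK^T / √d_k)V. The sketch below is a minimal single-head version in pure Python. It uses a hypothetical toy input of three tokens, which could stand for image patches or time steps, and hypothetical projection matrices (identity here). Multihead attention would run several such heads with different projections in parallel and concatenate their outputs. That step is omitted for brevity.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax_rows(m: Matrix) -> Matrix:
    out = []
    for row in m:
        mx = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - mx) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention.

    Q = X Wq, K = X Wk, V = X Wv; output = softmax(Q K^T / sqrt(d_k)) V.
    Returns the output and the attention weights (each row sums to 1).
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(wk[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


# Hypothetical toy input: 3 tokens with 2 features each; identity
# projections keep the example readable (real models learn Wq, Wk, Wv).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of `attn` shows how strongly one token attends to every other token. In the fuzzy attention modules described in the table, fuzzy logic is reported to intervene in this weighting step. The exact mechanism differs between publications and is not reproduced here.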

| Fuzzy Logic Techniques | Details of Fuzzy Logic Techniques |
|---|---|
| Fuzzy networks and fuzzy layers | The fuzzy networks and fuzzy layers used in the reviewed publications combine the nonlinear representation capacity of neural networks with the approximate reasoning characteristic of fuzzy logic. These techniques made it possible to handle the uncertainty, ambiguity, and imprecision inherent in much medical data. They also made the models more interpretable by expressing their behavior in terms closer to human logic and language. |
| Fuzzy clustering | We observed that fuzzy clustering techniques were used to identify fuzzy patterns between classes and to improve the segmentation or grouping of features. Because they tolerate ambiguity, they are useful in diagnoses where image boundaries are poorly defined. |
| Hybrid fuzzy techniques | We identified hybrid fuzzy techniques, including the Fuzzy-Enhanced Firefly Algorithm, Fuzzy Entropy, Fuzzy Rank-Based Ensemble, and Fuzzy Stacking. This indicates that fuzzy logic was integrated into both the model architecture and feature processing. |
| Other fuzzy techniques | Fuzzy Attention, Fuzzy Pooling, Fuzzy Activation Functions, Fuzzy Contrastive Loss, and Fuzzy Skip Connections. |
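
As an illustration of fuzzy clustering, the sketch below implements standard fuzzy c-means (FCM) on one-dimensional values such as normalized pixel intensities. It is a generic textbook version, not the specific variant used in any reviewed publication. The input values, the fuzzifier m = 2, and the random seed are hypothetical. Unlike hard clustering, FCM gives every sample a membership degree in each cluster. An ambiguous value lying between two tissue intensities is therefore split between clusters rather than forced into one.

```python
import random
from typing import List, Tuple


def fuzzy_c_means(
    xs: List[float], c: int = 2, m: float = 2.0,
    iters: int = 200, tol: float = 1e-6, seed: int = 0,
) -> Tuple[List[float], List[List[float]]]:
    """Fuzzy c-means on 1-D data.

    Returns the cluster centers and the membership matrix u, where u[i][j]
    is the degree to which sample i belongs to cluster j (rows sum to 1).
    The fuzzifier m > 1 controls how soft the memberships are.
    """
    rng = random.Random(seed)
    # Random initial memberships, normalized so that each row sums to 1.
    u = []
    for _ in xs:
        row = [rng.random() for _ in range(c)]
        s = sum(row)
        u.append([r / s for r in row])

    centers = [0.0] * c
    for _ in range(iters):
        # Center update: weighted mean of the samples with weights u_ij^m.
        for j in range(c):
            w = [u[i][j] ** m for i in range(len(xs))]
            centers[j] = sum(wi * x for wi, x in zip(w, xs)) / sum(w)

        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik)^(2 / (m - 1)).
        new_u = []
        for x in xs:
            d = [max(abs(x - cj), 1e-12) for cj in centers]  # avoid division by zero
            new_u.append([
                1.0 / sum((d[j] / d[k]) ** (2.0 / (m - 1.0)) for k in range(c))
                for j in range(c)
            ])

        # Stop when the memberships no longer change appreciably.
        shift = max(abs(a - b) for ru, rn in zip(u, new_u) for a, b in zip(ru, rn))
        u = new_u
        if shift < tol:
            break
    return centers, u


# Hypothetical normalized intensities: a dark group, a bright group,
# and one ambiguous value (0.50) lying between them.
intensities = [0.10, 0.12, 0.15, 0.11, 0.20, 0.85, 0.90, 0.88, 0.80, 0.50]
centers, u = fuzzy_c_means(intensities, c=2)
for x, row in zip(intensities, u):
    print(f"{x:.2f} -> memberships {[round(v, 2) for v in row]}")
print("centers:", [round(cj, 3) for cj in centers])
```

Values close to either group receive memberships near 1 for that group. The ambiguous value at 0.50 receives roughly equal memberships, which is the behavior that makes fuzzy clustering attractive for poorly defined boundaries. As m approaches 1, the partition approaches hard k-means assignments.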

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zacarias-Morales, N.; Pancardo, P.; Hernández-Nolasco, J.A.; Garcia-Constantino, M. Artificial Neural Network, Attention Mechanism and Fuzzy Logic-Based Approaches for Medical Diagnostic Support: A Systematic Review. AI 2025, 6, 281. https://doi.org/10.3390/ai6110281
