4.1. Performance Improvement Before and After KD

4.1.1. Analysis Using Conventional Performance Metrics

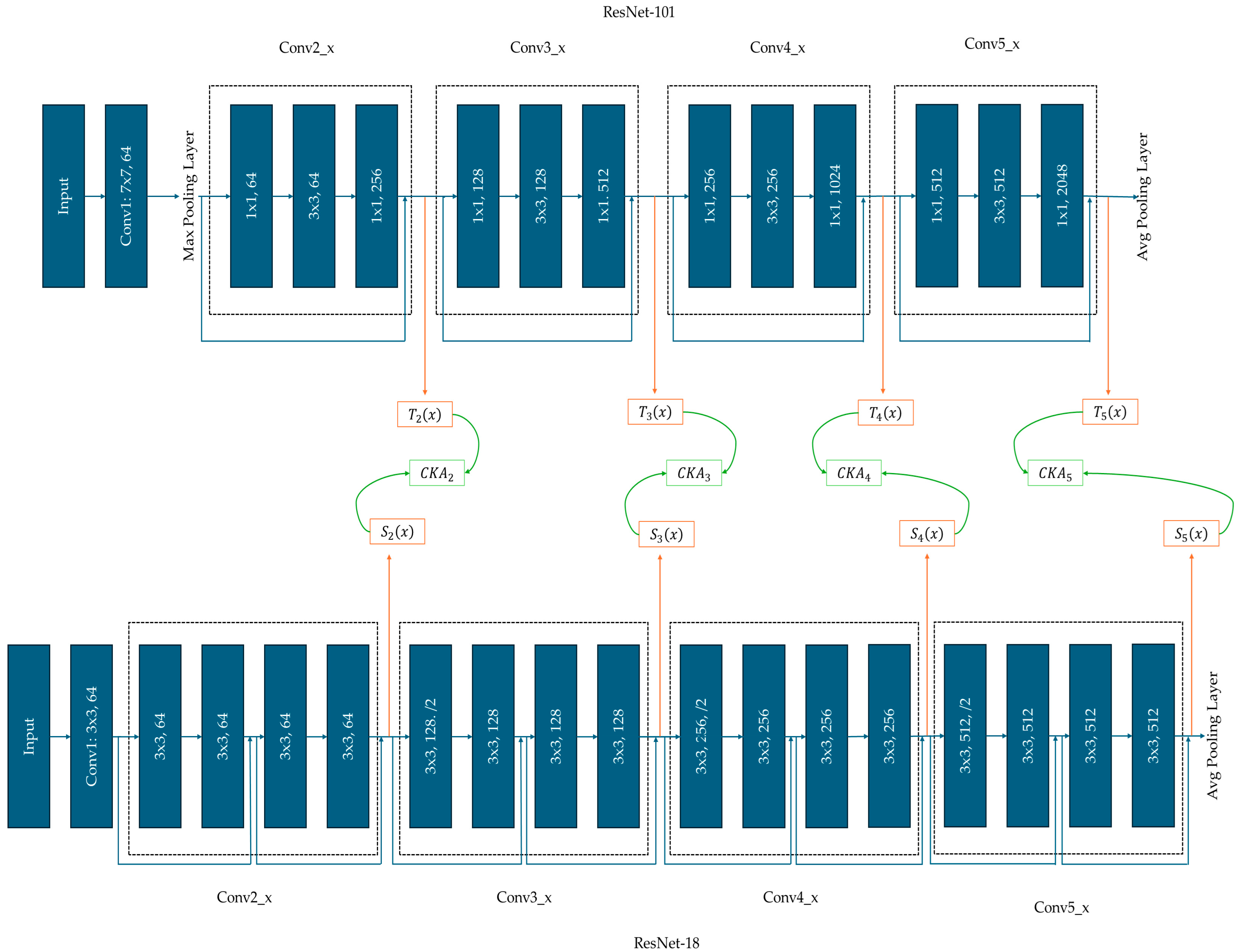

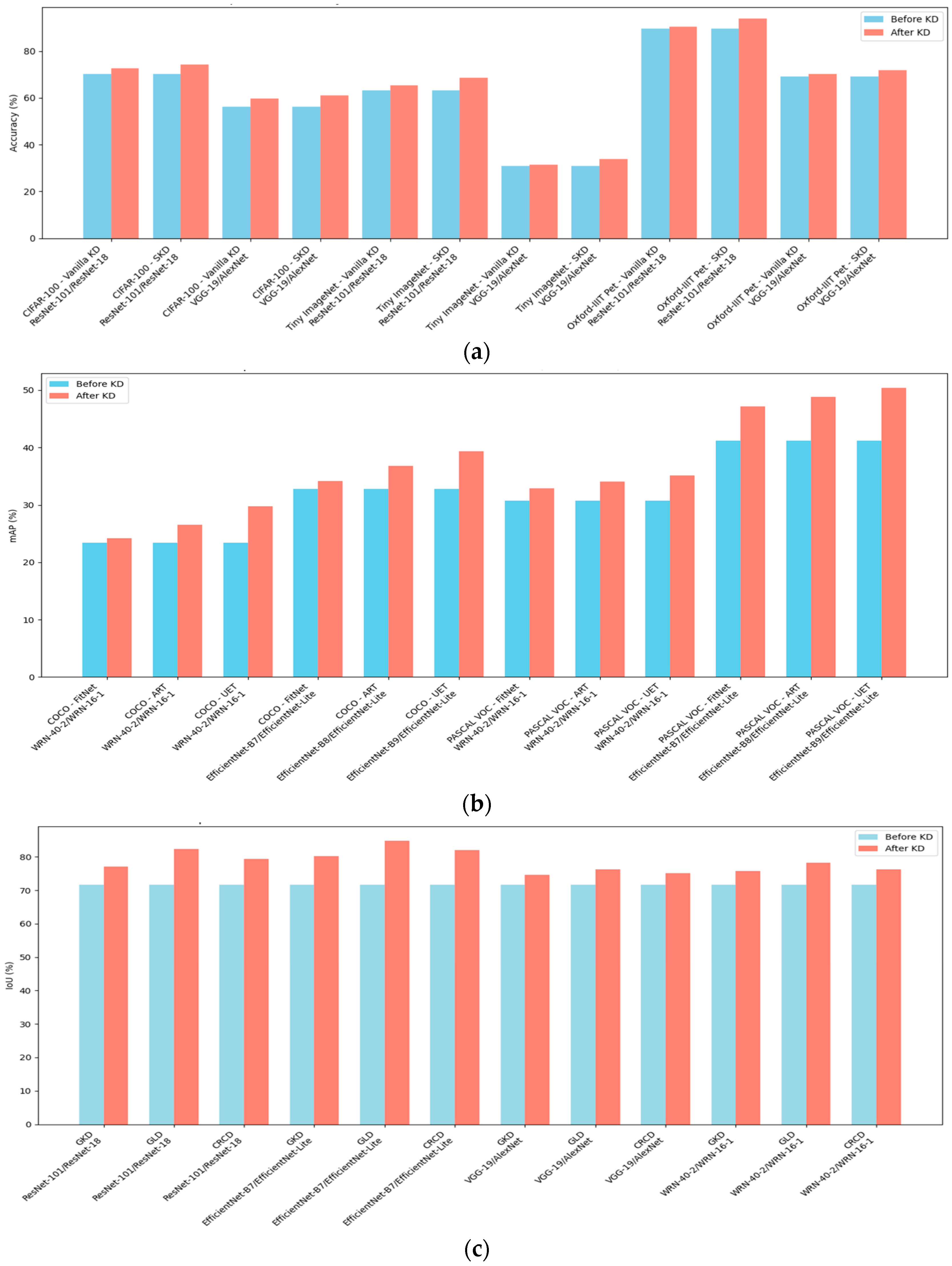

As shown in Figure 2, KD consistently improves student model performance across all experiments, datasets, and metrics, demonstrating its effectiveness in enhancing generalization.

In Figure 2a, accuracy improvements are most evident in the Oxford-IIIT Pet dataset and more modest in Tiny ImageNet. The former’s lower class count and distinct visual features facilitate easier knowledge transfer. In contrast, Tiny ImageNet’s high interclass similarity poses a greater challenge. ResNet-18 consistently outperforms AlexNet before and after KD, owing to its deeper architecture and skip connections, which improve feature extraction and mitigate gradient issues, making it more effective for complex classification tasks.

Figure 2b shows mAP results for object detection, with student models performing slightly better on PASCAL VOC than COCO, likely due to VOC’s simpler scenes and categories. Across both datasets, EfficientNet-Lite surpasses WRN-16-1, benefiting from compound scaling that optimizes depth, width, and resolution for nuanced feature extraction.

In Figure 2c, KD leads to improved IoU scores in image segmentation, particularly for EfficientNet-derived students, which maintain high-resolution features vital for accurate segmentation. ResNet-based students follow, aided by depth and residual connections, while WRN-16-1 and especially AlexNet lag behind, limited by their shallower architectures.

While these improvements across accuracy, mAP, and IoU confirm KD’s utility, traditional metrics capture only surface-level performance. They miss how well the student internalizes the teacher’s knowledge—especially in complex or relational tasks. This limitation motivates the need for KRS, which assesses both feature alignment and output agreement, offering a more complete picture of knowledge transfer quality.

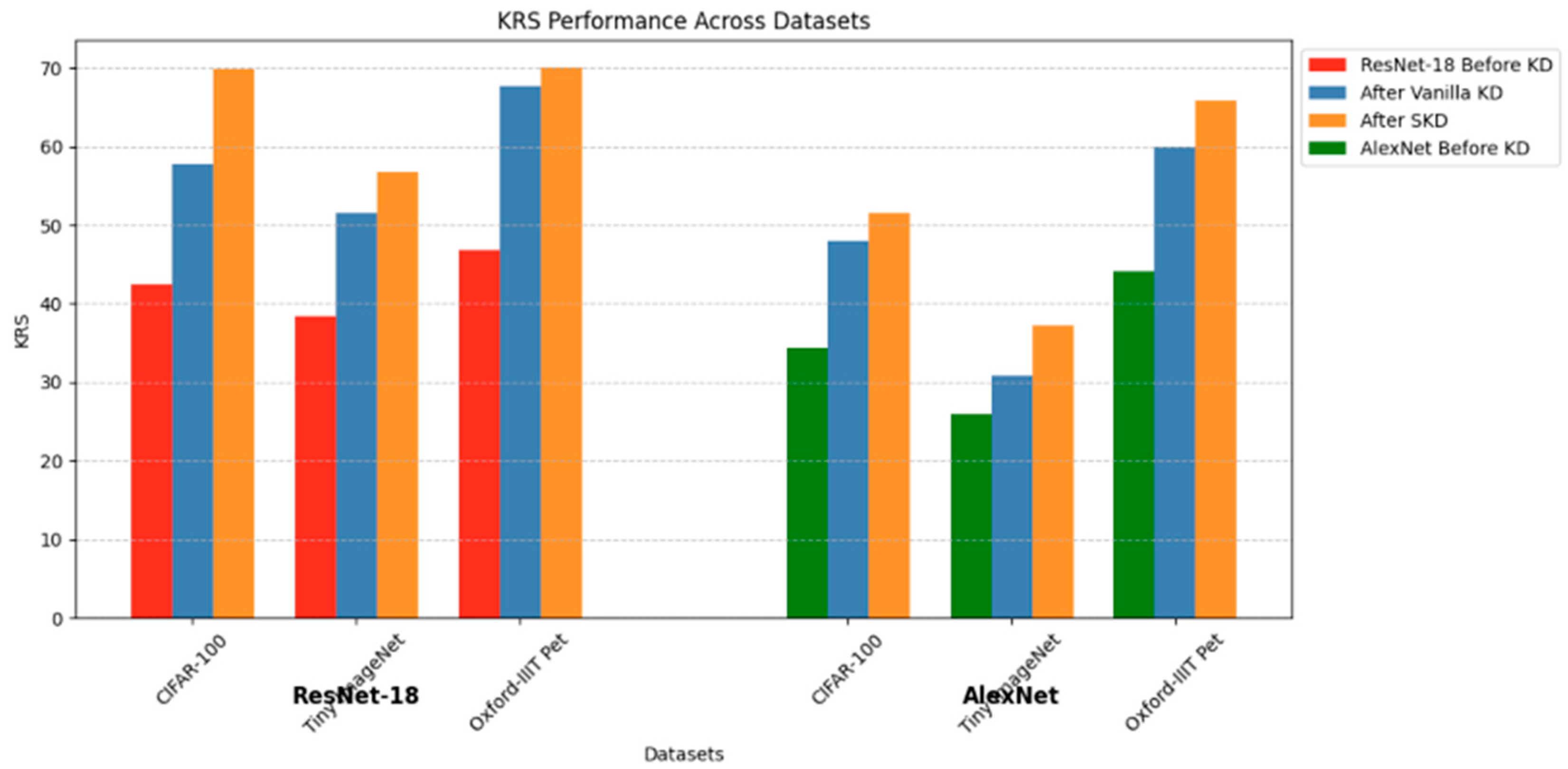

4.1.2. Student Model Performance Using KRS Before and After KD

After evaluating KD performance using standard metrics, we next examine the KRS between teacher and student models, starting from the baseline before distillation. As defined in Equation (8), KRS combines the Feature Similarity Score (FSS) and the Average Output Agreement (AOAg), weighted by α and β. For image classification tasks, we set α = 0.3 and β = 0.7 to emphasize logit-based outputs. For object detection and segmentation, α = 0.7 and β = 0.3 prioritize feature alignment, which is critical for spatial reasoning.
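The weighting described above can be sketched in a few lines. This is a minimal illustration that assumes Equation (8) takes the linear form KRS = α·FSS + β·AOAg with both components on a 0 to 100 scale; the function name `krs` and the dictionary `TASK_WEIGHTS` are illustrative and not taken from the original implementation.

```python
# Task-aware weights (alpha for FSS, beta for AOAg) as stated in the text.
TASK_WEIGHTS = {
    "classification": (0.3, 0.7),
    "detection": (0.7, 0.3),
    "segmentation": (0.7, 0.3),
}


def krs(fss: float, aoag: float, task: str) -> float:
    """Weighted sum of the two components (assumed linear form of Equation (8))."""
    alpha, beta = TASK_WEIGHTS[task]
    return alpha * fss + beta * aoag


# Component values reported in Section 4.2.2 for ResNet-18 on CIFAR-100.
pre_kd = krs(fss=32, aoag=47, task="classification")
post_skd = krs(fss=39, aoag=83, task="classification")
print(round(pre_kd, 1), round(post_skd, 1))  # 42.5 69.8
```

Under this assumed form, the component values quoted for ResNet-18 on CIFAR-100 reproduce the reported scores of 42.5 (pre-KD) and 69.8 (SKD).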

Figure 3 presents the results for the image classification tasks. Across all datasets, KD consistently improves KRS for both ResNet-18 and AlexNet, though the magnitude varies by KD method, architecture, and dataset complexity. ResNet-18 shows higher baseline KRS and greater improvements: on CIFAR-100, its KRS increases from 42.5 (pre-KD) to 57.7 (Vanilla KD) and 69.8 (SKD), a 27.3-point gain. By contrast, AlexNet rises from 34.4 to 48.0 (Vanilla KD) and 51.5 (SKD), gaining 17.1 points. This pattern holds for Tiny ImageNet and Oxford-IIIT Pet, highlighting ResNet-18’s superior retention capability.

SKD consistently outperforms Vanilla KD, thanks to its student-friendly strategy that tailors teacher outputs to the student’s capacity. On Tiny ImageNet, AlexNet gains 6.5 points with SKD vs. 4.8 with Vanilla KD; ResNet-18 improves by 18.4 vs. 13.2 points, respectively. This shows SKD’s effectiveness, particularly for simpler models like AlexNet.

These results underscore the role of architecture in knowledge retention. ResNet-18’s residual connections support better optimization and representation learning, enabling it to absorb complex knowledge more effectively. In contrast, AlexNet’s shallow design limits its retention capacity, as reflected in its consistently lower KRS gains.

The data reveal that simpler datasets like Oxford-IIIT Pet tend to yield higher KRS gains, especially for smaller models. For example, AlexNet improves by 21.8 points on Oxford-IIIT Pet using SKD, compared to 17.1 on CIFAR-100 and 11.3 on Tiny ImageNet. A notable exception occurs with ResNet-18 on CIFAR-100, where the KRS gain (27.3 points) exceeds the 23.2-point gain on Oxford-IIIT Pet. This can be attributed to CIFAR-100’s greater feature complexity, which provides richer knowledge for SKD to transfer. ResNet-18’s residual connections help it exploit this complexity more effectively. In contrast, simpler datasets offer less room for improvement once baseline performance is high.

Thus, while simpler datasets generally yield better gains, complex datasets can amplify SKD’s benefits when paired with deeper student architectures.

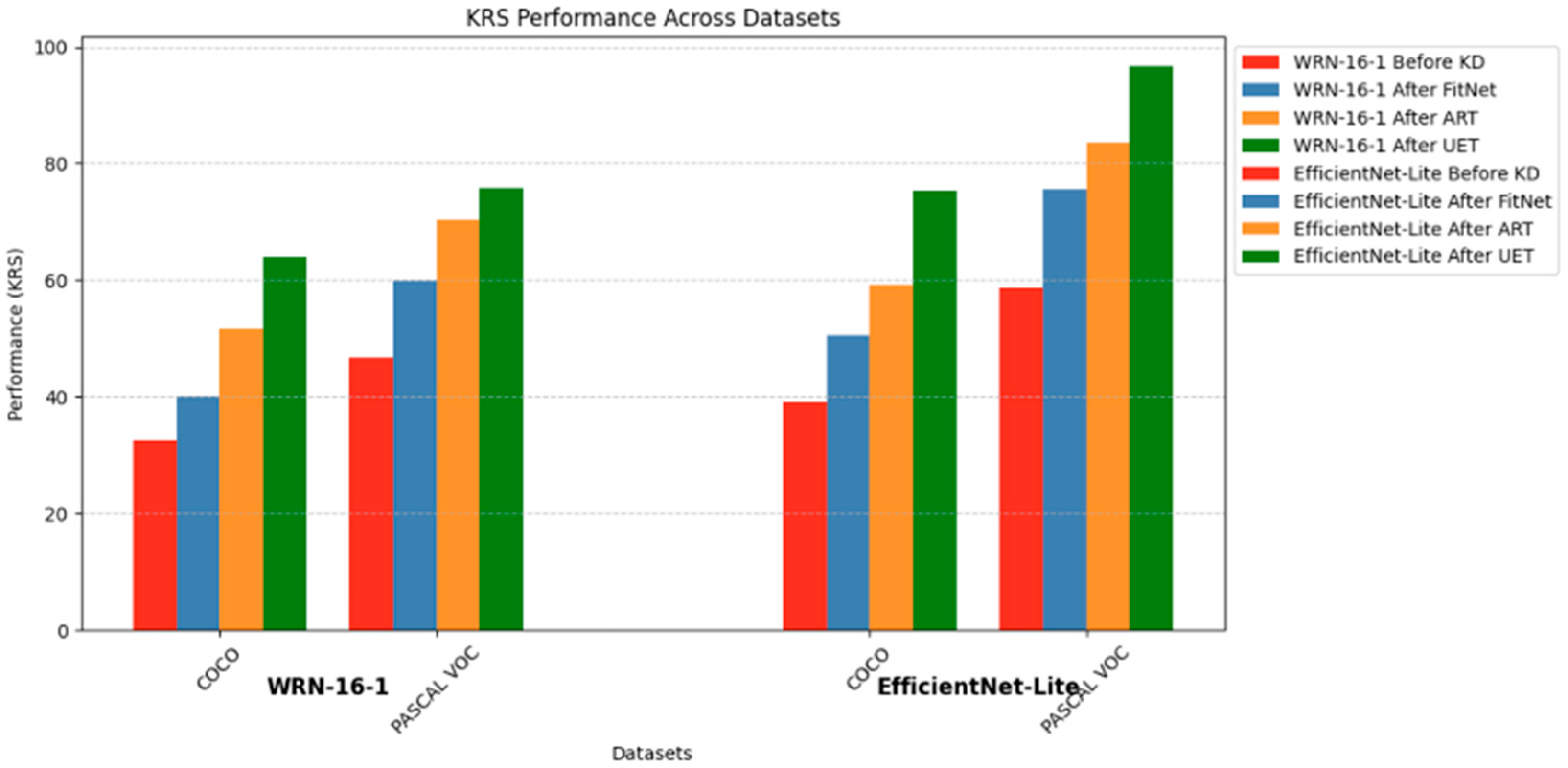

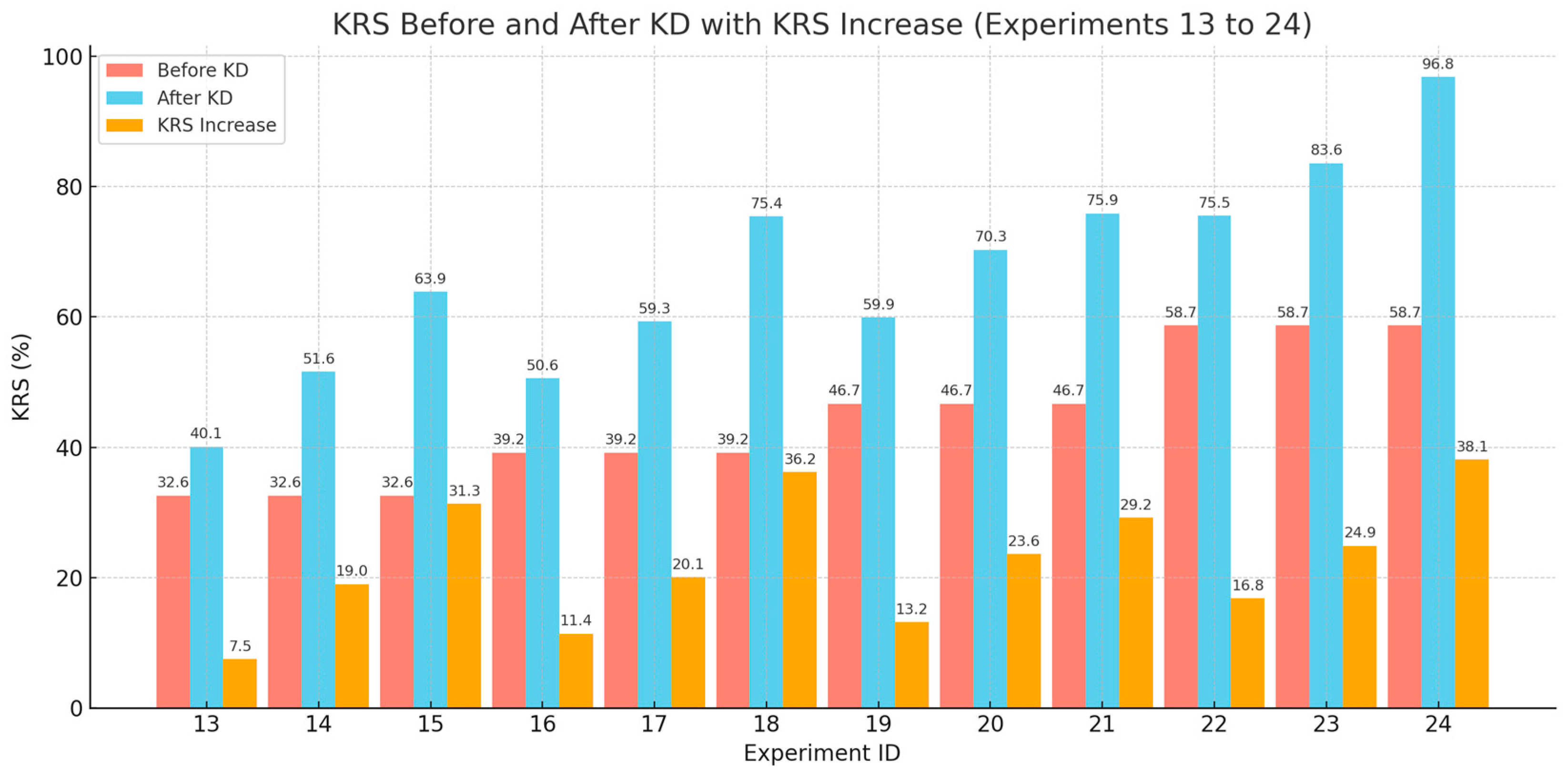

We next examine experiments for object detection. As shown in Figure 4, UET consistently delivers the highest KRS improvements, followed by ART and FitNet. For example, on COCO (Experiment 15), UET improves KRS by 31.3 points, compared to 19 for ART. On PASCAL VOC (Experiment 21), UET again leads with a 29.2-point gain.

This pattern holds across architectures. In one experiment, UET boosts EfficientNet-Lite’s KRS by 38.1 points, outperforming ART (24.9) and FitNet (16.8), demonstrating UET’s robustness in integrating uncertainty estimation for improved feature and output alignment.

Dataset complexity also plays a role. PASCAL VOC, being simpler, yields higher KRS gains than the more complex COCO. While COCO offers richer features, its diversity can challenge student models’ ability to fully absorb the teacher’s knowledge. In contrast, VOC’s simplicity enables more effective alignment and transfer.

Across all setups, FitNet shows steady but modest gains—e.g., only 13.2 points in Experiment 19—highlighting its limited capacity to exploit complex knowledge, especially compared to more advanced methods like UET.

Overall, these findings affirm the superiority of UET and illustrate how KD effectiveness depends on both the distillation method and dataset complexity, underscoring the need for task-appropriate strategies.

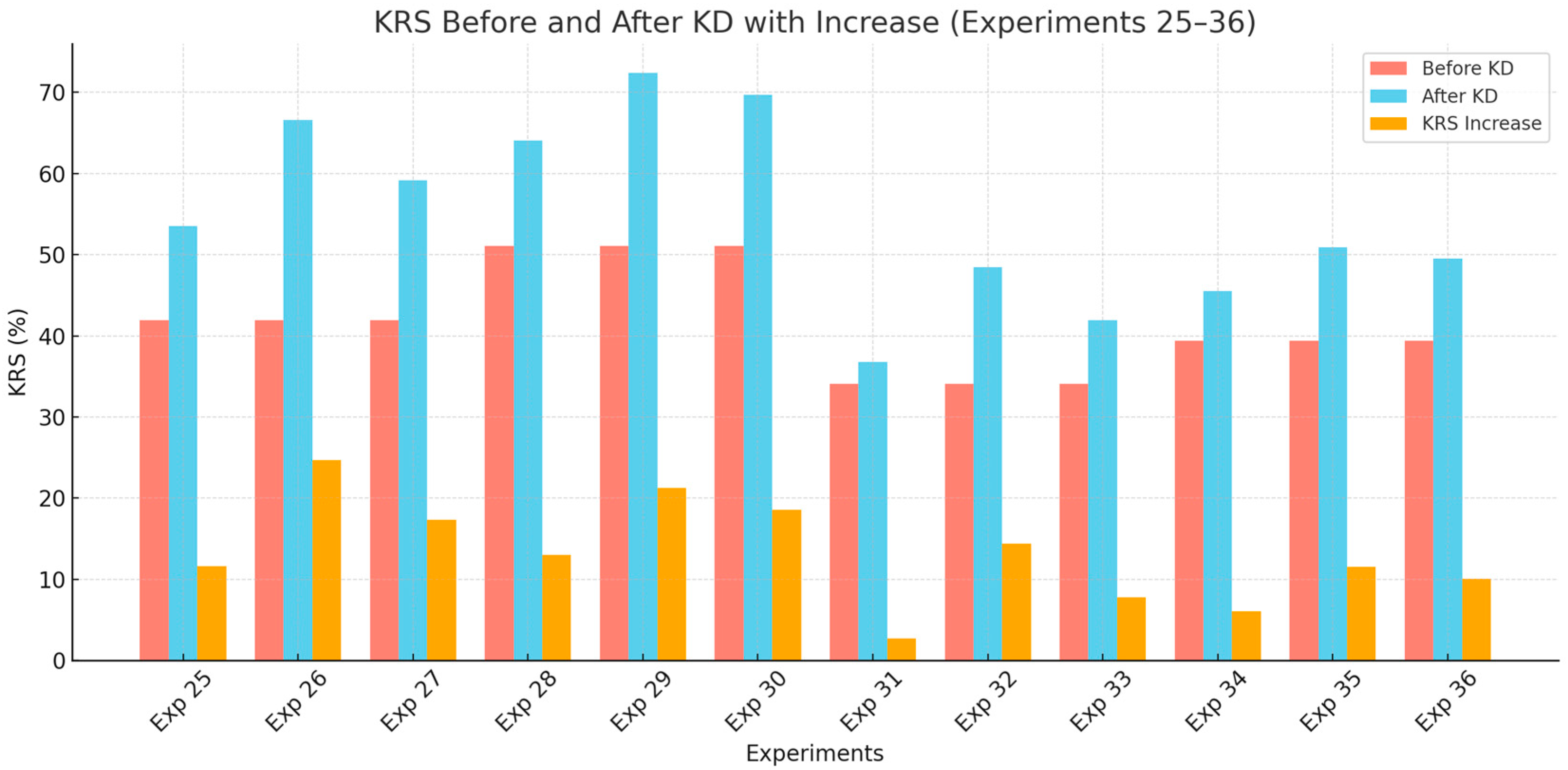

Finally, we analyze experiments focused on image segmentation. Across all setups, KRS significantly improves after applying KD, with GLD consistently achieving the highest gains, followed by CRCD and then GKD (Figure 5).

For example, in the ResNet-101/ResNet-18 pair, GLD improves KRS by 24.7 points, outperforming CRCD (17.3) and GKD (11.6). The same trend appears in the EfficientNet-B7/EfficientNet-Lite pair, where GLD achieves a 21.3-point gain, exceeding CRCD (18.6) and GKD (13.0). GLD’s edge is likely due to its stronger ability to model both global and local relationships.

In the VGG-19/AlexNet pair, all gains are smaller due to AlexNet’s limited capacity. GLD still leads (14.4 points), but CRCD (7.8) and GKD (2.7) show more modest improvements. A similar pattern is observed in the WRN-40-2/WRN-16-1 pair, with GLD (11.5) ahead of CRCD (10.1) and GKD (6.1).

Overall, GLD emerges as the most effective segmentation KD method, while CRCD is a strong alternative for deeper networks. GKD, though simpler, offers consistent—albeit smaller—gains.

These differences reinforce the importance of choosing the right KD method and aligning it with the model’s capacity and task type. The design of KRS reflects this need: by tuning α and β, it shifts emphasis between output agreement and feature similarity. This task-aware flexibility allows KRS to effectively evaluate knowledge transfer across diverse KD scenarios—without structural modification—supporting its potential as a broadly applicable evaluation metric.

4.2. Validation of the KRS Metric

This section presents the results of multiple validation strategies conducted to assess the effectiveness and reliability of the proposed KRS metric as a performance indicator for knowledge distillation models.

4.2.1. Correlation Between KRS and Standard Performance Metrics

To validate the reliability of the proposed KRS metric, we first examined its correlation with established performance metrics across different tasks. For image classification (Experiments 1 to 12), we computed the Pearson correlation between KRS and the student model’s accuracy after knowledge distillation. The results revealed a strong positive correlation (r = 0.943, p = 0.000005), indicating that KRS is highly aligned with the conventional accuracy metric in classification tasks.

For object detection (Experiments 13 to 24), we analyzed the relationship between KRS and mAP. The computed correlation was also strong (r = 0.884, p = 0.0001), suggesting that KRS effectively reflects model performance in detection tasks.

Lastly, for image segmentation (Experiments 25 to 36), the correlation between KRS and IoU was found to be exceptionally high (r = 0.968, p = 0.00000025), demonstrating that KRS closely tracks the performance of student models in segmentation scenarios. These results collectively support the validity of KRS as a reliable metric for evaluating knowledge distillation outcomes across different task types.
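For readers who wish to reproduce this kind of check, the following is a minimal sketch of the Pearson coefficient computed from paired per-experiment scores. The function name `pearson_r` and the sample values are hypothetical placeholders, not the per-experiment results of this study; the p-values reported above would additionally require a significance test, which is not shown.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical placeholder values, NOT the paper's per-experiment results.
krs_after_kd = [46.0, 50.0, 58.0, 63.0, 71.0]
accuracy_after_kd = [52.0, 57.0, 61.0, 70.0, 75.0]
r = pearson_r(krs_after_kd, accuracy_after_kd)
```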

While the KRS metric incorporates elements such as CKA, KL divergence, and IoU, which are also present in some knowledge distillation (KD) loss functions, KRS is used exclusively as a post hoc evaluation tool and never during training. This ensures that all KD models, regardless of their internal training loss components, are assessed using the same standardized criteria. The consistent KRS improvements for methods such as SKD, which do not explicitly use CKA or IoU, demonstrate that high KRS values are not exclusive to methods that share its components. Thus, KRS does not reward methods simply for overlapping mechanisms; instead, it measures how well the student retains and reflects the teacher’s knowledge, supporting a fair evaluation across diverse KD tasks and approaches.

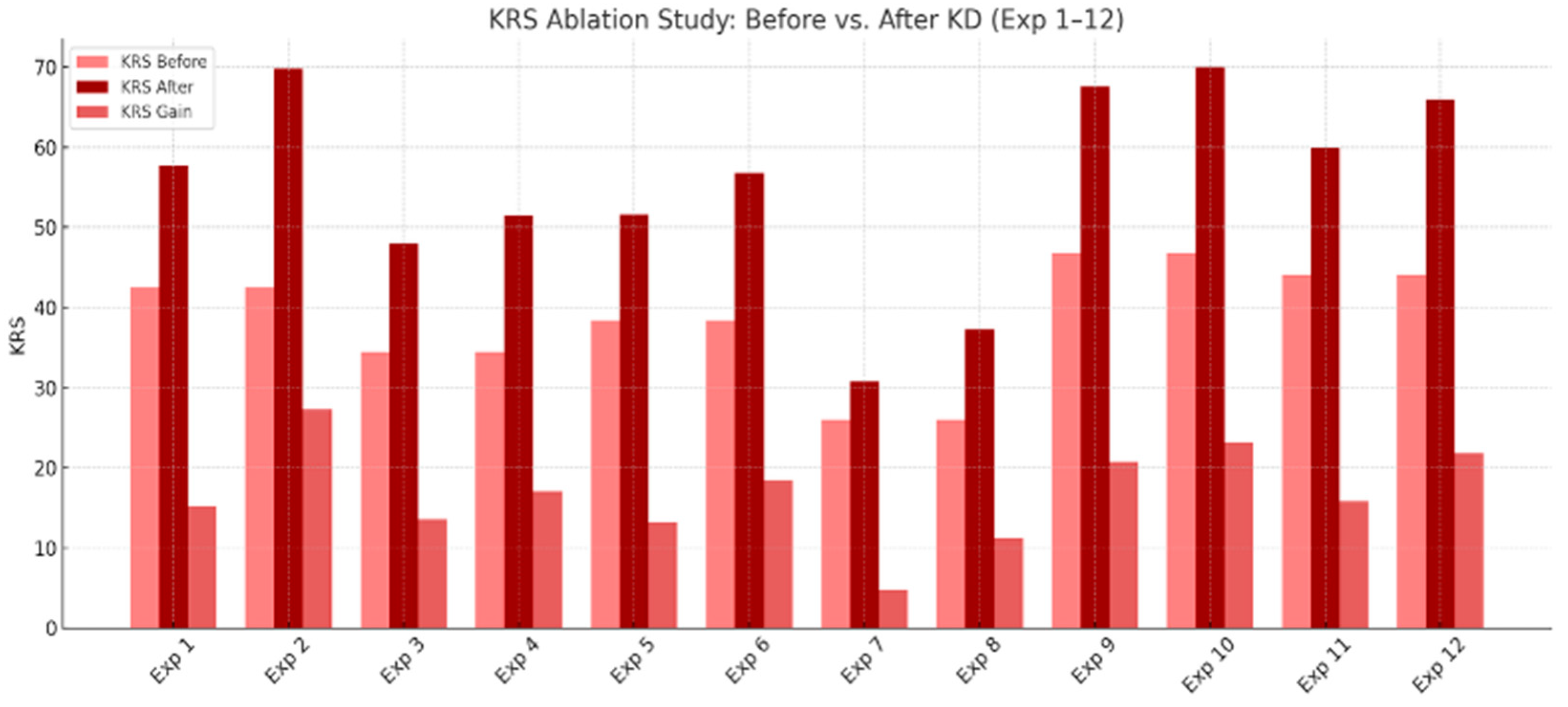

4.2.2. Ablation Study: Decomposing KRS Before and After KD

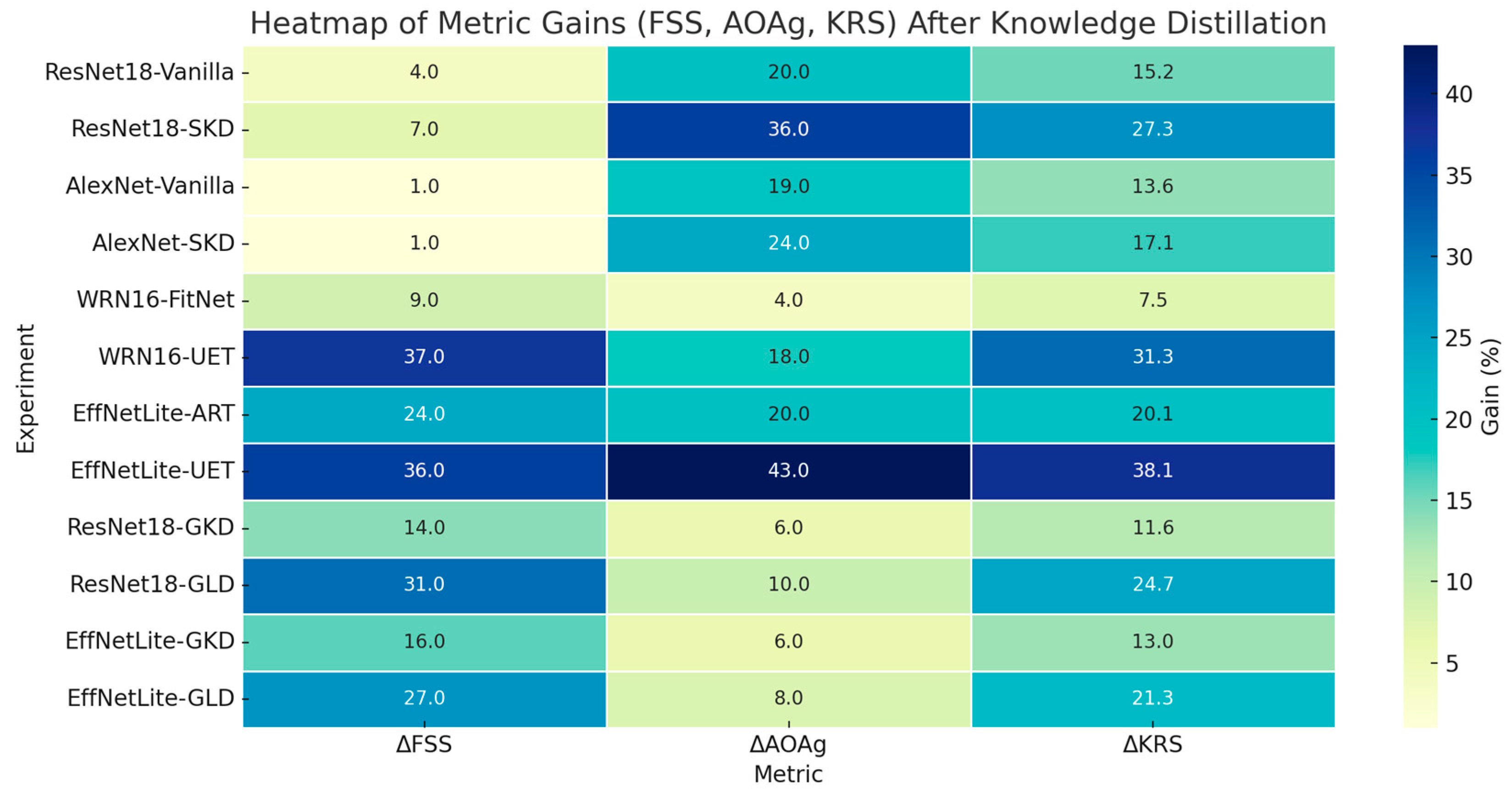

To assess how KRS reflects knowledge transfer, we conducted an ablation analysis using its two components, Feature Similarity Score (FSS) and Average Output Agreement (AOAg), before and after KD. In image classification, both FSS and AOAg improved after KD, especially with SKD, which consistently outperformed Vanilla KD. For example, ResNet-18 on CIFAR-100 showed FSS rising from 32 to 39 and AOAg from 47 to 83 under SKD, resulting in a substantial KRS gain. Deeper models like ResNet-18 saw larger improvements than shallower ones like AlexNet, reinforcing the role of student capacity. These results validate KRS as a meaningful composite metric, sensitive to both technique and architecture. Results are shown in Figure 5.

In object detection, KRS also increased across all KD methods. UET consistently yielded the highest gains compared to ART and FitNet, regardless of dataset. For instance, WRN-16-1 distilled via UET on COCO showed stronger improvement than on PASCAL VOC with FitNet—demonstrating that KRS captures nuanced variations based on KD method and dataset complexity.

Notably, KRS gains were larger in setups involving compact student models or challenging datasets, highlighting KD’s greater impact where capacity is constrained or tasks are complex. Overall, KRS successfully tracked improvements in feature alignment and output behavior, validating its robustness and interpretability for evaluating KD effectiveness in object detection (Figure 6).

In image segmentation, KRS again increased consistently post-KD, as can be seen in Figure 7. GLD produced the highest gains, excelling in holistic output transfer. CRCD performed well in deeper models due to its dense relational modeling, while GKD lagged, especially with limited-capacity students like AlexNet. These patterns confirm that KRS is sensitive to both KD method and student architecture, reinforcing its utility in dense prediction tasks.

4.2.3. Sensitivity to KD Quality

To further evaluate the reliability of KRS, we examined its sensitivity to the quality of KD methods applied across different tasks. In this context, KD quality refers to the extent to which each method improves the performance and knowledge retention of the student model. A reliable metric should consistently reflect higher gains when stronger KD strategies are employed.

We first rank the KD methods used in this study by the average improvement of the student on the conventional metrics. We then rank each KD method by its average increase in KRS. Finally, we compare the two rankings, as shown in Table 5.

The table offers a dual perspective on how different KD strategies perform in enhancing both traditional task-based metrics and the proposed KRS, which captures retained knowledge more holistically. Across both rankings, Vanilla KD consistently appears as the least effective method, suggesting that while it offers basic improvements, it lacks the sophistication of more modern techniques in transferring knowledge. FitNet follows closely, indicating only moderate gains in both traditional metrics and knowledge retention. In contrast, UET emerges as the top-performing method in both categories. Its superior placement suggests that UET not only maximizes conventional performance outcomes but also enables the student model to internalize a substantial amount of the teacher’s knowledge, as reflected in the high KRS gains. Similarly, GLD and ART show consistently strong performance, placing them among the top-tier KD techniques across both evaluation dimensions.

To confirm this observation quantitatively, we computed a Kendall’s τ correlation coefficient between the two rankings. The result is τ = 1.0, indicating perfect concordance between the ordering of KD methods by conventional performance gains and their ordering by KRS gains. This statistical confirmation reinforces the validity of KRS as a reliable metric for assessing KD quality.
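The rank comparison can be illustrated with a short sketch of Kendall's τ for two tie-free rankings (the tau-a variant, chosen here as an assumption about the computation). The function name `kendall_tau` and the rank lists are hypothetical; they cover only a subset of the methods and do not reproduce Table 5.

```python
from itertools import combinations


def kendall_tau(rank_a, rank_b):
    """Kendall's tau-a for two rankings of the same items, assuming no ties."""
    concordant = discordant = 0
    for i, j in combinations(range(len(rank_a)), 2):
        sign = (rank_a[i] - rank_a[j]) * (rank_b[i] - rank_b[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(rank_a) * (len(rank_a) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical ranks for illustration only (1 = best); not the values of Table 5.
methods = ["UET", "GLD", "ART", "FitNet", "Vanilla KD"]
rank_by_conventional_metric = [1, 2, 3, 4, 5]
rank_by_krs_gain = [1, 2, 3, 4, 5]
print(kendall_tau(rank_by_conventional_metric, rank_by_krs_gain))  # 1.0
```

Two identical orderings give τ = 1.0, the perfect concordance reported above. Every pair of methods that swaps places lowers the coefficient.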

Importantly, this convergence does not imply redundancy. While KRS rankings align with conventional evaluations (ensuring validity), KRS additionally integrates internal feature similarity with external output agreement, providing a richer and more interpretable view of knowledge retention. This dual perspective addresses transparency concerns by ensuring that improvements in representational and predictive alignment are both captured. Thus, KRS functions as a complementary measure: consistent with traditional task outcomes while simultaneously offering insight into the underlying knowledge transfer process.

4.2.4. Trade-Off (Imbalance) Analysis

While the previous section established that KRS rankings are consistent with conventional performance-based rankings, it is also important to examine whether KRS provides additional interpretive value in situations where improvements in feature similarity (FSS) and output agreement (AOAg) are imbalanced. Across all experiments, both FSS and AOAg improved after KD, with no true conflict cases (i.e., one increasing while the other decreased). However, the degree of improvement often differed substantially, creating scenarios where relying on a single component could overemphasize one aspect of knowledge retention at the expense of the other.

Table 6 illustrates representative examples of such imbalance. In classification tasks (e.g., CIFAR-100 SKD, Exp. 2; Oxford-IIIT Pet SKD, Exp. 12), AOAg exhibited much larger gains than FSS. Because classification places higher emphasis on predictive alignment, KRS, using the task-aware weight setting of (α, β) = (0.3, 0.7), tracked these AOAg-dominant improvements, yielding composite gains of +27.3 and +21.8, respectively. By contrast, in detection and segmentation tasks (e.g., COCO ART, Exp. 14; Oxford-IIIT Pet GLD, Exp. 26), FSS improved much more strongly than AOAg. With weights (0.7, 0.3), KRS reflected these FSS-dominant trends, yielding composite gains of +19.0 and +24.7, respectively. In some cases, such as COCO UET (Exp. 18), FSS exhibited extremely large improvements (+44 vs. +18 for AOAg), and KRS accordingly produced a strong but moderated gain of +36.2. Finally, in cases where both components improved modestly and more evenly (e.g., COCO FitNet, Exp. 13), KRS reflected the balanced contribution (+7.5).

This analysis shows that KRS behaves in a predictable and interpretable way, moderating imbalances between FSS and AOAg according to the task-aware weights. The predicted and observed ΔKRS values match exactly because of the linear sensitivity property, reinforcing the transparency of the formulation. More importantly, this moderation ensures that KRS provides a stable, single indicator that avoids misleading interpretations when improvements are disproportionately concentrated in one component. Thus, KRS complements the separate reporting of FSS and AOAg by integrating them into an interpretable composite score, directly addressing concerns regarding the added value of KRS beyond its components.
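The linear sensitivity property can be made concrete with a small sketch. It assumes the linear form ΔKRS = α·ΔFSS + β·ΔAOAg that follows from a weighted-sum definition of KRS; the function name `delta_krs` is illustrative.

```python
def delta_krs(d_fss, d_aoag, alpha, beta):
    """Predicted KRS change and per-component contributions, assuming linearity."""
    fss_part = alpha * d_fss
    aoag_part = beta * d_aoag
    return fss_part + aoag_part, fss_part, aoag_part


# Component gains quoted in the text for COCO UET (Exp. 18): +44 FSS, +18 AOAg.
total, from_fss, from_aoag = delta_krs(44, 18, alpha=0.7, beta=0.3)
print(round(total, 1), round(from_fss, 1), round(from_aoag, 1))  # 36.2 30.8 5.4
```

Splitting the gain this way shows how much of the +36.2 comes from feature similarity (30.8) and how much from output agreement (5.4).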

4.2.5. Architectural Generalization

Architectural generalization assesses how consistently a KD method performs across varying teacher–student network combinations. In the context of this study, it also serves as a validation mechanism for the KRS—determining whether KRS remains a reliable metric when applied to diverse model architectures.

Our findings reveal that high-performing KD methods such as UET, GLD, and ART yield substantial improvements in KRS across a wide range of architectural pairings. These include deep-to-shallow (e.g., ResNet-101 to ResNet-18), resource-constrained (e.g., EfficientNet-B7 to EfficientNet-Lite), and structurally different networks (e.g., VGG-19 to AlexNet). The consistency in KRS improvements across these combinations confirms its adaptability and reliability, regardless of the architectural design.

Furthermore, methods like Vanilla KD and FitNet, which are more sensitive to teacher–student alignment, demonstrated lower KRS gains especially in less compatible pairs—reinforcing that KRS can also capture limitations in knowledge transfer. This sensitivity strengthens the argument that KRS effectively reflects the internal learning dynamics of the student model beyond surface-level metrics like accuracy or mAP.

In summary, the alignment between KRS trends and architectural variations supports KRS not only as a reliable performance indicator but also as a metric with demonstrated applicability across heterogeneous model configurations. It provides nuanced insight into the effectiveness of KD methods in real-world applications where model structures are rarely standardized.

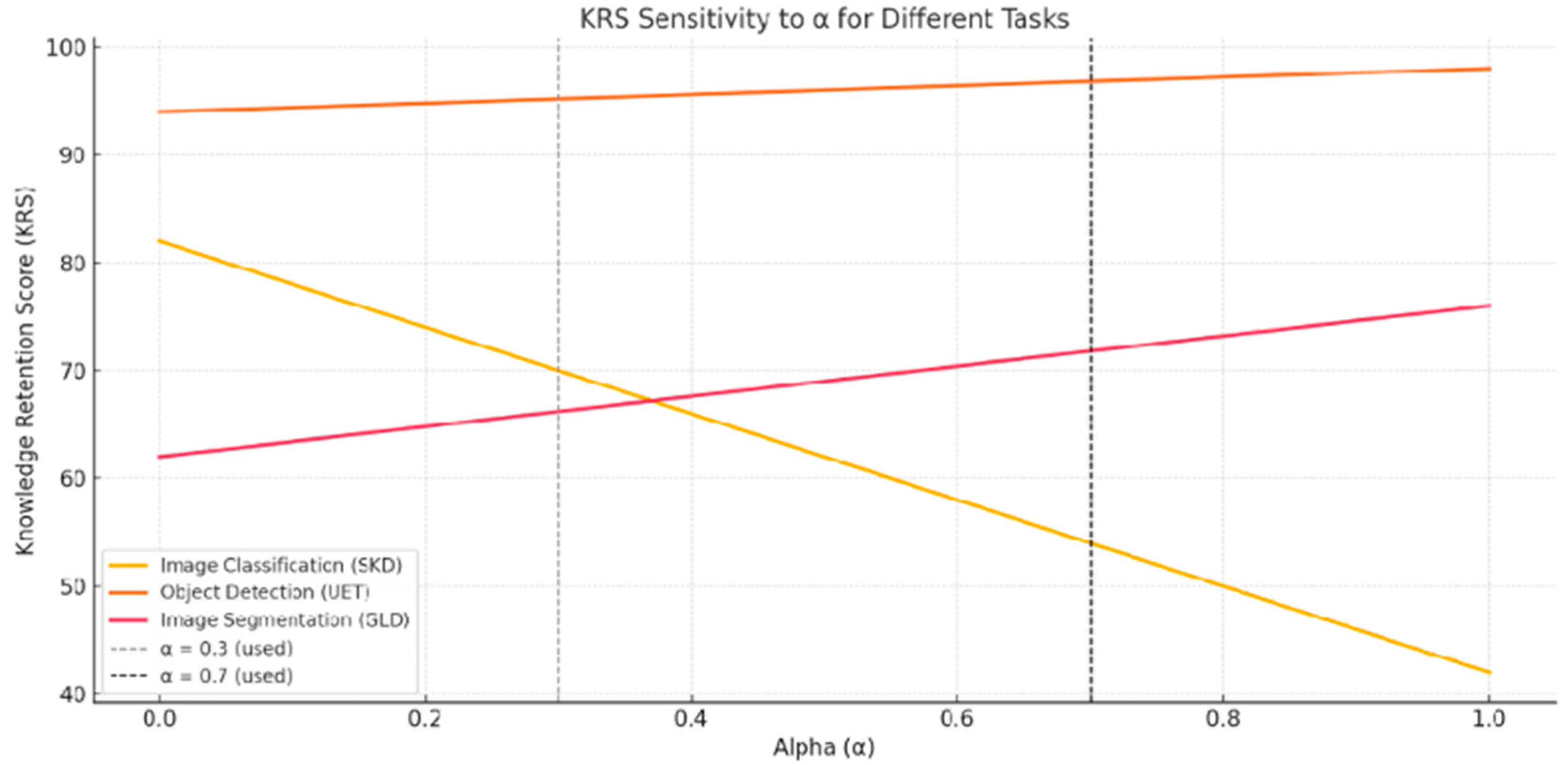

4.2.6. Sensitivity Analysis of KRS to α and β

To assess the impact of the weighting parameters α and β on the KRS, we conducted a sensitivity analysis by systematically varying α from 0 to 1 (with β = 1 − α) in increments of 0.01. The results for three representative tasks are illustrated in Figure 8: image classification (Oxford-IIIT Pet, ResNet-101/ResNet-18 using SKD), object detection (PASCAL VOC, EfficientNet-B7/EfficientNet-Lite using UET), and image segmentation (Oxford-IIIT Pet, EfficientNet-B7/EfficientNet-Lite using GLD).

These specific teacher–student experiment pairs were selected because they yielded the highest performance in conventional task metrics (e.g., accuracy, mAP, mIoU) after knowledge distillation. Using top-performing pairs ensures that the observed trends in KRS reflect successful knowledge transfer scenarios and are not confounded by poorly trained student models.

The graph reveals distinct trends. For image classification, the KRS values are highest when α is near zero, indicating that the output agreement component contributes most significantly to knowledge retention in classification tasks. In contrast, both object detection and image segmentation show increasing KRS values as α increases, with peak scores occurring at α = 1. This suggests that feature similarity plays a more substantial role in measuring knowledge transfer for spatially complex tasks such as detection and segmentation.

The vertical dashed lines at α = 0.3 and α = 0.7 in the figure mark the specific weightings used in our main experiments. These values were selected based on a balance between task characteristics and interpretability: α = 0.3 emphasizes output alignment for classification tasks, while α = 0.7 emphasizes feature similarity for detection and segmentation tasks. Although these settings do not always correspond to the global maxima of KRS for each task, they demonstrate stable and reasonably high scores, supporting the robustness of our design.

This sensitivity analysis validates the rationale for adopting a composite metric. While individual components such as feature similarity or output agreement may dominate in specific task types, KRS offers a tunable and unified framework that maintains consistency across diverse applications. The ability to flexibly assign weights to each component ensures that KRS remains a reliable and interpretable measure of knowledge retention regardless of the underlying task.
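A sweep of this kind can be sketched as follows, assuming the linear form KRS = α·FSS + (1 − α)·AOAg. The function name `sweep_alpha` is illustrative. Because the component values for the pairs plotted in Figure 8 are not listed in the text, the example uses the post-SKD components quoted for ResNet-18 on CIFAR-100 instead.

```python
def sweep_alpha(fss, aoag, steps=100):
    """Return (alpha, KRS) pairs for alpha = 0, 1/steps, ..., 1 with beta = 1 - alpha."""
    curve = []
    for k in range(steps + 1):
        alpha = k / steps
        curve.append((alpha, alpha * fss + (1 - alpha) * aoag))
    return curve


# Post-SKD components quoted for ResNet-18 on CIFAR-100 (FSS = 39, AOAg = 83).
curve = sweep_alpha(fss=39, aoag=83)
best_alpha, best_krs = max(curve, key=lambda point: point[1])
print(best_alpha, best_krs)  # 0.0 83.0
```

Under this linear form the curve is a straight line from AOAg (at α = 0) to FSS (at α = 1), so the maximum always falls at an endpoint. This is consistent with the peaks described above: near α = 0 when output agreement is the larger component, and at α = 1 when feature similarity is larger.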

4.2.7. Generalization to Transformer Architectures

To evaluate the generalizability of the KRS across architectural paradigms, we conducted an experiment using a transformer-based teacher–student pair: ViT-B/16 and DeiT-S on the CIFAR-100 classification task. As shown in Table 7, the DeiT-S student achieved a KRS of 45.9 before knowledge distillation, which improved to 62.3 after applying Vanilla KD. This 16.4-point increase was driven by a substantial rise in AOAg, from 54% to 74%, and a moderate gain in FSS, from 27% to 35%. These improvements suggest that KRS remains sensitive and interpretable even in transformer-based architectures, where internal representation alignment and output mimicry differ fundamentally from those in CNNs.

Interestingly, the same teacher–student pair exhibited minimal gain under SKD, with KRS improving only from 45.9 to 48.3. This modest gain can be explained by the SKD design, which discards soft labels and focuses instead on intra-class compactness and inter-class separation. However, transformers like DeiT-S, which rely heavily on attention-based mechanisms rather than hierarchical spatial encoding, benefit significantly from soft-target supervision to refine their decision boundaries. Without access to the teacher’s softened logits, SKD fails to guide the student toward smoother class probability distributions, resulting in limited improvements in AOAg and KRS. Moreover, SKD’s feature supervision may not align well with transformer attention maps, which differ structurally from convolutional feature hierarchies, further limiting gains in FSS.

These results affirm that KRS effectively generalizes across architectures and reflects model-specific dynamics. While CNN-based students like ResNet-18 benefit more from feature-level alignment under SKD, transformer-based students like DeiT-S achieve greater retention when soft target guidance is preserved, as in Vanilla KD. This finding reinforces KRS as a reliable metric for evaluating knowledge retention regardless of architectural class, provided that the KD method is compatible with the inductive biases of the model.

4.2.8. Comparative Evaluation of KRS Against Baseline Retention Metric

To evaluate the effectiveness of the proposed KRS, we compared it against its constituent components, FSS and AOAg, across 36 KD experiments involving diverse tasks, teacher–student architectures, and distillation strategies. Although KRS is computed from FSS and AOAg, this comparison is essential to determine whether the composite formulation offers practical advantages in interpretability, generalizability, and correlation with actual student improvement.

As visualized in the heatmap (Figure 9), KRS captures retention behavior in a manner that balances the strengths and biases of FSS and AOAg. For example, in ResNet-18 trained using SKD, FSS and AOAg gained 7 and 36 points, respectively, and KRS rose by 27.3 points, neither exaggerating the high AOAg gain nor neglecting the FSS gain. In another case, VGG-19 distilled to AlexNet under SKD showed an AOAg increase of 24 points with minimal FSS gain (1 point), but KRS moderated this with a more proportionate 17.1-point improvement. Such moderation prevents misleading interpretations that could occur when relying solely on a single retention axis. The heatmap also reveals that in many cases where only one component shows significant change, KRS reflects a tempered yet informative summary of the actual retention behavior, highlighting its robustness across architectural and task diversity.

While alternative retention metrics such as Flow of Solution Procedure (FSP), Activation Flow Similarity (AFS), and Attention Similarity offer value in certain constrained contexts, their broader applicability remains limited. FSP, for instance, assumes architectural compatibility and requires matched intermediate feature maps, making it unsuitable for heterogeneous teacher–student pairs. AFS and attention-based metrics similarly rely on architectural features like explicit attention modules or transformer blocks, which are not available in many convolutional or lightweight models. These metrics are also primarily designed for image classification and lack extensibility to spatial tasks like object detection and segmentation. Moreover, publicly available implementations of these metrics are limited and often task-specific, hindering reproducibility and integration into broad KD pipelines.

KRS addresses these limitations by offering an interpretable framework for quantifying knowledge retention that is applicable across multiple task types and adaptable to different model architectures. It provides a holistic measure that aligns well with actual student performance trends across KD methods, as supported by the experimental patterns in Figure 9. These findings affirm KRS as a scalable and scientifically grounded metric for evaluating the effectiveness of knowledge transfer in deep neural networks.

4.2.9. Statistical Significance Analysis

To further validate the effectiveness of the proposed KRS, a formal statistical analysis was conducted across 36 knowledge distillation experiments, spanning classification, detection, and segmentation tasks. The goal was to assess whether the observed improvements in KRS after distillation were statistically meaningful and aligned with performance gains in conventional metrics such as accuracy, mAP, and IoU.

Paired t-tests were applied to compare pre- and post-distillation KRS values within each task group. The results indicated statistically significant improvements in all three categories: classification (p = ), detection (p = ), and segmentation (p = ), each well below the conventional alpha threshold of 0.05. These findings suggest that KD methods consistently lead to a measurable increase in knowledge retention as captured by KRS.

To quantify the variability in KRS improvements, 95% confidence intervals were computed for each task type. For classification, the average post-KRS was 54.76 (CI: [49.60, 59.92]), an increase from a pre-KRS mean of 38.28. Similarly, detection tasks showed an increase from 44.58 (CI: [37.54, 51.62]) to 66.92 (CI: [57.99, 75.84]), while segmentation increased from 42.13 (CI: [38.24, 46.03]) to 55.70 (CI: [50.06, 61.34]). These intervals reinforce the conclusion that the gains observed in KRS are not due to random variation but reflect consistent improvements attributable to distillation.
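A paired comparison of this kind can be sketched as follows. The sketch computes the paired t statistic and a confidence interval for the mean post-minus-pre difference, which is a related but different quantity from the per-group mean intervals reported above. The function name `paired_summary` and the sample values are hypothetical, and the critical value is passed in by hand rather than taken from a statistics library.

```python
import math
import statistics


def paired_summary(pre, post, t_crit):
    """Paired t statistic and confidence interval for the mean post - pre difference."""
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(n)  # uses the sample standard deviation
    t_stat = mean_diff / std_err
    half_width = t_crit * std_err
    return t_stat, (mean_diff - half_width, mean_diff + half_width)


# Hypothetical KRS values for four experiments, NOT the paper's data.
pre_krs = [40.0, 35.0, 45.0, 38.0]
post_krs = [55.0, 47.0, 62.0, 50.0]
# Two-sided 95% critical value of Student's t for n - 1 = 3 degrees of freedom.
t_stat, ci = paired_summary(pre_krs, post_krs, t_crit=3.182)
```

With 12 experiments per task group, as in this study, the corresponding two-sided 95% critical value for 11 degrees of freedom is approximately 2.201.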

Figure 10 presents a box plot comparing the distribution of KRS values before and after distillation across the three task categories. The visual representation complements the statistical tests by highlighting the shift in median and interquartile ranges, offering an intuitive summary of KRS behavior across tasks.

Overall, these results confirm that KRS captures statistically significant improvements in knowledge retention across multiple KD strategies and task types, further validating its role as a reliable and interpretable metric in knowledge distillation evaluation.

4.2.10. Qualitative Analysis of Knowledge Retention Behavior

While earlier sections presented quantitative evaluations of KRS, a deeper understanding of knowledge retention benefits from qualitative analysis. This section offers visualizations that illustrate behavioral differences between student and teacher models before and after distillation, highlighting both representational and output-level alignment.

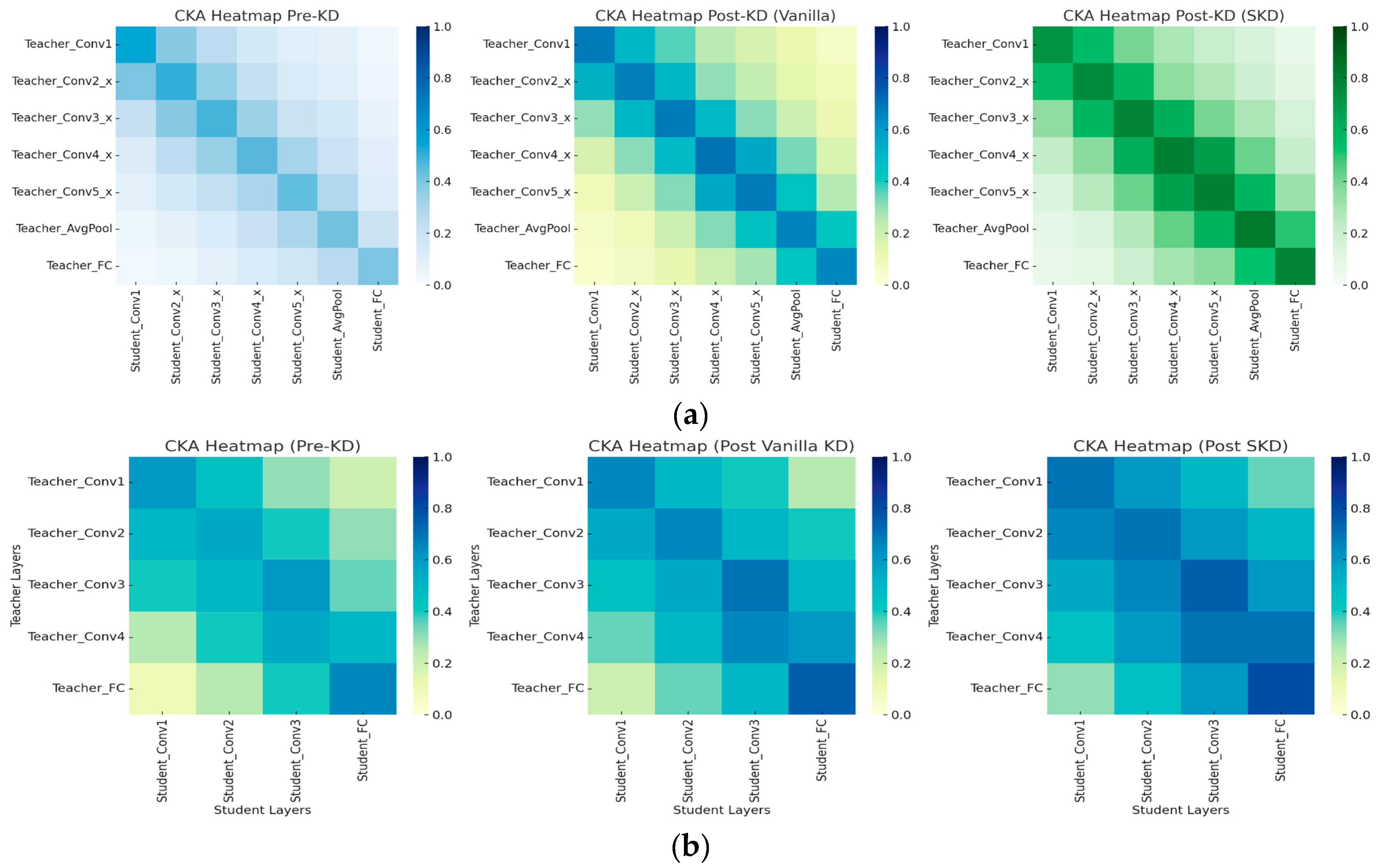

We focus on two representative examples using Cross-layer Centered Kernel Alignment (CKA) heatmaps and CIFAR-100 output comparisons. These are contextualized with corresponding KRS values to show how the metric captures meaningful improvements in both internal feature alignment and predictive consistency.

Figure 11 shows CKA heatmaps for ResNet-101 → ResNet-18 and VGG-19 → AlexNet, before KD, after Vanilla KD, and after SKD. In the ResNet pair, pre-KD similarity is low across most convolutional layers. Vanilla KD improves alignment in intermediate and deeper layers, while SKD further strengthens it, particularly in Conv4_x and Conv5_x. These trends align with KRS values rising from 42.5 (pre-KD) to 57.7 (Vanilla KD) and 69.8 (SKD).

In the VGG to AlexNet case, initial alignment is minimal. Vanilla KD modestly improves mid-level features, while SKD enhances similarity in fully connected layers. KRS follows this trend, increasing from 34.4 to 48.0 (Vanilla KD) and 51.5 (SKD).

These layer-wise visualizations confirm that KRS reflects tangible improvements in representational mimicry. Thus, KRS serves not only as a scalar score but also as an interpretable, diagnostic tool for understanding the depth and fidelity of knowledge transfer during KD.
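Each cell of such a heatmap compares one teacher layer with one student layer. The sketch below shows one common way to compute a single cell, linear CKA on activation matrices whose rows are samples. It assumes the heatmaps use this linear variant, which the text does not specify, and the function names and toy activations are illustrative.

```python
import math


def _centered_gram(features):
    """Linear Gram matrix K = X X^T, double-centered (H K H)."""
    n = len(features)
    gram = [[sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
            for i in range(n)]
    row_means = [sum(row) / n for row in gram]
    total_mean = sum(row_means) / n
    # The Gram matrix is symmetric, so column means equal row means.
    return [[gram[i][j] - row_means[i] - row_means[j] + total_mean for j in range(n)]
            for i in range(n)]


def _frobenius_inner(a, b):
    """Sum of elementwise products of two equally sized matrices."""
    return sum(x * y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def linear_cka(feats_x, feats_y):
    """Linear CKA between two activation matrices with the same number of rows."""
    kx = _centered_gram(feats_x)
    ky = _centered_gram(feats_y)
    hsic_xy = _frobenius_inner(kx, ky)
    return hsic_xy / math.sqrt(_frobenius_inner(kx, kx) * _frobenius_inner(ky, ky))


# Toy activations: the "student" is the "teacher" rotated by 90 degrees and scaled by 2.
teacher = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.5]]
student = [[0.0, 2.0], [-2.0, 0.0], [-2.0, 2.0], [-1.0, 4.0]]
print(round(linear_cka(teacher, student), 6))  # 1.0
```

Linear CKA is invariant to rotation and uniform scaling of the features, so the toy pair scores 1.0. The two layers may also have different widths, since only the sample-by-sample Gram matrices are compared.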

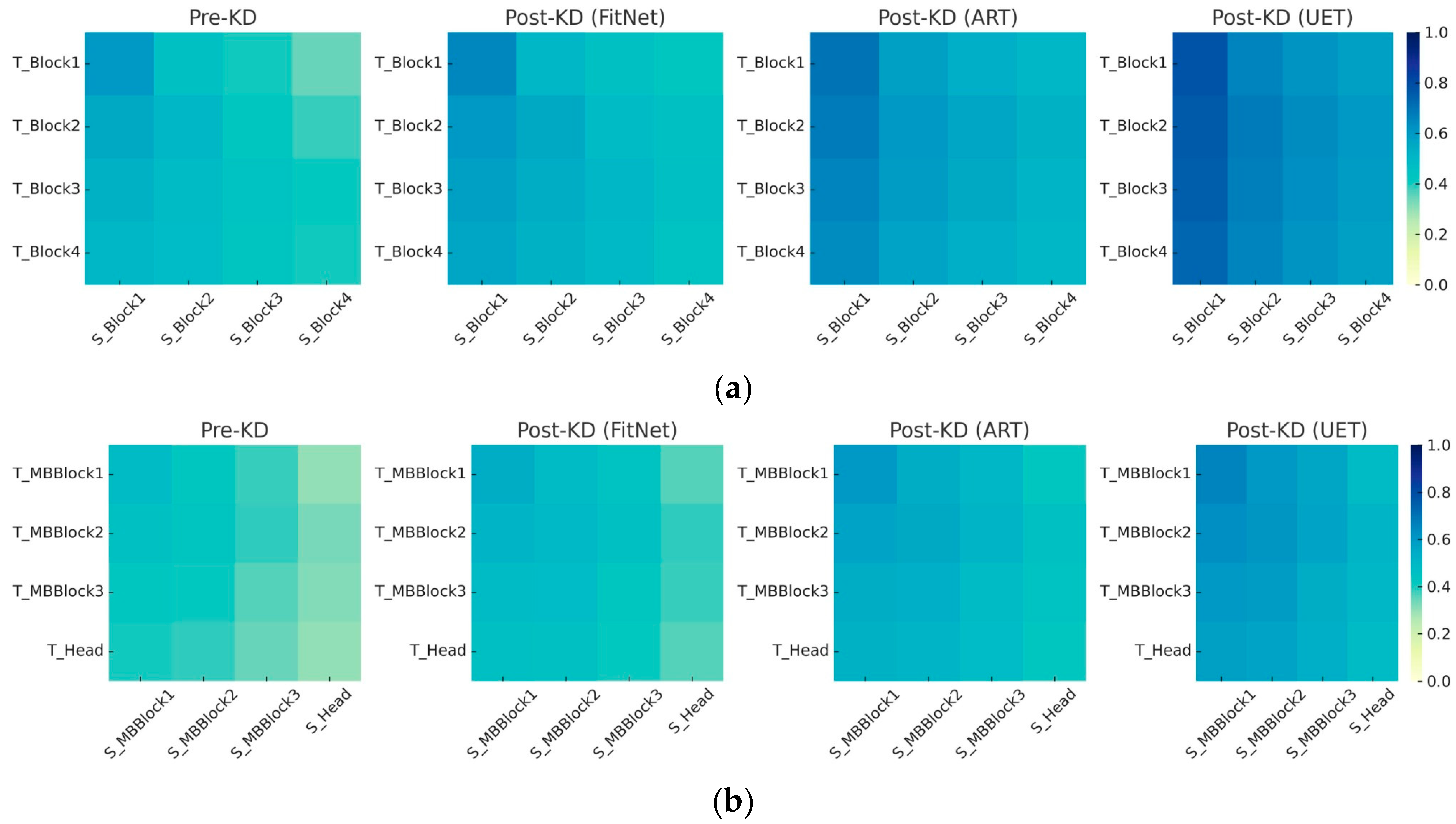

To further illustrate how KRS reflects internal knowledge retention, we present cross-layer CKA heatmaps for two object detection teacher–student pairs: WRN-40-2 → WRN-16-1 and EfficientNet-B7 → EfficientNet-Lite. These visualizations qualitatively show how different KD methods influence internal representation alignment.

In the WRN pair (Figure 12a), pre-KD alignment is weak, especially in deeper residual blocks. FitNet shows modest improvement; ART strengthens alignment across all blocks; and UET achieves the most consistent and substantial gains. These patterns align with the respective KRS values (UET > ART > FitNet), showing that KRS captures both output-level and feature-level learning.

A similar pattern is seen in the EfficientNet pair (Figure 12b). Initial alignment between MBConv blocks and head layers is low to moderate. FitNet slightly improves block-level similarity, ART enhances it further, and UET again shows the most comprehensive alignment. The corresponding KRS gains mirror these visual changes, confirming that KRS tracks improvements in internal representation fidelity, not just prediction accuracy.

Together, these examples show that KRS offers a more complete view of knowledge transfer than performance metrics alone. The heatmaps act as visual validation of KRS and support its role as a diagnostic tool for evaluating distillation quality across diverse architectures.

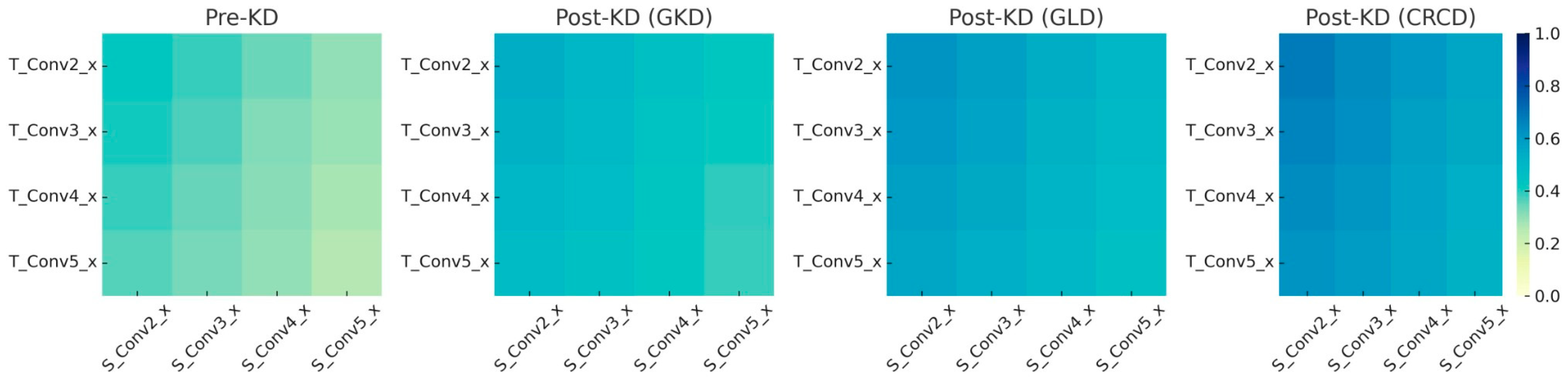

To complete the qualitative analysis across vision tasks, we examine feature alignment in semantic segmentation using the ResNet-101 → ResNet-18 pair.

Figure 13 presents CKA heatmaps before and after applying GKD, GLD, and CRCD—three relation-based KD methods.

The pre-KD heatmap shows low similarity, especially in deeper layers (Conv4_x and Conv5_x), highlighting the student’s limited ability to capture the teacher’s spatial representations despite architectural similarity. GKD brings modest improvements in Conv3_x and Conv4_x, while GLD enhances alignment more broadly. CRCD achieves the most uniform and intense alignment across all stages, reflecting a stronger spatial knowledge transfer.

These trends mirror the previously reported KRS values, where CRCD outperforms GLD and GKD in segmentation. The visual progression confirms that higher KRS correlates with deeper feature-level mimicry. In sum, this visualization affirms that KRS effectively captures structural alignment in dense prediction tasks, providing insight beyond output-level performance. It strengthens the case for KRS as a robust metric for evaluating internal knowledge retention in semantic segmentation.

To highlight KRS’s interpretability at the case level, we analyze a sample from CIFAR-100 with ground truth “Cat.” Table 8 presents the top-1 prediction, top-3 output distribution, and KRS components (FSS, AOAg) across teacher and student models before and after KD. Before distillation, the student (ResNet-18) misclassifies the image as “Dog” (43.6%), while the teacher (ResNet-101) correctly predicts “Cat” (92.1%). The student’s confused output order (Dog, Cat, Deer) leads to low AOAg (47) and FSS (32), resulting in KRS = 42.5. After Vanilla KD, the student correctly predicts “Cat” (67.4%). Though some divergence remains (e.g., presence of “Fox”), both AOAg (67) and FSS (36) improve, raising KRS to 57.7. With SKD, the student confidently predicts “Cat” (81.5%), matching the teacher’s top-3 predictions (Cat, Dog, Tiger). This yields the highest metrics: AOAg = 83, FSS = 39, and KRS = 69.8.
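The three KRS values in this case study can be checked by hand. The sketch below assumes KRS is the weighted sum α·FSS + β·AOAg and uses α = 0.3, β = 0.7. These weights are inferred from the reported numbers, which they reproduce exactly; they are not stated in this passage.

```python
def krs(fss, aoag, alpha, beta):
    """Weighted combination of feature similarity and output agreement."""
    return alpha * fss + beta * aoag


# Assumed weights: chosen because they reproduce the reported scores.
ALPHA, BETA = 0.3, 0.7

# (FSS, AOAg, reported KRS) for each stage of the "Cat" example.
cases = {
    "pre-KD": (32, 47, 42.5),
    "Vanilla KD": (36, 67, 57.7),
    "SKD": (39, 83, 69.8),
}

for name, (fss, aoag, reported) in cases.items():
    computed = krs(fss, aoag, ALPHA, BETA)
    print(f"{name}: computed {computed:.1f}, reported {reported}")
```

With equal weights (α = β = 0.5) the same components would give 39.5, 51.5, and 61.0, which do not match the reported values. The vision experiments therefore appear to weight output agreement more heavily than the NLP experiments do.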

This case shows that KRS captures both behavioral and representational improvements, validating it as a dual-perspective metric for evaluating knowledge transfer—not just accuracy gains but also internal feature alignment.

4.2.11. Relevance of KRS to Mobile and Edge Deployment

Modern AI applications increasingly rely on deploying compact student models on mobile and edge devices, where hardware resources are constrained, and on-device decision reliability is critical. In such scenarios, performance monitoring tools must be both lightweight and informative.

The Knowledge Retention Score (KRS) provides an interpretable metric to assess whether a compact student model faithfully retains the knowledge of its larger teacher across different task settings, even after significant compression. This is particularly important in edge AI pipelines where full accuracy metrics may not always be available post-deployment (e.g., no ground truth in real-time inference). KRS, as an internal alignment measure, can serve as a proxy to flag potential degradation in model performance. Moreover, our experiments involve student models that reflect realistic deployment targets (ResNet-18, AlexNet, WRN-16-1, and EfficientNet-Lite), all of which are widely used in mobile AI. The consistent correlation of KRS with standard performance metrics (accuracy, mAP, IoU) across these models affirms that KRS remains stable and informative even under extreme compression. Finally, because KRS can be computed without access to ground truth (only teacher–student predictions and features are required), it is feasible to implement in privacy-preserving or low-connectivity environments. This makes it suitable for edge-based monitoring, model selection, and online re-training scenarios in mobile applications.

4.2.12. Evaluating KRS in Natural Language Processing Tasks

To evaluate the generalizability of the KRS beyond computer vision, we extended its application to Natural Language Processing (NLP) tasks. This supports our objective of positioning KRS as an evaluation metric for knowledge distillation that is applicable across different task types and responsive to variations in model architecture. Although originally formulated and validated within vision-based student–teacher frameworks, the two components of KRS, Feature Similarity Score (FSS) and Average Output Agreement (AOAg), are equally applicable in NLP, where intermediate representations and output distributions are integral to effective model compression. To validate this cross-domain applicability, we selected three representative KD approaches in NLP that vary in complexity and distillation granularity: DistilBERT, Patient Knowledge Distillation (PKD), and TinyBERT. DistilBERT represents a vanilla KD strategy, where the student learns only from the soft target logits of a BERT-base teacher [37]. PKD offers a more nuanced approach by transferring knowledge from selected intermediate layers of the teacher to the student [38]. In contrast, TinyBERT implements a comprehensive, multi-objective KD framework involving the alignment of embeddings, hidden states, attention matrices, and output logits [39]. Together, these three methods span a wide range of KD strategies, enabling a robust evaluation of KRS across diverse distillation scenarios.

Experiments were conducted on the SST-2 dataset from the GLUE benchmark, a binary sentiment classification task. In all setups, BERT-base served as the teacher model. The student models—DistilBERT, PKD-based student, and TinyBERT—were trained and fine-tuned using PyTorch 2.4.0 and the HuggingFace Transformers library, with a consistent learning rate of , batch size of 32, and three training epochs. Evaluation was carried out on the SST-2 validation set. To compute KRS, we extracted the hidden states from the final transformer layer to calculate FSS, and the softmax outputs to compute AOAg. Both scores were normalized to the [0, 1] range prior to applying the KRS formula. A sensitivity analysis was also performed by varying the alpha (FSS weight) and beta (AOAg weight) parameters from 0 to 1 in increments of 0.01 to examine the adaptability and robustness of KRS in NLP settings. All experiments were executed on an NVIDIA RTX 3070 GPU to ensure consistent computational conditions.

Table 9 presents the performance of three student models—DistilBERT, TinyBERT, and PKD—each configured with six transformer layers and evaluated on the SST-2 sentiment classification task. The table includes validation accuracy, FSS, AOAg, and the resulting KRS for both pre- and post-KD settings, with BERT-base serving as the teacher model for reference.

To compute KRS before applying KD, each student model was fine-tuned independently using only hard labels and no teacher guidance. After training, FSS was calculated via cosine similarity between the final hidden states of the teacher and student, while AOAg was derived from a KL divergence-based similarity of their softmax outputs. Both components were normalized to the [0, 1] range and combined to yield the pre-KD KRS. These values establish a baseline for evaluating how much alignment was gained through the distillation process.
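The computation described above can be sketched as follows. The text does not specify how each score is mapped to [0, 1], so this sketch makes two assumptions: cosine similarity is rescaled with (cos + 1) / 2, and the KL divergence is converted to a similarity with exp(−KL). It also assumes teacher and student hidden states share the same dimensionality. All function names are illustrative.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equally sized vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def fss(teacher_states, student_states):
    """Mean cosine similarity over examples, rescaled from [-1, 1] to [0, 1] (assumed mapping)."""
    sims = [cosine_similarity(t, s) for t, s in zip(teacher_states, student_states)]
    return (sum(sims) / len(sims) + 1.0) / 2.0


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions; eps guards against log(0)."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def aoag(teacher_probs, student_probs):
    """Mean of exp(-KL(teacher || student)), an assumed similarity that equals 1 when outputs match."""
    sims = [math.exp(-kl_divergence(t, s)) for t, s in zip(teacher_probs, student_probs)]
    return sum(sims) / len(sims)


def krs(fss_value, aoag_value, alpha=0.5, beta=0.5):
    """Weighted combination of the two normalized components."""
    return alpha * fss_value + beta * aoag_value
```

Neither component needs ground-truth labels, since both compare the student only against the teacher.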

All three student models showed substantial improvements in both accuracy and KRS after KD was applied. DistilBERT improved from 89.31% to 92.27% in accuracy, with a corresponding KRS increase from 0.41 to 0.68. TinyBERT and PKD exhibited similar gains, with TinyBERT improving from 85.78% to 92.88% in accuracy and PKD reaching 93.12%, both accompanied by significant rises in FSS and AOAg. These trends indicate that KD effectively enhances both internal representations and output behavior, and that KRS is sensitive to these improvements.

In computing KRS, we chose α = 0.5 and β = 0.5 to assign equal importance to representational and output alignment. This balanced weighting supports fair comparison across models with differing KD objectives—such as DistilBERT’s output-only strategy versus TinyBERT’s multi-objective design. A full sensitivity analysis of α and β is provided in the succeeding section.

Taken together with the results from vision-based tasks, the NLP findings in this section reinforce the cross-domain applicability of KRS. Despite variation in KD strategies, architecture design, and task modality, KRS consistently reflected meaningful improvements in knowledge retention and closely tracked downstream performance. This consistency across both vision and language domains affirms KRS as a generalizable and reliable metric for evaluating the effectiveness of knowledge distillation.

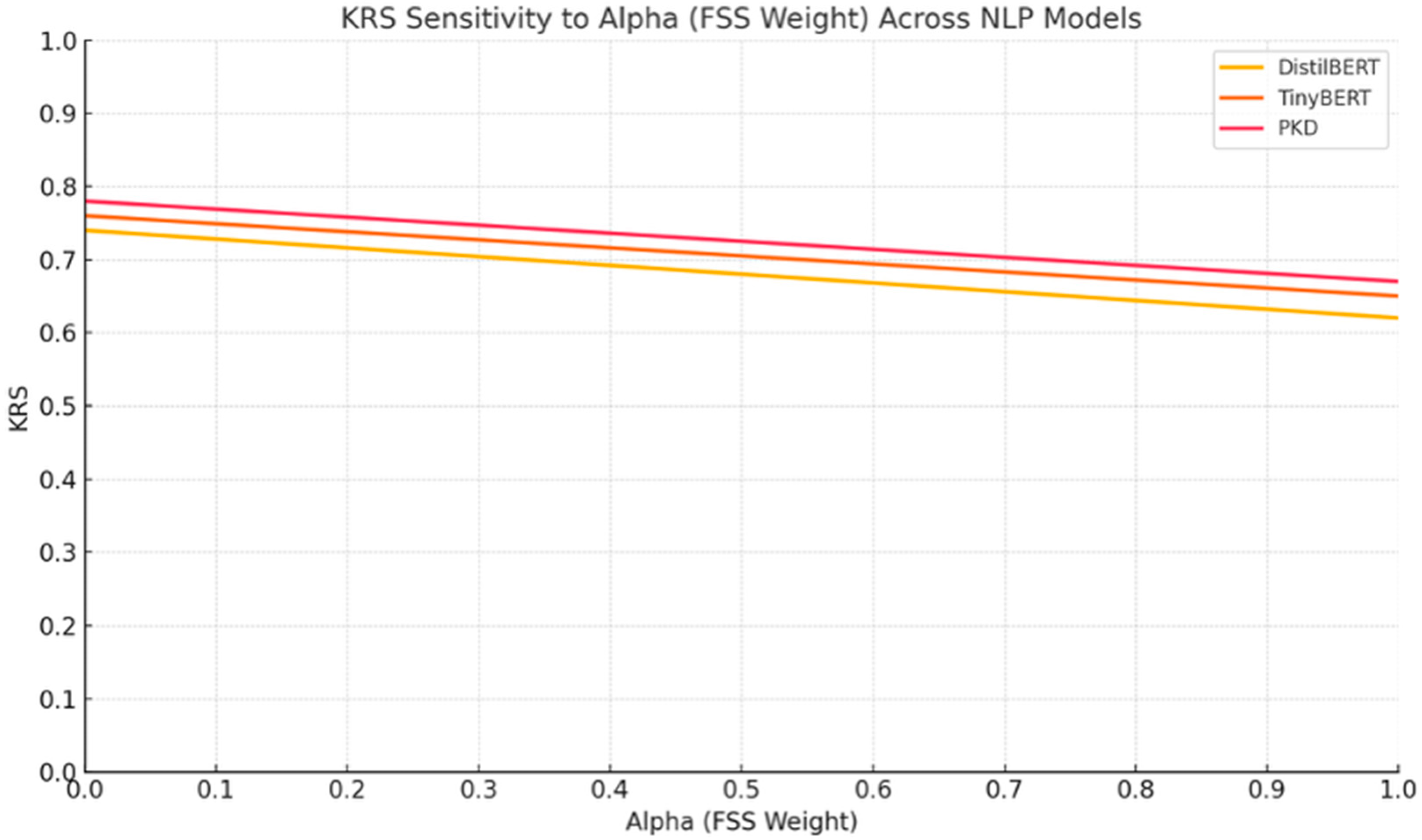

Sensitivity Analysis of α and β in NLP Knowledge Distillation

Figure 14 presents the sensitivity of the KRS to varying α values, which control the relative weight of the FSS in the KRS formula. As α increases from 0 to 1, all three models (DistilBERT, TinyBERT, and PKD) exhibit a smooth, linear decline in KRS. Because β = 1 − α, KRS is linear in α with slope FSS − AOAg, so a declining curve means that each model's AOAg is higher than its FSS. This indicates that while both FSS and AOAg contribute to knowledge retention, the AOAg component (weighted by β = 1 − α) tends to exert a slightly stronger influence in aligning student behavior with the teacher.

The stability of the curves across the entire α range demonstrates that KRS behaves predictably under different weighting schemes, with no abrupt changes or inconsistencies. This supports the robustness of KRS as a metric. Based on this observation, our use of α = 0.5 and β = 0.5 remains a justifiable and neutral choice. It ensures that both intermediate representation similarity and output agreement are considered, making the score applicable across distillation strategies with varying focus—whether output-only (DistilBERT), feature-based (PKD), or multi-objective (TinyBERT).

Overall, the sensitivity analysis reinforces that KRS is a stable and interpretable metric across a range of configurations and that the default setting of α = 0.5 offers a balanced view of knowledge retention.
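A sweep of this kind is straightforward to reproduce. The sketch below assumes KRS = α·FSS + (1 − α)·AOAg and steps α from 0 to 1 in increments of 0.01. The component scores passed in are placeholders for illustration, not values from the paper.

```python
def krs(fss, aoag, alpha):
    """KRS with beta tied to alpha through beta = 1 - alpha."""
    return alpha * fss + (1.0 - alpha) * aoag


def sweep(fss, aoag, steps=100):
    """Return (alpha, KRS) pairs for alpha = 0, 1/steps, ..., 1."""
    return [(i / steps, krs(fss, aoag, i / steps)) for i in range(steps + 1)]


# Placeholder component scores (hypothetical, for illustration only).
curve = sweep(fss=0.60, aoag=0.80)

# The curve is a straight line from AOAg (alpha = 0) to FSS (alpha = 1).
slope = curve[-1][1] - curve[0][1]
print(f"KRS at alpha=0: {curve[0][1]:.2f}, at alpha=1: {curve[-1][1]:.2f}, slope: {slope:.2f}")
```

Since the score is a convex combination of two fixed numbers, the curve cannot change abruptly. Its direction depends only on which component is larger.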

Beyond Accuracy: Evaluating Knowledge Retention Through KRS

While validation accuracy is commonly used to evaluate the effectiveness of knowledge distillation, it provides only a surface-level measure of student performance. Our findings demonstrate that KRS offers a more nuanced perspective by simultaneously capturing both representational and output-level alignment with the teacher. For instance, although TinyBERT and DistilBERT achieved similar post-KD accuracies on SST-2 (92.88% vs. 92.27%, respectively), their KRSs revealed a wider disparity (0.71 vs. 0.68), indicating that TinyBERT retained teacher knowledge more effectively. In contrast, models with comparable accuracy but lower KRS likely relied more on task-specific learning rather than generalized knowledge transfer. This reinforces the value of KRS as a complementary metric that can distinguish between surface-level accuracy and deeper knowledge fidelity.

4.2.13. Extending KRS to Time Series Regression

To explore the broader applicability of KRS, we propose its extension to time series regression tasks. In this setting, student models are trained to mimic the behavior of a more complex teacher model when predicting continuous-valued sequences. Since classification-based similarity metrics like softmax KL divergence are no longer applicable, we reformulate the Average Output Agreement (AOAg) component to suit the regression context.

Specifically, AOAg is redefined as the complement of the normalized mean squared error (MSE) between the teacher and student outputs, ensuring that higher agreement corresponds to lower prediction error. Given a sequence of outputs from the teacher $T = \{t_1, t_2, \ldots, t_n\}$ and the student $S = \{s_1, s_2, \ldots, s_n\}$, we compute:

$$\mathrm{AOAg} = 1 - \frac{\mathrm{MSE}(T, S)}{\max(\mathrm{MSE})}, \qquad \mathrm{MSE}(T, S) = \frac{1}{n}\sum_{i=1}^{n}\left(t_i - s_i\right)^2,$$

where max(MSE) is determined based on the worst-case deviation observed during training. This normalization bounds AOAg between 0 and 1. Feature Similarity Score (FSS) remains unchanged and is computed as the cosine similarity between latent representations of the student and teacher networks at selected layers.
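A minimal sketch of the regression variant, assuming AOAg = 1 − MSE(T, S) / max(MSE). The clipping step is an added safeguard, not something the text describes: it keeps the score in [0, 1] if a later evaluation error exceeds the worst case seen during training.

```python
def mse(teacher, student):
    """Mean squared error between two equally long output sequences."""
    return sum((t - s) ** 2 for t, s in zip(teacher, student)) / len(teacher)


def regression_aoag(teacher, student, max_mse):
    """Output agreement for regression: 1 minus the normalized MSE."""
    if max_mse <= 0:
        raise ValueError("max_mse must be positive")
    ratio = min(mse(teacher, student) / max_mse, 1.0)  # clip so the score stays in [0, 1]
    return 1.0 - ratio


# Teacher and student differ by 1.0 at every step, so MSE = 1.0.
teacher_out = [1.0, 2.0, 3.0]
student_out = [2.0, 3.0, 4.0]
print(regression_aoag(teacher_out, student_out, max_mse=4.0))
```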

The KRS formulation thus becomes directly compatible with time series tasks, providing a unified metric to assess knowledge retention across both classification and regression domains. This highlights the adaptability of KRS beyond NLP and CV, paving the way for its application in broader domains such as sensor analytics, financial forecasting, and health monitoring.

We evaluated the applicability of KRS in a time series regression setting by selecting a representative and practical task: electrical demand forecasting. For this experiment, we used the UCI Household Electric Power Consumption dataset, where the objective was to predict the next hour’s active power consumption based on the past 24 h of readings. To model the task, we adopted a two-tier architecture: a deeper LSTM network as the teacher, and a smaller, shallower LSTM as the student. The choice reflects typical constraints in real-world deployments where lightweight models are needed at the edge. We applied FitNet, as it allows the student to mimic the internal feature representations of the teacher, which is ideal for sequential data.
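The forecasting setup (24 past readings in, the next hour's value out) corresponds to a sliding-window framing of the series. The sketch below shows that framing, assuming the data have already been resampled to one value per hour. The preprocessing is not described in this section, and `make_windows` is an illustrative name.

```python
def make_windows(series, history=24, horizon=1):
    """Pair each `history`-step window with the value `horizon` steps after it."""
    inputs, targets = [], []
    for start in range(len(series) - history - horizon + 1):
        end = start + history
        inputs.append(series[start:end])          # past 24 hourly readings
        targets.append(series[end + horizon - 1])  # next hour's reading
    return inputs, targets


# Toy hourly series standing in for active power readings.
hourly = [float(h) for h in range(30)]
windows, next_values = make_windows(hourly)
print(len(windows), windows[0][:3], next_values[0])
```

Teacher and student would both be trained on the same windows, so that their outputs and hidden states can be compared example by example.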

The results in Table 10 highlight the performance of the student model before and after applying feature-based knowledge distillation (FKD) for a time series regression task. Notably, the student’s predictive performance improved across all metrics: the Mean Absolute Error (MAE) decreased from 0.213 to 0.167, Root Mean Square Error (RMSE) from 0.297 to 0.241, and R² increased from 0.802 to 0.872. These gains clearly indicate that the student model, guided by the teacher, learned a more accurate mapping from input to output.

Beyond predictive performance, the Knowledge Retention Score (KRS) provides a deeper view into how well the student internalized the teacher’s behavior. Post-KD, the Feature Similarity Score (FSS) rose from 0.43 to 0.68, indicating significantly better alignment in internal representations. Simultaneously, the Output Agreement (AOAg) improved from 0.35 to 0.70, reflecting a closer match in the shape and scale of the output sequences. As a composite of these factors, the KRS increased from 0.39 to 0.69.
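As a consistency check, the reported composite scores can be reproduced from their components. This assumes KRS is the weighted sum of FSS and AOAg with the equal weights α = β = 0.5 used in the NLP experiments; the weights for this regression experiment are not stated explicitly here.

```latex
\begin{align*}
\mathrm{KRS}_{\text{pre-KD}}  &= 0.5 \times 0.43 + 0.5 \times 0.35 = 0.39, \\
\mathrm{KRS}_{\text{post-KD}} &= 0.5 \times 0.68 + 0.5 \times 0.70 = 0.69.
\end{align*}
```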

This reinforces that KRS serves as a critical interpretability tool even in regression contexts. While conventional metrics like MAE and RMSE only assess output correctness, KRS reveals how much of the teacher’s learned knowledge structure the student has actually retained. This is especially valuable in time series forecasting, where model behavior over time (i.e., internal dynamics) can influence reliability, generalizability, and downstream integration with control systems. In this light, KRS acts as a sanity check, helping us trust not just the predictions but also the process behind them.

4.4. Using KRS as a Tuning Signal in Knowledge Distillation

Beyond post-training evaluation, the Knowledge Retention Score (KRS) serves as a practical diagnostic tool during the tuning of KD models. By combining Feature Similarity Score (FSS) and Average Output Agreement (AOAg), KRS provides insight into both internal representation alignment and output-level mimicry, enabling deeper monitoring of the student’s learning progress.

In vanilla KD, tuning the softmax temperature and the KD-to-hard loss weight influences AOAg. A higher temperature softens teacher outputs, improving the student’s ability to learn inter-class relationships, which is often reflected in increased AOAg and KRS. Emphasizing the KD loss in the loss function can also enhance alignment with the teacher’s output distribution.
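The two hyperparameters discussed here can be made concrete with the standard soft-target KD loss. This is a minimal sketch in plain Python rather than the training code used in the experiments. The default temperature of 4.0 and KD weight of 0.7 are illustrative values, not settings reported in the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; larger temperatures give flatter distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, temperature=4.0, kd_weight=0.7):
    """Weighted sum of the soft-target KL term and the hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on softened outputs, scaled by T^2 as is conventional.
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft *= temperature ** 2
    # Cross-entropy against the ground-truth label at temperature 1.
    hard = -math.log(softmax(student_logits)[label])
    return kd_weight * soft + (1.0 - kd_weight) * hard
```

Raising `temperature` spreads the teacher's probability mass over non-target classes, and raising `kd_weight` shifts the objective toward matching the teacher rather than the labels. Both are the levers the paragraph above associates with changes in AOAg.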

For feature-based KD methods (e.g., FitNet, CRCD, GLD), optimizing which intermediate layers to align significantly affects FSS. Higher FSS is observed when student and teacher share similar architectures, such as ResNet-101 and ResNet-18, and when alignment targets semantically rich layers. These refinements contribute to stronger knowledge retention, improving overall KRS.

Monitoring AOAg and FSS during training enables real-time strategy adjustments. A high AOAg but low FSS may suggest the need for feature-level supervision, while the reverse may point to issues in output behavior requiring adjustments in temperature or loss weighting.
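The rule of thumb above could be encoded as a small check run after each evaluation step. The sketch assumes both scores are normalized to [0, 1]. The 0.5 threshold and the "both low" branch are hypothetical additions for illustration, since the text gives no numeric cut-off.

```python
def diagnose(fss, aoag, threshold=0.5):
    """Map the two KRS components to a suggested tuning action (threshold is hypothetical)."""
    low_fss = fss < threshold
    low_aoag = aoag < threshold
    if low_fss and low_aoag:
        return "both low: revisit the overall KD setup"
    if low_fss:
        return "high AOAg, low FSS: add or strengthen feature-level supervision"
    if low_aoag:
        return "high FSS, low AOAg: adjust temperature or KD loss weight"
    return "both components above threshold: no adjustment suggested"


print(diagnose(fss=0.35, aoag=0.80))
```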

In sum, KRS is not just an evaluation metric—it is a dynamic, interpretable guide for hyperparameter tuning and method optimization in KD pipelines, helping to refine strategies to better match the student’s capacity and architecture.