Abstract

In post-disaster search and rescue (SAR) operations, unmanned aerial vehicles (UAVs) are essential tools, yet the large volume of raw visual data they produce often overwhelms human operators with isolated, context-free information. This paper presents a cognitive–agentic architecture, orchestrated by a large language model (LLM), that transforms the UAV from a data-gathering tool into a proactive reasoning partner. The core innovation lies in the LLM’s ability to perform high-level semantic reasoning, logical validation, and self-correction through internal feedback loops. A visual perception module based on a custom-trained YOLO11 model feeds the cognitive core, which performs contextual analysis and hazard assessment, enabling a complete perception–reasoning–action cycle. The system also incorporates a physical payload delivery module for first-aid supplies, which acts on prioritized, actionable recommendations to accelerate victim assistance. To the best of our knowledge, this is the first LLM-driven architecture of its kind, and it demonstrates a viable path toward reducing operator cognitive load in critical missions.

1. Introduction

The first hours and days following a disaster—whether an earthquake, flooding or another natural or man-made disaster—are critical for saving lives. This initial phase is characterized by chaos, destruction and compromised infrastructure, creating extreme risk conditions that significantly hinder the rapid deployment of search and rescue teams [1]. Reduced visibility, inaccessible terrain [2] and, most importantly, a lack of accurate information about the extent of the damage and the location of victims pose major obstacles to rescue operations. A prerequisite for crisis management is that critical decisions are largely based on the availability, accuracy, and timeliness of information provided to decision makers [3]. In these emergency situations, a fast response and the ability to obtain a reliable overview are crucial for maximizing the chances of survival for those who are trapped or missing.

In addition to physical obstacles, the deployment of human resources across multiple locations presents a logistical challenge that is often impossible to overcome [4], and the success of operations depends critically on the ability to overcome these logistical barriers [5]. In this context, unmanned aerial vehicles (UAVs) have emerged as an indispensable tool for conducting search and rescue missions in the early stages of disasters. Recent simulations have demonstrated the potential of drones, with over 90% of survivors located in less than 40 min in disaster scenarios [6]. Other studies have demonstrated similar success rates through multi-UAV collaboration [7], further enhancing the efficiency of these systems.

Although unmanned vehicles have transformed the way data is collected in disaster areas by generating large amounts of real-time video and sensory information, the volume of data can be overwhelming for human operators. This information overload is a well-known issue that can lead to high cognitive load, causing operators to overlook relevant information [8,9]. Although existing computer vision systems, such as those based on YOLO (You Only Look Once) [10], can detect people, they often provide information that is isolated and lacks semantic context or state assessment. The major challenge is that detecting an object (a person) is insufficient. Future systems must enable a semantic understanding of the situation to support decision-making processes [11]. The current reliance on raw information places a significant cognitive burden on rescue teams, consuming time and increasing the risk of errors in decision-making. Therefore, there is a need to move beyond simple data collection towards contextual understanding and intelligent analysis, which could significantly ease the work of intervention teams [12].

To overcome these limitations and take advantage of the convergence of these fields, we propose a cognitive–agentic architecture that will transform drones from mere observation tools into proactive, intelligent partners in search and rescue operations. Our work’s key contribution lies in its modular agentic approach, where specialized software agents manage perception, memory, reasoning and action separately.

At the heart of this architecture is the integration of a large language model (LLM) acting as an orchestrator agent—a feature that enables the system to perform logical validation and self-correction via feedback loops.

To enable this reasoning, we have developed an advanced visual perception module based on YOLO11, which has been trained on a composite dataset. This module can perform detailed semantic classification of victims’ status, going beyond simple detection. This approach is necessary because accurately assessing disaster scenarios requires the semantic interpretation of aerial images, beyond simple detection [13].

To complete the cognitive-to-physical workflow, we integrated a module for first-aid kit delivery, enabling the drone to perform direct interventions based on its analytical findings. Using drones to rapidly deliver first aid supplies to disaster-stricken areas where access is difficult can have a significant impact on survival rates [4].

The primary objective of this integrated system is to significantly reduce the cognitive load on human operators by delivering clear, contextualized, and actionable intelligence, thereby accelerating the entire rescue workflow. The adaptable nature of this framework also ensures its applicability to other fields, such as precision agriculture or environmental monitoring [14].

This paper is structured as follows: Section 2 presents an analysis of relevant works in the field of UAVs for disaster response and AI-based SAR systems. Section 3 describes the architecture and key components of the suggested cognitive approach, including the hardware used to collect data. Section 4 describes the implementation of the person detection module, including data acquisition, model training, and performance evaluation. Section 5 presents the algorithms used to map detected persons and assign them GPS (Global Positioning System) coordinates to facilitate rescue. Section 6 provides an in-depth description of each component within the cognitive–agentic system. Section 7 presents the results of the cognitive architecture’s feedback loop. Finally, Section 8 concludes the paper and presents future research directions.

2. Related Works

The field of unmanned aerial vehicle (UAV) use in SAR operations has evolved rapidly, progressing from basic remote sensing platforms to increasingly autonomous systems. To contextualize our contribution, our analysis of the literature is structured around three complementary perspectives. First, we examine specialized UAV systems for SAR sub-tasks. Second, we review cognitive architectures for situational awareness. Finally, we discuss what we consider the most important emerging paradigm: agentic robotic systems based on large language models (LLMs).

The work in [15] proposes an innovative method of locating victims in disaster areas by detecting mobile phones with depleted batteries. The system uses a UAV equipped with wireless power transfer technology to temporarily activate these devices and enable them to emit a signal. The drone then uses graph traversal and clustering algorithms to optimize its search path and locate the signal sources, thereby improving the efficiency of search operations in scenarios where visual methods are ineffective.

Article [16] addresses the issue of planning drone routes for search and rescue missions. The authors propose a genetic algorithm that aims to minimize total mission time while simultaneously considering two objectives: achieving complete coverage of the search area and maintaining stable communication with ground personnel. The innovation lies in evaluating flexible communication strategies (e.g., data mule, relay chains), which allow the system to dynamically adjust the priority between searching and communicating. Through simulations, the authors demonstrate a significant reduction in mission time.

In [17], the authors focus on the direct interaction between the victim and the rescue drone. The proposed system uses a YOLOv3-Tiny model to detect human presence in real time. Once a person has been detected, the system enters a gesture recognition phase, thus enabling effective non-verbal communication. The authors created a new dataset comprising rescue gestures (both body and hand), which enables the drone to initiate or cancel interaction and overcome the limitations of voice communication in noisy or distant environments.

Article [18] proposes a proactive surveillance system that uses UAVs. This system is designed to overcome the limitations of teleoperated systems by exhibiting human-like behavior. The main innovation lies in the use of two key components: Semantic Web technologies for the high-level description of objects and scenarios; and a Fuzzy Cognitive Map (FCM) model to provide cognitive capabilities for the accumulation of spatial knowledge and the discernment of critical situations. The system integrates data to develop situational awareness, enabling the drone to understand evolving scenes and make informed decisions rather than relying solely on simple detection.

A significant direction in the use of autonomous systems is the complete automation of complex real-world tasks, as exemplified by the work presented in [19], which proposes an Intelligent Hierarchical Cyber-Physical System (IHCPS) for beach waste management. Their architecture, named BeWastMan, is an advanced example of multi-robot collaboration, orchestrating an Unmanned Aerial Vehicle (UAV) for aerial inspection, a Ground Station (GS) for data processing, and an Unmanned Ground Vehicle (UGV) for autonomous waste collection and sorting. In this system, “intelligence” is distributed and task-oriented: the UAV uses onboard detection to adapt its speed, the ground station employs computer vision algorithms (YOLOv5) to detect and geolocate waste from a video stream, and the ground vehicle plans an optimal route to collect the identified items. While this system demonstrates an impressive automation of a complete physical workflow—from perception to action—it operates within a task-execution paradigm. In contrast, our work addresses a fundamentally different layer of intelligence. Instead of focusing on automating a physical task, our primary objective is to reduce the cognitive load on the human operator in unpredictable Search and Rescue (SAR) scenarios. Our LLM-orchestrated cognitive–agentic architecture does not merely execute predefined steps but performs higher-order semantic reasoning. It interprets context, assesses risks, validates logical consistency, and self-corrects, transforming the drone from a simple sensor into a proactive reasoning partner. Thus, while the work in [19] represents a state-of-the-art example of robotic automation for environmental tasks, our approach explores the frontier of human–robot cognitive collaboration, where the goal of AI is not to replace, but to augment human decision-making.

This fundamental challenge is framed by the theoretical distinction between Artificial Intelligence (AI) and Artificial Cognition (ACo), as proposed in [20]. The authors argue that traditional AI is essentially disembodied and focuses on impersonal knowledge, whereas ACo is an embodied, neuroscience-inspired approach that relies on experience and interaction to achieve a deeper understanding. Adopting the ACo framework, therefore, not only diagnoses the limitations of current systems but also provides a clear mandate for a paradigm shift toward architectures capable of embodied reasoning. It suggests that true collaborative autonomy requires an architecture capable of holistic reasoning and prospection—the mental simulation of actions—rather than relying on rigid, predefined models.

The reviewed literature highlights a clear trend toward specialized systems that solve critical sub-problems in SAR missions. However, these approaches reveal a significant gap. Systems based on rigid, pre-defined models like Fuzzy Cognitive Maps [18] or Semantic Web technologies require extensive knowledge engineering and struggle to reason outside their formal structures. On the other hand, advanced automation systems like the one in [19] excel at executing physical tasks but are not designed to alleviate the analytical burden of a human operator in unpredictable scenarios. Their intelligence is task-oriented, not collaborative. A core challenge remains: the lack of a cohesive architecture that can flexibly integrate specialized perception with higher-order, common-sense reasoning to meaningfully assist human decision-making.

Our work introduces a paradigm shift from these structured models to a dynamic, cognitive–agentic architecture orchestrated by a Large Language Model (LLM). Unlike the fixed rules of an FCM or the limited vocabulary of a gesture-based system, our LLM core acts as a central reasoner. It leverages emergent, common-sense reasoning to interpret complex visual data—such as a victim’s posture and the surrounding environmental context—to infer a state of distress. This allows the system to understand the holistic scene and identify high-priority victims even in the absence of explicit, pre-defined signals.

Furthermore, our architecture’s most crucial innovation is its ability to perform robust self-correction through an internal feedback loop. This capacity for dynamic self-validation is a critical feature fundamentally absent in the rigid models previously reviewed. The agent can formulate hypotheses (e.g., “I see a potential victim”), actively seek confirmatory evidence from the data stream (“Is the entity still immobile after 10 s?”), and rectify inconsistencies before alerting the human operator. This process is key to generating high-confidence, actionable intelligence instead of a simple stream of raw alerts, directly addressing the challenge of cognitive overload.

Finally, by integrating our advanced visual perception module with the LLM’s reasoning and a physical first-aid delivery mechanism, our system closes the full cognitive workflow. This transforms the drone from a passive sensing tool into a proactive and intelligent partner, designed specifically to convert ambiguous visual data into decisive action, thereby accelerating the entire rescue workflow.

3. Materials and Methods Used for the Proposed System

This section outlines the key components and methodological approaches of the intelligent system, demonstrating how they contribute to its overall functionality and efficiency for autonomous operation in complex disaster response scenarios.

The choice of unmanned aerial vehicle (UAV) platform is critical to the development of autonomous search and rescue systems, directly influencing their performance, cost and scalability. For this study, we chose a customized multi-motor drone that balances research and development requirements with operational capabilities. Table 1 compares the technical parameters of the proposed UAV platform with two distinct commercial systems: the professional-grade DJI Matrice 300 RTK and an affordable drone designed for recreational use.

Table 1.

Comparison of technical parameters between the proposed UAV platform and two representative commercial systems.

It is important to clarify the distinction between the “AI Capabilities” presented in Table 1. Current state-of-the-art commercial drones demonstrate remarkable advancements in AI for flight control and perception; however, their intelligence is focused primarily on tasks such as autonomous navigation, obstacle avoidance, automated mapping, and inspection routines. These systems are designed to identify what an object is or where to fly, providing the human operator with high-quality, albeit unprocessed, data.

Our work addresses a different layer of artificial intelligence: cognitive–agentic AI for decision support. We chose the DJI models as benchmarks because they exemplify the current commercial standard: highly effective for predefined tasks but lacking a cognitive architecture for autonomous reasoning. Our system is not designed to improve the drone’s autonomous flight capabilities, but rather to transform the drone into an intelligent partner that can analyze situations, infer mission context, and provide operators with actionable, synthesized insights.

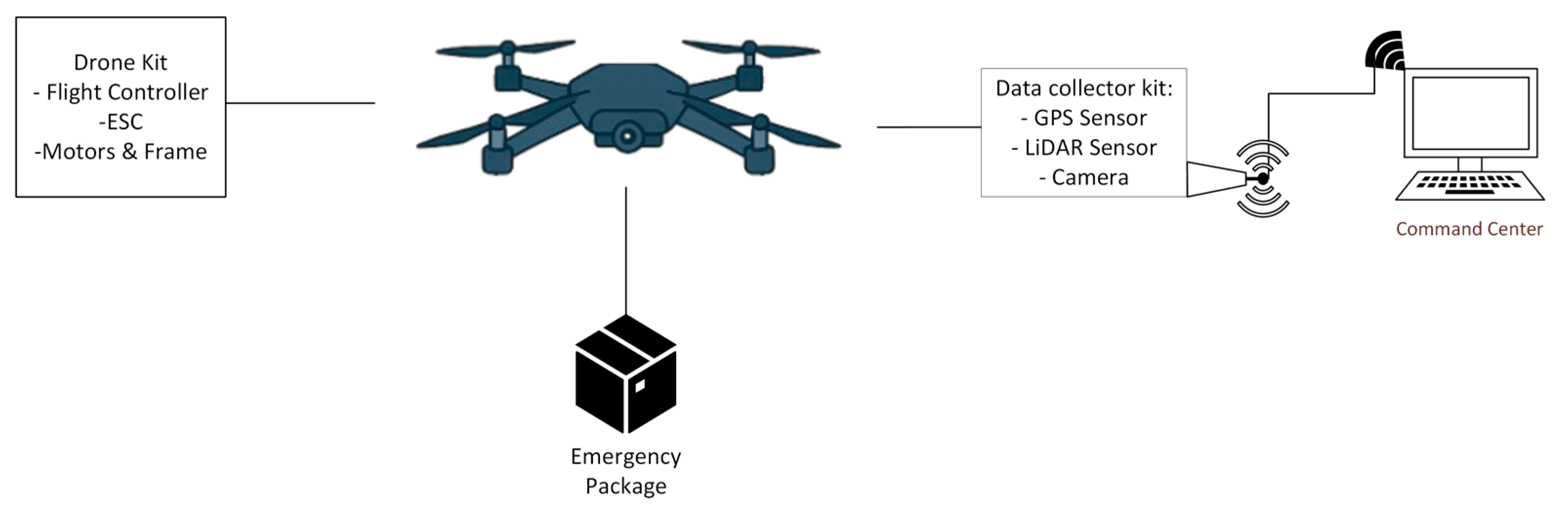

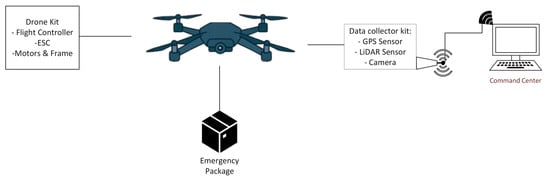

As shown in Figure 1, the system operates via a continuous, integrated flow of data. The ESP32S3 microprocessor unit (MPU) installed on board the drone collects information from the sensors and the video stream. The data is transmitted in real time to the ground command center via a secure connection using the 802.11 b/g/n standard (2.4 GHz Wi-Fi for the prototype phase).

Figure 1.

Block diagram of the proposed drone system architecture.

After receiving the video stream, the ground device directs it to the YOLO11 module for preliminary identification of individuals. The processed and original video streams, along with the sensor data, are stored and transmitted to the command center, where they are analyzed in depth and concrete recommendations are generated.

Our system acquires data at a frequency that balances informational relevance against resource efficiency. Primary GPS location data is updated every second. To improve the accuracy of distance measurements, values from the LiDAR sensor are acquired twice per second. This yields two LiDAR readings for each GPS update, from which a median value is computed to help filter noise and provide more robust data for environmental assessment.
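The pairing of LiDAR readings with GPS updates described above can be illustrated with a minimal Python sketch. The `GpsFix` type, the `fuse` function, the sample values, and the choice to group readings into the one-second window that starts at each GPS fix are all illustrative assumptions and are not taken from the system's code. Note that with exactly two readings per window, the median equals their arithmetic mean.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class GpsFix:
    """One GPS update (hypothetical structure, for illustration only)."""
    t: float    # timestamp in seconds
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def fuse(gps_fixes, lidar_samples, window_s=1.0):
    """Attach the median LiDAR distance of each window to its GPS fix.

    lidar_samples is a list of (timestamp_s, distance_m) tuples.
    Assumption: a reading belongs to the window that starts at the fix.
    """
    fused = []
    for fix in gps_fixes:
        window = [d for (t, d) in lidar_samples if fix.t <= t < fix.t + window_s]
        # No reading in the window: keep the fix but mark the distance as missing.
        distance = median(window) if window else None
        fused.append((fix, distance))
    return fused


# Made-up sample data: GPS at 1 Hz, LiDAR at 2 Hz.
fixes = [GpsFix(0.0, 45.00000, 25.00000), GpsFix(1.0, 45.00001, 25.00001)]
lidar = [(0.0, 1.50), (0.5, 1.54), (1.0, 1.60), (1.5, 1.70)]
fused = fuse(fixes, lidar)
for fix, distance in fused:
    print(fix.t, distance)
```

A larger window or a higher LiDAR rate would make the median more effective at rejecting single outliers; with two samples it mainly smooths the reading.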

The system primarily uses standard commercial components. This approach was adopted to enable rapid development and facilitate concept validation and algorithm refinement in a controlled environment. While these components are not particularly robust, they are adequate for the prototyping phase. For operational deployment in the field, however, a transition to professional-grade hardware designed for hostile environments is anticipated.

The following sections will provide an overview of the platform’s capabilities by describing the main hardware and software elements, from data acquisition and processing to the analysis core.

3.1. Flight Module

The flight module is the actual aerial platform. It was built and assembled from scratch using an F450 frame and an FPV drone kit. This approach provided detailed control over component selection and assembly, resulting in a modular, adaptable structure. The proposed drone is a multi-motor quadcopter, chosen for its stability during hovering flight and its ability to operate in confined spaces.

Figure 2 shows the physical assembly of the platform, which forms the basis for the subsequent integration of the sensor and delivery modules.

Figure 2.

Assembled drone kit, without sensors attached.

The drone is equipped with 920 KV motors and is powered by a 3650 mAh LiPo battery, ensuring a balance of power and efficiency. This hardware configuration gives the drone an estimated payload capacity of 500–1000 g and an estimated flight time of 20–22 min under optimal conditions. These estimates are directly influenced by the payload carried, especially the 300–700 g delivery package, and by the flight style. While limited, this operational envelope is sufficient for the primary goal of this research stage: conducting short-duration, controlled laboratory and field tests to validate the data flow and real-time performance of the cognitive–agentic architecture.

To ensure a reliable command link, the drone is controlled via a radio controller and receiver. Under optimal conditions with minimal interference, the control system’s estimated range is 500 to 1500 m. The flight control board includes an STM32F405 microcontroller (MCU); a 3-axis ICM42688P gyroscope for precise stabilization; an integrated barometer for maintaining altitude; and an AT7456E OSD chip for displaying essential flight data on the video stream. The motors are driven by a 55A speed controller (ESC). The flight controller supports power inputs from 3S to 6S LiPo. Additionally, the flight controller features an integrated ESP32 module through which we can configure various drone parameters via Bluetooth. This enables us to adjust settings at the last moment before a mission.

An important feature of the module is that the flight controller is equipped with a memory card slot. This allows for the continuous recording of all drone data, functioning similarly to an aircraft’s “black box.” Vital flight and system status information is saved locally and can be retrieved for analysis and troubleshooting.

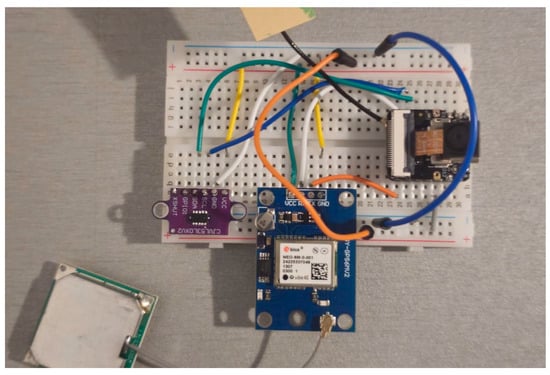

3.2. Data Acquisition Module

The data acquisition module is an independent, modular unit that acts as the drone’s sensory nervous system. It collects multimodal information from the surrounding environment and bridges the gap between the physical world and the cognitive architecture. It plays a critical role in providing ground agents with the raw data (visual, positioning, and proximity) they need to build an overall picture of the situation.

The data acquisition module is designed to operate independently and integrate multiple sensors:

- Main MPU: The ESP32S3 features a 32-bit, dual-core Tensilica Xtensa LX7 processor that operates at speeds of up to 240 MHz. It is also equipped with 8 MB of PSRAM and 8 MB of flash memory, providing ample storage for sensor data and video stream management.

- Camera: Although the ESP32S3 MPU is equipped with an OV2640 camera sensor, an OV5640 camera module has been added to improve image quality and transmission speed. This allows for higher-resolution capture and faster access times, providing the analysis module with higher-quality visual data at a cost-effective price.

- Distance Sensor (LiDAR): A VL53LDK time-of-flight (ToF) LiDAR sensor provides distance measurements. With a maximum range of 2 m and ±3% accuracy, it facilitates proximity awareness and terrain profile estimation directly beneath the drone. While unsuitable for large-scale mapping, this data is crucial for low-altitude flight and the victim location estimation algorithm.

- GPS module: A GY-NEO6MV2 module is used to provide positioning data with a horizontal accuracy of 2.5 m (circular error probable) and an update rate of 1 Hz.

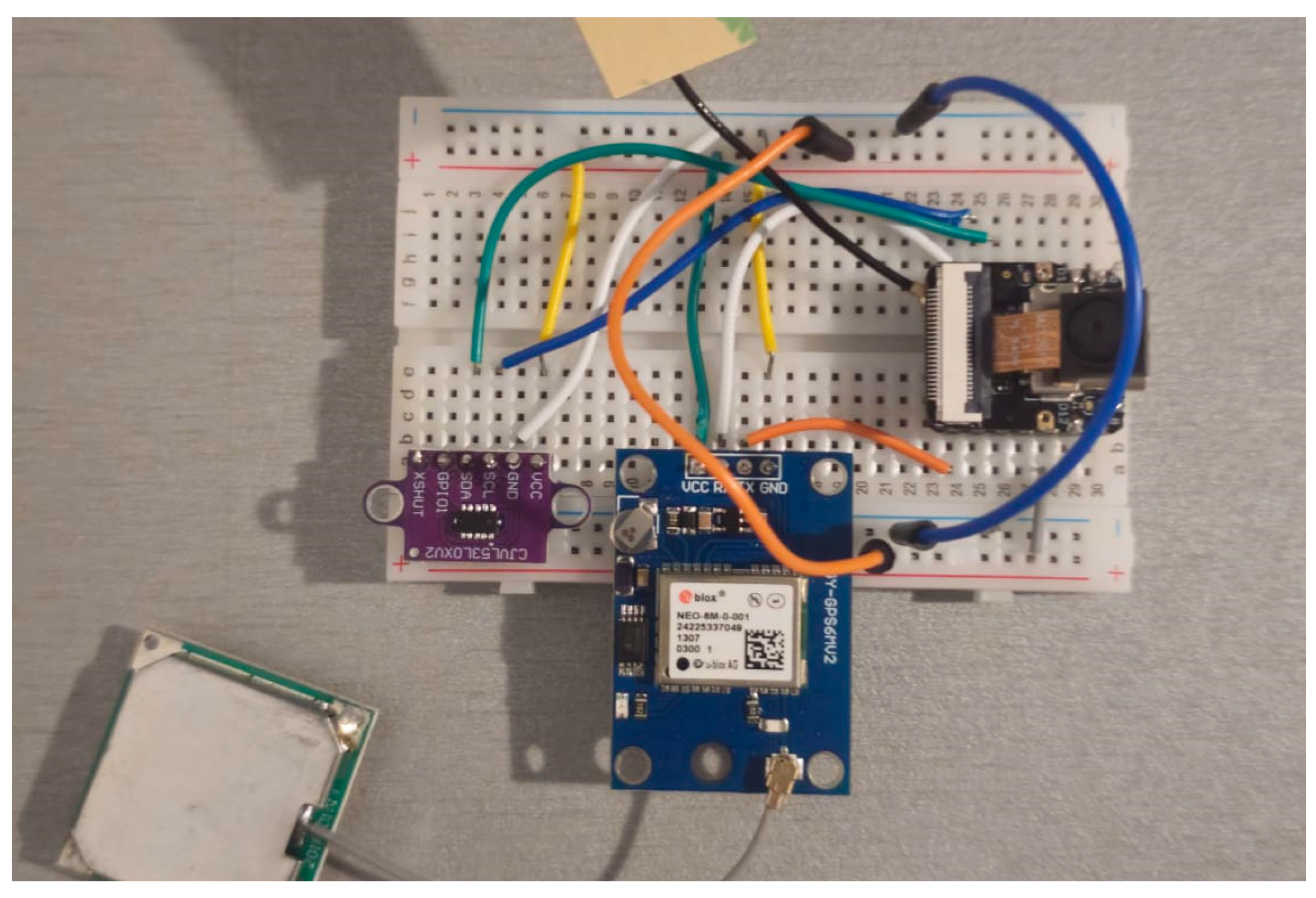

Integrating these components into a compact and efficient package is essential for the module’s functionality. Figure 3 illustrates the assembly of the acquisition system’s hardware, emphasizing that the ESP32S3 MPU serves as the central processing unit to which the camera, LiDAR sensor, and GPS module are directly connected. This minimalist configuration ensures low weight and optimized energy consumption, both of which are crucial for maintaining the drone’s flight autonomy.

Figure 3.

Hardware system with the ESP32S3 and adjacent sensors.

These sensors were chosen based on the project’s specific objectives. GPS data enables the route to be recorded, and combining information from LiDAR and the camera facilitates victim positioning and awareness of nearby obstacles.

The ESP32S3 runs optimized code to streamline video transmission and ensure optimal video quality under operational conditions. It also exposes specific endpoints that enable detailed sensor data to be retrieved on demand. Data is transmitted via Wi-Fi (2.4 GHz, 802.11 b/g/n).

The main limitation of the current hardware is the performance of each component relative to its industrial or commercial counterparts. The Wi-Fi module in the ESP32 is not suited to real disaster scenarios because it lacks the error mitigation technologies needed to counter interference. The GPS module lacks RTK (real-time kinematic) support, which limits its accuracy, and the LiDAR is limited to a range of 2 m. While these characteristics are not ideal for harsh and unpredictable scenarios, they are sufficient for validating the proposed system. For real-world deployment, we recommend an RTK-capable GPS to achieve centimeter-level accuracy, a COFDM (coded orthogonal frequency division multiplexing) transmitter and receiver in place of Wi-Fi, and a LiDAR with a greater range. If the mission type allows, a matrix LiDAR capable of more detailed ground mapping should be used.

3.3. Delivery Module

In addition to its monitoring functions, the system includes an integrated package delivery module designed to quickly deliver supplies to identified individuals. The release mechanism, which controls the opening and closing of the clamping system, is powered by a servo motor (e.g., an SG90 or similar model) that is directly integrated into the 3D-printed structure. The servo motor is connected to the flight controller, which enables the human operator to actuate it remotely based on decisions made in the command center. In making these decisions, the operator may draw on recommendations from the cognitive architecture.

Figure 4 shows the modular design of the package’s fastening and release system. It illustrates the 3D-printed conceptual components and an example package.

Figure 4.

Modular payload lock and release mechanism.

As shown in Figure 5, the drone can transport and release, in a controlled manner, first-aid packages containing essential items such as medicine, a walkie-talkie for direct communication, a mini-GPS for location tracking, and food rations. Designed for packages with an estimated mass of 300–700 g, this module can transport critical resources. Integrating this module transforms the drone from a passive observation tool into an active system capable of rapidly intervening by delivering critical resources directly to victims in emergency situations.

Figure 5.

Demonstration of the drone in flight with the delivery package.

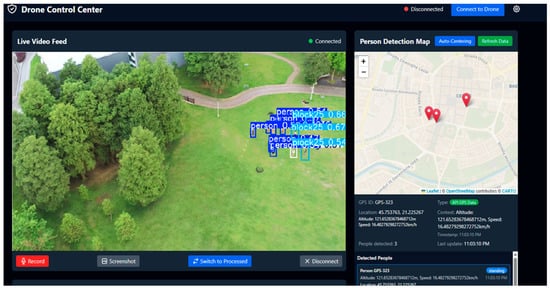

3.4. Drone Command Center

The ground control station (GCS) is the central operational and computational hub of the system. It is implemented as a robust web application with a ReactJS v19.2 front end and a Python 3.14.0 back end, ensuring a scalable and maintainable client-server architecture.

The command center’s role extends beyond that of a simple visualization interface. It serves as a central hub for data fusion, intelligent processing, and decision support. Its main functionalities are:

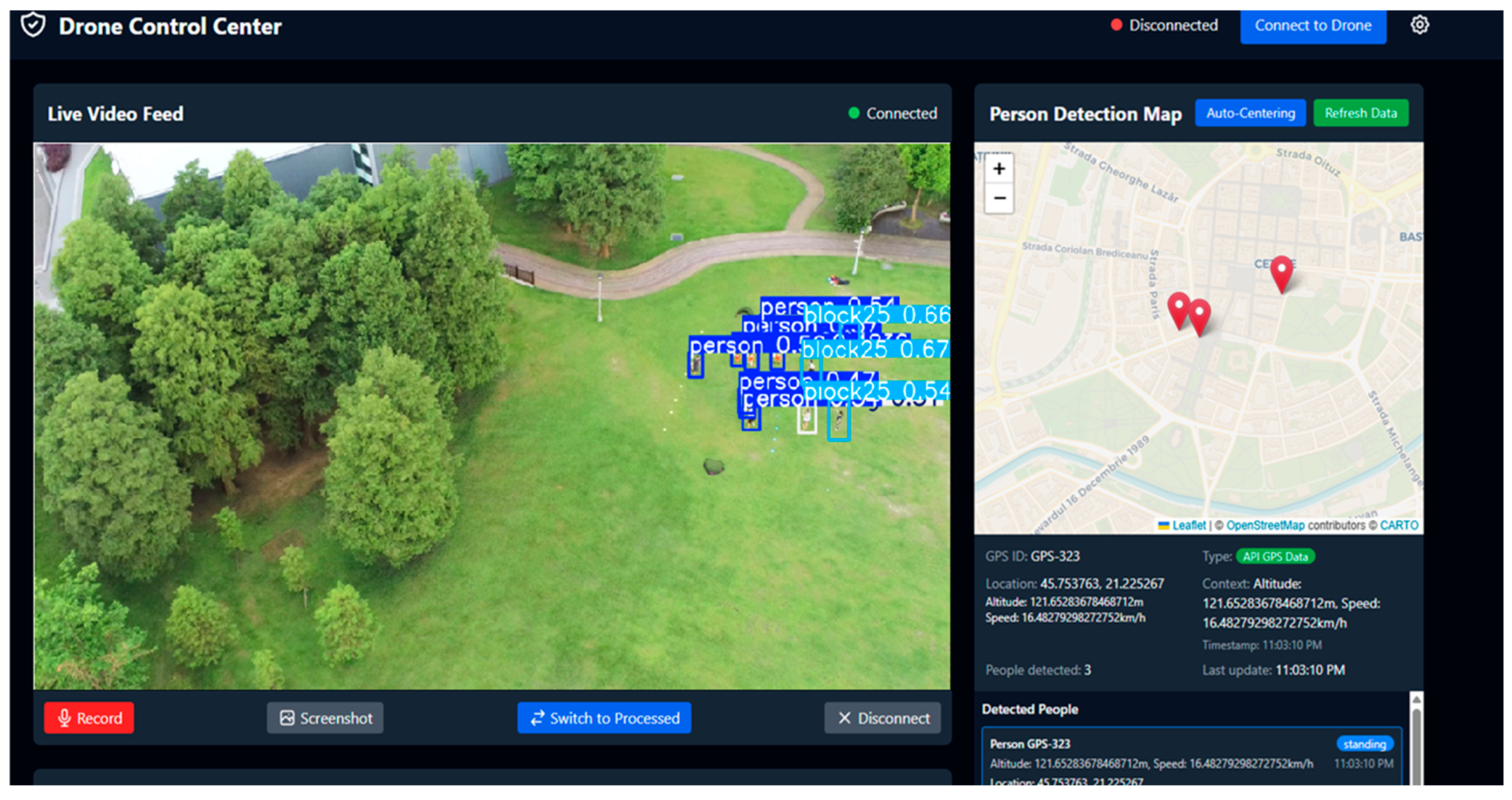

- Human–Machine Interface (HMI): The front end provides the human operator with complete situational awareness. One key feature is the interactive map that displays the drone’s position, the precise location of any detected individuals, and other relevant geospatial data in real time. Simultaneously, the operator can view the live video stream annotated in real time by the detection module, which highlights victims and classifies their status (e.g., walking, standing, or lying down). Figure 6 shows the central application’s visual interface, which consolidates these data streams.

Figure 6. Operator’s visual interface, consolidating location data and video stream.

- Perceptual and Cognitive Processing: One of the fundamental architectural decisions behind our system is decoupling AI-intensive processing from the aerial platform and hosting it at the ground control station (GCS) level. The drone acts as an advanced data collection platform, transmitting video and telemetry data to the ground station. The backend then performs two critical tasks with this data:

  - a. Visual Detection: Uses the YOLO11 object detection model to analyze the video stream and extract semantic information. This off-board approach enables the use of complex, high-precision computational models that would otherwise surpass the hardware capabilities of resource-constrained aerial platforms.

  - b. Agentic Reasoning: The GCS hosts the entire cognitive–agentic architecture. This architecture is detailed in Section 6. All interactions between AI agents, including contextual analysis, risk assessment, and recommendation generation, occur at the ground server level.

This centralization of intelligence allows our system to surpass standard AI functionalities integrated into commercial drones, which usually focus on flight optimization (e.g., AI Spot-Check or Smart Pin & Track). Our system offers deeper reasoning and contextual analysis capabilities.

Furthermore, the command center is the interface where the cognitive–agentic architecture presents prioritized recommendations to the operator. The centralization of raw data, processed information (via YOLO), and intelligent suggestions (from the cognitive–agentic architecture) transforms the GCS into an advanced decision support system. This system enables human operators to efficiently manage complex disaster response scenarios.

4. Visual Detection and Classification with YOLO

Object detection is a fundamental task in computer vision and is essential for enabling autonomous systems to perceive and interact with their environment [24]. In the context of search and rescue (SAR) operations, the ability to quickly identify and locate victims is critical. Real-time applications, such as analyzing video streams from drones, require an optimal balance between speed and detection accuracy [25].

We selected a model from the YOLO (You Only Look Once) family for our system due to its proven performance, flexibility, and efficiency [26]. Specifically, we chose YOLO11 because it is reported to be the fastest model in its family while retaining near-optimal accuracy [27], which suits the real-time requirements of our project. We trained it using specialized datasets for detecting people in emergency situations. To ensure robust performance in complex and unpredictable disaster conditions, we adopted a training strategy that focused on creating a specialized dataset and optimizing the learning process. Next, we discuss how we adapted and trained the model to recognize not only human presence but also various actions and states, such as walking, standing, or lying down.

4.1. Construction of the Dataset and Class Taxonomy

To address the specifics of post-disaster operations and optimize processing, we trained a custom model based on YOLO11 [28]. We built a composite dataset by aggregating and adapting two complementary sources to maximize the generalization and robustness of the detection module:

- C2A Dataset: Human Detection in Disaster Scenarios—This dataset is designed to improve human detection in disaster contexts [29].

- NTUT 4K Drone Photo Dataset for Human Detection—It is a dataset designed to identify human behavior. It includes detailed annotations for classifying human actions [30].

To create a consistent and operationally relevant class taxonomy, we performed a label normalization step on the original annotations. While the bounding box coordinates from the source datasets were used without modification, we consolidated synonymous or semantically similar classes. For instance, labels such as “sitting” were mapped to the unified “sit” class to ensure a standardized set of categories for the model to learn. All other annotations were used as provided in the original datasets.
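The label normalization step can be sketched as a simple lookup applied to each annotation. Only the "sitting" to "sit" mapping is stated in the text; the `SYNONYMS` table, the function names, and the annotation dictionary layout are illustrative assumptions. Bounding boxes pass through untouched, and labels without a synonym entry are kept exactly as provided.

```python
# Hypothetical synonym table: only "sitting" -> "sit" is given in the text.
SYNONYMS = {
    "sitting": "sit",
}


def normalize_label(label: str) -> str:
    """Map a source label to its unified class, or return it unchanged."""
    return SYNONYMS.get(label.strip().lower(), label)


def normalize_annotations(annotations):
    """Return new annotation dicts with unified labels; bounding boxes are not modified."""
    return [{**a, "label": normalize_label(a["label"])} for a in annotations]


# Made-up annotations in an assumed {"label", "bbox"} layout.
raw = [
    {"label": "sitting", "bbox": (10, 20, 50, 80)},
    {"label": "walking", "bbox": (100, 40, 30, 90)},
]
normalized = normalize_annotations(raw)
print([a["label"] for a in normalized])
```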

Our objective is to achieve a semantic understanding of the victim’s condition, which is vital information for the cognitive–agentic architecture, not just to detect human presence. To this end, we have trained the model on a detailed taxonomy of classes designed to differentiate between states of mobility and potential distress. These classes are organized into two main categories that have clear operational significance for search and rescue (SAR) missions:

- States of Mobility and Action: This category helps the system distinguish between individuals who are mobile and likely at lower risk, versus those who may be incapacitated.

  - High-Priority/Immobile (Sit): This is the most critical class for search and rescue (SAR) scenarios. It identifies individuals in a static, low-profile position, including sitting, lying on the ground, or being collapsed. An entity classified as “sit” is treated as a potential victim requiring urgent assessment.

  - Low-Priority/Mobile (Standing or Walking): These classes identify individuals who are upright and mobile. Although they are considered a lower immediate priority, they are essential for contextual analysis and distinguishing active survivors from incapacitated victims.

  - General Actions (Person, Push, Riding, Watch Phone): These classes are derived largely from the NTUT 4K dataset and help the model build a more robust and generalized understanding of human presence and posture. This improves the model’s overall detection reliability, even if these specific actions are less common in disaster zones.

- Visibility and Occlusion: This category quantifies how visible a person is. This is important for determining whether a victim is trapped or partially buried.

  - Occlusion Levels (Block25, Block50, Block75): These classes indicate that 25%, 50%, or 75% of a person is obscured from view.

The final dataset consisted of 5773 images and was randomly divided into training (80%), validation (10%), and testing (10%) sets. This division ensures that the model is evaluated using a set of images it has never seen before, providing an unbiased assessment of its generalization performance.
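A random 80/10/10 split of this kind can be sketched in a few lines. The file names, the fixed seed, and the rule of assigning the rounding remainder to the test set are assumptions for illustration; the paper does not state the exact per-split counts.

```python
import random


def split_dataset(items, train=0.8, val=0.1, seed=0):
    """Shuffle and split items into train/val/test; the remainder goes to the test set."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed so the split is reproducible
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


# Hypothetical file names standing in for the 5773 images.
images = [f"img_{i:04d}.jpg" for i in range(5773)]
train_set, val_set, test_set = split_dataset(images)
print(len(train_set), len(val_set), len(test_set))
```

Because the three slices are taken from one shuffled list, no image can appear in more than one subset, which is the property the unbiased test evaluation depends on.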

Table 2 presents the number of instances of each class and their percentage of the dataset.

Table 2.

Class distribution across dataset.

All imagery used in this study was sourced exclusively from the two publicly available datasets mentioned, which are released under permissive licenses for research purposes. No new data involving human subjects was collected for this study. Our work relies on the ethical standards and clearances established by the original creators of these datasets.

4.2. Data Preprocessing and Hyperparameter Optimization

To make the most of the model’s capabilities and develop a flexible detector, we implemented two types of optimizations:

- Data Augmentation: The applied techniques consisted of fixed rotations at 90°, random rotations between −15° and +15°, and shear deformations of ±10°. These transformations were used to simulate the variety of viewing angles and target orientations that naturally occur in dynamic drone-filmed landscapes, forcing the model to learn features that are invariant to rotation and perspective.

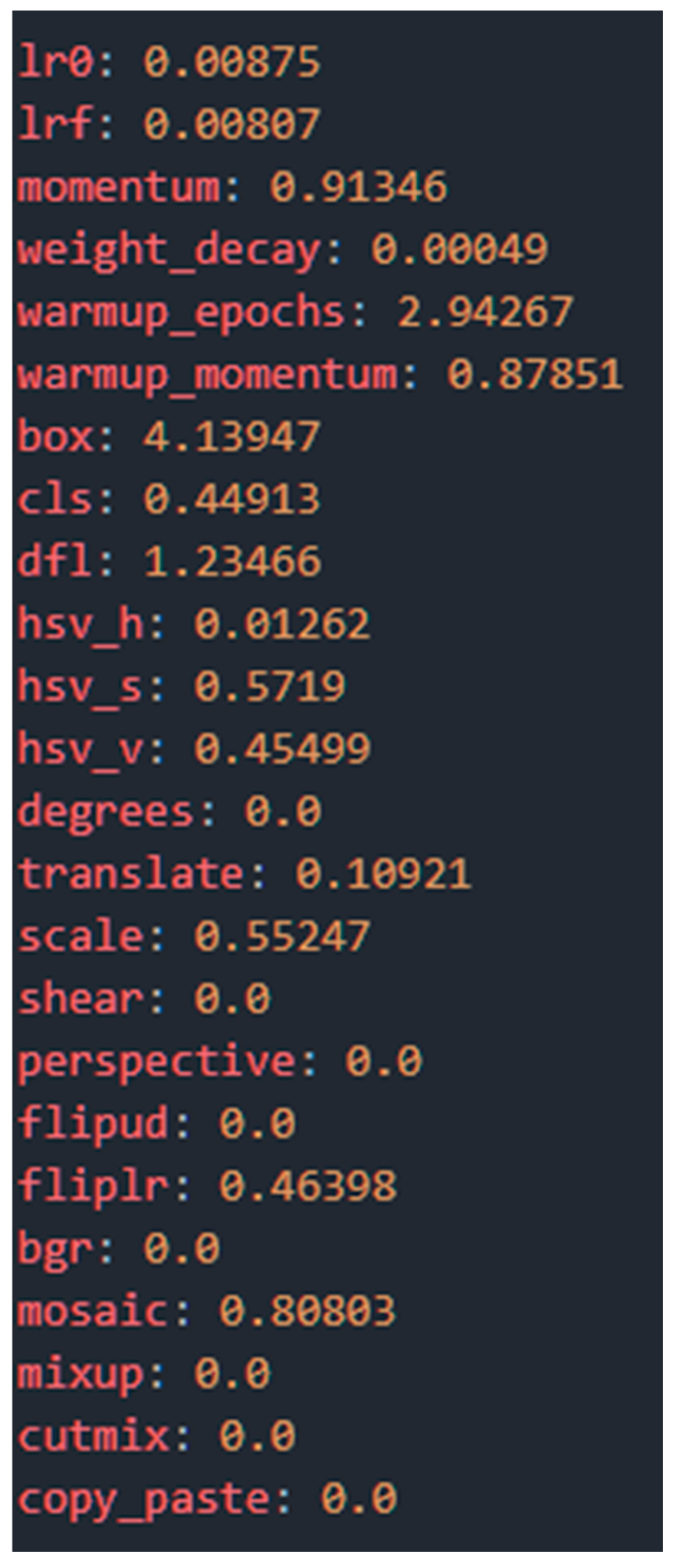

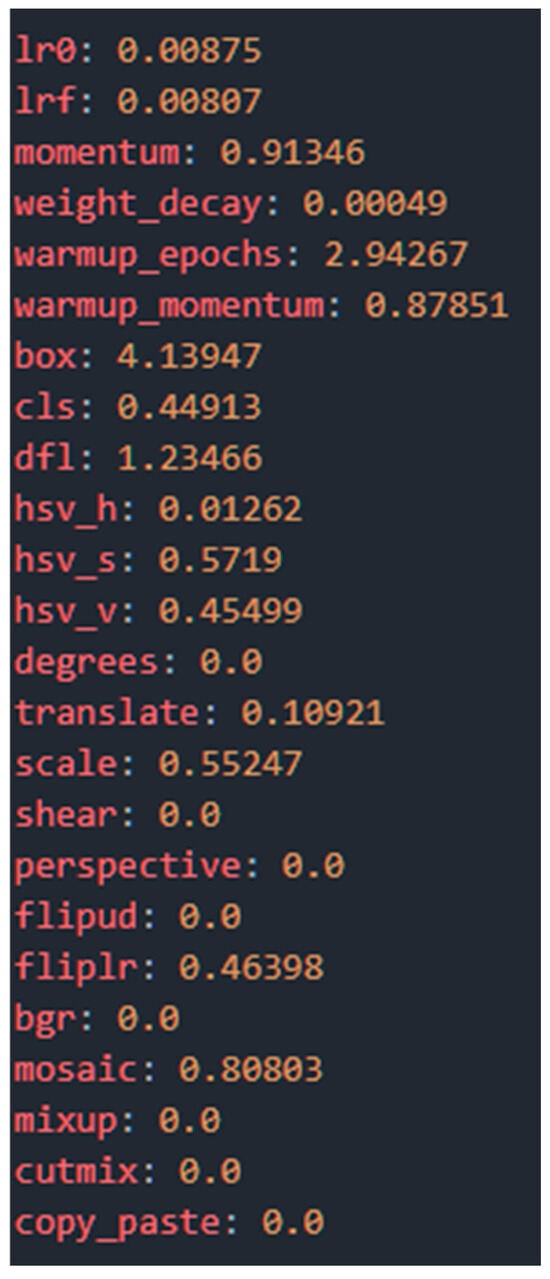

- Hyperparameter Optimization: Through a 100-iteration evolutionary tuning process, we determined the optimal set of hyperparameters for the final training. This method automatically explores the configuration space to find the combination that maximizes performance. Figure 7 shows the resulting hyperparameters, which range from the learning rate and weight decay to the augmentation settings.

Figure 7. Optimal hyperparameters determined following the evolutionary adjustment process.


4.3. Experimental Setup and Validation of Overall Performance

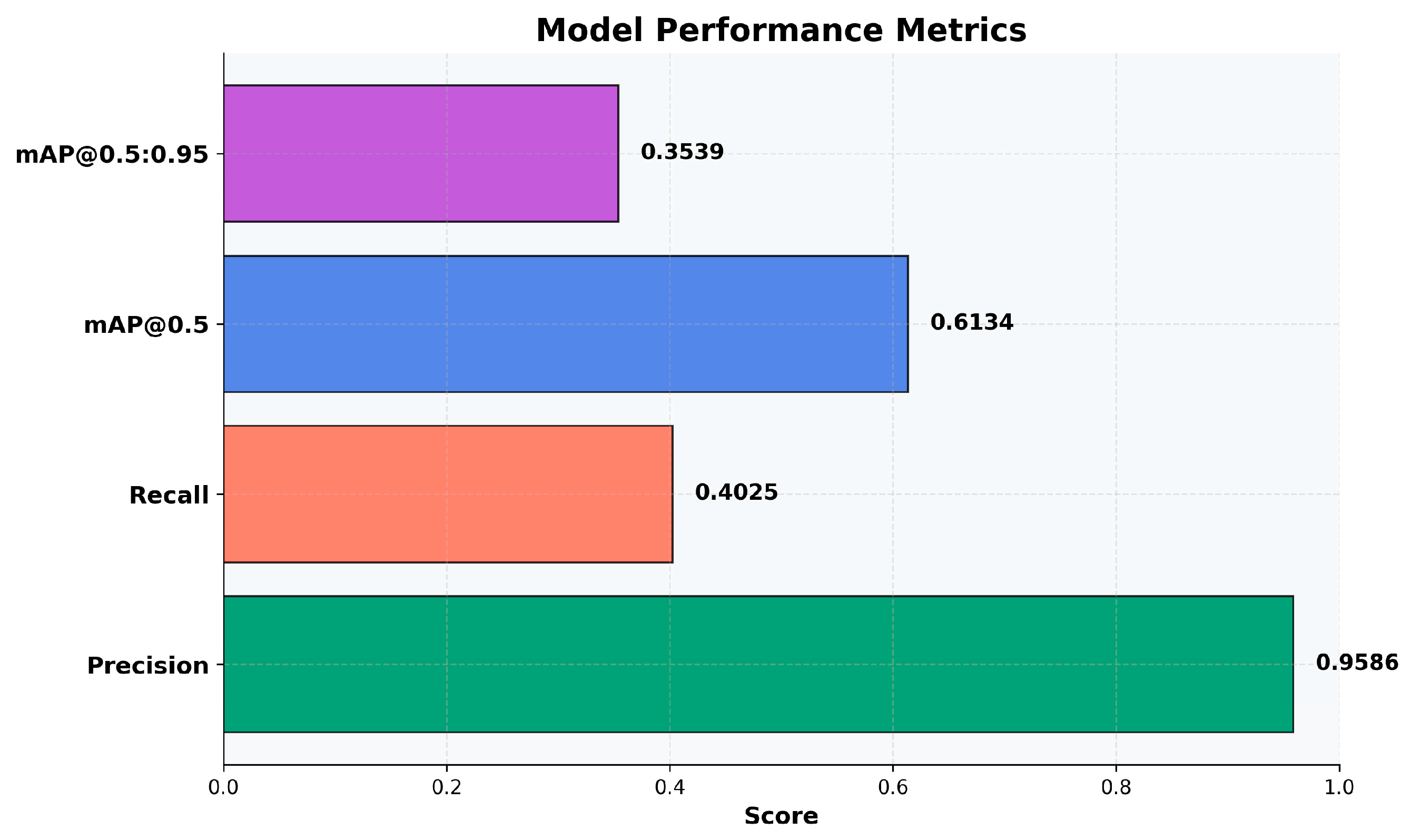

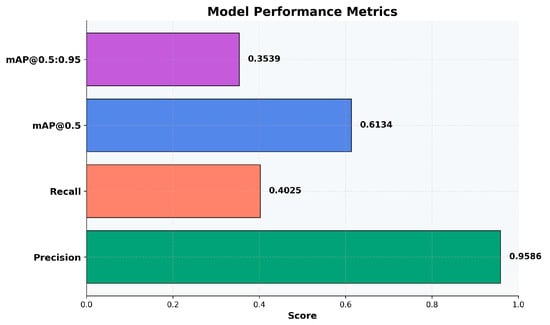

When developing an artificial intelligence model for visual tasks such as object detection, demonstrating functionality is not enough. An objective, standardized evaluation based on quantitative indicators is required. Graphically represented performance metrics clearly quantify the model’s precision, accuracy, and efficiency. This facilitates the identification of strengths and limitations and informs optimization decisions. Figure 8 presents four key performance indicators:

Figure 8.

Quantitative performance of the victim detection model.

- Precision (0.9586/95.86%) is the proportion of correct detections out of the total number of detections performed. A high value reflects a low probability of erroneous predictions and high confidence in the model’s results.

- Recall (0.4025/40.25%) is the proportion of objects present in the images that the model detects. This value reflects a deliberate trade-off that favors precision over detection completeness. The trade-off is a consequence of the combined dataset’s complexity: it optimizes the model’s generalization and versatility rather than exhaustive identification. Additionally, the low recall is concentrated in a few classes rather than spread across all of them.

- mAP@0.5 (0.6134) summarizes precision and recall, counting a detection as correct when its intersection over union (IoU) with the ground truth is at least 50%. Despite the low recall, the result indicates balanced performance.

- mAP@0.5:0.95 (0.3539) measures localization accuracy by averaging over stricter IoU thresholds (50–95%). A value significantly lower than mAP@0.5 indicates that, although the model detects objects, the generated bounding boxes do not always fit their contours well.
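The quantities behind these indicators can be made concrete with a short sketch. The box coordinates and detection counts below are hypothetical values chosen for illustration, and the helper names are not taken from any evaluation library.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# A predicted box shifted by 2 px against a 10 x 10 ground-truth box (hypothetical).
overlap = iou((0, 0, 10, 10), (2, 0, 12, 10))
print(f"IoU = {overlap:.3f}")

# Hypothetical counts: every detection is correct, but 3 of 5 people are missed.
precision, recall = precision_recall(tp=2, fp=0, fn=3)
print(precision, recall)
```

In this example the shifted box counts as correct at the 0.5 threshold but not at 0.75, which is the mechanism that makes mAP@0.5:0.95 lower than mAP@0.5 when boxes are loosely fitted. The second call shows how a detector can combine high precision with low recall.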

In summary, the quantitative analysis indicates that the trained model behaves as a highly reliable yet conservative system. The aggregate metrics are lowered by an optional group of classes, the block classes (block25, block50, and block75), which we consider important but for which the datasets contained few examples. These classes describe individuals whose body is obscured by an approximate percentage. They received low scores and remain a weak point of the model. The metrics for the other classes are high, which demonstrates the model’s reliability on them. A more detailed discussion is presented in the next subsection.

4.4. Granular Analysis: From Aggregate Metrics to Error Patterns

In order to understand the model’s behavior in depth, it is necessary to examine performance at the individual class level and the specific types of errors committed, rather than relying on aggregate metrics.

4.4.1. Per-Class Performance

A detailed analysis of each class reveals a heterogeneous performance landscape, highlighting both significant strengths and critical weaknesses.

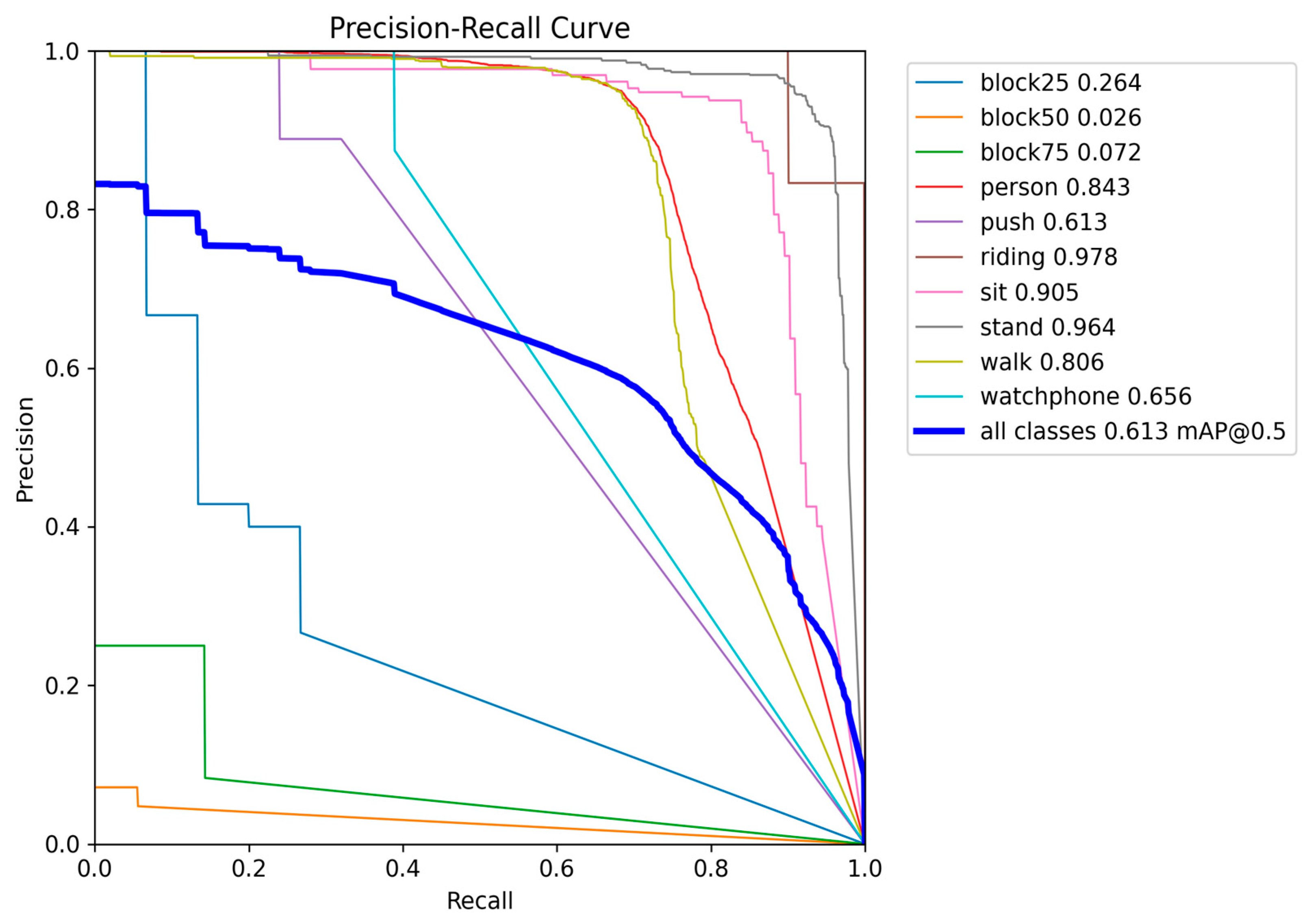

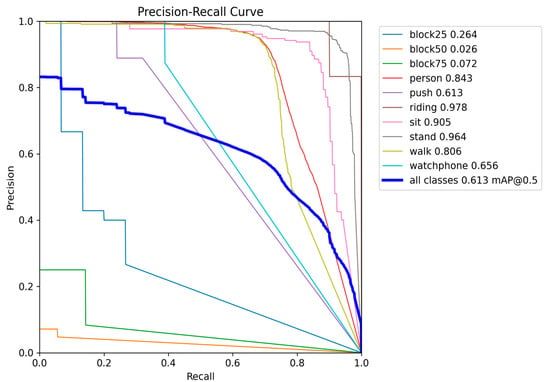

First, the model excels at identifying classes highly relevant to SAR missions. It achieves exceptional mean average precision (mAP@0.5) scores for distinct classes, such as riding (0.978), stand (0.964), and sit (0.905). Supported by precision-recall curves close to the optimal corner, these results suggest that the model identifies these states with high confidence and few false positives. Robust performance is also observed for the general person (0.843) and walking (0.806) classes, confirming solid baseline detection capability.

However, the model’s primary deficiency is its inability to handle occlusions. Performance is extremely poor for the block50 (0.026) and block75 (0.072) classes, meaning the model has difficulty identifying subjects partially obscured from view. The score for block25 (0.264) is marginally better but still unsatisfactory. Furthermore, classes such as push (0.613) and watch phone (0.656) demonstrate mediocre performance, suggesting difficulty maintaining an optimal balance between precision and recall.

In conclusion, the aggregate mAP@0.5 value of 0.613 is a reasonable indicator of overall performance. However, it is significantly impacted by the model’s poor performance on the occlusion classes. Without these classes, the average performance would be considerably higher. This finding suggests an issue related to the insufficient representation or intrinsic complexity of detecting partially occluded individuals in the dataset. Figure 9 presents a detailed analysis of the performance for each class.

Figure 9.

The model’s precision-recall curve.
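The effect of the occlusion classes on the aggregate score can be checked with simple arithmetic on the per-class values quoted above. This sketch assumes that the ten classes listed form the complete taxonomy and that the aggregate mAP@0.5 is an unweighted mean of the per-class scores; neither assumption is stated explicitly in the text.

```python
# Per-class mAP@0.5 values as quoted in the text.
ap50 = {
    "riding": 0.978, "stand": 0.964, "sit": 0.905, "person": 0.843,
    "walking": 0.806, "watch phone": 0.656, "push": 0.613,
    "block25": 0.264, "block75": 0.072, "block50": 0.026,
}
occlusion = {"block25", "block50", "block75"}

# Unweighted mean over all classes (assumed aggregation rule).
map_all = sum(ap50.values()) / len(ap50)

# Same mean with the three occlusion classes left out.
non_occluded = [score for name, score in ap50.items() if name not in occlusion]
map_without_occlusion = sum(non_occluded) / len(non_occluded)

print(f"{map_all:.3f} {map_without_occlusion:.3f}")
```

Under these assumptions the mean over all classes comes to roughly 0.61, in line with the reported aggregate, while the mean over the seven non-occlusion classes is roughly 0.82.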

4.4.2. Diagnosing Error Patterns with the Confusion Matrix

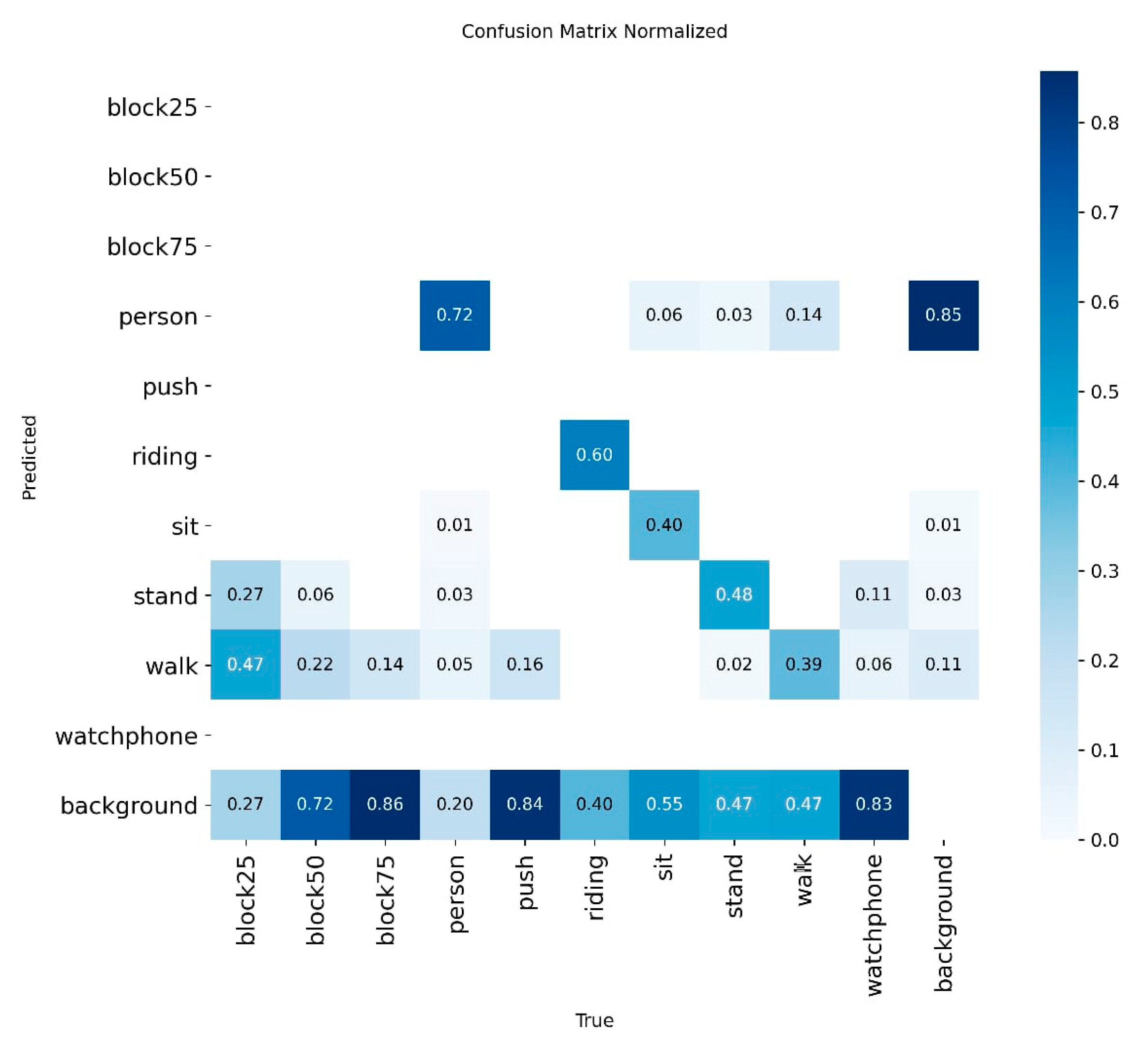

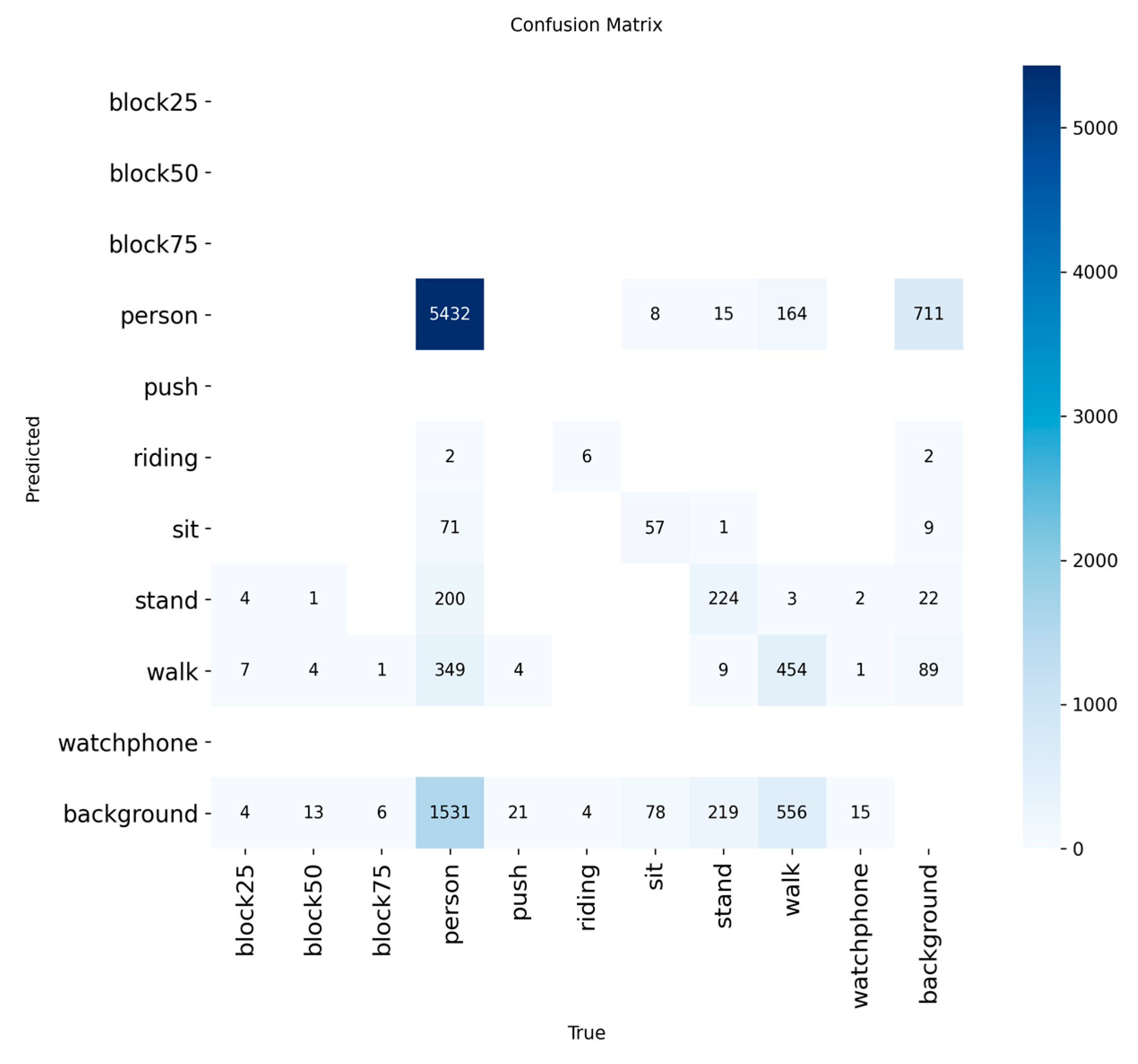

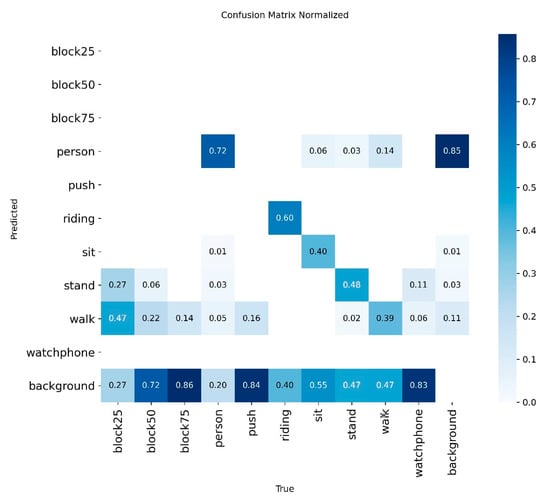

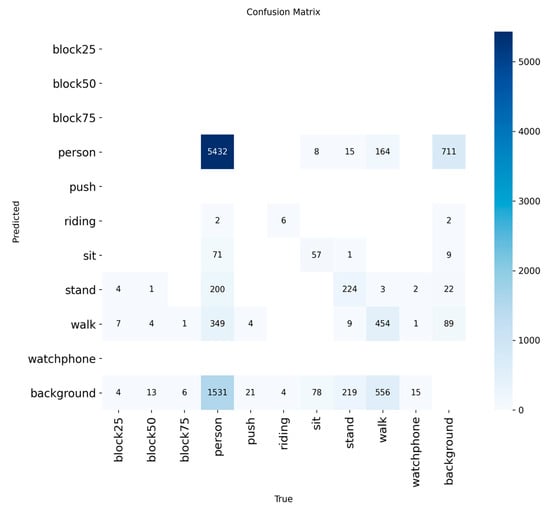

To gain a deeper understanding of interclass relationships and classification errors, Figure 10 presents a normalized confusion matrix. Figure 11 shows the non-normalized matrix, which displays the raw prediction counts. This analysis is essential for linking the model’s technical performance to its operational value in SAR scenarios, especially when addressing the low overall recall rate of 40.25%.

Figure 10.

Normalized confusion matrix of the YOLO11 model, detailing per-class performance.

Figure 11.

Non-normalized confusion matrix of the YOLO11 model, showing raw prediction counts.

The main diagonal of the matrix indicates moderate recall for specific postural states: stand (48%), sit (40%), and walk (39%). Although these figures suggest a conservative model, a granular analysis of its error patterns reveals a significant advantage in terms of operational safety.

5. Geolocation of Detected Targets

After the YOLO-based visual perception module detects and classifies a victim, determining their precise location becomes the next critical priority. These geographical coordinates are essential input for the perception agent (PAg) and, subsequently, for the entire cognitive architecture’s decision-making chain. Without accurate geolocation, the system’s ability to assess risks and generate actionable recommendations is severely compromised.

Methodology for Estimating Position

To estimate the position of a person detected in a camera image, we implemented a passive, monocular geolocation method that combines visual data and drone telemetry [31].

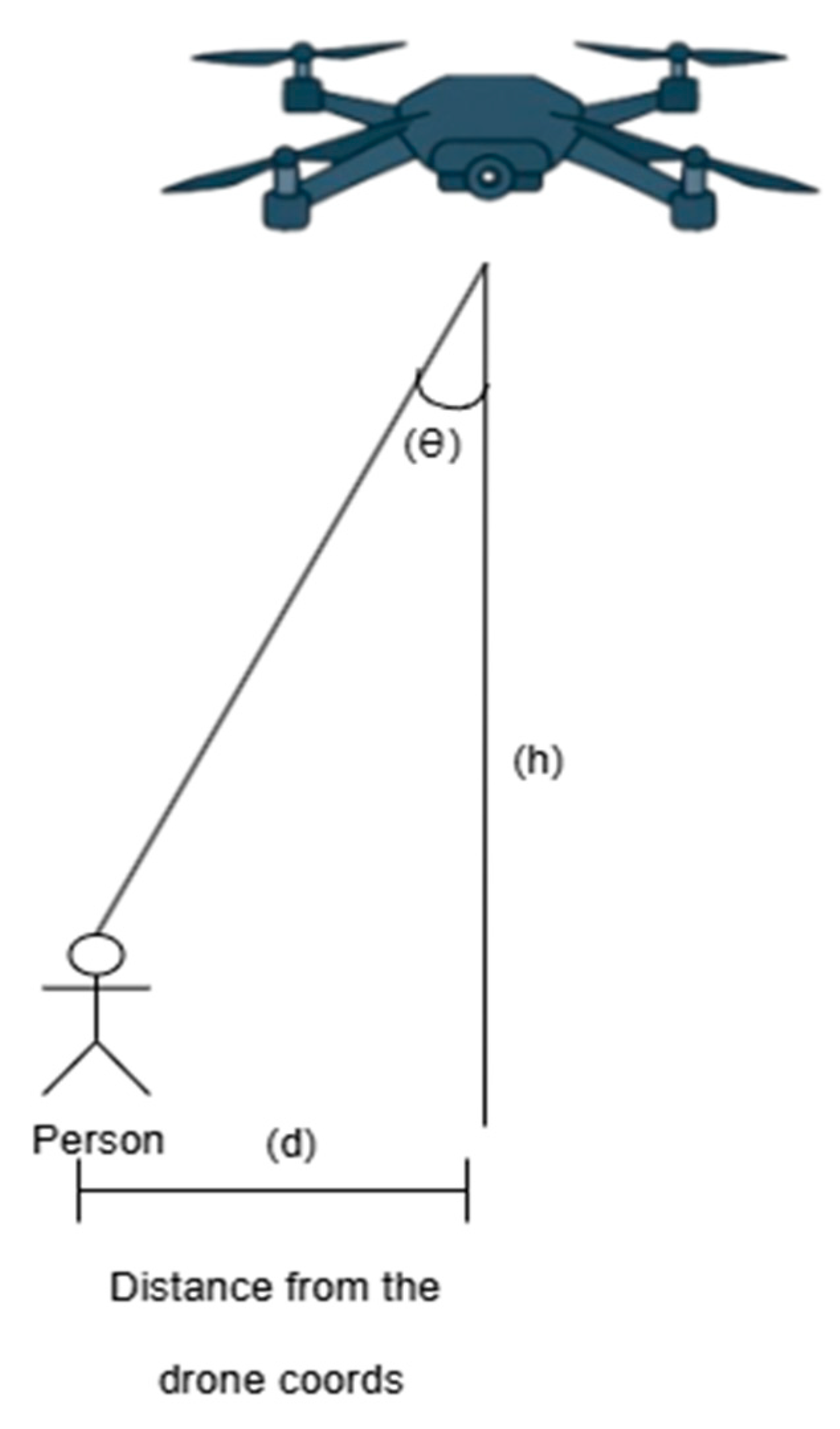

The mathematical model uses the following input parameters to calculate the geographical coordinates of the target (P) based on the drone’s position (D):

- $(\varphi_D, \lambda_D)$: The geographical coordinates (latitude, longitude) of the drone, provided by the GPS module;

- $h$: Height of the drone above the ground;

- $\theta$: The vertical deviation angle, representing the angle between the vertical axis of the camera and the line of sight to the person. This is calculated based on the vertical position of the person in the image and the vertical field of view (FOV) of the camera;

- $\psi$: The azimuth (heading) of the drone, measured from North. This value is provided by the magnetometer integrated into the flight controller’s inertial measurement unit (IMU) and is crucial for defining the projection direction of the visual vector [32];

- $(\varphi_P, \lambda_P)$: The estimated geographical coordinates of the person, which are the final result of the calculation.

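First, the horizontal distance (d) from the drone’s projection on the ground to the target is determined using simple trigonometry, according to Equation (1):

$$d = h \cdot \tan(\theta) \tag{1}$$

where $h$ is the height of the drone above the ground and $\theta$ is the vertical deviation angle.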

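Once the starting point (GPS coordinates of the drone), distance (d), and direction (azimuth $\psi$) are known, the geographical coordinates of the target can be calculated. To do this, the spherical law of cosines formulas are used, which model the Earth as a sphere and provide high accuracy over short and medium distances. The estimated coordinates of the person are calculated using Equations (2) and (3):

$$\varphi_P = \arcsin\left(\sin\varphi_D \cos\delta + \cos\varphi_D \sin\delta \cos\psi\right) \tag{2}$$

$$\lambda_P = \lambda_D + \operatorname{atan2}\left(\sin\psi \sin\delta \cos\varphi_D,\ \cos\delta - \sin\varphi_D \sin\varphi_P\right) \tag{3}$$

where $(\varphi_D, \lambda_D)$ and $(\varphi_P, \lambda_P)$ are the latitude and longitude of the drone and of the person, $\delta = d/R$ is the angular distance, and R is the mean radius of the Earth (approximately 6371 km).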

We chose this method because of its computational efficiency. Error analysis shows that for an FOV of 120° and a maximum flight altitude of 120 m, the localization error is approximately one meter. This margin is considered negligible for intervention teams [33], and at a flight altitude of 20 m the possible error drops to around 17 cm.

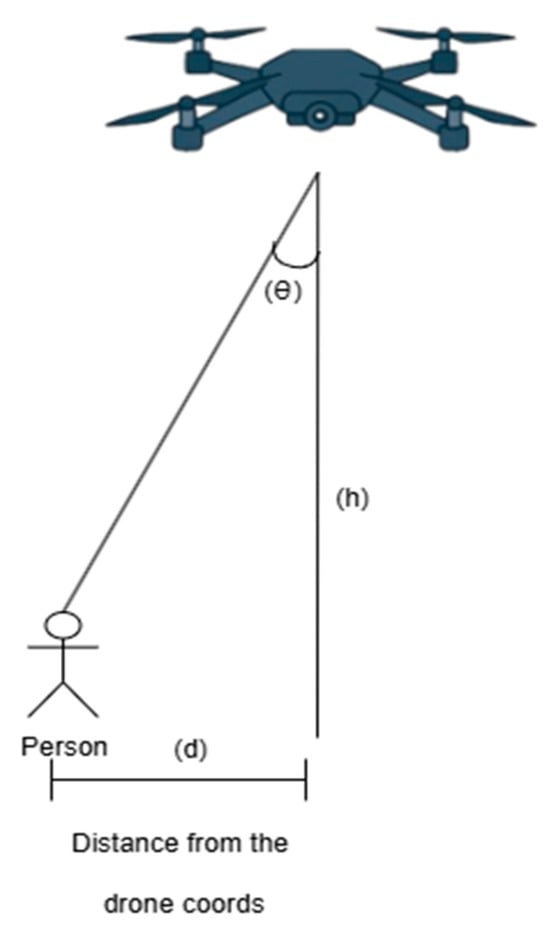

The azimuth angle $\psi$ is crucial for correctly positioning the person in the global coordinate system because it indicates the direction in which the drone is facing. In Figure 12, however, this angle is not explicitly visible because the figure simplifies the projection to illustrate the additional calculations compared to classical equations.

Figure 12.

Visual representation of the position estimation.

To illustrate the method, consider a practical scenario in which the drone identifies a target on the ground. The flight and detection parameters at that moment are:

- Flight height (h): 27 m;

- GPS position of the drone $(\varphi_D, \lambda_D)$: (45.7723°, 22.144°);

- Drone orientation ($\psi$): 30° (azimuth measured from North);

- Vertical deviation angle ($\theta$): 25° (calculated from the target position in the image).

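Using Equation (1), the horizontal distance (d) is:

$$d = 27 \cdot \tan(25^\circ) \approx 12.59\ \text{m}$$

To convert this distance into a geographical position, Equations (2) and (3) are applied, first converting the values into radians:

- Earth’s radius (R): 6,371,000 m;

- Angular distance ($\delta$): $d/R \approx 1.976 \times 10^{-6}$ rad;

- Azimuth ($\psi$): $30^\circ \approx 0.5236$ rad;

- Drone latitude ($\varphi_D$): $45.7723^\circ \approx 0.798877$ rad;

- Drone longitude ($\lambda_D$): $22.144^\circ \approx 0.386486$ rad.

Applying the equations, the estimated coordinates of the person are obtained:

$$(\varphi_P, \lambda_P) \approx (45.772398^\circ,\ 22.144081^\circ)$$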
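The worked example can be reproduced with a short script. It is a sketch under stated assumptions: the horizontal distance is taken as h·tan(θ), which presumes the camera axis points straight down, and Equations (2) and (3) are taken to be the standard spherical destination-point formulas. The function and variable names are illustrative, not the authors' implementation.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius used in the text


def estimate_target_position(lat_deg, lon_deg, height_m, theta_deg, azimuth_deg):
    """Return target latitude and longitude (degrees) and ground distance (metres)."""
    # Horizontal distance from the drone's ground projection (assumed nadir camera).
    d = height_m * math.tan(math.radians(theta_deg))
    delta = d / EARTH_RADIUS_M  # angular distance on the sphere
    lat1, lon1 = math.radians(lat_deg), math.radians(lon_deg)
    psi = math.radians(azimuth_deg)  # heading measured clockwise from North
    # Destination point from start, bearing, and angular distance.
    lat2 = math.asin(math.sin(lat1) * math.cos(delta)
                     + math.cos(lat1) * math.sin(delta) * math.cos(psi))
    lon2 = lon1 + math.atan2(math.sin(psi) * math.sin(delta) * math.cos(lat1),
                             math.cos(delta) - math.sin(lat1) * math.sin(lat2))
    return math.degrees(lat2), math.degrees(lon2), d


# Parameters of the practical scenario described in the text.
lat_p, lon_p, d = estimate_target_position(45.7723, 22.144, 27.0, 25.0, 30.0)
print(f"d = {d:.2f} m, target = ({lat_p:.6f}, {lon_p:.6f})")
```

At this range the target lies only about 12.6 m from the drone's ground projection, so the estimated coordinates differ from the drone's own position in the fourth decimal place of a degree. Keeping six decimals in the output is therefore necessary for the result to be meaningful.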

These precise geographical coordinates are transmitted to the perception agent (PAg), which integrates them into the system’s cognitive model. The coordinates are then displayed on the interactive map in the command center, allowing users to visually identify the target’s location and status in the context of the mission.

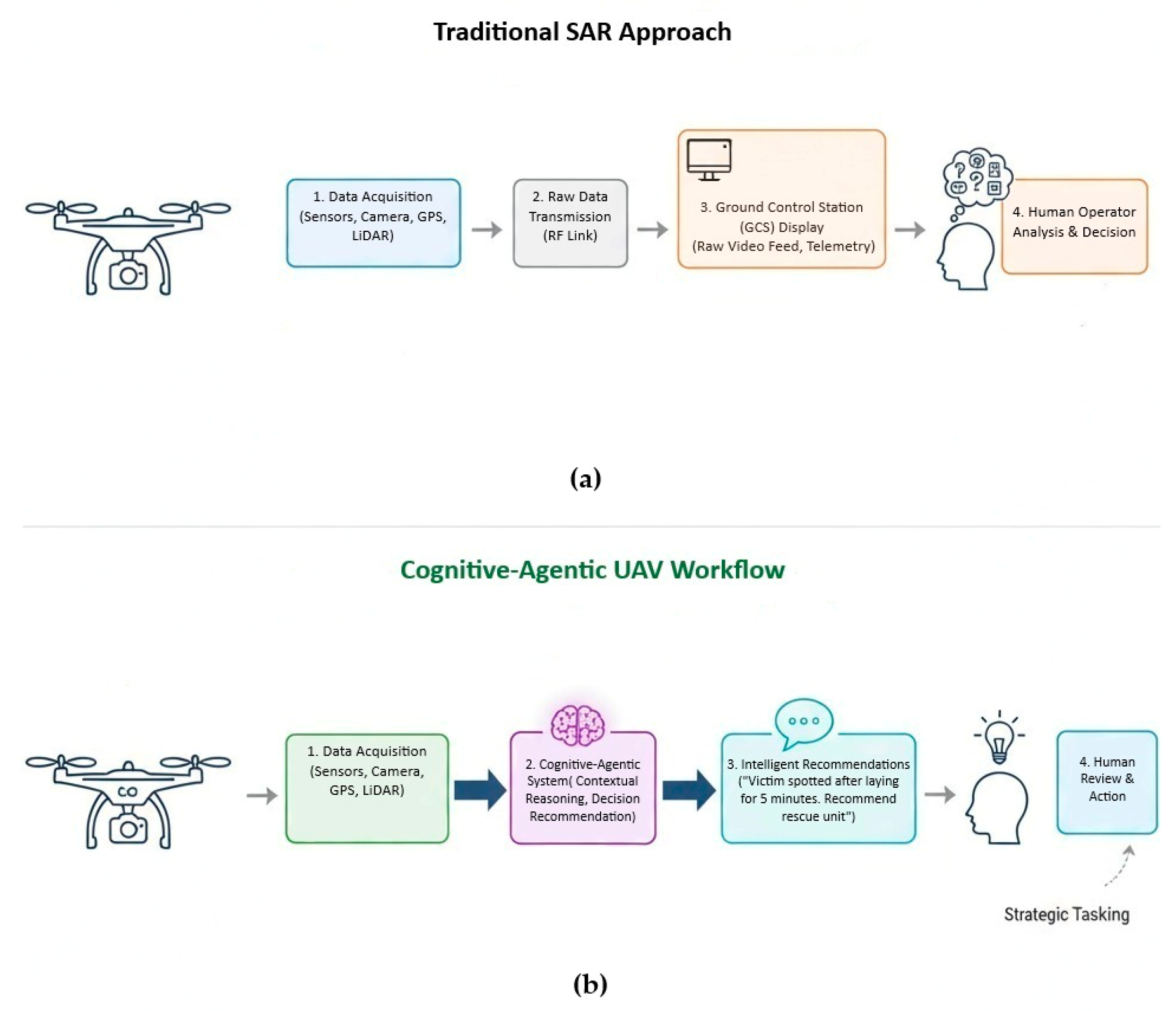

6. Cognitive Agent Architecture

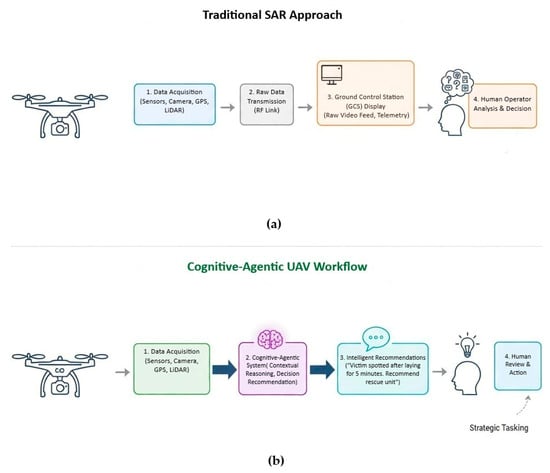

The core contribution of this research is a paradigm shift in human-drone interaction for SAR missions, a concept visualized in Figure 13. We move beyond the traditional model, where a drone acts as a teleoperated sensor streaming raw data, to a collaborative model where the UAV functions as an intelligent agent. Its onboard perception-reasoning-action loop synthesizes data into actionable insights, empowering the human operator with distilled intelligence rather than overwhelming them with unprocessed information.

Figure 13.

Comparison between traditional and our SAR drone dataflow: (a) drone acts as a remote sensor and humans are burdened with cognitive overload; (b) drone acts as an intelligent partner and AI provides actionable insights, reducing human cognitive load.

To function effectively in complex and dynamic environments such as post-disaster search and rescue scenarios, an autonomous system needs perception and action capabilities as well as higher-level intelligence for reasoning, planning, and adaptation [34]. Traditional, purely reactive systems that only respond to immediate stimuli are inadequate for such challenges [35,36]. To overcome these limitations, we propose an agentic cognitive architecture inspired by human thought processes. This architecture provides a structured framework for organizing perception, memory, reasoning, and action modules [37].

The key contribution of this work is its modular architecture, which is centered on a large language model (LLM) that serves as the orchestrator agent. This agent performs complex reasoning, logical data validation, and self-correction via feedback loops [38,39]. By doing so, the system evolves from a simple data collector into an intelligent partner capable of processing heterogeneous information [18,40], transforming raw inputs into actionable knowledge [41], prioritizing tasks, and making optimal decisions under uncertainty and resource constraints.

This section describes the proposed architecture, explaining the distinct roles of its specialized agents and the data exchange protocols that orchestrate the drone’s cognitive workflow, from sensing to action [42].

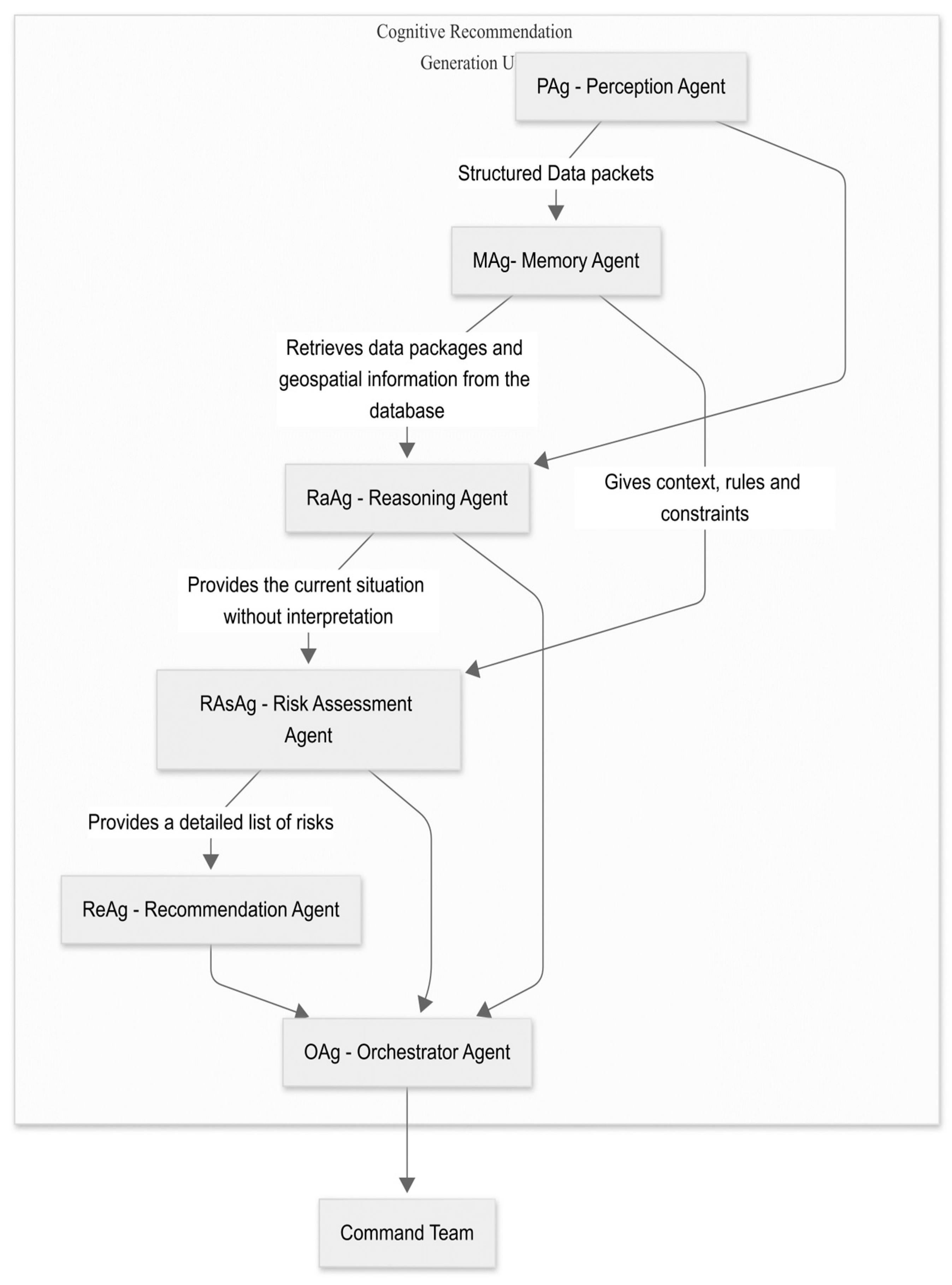

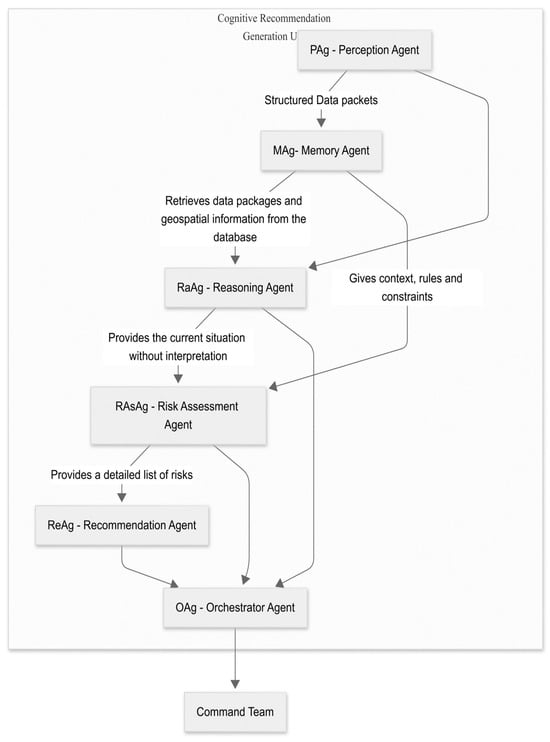

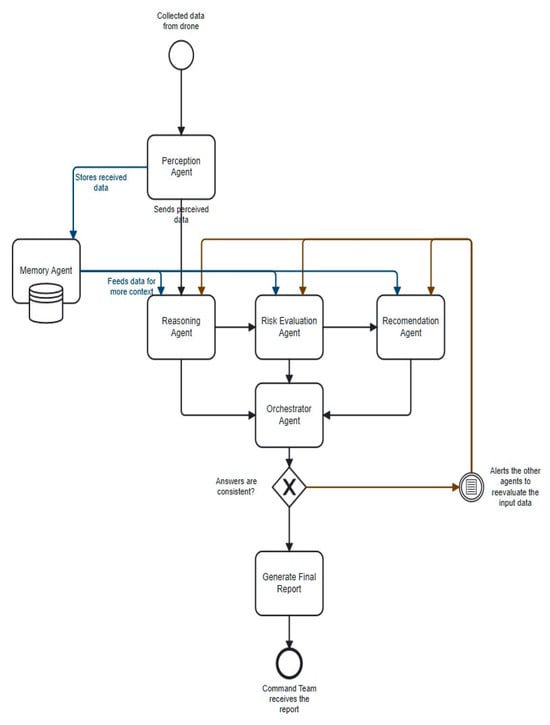

6.1. Components of the Proposed Cognitive–Agentic Architecture

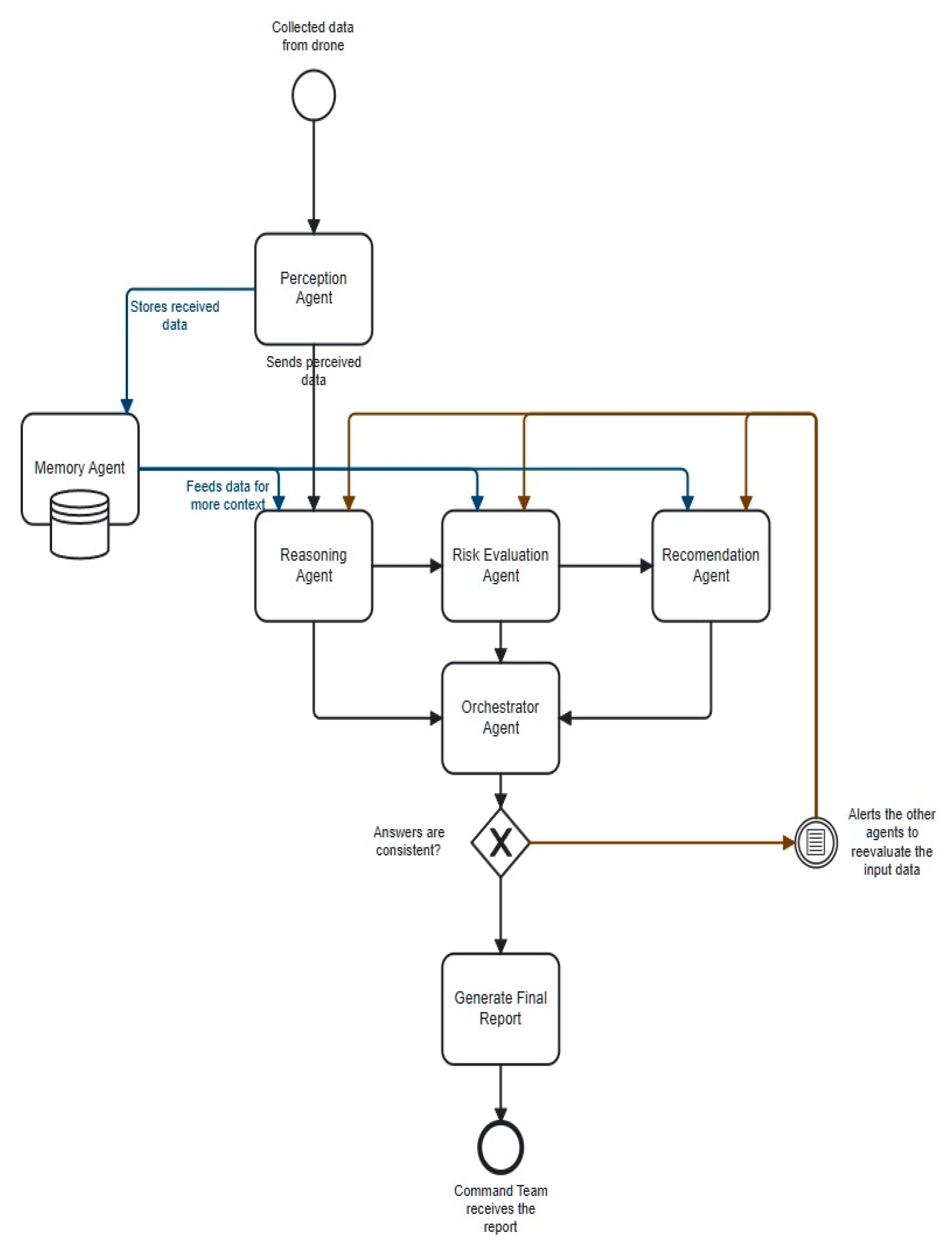

Figure 14 illustrates the system architecture, which is designed as a complete cognitive flow. It orchestrates the processing of information from data acquisition to decision consolidation for the command team. The system follows a modular, interconnected model in which each software agent plays a specialized role.

Figure 14.

The cognitive–agentic architecture for situational awareness and decision support.

The agents are implemented using Google’s Agent Development Kit (ADK) [43]. The cognitive core is the LLM Gemma 3n E4b, which enhances reasoning, contextual evaluation, and decision-making. Agents communicate via a Shared Session State, acting as a centralized working memory to ensure data consistency.

In the proposed cognitive architecture, the Perception Agent (PAg) serves as an intelligent interface between perceptual data sources and the rational core of the system. The PAg’s primary role is to aggregate heterogeneous, partially processed information, such as visual detections, GPS locations, associated activities, and drone telemetry data, and convert it into a standardized, coherent internal format.

This involves validating data integrity, normalizing format variations (e.g., GPS coordinates), and generating a consistent representation of the environment (e.g., JSON). This provides the necessary context for reasoning, planning, and decision-making agents.
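The validation and normalization role described above can be illustrated with a small sketch. The field names (`id`, `label`, `conf`, `lat`, `lon`) and the output schema are hypothetical; the actual package produced by the PAg is the one shown in the paper's figures, which this example does not attempt to reproduce.

```python
import json


def normalize_detection(raw):
    """Validate one raw detection and return a standardized dict (hypothetical schema)."""
    lat, lon = float(raw["lat"]), float(raw["lon"])  # accept strings or numbers
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError(f"GPS coordinates out of range: {lat}, {lon}")
    return {
        "entity_id": str(raw["id"]),
        "category": raw["label"],
        "confidence": round(float(raw["conf"]), 3),
        "gps": {"lat": round(lat, 6), "lon": round(lon, 6)},
    }


# Mixed input types stand in for format variations between data sources.
raw = {"id": 1, "label": "sit", "conf": "0.91", "lat": "45.772398", "lon": 22.144081}
packet = json.dumps(normalize_detection(raw), sort_keys=True)
print(packet)
```

Rejecting out-of-range coordinates at this stage means that downstream agents can rely on every published record being well-formed, rather than each repeating the same checks.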

6.1.1. MAg—Memory Agent

The proposed cognitive–agentic architecture incorporates an advanced memory system modeled on human cognition. This system is structured into two components: volatile short-term (working) memory and persistent long-term memory.

Working memory is implemented via the LLM’s context window. This window acts as a temporary storage space where real-time data, such as YOLO detections and drone position, as well as the current mission status and recent decision-making steps, are actively processed. However, this memory is ephemeral, and information is lost once it leaves the context window.

To overcome this limitation, the Memory Agent (MAg) manages the system’s long-term memory. The MAg serves as a distributed knowledge base that persistently stores valuable information, such as geospatial maps, flight rules, mission history, and intervention protocols. An MCP server mediates communication between the agent and the database and handles the storage and retrieval of this information. The dynamic interaction between immediate perception (working memory) and accumulated knowledge (long-term memory) is fundamental to the system’s cognitive abilities.

The value of this dual-memory system is best illustrated by a practical example. During a surveillance mission, the drone detects a person (P1). This real-time information is stored in the agent’s working memory. To assess the context, the agent queries the MAg to determine whether the person’s location corresponds to a known hazardous area. The MAg responds that the location is a “Restricted Area.” Integrating this retrieved knowledge with the live data allows the agent to correctly identify the situation as dangerous and trigger an appropriate response, such as alerting the operator.
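The interaction in this example can be sketched as a lookup against stored zones. Everything below is a simplified stand-in: zones are modeled as latitude/longitude rectangles, the class and method names are hypothetical, and the MCP server and database of the real system are replaced by an in-memory list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    kind: str  # e.g. "Restricted Area"
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, lat, lon):
        """True when the point falls inside the rectangular zone."""
        return self.lat_min <= lat <= self.lat_max and self.lon_min <= lon <= self.lon_max


class LongTermMemory:
    """In-memory stand-in for the MAg knowledge base."""

    def __init__(self, zones):
        self._zones = list(zones)

    def zone_for(self, lat, lon):
        """Return the first stored zone containing the point, or None."""
        for zone in self._zones:
            if zone.contains(lat, lon):
                return zone
        return None


# Hypothetical zone and live detection.
memory = LongTermMemory([Zone("Zone B", "Restricted Area", 45.770, 45.775, 22.140, 22.150)])
working_memory = {"entity": "P1", "lat": 45.7724, "lon": 22.1441}
zone = memory.zone_for(working_memory["lat"], working_memory["lon"])
alert = zone is not None and zone.kind == "Restricted Area"
print(alert)
```

The live detection alone carries no notion of danger; the alert arises only once the position in working memory is joined with the zone definition held in long-term memory.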

6.1.2. RaAg—System Reasoning

The Reasoning Agent (RaAg) is the primary semantic abstraction component of the system. It is responsible for transforming raw sensory streams into structured, contextualized representations. It receives preprocessed inputs from the Perception Agent (PAg), including GPS coordinates, timestamps, and categories of detected entities. It then correlates these inputs with related knowledge from the Memory Agent (MAg), such as maps of risk areas and operational constraints.

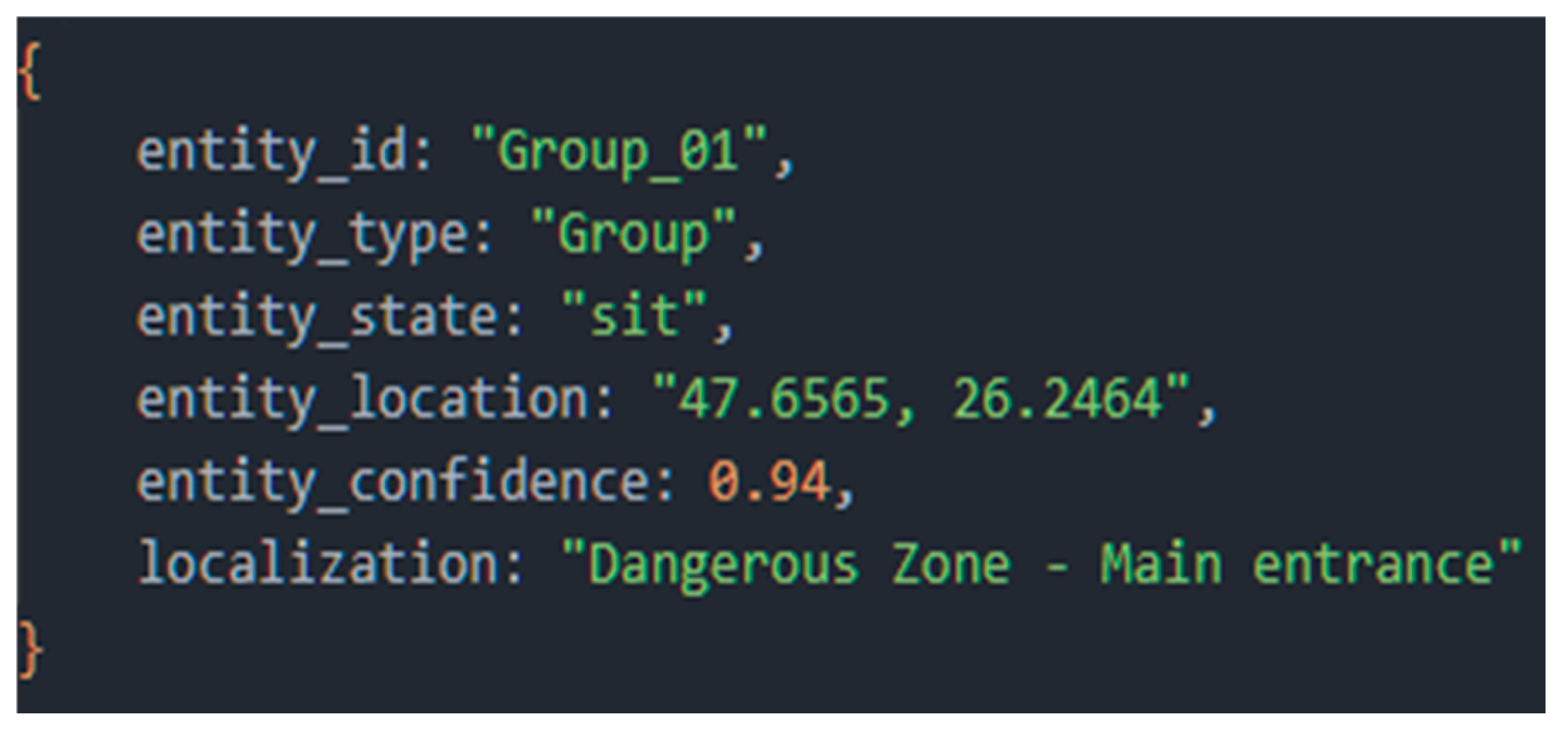

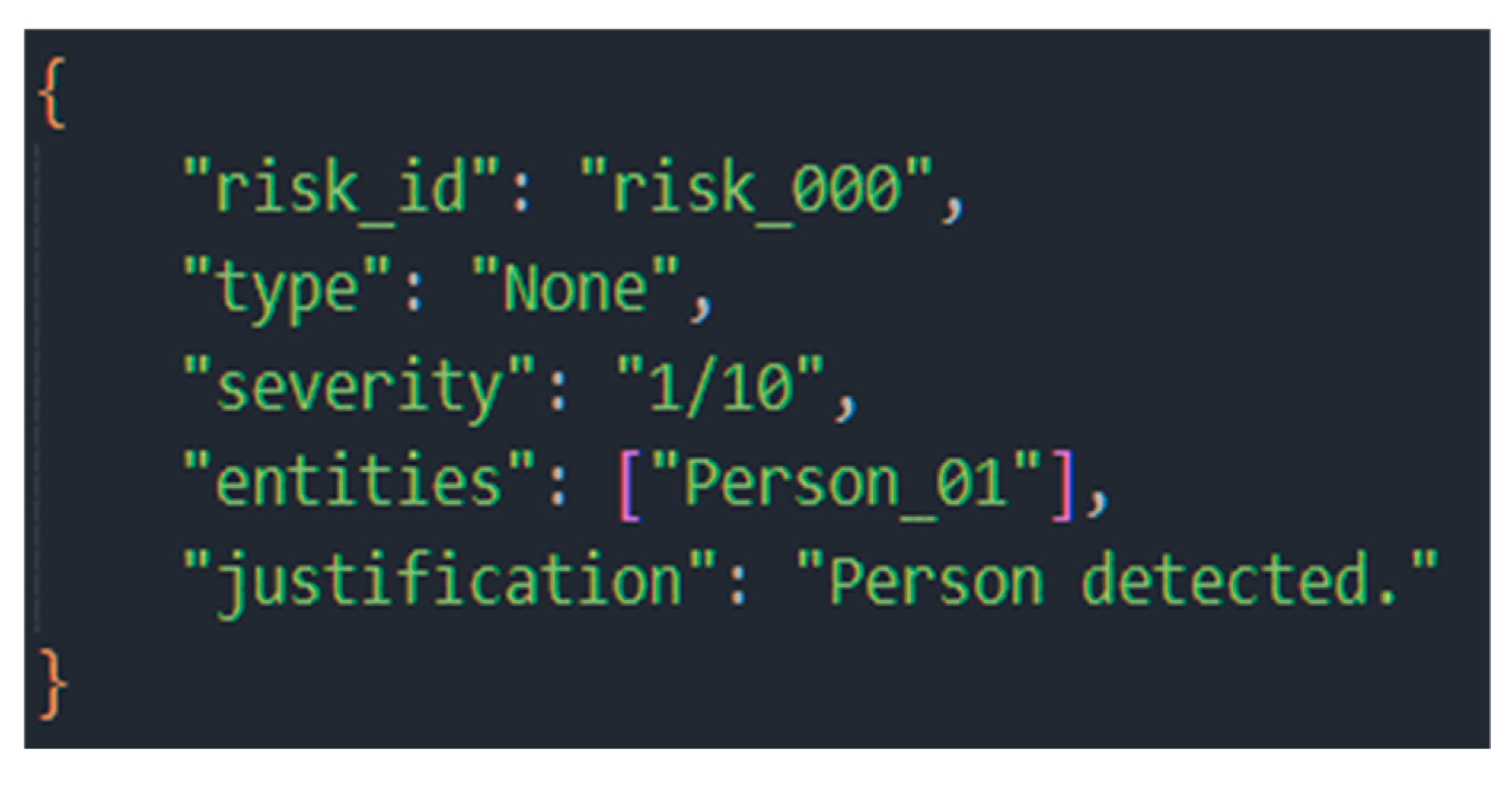

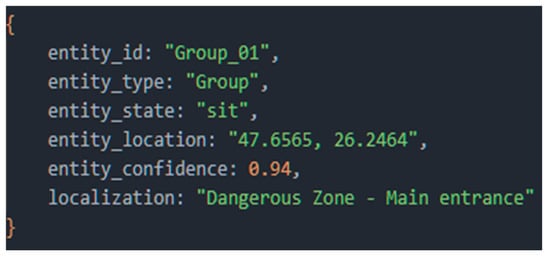

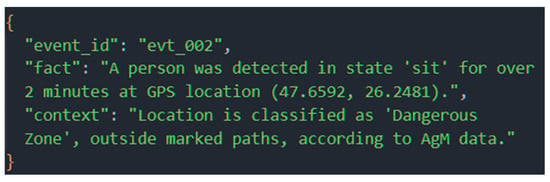

Through the fusion mechanism, RaAg generates contextualized facts. For example, reporting a person’s coordinates triggers a query to the MAg to determine whether the person is inside a predefined area. The result is the semantic structure shown in Figure 15.

Figure 15.

Semantic structure produced by the RaAg.

The Reasoning Agent is responsible for performing the initial layer of intelligent analysis, which involves transforming standardized perceptual data from the PAg into a contextualized factual report. Its primary function is to take a given set of perceptual inputs, such as an entity’s GPS location and category, and enrich them by fusing them with relevant information from long-term memory. The agent is instructed to actively query the MAg to answer questions such as “Does this location fall within a predefined risk area?” and “Are there any operational constraints associated with this entity?” The expected output is a structured representation that objectively describes the situation (e.g., “Person P1 is located inside Hazardous Zone B”) without judging the level of danger.

As outlined in Section 6.1.3, this objective representation, created without an initial risk assessment, forms the basis of the Risk Assessment Agent’s (RAsAg) decision-making process.

6.1.3. RAsAg—Risk Assessment Agent

The Risk Assessment Agent (RAsAg) receives the contextualized report from the RaAg and determines its operational significance in terms of danger. The RAsAg’s main role is to convert objective facts (e.g., “Person in Zone A”) into quantitative assessments and risk prioritizations.

To accomplish this, RAsAg employs a set of rules, heuristics, and risk scenarios stored in the MAg’s long-term memory. This knowledge base is organized as a set of production rules. Each rule is defined as a tuple that maps specific conditions from the RaAg’s factual report to a structured, quantifiable risk report.

A rule R is formally defined as a 4-element tuple, R = (Rule_ID, Condition, Action, Severity), where:

- Rule_ID: A unique identifier for traceability (e.g., R-MED-01).

- Condition (C): The prerequisites that must hold for the rule to fire.

- Action (A): The process of generating the structured risk report, specifying the Type, Severity, and Justification.

- Severity (S): A numerical value that dictates the order of execution in case multiple rules are triggered simultaneously. A higher value indicates a higher priority.

Some examples are presented in Table 3.

Table 3.

Example of formalized rules in the risk knowledge base.

Based on these rules, the agent analyzes the factual report and generates a risk report. This report contains:

- Type of risk

- Severity level: a numerical value, where 1 means low risk and 10 means high risk

- Entities involved: ID of the person or area affected or coordinates

- Justification: A brief explanation of the rules that led to the assessment

The Risk Assessment Agent is responsible for executing a critical evaluation function: translating the objective, contextualized facts provided by the RaAg into a quantifiable, prioritized assessment of danger. The agent takes the factual report as input and systematically compares it against a formalized set of rules, heuristics, and risk scenarios stored in long-term memory (MAg). The core instruction is to find the rule whose conditions best match the current situation and use the rule’s severity value to resolve any conflicts. The expected output is a structured risk report containing four fields: type of risk, numerical severity level, entities involved, and justification explaining the rule that triggered the assessment.
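The rule tuple and the severity-based conflict resolution can be sketched as follows. Only the tuple structure, the four report fields, and the identifier format "R-MED-01" come from the text; the rule contents, thresholds, severities, the second identifier, and the fact keys are hypothetical. In the described system the matching is performed by an LLM-driven agent, which this deterministic example does not model.

```python
from typing import Callable, NamedTuple, Optional


class Rule(NamedTuple):
    rule_id: str
    condition: Callable[[dict], bool]  # prerequisites evaluated on the factual report
    action: Callable[[dict], dict]     # builds type, entities, and justification
    severity: int                      # higher value wins when several rules match


def assess(facts: dict, rules: list) -> Optional[dict]:
    """Fire the highest-severity rule whose condition matches the factual report."""
    matching = [rule for rule in rules if rule.condition(facts)]
    if not matching:
        return None
    rule = max(matching, key=lambda r: r.severity)
    report = rule.action(facts)
    report.update({"severity": rule.severity, "rule_id": rule.rule_id})
    return report


# Hypothetical rule contents for illustration only.
RULES = [
    Rule("R-MED-01",
         lambda f: f["category"] == "sit" and f["immobile_seconds"] > 120,
         lambda f: {"type": "medical_emergency", "entities": [f["entity_id"]],
                    "justification": "Immobile person detected for over two minutes."},
         9),
    Rule("R-ZONE-01",
         lambda f: f["zone_kind"] == "hazardous",
         lambda f: {"type": "hazardous_area", "entities": [f["entity_id"]],
                    "justification": "Person located inside a hazardous zone."},
         6),
]

facts = {"entity_id": "P1", "category": "sit", "immobile_seconds": 150, "zone_kind": "hazardous"}
report = assess(facts, RULES)
print(report)
```

Both rules match these facts, and the severity value decides which one produces the report. Storing the rule identifier in the output keeps each assessment traceable to the rule that triggered it.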

The resulting report is sent to the Recommendation and Orchestrator agents for further action.

6.1.4. ReAg—Recommendation Agent

The Recommendation Agent (ReAg) receives the risk assessment report from RAsAg and consults a structured operational knowledge base that contains response protocols for various types of hazards. Based on the severity, location, and context of the risk, the agent generates one or more parameterized actions (e.g., alerting specific authorities or activating additional monitoring). The result is a set of actionable recommendations that provide the autonomous system or human operator with justified operational options for managing the identified situation.

6.1.5. Consolidate and Interpret (Orchestrator Agent)

The final and most critical stage of the cognitive cycle is managed by the Central Orchestrator Agent (OAg). The OAg acts as the system’s ultimate cognitive authority and final decision maker.

The process begins with the ingestion and synthesis of consolidated reports from all specialized sub-agents (RaAg, RAsAg, and ReAg), forming a complete and holistic understanding of the operational situation. Crucially, its role extends beyond simple aggregation. The OAg is tasked with performing a “meta-reasoning” validation using the LLM’s capabilities to scrutinize the logical consistency of incoming information against the master protocols stored in the Memory Agent (MAg). The OAg can detect subtle anomalies that the specialized agents might overlook due to their narrower focus. For example, if RaAg reports “a single motionless person” and RAsAg assigns a “low” risk level, the Orchestrator immediately recognizes the discrepancy.

If an inconsistency is detected, the agent does not proceed with a flawed decision. Instead, it initiates a self-correcting feedback loop and issues a new corrective prompt to the agent in question (e.g., “Inconsistency detected. Reevaluate the risk for entity P1…”).

The Orchestrator only executes its final directive after achieving a fully coherent and validated state, either initially or following a corrective cycle. Using the “make_decision” tool, it analyzes the complete synthesis and issues the most appropriate command, whether a critical alert for the human operator or autonomous activation of the delivery module. This robust, iterative process ensures the system reliably closes the perception-reasoning-action loop.
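The validate-then-correct cycle can be sketched with a stubbed risk agent. The consistency rule, the severity thresholds, the bounded number of correction rounds, and the fallback of escalating to the operator are all assumptions made for this illustration; in the described system the validation is performed by the LLM against the master protocols rather than by a fixed predicate.

```python
def is_consistent(fact_report, risk_report):
    """Hypothetical check: a motionless person must not be rated low risk."""
    return not (fact_report["motionless"] and risk_report["severity"] <= 3)


def orchestrate(fact_report, assess_risk, max_rounds=2):
    """Request a risk report and re-prompt the risk agent while it is inconsistent."""
    prompt = "Assess the risk for the reported entity."
    for round_no in range(max_rounds + 1):
        risk_report = assess_risk(fact_report, prompt)
        if is_consistent(fact_report, risk_report):
            decision = "alert_operator" if risk_report["severity"] >= 7 else "continue_mission"
            return {"decision": decision, "risk": risk_report, "corrections": round_no}
        # Corrective prompt modeled on the example quoted in the text.
        prompt = f"Inconsistency detected. Reevaluate the risk for entity {fact_report['entity_id']}."
    # Assumed fallback when the correction budget is exhausted.
    return {"decision": "escalate_to_operator", "risk": risk_report, "corrections": max_rounds}


def faulty_risk_agent(fact_report, prompt):
    """Stub standing in for RAsAg: wrong on the first call, correct once re-prompted."""
    severity = 9 if prompt.startswith("Inconsistency detected") else 1
    return {"severity": severity, "entities": [fact_report["entity_id"]]}


result = orchestrate({"entity_id": "P1", "motionless": True}, faulty_risk_agent)
print(result["decision"], result["corrections"])
```

Bounding the number of rounds is a design choice of this sketch: it guarantees that the loop terminates even if the queried agent keeps returning an inconsistent report.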

6.1.6. Inter-Agent Communication and Operational Flow

Inter-agent communication is the nervous system of the proposed cognitive–agentic architecture, essential to the system’s coherence and agility. The architecture implements a hybrid communication model combining passive data flow and active, intelligent control orchestrated by the LLM core. As shown in Figure 16, this model is based on three fundamental mechanisms.

Figure 16.

Communication flow between agents.

Shared Session State: This mechanism forms the backbone of the sequential analysis flow. It acts as a shared working memory, or “blackboard,” where specialized agents publish their results in a standardized format, such as JSON. The blackboard model is a classic AI architecture known for its ability to solve complex problems. PAg initiates the flow by writing the normalized perceptual data. Then, RaAg reads the data and publishes the contextualized factual report. RAsAg takes over next to add the risk assessment. Finally, ReAg adds recommendations for action, completing the state. This passive model decouples the agents and enables a clear, traceable data flow in which each agent contributes to the progressive enrichment of situational understanding.

Control through tool invocation (LLM-driven tool calling): With this active control mechanism, the Orchestrator Agent is an active conductor, not just a reader of the shared state. It treats each specialized agent (PAg, RaAg, RAsAg, MAg, and ReAg) as a “tool” that can be invoked via a specific API call. This approach is inspired by recent work demonstrating the ability of LLMs to reason and act using external tools [44,45,46,47]. When a new task enters the input queue, the Orchestrator, guided by the LLM, formulates an action plan. Executing this plan involves invoking these tools sequentially or in parallel to collect the evidence needed to make a decision.

Feedback and Reevaluation Loop: The most advanced mechanism in the architecture is the feedback loop, which provides robustness and self-correcting capabilities. After the Orchestrator Agent collects information by calling tools, it internally validates the information for consistency. If an anomaly or contradiction is detected, an iterative refinement cycle can be initiated. This process is also known as self-reflection or self-correction in intelligent systems (see Section 7.3). The agent uses the same tool-calling mechanism to send a re-evaluation request to the responsible agent, specifying the context of the problem. This iterative cycle serves as a validation mechanism, ensuring that the final output is based on a robust analysis, thereby preventing reliance on unprocessed initial findings.

These three mechanisms work together to transform the system from a simple data processing pipeline into a collaborative ecosystem that can reason, verify, and adapt dynamically to real-world complexity.
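The progressive enrichment of the shared state can be illustrated with plain Python functions that each publish one key to a common dictionary. This is a conceptual sketch only: it does not use the Agent Development Kit API, the key names and decision thresholds are hypothetical, and each agent is reduced to a trivial deterministic function.

```python
import json


def perception_agent(state, raw):
    """Publish normalized perceptual data to the shared state."""
    state["perception"] = {"entity_id": raw["id"], "category": raw["label"]}


def reasoning_agent(state):
    """Read the perception entry and publish a contextualized fact."""
    p = state["perception"]
    state["facts"] = f"Person {p['entity_id']} detected in state '{p['category']}'."


def risk_agent(state):
    """Add a risk assessment (hypothetical severities)."""
    state["risk"] = {"severity": 8 if state["perception"]["category"] == "sit" else 1}


def recommendation_agent(state):
    """Add a recommendation derived from the risk entry (hypothetical threshold)."""
    high = state["risk"]["severity"] >= 7
    state["recommendation"] = "alert_operator" if high else "continue_mission"


PIPELINE = [reasoning_agent, risk_agent, recommendation_agent]

state = {}  # the shared "blackboard"
perception_agent(state, {"id": "P1", "label": "sit"})
for agent in PIPELINE:
    agent(state)  # each agent reads earlier entries and adds its own
snapshot = json.dumps(state, indent=2)
print(snapshot)
```

Each function depends only on keys written by earlier stages, never on another function directly, which is the decoupling property attributed to the blackboard model. The final snapshot also serves as a trace of how the situational understanding was built up.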

7. Results

7.1. Validation Methodology

Empirical validation of the cognitive–agentic architecture was performed through a series of synthetic test scenarios designed to reflect realistic situations in Search and Rescue missions. The main objective was to systematically evaluate the core capabilities of the architecture, focusing on four essential capabilities:

- Information Integrity: The ability to maintain the consistency and accuracy of data throughout the cognitive cycle, from PAg to the final report.

- Temporal Coherence (Memory): The Memory Agent’s (MAg) ability to maintain a persistent state of the environment in order to avoid redundant alerts and adapt to evolving situations.

- Accuracy of Hazard Identification: The system’s ability to identify the most significant threat in a given context.

- Self-Correction Capability: The Orchestrator Agent’s ability to detect and rectify internal logical inconsistencies.

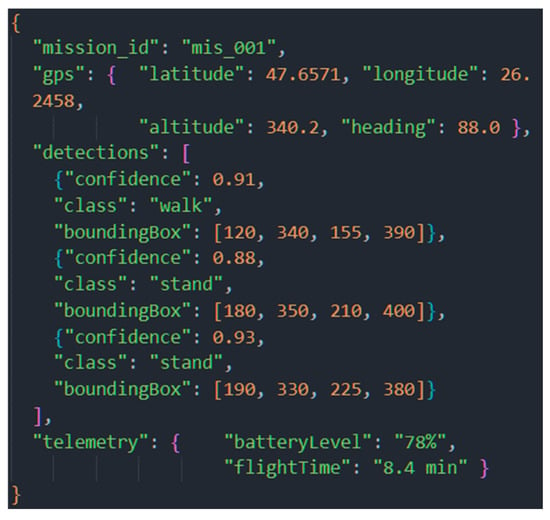

The input data for each scenario, comprising GPS location, camera detections, and drone telemetry, was formatted as a synthetic JSON object, simulating the raw output from the perception module of the ground control station.

7.2. First Scenario—Low Risk Situation

This scenario was designed to evaluate the system’s behavior in a situation often encountered in monitoring missions: detecting a group of tourists on a marked mountain trail with no signs of imminent danger.

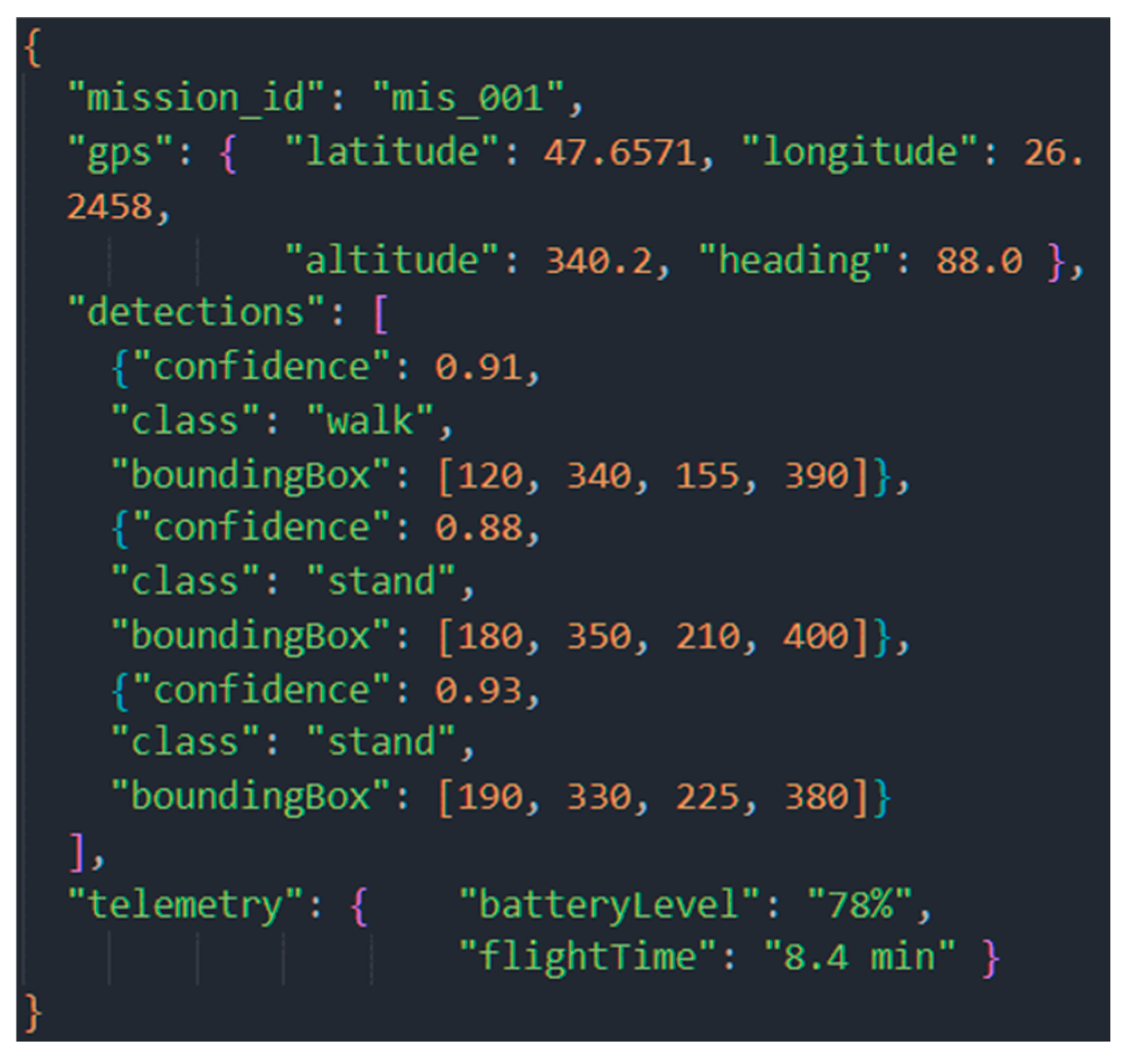

7.2.1. Initial Data

The data stream received by the Perception Agent (PAg) included GPS location information, visual detections from the YOLO model, and UAV telemetry data. The structure of the raw data is shown in Figure 17.

Figure 17.

Standardized data package generated by the Perception Agent (PAg). This structure serves as input for the entire cognitive pipeline.

PAg standardized and filtered the data, removing irrelevant fields (e.g., the empty telemetry error field). This was done to reduce information noise and prepare a coherent set of inputs for subsequent steps in the cognitive cycle.

7.2.2. Contextual Enrichment (RaAg)

After receiving the standardized dataset, the Reasoning Agent (RaAg) initiated the contextual enrichment process. First, RaAg retrieved the detections and stored them in temporary memory. Then, RaAg queried MAg, the long-term memory, which stores information about the terrain and past actions of the current mission.

The RaAg result included a specific finding: “A group of three people was detected: two standing and one walking.” It also provided the geospatial context of their location: “The location (47.6571, 26.2458) is on the T1 Mountain Trail, an area with normal tourist traffic according to the maps in MAg.”

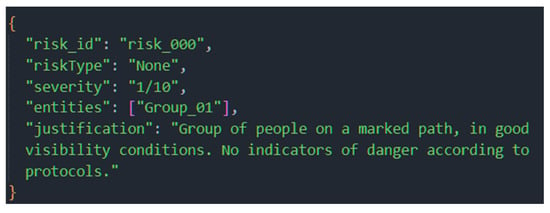

7.2.3. Risk Assessment (RAsAg)

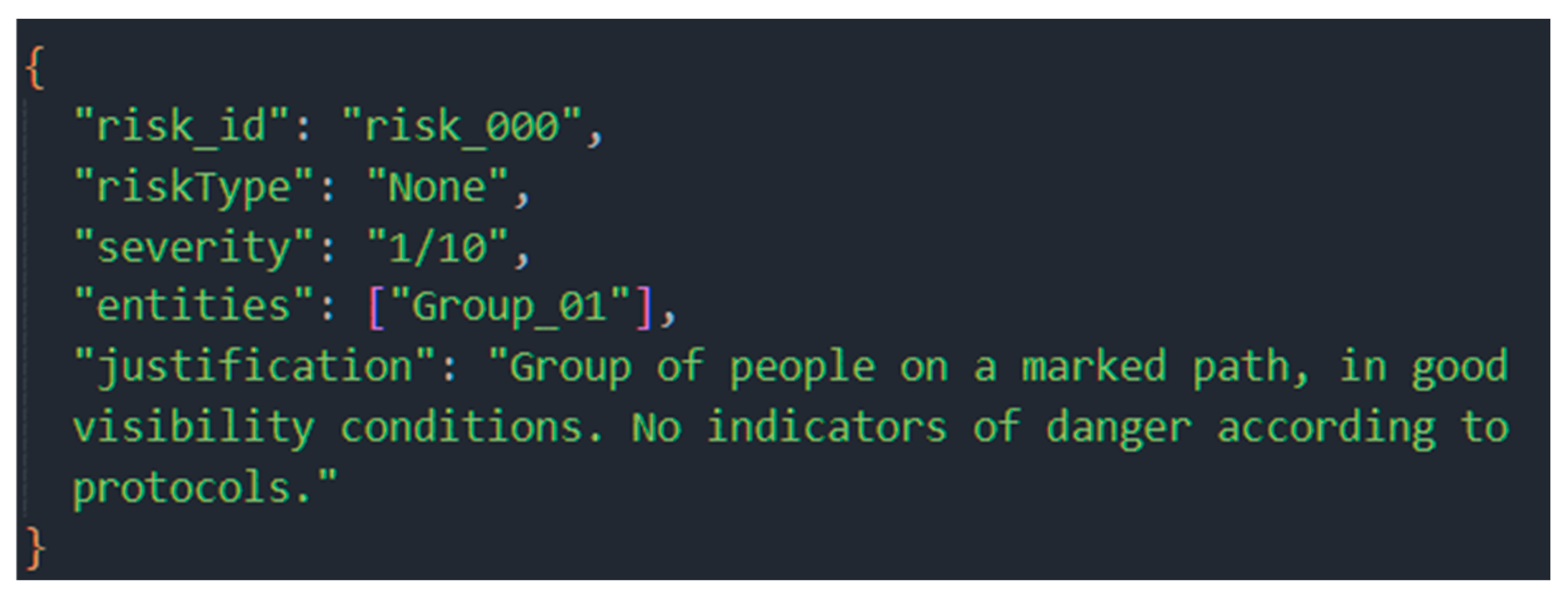

The Risk Assessment Agent (RAsAg) analyzed the detections and geospatial context according to the set of rules stored in the MAg database. The main factors considered were the group’s location on a marked route, its normal static and dynamic behavior, and the absence of danger signs in the telemetry data.

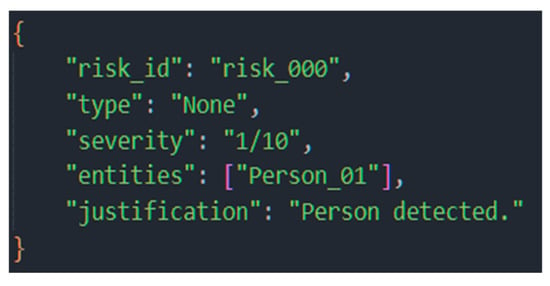

The result was a low-risk assessment, quantified as 1/10 on the severity scale. Figure 18 shows this result.

Figure 18.

Risk assessment report from the Risk Assessment Agent (RAsAg) for the low-risk scenario, showing a correct assessment and justification.

7.2.4. Determining the Response Protocol (ReAg)

Based on the assessment, the Recommendation Agent (ReAg) consulted the operational knowledge base and selected the protocol corresponding to severity 1/10, with risk ID “risk_000.” The final recommendation was to continue the mission without taking further action, as there was no immediate danger.

7.2.5. Final Validation and Display in GCS

The orchestrator verified the logical consistency between:

- context: “group of people on a marked path”;

- assessment: “low risk (1/10)”;

- selected response protocol.

In the absence of any objections, the recommendation was approved. In the GCS interface:

- The pins corresponding to individuals were marked in green;

- The operator received an informative, non-intrusive notification.
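The validation and display logic above can be sketched as a function that collects objections and a second one that maps an approved result to a pin color. The hazard flags, the `LOW_RISK_MAX` threshold, and the dictionary layout are assumptions for illustration; in the described system this check is performed by the LLM orchestrator rather than by fixed rules.

```python
LOW_RISK_MAX = 3  # hypothetical cut-off between "low" and "elevated" scores


def find_objections(context: dict, assessment: dict, protocol: dict) -> list[str]:
    """Return the inconsistencies between context, assessment, and protocol."""
    objections = []
    hazards = [flag for flag in ("off_trail", "prolonged_immobility", "telemetry_alert")
               if context.get(flag)]
    is_low = assessment["score"] <= LOW_RISK_MAX
    if hazards and is_low:
        objections.append("low risk score contradicts: " + ", ".join(hazards))
    if not hazards and not is_low:
        objections.append("elevated risk score has no supporting hazard in the context")
    if protocol["risk_id"] != assessment["risk_id"]:
        objections.append("protocol does not match the assessed risk ID")
    return objections


def gcs_update(objections: list[str], assessment: dict) -> dict:
    """Approve only when there are no objections, then choose the pin color."""
    if objections:
        return {"approved": False, "objections": objections}
    pin = "green" if assessment["score"] <= LOW_RISK_MAX else "red"
    return {"approved": True, "pin": pin}


context = {"off_trail": False, "prolonged_immobility": False, "telemetry_alert": False}
assessment = {"score": 1, "risk_id": "risk_000"}
protocol = {"risk_id": "risk_000", "action": "continue mission"}
result = gcs_update(find_objections(context, assessment, protocol), assessment)
```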

7.3. Second Scenario—High Risk and Self-Correction

This scenario evaluated the system’s performance in critical situations by testing the accuracy of its hazard identification and self-correction capabilities.

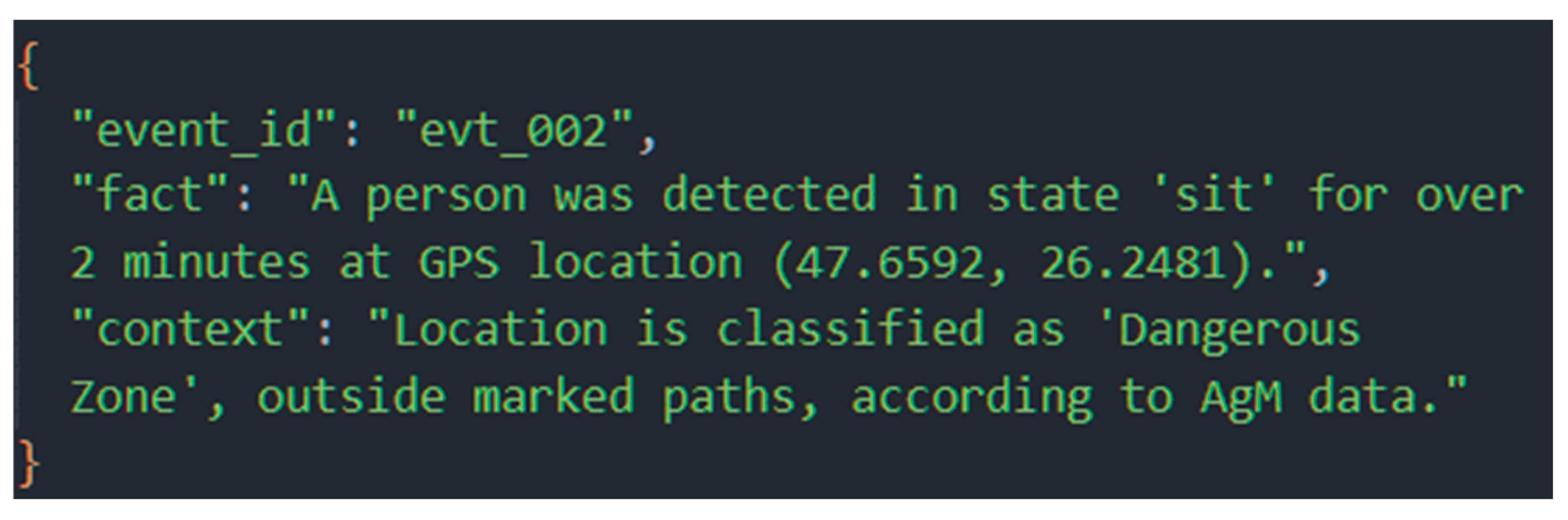

7.3.1. Description of the Initial Situation

During the initialization phase, the detection of a person in the “sitting” class was simulated. This class is significant because it covers individuals who may be lying on the ground or unable to move. The RaAg module’s contextualization immediately raised the alert level, transforming a simple detection into a potential medical emergency scenario. The memory agent (MAg) reported that the same entity had previously been detected at approximately the same coordinates, and that the interval between detections had exceeded two minutes. This indicated possible prolonged immobility. According to MAg data, the area in question was classified as dangerous because it was located outside the marked routes. The data provided by RaAg corresponding to this situation is presented in Figure 19.

Figure 19.

Factual report from RaAg for the high-risk scenario, correctly contextualizing the detection as a maximum alert event based on temporal and spatial data from MAg.

7.3.2. Deliberate Error and System Response

To test the self-correction mechanism, an error was intentionally introduced into the RAsAg module, generating an erroneous risk assessment (Figure 20) that directly contradicted the input data.

Figure 20.

The risk assessment report, in which the assessment error was introduced for testing purposes.

This assessment indicated a low risk (1/10), despite the fact that the input data described a static subject in a hazardous area for an extended period of time.

7.3.3. Self-Correcting Mechanism

The Orchestrator’s supervision function immediately detected a direct conflict between the factual data (a static entity in a dangerous area for more than two minutes) and the risk assessment (a minimum score with no associated risk type).

According to its architecture, the Orchestrator did not propagate the error. Instead, it treated RAsAg as a tool that needed to be reinvoked with additional constraints. The message sent to RAsAg was: “Inconsistency detected. Reevaluate the risk for Entity Person_01 given the ‘sit’ status for over two minutes. Apply the medical emergency protocol.”

Due to the new directive, RAsAg provided an accurate assessment in accordance with the high-risk rules, which the Orchestrator validated as consistent.
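The supervision loop described in this subsection can be sketched as follows. The agent is modeled as a plain function that receives an optional directive, and the injected fault is simulated by a stub; the function names, the retry limit, the consistency rule, and the corrected score of 9 are all hypothetical, as the real agents are LLM calls whose outputs are not reproduced here.

```python
from typing import Callable, Optional

Assess = Callable[[dict, Optional[str]], dict]
Check = Callable[[dict, dict], bool]


def supervise(facts: dict, assess: Assess, is_consistent: Check,
              max_retries: int = 2) -> tuple[dict, int]:
    """Re-invoke the assessor with a corrective directive until it is consistent."""
    directive = None
    for retries in range(max_retries + 1):
        assessment = assess(facts, directive)
        if is_consistent(facts, assessment):
            return assessment, retries
        # Do not propagate the flawed output; constrain the next attempt instead.
        directive = (
            f"Inconsistency detected. Reevaluate the risk for Entity {facts['entity']} "
            f"given the '{facts['status']}' status for over two minutes. "
            "Apply the medical emergency protocol."
        )
    raise RuntimeError("assessment still inconsistent; escalate to the operator")


def faulty_rasag(facts: dict, directive: Optional[str]) -> dict:
    """Stub agent: wrong on the first call, corrected once a directive is given."""
    if directive is None:
        return {"score": 1, "risk_type": None}  # deliberately injected error
    return {"score": 9, "risk_type": "medical_emergency"}  # hypothetical corrected output


def is_consistent(facts: dict, assessment: dict) -> bool:
    """A static entity in a dangerous area must not receive a low score."""
    hazardous = facts["off_trail"] and facts["immobile_s"] > 120
    return not (hazardous and assessment["score"] <= 3)


facts = {"entity": "Person_01", "status": "sit", "off_trail": True, "immobile_s": 150}
assessment, retries = supervise(facts, faulty_rasag, is_consistent)
```

Bounding the number of retries is a design choice of this sketch: if the agent never produces a consistent result, the loop raises instead of looping indefinitely, so the case can be handed to the operator.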

7.3.4. Final Result

After validation, the high-risk alert was propagated to the GCS. The visible actions were:

- marking the victim on the interactive map with a red pin;

- displaying the detailed report in a prominent window;

- enabling immediate intervention by the operator.

The architecture’s ability to detect and automatically correct internal errors before they impact the decision chain is thus confirmed by this scenario. This capability ensures the reliability and robustness of risk assessment in critical situations.

7.4. Third Scenario—Demonstration of Adaptation

This scenario was designed to evaluate the long-term memory capacity and contextual adaptation ability of the MAg (Memory Agent) and Orchestrator components. Unlike previous tests, this one focused on temporal consistency and avoiding redundant alerts, both of which are key to reducing operator fatigue and increasing operational response efficiency.

Following Scenario 1, the MAg internal database contained an active event (evt_001), which was associated with the detection of a group of people in an area of interest. Fifteen minutes later, the PAg (Perception Agent) component transmitted a new detection: five people were identified at the same GPS coordinates as in the previous event.

The Orchestrator protocol stipulates that, before generating a new event, a query must be made in MAg to determine whether the reported situation represents:

- An update to an existing event

- A completely new situation

In this case, the check identified a direct spatial-temporal correlation with evt_001. Based on this analysis, the Orchestrator avoided creating a redundant event and instead initiated a procedure to update the existing record.

The instructions sent to MAg were explicit: “Update event EVT_001 with the new data received from PAg while maintaining the complete history of observations.”

This action resulted in the replacement of outdated information with the most recent data, while retaining the previous metadata for further analysis.
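The update-or-create decision can be sketched as a spatial-temporal match against stored events. The distance and time thresholds (25 m, 30 min), the event layout, and the ID scheme are assumptions chosen for the example; the haversine formula is the standard great-circle distance.

```python
import math


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two WGS84 points."""
    radius_m = 6_371_000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * radius_m * math.asin(math.sqrt(a))


def upsert_event(events: list[dict], obs: dict,
                 max_dist_m: float = 25.0, max_gap_s: float = 1800.0) -> tuple[str, bool]:
    """Update a matching event or create a new one. Returns (event_id, created)."""
    for event in events:
        last = event["current"]
        near = haversine_m(last["lat"], last["lon"], obs["lat"], obs["lon"]) <= max_dist_m
        recent = obs["t"] - last["t"] <= max_gap_s
        if near and recent:
            event["history"].append(obs)  # keep the complete observation history
            event["current"] = obs        # latest data replaces the outdated snapshot
            return event["id"], False
    new_id = f"evt_{len(events) + 1:03d}"
    events.append({"id": new_id, "current": obs, "history": [obs]})
    return new_id, True


first = {"t": 0, "lat": 47.6571, "lon": 26.2458, "people": 3}
events = [{"id": "evt_001", "current": first, "history": [first]}]

# Fifteen minutes later: five people at the same coordinates.
update = upsert_event(events, {"t": 900, "lat": 47.6571, "lon": 26.2458, "people": 5})
```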

The test demonstrated the transition of the system from a purely reactive model to a proactive and contextually adaptive one, capable of constructing a persistent representation of the environment. The benefits of this approach include:

- Reducing operator cognitive fatigue by limiting unnecessary alerts;

- Increasing operational accuracy by consolidating information;

- Improving information continuity in long-term missions.

7.5. Cognitive Performance Analysis

In addition to qualitative validation, key performance indicators (KPIs) were measured. The results, summarized in Table 4, quantify the efficiency and responsiveness of the architecture.

Table 4.

Summary of Cognitive Performance Metrics in Test Scenarios.

Analysis of these metrics reveals several key observations. As expected, decision time increased in the self-correction scenario, reflecting the computational cost of the additional validation cycle. We believe this ~4 s increase is a justified trade-off for the significant improvement in reliability and prevention of critical errors.

7.6. Computational Cost and Reliability in Time-Critical Operations

Integrating a large language model (LLM) into time-critical search and rescue (SAR) operations raises valid concerns about latency, computational requirements, and the reliability of AI-driven reasoning. This section addresses these aspects directly, providing context for the performance of our proof-of-concept system and outlining the architectural choices made to ensure operational viability.

A. Computational Cost and Architectural Choices

One of the key design decisions was ensuring the system could remain practical and deployable without relying on extensive cloud computing infrastructure. To this end, we selected the Gemma 3n E4b LLM, which is optimized for efficient execution on edge and low-resource devices.

Although this model has 8 billion parameters, its architecture enables it to operate with a memory footprint similar to that of a traditional 4B model, requiring only 3–4 GB of VRAM. This low computational cost enables hosting the entire cognitive architecture, including all agents and the LLM orchestrator, on a single, high-end laptop or portable GCS in the field. This makes the system self-contained and resilient to the loss of internet connectivity, which is a common issue in disaster zones.

B. Reliability and Mitigation of Hallucinations

In a SAR context, the most critical concern for an LLM is the risk of “hallucinations,” or incorrect reasoning, which could have dangerous consequences. To ensure reliability, our architecture incorporates two primary, well-established mechanisms from AI safety research.

The first mechanism is grounding in a verifiable knowledge base. The Orchestrator Agent (OAg) does not operate in isolation; it validates every critical piece of information and logical step against the structured, factual data stored in the Memory Agent (MAg). The MAg acts as the system’s verifiable “source of truth.” This process anchors the LLM’s reasoning in established facts, such as operational protocols and risk area maps. It prevents the LLM from generating unverified or fabricated claims and ensures factual accuracy.

The second mechanism is a multi-agent review and self-correction loop. As demonstrated in Scenario 2, this loop is a practical implementation of the well-established techniques of multi-agent review and self-reflection for mitigating AI errors. The Orchestrator Agent acts as a supervisor that scrutinizes the outputs of specialized sub-agents. When the Orchestrator Agent detects a logical inconsistency, such as a low-risk assessment for a high-risk situation, it does not accept the flawed output. Instead, it initiates a corrective loop that forces the responsible agent to reevaluate its conclusion with additional constraints. This internal validation process ensures that logical errors are identified and corrected before they impact the final decision, thereby enhancing the system’s overall reliability.

C. Latency Analysis and Management

To ensure operational viability and reproducibility in real-time SAR scenarios, latency is managed through a pipelined processing architecture. While a complete reasoning thread takes 11–18 s, the system can initiate a new analysis cycle on incoming data streams at a high frequency (e.g., 1 Hz). Situational awareness is therefore refreshed at a high rate: each fully reasoned output on a dynamic event lags its input by the duration of one reasoning thread, while new information continues to be processed in the meantime. This delay applies only to the complex analysis; YOLO detections are shown in real time.
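The relationship between start rate and per-thread latency can be made concrete with Little's law (average number in flight = arrival rate × time in system). The sketch below only performs this arithmetic for the figures quoted above; it assumes that reasoning threads really do run concurrently without slowing each other down, which would have to be verified on the target hardware.

```python
def cycles_in_flight(start_rate_hz: float, latency_s: float) -> float:
    """Little's law: average number of reasoning threads running at once."""
    return start_rate_hz * latency_s


def first_output_s(latency_s: float) -> float:
    """With pipelining, the first reasoned output appears after one full latency."""
    return latency_s


# Figures from the text: a new cycle every second, 11-18 s per reasoning thread.
best_case = cycles_in_flight(1.0, 11.0)
worst_case = cycles_in_flight(1.0, 18.0)
```

Under these assumptions, sustaining a 1 Hz start rate means between 11 and 18 reasoning threads are active at any moment, which is the concurrency the host machine would need to support.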

8. Discussion

It is important to note that the current validation of the system has focused on demonstrating its technical feasibility and evaluating the performance of its components and algorithms in a controlled laboratory environment. The system has not yet been tested in real disaster scenarios or with the direct involvement of emergency response personnel. Therefore, the system’s practical usefulness, operational robustness, and acceptance by end users require rigorous validation in the field. A crucial direction for future research is extensive testing, in collaboration with emergency responders, in environments that simulate real disaster conditions. The aim is to optimize the system for operational deployment and ensure its practical relevance.

Our first goal was to validate the cognitive architecture in a controlled setting. To transition this prototype into a robust solution, we plan a dual approach: augmenting the training dataset with images from challenging conditions and integrating an infrared camera to ensure victim detection regardless of visibility.

Our results demonstrate the feasibility of using a multi-agent cognitive architecture orchestrated by an LLM to transform a drone from a simple sensor into a proactive partner in search and rescue missions. We demonstrated the system’s ability to consume multimodal information, analyze context and potential dangers, maintain temporal persistence of information, and self-correct when erroneous elements appeared.

Unlike previous approaches that focused on isolated tasks such as detection optimization or autonomous flight, our system takes a practical, holistic approach. By integrating a cognitive–agentic system, we have overcome the limitations of traditional systems that only provide raw or semi-processed data streams without well-defined context. Our system goes beyond simple detection. It assesses the status of people on the ground based on external contexts and geospatial data. This is an essential capability that we validated in Scenario 2. This reasoning ability is a promising development for a variety of applications where artificial intelligence can collaborate in the field.

Beyond the immediate scope of search and rescue, the modularity and cognitive–agentic nature of our architecture provides significant generalizability, which broadens its potential impact across diverse critical domains. For example, in precision agriculture, the system could be adapted to automatically monitor crop health and implement targeted interventions. This would entail retraining the Perception Agent (PAg) using multispectral or hyperspectral imagery to detect subtle changes in plant health and specific pest signatures. Meanwhile, the Memory Agent (MAg) would be populated with agriculture-specific data, such as soil composition maps, crop growth cycles, and pest libraries. Then, the Reasoning Agent (RaAg) and the LLM Core would re-contextualize their objectives from human risk assessment to analyzing crop yield threats and recommending precise interventions. Similarly, for environmental monitoring, and specifically for early wildfire detection and management, the PAg would be reconfigured to prioritize thermal sensing for heat anomalies. The MAg would store geographical data, fuel load maps, and real-time wind patterns. The RaAg and the LLM Core would then focus on fire dynamics, predicting spread patterns and assessing environmental risks. These concrete scenarios underscore the versatility of our framework by detailing the specific adaptation requirements for each agent and demonstrating its capacity to transform human–robot collaboration in a multitude of complex, real-world challenges.

Although validation was performed by injecting synthetic data to demonstrate the logical consistency of the cognitive architecture, this method does not account for real-world sensor noise, communication packet loss, or the visual complexity of a real disaster. Additionally, although the delivery module has been conceptually validated as part of our closed-loop system, its real-world reliability has yet to be quantified. We acknowledge that the current 3D-printed mechanism serves as a functional prototype and that its performance is subject to numerous environmental and physical constraints. For operational deployment, a thorough characterization of factors such as aerodynamic drift due to wind, drop altitude, and payload weight variation is essential. Future work will address these issues with a two-pronged validation strategy. First, we will develop a physics-based simulation to model the package’s trajectory and compute a probabilistic landing zone. Second, we will conduct controlled field experiments to measure the circular error probable (CEP) and build a reliable performance profile for the system.
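For the planned field experiments, the CEP can be estimated empirically as the median radial miss distance, that is, the radius of the circle around the target that contains half of the landings. The sketch below shows that calculation on made-up offsets; the numbers are illustrative only and are not measurements from this system.

```python
import math
import statistics


def circular_error_probable(offsets_m: list[tuple[float, float]]) -> float:
    """Empirical CEP: median distance from the target over all recorded drops.

    Each offset is (east, north) in meters, measured from the intended target.
    """
    radii = [math.hypot(east, north) for east, north in offsets_m]
    return statistics.median(radii)


# Illustrative landing offsets (meters), not experimental data.
drops = [(3.0, 4.0), (0.0, 1.0), (6.0, 8.0), (0.0, 2.0), (5.0, 12.0)]
cep_m = circular_error_probable(drops)
```

With only a handful of drops the median is a coarse estimate; a parametric fit (for example, assuming normally distributed errors) would be an alternative once more data are available.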