Gated Fusion Networks for Multi-Modal Violence Detection

Abstract

1. Introduction

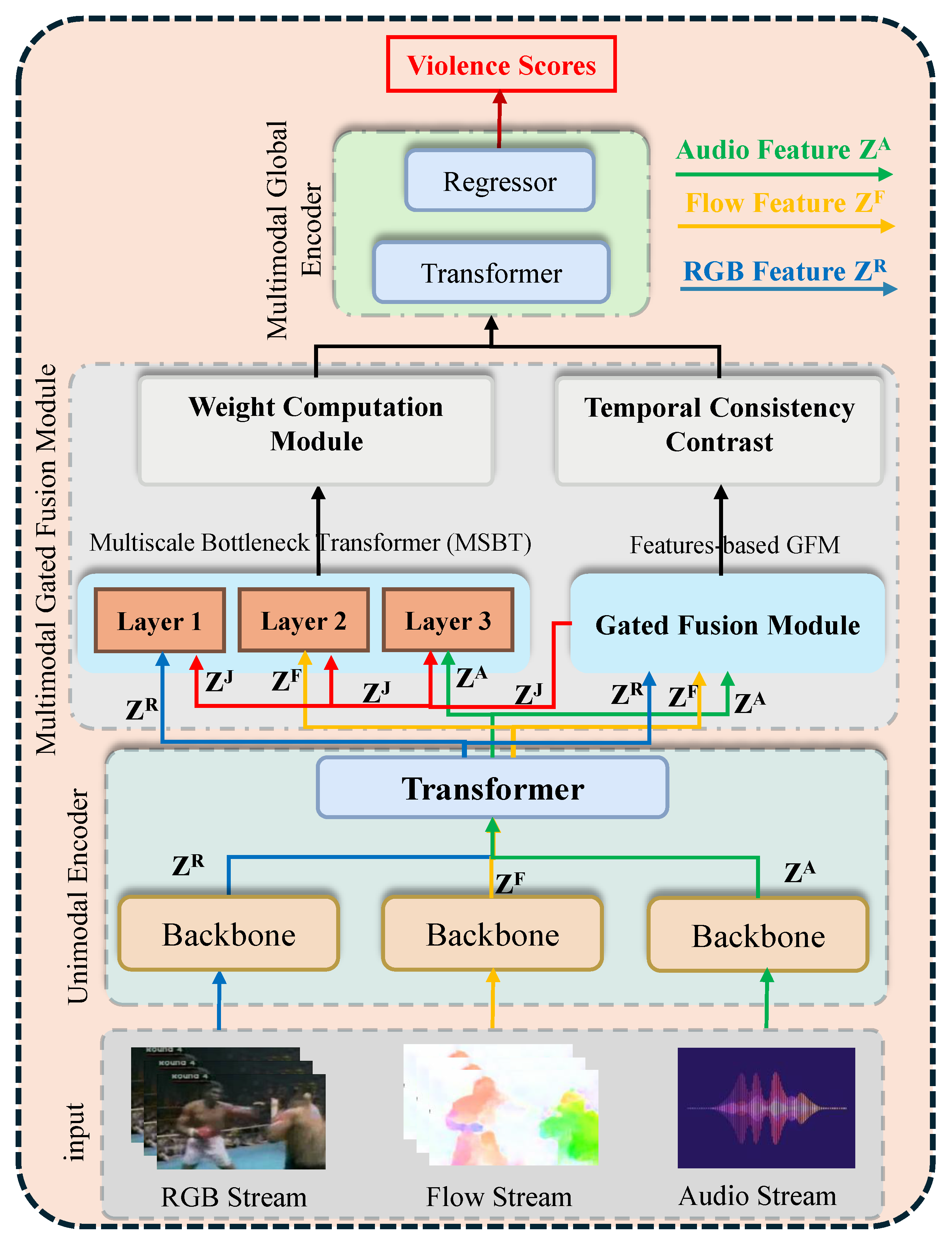

- Gated Fusion for Multi-Modal Integration: Our gated fusion technique successfully integrates multiple features, including visual, audio, and motion, to overcome the inherent modality imbalance and enhance the reliability and dynamics of multimodal data integration.

- Joint and Single Feature Passing through MSBT: To enhance the model’s ability to identify violence, we employ a multi-scale bottleneck transformer (MSBT) on individual modality features and jointly fused data. This allows the model to capture both modality-specific and holistic information.

- Temporal Consistency Contrast Loss: To ensure temporal consistency in the fused features, we introduce a unique TCC loss that addresses modality asynchrony by feature-level alignment of multimodal data. These notable enhancements in the framework demonstrate the effectiveness of our method in weakly supervised settings, where obtaining a labeled dataset can be particularly challenging.
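The gating idea in the first contribution can be illustrated with a minimal sketch. It assumes each modality has already been projected to a common feature dimension, and that each gate is a softmax-normalized scalar score computed from that modality's own features. The function `gated_fusion` and the parameters `gate_weights` and `gate_biases` are hypothetical names chosen for illustration; they are not taken from the paper, whose exact gating formulation may differ.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(features, gate_weights, gate_biases):
    """Fuse same-dimensional modality features with softmax gates.

    features, gate_weights: dict mapping modality name -> list of floats.
    gate_biases: dict mapping modality name -> float.
    Returns (fused_vector, gates). This is an assumed formulation.
    """
    names = sorted(features)
    # One scalar relevance score per modality (assumed linear scoring).
    scores = [
        sum(w * x for w, x in zip(gate_weights[n], features[n])) + gate_biases[n]
        for n in names
    ]
    gates = dict(zip(names, softmax(scores)))
    dim = len(features[names[0]])
    # Weighted sum: a modality with a larger gate contributes more.
    fused = [sum(gates[n] * features[n][i] for n in names) for i in range(dim)]
    return fused, gates


# Toy features for one video snippet (illustrative values only).
snippet = {"visual": [0.9, 0.1], "audio": [0.2, 0.7], "motion": [0.5, 0.5]}
weights = {"visual": [1.0, 0.0], "audio": [0.0, 1.0], "motion": [0.5, 0.5]}
biases = {"visual": 0.0, "audio": 0.0, "motion": 0.0}
fused_vector, gate_values = gated_fusion(snippet, weights, biases)
print(gate_values, fused_vector)
```

Because the gates sum to one, a modality with a weak score is down-weighted rather than discarded, which is one plausible way to soften modality imbalance.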

2. Related Work

2.1. Weakly Supervised Violence Detection

2.2. Transformers for Multi-Modal Visual, Audio, and Motion Data Fusion

- Methods of Multi-Modal Fusion: Most current fusion techniques, such as direct fusion and bilinear pooling-based fusion, are either too computationally intensive or unable to fully exploit cross-modal information [18]. Attention-based fusion avoids both issues, and the attention mechanism is now frequently employed in multi-modal fusion, often for mapping between modalities.

- Single-Modal Attention: Several studies have shown that integrating an attention module into tasks such as object identification and image classification can significantly enhance performance. To achieve adaptive fusion of several modalities while minimizing redundant noise, the authors of [11] created a fusion net that selects the k most representative feature maps. Zhou et al. [13] weighted the modalities so that the network concentrates on the more advantageous fields and successfully integrated the various modalities. To obtain additional cross-modal information, the authors of [19] weighted the feature maps from two-stream Siamese networks using a weight generation subnetwork that takes these feature maps as inputs. Residual cross-modal connections were then used to obtain the improved features, which were concatenated [3]. To achieve quality-aware fusion of several modalities, the authors of [20] created an instance adapter that uses two fully connected layers per modality to predict the modality weights.

- Cross-Modal Attention: In multimodal fusion, cross-modal mechanisms have become increasingly popular, as they enable efficient communication between modalities. Wei et al. [21] incorporated a cross-attention mechanism into their co-attention module to facilitate cross-modal interaction. Cao et al. [5] developed a cross-modal encoder to compress features from both modalities and then used multi-head attention to return the enlarged data to each modality. This two-stage propagation of cross-modal features reduces noise and improves audio-visual features. Similarly, Wu et al. [1] captured audio-visual interactions using a bimodal attention module to increase the precision of highlight detection. Jiang et al. [22] presented a multi-level cross-spatial attention module that maps the weighted features back to their original dimensions. They calculated cross-modal attention by passing each encoder’s data to a cross-modal fusion module. Two fusion attention strategies, merged attention and co-attention, were investigated in [23]. The co-attention module feeds text and visual data into independent transformer blocks and then uses cross-attention to facilitate cross-modal interaction, whereas the merged attention module concatenates these features and processes them through a single transformer block.
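The difference between merged attention and co-attention can be sketched with plain scaled dot-product attention. This is a simplified illustration under stated assumptions: it uses a single head, no learned query, key, or value projections, and none of the feed-forward or normalization layers of a full transformer block. The function names `merged_attention` and `co_attention` are hypothetical and are not taken from [23].

```python
import math


def attention(queries, keys, values):
    """Single-head scaled dot-product attention on lists of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Each output is a convex combination of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


def merged_attention(tokens_a, tokens_b):
    """Concatenate both modalities and apply self-attention over all tokens."""
    tokens = tokens_a + tokens_b
    return attention(tokens, tokens, tokens)


def co_attention(tokens_a, tokens_b):
    """Each modality queries the other one (cross-attention in both directions)."""
    a_attends_b = attention(tokens_a, tokens_b, tokens_b)
    b_attends_a = attention(tokens_b, tokens_a, tokens_a)
    return a_attends_b, b_attends_a


# Toy token sequences for two modalities (illustrative values only).
audio_tokens = [[1.0, 0.0], [0.0, 1.0]]
visual_tokens = [[0.5, 0.5], [1.0, 1.0], [0.0, 0.2]]
print(len(merged_attention(audio_tokens, visual_tokens)))
print([len(seq) for seq in co_attention(audio_tokens, visual_tokens)])
```

In merged attention every token can attend within and across modalities at once, so the output has one vector per token of the concatenated sequence. In co-attention each stream keeps its own length and only receives information from the other stream.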

2.3. Learning Graph Representation for Temporal Relationships

2.4. Multi-Modal Contrastive Learning

3. Methodology

- Visual signals in RGB frames, such as aggressive behaviors or physical altercations.

- Motion characteristics in optical flow, including abrupt or erratic movements.

- Auditory cues from audio spectrograms, like screams, collisions, or alarms.

- Reconstruct normal patterns across multiple modalities: The model learns to identify and reconstruct patterns characteristic of normal, non-violent behavior within each modality. By training only on normal samples, the framework develops a baseline understanding of typical features, helping it distinguish anomalies when presented with violent events.

- Ensure temporal consistency between consecutive frames: The framework is designed to maintain consistency over time, capturing the sequential nature of video data. This involves learning the natural flow of events between frames, such as smooth transitions in motion, visual coherence, and consistent auditory signals. Any disruption in this temporal structure may indicate violent behavior.

- Maximize mutual information across complementary modalities: By aligning information from different modalities (e.g., visual, motion, and audio), the model strengthens its ability to detect violence. Maximizing mutual information ensures that the features extracted from one modality, such as optical flow, complement those from other modalities, like RGB frames or audio spectrograms, thereby creating a holistic understanding of the event.
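One common way to encourage high mutual information between time-aligned modalities is an InfoNCE-style contrastive objective, whose minimization maximizes a lower bound on mutual information. The sketch below is an illustration of that general technique, not the paper's TCC loss. It assumes that features at the same time index form a positive pair and that all other time indices act as negatives. The function name `info_nce` and the `temperature` value are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def info_nce(features_x, features_y, temperature=0.1):
    """InfoNCE loss between two time-aligned feature sequences.

    features_x[t] and features_y[t] are treated as a positive pair;
    features_y[s] with s != t are treated as negatives for features_x[t].
    """
    steps = len(features_x)
    total = 0.0
    for t in range(steps):
        logits = [cosine(features_x[t], features_y[s]) / temperature for s in range(steps)]
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Negative log-probability of picking the aligned time step.
        total += log_denominator - logits[t]
    return total / steps


# Toy sequences: optical-flow and audio features over three time steps.
flow = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
audio_aligned = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
audio_shifted = audio_aligned[1:] + audio_aligned[:1]
print(info_nce(flow, audio_aligned), info_nce(flow, audio_shifted))
```

The loss is small when each time step of one modality is most similar to the same time step of the other, and grows when the sequences are misaligned, which is the behavior a temporal alignment objective is meant to reward.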

3.1. Techniques for Multi-Modal Fusion

3.2. Offline and Online Detection

3.2.1. Offline Detection

3.2.2. Online Detection

- The transition layer transforms the input features using a convolutional layer (Conv), average pooling (AvgPool), batch normalization (BN), and the Smooth Monotonic Unit (SMU) activation function.

- In the feature smoothing step, the features are refined using a 1D convolution with a kernel size of 1.

- For final classification, the system uses a causal 1D convolution with a kernel size of 5 to compute the online detection score. The cumulative detection score combines online and offline features using a weighted sum, in which a weighting parameter balances online and offline detection.

- Using the fused features of the multi-modal global encoder.

- Adding more detection targets to the TCC and MIL losses.
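The online scoring step described above can be sketched as follows. A causal 1D convolution with kernel size 5 lets the score at time t depend only on the current and the four preceding time steps, which is what makes online (streaming) detection possible. The convex combination in `combine_scores` and the parameter name `balance` are assumptions for illustration, since the exact form of the weighted sum is not given here.

```python
def causal_conv1d(sequence, kernel):
    """Causal 1D convolution: output[t] uses sequence[t-k+1 .. t] only.

    The input is left-padded with zeros so the output has the same length.
    kernel[-1] multiplies the current step, kernel[0] the oldest step.
    """
    size = len(kernel)
    padded = [0.0] * (size - 1) + list(sequence)
    return [
        sum(kernel[j] * padded[t + j] for j in range(size))
        for t in range(len(sequence))
    ]


def combine_scores(online, offline, balance):
    """Weighted sum of online and offline scores (assumed convex combination)."""
    return [balance * on + (1.0 - balance) * off for on, off in zip(online, offline)]


# Toy per-snippet features reduced to one channel (illustrative values only).
snippet_features = [0.1, 0.2, 0.9, 0.8, 0.3, 0.1]
kernel_size_5 = [0.1, 0.1, 0.2, 0.2, 0.4]  # hypothetical learned weights
online_scores = causal_conv1d(snippet_features, kernel_size_5)
offline_scores = [0.1, 0.3, 0.8, 0.9, 0.4, 0.1]  # hypothetical offline output
print(combine_scores(online_scores, offline_scores, balance=0.5))
```

Left padding with kernel size minus one zeros is the usual way to obtain causality without shortening the sequence; changing a future input never alters an earlier output.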

3.3. Uni-Modal Encoder

3.4. Multi-Modal Gated Fusion Module

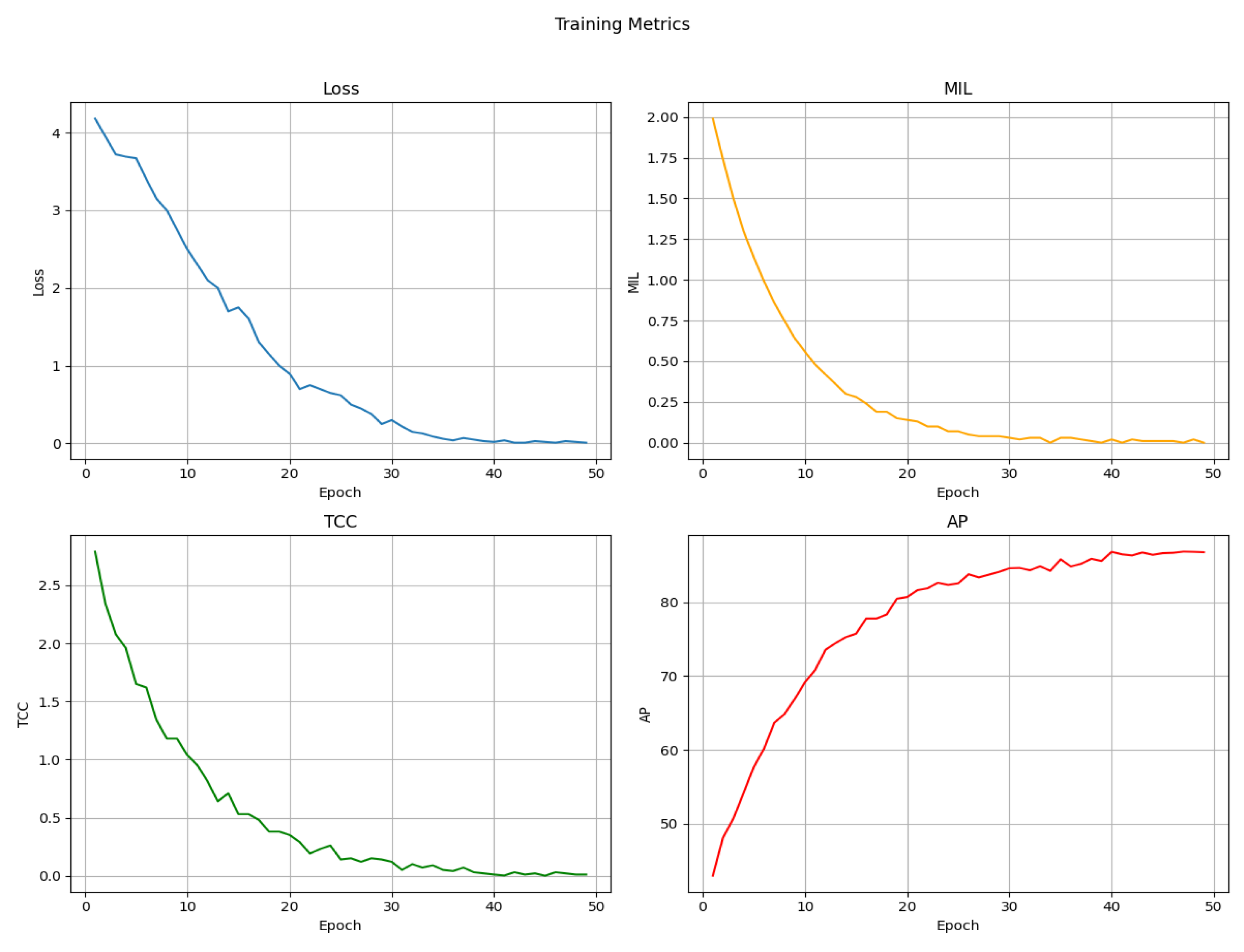

4. Experiments

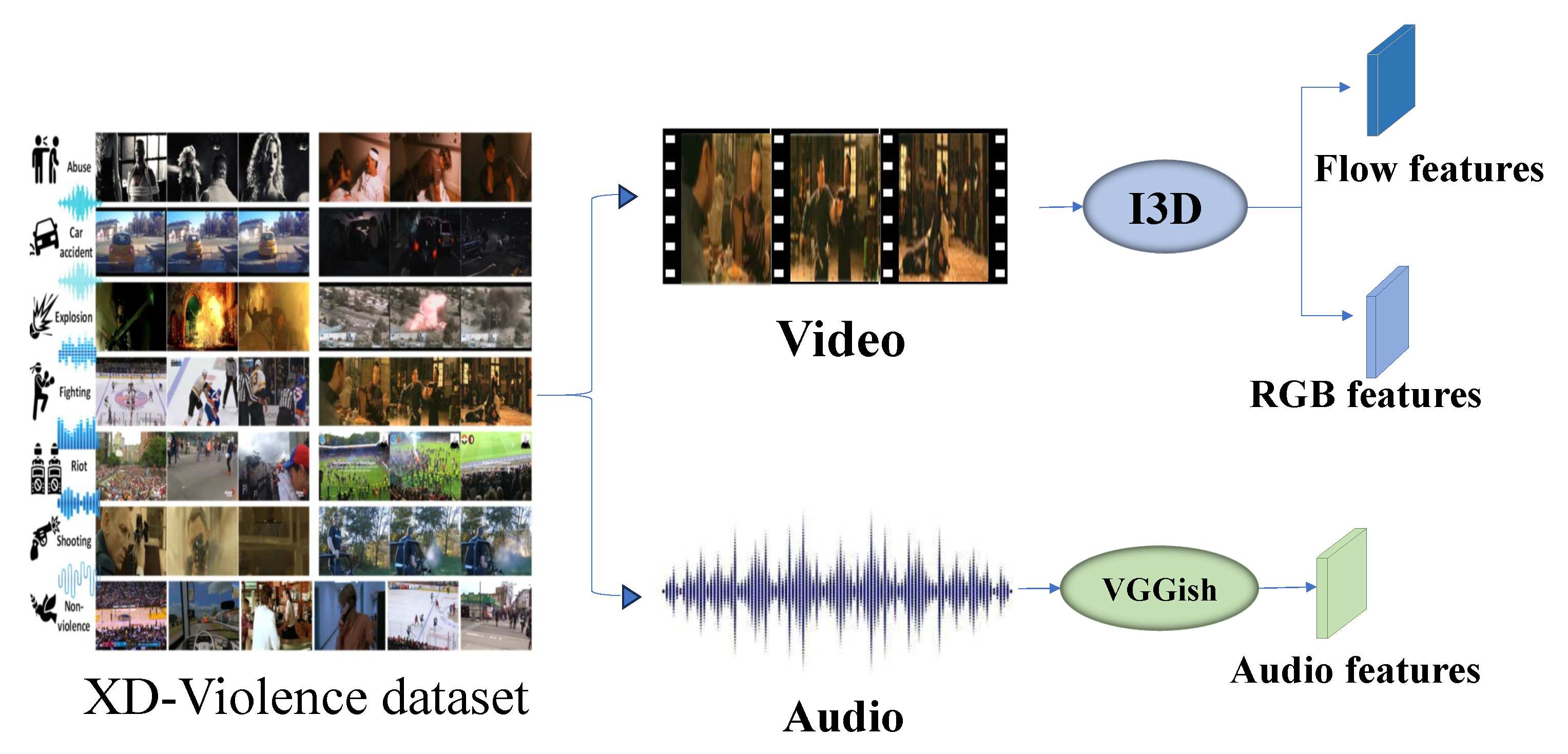

4.1. Datasets

- Flow Properties (Motion and Action Dynamics): By utilizing I3D’s optical flow processing, the movement and dynamics of actions within a scene are captured. This enables the model to identify violent movements, such as aggressive gestures, rapid motion, or sudden physical actions, which are key indicators of violence.

- RGB Features (Scene Characteristics and Visual Content): I3D also analyzes RGB frames from the video to understand the visual context of the scene. This includes recognizing key objects, spatial layouts, and environmental color patterns. Visual content helps identify violent interactions, such as people in conflict, the use of weapons, or other contextual visual cues.

- Audio Features (Sound Patterns and Auditory Cues): VGGish processes the audio track, extracting features from sound patterns. The model tracks crucial auditory signals that indicate violence, such as explosions, screams, loud impacts, or rapid movement sounds. These audio features provide additional context to the scene, capturing elements that may not be visible in the video.

4.2. Evaluation Standards

4.3. Implementation Details

4.4. Ablation Studies

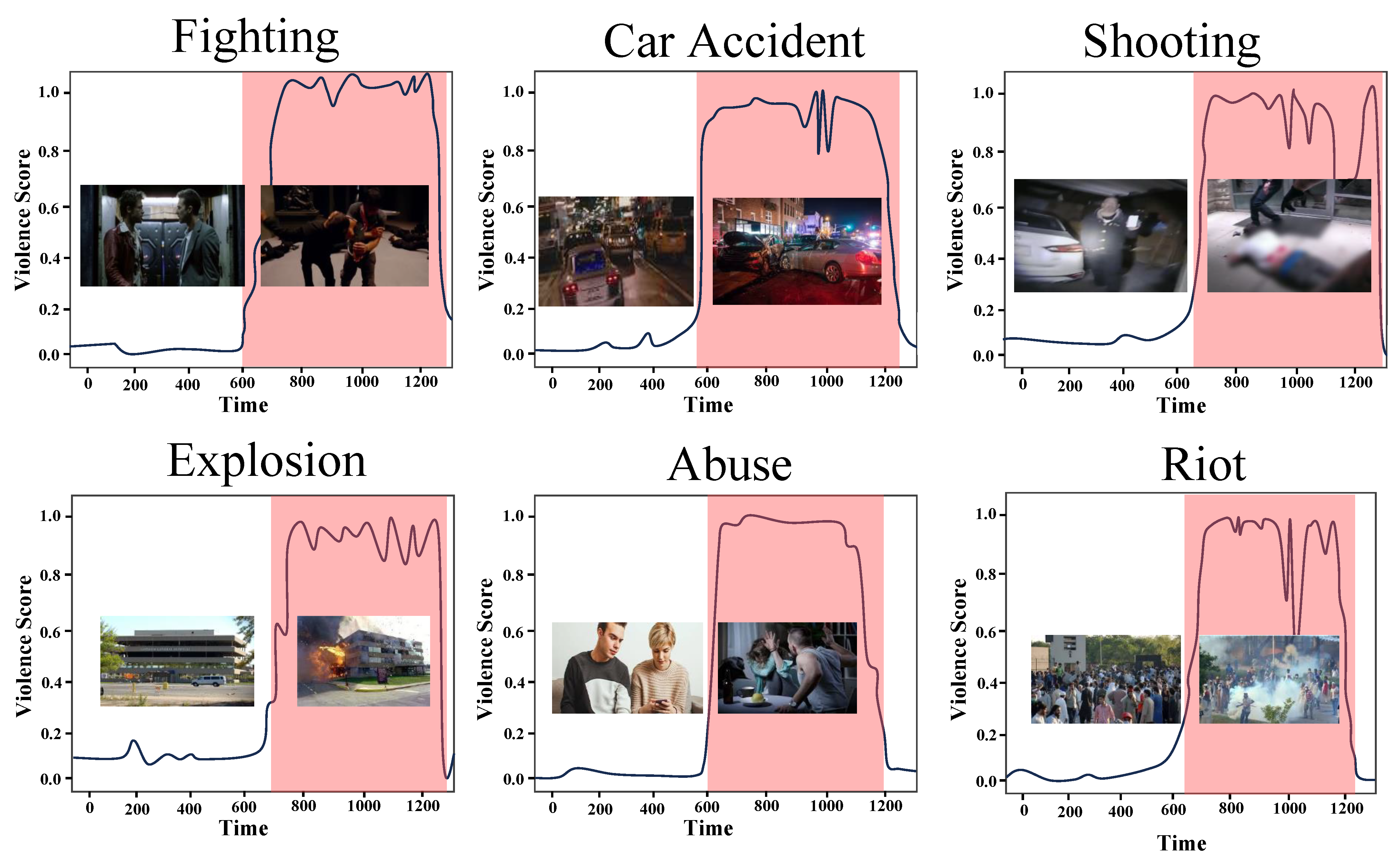

4.5. Qualitative Evaluation

4.6. Comparison with State of the Art

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wu, P.; Liu, J.; Shi, Y.; Sun, Y.; Shao, F.; Wu, Z.; Yang, Z. Not only look but also listen: Learning multimodal violence detection under weak supervision. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 322–339.

2. Wu, P.; Liu, X.; Liu, J. Weakly supervised audio-visual violence detection. IEEE Trans. Multimed. 2022, 25, 1674–1685.

3. Sun, S.; Gong, X. Multi-scale Bottleneck Transformer for Weakly Supervised Multimodal Violence Detection. arXiv 2024, arXiv:2405.05130.

4. Wu, Y.; Yang, H.; Tang, J.; Yu, Y. Multi-objective re-synchronizing of bus timetable: Model, complexity and solution. Transp. Res. Part C Emerg. Technol. 2016, 67, 149–168.

5. Cao, B.; Sun, Y.; Zhu, P.; Hu, Q. Multi-modal gated mixture of local-to-global experts for dynamic image fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 23555–23564.

6. Fan, Y.; Yu, Y.; Lu, W.; Han, Y. A Cross-modal and Redundancy-reduced Network for Weakly-Supervised Audio-Visual Violence Detection. In Proceedings of the 5th ACM International Conference on Multimedia in Asia, Tainan, Taiwan, 6–8 December 2023; pp. 1–7.

7. Li, J.; Wang, X.; Lv, G.; Zeng, Z. GraphMFT: A graph network-based multimodal fusion technique for emotion recognition in conversation. Neurocomputing 2023, 550, 126427.

8. Pang, W.F.; He, Q.H.; Hu, Y.J.; Li, Y.X. Violence detection in videos based on fusing visual and audio information. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–12 June 2021; pp. 2260–2264.

9. Choqueluque-Roman, D.; Camara-Chavez, G. Weakly supervised violence detection in surveillance video. Sensors 2022, 22, 4502.

10. Tian, Y.; Pang, G.; Chen, Y.; Singh, R.; Verjans, J.W.; Carneiro, G. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 4975–4986.

11. Li, S.; Liu, F.; Jiao, L. Self-training multi-sequence learning with transformer for weakly Supervised video anomaly detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 22 February–1 March 2022; Volume 36, pp. 1395–1403.

12. Pang, G.; Shen, C.; Jin, H.; van den Hengel, A. Deep weakly-supervised anomaly detection. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 1795–1807.

13. Zhou, X.; Peng, X.; Wen, H.; Luo, Y.; Yu, K.; Yang, P.; Wu, Z. Learning weakly supervised audio-visual violence detection in hyperbolic space. Image Vis. Comput. 2024, 151, 105286.

14. Zhou, H.; Yu, J.; Yang, W. Dual memory units with uncertainty regulation for weakly supervised video anomaly detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 3769–3777.

15. Cai, L.; Mao, X.; Zhou, Y.; Long, Z.; Wu, C.; Lan, M. A Survey on Temporal Knowledge Graph: Representation Learning and Applications. arXiv 2024, arXiv:2403.04782.

16. Karim, H.; Doshi, K.; Yilmaz, Y. Real-time weakly supervised video anomaly detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 6848–6856.

17. Yu, J.; Liu, J.; Cheng, Y.; Feng, R.; Zhang, Y. Modality-aware contrastive instance learning with self-distillation for weakly-supervised audio-visual violence detection. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 6278–6287.

18. Abduljaleel, I.Q.; Ali, I.H. Deep Learning and Fusion Mechanism-based Multimodal Fake News Detection Methodologies: A Review. Eng. Technol. Appl. Sci. Res. 2024, 14, 15665–15675.

19. Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

20. Lu, L.; Dong, M.; Wang, S.B.; Zhang, L.; Ng, C.H.; Ungvari, G.S.; Li, J.; Xiang, Y.T. Prevalence of workplace violence against health-care professionals in China: A comprehensive meta-analysis of observational surveys. Trauma Violence Abus. 2020, 21, 498–509.

21. Wei, X.; Wu, D.; Zhou, L.; Guizani, M. Cross-modal communication technology: A survey. Fundam. Res. 2025, 5, 2256–2267.

22. Jiang, W.D.; Chang, C.Y.; Kuai, S.C.; Roy, D.S. From explicit rules to implicit reasoning in an interpretable violence monitoring system. arXiv 2024, arXiv:2410.21991.

23. Wei, X.; Zhang, T.; Li, Y.; Zhang, Y.; Wu, F. Multi-modality cross attention network for image and sentence matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10941–10950.

24. Alsarhan, T.; Ali, U.; Lu, H. Enhanced discriminative graph convolutional network with adaptive temporal modelling for skeleton-based action recognition. Comput. Vis. Image Underst. 2022, 216, 103348.

25. Li, H.; Wang, J.; Li, Z.; Cecil, K.M.; Altaye, M.; Dillman, J.R.; Parikh, N.A.; He, L. Supervised contrastive learning enhances graph convolutional networks for predicting neurodevelopmental deficits in very preterm infants using brain structural connectome. NeuroImage 2024, 291, 120579.

26. Ghadiya, A.; Kar, P.; Chudasama, V.; Wasnik, P. Cross-Modal Fusion and Attention Mechanism for Weakly Supervised Video Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 1965–1974.

27. Wei, D.L.; Liu, C.G.; Liu, Y.; Liu, J.; Zhu, X.G.; Zeng, X.H. Look, listen and pay more attention: Fusing multi-modal information for video violence detection. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 1980–1984.

28. Duja, K.U.; Khan, I.A.; Alsuhaibani, M. Video Surveillance Anomaly Detection: A Review on Deep Learning Benchmarks. IEEE Access 2024, 12, 164811–164842.

29. Wan, Z.; Mao, Y.; Zhang, J.; Dai, Y. Rpeflow: Multimodal fusion of RGB-pointcloud-event for joint optical flow and scene flow estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 10030–10040.

30. Yang, L.; Wu, Z.; Hong, J.; Long, J. MCL: A contrastive learning method for multimodal data fusion in violence detection. IEEE Signal Process. Lett. 2022, 30, 408–412.

31. Khan, M.; Saad, M.; Khan, A.; Gueaieb, W.; El Saddik, A.; De Masi, G.; Karray, F. Action knowledge graph for violence detection using audiovisual features. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 6–8 January 2024; pp. 1–5.

32. Guo, Y.; Guo, H.; Yu, S.X. Co-sne: Dimensionality reduction and visualization for hyperbolic data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21–30.

33. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

34. Gemmeke, J.F.; Ellis, D.P.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio set: An ontology and human-labeled dataset for audio events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.

35. Hershey, S.; Chaudhuri, S.; Ellis, D.P.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; Seybold, B.; et al. CNN architectures for large-scale audio classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 131–135.

36. Nagrani, A.; Yang, S.; Arnab, A.; Jansen, A.; Schmid, C.; Sun, C. Attention bottlenecks for multimodal fusion. Adv. Neural Inf. Process. Syst. 2021, 34, 14200–14213.

37. Yan, S.; Xiong, X.; Arnab, A.; Lu, Z.; Zhang, M.; Sun, C.; Schmid, C. Multiview transformers for video recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3333–3343.

38. Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6479–6488.

39. Clavié, B.; Alphonsus, M. The unreasonable effectiveness of the baseline: Discussing SVMs in legal text classification. In Legal Knowledge and Information Systems; IOS Press: Amsterdam, The Netherlands, 2021; pp. 58–61.

40. Le, X.; Yin, J.; Zhou, X.; Chu, B.; Hu, B.; Wang, L.; Li, G. SCADA Anomaly Detection Scheme Based on OCSVM-PSO. In Proceedings of the 2024 6th International Conference on Energy Systems and Electrical Power (ICESEP), Wuhan, China, 21–23 June 2024; pp. 1335–1340.

41. Krämer, G. Hasan al-Banna; Simon and Schuster: New York, NY, USA, 2014.

42. Cong, R.; Qin, Q.; Zhang, C.; Jiang, Q.; Wang, S.; Zhao, Y.; Kwong, S. A weakly supervised learning framework for salient object detection via hybrid labels. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 534–548.

43. Wu, J.C.; Hsieh, H.Y.; Chen, D.J.; Fuh, C.S.; Liu, T.L. Self-supervised sparse representation for video anomaly detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 729–745.

44. Jiang, M.; Hou, C.; Zheng, A.; Hu, X.; Han, S.; Huang, H.; He, X.; Yu, P.S.; Zhao, Y. Weakly supervised anomaly detection: A survey. arXiv 2023, arXiv:2302.04549.

45. Pu, Y.; Wu, X.; Yang, L.; Wang, S. Learning prompt-enhanced context features for weakly-supervised video anomaly detection. IEEE Trans. Image Process. 2024.

| Index | Manner | AP (%) | Param. (M) |
|---|---|---|---|
| 1 | Wu et al. [1] | 79.86 | 0.851 |
| 2 | Concat Fusion [13] | 83.35 | 0.758 |
| 3 | Additive Fusion [13] | 82.41 | 0.594 |
| 4 | Bilinear and Concat [13] | 81.33 | 0.644 |
| 5 | Detour Fusion [13] | 85.67 | 0.607 |
| 6 | Gated Fusion * | 86.85 | 0.637 |

| MSBT | Gated Fusion | TCC | R+A | R+F | A+F | R+A+F |
|---|---|---|---|---|---|---|
| ✗ | ✗ | ✗ | 71.58 | 73.19 | 70.38 | 75.93 |
| ✓ | ✗ | ✓ | 82.67 | 80.46 | 78.55 | 81.74 |
| ✗ | ✓ | ✓ | 79.78 | 80.83 | 79.11 | 82.38 |
| ✓ | ✓ | ✗ | 78.16 | 76.97 | 75.71 | 80.05 |
| ✗ | ✓ | ✗ | 80.12 | 79.45 | 76.89 | 81.27 |
| ✓ | ✗ | ✗ | 77.95 | 78.23 | 75.34 | 79.68 |
| ✗ | ✗ | ✓ | 78.34 | 80.17 | 78.92 | 81.02 |
| ✓ | ✓ | ✓ | 83.24 | 81.51 | 80.63 | 86.85 |

| Manner | Method | Modality | AP (%) | Param. (M) |
|---|---|---|---|---|
| Unsupervised Learning | SVM baseline [39] | - | 50.78 | - |
| | OCSVM [40] | - | 27.25 | - |
| | Hasan al-Banna [41] | - | 30.77 | - |
| Supervised (Video) | Sultani et al. [38] | Video | 75.68 | - |
| | Wu et al. [1] | Video | 75.90 | - |
| | RTFM [10] | Video | 77.81 | 12.067 |
| | MSL et al. [42] | Video | 78.28 | - |
| | S3R [43] | Video | 80.26 | - |
| | UR-DMU [14] | Video | 81.66 | - |
| | Zhang et al. [44] | Video | 78.74 | - |
| Weakly Supervised (Audio + Video) | Wu et al. [1] | Audio + Video | 78.64 | 0.843 |
| | Wu et al. [1] | Audio + Video | 78.66 | 1.539 |
| | RTFM [10] | Audio + Video | 78.54 | 13.510 |
| | RTFM [10] | Audio + Video | 78.54 | 13.190 |
| | Pang et al. [12] | Audio + Video | 81.69 | 1.876 |
| | MACIL-SD [17] | Audio + Video | 83.40 | 0.678 |
| | UR-DMU [45] | Audio + Video | 81.77 | - |
| | Zhang et al. [44] | Audio + Video | 81.43 | - |
| | HyperVD [13] | Audio + Video | 85.67 | 0.607 |
| Weakly Supervised (Proposed) | MSBT Gated (Proposed) | Audio + Video | 83.39 | 0.743 |
| | MSBT Gated (Proposed) | Audio + Video + Flow | 86.85 | 0.913 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ahmad, B.; Khan, M.; Sajjad, M. Gated Fusion Networks for Multi-Modal Violence Detection. AI 2025, 6, 259. https://doi.org/10.3390/ai6100259