Abstract

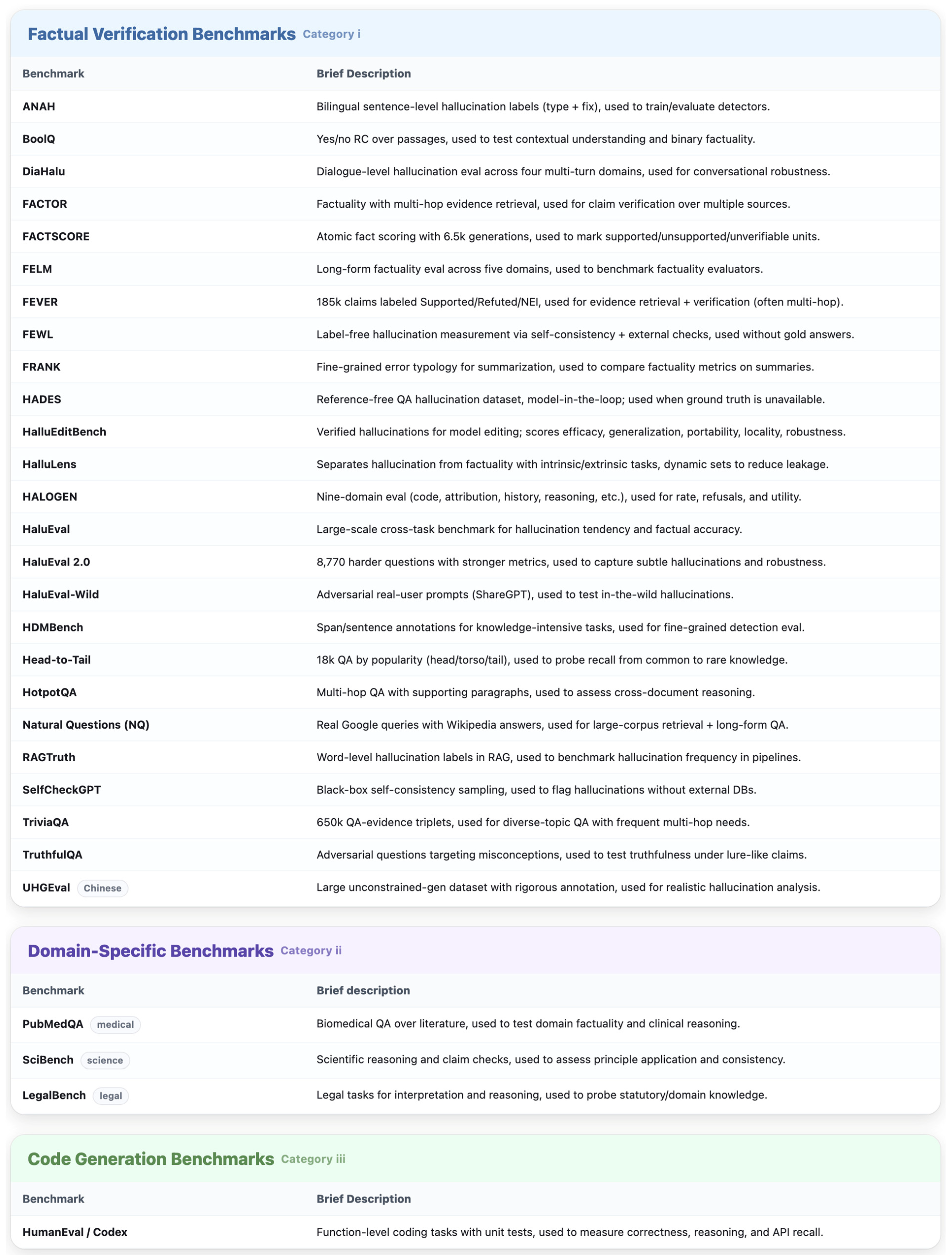

Large Language Models (LLMs) exhibit remarkable generative capabilities but remain vulnerable to hallucinations—outputs that are fluent yet inaccurate, ungrounded, or inconsistent with source material. To address the lack of methodologically grounded surveys, this paper introduces a novel method-oriented taxonomy of hallucination mitigation strategies in text-based LLMs. The taxonomy organizes over 300 studies into six principled categories: Training and Learning Approaches, Architectural Modifications, Input/Prompt Optimization, Post-Generation Quality Control, Interpretability and Diagnostic Methods, and Agent-Based Orchestration. Beyond mapping the field, we identify persistent challenges such as the absence of standardized evaluation benchmarks, attribution difficulties in multi-method systems, and the fragility of retrieval-based methods when sources are noisy or outdated. We also highlight emerging directions, including knowledge-grounded fine-tuning and hybrid retrieval–generation pipelines integrated with self-reflective reasoning agents. This taxonomy provides a methodological framework for advancing reliable, context-sensitive LLM deployment in high-stakes domains such as healthcare, law, and defense.

1. Introduction

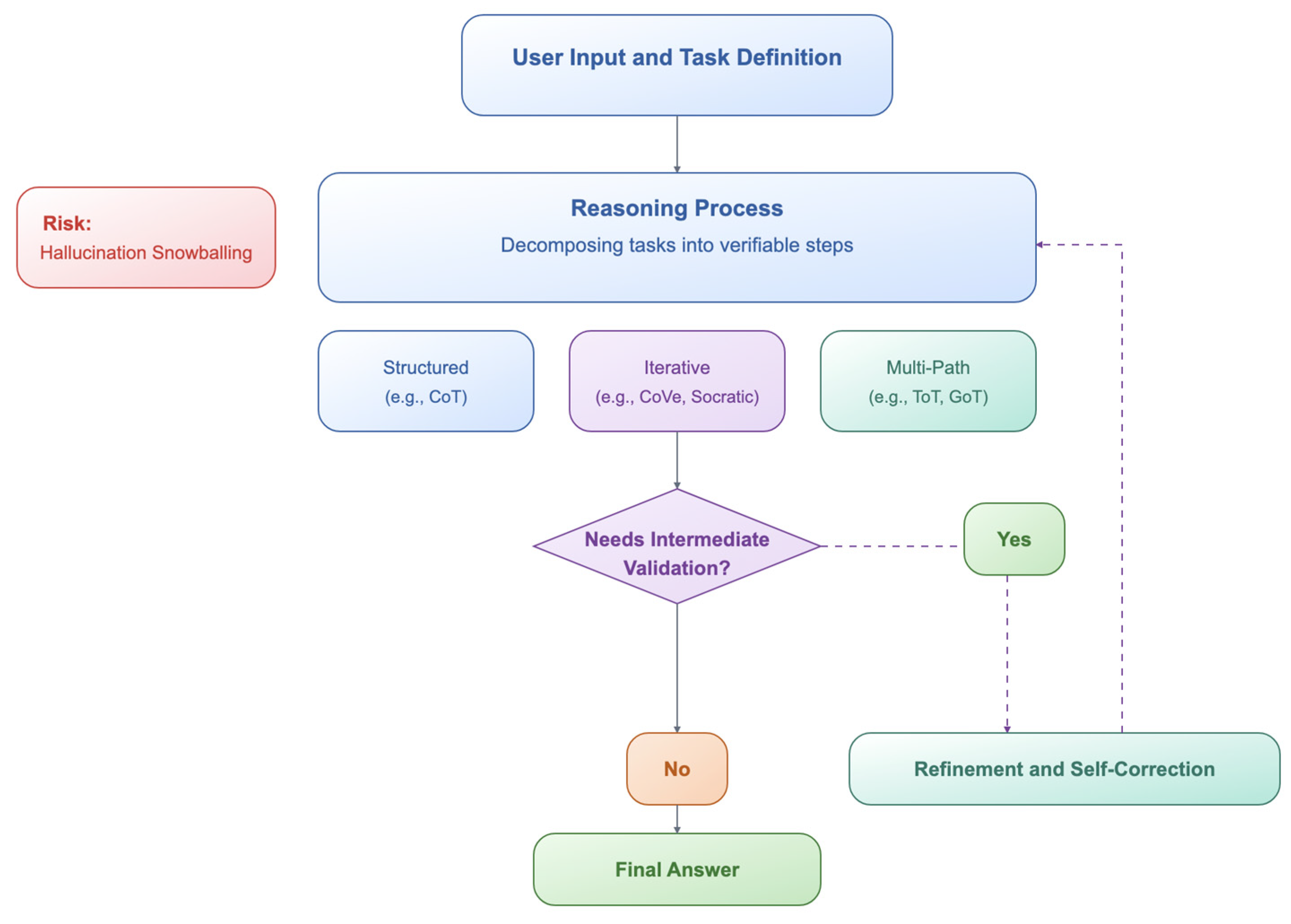

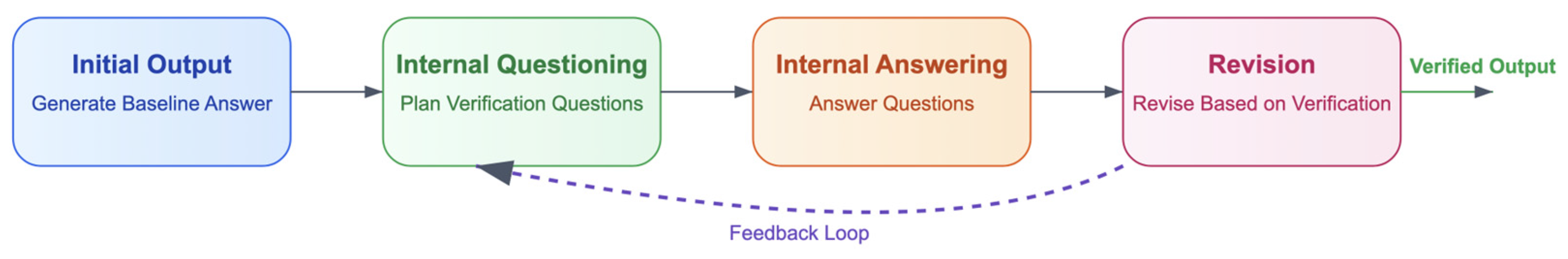

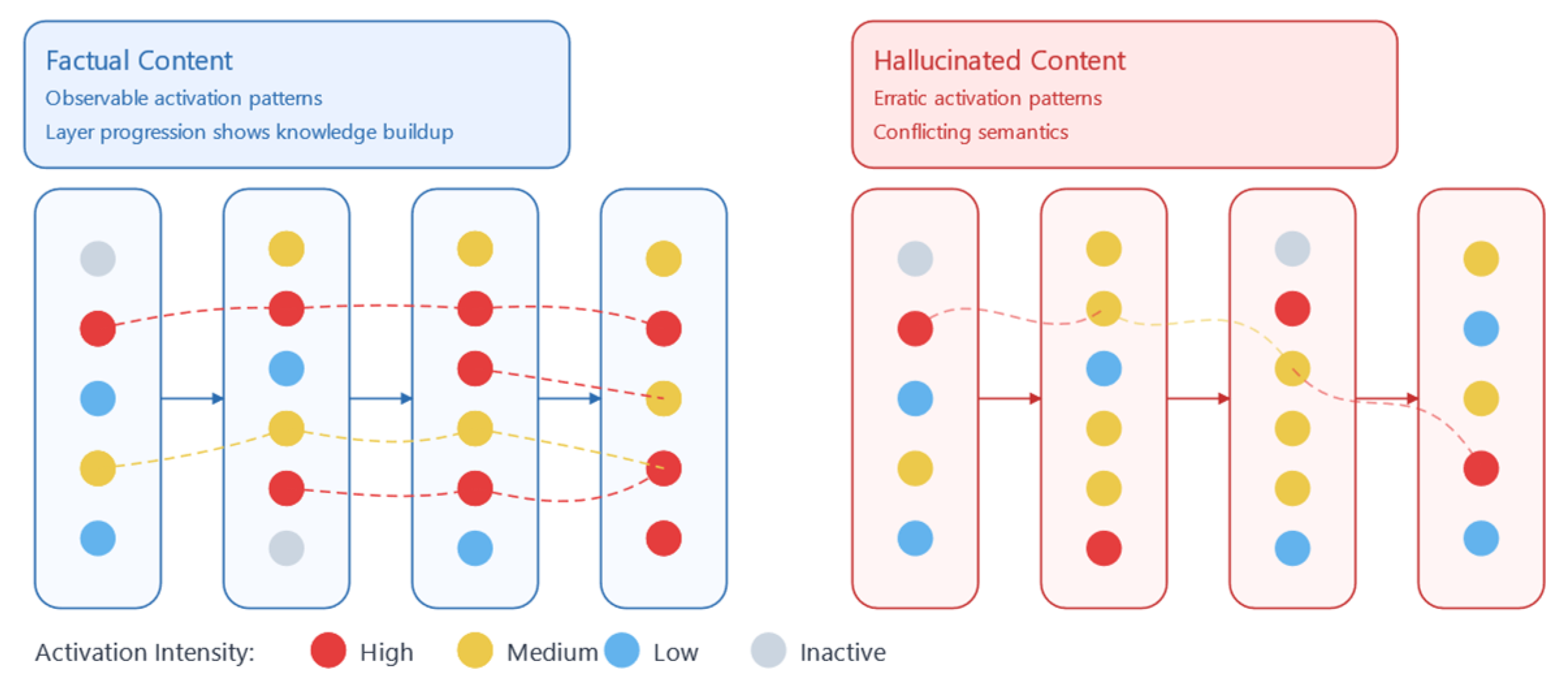

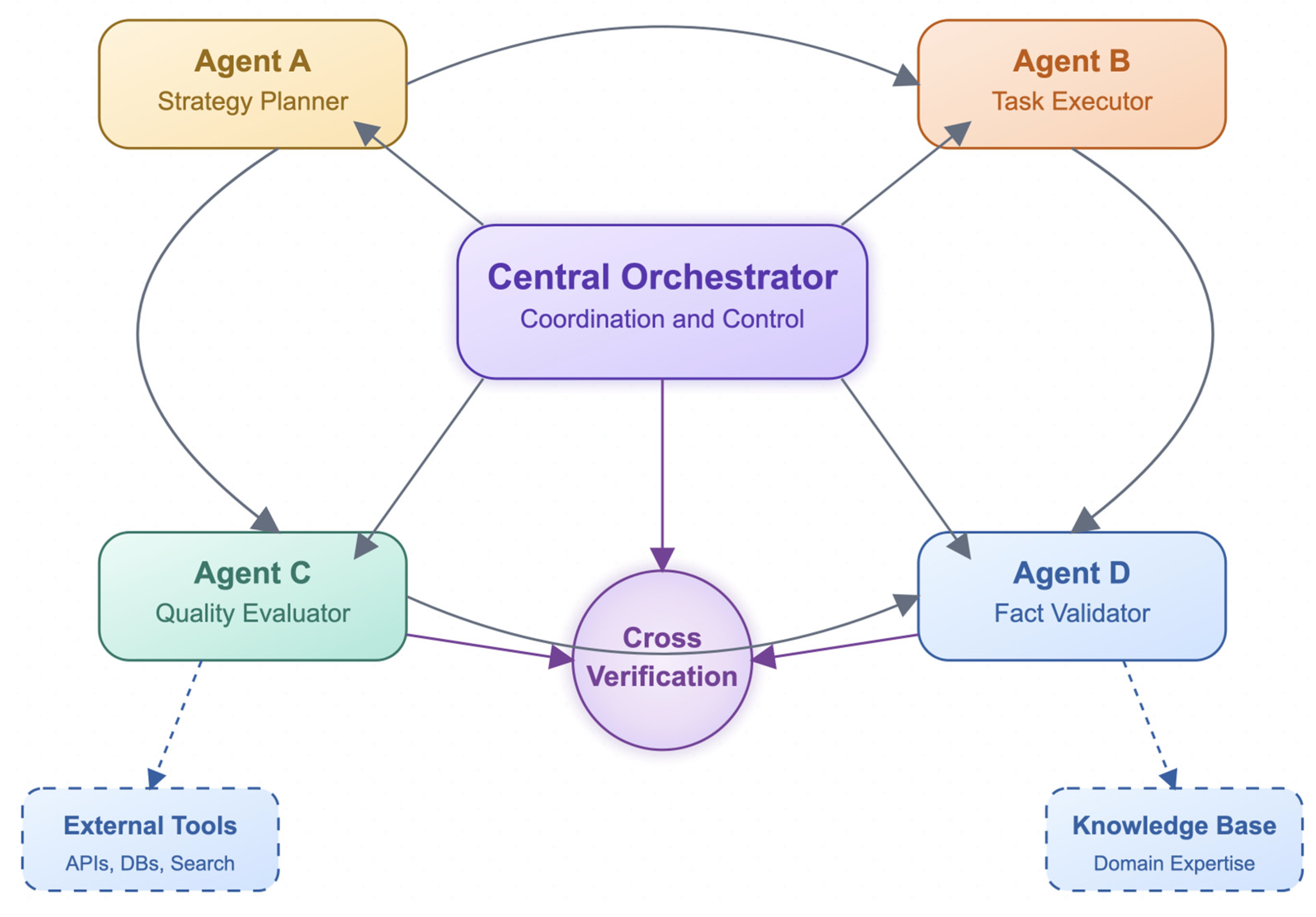

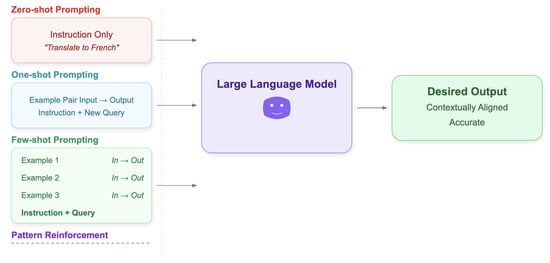

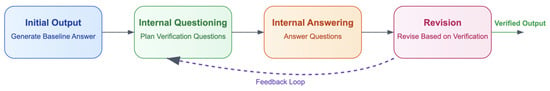

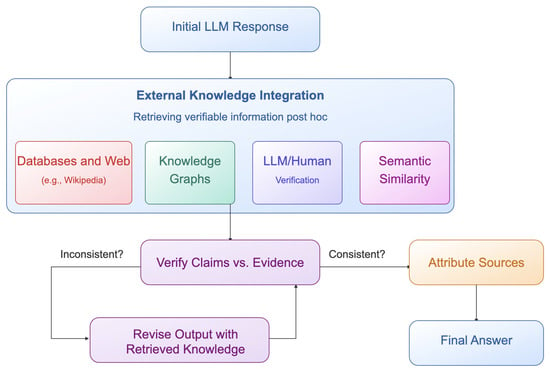

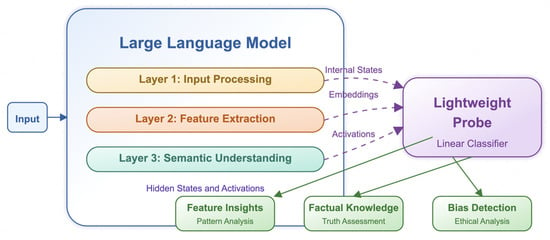

In this paper, we examine hallucinations in Large Language Models (LLMs), defined as instances where generated content appears coherent and plausible but contains factual inaccuracies or unverifiable claims [1,2,3]. These manifestations range from fabricated citations and logical inconsistencies to erroneous statistics and invented biographical details [4,5]. Such errors pose significant challenges for the use of LLMs in domains where factual accuracy is critical, including healthcare [6,7], law [8,9,10], and defense [11]. Underlying causes include, but are not limited to, noisy training data, underrepresentation of minority viewpoints, outdated information, and the next-token log-likelihood objective, which prioritizes plausible continuation over factual accuracy. Research has shown that hallucinations arise from the complex interplay between factual accuracy and generative capability [12,13,14]. Although hallucinations have been argued to be mathematically inevitable, numerous mitigation strategies have been developed across the pre-generation, generation, and post-generation phases. Pre-generation techniques include fine-tuning with human feedback [15] and contrastive learning [16] (distinct from contrastive decoding [17], which is applied during text generation). Architectural enhancements, by contrast, often incorporate decoding mechanisms [17], retrieval-based modules [18], and memory augmentations [19] to ground content in verifiable sources. During generation, structured prompting techniques such as Chain of Thought (CoT) [20] guide reasoning toward evidence-based conclusions, while post-generation safeguards employ self-consistency checks [21], fact-checking systems [22], and self-verification loops [21,23], along with human-in-the-loop evaluations [24], to detect and rectify factual inconsistencies. In addition, internal model probing techniques and lightweight classifiers detect hallucination patterns through latent signals and linguistic cues [25,26]. AI agentic frameworks, in which multiple agents consult external tools, negotiate, and self-reflect on their decisions, complete our taxonomy [27,28]. We have structured our taxonomy into six categories: Training and Learning Approaches, Architectural Modifications, Input/Prompt Optimization, Post-Generation Quality Control, Interpretability and Diagnostic Methods, and Agent-Based Orchestration. A detailed account of each category follows in Section 4.2.

We organize the remainder of this paper as follows: Section 2 defines and categorizes hallucinations, discusses their underlying causes, and provides a brief overview of existing mitigation methods. Section 3 reviews related research, while Section 4 presents our methodology, proposed taxonomy and the key contributions. Section 5 provides an analytical discussion of mitigation strategies and Section 6 outlines benchmarks for evaluating hallucinations in LLMs. Section 7 offers a brief outline for high-stakes applications. Finally, Section 9 discusses the challenges in addressing hallucinations, and Section 10 concludes the paper.

2. Understanding Hallucinations

2.1. Definition of Hallucinations

Hallucinations in LLMs refer to instances where the generated content appears grammatically correct and coherent, yet it is factually incorrect, irrelevant, ungrounded (i.e., cannot be traced to reliable sources), or logically inconsistent [1,2,3]. Distinct from simple errors and biases, hallucinations often appear as false claims about real or imaginary entities (people, events, places, or facts). They may also involve fabricated citations and data, such as non-existent biographical details [29,30], or take the form of erroneous statistics, inaccurate numerical values, and conclusions that do not follow logically from their premises [2,31]. Defining hallucinations is challenging because their classification depends heavily on the task, the logical relation between input and output, and pragmatic factors such as ambiguity, vagueness, presupposition, or metaphor [32,33]. The presence of hallucinations may be attributed to a multi-faceted and complex interplay of various factors such as sub-optimal training data [34,35], pre-training and supervised fine-tuning issues [36,37,38], or the probabilistic nature of sequence generation [12,13,39] as will be further analyzed in Section 2.3. Despite significant progress in LLM development, hallucinatory outputs continue to raise serious concerns about the reliability and trustworthiness of machine-generated text. These risks are particularly acute in critical domains such as healthcare [7], law [8,10] and defense [11], where considerable harm may be caused to individuals.

However, hallucinations in LLMs may be viewed not merely as technical limitations but as fundamental mathematical inevitabilities inherent to their architecture and function. Training LLMs for predictive accuracy inevitably produces hallucinations, regardless of model design or data quality, due to the probabilistic nature of language generation and the inability of LLMs to learn all computable functions [12,13,14]. This limitation is further supported by computational learning theory constraints and the lack of complete factual mappings [12,13]. The resulting tension arises because the next-token prediction objective favors statistically likely continuations, prioritizing linguistic plausibility over epistemic truth.
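
For reference, the next-token objective discussed above is commonly written as the negative log-likelihood below. The notation is ours and is only a sketch: $\theta$ denotes the model parameters and $x_1, \dots, x_T$ a training sequence. No term in this loss refers to whether the sequence is true; it rewards only assigning high probability to the observed text.

```latex
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_{<t}\right)
```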

Despite the paradox between factual accuracy and probabilistic capability, several studies challenge the view of hallucinations as universal limitations. They argue that this inevitability is not uniformly distributed across tasks [40] and that hallucinations can function as exploitable features for adversarial robustness [41]. Research shows that intentional hallucination can generate out-of-distribution concepts, boosting performance in tasks such as poetry and storytelling by 24% through associative chain disruption [42]. However, if left unregulated, this process may lead to extreme confabulation. Layer-wise analyses further suggest that certain architectural layers in LLMs are critical points where hallucination and creativity can be balanced [43]. Similarly, in tasks such as hypothesis generation or brainstorming, the unlikely combinations of entities and reasoning processes produced by hallucinations may reveal insights that conventional methods would overlook. For instance, combinatorial entropy has been shown to generate viable hypotheses in physics and biology [44], while controlled hallucination has been applied to brainstorming through reflective prompting [45]. Given this dual nature of hallucinations, we believe that they should be evaluated within the context of specific applications and with awareness of cultural sensitivities, so as to distinguish between scenarios that demand factual rigor and those that benefit from creative exploration [46,47,48].

2.2. Categories of Hallucinations

We can categorize hallucinations in LLMs into distinct types based on their relationship to source material, model knowledge boundaries, and linguistic structure. Following the majority of researchers, we distinguish between intrinsic hallucinations (factuality errors) and extrinsic hallucinations (faithfulness errors) as foundational categories [2,3,31,49,50,51,52,53]. These are distinct from other phenomena such as bias, which some scholars classify as a hallucination subtype [2,3,54,55,56] while others treat it as a separate representational skew not necessarily involving factual error [1,48,57]. Furthermore, we explicitly position intrinsic (factuality) and extrinsic (faithfulness) hallucinations as subcategories of the broader intrinsic/extrinsic taxonomy, thus aligning with the research in [58], which emphasizes that factuality requires alignment with external truths, while faithfulness requires alignment with source material. Acknowledging the research of [59], where a task-independent categorization of hallucinations is presented (Factual Mirage and Silver Lining, in addition to their subcategories), we summarize the major categories as follows:

- Intrinsic hallucinations (factuality errors) occur when a model generates content that contradicts established facts, its training data, or referenced input [2,3,31,49,50,52,53]. Following the taxonomic names in [5], the subtypes of this category may include (but are not limited to):

  - Entity-error hallucinations, where the model generates non-existent entities or misrepresents their relationships (e.g., inventing fake individuals, non-existent biographical details [4], or non-existent research papers), often measured via entity-level consistency metrics [60], as shown in [3,31,57,61].

  - Relation-error hallucinations, where inconsistencies are of a temporal, causal, or quantitative nature, such as erroneous chronologies or unsupported statistical claims [2,31,57].

  - Outdatedness hallucinations, which are characterized by outdated or superseded information, often reflecting a mismatch between training data and current knowledge, as shown in [1,3,51,62].

  - Overclaim hallucinations, where models exaggerate the scope or certainty of a claim, often asserting more than the evidence supports [5,49,56,57].

- Extrinsic hallucinations (faithfulness errors) appear when the generated content deviates from the provided input or user prompt. These hallucinations are generally characterized by output that cannot be verified: it may or may not be true but, in either case, it is either not directly deducible from the user prompt or it contradicts itself [2,3,5,50,56,58]. Extrinsic hallucinations may manifest as:

  - Incompleteness hallucinations, which occur when answers are truncated or necessary context is omitted [2,51,57].

  - Unverifiability hallucinations, where outputs neither align with available evidence nor can be clearly refuted [5,56].

  - Emergent hallucinations, defined as those arising unpredictably in larger models due to scaling effects [63]. These can be attributed to cross-domain reasoning and modality fusion, especially in multi-modal settings or Chain of Thought (CoT) prompting scenarios [3,63,64], multi-step inference errors [65], and abstraction or alignment issues, as shown in [50,57,64]. For instance, self-reflection demonstrates mitigation capabilities, effectively reducing hallucinations only in models above a certain threshold (e.g., 70B parameters), while paradoxically increasing errors in smaller models due to limited self-diagnostic capacity [5].

More importantly, certain types of hallucinations can compound over time in lengthy prompts or multi-step reasoning tasks, thus triggering a cascade of errors. This phenomenon, known as the snowball effect, further erodes user trust and model reliability [65,66,67].
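
A rough way to see why such errors compound is sketched below. It assumes, purely for illustration, that each reasoning step is correct independently and with the same probability; under that assumption the chance that an n-step chain contains no error is the per-step accuracy raised to the power n. The independence assumption and the function name `chain_success_probability` are ours, not taken from the cited studies. In practice errors are correlated, since an early mistake tends to make later ones more likely.

```python
def chain_success_probability(step_accuracy: float, num_steps: int) -> float:
    """Probability that every step of a chain is correct.

    Assumes (illustratively) that steps succeed independently with the
    same probability `step_accuracy`.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must lie in [0, 1]")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_accuracy ** num_steps


if __name__ == "__main__":
    # Even a 95%-accurate step degrades quickly over long chains.
    for n in (1, 5, 10, 20):
        print(n, round(chain_success_probability(0.95, n), 3))
```

Under these assumptions, a chain of 20 steps at 95% per-step accuracy is more likely than not to contain at least one error.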

2.3. Underlying Causes of Hallucinations

Hallucinations in LLMs stem from a variety of interwoven factors spanning the entire development pipeline. Although the impact of each cause may vary depending on the task and model architecture, we present them here in a non-hierarchical format to emphasize their interconnectedness. These factors include (but are not limited to) model architecture and decoding strategies [5,12,13,14,39], data quality, bias, memorization and poor alignment [15,34,35,37,39,68,69], pre-training and supervised fine-tuning issues [36,37,38], compute scale issues and under-training [5,38], prompt design and distribution shift [70,71,72], and retrieval-related mismatches [73,74].

In terms of model architecture, the use of decoding strategies and the inherently probabilistic nature of sequence-to-sequence modeling is a primary cause of hallucinations. For instance, the next-token log-likelihood objective commonly used during training which prioritizes plausible continuation over factual accuracy [12,13] has led to a significant body of research on decoding strategies such as nucleus sampling [75], contrastive decoding [17], and confidence-aware decoding [76] among others. Recent work also connects hallucinations to a lack of explicit modeling of uncertainty or factual confidence [77,78,79].
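
To make one of these decoding strategies concrete, the following is a minimal sketch of nucleus (top-p) sampling over a toy next-token distribution. The token names, probabilities, and function names are hypothetical; a real decoder would operate on model logits over a full vocabulary.

```python
import random


def nucleus_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the smallest set of most-probable tokens whose cumulative
    probability reaches `top_p`, then renormalize that set."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must lie in (0, 1]")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


def sample_token(probs: dict[str, float], top_p: float, rng: random.Random) -> str:
    """Draw one token from the renormalized nucleus."""
    filtered = nucleus_filter(probs, top_p)
    tokens = list(filtered)
    weights = list(filtered.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Hypothetical distribution for the next token after "The capital of France is".
    toy = {"Paris": 0.6, "Lyon": 0.25, "Rome": 0.1, "Mars": 0.05}
    print(nucleus_filter(toy, top_p=0.8))
    print(sample_token(toy, top_p=0.8, rng=random.Random(0)))
```

Truncating the low-probability tail removes some implausible continuations, but the procedure still selects by likelihood alone, so a probable yet false token remains eligible.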

A model’s vast and oftentimes noisy training data may often suffer from the inclusion of inaccuracies, contradictions (due to the presence of entirely or partially conflicting data also known as knowledge overshadowing), and biases, the presence of which may hinder the model’s ability to generate factually reliable content [54,80]. While biases can cause hallucinations, and hallucinations represent a significant category of LLM output errors, in this systematic review we specifically examine the nature and mitigation of generated text that is factually ungrounded or inconsistent. If, for instance, a model is trained on imbalanced data, i.e., data skewed towards a majority perspective, favoring specific cultural differences or even personal beliefs, it might generate content that contradicts the realities or experiences of minority groups due to lack of exposure to their narratives [39,46,81]. These biases may be attributed to the underrepresentation of specific groups or ideas, making it challenging for models to generate content that is truly ethical and free from prejudice.

Data duplication and repetition, both of which may lead to overfitting and memorization, further degrade the quality of model output, as shown in [34,35]. Ambiguous data may hinder the quantification or elicitation of model uncertainty, with research suggesting that scaling, although generally beneficial, may have reached a fundamental limit where models merely memorize disambiguation patterns instead of reasoning [68]. Additionally, preference fine-tuning with Reinforcement Learning from Human Feedback (RLHF) can reward fluent but significantly polarized answers, a form of alignment-induced hallucination [38]. This kind of RL-based preference optimization has been linked to sycophantic agreement with user misconceptions, further exacerbating factual inconsistencies [37]. Recent work also demonstrates that such hallucinations arise partly from reward hacking during alignment, where models prioritize reward signals over truthfulness, and addresses them with constrained fine-tuning methods [61].

The high computational cost and prolonged training times of LLMs are additional factors that affect generation quality: training data are not frequently updated, which hinders the models’ ability to provide up-to-date and accurate information [37,38]. Controlled experiments using LLM behavioral probing have demonstrated that verbatim memorization of individual statements at the sentence level, as well as statistical patterns of usage at the corpus level (template-based reproduction), can also trigger hallucinations [35,36]. Another line of research has demonstrated that many LLMs are under-trained and that optimal performance requires scaling both model size and the number of training tokens proportionally [38].

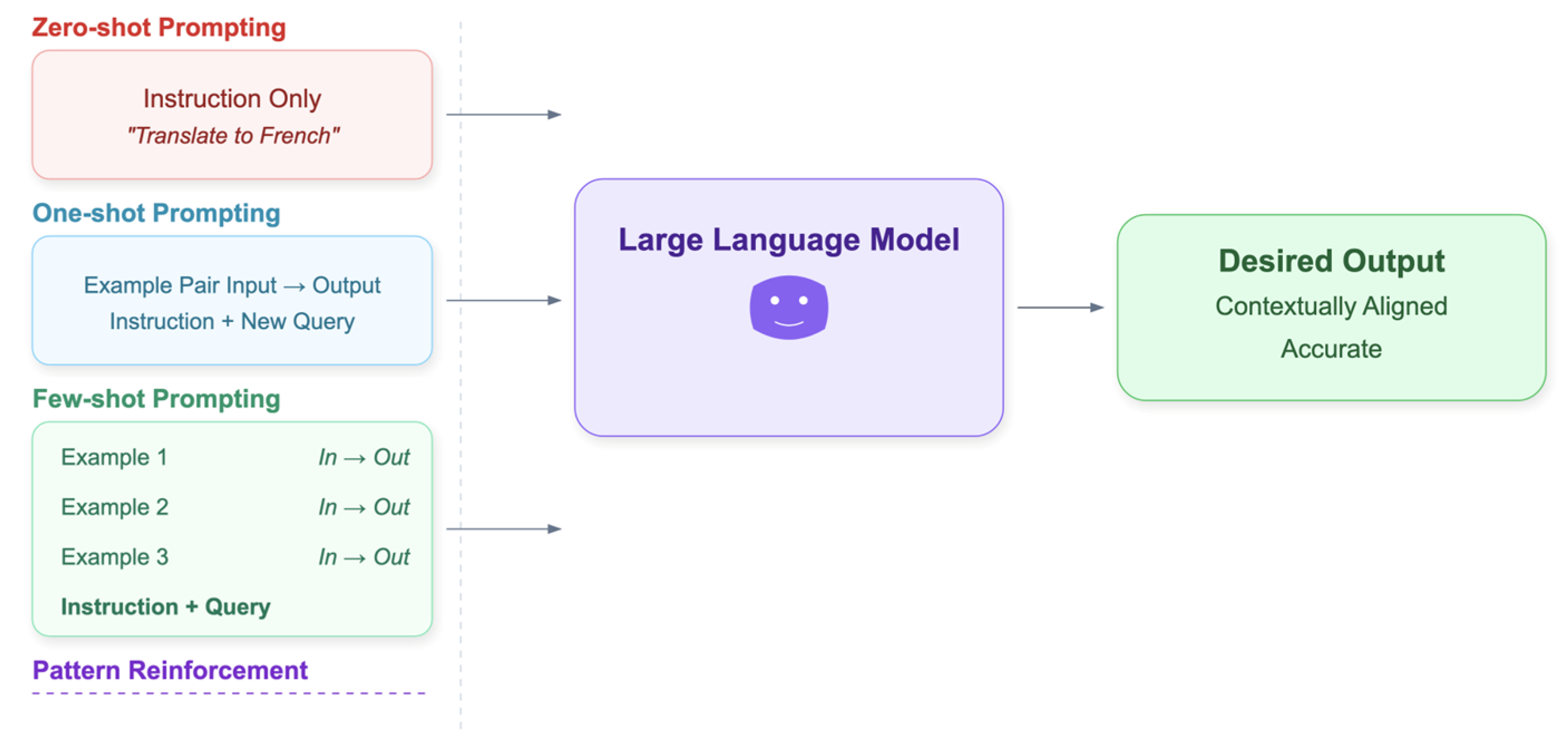

In terms of prompting techniques, poor or insufficient prompting can significantly contribute to hallucinations, since omitting important information or failing to restrict the model’s output can introduce ambiguity [82]. In many such cases, the model defaults to probabilistic pattern-matching, which favors generalization over user intent, because the prompt contains little or no information that would enable a more concise and targeted response [70,71,72]. Adversarial or out-of-distribution prompts can also have a detrimental effect, as shown in [20,82], while in long-context models, inaccuracies may also emerge from summarization or retrieval failures during extended sequence attention, which can cause the model to lose track of grounding over time [67,83]. Furthermore, in low-resource settings, mitigation options that require fine-tuning are often impractical; zero-shot detection methods such as self-consistency probes [84] or hesitation analysis [62] can detect hallucinations without significant computational overhead.
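
The idea behind an agreement-based, zero-shot check can be sketched as follows. This is a generic illustration rather than the method of any cited paper: it assumes several answers have already been sampled for the same prompt, and the function names and the 0.6 threshold are hypothetical choices.

```python
from collections import Counter


def self_consistency_score(answers: list[str]) -> tuple[str, float]:
    """Return the majority answer and the fraction of samples that agree with it."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    normalized = [a.strip().lower() for a in answers]
    majority, count = Counter(normalized).most_common(1)[0]
    return majority, count / len(normalized)


def flag_possible_hallucination(answers: list[str], threshold: float = 0.6) -> bool:
    """Flag a prompt when sampled answers disagree too much (agreement below threshold)."""
    _, agreement = self_consistency_score(answers)
    return agreement < threshold


if __name__ == "__main__":
    # Hypothetical samples for one factual question.
    stable = ["1889", "1889", "1889 ", "1889", "1887"]
    unstable = ["1889", "1887", "1901", "1890"]
    print(self_consistency_score(stable), flag_possible_hallucination(stable))
    print(self_consistency_score(unstable), flag_possible_hallucination(unstable))
```

The check needs no training, only repeated sampling, which is why such probes suit settings where fine-tuning is not an option. High agreement does not guarantee correctness, however, because a model can be consistently wrong.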

While the above causes are presented in a non-hierarchical format to emphasize their interconnectedness, they can also be viewed as clustering around broader dimensions:

- data-related issues (e.g., noisy, biased, duplicated, or imbalanced training corpora),

- model and training-related issues (e.g., probabilistic decoding objectives, memorization, under-training, or alignment-induced effects), and

- usage-related issues (e.g., prompt design, distribution shift, and retrieval mismatches).

These categories are not mutually exclusive, but they provide a higher-level structure that connects naturally to the mitigation strategies reviewed later. For example, noisy training data is primarily addressed through Section 5.1 Training and Learning Approaches, architectural and decoding limitations through Section 5.2 Architectural Modifications, and prompt-related vulnerabilities through Section 5.3 Input/Prompt Optimization. We revisit these linkages in Section 4, where the proposed taxonomy explicitly maps mitigation strategies to the underlying causes they target.

3. Related Works

Research on hallucinations in LLMs has expanded rapidly, offering a diverse range of perspectives on their causes, forms, and mitigation strategies. Several surveys provide comprehensive overviews of hallucination mitigation across the LLM pipeline, from pre-generation to post-generation phases, as seen in [1,2,3,31,49,59,85]. Much of the existing literature has adopted a task-oriented taxonomy, categorizing mitigation techniques according to downstream applications (e.g., summarization, question answering, code generation) [86] or by the level of system intervention (e.g., pre-training, fine-tuning, or decoding) [2,31,56,59]. While this body of research offers valuable insights into where hallucinations occur and what tasks they affect, it often underrepresents the how, i.e., the core methodological principles underlying mitigation strategies. Some works have begun to address this by introducing method-oriented taxonomies, which classify strategies based on techniques such as data augmentation, retrieval integration, prompt engineering, or external fact-checking [3,37,52]. Notably, some of these studies focus on specific mitigation classes such as retrieval-augmented generation [51,87], ambiguity and uncertainty estimation [68], knowledge graphs [88], and multi-agent reinforcement learning [27], thus offering deep insights into a narrow family of techniques. Others, like [3], begin to outline broad method-based categories but do not always explore fine-grained distinctions such as whether a method is self-supervised, classifier-guided, contrastive, or interpretability-driven.

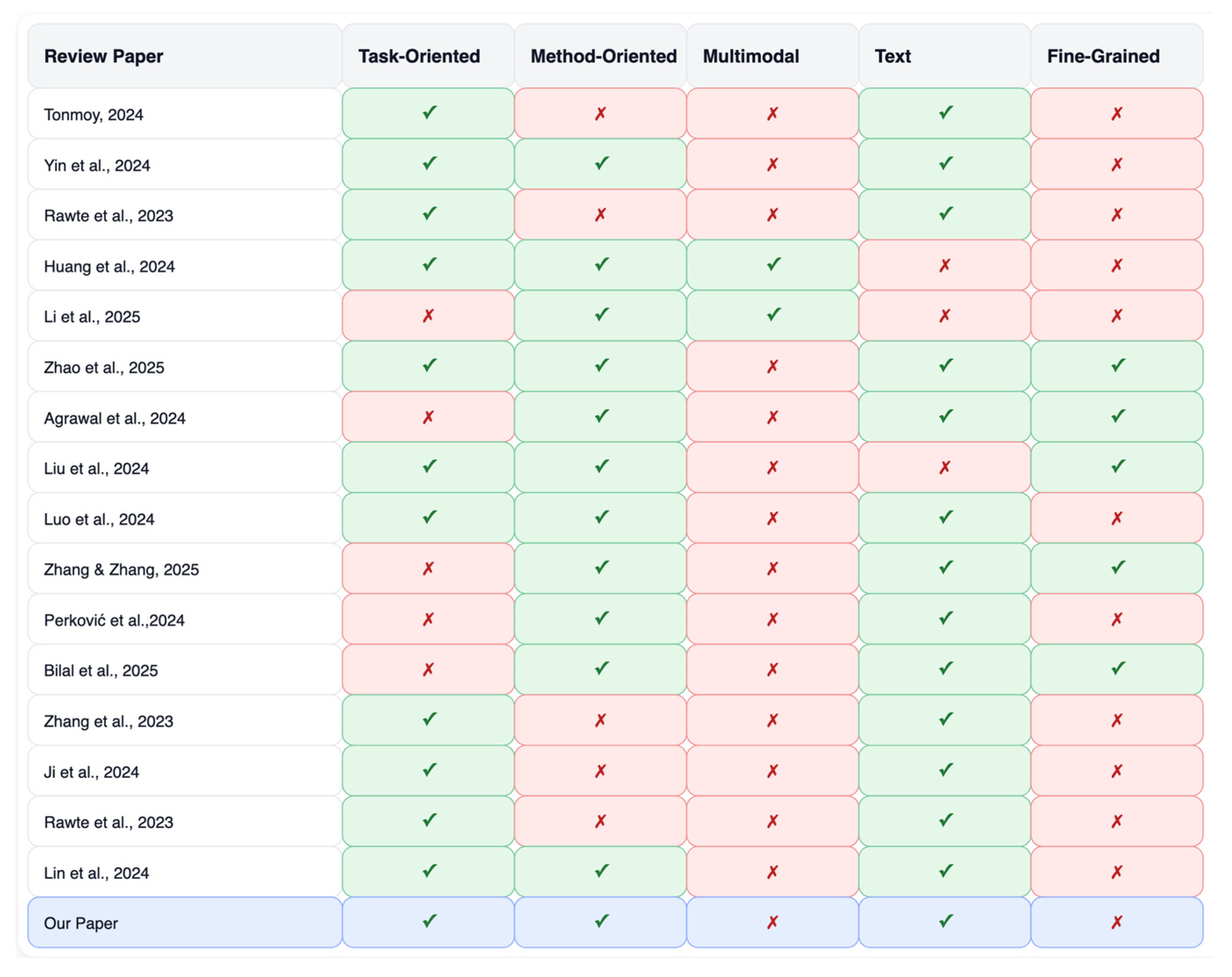

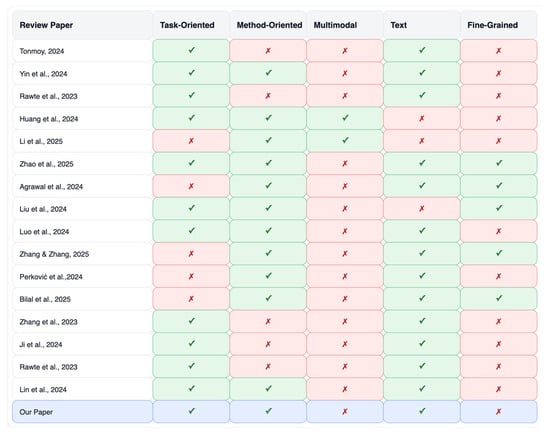

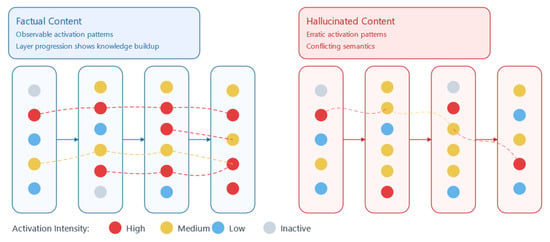

As Figure 1 illustrates, our paper aims to complement this current body of research by offering an updated, method-oriented taxonomy that focuses exclusively on hallucination mitigation in textual LLMs. Our taxonomy is designed to clarify strategies based on their methodological foundation—such as training and learning approaches, architectural modifications, prompt engineering, output verification, post-processing, interpretability, and agent-based orchestration. By delving into the structural properties of existing mitigation strategies, we hope to clarify overlapping concepts and identify gaps that may serve as opportunities for future research.

Figure 1. Comparison of hallucination survey taxonomies in LLM research, highlighting differences in categorization schemes such as task-oriented, system-level, and method-based classifications [1,2,3,27,31,37,49,51,52,56,59,68,85,86,87,88].

4. Review Methodology, Proposed Taxonomy, Contributions and Limitations

4.1. Review Methodology

Defining a single, unchanging taxonomy to classify and address hallucinations is a challenging task that can be attributed at least partially to the complex and interwoven nature of the factors that cause them. In this paper, we try to address this challenge by presenting a 5-stage hierarchical framework:

- Literature Retrieval: We systematically collected research papers from major electronic archives—including Google Scholar, ACM Digital Library, IEEE Xplore, Elsevier, Springer, and ArXiv—with a cutoff date of 12 August 2025. Eligible records were restricted to peer-reviewed journal articles, conference papers, preprints under peer review, and technical reports, while non-academic sources such as blogs or opinion pieces were excluded. A structured query was used, combining keywords: (“mitigation” AND “hallucination” AND “large language models”) OR “evaluation”. In addition, we examined bibliographies of retrieved works to identify further relevant publications.

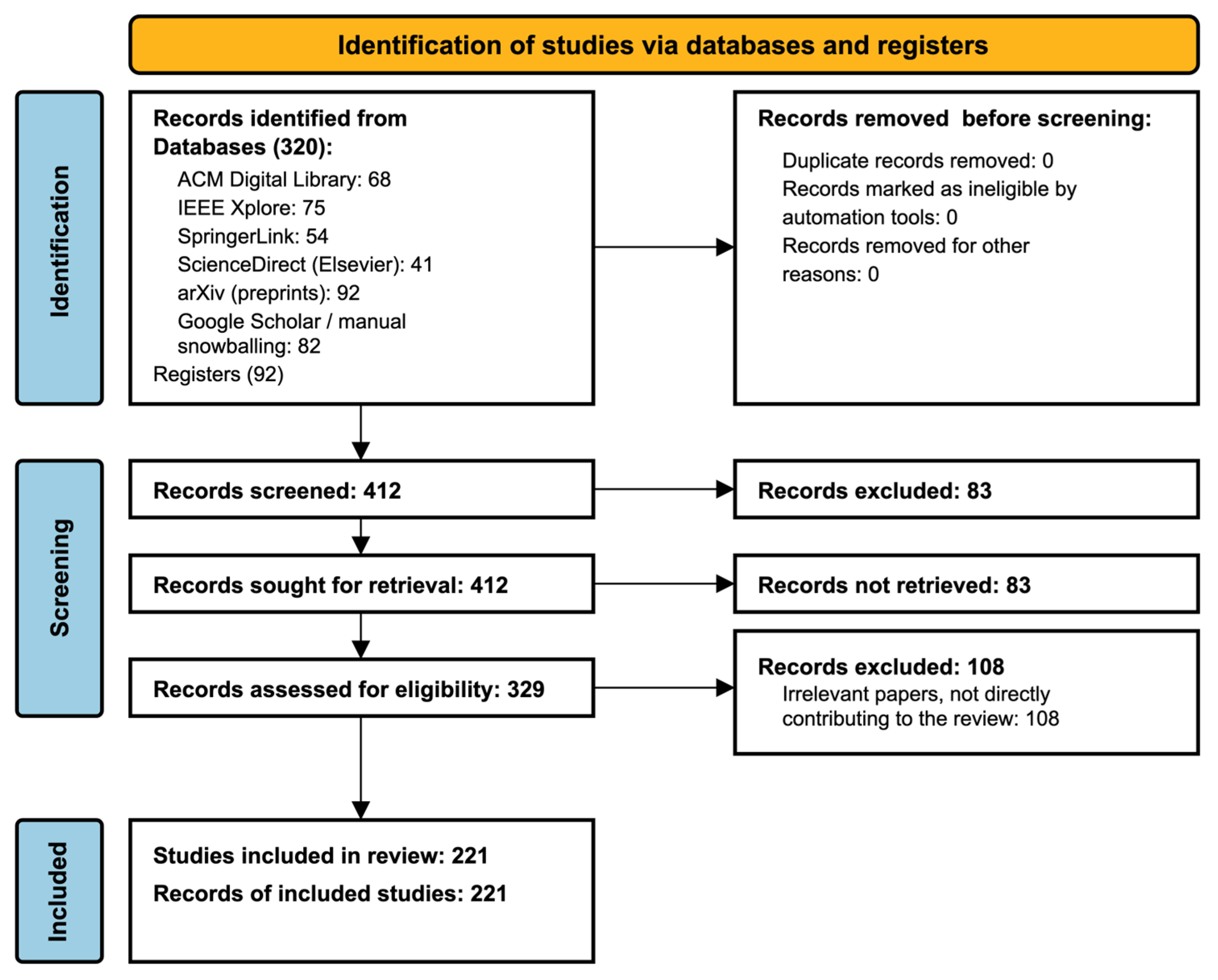

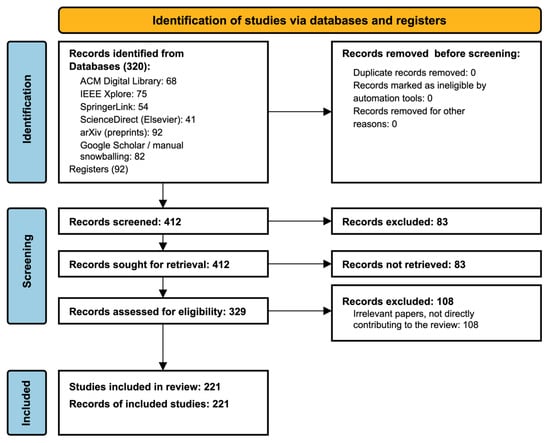

- Screening: The screening process followed a two-stage approach. First, titles and abstracts were screened for topical relevance. Records passing this stage underwent a full-text review to assess eligibility. Out of 412 initially retrieved records, 83 were excluded as irrelevant at the screening stage. The 329 eligible papers were then examined in detail and further categorized into support studies, literature reviews, datasets/benchmarks, and works directly proposing hallucination detection or mitigation methods. The final set of 221 studies formed the basis of our taxonomy. This process is summarized in Figure 2.

Figure 2. PRISMA flow diagram of study selection.

- Paper-level tagging: Every study was assigned one or more tags corresponding to its employed mitigation strategies. Our review accounts for papers that propose multiple methodologies by assigning them multiple tags, ensuring a comprehensive representation of each paper’s contributions.

- Thematic clustering: We consolidated those tags into six broad categories, presented analytically in Section 4.2. This enabled us to generate informative visualizations that reflect the prevalence and trends among different mitigation techniques.

- Content-specific retrieval: To gain deeper insight into mitigation strategies, we developed a custom Retrieval-Augmented Generation (RAG) system based on the Mistral language model as an additional research tool, which enabled us to extract content-specific passages directly from the research papers.
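
The retrieval, screening, tagging, and clustering stages above can be sketched in a few lines. This is an illustrative reconstruction under stated assumptions, not the authors' tooling: the substring matching, the tag-to-category mapping, the rule that a multi-tagged paper counts once per distinct category, and the sample papers are all hypothetical. Only the query structure and the screening tallies (412 retrieved, 83 excluded) come from the text.

```python
from collections import Counter


def matches_query(text: str) -> bool:
    """Evaluate the Boolean query exactly as written:
    ("mitigation" AND "hallucination" AND "large language models") OR "evaluation"."""
    t = text.lower()
    core = all(k in t for k in ("mitigation", "hallucination", "large language models"))
    return core or "evaluation" in t


# Screening tallies reported in the Screening stage.
RETRIEVED, EXCLUDED = 412, 83
ELIGIBLE = RETRIEVED - EXCLUDED

# Hypothetical, partial mapping from paper-level tags to top-level categories.
TAG_TO_CATEGORY = {
    "prompt engineering": "Input/Prompt Optimization",
    "supervised learning": "Training and Learning Approaches",
    "contrastive learning": "Training and Learning Approaches",
    "decoding strategies": "Architectural Modifications",
    "external fact-checking": "Post-Generation Quality Control",
    "internal state probing": "Interpretability and Diagnostic Methods",
    "self-reflective agents": "Agent-Based Orchestration",
}


def category_counts(paper_tags: dict[str, list[str]]) -> Counter:
    """Count papers per category; a paper counts once per distinct category (assumption)."""
    counts: Counter = Counter()
    for tags in paper_tags.values():
        counts.update({TAG_TO_CATEGORY[tag] for tag in tags})
    return counts


if __name__ == "__main__":
    papers = {  # hypothetical tagged papers
        "paper_a": ["prompt engineering", "external fact-checking"],
        "paper_b": ["supervised learning", "contrastive learning"],
        "paper_c": ["prompt engineering"],
    }
    print(ELIGIBLE)
    print(category_counts(papers))
```

Note that, read literally, the query's OR branch matches any record mentioning "evaluation", which is one reason a full-text screening stage is needed after retrieval.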

This systematic review follows the PRISMA 2020 guidelines (see the Supplementary Materials) and has been registered with the Open Science Framework (OSF) under the registration ID: zbdy2. The registration details are publicly available at https://osf.io/zbdy2 (accessed 26 September 2025).

4.2. Proposed Taxonomy and Review Organization

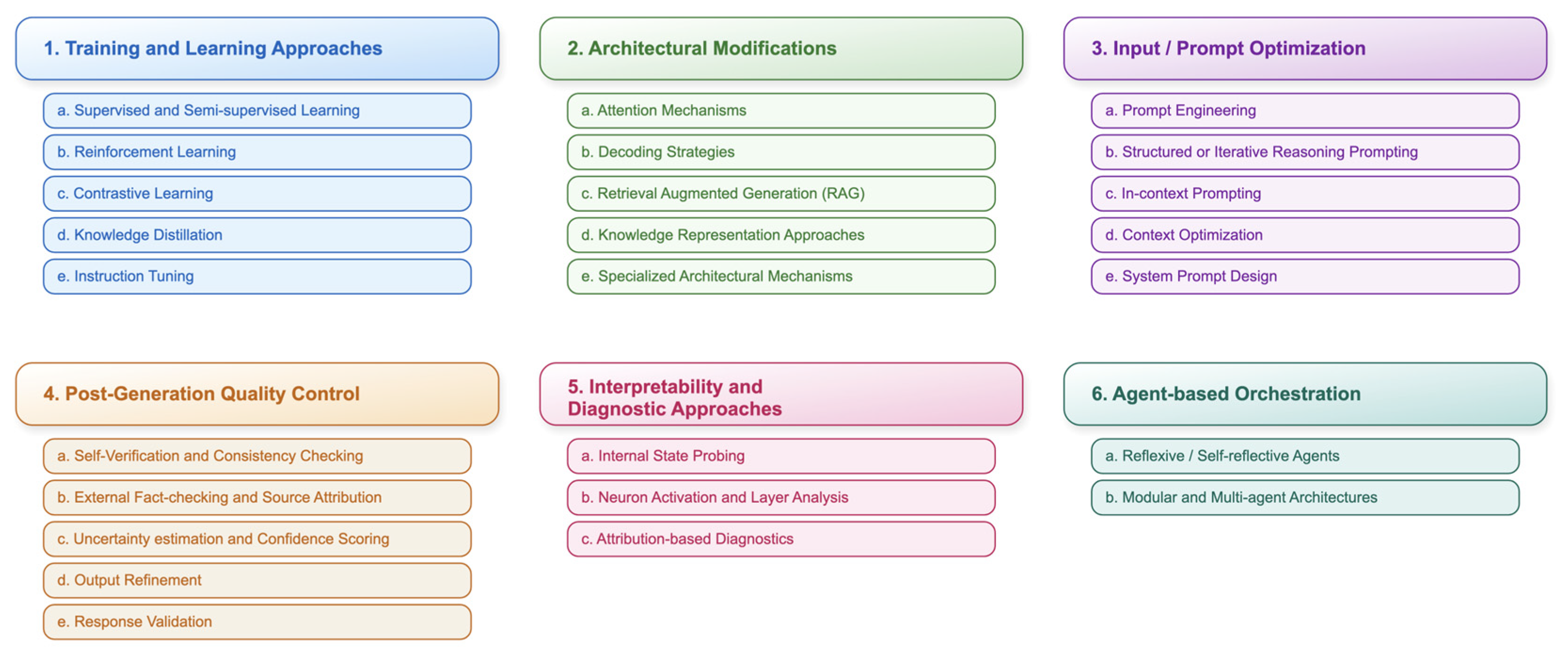

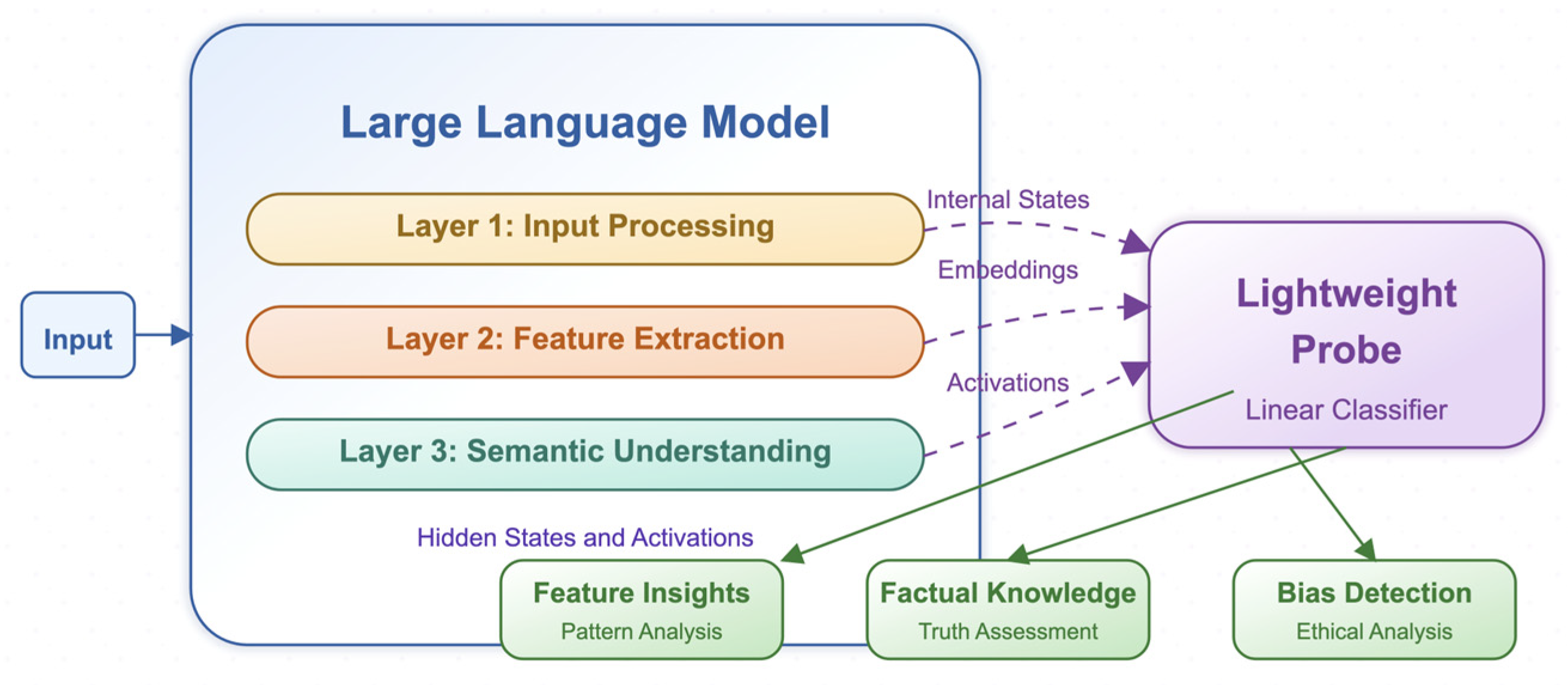

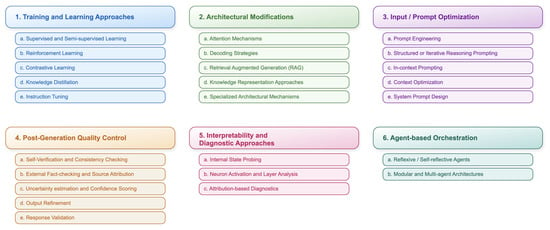

Building upon the systematic methodology outlined in Section 4.1, our review directly addresses the challenges inherent in classifying diverse hallucination mitigation techniques. A key difficulty is that most papers implement several strategies (often referred to as frameworks), so it is not always clear what the primary mitigation method is or to what extent the results can be safely attributed to it. We address this by explaining our placement rationale at the start of every major category. Our taxonomy organizes hallucination mitigation strategies into six main, method-focused categories, as shown in Figure 3.

Figure 3. Visualization of the proposed taxonomy for hallucination mitigation, organizing strategies into six method-based categories.

- Training and Learning Approaches (Section 5.1): Encompasses diverse methodologies employed to train and refine AI models, shaping their capabilities and performance.

- Architectural Modifications (Section 5.2): Covers structural changes and enhancements made to AI models and their inference processes to improve performance, efficiency, and generation quality.

- Input/Prompt Optimization (Section 5.3): Focuses on strategies for crafting and refining the text provided to AI models to steer their behavior and output, often specifically to mitigate hallucinations.

- Post-Generation Quality Control (Section 5.4): Encompasses essential post-generation checks applied to text outputs, aiming to identify or correct inaccuracies.

- Interpretability and Diagnostic Approaches (Section 5.5): Encompasses methods that help researchers understand why and where a model may be hallucinating (e.g., Internal State Probing, Attribution-based diagnostics).

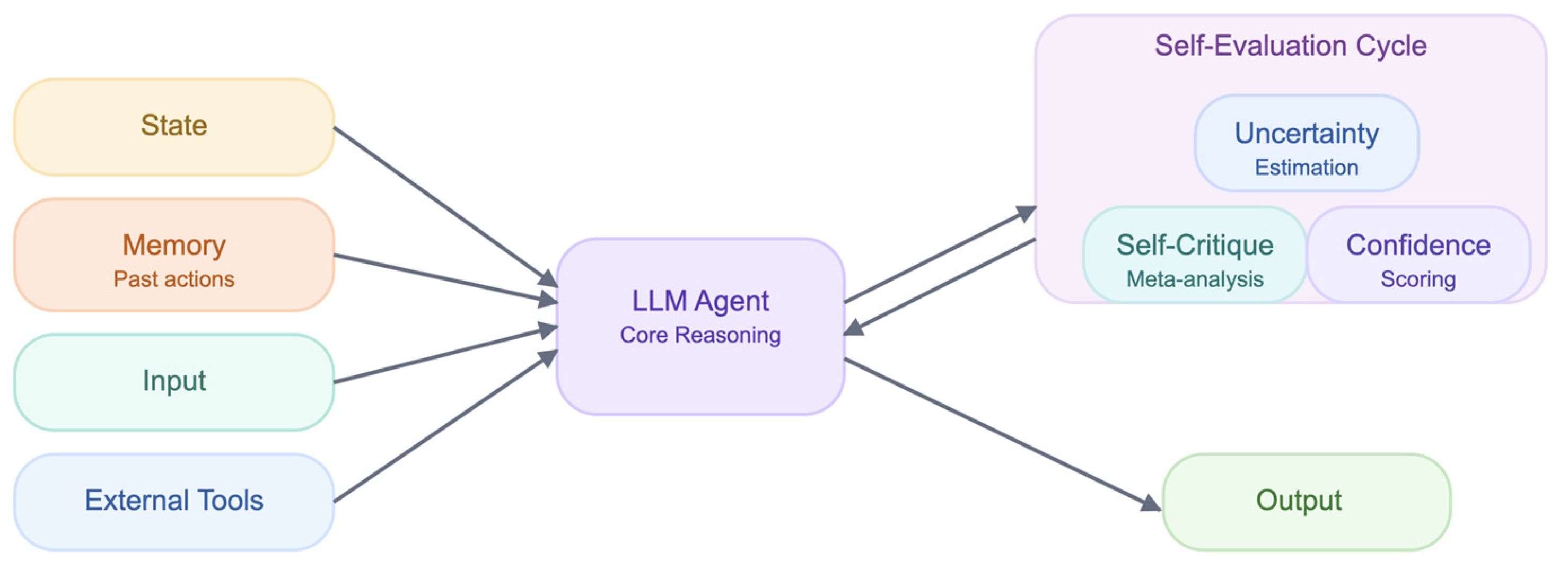

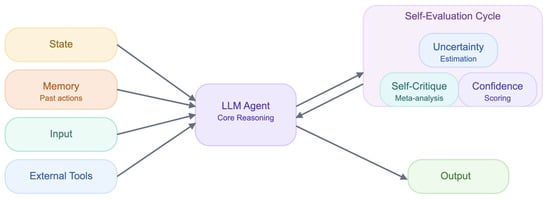

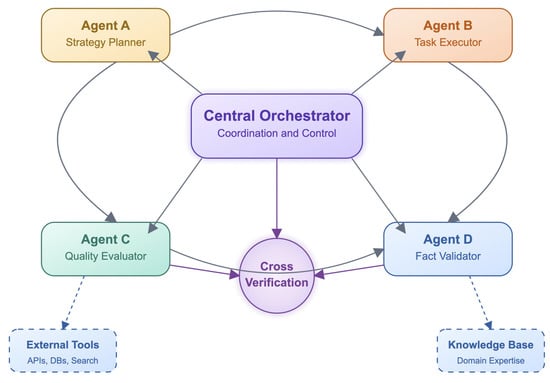

- Agent-Based Orchestration (Section 5.6): Includes frameworks comprising single or multiple LLMs within multi-step loops, enabling iterative reasoning and tool usage.

In Section 5, we outline the six categories by summarizing their main principles. We then review research papers that apply methods in each category. To highlight specific details of these methods, we also include “support papers”—works that, while not directly focused on hallucination mitigation, provide relevant insights, evidence, or results related to the methods.

4.3. Contributions and Key Findings

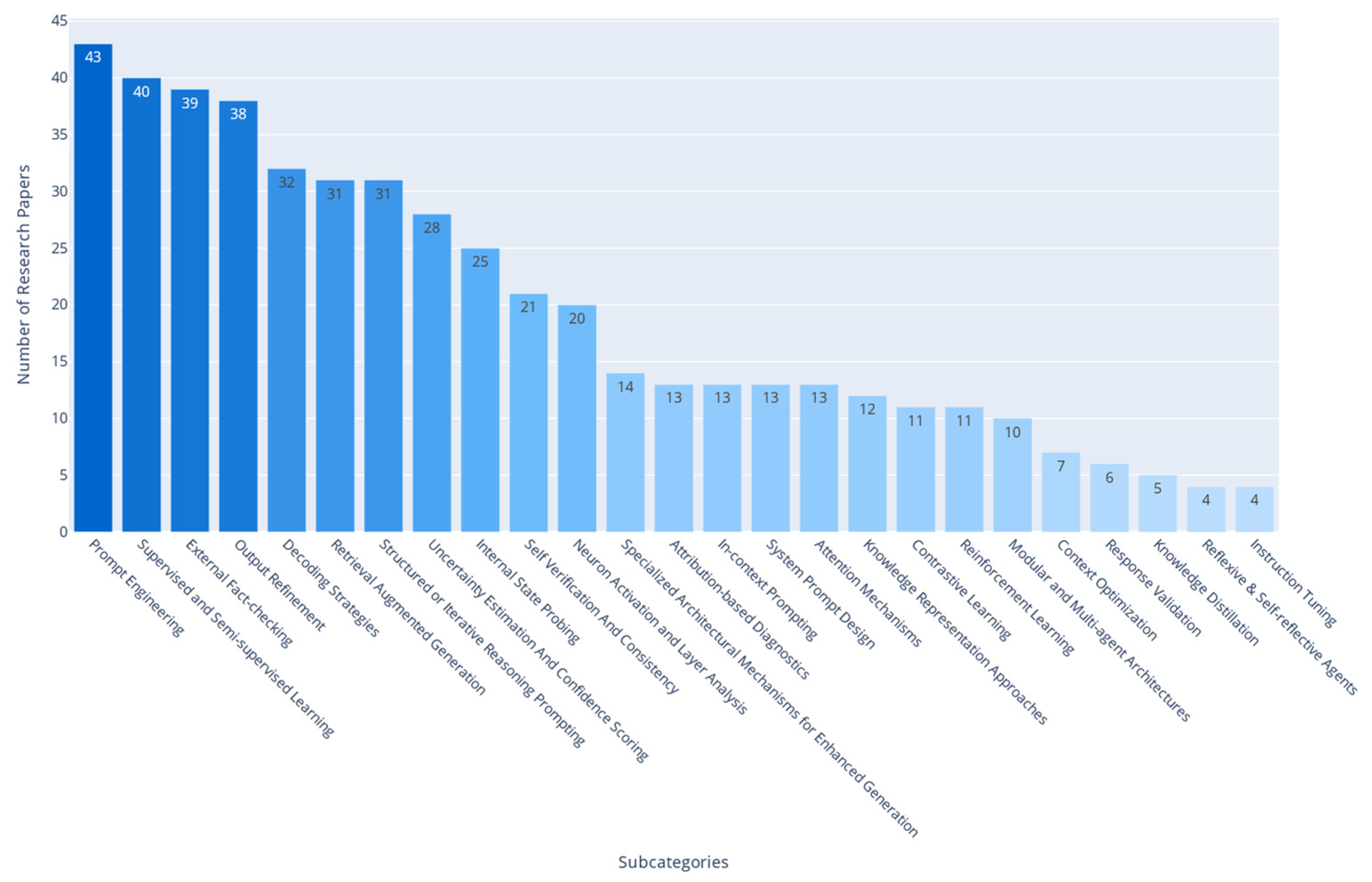

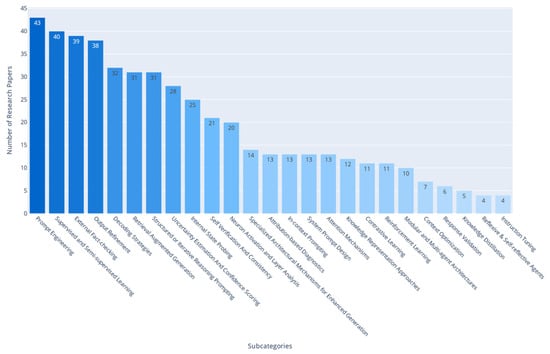

To complement our taxonomy, we present three visualizations that synthesize key trends in hallucination mitigation research and may indicate potential future research directions.

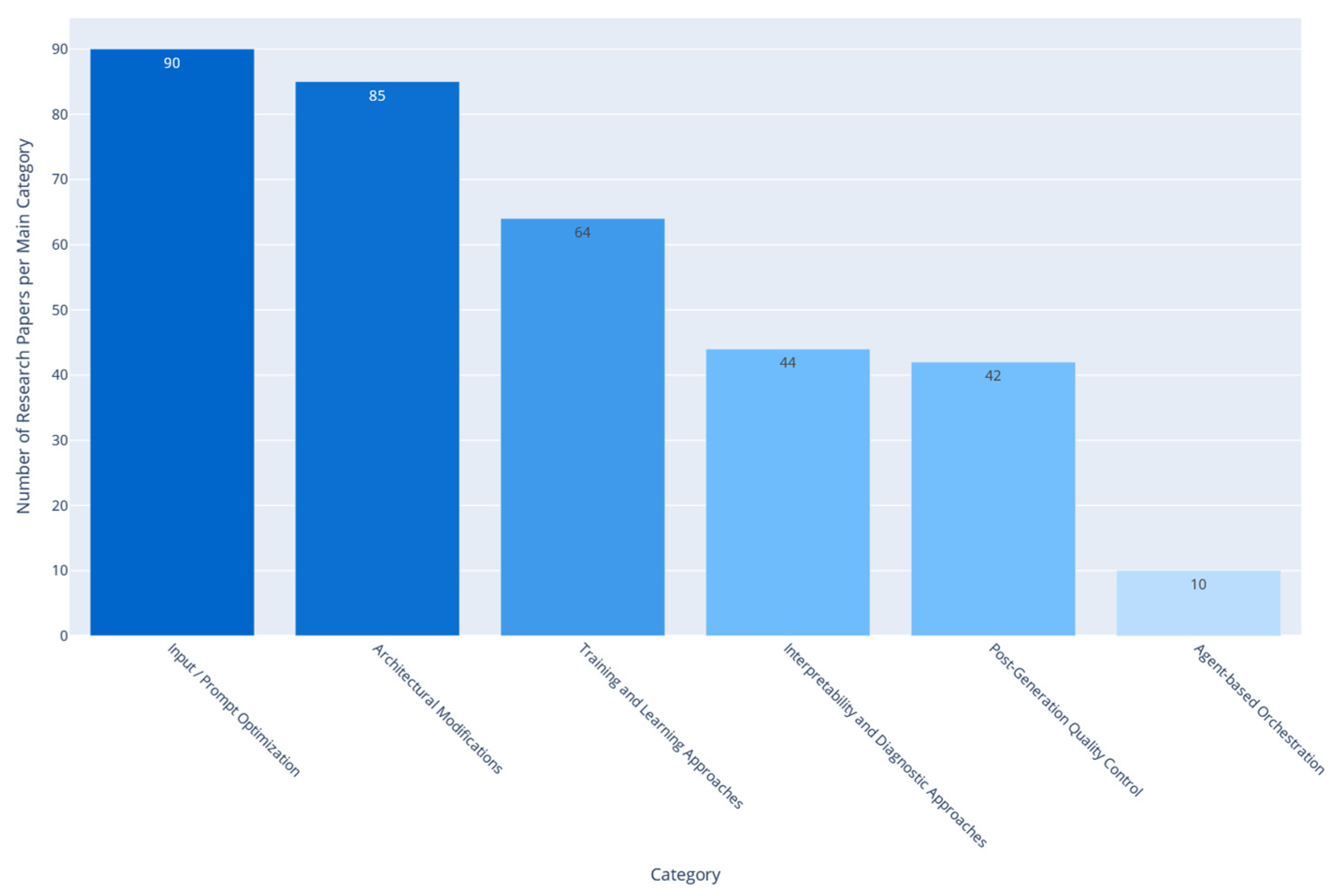

Figure 4 shows the distribution of papers across mitigation techniques. Prompt Engineering dominates due to its low cost, flexibility, and ability to guide model behavior without retraining. Supervised/Semi-supervised Learning, External Fact-checking, and Output Refinement follow, highlighting interest in post hoc refinement and verification. Less explored areas, such as Self-reflective Agents and Knowledge Distillation, point to opportunities for further research.

Figure 4. Distribution of research papers across individual hallucination mitigation subcategories, showing the prevalence of methods such as prompt engineering, external fact-checking, and decoding strategies.

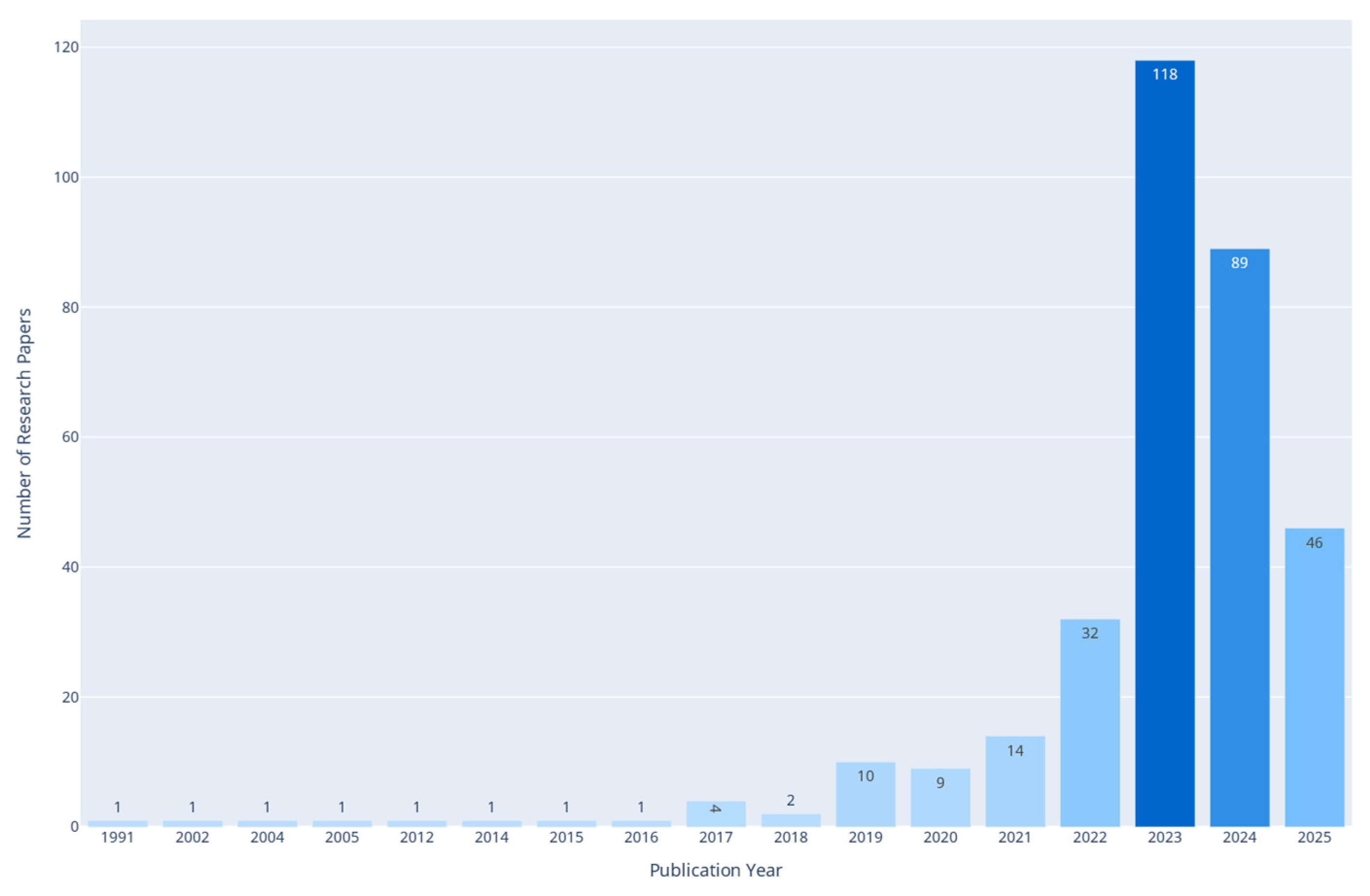

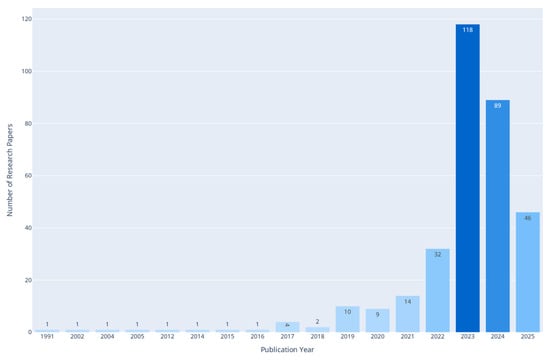

Figure 5 traces the temporal growth of hallucination mitigation research. The trendline shows a noticeable surge in publications from 2022 onward, particularly following the public attention garnered by generative LLMs like ChatGPT. Notably, over 100 papers were published in 2023 alone, signaling both the urgency and the complexity of the hallucination problem as LLMs become integrated into high-stakes applications.

Figure 5. Yearly publication trend of hallucination mitigation research in LLMs, indicating a sharp increase in scholarly output since 2022.

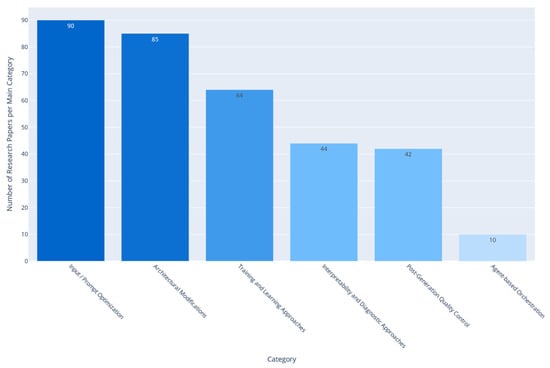

Figure 6 aggregates the number of papers per top-level category in our taxonomy, revealing that Input/Prompt Optimization, Architectural Modifications, and Training and Learning Approaches constitute the bulk of current research. This higher-level summary not only validates the comprehensiveness of the taxonomy but also provides researchers with a macro-level understanding of where scholarly attention is concentrated and where new contributions might be most impactful. Additionally, we have synthesized a comparison table of hallucination-mitigation subcategories derived from our literature review (Appendix A).

Figure 6. Aggregated number of research papers per top-level category in the proposed taxonomy.

Our taxonomy not only organizes existing literature but also reveals research gaps and emerging directions. By categorizing hallucinations by root causes, manifestations, and domain-specific effects, it highlights uneven scholarly attention. While areas like Prompt Engineering, Supervised/Semi-supervised Learning, and External Fact-checking dominate, others, such as Knowledge Distillation, Response Validation, and Self-reflective Agents, remain underexplored, offering opportunities for further work. The sharp rise in publications since 2022 underscores growing interest and methodological evolution.

5. Methods for Mitigating Hallucinations

5.1. Training and Learning Approaches

Training and Learning Approaches encompass a diverse set of methodologies employed to train and refine AI models. We include foundational approaches such as Supervised and Semi-supervised Learning, learning via interaction and reward signals in Reinforcement Learning, and differentiating examples using Contrastive Learning. We also include techniques such as Knowledge Distillation, which transfers knowledge from larger to smaller models, and Instruction Tuning, which aligns models to follow natural language instructions.

5.1.1. Supervised and Semi-Supervised Learning

Supervised learning [15,89] and semi-supervised learning [90,91] are foundational approaches that rely on high-quality datasets to ensure factual accuracy and effective generalization. Supervised learning uses annotated data to align model outputs with desired outcomes, while semi-supervised learning combines labeled and unlabeled data to reduce annotation costs. Their effectiveness depends on dataset quality, with well-curated and diverse examples helping to minimize bias and hallucinations [34,35,36,37,38]. Such datasets typically undergo iterative review and validation. Based on these methodologies, we group the papers in this subcategory as follows:

- Fine-Tuning with factuality objectives, where techniques such as FactPEGASUS make use of ranked factual summaries for factuality-aware fine-tuning [92] while FAVA generates synthetic training data using a pipeline involving error insertion and post-processing to address fine-grained hallucinations [93]. Faithful Finetuning applies weighted cross-entropy and fact-grounded QA losses to enhance faithfulness [94], while Principle Engraving fine-tunes LLaMA on self-aligned, principle-based responses [95]. Other work [5] examines the interplay between supervised fine-tuning and RLHF in mitigating hallucinations. Adversarial approaches build on Wasserstein GANs [90], with AFHN synthesizing features for new classes using labeled samples as context, supported by classification and anti-collapse regularizers to ensure feature discriminability and diversity.

- Synthetic Data and Weak Supervision, where studies automatically generate hallucinated data or weak labels for training. For instance, in [91] hallucinated tags are prepended to the model inputs so that the model can learn from annotated examples to control hallucination levels, while [96] uses BART and cross-lingual models with synthetic hallucinated datasets for token-level hallucination detection. Similarly, Petite Unsupervised Research and Revision (PURR) involves fine-tuning a compact model on synthetic data comprising corrupted claims and their denoised versions [97], while TrueTeacher uses labels generated by a teacher LLM to train a student model on factual consistency [98].

- Preference-Based Optimization and Alignment: In [99], a two-stage framework combines supervised fine-tuning on curated legal QA data with Hard Sample-aware Iterative Direct Preference Optimization (HIPO) to ensure factuality by leveraging signals based on human preferences, while in [80] a lightweight classifier is fine-tuned on contrastive pairs (hallucinated vs. non-hallucinated outputs). Similarly, mFACT, a metric for factual consistency, is derived from training classifiers in different target languages [100], while Contrastive Preference Optimization (CPO) combines a standard negative log-likelihood loss with a contrastive loss to fine-tune a model on a dataset of triplets (source, hallucinated translation, corrected translation) [101]. UPRISE employs a retriever model that is trained using signals from an LLM to select optimal prompts for zero-shot tasks, allowing the retriever to directly internalize alignment signals from the LLM [102]. Finally, behavioral tuning uses labeled data (dialogue history, knowledge sources, and corresponding responses) to improve alignment [103].

- Knowledge-Enhanced Adaptation: Techniques like HALO inject Wikidata entity triplets or summaries via fine-tuning [104], while Joint Entity and Summary Generation fine-tunes a pre-trained Longformer model on the PubMed dataset to mitigate hallucinations through supervised adaptation and data filtering [105]. The impact of injecting new knowledge into LLMs via supervised fine-tuning, and the associated risk of hallucinations, is also studied in [106].

- Hallucination Detection Classifiers: In [107], a LLaMA-2-7B model is fine-tuned on labeled data to classify hallucination-prone queries, while in [108] a sample selection strategy improves the efficiency of supervised fine-tuning, reducing annotation costs while preserving factuality.
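The weighted cross-entropy idea mentioned above for factuality-oriented fine-tuning can be illustrated with a minimal sketch. The weighting scheme shown here (a single multiplier applied to tokens flagged as fact-bearing) is an assumption made for illustration and is not necessarily the formulation used in [94]; the names `weighted_cross_entropy` and `fact_weight` are hypothetical.

```python
import math

def weighted_cross_entropy(token_probs, is_fact_token, fact_weight=2.0):
    """Weighted average negative log-likelihood over reference tokens.

    token_probs: probability the model assigned to each reference token.
    is_fact_token: booleans marking tokens assumed to carry factual content.
    fact_weight: hypothetical multiplier applied to fact-bearing tokens.
    """
    weights = [fact_weight if flag else 1.0 for flag in is_fact_token]
    losses = [-w * math.log(p) for w, p in zip(weights, token_probs)]
    return sum(losses) / sum(weights)

# Toy example: the middle token is a poorly predicted, fact-bearing token.
probs = [0.9, 0.5, 0.8]
flags = [False, True, False]
plain = weighted_cross_entropy(probs, flags, fact_weight=1.0)
weighted = weighted_cross_entropy(probs, flags, fact_weight=3.0)
```

With `fact_weight` above 1, errors on fact-bearing tokens contribute more to the loss than errors on other tokens, which is the intuition behind factuality-aware objectives.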

Beyond their primary use, supervised and semi-supervised learning also serve as enabling methods that complement approaches such as Contrastive Learning, Internal State Probing, Retrieval-Augmented Generation, and Prompt Engineering. They have been applied to tasks including answer aggregation from annotated data [109], training factuality classifiers [83,110,111,112], generating synthetic datasets [113,114], and contributing to refinement pipelines [15,53,115,116,117,118,119,120,121,122,123]. More specifically:

- Training of factuality classifiers: Supervised finetuning is used to train models on labeled text data in datasets such as HDMBENCH, TruthfulQA, and multilingual datasets demonstrating improvements in task-specific performance and factual alignment [110,111,118]. Additionally, training enables classifiers to detect properties such as honesty and lies within intermediate representations resulting in increased accuracy and separability of these concepts as shown in [83,112,117].

- Synthetic data creation, which involves injecting hallucinations into correct reasoning steps, as in FG-PRM, which trains Process Reward Models to detect specific hallucination types [113]. Approaches like RAGTruth provide human-annotated labels on response grounding to support supervised training and evaluation [114], while [124] introduces an entity-substitution framework that generates conflicting QA instances to address over-reliance on parametric knowledge.

- Refinement pipelines employ supervised training in various forms, such as critic models trained on LLM data with synthetic negatives [115], augmentation techniques like TOPICPREFIX for improved grounding [116], and models such as HVM trained on the FATE dataset to distinguish faithful from unfaithful text [119]. Related methods target truthful vs. untruthful representations [53,120,121], while self-training on synthetic data outperforms crowdsourced alternatives [122]. Finally, WizardLM fine-tunes LLaMA on generated instructions, enhancing generalization [123].

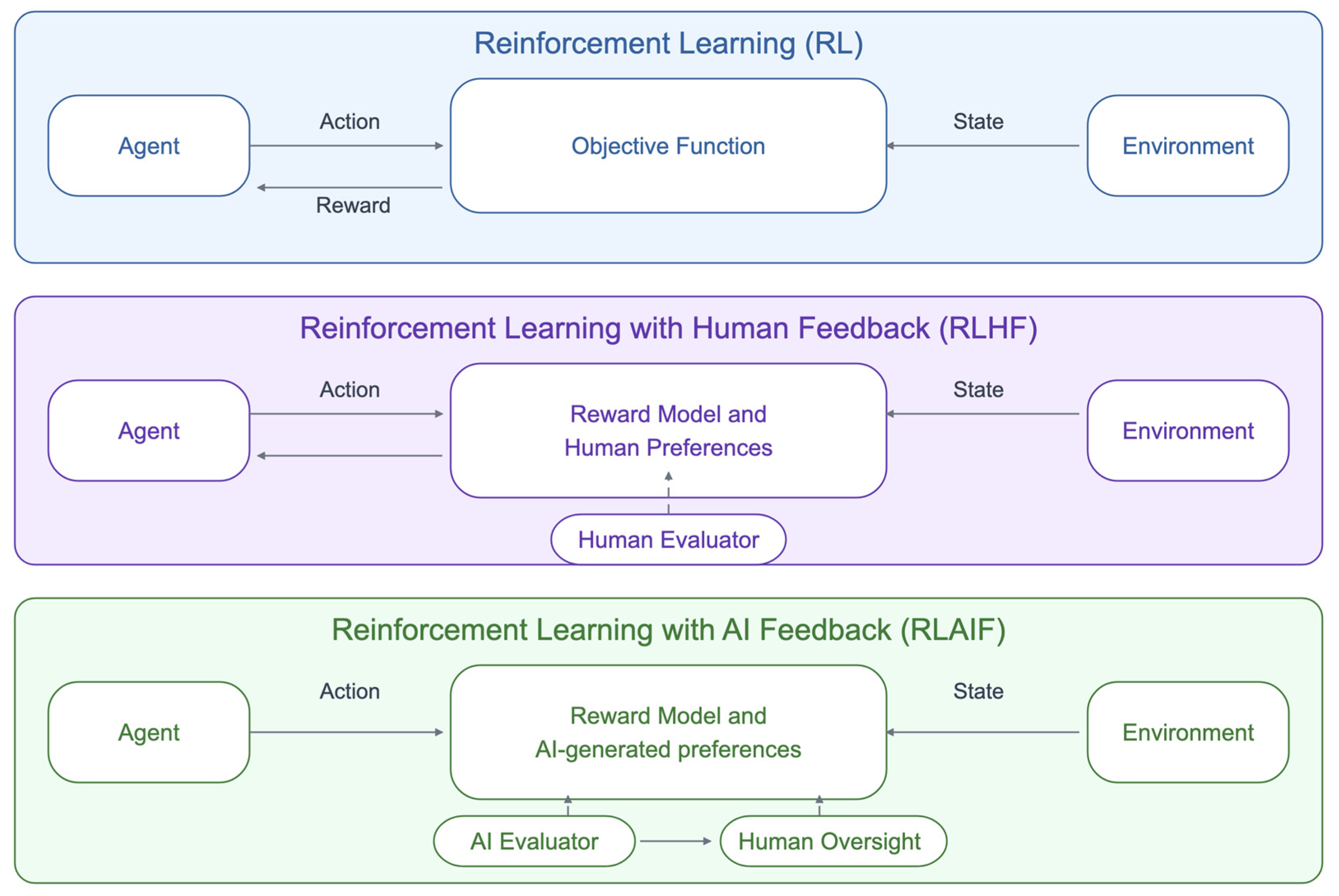

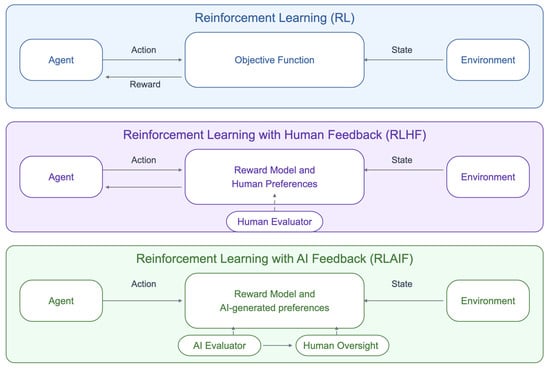

5.1.2. Reinforcement Learning

Reinforcement Learning (RL) (Figure 7) trains models by optimizing reward signals rather than relying on labeled data, learning through trial-and-error to maximize cumulative rewards [125]. For example, NLI-based reward functions evaluate whether summaries are entailed by source documents, encouraging fluent and grounded outputs with fewer hallucinations [126]. Other approaches fine-tune LLMs with factuality-based signals such as FactScore and confidence-derived metrics [127]. Additionally, techniques like process supervision improve both generation and reward modeling by ranking multi-step reasoning chains, forming the basis for systems such as InstructGPT [128].

Figure 7.

Overview of reinforcement learning for hallucination mitigation, including RLHF and RLAIF.

Defining precise and scalable reward functions in standard RL is challenging, leading to the development of Reinforcement Learning from Human Feedback (RLHF) [15,129]. In RLHF, human feedback guides model outputs to align with styles, constraints, or preferences, which helps reduce hallucinations by improving accuracy and encouraging models to acknowledge uncertainty [15,129]. The reward model provides the optimization signal, enhancing factuality, coherence, and ethical alignment [15,81,130,131]. Notable results include Anthropic’s Constitutional AI, which achieved an 85% reduction in harmful hallucinations [81], and OpenAI’s GPT-4, which showed a 19% improvement over GPT-3.5 on adversarial factuality benchmarks and strong gains on TruthfulQA [131]. More recent work explores reward-model-guided fine-tuning, where self-evaluation signals push models to abstain when queries exceed their parametric knowledge, converting potential hallucinations into refusals [132].
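The reward model at the center of RLHF is commonly trained on pairwise human preferences. The following is a minimal sketch, assuming the widely used pairwise objective $-\log \sigma(r_{\text{chosen}} - r_{\text{rejected}})$; the function name and the scalar rewards are illustrative placeholders, and the cited systems may differ in detail.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    Computed in a numerically stable way: softplus(-margin).
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# The loss is small when the preferred response already scores higher,
# and large when the reward model ranks the pair the wrong way round.
good_ranking = pairwise_preference_loss(2.0, 0.0)
bad_ranking = pairwise_preference_loss(0.0, 2.0)
```

Minimizing this loss over many labeled pairs pushes the reward model to score preferred (for example, more factual) responses above rejected ones; the policy is then optimized against that learned signal.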

While RLHF has proven effective, its application is not without challenges. Research shows that extensive RL training can increase context-dependent sycophancy, with models favoring user agreement over factual accuracy [69]. Moreover, RLHF is resource-intensive, time-consuming, and subject to human and cultural biases, while inverse scaling issues may cause models to express stronger political views or resist certain instructions [37,81,133]. To address these limitations, Reinforcement Learning from AI Feedback (RLAIF) replaces or supplements human evaluators with AI systems, automating feedback generation and often surpassing RLHF in helpfulness, honesty, and harmlessness while dramatically reducing annotation costs [81,134]. For instance, RLAIF achieved a tenfold reduction in human labor, and models trained on GPT-4 critiques outperformed RLHF counterparts on MT-Bench and AlpacaEval [134]. Related approaches include Reinforcement Learning with Knowledge Feedback (RLKF), which uses factual preferences derived from the DreamCatcher tool to train reward models with PPO [135]. Similarly, HaluSearch frames response generation as a dual-process system that alternates between fast and slow reasoning, employing Monte Carlo Tree Search (MCTS) and self-evaluation reward signals to guide reasoning paths away from hallucinations through step-level verification [136].

RLHF and RLAIF fall within the broader paradigm of Alignment Learning [130], which aims to ensure AI systems act in line with human values and intentions [81,130,137,138]. Despite the costs of data collection, RL and its variants typically improve factual consistency and user satisfaction [15], while advanced forms such as hierarchical and multi-agent RL are being explored to refine alignment [139]. Reinforcement Learning has also been identified as a key enabler for multi-agent LLM systems, supporting meta-thinking abilities like self-reflection and adaptive planning, thereby fostering more trustworthy agents [27,28]. While RL/RLHF/RLAIF can make use of AI agents that function as learners or decision-makers [15,125,131], we categorize RL, RLHF, and RLAIF under Training and Learning Approaches, since their primary focus lies in optimizing behavior through iterative learning—defining reward signals, training policies, fine-tuning, and incorporating preferences. By contrast, we reserve Agent-based Orchestration for frameworks emphasizing real-time decision-making, distinguishing between approaches centered on how models learn versus how they act in real time.

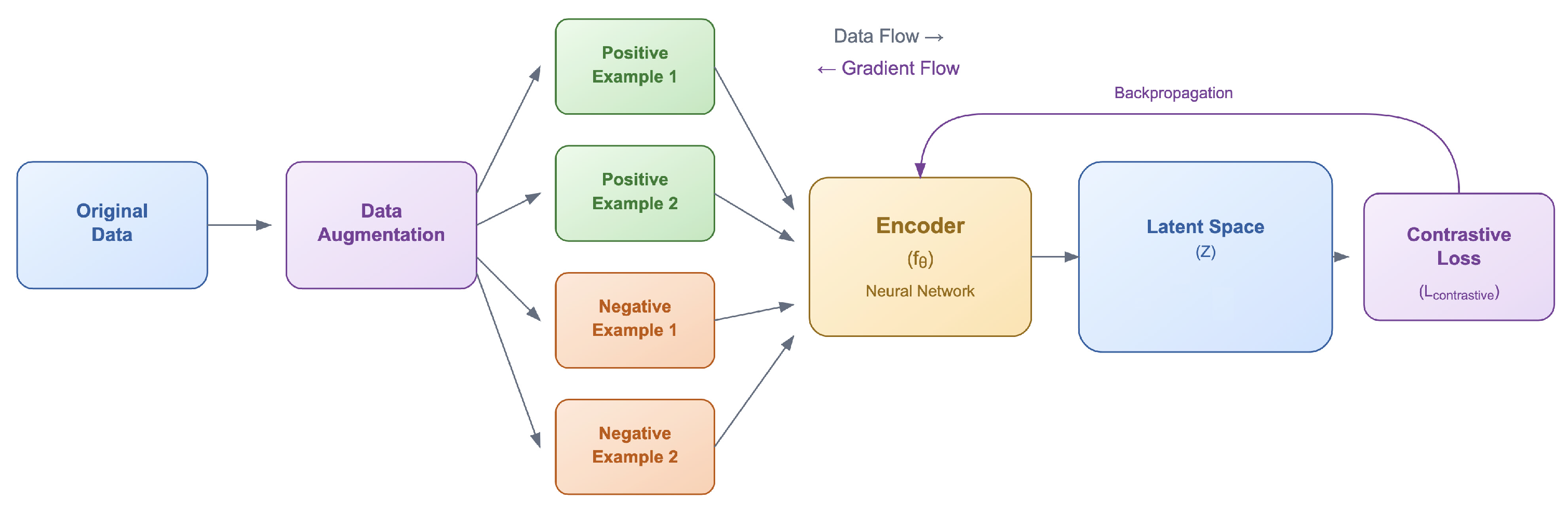

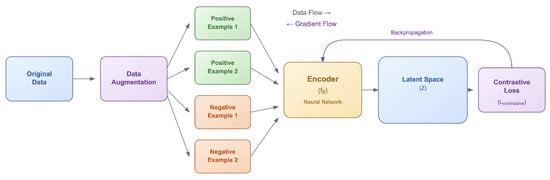

5.1.3. Contrastive Learning

Contrastive learning (Figure 8) is a training paradigm that sharpens a model’s decision boundaries by teaching it to differentiate positive examples (factually sound statements) from negative examples (incorrect information). A major benefit of contrastive learning is its ability to use unlabeled or weakly labeled data and to efficiently learn representations from unstructured data, as shown in techniques such as SimCLR [140] and Momentum Contrast (MoCo) [141]. Although initially targeting computer vision, these methods were later adapted to help mitigate hallucinations by generating synthetic “negative” examples, demonstrating that contrastive learning can significantly reduce the need for large and curated datasets [140,141].

Figure 8.

Illustration of the contrastive learning paradigm used to distinguish hallucinated from factual content through hard negative sampling and representation alignment.

Contrastive methods can improve content quality, such as in [142], where the loss is calculated over ranked candidate summaries and the model is penalized if a low-quality summary is selected over a better one. This loss is combined with the standard MLE loss, so that training optimizes a joint contrastive and likelihood objective over factuality-ranked summaries. In contrastive learning, carefully constructed negative samples that closely resemble plausible but incorrect content (known as “hard negatives”) can be particularly beneficial, as they challenge the model to learn subtle distinctions [143]. Although [143] does not directly target hallucinations, its proposed debiased contrastive loss has influenced research in hallucination mitigation that selects hard negatives for training models with stronger factual grounding, such as [16,101].
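The combination of a ranking-based contrastive term with the MLE loss described above can be sketched as follows. This is a generic pairwise margin formulation under stated assumptions, not a reproduction of the exact objective in [142]; `margin` and `gamma` are hypothetical hyperparameters.

```python
def ranking_loss(scores, margin=0.01):
    """Pairwise margin loss over candidates sorted best-first.

    scores[i] is the model score of the i-th best candidate (by quality).
    A penalty is incurred whenever a worse candidate j is not scored at
    least (j - i) * margin below a better candidate i.
    """
    total = 0.0
    for i in range(len(scores)):
        for j in range(i + 1, len(scores)):
            total += max(0.0, scores[j] - scores[i] + (j - i) * margin)
    return total

def combined_loss(mle_loss, scores, gamma=1.0, margin=0.01):
    """Standard MLE loss plus a weighted contrastive ranking term."""
    return mle_loss + gamma * ranking_loss(scores, margin)

well_ordered = ranking_loss([0.9, 0.5, 0.1])  # model agrees with the ranking
mis_ordered = ranking_loss([0.1, 0.5])        # worse summary scored higher
```

The ranking term is zero when the model already orders candidates consistently with their quality, so in that case only the MLE term drives training.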

Several studies have examined contrastive learning as a strategy for mitigating hallucinations in LLMs. MixCL reduces hallucinations in dialogue tasks by training models to distinguish between appropriate and inappropriate responses through negative sampling and mixed contrastive learning, though it faces challenges such as catastrophic forgetting [16]. This issue has been addressed with methods like elastic weight consolidation and experience replay, which preserve general knowledge by constraining updates to important parameters or rehearsing earlier data while fine-tuning on hallucination-prone tasks [61,122]. Other approaches, such as SimCTG, introduce contrastive objectives and decoding methods to improve coherence and reduce degeneracy [144]. Iter-AHMCL employs two guidance models (one trained on low-hallucination data and another on hallucinated samples) to provide iterative contrastive signals that adjust LLM representation layers [145]. Contrastive Preference Optimization (CPO) combines negative log-likelihood loss with contrastive loss to favor non-hallucinated translations [101], and TruthX explicitly edits LLM parameters in “truthful space” using contrastive gradients, shifting activations toward truthful outputs [121]. These methods highlight the promise of contrastive learning for hallucination mitigation, though its effectiveness varies, with some studies suggesting that contrasting hallucinated versus ground-truth outputs may actually reduce detection accuracy [85].

While contrastive learning is most often used as a training method to refine model representations, many related strategies apply its core principle of contrasting desirable and undesirable outputs. For instance, Decoding by Contrasting Retrieval Heads (DeCoRe) penalizes hallucination-prone predictions during inference [146], while Delta compares masked and unmasked inputs to suppress hallucinated tokens [147]. LLM Factoscope uses contrastive analysis of token distributions across layers to separate factual from hallucinated outputs [148], and Self-Consistent Chain-of-Thought Distillation (SCOTT) filters rationales from teacher models, retaining those most consistent with correct answers [149]. Decoding by Contrasting Layers (DoLa) contrasts early and late-layer activations to suppress hallucinated distributions, leveraging the observation that factual knowledge often resides in deeper layers [150]. Adversarial Feature Hallucination Networks (AFHN) combine adversarial alignment with an anti-collapse regularizer to prevent mode-collapsed feature hallucinations [90]. In neural machine translation, contrastive pairs of hallucinated vs. non-hallucinated outputs have also been used as supervision for hallucination detection, albeit without a contrastive loss [151]. Although most of these methods, apart from [90,151], operate without training, they illustrate how the contrastive paradigm can enhance generation quality, and we address them further in Section 5.2.2 “Decoding Strategies,” Section 5.5.1 “Internal State Probing,” and Section 5.1.4 “Knowledge Distillation.”
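The layer-contrast idea described above for DoLa can be illustrated with a small sketch. It assumes that logits from one early layer and from the final layer are already available for the same position; how the early layer is chosen is outside the scope of this sketch, and `alpha` is a hypothetical plausibility threshold.

```python
import math

def log_softmax(logits):
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def layer_contrast_scores(late_logits, early_logits, alpha=0.1):
    """Score each token by log p_late - log p_early.

    Tokens whose late-layer probability falls below alpha times the
    maximum late-layer probability are excluded (scored as -inf).
    """
    late = log_softmax(late_logits)
    early = log_softmax(early_logits)
    threshold = math.log(alpha) + max(late)
    return [
        l - e if l >= threshold else float("-inf")
        for l, e in zip(late, early)
    ]

# Token 1 gains probability between the early and the late layer,
# token 0 is already favored early on, token 2 is implausible.
late_logits = [2.0, 1.8, -5.0]
early_logits = [2.0, 0.5, -5.0]
scores = layer_contrast_scores(late_logits, early_logits)
best_token = max(range(len(scores)), key=scores.__getitem__)
```

In this toy case greedy decoding on the late-layer logits alone would pick token 0, whereas the contrast favors token 1, whose probability rises in deeper layers.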

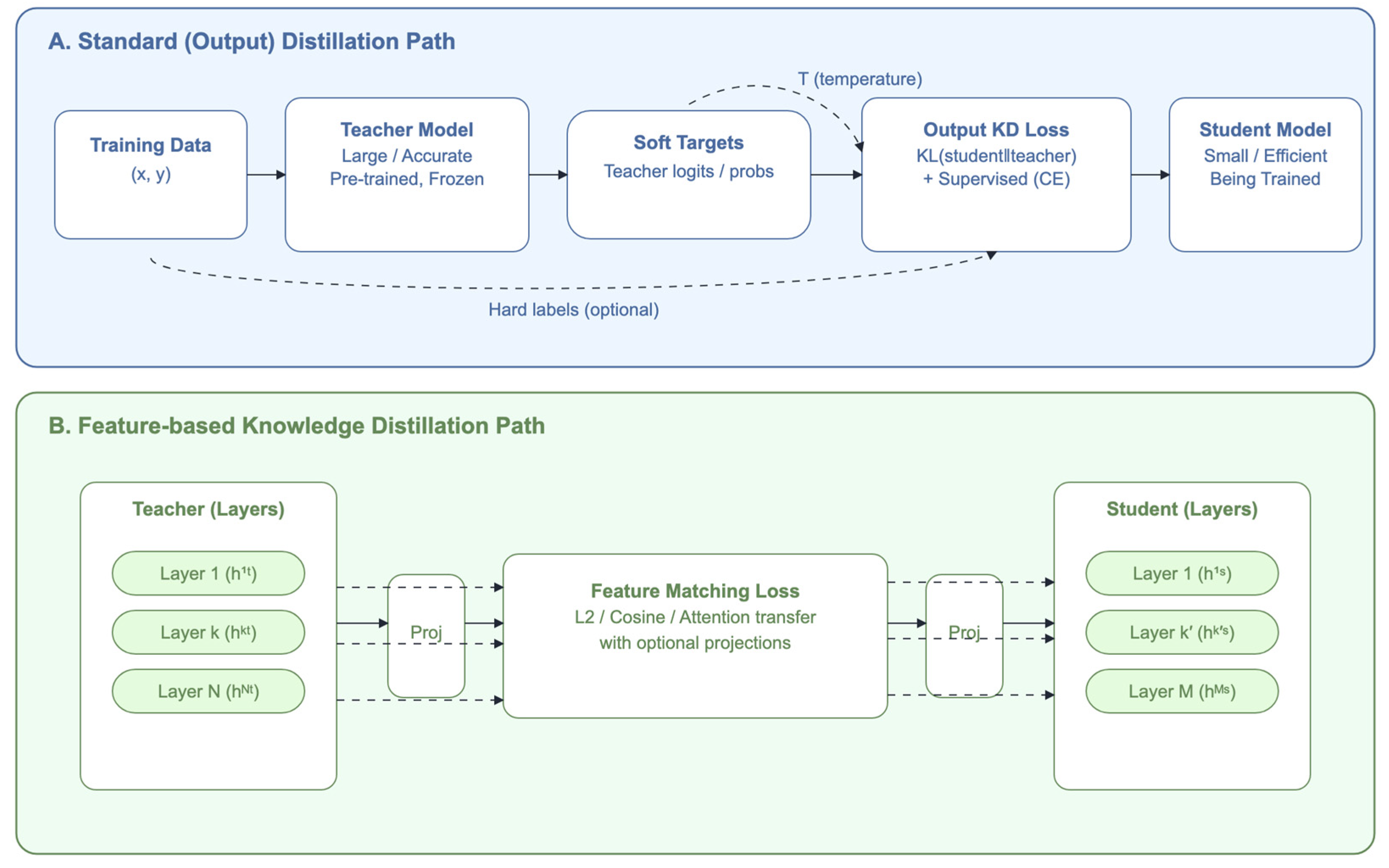

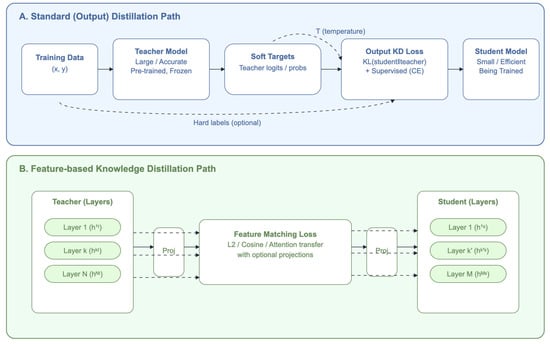

5.1.4. Knowledge Distillation

Knowledge distillation (KD) (Figure 9) involves training a smaller and more efficient student model to emulate the outputs and learned representations of a larger, more knowledgeable teacher model, thereby allowing the student to benefit from the factual grounding and calibrated reasoning of the teacher [58,122,152]. It has emerged as a promising approach to enhance the factual accuracy of LLMs, particularly in tasks susceptible to hallucinations, such as summarization and question answering.

Figure 9.

Knowledge distillation framework where a student model learns to emulate a teacher model’s outputs to reduce hallucinations and improve factual consistency.

The efficacy of knowledge distillation in reducing hallucinations is shown in studies such as [58], where transferring knowledge from a high-capacity teacher to a smaller student model yields significant improvements in exact match accuracy and reductions in hallucination rates. A smoothed knowledge distillation method is introduced in [152], where soft labels mitigate hallucinations and improve factual consistency across summarization and QA. Soft labels, viewed as probability distributions, train the student to be less overconfident and more grounded. Building on these findings, Ref. [122] compares knowledge distillation with self-training in retrieval-augmented QA, showing both to be beneficial but with knowledge distillation offering slightly stronger factual accuracy. Further extensions include Chain-of-Thought (CoT) Distillation and SCOTT, which leverage CoT reasoning and self-evaluation of larger models to train smaller models for multi-step reasoning without generating lengthy intermediate steps [149,153]. Similarly, Ref. [154] employs LLM-generated explanations to fine-tune a Small Language Model (SLM), followed by reinforcement learning to reward correct reasoning paths.
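The role of soft labels described above can be made concrete with a minimal sketch of a distillation loss. It assumes the common formulation in which the student matches temperature-softened teacher probabilities under a KL divergence; the specific smoothing used in [152] may differ, and `temperature` is an illustrative hyperparameter.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    z = sum(exps)
    return [e / z for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

teacher = [4.0, 1.0, 0.5]
matched = distillation_loss([4.0, 1.0, 0.5], teacher)
overconfident = distillation_loss([12.0, 0.0, 0.0], teacher)
```

A student that places nearly all probability mass on one token is penalized relative to one that reproduces the teacher's softer distribution, which is how soft labels discourage overconfidence.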

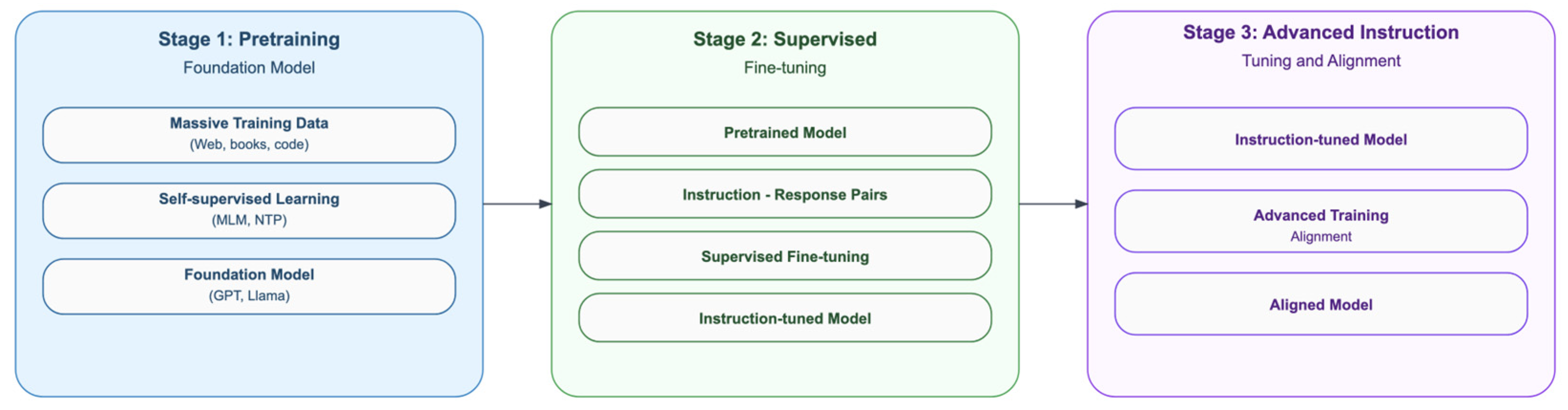

5.1.5. Instruction Tuning

Instruction tuning (Figure 10) is a specialized form of supervised learning that fine-tunes language models on datasets of input–output pairs framed as natural language instructions [128]. While it uses prompts for formatting, its focus is on training models to behave differently rather than relying on prompting at inference, distinguishing it from the in-context methods discussed in “In-context Prompting.” The goal is to optimize models to follow instructions more faithfully, either by improving factuality and coherence in response to carefully designed prompts [155,156] or by explicitly encouraging factual grounding while discouraging speculation [15,99,157]. Instruction tuning also benefits directly from scaling [63,156], enhancing task generalization and mitigating hallucinations by aligning outputs with factual instructions [99,127,158]. Furthermore, it enables uncertainty-aware responses [155,159]. These benefits are realized through mechanisms such as:

Figure 10.

Instruction tuning paradigm showing how models are trained on natural language instructions to improve factual alignment and task generalization.

- Factual alignment can be achieved through domain-specific fine-tuning, as in [99], where LLMs are trained on datasets of legal instructions and responses. Another approach, Curriculum-based Contrastive Learning Cross-lingual Chain-of-Thought (CCL-XCoT), integrates curriculum-based cross-lingual contrastive learning with instruction fine-tuning to transfer factual knowledge from high-resource to low-resource languages. Its Cross-lingual Chain-of-Thought (XCoT) strategy further enhances this process by guiding the model to reason before generating in the target language, effectively reducing hallucinations [160].

- Consistency alignment, which is achieved in [158] during a two-stage supervised fine-tuning process: The first step uses instruction–response pairs while in the second step, pairs of semantically similar instructions are used to enforce aligned responses across instructions.

- Uncertainty awareness, where techniques such as R-Tuning are employed to incorporate uncertainty-aware data partitioning, guiding the model to abstain from answering questions outside its parametric knowledge [15,155].

- Data-centric grounding, where Self-Instruct introduces a scalable, semi-automated method for generating diverse data without human annotation [159]. It begins with a small set of instructions and uses a pre-trained LLM to generate new tasks and corresponding input-output examples, which are then filtered and used to fine-tune the model, thus generating more aligned and grounded outputs.
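The uncertainty-aware data partitioning mentioned in the list above can be sketched as follows. This is a simplified illustration in the spirit of R-Tuning, not its published procedure: the exact-match comparison, the refusal string, and the `answer_fn` callable standing in for the pre-trained model are all assumptions.

```python
def partition_by_knowledge(examples, answer_fn):
    """Split QA pairs by whether the model's own answer matches the reference."""
    certain, uncertain = [], []
    for question, reference in examples:
        prediction = answer_fn(question)
        matches = prediction.strip().lower() == reference.strip().lower()
        (certain if matches else uncertain).append((question, reference))
    return certain, uncertain

def build_training_records(certain, uncertain, refusal="I don't know."):
    """Keep reference answers for known questions, refusals for the rest."""
    records = [{"prompt": q, "response": a} for q, a in certain]
    records += [{"prompt": q, "response": refusal} for q, _ in uncertain]
    return records

# Hypothetical stand-in for querying the model before fine-tuning.
def toy_model(question):
    return {"Capital of France?": "Paris"}.get(question, "unknown")

examples = [
    ("Capital of France?", "Paris"),
    ("Capital of Atlantis?", "Poseidonis"),
]
certain, uncertain = partition_by_knowledge(examples, toy_model)
records = build_training_records(certain, uncertain)
```

Fine-tuning on such records teaches the model to answer where its parametric knowledge suffices and to abstain elsewhere.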

Research demonstrates that instruction tuning can be effectively scaled by curating a high-quality collection of over 60 publicly available datasets spanning diverse NLP tasks [156]. Using the Flan-PaLM model, substantial gains were reported in zero-shot and few-shot generalization, with results showing that greater instruction diversity improves factual grounding and reduces hallucinations on unseen tasks by exposing models to broader factual contexts [127,156]. Additionally, incorporating Chain-of-Thought (CoT) reasoning during training further strengthens reasoning capabilities [156].

Despite its benefits, instruction tuning can also exacerbate hallucinations in LLMs: fine-tuning on unseen examples can increase hallucination rates without safeguards [89] and can degrade the calibration of a model’s predictive uncertainty, leading to overconfident but hallucinated outputs [79], a finding corroborated by independent observations from OpenAI in the GPT-4 Technical Report [131]. To mitigate these risks, some techniques explicitly train a model to say “don’t know” or to generate refusal responses during instruction tuning, encouraging it to express uncertainty when appropriate [15,155], while others rely on more robust decoding strategies and uncertainty estimation methods [79].

5.2. Architectural Modifications

Architectural modifications refer to structural changes and enhancements in model design or inference processes aimed at improving performance, efficiency, and generation quality. These include advances in attention mechanisms, refined decoding strategies, integration of external information through Retrieval-Augmented Generation (RAG), and the use of alternative knowledge representation approaches or specialized mechanisms to improve controllability and factual accuracy. Since some methods bridge architectural and post-generation paradigms, we categorize them based on their primary operational stage: approaches that modify model internals or inference with structured knowledge are treated as “Architectural Modifications,” while those that operate mainly on generated outputs for verification or attribution are discussed in “Post-Generation Quality Control”.

5.2.1. Attention Mechanisms

The attention mechanism, first introduced in a groundbreaking paper on neural machine translation [161] and later revolutionized by the Transformer architecture [162], transformed natural language processing by allowing models to weigh different parts of the input context dynamically, thereby focusing on the most relevant information at each step of generation. This granular control can directly reduce hallucinations by helping the model pinpoint critical pieces of factual data while research on multi-head attention has shown that heads specialized in factual recall can guide the model to learn multiple relationships in parallel [163]. Research links hallucinations to shifts in attention weights, where input perturbations can steer models toward specific outputs [164]. Attention mechanisms also help explain instruction drift, as attention decays over extended interactions [165]. Split-softmax addresses this by reweighting attention toward the system prompt, mitigating drift-related hallucinations [165]. Inference-Time Intervention (ITI) identifies attention heads containing desired knowledge and modifies their activations, with nonlinear probes extracting additional information to guide more factual outputs [166]. Other approaches adapt attention using Graph Neural Networks for better contextual focus [167], Sliding Generation with overlapping prompt segments to reduce boundary information loss [101], and novel head architectures [168].
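The activation-shifting step behind approaches such as ITI can be illustrated with a minimal sketch. It assumes that a probe has already produced a direction associated with the desired behavior for a given attention head; the direction, the strength `alpha`, and the scale `sigma` used here are hypothetical values, and the sketch omits head selection entirely.

```python
import math

def shift_along_direction(activation, direction, alpha=5.0, sigma=1.0):
    """Return activation + alpha * sigma * unit(direction)."""
    norm = math.sqrt(sum(d * d for d in direction))
    unit = [d / norm for d in direction]
    return [a + alpha * sigma * u for a, u in zip(activation, unit)]

def projection(vector, direction):
    """Length of the component of vector along direction."""
    norm = math.sqrt(sum(d * d for d in direction))
    return sum(v * d for v, d in zip(vector, direction)) / norm

head_output = [0.2, -0.1, 0.4]
probe_direction = [1.0, 0.0, 1.0]  # hypothetical probe-derived direction
shifted = shift_along_direction(head_output, probe_direction, alpha=2.0, sigma=0.5)
gain = projection(shifted, probe_direction) - projection(head_output, probe_direction)
```

The shift increases the component of the head output along the probe direction by exactly `alpha * sigma` and leaves the orthogonal components unchanged.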

Another line of research focuses on directly manipulating tokens. Adaptive Token Fusion (ATF) merges tokens to alter the weight distribution in attention, conditioning computation over a more efficiently structured token space [169]. Truth Forest applies multi-dimensional orthogonal probes to detect “truth representations” in hidden states, shifting them along directions of maximum factuality to bias the attention mechanism, while Random Peek extends this across wider sequence positions to reinforce truthfulness and reduce hallucinations [120]. Other methods indirectly manipulate attention by conditioning the encoder or decoder during computation. For example, Ref. [170] analyzes attention patterns in relation to factual correctness, modifying them through a decoding framework, while [146] induces hallucinations by masking attention heads that specialize in content retrieval and uses the resulting outputs to condition the contrastive decoding process. In [171], an NLU model parses meaning-representation (MR) pairs from utterances and reconstructs correct attribute values in reference texts, using a self-attentive encoder to produce slot-value/utterance vectors and an attentive scorer to compute semantic similarity, followed by self-training to recover semantically aligned texts. Language-specific modules have also been introduced to coordinate encoder and decoder activation, restricting them to intended input and target languages [118]. Finally, attention maps serve as diagnostic signals for factuality in approaches like AggTruth [172], which aggregates attention across layers to compute contextualized patterns and detect hallucinations in RAG settings.

5.2.2. Decoding Strategies

In this section, we present decoding strategies as intervention methods applied during the generation process of LLMs, determining how models translate their learned representations into human-readable text by balancing fluency, coherence, and factual accuracy. Hallucinations often arise because models fail to adequately attend to input context or rely on outdated or conflicting prior knowledge [173]. While standard decoding methods such as greedy decoding, beam search, and nucleus sampling [75] offer trade-offs between diversity, efficiency, and precision [5], models can still commit to incorrect tokens early in decoding and subsequently justify them, reflecting fundamental issues in decoding dynamics [21,65]. To address this, decoding strategies are frequently developed alongside complementary approaches, including attention mechanisms [118,146,170], probability-based methods [29,112,174,175], or RAG-based decoding [154,176], all aiming to enhance output quality and interpretability. Empirical evidence shows that advanced decoding strategies can substantially reduce hallucinations: for example, RAG raises FactScore from 17.5% to 42.1%, while self-correction methods have improved truthfulness scores on benchmarks like TruthfulQA [5]. Building on these insights, we categorize advanced decoding strategies as follows:

- Probabilistic Refinement and Confidence-Based Adjustments: Methods in this family adjust token selection to favor context-aligned, higher-confidence outputs. Context-aware decoding amplifies the gap between probabilities with vs. without context, down-weighting prior knowledge when stronger contextual evidence is present [29,173]. Entropy-based schemes penalize hallucination-prone tokens using cross-layer entropy or confidence signals [174], while CPMI rescales next-token scores toward tokens better aligned with the source [175]. Logit-level interventions refine decoding by interpreting/manipulating probabilities during generation [112]. Confidence-aware search variants—Confident Decoding and uncertainty-prioritized beam search—use epistemic uncertainty to steer beams toward more faithful continuations, with higher predictive uncertainty correlating with greater hallucination risk [76,177]. SEAL trains models to emit a special [REJ] token when outputs conflict with parametric knowledge and then leverages the [REJ] probability at inference to penalize uncertain trajectories [178]. Finally, factual-nucleus sampling adapts sampling randomness by sentence position, substantially reducing factual errors [116].

- Contrastive-inspired Decoding Strategies: A range of decoding methods build on contrastive principles to counter hallucinations. DeCoRe induces hallucinations by masking retrieval heads and contrasting outputs of the base LLM with its hallucination-prone variant [146], while Delta reduces hallucinations by masking random input spans and comparing distributions from original and masked prompts [147]. Contrastive Decoding replaces nucleus or top-k search by optimizing the log-likelihood gap between an LLM and a smaller model, introducing a plausibility constraint that filters low-probability tokens [17]. SH2 (Self-Highlighted Hesitation) manipulates token-level decisions by appending low-confidence tokens to the context, causing the decoder to hesitate before committing [179]. Spectral Editing of Activations (SEA) projects token representations onto directions of maximal information, amplifying factual signals and suppressing hallucinatory ones [180]. Induce-then-Contrast (ICD) fine-tunes a “factually weak LLM” on non-factual samples and uses its induced hallucinations as penalties to discourage untruthful predictions [181]. Active Layer Contrastive Decoding (ActLCD) applies reinforcement learning to decide when to contrast layers, treating decoding as a Markov decision process [182]. Finally, Self-Contrastive Decoding (SCD) down-weights overrepresented training tokens during generation, reducing knowledge overshadowing [54].

- Verification and Critic-Guided Mechanisms: Several strategies enhance decoding by incorporating verification signals or critic models. Critic-driven Decoding combines an LLM’s probabilistic outputs with a text critic classifier that evaluates generated text and steers decoding away from hallucinations [115]. Self-consistency samples multiple reasoning paths, selecting the most consistent answer; this not only improves reliability but also provides an uncertainty estimate for detecting hallucinations [21]. TWEAK treats generated sequences and their continuations as hypotheses, which are reranked by an NLI or Hypothesis Verification Model (HVM) [119]. Similarly, mFACT integrates a faithfulness metric into decoding, pruning candidate summaries that fall below a factuality threshold [100]. RHO (Reducing Hallucination in Open-domain Dialogues) generates candidate responses via beam search and re-ranks them for factual consistency by analyzing knowledge graph trajectories from external sources [183].

- Internal Representation Intervention and Layer Analysis: Understanding how LLMs encode replies in their early internal states is key to developing decoding strategies that mitigate hallucinations [30]. Hallucination-prone outputs often display diffuse activation patterns rather than patterns concentrated on relevant references. In-context sharpness metrics address this by enforcing sharper token activations, ensuring predictions emerge from high-confidence knowledge areas [184]. Inference-Time Intervention (ITI) shifts activations until the response is complete [185], while DoLa contrasts logits from early and later layers, emphasizing factual knowledge embedded in deeper layers over less reliable lower-layer signals [150]. Activation Decoding similarly constrains token probabilities using entropy-derived activations without retraining [184]. LayerSkip introduces self-speculative decoding, training models with layer dropout and early-exit loss so that earlier layer predictions are verified by later ones, thereby improving efficiency [186].

- RAG-based Decoding: RAG-based decoding strategies integrate external knowledge to enhance factual consistency and mitigate hallucinations [154,176]. For instance, REPLUG prepends a different retrieved document for every forward pass of the LLM and averages the probabilities from these individual passes, thus allowing the model to produce more accurate outputs by synthesizing information from multiple relevant contexts simultaneously [176]. Similarly, Retrieval in Decoder (RID) dynamically adjusts the decoding process based on the outcomes of the retrieval, allowing the model to adapt its generation based on the confidence and relevance of the retrieved information [154].
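The ensembling step described above for REPLUG can be sketched as a weighted average of next-token distributions, one per retrieved document. The sketch assumes that each distribution has already been obtained from a separate forward pass and that weights come from a softmax over retrieval scores; with equal scores it reduces to a plain average. All names are illustrative.

```python
import math

def ensemble_next_token(distributions, retrieval_scores):
    """Weighted average of per-document next-token distributions.

    distributions[i] is the next-token distribution produced when the
    i-th retrieved document is prepended to the prompt.
    """
    m = max(retrieval_scores)
    exps = [math.exp(s - m) for s in retrieval_scores]
    z = sum(exps)
    weights = [e / z for e in exps]
    vocab_size = len(distributions[0])
    return [
        sum(w * dist[t] for w, dist in zip(weights, distributions))
        for t in range(vocab_size)
    ]

per_document = [[0.7, 0.3], [0.1, 0.9]]
uniform = ensemble_next_token(per_document, [0.0, 0.0])
skewed = ensemble_next_token(per_document, [5.0, 0.0])
```

When one document is retrieved with much higher confidence, the ensemble follows the distribution conditioned on that document more closely.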

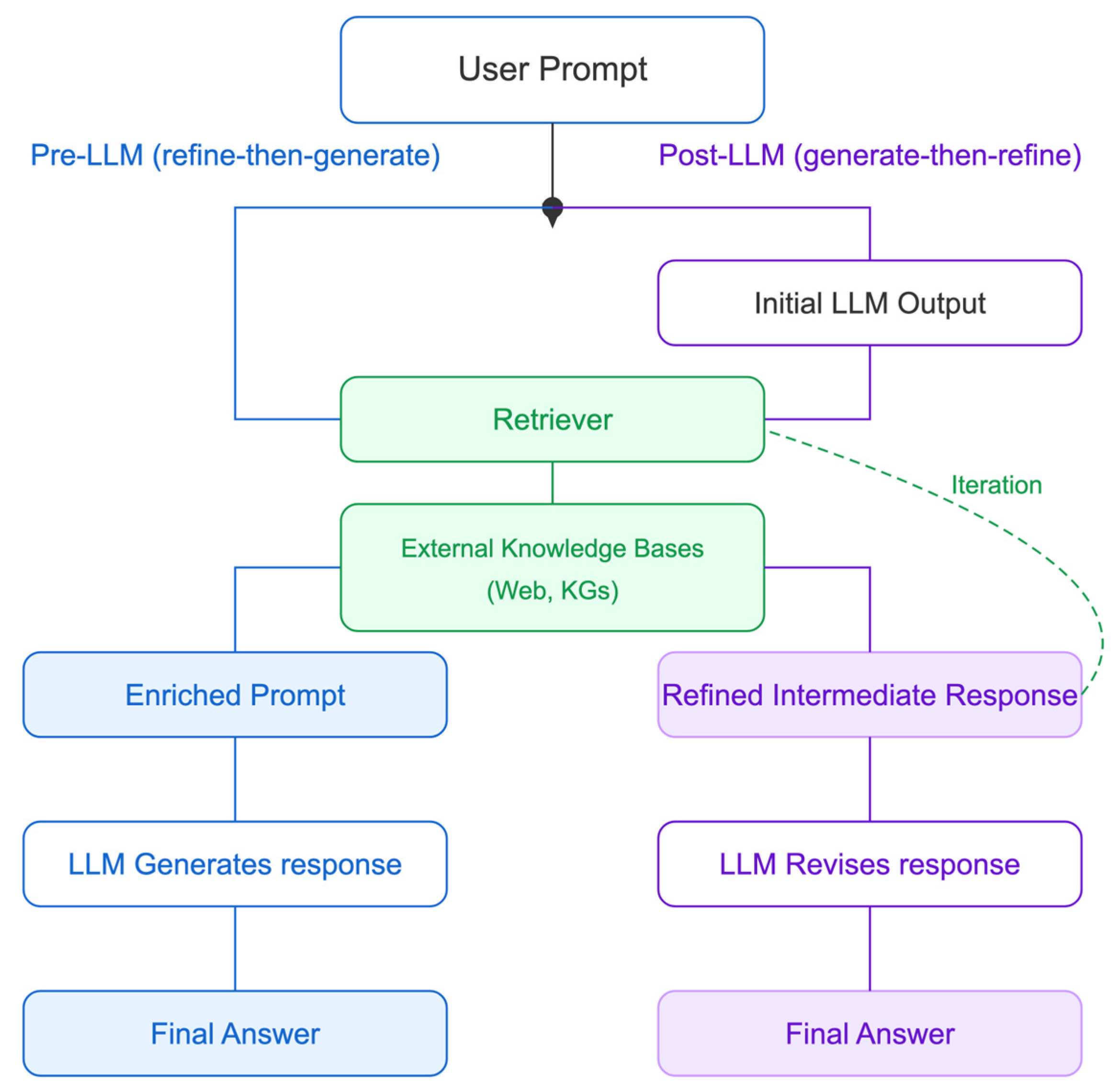

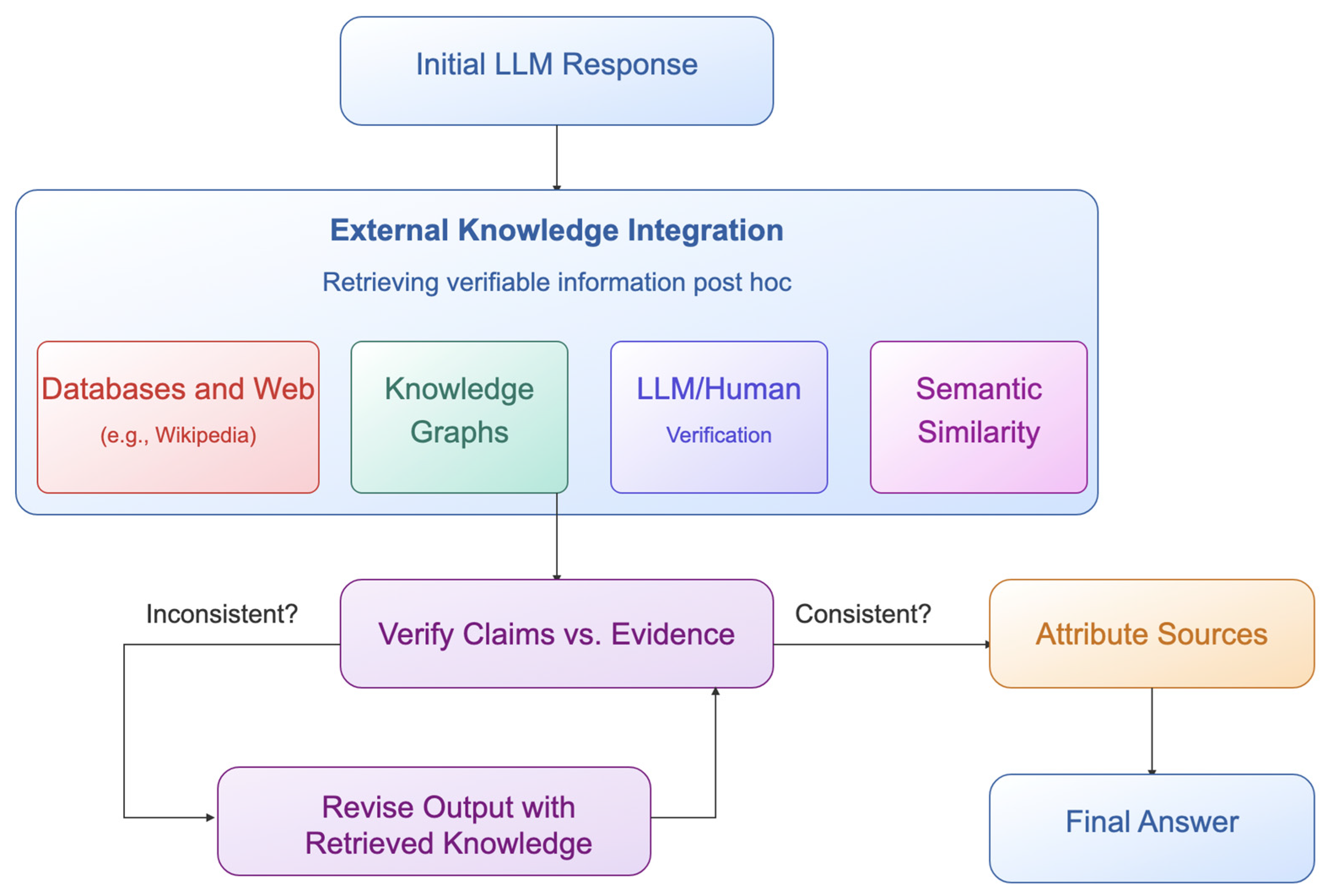

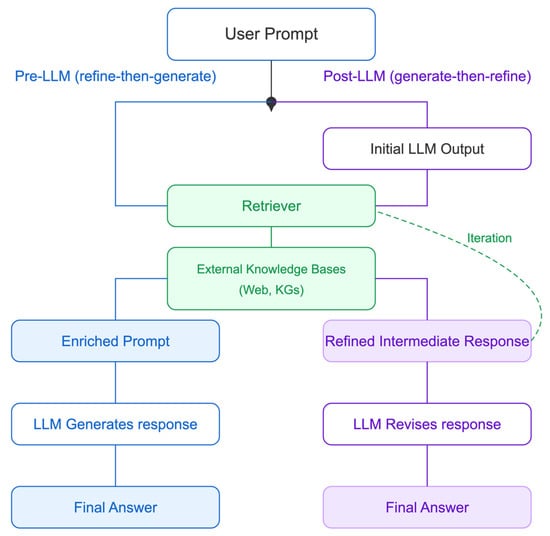

5.2.3. Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) (Figure 11) modifies the architecture or inference pipeline by including a retrieval component. Given an initial prompt, this component fetches the most relevant documents from external sources and injects them into the prompt to enrich the prompt’s context [17]. This extends an LLM’s access beyond its training data, grounding outputs in updated references and reducing hallucinations across diverse tasks [17,40,187]. Most implementations follow a pre-LLM refine-then-generate paradigm, where retrieved documents enrich the prompt before generation, while post-LLM generate-then-refine approaches first produce an intermediate response to guide retrieval, enabling refinement with highly relevant information [28,61,188,189,190]. Retrieval typically uses dual encoders, semantic indexing, or vector similarity search [191].

Figure 11.

Simplified overview of Pre-LLM and Post-LLM retrieval-augmented generation (RAG) pipelines.
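
The pre-LLM pipeline described above (retrieve by vector similarity, then inject the documents into the prompt) can be sketched as follows. This is a toy illustration under stated assumptions: `embed` is a bag-of-words stand-in for a trained encoder, and the prompt template and function names are hypothetical rather than taken from any cited system.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words vector; a real system would use a trained encoder."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, corpus, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, corpus, k=2):
    """Inject the retrieved documents into the prompt before generation."""
    docs = retrieve(query, corpus, k)
    context = "\n".join(f"[{i + 1}] {d}" for i, d in enumerate(docs))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context."

corpus = [
    "Paris is the capital of France",
    "The Nile is a river in Africa",
    "France borders Spain",
]
prompt = build_prompt("capital of France", corpus, k=1)
```

A post-LLM variant would call `retrieve` with a draft answer instead of the original query and then ask the model to revise the draft against the returned documents.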

Many studies analyze how retrieval choices impact RAG performance. In [192], dense and sparse retrieval are compared, and a hybrid retriever is proposed that combines both with advanced query expansion; results are fused via weighted Reciprocal Rank Fusion. HaluEval-Wild constructs reference documents from the web using both dense and sparse retrievers to assess whether model outputs contain hallucinations [107]. HalluciNot repurposes RAG for detection: it retrieves external documents, trains a classifier on them, and then verifies whether each generated span is supported by factual evidence [111]. While RAG has proven effective in mitigating hallucinations through external source grounding, research also shows that, paradoxically, hallucination rates may increase under certain conditions. To better understand these challenges, we outline in Section 7.2 a set of practical considerations for users when applying RAG-based methods. These include:

- Dependency on Retrieval Quality: RAG-based methods rely heavily on the quality of retrieved documents, so inaccurate, outdated, noisy, or irrelevant retrievals may mislead the language model or introduce additional hallucinations [51,55,57,62,73,107,193,194,195].

- Complex Reasoning: Retrieval systems often struggle with complex or multi-hop reasoning, which can harm the performance of natural language inference (NLI) models [51,57,62].

- Latency and Scalability: Lengthy prompts and iterative prompting improve factuality but at the cost of increased inference time and resource consumption, reducing scalability in real-world settings [51,193,194].

- Over-reliance on External Tools: Some systems depend on entity linkers, search engines, or fact-checkers, which can introduce errors or inconsistencies [51,62,109].

- Faithfulness and Effective Integration: Methods that prioritize factuality sometimes harm the fluency or coherence of generated text, leading to less natural outputs [51], while integrating retrieved evidence effectively into the generation process remains a further challenge [57].
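
The weighted Reciprocal Rank Fusion step mentioned above for the hybrid retriever can be sketched as follows. The smoothing constant `k=60` is the value conventionally used with RRF, and the retriever weights are illustrative assumptions, not settings reported in [192].

```python
def weighted_rrf(rankings, weights, k=60):
    """Fuse several ranked lists of document IDs.

    Each document receives weight / (k + rank) from every list it appears in,
    with ranks starting at 1; documents are returned by descending fused score.
    """
    scores = {}
    for ranking, weight in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + weight / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)

dense_ranking = ["a", "b", "c"]   # hypothetical dense-retriever output
sparse_ranking = ["c", "a", "d"]  # hypothetical sparse-retriever output
fused = weighted_rrf([dense_ranking, sparse_ranking], weights=[1.0, 1.0])
```

Because RRF uses only rank positions, it avoids having to calibrate dense similarity scores against sparse scores, which live on different scales.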

Several extensions strengthen RAG by improving how evidence is gathered, structured, and applied. LLM-AUGMENTER retrieves external evidence, consolidates it via entity linking and evidence chaining, and feeds the structured evidence into the LLM; it iteratively flags factual inconsistencies and revises prompts until outputs align with retrieved knowledge [193]. CRAG leverages Wikipedia and Natural Questions with BM25 retrieval, using both the original query and segments of the LLM’s draft to fetch supporting passages [194]. SELF-RAG introduces a self-reflective loop in which a critic emits reflection tokens and the generator conditions on them, enabling adaptive retrieval, self-evaluation, and critique-guided decoding [196]. FreshLLMs augment with web search to detect and correct factual errors using retrieved evidence, addressing outdated parametric knowledge [62]. In [154], Mistral is modified to integrate a retrieval module that dynamically fetches top-k Wikipedia documents and generates fact-grounded responses. Finally, AutoRAG-LoRA combines prompt rewriting, hybrid retrieval, and hallucination detection to score confidence and trigger selective LoRA adapter activation [197].
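
Since BM25 retrieval is mentioned above, a compact sketch of the standard Okapi BM25 scoring function may be helpful. Whitespace tokenization and the parameter values `k1=1.5` and `b=0.75` are common defaults chosen here as assumptions; they are not taken from [194].

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with Okapi BM25."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores

docs = ["the cat sat on the mat", "dogs chase cats", "the stock market fell"]
scores = bm25_scores("cat mat", docs)
```

The same function could be called once with the original query and once with each segment of a draft answer, which is one simple way to approximate the query-plus-draft retrieval described above.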

The effectiveness of RAG depends heavily on the quality of retrieved content, yet the implicit assumption that retrieved evidence is noise-free does not always hold. To address noisy retrieval, Ref. [73] proposes a two-stage approach: filtering irrelevant documents with a trained classifier, followed by contextual fine-tuning that conditions the model to disregard misleading inputs. Beyond noise, the timing of retrieval has emerged as equally critical, since unnecessary retrieval can introduce irrelevant information and increase both inference time and computational cost [55]. DRAD tackles this with real-time hallucination detection (RHD) and a self-correction mechanism that uses external knowledge to dynamically adjust retrieval. ProbTree integrates retrieved and parametric knowledge via a hierarchical reasoning structure with uncertainty estimation [198]. Recent work has also advanced document preprocessing: a semantic chunker improves indexing by ensuring that retrieved segments are contextually meaningful and better aligned with the generation process [6].
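
The point about retrieval timing can be illustrated with a generic uncertainty gate that triggers retrieval only when the model appears unsure. This is a hedged sketch and not the detector used by DRAD: the entropy criterion, the threshold value, and the function names are all assumptions made for illustration.

```python
import math

def token_entropy(probs):
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def should_retrieve(step_distributions, threshold=1.0):
    """Return True if any generation step was more uncertain than the threshold.

    step_distributions holds one next-token distribution per generated token;
    the threshold is a hypothetical value that would need tuning per model.
    """
    return any(token_entropy(p) > threshold for p in step_distributions)

confident = [[0.97, 0.01, 0.01, 0.01]]
uncertain = [[0.25, 0.25, 0.25, 0.25]]
```

Gating of this kind skips retrieval for `confident` and triggers it for `uncertain`, which avoids the latency and noise of retrieving on every step.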

In addition to addressing the challenges of noisy retrieval, recent research has explored a variety of new frameworks to optimize RAG-based systems. Read-Before-Generate (RBG) pairs an LLM with a reader that assigns sentence-level evidence probabilities, fuses multiple retrieved documents at inference, and uses a specialized pre-training task to promote reliance on external evidence [199]. REPLUG retrieves with a lightweight external module, prepends documents to the prompt, and reuses the same LLM to encode them [176]. Neural-retrieval-in-the-loop targets complex multi-turn dialogue, with RAG-Turn enabling synthesis across multiple documents so retrieval stays relevant to each turn, mitigating topic drift and factual errors [74]. Retrieval in Decoder (RID) integrates retrieval directly into decoding so that generation and retrieval proceed jointly [154]. Finally, SEBRAG performs substring-level extraction conditioned on document ID and span boundaries, enabling precise answers and explicit abstention when there is no answer [200].

RAG-based methods have been used in dataset creation and code generation. For instance, ANAH provides an analytically annotated hallucination dataset, where each answer sentence is paired with a retrieved reference fragment used to judge hallucination type and correct errors—highlighting the role of retrieval in annotation [57]. ChitChatQA [109] assesses LLMs on complex, diverse questions, built through a framework that invokes tools and integrates retrieved information from reliable external sources into reasoning. In code generation, RAG-based methods retrieve relevant code snippets from repositories based on task similarity, incorporating them into prompts and achieving consistent performance gains across evaluated models [66].

An increasing number of studies apply RAG to retrieve specific information from Knowledge Graphs, so as to enrich the initial prompt’s context-specificity. For instance, the Recaller module [201] retrieves entities via a two-stage highlighting process: a coarse filter flags potential hallucinations, while a fine-grained step masks and regenerates them. Graph-RAG [195] combines HybridRAG, which injects graph entities or relations into context based on semantic proximity or relation paths, with FactRAG, which evaluates factual consistency and filters hallucinated responses. Other methods use graph-based detection by extracting structured triplets from LLM outputs and performing explicit relational reasoning to compare them with reference knowledge graphs, thereby identifying unsupported or fabricated facts [202]. While most RAG methods retrieve before generation, several approaches use retrieval after generation to validate and correct outputs. A post hoc RAG pipeline in [61] extracts factual claims from model outputs and verifies them against knowledge graphs; RARR identifies unsupported claims, finds stronger evidence, and revises text accordingly [190]. Neural Path Hunter reduces dialogue hallucinations by traversing a knowledge graph to build a KG-entity memory and using embedding-based retrieval during refinement, even without a standard RAG architecture [188].
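
The post hoc verification idea described above, in which claims are extracted as triplets and compared with a reference knowledge graph, can be sketched as follows. Triplet extraction is assumed to have happened already, and the three verdict labels are illustrative choices rather than the categories used in [61] or [202].

```python
def check_triplets(claimed, knowledge_graph):
    """Compare claimed (subject, relation, object) triplets with a reference KG.

    A claim is 'supported' if the KG contains it, 'contradicted' if the KG has
    a different object for the same subject and relation, and 'unverifiable'
    otherwise. Treating a differing object as a contradiction assumes the
    relation is single-valued, which does not hold for every relation.
    """
    facts = {}
    for s, r, o in knowledge_graph:
        facts.setdefault((s, r), set()).add(o)
    verdicts = {}
    for s, r, o in claimed:
        known = facts.get((s, r))
        if known is None:
            verdicts[(s, r, o)] = "unverifiable"
        elif o in known:
            verdicts[(s, r, o)] = "supported"
        else:
            verdicts[(s, r, o)] = "contradicted"
    return verdicts

kg = [("Paris", "capital_of", "France")]
claims = [
    ("Paris", "capital_of", "France"),
    ("Paris", "capital_of", "Italy"),
    ("Lyon", "capital_of", "France"),
]
verdicts = check_triplets(claims, kg)
```

Claims marked as contradicted or unverifiable are the natural candidates for the revision step that post-generation methods apply.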

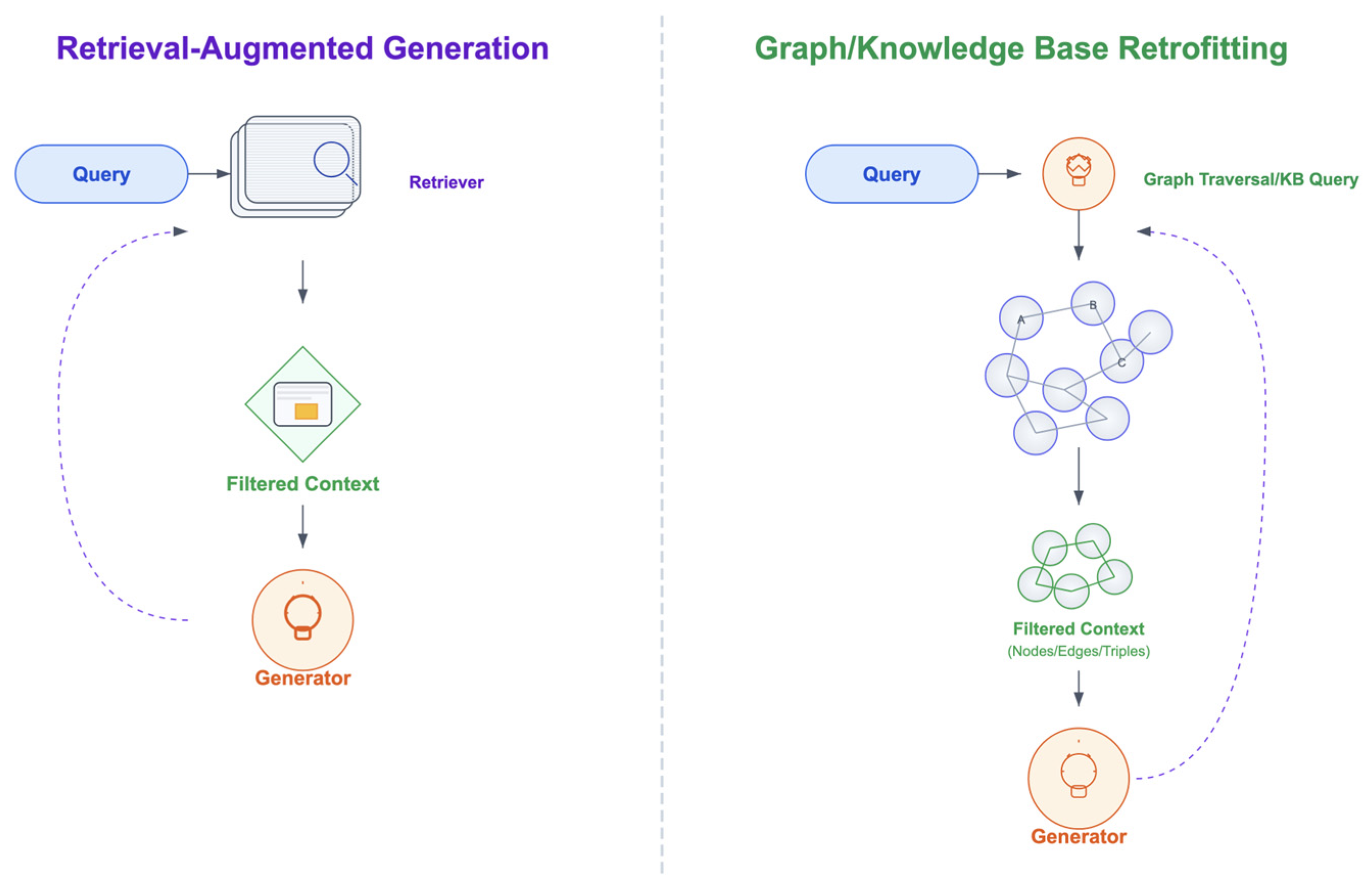

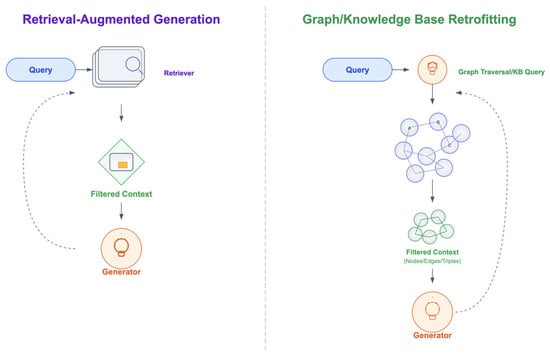

5.2.4. Knowledge Representation Approaches

Knowledge representation approaches (Figure 12) incorporate structured and semi-structured knowledge, such as knowledge graphs [88,188,201,202], ontologies [203], or entity triplets, into a language model’s architecture to enhance reasoning and factual consistency [204]. These representations emphasize explicit connections, such as hierarchical classifications and causal links, thereby enabling the verification of statements against known relationships. For such integration to yield satisfactory results, the underlying content must be high-quality, up-to-date, readily available, and free from ambiguities [73,201,204].

Figure 12.

Comparison of graph/knowledge base injection and RAG, showing how the two approaches process queries differently.

Integration methods span prompting during inference [205], retrofitting external knowledge [61,195,201,206], modifying the attention mechanism [167], and directly injecting graph information into latent space [202,204]. The Recaller module [201] retrieves entities and their one-hop neighbors from a knowledge graph and guides generation in two stages: a coarse filter identifies potentially hallucinated spans, and a fine-grained step selectively masks and regenerates them, enabling more precise grounding in retrieved knowledge. This intervention improves factual accuracy while maintaining fluency, though retrievers may lack sufficient relational depth for complex queries—a limitation also noted in [73]. FLEEK [207] follows a related path by extracting factual claims, converting them into triplets, and generating questions for each triplet, which are answered via a KG-based QA system to verify the extracted facts.

Knowledge graphs (KGs) serve as external sources to enrich context before generation [88,189,195,203,204]. Graph-integration techniques include entity–relation or subgraph extraction and multi-hop reasoning via traversal [195,208], often linearized into hierarchical prompts or encoded to fit LLM inputs [208]. In [195], modular retrieval expands or re-ranks passages using entity linking, subgraph construction, or semantic path expansion, leveraging graph-based signals (e.g., proximity, relation paths) to improve factual grounding. Similarly, Ref. [203] evaluates DBpedia as an external source, concluding that while LLMs encode factual knowledge in parametric form, this implicit knowledge is limited, and external KGs remain essential. Knowledge Graph Retrofitting [61] validates only critical information extracted from intermediate responses, then retrofits KG evidence to refine reasoning. LinkQ [209], an open-source NLI interface, directs LLMs to query Wikidata, outperforming GPT-4 in several scenarios though struggling with complex queries. ALIGNed-LLM [210] integrates entity embeddings from a KGE model via a projection layer, concatenated with token embeddings, reducing ambiguity and enabling structurally grounded outputs. G-retriever [211] addresses context length constraints using soft prompting and Prize-Collecting Steiner Tree optimization to select subgraphs balancing node relevance and connection cost, thus improving retrieval quality and efficiency.
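
Subgraph extraction by traversal and linearization into prompt text, both mentioned above, can be sketched together. The breadth-first strategy, the restriction to outgoing edges, and the arrow-style text format are assumptions made for illustration and do not reproduce any specific cited method.

```python
from collections import deque

def k_hop_triples(triples, seed, hops=2):
    """Collect triples reachable from a seed entity within a number of hops.

    Only outgoing edges (subject to object) are followed in this sketch.
    """
    outgoing = {}
    for s, r, o in triples:
        outgoing.setdefault(s, []).append((s, r, o))
    seen, selected = {seed}, []
    frontier = deque([(seed, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == hops:
            continue  # do not expand beyond the hop limit
        for s, r, o in outgoing.get(node, []):
            selected.append((s, r, o))
            if o not in seen:
                seen.add(o)
                frontier.append((o, depth + 1))
    return selected

def linearize(triples):
    """Render triples as plain text lines suitable for a prompt."""
    return "\n".join(f"{s} --{r}--> {o}" for s, r, o in triples)

graph = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Warsaw", "capital_of", "Poland"),
    ("Poland", "member_of", "EU"),
]
context = linearize(k_hop_triples(graph, "Marie Curie", hops=2))
```

Limiting the hop count is one simple way to keep the linearized subgraph within the model's context length; optimization-based selection such as that used by G-retriever addresses the same constraint more selectively.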

A closely related line of research focuses on embedding graph-related information directly into the latent space of a model. Approaches leverage Graph Attention Networks (GAT) and message passing to explore semantic relations among hallucinations, assuming they are structured and follow homophily—entities with similar traits tend to connect [202]. Graph Neural Networks (GNNs) integrate with the attention mechanism to form hybrid architectures that aggregate information from neighboring nodes, enhancing contextual understanding and reducing hallucinations [167]. Rho improves open-domain dialogue by grounding parametric knowledge in retrieved embeddings (local knowledge) and augmenting attention with multi-hop reasoning over linked entities and relation predicates (global knowledge), thereby generating less hallucinated content [183].

5.2.5. Specialized Architectural Mechanisms for Enhanced Generation

This category encompasses advanced frameworks that we found difficult to classify under the previous subsections of “Architectural Modifications”. A number of techniques, including diffusion-inspired mechanisms, Mixture-of-Experts frameworks [40,212], dual-model architectures [213], LoRA adapter integration and specialized classifiers [40,111], token pruning and fusion [169,214], and Representation Engineering [112], have demonstrated significant potential in addressing hallucinations.

MoE architectures, introduced in [215] and scaled for LLMs in [216], partition models into expert modules, with a gating network selecting a few experts per input for sparse, efficient activation. By localizing knowledge and reducing interference across domains, MoEs can help mitigate hallucinations, though they typically increase parameter count. Extending this idea, Lamini-1 [40] implements a Mixture of Memory Experts (MoME) with millions of experts acting as a structured factual memory, enabling explicit storage/retrieval of facts during inference via sparse expert routing, and combining cross-attention routing, LoRA-style adapters, and accelerated kernels. Similarly, the MoE in [212] orchestrates specialized experts through input preprocessing, dynamic expert selection, decision-routing to control information flow, and majority voting—filtering erroneous responses while containing computational overhead.
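
The sparse routing described above, in which a gating network selects a few experts per input, can be sketched with scalar toy experts. In a real MoE layer the gate logits come from a learned network applied to the input; here they are passed in directly, and all names are illustrative assumptions.

```python
import math

def top_k_gate(gate_logits, k=2):
    """Keep the k largest gate logits and renormalize them with a softmax."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_forward(x, experts, gate_logits, k=2):
    """Evaluate only the selected experts and mix their outputs by gate weight."""
    weights = top_k_gate(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Three toy experts; the third is never evaluated because its logit is lowest.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x]
output = moe_forward(3, experts, gate_logits=[0.0, 0.0, -10.0], k=2)
```

Only `k` experts run per input, which is why compute per token stays roughly constant even as the total parameter count grows.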

In addition to MoE architectures, dual-model architectures have also been used to combat hallucinations. In [213], a primary model generates the initial text from the input prompt, while a secondary model monitors and analyzes that output in real time, checking for logical and factual inaccuracies. A feedback loop further strengthens this self-monitoring by allowing iterative improvements based on detected errors. This interplay allows the system to cross-reference its outputs, improving the reliability of the generated text because any inconsistencies are immediately addressed [213]. A multi-task architecture for hallucination detection, HDM-2 [111], uses a pretrained LLM backbone with specialized detectors (e.g., a classification head for common-knowledge errors) plus LoRA-adapted components and shallow context-based classifiers, allowing tailored factual verification. The RBG framework [199] jointly models answer generation and machine reading via a reader that assigns evidence probability scores and injects them into the generator’s final distribution; a factual-grounding pretraining objective further encourages reliance on retrieved documents to boost accuracy. Rank-adaptive LoRA fine-tuning (RaLFiT) [217] focuses parameter updates on truthfulness-relevant modules: it first probes each transformer module for truthfulness correlation, then assigns higher LoRA ranks to the most correlated ones, and finally fine-tunes with Direct Preference Optimization (DPO) on paired truthful vs. untruthful responses to strengthen factual alignment.
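
The rank-assignment step described above for rank-adaptive LoRA fine-tuning can be illustrated with a small sketch. It assumes probe scores per module are already available; the two-level rank scheme, the specific rank values, the selection fraction, and the module names are hypothetical and are not the settings reported in [217]. The probing and DPO stages are omitted.

```python
def allocate_lora_ranks(probe_scores, high_rank=16, low_rank=4, top_fraction=0.25):
    """Give a higher LoRA rank to the modules most correlated with truthfulness.

    probe_scores maps a module name to a probe score (for example, probe
    accuracy). All numeric defaults here are illustrative assumptions.
    """
    n_top = max(1, round(len(probe_scores) * top_fraction))
    ranked = sorted(probe_scores, key=probe_scores.get, reverse=True)
    top = set(ranked[:n_top])
    return {name: (high_rank if name in top else low_rank) for name in probe_scores}

probe_scores = {
    "layer0.attn": 0.55,
    "layer0.mlp": 0.61,
    "layer1.attn": 0.83,
    "layer1.mlp": 0.58,
}
ranks = allocate_lora_ranks(probe_scores)
```

The resulting per-module mapping is the kind of input that adapter libraries with per-module rank settings could consume, so the fine-tuning budget is concentrated where the probe signal is strongest.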