Abstract

The robots that entered the manufacturing sector in the second and third Industrial Revolutions (IR2 and IR3) were designed to carry out predefined routines without physical interaction with humans. In contrast, IR4* robots (i.e., robots since IR4 and beyond) are expected to interact with humans cooperatively, enhancing flexibility, autonomy, and adaptability and thus dramatically improving productivity. However, human–robot cooperation requires cognitive capabilities that the collaborative robots (CoBots) currently on the market do not have. The common wisdom is that this cognitive gap can be filled in a straightforward way by integrating well-established ICT technologies with new AI technologies. This short paper argues that this approach is not promising and proposes a different one, based on artificial cognition rather than artificial intelligence and founded on concepts of embodied cognition, developmental robotics, and social robotics. We propose the name CoCoBots for IR4* robots designed according to these principles. The paper also addresses the ethical problems that may arise in critical emergencies. In normal operating conditions, CoCoBots and their human partners, starting from individual evaluations, will routinely reach joint decisions on the course of action to be taken through mutual understanding and explanation. If a joint decision cannot be reached, or in the limited case that an emergency is detected and declared by the top security levels, we suggest that the ultimate decision-making power, with the associated responsibility, should rest with the human side, at the different levels of the organizational structure.

1. Introduction

Robots entered the manufacturing sector in the second and third Industrial Revolutions (IR2 and IR3), with the support of information and communication technologies (ICTs). Such robots were designed to carry out predefined routines without any interaction with humans. In contrast, IR4 robots are expected to interact with humans cooperatively, in order to improve the productivity of the manufacturing process, rather than merely maximizing production volume, by enhancing the flexibility, autonomy, and adaptability that are typical of well-trained human workers. Harmonization with humans is further enhanced in IR5; however, as far as robotics is concerned, the transition from IR2/IR3 to IR4* (i.e., robots since IR4 and beyond) cannot be a mere incremental innovation but must confront fundamental cognitive and epistemological problems.

Collaborative robots (CoBots) have, in fact, already been on the market for more than a decade: they are designed to work in the vicinity of human workers, allowing physical interaction with them within a shared space, without protective barriers. CoBots are currently used in a number of typical industrial applications with strict safety requirements, such as reactively stopping their movements in case of unexpected collisions; however, they lack the degree of autonomy and flexibility needed to collaborate with human partners in a deep, general sense. Beyond a basic level of safety, such collaboration should include the capacity of the robot to reorganize the interaction plan in response to unexpected or changing conditions, as well as to implicit or explicit indications from the human partner, on the basis of a mutual understanding of the purpose and details of their collaboration. Moreover, safety in a more general sense cannot rely simply on a collection of reactive mechanisms but must be based on a solid cognitive background.

In any case, as stated in the announcement of the present Special Issue of the journal AI, “the enablers of IR4 must be capable of performing high level intellectual tasks, such as understanding (i.e., why is it happening), prediction (what will happen), and adaptation (which decisions should be taken and implemented to choose the right course of action). Therefore, artificially intelligent systems must empower the enablers”. Such “high level intellectual tasks” exemplify what is known in cognitive neuroscience as prospection [,,], with one apparently small but crucial missing requirement: the goal of the task, together with a system that allows the cognitive agent to evaluate the acceptability of a course of action not only in physically measurable terms, such as speed or energy consumption, but also in more general terms that the human partner can understand and trust, as shared intentions require.

Prospection is the crucial element that allows a robot to operate autonomously as well as cooperatively with human partners. It is a set of mental processes linking the different time frames of purposive and collaborative action: the past (collection of experience through episodic memory), the present (through active perception), and the future/recollection (through the internal simulation of feasible scenarios). Hence the characterization of prospection as “mental time travel” []. Prospection is related to, and partly includes, a large research area known as human–robot interaction, with particular reference to the use of haptic interfaces for detecting and estimating partner intentions []. There is no doubt that present-day CoBots lack the prospective capabilities needed for autonomous skill acquisition, and we also believe that there is no chance of achieving such a leap in cognitive skills by fusing robotics with AI, with particular reference to large language models (LLMs). This opinion is based on the epistemological difference between intelligence and cognition, explained in the following section; it does not, however, exclude LLMs from being used as tools for improving human–robot interaction [] or from having a role in the process of cognitive bootstrapping [].

1.1. Cognition vs. Intelligence

Intelligence is frequently confused with cognition. In the robotic context in particular, the two terms are implicitly assumed to be synonyms, whereas, from the epistemological point of view, they represent two different methods of knowledge acquisition and knowledge construction. As explained in [], the term intelligence comes from the Latin verb intelligere, which is associated with the Aristotelian hierarchical classification of the activities of living organisms:

Vegetativum → Sensitivum → Motivum → Intellectivum

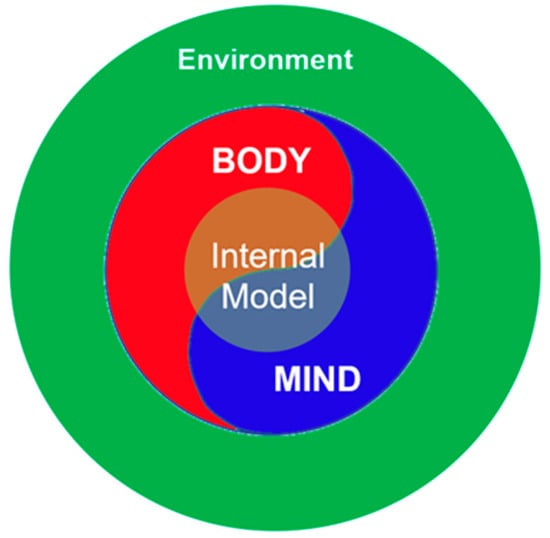

Intelligence refers only to the last item of the hierarchy; thus, it does not include perception and action but focuses solely on the ideal, impersonal forms or abstract essences of real phenomena, independent of personal experience and as far as possible from practical/bodily activities. The term cognition comes from another Latin verb, cognoscere, and implies the ability of an agent to learn about, and become aware of, the surrounding reality on the basis of active interaction and personal experience. Thus, cognition is fundamentally embodied, whereas intelligence is intrinsically disembodied. This distinction has marked the history of philosophy in different forms and schools of thought, in connection with the rejection of Cartesian mind–body dualism, and it remains valid today, particularly in contemporary, scientifically grounded formulations of epistemology, such as the pragmatism of John Dewey [] and the naturalized epistemology of Willard Quine []. Dewey focused on the fundamental role of experience in the acquisition of knowledge, explaining that experience consists of an interaction between living beings and their environment; thus, knowledge is not a fixed apprehension of something but a process of acting and being acted upon. Quine’s naturalized approach regards epistemology as continuous with, or even part of, natural science: the starting point of epistemology should not be the introspective awareness of our own conscious experience, typical of Cartesian dualism, but rather the conception of the larger world that we obtain from common sense and science. We further suggest that these epistemological approaches, which support the crucial role of embodiment, apply equally to the corresponding artificial implementations: artificial intelligence (dualistic and disembodied) vs. artificial cognition (embodied within a developmental framework). Figure 1 illustrates the embodied nature of the cognitive system of natural or artificial cognitive agents.
The internal model that enables prospective capabilities results from the integration of brain/mind, body, and environment.

Figure 1.

Embodied nature of the cognitive system of natural or artificial cognitive agents.

From the neurobiological point of view, support for the crucial role of embodiment comes from a large part of cognitive neuroscience [,,] and, in particular, from enactivism []. At the same time, it is now well established that epistemology cannot ignore the fact that the human capacity to create and manipulate knowledge is not innate but results from a process of cognitive development that allows children to think, explore, and figure things out. This is the subject investigated by cognitive psychology from different viewpoints, such as Piaget’s multi-stage model of cognitive development [], Bronfenbrenner’s ecological systems theory [], or Vygotsky’s theory based on social learning and the zone of proximal development (ZPD), i.e., the space between what a learner can do without assistance and what a learner can do with adult guidance or in collaboration with more capable peers []. Moreover, the acquisition of cognitive capabilities by human subjects throughout development can be organized and facilitated by explicit training and education, as described by the assimilation theory proposed by Ausubel []. This theory states that, during education, meaningful learning occurs as a result of the interaction between the new information that the individual acquires and a particular cognitive structure that the learner already possesses, which serves as an anchor for integrating the new content into prior knowledge (as in Vygotsky’s ZPD).

1.2. Collaborative Robots with Cognitive Capabilities

In summary, the human capacity to achieve high levels of general and specialized knowledge, essential for skilled behavior and characterized by prospection and creativity, is intrinsically made possible by development, personal features, and social interaction. Why, then, should this kind of developmental epistemology be of any interest for the design of collaborative robots with cognitive capabilities (CoCoBots, for short) in the transition from IR2/IR3 to IR4*? One may argue that it makes little sense to embark on an arduous process that starts with “babybots” and trains them to “adulthood”, when the alternative roadmap of designing adult robots with sufficiently advanced cognitive architectures seems more straightforward. In our opinion, the design of fully developed “adult” cognitive architectures is only apparently more direct and simpler; in reality, “adult” architectures inevitably tend to incorporate “developmental traits”. In this respect, it is worth noting that a recent review of forty years of cognitive architecture research for autonomous robotics [] clearly shows that we are still far from a solid, shared platform. The dilemma is that the blueprint of a general cognitive architecture that, like the dream of general AI, can easily be trained or adapted to any application area may well be an impossible dream; worse still, its reliability may be impossible to certify. The epistemology of the architecture can be better addressed as a developmental/assimilation process, in which new experiences are always integrated into preexisting knowledge.
In fact, a recent reappraisal of Ausubel’s theory of meaningful learning [], taking into account the advances in cognitive science and neuroscience since his original work, concluded that, although Ausubel characterized students’ previous knowledge very usefully, he paid insufficient attention to the role of “prior learning”, an aspect that we think should be explicitly addressed in CoCoBots.

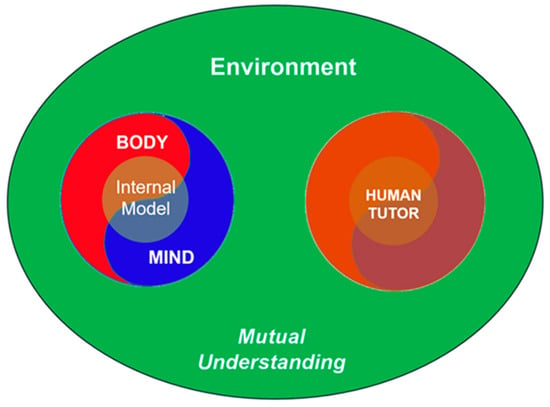

An even stronger motivation for choosing the developmental roadmap for designing CoCoBots comes from the core issue of collaboration. Collaboration between humans and robots, i.e., between two cognitive, autonomous agents, requires a high level of mutual understanding that allows the partners to compare prospective views and predictions, distribute activities, and agree on alternative courses of action. We therefore think that artificial cognition emerges through the dynamic interaction between an acting organism and its environment, mediated by a sensing and acting body, namely a purposeful, proactive, exploratory interaction capable of achieving prospective capabilities. In this framework, we suggest that a roadmap for designing CoCoBots that are functionally appropriate for the transition from IR2/IR3 to IR4* should build upon two research lines that have been active for more than two decades and that need to be thoroughly integrated: developmental robotics and social robotics. This fundamental issue is illustrated in Figure 2, with an emphasis on mutual understanding for consolidating effective collaboration between the robot and a human partner.

Figure 2.

Achieving mutual understanding through social robotics.

1.3. The Key Roles of Developmental Robotics and Social Robotics

Developmental robotics is an interdisciplinary research area at the intersection of robotics, developmental psychology, and developmental neuroscience []. It clearly represents a brain-inspired approach to the design of robots. However, the biomimetic goal is not to imitate the brain per se, namely the performing brain of trained adults, but the process of progressive knowledge and skill acquisition typical of living systems in the early period of life, which leads to autonomous decision-making ability through interaction with the physical and social environment. This progressive and harmonious development of sensory, motor, and cognitive skills in humans has been analyzed from an engineering perspective in order to design a similar roadmap for the realization of sensory, motor, and cognitive skills in artificial systems. The rationale is that, in a developmental approach, the system learns progressively, starting from simple built-in functions and acquiring more and more complex abilities, following a more sustainable and efficient learning process. This has proved to be more difficult than expected, and well-structured solutions are still far off on both the biological and the cybernetic engineering sides. In the neurobiological case, the main difficulty is that the process of sensory–motor–cognitive development is still analyzed and, consequently, described as fragmented and individualized, far from a continuous and seamless progression emerging from human behavior that could be “imitated” by a robot designer; on the other hand, the vast collection of research activities clustering around developmental robotics is also rather “stroboscopic”, covering many specific items but missing the convergent picture and the temporal and functional continuity of the biological developmental process.
However, over the last 30 years, the convergent approach of neuroscience, developmental psychology, computational science, and robotics has been building up the shared scientific ground needed to understand which machinery is required “to become intelligent”. In spite of this stroboscopic state of the art, the alternative roadmap for IR4 CoCoBots that focuses on the design of fully developed “adult” cognitive architectures is only apparently more direct and simpler. In contrast, the developmental roadmap, although apparently more arduous, would take advantage of close interaction with humans and of the well-developed human tools for building, communicating, and transmitting knowledge and culture in an adaptable, personalized way.

From the engineering point of view, the fundamental rationale of cognitive developmental robotics is “physical embodiment” [,,,], which enables information structuring through interactions with the environment, including other agents. This clearly resonates, from the neurobiological point of view, with the fundamentally embodied nature of human cognition. Ultimately, the crucial engineering challenge for cognitive developmental robotics is the identification of a minimal set of sensory–motor–cognitive kernels capable of bootstrapping, through self-organization, interaction, and training, the growth of sensory–motor–cognitive abilities that are adapted to common situations but, more importantly, adaptable to unexpected ones. Optimization here does not refer to improving performance in single or known tasks but to the ability to adapt to unexpected ones. The power of this growth process is that it is always “under construction”, and its reliability is continually “under evaluation”. This engineering requirement, which motivates the choice of the developmental roadmap, was of marginal relevance for IR2/IR3 robots, which lacked any cognitive capability.

2. Learning and Development

The identification of a minimal set of kernels (i.e., the functions to be included in a minimal cognitive architecture) is also needed to overcome one of the fallacies of the developmental approach, namely that the idea of starting from simple functions and progressively acquiring more and more complex ones does not take into consideration the fact that, at least in humans, the structure (e.g., the morphology of the brain and nervous system) is extremely complex from the start of the developmental process. Hence, the simplicity of the functional starting point is balanced by the complexity of the morphological/structural one. In this sense, development resembles a car’s break-in period, and developmental robotics should address more explicitly not only the “innate” functions but also the “innate” morphology of the network and the dynamics of their interactions. The structure and implementation of the minimal architecture therefore become a fundamental aspect of the problem, together with the companion issue of developmental epistemology. An inspiring conceptualization of this issue may come from the general concept of cognitive bootstrapping, which is a key new idea in theories of learning and development []. It describes any process or operation in which a cognitive system uses its initial resources to develop more powerful and complex processing tools, which are then reused in the same fashion, and so on recursively, driving the accumulation of knowledge of ever higher level and quality. This recursive process is somewhat “self-similar” and applies to child cognitive development as well as to abstract thinking, professional skill acquisition, and artistic innovation. Consider, for example, language acquisition, namely children’s ability to learn complex linguistic rules, which can be endlessly and creatively reapplied, starting from extremely limited processing capabilities and data.
This recursive, self-similar process resembles the “constitutive autonomy” described by Vernon as one of the desiderata for developmental cognitive architectures []: “the developmental processes should operate autonomously so that changes are not a deterministic consequence of external stimuli but result from an internal process of generative model construction, driven by value systems that promote self-organization”. Moreover, there is a link between cognitive bootstrapping and the previously mentioned assimilation theory [,].

Strictly speaking, cognitive bootstrapping is not a learning paradigm, although a cognitive agent designed along such lines might usefully exploit traditional learning paradigms (e.g., unsupervised, supervised, and reinforcement learning) at any stage of the cognitive developmental process. Consider, in particular, the following metaphor by Yann LeCun []: “If intelligence is a cake, the bulk of the cake is unsupervised learning, the icing on the cake is supervised learning, and the cherry on the cake is reinforcement learning”. The problem is that intelligence is not a cake, and a good cake is produced by a well-schooled and well-trained chef, whose skills may emerge at the end of a bootstrapping process. Sometimes, learning in a deep sense requires the creation of new representational resources that are more powerful than those present at the outset, and this is the essence of cognitive bootstrapping. It has also been suggested that the ability to “bootstrap” is what truly distinguishes humans within the animal kingdom. Generally speaking, bootstrapping is the process that underlies the creation of new concepts, building upon or overcoming a previous repertoire of concepts.

What, then, is the origin of concepts, i.e., the primitive clusters of knowledge that are the constituents of larger mental structures? Concepts are individuated on the basis of two kinds of considerations: their reference to different entities in the world and their role in distinct mental systems of inferential relations. How do human beings acquire concepts? Innate representations are probably the building blocks of the target concepts of interest, and it is then necessary to characterize the growth mechanisms that enable the construction of new concepts out of prior representations. Needless to say, this is a work in progress, but we may expect results in the near future covering different aspects of the issue. For example, the neurobiological basis of cognitive bootstrapping has been investigated [], suggesting that interactions between the frontal cortex and the basal ganglia may support the bootstrapping process. As regards the initial bootstrapping of the cognitive bootstrapping process itself, it has been observed that most animal behavior exhibited immediately after birth is too organized to be the result of clever learning algorithms, whether supervised or unsupervised, and rather reflects a highly structured brain connectivity encoded in the genome []. It has further been suggested that, since the wiring diagram is far too complex to be specified explicitly in the genome, it must be compressed through a “genomic bottleneck” mechanism, which acts as a regularizing constraint on the rules for wiring up a brain. A preliminary model of conceptual bootstrapping in human cognition has been proposed recently []. This model uses a dynamic conceptual repertoire that can cache and later reuse elements of earlier insights in principled ways, modelling cognitive growth as a series of compositional generalizations. Moreover, it has been proposed that the social understanding of others by robots is a fundamental bootstrapping issue [,,].
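The recursive reuse of cached insights described above can be illustrated with a deliberately small sketch. It is not the cited model of conceptual bootstrapping: the integer domain, the two "innate" primitives `inc` and `double`, the class `Repertoire`, and the depth-two search are hypothetical choices made only for illustration. What it shows is that a search bounded to a fixed number of steps reaches further once earlier solutions are cached as new concepts, so a curriculum of simpler tasks extends what the same limited machinery can do.

```python
from itertools import product
from typing import Callable, Dict, Optional

Concept = Callable[[int], int]


class Repertoire:
    """Hypothetical dynamic conceptual repertoire over a toy integer domain."""

    def __init__(self, primitives: Dict[str, Concept]) -> None:
        # Innate building blocks: the only concepts available at the outset.
        self.concepts: Dict[str, Concept] = dict(primitives)

    def solve(self, x: int, target: int) -> Optional[str]:
        """Find a known concept, or a composition of two, that maps x to target."""
        for name, f in self.concepts.items():
            if f(x) == target:
                return name
        # Bounded search: compositions of at most two existing concepts.
        for (n1, f1), (n2, f2) in product(list(self.concepts.items()), repeat=2):
            if f2(f1(x)) == target:
                new_name = f"{n1}>{n2}"  # apply n1 first, then n2
                # Cache the insight as a new concept that later searches can reuse.
                self.concepts[new_name] = lambda v, f1=f1, f2=f2: f2(f1(v))
                return new_name
        return None


primitives = {"inc": lambda v: v + 1, "double": lambda v: v * 2}

# Without a curriculum, the bounded search cannot reach 10 from 1.
print(Repertoire(primitives).solve(1, 10))  # None

# With a curriculum, the first insight is reused as a building block.
agent = Repertoire(primitives)
print(agent.solve(1, 4))   # inc>double
print(agent.solve(1, 10))  # inc>double>inc>double
```

The design choice that matters here is the cache: without it, every task would have to be rebuilt from the innate primitives, and the search needed would grow with task complexity.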

Along the same line of thought, we may consider the issue of value systems. A value system permits a biological brain to increase the likelihood of neural responses to selected external phenomena; this is a crucial feature for supporting learning, but also for providing an autonomous focus of attention that directs learning. This combination of unsupervised attention focus and learning aims to address one of the main issues underlying autonomous mental development for developmental cognitive robotics []. As observed by Weng et al. [], autonomous mental development, both in robots and animals, is an open-ended cumulative process that cannot be captured by task-specific machine learning, however many tasks are treated. This process is self-organized and driven by a value system []. The value system detects the occurrence of salient sensory inputs, evaluates candidate actions, and chooses the most appropriate course of action, activating the generative body schema; salient features are not predefined but emerge from experience as well as through interactions with trainers [].
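As a toy illustration of the three roles attributed to the value system (detecting salient inputs, evaluating candidate actions, choosing a course of action), the following sketch assumes that each sensory feature carries a learned scalar value, that candidate actions come with predicted feature changes, and that a trainer's input arrives as a scalar signal. All names (`ValueSystem`, `trainer_feedback`, the features `throughput` and `human_safety`) are hypothetical, and the activation of the body schema is not modelled. Nothing is valued a priori: salience emerges only after interaction with the trainer.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Effects = Dict[str, float]  # predicted change of each sensory feature


@dataclass
class ValueSystem:
    """Toy value system: feature values are learned, not predefined."""

    learning_rate: float = 0.5
    values: Dict[str, float] = field(default_factory=dict)  # empty: nothing valued a priori

    def salient(self, observation: Dict[str, float], threshold: float = 0.5) -> List[str]:
        """Detect the features of an observation whose weighted magnitude is salient."""
        return [f for f, x in observation.items()
                if abs(self.values.get(f, 0.0) * x) >= threshold]

    def evaluate(self, effects: Effects) -> float:
        """Score a candidate action by the value-weighted sum of its predicted effects."""
        return sum(self.values.get(f, 0.0) * d for f, d in effects.items())

    def choose(self, candidates: Dict[str, Effects]) -> str:
        """Choose the candidate action with the highest evaluation (first one on ties)."""
        return max(candidates, key=lambda a: self.evaluate(candidates[a]))

    def trainer_feedback(self, feature: str, signal: float) -> None:
        """Delta-rule update moving a feature's value toward the trainer's signal."""
        old = self.values.get(feature, 0.0)
        self.values[feature] = old + self.learning_rate * (signal - old)


vs = ValueSystem()
candidates = {
    "fast_move": {"throughput": 1.0, "human_safety": -1.0},
    "slow_move": {"throughput": 0.5, "human_safety": 0.0},
}
print(vs.choose(candidates))  # fast_move (all values still zero: tie, first wins)

for _ in range(2):
    vs.trainer_feedback("human_safety", 1.0)
vs.trainer_feedback("throughput", 0.4)
print(vs.choose(candidates))  # slow_move
print(vs.salient({"human_safety": 1.0, "throughput": 1.0}))  # ['human_safety']
```

The delta rule is used only because it is the simplest plasticity rule that makes values depend on experience; any other update driven by interaction would serve the same illustrative purpose.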

2.1. Emergence of Prospection Capabilities during Development and Social Interaction

In particular, the design of CoCoBots founded on the principles of developmental robotics includes the computational tools needed to allow the emergence of prospection capabilities, as well as the integration of the evolved cognitive architecture with social interaction and with the social supervision of reliability and ethical standards. Prospection cannot be achieved without representation: representations, as building blocks, emerge in a bottom-up fashion []. This view matches both the fundamental role of embodied cognition and its ontogenetic evolution as a bottom-up process. The main representation, or internal model, supporting prospection is the body schema [], based on the unifying simulation/emulation theory of action [,,]. There is no evidence that the organization of the multimodal, abstract body schema is innate and genetically preprogrammed. Rather, we may expect it to be built and refined during development by exploiting plasticity, consolidating physical self-awareness and achieving two crucial developmental targets: multimodal sensory fusion and calibration, for perceptual abstraction, and kinematic–figural invariance, for the control of generic end-effectors and/or skilled tool use.
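The idea that the body schema is acquired by exploiting plasticity, and then used for internal simulation, can be sketched in a few lines. This is a minimal sketch under strong assumptions, not an implementation of the simulation/emulation theory: the "body" is a hypothetical linear map from a two-dimensional motor command to a hand position, the schema is a linear forward model trained by random motor babbling with the LMS (delta) rule, and prospection is reduced to simulating a grid of candidate commands and selecting the one whose predicted outcome is closest to the target, without executing any of them.

```python
import random
from typing import List, Tuple

Vec = Tuple[float, float]


def hidden_body(cmd: Vec) -> Vec:
    """Hypothetical plant: maps a motor command to a hand position. Unknown to the robot."""
    return (0.8 * cmd[0] + 0.3 * cmd[1], -0.2 * cmd[0] + 1.1 * cmd[1])


class BodySchema:
    """Linear forward model acquired by motor babbling with the LMS (delta) rule."""

    def __init__(self, lr: float = 0.1) -> None:
        self.w = [[0.0, 0.0], [0.0, 0.0]]  # no innate knowledge of the body
        self.lr = lr

    def predict(self, cmd: Vec) -> Vec:
        return (self.w[0][0] * cmd[0] + self.w[0][1] * cmd[1],
                self.w[1][0] * cmd[0] + self.w[1][1] * cmd[1])

    def learn(self, cmd: Vec, observed: Vec) -> None:
        pred = self.predict(cmd)
        for i in range(2):
            err = observed[i] - pred[i]
            for j in range(2):
                self.w[i][j] += self.lr * err * cmd[j]


def babble(schema: BodySchema, steps: int, rng: random.Random) -> None:
    """Development phase: act randomly, sense the outcome, refine the schema."""
    for _ in range(steps):
        cmd = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        schema.learn(cmd, hidden_body(cmd))


def prospect(schema: BodySchema, target: Vec, candidates: List[Vec]) -> Vec:
    """Internally simulate each candidate with the schema; nothing is executed."""
    def predicted_error(cmd: Vec) -> float:
        p = schema.predict(cmd)
        return (p[0] - target[0]) ** 2 + (p[1] - target[1]) ** 2
    return min(candidates, key=predicted_error)


schema = BodySchema()
babble(schema, steps=2000, rng=random.Random(0))
grid = [(i / 10, j / 10) for i in range(-10, 11) for j in range(-10, 11)]
target = hidden_body((0.5, -0.3))
print(prospect(schema, target, grid))  # (0.5, -0.3)
```

A real body schema would be nonlinear, multimodal, and continually recalibrated; the linear model is chosen only so that the separation between acquisition (babbling) and use (internal simulation) stays visible.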

For cognitive robots conceived in the framework of developmental robotics, cognitive capabilities are not preprogrammed but can be achieved and organized through interaction with the physical and social environment. Since CoCoBots need interaction with humans to thrive, they will benefit from developing a form of cognition similar to that of their partners []. In the neurobiological case, the crucial role of social interaction during cognitive development has long been emphasized []. The social inclination at birth is not the result of supervised or unsupervised learning algorithms but an inherited predisposition to exploit social interaction for the development of cognitive abilities through the production and understanding of social signals, such as gestures, gaze direction, and emotional displays. Being immersed in the social world and predisposed to social interaction from the earliest moments of development is a foundational element of human cognition, to the point that some authors have suggested that social interaction is the brain’s default mode [].

A possible roadmap for structuring core cognitive abilities in CoCoBots based on a social perspective may start with the incorporation of social motives into artificial cognitive systems [,]; then, it may move on to emphasizing the impact of bidirectional non-verbal linguistic interactions for effective cognitive development []; finally, it may highlight the role of social interaction in consolidating cooperation, teamwork, and the transmission of ethical norms and cultural values in cognitive agents.

Along this path, the development of the sensory–motor–cognitive capabilities of CoCoBots could be powerfully oriented and focused by drawing inspiration from the educational methodologies and technologies employed for training human workers and specialized professionals. Moreover, a relevant motivation and attractive promise of this line of research is that it may allow us to overcome the explainability issue, namely the widespread distrust affecting general AI, and to foster mutual understanding. In other words, we may assume that humans will be more ready to trust cognitive robotic agents if these are “well-educated” according to clear and public training plans. We may add that, by relying on social education and training, it will be possible to differentiate the types of skills acquired by robotic agents, in order to obtain some kind of social balance between human and robotic populations in the new IR4* society. In any case, we may expect that well-educated CoCoBots and their human partners will routinely draw on developed and trained autonomous evaluations of the course of action to be taken, or modified in response to unexpected occurrences, and will manage to handle disagreements through mutual understanding and explanation.

2.2. Ethical Issues

Ethical norms and cultural values will certainly play a role for the CoCoBots of Industry 4.0 and beyond, and their implementation will uncover a fundamental dilemma about the distribution of responsibilities in the new organization of the manufacturing process, where humans and robots cooperate and share the ultimate goal of the process. Various concerns have been raised about the risks of adopting intelligent robots in human affairs [,,], suggesting that overconfidence in the ethical or social capabilities of AI-based robots, capabilities that may be impossible to achieve, is generally dangerous. Trust toward robots has become a central question in the field of human–robot interaction (HRI) [], with considerable evidence pointing to the risk of over-trust toward (even overtly failing) robots, or to the possibility that social norms, such as social influence or reciprocity, could interfere with trust judgments when robots are involved [,].

Moreover, fallibility may affect both the robots’ human creators/masters and the cognitive robots themselves: humans make mistakes, and robots can make mistakes too, possibly amplifying the negative effects of an over-robotized society, a danger brilliantly exemplified in Stanley Kubrick’s “2001: A Space Odyssey”. We are thus left with a dilemma: how, and in which circumstances, intelligent robot assistants should be allowed to disobey human requests, and vice versa. On the human side, it is a fact that no consistent ethical system is shared by everybody, in spite of many efforts throughout history. On the robot side, one might suggest extending the research on value systems mentioned above to the ethical level; however, this line of research has been conceived from the point of view of epistemology, i.e., as a mechanism to enrich the knowledge acquired by a cognitive agent during environmental/social interaction, and there is no clear rationale for such an extension. Value systems are crucial for explaining the process of autonomous mental development but are of little help for ethical evaluations and legal implications.

Although we think that this kind of dilemma is unsolvable in general (strictly speaking, even in the case of human–human collaboration), and in particular within the artificial intelligence framework, we believe that a more robust solution can be formalized within the alternative artificial cognition approach. CoCoBots are conceived as systems fundamentally oriented toward facing problems together with human partners, evaluating alternatives, and being ready to explain suggested actions, thus offering the possibility of resolving disagreements through joint analysis and of distributing responsibilities to both sides in a rational way. Disagreements may arise, for example, from “asymmetric” access to information through different communication or sensory channels. Of course, this implies that both robots and humans are well-educated in their jobs, which is the necessary starting point for the successful development of IR4* robotics. On the other hand, we suggest that, in the hopefully rare case of an emergency being called by an external level of the organizational structure, or in the case that a joint decision cannot be reached, the ultimate decision-making power, with the associated responsibility, should rest with the human side, without delegating crucial decisions to “smart” but fundamentally unknown algorithms. In a sense, such ultimate power and responsibility exemplify the crucial role of the “red security button”.
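The decision rule proposed here (and in the Abstract) can be written down as a small arbitration procedure. The sketch is hypothetical: the `exchange` callback stands for one round of mutual explanation in which either side may revise its proposal, the bound `max_rounds` on such rounds is our assumption, and the code says nothing about how explanations are generated. It only encodes the allocation of authority: joint decisions in normal conditions, and the human's proposal, with the associated responsibility, when an emergency is declared or no agreement is reached.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Tuple

Exchange = Callable[[str, str], Tuple[str, str]]


class Authority(Enum):
    JOINT = "joint decision"
    HUMAN = "human decision"


@dataclass
class Decision:
    action: str
    authority: Authority
    rationale: str


def arbitrate(robot: str, human: str, emergency_declared: bool,
              exchange: Exchange, max_rounds: int = 3) -> Decision:
    """Hypothetical arbitration rule between a CoCoBot and its human partner."""
    if emergency_declared:
        # Emergency called by the organizational structure: the human decides at once.
        return Decision(human, Authority.HUMAN, "emergency declared")
    for round_no in range(max_rounds + 1):
        if robot == human:
            return Decision(robot, Authority.JOINT, f"agreed after {round_no} exchange(s)")
        if round_no < max_rounds:
            # One round of mutual explanation; either side may revise its proposal.
            robot, human = exchange(robot, human)
    # No joint decision reached: final say, and responsibility, stay with the human.
    return Decision(human, Authority.HUMAN, "no joint decision reached")


def robot_shares_sensor_data(robot: str, human: str) -> Tuple[str, str]:
    """Asymmetric information resolved: the human adopts the robot's proposal."""
    return robot, robot


def nobody_moves(robot: str, human: str) -> Tuple[str, str]:
    """Persistent disagreement: neither side revises its proposal."""
    return robot, human


d = arbitrate("reroute", "proceed", False, robot_shares_sensor_data)
print(d.action, d.authority.name)  # reroute JOINT
d = arbitrate("reroute", "proceed", False, nobody_moves)
print(d.action, d.authority.name)  # proceed HUMAN
d = arbitrate("reroute", "stop", True, robot_shares_sensor_data)
print(d.action, d.authority.name)  # stop HUMAN
```

Bounding the number of explanation rounds is a design choice that keeps the fallback to human authority reachable in finite time; the appropriate bound, if any, would depend on the application.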

This opinion is also supported by the serious security issues raised by cyber–physical systems []. In the context of IR4*, CoCoBots may indeed be regarded as cyber–physical systems [,], namely integrations of computation and physical processes in which embedded computers and networks monitor and control the physical processes, usually with feedback loops where physical processes affect computations and vice versa. Beyond the security issues mentioned above, such systems pose considerable challenges [], particularly because their physical components introduce safety and reliability requirements qualitatively different from those that are well known and well solved in the general-purpose automated systems of IR2/IR3.

3. Conclusions and Future Directions

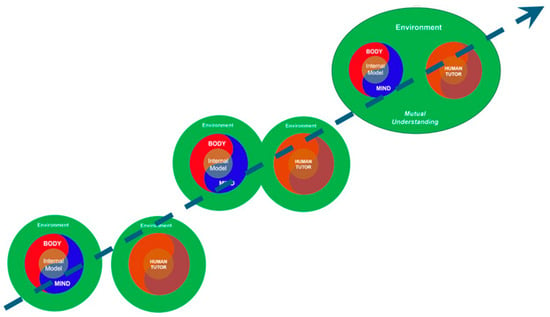

A final comment concerns our previously expressed view that the roadmap to CoCoBots for IR4* is unlikely to be based on applying large vision–language models and generative AI paradigms to robotics. On this issue, we agree with a recent editorial [] that capturing real-world complexity is an overwhelming challenge, but this is only part of the problem. The main challenge, in our opinion, is that generative AI cannot cooperate with humans, because it does not really understand humans and, thus, is unable to support mutual understanding. The alternative roadmap suggested in this perspective essay is based on generative artificial cognition, i.e., an internal prospection machinery that is not imposed a priori by impersonal mega-databases but emerges as personal and personalized knowledge extracted from the interaction of a specific cognitive agent with a specific physical and social environment. Figure 3 summarizes this general concept. First, it emphasizes the role of embodied cognition in supporting the evolution of an internal model capable of prospection. Second, within this framework, it clarifies the crucial role of bidirectional interaction with the physical and social environment, aimed at mutual understanding with collaborating partners, on top of and alongside prospective capabilities. Finally, it highlights the strategic role of development, driven by cognitive bootstrapping, assimilation, and social learning. At the same time, we should consider that sensory–motor–cognitive development in humans is an incremental process involving maturation, integration, and adaptation, steered by epigenetic phenomena influenced by the environment. Human development is therefore not a linear process but a somewhat irregular one. We may expect the same of robotic development; thus, the dashed straight line in the figure is a simplification, somewhat analogous to a regression line.

Figure 3.

Evolutionary nature of artificial cognition in collaborative robots.

Compared with the AI approach, which requires the acquisition and processing of gigantic databases, the evolutionary approach is much more frugal in the amount and diversity of information needed for learning: it relies on specific information filtered through focused personal experience rather than on a large, generic set of data. Although the basic concepts have been research topics for many years, their careful integration into a minimalistic, frugal, and coherent cognitive and computational architecture remains a work in progress for the coming years, supported by IR4 and beyond.

Author Contributions

Conceptualization, methodology, formal analysis and investigation, G.S., A.S. and P.M.; writing—original draft preparation, review and editing, P.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by Fondazione Istituto Italiano di Tecnologia, RBCS Department, in relation to the iCog Initiative, and by a Starting Grant from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (G.A. No. 804388, wHiSPER).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gilbert, D.; Wilson, T. Prospection: Experiencing the future. Science 2007, 317, 1351–1354.

- Seligman, M.E.P.; Railton, P.; Baumeister, R.F.; Sripada, C. Navigating into the future or driven by the past. Perspect. Psychol. Sci. 2013, 8, 119–141.

- Vernon, D.; Beetz, M.; Sandini, G. Prospection in cognitive robotics: The case for joint episodic-procedural memory. Front. Robot. AI 2015, 2, 19.

- Suddendorf, T.; Corballis, M.C. The evolution of foresight: What is mental time travel, and is it unique to humans? Behav. Brain Sci. 2007, 30, 299–313.

- Dautenhahn, K. Socially intelligent robots: Dimensions of human-robot interaction. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2007, 362, 679–704.

- Zhang, C.; Chen, J.; Li, J.; Peng, Y.; Mao, Z. Large language models for human–robot interaction: A review. Biomim. Intell. Robot. 2023, 3, 100131.

- Sandini, G.; Sciutti, A.; Morasso, P. Artificial Cognition vs. Artificial Intelligence for Next-Generation Autonomous Robotic Agents. Front. Comp. Neurosci. 2024, 18, 1349408.

- Legg, C.; Hookway, C. Pragmatism. In Stanford Encyclopedia of Philosophy, Summer 2021 ed.; Zalta, E.N., Ed.; The Metaphysics Research Lab, Philosophy Department, Stanford University: Stanford, CA, USA, 2021; ISSN 1095-5054.

- Quine, W. Epistemology Naturalized. In Epistemology: An Anthology; Sosa, E., Kim, J., Eds.; Blackwell Publishing: Malden, MA, USA, 2024; pp. 292–300. ISBN 978-0-631-19724-9.

- Kiverstein, J.; Miller, M. The embodied brain: Towards a radical embodied cognitive neuroscience. Front. Hum. Neurosci. 2015, 9, 237.

- Maturana, H.R.; Varela, F.J. Autopoiesis and Cognition: The Realization of the Living; Reidel Publication: Dordrecht, The Netherlands, 1980; ISBN 90-277-1015-5.

- Varela, F.J.; Thompson, E.; Rosch, E. The Embodied Mind: Cognitive Science and Human Experience; MIT Press: Cambridge, MA, USA, 1991; ISBN 9780262720212.

- Sepúlveda-Pedro, M.A. Enactive Cognition: From Sensorimotor Interactions to Autonomy and Normative Behavior. In Enactive Cognition in Place: Sense-Making as the Development of Ecological Norms; Sepúlveda-Pedro, M.A., Ed.; Springer International Publication: Berlin/Heidelberg, Germany, 2023.

- Piaget, J. The Origins of Intelligence; Routledge: New York, NY, USA, 1953; ISBN 9780415513623.

- Bronfenbrenner, U. Ecological systems theory. In Six Theories of Child Development: Revised Formulations and Current Issues; Vasta, R., Ed.; Jessica Kingsley Publishers: London, UK, 1992; pp. 187–249. ISBN 978-1853021374.

- Vygotsky, L.S. Interaction between learning and development. In Mind and Society: The Development of Higher Psychological Processes; Cole, M., John-Steiner, V., Scribner, S., Souberman, E., Eds.; Harvard University Press: Cambridge, MA, USA, 1978; pp. 79–91.

- Ausubel, D.P. Assimilation Theory in Meaningful Learning and Retention Processes. In The Acquisition and Retention of Knowledge: A Cognitive View; Springer: Dordrecht, The Netherlands, 2000.

- Kotseruba, J.; Tsotsos, J.K. A review of 40 years in cognitive architecture research core cognitive abilities and practical applications. Artif. Intell. Rev. 2020, 53, 17–94.

- Bryce, T.G.K.; Blown, E.J. Ausubel’s meaningful learning re-visited. Curr. Psychol. 2024, 43, 4579–4598.

- Asada, M.; Hosoda, K.; Kuniyoshi, Y.; Ishiguro, H.; Inui, T.; Yoshikawa, Y.; Ogino, M.; Yoshida, C. Cognitive Developmental Robotics: A Survey. IEEE Trans. Auton. Ment. Dev. 2009, 1, 12–34.

- Pfeifer, R.; Lungarella, M.; Iida, F. Self-Organization, Embodiment, and Biologically Inspired Robotics. Science 2007, 318, 1088–1093.

- Vernon, D. Enaction as a conceptual framework for developmental cognitive robotics. Paladyn 2010, 1, 89–98.

- Vernon, D.; Von Hofsten, C.; Fadiga, L. Desiderata for developmental cognitive architectures. Biol. Inspired Cogn. Archit. 2016, 18, 116–127.

- Vernon, D.; Von Hofsten, C.; Fadiga, L. A Roadmap for Cognitive Development in Humanoid Robots; Springer: Berlin/Heidelberg, Germany, 2011.

- Carey, S. Bootstrapping & the Origin of Concepts. Daedalus 2004, 133, 59–68.

- LeCun, Y. Cake Analogy 2.0. 2016. Available online: https://medium.com/syncedreview/yann-lecun-cakeanalogy-2-0-a361da560dae (accessed on 22 February 2019).

- Miller, E.K.; Buschman, T.J. Bootstrapping your brain: How interactions between the frontal cortex and basal ganglia may produce organized actions and lofty thoughts. In Neurobiology of Learning and Memory, 2nd ed.; Kesner, R.P., Martinez, J.L., Eds.; Academic Press: Cambridge, MA, USA, 2007; Chapter 10; pp. 339–354. ISBN 9780123725400.

- Zador, A.M. A critique of pure learning and what artificial neural networks can learn from animal brains. Nat. Commun. 2019, 10, 3770.

- Zhao, B.; Lucas, C.G.; Bramley, N.R. A model of conceptual bootstrapping in human cognition. Nat. Human Behav. 2023, 8, 125–136.

- Breazeal, C.; Buchsbaum, D.; Gray, J.; Gatenby, D.; Blumberg, B. Learning from and about others: Towards using imitation to bootstrap the social understanding of others by robots. Artif. Life 2005, 11, 31–62.

- Sciutti, A.; Sandini, G. Interacting with Robots to Investigate the Bases of Social Interaction. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 2295–2304.

- Sandini, G.; Sciutti, A. Humane robots—From robots with a humanoid body to robots with an anthropomorphic mind. ACM Trans. Hum. Robot Interact. 2018, 7, 3208954.

- Merrick, K. Value systems for developmental cognitive robotics: A survey. Cogn. Syst. Res. 2017, 41, 38–55.

- Weng, J.; McClelland, J.; Pentland, A.; Sporns, O.; Stockman, I.; Sur, M.; Thelen, E. Autonomous Mental Development by Robots and Animals. Science 2001, 291, 599–600.

- Huang, X.; Weng, J. Value system development for a robot. In Proceedings of the IEEE International Joint Conference on Neural Networks (IEEE Cat. No.04CH37541), Budapest, Hungary, 25–29 July 2004; Volume 4, pp. 2883–2888.

- Brooks, R.A. Symbolic Error Analysis and Robot Planning. Int. J. Robot. Res. 1982, 1, 29–68.

- Morasso, P. A vexing question in motor control: The degrees of freedom problem. Front. Bioeng. Biotechnol. 2022, 9, 783501.

- Jeannerod, M. Neural simulation of action: A unifying mechanism for motor cognition. Neuroimage 2001, 14, S103–S109.

- Grush, R. The emulation theory of representation: Motor control, imagery, and perception. Behav. Brain Sci. 2004, 27, 377–396.

- Ptak, R.; Schnider, A.; Fellrath, J. The dorsal frontoparietal network: A core system for emulated action. Trends Cogn. Sci. 2017, 21, 589–599.

- Hari, R.; Henriksson, L.; Malinen, S.; Parkkonen, L. Centrality of social interaction in human brain function. Neuron 2015, 88, 181–193.

- Hiolle, A.; Lewis, M.; Cañamero, L. Arousal regulation and affective adaptation to human responsiveness by a robot that explores and learns a novel environment. Front. Neurorobot. 2014, 8, 17.

- Tanevska, A.; Rea, F.; Sandini, G.; Cañamero, L.; Sciutti, A. A socially adaptable framework for human-robot interaction. Front. Robot. AI 2020, 7, 121.

- Briggs, G.; Scheutz, M. The Case for Robot Disobedience. Sci. Am. 2017, 316, 44–47.

- Eiben, A.E.; Ellers, J.; Meynen, G.; Nyholm, S. Robot Evolution: Ethical Concerns. Front. Robot. AI 2021, 8, 744590.

- Hutler, B.; Rieder, T.N.; Mathews, D.J.H.; Handelman, D.A.; Greenberg, A.M. Designing robots that do no harm: Understanding the challenges of Ethics for Robots. AI Ethics 2024, 4, 463–471.

- Kok, B.C.; Soh, H. Trust in robots: Challenges and opportunities. Curr. Robot. Rep. 2020, 1, 297–309.

- Robinette, P.; Li, W.; Allen, R.; Howard, A.M.; Wagner, A.R. Overtrust of robots in emergency evacuation scenarios. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 101–108.

- Aroyo, A.M.; Pasquali, D.; Kothig, A.; Rea, F.; Sandini, G.; Sciutti, A. Expectations vs. reality: Unreliability and transparency in a treasure hunt game with icub. IEEE Robot. Autom. Lett. 2021, 6, 5681–5688.

- Alguliyev, R.; Imamverdiyev, Y.; Sukhostat, L. Cyber-physical systems and their security issues. Comput. Ind. 2018, 100, 212–223.

- Wolf, W. Cyber-physical Systems. Computer 2009, 42, 88–89.

- Jazdi, N. Cyber physical systems in the context of Industry 4.0. In Proceedings of the IEEE International Conference on Automation, Quality and Testing, Robotics, Cluj-Napoca, Romania, 22–24 May 2014; pp. 1–4.

- Lee, E.A. Cyber Physical Systems: Design Challenges. In Proceeding of the 11th IEEE International Symposium on Object and Component-Oriented Real-Time Distributed Computing (ISORC), Orlando, FL, USA, 5–7 May 2008; pp. 363–369.

- Will generative AI transform robotics? Nat. Mach. Intell. 2024, 6, 579.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).